Abstract

Error variance estimation plays an important role in statistical inference for high dimensional regression models. This paper is concerned with error variance estimation in high dimensional sparse additive models. We study the asymptotic behavior of the traditional mean squared error, the naive estimate of error variance, and show that it may significantly underestimate the error variance due to spurious correlations, which are even higher in nonparametric models than in linear models. We further propose an accurate estimate of the error variance in ultrahigh dimensional sparse additive models by effectively integrating sure independence screening and refitted cross-validation techniques (Fan, Guo and Hao, 2012). The root n consistency and the asymptotic normality of the resulting estimate are established. We conduct a Monte Carlo simulation study to examine the finite sample performance of the newly proposed estimate. A real data example is used to illustrate the proposed methodology.

Keywords: Feature screening, Refitted cross-validation, Sparse additive model, Variance estimation

1 Introduction

Statistical inference on regression models typically involves the estimation of the variance of the random error. Hypothesis testing on regression functions, confidence/prediction interval construction and variable selection all require an accurate estimate of the error variance. In classical linear regression analysis, the adjusted mean squared error is an unbiased estimate of the error variance, and it performs well when the sample size is much larger than the number of predictors, or more accurately when the degrees of freedom are large. It has been empirically observed that the mean squared error estimator underestimates the error variance when the model is significantly over-fitted. This has been further confirmed by the theoretical analysis of Fan, Guo and Hao (2012), in which the authors demonstrated the challenges of error variance estimation in high-dimensional linear regression analysis, and further developed an accurate error variance estimator by introducing refitted cross-validation techniques.

Fueled by the demand in the analysis of genomic, financial, health, and image data, the analysis of high dimensional data has become one of the most important research topics during the last two decades (Donoho, 2000; Fan and Li, 2006). There is a huge number of research papers on high dimensional data analysis in the literature, and it is impossible for us to give a comprehensive review here. Readers are referred to Fan and Lv (2010), Bühlmann and Van de Geer (2011) and references therein. Due to the complex structure of high dimensional data, high dimensional linear regression analysis may be a good start, but it may not be powerful enough to explore nonlinear features inherent in the data. Nonparametric regression modeling provides valuable analysis for high dimensional data (Ravikumar et al., 2009; Hall and Miller, 2009; Fan, Feng and Song, 2011). This is particularly the case for error variance estimation, as nonparametric modeling reduces modeling biases in the estimate, but creates stronger spurious correlations. This paper aims to study issues of error variance estimation in ultrahigh dimensional nonparametric regression settings.

In this paper, we focus on the sparse additive model. Our primary interest is to develop an accurate estimator of the error variance in ultrahigh dimensional additive models. The techniques developed in this paper are applicable to other nonparametric regression models such as sparse varying coefficient models and to some commonly-used semiparametric regression models such as sparse partial linear additive models and sparse semi-varying coefficient partial linear models. Since its introduction by Friedman and Stuetzle (1981), the additive model has been popular, and many statistical procedures have been developed for sparse additive models in the recent literature. Lin and Zhang (2006) proposed the COSSO method to identify significant variables in multivariate nonparametric models. Bach (2008) studied penalized least squares regression with a group Lasso-type penalty for linear predictors and regularization on reproducing kernel Hilbert space norms, which is referred to as multiple kernel learning. Xue (2009) studied the variable selection problem in additive models by integrating a group-SCAD penalized least squares method (Fan and Li, 2001) and the regression spline technique. Ravikumar et al. (2009) modified the backfitting algorithm for sparse additive models, and further established the model selection consistency of their procedure. Meier, Van de Geer and Bühlmann (2009) studied the model selection and estimation of additive models with a diverging number of significant predictors. They proposed a new sparsity and smoothness penalty and proved that their method can select all nonzero components with probability approaching 1 as the sample size tends to infinity. With the ordinary group Lasso estimator as the initial estimator, Huang, Horowitz and Wei (2010) applied the adaptive group Lasso to the additive model in the setting where there is only a fixed finite number of significant predictors. Fan, Feng and Song (2011) proposed a nonparametric independence screening procedure for sparse ultrahigh dimensional data, and established its sure screening property in the terminology of Fan and Lv (2008).

In this paper, we propose an error variance estimate in ultrahigh dimensional additive models. It is typical to assume sparsity in ultrahigh dimensional data analysis. Sparsity means that the regression function depends only on a few significant predictors, and the number of significant predictors is assumed to be much smaller than the sample size. Because of the basis expansion in nonparametric fitting, the actual number of terms increases significantly in additive models. Therefore, the spurious correlation documented in Fan, Guo and Hao (2012) increases significantly. This is demonstrated in Lemma 1, which shows how the spurious correlation with the response increases when the single most correlated predictor among p variables is replaced by the single most correlated predictor represented by dn basis functions. If s variables are used, the spurious correlation may increase to its upper bound at an exponential rate in s.

To quantify this increase and explain the concept and the problem more clearly, we simulate n = 50 data points from independent normal covariates (with p = 1000) and an independent normal response Y. In this null model, all covariates and the response Y are independent and follow the standard normal distribution. As in Fan, Guo and Hao (2012), we compute the maximum “linear” spurious correlation $\max_{1\le j\le p}|\widehat{\mathrm{corr}}(X_j, Y)|$ and the maximum “nonparametric” spurious correlation $\max_{1\le j\le p}|\widehat{\mathrm{corr}}(\hat f_j(X_j), Y)|$, where f̂j(Xj) is the best cubic spline fit of variable Xj to the response Y, using 3 equally spaced knots in the range of the variable Xj, which create dn = 6 B-spline bases for Xj. The concept of the maximum spurious “linear” and spurious “nonparametric” (additive) correlations can easily be extended to s variables: they are the correlations between the response and the fitted values using the best subset of s variables. Based on 500 simulated data sets, Figure 1 depicts the results, which show the large increase of spurious correlations from the linear to the nonparametric fit. As a result, the noise variance is significantly underestimated.
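The linear half of this null-model experiment can be sketched in a few lines. The following is a minimal illustration only, not the authors' code: it uses the Python standard library, a single simulated data set rather than 500, and hypothetical function names (`pearson`, `max_spurious_correlation`). The nonparametric (spline) version is not covered.

```python
import math
import random


def pearson(x, y):
    """Sample Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def max_spurious_correlation(n=50, p=1000, seed=0):
    """Largest |corr(X_j, Y)| over p covariates when X and Y are independent N(0, 1)."""
    rng = random.Random(seed)
    y = [rng.gauss(0.0, 1.0) for _ in range(n)]
    best = 0.0
    for _ in range(p):
        xj = [rng.gauss(0.0, 1.0) for _ in range(n)]
        best = max(best, abs(pearson(xj, y)))
    return best


r = max_spurious_correlation()
# With s = 1 selected variable, the residual sum of squares of the simple
# regression on that variable is (1 - r^2) times the total sum of squares.
print(f"max |corr| = {r:.3f}, 1 - r^2 = {1 - r * r:.3f}")
```

Even though every covariate is independent of Y, the maximum over p = 1000 candidates is far from zero, which is the source of the downward bias discussed in the text.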

Figure 1.

Distributions of the maximum “linear” and “nonparametric” spurious correlations for s = 1 and s = 2 (left panel, n = 50 and p = 1000) and their consequences for the estimation of noise variances (right panel). The legend ‘LM’ stands for linear model, and ‘AM’ stands for additive model, i.e., nonparametric model.

The above reasoning and evidence show that the naive estimate of the error variance is seriously biased. This is shown formally in Theorem 1, and it prompts us to propose a two-stage refitted cross-validation procedure to reduce spurious correlation. In the first stage, we apply a sure independence screening procedure to reduce the ultrahigh dimensional problem to a relatively large dimensional regression problem. In the second stage, we apply the refitted cross-validation technique, which was proposed for the linear regression model by Fan, Guo and Hao (2012), to the dimension-reduced additive models obtained from the first stage. The implementation of the newly proposed procedure is not difficult. However, establishing its sampling properties is challenging, because the dimensionality of ultrahigh dimensional sparse additive models becomes even higher after the basis expansion.

We propose using B-splines to approximate the nonparametric functions, and first study the asymptotic properties of the traditional mean squared error, a naive estimator of the error variance. Under some mild conditions, we show that the mean squared error leads to a significant underestimate of the error variance. We then study the sampling properties of the proposed refitted cross-validation estimate, and establish its asymptotic normality. From our theoretical analysis, it can be found that the refitted cross-validation techniques can eliminate the side effects due to over-fitting. We also conduct Monte Carlo simulation studies to examine the finite sample performance of the proposed procedure. Our simulation results show that the newly proposed error variance estimate may perform significantly better than the mean squared error.

This paper makes the following major contributions. (a) We show that the traditional mean squared error, as a naive estimate of the error variance, is seriously biased. Although this is expected, the rigorous theoretical development is challenging rather than straightforward. (b) We propose a refitted cross-validation error variance estimate for ultrahigh dimensional nonparametric additive models, and further establish the asymptotic normality of the proposed estimator. The asymptotic normality implies that the proposed estimator is asymptotically unbiased and root n consistent. The extension of refitted cross-validation error variance estimation from linear models to nonparametric models is interesting, and is not straightforward in terms of theoretical development, because the bias due to approximation error calls for new techniques to establish the theory. Furthermore, the techniques developed in this paper may be applied to refitted cross-validation error variance estimation in other ultrahigh dimensional nonparametric regression models such as varying coefficient models, and in ultrahigh dimensional semiparametric regression models such as partially linear additive models and semiparametric partially linear varying coefficient models.

This paper is organized as follows. In Section 2, we propose a new error variance estimation procedure, and further study its sampling properties. In Section 3, we conduct Monte Carlo simulation studies to examine the finite sample performance of the proposed estimator, and demonstrate the new estimation procedure by a real data example. Some concluding remarks are given in Section 4. Technical conditions and proofs are given in the Appendix.

2 New procedures for error variance estimation

Let Y be a response variable, and x = (X1, ⋯ , Xp)T be a predictor vector. The additive model assumes that

$$Y = \mu + \sum_{j=1}^{p} f_j(X_j) + \varepsilon, \tag{2.1}$$

where μ is the intercept term, {fj(·), j = 1, ⋯ , p} are unknown functions and ε is the random error with E(ε) = 0 and var(ε) = σ2. Following the convention in the literature, it is assumed throughout this paper that Efj(Xj) = 0 for j = 1, ⋯ , p so that model (2.1) is identifiable. This assumption implies that μ = E(Y). Thus, a natural estimator of μ is the sample average of the Y’s. This estimator is root n consistent, and its rate of convergence is faster than that of the estimators of the nonparametric functions fj. Without loss of generality, we further assume μ = 0 for ease of notation. The goal of this section is to develop an estimation procedure for σ2 in additive models.

2.1 Refitted cross-validation

In this section, we propose a strategy to estimate the error variance when the predictor vector is ultrahigh dimensional. Since the fj’s are smooth nonparametric functions, it is natural to use smoothing techniques to estimate them. We employ the B-spline method throughout this paper. Readers are referred to De Boor (1978) for the detailed procedure of B-spline construction. Let {Bjk(x), k = 1, ⋯ , dj , a ≤ x ≤ b} be a B-spline basis of the polynomial spline space of degree l ≥ 1 defined on the finite interval [a, b], with knots depending on j. Approximate fj by its spline expansion

$$f_j(x) \approx \sum_{k=1}^{d_j} \gamma_{jk} B_{jk}(x) \tag{2.2}$$

for some dj ≥ 1. In practice, dj is allowed to grow with the sample size n, and is therefore denoted by djn to emphasize its dependence on n. With a slight abuse of notation, we use dn to stand for djn. Thus, model (2.1) can be written as

$$Y \approx \sum_{j=1}^{p}\sum_{k=1}^{d_n} \gamma_{jk} B_{jk}(X_j) + \varepsilon. \tag{2.3}$$

Suppose that {(xi, Yi)}, i = 1, ⋯ , n is a random sample from the additive model (2.1). Model (2.3) is not estimable when pdn > n. It is common to assume sparsity in ultrahigh-dimensional data analysis. Sparsity in the additive model means that only a few of the norms ||fj|| are nonzero, while ||fj|| = 0 for all other j. A general strategy to reduce ultrahigh dimensionality is sure independence feature screening, which enables one to reduce an ultrahigh dimension to a large or high dimension. Some existing feature screening procedures can be directly applied to ultrahigh dimensional sparse additive models. Fan, Feng and Song (2011) proposed the nonparametric independence screening (NIS) method and further showed that it possesses the sure screening property for ultrahigh dimensional additive models. That is, under some regularity conditions, with overwhelming probability, the NIS retains all active predictors after feature screening. Li, Zhong and Zhu (2012) proposed a model-free feature screening procedure, sure independence screening based on distance correlation (DC-SIS). The DC-SIS is also shown to have the sure screening property. Both NIS and DC-SIS can be used for feature screening with ultrahigh dimensional sparse additive models, although we use DC-SIS in our numerical implementation because it is intuitive and simple to implement.

Hereafter we always assume that all important variables have been selected by the screening procedure. Under this assumption, we will overfit the response variable Y and underestimate the error variance σ2. This is due to the fact that extra variables are actually selected to predict the realized noises (Fan, Guo and Hao, 2012). After feature screening, a direct estimate of σ2 is the mean squared error of the least squares fit. That is, we apply a feature screening procedure such as DC-SIS or NIS to screen the x-variables and fit the data to the corresponding selected spline regression model. Denote by 𝒟* the index set of all true predictors and by 𝒟̂* the index set of the selected predictors, which satisfy the sure screening property 𝒟* ⊂ 𝒟̂*. Then, we minimize the following least squares function with respect to γ:

$$\sum_{i=1}^{n}\Big(Y_i - \sum_{j\in\hat{\mathcal D}^*}\sum_{k=1}^{d_n}\gamma_{jk}B_{jk}(X_{ij})\Big)^2. \tag{2.4}$$

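Denote by γ̂jk the resulting least squares estimate. Then, the nonparametric residual variance estimator is

$$\hat\sigma^2_{\hat{\mathcal D}^*} = \frac{1}{n - |\hat{\mathcal D}^*|\, d_n}\sum_{i=1}^{n}\Big(Y_i - \sum_{j\in\hat{\mathcal D}^*}\sum_{k=1}^{d_n}\hat\gamma_{jk}B_{jk}(X_{ij})\Big)^2.$$

Hereafter |𝒟| stands for the cardinality of a set 𝒟, and we have implicitly assumed that the choice of 𝒟̂* and dn is such that n ≫ |𝒟̂*| · dn. It will be shown in Theorem 1 below that $\hat\sigma^2_{\hat{\mathcal D}^*}$ significantly underestimates σ2, due to spurious correlation between the realized but unobserved noises and the spline bases. Indeed, we will show that $\hat\sigma^2_{\hat{\mathcal D}^*}$ is an inconsistent estimate when |𝒟̂*| · dn is large. Specifically, let P𝒟̂* be the projection matrix of model (2.4) based on the entire sample, and denote $\hat\gamma_n^2 = \varepsilon^T P_{\hat{\mathcal D}^*}\varepsilon / \varepsilon^T\varepsilon$, where ε = (ε1, ⋯ , εn)T. We will show that $\hat\sigma^2_{\hat{\mathcal D}^*}/(1-\hat\gamma_n^2)$ converges to σ2 with root n convergence rate, yet the spurious correlation $\hat\gamma_n^2$ is of order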

| (2.5) |

See Lemma 1 and Theorem 1 in Section 2.2 for details. Our first aim is to propose a new estimation procedure of σ2 by using refitted cross-validation technique (Fan, Guo and Hao, 2012).
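A heuristic worked step may help show where the downward bias comes from. It is a sketch under two simplifying assumptions that are ours, not the paper's formal argument: sure screening holds, and the spline approximation error is ignored, so that the residual vector of the least squares fit equals $(I_n - P_{\hat{\mathcal D}^*})\varepsilon$. Here $\hat s = |\hat{\mathcal D}^*|$ and $\hat\gamma_n^2 = \varepsilon^T P_{\hat{\mathcal D}^*}\varepsilon/\varepsilon^T\varepsilon$ is the fraction of the noise energy captured by the selected spline bases.

```latex
\begin{aligned}
(n - \hat s\, d_n)\,\hat\sigma^2_{\hat{\mathcal D}^*}
  &\approx \varepsilon^T \bigl(I_n - P_{\hat{\mathcal D}^*}\bigr)\varepsilon
   = \bigl(1 - \hat\gamma_n^2\bigr)\,\varepsilon^T\varepsilon, \\
\frac{\hat\sigma^2_{\hat{\mathcal D}^*}}{1 - \hat\gamma_n^2}
  &\approx \frac{\varepsilon^T\varepsilon}{n - \hat s\, d_n}
   \approx \sigma^2
  \qquad \text{when } \hat s\, d_n \ll n .
\end{aligned}
```

Under these assumptions the naive estimator is shrunk by the factor $1 - \hat\gamma_n^2$, which cannot be computed from the data because ε is unobserved.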

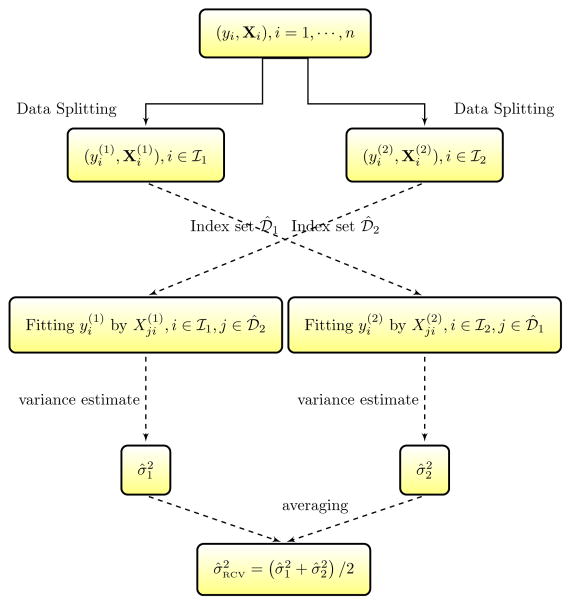

The refitted cross-validation procedure randomly splits the sample into two data sets, denoted by ℐ1 and ℐ2, of approximately equal size. Without loss of generality, assume throughout this paper that ℐ1 and ℐ2 have the same sample size n/2. We apply a feature screening procedure (e.g., DC-SIS or NIS) to each set, and obtain two index sets of selected x-variables, denoted by 𝒟̂1 and 𝒟̂2. Both of them retain all important predictors. The refitted cross-validation procedure consists of three steps. In the first step, we fit the data in ℐl to the selected additive model 𝒟̂3−l for l = 1 and 2 by the least squares method. This results in two least squares estimates γ̂(3−l) based on ℐl, respectively. In the second step, we calculate the mean squared error of each fit:

$$\hat\sigma_l^2 = \frac{1}{n/2 - |\hat{\mathcal D}_{3-l}|\, d_n}\sum_{i\in\mathcal I_l}\Big(Y_i - \sum_{j\in\hat{\mathcal D}_{3-l}}\sum_{k=1}^{d_n}\hat\gamma^{(3-l)}_{jk}B_{jk}(X_{ij})\Big)^2$$

for l = 1 and 2. Then the refitted cross-validation estimate of σ2 is defined by

$$\hat\sigma^2_{\mathrm{RCV}} = \frac{\hat\sigma_1^2 + \hat\sigma_2^2}{2}.$$

This estimator is adapted from the one proposed in Fan, Guo and Hao (2012) for linear regression models. However, it is much more challenging to establish the asymptotic properties of $\hat\sigma^2_{\mathrm{RCV}}$ for large dimensional additive models than for linear regression models. The major hurdle is to deal with the approximation error in nonparametric modeling as well as the correlation structure induced by the B-spline bases. The refitted cross-validation procedure is illustrated schematically in Figure 2.
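The split, screen, refit and average steps can be sketched as follows. This is a simplified illustration under loudly stated assumptions, not the authors' Matlab implementation: marginal Pearson correlation ranking stands in for DC-SIS or NIS, a cubic polynomial basis stands in for the B-spline basis, and all function names are hypothetical. The demo uses a null model so that the true error variance is 1.

```python
import math
import random


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    m = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for c in range(m):
        piv = max(range(c, m), key=lambda r: abs(aug[r][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for r in range(c + 1, m):
            f = aug[r][c] / aug[c][c]
            for k in range(c, m + 1):
                aug[r][k] -= f * aug[c][k]
    x = [0.0] * m
    for r in range(m - 1, -1, -1):
        x[r] = (aug[r][m] - sum(aug[r][k] * x[k] for k in range(r + 1, m))) / aug[r][r]
    return x


def design(X, rows, cols, degree):
    """Intercept plus x, x^2, ..., x^degree per selected column (stand-in for B-splines)."""
    return [[1.0] + [X[i][j] ** d for j in cols for d in range(1, degree + 1)] for i in rows]


def residual_variance(X, y, rows, cols, degree):
    """Least squares fit on `rows` using `cols`; returns RSS / (len(rows) - number of terms)."""
    D = design(X, rows, cols, degree)
    yy = [y[i] for i in rows]
    m = len(D[0])
    gram = [[sum(r[a] * r[b] for r in D) for b in range(m)] for a in range(m)]
    rhs = [sum(r[a] * v for r, v in zip(D, yy)) for a in range(m)]
    beta = solve(gram, rhs)
    rss = sum((v - sum(c * t for c, t in zip(beta, r))) ** 2 for r, v in zip(D, yy))
    return rss / (len(rows) - m)


def screen(X, y, rows, s):
    """Keep the s columns with the largest absolute marginal correlation on `rows`."""
    yy = [y[i] for i in rows]
    my = sum(yy) / len(yy)

    def score(j):
        xj = [X[i][j] for i in rows]
        mx = sum(xj) / len(xj)
        sxy = sum((a - mx) * (b - my) for a, b in zip(xj, yy))
        sxx = sum((a - mx) ** 2 for a in xj)
        return abs(sxy) / math.sqrt(sxx)  # proportional to |corr|; var(y) is common to all j

    return sorted(range(len(X[0])), key=score, reverse=True)[:s]


def naive_and_rcv(X, y, s, degree=3, rng=random):
    """Return (naive estimate, RCV estimate) of the error variance."""
    n = len(y)
    all_rows = list(range(n))
    # Naive: screen and fit on the same full sample.
    naive = residual_variance(X, y, all_rows, screen(X, y, all_rows, s), degree)
    perm = all_rows[:]
    rng.shuffle(perm)
    half1, half2 = perm[: n // 2], perm[n // 2:]
    # RCV: variables screened on one half are refitted on the other half.
    sig1 = residual_variance(X, y, half1, screen(X, y, half2, s), degree)
    sig2 = residual_variance(X, y, half2, screen(X, y, half1, s), degree)
    return naive, 0.5 * (sig1 + sig2)


rng = random.Random(1)
n, p, s = 100, 200, 5
X = [[rng.gauss(0, 1) for _ in range(p)] for _ in range(n)]
y = [rng.gauss(0, 1) for _ in range(n)]  # null model: true error variance is 1
naive, rcv = naive_and_rcv(X, y, s, rng=rng)
print(f"naive = {naive:.3f}, RCV = {rcv:.3f}")
```

The key design point is that the half used for refitting never influenced which variables were selected, so the selected variables cannot have been chosen to fit that half's realized noise.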

Figure 2.

Refitted Cross Validation Procedure

2.2 Sampling properties

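We next study the asymptotic properties of the naive estimator $\hat\sigma^2_{\hat{\mathcal D}^*}$ and the refitted cross-validation estimator $\hat\sigma^2_{\mathrm{RCV}}$. The following technical conditions are needed to facilitate the proofs, although they may not be the weakest.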

(C1) There exist two positive constants A1 and A2 such that E{exp(A1|ε|) | x} ≤ A2.

(C2) For all j, fj(·) ∈ 𝒞d([a, b]), which consists of functions f whose r-th derivative $f^{(r)}$ exists and satisfies

$$|f^{(r)}(s) - f^{(r)}(t)| \le L\,|s - t|^{\alpha}, \qquad s, t \in [a, b], \tag{2.6}$$

for a given constant L > 0, where r ≤ l is the “integer part” of d and α ∈ (0, 1] such that d = r + α ≥ 2. Furthermore, it is assumed that $d_n = O(n^{1/(2d+1)})$, the optimal nonparametric rate (Stone, 1985).

(C3) The joint distribution of the predictors X is absolutely continuous and its density g is bounded by two positive numbers b and B satisfying b ≤ g ≤ B. The predictor Xj, j = 1, ⋯ , p has a continuous density function gj, which satisfies, for any x ∈ [a, b], 0 < A3 ≤ gj(x) ≤ A4 < ∞ for two positive constants A3 and A4.

Condition (C1) is a tail condition on the random error. Condition (C2) is a typical smoothness condition in the literature on regression splines. Condition (C3) is a mild condition on the densities of the predictors. It was imposed in Stone (1985) for low-dimensional additive models, and implies that there is no collinearity between the candidate predictors with probability one. The asymptotic properties of the naive estimator $\hat\sigma^2_{\hat{\mathcal D}^*}$ are given in the following theorem, in which we use pn to stand for p to emphasize that the dimension p of the predictor vector may depend on n. Since the DC-SIS and the NIS possess the sure screening property, the subset of predictors selected by the screening procedure contains all active predictors with probability tending to one. Thus, we assume in the following two theorems that all active predictors are retained in the feature screening stage. This can be achieved by imposing the conditions in Li, Zhong and Zhu (2012) for the DC-SIS and the conditions in Fan, Feng and Song (2011) for the NIS. We first derive the orders of $\varepsilon^T P_{\hat{\mathcal D}^*}\varepsilon$ and of the ratio $\hat\gamma_n^2 = \varepsilon^T P_{\hat{\mathcal D}^*}\varepsilon/\varepsilon^T\varepsilon$ in the next lemma, which plays a critical role in the proofs of Theorems 1 and 2 below. The proofs of Lemma 1 and Theorems 1 and 2 are given in the Appendix.

Lemma 1

Under Conditions (C1)—(C3), it follows that

where for some constant ζ0 ∈ (0, 1) with b and B being given in Condition (C3).

Lemma 1 clearly shows that the spurious correlation increases to its upper bound at an exponential rate of ŝ since δ ∈ (0, 1) and 2/(1 − δ) > 2.

Theorem 1

Assume that . Let ŝ = |𝒟̂*| be the number of elements in the estimated active index set 𝒟̂*. Assume that all active predictors are retained in the stage of feature screening. That is, 𝒟̂* contains all active predictors. Under Conditions (C1)–(C3), the following statements hold:

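(i) If $\log(p_n) = O(n^{\zeta})$, 0 ≤ ζ < 1 and ŝ = Op(log(n)), then the normalized naive estimator $\hat\sigma^2_{\hat{\mathcal D}^*}/(1 - \hat\gamma_n^2)$ converges to σ2 in probability as n → ∞;

(ii) If $\log(p_n) = O(n^{\zeta})$, 0 ≤ ζ < 3/(2d + 1) and ŝ = Op(log(n)), then it follows that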

(2.7) where stands for convergence in law.

Theorem 1 (i) clearly indicates that the naive error variance estimator underestimates σ2 by a factor of $(1 - \hat\gamma_n^2)$, where $\hat\gamma_n^2$ is the spurious correlation between the noise and the selected spline bases. By Lemma 1, $\hat\gamma_n^2$ is of the order given in (2.5) and is not small. Since $\hat\gamma_n^2$ cannot be estimated directly from the data, it is challenging to derive an adjusted error variance estimate by modifying the commonly-used mean squared error. On the other hand, the refitted cross-validation method provides an automatic bias correction via refitting and hence a consistent estimator, as we now show.

Theorem 2

Assume that 𝒟̂j contains all active predictors, for j = 1 and 2. Let ŝj = |𝒟̂j| be the number of elements in 𝒟̂j. Under Conditions (C1)–(C3), if $\hat s_1 = o(n^{(2d-1)/4(2d+1)})$ and $\hat s_2 = o(n^{(2d-1)/4(2d+1)})$, then

| (2.8) |

Compared with the result in Theorem 1, the refitted cross-validation method eliminates the side effect of the selected redundant variables and corrects the bias of the naive variance estimator through refitting. This bias factor can be non-trivial.

Remark 1

This remark provides some implications and limitations of Theorems 1 and 2 and some clarification of conditions implicitly required by Theorem 2.

From the proof of Theorems 1 and 2, it has been shown that and . As a result, the ratio of RCV estimate to the naive estimator may be used to provide one an estimate of the shrinkage factor .

Theorem 2 is applicable provided that the active index sets 𝒟̂j, j = 1 and 2, include all active predictor variables. Here we emphasize that the RCV method can be integrated with any dimension reduction procedure to effectively correct the bias of the naive error variance estimate, and does not directly impose a condition on the dimension pn. In practical implementation, the assumption that both active index sets include all important variables implies a further condition on pn. In particular, the condition log(pn) = o(n) is necessary for DC-SIS (Li, Zhong and Zhu, 2012) to achieve the sure screening property. This condition is also necessary for other sure screening procedures such as the NIS (Fan, Feng and Song, 2011). In Theorems 1 and 2, we have imposed conditions on ŝ, ŝ1 and ŝ2. These conditions may implicitly require extra conditions on the DC-SIS to ensure that the size of the subset selected by DC-SIS is of the required order. For NIS, by Theorem 2 of Fan, Feng and Song (2011), we need to impose some explicit conditions on the signal strength as well as on the growth of the operator norm of the covariance matrix of the covariates.

The RCV method can be combined with any feature screening methods such as DC-SIS and NIS and variable selection methods such as grouped LASSO and grouped SCAD (Xue, 2009) for ultrahigh dimensional additive models. The NIS method needs to choose a smoothing parameter for each predictor. The grouped LASSO and the grouped SCAD methods are expensive in terms of computational cost. We focus only on DC-SIS in the numerical studies to save space.

For sure independence screening procedures such as the SIS and DC-SIS, the authors recommended setting ŝ = n/log(n). The diverging rates of ŝ, ŝ1 and ŝ2 required in Theorems 1 and 2 are slower than this, due to the nonparametric nature of the problem. It seems difficult to further relax the conditions in Theorems 1 and 2, which can be viewed as a limitation of our theoretical results. In our simulation studies and real data example, the performance of the naive method clearly depends on the choice of ŝ, while the RCV method performs well for a wide range of ŝ1 and ŝ2. As shown in Tables 1 and 2, the resulting estimate of the RCV method is very close to the oracle estimate across all scenarios in the tables. Theoretical studies on how to determine ŝ1 and ŝ2 are more related to the topic of feature screening than to variance estimation, and we do not pursue them further in this paper. In practical implementation, the choice of these parameters should take into account the degrees of freedom in the refitting stage, so that the residual variance can be estimated with reasonable accuracy. We recommend considering several possible choices of ŝ1 and ŝ2 to examine whether the resulting variance estimate is relatively stable with respect to these choices. This is implemented in the real data example in Section 3.2.

Table 1.

Simulation Results for different ŝ (σ2 = 1)

| Method | ŝ = 20 | ŝ = 30 | ŝ = 40 | ŝ = 50 |
|---|---|---|---|---|
| a = 0 | | | | |
| Oracle | 1.0042 (0.0618)* | 1.0042 (0.0618) | 1.0042 (0.0618) | 1.0042 (0.0618) |
| Naive | 0.8048 (0.0558) | 0.7549 (0.0589) | 0.7138 (0.0584) | 0.6771 (0.0584) |
| RCV | 1.0022 (0.0656) | 0.9994 (0.0666) | 0.9990 (0.0698) | 0.9967 (0.0705) |
| | | | | |
| Oracle | 1.0049 (0.0617) | 1.0049 (0.0617) | 1.0049 (0.0617) | 1.0049 (0.0617) |
| Naive | 0.9054 (0.0572) | 0.8683 (0.0592) | 0.8387 (0.0615) | 0.8143 (0.0644) |
| RCV | 1.0704 (0.1300) | 1.0493 (0.1187) | 1.0374 (0.1095) | 1.0273 (0.1106) |
| | | | | |
| Oracle | 1.0072 (0.0618) | 1.0072 (0.0618) | 1.0072 (0.0618) | 1.0072 (0.0618) |
| Naive | 0.9618 (0.0647) | 0.9618 (0.0647) | 0.9306 (0.0687) | 0.9194 (0.0780) |
| RCV | 1.0026 (0.0657) | 1.0026 (0.0657) | 1.0020 (0.0735) | 1.0013 (0.0779) |

*Values in parentheses are standard errors.

Table 2.

Simulation results with different n (σ2 = 1)

| Method | n = 400 | n = 600 |
|---|---|---|
| a = 0 | | |
| Oracle | 1.0044 (0.0646)* | 0.9924 (0.0575) |
| Naive | 0.6969 (0.0610) | 0.7340 (0.0542) |
| RCV | 0.9905 (0.0837) | 0.9845 (0.0729) |
| | | |
| Oracle | 1.0047 (0.0737) | 0.9970 (0.0552) |
| Naive | 0.8390 (0.0815) | 0.8533 (0.0555) |
| RCV | 1.1273 (0.1528) | 1.0144 (0.0954) |
| | | |
| Oracle | 0.9903 (0.0687) | 1.0075 (0.0643) |
| Naive | 0.9013 (0.0785) | 0.9340 (0.0691) |
| RCV | 1.0241 (0.1886) | 1.0031 (0.0780) |

*Values in parentheses are standard errors.

3 Numerical studies

In this section, we investigate the finite sample performances of the newly proposed procedures. We further illustrate the proposed procedure by an empirical analysis of a real data example. In our numerical studies, we report only results of the proposed RCV method with DC-SIS to save space, although the NIS method, the grouped LASSO and the grouped SCAD (Xue, 2009) can be used to screen or select variables. All numerical studies are conducted using Matlab code.

3.1 Monte Carlo simulation

Since there is little work on variance estimation for ultrahigh dimensional nonparametric additive models, this simulation study is designed to compare the finite sample performance of the two-stage naive variance estimate and the refitted cross-validation variance estimate. In our simulation study, data were generated from the following sparse additive model

| (3.1) |

where ε ~ N(0, 1), and {X1, ⋯ , Xp} ~ Np(0, Σ) with Σ = (ρij)p×p, where ρii = 1 and ρij = 0.2 for i ≠ j. We set p = 2000 and n = 600. We take three values of a, namely a = 0 and two nonzero values, in order to examine the impact of the signal-to-noise ratio on error variance estimation. When a = 0, the DC-SIS can always pick up the active set and the challenge is to reduce spurious correlation, while for the third value of a the signal is strong enough to pick up the active set, so that DC-SIS performs very well. The second value of a corresponds to a signal-to-noise ratio equal to 1. In this case it is difficult to distinguish signals from noise, and it is the most challenging of the three cases for DC-SIS. In the first and the third cases, sure screening is easy to achieve with a relatively small number of selected variables, which reduces the bias of the RCV method and leaves more degrees of freedom for estimating the residual variance. We deliberately included the second case to challenge our proposed procedure, as sure screening is harder to achieve.

As a benchmark, we include the oracle estimator in our simulation. Here the oracle estimator corresponds to the mean squared error of the fit of the oracle model, which includes only X1, X2 and X5 for a ≠ 0, and includes no predictors when a = 0. In our simulation, we employ the distance correlation to rank the importance of the predictors, and screen out the p − ŝ predictors with the lowest distance correlation. Thus, the resulting model includes ŝ predictors. We consider ŝ = 20, 30, 40 and 50 in order to illustrate the impact of the choice of ŝ on the performance of the naive estimator and the refitted cross-validation estimator.
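The ranking step can be sketched with the standard sample distance correlation for univariate samples. This is a minimal standard-library illustration, not the authors' Matlab code; the function names (`distance_correlation`, `dc_sis`) and the toy data are hypothetical, and the O(n²) pairwise-distance implementation is only meant for small examples.

```python
import math


def _centered_distances(v):
    """Pairwise |v_i - v_j| matrix, double-centered by row, column and grand means."""
    n = len(v)
    d = [[abs(a - b) for b in v] for a in v]
    row = [sum(r) / n for r in d]  # column means equal row means by symmetry
    grand = sum(row) / n
    return [[d[i][j] - row[i] - row[j] + grand for j in range(n)] for i in range(n)]


def distance_correlation(x, y):
    """Sample distance correlation of two univariate samples of equal length."""
    n = len(x)
    a, b = _centered_distances(x), _centered_distances(y)
    dcov2 = sum(a[i][j] * b[i][j] for i in range(n) for j in range(n)) / n ** 2
    dvarx = sum(a[i][j] ** 2 for i in range(n) for j in range(n)) / n ** 2
    dvary = sum(b[i][j] ** 2 for i in range(n) for j in range(n)) / n ** 2
    if dvarx * dvary == 0.0:
        return 0.0  # a constant sample carries no dependence information
    return math.sqrt(max(dcov2, 0.0) / math.sqrt(dvarx * dvary))


def dc_sis(columns, y, s_hat):
    """Indices of the s_hat columns with the largest distance correlation with y."""
    scores = [distance_correlation(col, y) for col in columns]
    return sorted(range(len(columns)), key=lambda j: scores[j], reverse=True)[:s_hat]


x = [-2.0, -1.0, 0.0, 1.0, 2.0]
y = [v * v for v in x]  # nonlinear dependence; the Pearson correlation with x is 0
print(round(distance_correlation(x, y), 3))
```

Unlike Pearson correlation ranking, the distance correlation is nonzero for the purely quadratic relationship in the toy example, which is why it suits screening in additive models.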

In our simulation, each function fj(·) is approximated by a linear combination of an intercept and 5 cubic B-spline bases with 3 knots equally spaced between the minimum and maximum of the jth variable. Thus, when ŝ = 50, the reduced model actually has 50 × 5 + 1 = 251 terms, which is nearly half of the sample size. Table 1 reports the average and the standard error of the 150 estimates over the 150 simulations. To give an overall picture of how the error variance estimates change with ŝ, Figure 3 depicts the overall average of the 150 estimates. In Table 1 and Figure 3, ‘Oracle’ stands for the oracle estimate based on nonparametric additive models using only active variables, ‘Naive’ for the naive estimate, and ‘RCV’ for the refitted cross-validation estimate.

Figure 3.

Variance estimators for different signal-to-noise ratios

Table 1 and Figure 3 clearly show that the naive two-stage estimator significantly underestimates the error variance in the presence of many redundant variables. The larger the value of ŝ, the bigger the spurious correlation $\hat\gamma_n^2$ between the noise and the selected spline bases, and hence the larger the bias of the naive estimate. The performance of the naive estimate also depends on the signal-to-noise ratio. In general, it performs better when the signal-to-noise ratio is large. The RCV estimator performs much better than the naive estimator. Its performance is very close to that of the oracle estimator for all cases listed in Table 1.

In practice, we have to choose one ŝ in data analysis. Fan and Lv (2008) suggested ŝ = [n/log(n)] for their sure independence screening procedure based on Pearson correlation ranking. We modify their proposal and set $\hat s = [n^{4/5}/\log(n^{4/5})]$ to take into account the effective sample size in nonparametric regression. Table 2 reports the average and the standard error of the 150 estimates over the 150 simulations when the sample size is n = 400 and 600. The labels in Table 2 are the same as those in Table 1. The results in Table 2 clearly show that the RCV estimate performs nearly as well as the oracle procedure, and outperforms the naive estimate.
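The rule for ŝ is simple arithmetic and can be checked directly. The sketch below assumes that the bracket [·] denotes rounding to the nearest integer; the text does not state the convention, but this reading reproduces the value ŝ = 28 reported for n = 464 in Section 3.2. The function name `screening_size` is hypothetical.

```python
import math


def screening_size(n, exponent=0.8):
    """s_hat = [m / log(m)] with m = n ** exponent; [.] is taken as rounding to nearest."""
    m = n ** exponent
    return round(m / math.log(m))


for n in (400, 464, 600):
    print(n, screening_size(n))
```

Setting `exponent=1.0` gives the original n/log(n) rule of Fan and Lv (2008), which retains considerably more variables for the same n.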

We further studied the impact of the random data splitting strategy on the resulting estimate. As an alternative, one may repeat the proposed procedure several times, each time randomly splitting the data into two parts, and then take the average as the estimate of σ2. The findings from our simulation study are consistent with the discussion in Fan, Guo and Hao (2012): (a) the estimates of σ2 for different numbers of repetitions are almost the same; and (b) as the number of repetitions increases, the variation reduces slightly at the price of computational cost. This implies that it is unnecessary to repeat the proposed procedure several times. As another alternative, one may randomly split the sample into k groups; the case k = 2 is the RCV method proposed in this paper. Similarly, we can use the data in one group to select useful predictors and the data in the other groups to fit the additive model. We refer to this splitting strategy as multi-fold splitting. Our simulation results imply that multi-fold splitting leads to (a) less accurate estimates of the coefficients and (b) increased variation of the individual mean squared errors used to construct the RCV estimate. This is because the strategy splits the data into many subsets with even smaller sample sizes. If the sample size n is large, as in today's Big Data applications, it may be worth trying multiple random splits; otherwise we do not recommend it.

3.2 A real data example

In this section, we illustrate the proposed procedure by an empirical analysis of a supermarket data set (Wang, 2009). The data set contains a total of n = 464 daily records of the number of customers (Yi) and the sale amounts of p = 6,398 products, denoted by Xi1, ⋯ , Xip, which are used as predictors. Both the response and the predictors are standardized so that they have zero sample mean and unit sample variance. We fit the following additive model in our illustration:

Yi = μ + f1(Xi1) + ⋯ + fp(Xip) + εi,

where εi is a random error with E(εi) = 0 and var(εi|xi) = σ².

Since the sample size is n = 464, we set ŝ = [n^{4/5}/log(n^{4/5})] = 28. The naive error variance estimate equals 0.0938, while the RCV error variance estimate equals 0.1340, a 43% increase in the estimated value when the spurious correlation is reduced. Table 3 reports the resulting estimates of the error variance for different values of ŝ. It clearly shows that the RCV estimate of the error variance is stable across different choices of ŝ, while the naive estimate of the error variance decreases as ŝ increases. This is consistent with our theoretical and simulation results.
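The arithmetic behind these two numbers can be checked directly. The sketch below assumes that [·] denotes rounding to the nearest integer, since the unrounded value is about 27.67 and the reported model size is 28; the function name `screening_size` is hypothetical.

```python
import math

def screening_size(n: int) -> int:
    """Model size n^(4/5) / log(n^(4/5)), rounded to the nearest integer (assumed)."""
    m = n ** 0.8
    return round(m / math.log(m))

s_hat = screening_size(464)

# Reported estimates for s_hat = 28.
naive, rcv = 0.0938, 0.1340
increase = (rcv - naive) / naive

print(s_hat, f"{100 * increase:.0f}%")  # prints: 28 43%
```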

Table 3.

Error Variance Estimate for Market Data

| ŝ | 40 | 35 | 30 | 28 | 25 |
|---|---|---|---|---|---|
| Naive | 0.0866 | 0.0872 | 0.0910 | 0.0938 | 0.0990 |
| RCV | 0.1245 | 0.1104 | 0.1277 | 0.1340 | 0.1271 |

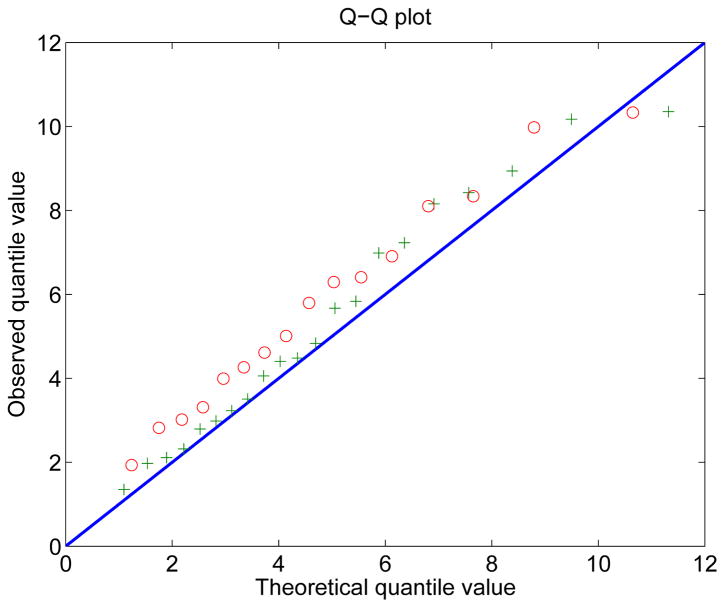

Regarding the selected model with ŝ predictors as the correct model and ignoring the approximation errors (if any) due to the B-spline basis, we further employ the Wald χ²-test of the hypothesis that (γj1, ⋯ , γjdj)ᵀ equals zero, namely whether the jth variable is active in the presence of the remaining variables. Such Wald χ² statistics offer a rough picture of whether Xj is significant or not. The Wald χ²-test with the naive error variance estimate identifies 12 significant predictors at significance level 0.05, while the Wald χ²-test with the RCV error variance estimate identifies seven significant predictors at the same significance level. Figure 4 depicts the Q-Q plot of the values of the χ²-test statistic for the insignificant predictors identified by the Wald test. Figure 4 clearly shows that the χ²-test values using the naive error variance estimate deviate systematically from the 45-degree line. This implies that the naive method results in an underestimate of the error variance, while the RCV method results in a good estimate of the error variance.
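To make the role of the error variance estimate explicit, one common form of the Wald statistic for the jth group of spline coefficients is written out below. This is a sketch under the working assumption that the selected model is correct and fitted by least squares; the block notation [·]jj (the dj × dj diagonal block belonging to the jth predictor) is introduced here for illustration and is not taken from the paper.

```latex
W_j \;=\;
\frac{\hat\gamma_j^{\top}\,
      \bigl\{\bigl[(\Psi^{\top}\Psi)^{-1}\bigr]_{jj}\bigr\}^{-1}\,
      \hat\gamma_j}{\hat\sigma^{2}}
\;\overset{\text{approx.}}{\sim}\; \chi^{2}_{d_j}
\qquad \text{under } H_0:\ \gamma_j = 0 .
```

Since the estimate of σ² enters only through the denominator, for a fixed fit an underestimated error variance inflates every Wald statistic by the same factor. With the values reported here, that factor would be about 0.1340/0.0938 ≈ 1.43.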

Figure 4.

Quantile-quantile plot of χ²-test values. “o” stands for the χ²-test using the naive error variance estimate. “+” stands for the χ²-test using the RCV error variance estimate.

The Wald test at level 0.05 indicates that seven predictors, X11, X139, X3, X39, X6, X62 and X42, are significant. We refit the data using the additive model with these seven predictors. The corresponding mean squared error is 0.1207, which is close to the RCV estimate of σ². Note that σ² is the minimum possible prediction error. It provides a benchmark for other methods to compare with and is achievable when modeling bias and estimation errors are negligible.

To see how the selected variables perform in terms of prediction, we further use leave-one-out cross-validation (CV) and five-fold CV to estimate the mean squared prediction error (MSPE). The leave-one-out CV yields MSPE = 0.1414, and the average of the MSPE obtained from five-fold CV based on 400 random splits of the data is 0.1488, with the 2.5th and 97.5th percentiles being 0.1411 and 0.1626, respectively. The MSPE is slightly greater than the RCV estimate of σ². This is expected, as the uncertainty of parameter estimation has not been accounted for. This bias can be corrected using the theory of linear regression analysis.

Suppose that {xi, Yi}, i = 1, ⋯ , n, is an independent and identically distributed random sample from a linear regression model Y = xᵀβ + ε. The linear predictor Ŷ = xᵀβ̂, where β̂ is the least squares estimate of β, has prediction error at a new observation {x∗, y∗} given by E{(y∗ − x∗ᵀβ̂)² | X, x∗} = σ²(1 + x∗ᵀ(XᵀX)⁻¹x∗), where σ² is the error variance and X is the corresponding design matrix. This explains why the MSPE is slightly greater than the RCV estimate of σ². To further gauge the accuracy of the RCV estimate of σ², define the weighted prediction error |y∗ − x∗ᵀβ̂| / {1 + x∗ᵀ(XᵀX)⁻¹x∗}^{1/2}. Then the leave-one-out method leads to a mean squared weighted prediction error (MSWPE) of 0.1289, and the average of five-fold CV based on 400 random splits of the data yields an MSWPE of 0.1305, with the 2.5th and 97.5th percentiles being 0.1254 and 0.1366, respectively. These results imply that (a) the seven selected variables achieve the benchmark prediction; (b) the modeling biases from using the additive model with these seven variables are negligible; and (c) the RCV estimate provides a very good estimate of σ².
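The effect of the weighting can be checked numerically in the linear-model setting described above. The following is a minimal simulation sketch, assuming Gaussian errors and a fixed design; all dimensions, seeds and variable names are hypothetical choices for illustration. Dividing each squared prediction error by 1 + x∗ᵀ(XᵀX)⁻¹x∗ should bring its average back to σ², while the unweighted average stays above it.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p, sigma2 = 60, 10, 0.5            # assumed illustrative sizes
beta = rng.standard_normal(p)

X = rng.standard_normal((n, p))       # fixed design matrix
XtX_inv = np.linalg.inv(X.T @ X)
x_new = rng.standard_normal(p)        # fixed new covariate vector
leverage = x_new @ XtX_inv @ x_new    # x*' (X'X)^{-1} x*

reps = 20000
# One row per replication: fresh training responses on the same design.
Y = X @ beta + np.sqrt(sigma2) * rng.standard_normal((reps, n))
beta_hat = Y @ X @ XtX_inv            # each row is a least squares estimate
y_new = x_new @ beta + np.sqrt(sigma2) * rng.standard_normal(reps)
err = y_new - beta_hat @ x_new        # prediction errors at x_new

mspe = np.mean(err**2)                        # targets sigma2 * (1 + leverage)
mswpe = np.mean(err**2 / (1.0 + leverage))    # targets sigma2

print(f"sigma2 = {sigma2}, MSPE = {mspe:.3f}, MSWPE = {mswpe:.3f}")
```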

The estimated functions f̂j(xj) of the seven selected predictors are depicted in Figure 5. From the figure, it seems that none of these predictors is significant, since zero lies within the confidence band over the entire range. This may be because we have used too many variables, which increases the variance of the estimates.

Figure 5.

Estimated functions based on 7 variables selected from 28 variables that survive DC-SIS screening by the χ²-test with the RCV error variance estimator.

We further employ the Wald test with Bonferroni correction for the 28 null hypotheses. This leaves only two significant predictors, X11 and X6, at level 0.05. We refit the data with the two selected predictors. Figure 6 depicts the plots of f̂11(x11) and f̂6(x6).
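The Bonferroni adjustment amounts to comparing each p-value with 0.05/28 ≈ 0.0018 instead of 0.05. A minimal sketch follows; the function name `bonferroni_select`, the variable labels and the p-values are made up for illustration and are not results from the supermarket data.

```python
def bonferroni_select(p_values, n_tests, alpha=0.05):
    """Return the names whose p-value is at most alpha / n_tests, and that threshold."""
    threshold = alpha / n_tests
    selected = [name for name, p in p_values.items() if p <= threshold]
    return selected, threshold

# Hypothetical p-values for three of the 28 screened predictors.
example_p = {"var_a": 0.0004, "var_b": 0.0100, "var_c": 0.0400}
selected, threshold = bonferroni_select(example_p, n_tests=28)

print(selected, round(threshold, 5))
```

In this made-up example, all three variables would pass an unadjusted 0.05 test, but only `var_a` survives the adjusted threshold.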

Figure 6.

Estimated functions based on 2 variables selected from 28 variables that survive DC-SIS screening by the χ²-test with the RCV error variance estimator and the Bonferroni adjustment.

4 Discussions

In this paper, we proposed an error variance estimator for ultrahigh dimensional additive models by using the refitted cross-validation technique. This is particularly important given the high level of spurious correlation induced by nonparametric models (see Figure 1 and Lemma 1). We established the root-n consistency and asymptotic normality of the resulting estimator, and examined the empirical performance of the proposed estimator by Monte Carlo simulation. We further demonstrated the proposed methodology via an empirical analysis of supermarket data. The proposed estimator performs well with a moderate sample size. However, when the sample size is very small, the refitted cross-validation procedure may be unstable. How to construct an accurate error variance estimate with a very small sample size is challenging and will be an interesting topic for future research.

Supplementary Material

Acknowledgments

The authors thank the Editor, the AE and reviewers for their constructive comments, which have led to a dramatic improvement of the earlier version of this paper.

Appendix: Proofs

A.1 Proofs of Lemma 1 and Theorem 1

Let Ψ be the corresponding design matrix of model (2.3). Specifically, Ψ is an n × (pdn) matrix with ith row being (B11(Xi1), ⋯ , B1dn(Xi1), B21(Xi2), ⋯ , Bpdn(Xip)). Denote by Ψ(𝒟̂*) the corresponding design matrix of model 𝒟̂*, and by P𝒟̂* the corresponding projection matrix. That is, P𝒟̂* = Ψ(𝒟̂*)(Ψ(𝒟̂*)ᵀΨ(𝒟̂*))⁻¹Ψ(𝒟̂*)ᵀ. Denote . Without loss of generality, assume that the first s nonparametric components are nonzero and the others are all zero. By the assumption that all active predictors are retained by the DC-SIS screening procedure, and for ease of notation, we may further assume without loss of generality that 𝒟̂* = {1, 2, ⋯ , ŝ}, where ŝ = |𝒟̂*|.

Proof of Lemma 1

Note that

| (A.1) |

where λmin(A) stands for the minimal eigenvalue of a matrix A. To show Lemma 1, we need to bound the eigenvalues of the matrix Ψ(𝒟̂*)ᵀΨ(𝒟̂*). Note that Ψ(𝒟̂*) = (Ψ1, ⋯ , Ψŝ) with

| (A.2) |

Let and . Then we have Ψ(𝒟̂*)b = Ψ1b1 + ⋯ + Ψŝbŝ. As shown in Lemma S.5 in the supplemental material of this paper, it follows that

| (A.3) |

This yields that

| (A.4) |

since ||Ψibi||2 ≥ 0. Furthermore,

Recalling Lemma 6.2 of Zhou, Shen and Wolfe (1998), there exist two positive constants C1 and C2 such that, for any 1 ≤ i ≤ ŝ,

| (A.5) |

Thus,

| (A.6) |

The last equation is valid due to . Combining (A.4) and (A.6), we have

| (A.7) |

Thus, it follows by using (A.1) that

| (A.8) |

By the notation (A.2), we have

| (A.9) |

Recalling that 0 ≤ Bij(·) ≤ 1, for any i, j and (Stone, 1985), we note the fact that for m ≥ 2, . Observe that, using Condition (C1), for any integers i and j

| (A.10) |

Taking A1 = 1/a and A2 = b in Condition (C1), it follows that the right hand side of above inequality will not exceed

| (A.11) |

Using Bernstein’s Inequality (see Lemma 2.2.11 of Van der Vaart and Wellner, 1996), we have

When we take , with and sufficiently large C5, the exponent in the last equation goes to negative infinity. Thus, with probability approaching one, we have and

| (A.12) |

Since the random errors are independent and identically distributed with mean 0 and variance σ², by the Law of Large Numbers, we have

| (A.13) |

Thus, we obtain that

| (A.14) |

Proof of Theorem 1

Note that

where fj(Xj) = (fj(Xj1), ⋯ , fj(Xjn))ᵀ, j = 1, ⋯ , p. To simplify the first term in , let . Then

where fnj(Xj) = (fnj(Xj1), ⋯ , fnj(Xjn))T = (Bj(Xj1)T Γj , ⋯ , Bj(Xjn)T Γj)T , j = 1, ⋯ , p. Define

Then Δ1 = Δ11 + Δ12 + Δ13. Note that is a projection matrix on the complement of the linear space of Ψ(𝒟̂*), and therefore . Thus, both Δ12 and Δ13 equal 0. We next calculate the order of Δ11. By the property of B-splines (Stone, 1985), there exists a constant c1 > 0 such that . Since is a projection matrix, its eigenvalues equal either 0 or 1. By the Cauchy-Schwarz inequality and some straightforward calculation, it follows that . Therefore . Under the conditions in Theorem 1(i), . As a result, Δ1 = op(n). Under the conditions in Theorem 1(ii), ŝ = o(n^{(2d−1)/(4(2d+1))}) and therefore .

Now we deal with the second term in . Denote . Since , it follows that

Denote and . Thus, Δ2 = 2(Δ21 − Δ22). To deal with Δ21, we bound the tails of (fj(Xji) − fnj(Xji))εi, i = 1, ⋯ , n, j = 1, ⋯ , ŝ. For any m ≥ 2, because fj ∈ 𝒞d([a, b]) and fnj belongs to the spline space 𝒮l([a, b]), we have

which is bounded by for some constant C6 by the property of B-spline approximation. There exists a constant c1 > 0 such that by the property of B-spline (Stone, 1985). Applying Condition (C1) for E{exp(A1 |εi|)|xi}, it follows that

Denote , and C8 = C6/A1. Using the Bernstein’s inequality, for some M, we have

| (A.15) |

If we take , and for sufficiently large C9, then the tail probability (A.15) goes to zero. Thus,

| (A.16) |

Under the condition of Theorem 1(i), ŝ = o(n^{(4d+1)/(2(2d+1))}) with ζ < 1. Thus, . Following arguments similar to those used for Δ11, it follows that Δ21 = op(n). Under the condition of Theorem 1(ii), ŝ = o(n^{d/(2d+1)−ζ/2}) with ζ < 3/(2d+1). Thus, . By the Cauchy-Schwarz inequality, it follows by Lemma 1 that

When ζ < 4d/(2d+1) and ŝ = Op(log(n^α)), α ≤ 4d/(2d+1) − ζ, it follows that Δ22 = op(n) under the condition of Theorem 1(i). When ζ < (2d − 1)/(2(2d + 1)) and ŝ = log(n^α), α ≤ (2d − 1)/(2(2d + 1)) − ζ, . Thus, under the condition of Theorem 1(ii). Comparing the orders of Δ11, Δ21 and Δ22, we obtain the order of ŝ in Theorem 1. Therefore, we have

and it follows by the definition of that

Since ŝ dn = op(n) and , we have

| (A.17) |

Under conditions of Theorem 1(i), the small order term in (A.17) is bounded by op(1). We have

| (A.18) |

To establish the asymptotic normality, we should study the asymptotic bias of the estimator. By the Central Limit Theorem, it follows that

| (A.19) |

Note that under conditions of Theorem 1(ii), the small order term in (A.17) is bounded by op(n−1/2). Therefore, the asymptotic normality holds.

A.2 Proof of Theorem 2

Define events and 𝒜n = 𝒜n1 ∩ 𝒜n2. Unless specifically mentioned, our analysis and calculation are based on the event 𝒜n.

Let be the design matrix corresponding to , and . Note that . Thus,

By the same argument as that in the proof of Theorem 1, the second term in the above equation is of the order . Thus,

We next calculate the order of . Note that

We now calculate its variance

| (A.20) |

Denote by Pij the (i, j)th entry of matrix . The first term in the right-hand side of the last equation can be written as

It follows by the independence between X and ε that

Therefore, (A.20) equals

Noting the fact that σ⁴ = (Eε²)² ≤ Eε⁴, the last equation is bounded by

| (A.21) |

Note that

and that . It follows that

since for the projection matrix . Consequently, by Markov’s inequality, we obtain

| (A.22) |

Therefore, we have that

Similarly, it follows that

Finally, we deal with . Take ŝ1 = o(n^{(2d−1)/(4(2d+1))}) and ŝ2 = o(n^{(2d−1)/(4(2d+1))}) so that n/(n − 2ŝ1dn) = 1 + op(1) and n/(n − 2ŝ2dn) = 1 + op(1). Then

This completes the proof of Theorem 2.
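The proofs above rely repeatedly on two standard facts about a projection matrix P = Ψ(ΨᵀΨ)⁻¹Ψᵀ: its eigenvalues are 0 or 1, and a quadratic form εᵀ(I − P)ε in independent errors has mean σ² times the residual degrees of freedom. The following numerical check is a sketch with a random Gaussian design standing in for the B-spline design matrix; the sizes, seed and variable names are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n, k, sigma2 = 50, 8, 2.0               # assumed illustrative sizes

Psi = rng.standard_normal((n, k))       # stand-in for a spline design matrix
P = Psi @ np.linalg.inv(Psi.T @ Psi) @ Psi.T
M = np.eye(n) - P                       # projection onto the orthogonal complement

eigvals = np.linalg.eigvalsh(P)         # P is symmetric, so eigvalsh applies
is_idempotent = np.allclose(P @ P, P)
trace_P = np.trace(P)                   # equals the rank k

# Monte Carlo mean of eps' (I - P) eps / (n - k), which targets sigma2.
reps = 5000
eps = np.sqrt(sigma2) * rng.standard_normal((reps, n))
quad = np.einsum("ri,ij,rj->r", eps, M, eps)
mean_scaled = quad.mean() / (n - k)

print(is_idempotent, round(trace_P, 6), round(mean_scaled, 3))
```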

Footnotes

The content is solely the responsibility of the authors and does not necessarily represent the official views of the NSF, NIH and NIDA.

Contributor Information

Zhao Chen, Research Associate, Department of Statistics, The Pennsylvania State University at University Park, PA 16802-2111, USA. Chen’s research was supported by NSF grant DMS-1206464 and NIH grants R01-GM072611.

Jianqing Fan, Frederick L. Moore’18 Professor of Finance, Department of Operations Research & Financial Engineering, Princeton University, Princeton, NJ 08544, USA and Honorary Professor, School of Data Science, Fudan University, and Academy of Mathematics and System Science, Chinese Academy of Science, Beijing, China. Fan’s research was supported by NSF grant DMS-1206464 and NIH grants R01-GM072611 and R01GM100474-01.

Runze Li, Verne M. Willaman Professor, Department of Statistics and The Methodology Center, The Pennsylvania State University, University Park, PA 16802-2111. His research was supported by a NSF grant DMS 1512422, National Institute on Drug Abuse (NIDA) grants P50 DA039838, P50 DA036107, and R01 DA039854.

References

- 1. Bach FR. Consistency of the group lasso and multiple kernel learning. The Journal of Machine Learning Research. 2008;9:1179–1225.
- 2. Bühlmann P, Van de Geer S. Statistics for High-Dimensional Data. Springer; Berlin: 2011.
- 3. De Boor C. A Practical Guide to Splines. Vol. 27. New York: Springer-Verlag; 1978.
- 4. Donoho D. High-dimensional data analysis: The curses and blessings of dimensionality. AMS Math Challenges Lecture. 2000:1–32.
- 5. Fan J, Feng Y, Song R. Nonparametric independence screening in sparse ultra-high-dimensional additive models. Journal of the American Statistical Association. 2011;106:544–557. doi: 10.1198/jasa.2011.tm09779.
- 6. Fan J, Guo S, Hao N. Variance estimation using refitted cross-validation in ultrahigh dimensional regression. Journal of the Royal Statistical Society: Series B (Statistical Methodology). 2012;74(1):37–65. doi: 10.1111/j.1467-9868.2011.01005.x.
- 7. Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association. 2001;96:1348–1360.
- 8. Fan J, Li R. Statistical Challenges with High Dimensionality: Feature Selection in Knowledge Discovery. In: Sanz-Sole M, Soria J, Varona JL, Verdera J, editors. Proceedings of the International Congress of Mathematicians. III. European Mathematical Society; Zurich: 2006. pp. 595–622.
- 9. Fan J, Lv J. Sure independence screening for ultrahigh dimensional feature space. Journal of the Royal Statistical Society: Series B. 2008;70:849–911. doi: 10.1111/j.1467-9868.2008.00674.x.
- 10. Fan J, Lv J. A selective overview of variable selection in high dimensional feature space. Statistica Sinica. 2010;20(1):101.
- 11. Friedman J, Stuetzle W. Projection pursuit regression. Journal of the American Statistical Association. 1981;76:817–823.
- 12. Hall P, Miller H. Using generalized correlation to effect variable selection in very high dimensional problems. Journal of Computational and Graphical Statistics. 2009;18:533–550.
- 13. Huang J, Horowitz JL, Wei F. Variable selection in nonparametric additive models. Annals of Statistics. 2010;38:2282–2313. doi: 10.1214/09-AOS781.
- 14. Li R, Zhong W, Zhu L. Feature screening via distance correlation learning. Journal of the American Statistical Association. 2012;107:1129–1139. doi: 10.1080/01621459.2012.695654.
- 15. Lin Y, Zhang HH. Component selection and smoothing in multivariate nonparametric regression. The Annals of Statistics. 2006;34:2272–2297.
- 16. Meier L, Van de Geer S, Bühlmann P. High-dimensional additive modeling. The Annals of Statistics. 2009;37:3779–3821.
- 17. Ravikumar P, Lafferty J, Liu H, Wasserman L. Sparse additive models. Journal of the Royal Statistical Society: Series B. 2009;71:1009–1030.
- 18. Stone CJ. Additive regression and other nonparametric models. The Annals of Statistics. 1985:689–705.
- 19. Van Der Vaart AW, Wellner JA. Weak Convergence and Empirical Processes. Springer; 1996.
- 20. Wang H. Forward regression for ultra-high dimensional variable screening. Journal of the American Statistical Association. 2009;104(488).
- 21. Xue L. Consistent variable selection in additive models. Statistica Sinica. 2009;19:1281–1296.
- 22. Zhou S, Shen X, Wolfe DA. Local asymptotics for regression splines and confidence regions. The Annals of Statistics. 1998;26:1760–1782.
