Abstract

An experiment was conducted to investigate the feasibility of using functional near-infrared spectroscopy (fNIRS) to image cortical activity in the language areas of cochlear implant (CI) users and to explore the association between the activity and their speech understanding ability. Using fNIRS, 15 experienced CI users and 14 normal-hearing participants were imaged while presented with either visual speech or auditory speech. Brain activation was measured from the prefrontal, temporal, and parietal lobe in both hemispheres, including the language-associated regions. In response to visual speech, the activation levels of CI users in an a priori region of interest (ROI)—the left superior temporal gyrus or sulcus—were negatively correlated with auditory speech understanding. This result suggests that increased cross-modal activity in the auditory cortex is predictive of poor auditory speech understanding. In another two ROIs, in which CI users showed significantly different mean activation levels in response to auditory speech compared with normal-hearing listeners, activation levels were significantly negatively correlated with CI users’ auditory speech understanding. These ROIs were located in the right anterior temporal lobe (including a portion of prefrontal lobe) and the left middle superior temporal lobe. In conclusion, fNIRS successfully revealed activation patterns in CI users associated with their auditory speech understanding.

Keywords: cochlear implant, speech understanding, functional near-infrared spectroscopy, cortical activity

Introduction

There is wide variability in auditory speech understanding in adult cochlear implant (CI) users. Age at implantation, hearing-loss history, and CI experience only account for about 10% of this variance (Blamey et al., 2013). Studies have shown functional differences in the brains of hearing-impaired individuals compared with normal-hearing (NH) listeners (Coez et al., 2008; Giraud, Price, Graham, & Frackowiak, 2001; Lazard et al., 2010; Lazard, Lee, Truy, & Giraud, 2013; Strelnikov et al., 2013; Stropahl, Chen, & Debener, 2016). These differences, usually in the language-associated brain regions, have been proposed as a factor leading to the variance in auditory speech understanding among CI users after implantation. In this study, functional near-infrared spectroscopy (fNIRS) was used to investigate brain activation in experienced CI users in response to auditory and visual speech and to explore the association between brain activation in CI users in defined regions of interest (ROIs) and their auditory speech understanding ability.

In experienced CI users with postlingual deafness, brain functional changes may have occurred during their periods of deafness due to decreased auditory input and increased reliance on visual cues (speechreading) for communication. For example, studies using functional magnetic resonance imaging (fMRI) (Finney, Fine, & Dobkins, 2001; Lazard et al., 2010) and fNIRS (Dewey & Hartley, 2015) have observed significant activation evoked by visual stimuli in auditory-related brain regions in deaf people (preimplant) but not in NH listeners. These observed brain functional changes include greater activation in deaf people than in NH listeners in the right auditory cortex (secondary and auditory association areas) when responding to visual object stimuli (Finney et al., 2001), in the right supramarginal gyrus when mentally rhyming visually presented written words (Lazard et al., 2010), and in the right auditory cortex (Heschl’s gyrus and superior temporal gyrus) in response to visual checkerboard stimuli (Dewey & Hartley, 2015). The right supramarginal gyrus in NH listeners has been associated with environmental sound processing but not with speech processing (Thierry, Giraud, & Price, 2003). However, deaf people showed abnormal activation in this region during phonological processing (Lazard et al., 2010), which suggests that brain functional changes may have occurred to support language processing.

Abnormal visually evoked activation in areas that are normally associated with auditory functions (the superior temporal sulcus [STS] or superior temporal gyrus [STG]) has also been consistently observed in newly implanted CI users (Strelnikov et al., 2013) and in experienced CI users (Chen, Sandmann, Thorne, Bleichner, & Debener, 2015). In newly implanted CI users tested with positron emission tomography (PET; Strelnikov et al., 2013), visually evoked activation in the right STS or STG was negatively associated with later (after 6 months) speech understanding ability. An fNIRS study (Chen et al., 2015) found that such increased visually evoked activation also existed in both left and right STS or STGs (auditory cortex) in experienced CI users, suggesting that the increased visual activation in areas normally associated with auditory function can persist long after implantation.

Further functional changes following implantation have been shown in longitudinal studies using PET as CI users adapt to the novel auditory stimulation throughout the first year of implant use (Giraud, Price, Graham, Truy, & Frackowiak, 2001; Petersen, Gjedde, Wallentin, & Vuust, 2013; Rouger et al., 2012; Strelnikov et al., 2010). Strelnikov et al. (2010) showed that resting-state activity increased over time in language-associated regions including the right visual cortex, auditory cortex, Broca’s area, and posterior temporal lobe. In another study, Strelnikov et al. (2013) investigated the correlation between brain activation in newly implanted CI users during resting state or when speechreading and their auditory speech understanding 6 months after implantation. They found that both resting-state and visually evoked activity in the left inferior frontal gyrus (Broca’s area) and in the right visual cortex of newly implanted CI users showed positive correlations with auditory speech understanding 6 months after implantation. In contrast, activity in both conditions in the right STS or STG was negatively correlated with their auditory speech understanding 6 months postoperatively. Thus, characteristics of functional brain activity before or shortly after implant switch-on have been positively or negatively associated with recovery of auditory speech understanding in the year following implantation.

Because fNIRS imaging systems are compatible with CIs and are noninvasive for clinic use (see review by Saliba, Bortfeld, Levitin, & Oghalai, 2016), fNIRS has recently been used to investigate functional brain activity in CI users (Anderson, Wiggins, Kitterick, & Hartley, 2017; Chen et al., 2015; McKay et al., 2016; Olds et al., 2016; Sevy et al., 2010; van de Rijt et al., 2016). Chen et al. (2015) used fNIRS and nonspeech stimuli to investigate both auditory and visual cross-modal brain activation, that is, the response in auditory cortex to visual stimuli and vice versa. They found that both types of cross-modal activation in CI users were greater than in NH listeners. However, Anderson et al. (2017) and van de Rijt et al. (2016) found that compared with NH listeners, there was no difference in auditory cross-modal activation between preimplanted deaf people, newly implanted CI users, or experienced CI users. The inconsistent results in the aforementioned three studies might be due to the different stimuli that were used, that is, nonspeech stimuli (Chen et al., 2015) and speech stimuli (Anderson et al., 2017; van de Rijt et al., 2016). Further, in the study of van de Rijt et al. (2016), fNIRS data were recorded from only five CI users, leading to a lack of statistical power.

This study aimed to determine whether fNIRS response amplitudes in CI users to auditory or visual speech in different brain regions correlated with their auditory speech understanding. The overall hypothesis of the study is that differences in speech understanding ability among experienced CI users are correlated with differences in brain activation evoked by auditory or visual speech stimuli.

An a priori ROI (the STS or STG) was selected, guided by previous research as discussed earlier, to test whether activity evoked by visual speech in the STS or STG is negatively correlated with the auditory speech understanding of CI users. It has been proposed by Strelnikov et al. (2013) that increased visual processing in the STS or STGs may be associated with reduced auditory processing ability in this region of the language network. This study specifically examined the hypothesis that high visual activation of the STS or STGs in experienced CI users is associated with poorer auditory speech understanding.

Further, two data-driven approaches were used to identify ROIs. The first approach was to locate brain regions in which the group mean difference between NH and CI listeners in fNIRS activation in response to visual or auditory speech stimuli was greatest. Activity in these regions (in which fNIRS activity in the CI users was most different to the NH listeners) is likely to reflect the functional differences that are common to all the CI users. Differences in visually evoked activation among the CI group within this identified region may be a result of variability in the functional changes that occurred during deafness or may be the result of reduced auditory activity in multimodal areas. On the other hand, differences across the CI group in auditory-evoked activity within this region may be due to the higher average difficulty in speech understanding in the CI group or other factors related to the electrical stimulation this group receives (rather than the acoustic hearing in the NH group). In addition, differences in auditory activity between the CI group and the NH group could be due to differences in the use of cognitive resources or in listening effort.

The second approach was to find brain regions where variance in activity was greater across subjects in the CI group compared with the NH group. Because auditory speech understanding varies greatly between CI users, this study proposes that the variance in speech understanding may be related to variance in the degree to which functional changes may have occurred during deafness or may be reflected in variance in the amount of cognitive resources needed to interpret the electrical speech signal.

Methods

Participants

Twenty postlingually deaf adult CI users and 19 NH listeners were recruited for this experiment. All the participants were native English speakers, healthy with no history of diagnosed neurological disorder, and had normal or corrected-to-normal vision. CI users were contacted through the Royal Victorian Eye and Ear Hospital in Victoria, Australia. The NH listeners were recruited by word-of-mouth and were students, employees of the Bionic Institute, or acquaintances of the researchers involved in the project. The inclusion criterion for these participants was normal pure tone thresholds (20 dB HL or less) at octave frequencies between 250 Hz and 4000 Hz. Four CI users and 5 NH listeners were excluded from the analysis due to thick hair causing poor connection of the optodes with the scalp (Orihuela-Espina, Leff, James, Darzi, & Yang, 2010), and one additional CI user withdrew from the study part way through. Thus, usable data were obtained from 15 CI users (9 men and 6 women) and 14 NH listeners (8 men and 6 women). To remove side of implantation as a factor in the analysis, only CI users with a right-ear implant were recruited, and stimuli were presented to the right ear in both groups. In the CI group, 10 participants had a unilateral right-ear CI and 5 participants had bilateral CIs, and all participants had over 12 months of experience using their right-ear implant. Table 1 presents the details regarding CI participants. This study aimed to recruit NH listeners who were age-matched with CI participants. However, this criterion was difficult to meet due to the incidence of high-frequency hearing loss in the aging population. The mean age of 14 NH listeners (ranging from 33 to 70, mean ± standard deviation [SD], 53.5 ± 12.0 years) was less than the mean age of 15 CI users (ranging from 46 to 79, mean ± SD, 64.2 ± 10.1 years).
The potential effect of age difference between groups on the selection of the ROIs for hypothesis testing was therefore considered in the analysis of results. This study was approved by the Royal Victorian Eye and Ear Hospital human ethics committee, and all the participants provided their written informed consent.

Table 1.

Information of CI Users, Including Speech Understanding Scores.

| CI users | Age | Ears of implant, CI duration (months) | Right-ear implant | CNC score (%) | CUNY sentence, quiet (%) | CUNY sentence, SNR 15 dB (%) | CUNY sentence, SNR 10 dB (%) | CUNY sentence, SNR 5 dB (%) | Speechreading score (%) |
|---|---|---|---|---|---|---|---|---|---|
| CI1 (M) | 79 | B–L(1), R(66) | CI24RE | 83 | 99 | 96 | 67 | 45 | |
| CI2 (F) | 71 | U–R(78) | CI24RE | 96 | 100 | 100 | 99 | 67 | 81 |
| CI3 (M) | 73 | U–R(79) | CI24RE | 91 | 100 | 98 | 80 | 31 | 92 |
| CI4 (F) | 46 | U–R(20) | CI422 | 83 | 99 | 96 | 99 | 64 | 97 |
| CI5 (M) | 57 | U–R(89) | CI24RE | 89 | 99 | 100 | 47 | 11 | 94 |
| CI6 (M) | 44 | B–L(83), R(47) | CI512 | 97 | 60 | 100 | 100 | 100 | 97 |
| CI7 (M) | 66 | U–R(86) | CI24RE | 85 | 99 | 93 | 74 | 31 | 58 |
| CI8 (F) | 67 | U–R(14) | CI24RE | 49 | 60 | 48 | 33 | 6 | 94 |
| CI9 (F) | 64 | U–R(74) | CI24RE | 89 | 98 | 94 | 65 | 30 | 86 |
| CI10 (M) | 71 | U–R(40) | CI422 | 55 | 90 | 66 | 35 | 11 | 58 |
| CI11 (M) | 64 | U–R(95) | CI24RE | 75 | 96 | 85 | 59 | 26 | 100 |
| CI12 (F) | 56 | B–L(59), R(85) | CI24RE | 98 | 98 | 99 | 100 | 63 | 72 |
| CI13 (M) | 72 | U–R(12) | CI422 | 81 | 98 | 96 | 83 | 25 | 75 |
| CI14 (M) | 70 | B–L(5), R(88) | CI24RE | 61 | 95 | 93 | 43 | 9 | 81 |
| CI15 (F) | 64 | B–L(53), R(79) | CI24M | 49 | 86 | 73 | 40 | 8 | 89 |

Note. B = bilateral; U = unilateral; L = left; R = right; CI = cochlear implant; CNC = consonant-nucleus-consonant; CUNY = City University of New York; SNR = signal-to-noise ratio.

Tests of Auditory Speech Understanding

In the first session, the auditory speech understanding ability of each CI user was tested in a sound-treated booth with background noise level less than 30 dBA. Auditory stimuli were presented to the right-ear implant of CI users via a direct-audio-input accessory, and the speech processor program was set to the everyday setting. CI users who had a hearing aid or implant in the left ear were instructed to take the hearing aid or implant off, and those with residual hearing in the left ear were asked to use an earplug in that ear to limit acoustic input, such as ambient sounds in the test booth. Open-set consonant-nucleus-consonant (CNC) words (Peterson & Lehiste, 1962) and City University of New York (CUNY) sentences (Boothroyd, Hanin, & Hnath, 1985) were used. Words and sentences were presented to CI users at an equivalent level of 60 dBA, that is, the direct-audio-input sound level was equalized to the level that the CI processor received in the free field at 60 dBA. The perception of CNC words was measured in quiet and assessed according to the percentage of the phonemes that participants repeated back correctly among a total of 150 phonemes. The CUNY sentences were presented both in quiet and with different levels of multitalker babble noise, with signal-to-noise ratios (SNRs) from 15 dB to 5 dB in 5 dB steps. For each participant, four different sentence lists were randomly selected out of a group of 10 lists. Participants’ responses to CUNY sentences were scored according to the percentage of words that they repeated back correctly in a list of 12 sentences (a total of about 102 words). For participants with NH, no auditory speech tests were conducted.

Procedures for fNIRS Data Acquisition

Speech stimuli in fNIRS session

Speech stimuli consisted of 75 spondees (words that have two equally stressed syllables) that were recorded using a GoPro camera and an extra microphone in a sound-treated booth and were spoken by one female and one male Australian English-native speaker. Each spondee word was extracted from the video recording, and audio-only and visual-only components of the spondees were separated. The levels of all the auditory spondees were normalized to the same root mean square level.

Speechreading test

Before fNIRS data collection, a speechreading test was performed for each participant to make sure that the visual speech recognition during fNIRS data collection would not be too difficult. The speechreading test was conducted in a sound-treated booth. Participants were seated in an armchair, and the visual stimuli were presented on a CRT monitor in front of them at a distance of 1.5 m. During the closed-set speechreading test, a list of 12 spondee words was presented without auditory cues, with each word presented 3 times, in random order. Half of the words (randomly selected) were spoken by the male speaker and half by the female speaker. Participants were familiarized with the words before the test; after each presentation, participants were asked to select the word that they thought they saw from the list. The speechreading ability of participants was scored as the percentage of words they repeated back correctly.

fNIRS data collection

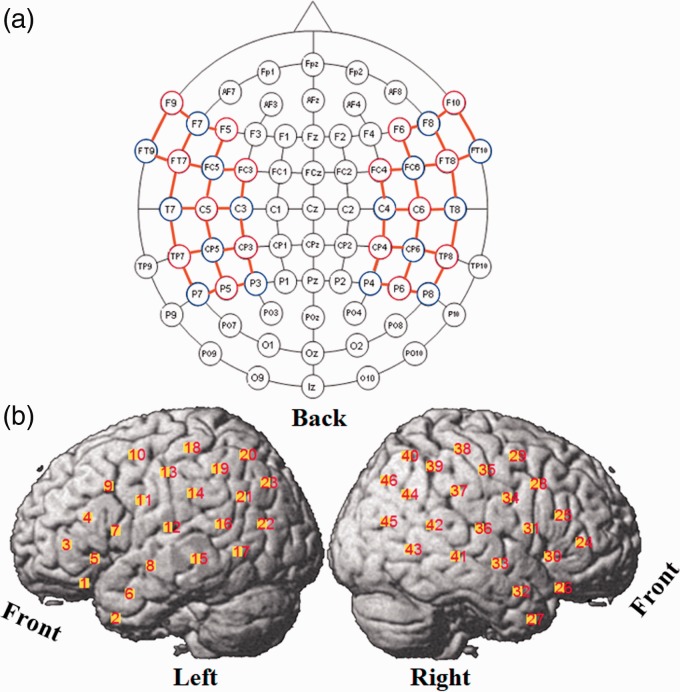

The fNIRS imaging method uses near-infrared light to measure the concentration changes of hemoglobin in the blood, which has been shown to be coupled with the neurons’ response to stimuli (Ogawa, Lee, Kay, & Tank, 1990). The fNIRS system used in this study (NIRScout, NIRX medical technologies, LLC) was a continuous-wave NIRS instrument, with 16 LED illumination sources and 16 photodiode detectors. Each source had two illuminators and emitted near-infrared light with wavelengths of 760 nm and 850 nm. An Easycap was used to hold the sources and detectors. By registering the positions of the sources and detectors on the Easycap to the 10–10 system (American Clinical Neurophysiology, 2006; Jurcak, Tsuzuki, & Dan, 2007), the probabilistic brain region where the fNIRS signals were measured was determined. The experimental montage is shown in Figure 1. Forty-six channels were defined as source-detector pairs that were adjacent in the montage and separated by approximately 3 cm (orange lines in Figure 1(a)). The central points of the channels relative to brain regions are shown in Figure 1(b).

Figure 1.

fNIRS montage. (a) Sources (red circles), detectors (blue circles), and channels (orange lines) in the 10–10 system. (b) Channel positions on the brain cortex.

The Easycap was positioned on the head of the participant and secured by a chin strap. To reduce the influence of hair on the quality of the fNIRS signals, the hair under each grommet was carefully pushed aside before inserting the optodes into the grommets. Before commencing data collection, the automatic gains (0–8) set by the NIRScout system were checked to determine which source-detector pairs (channels) had good optode-skin contact. A maximum gain of 8 indicates that the detector received little or no light from the source, whereas gains less than 4 indicate that the light detected may have taken a more direct route from the source to detector rather than being scattered through layers of the cortex as required. In either of these cases, the optodes of those channels were removed to check for hair obstruction and skin contact and replaced. The procedure was repeated several times until as many channels as possible had gains in the acceptable range of 4 to 7.

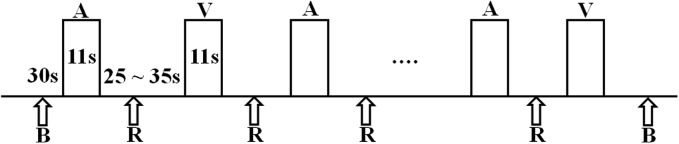

fNIRS data were collected in two test periods in the same booth as the speechreading test, with the room lights turned off during data collection. One test period was a 5-min resting-state period, during which participants were instructed to close their eyes and relax. The second test period lasted for 12 min, during which participants engaged in a word-recognition task. In the latter period, a pseudorandom block design was used, starting with a 30-s baseline (rest) and followed by 14 blocks of stimuli alternating between auditory and visual speech stimuli (Figure 2). Between auditory and visual blocks, there was a no-stimulus interval (rest) with a random duration between 25 and 35 s. Each 11-s-long stimulus block contained five spondee words. Thirty-five male-talker and 35 female-talker words were selected from the recorded set, and for each subject, the 70 words were randomly distributed across 14 blocks. To maintain attention to the speech stimuli, participants were asked to listen or watch for three specific spondee words in each block and to push a button every time they recognized one of those words. Auditory stimuli in the fNIRS session were presented in the same way as in the auditory speech tests, that is, to the right-ear processor of CI users or the right ear of NH listeners (ER-3A insert earphone, E-A-RTONE GOLD) at a sound level equivalent to 65 dBA. CI users took off their left-ear devices, or had their left ears plugged if there was residual hearing, and NH listeners had their left ear plugged. Visual stimuli were presented on the monitor as in the speechreading test. Both oral and written instructions were given to participants before data collection.

Figure 2.

Pseudorandom block design of experimental stimuli presentation. A stands for auditory spondee words (11 s), B stands for baseline (30 s), R stands for rest between two blocks of stimuli (25–35 s), and V stands for visual spondee words (11 s). In total, seven blocks of auditory stimuli and seven blocks of visual stimuli were presented.
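The block timing described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `make_schedule`, the random choice of the first modality, and the uniform draw of rest durations are hypothetical and are not taken from the study's presentation software.

```python
import random

def make_schedule(n_blocks=14, block_s=11.0, baseline_s=30.0,
                  rest_range=(25.0, 35.0), seed=None):
    """Return a list of (modality, onset_in_seconds) tuples.

    Modalities alternate between "A" (auditory) and "V" (visual).
    Which modality comes first is chosen at random (an assumption).
    """
    rng = random.Random(seed)
    first = rng.choice(["A", "V"])
    other = "V" if first == "A" else "A"
    onset = baseline_s              # first block starts after the baseline
    schedule = []
    for i in range(n_blocks):
        schedule.append((first if i % 2 == 0 else other, onset))
        # Next onset = end of this block plus a random rest interval.
        onset += block_s + rng.uniform(*rest_range)
    return schedule

schedule = make_schedule(seed=1)
```

Each onset is separated from the next by the 11-s block plus a 25 to 35 s rest, so consecutive onsets are 36 to 46 s apart.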

fNIRS Data Analysis

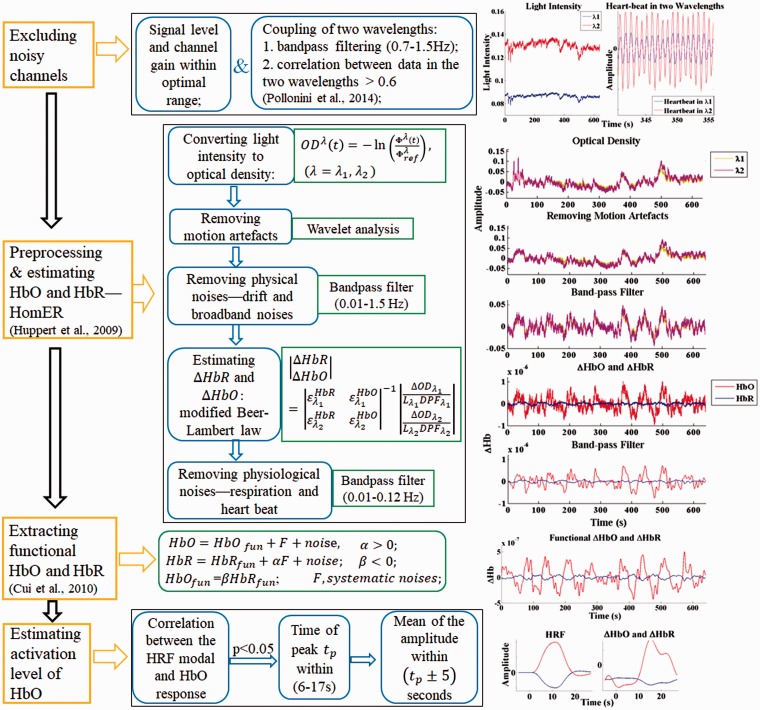

The signals recorded by the NIRScout system were imported to MATLAB for further processing. A flowchart of signal processing steps used in this study and an example of the results from each step are shown in Figure 3. There are four main steps, as shown in the yellow boxes on the left side: (a) exclusion of bad channels, (b) removal of motion artifacts and unwanted physiological signals, (c) conversion of data into oxygenated hemoglobin (HbO) and deoxygenated hemoglobin (HbR) concentration changes, and (d) calculation of activity level from the hemodynamic response.

Figure 3.

fNIRS signal processing. Flowchart of signal processing (left), explanation and algorithm of signal processing for each step (middle), and results after each step for one example channel (right). The oxygenated hemoglobin (HbO) concentration changes are in units of Mol.

Channels from which data were poor in quality were excluded from further analysis according to the following two criteria: first, if the automatic gain level was not in the range of 4 to 7, as explained earlier; second, if the scalp coupling index (Pollonini, Bortfeld, & Oghalai, 2016; Pollonini et al., 2014) was less than 0.6. The scalp coupling index is a measure of how well the source and detector optodes for a given channel are coupled to the skin. To determine the scalp coupling index, a bandpass filter (0.7–1.5 Hz) was used to isolate the frequency range that corresponded to blood volume pulses related to the heartbeat. The correlation between the filtered signals corresponding to the two NIR wavelengths in each channel was then calculated. It was assumed that, if there was good optode-skin contact, heartbeat signals would be present in the signals of both NIR wavelengths and therefore lead to a significant correlation of those signals (Themelis et al., 2007). Channels with absolute correlation values less than 0.6 were excluded. This value is lower than the criterion value of 0.75 used by Pollonini et al. (2014) due to the different source-detector distances and NIRS devices that were used in the two studies.
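The two exclusion criteria can be illustrated with a short Python sketch (the study itself used MATLAB). The function names, the Butterworth filter type, its order, and the sampling rate `fs` are assumptions made for illustration; only the 0.7–1.5 Hz band, the 0.6 threshold, and the gain range of 4 to 7 come from the text.

```python
import numpy as np
from scipy.signal import butter, filtfilt

def scalp_coupling_index(wl1, wl2, fs, band=(0.7, 1.5), order=3):
    """Correlation between the cardiac-band components of the two wavelengths."""
    nyquist = fs / 2.0
    b, a = butter(order, [band[0] / nyquist, band[1] / nyquist], btype="bandpass")
    f1 = filtfilt(b, a, wl1)   # zero-phase filtering of the 760 nm signal
    f2 = filtfilt(b, a, wl2)   # zero-phase filtering of the 850 nm signal
    return float(np.corrcoef(f1, f2)[0, 1])

def keep_channel(wl1, wl2, fs, gain, sci_threshold=0.6, gain_range=(4, 7)):
    """Apply both criteria: acceptable gain and sufficient scalp coupling."""
    sci = scalp_coupling_index(wl1, wl2, fs)
    gain_ok = gain_range[0] <= gain <= gain_range[1]
    return gain_ok and abs(sci) >= sci_threshold
```

A channel in which both wavelengths carry a clear heartbeat component yields an index close to 1, whereas a poorly coupled channel dominated by independent noise yields an index near 0.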

After channel exclusion, signal processing was conducted on the raw (unfiltered) data in the remaining channels. Data analysis included preprocessing to remove motion artifacts, drift, and unwanted signals such as Mayer waves (Julien, 2006), respiratory fluctuations, and the heartbeat signal using the following steps:

(a) The raw data recorded from each channel (light intensity) were converted to the optical density, which is a logarithmic measurement of the changes in light intensity.

(b) The motion artifacts in each channel, which were caused by the physical displacement of the optical probes from the surface of the subject’s head, were removed using wavelet analysis in Homer software (Huppert, Diamond, Franceschini, & Boas, 2009). The wavelet-based motion artifact removal method as proposed by Molavi and Dumont (2012) was used. With wavelet decomposition, motion artifacts appear as abrupt breaks in the wavelet domain, whereas hemodynamic responses to stimuli have less variable coefficients. To remove the motion artifacts, wavelet coefficients identified as outliers (using an interquartile-range parameter of 0.1) were set to zero.

(c) Physical noises including the slow drift (frequencies lower than 0.01 Hz) and high-frequency broadband noises (frequencies higher than 1.5 Hz) were removed using a band-pass filter (cutoff frequency, 0.01–1.5 Hz).
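Steps (a) and (c) can be sketched as follows; the wavelet step (b) is omitted because it relies on the Homer implementation. This is a minimal sketch, assuming that optical density is taken relative to each channel's mean intensity and that a zero-phase Butterworth filter is used. The filter type, its order, and the sampling rate `fs` are not stated in the text and are assumptions.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def intensity_to_od(intensity):
    """Optical density change relative to the mean intensity of the channel."""
    intensity = np.asarray(intensity, dtype=float)
    return -np.log(intensity / intensity.mean(axis=0))

def bandpass(data, fs, low, high, order=3):
    """Zero-phase band-pass filter along the time axis (axis 0).

    Second-order sections are used because very low cutoffs such as
    0.01 Hz can be numerically fragile in transfer-function form.
    """
    nyquist = fs / 2.0
    sos = butter(order, [low / nyquist, high / nyquist],
                 btype="bandpass", output="sos")
    return sosfiltfilt(sos, data, axis=0)

# Example call with the cutoffs given in step (c):
# od_filtered = bandpass(intensity_to_od(raw), fs, 0.01, 1.5)
```

The same `bandpass` helper could be reused with the narrower 0.01–0.12 Hz band applied later to the hemodynamic response.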

Following the preprocessing steps, the hemodynamic response was estimated in each channel and further removal of artifacts was accomplished using the following steps:

(d) The processed optical density data were converted to the concentration changes of HbO and HbR (the hemodynamic response) using the Modified Beer-Lambert Law (Delpy et al., 1988). For the differential pathlength factors at two wavelengths, this study used values of 7.25 (760 nm) and 6.38 (850 nm) for all the participants, according to Essenpreis et al. (1993). The authors were aware that differences in participants’ ages (CI group: 64.2 ± 10.1 years and NH group: 53.5 ± 12.0 years) would affect the differential pathlength factors (Scholkmann & Wolf, 2013), thus affecting the estimation of fNIRS responses. However, the method proposed by Scholkmann and Wolf (2013) was only verified to estimate age-related differential pathlength factors in the frontal and frontotemporal lobes rather than other regions such as the temporal lobe, which was of interest in this study.

(e) High-frequency physiological noises including the cardiac response and respiration in the hemodynamic response were further removed using a band-pass filter (frequency band: 0.01–0.12 Hz).

(f) Further artifacts were removed by assuming that stimulus-related concentration changes of HbO and HbR are negatively correlated, while the concentration changes of HbO and HbR introduced by motion artifacts, blood oscillation, and task-related global signals are positively correlated (Yamada, Umeyama, & Matsuda, 2012). The HbO and HbR of interest (related to the stimuli) in channels were extracted by maximizing the anticorrelated relationships between them (Cui, Bray, & Reiss, 2010).

(g) The grand averages of HbO and HbR across the seven blocks of the same stimulus type (auditory or visual) were calculated after baseline correction, which was performed by subtracting the mean of the HbO (or HbR) concentration change over the 5 s preceding the stimulus onset in each block.
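Steps (d), (f), and (g) can be sketched in Python as below. The sketch assumes the standard form of the modified Beer-Lambert law, in which the optical density change at each wavelength equals the extinction-weighted sum of the HbO and HbR changes multiplied by the source-detector distance and the differential pathlength factor. The extinction coefficients are not given in the text, so they are left as a user-supplied argument; the 3 cm distance is the approximate channel separation, and the epoch length `post_s` is hypothetical.

```python
import numpy as np

def mbll(od_760, od_850, extinction, distance_cm=3.0, dpf=(7.25, 6.38)):
    """Solve the modified Beer-Lambert law for HbO and HbR changes.

    extinction: 2x2 array; rows are wavelengths (760, 850 nm) and
    columns are (HbO, HbR). Values must be supplied by the user.
    """
    od = np.vstack([od_760, od_850])                # shape (2, n_samples)
    path = distance_cm * np.asarray(dpf)[:, None]   # effective path length
    conc = np.linalg.solve(np.asarray(extinction, dtype=float), od / path)
    return conc[0], conc[1]                         # HbO, HbR

def cbsi(hbo, hbr):
    """Correlation-based signal improvement (Cui, Bray, & Reiss, 2010)."""
    alpha = np.std(hbo) / np.std(hbr)
    hbo_clean = 0.5 * (hbo - alpha * hbr)
    hbr_clean = -hbo_clean / alpha                  # forced anticorrelation
    return hbo_clean, hbr_clean

def block_average(signal, onsets_s, fs, pre_s=5.0, post_s=30.0):
    """Average epochs after subtracting each epoch's 5-s prestimulus mean."""
    pre, post = int(round(pre_s * fs)), int(round(post_s * fs))
    epochs = []
    for onset in onsets_s:
        i = int(round(onset * fs))
        epoch = signal[i - pre:i + post]
        epochs.append(epoch - epoch[:pre].mean())   # baseline correction
    return np.mean(epochs, axis=0)
```

After `cbsi`, the cleaned HbO and HbR traces are perfectly anticorrelated by construction, which is the property that the method exploits to suppress positively correlated artifacts.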

To identify channels in which a significant stimulus-evoked response could be determined, the following two steps were performed:

(h) A canonical hemodynamic response function (HRF) consisting of two gamma functions (as shown in Equation 1) was adopted to represent the hemodynamic responses in the brain (Kamran, Jeong, & Mannan, 2015)

(1) (2)

where, on the left side of Equation 1, k is the discrete time and stands for the HRF. The right side of the equation consists of two gamma functions, with standing for the delay of response (undershoot) and standing for dispersion of the response (overshoot). The HRF model in Equation 2 was built as the convolution of the HRF in Equation 1 and the boxcar function of the stimulus (as shown in Figure 2). When neurons in a local brain area respond to a stimulus, leading to an increased consumption of oxygen, the concentration change of HbO is assumed to increase during the stimulation after a short delay and then gradually return to baseline after the stimulus offset, and the concentration change of HbR is assumed to be opposite to that of HbO, as shown in Figure 3.

(i) Pearson correlations were computed between the HRF model and the averaged HbO responses to each stimulus type. Only channels with significant correlations were marked as channels with stimulus-related responses; channels with nonsignificant correlations were assumed to have no hemodynamic response to the stimuli and the activation levels were set as missing values in the later analyses.
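Steps (h) and (i) can be illustrated with a Python sketch that builds a double-gamma HRF, convolves it with an 11-s boxcar, and correlates the result with a block-averaged HbO trace. The gamma shape parameters (6 and 16), the undershoot ratio of 1/6, the significance level `alpha`, and the requirement of a positive correlation are commonly used defaults assumed here for illustration; they are not the parameter values or thresholds of this study.

```python
import numpy as np
from scipy.stats import gamma, pearsonr

def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0,
                     scale=1.0, ratio=1.0 / 6.0):
    """Difference of two gamma densities, scaled to unit peak magnitude."""
    h = (gamma.pdf(t, peak_shape, scale=scale)
         - ratio * gamma.pdf(t, under_shape, scale=scale))
    return h / np.abs(h).max()

def hrf_model(fs, stim_s=11.0, total_s=35.0, hrf_s=30.0):
    """Expected response: boxcar of the stimulus convolved with the HRF."""
    t_hrf = np.arange(0, hrf_s, 1.0 / fs)
    n = int(round(total_s * fs))
    boxcar = np.zeros(n)
    boxcar[:int(round(stim_s * fs))] = 1.0
    return np.convolve(boxcar, double_gamma_hrf(t_hrf))[:n]

def has_response(avg_hbo, model, alpha=0.05):
    """Flag a channel whose averaged HbO correlates positively with the model."""
    r, p = pearsonr(avg_hbo, model)
    return (r > 0 and p < alpha), r, p
```

Here `avg_hbo` is expected to start at stimulus onset and to have the same length as `model`. Channels for which `has_response` returns `False` would have their activation level treated as missing.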

Finally, the remaining channels (those considered to contain a significant response) were analyzed to determine an activation level as defined in the following step:

(j) To quantify brain activation in a channel, the peak amplitude of the HbO response in that channel within 6 s to 17 s after the onset of the stimulus was identified. A previous study (Handwerker, Ollinger, & D'Esposito, 2004) has shown that it takes on average at least 5 s for hemodynamic responses to reach the peak after stimulus onset. Because our stimulus block was 11 s long, the peak response was required to be within the range of 6 s to 17 s. The mean amplitude of HbO from 5 s before to 5 s after the identified peak was defined as the activation level to a particular stimulus.
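The activation-level definition in step (j) can be written as a short Python function. This is a sketch under one assumption about data layout: the block-averaged trace is taken to begin `onset_s` seconds before stimulus onset (5 s here, matching the baseline window), and the averaging window is clipped at the edges of the trace. The function and parameter names are hypothetical.

```python
import numpy as np

def activation_level(avg_hbo, fs, onset_s=5.0,
                     search_s=(6.0, 17.0), half_window_s=5.0):
    """Mean HbO within +/- 5 s of the peak found 6 to 17 s after onset."""
    avg_hbo = np.asarray(avg_hbo, dtype=float)
    start = int(round((onset_s + search_s[0]) * fs))
    stop = int(round((onset_s + search_s[1]) * fs))
    # Locate the peak inside the allowed search window.
    peak = start + int(np.argmax(avg_hbo[start:stop + 1]))
    half = int(round(half_window_s * fs))
    lo = max(peak - half, 0)
    hi = min(peak + half, len(avg_hbo) - 1)
    return float(np.mean(avg_hbo[lo:hi + 1]))
```

Averaging around the peak, rather than taking the peak value itself, makes the measure less sensitive to a single noisy sample.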

Definition of ROI and Statistical Analysis

After signal processing, the calculated activation levels were imported to Minitab 17 for further statistical analysis. The main hypothesis in this study was that differences in speech understanding ability among experienced CI users were correlated with differences in brain activity evoked by auditory or visual speech stimuli. Three sets of ROIs were determined in CI users. Each ROI contained the mean signals from at least two adjacent channel positions. ROIs rather than individual channel locations were used for three reasons. First, there were several individual channels in some participants that were excluded due to poor signals. Second, the optodes located above the coil of CI participants were not able to obtain fNIRS data. Third, the precise registration of optode placement to brain position has a degree of uncertainty and thus varies among participants.

Based on a review of existing literature (see Introduction), a pair of a priori ROIs was selected that covered the bilateral STS or STGs. Previous studies using fMRI, PET, or fNIRS suggested that increased cross-modal activation in this area (i.e., auditory cortex being activated by visual stimuli) may be related to poor auditory speech understanding. The ROIs were defined as the group of channels (12, 14, 15, and 16; see Figure 1) in the left hemisphere and (36, 37, 41, and 42) in the right hemisphere. In these ROIs, the mean activation level across the included channels was calculated for each CI user when responding to visual stimuli. Pearson correlations were then computed between the activation levels and auditory speech test scores, and Bonferroni’s method was used to adjust the p values for multiple comparisons.

Using a data-driven approach, a second set of ROIs was determined, consisting of fNIRS channels where there was a significant group mean difference in activation levels between the CI group and the NH group. These ROIs are the regions that reflect the functional changes that are common to all the CI users. Such changes may be driven by plastic functional changes during periods of deafness and cochlear implantation, may reflect (in the auditory condition) the higher average difficulty in speech understanding in the CI group, or may reflect other factors related to the electrical stimulation this group receives (rather than the acoustic hearing in the NH group). In addition, differences in auditory activity between the CI group and the NH group could be due to their differences in use of cognitive resources or listening effort. Using another data-driven approach, a third set of ROIs was determined, consisting of fNIRS channels where there was a larger variability in activation levels across CI users than across NH listeners. Because auditory speech understanding varies greatly between CI users, it is proposed that the variance in speech understanding may be related to variance in the degree to which the plastic functional changes have occurred or may be reflected in variance in the amount of cognitive resources needed to interpret the speech signal.

The ROIs where mean activation levels differed between the two groups were derived as follows:

1. Two-sample (two-tailed) t tests were performed, channel by channel, to compare the activation levels between the CI group and the NH group in both audio-alone and visual-alone stimulus conditions. A threshold criterion of was used to select channels for the next step.

2. Adjacent channels retained from Step 1 were clustered into ROIs. Channels that had no adjacent channels with criterion significance level were ignored in the further statistical analysis.

3. For each participant, the activation level in an ROI was calculated as the averaged activation level across all channels included in the ROI.
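
The clustering and averaging steps above can be illustrated with a short sketch. It assumes that the channel-wise p values from the t tests are already available, and it treats the ROI construction as a connected-components search over a channel adjacency map. The adjacency map, the function names, and the `alpha` value used in the example are hypothetical placeholders, since the montage geometry and the selection threshold are not reproduced here.

```python
from collections import deque

def cluster_rois(p_values, adjacency, alpha):
    """Group adjacent channels with p < alpha into ROIs; isolated channels are dropped."""
    keep = {ch for ch, p in p_values.items() if p < alpha}
    seen, rois = set(), []
    for start in sorted(keep):
        if start in seen:
            continue
        seen.add(start)
        queue, component = deque([start]), []
        while queue:                       # breadth-first search over retained neighbours
            ch = queue.popleft()
            component.append(ch)
            for neighbour in adjacency.get(ch, ()):
                if neighbour in keep and neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
        if len(component) >= 2:            # a lone channel does not form an ROI
            rois.append(sorted(component))
    return rois

def roi_activation(participant_channels, roi):
    """Average one participant's activation over the ROI channels that were recorded."""
    values = [participant_channels[ch] for ch in roi if ch in participant_channels]
    return sum(values) / len(values)

# Toy example: six hypothetical channels in a row, with made-up p values.
adjacency = {1: [2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4, 6], 6: [5]}
p_values = {1: 0.010, 2: 0.020, 3: 0.500, 4: 0.030, 5: 0.200, 6: 0.001}
rois = cluster_rois(p_values, adjacency, alpha=0.05)   # alpha is a placeholder value
print(rois)
print(roi_activation({1: 0.2, 2: 0.4}, rois[0]))
```

In this toy case, channels 4 and 6 pass the threshold but have no retained neighbour, so they are ignored, mirroring the rule stated in Step 2.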

The ROIs where activation levels were more variant across participants in the CI group compared with the NH group were derived as follows:

1. Two-sample (one-tailed) variance tests, using Bonett’s (2006) method, were performed on each channel, to compare the variance of activation levels between the CI group and the NH group in both audio-alone and visual-alone stimulus conditions.

2. Similar to the definition of the first type of ROIs, the second set of ROIs were defined as a group of at least two adjacent channels that satisfied the variance difference threshold ().
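
To make the idea of a one-tailed variance comparison concrete, the sketch below tests whether one group's activation levels are more variable than another's. It does not implement Bonett's (2006) method. Instead, it swaps in a simple permutation test on the variance ratio (after centring each group on its own median), which is a stand-in chosen only because it can be written with the Python standard library. All names and numbers are illustrative assumptions.

```python
import random
import statistics

def variance_ratio(group_a, group_b):
    """Sample variance of group_a divided by sample variance of group_b."""
    return statistics.variance(group_a) / statistics.variance(group_b)

def one_tailed_variance_perm_test(ci, nh, n_perm=5000, seed=0):
    """Permutation p value for H1: variance(ci) > variance(nh).

    Each group is centred on its own median first, so that a difference in
    group means is not mistaken for a difference in spread.
    """
    ci_median, nh_median = statistics.median(ci), statistics.median(nh)
    ci_c = [v - ci_median for v in ci]
    nh_c = [v - nh_median for v in nh]
    observed = variance_ratio(ci_c, nh_c)
    pooled = ci_c + nh_c
    rng = random.Random(seed)              # fixed seed for reproducibility
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if variance_ratio(pooled[:len(ci_c)], pooled[len(ci_c):]) >= observed:
            hits += 1
    return observed, (hits + 1) / (n_perm + 1)

# Made-up activation levels for one hypothetical channel (not study data).
ci_levels = [-4.0, -3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
nh_levels = [-0.30, -0.20, -0.10, -0.05, 0.00, 0.05, 0.10, 0.15, 0.20, 0.30]
ratio, p = one_tailed_variance_perm_test(ci_levels, nh_levels)
print(f"variance ratio = {ratio:.1f}, one-tailed p = {p:.4f}")
```

Channels passing such a test would then be clustered into ROIs in the same way as for the mean-difference ROIs.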

The three sets of ROIs derived were then used to test whether brain activation levels in CI users in these ROIs correlated with their speech understanding ability. For each ROI, Pearson correlations were computed between the activation levels in CI users and the auditory speech test scores. ROIs that showed significant correlations () between activation level and auditory speech understanding were identified, and Bonferroni’s method was used across the ROIs to adjust the p values for multiple comparisons.

Because the secondary factors including duration of CI use, age, and speechreading ability are likely to drive the primary factors of interest in this study (functional changes in the cortical speech processing networks), it was predicted that these secondary factors would not account for significant further variability in speech understanding after accounting for variability explained by the fNIRS. This prediction was tested using a stepwise regression analysis that used the fNIRS ROI activation levels as well as these secondary factors as predictors of speech understanding.

Results

Speech Tests

The results of the speech tests and speechreading test in CI users are presented in Table 1. The CNC scores in quiet ranged from 49% to 98% phonemes correct, and sentences in noise at ranged from 33% to 100% words correct. To avoid both ceiling and floor effects, the CUNY scores at 10 dB SNR were selected for analysis. For further analysis of correlations between brain activation levels in ROIs of CI users and their auditory speech understanding ability, the CUNY sentence scores and the CNC word scores were averaged for each participant. The two test scores were combined by averaging for two reasons: The two scores were highly correlated, , and use of a single speech understanding measure reduced the number of related correlations tested and hence increased the power of the analysis.

Hemodynamic Responses (HbO and HbR) to Speech Stimuli

Seventy-seven of 690 (11.16%) channels from the CI group and 45 of 644 (6.99%) channels from the NH group were excluded from further analysis. The higher percentage in the CI group arose because some optodes were positioned over the processor coil.

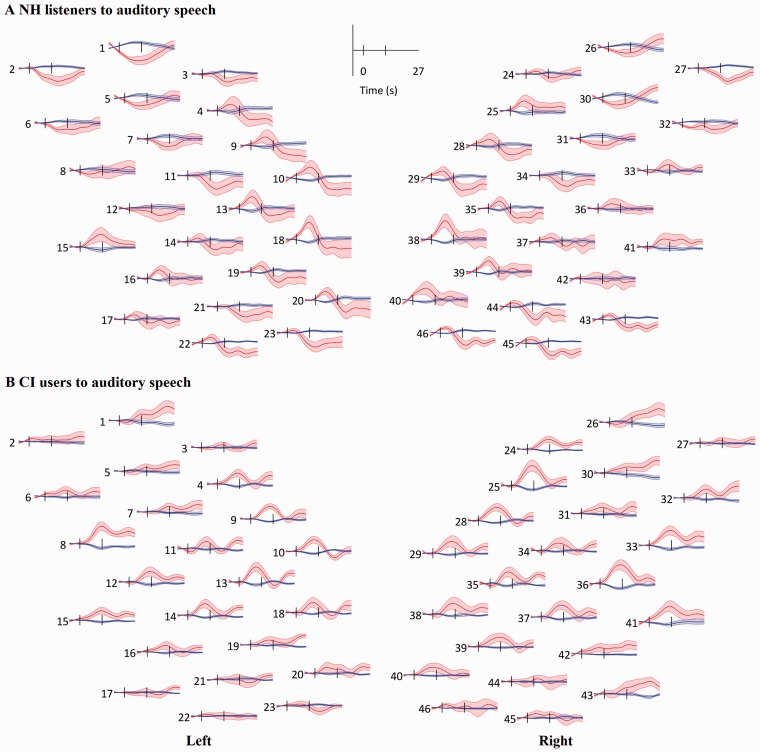

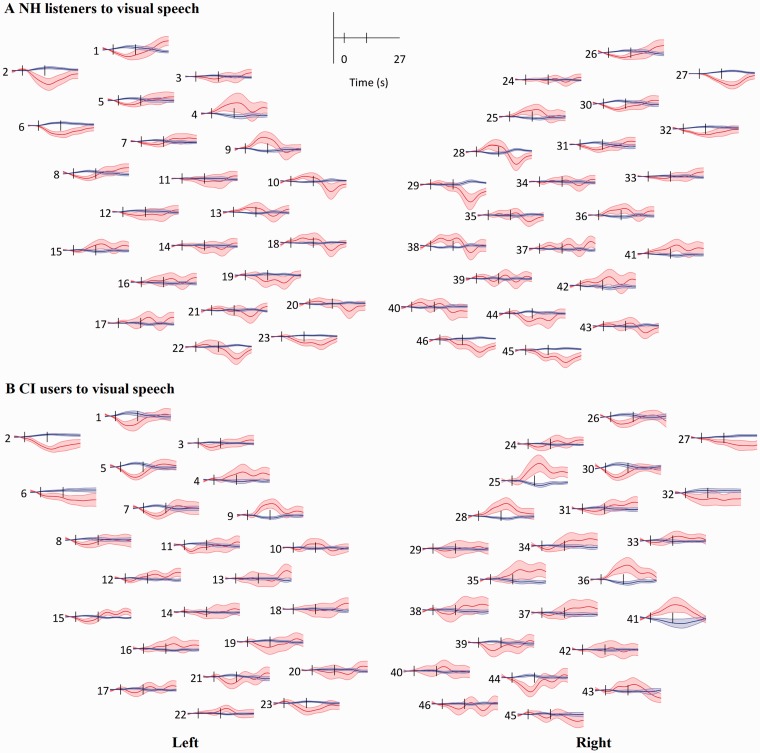

After excluding channels, the grand mean and standard error of the mean (SEM) of the HbO (red) and HbR (blue) responses evoked by the auditory and visual speech stimuli were computed for each channel in the NH and CI groups. Responses of the two groups to auditory speech stimuli are shown in Figure 4. The channel numbers and positions in Figure 4 correspond to those in Figure 1. In a channel showing activation to stimuli, HbO increases and HbR decreases after stimulus onset, and both gradually return to baseline after stimulus offset. As shown in Figure 4, the NH group showed a deactivation pattern (a decrease in HbO concentration after stimulus onset) in the bilateral (symmetrical) frontal area, within channels 1, 2, 5–8, 11, and 12 on the left and channels 26, 27, 30–32, and 34 on the right. The CI group showed no such deactivation pattern in the corresponding channels when responding to auditory stimuli. Further, the CI group showed more activation than the NH group in the right auditory area, within channels 33, 36, and 41, when responding to auditory speech.

Figure 4.

Grand mean responses to auditory speech in each channel. (a) Response in the NH group. (b) Response in the CI group. Red lines and shaded areas: mean and SEM of HbO and blue for HbR. Black vertical lines mark the onset and offset of stimulation. The layout of the channels corresponds to the montage shown in Figure 1.

The visually evoked HbO and HbR responses are shown in Figure 5. Both NH and CI group responses showed an overall deactivation (or no significant activation) on the left side, except in channels 4 and 9, which were located in the posterior frontal area that is involved in language processing. On the right side, the CI group showed larger HbO responses than the NH group in the right posterior frontal area (Channels 25 and 28) and the right temporal area (Channels 36 and 41) when responding to visual speech stimuli.

Figure 5.

Grand mean responses to visual speech. (a) Response in the NH group. (b) Response in the CI group. Red lines and shaded areas: mean and SEM of HbO and blue for HbR. Black vertical lines mark the onset and offset of stimulation. The layout of the channels corresponds to the montage shown in Figure 1.

Correlations Between fNIRS Responses and Speech Understanding Ability

A priori ROIs: Bilateral STS or STG of CI users

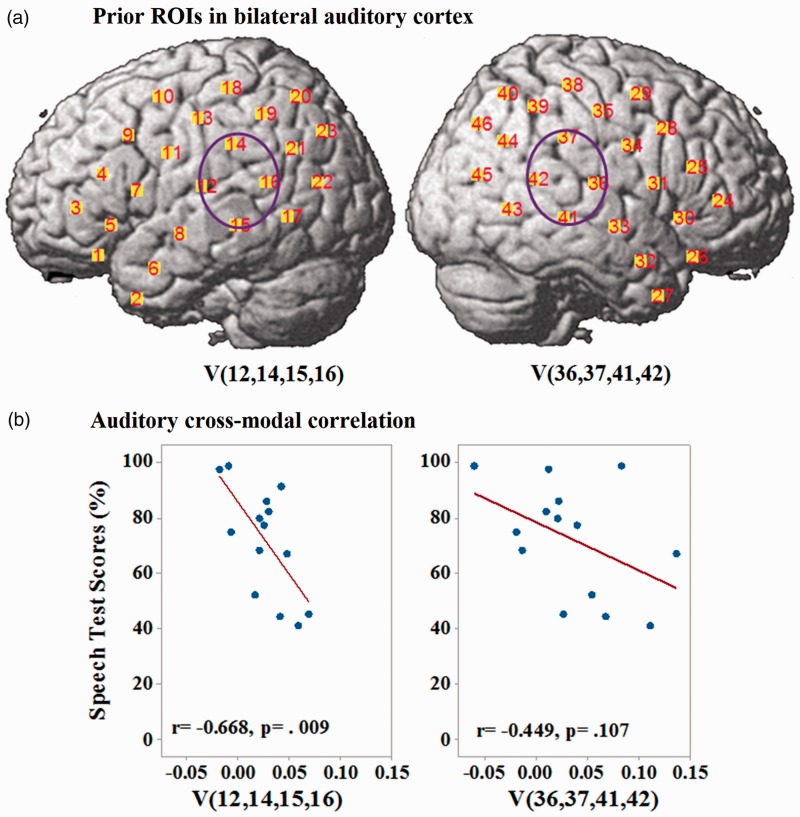

The locations of the two a priori ROIs, the left and right STS or STG, marked as V (12, 14, 15, and 16) and V (36, 37, 41, and 42), are shown in Figure 6(a). Pearson correlations between the activation levels of CI users in these two ROIs when responding to visual speech stimuli and their auditory speech test scores are shown in Figure 6(b). A significant negative correlation was found in the left STS or STG (with adjusted p) but not in the right hemisphere (Table 2).

Figure 6.

Results of a priori ROIs. (a) A priori ROIs used to investigate cross-modal activation in the bilateral superior temporal cortex. (b) Pearson correlations between activation levels of CI users in these ROIs in response to visual speech stimuli and their auditory speech test scores. Brain activation levels (concentration changes of HbO) are in units of µMol.

Table 2.

ROIs, Data for Selection of ROIs, and Correlations Between the ROI Activation Levels in CI Users and Their Speech Test Scores.

| Hypotheses | Stimuli, ROIs (channels) | Two-sample t tests CI versus NH | Group variances | Correlation with speech test scores | Bonferroni correction |
|---|---|---|---|---|---|
| Hypothesis 1 | A (1 and 2) | t(19) = 2.68, p = .015 | N/A | r = −.592, p = .020 | p > .017 |
| | A (7, 8, 11, and 12) | t(23) = 3.02, p = .006 | N/A | r = −.650, p = .008* | p < .017 |
| | A (26, 27, 30) | t(26) = 3.18, p = .004 | N/A | r = −.620, p = .014* | p < .017 |
| Hypothesis 2 | A (26, 27, 32) | N/A | R = 2.751, p = .031 | r = −.597, p = .019 | p > .017 |
| | V (13, 18) | N/A | R = 4.490, p = .007 | r = −.253, p = .404 | p > .017 |
| | V (27, 32) | N/A | R = 6.062, p = .014 | r = −.351, p = .200 | p > .017 |
| Hypothesis 3 | V (12, 14–16) | N/A | N/A | r = −.668, p = .009* | p < .025 |
| | V (36, 37, 41, 42) | N/A | N/A | r = −.449, p = .107 | p > .025 |

Note. ROIs = regions of interest; CI = cochlear implant.
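
The Bonferroni column of Table 2 can be checked with a few lines of arithmetic. The sketch below assumes a family-wise significance level of .05 divided by the number of ROIs tested within each hypothesis, which is inferred from the .017 and .025 thresholds shown in the table rather than stated explicitly. The p values are the uncorrected correlation p values copied from Table 2, and the function names are illustrative.

```python
ALPHA = 0.05  # family-wise level inferred from the .017 (= .05/3) and .025 (= .05/2) thresholds

# Uncorrected correlation p values copied from Table 2, grouped by hypothesis.
TABLE_2 = {
    "Hypothesis 1": {"A(1, 2)": 0.020, "A(7, 8, 11, 12)": 0.008, "A(26, 27, 30)": 0.014},
    "Hypothesis 2": {"A(26, 27, 32)": 0.019, "V(13, 18)": 0.404, "V(27, 32)": 0.200},
    "Hypothesis 3": {"V(12, 14-16)": 0.009, "V(36, 37, 41, 42)": 0.107},
}

def bonferroni_threshold(alpha, n_tests):
    """Per-test significance threshold under Bonferroni's method."""
    return alpha / n_tests

def significant_rois(table, alpha=ALPHA):
    """Return the ROIs whose p value is below the threshold for their hypothesis."""
    passed = []
    for rois in table.values():
        threshold = bonferroni_threshold(alpha, len(rois))
        passed.extend(roi for roi, p in rois.items() if p < threshold)
    return passed

for hypothesis, rois in TABLE_2.items():
    print(hypothesis, "threshold:", round(bonferroni_threshold(ALPHA, len(rois)), 3))
print(significant_rois(TABLE_2))
```

Under this assumption, the ROIs that survive correction are the same three that are marked with an asterisk in the table.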

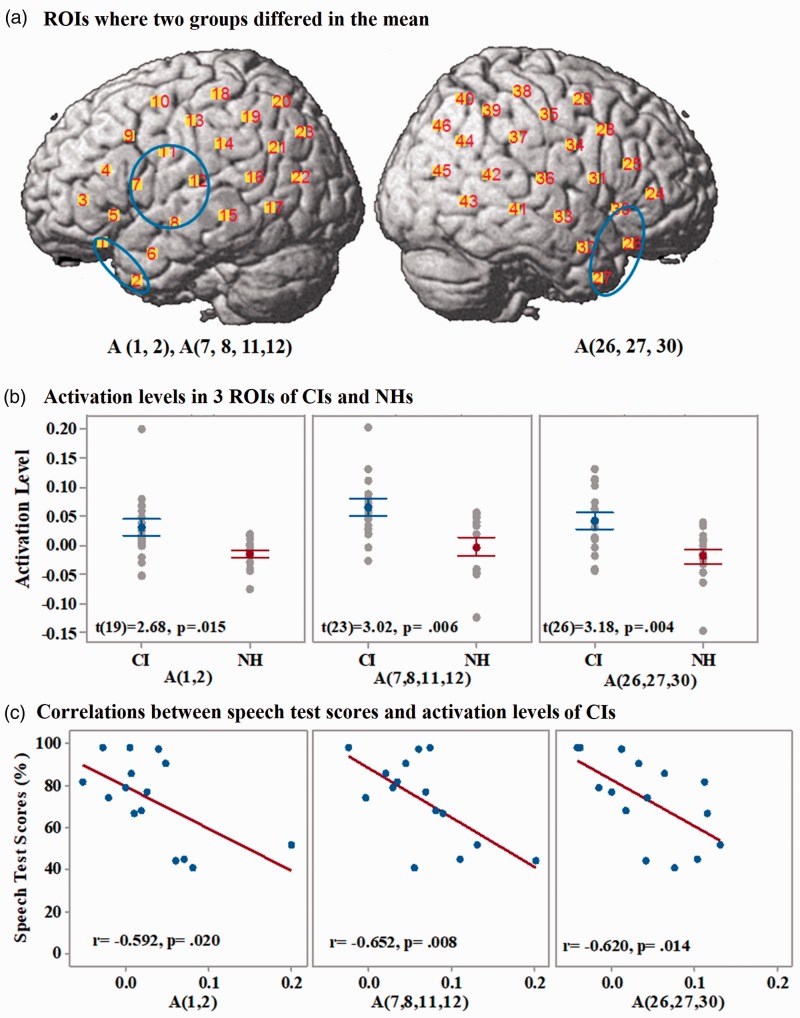

ROIs where brain activation in the CI group and the NH group were maximally different

The ROIs on the cortex where the mean brain activation levels differed significantly between the two groups of participants are marked in blue in Figure 7(a). ROIs are designated by the stimulus type (A or V) and the channel numbers included in the ROI. Three ROIs were found, in all of which CI users showed significantly higher activation levels than NH listeners when responding to auditory stimuli. Statistical results are presented in Table 2. Two ROIs, A (1 and 2) and A (7, 8, 11, and 12), were located in the left anterior temporal cortex and in the left prefrontal cortex and the middle part of the STG, respectively. The third ROI, A (26, 27, and 30), was located in the right anterior temporal cortex. No ROI with a significant group difference in the mean levels of brain activation evoked by visual stimuli was found. The mean ± SEM and results of two-sample t tests for activation levels in the three ROIs of CI users and NH listeners are shown in Figure 7(b). Pearson correlations between activation levels in the three ROIs and the auditory speech test scores of CI users are shown in Figure 7(c). After correction for multiple comparisons, only ROIs A (7, 8, 11, and 12) and A (26, 27, and 30) showed significant negative correlations between the activation levels and the speech test scores of CI users (Table 2).

Figure 7.

Results of group mean differences-related ROIs. (a) ROIs (blue ellipses) where there were higher activation levels in CI users than in NH listeners when listening to auditory speech stimuli. (b) Comparisons of the activation levels in the three ROIs between CI users (in blue) and NH listeners (in red). Error bars are SEM. (c) Pearson correlations between the activation levels in the three ROIs of CI users and their speech test scores. Brain activation levels (concentration changes of HbO) are in units of µMol.

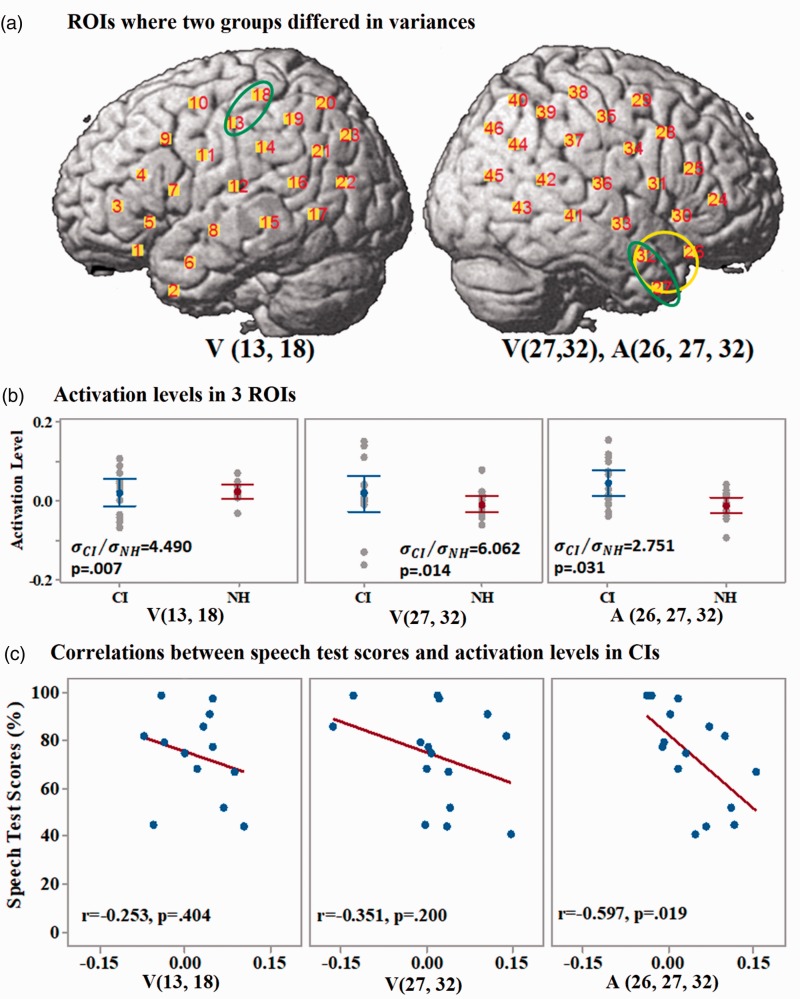

ROIs where variability in brain activation levels was larger across CI users than across NH listeners

The three ROIs where the CI group had a larger variance of brain activation levels than NH listeners when responding to auditory or visual speech stimuli are shown in Figure 8(a). One was located in the left central gyrus, V (13, 18), and two were located in the right anterior temporal lobe, V (27, 32) and A (26, 27, 32). The latter two ROIs were overlapping regions in the brain, but one showed significant differences between NH listeners and CI users in the responses to visual stimuli and the other to auditory stimuli. Individual participants’ activation levels in the three ROIs and the results of Bonett’s test of two-sample (one-tailed) variances between the two groups are shown in Figure 8(b). Pearson correlations between activation levels of CI users in the three ROIs and their speech test scores are shown in Figure 8(c). Among the three ROIs in CI users that showed significantly larger variances of the activation levels than NH listeners, none showed a significant correlation between the activation levels and speech test scores after Bonferroni correction for multiple comparisons. The correlation result in the ROI A (26, 27, 32) was marginally significant (). Statistical results are presented in Table 2. It should be noted that this ROI substantially overlaps with the Region A (26, 27, and 30) that was found to have a significant correlation between activity and speech understanding using the first data-driven approach.

Figure 8.

Results of group variance differences-related ROIs. (a) ROIs where the two groups differed in the variances of activation levels. Green and yellow ellipses mark the regions on the cortex that showed larger variances of activation levels in CI users than in NH listeners when responding to visual and auditory speech stimuli, respectively. (b) Comparisons of the activation levels in the three ROIs between CI users (in blue) and NH listeners (in red). Error bars are 95% confidence intervals. (c) Pearson correlations between the activation levels in the three ROIs of CI users and their speech test scores. Brain activation levels (concentration changes of HbO) are in units of µMol.

Effects of age, speechreading ability, and duration of CI use on speech understanding ability of CI users

Finally, stepwise multiple regression was performed to determine whether the prediction of speech understanding of CI users could be strengthened by including age, speechreading ability, and duration of CI use in addition to the activation levels in the three ROIs found to have significant correlations with speech understanding as described earlier. With a threshold of , ROIs A (7, 8, 11, and 12) and V (12, 14–16) were the only two ROIs that had a significant independent contribution to the prediction of speech test scores of CI users. The factors of age, speechreading ability, and duration of CI use showed no significant correlation with the speech test scores of CI users, , ; respectively. Nor did these factors provide any significant contribution in the multiple regression (with ) additional to that provided by the activation levels in the two ROIs (Table 3). These results showed that a combination of the activation levels in A (7, 8, 11, and 12) and V (12, 14–16) of CI users provided the best prediction of their speech test scores. The secondary factors, including age, duration of CI use, and speechreading ability, did not account for significant additional variance in speech understanding ability in this group of CI users.

Table 3.

Stepwise Regression Using Activation Levels of Multiple ROIs to Predict the Speech Test Scores of CI Users.

| ROIs | Step 1: p value | Step 2: p values | Step 3: p values | Step 4: p values | Step 5: p values |
|---|---|---|---|---|---|
| A (7, 8, 11, and 12) | .004 | .008 | .018 | .039 | All p > .05 |
| V (12, 14–16) | | .015 | .021 | .069 | |
| Age | | | .521 | .535 | |
| CI duration | | | | .873 | |
| Speechreading ability | | | | | |
| A (26, 27, and 30) | | | | | |
| R² (adjusted) | .508 | .709* | .692 | .654 | |

Note. ROIs = regions of interest; CI = cochlear implant. *p<.001.
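
The fall in adjusted R² from Step 2 onward in Table 3 follows from how the adjustment penalizes each added predictor. The sketch below uses the standard adjusted R² formula. The sample size of 15 is an assumption (the number of CI users in the study; the number actually entering the regression is not stated here), and the function names are illustrative.

```python
def adjusted_r2(r2, n_obs, n_predictors):
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / (n - k - 1)."""
    return 1.0 - (1.0 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)

def raw_r2(adj_r2, n_obs, n_predictors):
    """Invert adjusted_r2 to recover the unadjusted R^2."""
    return 1.0 - (1.0 - adj_r2) * (n_obs - n_predictors - 1) / (n_obs - 1)

N_CI_USERS = 15  # assumption: all 15 CI users contributed to the regression

# Adjusted R^2 values from Table 3 for Steps 1 to 4 (one predictor added per step).
table_3 = {1: 0.508, 2: 0.709, 3: 0.692, 4: 0.654}
for n_predictors, adj in table_3.items():
    r2 = raw_r2(adj, N_CI_USERS, n_predictors)
    print(f"Step {n_predictors}: adjusted R2 = {adj:.3f}, implied raw R2 = {r2:.3f}")
```

Under the assumed sample size, the implied unadjusted R² rises only from about .75 to about .76 between Steps 2 and 3, so the small gain from adding age is outweighed by the penalty for the extra predictor and the adjusted value drops.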

Discussion

This study used fNIRS to investigate brain activation in CI users in response to speech stimuli and the correlation of that activation with their auditory speech understanding ability. The results showed that fNIRS responses in CI users to auditory stimuli in the left middle superior temporal lobe, ROI A (7, 8, 11, and 12), and the right anterior temporal lobe, ROI A (26, 27, and 30), were both greater on average than in the NH group and negatively correlated with the auditory speech test scores of CI users (Figure 7). Further, the response in CI users to visual stimuli in the left STS or STG, ROI V (12, 14, 15, and 16), was significantly negatively correlated with their auditory speech test scores (Figure 6). The combination of responses in the left middle superior temporal lobe and part of the prefrontal lobe of CI users to auditory stimuli, A (7, 8, 11, and 12), and responses in the left STS or STG to visual stimuli, V (12, 14, 15, and 16), produced a better prediction of their auditory speech understanding ability than the activity in any one ROI alone (Table 3).

In CI users, the negative correlation between the response in the ROI A (7, 8, 11, and 12) to auditory speech and the auditory speech test scores is consistent with a maladaptive neuroplasticity in the ventral speech processing pathways. This ROI was located in the left middle superior temporal lobe and part of the prefrontal lobe, part of which is in ventral speech processing pathways in the NH listeners. As discussed earlier, when processing visually presented phonological information (Lazard et al., 2010), deaf people who showed greater activation in the ventral speech pathways before implantation had poorer subsequent speech understanding after implantation. Although the imaging was performed before implantation, the authors argued that functional changes that occurred during deafness may not be reversed after implantation and thus may influence the speech understanding of experienced CI users. Longitudinal studies using PET (Rouger et al., 2012) and magnetoencephalography (MEG) (Pantev, Dinnesen, Ross, Wollbrink, & Knief, 2006) have shown that functional changes occurring during periods of deafness can recover somewhat but not fully within the first 12 months after implantation. The drivers of the functional changes during periods of deafness (e.g., deterioration of phonological processing and increased reliance on speechreading) may continue to operate in CI users after implantation.

Alternatively, the negative correlation between auditory speech understanding of CI users and fNIRS responses in the ROI A (7, 8, 11, and 12) might be related to increased reliance on phonological processing and working memory. This ROI covers part of the working memory system in NH listeners (Vigneau et al., 2006). Previous studies (Classon, Rudner, & Ronnberg, 2013; Ronnberg, Rudner, Foo, & Lunner, 2008) in CI users have found that increased demand on working memory capacity (compared with NH listeners) occurred when performing phonological processing tasks. Moreover, their demand on working memory capacity was negatively correlated with the behavioral performance in the phonological processing task. The increase in working memory demand in CI users may result from a mismatch between long-term stored phonological memories and the CI input signal. Results of this study are consistent with this argument, because fNIRS activation in CI users in this region was higher than normal and also negatively correlated with auditory speech understanding. CI users with poor speech understanding had increased demand on cognitive resources, which may thus reflect their increased listening effort.

The negative correlation between auditory speech understanding in CI users and fNIRS responses to visual stimuli in the ROI V (12, 14, 15, and 16) could be related to functional changes in the auditory cortex driven by the increased reliance on visual speech processing. Such changes may be due to cross-modal plasticity or to changes in the connectivity strengths within existing multimodal networks. In this study, a negative correlation was found between auditory speech understanding and fNIRS activation to visual speech in the left STS or STG but not the right STS or STG (although there was a similar trend on the right side). Chen et al. (2016) also investigated cross-modal activation in experienced CI users using fNIRS when participants were responding to a mixture of tonal and speech-like unattended auditory stimuli and a checkerboard visual stimulus. They found that a combination of increased auditory activation in the visual cortex and decreased visual activation in the auditory cortex was associated with better auditory speech understanding, with effects seen in both hemispheres. The facilitatory effect of cross-modal auditory activation in the visual cortex was also noted by Giraud et al. (2000) using PET imaging. By following newly implanted CI users over 12 months and using auditory word stimuli, Giraud et al. found that cross-modal activation in the visual cortex increased in line with the participants’ speech understanding ability. They interpreted this increased activation as due to the expectancy effect, whereby an auditory word creates an expectancy of seeing the visual correlate and thus activates the visual system.

Increased cross-modal activation in the auditory cortex, in contrast, was associated with poor speech understanding in both our study and that of Chen et al. (2016). Because this increased visual activation implies a possible loss of auditory processing capacity, through auditory networks that have been either weakened or taken over, it is not surprising that it is associated with poor auditory speech understanding. An fNIRS study by Dewey and Hartley (2015) using a checkerboard visual stimulus showed greater cross-modal activity in the right auditory cortex in deaf (unimplanted) individuals compared with NH listeners, demonstrating that this increased cross-modal activation is present before the auditory input is reestablished with the CI. It is interesting that Dewey and Hartley (2015) only found increased cross-modal activation in the right hemisphere and not the left hemisphere. The fact that this study showed a stronger correlation between increased cross-modal activation and speech understanding in the left hemisphere compared with the right hemisphere is possibly due to the dominance and importance of the left hemisphere for language processing.

It is interesting that both the present study in experienced CI users and a longitudinal fNIRS study in CI users tested before and 6 months after implantation (Anderson et al., 2017) found no significant mean difference in cross-modal activation between CI users and NH listeners. Further, Anderson et al. (2017) found no difference between groups in the change in cross-modal activation over the 6 months after implantation. However, the change in right auditory cross-modal activation in CI users over those 6 months correlated positively with their auditory speech understanding ability in the best-aided condition at 6 months postoperatively. That is, the CI users whose right auditory cross-modal activation increased after implantation were also those who had the best speech understanding at 6 months postoperatively. The authors concluded from this observation that right auditory cross-modal activation has an adaptive benefit to speech understanding after implantation. In contrast, results of this study and other studies discussed earlier are consistent with a maladaptive role of auditory cross-modal activation. However, unlike in this study, the participants in the study of Anderson et al. (2017) included prelingually deaf adults, and the speech understanding was tested in the best-aided condition, which included hearing aids for many participants. Therefore, it is unclear whether residual hearing or prelingual deafness played a role in the correlation they found. Furthermore, Anderson et al. (2017) reported the correlation between the change of cross-modal activation and speech understanding at 6 months postoperatively. However, they did not report whether the change in participants’ speech understanding correlated with the change in cross-modal activation, or whether there was any correlation between the two factors at the same time points. For example, CI users with larger than average cross-modal activation preimplantation (and hypothesized poorer than average speech perception outcome) might show a slight decrease in cross-modal activation that nevertheless leaves it above average at 6 months postoperatively. This scenario would still be consistent with a maladaptive role of auditory cross-modal plasticity that is only partially reversed by implant use. Without data relating the absolute levels of preimplant cross-modal activation to its change over time after implantation, and data relating changes in cross-modal activation to changes in speech perception over time, this scenario remains speculative.

Conclusion

In this study, fNIRS was used in a group of experienced CI users and NH listeners. Correlations were found between brain activation to both auditory and visual speech in CI users and auditory speech understanding ability with the implant alone. Three ROIs were found where the brain activation to auditory or visual speech stimuli was significantly negatively correlated with auditory speech understanding ability. A combination of response to auditory stimuli in the left prefrontal and middle superior temporal lobe and response to visual stimuli in the left STS or STG provided the best prediction of CI users’ auditory speech understanding. Results of this study showed that fNIRS can reveal functional brain differences between CI users and NH listeners that correlate with their auditory speech understanding after implantation. Thus, fNIRS may have the potential for clinical management of CI candidates and CI users, either in providing diagnostic information or in guiding hearing therapies.

Author Contributions

X. Z., W. C., R. L., and C. M. M. conceptualized the experiments. X. Z., A. S., and W. C. performed the experiments. A. K. S. and C. M. M contributed resources. X. Z., A. K. S., H. I. B., and C. M. analyzed the data. X. Z., A. K. S., H. I. B., W. C., R. L., and C. M. M. wrote and revised the article.

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This research was supported by a Melbourne University PhD scholarship to X. Z., a veski fellowship to C. M. M., an Australian Research Council Grant (FT130101394) to A. K. S., an Australian Fulbright Commission fellowship to R. L., the Lions Foundation, and the Melbourne Neuroscience Institute. The Bionics Institute acknowledges the support it receives from the Victorian Government through its Operational Infrastructure Support Program.

References

- American Clinical Neurophysiology Society (2006) Guideline 5: Guidelines for standard electrode position nomenclature. Am J Electroneurodiagnostic Technol 46: 222–225. [PubMed] [Google Scholar]

- Anderson, C. A., Wiggins, I. M., Kitterick, P. T., & Hartley, D. E. H. (2017) Adaptive benefit of cross-modal plasticity following cochlear implantation in deaf adults. Proceedings of the National Academy of Sciences: 201704785. [DOI] [PMC free article] [PubMed]

- Blamey P., Artieres F., Baskent D., Bergeron F., Beynon A., Burke E., Lazard D. S. (2013) Factors affecting auditory performance of postlinguistically deaf adults using cochlear implants: An update with 2251 patients. Audiol Neurootol 18: 36–47. [DOI] [PubMed] [Google Scholar]

- Bonett D. G. (2006) Robust confidence interval for a ratio of standard deviations. Applied Psychological Measurement 30: 432–439. [Google Scholar]

- Boothroyd A., Hanin L., Hnath T. (1985) A sentence test of speech perception: Reliability, set equivalence, and short term learning, New York, NY: City University of New York. [Google Scholar]

- Chen L.-C., Sandmann P., Thorne J. D., Bleichner M. G., Debener S. (2016) Cross-modal functional reorganization of visual and auditory cortex in adult cochlear implant users identified with fNIRS. Neural Plasticity 2016: 4382656. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Classon E., Rudner M., Ronnberg J. (2013) Working memory compensates for hearing related phonological processing deficit. Journal of Communication Disorders 46: 17–29. [DOI] [PubMed] [Google Scholar]

- Coez A., Zilbovicius M., Ferrary E., Bouccara D., Mosnier I., Ambert-Dahan E., Sterkers O. (2008) Cochlear implant benefits in deafness rehabilitation: PET study of temporal voice activations. Journal of Nuclear Medicine 49: 60–67. [DOI] [PubMed] [Google Scholar]

- Cui X., Bray S., Reiss A. L. (2010) Functional near infrared spectroscopy (NIRS) signal improvement based on negative correlation between oxygenated and deoxygenated hemoglobin dynamics. NeuroImage 49: 3039–3046. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Delpy D. T., Cope M., van der Zee P., Arridge S., Wray S., Wyatt J. (1988) Estimation of optical pathlength through tissue from direct time of flight measurement. Physics in Medicine and Biology 33: 1433. [DOI] [PubMed] [Google Scholar]

- Dewey R. S., Hartley D. E. (2015) Cortical cross-modal plasticity following deafness measured using functional near-infrared spectroscopy. Hearing Research 325: 55–63. [DOI] [PubMed] [Google Scholar]

- Essenpreis M., Elwell C. E., Cope M., van der Zee P., Arridge S. R., Delpy D. T. (1993) Spectral dependence of temporal point spread functions in human tissues. Applied Optics 32: 418–425. [DOI] [PubMed] [Google Scholar]

- Finney E. M., Fine I., Dobkins K. R. (2001) Visual stimuli activate auditory cortex in the deaf. Nature Neuroscience 4: 1171–1173. [DOI] [PubMed] [Google Scholar]

- Giraud A. L., Price C. J., Graham J. M., Frackowiak R. S. (2001. a) Functional plasticity of language-related brain areas after cochlear implantation. Brain 124: 1307–1316. [DOI] [PubMed] [Google Scholar]

- Giraud A. L., Price C. J., Graham J. M., Truy E., Frackowiak R. S. (2001. b) Cross-modal plasticity underpins language recovery after cochlear implantation. Neuron 30: 657–663. [DOI] [PubMed] [Google Scholar]

- Giraud A. L., Truy E., Frackowiak R. S., Grégoire M. C., Pujol J. F., Collet L. (2000) Differential recruitment of the speech processing system in healthy subjects and rehabilitated cochlear implant patients. Brain 123(Pt 7): 1391–1402. [DOI] [PubMed] [Google Scholar]

- Handwerker D. A., Ollinger J. M., D'Esposito M. (2004) Variation of BOLD hemodynamic responses across subjects and brain regions and their effects on statistical analyses. NeuroImage 21: 1639–1651. [DOI] [PubMed] [Google Scholar]

- Huppert T. J., Diamond S. G., Franceschini M. A., Boas D. A. (2009) HomER: A review of time-series analysis methods for near-infrared spectroscopy of the brain. Applied Optics 48: D280–D298. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Julien C. (2006) The enigma of Mayer waves: Facts and models. Cardiovascular Research 70: 12–21. [DOI] [PubMed] [Google Scholar]

- Jurcak V., Tsuzuki D., Dan I. (2007) 10/20, 10/10, and 10/5 systems revisited: Their validity as relative head-surface-based positioning systems. NeuroImage 34: 1600–1611. [DOI] [PubMed] [Google Scholar]

- Kamran M. A., Jeong M. Y., Mannan M. M. (2015) Optimal hemodynamic response model for functional near-infrared spectroscopy. Frontiers in Behavioral Neuroscience 9: 151. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lazard D. S., Lee H. J., Gaebler M., Kell C. A., Truy E., Giraud A. L. (2010) Phonological processing in post-lingual deafness and cochlear implant outcome. NeuroImage 49: 3443–3451. [DOI] [PubMed] [Google Scholar]

- Lazard D. S., Lee H. J., Truy E., Giraud A. L. (2013) Bilateral reorganization of posterior temporal cortices in post-lingual deafness and its relation to cochlear implant outcome. Human Brain Mapping 34: 1208–1219. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McKay C. M., Shah A., Seghouane A. K., Zhou X., Cross W., Litovsky R. (2016) Connectivity in language areas of the brain in cochlear implant users as revealed by fNIRS. Advances in Experimental Medicine and Biology 894: 327–335. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Molavi B., Dumont G. A. (2012) Wavelet-based motion artifact removal for functional near-infrared spectroscopy. Physiological Measurement 33: 259. [DOI] [PubMed] [Google Scholar]

- Ogawa S., Lee T.-M., Kay A. R., Tank D. W. (1990) Brain magnetic resonance imaging with contrast dependent on blood oxygenation. Proceedings of the National Academy of Sciences 87: 9868–9872. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Olds, C., Pollonini, L., Abaya, H., Larky, J., Loy, M., Bortfeld, H., Beauchamp, M. S., & Oghalai, J. S. (2016). Cortical activation patterns correlate with speech understanding after cochlear implantation. Ear and Hearing, 37, e160e172. [DOI] [PMC free article] [PubMed]

- Orihuela-Espina F., Leff D. R., James D. R., Darzi A. W., Yang G. Z. (2010) Quality control and assurance in functional near infrared spectroscopy (fNIRS) experimentation. Physics in Medicine and Biology 55: 3701–3724. [DOI] [PubMed] [Google Scholar]

- Pantev C., Dinnesen A., Ross B., Wollbrink A., Knief A. (2006) Dynamics of auditory plasticity after cochlear implantation: A longitudinal study. Cerebral Cortex 16: 31–36. [DOI] [PubMed] [Google Scholar]

- Petersen B., Gjedde A., Wallentin M., Vuust P. (2013) Cortical plasticity after cochlear implantation. Neural Plasticity 2013: 318521. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Peterson G. E., Lehiste I. (1962) Revised CNC lists for auditory tests. Journal of Speech and Hearing Disorders 27: 62–70.

- Pollonini L., Bortfeld H., Oghalai J. S. (2016) PHOEBE: A method for real time mapping of optodes-scalp coupling in functional near-infrared spectroscopy. Biomedical Optics Express 7: 5104–5119.

- Pollonini L., Olds C., Abaya H., Bortfeld H., Beauchamp M. S., Oghalai J. S. (2014) Auditory cortex activation to natural speech and simulated cochlear implant speech measured with functional near-infrared spectroscopy. Hearing Research 309: 84–93.

- Ronnberg J., Rudner M., Foo C., Lunner T. (2008) Cognition counts: A working memory system for ease of language understanding (ELU). International Journal of Audiology 47: S99–S105.

- Rouger J., Lagleyre S., Demonet J. F., Fraysse B., Deguine O., Barone P. (2012) Evolution of crossmodal reorganization of the voice area in cochlear-implanted deaf patients. Human Brain Mapping 33: 1929–1940.

- Saliba J., Bortfeld H., Levitin D. J., Oghalai J. S. (2016) Functional near-infrared spectroscopy for neuroimaging in cochlear implant recipients. Hearing Research 338: 64–75.

- Scholkmann F., Wolf M. (2013) General equation for the differential pathlength factor of the frontal human head depending on wavelength and age. Journal of Biomedical Optics 18: 105004.

- Sevy A. B., Bortfeld H., Huppert T. J., Beauchamp M. S., Tonini R. E., Oghalai J. S. (2010) Neuroimaging with near-infrared spectroscopy demonstrates speech-evoked activity in the auditory cortex of deaf children following cochlear implantation. Hearing Research 270: 39–47.

- Strelnikov K., Rouger J., Demonet J. F., Lagleyre S., Fraysse B., Deguine O., Barone P. (2010) Does brain activity at rest reflect adaptive strategies? Evidence from speech processing after cochlear implantation. Cerebral Cortex 20: 1217–1222.

- Strelnikov K., Rouger J., Demonet J. F., Lagleyre S., Fraysse B., Deguine O., Barone P. (2013) Visual activity predicts auditory recovery from deafness after adult cochlear implantation. Brain 136: 3682–3695.

- Stropahl M., Chen L.-C., Debener S. (2017) Cortical reorganization in postlingually deaf cochlear implant users: Intra-modal and cross-modal considerations. Hearing Research 343: 128–137.

- Themelis G., D'Arceuil H., Diamond S. G., Thaker S., Huppert T. J., Boas D. A., Franceschini M. A. (2007) Near-infrared spectroscopy measurement of the pulsatile component of cerebral blood flow and volume from arterial oscillations. Journal of Biomedical Optics 12: 014033.

- Thierry G., Giraud A. L., Price C. (2003) Hemispheric dissociation in access to the human semantic system. Neuron 38: 499–506.

- van de Rijt L. P. H., van Opstal A. J., Mylanus E. A. M., Straatman L. V., Hu H. Y., Snik A. F., van Wanrooij M. M. (2016) Temporal cortex activation to audiovisual speech in normal-hearing and cochlear implant users measured with functional near-infrared spectroscopy. Frontiers in Human Neuroscience 10: 48.

- Vigneau M., Beaucousin V., Herve P. Y., Duffau H., Crivello F., Houdé O., Tzourio-Mazoyer N. (2006) Meta-analyzing left hemisphere language areas: Phonology, semantics, and sentence processing. NeuroImage 30: 1414–1432.

- Yamada T., Umeyama S., Matsuda K. (2012) Separation of fNIRS signals into functional and systemic components based on differences in hemodynamic modalities. PLoS One 7: e50271.