Abstract

Objective

To identify approaches to presenting cost and resource use measures that support consumers in selecting high‐value hospitals.

Data Sources

Survey data were collected from U.S. employees of Analog Devices (n = 420).

Study Design

In two online experiments, participants viewed comparative data on four hospitals. In one experiment, participants were randomized to view one of five versions of the same comparative cost data, and in the other experiment they viewed different versions of the same readmissions data. Bivariate and multivariate analyses examined whether presentation approach was related to selecting the high‐value hospital.

Principal Findings

Consumers were approximately 16 percentage points more likely to select a high‐value hospital when cost data were presented using actual dollar amounts or using the word “affordable” to describe low‐cost hospitals, compared to when the Hospital Compare spending ratio was used. Consumers were 33 points more likely to select the highest performing hospital when readmission performance was shown using word icons rather than percentages.

Conclusions

Presenting cost and resource use measures effectively to consumers is challenging. This study suggests using actual dollar amounts for cost, but presenting performance on readmissions using evaluative symbols.

Keywords: Quality improvement/report cards, health care costs, medical decision‐making

A key policy approach for curbing the growth in U.S. health care costs is cost transparency, or publicly reporting the prices that hospitals, physicians, and other health providers charge (Sinaiko and Rosenthal 2011; Mehrotra et al. 2012; Patel and Volpp 2012). This strategy is intended to enable consumers to select health care providers on value, in much the same way they make cost‐effective choices when purchasing other goods (Painter and Chernew 2012; Catalyst for Payment Reform 2013; White 2015). By making cost‐informed choices, consumers are expected to spark providers to compete on value (Berwick, James, and Coye 2003; Mehrotra et al. 2012). This “consumer pathway” is considered a crucial mechanism for containing health care costs.

Over the last decade, there has been tremendous growth in health care cost transparency websites. A census conducted in 2012 found 62 state health care price websites, compared with only 10 in 2004 (Kullgren, Duey, and Werner 2013). The growth in these websites has been fueled by state, federal, and foundation efforts. Efforts at the federal level include the Chartered Value Exchange program, which provided technical support on publicly reporting cost and quality data to 24 community collaboratives, as well as the Centers for Medicare and Medicaid Services, which began publishing individual hospitals’ average charges for key inpatient and outpatient procedures in 2013 (Tocknell 2013). At the state level, all but seven states as of mid‐2016 had passed some legislation on price transparency (de Brantes and Delbanco 2016). The Robert Wood Johnson Foundation also pushed this agenda through the Aligning Forces for Quality (AF4Q) program, in which 16 multistakeholder community coalitions were charged with publicly reporting cost data on health care providers, as well as reporting quality and resource use measures (Christianson et al. 2016).

There has also been an increase in publicly reporting resource use and efficiency measures (Hussey et al. 2009; Romano, Hussey, and Ritley 2010; American Institutes for Research 2015; Christianson and Shaw 2016). While these terms have been used synonymously (American Institutes for Research 2015), Romano and colleagues define resource use as the amount of resources used to produce health care, such as measures of hospital length of stay and readmission rates (Romano, Hussey, and Ritley 2010). Efficiency measures, they explain, are a subset of resource measures that compare use of health care resources to quality performance. The motivation to make these measures public is the same as for cost transparency: to enable consumers to make high‐value health care choices (Mehrotra et al. 2012).

There is considerable evidence that comparative reports on health provider quality are complex for consumers to comprehend, particularly when displays include large numbers of health providers or quality measures, technical language, or percentages rather than evaluative displays, like stars or icons (Peters et al. 2007; Faber et al. 2009; Greene and Peters 2009; Hibbard et al. 2012; Schlesinger et al. 2014; Kurtzman and Greene 2015). Comparative cost and resource use information may be even more challenging for consumers, as consumers may misinterpret the data or not find it relevant (The Center for Health Care Quality 2011). One key concern about cost data is that consumers may equate higher cost providers with higher quality providers, which in the context of price transparency means choosing higher cost rather than higher value providers (Fox 2011; Hibbard et al. 2012). In recent national surveys, between 17 and 48 percent of respondents made the incorrect assumption that medical care cost and quality are linked (Fox 2011; Schleifer, Hagelskamp, and Rinehart 2015; Schleifer, Silliman, and Rinehart 2017).

There may also be misinterpretation of resource use measures because a lower score for some measures, like readmission rates, indicates better performance. Prior research has shown that consumers often assume that higher values represent better performance, even when it is not the case (Peters et al. 2007; Hibbard et al. 2012). For example, Peters and colleagues found that 73 percent of study respondents selected the highest quality hospital when a nurse staffing measure was presented with a higher number indicating a better staffing ratio (number of nurses per 100 patients), compared to 60 percent when a lower number indicated better staffing (the number of patients per nurse) (Peters et al. 2007).

There are also questions regarding whether consumers will find cost information, as it is currently presented, to be relevant. In qualitative studies, consumers have expressed greater preference for their out‐of‐pocket costs, rather than the total cost of care, which is typically presented (Blumenthal and Rizzo 1991; Mehrotra et al. 2012; Sommers et al. 2013; Yegian et al. 2013; Blumenthal‐Barby et al. 2015). Also, consumers report a preference for the cost of an episode of care rather than for individual services (Yegian et al. 2013). Some experts have argued that as health plans protect consumers from most of the cost of care, consumers have little incentive to compare prices for health care services (Sinaiko and Rosenthal 2011; Mehrotra et al. 2012; Painter and Chernew 2012; Yegian et al. 2013; Frost and Newman 2016). Recent research has further suggested that only a minority of health care services are appropriate for comparison shopping, as shopping requires having time in advance of the medical need and having multiple available providers (Frost, Newman, and Quincy 2016). National survey data, however, suggest there is consumer demand for health care cost information (Schleifer, Hagelskamp, and Rinehart 2015; The Kaiser Family Foundation 2015; Duke et al. 2017; Schleifer, Silliman, and Rinehart 2017), and the rise in high‐deductible health plans is expected to increase the level of consumer interest in cost data (Mehrotra et al. 2012; Yegian et al. 2013; Ubel 2014).

There has been little empirical research identifying effective approaches to presenting health provider cost data to consumers. A 2012 study by Hibbard and colleagues found that cost data are less likely to be equated with quality when they are presented alongside quality performance data (Hibbard et al. 2012). The study also found that using dollar signs as symbols in comparative displays (e.g., $, $$, $$$), instead of actual dollar amounts, reduced consumers’ likelihood of selecting a low‐cost provider (Hibbard et al. 2012). A qualitative study by American Institutes for Research (AIR) found that consumers strongly prefer the terms “affordable,” “reasonable,” and “lower cost” to the term “low cost” (American Institutes for Research 2014). The same study found that summary measures that combine cost and quality can be viewed as untrustworthy (American Institutes for Research 2014). The few studies that have assessed consumer use of health provider cost data suggest that few consumers are currently using the reports (Mehrotra et al. 2012; Bridges et al. 2015; Desai et al. 2016).

This study uses hypothetical choice experiments to build the evidence base on presenting cost and resource use data to support consumers in making high‐value health care choices. We conducted three online experiments to test the effectiveness of approaches currently used by public reporting websites or that prior qualitative studies suggest will support consumer choice. One experiment tested different approaches to presenting and labeling hospital cost data in order to identify approaches that support selection of high‐value hospitals. Another experiment tested ways to present readmissions data so that consumers did not mistakenly believe that a higher rate was better performance. A third experiment examined how much interest consumers showed in cost data relative to other comparative data, and the extent to which interest in cost data differed based on how it was described (out‐of‐pocket or total, and per visit or average annual). For all our analyses, we examined whether the displays had differential impact on study participants enrolled in high‐deductible health plans compared to those in traditional health plans.

Methods

The three experiments were conducted as part of an online survey of employees of Analog Devices, a large semiconductor company based in Massachusetts with 10,000 employees worldwide. In the summer of 2013, when the survey was conducted, U.S. employees were offered two health plans, a traditional health plan (an HMO) and a high‐deductible health plan, and the company also began offering Castlight's cost and quality transparency product to employees (Castlight Health 2013). A link to the online Qualtrics survey was emailed to Analog's employees across the United States, and participants were offered the opportunity to be entered into a drawing for one of 15 gift cards (either $50 or $100). A total of 420 employees completed the survey. The George Washington University Office of Human Research determined that the study was exempt from review.

Respondents were disproportionately male (66 percent), highly educated (32 percent with a graduate degree), and in excellent or very good health (21 and 44 percent, respectively) (Table S1 in Appendix SA2). Half (51 percent) reported enrollment in the traditional plan while 47 percent were enrolled in a high‐deductible health plan.

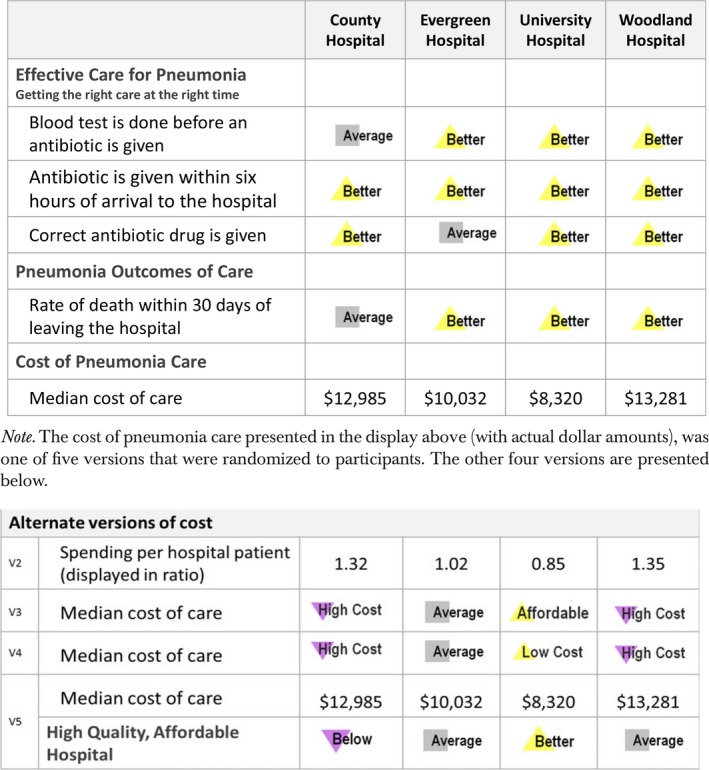

Experiment Testing Presentation of Cost Data

The goal of this experiment was to test whether we could identify approaches to presenting hospital cost data that supported consumer selection of a high‐value hospital, or a hospital with high quality and low cost. Study participants viewed a comparative display of pneumonia quality and cost information on four fictitious hospitals (Figure 1). Two hospitals had top quality ratings across the four quality measures. One of the two (University Hospital) had the lowest cost of care of any of the hospitals ($8,320), making it the high‐value choice, and the other (Woodland Hospital) had the highest median cost of care ($13,281). The other two hospitals had mixed quality measures, and their costs were below Woodland Hospital's median cost and above University Hospital's median cost.

Figure 1.

An Example of the Display from the Cost Presentation Experiment, with the Alternate Cost Displays below

We randomized participants to view one of five cost displays, which differed based upon the indicator of cost (actual dollar amounts, word icons to indicate the median cost of care, or Medicare.gov's Hospital Compare spending per beneficiary cost ratio). The word icons for lower cost hospitals were labeled one of two ways: “affordable” or “low cost.” These two options had been found, respectively, to be more and less effective in a prior qualitative study (American Institutes for Research 2014). We also tested whether adding an indicator that the hospital was a “high‐quality, affordable hospital” in addition to presenting the actual dollar amount would help simplify the choice or result in skepticism, as qualitative findings suggested (American Institutes for Research 2014).
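The assignment step described above can be sketched as simple randomization, in which each participant independently draws one of the five displays with equal probability. This is only an illustration: the paper does not report how the survey platform's randomizer was configured, and the arm labels, the `assign_display` function, the seed, and the participant count of 412 (the sum of the group sizes in Table 1) are assumptions made for the sketch.

```python
import random
from collections import Counter

# Hypothetical labels for the five cost displays described in the text.
COST_DISPLAYS = [
    "median_cost_dollars",
    "cms_spending_ratio",
    "word_icon_affordable",
    "word_icon_low_cost",
    "dollars_plus_high_value_indicator",
]


def assign_display(participant_ids, displays=COST_DISPLAYS, seed=2013):
    """Independently assign each participant to one display with equal probability."""
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    return {pid: rng.choice(displays) for pid in participant_ids}


# 412 is assumed here: it is the sum of the five group sizes in Table 1.
assignments = assign_display(range(1, 413))
arm_sizes = Counter(assignments.values())
```

Simple randomization of this kind does not force equal arm sizes, which would be consistent with the somewhat uneven group sizes (75 to 87) reported for the experiment.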

Study participants were asked which hospital they would select if they were choosing a hospital to treat a family member with pneumonia. The primary dependent variable was whether they selected the high‐value hospital (University Hospital). We additionally examined the time it took participants to select a hospital from the comparative display, which is a measure of cognitive effort or processing time (Glöckner and Betsch 2012).

Analytic Approach

We examined the bivariate associations between the five cost displays and the dependent variables (hospital choice and time to make choice). Following that, we constructed logistic regression models to examine the relationship between cost display approach and selection of the high‐value hospital, controlling for gender, age, educational attainment, race/ethnicity, self‐reported health status, and type of health insurance plan (traditional or high deductible). We additionally tested models that included interactions between display type and health plan type (traditional or high deductible) to test whether there were differential impacts of display based upon insurance type. As there were no statistically significant interactions, the results are not presented.

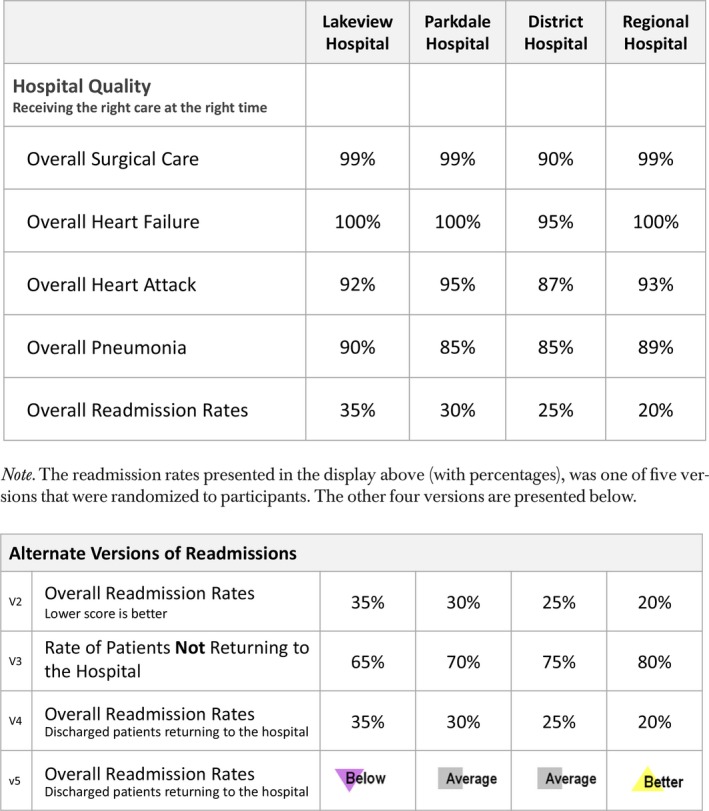

Experiment Testing Presentation of Readmissions Data

This experiment tested ways to present hospital readmission data so that consumers did not mistake low rates for poor performance. As in the cost presentation experiment, participants viewed a comparative display of four fictional hospitals. In this experiment, three hospitals had high quality across the four quality measures (Figure 2). One of the three high‐quality hospitals (Regional Hospital) had a comparatively low readmission rate (20 percent), while the other two (Parkdale and Lakeview) had rates of 30 and 35 percent, respectively. The fourth hospital had lower quality performance.

Figure 2.

An Example of the Display from the Hospital Readmission Presentation Experiment, with the Alternate Readmission Displays below

Study participants were randomized to one of five versions of the readmission data. One was simply the readmission rate as a percentage. The second presented the readmission rate as a percentage and added “Lower score is better.” This strategy was used by four Aligning Forces for Quality public reporting websites, and current websites, like Oregon Hospital Guide and Missouri's Focus on Hospitals, use a similar approach. The third version flipped the readmission rate and presented the rate of patients not returning to the hospital, so that a higher score indicated better performance, similar to the approach tested by Peters et al. (2007). The fourth version added a description of what the readmission rate measures, and the final approach used word icons rather than percentages to show the readmission rate performance (labeled: better, average, and below).

Study participants were asked which hospital they would select, and the main dependent variable was whether they selected the hospital with high quality and a low readmissions rate (Regional Hospital). We also measured the time it took participants to select a hospital.

Analytic Approach

We examined the bivariate relationships between the display approach and the two dependent variables. We then developed logistic regression models, predicting selection of the high‐value hospital. We again tested interactions between display approach and health plan type (traditional or high deductible) and found no significant interactions.

Experiment on Interest in Cost Data

This experiment examined how interested consumers were in cost information relative to other types of comparative information on primary care providers, and whether their interest in cost information differed based upon the type of cost information: out‐of‐pocket costs or total costs, and per visit costs or annual costs.

The experiment was conducted after study participants viewed a comparative display of physician quality performance on diabetes (not reported here) and prior to participants seeing any cost data. Study participants were simply asked how interested they would be in viewing four types of additional comparative information for making a choice among doctors to treat diabetes: patient survey results; quality information for other health conditions; actual reviews from patients, like from Angie's list or RateMDs.com; and cost information.

The way the cost information was described differed across study participants in order to test whether participants were more interested in one type of cost than another. Specifically, participants were randomized to view one of four descriptions of cost information: “your out‐of‐pocket costs for each visit,” “average out‐of‐pocket costs for 1 year of diabetes care,” “average cost per visit for you and the insurance company,” and “average cost of 1 year of diabetes care for you and the insurance company.” Respondents used a four‐point scale to indicate their interest level, where 1 indicated “not interested” and 4 indicated “very interested.”

Analytic Approach

We examined whether the level of interest in the four different types of cost data differed significantly, and whether there was more or less interest in cost data than in the other types of comparative data. We examined this for the whole sample, as well as stratified by health plan type (traditional or high deductible). Following that, we constructed linear regression models to examine the relationship between the type of cost information and the participant's level of interest, controlling for gender, age, educational attainment, race/ethnicity, self‐reported health status, and health insurance type. Finally, we tested models including interactions between cost description and type of health plan, which are presented in Table S4 in Appendix SA2.

Results

Experiment Testing Presentations of Cost Data

In bivariate analyses, the approach to presenting cost data was strongly related to participants’ selection of the high‐value hospital (Table 1). Two presentation approaches (using actual dollar amounts and the word icon “affordable”) resulted in high rates of selecting the high‐value hospital (94 and 93 percent, respectively). Using the term “low cost” in a word icon and adding a “high‐quality, affordable hospital” indicator resulted in rates of selecting the high‐value hospital that were 8–9 points lower (and significantly different from the top presentation approaches at the .10 level). The Hospital Compare spending ratio resulted in the lowest rate of selecting the high‐value hospital (77 percent). Those who viewed the indicator for high‐quality and affordable hospitals made their choice significantly faster than those who viewed the other presentations (22 seconds, compared with 30 to 36 seconds for the others).

Table 1.

Cost Presentation Experiment: Choice of Hospital by Cost Presentation Approach

| | Median Cost ($) (n = 80) | CMS Spending Ratio (n = 75) | Word Icon: Affordable (n = 87) | Word Icon: Low Cost (n = 86) | Indicator for High‐Quality, Affordable Hospital (n = 84) | p‐Value |
|---|---|---|---|---|---|---|
| Choice of high‐quality/low‐cost hospital (University Hospital) (%, 95% CI) | 93.8 (88.4–99.1) | 77.3 (67.8–86.9) | 93.1 (87.7–98.5) | 84.9 (77.3–92.5) | 84.5 (76.7–92.3) | .01 |
| Time to make choice in seconds (mean, 95% CI) | 30.5 (24.0–36.9) | 35.8 (30.4–41.1) | 30.8 (25.9–35.7) | 30.1 (23.7–36.5) | 22.1 (18.2–26.0) | .01 |

Note. p‐Values are based upon a chi‐square test for the choice of high‐quality/low‐cost hospital, and ANOVA for time to make choice.
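The chi‐square test named in the note can be approximated from the published figures. The sketch below is a rough check under stated assumptions, not the authors' analysis: the counts are reconstructed from Table 1's rounded percentages and group sizes (raw counts are not reported), and the function names `chi_square_2xk` and `chi2_sf_df4` are hypothetical. The tail probability uses the closed form available for a chi‐square distribution with 4 degrees of freedom, which is what a 2 × 5 table implies.

```python
import math

# Counts reconstructed from Table 1's rounded percentages and group sizes.
# This is an assumption: the paper does not report raw counts.
selected = [75, 58, 81, 73, 71]  # chose University Hospital
totals = [80, 75, 87, 86, 84]    # participants per cost display


def chi_square_2xk(successes, ns):
    """Pearson chi-square statistic for a 2 x k table of successes and failures."""
    pooled = sum(successes) / sum(ns)
    stat = 0.0
    for s, n in zip(successes, ns):
        cells = ((s, n * pooled), (n - s, n * (1 - pooled)))
        for observed, expected in cells:
            stat += (observed - expected) ** 2 / expected
    return stat


def chi2_sf_df4(x):
    """Upper-tail probability of a chi-square variable with 4 degrees of freedom."""
    return math.exp(-x / 2) * (1 + x / 2)


stat = chi_square_2xk(selected, totals)
p_value = chi2_sf_df4(stat)
```

Under these reconstructed counts, the statistic comes out near 13 and the p‐value near .01, in line with the value shown in the table.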

Findings from logistic regression analyses (Table S2 in Appendix SA2) were similar. There was no significant difference between actual dollar amounts and the “affordable” word icon, but compared to the actual dollar amounts, the other three presentation approaches resulted in 0.17 to 0.33 times the odds of selecting the high‐value hospital. Participants in fair/poor health and non‐whites also had lower odds of selecting the high‐value hospital compared to those in excellent health and whites, respectively. There was no significant difference in selection of the high‐value hospital based upon enrollment in a traditional or high‐deductible health plan.

Experiment to Test Presentations of Readmissions Data

Respondents who viewed readmission performance using word icons selected the high‐value hospital 25–33 percentage points more frequently than those who viewed any of the four other displays, which all presented performance as some form of percentage (Table 2). Those who viewed the word icons selected a hospital in about one‐half to two‐thirds the time it took those who viewed the other presentations with percentages.

Table 2.

Readmissions Presentation Experiment: Choice of Hospital by Readmission Presentation Approach

| | Readmission Rate (%) (n = 81) | Readmission Rate with “Lower Score Is Better” (n = 75) | Rate of Patients Not Returning to the Hospital (n = 87) | Readmission Rate with Explanation (n = 86) | Readmission Rate Using Word Icons (n = 91) | p‐Value |
|---|---|---|---|---|---|---|
| Choice of highest quality hospital (regional) (%, 95% CI) | 58.0 (47.2–68.9) | 57.3 (46.0–68.6) | 65.5 (55.4–75.6) | 59.3 (48.8–69.8) | 91.2 (85.3–97.1) | <.00 |
| Time to make choice, in seconds (mean, 95% CI) | 47.6 (41.1–54.2) | 39.9 (35.2–44.6) | 44.4 (38.9–49.9) | 56.4 (48.2–64.6) | 26.2 (22.2–30.2) | <.00 |

Note. p‐Values are based upon a chi‐square test for the choice of highest quality hospital, and ANOVA for time to make choice.
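The 95% confidence intervals in the table can be roughly reproduced with a normal‐approximation (Wald) interval for a proportion. This is a sketch under assumptions: the paper does not state which interval method was used, the counts are reconstructed from the rounded percentages and group sizes, and `wald_ci` is a hypothetical helper name.

```python
import math


def wald_ci(successes, n, z=1.96):
    """Normal-approximation (Wald) interval for a proportion, clipped to [0, 1]."""
    p = successes / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


# Counts reconstructed from Table 2 (an assumption; raw counts are not reported).
word_icons = wald_ci(83, 91)    # word icon display: 91.2% of 91
percent_only = wald_ci(47, 81)  # plain percentage display: 58.0% of 81
```

With these inputs the intervals land within a few tenths of a percentage point of the published ones. The small remaining gap may reflect a slightly different interval method, so the match should be read as approximate.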

Notably, there was no difference in the rate of selecting the high‐value hospital when participants viewed percentages with a label stating a “lower score is better” or an explanation of what readmission rates measure, compared to presenting simply the readmission rate (57, 59, and 58 percent, respectively). Flipping the indicator to be the rate of patients not returning to the hospital resulted in a slightly higher rate of high‐value hospital selections (66 percent), but not significantly so.

Logistic regression analysis (Table S3 in Appendix SA2) confirmed the bivariate results. Respondents who viewed word icons had 8.6 times higher odds of selecting the high‐value hospital compared to those who viewed the readmission rate as a percentage. Again, there were no significant differences in selecting the high‐value hospital based upon the type of health plan.
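For intuition about the size of this effect, an unadjusted odds ratio can be computed directly from the bivariate results. The sketch below is not the adjusted model in Table S3: it uses counts reconstructed from Table 2's rounded percentages and group sizes (an assumption), includes no covariates, and `odds_ratio` is a hypothetical helper name.

```python
def odds_ratio(events_a, n_a, events_b, n_b):
    """Unadjusted odds ratio of group A relative to group B."""
    odds_a = events_a / (n_a - events_a)
    odds_b = events_b / (n_b - events_b)
    return odds_a / odds_b


# Word icons (83 of 91) versus readmission rate as a percentage (47 of 81).
# Counts are reconstructed from Table 2, not taken from raw data.
unadjusted_or = odds_ratio(83, 91, 47, 81)
```

The unadjusted ratio is about 7.5, the same order of magnitude as the adjusted estimate of 8.6. The difference presumably reflects the covariates in the regression model and any difference in the analytic sample.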

Experiment on Interest in Cost Data

Respondents were most interested in cost information when it was described as “your out‐of‐pocket costs for each visit” (Table 3). The mean interest score was 3.4 on a four‐point scale, as compared to 3.2 for average total cost per visit for you and the insurance company, 3.1 for average total cost of 1 year of diabetes care for you and the insurance company, and 3.0 for average out‐of‐pocket costs for 1 year of diabetes care, which was significantly lower. The interest level for out‐of‐pocket visit costs was significantly higher than for quality information for other health conditions. It was also higher, although not significantly so, compared with interest for patient survey results and actual reviews from other patients.

Table 3.

Interest in Cost Data of Varying Types

| How Much Interest Respondents Reported in Viewing the Following Types of Information for Making a Choice among Doctors to Treat Diabetes | Total Sample (n = 409) | High‐Deductible Plan Respondents (n = 210) | HMO Plan Respondents (n = 185) | p‐Value |
|---|---|---|---|---|
| Patient survey results | 3.2 (3.2–3.3) | 3.2 (3.1–3.3) | 3.3 (3.1–3.4) | .59 |
| Quality information for other health conditions | 3.2ᵃ (3.1–3.2) | 3.0ᵃ (2.9–3.2) | 3.3 (3.1–3.4) | .02 |
| Cost options (randomized) | | | | |
| Your out of pocket costs for each visit (n = 106) | 3.4 (3.2–3.5) | 3.3 (3.1–3.5) | 3.4 (3.2–3.7) | .41 |
| Average out‐of‐pocket costs for 1 year of diabetes care (n = 101) | 3.0ᵃ (2.8–3.2) | 3.1 (2.8–3.3) | 2.9ᵃ (2.6–3.2) | .47 |
| Average cost per visit for you and the insurance company (n = 105) | 3.2 (3.1–3.4) | 3.1 (2.8–3.4) | 3.4 (3.2–3.6) | .14 |
| Average cost of 1 year of diabetes care for you and the insurance company (n = 96) | 3.1 (2.9–3.3) | 3.3 (3.0–3.5) | 2.9ᵃ (2.6–3.2) | .04 |
| Actual reviews from other patients | 3.3 (3.2–3.4) | 3.2 (3.1–3.4) | 3.3 (3.2–3.4) | .43 |

Note. Values are mean scores (95% CI) on a scale from 1 (not interested) to 4 (very interested). The two plan columns and the p‐value column fall under the heading Health Plan Type.

ᵃ p < .05 for the difference in interest from those shown “your out‐of‐pocket costs for each visit.”

Respondents in the high‐deductible plan valued the average annual total costs of care more than those in the traditional health plan (3.3 vs. 2.9), and as much as they valued out‐of‐pocket per visit cost. In contrast, traditional health plan enrollees valued quality information for health conditions other than diabetes more than the high‐deductible plan enrollees.

Table S4 in Appendix SA2 shows the multivariate analyses. The initial model confirms that out‐of‐pocket visit cost data are significantly preferred over the annual cost data (out‐of‐pocket and average annual) by about one‐third of a point. In a supplemental model that included interaction terms between the cost type and enrollment in the high‐deductible plan, we also confirm that high‐deductible enrollees had greater interest in average annual total cost information.

Discussion

This study confirms that consumers are interested in the cost of their health care (Schleifer, Hagelskamp, and Rinehart 2015; The Kaiser Family Foundation 2015; Duke et al. 2017; Schleifer, Silliman, and Rinehart 2017). Consumers expressed as much or more interest in comparative cost information on health providers as they did in other types of comparative information, like patient reviews and patient survey data. There was greatest interest in out‐of‐pocket per visit costs; however, the level of interest in total cost was still reasonably high. As many have predicted, interest in the total cost of care was significantly higher for those enrolled in high‐deductible health plans compared to those in a traditional health plan. However, the difference in interest level by health plan type was not large in magnitude (less than a half point on a four‐point scale).

The study also highlights that there are challenges in presenting cost information so that consumers make high‐value choices. Most notably, we found that consumers often misunderstood Hospital Compare's spending ratio. When they viewed the spending ratio, 77 percent selected the high‐value hospital compared to 94 percent of those who viewed the median cost of care in dollars. The study also suggests that language matters when describing low‐cost providers. We observed a trend in which fewer people selected the high‐value hospital when it was described as “low cost” compared to “affordable” (85 vs. 93 percent). And, finally, we found that we may have over‐nudged consumers by adding an indicator of “high‐quality, affordable hospital” in addition to the cost and quality information. When consumers viewed it, they were 9 percentage points less likely to select the high‐value hospital than when they viewed the same display without it. Future research should test the impact of how high‐value providers are described and the circumstances in which it is helpful to highlight high‐value providers.

We also found that presenting readmission data to consumers was tricky. By far the most effective way to do so was using an evaluative icon or symbol rather than presenting the rate as a percentage. When we used a word icon that described the hospital as “better,” “average,” or “below” on readmissions, we found 91 percent selected the top performing hospital, compared to 58 percent when the percentage was used. Further, we found that the common practices of adding language stating that a “lower score is better” and describing what the readmission rate measured did not increase selection of the top performing hospital at all.

Interestingly, while high‐deductible enrollees expressed more interest in cost information, they were no more likely than those in a traditional health plan to select the high‐value hospital in either the cost or readmissions experiments. Nor did the presentation approaches have a differential impact on high‐deductible enrollees.

The study findings should be understood in the context of the study's limitations. Most notably, study participants were asked to make hypothetical choices under fictional scenarios. We do not know whether consumers would make the same pattern of choices if the selection of hospitals were for actual care, when they might spend more time selecting hospitals. Nor do we know how much the specific scenarios (e.g., measures, levels, hospital names) presented influenced participants’ choices. For example, in the cost presentation experiment, the high‐value hospital was labeled “University Hospital.” This label might have positively impacted selection of the high‐value hospital; however, it would have done so across all five experimental conditions as the hospital was labeled the same way for all study participants regardless of the presentation they viewed. Additionally, the cost and resource use experiments were both for hospital care. While these are commonly reported measures for hospitals, which is why we tested them, consumers may be more amenable to “shopping” for elective procedures (Shaller, Kanouse, and Mark 2013).

The study sample was also a highly educated sample. Therefore, the absolute levels of our findings are likely not generalizable. However, as such a highly educated sample still often misunderstood the spending ratio and readmission rate percentages, we probably underestimated the challenge that many people would face when presented with cost and efficiency displays.

In conclusion, this study confirms consumer interest in cost data on health care providers, but underscores the challenges in presenting comparative cost and efficiency information effectively to consumers. We found that commonly used approaches to presenting both types of measures, like Hospital Compare's spending ratio and adding a label that a “lower score is better” on readmissions rates presented as percentages, resulted in suboptimal choices for many consumers. The study was able to identify approaches to presenting both cost and readmissions data that were far more effective. Specifically, presenting hospital cost data using median costs and readmissions data using word icons resulted in substantially more consumers selecting high‐value hospitals (16 and 25 percentage points, respectively). Cost data were also effectively presented using a word icon labeled as “affordable.” While the term is effective with consumers, using it may raise flags with information providers, as it can be interpreted in different ways. Given the complexity of presenting this information to consumers, we recommend that public reports use approaches previously found to be effective through empirical testing, or that new approaches be tested with consumers to ensure that the data are interpreted as intended.

Supporting information

Appendix SA1: Author Matrix.

Appendix SA2:

Table S1. Characteristics of the Study Sample.

Table S2. Cost Presentation Experiment—Choice of High‐Value Hospital by Cost Presentation Approach, Multivariate Analysis.

Table S3. Readmissions Presentation Experiment—Choice of High‐Value Hospital by Cost Presentation Approach, Multivariate Analysis.

Table S4. Interest in Cost Data Experiment, Multivariate Analyses.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: We are grateful to Kathy Reinhardt, formerly the Director of Benefits of Analog Devices, Inc., for her willingness to have the employees of Analog Devices participate in this study. We would like to thank Barbra Rabson of Massachusetts Health Quality Partners (MHQP) and Barbara Lambiaso, formerly of MHQP, for their interest in this research and their effort in identifying an employer partner for conducting the study. Finally, we would like to thank Diane C. Farley of Penn State University for her role in setting up the online survey.

This research was conducted as part of the evaluation of the Aligning Forces for Quality initiative, which was funded by the Robert Wood Johnson Foundation.

Disclosures: None.

Disclaimer: None.

References

- American Institutes for Research. 2014. “How to Report Cost Data to Promote High‐Quality, Affordable Choices: Findings from Consumer Testing” [accessed on February 5, 2018]. Available at http://www.rwjf.org/content/dam/farm/reports/issue_briefs/2014/rwjf410706

- American Institutes for Research. 2015. “Reporting Resource Use Information to Consumers: Findings from Consumer Testing.” Princeton, NJ [accessed on February 5, 2018]. Available at http://forces4quality.org/af4q/download-document/11298/Resource-16-002_-_reporting_resource_use_information_to_consumers_-_final.pdf

- Berwick, D. M., James B., and Coye M. J. 2003. “Connections between Quality Measurement and Improvement.” Medical Care 41 (1): I30–8.

- Blumenthal, D., and Rizzo J. A. 1991. “Who Cares for Uninsured Persons? A Study of Physicians and Their Patients Who Lack Health Insurance.” Medical Care 29: 502–20.

- Blumenthal‐Barby, J. S., Robinson E., Cantor S. B., Naik A. D., Russell H. V., and Volk R. J. 2015. “The Neglected Topic: Presentation of Cost Information in Patient Decision Aids.” Medical Decision Making: An International Journal of the Society for Medical Decision Making 35 (4): 412–8.

- de Brantes, F., and Delbanco S. 2016. “Report Card on State Price Transparency Laws.” Newtown, CT [accessed on February 5, 2018]. Available at https://www.catalyze.org/product/2016-report-card/

- Bridges, J. F. P., Berger Z., Austin M., Nassery N., Sharma R., Chelladurai Y., Karmarkar T. D., and Segal J. B. 2015. “Public Reporting of Cost Measures in Health: An Environmental Scan of Current Practices and Assessment of Consumer Centeredness.” Rockville, MD [accessed on February 5, 2018]. Available at https://www.ncbi.nlm.nih.gov/books/NBK279761/

- Castlight Health. 2013. “Castlight Signals Better Health Care Costs and Outcomes for Analog Devices.” Press release [accessed on February 5, 2018]. Available at http://www.castlighthealth.com/press-releases/castlight-signals-better-health-care-costs-and-outcomes-for-analog-devices-unique/

- Catalyst for Payment Reform. 2013. “The State of the Art of Price Transparency Tools and Solutions.” Berkeley, CA [accessed on February 5, 2018]. Available at https://www.catalyze.org/wp-content/uploads/2017/04/2013-The-State-of-the-Art-of-Price-Transparency-Tools-and-Solutions.pdf

- The Center for Health Care Quality. 2011. “Lessons Learned in Public Reporting: Crossing the Cost and Efficiency Frontier” [accessed on February 5, 2018]. Available at http://forces4quality.org/af4q/download-document/3021/Resource-RegTable_CostEfficiency.pdf

- Christianson, J., and Shaw B. 2016. “Did Aligning Forces for Quality (AF4Q) Improve Provider Performance Transparency? Evidence from a National Tracking Study” [accessed on February 5, 2018]. Available at http://hhd.psu.edu/media/CHCPR/alignforce/files/2016_PR_Research_Summary.pdf

- Christianson, J., Shaw B., Greene J., and Scanlon D. 2016. “Reporting Provider Performance: What Can Be Learned from the Experience of Multi‐Stakeholder Community Coalitions.” American Journal of Managed Care 22: S382–92.

- Desai, S., Hatfield L. A., Hicks A. L., Chernew M. E., and Mehrotra A. 2016. “Association Between Availability of a Price Transparency Tool and Outpatient Spending.” Journal of the American Medical Association 315 (17): 1874.

- Duke, C., Stanik C., Beaudin‐Seiler B., Garg P., Leis H., Fields J., Ducas A., and Knight M. 2017. “Right Place, Right Time.” Washington, DC [accessed on February 5, 2018]. Available at http://altarum.org/sites/default/files/uploaded-publication-files/USE_RPRT_Consumer_Perspectives_Final.pdf

- Faber, M., Bosch M., Wollersheim H., Leatherman S., and Grol R. 2009. “Public Reporting in Health Care: How Do Consumers Use Quality‐of‐Care Information? A Systematic Review.” Medical Care 47 (1): 1–8.

- Fox, S. 2011. “Social Life of Health Information 2011,” Pew Research Center's Internet & American Life. Washington, DC: Pew Research Center.

- Frost, A., and Newman D. 2016. “Spending on Shoppable Services in Health Care.” Washington, DC [accessed on February 5, 2018]. Available at http://www.healthcostinstitute.org/files/Shoppable%20Services%20IB%203.2.16_0.pdf

- Frost, A., Newman D., and Quincy L. 2016. “Health Care Consumerism: Can the Tail Wag the Dog?” Health Affairs Blog [accessed on February 5, 2018]. Available at https://www.healthaffairs.org/do/10.1377/hblog20160302.053566/full/

- Glöckner, A., and Betsch T. 2012. “Decisions Beyond Boundaries: When More Information Is Processed Faster Than Less.” Acta Psychologica 139 (3): 532–42.

- Greene, J., and Peters E. 2009. “Medicaid Consumers and Informed Decision Making.” Health Care Financing Review 20: 25–40.

- Hibbard, J. H., Greene J., Sofaer S., Firminger K., and Hirsh J. 2012. “An Experiment Shows That a Well‐Designed Report on Costs and Quality Can Help Consumers Choose High‐Value Health Care.” Health Affairs 31: 560–8.

- Hussey, P. S., de Vries H., Romley J., Wang M. C., Chen S. S., Shekelle P. G., and McGlynn E. A. 2009. “A Systematic Review of Health Care Efficiency Measures.” Health Services Research 44 (3): 784–805.

- The Kaiser Family Foundation. 2015. “Kaiser Health Tracking Poll: October 2015.” Washington, DC [accessed on February 5, 2018]. Available at http://files.kff.org/attachment/topline-methodology-kaiser-health-tracking-poll-October-2015

- Kullgren, J. T., Duey K. A., and Werner R. M. 2013. “A Census of State Health Care Price Transparency Websites.” Journal of the American Medical Association 309 (23): 2437–8.

- Kurtzman, E. T., and Greene J. 2015. “Effective Presentation of Health Care Performance Information for Consumer Decision Making: A Systematic Review.” Patient Education and Counseling 99: 36–43.

- Mehrotra, A., Hussey P. S., Milstein A., and Hibbard J. H. 2012. “Consumers’ and Providers’ Responses to Public Cost Reports, and How to Raise the Likelihood of Achieving Desired Results.” Health Affairs 31 (4): 843–51.

- Painter, M., and Chernew M. 2012. “Counting Change: Measuring Health Care Prices, Costs, and Spending.” Princeton, NJ [accessed on February 5, 2018]. Available at http://www.rwjf.org/content/dam/farm/reports/reports/2012/rwjf72445

- Patel, M. S., and Volpp K. G. 2012. “Leveraging Insights from Behavioral Economics to Increase the Value of Health‐Care Service Provision.” Journal of General Internal Medicine 27 (11): 1544–7.

- Peters, E., Dieckmann N., Dixon A., Hibbard J. H., and Mertz C. K. 2007. “Less Is More in Presenting Quality Information to Consumers.” Medical Care Research and Review 64: 169–90.

- Romano, P., Hussey P., and Ritley D. 2010. “Selecting Quality and Resource Use Measures: A Decision Guide for Community Quality Collaboratives” [accessed on February 5, 2018]. Available at http://www.ahrq.gov/sites/default/files/publications/files/perfmeas.pdf

- Schleifer, D., Hagelskamp C., and Rinehart C. 2015. “How Much Will It Cost? How Americans Use Prices in Health Care.” New York: Public Agenda.

- Schleifer, D., Silliman R., and Rinehart C. 2017. “Still Searching: How People Use Health Care Price Information in the United States, New York State, Florida, Texas and New Hampshire.” New York [accessed on February 5, 2018]. Available at https://www.publicagenda.org/files/PublicAgenda_StillSearching_2017.pdf

- Schlesinger, M., Kanouse D. E., Martino S. C., Shaller D., and Rybowski L. 2014. “Complexity, Public Reporting, and Choice of Doctors: A Look Inside the Blackest Box of Consumer Behavior.” Medical Care Research and Review: MCRR 71 (5 Suppl): 38S–64S.

- Shaller, D., Kanouse D. E., and Mark S. 2013. “Context‐Based Strategies for Engaging Consumers with Public Reports about Health Care Providers.” Medical Care Research and Review 71: 17S–37S.

- Sinaiko, A. D., and Rosenthal M. B. 2011. “Increased Price Transparency in Health Care — Challenges and Potential Effects.” New England Journal of Medicine 364: 891–4.

- Sommers, R., Goold S. D., McGlynn E. A., Pearson S. D., and Danis M. 2013. “Focus Groups Highlight That Many Patients Object to Clinicians’ Focusing on Costs.” Health Affairs (Project Hope) 32 (2): 338–46.

- Tocknell, M. D. 2013. “CMS Releases Hospital Pricing Data.” Health Leaders Media.

- Ubel, P. 2014. “Health Care Decisions in the New Era of Health Care Reform: Can Patients in the United States Become Savvy Health Care Consumers?” North Carolina Law Review.

- White, J. 2015. “Promoting Transparency and Clear Choices in Health Care.” Health Affairs Blog [accessed on June 3, 2016]. Available at http://healthaffairs.org/blog/2015/06/09/promoting-transparency-and-clear-choices-in-health-care/

- Yegian, J. M., Dardess P., Shannon M., and Carman K. L. 2013. “Engaged Patients Will Need Comparative Physician‐Level Quality Data and Information about Their Out‐of‐Pocket Costs.” Health Affairs (Project Hope) 32 (2): 328–37.
