Abstract

A smile is the most frequent facial expression, but not all smiles are equal. A social-functional account holds that smiles of reward, affiliation, and dominance serve basic social functions, including rewarding behavior, bonding socially, and negotiating hierarchy. Here, we characterize the facial-expression patterns associated with these three types of smiles. Specifically, we modeled the facial expressions using a data-driven approach and showed that reward smiles are symmetrical and accompanied by eyebrow raising, affiliative smiles involve lip pressing, and dominance smiles are asymmetrical and contain nose wrinkling and upper-lip raising. A Bayesian-classifier analysis and a detection task revealed that the three smile types are highly distinct. Finally, social judgments made by a separate participant group showed that the different smile types convey different social messages. Our results provide the first detailed description of the physical form and social messages conveyed by these three types of functional smiles and document the versatility of these facial expressions.

Keywords: facial expressions, emotion, smile, reverse correlation, social perception

Everyone knows what a smiley face means. But real smiles are another story and not always a happy one. Similar to a little black dress or a classic suit, smiles are a perfect fit for many social occasions, ranging from reuniting with a best friend to public humiliation (Landis, 1924). Although people smile when they feel positive emotions (Ekman, 1973), they also smile when they are miserable (Ekman, 2009), uncomfortable (Woodzicka & LaFrance, 2001), or embarrassed (Keltner, 1995). Indeed, facial expressions involving the contraction of the zygomaticus major muscle constitute a single category, the smile, but could have different phylogenetic roots, as is reflected in animal ethology. For example, chimpanzees and canids retract the lip corners in functionally distinct facial expressions that appear during play but also when communicating submission or threat (Fox, 1970; Parr & Waller, 2006).

Based on observations of both animal and human behavior, the simulation-of-smiles model (SIMS; Niedenthal, Mermillod, Maringer, & Hess, 2010) proposes at least three subtypes of smile, each defined by its role in the resolution of major adaptive problems of social living (Keltner & Gross, 1999): reward smiles displayed to reward the self or other people and to communicate positive experiences or intentions; affiliative smiles to signal appeasement and create and maintain social bonds; and dominance smiles to negotiate status within and across social hierarchies. In theory, reward smiles are displayed during positive sensory and social experiences and are thus accompanied by and can reinforce pleasurable sensations through afferent feedback. Perceiving a reward smile can also elicit positive feelings through facial mimicry (Niedenthal et al., 2010). Reward smiles—which correspond to enjoyment smiles described in the literature (Ekman, Davidson, & Friesen, 1990)—may have evolved from the play face of primates and canids (Fox, 1970; Parr & Waller, 2006). Given previous findings, reward smiles should involve smooth and symmetrical action of the zygomaticus major muscle and could possibly be accompanied by eye constriction (Frank & Ekman, 1993).

Affiliative smiles facilitate social bonding by communicating approachability, acknowledgment, and appeasement (Eibl-Eibesfeldt, 1972; Ekman, 2009; Keltner, 1995) and thus may be functionally similar to the silent bared-teeth display in chimpanzees that occurs during grooming, sexual solicitation, and submission (Parr & Waller, 2006). Finally, dominance smiles serve to maintain and negotiate social or moral status and are associated with superiority or pride (Senior, Phillips, Barnes, & David, 1999; Tracy & Robins, 2008), defiance (Darwin, 1872/1999), derision, and contempt (Ekman, 2009; Ekman & Friesen, 1986). Unlike reward and affiliative smiles, dominance smiles are assumed to elicit negative feelings in observers (Boksem, Smolders, & De Cremer, 2009; Davidson, Ekman, Saron, Senulis, & Friesen, 1990). No homologous primate facial expression is known; however, some facial expressions displayed by high-status animal aggressors involve smile components (Parr & Waller, 2006; Parr, Waller, & Vick, 2007).

Although comparative studies and theoretical developments provide some insight into how smiles communicate reward, affiliation, and dominance, their exact facial-expression patterns remain unknown. In the studies reported here, we used a data-driven approach to mathematically model the dynamic facial-expression patterns (henceforth called models) communicating each smile type to individual observers (Study 1). We hypothesized that because the three smile types serve different social functions, each should be conveyed using a specific facial-expression pattern. We then tested the extent to which the physical similarities and differences between smile models predicted participants’ responses in a verification task (Study 2). Finally, we predicted that models of reward, affiliative, and dominance smiles would reliably communicate related social motives (i.e., positive feelings, social connectedness, and superiority, respectively) and tested this hypothesis in the final experiment (Study 3).

In Study 1, we mathematically modeled the dynamic facial-expression patterns of three smile types using a data-driven approach that combined a dynamic facial-expression generator (Yu, Garrod, & Schyns, 2012) with reverse correlation (Ahumada & Lovell, 1971) and subjective human perception (e.g., Jack, Garrod, & Schyns, 2014). In brief, each participant observed a large sample of random facial animations representing combinations of biologically plausible facial movements as described by action units of the Facial Action Coding System (FACS; Ekman & Friesen, 1978). Each facial expression included the bilateral or unilateral Lip Corner Puller (action unit, or AU, 12)—a main component of smiling—and participants rated the extent to which every animation represented a reward, affiliative, or dominance smile (e.g., “reward, very strong”). Using these responses, we computed a statistically robust dynamic facial-expression model of each smile type for each participant and then analyzed the resulting patterns.

We report all measures and manipulations including all data exclusions. In each of the three experiments, we sought to maximize statistical power and to recruit as many participants as possible within approximately 3 weeks (with a minimum of 30 participants per study or per cell). The institutional review board at the University of Wisconsin–Madison approved all three studies.

Study 1: Construction of Dynamic Smile Models

Method

Participants

Fifty-five U.S. students (32 female; mean age = 18.76 years, SD = 0.79) participated in exchange for course credit. We excluded data from 12 participants (8 female): 9 participants who did not complete the experiment, 1 participant who did not follow the instructions, and 2 African American participants whose recognition performance could have been influenced by faces from another race (i.e., White) used as stimuli (Elfenbein & Ambady, 2002). All participants had self-reported normal or corrected-to-normal vision.

Stimuli

The stimuli comprised 2,400 random facial animations, created using a dynamic facial-expression generator (Yu et al., 2012) and a 3-D morphable model (Blanz & Vetter, 1999). Figure 1 illustrates the stimulus-generation procedure. On each one of 2,400 experimental trials, the dynamic facial-expression generator randomly selected the bilateral Lip Corner Puller (AU12), the left Lip Corner Puller (AU12L), or the right Lip Corner Puller (AU12R)—in the example trial shown in Figure 1, the left Lip Corner Puller (AU12L)—plus a random sample of between 1 and 4 other AUs (e.g., in Fig. 1, the Nose Wrinkler, AU9, and the Upper Lip Raiser, AU10) selected from a core set of 36 AUs. For each separate AU, a random movement was assigned using randomly selected values specifying each of six temporal parameters: onset latency, acceleration, peak amplitude, peak latency, deceleration, and offset latency (see labels illustrating the red curve in Fig. 1). We used a cubic Hermite spline interpolation (five control points, 30 time frames, 24 frames per second) to generate the time course of each AU. We then presented the random facial animation on one of eight White face identities (four female, four male; mean age = 23.0 years, SD = 4.1) captured under the same conditions of illumination (2,600 lux) and recording distance (143 cm; see Yu et al., 2012). All animations started and ended with a neutral expression and had the same duration of 1.25 s.
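The stimulus-generation step can be illustrated with a minimal sketch. It is not the generator of Yu et al. (2012): the names in `OTHER_AUS` are hypothetical placeholders for the core set of 36 AUs, the parameter ranges are arbitrary, acceleration and deceleration are omitted, and placing the five control points at the start, onset latency, peak latency, offset latency, and end of the animation is an assumption. Tangents are set to zero at local extrema and plateaus (the first step of the Fritsch-Carlson method), so each curve stays between neutral and its peak amplitude.

```python
import random

N_FRAMES = 30  # 1.25 s at 24 frames per second
CORE_AUS = ["AU12", "AU12L", "AU12R"]  # bilateral, left, and right Lip Corner Puller
OTHER_AUS = [f"AU_{k}" for k in range(36)]  # hypothetical placeholders for the core set of 36 AUs


def tangents(xs, ys):
    """Finite-difference tangents, set to zero at local extrema and plateaus
    so the curve does not overshoot (first step of Fritsch-Carlson)."""
    m = []
    for i in range(len(xs)):
        lo, hi = max(i - 1, 0), min(i + 1, len(xs) - 1)
        slope = (ys[hi] - ys[lo]) / (xs[hi] - xs[lo])
        if 0 < i < len(xs) - 1 and (ys[i] - ys[i - 1]) * (ys[i + 1] - ys[i]) <= 0:
            slope = 0.0
        m.append(slope)
    return m


def hermite_curve(xs, ys, n_frames=N_FRAMES):
    """Cubic Hermite spline through control points (xs in frames, strictly
    increasing, xs[0] == 0 and xs[-1] == n_frames - 1), sampled at every frame."""
    m = tangents(xs, ys)
    curve = []
    for f in range(n_frames):
        k = max(i for i in range(len(xs) - 1) if xs[i] <= f)  # segment containing f
        h = xs[k + 1] - xs[k]
        t = (f - xs[k]) / h
        h00 = 2 * t**3 - 3 * t**2 + 1  # Hermite basis functions
        h10 = t**3 - 2 * t**2 + t
        h01 = -2 * t**3 + 3 * t**2
        h11 = t**3 - t**2
        curve.append(h00 * ys[k] + h10 * h * m[k] + h01 * ys[k + 1] + h11 * h * m[k + 1])
    return curve


def random_au_curve(rng):
    """Random activation curve from five control points: start, onset latency,
    peak latency (at peak amplitude), offset latency, end. Starts and ends neutral."""
    onset = rng.randint(1, 8)
    peak = rng.randint(onset + 2, 18)
    offset = rng.randint(peak + 2, 27)
    amplitude = rng.uniform(0.2, 1.0)
    return hermite_curve([0, onset, peak, offset, N_FRAMES - 1],
                         [0.0, 0.0, amplitude, 0.0, 0.0])


def random_trial(rng):
    """One Lip Corner Puller variant plus 1-4 other AUs, each with its own curve."""
    aus = [rng.choice(CORE_AUS)] + rng.sample(OTHER_AUS, rng.randint(1, 4))
    return {au: random_au_curve(rng) for au in aus}


trial = random_trial(random.Random(0))
```

Each trial maps every selected AU to a 30-frame activation curve; a rendering system would then apply these curves to a face identity.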

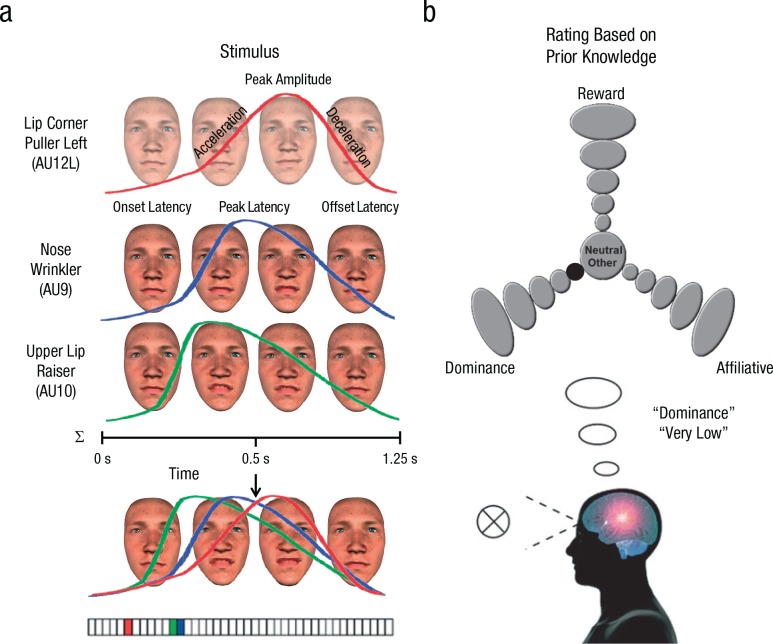

Fig. 1.

Modeling dynamic facial-expression patterns of the three smile types. On each experimental trial, the dynamic facial-expression generator (Yu, Garrod, & Schyns, 2012) selected a core face movement characteristic of a smile (AU12) and a random subset of other action units (AUs). In the example trial shown in (a), the core face movement was the left Lip Corner Puller (AU12L), and the random AUs were the Nose Wrinkler (AU9) and the Upper Lip Raiser (AU10). For each individual AU selected, the generator assigned a random movement by randomly selecting values for six temporal parameters (see the labels on the top red curve) and combined these dynamic AUs to produce a random photorealistic facial animation (represented here by the sequence of four face images). The color-coded vector at the bottom represents the three randomly selected AUs that make up the stimulus on this trial. Each participant categorized 2,400 such facial animations displayed on same-race faces. On each trial, participants categorized the facial animation as one of the three smile types—reward, affiliative, or dominance—and rated the degree to which they thought it represented that smile type. If the facial-expression pattern did not correspond to any of the listed labels, participants selected “neutral/other.” The example shown in (b) illustrates a smile rated as representing a dominance smile to a very low degree (represented by the black dot on the “dominance” arm of the scale).

Procedure

Figure 1b illustrates the task procedure. On each experimental trial, participants viewed the randomly generated stimulus and, on the basis of their prior knowledge, categorized it into one of the three smile types—reward, affiliative, or dominance—and indicated the degree to which they thought it represented that smile type. Participants gave their responses using a modified Geneva Emotion Wheel (see Scherer, Shuman, Fontaine, & Soriano, 2013). If none of these labels accurately described the facial animation, participants selected “neutral/other.” In other words, even though all animations involved the Lip Corner Puller—a core component of smiling—participants were not constrained to categorize any of the facial animations as a smile. Each participant categorized 2,400 such random facial animations over twelve 20-min blocks within a week. Each stimulus was 600 × 800 pixels (average width = 9.31 cm; average height = 14.44 cm) and appeared in the center of the screen on a black background for 1.25 s. Each stimulus was shown only once. Stimuli subtended approximately 14.95° of visual angle vertically and 9.68° of visual angle horizontally at a viewing distance of approximately 55 cm.
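The reported visual angles follow from the standard formula θ = 2·arctan(s / 2d), where s is the stimulus size and d the viewing distance. A quick check with the average stimulus dimensions and the approximate 55-cm viewing distance:

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Visual angle (degrees) subtended by an object of size_cm centred at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


height_deg = visual_angle_deg(14.44, 55)  # average stimulus height
width_deg = visual_angle_deg(9.31, 55)    # average stimulus width
```

Both values agree with the reported 14.95° and 9.68° to within about 0.01°.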

Before the experiment, participants read definitions of the three social functions of smiles. Consistent with the SIMS model (Niedenthal et al., 2010), the definitions described reward smiles as reflecting a happy response, affiliative smiles as reflecting positive social intentions, and dominance smiles as reflecting superiority. For each smile type, we provided two examples of social situations in which a person would make such a smile: for the reward smile, “A person learns that he/she just got hired for his/her dream job”; for the affiliative smile, “A person thanks someone for their help in a store”; and for the dominance smile, “A person crosses paths with an enemy after winning an important prize.” Participants completed the first block in a laboratory with a female experimenter who was present only while the participants accessed the experimental Web site and read the instructions. Participants completed the remaining blocks independently, outside of the laboratory and on their personal computers. We instructed participants to take a minimum break of 3 hr between blocks and to complete the experiment without distractions.

Model fitting

After the experiment, we used each participant’s behavioral responses and the dynamic AU patterns shown on each trial to mathematically model the dynamic facial-expression patterns associated with each smile type for that participant. To do this, for each AU we computed a Pearson correlation between the binary vector coding the presence versus absence of that AU across trials and the corresponding binary vector coding the participant’s responses (i.e., whether each animation was categorized as the given smile type). We then assigned a value of 1 to all significant correlations (p < .05) and a value of 0 otherwise. This procedure produced a 1 × 36 binary vector detailing the composition of AUs significantly correlated with each smile type for each participant. In total, we computed 129 dynamic smile models (43 participants × 3 smile types).
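The correlation-and-threshold step can be sketched as follows, assuming each trial is coded as a 36-element 0/1 list of AU presence and the response as 1 when the participant chose the smile type in question. As a simplifying assumption, the p value uses the large-sample approximation that √n·r is approximately standard normal under the null hypothesis (adequate for 2,400 trials); following the text, correlations of either sign count as significant. The simulated observer is hypothetical.

```python
import math
import random
from statistics import NormalDist


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either vector is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def au_model(au_presence, responses, alpha=0.05):
    """au_presence: one 0/1 list per trial (36 AUs); responses: 0/1 per trial.
    Returns a 0/1 list marking AUs whose correlation with the response is significant."""
    n = len(responses)
    model = []
    for j in range(len(au_presence[0])):
        column = [trial[j] for trial in au_presence]
        r = pearson(column, responses)
        # Large-sample approximation: sqrt(n) * r ~ N(0, 1) under the null hypothesis
        p = 2 * (1 - NormalDist().cdf(abs(r) * math.sqrt(n)))
        model.append(1 if p < alpha else 0)
    return model


# Hypothetical observer who tends to choose this smile type when AU index 0 is shown
rng = random.Random(1)
trials = [[1 if rng.random() < 0.08 else 0 for _ in range(36)] for _ in range(2400)]
responses = [1 if (t[0] and rng.random() < 0.8) or rng.random() < 0.1 else 0 for t in trials]
model = au_model(trials, responses)
```

Running `au_model` once per smile type on the same trials yields the three 1 × 36 vectors for one participant.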

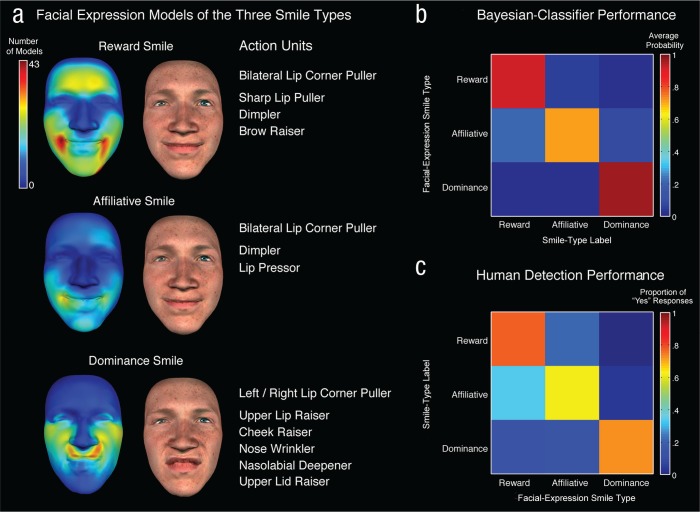

We modeled the six temporal parameters of each significant AU as a linear function of response intensity for each smile type. We estimated linear slope and offset using least squares regression. For visualization purposes, we evaluated the model at three equidistant points along the full intensity range and passed the resulting three sets of six temporal parameters to the animation system along with the significantly correlated AUs. Figure 2a summarizes the resulting facial-expression models for each smile type; the color-coded face maps show the number of individual participants’ models that included each AU (for the number and proportion of individual participants’ models with each AU, also see Table S1c in the Supplemental Material available online). The same AU patterns are also displayed on a face identity. Each smile type is associated with a specific facial-expression pattern.

Fig. 2.

Results from Studies 1 and 2: facial-expression patterns of the three smile types, results for the Bayesian-classifier analysis, and human detection performance. The color-coded face maps in (a) show, for each smile type, the number of individual participants’ models that included each action unit (AU). These facial-expression patterns are also displayed on the face identities to the right of the color maps. The AUs listed next to each face were included in at least 17 of the individual participants’ models. To objectively examine the distinctiveness of the facial-expression pattern of each smile type, we used a Bayesian-classifier approach with a 10-fold cross-validation procedure. The results are shown in the color-coded matrix in (b), which indicates the average probability with which the classifier labeled each facial-expression smile type with each of the smile-type labels. The color-coded matrix in (c) summarizes the detection-performance results from Study 2. For each facial-expression smile type, the matrix shows the proportion of participants who selected “yes” in response to each smile-type label (i.e., who reported that the label described the animation).
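The per-AU regression of the six temporal parameters on response intensity can be sketched as follows. It assumes intensity is coded numerically and that only trials on which the AU was present and the smile type was chosen enter the fit; the parameter names mirror the text, and the data in the usage lines are made up.

```python
def fit_line(x, y):
    """Ordinary least-squares slope and offset for y = offset + slope * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    return slope, my - slope * mx


PARAMS = ["onset_latency", "acceleration", "peak_amplitude",
          "peak_latency", "deceleration", "offset_latency"]


def fit_au_dynamics(intensities, params_per_trial):
    """Fit each temporal parameter as a linear function of intensity.
    Returns {parameter: (slope, offset)}."""
    return {p: fit_line(intensities, [trial[p] for trial in params_per_trial])
            for p in PARAMS}


def evaluate(fits, lo, hi, n_points=3):
    """Evaluate every fitted line at n equidistant intensities spanning [lo, hi]."""
    levels = [lo + i * (hi - lo) / (n_points - 1) for i in range(n_points)]
    return [{p: offset + slope * x for p, (slope, offset) in fits.items()}
            for x in levels]


# Made-up data: peak amplitude grows with intensity, other parameters stay constant
intensities = [1, 2, 3, 4, 5]
trials = []
for x in intensities:
    params = {p: 0.5 for p in PARAMS}
    params["peak_amplitude"] = 0.2 + 0.1 * x
    trials.append(params)
fits = fit_au_dynamics(intensities, trials)
frames = evaluate(fits, lo=1, hi=5)
```

Each of the three dictionaries in `frames` is one set of six temporal parameters that could be passed to an animation system.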

To objectively examine the distinctiveness of the facial-expression patterns of each smile type, we used a multinomial naive Bayesian-classifier approach with a 10-fold cross-validation procedure (Kohavi, 1995). Specifically, we split the facial-expression patterns (i.e., AU patterns represented as 1 × 36 binary vectors) into 10 disjoint subsets (i.e., folds). We used 9 of the 10 subsets to train a classifier to associate the smile-type patterns with their smile-type labels (i.e., reward, affiliative, or dominance). After training, we tested the classifier on the remaining subset of facial-expression patterns. Every test therefore produced a performance score for each of the three smile types. We repeated this training-and-testing procedure 10 times so that each subset served once as the test set. We then computed, for each smile type, the average probability of classifying the smile pattern with each smile-type label (e.g., the probability that a reward smile would be classified as reward, affiliative, or dominance).
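The classification procedure can be sketched in plain Python. This is an illustration, not the authors’ implementation: it uses Laplace smoothing (α = 1), summarizes each fold by hard label predictions rather than posterior probabilities, and runs on synthetic AU patterns whose “prototype” AUs are hypothetical. It assumes every smile type appears in every training split.

```python
import math
import random

LABELS = ["reward", "affiliative", "dominance"]


def train_mnb(X, y, alpha=1.0):
    """Multinomial naive Bayes with Laplace smoothing on AU count vectors."""
    d = len(X[0])
    model = {}
    for c in LABELS:
        rows = [x for x, label in zip(X, y) if label == c]
        counts = [sum(col) for col in zip(*rows)]
        total = sum(counts)
        log_theta = [math.log((n + alpha) / (total + alpha * d)) for n in counts]
        model[c] = (math.log(len(rows) / len(X)), log_theta)
    return model


def predict(model, x):
    """Label maximizing log prior + sum_j x_j * log theta_cj."""
    return max(model, key=lambda c: model[c][0]
               + sum(xj * lt for xj, lt in zip(x, model[c][1])))


def cross_validate(X, y, k=10, seed=0):
    """k-fold cross-validation; returns P(predicted label | true label)."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    counts = {t: {p: 0 for p in LABELS} for t in LABELS}
    for fold in folds:
        held_out = set(fold)
        train = [i for i in idx if i not in held_out]
        model = train_mnb([X[i] for i in train], [y[i] for i in train])
        for i in fold:
            counts[y[i]][predict(model, X[i])] += 1
    return {t: {p: n / sum(row.values()) for p, n in row.items()}
            for t, row in counts.items()}


# Synthetic data: 43 noisy 1 x 36 patterns per smile type around hypothetical prototypes
rng = random.Random(2)
prototypes = {"reward": {0, 1, 2}, "affiliative": {3, 4}, "dominance": {5, 6, 7, 8}}
X, y = [], []
for label, aus in prototypes.items():
    for _ in range(43):
        X.append([1 if rng.random() < (0.9 if j in aus else 0.05) else 0 for j in range(36)])
        y.append(label)
confusion = cross_validate(X, y)
```

`confusion[true][predicted]` corresponds to one cell of a matrix like that in Fig. 2b.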

Figure 2b shows the results as a color-coded matrix. As shown by the squares along the top-left-to-bottom-right diagonal, the smile models of each smile type were classified with high accuracy; classification accuracy was significantly better than chance (p < .05), as determined by a binomial-proportion test. Pairwise binomial-proportion tests comparing the three smile types revealed that affiliative smiles elicited significantly lower accuracy (p < .05) than dominance smiles. An examination of the distribution of errors for affiliative smiles (see the squares outside the diagonal from the top left to the bottom right) showed that this lower performance was due to these smiles being frequently misclassified as reward smiles; this occurred significantly more often than chance (21.4% of observations, p < .05), as determined by binomial-proportion tests (for all classification probabilities, see Table S1d in the Supplemental Material).
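One reading of the test against chance is an exact one-sided binomial test with a chance level of 1/3 (three labels). The sketch below computes P(X ≥ k) directly; the count in the usage line is hypothetical, not the study’s data.

```python
from math import comb


def binomial_p_at_least(k, n, p=1 / 3):
    """Exact one-sided p value P(X >= k) for X ~ Binomial(n, p); p = 1/3 is chance for three labels."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical: 30 of 43 models of one smile type classified correctly
p_value = binomial_p_at_least(30, 43)
```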

Study 2: Detection of Smile Types

These distinct facial-expression patterns and the Bayesian classifier’s performance suggest that people should accurately detect all three smile types but that affiliative smiles should be harder to detect than reward or dominance smiles. To test participants’ sensitivity to the functional smiles, we recruited new participants who completed a verification task using the dynamic facial-expression models derived from participant responses in Study 1.

Method

Participants

One hundred seven White U.S. students (71 female; mean age = 19.55 years, SD = 1.59) participated in exchange for course credit. We excluded data from three participants (2 female) because they deviated from the experimental instructions. All participants had self-reported normal or corrected-to-normal vision.

Stimuli

We displayed every dynamic smile model derived from participant responses in Study 1 on four different White face identities (two female; mean age = 21.5 years, SD = 6.46). This resulted in a total of 2,580 stimuli (3 smiles × 5 levels × 43 participants × 4 identities).

Procedure

Before the task, we told participants that they would see a large number of animated facial expressions. On each trial, participants viewed a facial animation followed by one of three labels: “reward smile,” “affiliative smile,” or “dominance smile.” We instructed participants to select “yes” if the label accurately described the facial animation and “no” if it did not. Participants viewed 300 stimuli (100 per smile type) selected pseudorandomly with replacement from the pool of 2,580 stimuli and presented in random order across the experiment. Among the 100 trials asking about a given smile type, 50 displayed models of the target smile type, and the other 50 displayed equal numbers of the other two smile types, presented as distractors. Participants remained naive to the proportion of targets and distractors throughout the experiment. Each facial animation was played one time on a black background in the center of the screen for 1.25 s. Stimuli subtended approximately 14.95° of visual angle vertically and 9.68° of visual angle horizontally, and a chin rest was used to maintain a constant viewing distance of 51 cm. We used an online interface to display the stimuli and record participant responses. We tested participants on individual computer stations and used the same smile-type definitions as in Study 1.
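The trial structure can be sketched as follows, representing each stimulus as a (smile type, level, Study 1 participant, identity) tuple; this encoding and the seeded generator are assumptions for illustration. Reading “equal numbers” as 25 distractors of each nontarget type, every label is asked about 100 times.

```python
import random
from itertools import product

SMILES = ["reward", "affiliative", "dominance"]
# 3 smile types x 5 levels x 43 Study 1 models x 4 identities = 2,580 stimuli
POOL = list(product(SMILES, range(5), range(43), range(4)))


def build_session(rng):
    """300 (stimulus, label) trials: per label, 50 targets and 25 of each
    distractor type, each drawn with replacement from the pool, then shuffled."""
    by_type = {s: [stim for stim in POOL if stim[0] == s] for s in SMILES}
    trials = []
    for label in SMILES:
        trials += [(rng.choice(by_type[label]), label) for _ in range(50)]
        for other in SMILES:
            if other != label:
                trials += [(rng.choice(by_type[other]), label) for _ in range(25)]
    rng.shuffle(trials)
    return trials


session = build_session(random.Random(0))
```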

Results

To examine whether participants accurately detected the different smile types in the dynamic facial-expression models, we computed d′, a measure of signal-detection sensitivity (Green & Swets, 1966), for each participant and each of the three smile types. A one-way repeated-measures analysis of variance (ANOVA) applied to the resulting d′ values showed that detection sensitivity varied significantly across the smile types, F(2, 206) = 24.08, p < .001; sensitivity was highest for reward smiles (mean d′ = 0.81, SD = 0.24), intermediate for dominance smiles (mean d′ = 0.77, SD = 0.86), and lowest for affiliative smiles (mean d′ = 0.38, SD = 0.22).
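For a given set of responses (e.g., one participant’s 50 target and 50 distractor trials for one smile type), d′ is the difference between the z-transformed hit and false-alarm rates. The log-linear correction used here to keep rates of 0 or 1 finite is an assumption; the text does not say how extreme rates were handled.

```python
from statistics import NormalDist


def d_prime(hits, n_targets, false_alarms, n_distractors):
    """Signal-detection sensitivity d' = z(H) - z(FA).

    Adds 0.5 to each count and 1 to each total (log-linear correction, an
    assumption here) so that hit or false-alarm rates of 0 or 1 stay finite.
    """
    z = NormalDist().inv_cdf
    hit_rate = (hits + 0.5) / (n_targets + 1)
    fa_rate = (false_alarms + 0.5) / (n_distractors + 1)
    return z(hit_rate) - z(fa_rate)


# Hypothetical observer: "yes" on 40 of 50 target trials and on 10 of 50 distractor trials
example = d_prime(40, 50, 10, 50)
```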

Figure 2c summarizes the participants’ performance. The squares along the diagonal from top left to bottom right show that each smile type was detected with high accuracy (i.e., hits). Although reward and dominance smiles elicited similar detection performance (p > .05), participants’ sensitivity to the affiliative smiles was significantly lower, ps < .05. Further analysis of participants’ responses showed that the significantly lower detection sensitivity for affiliative smiles was due to a large number of false alarms when they were asked to detect affiliative smiles (M = .21) compared with when they were asked to detect reward smiles (M = .08) and when they were asked to detect dominance smiles (M = .14). The squares outside the hit diagonal (i.e., the squares representing false alarms) reveal confusions between affiliative and reward smiles. Specifically, when asked to detect affiliative smiles, participants responded positively to reward smile models (see the leftmost square in the middle row) significantly more than they did to dominance smile models (see the rightmost square in the middle row), t(84) = 20.15, p < .001. (For detection statistics for each individual model and proportions of positive responses, see Tables S2a and S2b in the Supplemental Material.)

The human pattern of performance closely mirrored that of the Bayesian classifier (see Fig. 2b). In accordance with the objective analysis of the AU patterns, participants’ performance indicated physical similarities between reward and affiliative smiles; participants could clearly distinguish dominance smiles from reward and affiliative smiles, whereas the latter two smile types elicited some similarity in perceptual judgments. In the next study, we examined participants’ judgments of the social motives conveyed by the three smile models. We predicted that, given the perceptual similarity of reward and affiliative smiles, they should convey similar messages of positive feelings and social connection. On the other hand, dominance smiles should elicit clearly different judgments and should be associated with superiority rather than with positive and prosocial motives.

Study 3: Social Information Communicated by Smile Type

In theory, the three smiles proposed by the SIMS model, whose distinctiveness was supported by the first two studies, serve their functions by communicating different social messages: positive feelings, social connectedness, and superiority, respectively. To test this hypothesis, we recruited a new set of participants who rated the feelings and social motives communicated by a subsample of the individual participants’ smile models from Study 1.

Method

Participants

Sixty-two U.S. students (41 female; mean age = 19.17 years, SD = 2.11) participated in exchange for course credit. Because we displayed the smile models on White faces, we discarded data from 1 Arab American female participant. All participants had self-reported normal or corrected-to-normal vision.

Stimuli

From all the facial-expression models tested in Study 2, we pseudorandomly selected, for each smile type, five models with significantly high d′ values—reward smile: mean d′ = 0.89, SD = 0.15, zd′ = 1.36; affiliative smile: mean d′ = 1.44, SD = 0.17, zd′ = 1.29; dominance smile: mean d′ = 2.79, SD = 0.09, zd′ = 0.76 (see Table S2 in the Supplemental Material). We displayed each dynamic facial-expression model on eight new White face identities (four female, four male; mean age = 23.5 years). From these we created two sets of 60 stimuli (3 smile types × 5 models × 4 identities); each set contained two of the male identities and two of the female identities (Set 1: mean age = 26.75 years, SD = 5.91 years; Set 2: mean age = 20.25 years, SD = 3.30 years).

Procedure

On each experimental trial, participants viewed a facial expression presented along with one of three questions: “To what extent is this person . . .” (a) “having a positive feeling or reaction to something/someone?” (b) “feeling a social connection with someone?” or (c) “feeling superior or dominant?” Participants responded using a 7-point Likert scale ranging from 1 (not at all) to 7 (very much). We presented the facial expressions in the center of the screen on a black background, with the question below the facial expression in white text. Facial expressions subtended approximately 14.95° of visual angle vertically and 9.68° of visual angle horizontally; participants were instructed to maintain the same viewing distance during testing. Each stimulus played for 1.25 s, and participants could replay the video as many times as desired. We displayed each stimulus (i.e., each model on each face identity) three times across the experiment, each time paired with a different question. Therefore, each participant completed a total of 180 trials (5 models × 3 smile types × 4 identities × 3 questions). We randomized the order of trials across the experiment for every participant. Participants were tested in a laboratory at individual computer stations. We used an online interface created in Qualtrics (Version 1.869s; Qualtrics, Provo, UT) to display the stimuli and collect responses.

Results

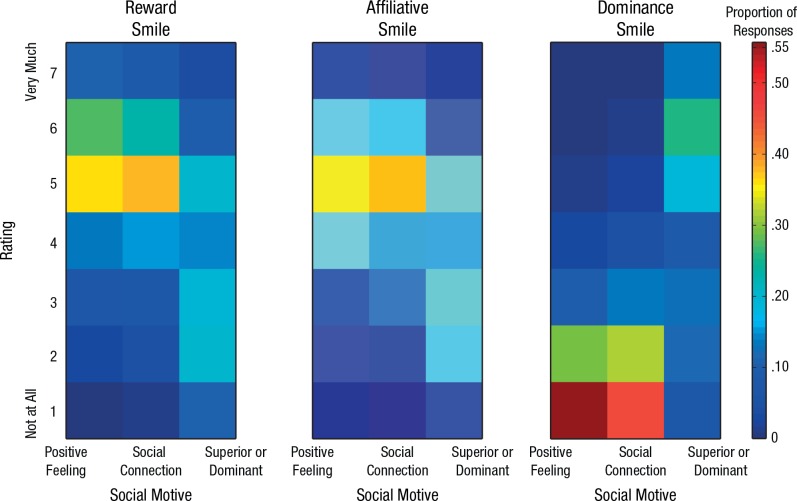

We performed all statistical analyses using RStudio (Version 0.96; RStudio, Inc., Boston, MA) and IBM SPSS (Version 20.0 for Windows; IBM, Armonk, NY). To examine whether different smile types communicate specific feelings and social motives, we analyzed participants’ ratings of each smile type. Specifically, for each smile type and for each of the three questions, we computed the proportion of responses at each of the seven rating levels (i.e., from 1, not at all, to 7, very much) using data pooled across participants. Figure 3 shows the results. Both reward and affiliative smiles consistently elicited high ratings for positive feelings and social connection; the ratings for superiority were more variable, as indicated by the flatter distribution of responses across the different levels. In contrast, dominance smiles consistently elicited high ratings for superiority and generally low ratings of positive feelings and social connectedness.

Fig. 3.

Results from Study 3. The color-coded matrices show the distribution of participants’ judgments of the extent to which each smile type conveyed positive feelings, social connection, and superiority or dominance.
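The pooled distributions in Fig. 3 amount to counting ratings per smile type and question. A minimal sketch, with hypothetical example ratings rather than the study’s data:

```python
from collections import Counter, defaultdict


def rating_matrix(records, levels=range(1, 8)):
    """records: iterable of (smile_type, question, rating) tuples pooled across
    participants. Returns {(smile_type, question): [proportion at each level]}."""
    counts = defaultdict(Counter)
    for smile, question, rating in records:
        counts[(smile, question)][rating] += 1
    return {key: [c[level] / sum(c.values()) for level in levels]
            for key, c in counts.items()}


# Hypothetical ratings, for illustration only
demo = [("dominance", "superiority", 7), ("dominance", "superiority", 6),
        ("dominance", "superiority", 7), ("dominance", "positive", 1)]
matrix = rating_matrix(demo)
```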

To test the hypothesis that reward, affiliative, and dominance smiles convey distinct functional messages, we used a linear mixed-model analysis¹ and regressed participants’ ratings on two planned orthogonal contrasts.² First, we predicted that reward smiles would be rated significantly higher for positive feelings and social connection than for feelings of superiority or dominance. Second, we predicted that reward smiles would be rated significantly higher on positive feelings than on social connection. In line with our first prediction, reward smiles elicited significantly higher ratings of positive feelings (M = 5.08, SD = 0.74) and social connectedness (M = 4.91, SD = 0.76) compared with feelings of superiority (M = 3.59, SD = 1.18), b = −0.46, SE = 0.07, t(37.02) = −6.44, p < .001. In accordance with our second prediction, reward smiles elicited higher ratings for positive feelings than for social connectedness, b = 0.08, SE = 0.02, t(6.95) = 3.40, p = .01.
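The contrast weights are not stated, but one pair consistent with the reported estimates is (−1, −1, 2) for superiority versus the other two ratings and (1, −1, 0) for positive feelings versus social connection. In a balanced design, the fixed-effect slope on a contrast-coded predictor approximately equals Σ c_j m_j / Σ c_j², so the reported cell means should roughly reproduce b. The sketch below checks this; the weights are an inference, not the authors’ documented coding.

```python
# Assumed contrast weights over (positive feelings, social connection, superiority)
C1 = (-1, -1, 2)  # superiority vs. the other two ratings
C2 = (1, -1, 0)   # positive feelings vs. social connection


def contrast_slope(weights, means):
    """Slope on a contrast-coded predictor in a balanced design: sum(c*m) / sum(c^2)."""
    return sum(c * m for c, m in zip(weights, means)) / sum(c * c for c in weights)


# Cell means reported in the text: (positive, social, superiority)
reward = (5.08, 4.91, 3.59)
affiliative = (4.61, 4.57, 3.67)
dominance = (1.69, 1.89, 4.49)
slopes = {name: (contrast_slope(C1, m), contrast_slope(C2, m))
          for name, m in [("reward", reward), ("affiliative", affiliative),
                          ("dominance", dominance)]}
```

The computed first-contrast slopes (about −0.47, −0.31, and 0.90) sit within rounding of the reported b values, which supports but does not confirm this coding.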

We applied the same contrasts to test ratings of social motives for affiliative smiles. The results showed that affiliative smiles elicited higher ratings for social connection (M = 4.57, SD = 0.80) and positive feelings (M = 4.61, SD = 0.76) than for superiority (M = 3.67, SD = 1.00), b = −0.31, SE = 0.07, t(23.29) = −4.18, p < .001. However, our second prediction was not supported: Ratings for social connectedness and positive feelings did not differ for affiliative smiles, b = 0.01, SE = 0.02, t(6.80) = 0.63, p = .55.

Finally, for dominance smiles, we predicted higher ratings for superiority compared with positive feelings and social connectedness, and this prediction was supported by the data, b = 0.90, SE = 0.07, t(61.92) = 12.10, p < .001 (superiority: M = 4.49, SD = 1.50; positive feelings: M = 1.69, SD = 0.64; social connection: M = 1.89, SD = 0.71).³

General Discussion

We aimed to identify and validate the expressive facial actions that constitute reward, affiliative, and dominance smiles. We modeled these patterns using a dynamic facial-expression generator, reverse correlation, and subjective human perception. Analysis of the resulting facial-expression models (43 participants × 3 smile types) showed that the three smile types are represented by distinct facial-movement patterns. Specifically, reward smiles involve eyebrow flashes (i.e., the Inner-Outer Brow Raiser, AU1-2), the Sharp Lip Puller (AU13), and the Dimpler (AU14). Affiliative smiles involve the Lip Pressor (AU24) and the Dimpler (AU14). Dominance smiles comprise the Upper Lid Raiser (AU5), the Nose Wrinkler (AU9), the Cheek Raiser (AU6), and the Upper Lip Raiser (AU10). For each smile type, these AUs accompanied a core component of smiling—the Lip Corner Puller (AU12), involving the zygomaticus major muscle. Whereas reward and affiliative smiles both involve symmetrical movements of the Lip Corner Puller (AU12), dominance smiles are asymmetrical and comprise the unilateral right or left Lip Corner Puller (AU12R or AU12L). As predicted by the distinctiveness of the facial-expression patterns for the three smile types, both a Bayesian classifier and a set of human participants could accurately discriminate and detect each type. Finally, in line with predictions from the SIMS model, each smile type communicated a specific set of broader social messages—that is, reward and affiliative smiles elicited high ratings of positive feelings and social connection and generally low ratings of superiority, whereas dominance smiles yielded the opposite pattern of results.

Our results suggest a relationship between the form and function of each of the three smile types that supports existing theories of smiles and nonverbal communication more broadly. Specifically, our analyses revealed distinct AU patterns for each smile type that are commensurate with their specific social functions. For example, reward smiles involve face movements that increase sensory exposure (the Inner-Outer Brow Raiser, AU1-2, which could indicate the desire to prolong sensory input and feelings of pleasure; Niedenthal et al., 2010; Susskind et al., 2008). Affiliative smiles contain the Lip Pressor (AU24), which covers the teeth and thus could indicate approachability via the absence of aggression (Darwin, 1872/1999). Likewise, dominance smiles involve the Nose Wrinkler (AU9) and the Upper Lip Raiser (AU10), associated with facial expressions of disgust, anger, and sensory rejection (Chapman, Kim, Susskind, & Anderson, 2009; Darwin, 1872/1999; Ekman & Friesen, 1978; Jack et al., 2014), which suggests fundamental similarities in communicating rejection, negativity (Niedenthal et al., 2010), low affiliation, and high superiority (Knutson, 1996). Dominance smiles also contain the Upper Lid Raiser (AU5), revealing the white of the eye; this AU is typical of facial expressions of anger, fear, and surprise (Ekman & Friesen, 1978; Jack, Sun, Delis, Garrod, & Schyns, 2016) and potentially communicates a broad social message of immediacy. Finally, dominance smiles also involve the Cheek Raiser (AU6), which is often associated with genuine enjoyment (e.g., Ekman et al., 1990). This finding is consistent with previous work linking AU6 to facial expressions other than smiles, including those communicating negative affect such as distress, despair, or disgust (Messinger, Mattson, Mahoor, & Cohn, 2012; Scherer & Ellgring, 2007). The involvement of eye constriction in dominance smiles is also consistent with existing theories of contempt expression (Darwin, 1872/1999; Izard & Haynes, 1988).

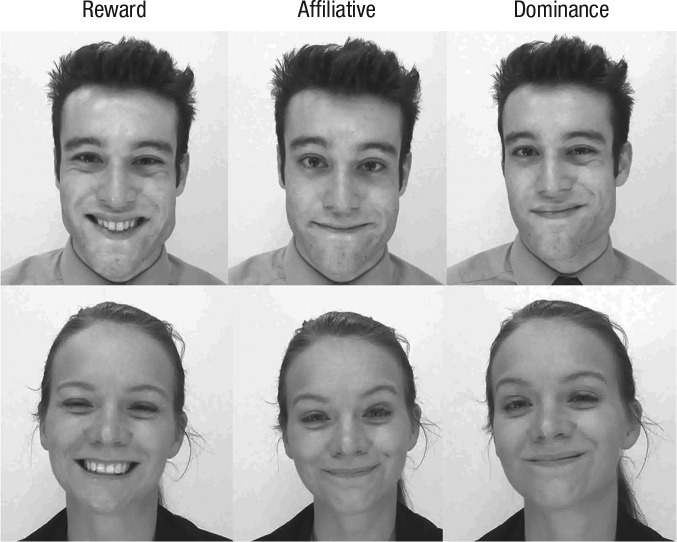

We also found differences in the core component of smiles, the Lip Corner Puller (AU12), across the three smile types. Specifically, reward and affiliative smiles, both of which convey positive feelings and social connectedness, involve the symmetrical Lip Corner Puller, a facial movement eliciting judgments of genuineness and approach motivation (Ekman, 2009; Schmidt & Cohn, 2001). In contrast, dominance smiles, which communicate negative feelings and superiority, involve the asymmetrical Lip Corner Puller (AU12L or AU12R), a movement associated with the related social concepts of contempt and derision (Darwin, 1872/1999; Ekman & Friesen, 1986). Given the potentially negative social consequences of dominance smiles, their similarity to disgust expressions, and the relatively weak visibility of AU12 in our dominance models, one could object that such expressions are not instances of the smile category at all. We addressed this question in an additional study, for which we created new smile stimuli. Fifteen experienced actors encoded the smiles after being coached on their appearance as indicated by the present findings (for details about the stimuli, see the Supplemental Material and Martin, Abercrombie, Gilboa-Schechtman, & Niedenthal, 2017). Participants (N = 73) saw still images (Fig. 4) of reward, affiliative, and dominance expressions, in addition to other expressions, and indicated whether each one was a smile (“yes” or “no”). Participants were significantly more likely than chance to categorize reward, affiliative, and dominance smiles as smiles (estimated probabilities: 98%, 86%, and 69%, respectively), whereas neutral and disgust facial expressions were not categorized as smiles (estimated probabilities: 6% and 2%, respectively).

Fig. 4.

Image frames of reward, affiliative, and dominance smiles taken from a video. The smiles are based on the present findings. Dominance smiles involve lower activations of the bilateral Lip Corner Puller (AU12) and higher levels of the Nose Wrinkler (AU9) than reward smiles.

Our results not only reveal the facial movements involved in the three smile categories but also indicate that greater similarity in the underlying feelings and social motives translates into greater similarity in the facial-expression patterns. That is, the SIMS model describes reward and affiliative smiles as related in that both convey generally positive feelings and motives, which, according to biological signaling accounts, should be communicated using similar signals (Hasson, 1997; Smith, Cottrell, Gosselin, & Schyns, 2005). Although we found some specificity in the reward and affiliative smiles, both contained the symmetrical Lip Corner Puller. Human participants tended to confuse these facial expressions (see Fig. 2) and judged them as conveying similar feelings and social motives (see Fig. 3). It is important to note that we explored smile categories that might be used in social living but for which semantic concepts are not easily accessible, which could reduce participants’ ability to explicitly use the labels reward, affiliative, and dominance smile. A further test of the proposed functional distinctions between smiles could include the assessment of implicit physiological, neuroendocrine, and behavioral responses (Martin et al., 2017). Paradigms involving social situations, such as trust games or performance feedback, could also shed more light on the social impact of the three functional smiles (Martin et al., 2017; Rychlowska, van der Schalk, Martin, Niedenthal, & Manstead, 2017).
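As a rough illustration of this similarity argument, the sketch below encodes the AU patterns summarized at the start of this Discussion as binary sets and computes their pairwise Jaccard similarity (shared AUs divided by all AUs present in either pattern). The encoding is a deliberate simplification and an assumption of this sketch: the actual models are dynamic and continuous, with AU intensities and timing for each participant, and the Jaccard index is not the Bayesian-classifier analysis reported in this article. Bilateral and unilateral AU12 are treated as different labels, with "AU12-unilateral" a hypothetical label standing for AU12R or AU12L.

```python
from itertools import combinations

# Binary AU sets paraphrasing the Discussion's summary (simplified: no
# intensity, timing, or participant variability). "AU12-unilateral" is a
# hypothetical label standing for AU12R or AU12L.
SMILE_AUS = {
    "reward": {"AU1-2", "AU12", "AU13", "AU14"},
    "affiliative": {"AU12", "AU14", "AU24"},
    "dominance": {"AU5", "AU6", "AU9", "AU10", "AU12-unilateral"},
}


def jaccard(a: set, b: set) -> float:
    """Shared AUs divided by the AUs present in either pattern."""
    return len(a & b) / len(a | b)


def pairwise_similarity(patterns: dict) -> dict:
    """Jaccard similarity for every unordered pair of smile types."""
    return {
        (x, y): jaccard(patterns[x], patterns[y])
        for x, y in combinations(patterns, 2)
    }


if __name__ == "__main__":
    for (x, y), sim in pairwise_similarity(SMILE_AUS).items():
        print(f"{x} vs. {y}: {sim:.2f}")
    # reward vs. affiliative: 0.40
    # reward vs. dominance: 0.00
    # affiliative vs. dominance: 0.00
```

Under this encoding, only reward and affiliative smiles share AUs (the symmetrical AU12 and AU14), which parallels the confusions between these two types in Fig. 2; a fuller comparison would use the continuous AU amplitudes of the models rather than presence or absence.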

We have shown that individuals associate distinct facial-expression patterns with reward, affiliative, and dominance smiles, which communicate positive feelings, social connectedness, and superiority, respectively. Our results converge with the findings of a recent study (Rychlowska et al., 2015) in which respondents from nine countries in North America, Europe, and Asia divided the social functions of smiles into three categories consistent with the theoretical distinctions proposed in the SIMS model.

We anticipate that our precise characterization of the facial-expression patterns of reward, affiliative, and dominance smiles, and of the social messages they communicate, will further knowledge of the social function and form of these facial expressions. The smile models generated in the present studies, as well as video recordings of these smiles (Martin et al., 2017), could serve as a framework for using computer-vision algorithms to automatically detect and classify smiles that occur in real-life situations, such as those displayed during presidential elections or interactions with intimate others (Kunz, Prkachin, & Lautenbacher, 2013). Finally, further analysis of the temporal dynamics of the different smile types has the potential to inform the clinical assessment of surgeries aimed at reanimating the smile (Manktelow, Tomat, Zuker, & Chang, 2006; Tomat & Manktelow, 2005).

Together, our results highlight the versatile nature of the human smile, which can be used for multiple social tasks, including love, sympathy, and war.

Acknowledgments

The data and experimental materials are available on request. We thank Or Alon, Eva Gilboa-Schechtman, Oriane Grodzki, Crystal Hanson, Hadar Keshet, Fabienne Lelong, Sophie Monceau, Hope Price, Christine Shi, Xiaoqian Yu, and Xueshi Zhou for their help.

All regression models included a by-subject random intercept, a by-subject random slope, a by-item (identity) random intercept, and a by-item random slope.
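Written out for a single predictor $x$ and a continuous outcome (both assumptions; the footnote does not name the predictor whose slope varies), this random-effects structure corresponds to a model of the following form, where $s$ indexes subjects and $i$ indexes items (identities):

$$
y_{si} = \beta_0 + \beta_1 x_{si} + u_{0s} + u_{1s}\,x_{si} + w_{0i} + w_{1i}\,x_{si} + \varepsilon_{si},
\qquad
\begin{pmatrix} u_{0s} \\ u_{1s} \end{pmatrix} \sim \mathcal{N}(\mathbf{0}, \Sigma_u),
\quad
\begin{pmatrix} w_{0i} \\ w_{1i} \end{pmatrix} \sim \mathcal{N}(\mathbf{0}, \Sigma_w),
\quad
\varepsilon_{si} \sim \mathcal{N}(0, \sigma^2).
$$

Here $u_{0s}$ and $w_{0i}$ are the by-subject and by-item random intercepts, and $u_{1s}$ and $w_{1i}$ are the corresponding random slopes. Whether intercepts and slopes were allowed to correlate (full $\Sigma_u$ and $\Sigma_w$) or were estimated independently (diagonal covariance matrices) is not stated here; in lme4 notation, the correlated version is `(1 + x | subject) + (1 + x | item)`.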

For Contrast 1, positive emotions and social connection were coded as −1 and superiority was coded as 2. For Contrast 2, positive emotions were coded as 1, social connection was coded as −1, and superiority was coded as 0.
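These two contrasts form an orthogonal set: each set of weights sums to zero, and their cross-product is zero, so Contrast 1 (superiority vs. the two other scales) and Contrast 2 (positive emotions vs. social connection) test non-redundant comparisons among the three scales. A minimal Python check of these properties, together with the contrast value implied by a set of scale means; the means in the example are hypothetical and are not data from this study:

```python
# Contrast weights from the footnote, in the order
# (positive emotions, social connection, superiority).
SCALES = ("positive emotions", "social connection", "superiority")
CONTRAST_1 = (-1, -1, 2)  # superiority vs. the two other scales
CONTRAST_2 = (1, -1, 0)   # positive emotions vs. social connection


def dot(a, b):
    """Sum of elementwise products of two equal-length weight vectors."""
    return sum(x * y for x, y in zip(a, b))


def is_orthogonal_set(*contrasts):
    """True if every contrast sums to zero and every pair has zero dot product."""
    if any(sum(c) != 0 for c in contrasts):
        return False
    return all(
        dot(a, b) == 0
        for i, a in enumerate(contrasts)
        for b in contrasts[i + 1:]
    )


def contrast_estimate(weights, means):
    """Weighted combination of scale means that a contrast tests against zero."""
    return dot(weights, means)


# Hypothetical scale means (positive emotions, social connection, superiority).
example_means = (5.0, 4.5, 2.0)
print(is_orthogonal_set(CONTRAST_1, CONTRAST_2))     # True
print(contrast_estimate(CONTRAST_1, example_means))  # -5.5
print(contrast_estimate(CONTRAST_2, example_means))  # 0.5
```

Because Contrast 1 equals twice the difference between superiority and the mean of the other two scales, a negative value indicates that superiority is rated below the other two scales.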

Contrast 2 (positive feelings: 1; social connection: −1; superiority: 0), which tested the remaining difference between the two non-superiority scales (positive feelings vs. social connection), was also significant, b = −0.10, SE = 0.04, t(9.47) = −2.82, p = .02.
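The reported p value can be recovered from t and its non-integer degrees of freedom, which presumably come from an approximation such as the Satterthwaite method often used for mixed models (an assumption; the footnote does not say which method was used). A minimal standard-library sketch that integrates the Student's t density numerically with Simpson's rule, rather than calling a statistics package:

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution; df may be non-integer."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=10_000):
    """Two-tailed p = 1 - 2 * (area under the t density from 0 to |t|).

    The area is approximated with composite Simpson's rule, which needs an
    even number of subintervals.
    """
    a, b = 0.0, abs(t)
    if steps % 2:
        steps += 1
    h = (b - a) / steps
    total = t_pdf(a, df) + t_pdf(b, df)
    for k in range(1, steps):
        weight = 4 if k % 2 else 2  # Simpson weights alternate 4, 2, 4, ...
        total += weight * t_pdf(a + k * h, df)
    area = total * h / 3
    return 1 - 2 * area


p = two_tailed_p(-2.82, 9.47)
print(f"p = {p:.3f}")
```

The result rounds to the reported .02. Note that the reported t need not equal the ratio of the rounded estimates (−0.10/0.04 = −2.5): with b and SE rounded to two decimals, b/SE can lie anywhere from about −2.1 to −3.0, so t(9.47) = −2.82 is consistent with them.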

Footnotes

Action Editor: D. Stephen Lindsay served as action editor for this article.

Declaration of Conflicting Interests: The authors declared that they had no conflicts of interest with respect to their authorship or the publication of this article.

Funding: J. D. Martin was supported by National Institute of Mental Health Grant T32-MH018931-26. P. G. Schyns was supported by Wellcome Trust Senior Investigator Award 107802 and Multidisciplinary University Research Initiative (United States)/Engineering and Physical Sciences Research Council (United Kingdom) Grant EP/N019261/1. P. M. Niedenthal was supported by National Science Foundation Grant BVS-1251101 and by Israeli Binational Science Foundation Grant 2013205.

Supplemental Material: Additional supporting information can be found at http://journals.sagepub.com/doi/suppl/10.1177/0956797617706082

References

- Ahumada A., Lovell J. (1971). Stimulus features in signal detection. Journal of the Acoustical Society of America, 49, 1751–1756. doi: 10.1121/1.1912577

- Blanz V., Vetter T. (1999). A morphable model for the synthesis of 3D faces. In Rockwood A. (Ed.), Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques (pp. 187–194). New York, NY: ACM Press. doi: 10.1145/311535.311556

- Boksem M., Smolders R., De Cremer D. (2009). Social power and approach-related neural activity. Social Cognitive and Affective Neuroscience, 7, 516–520. doi: 10.1093/scan/nsp006

- Chapman H. A., Kim D. A., Susskind J. M., Anderson A. K. (2009). In bad taste: Evidence for the oral origins of moral disgust. Science, 323, 1222–1226. doi: 10.1126/science.1165565

- Darwin C. (1999). The expression of the emotions in man and animals (3rd ed.). London, England: Fontana Press. (Original work published 1872)

- Davidson R., Ekman P., Saron C., Senulis J., Friesen W. (1990). Approach-withdrawal and cerebral asymmetry: Emotional expression and brain physiology: I. Journal of Personality and Social Psychology, 58, 330–341. doi: 10.1037/0022-3514.58.2.330

- Eibl-Eibesfeldt I. (1972). Similarities and differences between cultures in expressive movements. In Hinde R. A. (Ed.), Nonverbal communication (pp. 297–314). Cambridge, England: Cambridge University Press.

- Ekman P. (Ed.). (1973). Darwin and facial expression: A century of research in review. Cambridge, MA: Malor Books.

- Ekman P. (2009). Telling lies: Clues to deceit in the marketplace, politics, and marriage. London, England: W. W. Norton.

- Ekman P., Davidson R. J., Friesen W. V. (1990). The Duchenne smile: Emotional expression and brain physiology: II. Journal of Personality and Social Psychology, 58, 342–353. doi: 10.1037/0022-3514.58.2.342

- Ekman P., Friesen W. (1978). Facial Action Coding System: A technique for the measurement of facial movement. Palo Alto, CA: Consulting Psychologists Press.

- Ekman P., Friesen W. (1986). A new pan-cultural facial expression of emotion. Motivation and Emotion, 10, 159–168. doi: 10.1007/BF00992253

- Elfenbein H., Ambady N. (2002). On the universality and cultural specificity of emotion recognition: A meta-analysis. Psychological Bulletin, 128, 203–235. doi: 10.1037/0033-2909.128.2.203

- Fox M. (1970). A comparative study of the development of facial expressions in canids; wolf, coyote and foxes. Behaviour, 36, 49–73. doi: 10.1163/156853970X00042

- Frank M. G., Ekman P. (1993). Not all smiles are created equal: The differences between enjoyment and nonenjoyment smiles. Humor, 6, 9–26. doi: 10.1515/humr.1993.6.1.9

- Green D., Swets J. (1966). Signal detection theory and psychophysics. New York, NY: Wiley.

- Hasson O. (1997). Towards a general theory of biological signaling. Journal of Theoretical Biology, 185, 139–156. doi: 10.1006/jtbi.1996.0258

- Izard C., Haynes O. (1988). On the form and universality of the contempt expression: A challenge to Ekman and Friesen’s claim of discovery. Motivation and Emotion, 12, 1–16. doi: 10.1007/bf00992469

- Jack R. E., Garrod O. G. B., Schyns P. G. (2014). Dynamic facial expressions of emotion transmit an evolving hierarchy of signals over time. Current Biology, 24(2), 1–6. doi: 10.1016/j.cub.2013.11.064

- Jack R. E., Sun W., Delis I., Garrod O. G. B., Schyns P. G. (2016). Four not six: Revealing culturally common facial expressions of emotion. Journal of Experimental Psychology: General, 145, 708–730. doi: 10.1037/xge0000162

- Keltner D. (1995). Signs of appeasement: Evidence for the distinct displays of embarrassment, amusement, and shame. Journal of Personality and Social Psychology, 68, 441–454. doi: 10.1037/0022-3514.68.3.441

- Keltner D., Gross J. J. (1999). Functional accounts of emotions. Cognition & Emotion, 13, 467–480. doi: 10.1080/026999399379140

- Knutson B. (1996). Facial expressions of emotion influence interpersonal trait inferences. Journal of Nonverbal Behavior, 20, 165–182.

- Kohavi R. (1995). A study of cross-validation and bootstrap for accuracy estimation and model selection. In Mellish C. S. (Ed.), Proceedings of the 14th International Joint Conference on Artificial Intelligence (pp. 1137–1143). Retrieved from http://dl.acm.org/citation.cfm?id=1643047

- Kunz M., Prkachin K., Lautenbacher S. (2013). Smiling in pain: Explorations of its social motives. Pain Research and Treatment, 2013, Article 128093. doi: 10.1155/2013/128093

- Landis C. (1924). Studies of emotional reactions. II. General behavior and facial expression. Journal of Comparative Psychology, 4, 447–510. doi: 10.1037/h0073039

- Manktelow R. T., Tomat L. R., Zuker R. M., Chang M. (2006). Smile reconstruction in adults with free muscle transfer innervated by the masseter motor nerve: Effectiveness and cerebral adaptation. Plastic and Reconstructive Surgery, 118, 885–899. doi: 10.1097/01.prs.0000232195.20293.bd

- Martin J. D., Abercrombie H., Gilboa-Schechtman E., Niedenthal P. M. (2017). The social function of smiles: Evidence from hormonal and physiological processes. Manuscript submitted for publication.

- Messinger D. S., Mattson W. I., Mahoor M. H., Cohn J. F. (2012). The eyes have it: Making positive expressions more positive and negative expressions more negative. Emotion, 12, 430–436. doi: 10.1037/a0026498

- Niedenthal P. M., Mermillod M., Maringer M., Hess U. (2010). The Simulation of Smiles (SIMS) model: Embodied simulation and the meaning of facial expression. Behavioral & Brain Sciences, 33, 417–433. doi: 10.1017/S0140525X10000865

- Parr L. A., Waller B. M. (2006). Understanding chimpanzee facial expression: Insights into the evolution of communication. Social Cognitive and Affective Neuroscience, 1, 221–228. doi: 10.1093/scan/nsl031

- Parr L. A., Waller B. M., Vick S. J. (2007). New developments in understanding emotional facial signals in chimpanzees. Current Directions in Psychological Science, 16, 117–122. doi: 10.1111/j.1467-8721.2007.00487.x

- Rychlowska M., Miyamoto Y., Matsumoto D., Hess U., Gilboa-Schechtman E., Kamble S., . . . Niedenthal P. M. (2015). Heterogeneity of long-history migration explains cultural differences in reports of emotional expressivity and the functions of smiles. Proceedings of the National Academy of Sciences, USA, 112, E2429–E2436. doi: 10.1073/pnas.1413661112

- Rychlowska M., van der Schalk J., Martin J., Niedenthal P. M., Manstead A. (2017). Steal and smile: Emotion expressions and trust in intergroup resource dilemmas. Manuscript in preparation, School of Psychology, Cardiff University, Wales.

- Scherer K. R., Ellgring H. (2007). Are facial expressions of emotion produced by categorical affect programs or dynamically driven by appraisal? Emotion, 7, 113–130. doi: 10.1037/1528-3542.7.1.113

- Scherer K. R., Shuman V., Fontaine J. R. J., Soriano C. (2013). The GRID meets the Wheel: Assessing emotional feeling via self-report. In Fontaine J. R. J., Scherer K. R., Soriano C. (Eds.), Components of emotional meaning: A sourcebook (pp. 281–298). Oxford, England: Oxford University Press.

- Schmidt K. L., Cohn J. F. (2001). Human expressions as adaptations: Evolutionary questions in facial expression research. American Journal of Physical Anthropology, 33, 3–24. doi: 10.1002/ajpa.2001

- Senior C., Phillips M. L., Barnes J., David A. S. (1999). An investigation into the perception of dominance from schematic faces: A study using the World-Wide Web. Behavior Research Methods, Instruments, & Computers, 31, 341–346. doi: 10.3758/bf03207730

- Smith M. L., Cottrell G. W., Gosselin F., Schyns P. G. (2005). Transmitting and decoding facial expressions. Psychological Science, 16, 184–189. doi: 10.1111/j.0956-7976.2005.00801.x

- Susskind J. M., Li D. H., Cusi A., Feiman R., Grabski W., Anderson A. K. (2008). Expressing fear enhances sensory acquisition. Nature Neuroscience, 11, 843–850. doi: 10.1038/nn.2138

- Tomat L. R., Manktelow R. T. (2005). Evaluation of a new measurement tool for facial paralysis reconstruction. Plastic and Reconstructive Surgery, 115, 696–704. doi: 10.1097/01.PRS.0000152431.55774.7E

- Tracy J. L., Robins R. W. (2008). The nonverbal expression of pride: Evidence for cross-cultural recognition. Journal of Personality and Social Psychology, 94, 516–530. doi: 10.1037/0022-3514.94.3.516

- Woodzicka J. A., LaFrance M. (2001). Real versus imagined gender harassment. Journal of Social Issues, 57, 15–30. doi: 10.1111/0022-4537.00199

- Yu H., Garrod O. G. B., Schyns P. G. (2012). Perception-driven facial expression synthesis. Computers & Graphics, 36, 152–162. doi: 10.1016/j.cag.2011.12.002
