Abstract

Humans are able to mentally construct an episode when listening to another person's recollection, even though they themselves did not experience the events. However, it is unknown how strongly the neural patterns elicited by mental construction resemble those found in the brain of the individual who experienced the original events. Using fMRI and a verbal communication task, we traced how neural patterns associated with viewing specific scenes in a movie are encoded, recalled, and then transferred to a group of naïve listeners. By comparing neural patterns across the 3 conditions, we report, for the first time, that event-specific neural patterns observed in the default mode network are shared across the encoding, recall, and construction of the same real-life episode. This study uncovers the intimate correspondences between memory encoding and event construction, and highlights the essential role our common language plays in the process of transmitting one's memories to other brains.

Keywords: communication, memory, mental construction, naturalistic, pattern similarity

Introduction

Sharing memories of past experiences with each other is foundational for the construction of our social world. What steps comprise the encoding and sharing of a daily life experience, such as the plot of a movie we just watched, with others? To verbally communicate an episodic memory, the speaker has to recall and transmit via speech her memories of the events from the movie. At the same time, the listener must comprehend and construct the movie's events in her mind, even though she did not watch the movie herself. To understand the neural processes that enable this seemingly effortless transaction, we need to study 3 stages: (1) the speaker's encoding and retrieval (Bird et al. 2015; Chen et al. 2017); (2) the linguistic communication from speaker to listener (Stephens et al. 2010; Silbert et al. 2014); and (3) the listener's mental construction of the events (Kosslyn et al. 2001; Mar 2004; Hassabis and Maguire 2009; Chow et al. 2014). To date, there has been little work addressing the direct links between the processes of memory, verbal communication, and construction (in the listener's mind) of a single real-life experience. Therefore, it remains unknown how information from a past experience stored in one person's memory is propagated to another person's brain, and to what degree the listener's neural construction of the experience from the speaker's words resembles the original encoded experience.

To characterize this cycle of memory transmission, we compared neural patterns during encoding, spoken recall, and mental construction of each scene in a movie (Fig. 1). To closely mimic a real-life scenario, the study consisted of “movie-viewers” who watched a continuous movie narrative, a person (“speaker”) watching and then freely verbally recalling the same movie, and finally naïve “listeners”, never having seen the movie, who listened to an audio recording of the speaker's recollection. We searched for scene-specific neural patterns common across the 3 conditions. To ensure the robustness of the results, the full study was replicated using a second movie. This design allowed us to map the neural processes by which information is transmitted across brains in a real-life context, and to examine relationships between neural patterns underlying encoding, communication, and construction.

Figure 1.

Circle of communication. Depiction of the entire procedure during sharing of an experience. Participants encode the movie and then reinstate it during recall. By listening to the audio recall, listeners construct the movie events in their mind. Mental representations related to the movie are shared throughout this cycle and transmitted across the brains via communication.

We predicted that scene-specific neural patterns in high-order brain areas would be similar during the encoding, spoken recall, and mental construction of a given event. Why should this be so? Firstly, resemblance between neural patterns elicited during encoding and retrieval has been shown in numerous studies using different types of stimuli (Polyn et al. 2005; Johnson et al. 2009; Buchsbaum et al. 2012; St-Laurent et al. 2014; Bird et al. 2015) over the past decade. More recently, it was demonstrated that “scene-specific” neural patterns elicited during encoding of complex natural stimuli (an audio–visual movie) are reinstated in high-order regions during free spoken recall (Chen et al. 2017). These areas include retrosplenial and posterior parietal cortices, medial prefrontal cortex, bilateral hippocampus, and parahippocampal gyrus, known collectively as the default mode network (DMN; Raichle et al. 2001; Buckner et al. 2008). Secondly, a number of studies suggest that the same high-order DMN areas exhibit increased activity during encoding and retrieval of episodic memories, and furthermore are active during the construction of imaginary and future events (Svoboda et al. 2006; Addis et al. 2007; Hassabis and Maguire 2007, 2009; Schacter et al. 2007; Szpunar et al. 2007; Spreng et al. 2009).

Why are the same brain areas active during episodic encoding, retrieval, and mental construction? One possibility is that the same brain areas are involved in encoding, retrieval, and construction, but these areas assume different activity states during each process; in this case, one would expect that neural representations present during encoding and retrieval of specific scenes would not match those present during mental construction of those scenes. Another possibility is that the same neural activity patterns underlie the encoding, retrieval, and construction of a given scene. This hypothesis has never been tested, as no study has directly compared scene-specific neural patterns of brain responses during mental construction of a story with the neural patterns elicited during initial encoding or subsequent recall of the same event.

Our communication protocol (Fig. 1) provides a testbed for this latter hypothesis. In our experiment, during the spoken recall phase, the speaker must retrieve and reinstate her episodic memory of the movie events. Meanwhile the listeners, who never experienced the movie events, must construct (imagine) the same events in their minds. Thus, if the same neural processes underlie both retrieval and construction, then we predict that similar activity patterns will emerge in the speaker's brain and the listeners’ brains while recalling/constructing each event. Furthermore, we predict that the greater the neural similarity between the speaker's brain and the listener's brain, the more successful memory communication will be. We addressed these questions using whole-brain searchlight analyses as well as additional region of interest (ROI) examination. ROI analyses were mainly focused on posterior cingulate cortex (PCC), a key node in the DMN (Buckner et al. 2008), as previous research suggests this region is involved in natural communication (Stephens et al. 2010), retrieval of autobiographical memories (Maddock et al. 2001), episodic reinstatement (Chen et al. 2017), memory consolidation (Bird et al. 2015), and prospection (Buckner and Carroll 2007; Hassabis and Maguire 2007).

In the current study we witness, for the first time, how an event-specific pattern of activity can be traced throughout the communication cycle: from encoding, to spoken recall, to comprehending and constructing (Fig. 1). Our work reveals the intertwined nature of memory, mental construction, and communication in real-life settings, and explores the neural mechanisms underlying how we transmit information about real-life events to other brains.

Materials and Methods

Stimuli

We used 2 audio–visual movies, excerpts from the first episodes of BBC's television shows Sherlock (24 min in length) and Merlin (25 min in length). These movies were chosen to have similar levels of action, dialogue, and production quality. Audio recordings were obtained from a participant who watched and recounted the 2 movies in the scanner. The outcome was an 18-min audio recording of the Sherlock story, and a 15-min audio recording of the Merlin story. Thus the stimuli consisted of a total of 2 movies (Sherlock and Merlin) and 2 corresponding audio recordings. This allowed us to internally replicate the results across 2 datasets.

Subjects

A total of 52 participants (age 18–45), all right-handed native English speakers with normal or corrected-to-normal vision, were scanned. Potential participants were first screened for previous exposure to both movie stimuli, and only those without any self-reported history of watching either of the 2 movies were recruited. From the total group, 4 were dropped due to head motion greater than 3 mm (voxel size), 4 fell asleep, 5 were dropped due to failure in the postscan memory test (recall levels more than 1.5 SD below the mean), and 2 were dropped because they had watched the movie but did not report it before the scan session. Subjects who were dropped due to poor recall had scores close to zero (Merlin scores: max = 25, min = 0.4, mean = 11.9, std = 7.1; Sherlock scores: max = 21.4, min = 0, mean = 11.18, std = 5.6). We acquired informed consent from all participants; the consent procedure was approved by the Princeton University Institutional Review Board.

Procedure

Experimental Design

Eighteen participants watched a 25-min audio–visual movie (“Merlin”) while undergoing fMRI scanning (movie-viewing, Fig. 2A). Before the movie, participants were instructed to watch and/or listen to the stimuli carefully and were told that there would be a memory test after the experiment. One participant separately watched the movie and then recalled it aloud inside the scanner (unguided, without any experimenter cues), and her spoken description of the movie was recorded (spoken-recall). Another group of participants (N = 18), who were naïve to the content of the movie, listened to the recorded 15-minute spoken description (listening). The entire procedure was repeated with a second movie (“Sherlock”; 24-min movie, 18-min spoken description), with the same participant serving as the speaker. This design allowed us to internally replicate and evaluate the robustness of each analysis.

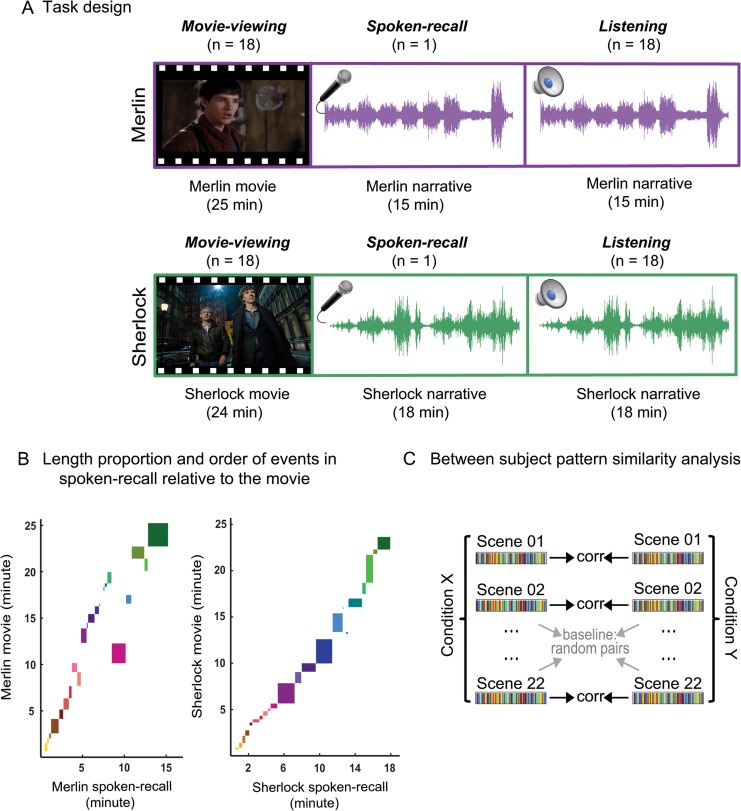

Figure 2.

Experiment design and analysis. (A) 18 participants watched a 25-min audio–visual movie (Merlin) while undergoing fMRI scanning (movie-viewing). One participant separately watched the movie and then recalled it inside the fMRI scanner and her spoken description of the movie was recorded (spoken-recall). Then a group of 18 participants who were naïve to the content of the movie listened to the recorded narrative. The entire procedure was repeated with a second movie (Sherlock) by recruiting new groups of participants. (B) Depiction of the length of each event in the movie (y-axis) relative to its corresponding event (if remembered) in spoken-recall (x-axis) for each movie. Each box denotes a different event. Boxes that are out of the continuous diagonal string of events depict the events that were recalled in an order different from their original place in the movie. (C) Schematic for the main analysis. Brain data were averaged within each scene in the dataset of each condition (e.g., condition x = movie-viewing and condition y = spoken-recall). Averaging resulted in a single pattern of brain response across the brain for each scene for each condition. Then these 2 patterns were compared and correlated using a searchlight method. Significant values were computed by shuffling the scene labels and comparing the nonmatching scenes. Similar analyses were performed for all other comparisons (spoken-recall to listening, listening to movie-viewing).

After the main experiment, but before the memory test, participants listened to a second audio stimulus (15 or 18 min) in the scanner. Data from this scan run were collected for a separate experiment and not used in this article. An anatomical scan was performed at the end of the scan session.

Participants were randomly assigned to watch Sherlock (n = 18) or Merlin (n = 18). The speaker was chosen from among the participants of a previous project in our lab (Chen et al. 2017). In that study, the task for all participants was to watch the Sherlock movie and perform the spoken recall task, describing as many scenes as they could remember. One of them was selected as the speaker for the current experiment based on her above-average recall performance, and her fMRI data from Chen et al. (2017) are used for analyses in this article. The same participant watched and recalled the Merlin movie for the current study.

Scanning Procedures

Participants’ only task inside the scanner was to attend to the stimuli, and there was no specific instruction about fixating on the center. Participants were asked to watch the stimuli through a mirror reflecting the rear screen. The movie was projected onto this screen, located at the back of the magnet bore, via an LCD projector. In-ear headphones were used for the audio stimuli. Eye-tracking was performed during all the runs (recording during the movie, observing the eye during the audio) using the iView X MRI-LR system (SMI Sensomotoric Instruments). Eye-tracking was implemented to ensure that participants were paying full attention and not falling asleep. They were asked to keep their eyes open during the audio runs (no visual stimuli). The movie and audio stimuli were presented using Psychophysics Toolbox [http://psychtoolbox.org] in MATLAB (Mathworks), which enabled us to coordinate the onset of the stimuli (movie and audio) and data acquisition.

We recorded the speaker's speech during the fMRI scan using a customized MR-compatible recording system (FOMRI II; Optoacoustics Ltd). The MR recording system uses 2 orthogonally oriented optical microphones. The reference microphone captures the background noise, whereas the source microphone captures both background noise and the speaker's speech utterances (signal). A dual-adaptive filter subtracts the reference input from the source channel (using a least mean square approach). To achieve an optimal subtraction, the reference signal is adaptively filtered, with the filter gains learned continuously from the residual signal and the reference input. To prevent divergence of the filter when speech is present, a voice activity detector is integrated into the algorithm. A speech enhancement spectral filtering algorithm further preprocesses the speech output to achieve real-time speech enhancement. Finally, after the recording, the remaining noise was further cleaned using noise removal software (Adobe Audition). The resulting recording was good enough to be understood by all 18 naïve listeners, as indicated by our post-listening comprehension test. During listening, sound level was adjusted separately for each participant to ensure a complete and comfortable understanding of the stimuli.

MRI Acquisition

MRI data were collected on a 3 T full-body scanner (Siemens Skyra) with a 20-channel head coil. Functional images were acquired using a T2*-weighted echo planar imaging pulse sequence (TR 1500 ms, TE 28 ms, flip angle 64°, whole-brain coverage 27 slices of 4 mm thickness, in-plane resolution 3 × 3 mm2, FOV 192 × 192 mm2). Anatomical images were acquired using a T1-weighted magnetization-prepared rapid-acquisition gradient echo (MPRAGE) pulse sequence (0.89 mm3 resolution). Anatomical images were acquired in an 8-min scan after the functional scans with no stimulus on the screen.

Postscan Behavioral Memory Test

Memory performance was evaluated using a free recall test in which participants were asked to write down the events they remembered from both the movie and audio recording in as much detail as possible. There was no time limit and they were instructed to write everything that they remembered. Three independent raters read these written free recalls and assigned memory scores to each participant. The raters were given general instructions to assess the quality of comprehension and accuracy of each response, working from a few examples. Suggested items to consider were the number of scenes remembered, importance of the remembered scenes, details provided, and overall comprehension/memory level. The raters could flexibly choose how they scored each of these items. They reported a score for each participant and these numbers were rescaled (to have the same minimum and maximum) across the 3 raters. Ratings generated by each of the 3 raters were highly similar (Cronbach's alpha = 0.85 and 0.87 for Merlin and Sherlock, respectively); these were averaged for further analysis. An example of a rating sheet made and used by one of the raters is provided in Supplementary material (Table S1).
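The rescaling and inter-rater agreement steps described above can be illustrated with a short Python sketch. This is not the authors' code: the function names, the choice of a 0 to 1 target range, and the use of sample variance (ddof = 1) are assumptions made for illustration. Cronbach's alpha is computed with the standard formula, alpha = k/(k − 1) × (1 − sum of per-rater variances / variance of summed scores).

```python
import numpy as np

def rescale_min_max(scores, lo=0.0, hi=1.0):
    """Linearly rescale one rater's scores so they span [lo, hi].
    The target range is an assumption; the text only says the raters'
    scores were given the same minimum and maximum."""
    s = np.asarray(scores, dtype=float)
    return lo + (s - s.min()) * (hi - lo) / (s.max() - s.min())

def cronbach_alpha(ratings):
    """Cronbach's alpha for a (n_participants, n_raters) array."""
    r = np.asarray(ratings, dtype=float)
    k = r.shape[1]
    item_var = r.var(axis=0, ddof=1).sum()      # sum of per-rater variances
    total_var = r.sum(axis=1).var(ddof=1)       # variance of summed scores
    return k / (k - 1) * (1.0 - item_var / total_var)
```

Under these assumptions, each rater's column would be passed through rescale_min_max before computing alpha and averaging across raters.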

Data Analysis

Preprocessing was performed in FSL [http://fsl.fmrib.ox.ac.uk/fsl], including slice time correction, motion correction, linear detrending, and high-pass filtering (140 s cutoff). These were followed by coregistration and transformation of the functional volumes to a template brain (MNI). The rest of the analyses were conducted using custom MATLAB scripts. All timecourses were despiked before further analysis. Before running the searchlight analysis, timecourses were averaged within each scene for all the participants and conditions.

Pattern Similarity Searchlight

Movie-viewing and spoken-recall data are not aligned across time points; it took the speaker 15 min to describe the 25-min Merlin movie, and 18 min to describe the 24-min Sherlock movie (Fig. 2B). To compare the brain responses across different conditions, data obtained during the watching of each movie (movie-viewing) were divided into 22 scenes (Fig. 2C), following major shifts in the narrative (e.g., location, topic, and/or time, as defined by an independent rater). The same 22 scenes were identified in the audio recordings of the recall session based on the speaker's verbal narration. Calculating the arithmetic mean of time points within each scene provided a single pattern of brain response for each scene during movie-viewing, spoken-recall, and listening. Before averaging, timecourses were shifted 3 TRs to account for HRF delay.
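As an illustration of the scene-averaging step, the following Python sketch averages a timecourse within each scene after shifting the scene windows by 3 TRs. It is a minimal sketch, not the authors' MATLAB implementation: the function name, the (start, end) boundary format, and the decision to shift the windows later in time (rather than shifting the data earlier) are assumptions.

```python
import numpy as np

def scene_average(timecourse, scene_bounds, hrf_shift_trs=3):
    """Average a (n_trs, n_voxels) timecourse within each scene.

    scene_bounds: list of (start_tr, end_tr) in stimulus time, end exclusive
    (hypothetical format). Each window is moved later by hrf_shift_trs to
    account for hemodynamic delay and clipped to the scan length.
    """
    n_trs = timecourse.shape[0]
    patterns = []
    for start, end in scene_bounds:
        lo = min(start + hrf_shift_trs, n_trs)
        hi = min(end + hrf_shift_trs, n_trs)
        if hi <= lo:
            raise ValueError("scene window is empty after shifting")
        patterns.append(timecourse[lo:hi].mean(axis=0))
    return np.stack(patterns)  # (n_scenes, n_voxels)
```

With 22 scenes, the output would be a 22 × n_voxels array for each participant and condition, so that data of different durations (e.g., a 25-min movie and a 15-min recall) become directly comparable scene by scene.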

Pattern similarity searchlight analysis was performed across subjects (Chen et al. 2017). Pearson correlation was calculated between (1) the patterns elicited during movie-viewing and (2) the patterns observed during spoken-recall, in a searchlight analysis using 15 × 15 × 15 mm3 cubes centered on every voxel in the brain (Kriegeskorte et al. 2006, 2008). Similarity was calculated between the spoken-recall data and each movie-viewing (encoding) participant's data and then averaged across participants. The speaker's movie data was not included in this movie-viewing set, but will be used in a later analysis. The same type of analysis was performed to compute pattern similarity between brain responses during spoken-recall and listening, and also between listening and movie-viewing.
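To make the searchlight geometry concrete, the sketch below enumerates 5 × 5 × 5 voxel cubes (15 mm on a side at 3 mm voxels) over a brain mask and keeps only cubes with enough in-brain voxels. It is written in Python for illustration and is not the authors' code; the function name, the clipping of cubes at the volume edge, and the use of every grid position as a candidate center are assumptions. The 50% inclusion rule follows the criterion stated in the statistical analysis.

```python
import numpy as np

def searchlight_cubes(mask, half_width=2, min_fraction=0.5):
    """Yield (center, voxel_indices) for each usable searchlight cube.

    mask: 3D boolean brain mask. The cube side is 2*half_width + 1 voxels.
    A cube is kept when at least min_fraction of its nominal volume lies
    inside the mask; voxel_indices is an (n, 3) array of in-mask coordinates.
    """
    side = 2 * half_width + 1
    nx, ny, nz = mask.shape
    for x in range(nx):
        for y in range(ny):
            for z in range(nz):
                xs = slice(max(x - half_width, 0), min(x + half_width + 1, nx))
                ys = slice(max(y - half_width, 0), min(y + half_width + 1, ny))
                zs = slice(max(z - half_width, 0), min(z + half_width + 1, nz))
                sub = mask[xs, ys, zs]
                if sub.sum() >= min_fraction * side ** 3:
                    idx = np.argwhere(sub) + [xs.start, ys.start, zs.start]
                    yield (x, y, z), idx
```

The voxel indices returned for each cube can then be used to pull out the scene-averaged patterns that enter the Pearson correlation for that searchlight.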

Statistical analyses were conducted to locate regions containing scene-specific patterns, wherein statistical significance is only reached if matching scenes (e.g., the same scene in movie-viewing and spoken-recall) can be differentiated from nonmatching scenes. Significance thresholds were calculated using a permutation test (Kriegeskorte et al. 2008), shuffling the scene labels and calculating random scene-to-scene correlations for each participant, then averaging across participants to create a null distribution of r values; the P-value was calculated as the proportion of the null distribution that was above the observed level of pattern similarity for matching scenes. This procedure was implemented for all searchlight cubes for which 50% or more of their volume was inside the brain. Individual P values were generated for each voxel and these were corrected for multiple comparisons using false discovery rate (FDR; Benjamini and Hochberg 1995) at q = 0.05. This analysis aims to confirm the event-specificity of neural patterns by demonstrating that correlation between matching scenes is significantly higher than correlations between random scenes, that is, a given scene's activity pattern is similar between movie-viewing and spoken-recall and discriminable from other scenes.
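The logic of the scene-label permutation test can be sketched in Python as follows, for a single pair of datasets (e.g., the speaker's recall patterns and one viewer's patterns) within one searchlight cube or ROI. This is a simplified illustration rather than the authors' implementation: the function names, the number of permutations, and the use of "greater than or equal" when counting null values are assumptions, and the averaging across participants described above is omitted.

```python
import numpy as np

def scene_correlation_matrix(patterns_x, patterns_y):
    """Pearson r between every scene pattern in x and every scene in y.
    Inputs are (n_scenes, n_voxels); output is (n_scenes, n_scenes)."""
    zx = patterns_x - patterns_x.mean(axis=1, keepdims=True)
    zy = patterns_y - patterns_y.mean(axis=1, keepdims=True)
    zx = zx / np.linalg.norm(zx, axis=1, keepdims=True)
    zy = zy / np.linalg.norm(zy, axis=1, keepdims=True)
    return zx @ zy.T

def permutation_p(patterns_x, patterns_y, n_perm=1000, seed=0):
    """Observed mean matching-scene r and its permutation P value."""
    rng = np.random.default_rng(seed)
    corr = scene_correlation_matrix(patterns_x, patterns_y)
    n = corr.shape[0]
    observed = np.trace(corr) / n               # mean r of matching scenes
    null = np.empty(n_perm)
    for i in range(n_perm):
        perm = rng.permutation(n)               # shuffle scene labels of y
        null[i] = corr[np.arange(n), perm].mean()
    p = float(np.mean(null >= observed))        # share of null at or above observed
    return observed, p
```

Because the null distribution is built from the same data with scene labels shuffled, a small P value indicates that the pattern for a scene is more similar to its counterpart than to other scenes, which is the scene-specificity criterion described above.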

For each comparison, P values for each voxel are plotted as a brain map, with the threshold corrected using FDR (q = 0.05). Spoken-recall versus movie-viewing is presented in Figure 3A,B. Spoken-recall versus listening is presented in Figure 4A,B. These 2 comparisons each consist of one participant (speaker) compared with multiple others (movie-viewers or listeners). In the listening-versus-viewing analysis, each participant's movie-viewing was compared with the average of all the listening participants (Fig. 5A,B).
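For readers unfamiliar with the FDR threshold, the Benjamini and Hochberg (1995) step-up procedure can be sketched in a few lines of Python. The function name and interface are hypothetical; the rule itself is the standard one: sort the m P values, find the largest rank k with p(k) ≤ q·k/m, and declare the k smallest P values significant.

```python
import numpy as np

def bh_fdr_mask(p_values, q=0.05):
    """Benjamini-Hochberg step-up: boolean mask of significant P values."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    ranked = p[order]
    thresholds = q * np.arange(1, m + 1) / m
    passing = np.nonzero(ranked <= thresholds)[0]
    mask = np.zeros(m, dtype=bool)
    if passing.size:
        # everything up to the largest passing rank is significant,
        # even if an earlier rank failed its own threshold
        mask[order[: passing[-1] + 1]] = True
    return mask
```

Applied to a map of voxelwise P values, the resulting mask marks the voxels that would be displayed after correction at the chosen q.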

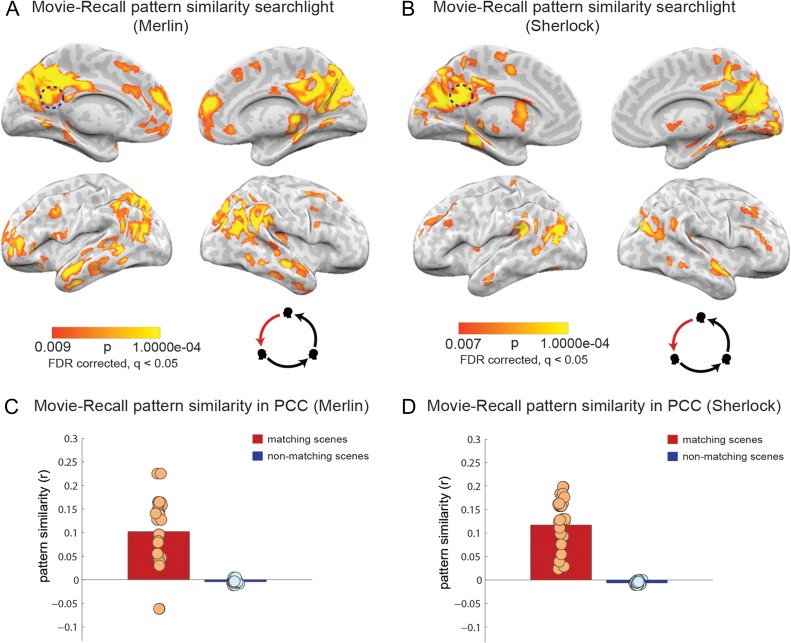

Figure 3.

Movie-viewing to spoken-recall pattern similarity analysis. (A,B) Pattern similarity searchlight map, showing regions with significant between-participant, scene-specific correlations (P values) between spoken-recall and movie-viewing (searchlight was a 5 × 5 × 5 voxel cube). Panel A depicts data for the Merlin movie and panel B depicts data for the Sherlock movie. Dotted circle shows the approximate location of the PCC ROI that was used in the analysis in C,D. (C,D) Pattern similarity (r values) of each participant's encoding (movie-viewing) data to the brain response during spoken-recall (in the speaker) in posterior cingulate cortex. Red bar shows the average correlation of matching scenes and blue bar depicts the average correlation of nonmatching scenes, averaged across subjects. Circles depict values for individual subjects. Panel C depicts data for the Merlin movie and panel D depicts data for the Sherlock movie.

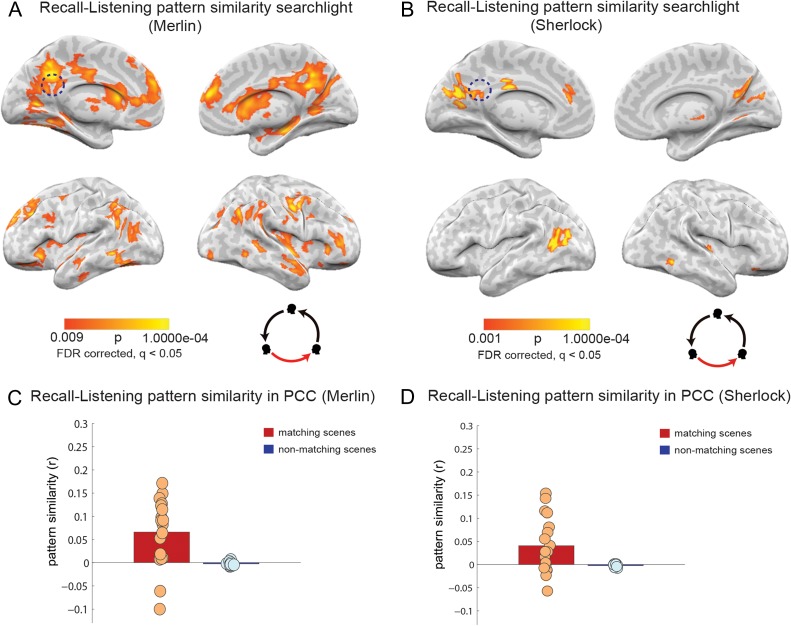

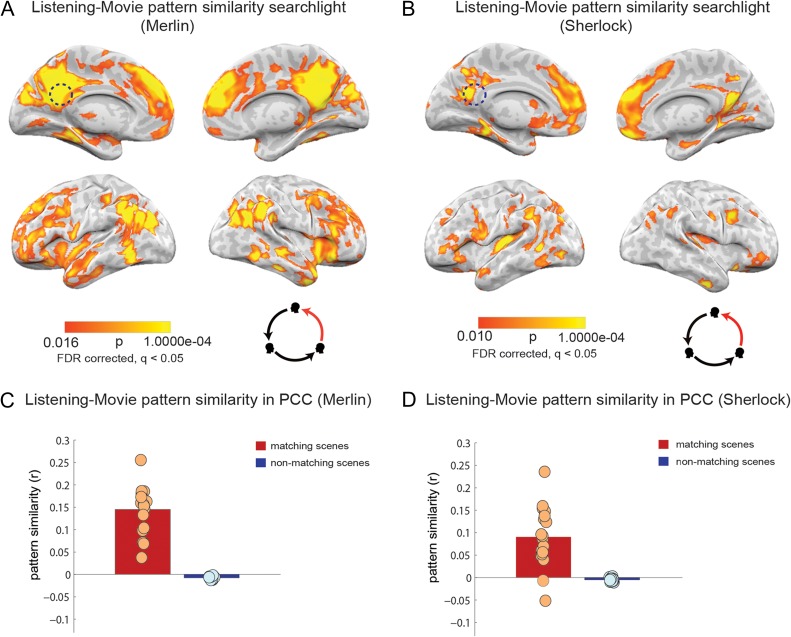

Figure 4.

Spoken-recall to listening pattern similarity analysis. (A,B) Pattern similarity searchlight map, showing regions with significant between-participant, scene-specific correlations (P values) between spoken-recall and listening (searchlight was a 5 × 5 × 5 voxel cube). Panel A depicts data for the Merlin movie and panel B depicts data for the Sherlock movie. Dotted circle shows the approximate location of the PCC ROI that was used in the analysis in C,D. (C,D) Pattern similarity (r values) of each participant's listening data to the brain response during spoken-recall (in the speaker) in posterior cingulate cortex. Red bar shows the average correlation of matching scenes and blue bar depicts the average correlation of nonmatching scenes, averaged across subjects. Circles depict values for individual subjects. Panel C depicts data for the Merlin movie and panel D depicts data for the Sherlock movie.

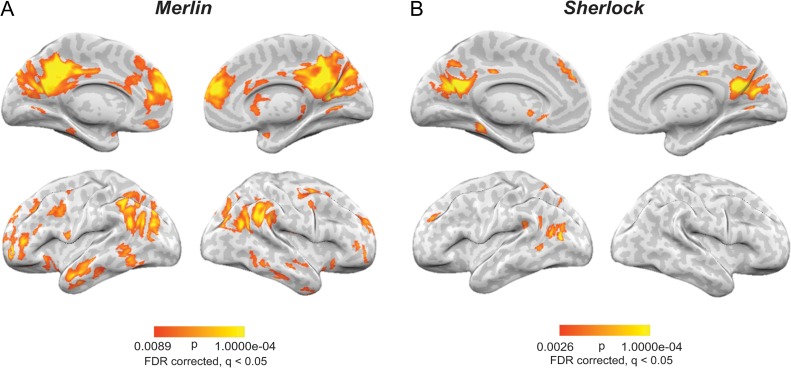

Figure 5.

Listening to movie-viewing pattern similarity analysis. (A,B) Pattern similarity searchlight map, showing regions with significant between-participant, scene-specific correlations (P values) between movie-viewing and listening (searchlight was a 5 × 5 × 5 voxel cube). Panel A depicts data for the Merlin movie and panel B depicts data for the Sherlock movie. Dotted circle shows the approximate location of the PCC ROI that was used in the analysis in C,D. (C,D) Pattern similarity (r values) of each participant's movie-viewing data to the average of all listeners in posterior cingulate cortex. Red bar shows the average correlation of matching scenes and blue bar depicts the average correlation of nonmatching scenes, averaged across subjects. Circles depict values for individual subjects. Panel C depicts data for the Merlin movie and panel D depicts data for the Sherlock movie.

Speaker–Listener Time-Course Lag

Previous work suggests that during communication, neural responses observed in the listener follow the speaker's neural response timecourses with a delay of a few seconds (Stephens et al. 2010; Dikker et al. 2014; Silbert et al. 2014). To see whether this lag was also present in our listeners’ brains, we calculated the correlation in PCC between the scene-specific neural patterns during spoken-recall and listening in the spatial domain, with TR-by-TR shifting of listeners’ neural timecourses. Figure S1-A depicts the r values in the PCC ROI as the TR shift in the listeners was varied from −20 to 20 TRs (−30 to 30 s). In agreement with prior findings, we observed a lag between spoken-recall and listening. In the Merlin group, correlation peaked (r = 0.17) at a lag of ~5 TRs (7.5 s). A similar speaker–listener peak lag correlation at ~5 TRs was replicated in the listeners of the Sherlock group (Fig. S1-B). To account for the listeners’ lag response, we used this 5 TR lag across the entire brain in speaker to listener analysis.
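The lag analysis can be sketched as follows in Python. For each candidate lag, the listener's scene windows are shifted by that many TRs, the listener's data are re-averaged within each shifted window, and the resulting patterns are correlated with the speaker's scene patterns. The function name, the boundary format, and the rule of skipping scenes whose shifted window falls outside the scan are assumptions made for this illustration, not details taken from the authors' scripts.

```python
import numpy as np

def lagged_similarity(speaker_patterns, listener_tc, scene_bounds, lags):
    """Mean matching-scene Pearson r for each listener lag (in TRs).

    speaker_patterns: (n_scenes, n_voxels) scene-averaged speaker data.
    listener_tc: (n_trs, n_voxels) listener timecourse in the same ROI.
    scene_bounds: list of (start_tr, end_tr), end exclusive (hypothetical).
    """
    n_trs = listener_tc.shape[0]
    out = []
    for lag in lags:
        rs = []
        for k, (start, end) in enumerate(scene_bounds):
            lo, hi = start + lag, end + lag
            if lo < 0 or hi > n_trs:
                continue  # shifted window leaves the scan; skip this scene
            pattern = listener_tc[lo:hi].mean(axis=0)
            rs.append(np.corrcoef(speaker_patterns[k], pattern)[0, 1])
        out.append(np.mean(rs) if rs else np.nan)
    return np.array(out)
```

With a TR of 1.5 s, scanning lags from −20 to 20 covers −30 to 30 s, and the lag with the highest value (here reported as about 5 TRs, or 7.5 s) would then be applied in the speaker-to-listener analysis.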

ROI-Based Pattern Similarity

In addition to the searchlight analyses described above, pattern similarity was separately calculated in the PCC ROI. Exactly as in the searchlight, this analysis was performed by calculating Pearson correlations between patterns of brain response in different conditions, but across the entire ROI rather than in searchlight cubes. The results are presented for spoken-recall versus movie-viewing (Fig. 3C,D), spoken-recall versus listening (Fig. 4C,D), and movie-viewing versus listening (Fig. 5C,D). The same analysis was performed in auditory cortex, mPFC, angular gyrus, hippocampus, and parahippocampal gyrus ROIs (Figure S3 A-F). The PCC and mPFC ROIs were taken from a resting state connectivity atlas (Shirer et al. 2012). Auditory cortex, hippocampus, and parahippocampal gyrus ROIs were taken from the Harvard-Oxford anatomical atlas (distributed with the FSL software package; http://fsl.fmrib.ox.ac.uk/fsl/). Angular gyrus was defined using the LONI Probabilistic Brain Atlas (Shattuck et al. 2008). For each ROI in each condition, significance of the average pattern similarity across subjects was calculated using a permutation test. Scene labels were shuffled and the average of correlation values for matching scenes was calculated 100 000 times to create a null distribution. True averages larger than 99% of the values in their corresponding null distribution were considered significant (asterisks in Figure S3).

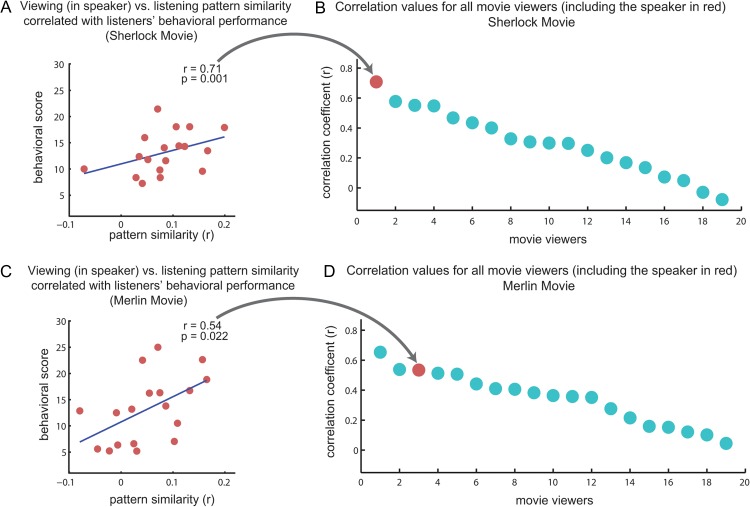

Figure 6.

Pattern similarity of movie viewers to listeners—relationship to behavior. (A,C) Correlation between the comprehension score of listeners and degree of similarity between the speaker neural responses during the encoding phase (i.e., while watching the movie) and all listeners in PCC, for each movie. (B,D) Rank order correlation values of the same analysis as in A,C for each of the viewers (including the speaker, red circle). Note that correlating the listeners’ brain responses with the actual speaker's brain responses during encoding phase better predicted comprehension levels than the correlation with other viewers.

Correlation of Neural Data and Behavior

To compute the behavioral correlation, neural data for each movie-viewing participant, as well as the speaker's movie-viewing data, were compared with each listening participant's data in PCC. These pattern similarity values for each viewer (18 values, one for each of the 18 listeners) were then correlated with the listeners’ behavioral scores on the memory test. Figure 6A,C shows the correlation for the speaker's viewing and the listeners. Figure 6B,D depicts the sorted r values of this correlation for each movie viewer, with the speaker's viewing shown in red.
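A compact Python sketch of this brain-behavior analysis is given below. It assumes that the pattern similarity between a viewer and a listener is the mean Pearson correlation over matching scenes, and that the relationship with behavior is summarized by a rank-order correlation (as in the Figure 6 caption); both choices, along with all function names, are assumptions for illustration. The rank correlation here does not correct for tied values.

```python
import numpy as np

def matching_scene_similarity(patterns_a, patterns_b):
    """Mean Pearson r between same-index scene patterns of two participants.
    Inputs are (n_scenes, n_voxels) arrays from the same ROI."""
    rs = [np.corrcoef(a, b)[0, 1] for a, b in zip(patterns_a, patterns_b)]
    return float(np.mean(rs))

def spearman_no_ties(u, v):
    """Rank-order correlation; assumes no tied values (no tie correction)."""
    ru = np.argsort(np.argsort(u))
    rv = np.argsort(np.argsort(v))
    return float(np.corrcoef(ru, rv)[0, 1])

def brain_behavior_correlation(viewer_patterns, listener_patterns, scores):
    """Correlate one viewer's similarity to each listener with listener scores.
    listener_patterns: sequence of (n_scenes, n_voxels) arrays, one per listener."""
    sims = [matching_scene_similarity(viewer_patterns, lp)
            for lp in listener_patterns]
    return spearman_no_ties(sims, scores)
```

Running brain_behavior_correlation once per viewer (including the speaker's own viewing data) would yield one value per viewer, which could then be sorted for display.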

Classification Analysis

To investigate the discriminability of neural patterns for individual scenes, we first averaged the time-course of brain response within each scene during movie-viewing and listening in the PCC ROI. The patterns were then averaged across participants in each group to make an averaged pattern for movie-viewing and an averaged pattern for listening. Pairwise correlation between the 2 groups for all 22 scenes was computed. Classification was considered successful if the pairwise correlation of any given scene between movie-viewing and listening (matching scenes) was higher than its correlation with any other scene (out of 22 possibilities, chance 4.5%). Overall accuracy was then computed as the number of scenes with the highest correlation (rank = 1 for matching scene) divided by the total number of scenes. Chance was calculated by shuffling the scene labels and computing the pairwise correlations 10 000 times. A null distribution was created using the classification accuracy of the shuffled scenes. The real classification accuracies for both movies fell beyond the 99.9th percentile of this distribution.
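The classification rule can be written compactly in Python. This sketch assumes group-averaged patterns are already available as (n_scenes, n_voxels) arrays for each condition; the function names and the default number of permutations are hypothetical, and this is an illustration rather than the authors' code.

```python
import numpy as np

def scene_classification_accuracy(patterns_a, patterns_b):
    """Fraction of scenes in a whose best-correlated scene in b is the match."""
    n = patterns_a.shape[0]
    # np.corrcoef stacks the rows of both inputs; the upper-right block
    # holds the cross-condition scene-by-scene correlations.
    corr = np.corrcoef(patterns_a, patterns_b)[:n, n:]
    return float(np.mean(np.argmax(corr, axis=1) == np.arange(n)))

def classification_null(patterns_a, patterns_b, n_perm=1000, seed=0):
    """Accuracies obtained after shuffling the scene labels of b."""
    rng = np.random.default_rng(seed)
    n = patterns_a.shape[0]
    return np.array([
        scene_classification_accuracy(patterns_a, patterns_b[rng.permutation(n)])
        for _ in range(n_perm)
    ])
```

With 22 scenes, the expected accuracy under shuffled labels is 1/22 (about 4.5%), and the observed accuracy can be located within the null distribution, for example with np.mean(null >= observed).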

Triple Shared Pattern Searchlight

The triple shared pattern analysis was performed to directly compare the neural patterns across the 3 conditions (movie-viewing, spoken-recall, listening). We sought to find voxels within each searchlight cube that were correlated across the 3 conditions. For each scene, the brain response was z-scored across voxels (spatial patterns) within each cube. If the same patterns are present across conditions, then the z-scored activation value for a given voxel should have the same sign across conditions. To measure this property, we implemented the following computation. For a given voxel in each cube, if it showed all positive or all negative values across the 3 conditions, we calculated the product of the absolute values of brain response in that voxel. Otherwise (if a voxel did not exhibit all positive or negative signs across the 3 conditions), the product value was set to zero. The final value for each voxel was then created by averaging these product values across scenes. To perform significance testing, the order of scenes in each condition was randomly shuffled (separately for each condition) and then the same procedure was applied (calculating the product value and averaging). By repeating the shuffling 10 000 times and creating the null distribution, P values were calculated for each voxel. The resulting P-values were then corrected for multiple comparisons using FDR (q < 0.05).
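The computation described above can be sketched in Python for a single searchlight cube. The inputs are scene-averaged patterns for the 3 conditions, each of shape (n_scenes, n_voxels). The function names, the population standard deviation used for z-scoring, and the "greater than or equal" comparison in the permutation step are assumptions; this is an illustrative sketch and not the authors' implementation.

```python
import numpy as np

def triple_shared_value(x, y, z):
    """Per-voxel shared-pattern value, averaged across scenes.
    x, y, z: (n_scenes, n_voxels) patterns for one cube, one per condition."""
    def zscore(a):
        # z-score each scene's spatial pattern across voxels
        return (a - a.mean(axis=1, keepdims=True)) / a.std(axis=1, keepdims=True)
    zx, zy, zz = zscore(x), zscore(y), zscore(z)
    all_pos = (zx > 0) & (zy > 0) & (zz > 0)
    all_neg = (zx < 0) & (zy < 0) & (zz < 0)
    product = np.abs(zx * zy * zz)              # product of absolute values
    return np.where(all_pos | all_neg, product, 0.0).mean(axis=0)

def triple_shared_p(x, y, z, n_perm=1000, seed=0):
    """Observed values and permutation P values (scene order shuffled
    independently in each condition)."""
    rng = np.random.default_rng(seed)
    observed = triple_shared_value(x, y, z)
    n = x.shape[0]
    exceed = np.zeros_like(observed)
    for _ in range(n_perm):
        null = triple_shared_value(x[rng.permutation(n)],
                                   y[rng.permutation(n)],
                                   z[rng.permutation(n)])
        exceed += null >= observed
    return observed, exceed / n_perm
```

A voxel contributes a nonzero value for a scene only when its z-scored response has the same sign in all 3 conditions, so large averaged values indicate voxels whose relative activation within the cube is consistent across viewing, recall, and listening.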

Results

Pattern Similarity Between Spoken-Recall and Movie-Viewing

We first asked whether brain patterns elicited during spoken-recall (memory retrieval) were similar to those elicited during movie-viewing (encoding). To this end, we needed to compare corresponding content across the 2 datasets, that is, compare brain activity as the movie-viewing participants encoded each movie event to the brain activity as the speaker recalled the same event during spoken-recall. Previous work from our lab (Chen et al. 2017) has shown that neural patterns elicited by watching a movie are highly similar across participants at the individual scene level. Therefore, to increase the reliability of the movie-viewing-related patterns, we used the data from 18 viewers (not including the speaker's viewing data) and compared them with the recall data in the speaker.

Pattern similarity analysis (details in Methods) revealed a large set of brain regions that exhibited significant scene-specific resemblance between the patterns of brain response during movie-viewing and spoken-recall. Figure 3A shows the scene-specific movie-viewing to spoken-recall pattern similarity for the Merlin movie; Figure 3B replicates the results for the Sherlock movie. These areas included posterior medial cortex, medial prefrontal cortex, parahippocampal cortex, and posterior parietal cortex; collectively, these areas strongly overlap with the DMN. In the PCC, a major ROI in the DMN, we observed a positive reinstatement effect in 17 of the 18 subjects in the Merlin group (Fig. 3C), and 18 out of the 18 subjects in the Sherlock group (Fig. 3D). The DMN has been previously shown to be active in episodic retrieval tasks (Maguire 2001; Svoboda et al. 2006). Our finding of similar brain activity patterns between encoding and recall of a continuous movie narrative supports previous studies showing reinstatement of neural patterns during recall using simpler stimuli such as words, images, and short videos (Polyn et al. 2005; St-Laurent et al. 2014; Wing et al. 2014; Bird et al. 2015). In addition, the result replicates a previous study from our lab that used a different dataset where both movie-viewing and spoken-recall were scanned for each participant (Chen et al. 2017).

The above result shows that scene-specific brain patterns present during the encoding of the movie were reinstated during the spoken free recall of the movie. Next, we asked whether listening to a recording of the recalled (verbally described) movie would elicit these same event-specific patterns in an independent group of listeners who had never watched the movie.

Pattern Similarity Between Spoken-Recall and Listening

Previous studies have provided initial evidence for neural alignment (correlated responses in the temporal domain using intersubject correlation) between the responses observed in the speaker's brain during the production of a story and the responses observed in the listener's brain during the comprehension of the story (Stephens et al. 2010; Silbert et al. 2014). Moreover, it has been shown that higher speaker–listener neural coupling predicts successful communication and narrative understanding (Stephens et al. 2010). However, it is not known whether similar scene-specific “spatial” patterns will be observed across communicating brains, and where in the brain such similarity exists. To address this question, we implemented pattern similarity analysis (see Methods) as in the previous section; however, for this analysis we correlated the average scene-specific neural patterns observed in the speaker's brain during spoken recall with the average scene-specific neural patterns observed in the listeners’ brains as they listened to a recording of the spoken recall. We observed significant scene-specific correlation between the speaker's neural patterns during the spoken recall and the listeners’ neural patterns during speech comprehension. Scene-specific neural patterns were compared between the spoken-recall and listening conditions using a searchlight and were corrected for multiple comparisons using FDR (q < 0.05). Figure 4A shows the scene-specific spoken-recall to listening pattern similarity for the Merlin movie; Figure 4B replicates the results for the Sherlock movie. Similarity was observed in many of the areas that exhibited the memory reinstatement effect (movie-viewing to spoken-recall pattern similarity analysis, Fig. 3), including angular gyrus, precuneus, retrosplenial cortex, PCC, and mPFC.
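As an illustration of the scene-specific comparison described above, the following is a minimal sketch and not the pipeline used in this study (the actual procedure is given in Methods). It assumes each condition is summarized as a scenes-by-voxels matrix of average patterns for one ROI or searchlight, measures similarity as the mean Pearson correlation between matching scenes, and assesses it against a null distribution built by shuffling scene labels. The function names, the shuffling scheme, and the synthetic data are all hypothetical.

```python
import numpy as np

def scene_pattern_similarity(patterns_a, patterns_b):
    """Mean Pearson r between matching-scene spatial patterns.

    patterns_a, patterns_b: arrays of shape (n_scenes, n_voxels).
    """
    # Center and normalize each scene's spatial pattern across voxels.
    a = patterns_a - patterns_a.mean(axis=1, keepdims=True)
    b = patterns_b - patterns_b.mean(axis=1, keepdims=True)
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    # Row-wise dot products of unit vectors are Pearson correlations.
    return float(np.mean(np.sum(a * b, axis=1)))

def permutation_p(patterns_a, patterns_b, n_perm=1000, seed=0):
    """One-tailed p-value from shuffling scene labels (an assumed null)."""
    rng = np.random.default_rng(seed)
    observed = scene_pattern_similarity(patterns_a, patterns_b)
    n_scenes = patterns_b.shape[0]
    null = np.array([
        scene_pattern_similarity(patterns_a, patterns_b[rng.permutation(n_scenes)])
        for _ in range(n_perm)
    ])
    p_value = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return observed, p_value

# Synthetic example: a shared scene-specific signal plus independent noise.
rng = np.random.default_rng(42)
shared = rng.standard_normal((22, 200))            # hypothetical sizes
speaker = shared + rng.standard_normal((22, 200))
listeners = shared + rng.standard_normal((22, 200))
print(permutation_p(speaker, listeners))
```

In a searchlight setting, the same computation would be repeated for each local neighborhood of voxels, with the resulting p-values corrected across searchlights.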

Pattern Similarity Between Listening and Movie-Viewing

So far we have demonstrated that event-specific neural patterns observed during encoding in high-order brain areas were reactivated in the speaker's brain during spoken recall, and that some aspects of the neural patterns observed in the speaker were induced in the listeners’ brains while they listened to the spoken description of the movie. If speaker–listener neural alignment is a mechanism for transferring event-specific neural patterns encoded in the memory of the observer to the brains of naïve listeners, then we predict that the neural patterns in the listeners’ brains during the construction of each event will resemble the movie-viewers’ neural patterns during the corresponding scene. To test this, we compared the patterns of brain responses when people listened to a verbal description of each event (listening) with those when people encoded the actual event while watching the movie (movie-viewing).

We found that the event-specific neural patterns observed as participants watched the movie were significantly correlated with neural patterns of naïve listeners who listened to the spoken description of the movie. Figure 5A shows the scene-specific listening to movie-viewing pattern similarity for the Merlin movie; Figure 5B replicates the results for the Sherlock movie. Similarity was observed in many of the same areas that exhibited memory reinstatement effects (movie-viewing to spoken-recall correlation, Fig. 3) and speaker–listener alignment (Fig. 4), including angular gyrus, precuneus, retrosplenial cortex, PCC, and mPFC. Computing the scene-specific listening to movie-viewing pattern similarity within the same PCC ROI shows that the effect was positive for each of the individual subjects in the Merlin group and for 16 out of the 18 subjects in the Sherlock group (Fig. 5C,D).

To confirm that the relationship between the viewing and listening patterns was scene-specific, we assessed whether we could classify “which scene” participants were hearing about (in the listening condition) by matching scene-specific patterns from the listening condition to scene-specific patterns from the viewing condition. We created average patterns in PCC for each scene separately for viewing and listening groups. On average, the neural pattern observed during movie-viewing of a particular scene was most similar to the pattern observed when listening to a verbal description of the scene (average classification accuracy for Merlin = 27%, P = 0.0002 1-tailed, Sherlock = 22%, P = 0.001 1-tailed, chance level = 4.5%, Fig. S2), even though participants listening to the verbal description had not previously seen the movie.
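The matching procedure described above can be sketched as a correlation-based nearest-match classifier. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `classify_scenes` is hypothetical, the data are synthetic, and the choice of 22 scenes is an assumption made only so that chance (1/22, about 4.5%) matches the chance level reported above.

```python
import numpy as np

def classify_scenes(listening, viewing):
    """Match each listening pattern to its most similar viewing pattern.

    listening, viewing: arrays of shape (n_scenes, n_voxels) holding the
    average ROI pattern for each scene in each condition.
    Returns the fraction of scenes whose best match is the same scene.
    """
    n_scenes = listening.shape[0]
    # np.corrcoef stacks the rows of both inputs; the upper-right block
    # holds correlations between listening scene i and viewing scene j.
    corr = np.corrcoef(listening, viewing)[:n_scenes, n_scenes:]
    predicted = corr.argmax(axis=1)
    return float(np.mean(predicted == np.arange(n_scenes)))

# Synthetic example (assumed sizes): shared scene signal plus noise.
rng = np.random.default_rng(0)
n_scenes, n_voxels = 22, 200
signal = rng.standard_normal((n_scenes, n_voxels))
viewing = signal + rng.standard_normal((n_scenes, n_voxels))
listening = signal + rng.standard_normal((n_scenes, n_voxels))

accuracy = classify_scenes(listening, viewing)
chance = 1 / n_scenes
print(f"accuracy = {accuracy:.2f}, chance = {chance:.3f}")
```

Significance of the resulting accuracy could then be assessed by repeating the classification with shuffled scene labels, which is again an assumed scheme rather than the one documented in Methods.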

We conducted ROI-based pattern similarity analyses (same as for PCC in Figs 3–5C,D) for angular gyrus, mPFC, hippocampus, parahippocampal gyrus, and auditory cortex (Figure S3 A-F) for all 3 comparisons: movie-viewing to spoken-recall, spoken-recall to listening, and listening to movie-viewing. As expected, the control ROI (auditory cortex) did not exhibit reliable pattern similarity in any comparison. In contrast, areas within the DMN such as PCC, AG, and mPFC showed significant pattern similarity in most comparisons. PHG showed significant pattern reinstatement during recall and pattern similarity between movie encoding and story encoding. However, hippocampus did not exhibit reliable pattern similarity in most of the comparisons, with the exception of recall-listening in the Merlin movie.

Relationship Between Pattern Similarity and Behavioral Performance

Given that the speaker's success in transmitting her memories may vary across listeners, we next asked whether the level of correlation between the neural responses of each listener and the speaker's neural responses while encoding the movie can predict the listeners’ comprehension level. To address this question, we focused on the PCC, which was chosen as the ROI because previous research has shown that the strength of similarity between spatial patterns of brain response during encoding and rehearsal in this area could predict subsequent memory performance (Bird et al. 2015). Indeed, within the PCC, speaker–listener neural alignment (correlation) predicted the level of comprehension in the listeners, as measured with an independent postscan test of memory and comprehension (Fig. 6A,C; R = 0.71 and P = 0.001 for the Merlin movie, R = 0.54 and P = 0.022 for the Sherlock movie).

Different people could vary in the way they encode and memorize the same events in the movie. These idiosyncrasies would then be transmitted to listeners when a particular speaker recounts her memory. A successful transmission of a particular episodic memory, therefore, may entail a stronger correspondence between the neural responses of the listeners and those of the “speaker watching the movie”, as opposed to those of other viewers watching the same movie. To test this hypothesis, we compared the listeners’ comprehension levels with the correlation between neural patterns in PCC of each movie viewer (including the speaker, N = 19) and each listener during listening. We observed that the listeners’ comprehension levels were best predicted when we compared the listeners’ neural patterns with those of the “actual speaker viewing the movie,” relative to the other 18 viewers (Fig. 6A); in the replication study, the prediction based on the speaker's patterns ranked among the top 3 (Fig. 6C). These results indicate that during successful communication the neural responses in the listeners’ brains were aligned with neural responses observed in the speaker's brain while she encoded (viewed) the movie, even before recall had begun. Although we were able to replicate these results across both studies, they should be interpreted with caution given the relatively small number of subjects (n = 18) in each study. Future studies are needed to better understand how the idiosyncratic way in which a speaker encodes an episode may shape the way it is transmitted to the listeners.

Shared Neural Response Across 3 Conditions (Triple Shared Pattern Analysis)

In Figures 3, 4, and 5 we show the pairwise correlations between encoding, speaking, and constructing. The areas revealed in these maps are confined to high-order areas, which overlap with the DMN and include the TPJ, angular gyrus, retrosplenial cortex, precuneus, PCC, and mPFC. Such overlap suggests that there are similarities in the neural patterns, which are shared at least partially across conditions. Correlation, however, is not transitive (besides the special case when the correlation values are close to 1). That is, if x is correlated with y, y is correlated with z, and z is correlated with x, one cannot conclude that a shared neural pattern is common across all 3 conditions. To directly quantify the degree to which neural patterns are shared across the 3 conditions, we developed a new, stringent 3-way similarity analysis to identify shared event-specific neural patterns across all 3 conditions (movie encoding, spoken recall, naïve listening). The analysis looks for shared neural patterns across all conditions by searching for voxels that fluctuate together (either going up together or down together) in all 3 conditions (see Methods for details). Figure 7A shows all areas in which the scene-specific neural patterns are shared across all 3 conditions in the Merlin movie; Figure 7B replicates the results in the Sherlock movie. These areas substantially overlap with the pairwise maps (Figs 3, 4, and 5), thereby indicating that similarities captured by our pairwise correlations include patterns that are shared across all 3 conditions. Note that the existence of shared neural patterns across conditions does not preclude the existence of additional response patterns that are shared across only 2 of the 3 conditions (e.g., shared responses across the speaker–listener pair that are not apparent during movie encoding) and that are revealed in the pairwise comparisons (Figs 3, 4, and 5).
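The point that pairwise correlations do not guarantee a pattern common to all 3 conditions can be illustrated with a small synthetic construction. This is a toy example, not the triple shared pattern analysis itself (see Methods for that): three hypothetical patterns x, y, and z are each built from two of three independent latent components, so every pair shares exactly one component and each pairwise correlation is expected to be near 0.5, yet by construction no latent component contributes to all three. All names and sizes are assumptions.

```python
import numpy as np

def pairwise_only_patterns(n_voxels=20000, seed=0):
    """Build three patterns that are pairwise correlated by construction,
    although no single latent component is present in all three."""
    rng = np.random.default_rng(seed)
    a, b, c = rng.standard_normal((3, n_voxels))  # independent components
    x = a + b   # shares b with y and a with z
    y = b + c   # shares b with x and c with z
    z = c + a   # shares c with y and a with x
    return x, y, z

x, y, z = pairwise_only_patterns()
r = np.corrcoef([x, y, z])
print("r(x, y) =", round(float(r[0, 1]), 2))
print("r(y, z) =", round(float(r[1, 2]), 2))
print("r(x, z) =", round(float(r[0, 2]), 2))
```

Each latent component is absent from exactly one of the three patterns, which is why a dedicated 3-way measure is needed to establish sharing across all conditions rather than inferring it from the pairwise maps.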

Figure 7.

Shared neural patterns across all conditions. Regions showing scene-specific pattern correlations across movie-viewing, spoken-recall, and listening for (A) the Merlin movie and (B) the Sherlock movie.

Discussion

This study reports, for the first time, that shared event-specific neural patterns are observed in the DMN during the encoding, reinstatement (spoken recall), and new construction of the same real-life episode. Furthermore, across participants, higher levels of similarity between the speaker's neural patterns during movie viewing and the listeners’ neural patterns during mental construction were associated with higher comprehension of the described events in the listeners (i.e., successful “memory transmission”). Prior studies have shown that neural patterns observed during the encoding of a memory are later reinstated during recall (Polyn et al. 2005; Johnson et al. 2009; Buchsbaum et al. 2012; St-Laurent et al. 2014; Bird et al. 2015; Chen et al. 2017). Furthermore, it has been reported that the same areas that are active during recall are also active during prospective thinking and mental construction of imaginary events (Addis et al. 2007; Buckner and Carroll 2007; Hassabis, Kumaran, and Maguire 2007; Schacter et al. 2007; Szpunar et al. 2007; Spreng et al. 2009). Our study is the first to directly compare scene-specific neural patterns observed during “mental construction (imagination) of a verbally described but never experienced event” to patterns elicited during “audio–visual perception of the original event.” This comparison, which was necessarily performed across participants, revealed brain areas throughout the DMN, including posterior medial cortex, mPFC, and angular gyrus, where spatial patterns were shared across both spoken recall and mental construction of the same event.

Why do we see such a strong link between memory encoding, spoken recall, and construction? By identifying these shared event-specific neural patterns, we hope to illustrate an important purpose of communication: to transmit and share one's thoughts and experiences with other brains. In order to transmit memories to another person, a speaker needs to convert between modalities, using speech to convey what she saw, heard, felt, smelled, or tasted. In our experimental setup, during the spoken recall, the speaker focused primarily on the episodic narrative (e.g., the plot, locations and settings, character actions and goals), rather than on fine sensory (visual and auditory) details. Accordingly, movie-viewing to spoken-recall pattern correlations were not found in low-level sensory areas, but instead were located in high-level DMN areas, which have been previously found to encode amodal abstract information (Binder et al. 2009; Honey et al. 2012; Regev et al. 2013). Future studies could explore whether the same speech-driven recall mechanisms can be used to reinstate and transmit detailed sensory memories in early auditory and visual cortices.

Spoken words not only enabled the reinstatement of scene-specific patterns during recall, but also enabled the construction of the same events and neural patterns as the listeners imagined those scenes. For example, when the speaker says “Sherlock looks out the window, sees a police car, and says, well now it's 4 murders,” she uses just a few words to evoke a fairly complex situation model. Remarkably, a few brief sentences such as this are sufficient to elicit neural patterns, specific to this particular scene, in the listener's DMN that significantly resemble those observed in the speaker's brain during the scene encoding. Thus, the use of spoken recall in our study exposes the strong correspondence between memories (event reconstruction) and event construction (imagination). This intimate connection between memory and imagination (Hassabis, Kumaran, and Maguire 2007; Hassabis, Kumaran, Vann et al. 2007; Hassabis and Maguire 2009; Romero and Moscovitch 2012) allows us not only to share our memories with others, but also to invent and share imaginary events with others. Areas within the DMN have been proposed to be involved in creating and applying “situation models” (Zwaan and Radvansky 1998; Ranganath and Ritchey 2012), and changes in the neural patterns in these regions seem to mark transitions between events or situations (Zacks et al. 2007; Baldassano et al. 2016). An interesting possibility is that the (re)constructed “situation model” is the “unit” of information transferred from the speaker to the listener, a transfer made compact and efficient by taking advantage of their shared knowledge.

We showed that, despite the differences between the verbal recall and the movie, listening to a recalled narrative of the movie triggered mental construction of the events in the listeners’ brains and enabled them to partly experience a movie they had never watched. Similarity between patterns of brain response during perception and imagination has been reported before (Stokes et al. 2009; Cichy et al. 2012; Johnson and Johnson 2014; Vetter et al. 2014). These studies have mostly focused on visual or auditory imagery of static objects, scenes, and sounds. In our study, we directly compared, for the first time, scene-specific neural patterns during mental construction of rich episodic content, which describes the actions and intentions of characters as embedded in a real-life dynamic movie narrative.

In agreement with the hypothesis that the speaker's verbal recall transmitted her own idiosyncratic memory of the movie, we found that the listeners’ neural patterns correlated better with the speaker's neural patterns during the encoding of the movie than with the neural responses of other viewers who watched the movie. Furthermore, the ability of the speaker to successfully transmit her memories can vary as a function of how successful the listeners are in constructing the information in their minds. Indeed, we observed that the strength of speaker–listener neural alignment correlated with the listeners’ comprehension as measured by postscan memory and comprehension tests. Taken together, these results suggest that the alignment of brain patterns between the speaker and listeners can capture the quality of transfer of episodic memories across brains. This finding extends previous research that showed a positive correlation between communication success and speaker–listener neural coupling in the temporal domain (Stephens et al. 2010; Dikker et al. 2014; Silbert et al. 2014) in posterior medial cortex, and is also consistent with research showing that higher levels of encoding-to-recall pattern similarity in PCC positively correlate with behavioral memory measures (Bird et al. 2015). Our result highlights the importance of the subjectivity and uniqueness of the original experience (how each person perceives the world, which later on affects how they retrieve that information) in the transmission of information across different brains. Additional research is needed to assess the variability in such capacity among speakers as they share their memories with other subjects.

What causes some listeners to have weaker or stronger correlation with the speaker's neural activity? Listeners may differ in terms of their ability to construct and understand secondhand information that is transmitted by the speaker. The speaker's recall is biased toward those parts of the movie which are more congruent with her own prior knowledge, and the listener's comprehension and memory of the speaker's description is also influenced by his/her own prior knowledge (Bartlett 1932; Bransford and Johnson 1972; Alba and Hasher 1983; Romero and Moscovitch 2012). Thus, the coupling between speaker and listener is only possible if the interlocutors have developed a shared understanding about the meaning and proper use of each spoken (or written) sign (Clark and Krych 2004; Pickering and Garrod 2004; Clark 2006). For example, if instead of using the term “police officers” the speaker uses the British synonym “bobbies”, she is likely to be misaligned with many of the listeners. Thus, the construction of the episode in the listeners’ imagination can be aligned with the speaker's neural patterns (associated with the reconstruction of the episode) only if both speaker and listener share the rudimentary conceptual elements that are used to compose the scene.

Finally, it is important to note that information may change in a meaningful or useful way as it passes through the communication cycle; the 3 neural patterns associated with encoding, spoken recall, and construction are similar but not identical. For example, in a prior study we documented systematic transformations of neural representations between movie encoding and movie recall (Chen et al. 2017). In the current study, we observed that the verbal description of each scene seemed to be compressed and abstracted relative to the rich audio–visual presentation of these events in the movie. Indeed, at the behavioral level, we found that most of the scene recalls were shorter than the original movie scene (e.g., in our study it took the speaker ~15–18 min to describe a ~25-min movie). Nevertheless, the spoken descriptions were sufficiently detailed to elicit replay of the sequence of scene-specific neural patterns in the listeners’ DMNs. Because the DMN integrates information from multiple pathways (Binder and Desai 2011; Margulies et al. 2016), we propose that, as stimulus information travels up the cortical hierarchy of timescales during encoding, from low-level sensory areas up to high-level areas, a form of compression takes place (Hasson et al. 2015). These compressed representations in the DMN are later reactivated (and perhaps further compressed) using spoken words during recall. It is interesting to note that the listeners may benefit from the speaker's concise speech, as it allows them to bypass the step of actually watching the movie themselves. This may be an efficient way to spread knowledge through a social group (with the obvious risk of missing important details), as only one person needs to expend the time and run the risks in order to learn something about the world, and can then pass that information on to others.

Overall, this study tracks, for the first time, how real-life episodes are encoded and transmitted to other brains through the cycle of communication. Sharing information across brains is a challenge that the human race has mastered and exploited. This study uncovers the intimate correspondences between memory encoding and narrative construction, and highlights the essential role that our shared language plays in that process. By demonstrating how we transmit mental representations of previous episodes to others through communication, this study lays the groundwork for future research on the interaction between memory, communication, and imagination in a natural setting.

Supplementary Material

Notes

We thank Christopher Baldassano for guidance on triple shared pattern analysis and his comments on the manuscript. We also thank Mor Regev, Yaara Yeshurun-Dishon, and other members of the Hasson lab for scientific discussions, helpful comments, and their support. Conflict of Interest: None declared.

Funding

National Institutes of Health (1R01MH112357-01 and 1R01MH112566-01).

References

- Addis DR, Wong AT, Schacter DL. 2007. Remembering the past and imagining the future: common and distinct neural substrates during event construction and elaboration. Neuropsychologia. 45:1363–1377.

- Alba JW, Hasher L. 1983. Is memory schematic? Psychol Bull. 93:203–231.

- Baldassano C, Chen J, Zadbood A, Pillow JW, Hasson U, Norman KA. 2016. Discovering event structure in continuous narrative perception and memory. bioRxiv:10.1101/081018.

- Bartlett FC. 1932. Remembering: a study in experimental and social psychology. Cambridge: Cambridge University Press.

- Benjamini Y, Hochberg Y. 1995. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Ser B Methodol. 57:289–300.

- Binder JR, Desai RH. 2011. The neurobiology of semantic memory. Trends Cogn Sci. 15:527–536.

- Binder JR, Desai RH, Graves WW, Conant LL. 2009. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb Cortex. 19:2767–2796.

- Bird CM, Keidel JL, Ing LP, Horner AJ, Burgess N. 2015. Consolidation of complex events via reinstatement in posterior cingulate cortex. J Neurosci. 35:14426–14434.

- Bransford JD, Johnson MK. 1972. Contextual prerequisites for understanding: some investigations of comprehension and recall. J Verbal Learn Verbal Behav. 11:717–726.

- Buchsbaum BR, Lemire-Rodger S, Fang C, Abdi H. 2012. The neural basis of vivid memory is patterned on perception. J Cogn Neurosci. 24:1867–1883.

- Buckner R, Andrews-Hanna JR, Schacter DL. 2008. The brain's default network: anatomy, function, and relevance to disease. Ann N Y Acad Sci. 1124:1–38.

- Buckner RL, Carroll DC. 2007. Self-projection and the brain. Trends Cogn Sci. 11:49–57.

- Chen J, Leong YC, Honey CJ, Yong CH, Norman KA, Hasson U. 2017. Shared memories reveal shared structure in neural activity across individuals. Nat Neurosci. 20:115–125.

- Chow HM, Mar RA, Xu Y, Liu S, Wagage S, Braun AR. 2014. Embodied comprehension of stories: interactions between language regions and modality-specific neural systems. J Cogn Neurosci. 26:279–295.

- Cichy RM, Heinzle J, Haynes J-D. 2012. Imagery and perception share cortical representations of content and location. Cereb Cortex. 22:372–380.

- Clark HH. 2006. Context and common ground. In: Brown K, editor. Encyclopedia of language and linguistics. Boston, MA: Elsevier.

- Clark HH, Krych MA. 2004. Speaking while monitoring addressees for understanding. J Mem Lang. 50:62–81.

- Dikker S, Silbert LJ, Hasson U, Zevin JD. 2014. On the same wavelength: predictable language enhances speaker–listener brain-to-brain synchrony in posterior superior temporal gyrus. J Neurosci. 34:6267–6272.

- Hassabis D, Kumaran D, Maguire EA. 2007. Using imagination to understand the neural basis of episodic memory. J Neurosci. 27:14365–14374.

- Hassabis D, Kumaran D, Vann SD, Maguire EA. 2007. Patients with hippocampal amnesia cannot imagine new experiences. Proc Natl Acad Sci USA. 104:1726–1731.

- Hassabis D, Maguire EA. 2007. Deconstructing episodic memory with construction. Trends Cogn Sci. 11:299–306.

- Hassabis D, Maguire EA. 2009. The construction system of the brain. Philos Trans R Soc B Biol Sci. 364:1263–1271.

- Hasson U, Chen J, Honey CJ. 2015. Hierarchical process memory: memory as an integral component of information processing. Trends Cogn Sci. 19:304–313.

- Honey CJ, Thompson CR, Lerner Y, Hasson U. 2012. Not lost in translation: neural responses shared across languages. J Neurosci Off J Soc Neurosci. 32:15277–15283.

- Johnson JD, McDuff SGR, Rugg MD, Norman KA. 2009. Recollection, familiarity, and cortical reinstatement: a multivoxel pattern analysis. Neuron. 63:697–708.

- Johnson MR, Johnson MK. 2014. Decoding individual natural scene representations during perception and imagery. Front Hum Neurosci. 8:59.

- Kosslyn SM, Ganis G, Thompson WL. 2001. Neural foundations of imagery. Nat Rev Neurosci. 2:635–642.

- Kriegeskorte N, Goebel R, Bandettini P. 2006. Information-based functional brain mapping. Proc Natl Acad Sci USA. 103:3863–3868.

- Kriegeskorte N, Mur M, Bandettini P. 2008. Representational similarity analysis—connecting the branches of systems neuroscience. Front Syst Neurosci. 2:4.

- Maddock RJ, Garrett AS, Buonocore MH. 2001. Remembering familiar people: the posterior cingulate cortex and autobiographical memory retrieval. Neuroscience. 104:667–676.

- Maguire EA. 2001. Neuroimaging studies of autobiographical event memory. Philos Trans R Soc Lond Ser B. 356:1441–1451.

- Mar RA. 2004. The neuropsychology of narrative: story comprehension, story production and their interrelation. Neuropsychologia. 42:1414–1434.

- Margulies DS, Ghosh SS, Goulas A, Falkiewicz M, Huntenburg JM, Langs G, Bezgin G, Eickhoff SB, Castellanos FX, Petrides M, et al. 2016. Situating the default-mode network along a principal gradient of macroscale cortical organization. Proc Natl Acad Sci. 113:12574–12579.

- Pickering MJ, Garrod S. 2004. Toward a mechanistic psychology of dialogue. Behav Brain Sci. 27:169–190; discussion 190–226.

- Polyn SM, Natu VS, Cohen JD, Norman KA. 2005. Category-specific cortical activity precedes retrieval during memory search. Science. 310:1963–1966.

- Raichle ME, MacLeod AM, Snyder AZ, Powers WJ, Gusnard DA, Shulman GL. 2001. A default mode of brain function. Proc Natl Acad Sci. 98:676–682.

- Ranganath C, Ritchey M. 2012. Two cortical systems for memory-guided behaviour. Nat Rev Neurosci. 13:713–726.

- Regev M, Honey CJ, Simony E, Hasson U. 2013. Selective and invariant neural responses to spoken and written narratives. J Neurosci. 33:15978–15988.

- Romero K, Moscovitch M. 2012. Episodic memory and event construction in aging and amnesia. J Mem Lang. 67:270–284.

- Schacter DL, Addis DR, Buckner RL. 2007. Remembering the past to imagine the future: the prospective brain. Nat Rev Neurosci. 8:657–661.

- Shattuck DW, Mirza M, Adisetiyo V, Hojatkashani C, Salamon G, Narr KL, Poldrack RA, Bilder RM, Toga AW. 2008. Construction of a 3D probabilistic atlas of human cortical structures. NeuroImage. 39:1064–1080.

- Shirer WR, Ryali S, Rykhlevskaia E, Menon V, Greicius MD. 2012. Decoding subject-driven cognitive states with whole-brain connectivity patterns. Cereb Cortex. 22:158–165.

- Silbert LJ, Honey CJ, Simony E, Poeppel D, Hasson U. 2014. Coupled neural systems underlie the production and comprehension of naturalistic narrative speech. Proc Natl Acad Sci. 111:E4687–E4696.

- Spreng RN, Mar RA, Kim ASN. 2009. The common neural basis of autobiographical memory, prospection, navigation, theory of mind, and the default mode: a quantitative meta-analysis. J Cogn Neurosci. 21:489–510.

- Stephens GJ, Silbert LJ, Hasson U. 2010. Speaker-listener neural coupling underlies successful communication. Proc Natl Acad Sci USA. 107:14425–14430.

- St-Laurent M, Abdi H, Bondad A, Buchsbaum BR. 2014. Memory reactivation in healthy aging: evidence of stimulus-specific dedifferentiation. J Neurosci. 34:4175–4186.

- Stokes M, Thompson R, Cusack R, Duncan J. 2009. Top-down activation of shape-specific population codes in visual cortex during mental imagery. J Neurosci Off J Soc Neurosci. 29:1565–1572.

- Svoboda E, McKinnon MC, Levine B. 2006. The functional neuroanatomy of autobiographical memory: a meta-analysis. Neuropsychologia. 44:2189–2208.

- Szpunar KK, Watson JM, McDermott KB. 2007. Neural substrates of envisioning the future. Proc Natl Acad Sci. 104:642–647.

- Vetter P, Smith FW, Muckli L. 2014. Decoding sound and imagery content in early visual cortex. Curr Biol. 24:1256–1262.

- Wing EA, Ritchey M, Cabeza R. 2014. Reinstatement of individual past events revealed by the similarity of distributed activation patterns during encoding and retrieval. J Cogn Neurosci. 27:679–691.

- Zacks JM, Speer NK, Swallow KM, Braver TS, Reynolds JR. 2007. Event perception: a mind/brain perspective. Psychol Bull. 133:273–293.

- Zwaan RA, Radvansky GA. 1998. Situation models in language comprehension and memory. Psychol Bull. 123:162–185.
