Abstract

Purpose

We investigated how overt visual attention and oculomotor control influence successful use of a visual feedback brain-computer interface (BCI) for accessing augmentative and alternative communication (AAC) devices in a heterogeneous population of individuals with profound neuromotor impairments. BCIs are often tested within a single patient population, limiting generalization of results. This study focuses on examining individual sensory abilities with an eye toward possible interface adaptations to improve device performance.

Methods

Five individuals with a range of neuromotor disorders participated in a four-choice BCI control task based on the steady-state visually evoked potential (SSVEP). The BCI graphical interface was designed to simulate a commercial AAC device to examine whether an integrated device could be used successfully by individuals with neuromotor impairment.

Results

All participants were able to interact with the BCI, and the highest performance was found for participants able to employ an overt visual attention strategy. For participants with visual deficits due to impaired oculomotor control, effective performance increased after accounting for mismatches between the graphical layout and participant visual capabilities.

Conclusions

As BCIs are translated from research environments to clinical applications, the assessment of BCI-related skills will help facilitate proper device selection and give individuals who use BCI the greatest likelihood of immediate and long-term communicative success. Overall, our results indicate that adaptations can be an effective strategy to reduce barriers and increase access to BCI technology. These efforts should be directed by comprehensive assessments for matching individuals to the most appropriate device to support their complex communication needs.

Keywords: Brain-computer interface, BCI, steady-state visually evoked potential, SSVEP, AAC, feature matching

1. Introduction

Brain-computer interfaces (BCIs) allow individuals to control computers and other devices without requiring overt behavioral input (e.g., manual or vocal). A major area of BCI research focuses on providing aided access to communication software programs for individuals with severe neuromotor disorders and/or paralysis of the limbs and face [1,2]. Aided access to communication has a rich history in the field of augmentative and alternative communication (AAC), which is part of a family of adaptive strategies focused on providing non-vocal access to language, literacy, and communication to individuals with severe speech and motor deficits [3,4]. Individuals who use AAC span a wide continuum, including children and adults with cerebral palsy, Down’s syndrome, traumatic brain injuries, spinal injury, blindness, deafness, and neuromotor disorders such as amyotrophic lateral sclerosis (ALS) [3]. A common characteristic of all individuals who use AAC is unintelligible or absent vocal communication, often despite sufficient cognitive ability for learning and using language. For some individuals with sufficient limb motor control, vocal communication can be replaced by AAC in the form of writing, typing, hand gestures, body language, or row-column scanning interfaces [4,5]. For others with more severe neuromotor disorders or paralysis of the limbs, upper vocal tract, and orofacial structures, alternative AAC strategies based on identifying eye gaze or head pointing location may be implemented [5]. For example, individuals with intact oculomotor control can create messages via camera-based eye-tracking AAC systems: the AAC user orients their eyes toward a desired communication element (e.g., letter, word, graphic, or icon), then performs a predefined selection action such as prolonged fixation or eye blinking. With a virtual keyboard, it is possible to spell out each letter of a word to form longer phrases, sentences, and paragraphs [3,5].

Unfortunately, many individuals still have such profound speech and motor impairments that they are unable to access traditional AAC devices through existing methods. Specifically, individuals with locked-in syndrome (LIS) often have only limited, if any, oculomotor control and are unable to perform voluntary movements of the limbs and face [6,7]. LIS can arise from a number of etiologies, including traumatic brain injury, brainstem stroke, and neurodegenerative disorders such as ALS. For individuals with severely limited or absent movement, BCIs offer an alternative to existing forms of aided communication by eliminating the requirement for voluntary motor control [1,2]. The goal of BCI development is therefore to uncover patterns of brain activity that can be reliably observed either in response to some form of external stimulus (exogenous) or as a result of voluntary neural changes (e.g., imagined motor movements; endogenous), and to link those patterns to the transmission of an intended communicative message [1,2,8].

While BCIs are expanding into the field of AAC, additional research is needed to determine how best to match individuals with complex communication needs, who present with a variety of cognitive-motor phenotypes [9-12], to the BCI that can provide the most appropriate and inclusive services [3,13]. Feature matching is a process for prescribing the AAC device that is best suited to an individual's unique profile, which includes current and projected future strengths and weaknesses [14,15]. Feature matching is critically important for BCIs given their technical complexity and the variety of methods, which differ in their sensory, cognitive, and motor requirements [2]. Major classes of BCI either use sensory stimulation to evoke brain responses for controlling communication interfaces (e.g., the steady state visually evoked potential [16], the P300 speller [17], and auditory evoked responses [18,19]) or rely on endogenous changes in brain activity, as in motor imagery-based interfaces [20-22] (for a full review, see [2]). Inappropriate matching, rather than technology failure, is among the most likely causes of AAC device rejection and abandonment [13], a problem likely to be exacerbated by the complexity of BCI devices. One of the major considerations in feature matching is the assessment of user-centered factors associated with successful device operation [23,24]; therefore, investigating the skills and requirements of each type of BCI for accessing AAC is necessary for effective clinical implementation [9,11,12].

One BCI technique that uses the steady state visual evoked potential (SSVEP) [16,25-29] holds great promise as an access technique for AAC devices due to its high potential communication rates [30] and relatively simple methodology [27]. The SSVEP is a neurophysiological signal recorded using electroencephalography (EEG) over occipital scalp locations in response to a driving, oscillating visual stimulus (e.g., a strobe with a fixed frequency) [31]. A transient visually evoked potential is elicited with every onset of the stimulus; when these transient responses reach the visual cortex and sum, the result is observed in the steady state at frequencies equal to the strobe rate and its harmonics [31,32]. A common approach for SSVEP-based BCIs is to present graphical icons on a screen, each flickering at a different strobe frequency [33]. The simultaneous flickering of all stimuli generates SSVEPs at all of the strobe frequencies; however, the amplitude of the SSVEP [34] and its temporal correlation with each stimulus [35,36] increase with attention. Users can therefore interact with the device by focusing their attention on a single graphical icon, and the attended SSVEP can be decoded using a variety of machine learning techniques (e.g., [34,35]). The frequency with the highest spectral amplitude [34] or greatest temporal correlation [35] is then chosen as the attended frequency, and its associated visual stimulus is selected as the desired response.
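The claim that summed transient responses appear "at frequencies equal to the strobe rate and its harmonics" follows from periodicity: if an identical transient response is added at every stimulus onset, the sum repeats every 1/f seconds, so its spectrum can only contain energy at f, 2f, 3f, and so on. The sketch below illustrates this under stated assumptions: the transient kernel `transient_vep` is a hypothetical Gabor-shaped deflection (not a measured VEP), and the 256 Hz sampling rate and 4 s window are borrowed from the Methods. With a 12 Hz strobe, exactly 48 cycles fit in the window, so no spectral leakage occurs.

```python
import numpy as np

FS = 256.0   # sampling rate (Hz), as in section 2.2
N = 1024     # 4-s analysis window, as in section 2.4

def transient_vep(t):
    """Hypothetical transient VEP: a Gabor-shaped deflection peaking ~100 ms after onset.
    Shape and parameters are illustrative assumptions, not fitted to data."""
    return np.exp(-((t - 0.1) ** 2) / (2 * 0.02 ** 2)) * np.sin(2 * np.pi * 10.0 * (t - 0.1))

def steady_state_response(strobe_hz, fs=FS, n=N):
    """Sum one transient response per stimulus onset (onsets every 1/strobe_hz s)."""
    t = np.arange(n) / fs
    n_onsets = int(np.ceil(n / fs * strobe_hz))
    # extra onsets before and after the window make edge effects negligible,
    # so the window captures the steady state
    onsets = np.arange(-12, n_onsets + 12) / strobe_hz
    return sum(transient_vep(t - t0) for t0 in onsets)

def amplitude_spectrum(x, fs=FS):
    """One-sided amplitude spectrum of the demeaned signal."""
    x = x - x.mean()
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return freqs, np.abs(np.fft.rfft(x)) / len(x)

freqs, amp = amplitude_spectrum(steady_state_response(12.0))
top_two = freqs[np.argsort(amp)[::-1][:2]]
print(sorted(top_two.tolist()))   # [12.0, 24.0]
```

Which harmonic dominates depends on the shape of the assumed kernel; only the restriction of energy to multiples of the strobe rate is general.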

Recent studies have questioned whether overt attention by shifting eye gaze is necessary for a user to optimally interact with SSVEP-based (and other visually-based) BCIs, or whether covert attention is sufficient [29,37]. In this context, covert attention refers to a shifting of attention without changing eye gaze location. Past work confirms that SSVEP amplitudes are modulated via covert attention [27,28,38,39]; however, there appears to be a reduction in BCI performance when covert attention is used for both SSVEP [29] and P300 BCIs [37]. Similar concerns regarding sensory and motor abilities arise when selecting the most appropriate traditional AAC device and are addressed via thorough assessment procedures, followed by device adaptations (e.g., placement of communication icons on the screen, positioning of the device) and user trials with multiple devices. Therefore, rather than using overt attention as a strict screening tool for SSVEP suitability, our study is focused on examining how BCI performance varies by individual and according to neuromotor and oculomotor status. We also provide recommendations for assessment and intervention based on the results of the individual participants in our study.

In this study, we examine performance on an SSVEP-based BCI task by individuals with motor impairments (including oculomotor impairments), and emphasize differences in overt visual attention due to deficits in oculomotor control. Prior studies evaluating the influence of covert attention on BCI task performance have been primarily limited to participants without neurological impairments [29,37,40,41], with only one study evaluating the feasibility of an SSVEP gaze-independent display (a yellow and red interlaced square display with a central fixation cross) for two-class SSVEP selection by individuals with LIS [42]. Here, we focus on a heterogeneous population of individuals with severe neuromotor deficits, including ALS, brain-stem stroke, traumatic brain injury, and progressive supranuclear palsy, and a range of oculomotor abilities.

Each condition can lead to specific differences in visual abilities (e.g., deficits in the lower visual field in progressive supranuclear palsy). Following BCI task completion, performance was analyzed with respect to participants’ observed oculomotor control. In addition, we designed our four-class BCI visual display to simulate one possible method for combining existing graphical interfaces used by AAC devices with SSVEP stimuli for controlling a grid-like spelling / communication program. In many SSVEP applications, custom computer hardware is used to drive flickering SSVEP stimuli with light-emitting diodes (LEDs) to ensure accurate stimulation frequencies. Computer screens, on the other hand, can render a flicker rate exactly only when each cycle spans a whole number of frames, i.e., when the rate divides evenly into the screen refresh rate (commonly 60 Hz); other flicker rates must be approximated. We chose to simulate integration on the graphical display of a computer-based AAC device rather than requiring additional hardware for LED stimulation (see [2] for an example), which may be more practical for future translation of research into clinical practice.
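The refresh-rate constraint can be made concrete with a little frame arithmetic. The sketch below assumes a 60 Hz display and checks whether each of the four stimulation rates used here (12, 13, 14 and 15 Hz) spans a whole number of frames per cycle: 12 Hz takes exactly 5 frames and 15 Hz exactly 4, whereas 13 and 14 Hz need a mixture of 4- and 5-frame cycles. The approximation scheme in `on_off_frames` (a frame is "on" during the first half of each stimulus cycle) is one simple hypothetical rule for illustration; the text does not state how non-divisor rates were rendered in this study.

```python
from fractions import Fraction

def frames_per_cycle(freq_hz, refresh_hz=60):
    """Display frames per on-off cycle (exact rational, possibly non-integer)."""
    return Fraction(refresh_hz) / Fraction(freq_hz)

def is_exact(freq_hz, refresh_hz=60):
    """True if every cycle spans a whole number of frames."""
    return frames_per_cycle(freq_hz, refresh_hz).denominator == 1

def on_off_frames(freq_hz, n_frames, refresh_hz=60):
    """Hypothetical frame-by-frame approximation of an on-off strobe:
    a frame is 'on' (1) while the stimulus phase is in the first half of a cycle."""
    step = Fraction(freq_hz) / Fraction(refresh_hz)   # cycles advanced per frame
    return [1 if (step * i) % 1 < Fraction(1, 2) else 0 for i in range(n_frames)]

def count_onsets(frames):
    """Number of off-to-on transitions (a leading 'on' frame counts as an onset)."""
    return sum(1 for i, s in enumerate(frames) if s == 1 and (i == 0 or frames[i - 1] == 0))

for f in (12, 13, 14, 15):
    # one second of frames at 60 Hz should contain f stimulus onsets
    print(f, frames_per_cycle(f), is_exact(f), count_onsets(on_off_frames(f, 60)))
# 12 5 True 12
# 13 60/13 False 13
# 14 30/7 False 14
# 15 4 True 15
```

Even when the average rate comes out right, the approximated 13 and 14 Hz stimuli have uneven cycle lengths, which is the practical cost of rendering non-divisor rates on a standard display.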

The results of our experiment agree with prior investigations on the importance of oculomotor control to visually-based BCI systems, namely, performance decreases when participants are not able to orient their eyes to visual targets of interest (cf. [37]). However, performance can be increased if the BCI visual display is customized for individual differences in oculomotor capabilities. In many cases, the visual deficits leading to poor BCI performance may also limit the effectiveness of traditional eye-tracking solutions; therefore, a visually based BCI may still be an effective communication interface if appropriately tailored to each user. We provide recommendations for using visual BCIs generally, and SSVEP-based BCIs specifically, based on a new BCI feature matching protocol for individuals who may use BCI for AAC.

2. Methods

2.1. Participants

We recruited five participants with severe neuromotor impairments (1 female, 4 male; age range = 29–64 years, mean age = 46 years). Informed consent was obtained from all participants or, when participants were not able to provide consent due to their motor impairment, through a combination of participant assent and consent from a legally authorized representative. All study procedures were approved by the Institutional Review Boards of both Boston University and the University of Kansas. These participants represent a heterogeneous population with variable etiology, including traumatic injury leading to brain-stem stroke, progressive supranuclear palsy, and ALS. They also varied in their level of oculomotor control, ranging from the ability to control an eye-gaze device (participants P1 & P2) to severely impaired or nearly absent eye movements limited to one dimension only (P3, P4 & P5). Participants also varied in their primary mode of communication: P1 regularly used an eye-tracking AAC device; P2 occasionally used an eye-tracking AAC device but often had difficulty and preferred partner-assisted spelling through mouthing gestures; P3 used eye blinks; P4 produced minimal, severely dysarthric speech (yes/no only) and manual gestures (e.g., “thumbs-up” and “thumbs-down”) to indicate binary responses, supplemented by pointing to an alphabet board; and P5 used vertical eye movements. Finally, participants P1, P3, P4, and P5 completed the BCI task in open spaces inside a research lab, while P2 completed the study protocol in his own home. A summary of participant characteristics can be found in table 1.

Table 1.

Description of participant characteristics including etiology, duration of neurological disorder, current age, gender, self-reported vertical and horizontal visual impairment, primary mode of communication, and message preference.

| ID | Etiology | Duration (years) | Age (years) | Gender | Oculomotor impairment (vertical) | Oculomotor impairment (horizontal) | Primary AAC | Message preference |
|---|---|---|---|---|---|---|---|---|
| P1 | ALS | 5 | 45 | M | no | no | Eye-tracking | Spelling |
| P2 | ALS | 27 | 61 | M | no* | no | Mouthing | Spelling |
| P3 | TBI | 9 | 31 | M | no† | no† | Blinking | Phrases / Symbols |
| P4 | PSP | 3 | 64 | F | yes | no | Gestures | Spelling / Phrases |
| P5 | BS | 13 | 29 | M | no | yes | Vertical Eye Movement | Phrases / Symbols |

Participants P3, P4, and P5 all had significant oculomotor impairment associated with their disorder (even though P3, marked †, did not report any visual deficits), while P1 and P2 were able to control an eye-gaze tracking AAC device to some extent.

P2 (marked *) reported no oculomotor deficits but presented with ptosis of the right eye. Abbreviations: brainstem stroke (BS); progressive supranuclear palsy (PSP); traumatic brain injury (TBI); amyotrophic lateral sclerosis (ALS).

2.2. EEG data acquisition

EEG and electrooculography (EOG) were collected from all participants as they completed the experimental paradigm. EEG was recorded from three active Ag/AgCl electrodes placed at locations O1, Oz, and O2 according to the international standard [43] for monitoring visually evoked potentials. A single active Ag/AgCl electrode was placed lateral to the corner of the right eye to record the EOG. All EEG and EOG signals were recorded using the g.MOBILab+ (g.tec, Graz, AT) mobile biophysiological acquisition device at a 256 Hz sampling rate, with the ground electrode placed on the forehead and the reference electrode on the left earlobe. Signals were acquired wirelessly and in real time over a Bluetooth connection from the g.MOBILab+ to the experimental computer. Signals were bandpass filtered from 0.5 to 100 Hz on board the acquisition device prior to subsequent analysis.

2.3. Experimental paradigm

Participants were asked to engage in an SSVEP-based BCI task in which frequency-tagged, on-off strobe checkerboard stimuli were used to elicit the SSVEP. Rectangular stimuli (100 × 600 px for the left and right stimuli; 600 × 100 px for the top and bottom stimuli) were centered along the four edges of the LCD screen, and the middle of the screen was left empty to provide task instructions and online feedback of BCI selection accuracy (see figure 1).

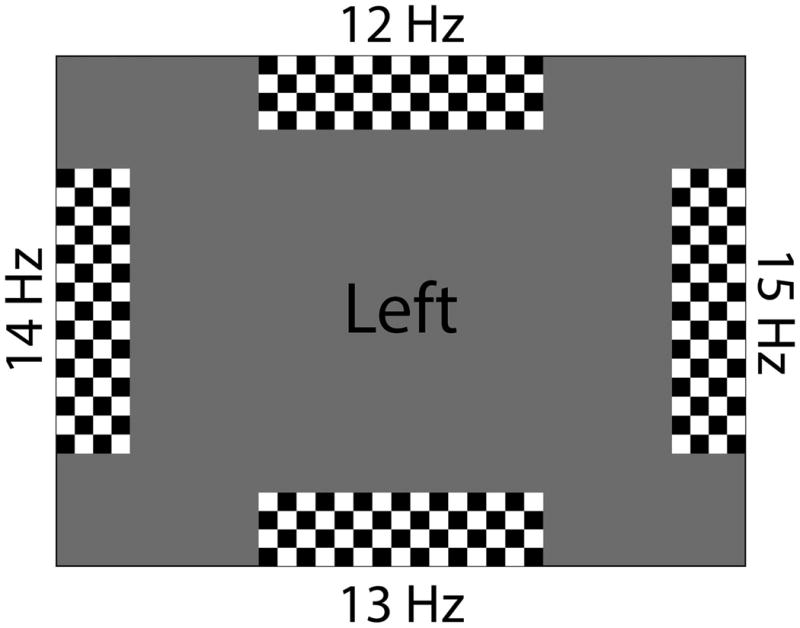

Figure 1.

An example of the graphical interface used to elicit the SSVEP, and provide participant instructions and feedback on decoding results.

Each stimulus was tagged according to its strobe frequency (12, 13, 14 and 15 Hz) and screen position (left, right, up and down). A pilot study determined that these frequencies generated the maximum SSVEP response without overlap between fundamental and harmonic frequencies (e.g., 6 Hz was not chosen because its first harmonic would overlap with 12 Hz stimulation). Attention to one of the stimuli (e.g., [up]) would then result in an amplified SSVEP response at the associated strobe frequency (e.g., 12 Hz). Additionally, participants provided feedback on their performance using their primary method of communication. Prior to the experimental task, all participants (except P1) answered questions regarding their feelings about the BCI experiment and their expectations about operating the BCI device. Following their participation, they were asked again about their feelings regarding BCI and their perception of task difficulty. The BCI graphical layout was designed with a grid-based AAC device in mind. For SSVEP integration with AAC devices, the strobe stimuli may be positioned on the outer perimeter of the screen with a central communication grid. In this way, attention to one of the four SSVEP stimuli would result in a grid cursor movement in the corresponding cardinal direction (see [2] for an example of both spelling and symbol-based versions).
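The frequency-selection constraint described above can be checked mechanically. The sketch below is a hypothetical helper (not the pilot-study procedure, which the text does not describe) that flags any pair of stimulation frequencies whose fundamental or first harmonic fall within a tolerance of each other. It follows this document's convention that the first harmonic of f is 2f, and the 0.2 Hz tolerance is an assumption borrowed from the analysis window in section 2.4.

```python
from itertools import combinations

def harmonic_conflicts(freqs_hz, n_multiples=2, tol_hz=0.2):
    """Return pairs of stimulation frequencies whose multiples 1*f .. n_multiples*f
    (fundamental and first harmonic by default) lie within tol_hz of each other."""
    conflicts = []
    for fa, fb in combinations(freqs_hz, 2):
        multiples_a = [fa * k for k in range(1, n_multiples + 1)]
        multiples_b = [fb * k for k in range(1, n_multiples + 1)]
        if any(abs(a - b) <= tol_hz for a in multiples_a for b in multiples_b):
            conflicts.append((fa, fb))
    return conflicts

print(harmonic_conflicts([12, 13, 14, 15]))      # []
print(harmonic_conflicts([6, 12, 13, 14, 15]))   # [(6, 12)]
```

Raising `n_multiples` tightens the check; for example, 12 and 13 Hz share a common multiple at 156 Hz, which only matters if very high harmonics are included in decoding.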

Each trial began with a text cue ([up], [down], [left], or [right]) displayed in the middle of the screen, indicating which of the four stimuli was the target. The cue was presented for 2 s, followed by a 4 s response period during which participants shifted their attention to one of the four stimuli. Participants were not instructed how to shift their attention, so they could employ either overt or covert strategies. If the BCI decoding algorithm predicted the stimulus that matched the target, a thumbs-up graphic was displayed as feedback; otherwise, the participant received a thumbs-down graphic. A 1 s intertrial interval with a blank screen followed each response period and feedback presentation. Each participant performed a minimum of three runs of 20 trials each.

2.4. SSVEP Analysis and BCI Decoding

Simultaneous presentation of multiple frequency-tagged strobe stimuli generates an SSVEP with frequency components from each stimulus; however, the component for the attended stimulus will be amplified relative to its competitors [34] and will have the greatest temporal correlation with its stimulus [35]. For use in a BCI application, a decoding algorithm must determine which stimulus the participant is attending to by identifying the SSVEP frequency with the greatest response. In this study, BCI decoding was accomplished by computing EEG spectra via the fast Fourier transform and decoding the SSVEP frequencies using the Harmonic Sum Decision algorithm (HSD; [44]).

EEG data collected in our experimental paradigm were first stored in a 1024-point buffer (4 s at 256 Hz) aligned to the trial onset. Next, the mean was subtracted from the stored data, and the power spectral density was estimated using a 1024-point fast Fourier transform. The HSD algorithm then sums the spectral density at each of the stimulation frequencies and their first harmonics. We used the average spectral power in a 0.2 Hz window around each stimulation frequency (e.g., 11.9–12.1 Hz for a 12 Hz center frequency) and its first harmonic to compute the HSD. To make a BCI choice, the decoding algorithm selected the stimulus with the maximum harmonic sum in each trial as the attended target, and the result was presented to the participant in the middle of the screen.
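The decoding steps above can be sketched with NumPy. This is a minimal illustration, not the original implementation: it assumes the three occipital channels are combined by averaging their power spectra (the text does not say how channels were combined), and it assigns 13, 14 and 15 Hz to [down], [left] and [right] purely for illustration (only the [up] = 12 Hz pairing appears in section 2.3). Note that 1024 points at 256 Hz give a frequency resolution of 256/1024 = 0.25 Hz, so each 0.2 Hz window around an integer stimulation frequency contains exactly one FFT bin.

```python
import numpy as np

FS = 256          # sampling rate (Hz), section 2.2
N_FFT = 1024      # 1024-point buffer = 4 s at 256 Hz
HALF_WIN = 0.1    # half-width (Hz) of the 0.2-Hz averaging window
# [up] = 12 Hz follows the example in section 2.3; the other pairings are hypothetical
STIM_HZ = {"up": 12.0, "down": 13.0, "left": 14.0, "right": 15.0}

def power_spectrum(eeg, fs=FS, n_fft=N_FFT):
    """eeg: array of shape (n_channels, n_samples), e.g. O1, Oz, O2.
    Demeans each channel, computes a periodogram PSD, then averages across
    channels (an assumption; the channel combination is not specified)."""
    x = eeg - eeg.mean(axis=1, keepdims=True)
    psd = np.abs(np.fft.rfft(x, n=n_fft, axis=1)) ** 2 / (fs * n_fft)
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / fs)
    return freqs, psd.mean(axis=0)

def window_power(freqs, psd, center_hz, half_win=HALF_WIN):
    """Average PSD within [center - half_win, center + half_win]."""
    mask = np.abs(freqs - center_hz) <= half_win
    return psd[mask].mean()

def hsd_decode(eeg, stim_hz=STIM_HZ):
    """Harmonic sum: window power at f plus at its first harmonic 2f; pick the max."""
    freqs, psd = power_spectrum(eeg)
    scores = {label: window_power(freqs, psd, f) + window_power(freqs, psd, 2 * f)
              for label, f in stim_hz.items()}
    return max(scores, key=scores.get), scores

# Synthetic trial: all four rates present, with 14 Hz (and 28 Hz) amplified by "attention"
rng = np.random.default_rng(0)
t = np.arange(N_FFT) / FS
signal = sum(0.5 * np.sin(2 * np.pi * f * t) for f in (12, 13, 15))
signal = signal + 2.0 * np.sin(2 * np.pi * 14 * t) + 1.0 * np.sin(2 * np.pi * 28 * t)
eeg = signal + rng.normal(scale=1.0, size=(3, N_FFT))   # three noisy "channels"
label, scores = hsd_decode(eeg)
print(label)   # left (14 Hz under the hypothetical mapping)
```

Summing the fundamental and first harmonic lets a stimulus win even when much of its response energy sits at 2f; the window width matters little here because, at 0.25 Hz resolution, each window holds a single bin.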

2.5. Statistical analysis of BCI results

We computed summary statistics (mean, standard error, and 95% confidence intervals) of BCI accuracy for each participant individually, since the heterogeneity of the population makes group-level analyses difficult to interpret. In addition, we calculated confusion matrices of the BCI output for each participant to explore any error patterns in decoding, and used a bootstrap randomization procedure to examine whether BCI performance for each participant exceeded chance levels. In this procedure, BCI responses were held fixed and compared to randomly shuffled target values (the target stimulus direction), and this was repeated 10,000 times. We report BCI accuracy as statistically significant if the proportion of randomized accuracy values greater than the accuracy of the actual predicted BCI responses was less than 5%. To examine the influence of oculomotor control on performance of the SSVEP-based BCI, we modeled BCI responses using a logistic regression with two factors: participant age and status of oculomotor control (impaired or not impaired).
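The randomization procedure can be sketched as follows, assuming trial-level arrays of cued targets and decoded directions. It holds the BCI predictions fixed, shuffles the target labels 10,000 times, and reports the fraction of shuffled accuracies that reach the observed accuracy. Counting ties as "at least as extreme" (`>=`) is a slightly more conservative choice than the strict "greater than" wording in the text; the function name and the example session are hypothetical.

```python
import numpy as np

def randomization_test(targets, predictions, n_iter=10_000, seed=0):
    """Compare observed BCI accuracy with accuracies obtained after shuffling targets.
    BCI predictions stay fixed; target labels are permuted n_iter times.
    Returns (observed_accuracy, p_value)."""
    rng = np.random.default_rng(seed)
    targets = np.asarray(targets)
    predictions = np.asarray(predictions)
    observed = np.mean(targets == predictions)
    null = np.array([np.mean(rng.permutation(targets) == predictions)
                     for _ in range(n_iter)])
    # ties count as "at least as extreme" (slightly more conservative than strict >)
    p_value = np.mean(null >= observed)
    return observed, p_value

# Hypothetical 80-trial session (20 cues per direction), for illustration only
targets = np.repeat(["up", "down", "left", "right"], 20)
always_up = np.full(80, "up")   # a decoder stuck on one direction
acc, p = randomization_test(targets, always_up)
print(acc, p)   # 0.25 1.0
```

The stuck-decoder example shows why shuffling targets is a useful reference: a decoder that always outputs one direction scores exactly the proportion of that direction among the cues, and every shuffle reproduces that score, so it is never judged better than chance.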

3. Results

3.1. Overall BCI performance

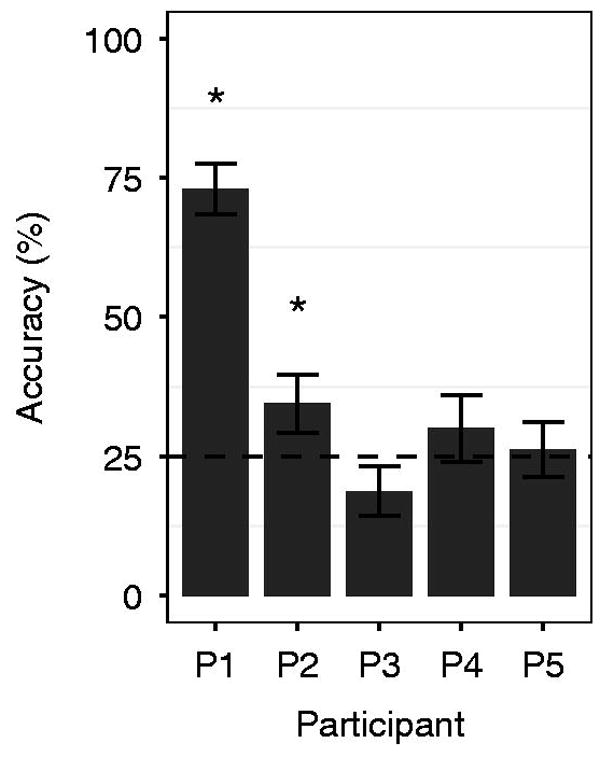

Overall accuracy in the BCI task ranged from 18.75% to 73.0% correct (mean 38.61%; 25% is the theoretical chance rate for a four-choice task). As shown in figure 2 and table 2, BCI performance for participants P1 and P2 was statistically significantly greater than chance according to the bootstrap randomization test of accuracy (p < 0.05; similar to [42]). Although the average performance for participant P4 was above the theoretical chance level, the result did not reach statistical significance.

Figure 2.

Average individual performance for all participants. The dashed line represents the theoretical chance accuracy rate (25% for a 4-choice BCI); performance greater than chance according to the bootstrap randomization test is indicated with *. Error bars show ±1 standard error.

Table 2.

A summary of BCI performance for each participant with the results of a bootstrap randomization test of accuracy against chance levels

| Participant | Accuracy (%) | # Runs | Significance test |
|---|---|---|---|
| P1 | 73.0 | 4 | p < 0.0001 |
| P2 | 34.52 | 7 | p = 0.0145 |
| P3 | 18.75 | 4 | p = 0.8896 |
| P4 | 30.00 | 3 | p = 0.1040 |
| P5 | 26.25 | 4 | p = 0.3169 |

Next, we sought to determine the effects of oculomotor control on BCI performance. Oculomotor control is needed for overt visual attention (i.e., moving the eyes), and a lack of oculomotor control requires some amount of covert visual attention for portions of the visual display in the periphery. We examined two main factors influencing performance of the SSVEP-BCI system, participant age and oculomotor impairment, using a binary logistic regression analysis. We evaluated the statistical significance of model coefficients using an analysis of variance (ANOVA) with a chi-square test in R [45]. There was a statistically significant main effect of oculomotor impairment (Wald Type-II, χ²(1) = 40.673, p < 0.001), but no effect of age. There was also a statistically significant interaction between oculomotor impairment and age (Wald Type-II, χ²(1) = 26.582, p < 0.001), though the limited number of participants makes this effect difficult to interpret. A post-hoc Wilcoxon rank sum test of the main effect of oculomotor impairment indicated that individuals without severe oculomotor impairment (participants P1 & P2) had statistically significantly greater performance than those with significant impairments (participants P3, P4, & P5; Wilcoxon rank sum test, p < 0.05).

3.2. Directionality preferences

We conducted a separate analysis of performance by the direction of each SSVEP stimulus to address variability in oculomotor control between participants. Figure 3 summarizes BCI accuracy per participant for each directional stimulus. A logistic regression with ANOVA of stimulus direction (up, down, left, right) per participant was used to confirm directional preferences. A statistically significant effect of direction was found for all participants, indicating that some directions outperformed others (figure 3). A post-hoc pairwise Wilcoxon rank sum test with Bonferroni correction was used to determine which directions differed significantly in accuracy (results and a summary of statistical tests are shown in figure 3). This analysis identified greater performance for [up] (92%) and [left] (84%) versus [right] (44%) for participant P1, though [up], [down], and [left] were all above 72%; for [down] (76%) versus all other directions for P2; for [up] (40%) for P3 (all others were less than 40%); for [up] (40%) and [left] (67%) versus both [down] (0%) and [right] (10%) for P4; and for [up] (65%) versus all others (≤ 20%) for P5.

Figure 3.

(left) Performance by participant for each of the four directions presented in the BCI experiment, with (right) the Wald Type-II χ² test of the factor Direction for each participant and any statistically significant differences between directions (Wilcoxon sign rank test). Each participant demonstrated patterns of BCI performance that suggest where BCI stimuli and communication items should be placed (i.e., those locations with the highest performance among the four tested directions).

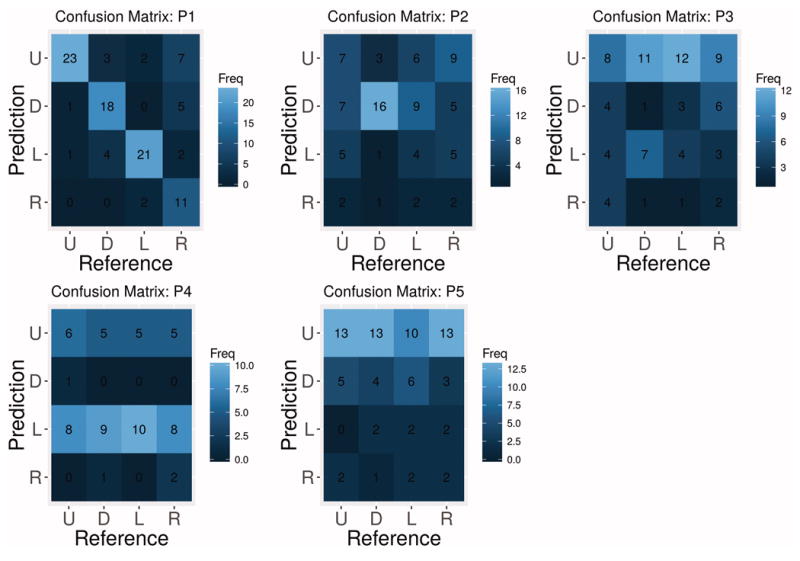

To further examine directional preferences, we computed confusion matrices of BCI classifications for each participant (figure 4). For participant P1, the dominant classification results occur along the confusion matrix diagonal, indicating high true positive rates (also observed in figure 3). The remaining participants all demonstrated patterns that reflect directional preferences associated with their specific oculomotor or visual field deficits. For instance, classification for participant P2 appears to be biased toward good classification of the [down] stimulus, with random confusions among the remaining directions. Classifications for participants P3, P4, and P5 appear to be biased toward particular predicted directions ([up] for P3 and P5; [up] & [left] for P4). A full discussion of the relationship between oculomotor and visual field deficits and the observed BCI performance is provided in Section 4.1. The finding of unique directional preferences and decoding patterns has important implications for feature matching assessment and the selection of possible visually-based BCIs. Further, these directional competencies can be used to tailor the visual display to match individual strengths in oculomotor control and visual acuity (e.g., placement of communication icons and BCI control stimuli in the upper and left visual field for participant P4).
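A confusion matrix and the per-direction accuracies behind figures 3 and 4 can be computed from trial-level cue and decoding labels. The sketch below is illustrative: it places cued targets on rows and decoded directions on columns, an orientation that is an assumption and may differ from figure 4. In this orientation, a decoder biased toward one predicted direction fills a single column. The example trials are hypothetical and only loosely mimic the [up] bias described for P3 and P5.

```python
import numpy as np

DIRECTIONS = ["up", "down", "left", "right"]

def confusion_matrix(targets, predictions, labels=DIRECTIONS):
    """Counts of (cued, decoded) pairs: rows = cued target, columns = decoded direction.
    The row/column orientation is an assumption and may differ from figure 4."""
    index = {lab: i for i, lab in enumerate(labels)}
    cm = np.zeros((len(labels), len(labels)), dtype=int)
    for cued, decoded in zip(targets, predictions):
        cm[index[cued], index[decoded]] += 1
    return cm

def per_direction_accuracy(cm):
    """True positive rate for each cued direction (diagonal divided by row totals)."""
    return np.diag(cm) / cm.sum(axis=1)

# Hypothetical decoder biased toward [up], for illustration only
targets = ["up"] * 4 + ["down"] * 4 + ["left"] * 4 + ["right"] * 4
predictions = (["up"] * 4 + ["up", "up", "down", "up"]
               + ["up", "left", "up", "up"] + ["up"] * 4)
cm = confusion_matrix(targets, predictions)
print(cm)
print(per_direction_accuracy(cm))
```

Here the [up] column absorbs 14 of the 16 trials, so [up] reaches perfect accuracy while the other directions fall to 25% or 0%. Overall accuracy alone (6 of 16 trials) would hide that pattern, which is why the directional breakdown is informative for interface placement.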

Figure 4.

Confusion matrices measuring decoding performance of the SSVEP-based BCI. Ideally, the diagonal should contain the most classifications. Other patterns (e.g., the horizontal line for the [left] stimulus in P4) indicate some form of bias toward certain predictions.

3.3. Motivation

We asked participants to describe their feelings about operating the BCI before and after completing the experimental protocol by choosing among the following words: excited, hopeful, skeptical, curious, and apathetic (some chose more than one word, leading to more responses than participants). Prior to the experiment, participants were mostly skeptical, though curious and somewhat excited (3 participants were skeptical, 2 curious, 2 excited, and 1 apathetic). Following the experiment, all four participants who completed the questionnaire (all but P1) indicated excitement for BCI technology, 2 were curious, 2 were hopeful, and 1 was still apathetic (P5). We also compared overall BCI performance in the first run versus the last run for all participants to gauge any effects of fatigue, learning, or changes in motivation on BCI control. We did not find any statistically significant change in performance across all participants (Wilcoxon rank sum test, p = 0.30) or for any individual participant (Wilcoxon rank sum test, all p > 0.06). Taken together, the increase in positive ratings (e.g., “excited” increased from 2 to 4 participants; “skeptical” decreased from 3 to 0 participants) and the stable performance from first to last run suggest that our participants were motivated to operate the BCI device. Motivation is important for eventual buy-in and acceptance from individuals who may use BCI for accessing AAC, their caregivers, and AAC clinical professionals, and for minimizing device abandonment.

4. Discussion

The present study was designed to test the performance of an SSVEP-based BCI controlled by a heterogeneous population of individuals with profound neuromotor impairments, including impairments of oculomotor control. The SSVEP method for eliciting brain activity has the potential to capitalize on the sensory abilities of individuals with paralysis, since it is a neurophysiological response to a driving visual stimulus [16,29,46], and it may require different cognitive skills than other visual BCIs (e.g., it requires only selective attention to target stimuli rather than the cognitive decision about the stimulus needed for P300 spellers) [12]. There is debate in the BCI community, however, about whether overt attention (i.e., movements of the eyes) is required to properly operate visually-based BCIs, including the grid-based P300 speller [37] and SSVEP [29], or whether covert attention (i.e., attending to stimuli in the periphery) is sufficient. The answer to this question will help evaluate the appropriateness of SSVEP-based BCIs as an AAC access method for individuals with oculomotor difficulties, in line with person-centered AAC best practices. For instance, the results of our study will help inform SSVEP BCI recommendations based upon an evaluation that includes an initial screening / training session with the device. In addition, our results will aid future BCI assessments that either rule out certain BCI modalities or identify those that have the potential for success but require certain modifications to optimally match the BCI to a unique individual profile. These topics are discussed in more detail in the following subsections.

4.1. BCI Performance Analysis

We estimated classification accuracy and confusion matrices of BCI performance for each participant and stimulus direction. Average accuracy was 38%, and some errors may have been due to external noise and distractions: this study was intentionally performed in an open space, and no attempt was made to minimize environmental distractions or enforce strict attention to the task, in order to gauge performance in a somewhat realistic usage scenario. The lack of full oculomotor control was a significant factor in the low performance of participants P2, P3, P4, and P5; for these participants, optimal performance can only be expected if visual stimulation occurs in an accessible region of the visual field ([up] for P5 with brain-stem stroke; [up] or [left] for P4 with progressive supranuclear palsy). Comprehensive assessments to identify participant strengths (particularly in visual perception and oculomotor capabilities), similar to those used in AAC [3], can be used to tailor the interface to improve performance.

Directional preference varied between participants but often agreed with their reported oculomotor or visual field disruptions. Our results suggest that participant-specific deficits in perceiving the entire visual field may have been responsible for the observed differences in directional performance when using the BCI. For instance, P1 had full oculomotor control and, unsurprisingly, had the best performance using the BCI. Additionally, inspection of the directional results in Figures 3 and 4 shows no systematic confusions between directions that had high true positive rates. The results for participant P2 were more distinctive, with strong performance for the [down] stimulus, weaker performance for [up], and very weak performance for the remaining directions. The confusion matrix reveals that reliable performance was achieved only for the [down] direction, though at high accuracy. Participant P2 presented with ptosis of the right eye, which may have limited access to the upper and right visual fields. In addition, he reported difficulty using eye tracking to access his AAC device and was unable to use binocular eye-tracking systems. Participants P3, P4 and P5 all showed relatively good performance in at least one direction: [up] for P3, [up] and [left] for P4, and [up] for P5. Participant P3 had limited eye movements, and his parents reported that he has difficulty with attention, which likely contributed to his relatively low performance in the BCI task. Participant P4 had progressive supranuclear palsy, which can adversely affect the lower visual field. When questioned, she revealed that she was unable to see the [down] stimulus, which is evident from her 0% accuracy for that direction. Participant P5 had locked-in syndrome (LIS) due to a brainstem stroke and primarily communicated using vertical eye movements for binary responses. He was unable to move his eyes horizontally (i.e., classical LIS [7]), which is evident in his poor performance for the [left] and [right] stimuli. The observation of participant-specific, rather than systematic, patterns of performance suggests that oculomotor and visual field deficits were the primary reason for the observed BCI directional preferences. The directional analysis should be a key part of BCI assessment procedures; in this study, it revealed that whole-screen interfaces are not optimal for all participants, but that adaptations may be possible based on individual strengths.
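One way to make the directional analysis part of a screening protocol is to flag, for each direction, whether performance exceeds the four-choice chance level. The sketch below uses a one-sided exact binomial test; this is an illustrative choice on our part, not the analysis reported in this study, and the helper names and trial counts are hypothetical. It assumes independent trials and an unbiased classifier; because four directions are tested per participant, a stricter threshold (e.g., a Bonferroni-corrected alpha of 0.05/4) may be preferable.

```python
from math import comb


def p_above_chance(correct, trials, chance=0.25):
    """One-sided exact binomial p-value P(X >= correct), X ~ Binomial(trials, chance)."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )


def accessible_directions(correct_by_dir, trials_by_dir, chance=0.25, alpha=0.05):
    """Directions whose hit count is significantly above chance (hypothetical helper)."""
    return [
        d for d, c in correct_by_dir.items()
        if p_above_chance(c, trials_by_dir[d], chance) < alpha
    ]


def min_hits_above_chance(trials, chance=0.25, alpha=0.05):
    """Smallest hit count that reaches significance for a given number of trials."""
    for k in range(trials + 1):
        if p_above_chance(k, trials, chance) < alpha:
            return k
    return None


# Hypothetical counts for one participant, 20 trials per direction.
trials = {"up": 20, "down": 20, "left": 20, "right": 20}
correct = {"up": 14, "down": 0, "left": 12, "right": 5}

print(accessible_directions(correct, trials))
print(min_hits_above_chance(20))
```

Under these assumptions, 20 trials per direction require at least 9 hits (45%) to exceed chance; with fewer trials the required proportion generally rises, which matters for short single-session screenings.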

4.2. Assessment and selection for BCI

Assessment and feature matching procedures in AAC help identify both the access modality and the communication interface that best meet the needs of individuals with complex communication needs [13-15]. The sheer variety of access techniques, AAC options, and profiles of individuals who may use AAC makes these procedures necessary for optimal device selection. For instance, some individuals cannot maintain eye gaze on a screen but are still able to view visual communication interfaces; for them, an access modality other than eye tracking (e.g., a button press using a limb or the head, if available) may be most appropriate. The introduction of BCIs into AAC best practices adds further options that should be considered when selecting the most appropriate communication method [2,5,9,12]. The current study investigated only sensory abilities to determine operational competency; a comprehensive BCI assessment should additionally include cognitive ability, attention, literacy, motor skill and motor signs of neurological disorder (e.g., spasticity), medical history (e.g., risk of seizures), individual preferences, and caregiver supports [12]. Assessment recommendations for each participant are listed below:

Participant P1 was already successful using binocular eye tracking to access AAC and was also very proficient using the SSVEP interface; therefore, his current AAC access method is recommended as the most appropriate option. He currently has the skills needed to operate an SSVEP BCI (and likely any other visual BCI). We therefore suggest that he build and maintain his BCI skills to facilitate switching from eye-tracking to BCI access in the event of progressive decline due to ALS.

Participant P2 was able to use monocular eye tracking, though he preferred partner-assisted spelling through mouthing gestures. He demonstrated high proficiency with the SSVEP interface for the [down] stimulus; therefore, additional assessment with modified stimulus placements is needed to fully evaluate his likelihood of success with SSVEP-based BCIs. Follow-up evaluations should place all stimuli in the lower visual field and ensure appropriate placement of the graphical display relative to his current field of view. While P2 has a reliable form of communication with his partner, BCI-based access to AAC may provide him with some independence or an ability to communicate when his partner is unavailable.

Participant P3 was unable to use the SSVEP device in any meaningful fashion. SSVEP-based BCIs require the ability to selectively attend to individual visual stimuli while ignoring others. He may not have been able to complete this complex attentional task; therefore, the SSVEP and likely other visual BCIs (e.g., the P300 speller) are not recommended for his access to AAC. An evaluation of auditory-based and motor-based BCIs is appropriate to identify an alternative BCI access modality.

Participant P4 used manual gestures as her current mode of communication, and she was able to control the SSVEP device using the [up] and [left] stimuli, with the best performance for [left]. Notably, she was unable to use the [down] stimulus (0% accuracy), which is consistent with the visual impairments associated with progressive supranuclear palsy. Her relative success with two of the four SSVEP stimuli suggests a need for follow-up evaluations with stimuli located in the upper visual field and appropriate placement of the graphical display. Although her current communication method is effective, the gestures used by participant P4 are not suited to keyboard or touchscreen access. SSVEP-based BCI access may offer an alternative technique that bypasses the motor system and supports spelling, which she identified as her preferred message format.

Participant P5 used vertical eye movements for binary selection as his primary method of communication (e.g., up for yes, down for no) and had previous experience with a communication board. As a result of a brainstem stroke, participant P5 was unable to make any horizontal eye movements, and his performance in the SSVEP task was consistent with this oculomotor limitation. In addition, participant P5 reported good hearing sensitivity and was able to follow multi-step directions. Therefore, although the SSVEP interface may not be optimal for supporting his communication needs, an alternative BCI based on auditory stimulation or motor-based control may be appropriate. Additional testing is recommended to select a BCI from one of these two modalities.

5. Limitations

This pilot study investigated how well individuals across a range of neuromotor disorders were able to use an SSVEP-based BCI. As such, the chosen parameters represent the first step in an iterative process to fully examine sensory ability for feature matching assessment in BCI selection. First, the decision to keep each stimulation frequency fixed to a specific direction (e.g., 15 Hz was always [right]) limits the interpretability of the directional results, because frequency and location effects cannot be separated. However, the fact that performance decreased in line with known visual deficits helps to mitigate this potential confound. The participant with progressive supranuclear palsy is a clear example: a specific deficit in the lower visual field is associated with this disorder, and participant P4 was unable to attend sufficiently to the [down] stimulus. Second, the inclusion of participants with a range of neuromotor disorders reduces the explanatory power for any individual disorder. However, expanding beyond the typical populations who may use BCI is important for translating this technology into clinical practice, and our results warrant future study of such heterogeneous populations in greater detail. Third, this study focused on single-session results and did not include any adaptation based on the observed directional performance. Future studies should investigate the effects of graphical display adaptation (cf. [29,42]) over multiple sessions.
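The frequency-location confound noted above could be addressed in a follow-up protocol by rotating frequency-to-direction assignments across blocks. A minimal sketch, assuming a cyclic Latin square design (our illustrative choice, not part of this study's protocol): each block is a permutation of the frequencies, and across blocks every frequency appears at every direction exactly once. Only the 15 Hz value appears in the text; the other three frequencies are placeholders, and `latin_square_mappings` is a hypothetical helper, not part of the software used here.

```python
DIRECTIONS = ["up", "down", "left", "right"]


def latin_square_mappings(frequencies, directions=DIRECTIONS):
    """Return one direction->frequency mapping per block (cyclic Latin square).

    Block `shift` assigns frequencies[(i + shift) % n] to directions[i], so
    across the n blocks every frequency is shown at every direction once.
    """
    n = len(directions)
    if len(frequencies) != n:
        raise ValueError("need exactly one frequency per direction")
    return [
        {directions[i]: frequencies[(i + shift) % n] for i in range(n)}
        for shift in range(n)
    ]


# Placeholder frequencies (Hz); only 15 Hz is taken from the text, where it was
# always presented on the right. Block 0 keeps that original assignment.
FREQS_HZ = [12.0, 13.0, 14.0, 15.0]

for block, mapping in enumerate(latin_square_mappings(FREQS_HZ)):
    print(block, mapping)
```

A cyclic square balances location against frequency but not the order in which blocks are run; if carryover between blocks is a concern, a Williams design or a randomized block order could be used instead.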

6. Conclusion

The present study provides a glimpse into the short-term (one-session) BCI performance of individuals with significant neuromotor impairments in an everyday environment. The study procedures and results could potentially be generalized into a practical screening protocol for selecting SSVEP-based BCI techniques for accessing AAC by individuals with neuromotor impairments. A fundamental clinical practice in AAC is feature matching, in which devices are selected for possible intervention based on an individual’s current and future profile [3,13,15]; this process is often accompanied by practice trials with several potential communication devices. In these practice sessions, the client can test devices with relatively similar feature matching profiles and select among them based on preference, performance, and motivation. Translating this clinical framework to BCI practice is important [2,12], given inter- and intra-subject variability in BCI performance [47] and the fact that each individual may perceive the same BCI system differently [48].

In the current study, most participants showed more positive overall feelings toward BCI, and greater excitement for the technology, after a single session. This finding corroborates past evidence of increased motivation after using BCIs [49]. However, an individual’s level of interest in a BCI system may be influenced by their perceived performance [50], which may have been a factor for P5. Taking individual preferences into account along with objective measures of BCI operation will likely foster motivation and positive feelings toward BCI, and thereby increase acceptance. Buy-in and proper initial selection of a combined AAC-BCI device are critically important, as proper selection can lead to long-term performance gains and eventual everyday use. Poor selection is equally consequential and can lead to patient frustration and device abandonment [13]. The procedures described here for evaluating SSVEP-BCI performance can augment other evaluation parameters, such as the visual capacity, cognitive status, attention, and working memory needed for BCI control (cf. [46,51-53]). Overall, these results reiterate the need for comprehensive physical, sensory, and neurological assessment when matching BCI systems to individuals who require AAC (e.g., [9,11,12]). In addition, these systems should be flexible enough to support individualized modifications that maximize users' chances of success. This proof-of-concept study with a heterogeneous participant pool demonstrated the feasibility of SSVEP-based BCI as an input modality for accessing AAC systems.

Acknowledgments

We would like to thank all of the participants for their time and support of this research.

Funding

This work was supported in part by the National Institutes of Health under Grant NIDCD R03-DC011304; and CELEST, a National Science Foundation Science of Learning Center under Grant NSF SMA-0835976.

ABBREVIATIONS

- BCI: brain-computer interface
- AAC: augmentative and alternative communication
- SSVEP: steady-state visually evoked potential
- LIS: locked-in syndrome
- ALS: amyotrophic lateral sclerosis

Footnotes

Disclosure statement

The authors report no conflicts of interest.

References

- 1. Wolpaw JR, Birbaumer N, McFarland DJ, et al. Brain-computer interfaces for communication and control. Clin Neurophysiol. 2002;113(6):767–91. doi: 10.1016/s1388-2457(02)00057-3.

- 2. Brumberg JS, Pitt KM, Mantie-Kozlowski A, et al. Brain-computer interfaces for augmentative and alternative communication: A tutorial. Am J Speech-Lang Pat. 2018:1–12. doi: 10.1044/2017_AJSLP-16-0244.

- 3. Beukelman D, Mirenda P. Augmentative and alternative communication: Supporting children and adults with complex communication needs. 4th ed. Paul H. Brookes Publishing Co.; 2013.

- 4. Higginbotham DJ, Shane H, Russell S, et al. Access to AAC: present, past, and future. Augment Altern Commun. 2007;23(3):243–57. doi: 10.1080/07434610701571058.

- 5. Fager S, Beukelman DR, Fried-Oken M, et al. Access Interface Strategies. Assist Technol. 2012;24(1):25–33. doi: 10.1080/10400435.2011.648712.

- 6. Plum F, Posner JB. The diagnosis of stupor and coma. Contemp Neurol Ser. 1972;10:1–286.

- 7. Bauer G, Gerstenbrand F, Rumpl E. Varieties of the locked-in syndrome. J Neurol. 1979;221(2):77–91. doi: 10.1007/BF00313105.

- 8. Brumberg JS, Guenther FH. Development of speech prostheses: current status and recent advances. Expert Rev Med Devices. 2010;7(5):667–79. doi: 10.1586/erd.10.34.

- 9. Fried-Oken M, Mooney A, Peters B, et al. A clinical screening protocol for the RSVP Keyboard brain-computer interface. Disabil Rehabil Assist Technol. 2013;10(1):11–18. doi: 10.3109/17483107.2013.836684.

- 10. Oken BS, Orhan U, Roark B, et al. Brain-computer interface with language model-electroencephalography fusion for locked-in syndrome. Neurorehabil Neural Repair. 2014;28(4):387–94. doi: 10.1177/1545968313516867.

- 11. Kübler A, Holz EM, Sellers EW, et al. Toward independent home use of brain-computer interfaces: A decision algorithm for selection of potential end-users. Arch Phys Med Rehabil. 2015;96(3):S27–S32. doi: 10.1016/j.apmr.2014.03.036.

- 12. Pitt KM, Brumberg JS. Guidelines for Feature Matching Assessment of Brain-Computer Interfaces for Augmentative and Alternative Communication. Am J Speech-Lang Pat. doi: 10.1044/2018_AJSLP-17-0135. in review.

- 13. Light J, McNaughton D. Putting people first: re-thinking the role of technology in augmentative and alternative communication intervention. Augment Altern Commun. 2013;29(4):299–309. doi: 10.3109/07434618.2013.848935.

- 14. Gosnell J, Costello J, Shane H. Using a clinical approach to answer “what communication Apps should we use?” Perspect Augment Altern Commun. 2011:87–96.

- 15. Thistle JJ, Wilkinson KM. Building Evidence-based Practice in AAC Display Design for Young Children: Current Practices and Future Directions. Augment Altern Commun. 2015;31(2):124–36. doi: 10.3109/07434618.2015.1035798.

- 16. Sutter EE. The brain response interface: communication through visually-induced electrical brain responses. J Microcomput Appl. 1992;15(1):31–45.

- 17. Donchin E, Spencer KM, Wijesinghe R. The mental prosthesis: assessing the speed of a P300-based brain-computer interface. IEEE Trans Rehabil Eng. 2000;8(2):174–179. doi: 10.1109/86.847808.

- 18. Hill NJ, Ricci E, Haider S, et al. A practical, intuitive brain-computer interface for communicating ’yes’ or ’no’ by listening. J Neural Eng. 2014;11(3):035003. doi: 10.1088/1741-2560/11/3/035003.

- 19. Halder S, Rea M, Andreoni R, et al. An auditory oddball brain-computer interface for binary choices. Clin Neurophysiol. 2010;121(4):516–523. doi: 10.1016/j.clinph.2009.11.087.

- 20. Blankertz B, Dornhege G, Krauledat M, et al. The Berlin Brain-Computer Interface: EEG-based communication without subject training. IEEE Trans Neural Syst Rehabil Eng. 2006;14(2):147–52. doi: 10.1109/TNSRE.2006.875557.

- 21. Neuper C, Müller-Putz GR, Scherer R, et al. Motor imagery and EEG-based control of spelling devices and neuroprostheses. Prog Brain Res. 2006;159:393–409. doi: 10.1016/S0079-6123(06)59025-9.

- 22. Brumberg JS, Burnison JD, Pitt KM. Using motor imagery to control brain-computer interfaces for communication. In: Foundations of Augmented Cognition: Neuroergonomics and Operational Neuroscience. Switzerland: Springer International Publishing; 2016.

- 23. Light J. Toward a definition of communicative competence for individuals using augmentative and alternative communication systems. Augment Altern Commun. 1989;5:137–144.

- 24. Hill K, Kovacs T, Shin S. Critical Issues Using Brain-Computer Interfaces for Augmentative and Alternative Communication. Arch Phys Med Rehabil. 2015;96(3):S8–S15. doi: 10.1016/j.apmr.2014.01.034.

- 25. Middendorf M, McMillan G, Calhoun G, et al. Brain-computer interfaces based on the steady-state visual-evoked response. IEEE Trans Rehabil Eng. 2000;8(2):211–214. doi: 10.1109/86.847819.

- 26. Cheng M, Gao X, Gao S, et al. Design and implementation of a brain-computer interface with high transfer rates. IEEE Trans on Biomed Eng. 2002;49(10):1181–1186. doi: 10.1109/tbme.2002.803536.

- 27. Kelly SP, Lalor EC, Finucane C, et al. Visual spatial attention control in an independent brain-computer interface. IEEE Trans on Biomed Eng. 2005;52(9):1588–1596. doi: 10.1109/TBME.2005.851510.

- 28. Kelly SP, Lalor EC, Reilly RB, et al. Visual spatial attention tracking using high-density SSVEP data for independent brain-computer communication. IEEE Trans Neural Syst Rehabil Eng. 2005;13(2):172–178. doi: 10.1109/TNSRE.2005.847369.

- 29. Allison BZ, McFarland DJ, Schalk G, et al. Towards an independent brain-computer interface using steady state visual evoked potentials. Clin Neurophysiol. 2008;119(2):399–408. doi: 10.1016/j.clinph.2007.09.121.

- 30. Zhu D, Bieger J, Garcia Molina G, et al. A survey of stimulation methods used in SSVEP-based BCIs. Comput Intell Neurosci. 2010;2010:702357. doi: 10.1155/2010/702357.

- 31. Regan D. Human Brain Electrophysiology: Evoked Potentials and Evoked Magnetic Fields in Science and Medicine. New York: Elsevier; 1989.

- 32. Regan D. Steady-state evoked potentials. J Opt Soc Am. 1977;67(11):1475. doi: 10.1364/josa.67.001475.

- 33. Müller-Putz GR, Scherer R, Brauneis C, et al. Steady-state visual evoked potential (SSVEP)-based communication: impact of harmonic frequency components. J Neural Eng. 2005;2(4):123–130. doi: 10.1088/1741-2560/2/4/008.

- 34. Lotte F, Congedo M, Lécuyer A, et al. A review of classification algorithms for EEG-based brain-computer interfaces. J Neural Eng. 2007;4(2):R1–R13. doi: 10.1088/1741-2560/4/2/R01.

- 35. Lin Z, Zhang C, Wu W, et al. Frequency recognition based on canonical correlation analysis for SSVEP-Based BCIs. IEEE Trans Biomed Eng. 2007;54(6):1172–1176. doi: 10.1109/tbme.2006.889197.

- 36. Chen X, Wang Y, Gao S, et al. Filter bank canonical correlation analysis for implementing a high-speed SSVEP-based brain-computer interface. J Neural Eng. 2015;12(4):046008. doi: 10.1088/1741-2560/12/4/046008.

- 37. Brunner P, Joshi S, Briskin S, et al. Does the ’P300’ speller depend on eye gaze? J Neural Eng. 2010;7(5):056013. doi: 10.1088/1741-2560/7/5/056013.

- 38. Müller MM, Andersen S, Trujillo NJ, et al. Feature-selective attention enhances color signals in early visual areas of the human brain. Proc Natl Acad Sci USA. 2006;103(38):14250–4. doi: 10.1073/pnas.0606668103.

- 39. Walter S, Quigley C, Andersen SK, et al. Effects of overt and covert attention on the steady-state visual evoked potential. Neurosci Lett. 2012;519(1):37–41. doi: 10.1016/j.neulet.2012.05.011.

- 40. Lesenfants D, Partoune N, Soddu A, et al. Design of a novel covert SSVEP-based BCI. Proceedings of the 5th International Brain-Computer Interface Conference; 2011; University of Technology Publishing House; 2011.

- 41. Zhang D, Maye A, Gao X, et al. An independent brain–computer interface using covert non-spatial visual selective attention. J Neural Eng. 2010;7(1):016010. doi: 10.1088/1741-2560/7/1/016010.

- 42. Lesenfants D, Habbal D, Lugo Z, et al. An independent SSVEP-based brain–computer interface in locked-in syndrome. J Neural Eng. 2014;11(3):035002. doi: 10.1088/1741-2560/11/3/035002.

- 43. Jasper HH. The 10-20 electrode system of the International Federation. Electroencephalogr Clin Neurophysiol. 1958;10:371–375.

- 44. Müller-Putz GR, Eder E, Wriessnegger SC, et al. Comparison of DFT and lock-in amplifier features and search for optimal electrode positions in SSVEP-based BCI. J Neurosci Methods. 2008;168(1):174–181. doi: 10.1016/j.jneumeth.2007.09.024.

- 45. R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2015.

- 46. Allison B, Lüth T, Valbuena D, et al. BCI demographics: How many (and what kinds of) people can use an SSVEP BCI? IEEE Trans Neural Syst Rehabil Eng. 2010;18(2):107–116. doi: 10.1109/TNSRE.2009.2039495.

- 47. Ahn M, Jun SC. Performance variation in motor imagery brain–computer interface: A brief review. J Neurosci Methods. 2015;243:103–110. doi: 10.1016/j.jneumeth.2015.01.033.

- 48. Peters B, Mooney A, Oken B, et al. Soliciting BCI user experience feedback from people with severe speech and physical impairments. Brain-Computer Interfaces. 2016;3(1):47–58. doi: 10.1080/2326263x.2015.1138056.

- 49. Nijboer F, Birbaumer N, Kübler A. The influence of psychological state and motivation on brain-computer interface performance in patients with amyotrophic lateral sclerosis - a longitudinal study. Front Neurosci. 2010;4:1–13. doi: 10.3389/fnins.2010.00055.

- 50. Geronimo A, Stephens HE, Schiff SJ, et al. Acceptance of brain-computer interfaces in amyotrophic lateral sclerosis. Amyotroph Lat Scl Fr. 2015;16(3-4):258–264. doi: 10.3109/21678421.2014.969275.

- 51. Riccio A, Simione L, Schettini F, et al. Attention and P300-based BCI performance in people with amyotrophic lateral sclerosis. Front Hum Neurosci. 2013;7:732. doi: 10.3389/fnhum.2013.00732.

- 52. Sprague SA, McBee MT, Sellers EW. The effects of working memory on brain-computer interface performance. Clin Neurophysiol. 2016;127(2):1331–1341. doi: 10.1016/j.clinph.2015.10.038.

- 53. Geronimo AM, Jin Q, Schiff SJ, et al. Performance predictors of brain-computer interfaces in patients with amyotrophic lateral sclerosis. J Neural Eng. 2016;13(2):026002. doi: 10.1088/1741-2560/13/2/026002.