Abstract

Previous studies have demonstrated differential perception of body expressions between males and females. However, only two recent studies (Kret et al., 2011; Krüger et al., 2013) explored the interaction effect between observer gender and subject gender, and it remains unclear whether this interaction between the two gender factors is gender-congruent (i.e., better recognition of emotions expressed by subjects of the same gender) or gender-incongruent (i.e., better recognition of emotions expressed by subjects of the opposite gender). Here, we used event-related potentials (ERPs) to investigate the recognition of fearful and angry body expressions posed by males and females. Male and female observers also completed an affective rating task (including valence, intensity, and arousal ratings). Behavioral results showed that male observers reported higher arousal rating scores for angry body expressions posed by females than for those posed by males. ERP data showed that when recognizing angry body expressions, female observers had larger P1 for male than female bodies, while male observers had larger P3 for female than male bodies. These results indicate gender-incongruent effects in early and later stages of body expression processing, which fits well with the evolutionary theory that females mainly play a role in care of offspring while males mainly play a role in family guarding and protection. Furthermore, we found that in both angry and fearful conditions male observers exhibited a larger N170 for male than female bodies, and female observers showed a larger N170 for female than male bodies. This gender-congruent effect in the structural encoding stage of processing may be due to the familiarity of the body configural features of the same gender.
The current results provide insights into the significant role of gender in body expression processing, helping us understand the issue of gender vulnerability associated with psychiatric disorders characterized by deficits of body language reading.

Keywords: emotion, body expression, gender difference, affective arousal, event-related potentials

Introduction

Successful social interaction requires correctly decoding and recognizing emotional signals from the human body (Krüger et al., 2013; Enea and Iancu, 2016), which is an important medium of emotional communication (De Meijer, 1989; de Gelder, 2009; de Gelder et al., 2010). Compared with the face, the body is considered a more reliable carrier of affective information (Aviezer et al., 2012). When emotions expressed by faces and bodies are incongruent, the emotions conveyed by the body can bias the perception and recognition of facially expressed emotions (Meeren et al., 2005; Van den Stock et al., 2007; Aviezer et al., 2012).

It is noticeable that the processing of emotions expressed by the body is strongly affected by gender differences (Trnka et al., 2007; Aleong and Paus, 2010; Alaerts et al., 2011; Kret et al., 2011; Krüger et al., 2013). For the gender of the observer of body expressions, studies have demonstrated that females are better in recognition of hostile, angry body actions than males, whereas males surpass females in recognition of happy body expressions (Sokolov et al., 2011; Krüger et al., 2013). In addition, Kleinsmith et al. (2006) found shorter response times in female compared to male observers when they recognized emotion from avatar postures, highlighting that the gender of the observer did influence the processing of body expressions. For the gender of the subjects of body expressions, it is believed that males are stereotypically angrier than females, whereas females are more fearful than males (Plant et al., 2000; Kret and de Gelder, 2013; Zibrek et al., 2015). In addition, Johnson et al. (2011) found that subjects with angry body expression were largely judged to be male whereas subjects with sad body expression were more likely to be judged as female, indicating that gender stereotyping affects the decoding of emotional body expressions. However, while previous studies have provided valuable understanding of the influence of gender differences of either observers or subjects of body expressions, the interaction of the two gender factors has only been preliminarily investigated in two studies. Using subtle emotions displayed by point-light locomotion, Krüger et al. (2013) found that male observers had higher recognition accuracy than female observers for happy body expressions portrayed by females, whereas female observers exhibited a tendency to show better performance than male observers for angry body expressions portrayed by males. 
However, caution should be exercised in generalizing these findings because the gender information conveyed by point-light pictures is very limited (Pollick et al., 2005). Another study by Kret et al. (2011) presented movie clips of fearful and angry body expressions to participants, finding increased activity in brain regions including the extrastriate body area (EBA), superior temporal sulcus, and fusiform gyrus in male observers when they attended to male threatening vs. neutral body expressions. Therefore, the two previous studies of observer and subject gender factors obtained opposite results: Kret et al. (2011) reported a better recognition of emotions expressed by subjects of the same gender, while Krüger et al. (2013) reported a better recognition of emotions expressed by the opposite gender. Furthermore, the two studies used stimuli that contained motion information. This may induce different cognitive processes between male and female observers that are due to motion rather than emotion (e.g., females perform better than males in recognition of non-emotional actions; Alaerts et al., 2011; Sokolov et al., 2011).

To address these issues, the current study used static pictures of body expressions to exclude the potential confounding factor of motion processing (Alaerts et al., 2011; Volkova et al., 2014). In light of previous related studies (Kret et al., 2011; Krüger et al., 2013), it is hoped that this study could further reveal whether an interaction effect of observer and subject gender for body expressions is gender-congruent (i.e., better recognition of emotions expressed by subjects of the same gender) or gender-incongruent (i.e., better recognition of emotions expressed by the opposite gender). In addition to behavioral measures, this study employed event-related potentials (ERPs) to provide neural correlates of gender effects upon body expression processing with high temporal resolution. We were interested in four ERP components that are associated with visual perception and recognition of body expressions. The first is the occipital P1: an early emotional effect on P1 has been revealed for angry and fearful body expressions, demonstrating a rapid detection of threatening body emotions at the earliest stage of visual processing (Meeren et al., 2005; van Heijnsbergen et al., 2007). The second component is the frontal N1, which is associated with early attention allocation (Naatanen, 1988; Hsu et al., 2015). The third component is the occipito-temporal N170, which is generated from the EBA and is an index of early configural encoding of body images and could be modulated by body expressions (Stekelenburg and de Gelder, 2004; Gliga and Dehaene-Lambertz, 2005; Meeren et al., 2005; Righart and de Gelder, 2007; Taylor et al., 2010). The fourth component is the parietal P3: a recent study demonstrated that this component is influenced by top-down attention and affective arousal due to body expressions (Hietanen et al., 2014). 
Given the evidence that females are more sensitive to threatening information than males (female negativity bias; Lithari et al., 2010; Gardener et al., 2013), and that threatening body expressions performed by males are potentially more harmful (Kret et al., 2011), we hypothesized an interaction of the two gender factors; that is, females would show a heightened sensitivity to threatening body emotion expressed by males. Accordingly, interaction effects were also expected in the ERP components P1, N1, N170 and P3. However, no detailed hypothesis was formulated with regard to the ERP findings due to the absence of prior studies on this specific topic.

Materials and Methods

Participants

Forty undergraduates (20 females) aged 18–26 years participated in this study. All were right-handed and had normal vision (with or without correction). All participants provided written informed consent prior to the experiment. The experimental protocol was approved by the Ethics Committee of Shenzhen University and was in compliance with the 1964 Helsinki Declaration and its later amendments, and this study was performed in strict accordance with the approved guidelines.

Stimuli

The body stimuli were adapted from a validated stimulus set, i.e., the Bodily Expressive Action Stimulus Test (BEAST; de Gelder and Van den Stock, 2011). A total of 40 pictures of body expressions (20 fearful and 20 angry) were used. The number of actors and actresses was equal in fearful and angry pictures. These two emotions were selected because they are both negative emotions associated with evolutionarily relevant threat situations (Meeren et al., 2005), with fear representing the potential threat and danger in the environment and anger signifying a direct threat and aggression (Marsh et al., 2005; Leppaenen and Nelson, 2012; Borgomaneri et al., 2015). The two emotions are evolutionarily meaningful and may show a significant level of gender difference. The 40 pictures were selected from BEAST because they had comparable ratings of valence (t(38) = 1.1, p = 0.26; mean ± standard deviation: anger = 2.9 ± 0.5, fear = 2.7 ± 0.5) and arousal (t(38) = −0.9, p = 0.38; anger = 5.9 ± 1.3, fear = 6.3 ± 1.0) according to a previous survey (on a 9-point scale) of another 40 Chinese participants. All stimuli (2.0° × 5°) were presented centrally in grayscale on a white background with the same contrast and brightness.
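The comparability check on the selected pictures can be approximated from the reported summary statistics alone. The sketch below assumes an independent-samples t-test with pooled variance over 20 pictures per emotion, which is consistent with the reported 38 degrees of freedom but is not stated explicitly in the text. The function name is illustrative. Because the inputs are rounded means and standard deviations, the output only approximates the reported t values.

```python
import math


def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Independent-samples t statistic (pooled variance) from summary statistics.

    Returns (t, degrees_of_freedom).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


# Rounded values taken from the text; 20 pictures per emotion is an assumption.
t_valence, df_valence = pooled_t_from_summary(2.9, 0.5, 20, 2.7, 0.5, 20)  # anger vs. fear
t_arousal, df_arousal = pooled_t_from_summary(5.9, 1.3, 20, 6.3, 1.0, 20)  # anger vs. fear
```

Both statistics stay well below the two-tailed critical value of roughly 2.02 for 38 degrees of freedom, in line with the reported non-significant differences.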

Procedure

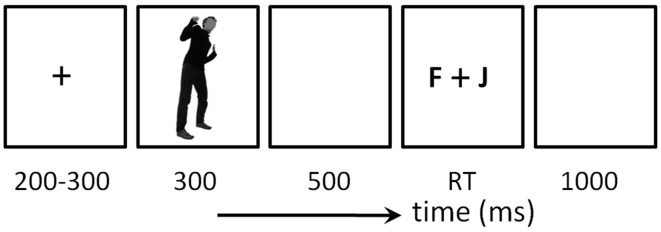

As shown in Figure 1, stimuli were presented for 300 ms. Participants were required to discriminate, as accurately and rapidly as possible, the category of each body expression (fear or anger; faces were masked) by pressing the “F” or “J” button on the computer keyboard with their left or right index finger. All body pictures were presented three times in a random order, resulting in 30 trials in each condition. The experiment had a total of 240 trials (30 trials × two emotional types × two genders of observers × two genders of actors/actresses).
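The trial arithmetic can be made concrete with a short sketch. It assumes 10 pictures per emotion × actor-gender cell (the text states only that actors and actresses were equally represented within each emotion) and a simple shuffle for the random order. All names are hypothetical.

```python
import random
from collections import Counter

EMOTIONS = ("fear", "anger")
ACTOR_GENDERS = ("male", "female")
PICTURES_PER_CELL = 10  # assumption: 20 pictures per emotion, half per actor gender
REPETITIONS = 3         # each picture is presented three times


def build_trial_list(seed=None):
    """Return a shuffled list of (emotion, actor_gender, picture_index) trials."""
    trials = [
        (emotion, gender, picture)
        for emotion in EMOTIONS
        for gender in ACTOR_GENDERS
        for picture in range(PICTURES_PER_CELL)
        for _ in range(REPETITIONS)
    ]
    random.Random(seed).shuffle(trials)
    return trials


trials = build_trial_list(seed=0)
# Number of trials in each emotion x actor-gender cell for one observer.
cell_counts = Counter((emotion, gender) for emotion, gender, _ in trials)
```

Under these assumptions each observer completes 120 trials (4 within-subject cells × 30 trials). The figure of 240 in the text matches 30 trials multiplied across all eight cells of the full design, including the between-subject factor of observer gender.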

Figure 1.

Illustration of one experimental trial in this study.

After the EEG experiment, participants completed an affective rating task, in which the valence, emotional intensity and arousal of each picture were rated on a 9-point scale.

Data Recording and Analysis

Brain electrical activity was recorded with a 64-channel amplifier (Brain Products, Gilching, Germany) using a standard 10-20 system, referenced online to the left mastoid and re-referenced offline to the average reference. EEG data were collected with electrode impedances kept below 5 kΩ. Ocular artifacts were removed from EEGs using a regression procedure implemented in NeuroScan software (Scan 4.3).
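Re-referencing to the average reference amounts to subtracting, at each time point, the mean voltage across channels from every channel. The following is a minimal sketch of that operation on plain lists, not the software pipeline used in the study. The text does not say whether the original reference site was included in the average, so the sketch averages only the channels it is given. The toy recording is hypothetical.

```python
def rereference_to_average(data):
    """Subtract the across-channel mean from every channel at each time point.

    data: list of channels, each a list of voltages of equal length.
    """
    n_channels = len(data)
    n_samples = len(data[0])
    channel_mean = [
        sum(channel[t] for channel in data) / n_channels for t in range(n_samples)
    ]
    return [[channel[t] - channel_mean[t] for t in range(n_samples)] for channel in data]


# Hypothetical toy recording: 3 channels x 4 samples.
recording = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 2.0, 2.0, 2.0],
    [3.0, 5.0, 1.0, 0.0],
]
rereferenced = rereference_to_average(recording)
```

After this step the channels sum to zero at every sample, which is the defining property of an average reference.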

The data analysis and result display in this study were performed using Matlab R2011a (MathWorks, Natick, MA, USA). The recorded EEG data were filtered (0.01–30 Hz) and segmented beginning 200 ms prior to the onset of stimulus and lasting for 800 ms. All epochs were baseline-corrected with respect to the mean voltage over the 200 ms preceding the onset of stimulus. Epochs from behaviorally correct trials were then averaged separately for each experimental condition.
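The segmentation, baseline correction and averaging steps can be sketched as follows. This is an illustration in plain Python rather than the Matlab code used in the study. The sampling rate is not given in the text, so the 100 Hz used in the toy example is an assumption, as is the epoch end at 800 ms post stimulus (inferred from the P3 measurement window). Band-pass filtering is omitted, and all function names are hypothetical.

```python
def extract_epoch(signal, onset_index, sfreq, tmin=-0.2, tmax=0.8):
    """Cut one epoch around a stimulus onset and subtract the pre-stimulus mean."""
    start = onset_index + round(tmin * sfreq)
    stop = onset_index + round(tmax * sfreq)
    if start < 0 or stop > len(signal):
        raise ValueError("epoch extends beyond the recording")
    epoch = signal[start:stop]
    n_baseline = onset_index - start  # samples before stimulus onset
    baseline = sum(epoch[:n_baseline]) / n_baseline
    return [value - baseline for value in epoch]


def average_correct_trials(epochs, correct):
    """Average epochs sample by sample, keeping only behaviorally correct trials."""
    kept = [epoch for epoch, is_correct in zip(epochs, correct) if is_correct]
    if not kept:
        raise ValueError("no correct trials to average")
    return [sum(samples) / len(kept) for samples in zip(*kept)]


# Toy signal at an assumed 100 Hz: flat at 5 uV, stepping to 7 uV at stimulus onset.
signal = [5.0] * 50 + [7.0] * 100
epoch = extract_epoch(signal, onset_index=50, sfreq=100)
```

In the toy example the pre-stimulus samples become zero after correction and the post-stimulus samples become 2 µV, so amplitudes are expressed relative to the 200 ms baseline.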

We analyzed the amplitudes and latencies of the occipital P1, the frontal N1, the occipito-temporal N170 and the parietal P3 across different sets of electrodes according to the grand-mean ERP topographies and relevant literature (Stekelenburg and de Gelder, 2004; van Heijnsbergen et al., 2007; Jessen and Kotz, 2011; Gu et al., 2013). The P1 was measured using the peak amplitude and peak latency at electrode sites of O1 and PO3 for the left hemisphere, and at O2 and PO4 for the right hemisphere (time window = 90–130 ms post stimulus). The N1 was measured using the peak amplitude and peak latency at electrode sites of FCz, FC1 and FC2 between 90 ms and 130 ms. The N170 was measured using the peak amplitude and peak latency at electrode sites of P7 and PO7 for the left hemisphere, and at P8 and PO8 for the right hemisphere (time window = 150–200 ms). The P3 was measured using the average amplitude at electrode sites of Pz, P1 and P2 between 350 ms and 800 ms post stimulus.
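The two kinds of measurement described above (peak amplitude and latency for P1, N1 and N170; mean amplitude for P3) can be sketched on a single averaged waveform. The time windows come from the text. The function names, the toy waveform and its 10 ms sampling step are hypothetical. How values were combined across the listed electrodes is not specified in the text and is not modeled here.

```python
def peak_in_window(erp, times_ms, t_start, t_end, polarity):
    """Return (peak_amplitude, peak_latency_ms) within [t_start, t_end].

    polarity: +1 for positive components (P1), -1 for negative ones (N1, N170).
    """
    candidates = [(v, t) for v, t in zip(erp, times_ms) if t_start <= t <= t_end]
    if not candidates:
        raise ValueError("no samples inside the requested window")
    return max(candidates, key=lambda pair: polarity * pair[0])


def mean_amplitude(erp, times_ms, t_start, t_end):
    """Return the average voltage within [t_start, t_end]."""
    values = [v for v, t in zip(erp, times_ms) if t_start <= t <= t_end]
    if not values:
        raise ValueError("no samples inside the requested window")
    return sum(values) / len(values)


# Hypothetical toy waveform sampled every 10 ms from -200 ms to 790 ms.
times_ms = list(range(-200, 800, 10))
toy_peaks = {100: 3.0, 110: 6.0, 120: 3.0, 170: -4.0}
erp = [toy_peaks.get(t, 5.0 if t >= 350 else 0.0) for t in times_ms]

p1_amplitude, p1_latency = peak_in_window(erp, times_ms, 90, 130, polarity=+1)
n170_amplitude, n170_latency = peak_in_window(erp, times_ms, 150, 200, polarity=-1)
p3_amplitude = mean_amplitude(erp, times_ms, 350, 800)
```

Using a mean rather than a peak for the P3 suits a broad, slow component whose maximum is poorly defined within a 450 ms window.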

Statistics

Statistical analysis was performed using SPSS Statistics 20.0 (IBM, Somers, NY, USA). Descriptive data were presented as mean ± standard deviation. The significance level was set at 0.05.

A three-way repeated-measures analysis of variance (ANOVA) was performed on measurements of behavioral and ERP data, with emotion (fear and anger) and gender of body expression (male actor and female actress) as the within-subject factors, and gender of observer (male and female subject) as the between-subject factor. For the measurements of P1 and N170 components, a four-way repeated-measures ANOVA was performed, adding hemisphere (left and right) as another within-subject factor. Significant interactions were followed up with simple effects analyses. Partial eta-squared was reported as the measure of effect size.
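Partial eta-squared can be recovered from an F ratio and its degrees of freedom, since SS_effect / (SS_effect + SS_error) equals F·df_effect / (F·df_effect + df_error). The short sketch below applies this identity to F values reported in the Results as a consistency check. The function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta-squared computed from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values taken from the Results, all with (1, 38) degrees of freedom.
eta_arousal_emotion = partial_eta_squared(5.61, 1, 38)       # arousal, main effect of emotion
eta_arousal_body_gender = partial_eta_squared(7.58, 1, 38)   # arousal, gender of body expression
eta_n170_hemisphere = partial_eta_squared(12.9, 1, 38)       # N170 amplitude, hemisphere
```

Rounded to three decimals these give 0.129, 0.166 and 0.253, matching the effect sizes reported alongside the corresponding F tests.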

Results

For the sake of brevity, this section only reports significant findings.

Affective Rating Task

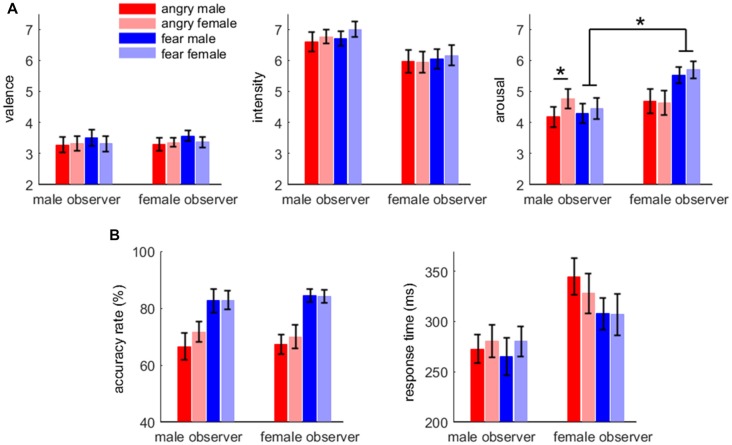

For arousal rating, the main effect of emotion was significant (F(1,38) = 5.61, p = 0.023, ηp² = 0.129); fearful expressions (5.0 ± 1.5) were evaluated as more arousing than angry expressions (4.6 ± 1.6). The main effect of gender of body expression was significant (F(1,38) = 7.58, p = 0.009, ηp² = 0.166); body expressions of females (4.9 ± 1.5) were evaluated as more arousing than those of males (4.7 ± 1.5). More importantly, the interaction effect between emotion and gender of observer was significant (F(1,38) = 8.51, p = 0.006, ηp² = 0.183); while female observers (5.6 ± 1.2) rated fearful body expressions as more arousing than male observers did (4.4 ± 1.5; F(1,38) = 8.89, p = 0.005), this difference between male and female observers was not observed for angry body expressions (F < 1). Furthermore, the interaction effect of emotion × gender of observer × gender of body expression was significant (F(1,38) = 4.96, p = 0.032, ηp² = 0.115). While male observers evaluated female angry expressions (4.8 ± 1.4) as more arousing than male angry expressions (4.2 ± 1.5; F(1,38) = 16.6, p < 0.002), the difference in arousal between male and female expressions was not observed for the other conditions.

For valence (3.4 ± 1.0) and intensity (6.4 ± 1.4), no significant difference was found across conditions. The affective rating results are shown in Figure 2A.

Figure 2.

Affective rating (A) and behavioral results (B). Bars represent ± standard error of the mean. Significant interaction effect is denoted by an asterisk.

Emotion Discrimination Task: Behavioral Data

For accuracy rate, the main effect of emotion was significant (F(1,38) = 29.2, p < 0.001, ηp² = 0.434); the accuracy rate for fearful body expressions (83.5 ± 13.8%) was higher than that for angry body expressions (68.9 ± 17.7%).

For response time, the main effect of gender of observer was significant (F(1,38) = 4.65, p = 0.037, ηp² = 0.109); male observers (275 ± 70 ms) responded more quickly than female observers (322 ± 83 ms). The main effect of emotion was significant (F(1,38) = 5.74, p = 0.022, ηp² = 0.131); the response for fearful body expressions (290 ± 78 ms) was faster than that for angry body expressions (306 ± 81 ms). The behavioral results are shown in Figure 2B.

Emotion Discrimination Task: ERP Data

P1 Component

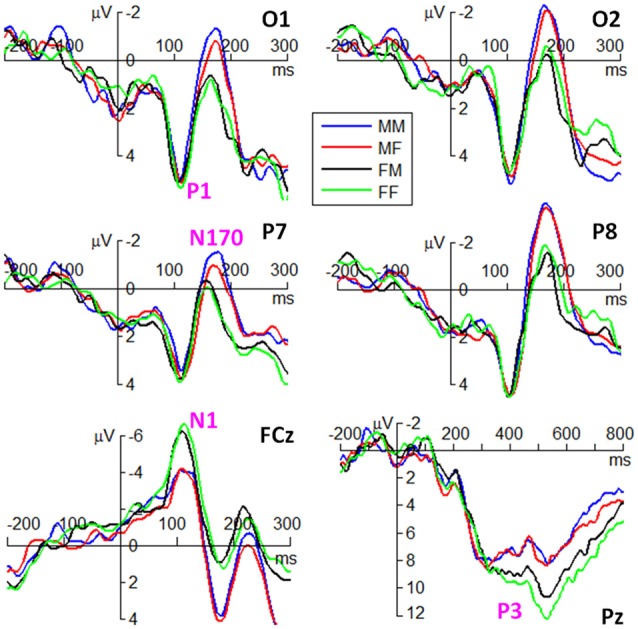

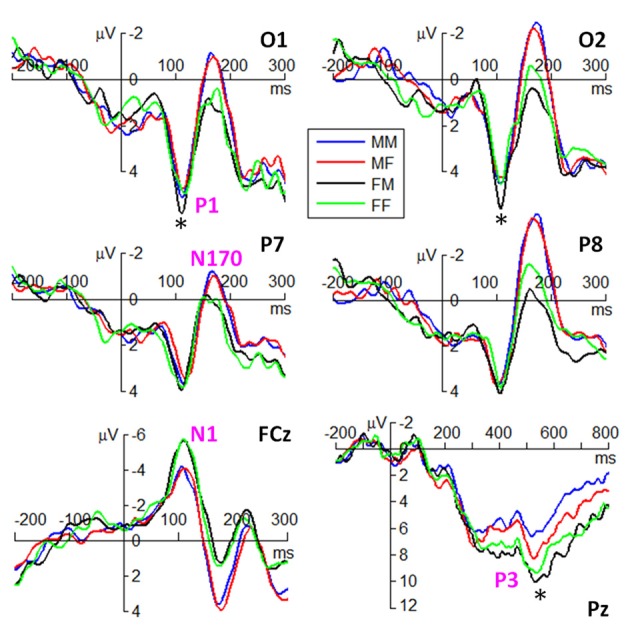

ERP waveforms of the four components are shown in Figures 3 and 4 for the fear and anger conditions, respectively. For peak amplitude, the interaction effect of emotion × gender of observer × gender of body expression was marginally significant (F(1,38) = 4.09, p = 0.050, ηp² = 0.097). While larger P1 was evoked by angry body expressions posed by males (6.54 ± 3.55 μV) than females (5.54 ± 3.21 μV) in female observers (F(1,38) = 9.12, p = 0.004), this amplitude difference between male and female body expressions was not observed for the other conditions.

Figure 3.

Grand average event-related potentials (ERPs) of the P1, N1, N170 and P3 components at the indicated electrode sites in the fear condition. M represents male. F represents female. The first letter represents the gender of observers. The second letter represents the gender of body expressions.

Figure 4.

Grand average ERPs of the P1, N1, N170 and P3 components at the indicated electrode sites in anger condition. The symbol * denotes a significant level of p < 0.05.

For peak latency, the main effect of emotion was significant (F(1,38) = 12.2, p = 0.001, ηp² = 0.244); the P1 evoked by fearful body expressions (112 ± 10 ms) peaked earlier than that evoked by angry body expressions (116 ± 9 ms). The main effect of hemisphere was significant (F(1,38) = 5.28, p = 0.027, ηp² = 0.122); the P1 latency in the right hemisphere (112 ± 10 ms) was shorter than that in the left hemisphere (115 ± 10 ms).

N1 Component

For peak amplitude, the main effect of gender of observer was significant (F(1,38) = 4.45, p = 0.041, ηp² = 0.105); the amplitudes in female observers (−7.26 ± 3.95 μV) were larger than those in male observers (−5.01 ± 3.27 μV).

For peak latency, the main effect of emotion was significant (F(1,38) = 4.19, p = 0.048, ηp² = 0.099); the N1 evoked by angry body expressions (111 ± 13 ms) peaked earlier than that evoked by fearful body expressions (113 ± 13 ms).

N170 Component

For peak amplitude, the main effect of gender of observer was marginally significant (F(1,38) = 4.09, p = 0.050, ηp² = 0.097); the N170 evoked in male observers (−3.42 ± 3.37 μV) was larger than that evoked in female observers (−1.86 ± 3.11 μV). The main effect of hemisphere was significant (F(1,38) = 12.9, p = 0.001, ηp² = 0.253); the N170 was larger in the right (−3.62 ± 3.68 μV) than in the left hemisphere (−1.66 ± 2.60 μV). More importantly, the interaction effect between gender of observer and gender of body expression was significant (F(1,38) = 7.46, p = 0.010, ηp² = 0.164); while male body expressions (−3.56 ± 2.60 μV) evoked larger N170 than female body expressions (−3.27 ± 2.60 μV) in male observers (F(1,38) = 5.01, p = 0.031), female body expressions (−1.96 ± 2.60 μV) evoked slightly larger N170 than male body expressions (−1.75 ± 2.60 μV) in female observers (F(1,38) = 2.64, p = 0.112). Furthermore, the interaction effect of emotion × gender of observer × hemisphere was significant (F(1,38) = 4.16, p = 0.048, ηp² = 0.099). Angry body expressions evoked larger N170 in male observers (−4.60 ± 3.27 μV) than female observers (−2.28 ± 3.67 μV) in the right hemisphere (F(1,38) = 4.63, p = 0.038); this amplitude difference between male and female observers was not observed for the other conditions.

In addition, the interaction effect between emotion and gender of body expression was significant (F(1,38) = 5.70, p = 0.022, ηp² = 0.131); while the N170 evoked by male expressions (−2.95 ± 3.47 μV) was larger than that evoked by female expressions (−2.60 ± 3.38 μV) in the fear condition (F(1,38) = 5.85, p = 0.021), this amplitude difference was not significant in the anger condition (F(1,38) = 1.15, p = 0.291). The interaction effect between gender of body expression and hemisphere was significant (F(1,38) = 7.52, p = 0.009, ηp² = 0.165); while male body expressions (−1.82 ± 2.62 μV) evoked larger N170 than female body expressions (−1.50 ± 2.59 μV) in the left hemisphere (F(1,38) = 7.83, p = 0.008), this effect was not observed for the right hemisphere (F(1,38) = 2.27, p = 0.140).

For peak latency, no significant difference was found across conditions.

P3 Component

For average amplitude, the main effect of emotion was significant (F(1,38) = 10.1, p = 0.003, ηp² = 0.210); fearful expressions (7.41 ± 3.84 μV) evoked larger P3 compared to angry expressions (6.31 ± 3.64 μV). The main effect of gender of observer was significant (F(1,38) = 5.77, p = 0.021, ηp² = 0.132); the P3 elicited in female observers (8.08 ± 3.73 μV) was larger than that elicited in male observers (5.65 ± 3.4 μV). More importantly, the interaction effect of emotion × gender of observer × gender of body expression was significant (F(1,38) = 4.73, p = 0.036, ηp² = 0.111). While larger P3 was evoked by angry body expressions posed by females (5.63 ± 2.99 μV) than males (4.58 ± 2.52 μV) in male observers (F(1,38) = 4.35, p = 0.044), this amplitude difference between male and female body expressions was not observed for the other conditions.

Discussion

The findings of the current study suggest that the recognition and conveyance of fearful and angry body expressions is strongly affected by gender. The most distinct finding is the gender-incongruent effect observed in P1 and P3 amplitudes. That is, female observers had larger P1 for male than female angry expressions, while male observers had larger P3 for female than male angry expressions. In light of the cognitive processes associated with the P1 component in body expression processing (Meeren et al., 2005; van Heijnsbergen et al., 2007), the current results suggest that at an early stage of visual processing, female observers have stronger vigilance for angry male than angry female bodies. This vigilance occurs at the earliest stage of visual processing, even before configural processing of the human body (indexed by the N170 component; Stekelenburg and de Gelder, 2004; Meeren et al., 2005; Righart and de Gelder, 2007). This early effect (P1) is evolutionarily adaptive to females, although it did not result in better performance in female vs. male observers for angry male body identification, as revealed in Krüger et al.’s (2013) study. Bearing in mind that from the evolutionary point of view the role of the female is often related to offspring caring, females should be sensitive to aggressive or threatening cues including angry body expressions so as to help keep their offspring away from danger (Krüger et al., 2013). Since the anger posed by males is more frequent (Bosson et al., 2009), more threatening and more physically harmful than that posed by females (Lassek and Gaulin, 2009; Kret et al., 2011; Kret and de Gelder, 2012; Tay, 2015), it could be more rapidly detected and processed by females. Another explanation is that females usually have smaller body size and less strength compared to males, so females should detect and react to the anger expressed by males as early as possible to keep themselves safe. 
In accordance with these ideas, behavioral evidence suggests that females respond faster to male compared to female angry facial expressions (Rotteveel and Phaf, 2004). For males, quickly detecting angry signals from other males is also important because males are often engaged in male-male aggressive behavior associated with resource supply and mate-seeking performance (Kret and de Gelder, 2012; Tay, 2015). However, we did not observe such effects in the P1 of male observers. This may be because females usually show greater cortical activation at earlier stages in visual processing of biological motion compared to males (Pavlova et al., 2015). However, further experimental evidence is required to evaluate this explanation.

Another important interaction effect was found in later stages of processing. Larger P3 amplitudes were evoked in male observers when they watched angry body expressions posed by female relative to male subjects. The P3 in previous studies has been found to be larger for high-arousal compared to neutral human body expressions (Hietanen et al., 2014). In accordance with this study, the P3 effect likely reflects a high affective arousal in male observers in response to angry female bodies, as revealed by the behavioral arousal rating. Adopting a similar time window for P3 analysis (200–650 ms), previous studies show that males but not females exhibited larger late slow waves to attractive female faces than male faces, which could also be explained by arousal (van Hooff et al., 2011). This explanation is in line with a neuroimaging study that demonstrated larger amygdala activation was found in males when observing female compared to male faces (Fischer et al., 2004). Since the amygdala is also a critical region in response to body affective information (Peelen et al., 2007), the current finding regarding P3 could be due to this amygdala effect when emotional cues such as faces and bodies are presented. According to evolutionary based hypotheses, to maintain power and privilege, males should utilize a variety of ways to coerce and control female partners (Dobash and Dobash, 1979). It is suggested that high sensitivity and arousal to anger from female partners may help males to detect potential threats to their power and privilege. The lack of similar gender-differential P3 effects in female observers is consistent with the insignificant behavioral arousal rating, indicating that affective arousal in females evoked by threatening expressions was not modulated by body gender. This may be because females allocate relatively fewer cognitive resources at later stages of body stimuli processing, as revealed by a recent magnetoencephalography study (Pavlova et al., 2015).

In the present study, the above gender-differential effects in processing body expressions were found only in the anger condition. Although fear and anger both constitute threatening signals, anger is a more interactive signal because coping with another’s anger involves additional socially adaptive behaviors relative to coping with another’s fear (Pichon et al., 2009). Thus, the processing of fearful and angry body expressions entails both common and specific neural representations: whereas fear mainly triggers activity in the right temporoparietal junction, anger elicits activation in the temporal lobe, premotor cortex and ventromedial prefrontal cortex (Pichon et al., 2009). Notably, temporal lobe and premotor cortex have been reported to be the gender-specific regions of human body processing in several previous studies (Kret et al., 2011; Kana and Travers, 2012; Pavlova, 2017). These regional differences may explain why the observed gender effects are much stronger in the anger than in the fear condition.

Unlike the findings for the P1 and P3 components, this study revealed a gender-congruent effect in the structural encoding stage indexed by N170. In particular, males showed larger N170 for male than female bodies and females showed a tendency to have larger N170 amplitudes for female than male bodies. This result suggests that observers are more sensitive to the configural information of body expressions posed by subjects of the same gender than the opposite gender. Previous studies have indicated that familiar body stimuli could evoke more prominent configural processing than unfamiliar stimuli (Reed et al., 2003), which explains why female dancers performed better than male dancers in discrimination of point-light dancing movements expressed by females (Calvo-Merino et al., 2010). In line with this idea, the current N170 result indicates that the configural perception of body expressions is enhanced by familiarity of body expressions posed by subjects of the same gender as the observer.

This study also found that female observers showed larger N1 and P3 amplitudes than male observers in response to both threatening body expressions (anger and fear). The N1 result replicated recent findings showing that females displayed a larger N1 in response to negative images than males (Lithari et al., 2010; Gardener et al., 2013). This N1 finding indicates that females are more sensitive to threatening information than males in early stages of emotional processing and reactivity. The P3 result is consistent with previous studies showing that relative to males, females had an enhanced P3 for negative pictures and expressions of suffering in infants (Proverbio et al., 2006; Li et al., 2008). The enhanced P3 in female observers indicates that females allocate more cognitive resources than males at the stage of in-depth evaluation of negative emotions (Gard and Kring, 2007; Gardener et al., 2013).

There are a number of limitations of this study. First, the current work only explored gender differences in young adult participants. Previous studies have demonstrated that when processing point-light body movements, gender differences in brain activation (e.g., temporal lobe and amygdala) are less significant in 4-to-16-year-old children and adolescents as compared to adults (Anderson et al., 2013). Therefore, generalization of the current findings for all age groups (e.g., adolescents and elderly people) should be treated with caution. Second, we did not measure hormonal information and thus we cannot rule out the possibility that the observed gender effects might be partly driven by gender dimorphic endocrine status. In particular, females usually show hormonal fluctuations across the menstrual cycle, which may lead to fluctuations of behavioral performance in different tasks (Hampson and Kimura, 1988). For example, previous studies have shown that females showed differential performance in the identification of emotional faces, depending on the phase of the menstrual cycle (Guapo et al., 2009). Third, due to the low spatial resolution of electroencephalogram (EEG), the present study could not provide information on the specific brain regions accounting for the observed gender differences. There is much evidence indicating that the neural substrates underlying emotional body language reading are gender specific, i.e., the EBA, superior temporal sulcus, anterior insula, superior parietal lobule, pre-supplementary motor area and premotor cortex are reported to mediate gender differences in processing body expressions (Kret et al., 2011; Kana and Travers, 2012). Future studies should explore the time course and functional anatomy of gender differences, for example by using simultaneous EEG-functional magnetic resonance imaging.

Our results have potential clinical relevance. Most psychiatric disorders characterized by social cognition deficits (e.g., autism and depression) have gender-specific patterns of prevalence and severity. For example, males have a higher risk than females of suffering from autism spectrum disorder (Newschaffer et al., 2007), while females are more affected by depression and anxiety disorders. Clarifying the gender impact could help provide novel insights into understanding of gender vulnerability in these psychiatric disorders (Craske, 2003).

In conclusion, the present study discovered distinct interaction effects between the gender of observers and the gender of subjects of body expressions. At the early (P1) and late (P3) processing stages, we discovered gender-incongruent effects of body expression processing. However, gender-congruent effects were exhibited in the structural encoding stage indexed by N170, which may be due to the familiarity of the configural features of bodies of the same gender. Taken together, the current results highlight the significance of gender as a modulating factor in the processing of body expressions. Further studies focusing on the gender dimorphism issue are needed to facilitate the understanding of differential gender vulnerability in psychiatric disorders characterized by social cognition deficits including impairment of body language reading (Pavlova, 2012, 2017).

Author Contributions

DZ designed the study. ZL conducted the experiment. ZH and DZ analyzed the data. ZH, ZL, JW and DZ contributed to the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

Funding. This study was funded by the National Natural Science Foundation of China (31571120), the National Key Basic Research Program of China (973 Program, 2014CB744600), the Training Program for Excellent Young College Faculty in Guangdong (YQ2015143), Shenzhen Basic Research Project (JCYJ20170302143246158), and the Project for Young Faculty of Humanities and Social Sciences in Shenzhen University (17QNFC43).

References

- Alaerts K., Nackaerts E., Meyns P., Swinnen S. P., Wenderoth N. (2011). Action and emotion recognition from point light displays: an investigation of gender differences. PLoS One 6:e20989. doi: 10.1371/journal.pone.0020989

- Aleong R., Paus T. (2010). Neural correlates of human body perception. J. Cogn. Neurosci. 22, 482–495. doi: 10.1162/jocn.2009.21211

- Anderson L. C., Bolling D. Z., Schelinski S., Coffman M. C., Pelphrey K. A., Kaiser M. D. (2013). Sex differences in the development of brain mechanisms for processing biological motion. Neuroimage 83, 751–760. doi: 10.1016/j.neuroimage.2013.07.040

- Aviezer H., Trope Y., Todorov A. (2012). Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science 338, 1225–1229. doi: 10.1126/science.1224313

- Borgomaneri S., Vitale F., Gazzola V., Avenanti A. (2015). Seeing fearful body language rapidly freezes the observer’s motor cortex. Cortex 65, 232–245. doi: 10.1016/j.cortex.2015.01.014

- Bosson J. K., Vandello J. A., Burnaford R. M., Weaver J. R., Arzu W. S. (2009). Precarious manhood and displays of physical aggression. Pers. Soc. Psychol. Bull. 35, 623–634. doi: 10.1177/0146167208331161

- Calvo-Merino B., Ehrenberg S., Leung D., Haggard P. (2010). Experts see it all: configural effects in action observation. Psychol. Res. 74, 400–406. doi: 10.1007/s00426-009-0262-y

- Craske M. G. (2003). Origins of Phobias and Anxiety Disorders: Why More Women Than Men? Amsterdam: Elsevier.

- de Gelder B. (2009). Why bodies? Twelve reasons for including bodily expressions in affective neuroscience. Philos. Trans. R. Soc. Lond. B. Biol. Sci. 364, 3475–3484. doi: 10.1098/rstb.2009.0190

- de Gelder B., Van den Stock J. (2011). The bodily expressive action stimulus test (BEAST). Construction and validation of a stimulus basis for measuring perception of whole body expression of emotions. Front. Psychol. 2:181. doi: 10.3389/fpsyg.2011.00181

- de Gelder B., Van den Stock J., Meeren H. K., Sinke C. B., Kret M. E., Tamietto M. (2010). Standing up for the body. Recent progress in uncovering the networks involved in the perception of bodies and bodily expressions. Neurosci. Biobehav. Rev. 34, 513–527. doi: 10.1016/j.neubiorev.2009.10.008

- De Meijer M. (1989). The contribution of general features of body movement to the attribution of emotions. J. Nonverb. Behav. 13, 247–268. doi: 10.1007/bf00990296

- Dobash R. E., Dobash R. (1979). Violence Against Wives: A Case Against the Patriarchy. New York, NY: Free Press.

- Enea V., Iancu S. (2016). Processing emotional body expressions: state-of-the-art. Soc. Neurosci. 11, 495–506. doi: 10.1080/17470919.2015.1114020

- Fischer H., Sandblom J., Herlitz A., Fransson P., Wright C. I., Bäckman L. (2004). Sex-differential brain activation during exposure to female and male faces. Neuroreport 15, 235–238. doi: 10.1097/00001756-200402090-00004

- Gard M. G., Kring A. M. (2007). Sex differences in the time course of emotion. Emotion 7, 429–437. doi: 10.1037/1528-3542.7.2.429

- Gardener E. K., Carr A. R., Macgregor A., Felmingham K. L. (2013). Sex differences and emotion regulation: an event-related potential study. PLoS One 8:e73475. doi: 10.1371/journal.pone.0073475

- Gliga T., Dehaene-Lambertz G. (2005). Structural encoding of body and face in human infants and adults. J. Cogn. Neurosci. 17, 1328–1340. doi: 10.1162/0898929055002481

- Gu Y., Mai X., Luo Y. J. (2013). Do bodily expressions compete with facial expressions? Time course of integration of emotional signals from the face and the body. PLoS One 8:e66762. doi: 10.1371/journal.pone.0066762

- Guapo V. G., Graeff F. G., Zani A. C., Labate C. M., Dos R. R., Del-Ben C. M. (2009). Effects of sex hormonal levels and phases of the menstrual cycle in the processing of emotional faces. Psychoneuroendocrinology 34, 1087–1094. doi: 10.1016/j.psyneuen.2009.02.007

- Hampson E., Kimura D. (1988). Reciprocal effects of hormonal fluctuations on human motor and perceptual-spatial skills. Behav. Neurosci. 102, 456–459. doi: 10.1037/0735-7044.102.3.456

- Hietanen J. K., Kirjavainen I., Nummenmaa L. (2014). Additive effects of affective arousal and top-down attention on the event-related brain responses to human bodies. Biol. Psychol. 103, 167–175. doi: 10.1016/j.biopsycho.2014.09.003

- Hsu Y. F., Le Bars S., Hämäläinen J. A., Waszak F. (2015). Distinctive representation of mispredicted and unpredicted prediction errors in human electroencephalography. J. Neurosci. 35, 14653–14660. doi: 10.1523/JNEUROSCI.2204-15.2015

- Jessen S., Kotz S. A. (2011). The temporal dynamics of processing emotions from vocal, facial, and bodily expressions. Neuroimage 58, 665–674. doi: 10.1016/j.neuroimage.2011.06.035

- Johnson K. L., McKay L. S., Pollick F. E. (2011). He throws like a girl (but only when he’s sad): emotion affects sex-decoding of biological motion displays. Cognition 119, 265–280. doi: 10.1016/j.cognition.2011.01.016

- Kana R. K., Travers B. G. (2012). Neural substrates of interpreting actions and emotions from body postures. Soc. Cogn. Affect. Neurosci. 7, 446–456. doi: 10.1093/scan/nsr022

- Kleinsmith A., Bianchi-Berthouze N., Berthouze L. (2006). “An effect of gender in the interpretation of affective cues in avatars,” in Proceedings of Workshop on Gender and Interaction: Conjunction with the International Conference of Advanced Visual Interfaces, Venice, Italy.

- Kret M. E., de Gelder B. (2012). A review on sex differences in processing emotional signals. Neuropsychologia 50, 1211–1221. doi: 10.1016/j.neuropsychologia.2011.12.022

- Kret M. E., de Gelder B. (2013). When a smile becomes a fist: the perception of facial and bodily expressions of emotion in violent offenders. Exp. Brain. Res. 228, 399–410. doi: 10.1007/s00221-013-3557-6

- Kret M. E., Pichon S., Grèzes J., de Gelder B. (2011). Men fear other men most: gender specific brain activations in perceiving threat from dynamic faces and bodies—an fMRI study. Front. Psychol. 2:3. doi: 10.3389/fpsyg.2011.00003

- Krüger S., Sokolov A. N., Enck P., Krägeloh-Mann I., Pavlova M. A. (2013). Emotion through locomotion: gender impact. PLoS One 8:e81716. doi: 10.1371/journal.pone.0081716

- Lassek W. D., Gaulin S. J. C. (2009). Costs and benefits of fat-free muscle mass in men: relationship to mating success, dietary requirements, and native immunity. Evol. Hum. Behav. 30, 322–328. doi: 10.1016/j.evolhumbehav.2009.04.002

- Leppaenen J. M., Nelson C. A. (2012). Early development of fear processing. Curr. Dir. Psychol. Sci. 21, 200–204. doi: 10.1177/0963721411435841

- Li H., Yuan J., Lin C. (2008). The neural mechanism underlying the female advantage in identifying negative emotions: an event-related potential study. Neuroimage 40, 1921–1929. doi: 10.1016/j.neuroimage.2008.01.033

- Lithari C., Frantzidis C. A., Papadelis C., Vivas A. B., Klados M. A., Kourtidou-Papadeli C., et al. (2010). Are females more responsive to emotional stimuli? A neurophysiological study across arousal and valence dimensions. Brain Topogr. 23, 27–40. doi: 10.1007/s10548-009-0130-5

- Marsh A. A., Ambady N., Kleck R. E. (2005). The effects of fear and anger facial expressions on approach- and avoidance-related behaviors. Emotion 5, 119–124. doi: 10.1037/1528-3542.5.1.119

- Meeren H. K., van Heijnsbergen C. C., de Gelder B. (2005). Rapid perceptual integration of facial expression and emotional body language. Proc. Natl. Acad. Sci. U S A 102, 16518–16523. doi: 10.1073/pnas.0507650102

- Naatanen R. (1988). Implications of ERP data for psychological theories of attention. Biol. Psychol. 26, 117–163. doi: 10.1016/0301-0511(88)90017-8

- Newschaffer C. J., Croen L. A., Daniels J., Giarelli E., Grether J. K., Levy S. E., et al. (2007). The epidemiology of autism spectrum disorders. Annu. Rev. Public Health 28, 235–258. doi: 10.1016/b978-0-12-391924-3.00001-6

- Pavlova M. A. (2012). Biological motion processing as a hallmark of social cognition. Cereb. Cortex 22, 981–995. doi: 10.1093/cercor/bhr156

- Pavlova M. A. (2017). Sex and gender affect the social brain: beyond simplicity. J. Neurosci. Res. 95, 235–250. doi: 10.1002/jnr.23871

- Pavlova M. A., Sokolov A. N., Bidet-Ildei C. (2015). Sex differences in the neuromagnetic cortical response to biological motion. Cereb. Cortex 25, 3468–3474. doi: 10.1093/cercor/bhu175

- Peelen M. V., Atkinson A. P., Andersson F., Vuilleumier P. (2007). Emotional modulation of body-selective visual areas. Soc. Cogn. Affect. Neurosci. 2, 274–283. doi: 10.1093/scan/nsm023

- Pichon S., de Gelder B., Grèzes J. (2009). Two different faces of threat. Comparing the neural systems for recognizing fear and anger in dynamic body expressions. Neuroimage 47, 1873–1883. doi: 10.1016/j.neuroimage.2009.03.084

- Plant E. A., Hyde J. S., Keltner D., Devine P. G. (2000). The gender stereotyping of emotions. Psychol. Women. Quart. 24, 81–92. doi: 10.1111/j.1471-6402.2000.tb01024.x

- Pollick F. E., Kay J. W., Heim K., Stringer R. (2005). Gender recognition from point-light walkers. J. Exp. Psychol. Hum. Percept. Perform. 31, 1247–1265. doi: 10.1037/0096-1523.31.6.1247

- Proverbio A. M., Brignone V., Matarazzo S., Del Zotto M., Zani A. (2006). Gender and parental status affect the visual cortical response to infant facial expression. Neuropsychologia 44, 2987–2999. doi: 10.1016/j.neuropsychologia.2006.06.015

- Reed C. L., Stone V. E., Bozova S., Tanaka J. (2003). The body-inversion effect. Psychol. Sci. 14, 302–308. doi: 10.1111/1467-9280.14431

- Righart R., de Gelder B. (2007). Impaired face and body perception in developmental prosopagnosia. Proc. Natl. Acad. Sci. U S A 104, 17234–17238. doi: 10.1073/pnas.0707753104

- Rotteveel M., Phaf R. H. (2004). Automatic affective evaluation does not automatically predispose for arm flexion and extension. Emotion 4, 156–172. doi: 10.1037/1528-3542.4.2.156

- Sokolov A. A., Krüger S., Enck P., Krägeloh-Mann I., Pavlova M. A. (2011). Gender affects body language reading. Front. Psychol. 2:16. doi: 10.3389/fpsyg.2011.00016

- Stekelenburg J. J., de Gelder B. (2004). The neural correlates of perceiving human bodies: an ERP study on the body-inversion effect. Neuroreport 15, 777–780. doi: 10.1097/00001756-200404090-00007

- Tay P. K. C. (2015). The adaptive value associated with expressing and perceiving angry-male and happy-female faces. Front. Psychol. 6:851. doi: 10.3389/fpsyg.2015.00851

- Taylor J. C., Roberts M. V., Downing P. E., Thierry G. (2010). Functional characterisation of the extrastriate body area based on the N1 ERP component. Brain Cogn. 73, 153–159. doi: 10.1016/j.bandc.2010.04.001

- Trnka R., Kubĕna A., Kucerová E. (2007). Sex of expresser and correct perception of facial expressions of emotion. Percept. Mot. Skills 104, 1217–1222. doi: 10.2466/pms.104.3.1217-1222

- Van den Stock J., Righart R., de Gelder B. (2007). Body expressions influence recognition of emotions in the face and voice. Emotion 7, 487–494. doi: 10.1037/1528-3542.7.3.487

- van Heijnsbergen C. C., Meeren H. K., Grèzes J., de Gelder B. (2007). Rapid detection of fear in body expressions, an ERP study. Brain Res. 1186, 233–241. doi: 10.1016/j.brainres.2007.09.093

- van Hooff J. C., Crawford H., van Vugt M. (2011). The wandering mind of men: ERP evidence for gender differences in attention bias towards attractive opposite sex faces. Soc. Cogn. Affect. Neurosci. 6, 477–485. doi: 10.1093/scan/nsq066

- Volkova E. P., Mohler B. J., Dodds T. J., Tesch J., Bülthoff H. H. (2014). Emotion categorization of body expressions in narrative scenarios. Front. Psychol. 5:623. doi: 10.3389/fpsyg.2014.00623

- Zibrek K., Hoyet L., Ruhland K., McDonnell R. (2015). Exploring the effect of motion type and emotions on the perception of gender in virtual humans. ACM Trans. Appl. Percept. 12, 1–201. doi: 10.1145/2767130