Abstract

Adaptation is fundamental in sensory processing and has been studied extensively within the same sensory modality. However, little is known about adaptation across sensory modalities, especially in the context of high-level processing, such as the perception of emotion. Previous studies have shown that prolonged exposure to a face exhibiting one emotion, such as happiness, leads to contrastive biases in the perception of subsequently presented faces toward the opposite emotion, such as sadness. Such work has shown the importance of adaptation in calibrating face perception based on prior visual exposure. In the present study, we showed for the first time that emotion-laden sounds, like laughter, adapt the visual perception of emotional faces, that is, subjects more frequently perceived faces as sad after listening to a happy sound. Furthermore, via electroencephalography recordings and event-related potential analysis, we showed that there was a neural correlate underlying the perceptual bias: There was an attenuated response occurring at ∼ 400 ms to happy test faces and a quickened response to sad test faces, after exposure to a happy sound. Our results provide the first direct evidence for a behavioral cross-modal adaptation effect on the perception of facial emotion, and its neural correlate.

Keywords: aftereffect, laughter, neural attenuation, unimodal

Introduction

Human voice and facial expression, important sources of emotional signals, express one's mental state and are essential for social interactions. Even at the earliest age of life, humans are skilled at perceiving and understanding another's voice and facial expressions (Dalferth 1989), and these social signals are commonly linked together.

When a stimulus lies on a continuum between 2 extreme states (for faces, this continuum might run from happy through to neutral through to sad), prolonged exposure to a stimulus at one end of this continuum will lead to a bias in the perception of a subsequently presented stimulus toward the opposite end (Webster and MacLeod 2011). Thus, prolonged exposure to a sad face will make a subsequently presented neutral face appear happy (Webster et al. 2004). Recent studies have shown that adaptation also exists in the auditory domain. For example, adaptation to male voices causes a voice to be perceived as more female, and vice versa (Schweinberger et al. 2008). In addition, adapting to an angry vocalization biased emotionally ambiguous voices toward more fearful ones (Bestelmeyer et al. 2010). Finally, prolonged exposure to the voice of Speaker A biased participants to identify Speaker B in subsequently presented test voices. The test voices were created by morphing voice A and B together to create identity ambiguous hybrid voices, thus producing a voice-to-voice adaptation in the perception of speaker identity (Zaske et al. 2010).

This raises several interesting questions: does this adaptation aftereffect exist across sensory modalities, and can an emotional voice bias the subsequent judgment of the emotion of a face, and/or vice versa? Very little research has explored the extent to which cross-modal adaptation can occur, or its potential neural processes. Recently, emerging evidence has shown that facial expression adaptation biased the judgment of a subsequently presented voice in male participants, demonstrating a face-to-voice emotional aftereffect (Skuk and Schweinberger 2013). Auditory object recognition was found to be worse than visual object recognition (Cohen et al. 2009). However, further exploration revealed that this effect is attention dependent: both can be recognized accurately (∼95% accuracy) when each modality receives full attention, and auditory memory was impaired more by attending to the visual object when both modalities (pictures/sounds) were presented simultaneously and their initial recognizabilities were matched (Schmid et al. 2011). Therefore, one might infer that an auditory memory would be less likely to bias subsequent visual perception than a visual memory would be to bias subsequent auditory perception. A recent study showed voice-to-face adaptation in the judgment of gender (Kloth et al. 2010). However, to date there is no direct evidence for a cross-modal emotional aftereffect from audition to vision, nor is there any work on the neural correlates of cross-modal adaptation.

Similar to behavioral adaptation, neurophysiological measures also show evidence of an adaptation effect. There is a decreased response of single neurons in the inferior temporal cortex to repeated stimuli compared with novel stimuli (Verhoef et al. 2008). In the auditory modality, the repetition of sounds leads to adaptation of the response of neurons in the auditory cortex (Perez-Gonzalez and Malmierca 2014). In contrast, novel sounds lead to an enhanced neural response, which is interpreted as the result of nonadapted cells. This is also true in the visual modality: Visual responses are attenuated after repeated presentations of the same visual stimuli such as simple spots of light (Goldberg and Wurtz 1972), faces (Kovacs et al. 2013), and scenes (Yi et al. 2006). Here, we asked whether adaptation to a sound changed subsequent brain responses to visual stimuli, and if so, to what extent.

We investigated cross-modal adaptation by adapting participants to the sound of laughter before asking them to make judgments upon the perceived emotion of a subsequently presented face. If we were to find evidence of an emotion adaptation aftereffect by adapting to the sound of laughter, it would suggest an interaction between the auditory and visual systems when processing emotionally relevant signals. We also employed electrophysiological recordings in order to detect whether any psychophysical adaptation would also produce differential effects in the event-related potentials (ERP).

Materials and Methods

Subjects

Twenty naïve participants (10 females and 10 males, age range = 20–28 years, university students) with no history of neurological or psychiatric impairment participated in this study. All participants were right-handed (Oldfield 1971) and had normal or corrected-to-normal vision and normal hearing (mean hearing threshold [and SD] was 0.7 [4.8] dB HL for the left ear and 1.2 [6.2] dB HL for the right ear). The protocols and experimental procedures employed in this study were reviewed and approved by the Internal Review Board of Nanyang Technological University and the Biomedical Research Ethics Committee of University of Science and Technology of China. The participants provided written informed consent before the experiment and were compensated for their participation.

Stimuli

Visual stimuli: We used 3 images (sad, neutral, and happy) of the face of one male person taken from the Karolinska Directed Emotional Faces database (Lundqvist et al. 1998). These faces were morphed using Morph Man 4.0 (STOIK Imaging) following the parameters from our previous experiment (Xu et al. 2008). We morphed the sad face with the neutral face to generate a series of images with the proportion of happiness varying from 0 (saddest) to 0.5 (neutral) and morphed the neutral face with the happy face to generate a series of images with the proportion of happiness varying from 0.5 (neutral) to 1.0 (happiest). We used the same face for all experiments to minimize the number of trials needed and to ensure that the same criteria were used in the morphs.

Auditory stimuli: For the sound of laughter, we used a recording of an adult male's laughter, sampled at 44.1 kHz with 16-bit quantization. For a control sound, we used Adobe Audition Software (Version 3.0) to generate a complex neutral tone with a fundamental frequency of 220 Hz, similar to the average fundamental frequency of the laughter sound, and 5 higher bands at 440, 660, 880, 1760, and 3520 Hz. Each sound consisted of a 3900-ms sample with a rise and fall time of 20 ms each, preceded by 80 ms of silence and followed by 20 ms of silence, for a total duration of 4000 ms. We adjusted the two sounds to equal intensity. We used E-prime software (Psychology Software Tools) to deliver the sounds binaurally at 60 dB above each subject's hearing threshold through headphones (Sennheiser HD 25-1 II) driven by the audio output of a computer.
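The timing of the control tone can be illustrated with a short synthesis sketch. This is not the authors' Adobe Audition procedure: the equal partial amplitudes, the linear ramp shape, and the function name `make_tone` are assumptions made for illustration. Only the frequencies, durations, and sampling rate come from the text.

```python
import math

SAMPLE_RATE = 44100  # Hz, the rate reported for the laughter recording
PARTIALS_HZ = [220, 440, 660, 880, 1760, 3520]  # fundamental plus 5 higher bands

def make_tone(sound_ms=3900, ramp_ms=20, lead_ms=80, tail_ms=20):
    """Return float samples in [-1, 1] for the ramped tone with silent padding."""
    n_sound = SAMPLE_RATE * sound_ms // 1000
    n_ramp = SAMPLE_RATE * ramp_ms // 1000
    n_lead = SAMPLE_RATE * lead_ms // 1000
    n_tail = SAMPLE_RATE * tail_ms // 1000
    body = []
    for i in range(n_sound):
        t = i / SAMPLE_RATE
        # Assumption: equal-amplitude partials, normalized by their count.
        s = sum(math.sin(2 * math.pi * f * t) for f in PARTIALS_HZ) / len(PARTIALS_HZ)
        # Assumption: linear rise and fall ramps inside the 3900-ms sample.
        gain = min(1.0, i / n_ramp, (n_sound - 1 - i) / n_ramp)
        body.append(s * gain)
    return [0.0] * n_lead + body + [0.0] * n_tail

tone = make_tone()
print(len(tone), len(tone) / SAMPLE_RATE)  # 176400 4.0
```

The padded signal lasts exactly 4000 ms, which matches the adaptor duration used in the procedure.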

Procedure

The experiment was presented on an LED monitor (SyncMaster BX2350, with a refresh rate of 60 Hz and a spatial resolution of 1920 × 1080) at a viewing distance of 1.0 m. On each trial, the subjects had to fixate on a centrally presented fixation cross laid against a gray background. We presented face stimuli to the left side of the central fixation cross, subtending 5.7° of visual angle horizontally and 6.9° vertically. We presented the faces peripherally because adaptation effects have been found to be stronger when the stimuli are presented in the visual periphery than at the fovea (on tilt aftereffect, Chen et al. 2015; on color adaptation, Bachy and Zaidi 2014).
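For readers who want the physical stimulus size, the reported visual angles can be converted with the standard relation size = 2·d·tan(θ/2). The sketch below assumes a flat screen viewed head-on; the function name is illustrative.

```python
import math

def extent_from_visual_angle(angle_deg, distance_m):
    """Physical extent (meters) of a stimulus subtending angle_deg at distance_m."""
    return 2 * distance_m * math.tan(math.radians(angle_deg) / 2)

width_m = extent_from_visual_angle(5.7, 1.0)   # horizontal extent of the face
height_m = extent_from_visual_angle(6.9, 1.0)  # vertical extent of the face
print(f"{width_m * 100:.2f} cm x {height_m * 100:.2f} cm")
```

At the 1.0-m viewing distance this gives a face image roughly 10 cm wide and 12 cm tall.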

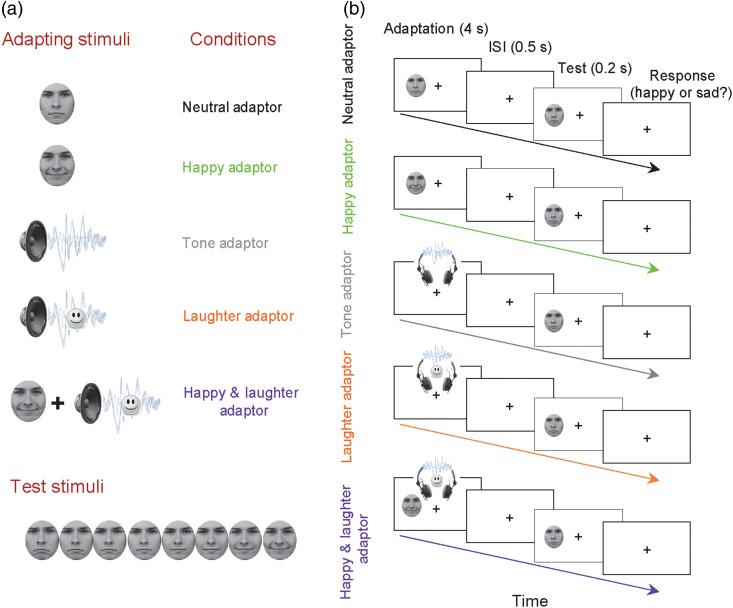

The subjects were presented with an adapting stimulus for 4000 ms and then a test face for 200 ms. The subjects then had to judge whether the test faces were happy or sad by pressing a key (“1” for happy and “2” for sad) as accurately and quickly as possible. There were 5 conditions (Fig. 1): testing on the morphed faces after adapting to the neutral face (the Neutral Adaptor, the baseline condition), the happy face (the Happy Adaptor condition), the neutral complex tone (the Tone Adaptor condition), the laughter sound (the Laughter Adaptor condition), and a combination of both the happy face and the laughter sound (the Happy and Laughter Adaptor condition).

Figure 1.

Stimuli and experimental design. (a) Five types of adapting stimuli (neutral face, happy face, neutral tone sound, laughter sound, and happy face with laughter sound) were followed by a series of morphed test faces from sad to happy. (b) Participants judged whether a test face was happy or sad by pressing the response button. Adapting stimuli were presented for 4 s followed by a 0.5-s interstimulus interval. The test face was then presented for 0.2 s.

Each condition consisted of a practice session of 20 trials followed by a block of 120 trials, with 15 repetitions for each test face. We randomly varied the order of block types (Neutral Adaptor, Happy Adaptor, Tone Adaptor, Laughter Adaptor, and Happy and Laughter Adaptor) from subject to subject. In our previous work (Xu et al. 2008), we ran a pilot study on 2 experimenters and confirmed that a 10-min break after an adaptation block was long enough for the aftereffect to decay. In the present study, the subjects had a 2-min break after the practice session and a 12-min break after each experimental block to avoid carryover of the aftereffects to the next block. During the break time, the subjects had no other task.
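The block structure can be sketched as follows. The 8 test-face levels are those listed under Psychophysical Data, and the per-trial timing is taken from Figure 1. The shuffling of trials within a block and the names `make_block` and `make_session` are assumptions, since the text only states that block order varied randomly across subjects.

```python
import random

TEST_LEVELS = [0.15, 0.3, 0.4, 0.5, 0.6, 0.7, 0.85, 1.0]  # proportions of happiness
REPETITIONS = 15
ADAPTORS = ["Neutral", "Happy", "Tone", "Laughter", "Happy and Laughter"]
TRIAL_MS = 4000 + 500 + 200  # adaptor + interval + test face (Fig. 1)

def make_block(rng):
    """One block: every test face repeated 15 times (assumed shuffled)."""
    trials = TEST_LEVELS * REPETITIONS
    rng.shuffle(trials)
    return trials

def make_session(seed):
    """Return (block order, {adaptor: trial list}) for one hypothetical subject."""
    rng = random.Random(seed)
    order = ADAPTORS[:]
    rng.shuffle(order)
    return order, {adaptor: make_block(rng) for adaptor in order}

order, blocks = make_session(seed=1)
n_trials = len(blocks["Laughter"])
print(order)
print(n_trials, "trials, at least", n_trials * TRIAL_MS / 60000, "min of stimulation")
```

With 120 trials of 4.7 s each, one block contains at least 9.4 min of stimulation, not counting response time.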

Data Recording and Analysis

Psychophysical Data

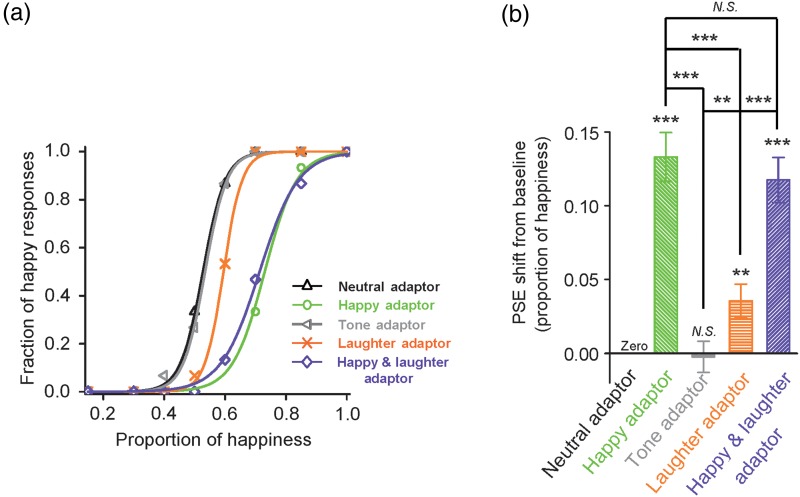

In the experiment, we chose images with proportions of happiness equal to 0.15, 0.3, 0.4, 0.5, 0.6, 0.7, 0.85, and 1.0. Pilot studies in our lab had indicated that this range of morph proportions yielded enough data points to produce reliable and informative psychometric curves (described in the following text), and thus more precise measurements of the size of our participants' adaptation aftereffects in the form of their PSE shifts. Data for each condition were sorted into the fraction of “happy” responses to each test face. We then plotted the fraction of happy responses as a function of the proportion of happiness of the test face. We fit the resulting psychometric curve with a sigmoidal function of the form $f(x) = 1/[1 + e^{-a(x - b)}]$, where b is the test-stimulus parameter corresponding to 50% of the psychometric function, the point of subjective equality (PSE), and a/4 is the slope of the function at the PSE (Fig. 2). We used a two-tailed paired t-test to compare subjects' PSEs for different conditions in the experiment. We defined the aftereffect as the difference between the PSE of an adaptation condition (Happy Adaptor, Tone Adaptor, Laughter Adaptor, or Happy and Laughter Adaptor) and the PSE of the corresponding baseline condition (Neutral Adaptor).
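The fit and the aftereffect measure can be illustrated with a minimal sketch. The source does not say which fitting routine was used, so the least-squares grid refinement below is an assumption, as are the search ranges and the function names. The response fractions are synthetic values generated for illustration, not data from the study.

```python
import math

def logistic(x, a, b):
    """Psychometric function f(x) = 1 / (1 + exp(-a (x - b)))."""
    return 1.0 / (1.0 + math.exp(-a * (x - b)))

def sum_squared_error(a, b, xs, ys):
    return sum((logistic(x, a, b) - y) ** 2 for x, y in zip(xs, ys))

def fit_logistic(xs, ys, rounds=8, steps=20):
    """Least-squares estimate of (a, b) by repeatedly refining a grid search."""
    a_lo, a_hi = 0.5, 60.0  # assumed search range for the steepness a
    b_lo, b_hi = 0.0, 1.0   # the PSE is assumed to lie within the morph range
    best_a, best_b = a_lo, b_lo
    for _ in range(rounds):
        a_step = (a_hi - a_lo) / steps
        b_step = (b_hi - b_lo) / steps
        grid = [(a_lo + i * a_step, b_lo + j * b_step)
                for i in range(steps + 1) for j in range(steps + 1)]
        best_a, best_b = min(grid, key=lambda p: sum_squared_error(p[0], p[1], xs, ys))
        # Zoom in: keep two grid steps on either side of the current best point.
        a_lo, a_hi = max(0.01, best_a - 2 * a_step), best_a + 2 * a_step
        b_lo, b_hi = best_b - 2 * b_step, best_b + 2 * b_step
    return best_a, best_b

levels = [0.15, 0.3, 0.4, 0.5, 0.6, 0.7, 0.85, 1.0]
# Synthetic fractions of "happy" responses, for illustration only.
baseline = [logistic(x, 12.0, 0.50) for x in levels]
adapted = [logistic(x, 12.0, 0.62) for x in levels]

a0, pse0 = fit_logistic(levels, baseline)
a1, pse1 = fit_logistic(levels, adapted)
aftereffect = pse1 - pse0  # positive: rightward shift, more "sad" judgments
print(round(pse0, 3), round(pse1, 3), round(aftereffect, 3))
```

Because f'(x) = a·f(x)·[1 − f(x)] and f(b) = 1/2, the slope at the PSE is a/4, as stated in the text.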

Figure 2.

Adaptation aftereffect on facial expression judgment. (a) The fraction of happy responses of a representative participant (ordinate) plotted as a function of the proportion of happiness of the test faces (abscissa), under the following conditions: Neutral Adaptor, adaptation to a neutral face (black); Happy Adaptor, adaptation to a happy face (green); Tone Adaptor, adaptation to a neutral tone (grey); Laughter Adaptor, adaptation to an auditory laughter (orange); Happy and Laughter Adaptor, adaptation to simultaneously presented happy face and laughter sound (violet). (b) Summary of data from all participants (n = 20). Average PSE relative to baseline condition (Neutral Adaptor) and SEM were plotted. *, **, *** and N.S. indicate significance levels P < 0.05, P < 0.01, P < 0.001, and no significance, respectively.

Electrophysiological Data

We recorded monopolar EEG (SynAmps 2, NeuroScan) with a cap carrying 64 Ag/AgCl electrodes using the International Standard 10–20 system to cover the whole scalp. We attached the reference electrode to the tip of the nose, and the ground electrode to the forehead. Alternating current signals (0.05–100 Hz) were continuously recorded at a sampling rate of 500 Hz. We measured electrooculography (EOG) using bipolar electrodes, attaching the vertical electrodes above and below the left eye, and the horizontal electrodes lateral to the outer canthus of each eye. We corrected vertical EOG artifacts with a regression-based procedure (Semlitsch et al. 1986; Gu et al. 2013). Very few trials were contaminated with horizontal EOG artifacts. Like most event-related potential (ERP) studies (e.g., Froyen et al. 2010; Gu et al. 2013), we only corrected the vertical EOG artifacts and did not correct the horizontal EOG artifacts. We rejected the epochs with potentials (including those contaminated with horizontal eye movement) exceeding a maximum voltage criterion of 75 μV. We maintained electrode impedances <5 kΩ and filtered the recording data offline (30 Hz low-pass, 24 dB/octave) with a finite impulse response filter. Although recording was continuous, we analyzed only the data from a 1000-ms epoch for each trial beginning 100 ms before stimulus onset. We rejected epochs when fluctuations in potential values exceeded ±75 μV at any channel except the EOG channels. We averaged the ERP evoked by each test face from all 15 trials (for a single test face, the mean number of valid trials across all the conditions was 13.6 and did not differ significantly between any test face and condition) of each condition and proportion of happiness.
To increase the signal-to-noise ratio (SNR), we averaged the ERPs to the happiest and second happiest face (proportions of happiness: 1.0 and 0.85) as the ERP response to the happy test stimuli and averaged the ERPs to the saddest and second saddest face (proportions of happiness: 0.15 and 0.3) as the ERP response to the sad test stimuli. These ERPs were then processed via the average of 9 electrodes in the left parieto-occipital region (P1, P3, P5, P7, PO3, PO5, PO7, O1, and CB1) and 9 in the right parieto-occipital region (P2, P4, P6, P8, PO4, PO6, PO8, O2, and CB2) (Figs 3 and 4). Finally, the analyses for the ERPs were performed on the mean amplitudes in time segments for the N170, N2, and Plate components. These time segments were defined by the intervals of ±20 ms placed around the peak latency for the N170 and N2, and of ±50 ms for the Plate, for both the within-modal and cross-modal conditions. For instance, for the ERP responses to happy test faces after the neutral and happy adaptors, the time segment was determined by the grand average of the ERPs to the happy test faces after both the neutral and happy adaptors, from both hemispheres, and the time segments were 167–207 ms for N170, 262–302 ms for N2, and 380–480 ms for Plate.
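The epoch rejection, averaging, and windowed mean-amplitude steps can be sketched for a single channel as follows. This is a simplified illustration rather than the NeuroScan pipeline: the peak search window, the function names, and the synthetic waveform are all assumptions, and pooling across electrodes and test faces is omitted.

```python
import math

SAMPLE_RATE_HZ = 500
EPOCH_START_MS = -100  # epochs begin 100 ms before face onset
REJECT_UV = 75.0       # rejection criterion in microvolts

def ms_to_index(t_ms):
    """Sample index of latency t_ms within an epoch."""
    return round((t_ms - EPOCH_START_MS) * SAMPLE_RATE_HZ / 1000)

def average_valid_epochs(epochs):
    """Average the epochs that stay within +/-75 uV; return (ERP, valid count)."""
    valid = [ep for ep in epochs if max(abs(v) for v in ep) <= REJECT_UV]
    if not valid:
        raise ValueError("no valid epochs")
    n = len(valid)
    return [sum(ep[i] for ep in valid) / n for i in range(len(valid[0]))], n

def peak_latency_ms(erp, search_ms, polarity):
    """Latency of the most negative (polarity=-1) or most positive (+1) sample."""
    lo, hi = ms_to_index(search_ms[0]), ms_to_index(search_ms[1])
    idx = max(range(lo, hi + 1), key=lambda i: polarity * erp[i])
    return EPOCH_START_MS + idx * 1000 / SAMPLE_RATE_HZ

def mean_amplitude(erp, center_ms, half_width_ms):
    """Mean amplitude in the window center_ms +/- half_width_ms."""
    lo = ms_to_index(center_ms - half_width_ms)
    hi = ms_to_index(center_ms + half_width_ms)
    window = erp[lo:hi + 1]
    return sum(window) / len(window)

# Synthetic 1000-ms epochs: a negative deflection peaking at 186 ms.
times = [EPOCH_START_MS + i * 2 for i in range(500)]
clean = [-5.0 * math.exp(-((t - 186) / 20.0) ** 2) for t in times]
artifact = [v + 200.0 for v in clean]  # exceeds the rejection criterion

erp, n_valid = average_valid_epochs([clean, clean, artifact])
n170_ms = peak_latency_ms(erp, (150, 220), polarity=-1)  # assumed search window
n170_amp = mean_amplitude(erp, n170_ms, 20)              # +/-20 ms, as for N170 and N2
print(n_valid, n170_ms, round(n170_amp, 2))
```

In the study the window center comes from the grand average across the compared conditions and hemispheres, so the same window applies to every condition being compared.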

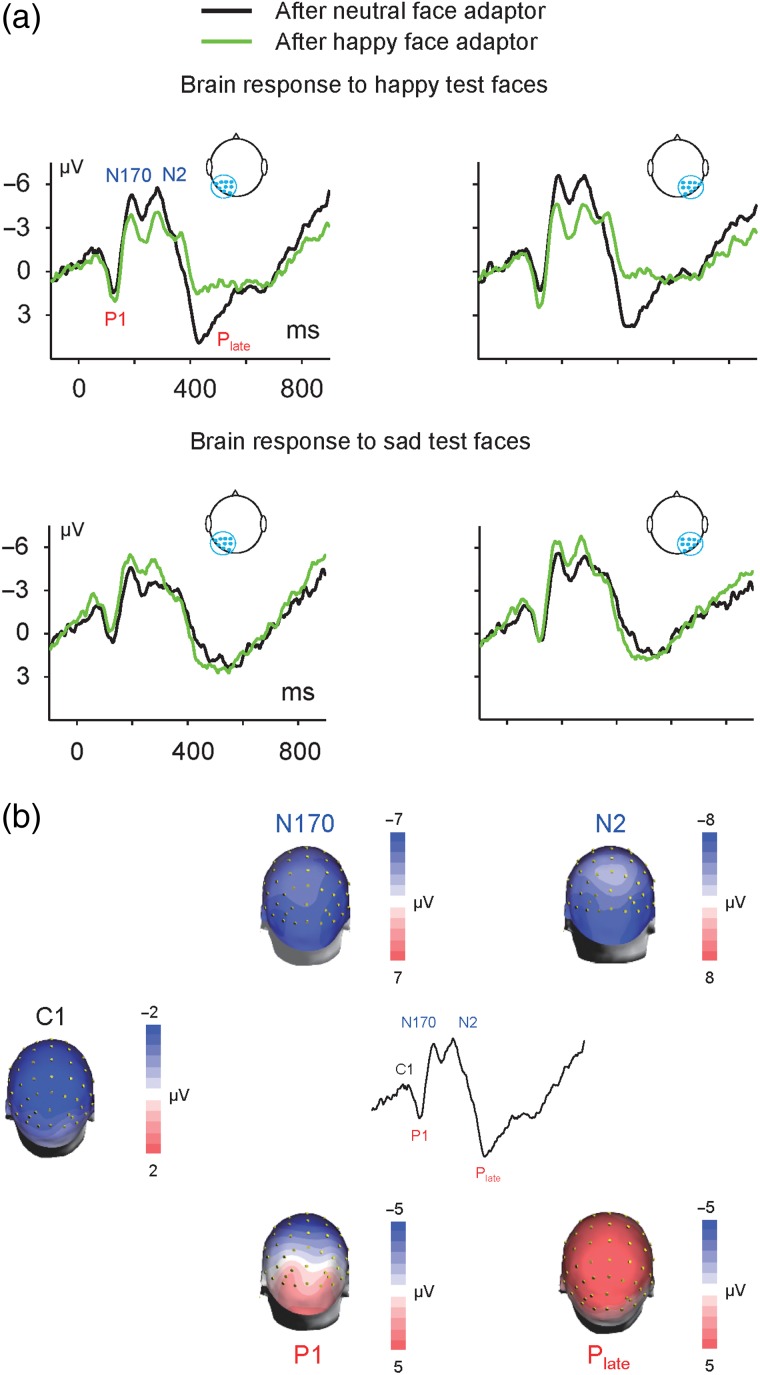

Figure 3.

Visual adaptation revealed by ERPs. (a) Grand averaged ERPs in response to happy test faces (upper panel) and sad test faces (lower panel) preceded by the Neutral Adaptor and Happy Adaptor. ERP waveforms showed robust P1-N170-N2-Plate complex in the left hemisphere (average of electrodes P1, P3, P5, P7, PO3, PO5, PO7, O1, and CB1) and right hemisphere (average of electrodes P2, P4, P6, P8, PO4, PO6, PO8, O2, and CB2). (b) Topographic distribution maps of C1, P1, N170, N2, and Plate were constructed at their peak latencies. These components showed similar distribution across conditions. For instance, in terms of the ERP response to happy test faces after the neutral face adaptor, the C1, P1, N170, and N2 showed a parieto-occipital maximum topography, whereas the Plate showed a central-parieto maximum topography.

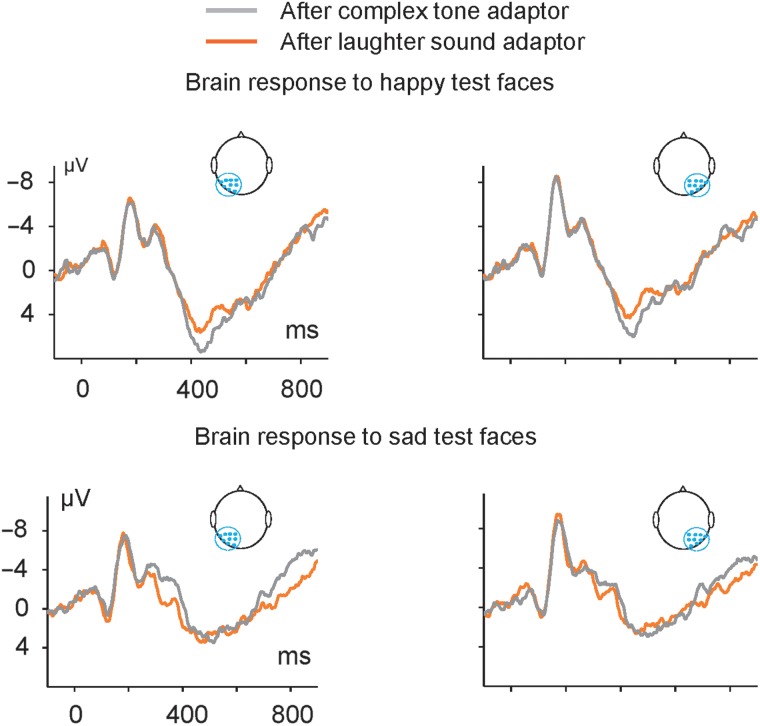

Figure 4.

Cross-modal auditory adaptation on visual perception revealed by ERPs. Grand-averaged ERPs in response to happy test faces (upper panel) and sad test faces (lower panel) preceded by the Tone Adaptor and Laughter Adaptor. ERP waveforms showed robust P1-N170-N2-Plate complex in the left hemisphere (average of electrodes P1, P3, P5, P7, PO3, PO5, PO7, O1, and CB1) and right hemisphere (average of electrodes P2, P4, P6, P8, PO4, PO6, PO8, O2, and CB2).

To determine whether there was a difference in the responses to happy (or sad) faces in the components of the ERP response (N170, N2, and Plate) across conditions, we performed a two-way Analysis of Variance (ANOVA) with adaptor and hemisphere as within-subject factors for the mean amplitudes of N170, N2, and Plate separately. For components that showed significant (P < 0.05) differences, we calculated a difference value between the ERP responses across conditions and then calculated the Pearson correlation value of the difference for each subject and the change in PSE from control to adaptation. For the unimodal adaptation condition, we calculated the correlation between the PSE shift (after the Happy Adaptor vs. after the Neutral Adaptor) and changes of ERP magnitude in response to the happy test faces (N170, N2, and Plate after the Happy Adaptor vs. after the Neutral Adaptor). For the cross-modal adaptation condition, we calculated the correlation between PSE shift (after the Laughter Adaptor vs. after the Tone Adaptor) and changes of ERP magnitude in response to the happy test faces (N170, N2, and Plate after the Laughter Adaptor vs. after the Tone Adaptor). For the correlation analysis, we measured the changes of ERP magnitude from the peak amplitude of the average of 9 electrodes in the left parieto-occipital region (P1, P3, P5, P7, PO3, PO5, PO7, O1, and CB1) and the right parieto-occipital region (P2, P4, P6, P8, PO4, PO6, PO8, O2, and CB2).
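The correlation step can be sketched as below. The per-subject values are invented for illustration and the variable names are hypothetical. The sketch only shows how a PSE shift and an ERP amplitude change would be paired per subject before computing Pearson's r.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical values for 5 subjects (not data from the study).
pse_control = [0.50, 0.52, 0.48, 0.51, 0.49]
pse_adapted = [0.55, 0.60, 0.60, 0.66, 0.69]
amp_control = [6.0, 5.5, 7.0, 6.2, 6.8]   # microvolts, response to happy test faces
amp_adapted = [5.6, 4.4, 6.1, 4.4, 4.6]

pse_shift = [a - c for a, c in zip(pse_adapted, pse_control)]
attenuation = [c - a for a, c in zip(amp_adapted, amp_control)]
r = pearson_r(pse_shift, attenuation)
print(round(r, 3))
```

A positive r means that subjects with larger neural attenuation also show a larger behavioral aftereffect.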

Results

Overall, as shown in Table 1, we found that the Happy Adaptor, Laughter Adaptor, and Happy and Laughter Adaptor biased the judgment of test faces toward sadness, thus producing a behavioral adaptation aftereffect in all 3 conditions. The Happy Adaptor and Laughter Adaptor suppressed the Plate ERP responses to subsequent happy faces but quickened them to sad faces. The behavioral aftereffects of the Happy Adaptor and Laughter Adaptor were correlated with the neural attenuation of the Plate response to subsequent happy faces. In addition, the Happy Adaptor suppressed the N2 ERP response to happy faces but enhanced that to sad faces.

Table 1.

Behavioral and ERP results compared with neutral adaptor (or tone adaptor for the auditory condition)

| | Happy adaptor | Happy + laughter adaptor | Laughter adaptor | Tone adaptor |
|---|---|---|---|---|
| Behavioral (PSE) | ***, Correlated with neural attenuation of Plate response (at CPz) to happy test faces | ***, N.A. | **, Correlated with neural attenuation of Plate response (at CPz) to happy test faces | N.S. |
| ERP response to happy test face | Suppressed N2 (parieto-occipital), suppressed Plate (central-parietal) | N.A. | Suppressed Plate (central-parietal) | |
| ERP response to sad test face | Enhanced N2 (parieto-occipital), quickened Plate (central-parietal) | N.A. | Quickened Plate (central-parietal) | |

**, ***, and N.S. indicate significance levels P < 0.01, P < 0.001, and no significance, respectively.

N.A., the comparisons are not critical to the experiment.

Behavioral Results

Adapting to the Happy Adaptor biased the subject's judgment of facial expression toward sadness in comparison to adapting to the Neutral Adaptor (Fig. 2a, a representative participant), reflecting the expected facial expression aftereffect (Webster et al. 2004; Xu et al. 2008). Adapting to the Laughter Adaptor also biased the subject's judgment of facial expression toward sadness (Fig. 2a). This shows, for the first time, evidence of auditory to visual cross-modal adaptation on facial emotions. Adapting to the Happy and Laughter adaptor biased the subject's judgment in a manner similar to the single modality cases (Fig. 2).

Figure 2b shows the mean PSEs relative to the baseline condition (Neutral Adaptor), that is, the magnitude of the adaptation aftereffect. A positive value indicated a rightward shift of the psychometric curve, or more sad judgments of the test faces, relative to the baseline. We performed a two-way ANOVA with emotion (neutral, happy) and modality (unimodal, cross-modal) as within-subject factors for the PSE value of conditions Neutral Adaptor, Happy Adaptor, Tone Adaptor, and Laughter Adaptor. We found main effects of both emotion (F1, 19 = 55.476, P < 0.001) and modality (F1, 19 = 24.504, P < 0.001), as well as an interaction between the 2 factors (F1, 19 = 33.100, P < 0.001). We performed a follow-up test for the emotion effect at each level of the factor modality using a one-way ANOVA. The results showed significant effects of emotion at both the unimodal (F1, 19 = 65.084, P < 0.001) and cross-modal (F1, 19 = 11.234, P = 0.003) levels, that is, the happy face (and laughter sound) adaptor generated a significant aftereffect, compared with the neutral face (and tone) adaptor. We also performed a follow-up test for the modality effect at each level of the factor emotion. The results showed a significant effect of modality for the happy emotion (F1, 19 = 41.301, P < 0.001), but not for the neutral emotion (F1, 19 = 0.050, P = 0.826), that is, there is no modality effect in the neutral conditions (neutral face and tone) but there is one (happy > laughter) in the happy/positive conditions (Fig. 2b). This is reasonable because neither the neutral face nor the tone carries an emotional signal, and therefore neither produces an adaptation aftereffect, regardless of modality. We also compared the adaptation aftereffect of condition Happy and Laughter Adaptor against that of the baseline condition Neutral Adaptor directly. The Happy and Laughter Adaptor generated a significant aftereffect (F1, 19 = 59.618, P < 0.001). This aftereffect was comparable to that generated by the Happy Adaptor (Fig. 2b).
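The structure of the 2 × 2 within-subject analysis can be made concrete with a short sketch. In a fully within-subject design with two levels per factor, each effect has 1 degree of freedom, and its F(1, n − 1) equals the squared one-sample t statistic of a per-subject contrast. The source does not say which software computed the ANOVA, so this is an illustration of the statistic, not the authors' procedure; the PSE values are invented.

```python
def contrast_F(scores):
    """F(1, n-1) for a 1-df within-subject effect: squared one-sample t of the contrast."""
    n = len(scores)
    mean = sum(scores) / n
    var = sum((s - mean) ** 2 for s in scores) / (n - 1)
    return n * mean ** 2 / var

def anova_2x2_within(neutral_face, happy_face, tone, laughter):
    """F values for emotion, modality, and their interaction (one PSE per subject and cell)."""
    rows = list(zip(neutral_face, happy_face, tone, laughter))
    emotion = [(h + l) / 2 - (nf + t) / 2 for nf, h, t, l in rows]
    modality = [(nf + h) / 2 - (t + l) / 2 for nf, h, t, l in rows]
    interaction = [(h - nf) - (l - t) for nf, h, t, l in rows]
    return {
        "emotion": contrast_F(emotion),
        "modality": contrast_F(modality),
        "interaction": contrast_F(interaction),
    }

# Hypothetical PSEs for 4 subjects (not data from the study).
result = anova_2x2_within(
    neutral_face=[0.50, 0.52, 0.48, 0.51],
    happy_face=[0.62, 0.60, 0.61, 0.66],
    tone=[0.50, 0.53, 0.47, 0.52],
    laughter=[0.55, 0.55, 0.52, 0.58],
)
print({name: round(f, 2) for name, f in result.items()})
```

With 20 subjects each F would carry (1, 19) degrees of freedom, as in the reported tests.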

ERP Results

Adapting to the Happy Adaptor Suppressed Brain Response to Subsequent Happy Test Faces and Enhanced Them to Sad Test Faces

Face images elicited a robust C1-P1-N170-N2-Plate ERP complex. For instance, in terms of the ERPs (average of electrodes P2, P4, P6, P8, PO4, PO6, PO8, O2, and CB2) to happy test faces after the Neutral Adaptor, the peak latencies for the ERP complex were 72 ms (C1), 124 ms (P1), 186 ms (N170), 280 ms (N2), and 430 ms (Plate), respectively. All ERP components showed a maximal parieto-occipital topographic distribution, except the Plate component, which showed a maximal central-parietal topographic distribution (Fig. 3b).

We first compared the ERP responses to the happy test faces after the Happy Adaptor to those after the Neutral Adaptor and found that exposure to the Happy Adaptor suppressed the N2 and Plate responses to subsequent happy test faces (Fig. 3a, upper panel). We performed a two-way ANOVA with adaptor (happy, neutral) and hemisphere (left, right) as within-subject factors for the N170, N2, and Plate components separately. The factor adaptor showed a main effect on the N2 and Plate, but not on the N170. For the N2 component, the results showed a significant main effect for adaptor (F1, 19 = 9.520, P = 0.006), indicating a suppression of the N2 response amplitude to happy faces after prior exposure to the Happy Adaptor. There was no significant main effect for hemisphere (F1, 19 = 3.830, P = 0.065) and no interaction between the 2 factors (F1, 19 = 2.027, P = 0.171). For the Plate component, there was a significant main effect for adaptor (F1, 19 = 23.604, P < 0.001), indicating a suppression of Plate response amplitude to happy faces after prior exposure to the Happy Adaptor. There was no significant main effect for hemisphere (F1, 19 = 0.920, P = 0.349) and no interaction between the 2 factors (F1, 19 = 0.201, P = 0.659).

We then compared the ERP responses to the sad test faces after the Happy Adaptor to those after the Neutral Adaptor and found that exposure to the Happy Adaptor enhanced the N2 and quickened the Plate responses to subsequent sad test faces (Fig. 3a, lower panel). For the N2 component, the results showed significant main effects for adaptor (F1, 19 = 7.282, P = 0.014) and hemisphere (F1, 19 = 8.233, P = 0.010) but did not show an interaction between the 2 factors (F1, 19 = 0.139, P = 0.713). A one-way ANOVA with adaptor (happy, neutral) as within-subject factor was performed at each level of the factor hemisphere. The results showed that the N2 amplitude was greater after the Happy Adaptor than after the Neutral Adaptor (Fig. 3a, lower panel), in both the left hemisphere (F1, 19 = 8.364, P = 0.009) and right hemisphere (F1, 19 = 5.550, P = 0.029), indicating an enhancement of N2 response amplitude to sad faces after prior exposure to the Happy Adaptor. The amplitude of Plate did not show any main effect for the factor adaptor or hemisphere, or any interaction between the 2 factors. However, the peak latency of Plate showed a significant main effect for adaptor (F1, 19 = 10.005, P = 0.005), indicating that prior exposure to the Happy Adaptor shortened the latency of Plate response to subsequent sad faces (e.g., 483.4 vs. 514.2 ms). There was no significant main effect for hemisphere (F1, 19 = 2.157, P = 0.158) and no interaction between the 2 factors (F1, 19 = 0.534, P = 0.474).

Adapting to the Laughter Adaptor Suppressed Brain Response to Subsequent Happy Test Faces and Shortened the Latency to Sad Test Faces

We first compared the ERP responses to the happy test faces after the Laughter Adaptor to those after the Tone Adaptor, using the same analysis method as that in the condition of face-to-face adaptation. The factor adaptor showed a main effect on the Plate, but not on the N170 or N2 component. For the Plate component, the results showed a significant main effect for adaptor (F1, 19 = 12.223, P = 0.002), indicating a suppression of the Plate response to happy faces after prior exposure to the Laughter Adaptor. There was no significant main effect for hemisphere (F1, 19 = 2.339, P = 0.143) and no interaction between the 2 factors (F1, 19 = 0.147, P = 0.706).

Adapting to the Laughter Adaptor decreased the latency of the Plate response to subsequent sad test faces (Fig. 4, lower panel). For the response amplitude of either the N2 or Plate, the results did not show any main effect for the factor adaptor or hemisphere, or any interaction between the 2 factors. However, the analysis of the peak latency of the Plate showed that the Laughter Adaptor decreased the latency of the Plate response to sad test faces: There was a significant main effect for adaptor (F1, 19 = 13.832, P = 0.001), indicating that prior exposure to a sound of laughter quickened the Plate response to subsequent sad faces (e.g., 468.3 vs. 491.9 ms). There was no significant main effect for hemisphere (F1, 19 = 0.011, P = 0.916) and no interaction between the 2 factors (F1, 19 = 3.949, P = 0.062).

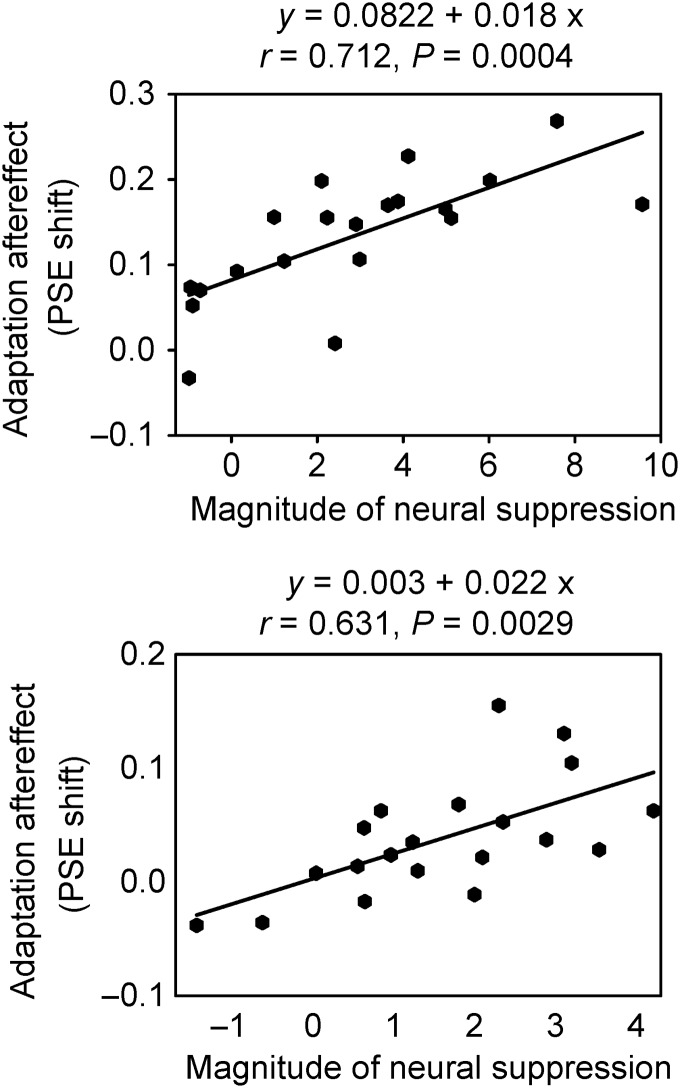

The behavioral effect of the adaptor correlated with its effect on the ERP. We first calculated the ERP magnitude as the average of a group of electrodes in the right parieto-occipital region. This was reasonable for the measurement of N170 and N2 components, which showed a rightward parieto-occipital topographic distribution (Fig. 3b). However, there was no significant correlation between the behavioral effect and any peak ERP magnitude change (suppression for the response to happy test faces and enhancement for that to sad test faces); all Ps > 0.1. Because the Plate component showed a central-parietal topographic distribution (Fig. 3b), we measured the effects at the CPz electrode. We found that for the Happy Adaptor condition, the behavioral aftereffect (PSE shift) was correlated with the neural attenuation of the Plate response to happy test faces (r = 0.712, P = 0.0004; Fig. 5, upper panel). The greater the neural attenuation of the Plate in response to subsequent happy test faces after prior exposure to the Happy Adaptor, the greater the behavioral aftereffect of the adaptor. For the Laughter Adaptor condition, the behavioral aftereffect was also correlated with the neural attenuation of the Plate response to happy test faces (r = 0.631, P = 0.0029; Fig. 5, lower panel). The greater the neural attenuation of the Plate in response to subsequent happy test faces after prior exposure to the Laughter Adaptor, the greater the behavioral aftereffect of the adaptor.
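As a rough plausibility check on the two correlations above, a Pearson r from n subjects can be converted to a t statistic with n − 2 degrees of freedom. The worked values below assume n = 20 for both correlations (the full sample; the text does not state whether any subject was excluded from this analysis).

```latex
\begin{align*}
t &= \frac{r\sqrt{n-2}}{\sqrt{1-r^{2}}}, \qquad n = 20 \\
r = 0.712:\quad t &= \frac{0.712\sqrt{18}}{\sqrt{1-0.712^{2}}} \approx \frac{3.021}{0.702} \approx 4.30 \\
r = 0.631:\quad t &= \frac{0.631\sqrt{18}}{\sqrt{1-0.631^{2}}} \approx \frac{2.677}{0.776} \approx 3.45
\end{align*}
```

Both values exceed the two-tailed critical value of about 2.88 for P < 0.01 with 18 degrees of freedom, in line with the significance reported for each correlation.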

Figure 5.

Scatterplot of the correlation between participants' magnitude of behavioral aftereffect of unimodal (adapted to a happy face, upper panel) and cross-modal (adapted to a laughter sound, lower panel) adaptation, and magnitude of neural suppression of the Plate response. The y-axis represents the PSE shift from control (Neutral Adaptor and Tone adaptor) and the x-axis represents the change in amplitude of Plate response at CPz electrode.

In a preliminary experiment, we collected data from another sample (N = 24) using the same experimental design and setup as in the main experiment, except that the block order was not randomized. Those results are reported in the Supplementary material (S2). The preliminary experiment showed the same central findings, and its results are fully consistent with the analyses and conclusions of the main experiment (Supplementary Fig. 2).

We also analyzed the PSE values using a one-way ANOVA with block order as the factor to investigate whether there was a carryover effect in the sequence of the 5 blocks (although the block order was randomized to minimize the influence of carryover effects). Each adaptor block (Neutral, Happy, Tone, Laughter, and Happy and Laughter) could be first, second, third, fourth, or fifth, and therefore we conducted the analysis for each adaptor with 5 levels. We did not find a block order effect for any adaptor: for the Neutral Adaptor (F4, 15 = 0.965, P = 0.455); for the Happy Adaptor (F4, 15 = 0.810, P = 0.538); for the Tone Adaptor (F4, 15 = 0.277, P = 0.888); for the Laughter Adaptor (F4, 15 = 0.603, P = 0.666); and for the Happy and Laughter Adaptor (F4, 15 = 0.822, P = 0.531). This indicates that the randomization procedure canceled out any possible carryover effects from consecutive blocks. Finally, we conducted a supplementary analysis to explore whether ERP responses to a test stimulus may habituate over the course of the testing sessions. We compared the ERP average from the first half of the trials with that from the second half of the trials for a single test face in each condition. These analyses did not reveal a significant change in amplitude or peak latency between the ERPs (Supplementary material, S1). This further suggested that the effect of habituation to a single test face in the current experimental paradigm was minimal.
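The block-order check is a between-subject one-way ANOVA: for a given adaptor, subjects are grouped by the serial position at which they received that block. The sketch below uses invented PSEs and assumes 4 subjects per position, which the text does not state; it only illustrates how the (4, 15) degrees of freedom arise from 20 subjects and 5 positions.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a between-subject one-way ANOVA."""
    values = [v for g in groups for v in g]
    grand_mean = sum(values) / len(values)
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within

# Hypothetical PSEs for one adaptor, grouped by block position (1st to 5th).
pse_by_position = [
    [0.61, 0.58, 0.64, 0.60],
    [0.59, 0.63, 0.62, 0.57],
    [0.60, 0.62, 0.58, 0.65],
    [0.63, 0.59, 0.61, 0.60],
    [0.58, 0.62, 0.64, 0.59],
]
f_value, df1, df2 = one_way_anova(pse_by_position)
print(round(f_value, 3), df1, df2)
```

A small F with these degrees of freedom, as in the reported tests, indicates that the PSE for an adaptor did not depend on when its block was run.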

Discussion

We conducted this experiment to investigate cross-modal adaptation aftereffect and its underlying neural mechanisms. For the first time, we provided evidence that in normal subjects, hearing laughter biased the subsequent judgment of facial expressions: a high-level cross-modal adaptation from audition to vision. The Laughter Adaptor and the Happy Adaptor generated significant behavioral aftereffects, although the magnitude of the behavioral aftereffect was smaller for the Laughter Adaptor. Although both the Happy Adaptor and the Laughter Adaptor had significant effects on the ERP responses, the temporal profiles of these aftereffects were different. Unimodal visual adaptation occurred earlier than cross-modal adaptation temporally. Finally, the behavioral adaptation aftereffects of the Happy and Laughter Adaptors were both correlated with the neural attenuation of the Plate brain response to subsequent happy test faces (Fig. 5), providing additional evidence for the neural correlates of adaptation in emotion.

Compared with the large number of behavioral studies on face adaptation, studies on the neural correlates of face adaptation have emerged only recently (Fox and Barton 2007; Kloth et al. 2010; Kovacs et al. 2013). The combination of the face adaptation paradigm and EEG recordings in the present study enabled us to correlate the behavioral and neural aftereffects. In terms of the unimodal (face-to-face) adaptation, we showed that adapting to a happy face suppressed the N2 and Plate ERP responses to the happy test faces (Fig. 3a, upper panel) but enhanced the N2 and quickened the Plate response to the sad test faces (Fig. 3a, lower panel). Interestingly, in cross-modal (sound-to-face) adaptation, the change in neural activity induced by adaptation occurred only at the later Plate stage (> 400 ms), not at the earlier N170 or N2 stage. Our results showed that adapting to a happy sound suppressed the Plate ERP response to the happy test faces (Fig. 4, upper panel) but decreased the latency of the response to the sad test faces (Fig. 4, lower panel). These distinct patterns indicate that the enhancement and suppression effects in adaptation involve selective neural mechanisms that show qualitatively different effects on the ERPs. Psychologically, adapting to a happy face leads to at least 3 consequences: normalization (the adapting face appears less extreme, and the norm of facial emotion shifts toward happy instead of remaining neutral); increased sensitivity (the sensitivity of facial expression judgment increases); and aftereffect (a subsequently presented neutral face appears sadder). Whether these distinctive ERP patterns (suppressed N2 and Plate responses to happy test faces, and enhanced N2 and quickened Plate responses to sad test faces, after adapting to a happy face) are related to normalization, increased sensitivity, and/or the aftereffect remains to be explored.

We found that the emotion of the adaptor did not significantly modulate the N170 response to the test stimuli: N170 amplitudes in response to a test face did not differ significantly when preceded by the happy face adaptor or by the neutral face adaptor (Fig. 3a), and when preceded by the laughter adaptor or by the tone adaptor (Fig. 4). However, the N170 amplitude preceded by the face adaptors was much lower than that preceded by the sound adaptors (Fig. 3a vs. 4). Therefore, it appears that this attenuation of the N170 reflects adaptation at the level of detecting generic facial configurations at a stage of early perceptual processing, rather than at a more advanced stage of encoding emotion-specific information. These results are in line with previous findings that the N170 is sensitive to the general category of a face rather than the identity (Amihai et al. 2011) or the gender of a face (Kloth et al. 2010). This indicates that the N170 amplitude difference (Fig. 3a vs. 4) might account for the general low-level adaptation effect based on stimulus category, that is, S1 (face)—S2 (face). In our data analysis, we compared ERPs to the same test face after adaptors from the same modality (e.g., happy face adaptor vs. neutral adaptor; laughter adaptor vs. tone adaptor), and therefore the low-level effect could be ruled out.

The happy face adaptor modulated the N2 response to subsequent test faces. This component, also referred to as N250r, is thought to be the first component reflecting individual face recognition (Schweinberger, Pickering, Jentzsch, et al. 2002) and is a face-selective brain response to stimulus repetitions (Schweinberger et al. 2004). We replicated this finding: the happy face adaptor suppressed the subsequent N2 response to happy faces (Fig. 3a). In addition, we found that the happy face adaptor enhanced subsequent N2 responses to sad faces, suggesting that the N2, a face-selective ERP component, is sensitive to high-level facial information, such as expressions.

Correlation analyses did not reveal any relationship between the strength of the aftereffect and the earlier ERP components such as the N170 and N2 in our subjects. They did, however, reveal a correlation between the strength of the aftereffect and the late positive ERP component (Plate) peaking around 400 ms. The Plate response showed a central-parietal topographic distribution (Fig. 3b). Its topography and latency are consistent with the properties of the P300 component. This leads us to suggest that the Plate effect might be equivalent to the P300 effect that has been reported in earlier adaptation studies (Kloth et al. 2010). In this study, there was an S1–S2 congruence if the adaptor and test faces were both happy faces, that is, S1 (happy face)—S2 (happy face). It is widely accepted that the amplitude of the P300 is inversely proportional to the a priori probability of task-relevant events. For instance, the P300 amplitude was diminished when an eliciting pure tone repeated the preceding tone and was enhanced when it was preceded by another tone (Duncan-Johnson and Donchin 1977). However, this low-level effect cannot explain the emotional adaptation effect found in the present study. Besides the effect of the S1-happy face on the N2 and Plate brain responses to the S2-happy face (same stimuli, attenuation in amplitude), we also found an effect of the S1-happy face on the N2 and Plate brain responses to the S2-sad face (enhanced amplitude for N2 and shortened latency for Plate), an effect of S1-laughter on the Plate brain response to the S2-happy face (attenuation in amplitude), and an effect of S1-laughter on the Plate brain response to the S2-sad face (shortened latency).

Having taken previous findings on the P300 into account, we suggest that the emotional status of the adaptor modulates the subject's mental evaluation of the emotional information of subsequent items. For example, the congruency of auditory sounds and visual letters modulates the P300 response, an index of visual attention, to the sounds by reducing its amplitude in the frontal and central but not parietal electrodes for repeatedly presented stimuli, and by reducing its latency for oddball stimuli (Andres et al. 2011). This may serve as an explanation for the suppression of Plate brain response to faces with a happy emotion after prolonged exposure to a happy face or a laughter sound. Interestingly, we also found that a happy face or a sound of laughter modulated the subsequent Plate response to sad faces by shortening its latency. This finding is consistent with previous findings, which stated that the P300 latency could be an effective tool to separate the mental chronometry of stimulus evaluation from the selection and execution of a response (Coles et al. 1995). More specifically, the P300 latency increases when targets are harder and decreases when targets are easier, to discriminate from standards (see Linden 2005 for a review).

In this study, we found face-to-face and laughter-to-face adaptation in the perception of facial emotion, that is, perception of S2 (test stimulus) was biased by previous exposure to S1 (adaptor). Similar paradigms have also been used in studies investigating the effects of priming, that is, perception of S2 is facilitated, rather than biased, by previous exposure to S1 (prime) (Ellis et al. 1987; Lang 1995; Johnston and Barry 2001). The degree to which adaptation and priming share the same brain mechanisms is still debated.

Factors determining whether an adaptation or a priming effect is observed in a study include the parameters of S1 and S2, as well as the task. First, in adaptation studies, the aftereffect is usually elicited by prolonged exposure to an adaptor, followed by a shorter test stimulus (Leopold et al. 2001; Xu et al. 2008). In contrast, paradigms investigating priming typically employ a shorter S1 but a relatively longer S2 presentation (Schweinberger, Pickering, Burton, et al. 2002; Henson 2003). Second, S1–S2 intervals are relatively shorter in adaptation paradigms but longer in priming paradigms. For instance, an adaptation aftereffect was found to turn into a priming effect when the S1–S2 interval was prolonged from 50 to 3100 ms, while other parameters were kept the same (Daelli et al. 2010). Third, the S2 stimuli in adaptation paradigms are always ambiguous, whereas those in priming paradigms have always been unambiguous (Walther et al. 2013). Fourth, tasks in adaptation studies usually involve matching a feature of the adapting stimulus with the test stimuli to measure the behavioral aftereffect. Typically, it is a two-alternative forced-choice task or a discrimination task, such as judging whether a face is happy or sad after adapting to a happy face. In contrast, tasks in typical priming studies have usually been based on features necessary for recognition (e.g., deciding if a face is familiar or not). Previous studies have shown that visual imagery facilitated subsequent face perception when the preceding imagined content matched the face image and interfered with perception when they mismatched (Wu et al. 2012). In this study, we found that the presentation of laughter biased the judgment of subsequent neutral faces toward sadness (an auditory-to-visual adaptation aftereffect) rather than facilitating the identification of happiness (a priming effect). It is therefore less likely that the laughter sound elicited the subject's mental imagery of a corresponding smiling face, which would have facilitated subsequent face perception, as reported in previous visual imagery studies (Ishai and Sagi 1995; Moulton and Kosslyn 2009). We suggest that we found an adaptation rather than a priming effect in this study because of the long S1 (adaptor with 4 s duration), the short S1–S2 interval (500 ms), the short S2 (test face with 200 ms duration), and the two-alternative forced-choice judgment task that is typically employed in adaptation studies.

In face adaptation, it has been shown that different properties of a face can be adapted, such as emotion, identity, or ethnicity (Webster et al. 2004). We previously reported that adapting to a sad face biased the facial expression judgment of subsequently presented faces toward happiness, and adapting to a happy face biased the facial expression judgment of subsequently presented faces toward sadness (Xu et al. 2008). Therefore, if we adapt to a fearful face, we would expect an emotional aftereffect in the opposite direction, as long as the adaptation paradigm is built around a judgment between 2 distinct facial emotions (e.g., fear vs. anger). As the arousal rating of a face might also affect face perception, future studies on emotional adaptation could also test for a possible arousal effect of the adaptors. Previous studies on face adaptation demonstrated contrastive adaptation aftereffects in face perception, that is, contrastive aftereffects between the happy and fear adaptors (Hsu and Young 2004). These effects were attributed to emotional adaptation rather than to arousal, because both the happy and fear adaptors had high arousal ratings. If arousal were driving the effect, the happy and fear adaptors would be expected to produce similar, rather than contrastive, effects.

In summary, we investigated cross-modal facial emotion adaptation and found that the sound of laughter biased the subsequent judgment of facial expressions. The test faces were presented 4.5 s after the onset of the sound adaptor (that is, 0.5 s after the adaptor's offset), rather than simultaneously with it. We propose, therefore, that the emotion information in the sound adaptor is initially analyzed in the unimodal cortex (e.g., the auditory cortex). This information must then be stored by some neuronal populations, so that it can influence the subsequent perception of inputs from other sensory modalities (e.g., the visual modality), thus leading to cross-modal adaptation. This cross-modal adaptation might arise through direct neural interactions between the auditory and visual cortices. Alternatively, this interaction could be facilitated by the amygdala. The amygdala is connected to both the visual and the auditory cortex and could, therefore, relay information to the visual cortex about the emotional valence of sounds analyzed by the auditory cortex (see Stevenson et al. 2014 for a review).

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Authors' Contributions

H.X., X.W., and M.E.G. designed the research. X.W. prepared the experiment. X.W. and X.G. collected the data. X.W. analyzed the data. H.X., X.W., L.C., Y.L., and M.E.G. wrote the paper. All authors contributed to and approved the final manuscript.

Funding

This work was supported by the Fundamental Research Funds for the Central Universities (SWU114103, SWU1509449) from Southwest University and the College of Humanities, Arts, and Social Sciences Postdoctoral Fellowship from Nanyang Technological University (X.W.); the Zegar, Keck, Kavli, and Dana Foundations and the National Eye Institute (P30EY019007, R24EY015634, R21EY017938, R01EY014978, R01EY017039) (M.E.G.); and the Singapore Ministry of Education Academic Research Fund Tier 1 (RG42/11, RG168/14) and the Nanyang Technological University College of Humanities, Arts, and Social Sciences Incentive Scheme (H.X.).

Notes

Conflict of Interest: None declared.

References

- Amihai I, Deouell LY, Bentin S. 2011. Neural adaptation is related to face repetition irrespective of identity: a reappraisal of the N170 effect. Exp Brain Res. 209:193–204.

- Andres AJ, Oram Cardy JE, Joanisse MF. 2011. Congruency of auditory sounds and visual letters modulates mismatch negativity and P300 event-related potentials. Int J Psychophysiol. 79:137–146.

- Bachy R, Zaidi Q. 2014. Factors governing the speed of color adaptation in foveal versus peripheral vision. J Opt Soc Am A Opt Image Sci Vis. 31:A220–A225.

- Bestelmeyer PE, Rouger J, DeBruine LM, Belin P. 2010. Auditory adaptation in vocal affect perception. Cognition. 117:217–223.

- Chen C, Chen X, Gao M, Yang Q, Yan H. 2015. Contextual influence on the tilt after-effect in foveal and para-foveal vision. Neurosci Bull. 31:307–316.

- Cohen MA, Horowitz TS, Wolfe JM. 2009. Auditory recognition memory is inferior to visual recognition memory. Proc Natl Acad Sci USA. 106:6008–6010.

- Coles MG, Smid HG, Scheffers MK, Otten LJ. 1995. Mental chronometry and the study of human information processing. In: Rugg MD, Coles MGH, editors. Electrophysiology of Mind Event-Related Brain Potentials and Cognition. Oxford, UK: Oxford University Press; pp. 87–131.

- Daelli V, van Rijsbergen NJ, Treves A. 2010. How recent experience affects the perception of ambiguous objects. Brain Res. 1322:81–91.

- Dalferth M. 1989. How and what do autistic children see? Emotional, perceptive and social peculiarities reflected in more recent examinations of the visual perception and the process of observation in autistic children. Acta Paedopsychiatr. 52:121–133.

- Duncan-Johnson CC, Donchin E. 1977. On quantifying surprise: the variation of event-related potentials with subjective probability. Psychophysiology. 14:456–467.

- Ellis AW, Young AW, Flude BM, Hay DC. 1987. Repetition priming of face recognition. Q J Exp Psychol. 39:193–210.

- Fox CJ, Barton JJ. 2007. What is adapted in face adaptation? The neural representations of expression in the human visual system. Brain Res. 1127:80–89.

- Froyen D, Atteveldt N, Blomert L. 2010. Exploring the role of low level visual processing in letter–speech sound integration: a visual MMN study. Front Integr Neurosci. 4:9.

- Goldberg ME, Wurtz RH. 1972. Activity of superior colliculus in behaving monkey. II. Effect of attention on neuronal responses. J Neurophysiol. 35:560–574.

- Gu F, Zhang C, Hu A, Zhao G. 2013. Left hemisphere lateralization for lexical and acoustic pitch processing in Cantonese speakers as revealed by mismatch negativity. Neuroimage. 83:637–645.

- Henson RN. 2003. Neuroimaging studies of priming. Prog Neurobiol. 70:53–81.

- Hsu SM, Young A. 2004. Adaptation effects in facial expression recognition. Visual Cognition. 11:871–899.

- Ishai A, Sagi D. 1995. Common mechanisms of visual imagery and perception. Science. 268:1772–1774.

- Johnston RA, Barry C. 2001. Best face forward: similarity effects in repetition priming of face recognition. Q J Exp Psychol. 54:383–396.

- Kloth N, Schweinberger SR, Kovacs G. 2010. Neural correlates of generic versus gender-specific face adaptation. J Cogn Neurosci. 22:2345–2356.

- Kovacs G, Zimmer M, Volberg G, Lavric I, Rossion B. 2013. Electrophysiological correlates of visual adaptation and sensory competition. Neuropsychologia. 51:1488–1496.

- Lang PJ. 1995. The emotion probe. Studies of motivation and attention. Am Psychol. 50:372–385.

- Leopold DA, O'Toole AJ, Vetter T, Blanz V. 2001. Prototype-referenced shape encoding revealed by high-level aftereffects. Nat Neurosci. 4:89–94.

- Linden DE. 2005. The p300: where in the brain is it produced and what does it tell us? Neuroscientist. 11:563–576.

- Lundqvist D, Flykt A, Öhman A. 1998. The Karolinska Directed Emotional Faces - KDEF, CD ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet, ISBN 91-630-7164-9.

- Moulton ST, Kosslyn SM. 2009. Imagining predictions: mental imagery as mental emulation. Philos Trans R Soc Lond B Biol Sci. 364:1273–1280.

- Oldfield RC. 1971. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 9:97–113.

- Perez-Gonzalez D, Malmierca MS. 2014. Adaptation in the auditory system: an overview. Front Integr Neurosci. 8:19.

- Schmid C, Buchel C, Rose M. 2011. The neural basis of visual dominance in the context of audio-visual object processing. NeuroImage. 55:304–311.

- Schweinberger SR, Casper C, Hauthal N, Kaufmann JM, Kawahara H, Kloth N, Robertson DM, Simpson AP, Zaske R. 2008. Auditory adaptation in voice perception. Curr Biol. 18:684–688.

- Schweinberger SR, Huddy V, Burton AM. 2004. N250r: a face-selective brain response to stimulus repetitions. Neuroreport. 15:1501–1505.

- Schweinberger SR, Pickering EC, Burton AM, Kaufmann JM. 2002. Human brain potential correlates of repetition priming in face and name recognition. Neuropsychologia. 40:2057–2073.

- Schweinberger SR, Pickering EC, Jentzsch I, Burton AM, Kaufmann JM. 2002. Event-related brain potential evidence for a response of inferior temporal cortex to familiar face repetitions. Brain Res Cogn Brain Res. 14:398–409.

- Semlitsch HV, Anderer P, Schuster P, Presslich O. 1986. A solution for reliable and valid reduction of ocular artifacts, applied to the P300 ERP. Psychophysiology. 23:695–703.

- Skuk VG, Schweinberger SR. 2013. Adaptation aftereffects in vocal emotion perception elicited by expressive faces and voices. PloS one. 8:e81691.

- Stevenson RA, Ghose D, Fister JK, Sarko DK, Altieri NA, Nidiffer AR, Kurela LR, Siemann JK, James TW, Wallace MT. 2014. Identifying and quantifying multisensory integration: a tutorial review. Brain Topogr. 27(6):707–730.

- Verhoef BE, Kayaert G, Franko E, Vangeneugden J, Vogels R. 2008. Stimulus similarity-contingent neural adaptation can be time and cortical area dependent. J Neurosci. 28:10631–10640.

- Walther C, Schweinberger SR, Kaiser D, Kovacs G. 2013. Neural correlates of priming and adaptation in familiar face perception. Cortex. 49:1963–1977.

- Webster MA, Kaping D, Mizokami Y, Duhamel P. 2004. Adaptation to natural facial categories. Nature. 428:557–561.

- Webster MA, MacLeod DI. 2011. Visual adaptation and face perception. Philos Trans R Soc Lond B Biol Sci. 366:1702–1725.

- Wu J, Duan H, Tian X, Wang P, Zhang K. 2012. The effects of visual imagery on face identification: an ERP study. Front Hum Neurosci. 6:305.

- Xu H, Dayan P, Lipkin RM, Qian N. 2008. Adaptation across the cortical hierarchy: low-level curve adaptation affects high-level facial-expression judgments. J Neurosci. 28:3374–3383.

- Yi DJ, Kelley TA, Marois R, Chun MM. 2006. Attentional modulation of repetition attenuation is anatomically dissociable for scenes and faces. Brain Res. 1080:53–62.

- Zaske R, Schweinberger SR, Kawahara H. 2010. Voice aftereffects of adaptation to speaker identity. Hearing Res. 268:38–45.
