Abstract

Background

High-resolution and high-throughput genotype-to-phenotype studies in plants are underway to accelerate the breeding of climate-ready crops. In recent years, deep learning techniques, in particular Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs), have shown great success in visual data recognition, classification, and sequence learning tasks. More recently, CNNs have been used for plant classification and phenotyping, using individual static images of the plants. On the other hand, the dynamic behavior of plants, as well as their growth, has been an important phenotype for plant biologists. This motivated us to study the potential of LSTMs in encoding this temporal information for the accession classification task, which is useful in the automation of plant production and care.

Methods

In this paper, we propose a CNN-LSTM framework for classifying plants of various genotypes. Here, we exploit the power of deep CNNs for automatic joint feature and classifier learning, instead of using hand-crafted features. In addition, we leverage the potential of LSTMs to study the growth of the plants and their dynamic behaviors as important discriminative phenotypes for accession classification. Moreover, we collected a dataset of time-series image sequences of four accessions of Arabidopsis, captured in similar imaging conditions, which could be used as a standard benchmark by researchers in the field. We have made this dataset publicly available.

Conclusion

The results provide evidence of the benefits of our accession classification approach over traditional hand-crafted image analysis features and other accession classification frameworks. We also demonstrate that utilizing temporal information through LSTMs further improves the performance of the system. The proposed framework can be used in other applications, such as classifying plants according to their growth environment conditions or distinguishing diseased plants from healthy ones.

Keywords: Deep learning, Temporal information, Deep features, Accession classification

Introduction

Plant productivity must increase dramatically this century, while using resources more efficiently, to accommodate the ever-growing demand from a more affluent and growing human population. Precision breeding, via selecting advantageous genomic variants, will help improve plant productivity and efficiency, but it relies on a detailed understanding of the genotype-to-phenotype relationship [1]. Here, a framework for automatic feature (phenotype) extraction and classification during the plant growth period can greatly facilitate these studies. We have developed climate chambers, which maintain diurnal and seasonal climate signals but remove the weather noise plaguing field studies. These chambers have automated image capture capability to constantly monitor plants throughout their entire life cycle [2].

Arabidopsis thaliana is one of the model organisms used for studying plant biology, and genome sequences are now available for thousands of its accessions [3]. Since the growth patterns of this plant are easily observable (especially from a top view), it is a very useful model for automated phenotyping. Previous work on phenotyping different accessions (genotypes) has mostly used biologist-specified, ‘hand-crafted’ image features such as number of leaves, leaf area, compactness, roundness, etc. [4–8]. These features are computed either manually or via custom image processing algorithms, and their output may then be passed to a classifier. The main weakness of hand-crafted descriptors is that, although they are readily interpretable, they may miss or incorrectly measure the actual features that vary among accessions. Furthermore, the custom image processing methods used to extract the hand-crafted features may not work as well on other experiments and may be difficult to generalize to more heterogeneous datasets [9].

Problems with hand-crafted features have been addressed in the past few years by harnessing the power of deep learning, and of Convolutional Neural Networks (CNNs) in particular [10–14], although difficulties with interpreting the machine-learned traits and with over-fitting to a particular experiment remain. CNNs automatically find and extract the most descriptive features from the data during the training process. In other words, feature extraction and training are performed simultaneously, so the system searches for the features that minimize the loss criterion of the phenotyping problem. As a result, novel features for accession recognition are revealed in this process. However, for a machine to learn a good set of features, a very large training dataset is required.

CNNs are well suited to the classification and segmentation of images, but they are unable to properly model dynamic systems, such as the time-lapse video in our case. Although CNNs cannot encode the temporal dependency of successive image frames, this problem can be addressed by using a Recurrent Neural Network (RNN), in which each image frame is processed and analyzed by a neural cell and the information of each cell is passed on to the succeeding cells. RNNs, and in particular Long Short-Term Memories (LSTMs, which are explained in detail in the "LSTM" section), have demonstrated potential in computer vision for the analysis of dynamic systems [15–19]. In this study, we utilize LSTMs to model the growth patterns of plants.

In this work, we investigate the capability of CNN features for describing the visual characteristics (phenotypes) of different accessions (genotypes), and compare these deep features with the hand-crafted descriptors that were primarily used in previous works. In particular, we present a plant analysis framework that automatically extracts and utilizes the most descriptive features for each application, removing the need for manual feature selection and tuning for different tasks and experiments. More importantly, we propose to use LSTMs to automatically take into account the growth and temporal behavior of plants in their classification. Incorporating temporal information into the analysis reveals how the phenotypes that distinguish different accessions change over the days of plant growth. This framework can also be used to classify plants with different genotypes or plants grown in different environment conditions (e.g. soil, temperature, humidity and light), or to detect plant diseases. Furthermore, plant detection and classification using robotics and automation for improved plant production and care is another potential application.

In addition, we release a new, challenging dataset that contains time-lapse recordings of top-view images of Arabidopsis accessions, to evaluate the method proposed in this paper on the accession classification task. Note that different accessions in this dataset are substantially similar in appearance, so much so that even biologists find them very hard to distinguish. Nonetheless, by using deep features and encoding temporal information, our model outperformed traditional methods based on hand-crafted image features and other accession classification frameworks. A primary future extension of this work is to study new accessions and the association of their behavior and appearance with parental reference accessions. This could greatly help us find relationships between phenotypes and genotypes, as briefly described in the "Conclusion" section.

Background

Research has focused on automatic plant phenotyping and classification using high-throughput systems. Classification of growth phenotypes based on data from known planted genotypes represents a typical experimental design, where the aim is to obtain measures that maximize the signal between genotypes relative to environmental error within biological replicates of the same genotype. Advanced image processing using machine learning techniques has become very popular for phenotyping qualitative states [20–24], while there are still many prospective needs and goals [25–29] to be experimentally explored in plants. A number of recent studies have presented high-throughput systems for plant phenotyping [2, 30–33] as well as for plant/leaf segmentation and feature extraction [34–37].

Plant classification has attracted researchers from the computer vision community [38–41], given its importance in agriculture and ecological conservation. Several studies classify plants based on images of individual plant leaves [42–45]. Approaches to recognize plant disease [46, 47], symptoms of environmental stress [31, 48], and differentiation of crops from weeds [49, 50] have also been studied. These studies normally involve three primary steps: plant/leaf segmentation, feature extraction, and classification. The performance of the whole phenotyping pipeline depends on the performance of, and interaction among, these three elements.

In the past few years, deep learning methods, and in particular Convolutional Neural Networks, have achieved state-of-the-art results in various classification problems, motivating scientists to use them for plant classification [51–57] and plant disease detection [58, 59] as well. CNNs are able to learn highly discriminative features during the training process and classify plants without any need for segmentation or hand-crafted feature extraction. In particular, [54] used a CNN for root and shoot feature identification and localization. The authors in [52] proposed the Deep Plant framework, which employs CNNs to learn feature representations for 44 different plant species from their leaves. However, all the above-mentioned studies in plant phenotyping, feature extraction and classification are based on individual static images of plants of different species. In other words, temporal information such as growth patterns, one of the key distinguishing factors between varieties within a plant species, has not previously been taken into account. Temporal cues can be very helpful, especially for distinguishing between plants with similar appearances, e.g. for separating different accessions of a particular plant, which is often a very challenging task.

In order to account for temporal information, various probabilistic and computational models (e.g. Hidden Markov Models (HMMs) [60–62], rank pooling [63–65], Conditional Random Fields (CRFs) [66–68] and RNNs [69–72]) have been used for a number of applications involving sequence learning and processing.

RNNs (and LSTMs in particular) are able to grasp and learn long-range and complex dynamics, and have recently become very popular for the task of activity recognition. For example, the authors in [73, 74] used a CNN and an LSTM for generating image descriptions and for multi-label image classification, respectively. More specifically, [15–19] used LSTMs in conjunction with CNNs for action and activity recognition and showed improved performance over previous studies on video data. In this paper, we treat the growth and development of plants as an action recognition problem, and use a CNN for extracting discriminative features and an LSTM for encoding the growth behavior of the plants.

Preliminary

In this section, we explain the fundamentals of deep structures used in this paper, including CNN, RNN and LSTM.

CNN

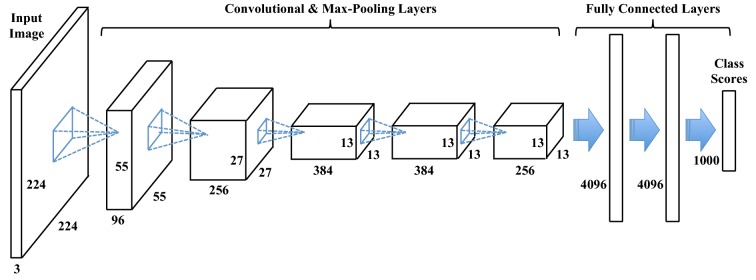

Figure 1 depicts the schematic of a Convolutional Neural Network (Alexnet [75]). Each layer in this network consists of a set of parameters, which are in general trainable, either from scratch or by benefiting from pre-trained networks (refer to the "CNN training" section for further explanation). The output of each layer may pass through a non-linear activation such as the sigmoid or ReLU function [75]. The CNN takes a three-dimensional tensor as its input, passes it through multiple sets of layers and then outputs scores that represent the semantic class label of the input data. For instance, in a simple cat vs. dog classification task, the input could be the image of a kitten and the correct output would be a high score for the cat class.

Fig. 1.

The schematic of Alexnet. A CNN often consists of convolutional layers, max-pooling layers and fully connected layers. The output of each convolutional layer is a block of 2D images (a.k.a. feature maps), which are computed by convolving previous feature maps with a small filter. The filter parameters are learned during the training process. The last few layers of CNN are densely connected to each other, and the class scores are obtained from the final layer

In our application, we feed the CNN with top-view images (with three color channels) from plants. Next we introduce the main layers of a CNN.

Convolutional layer

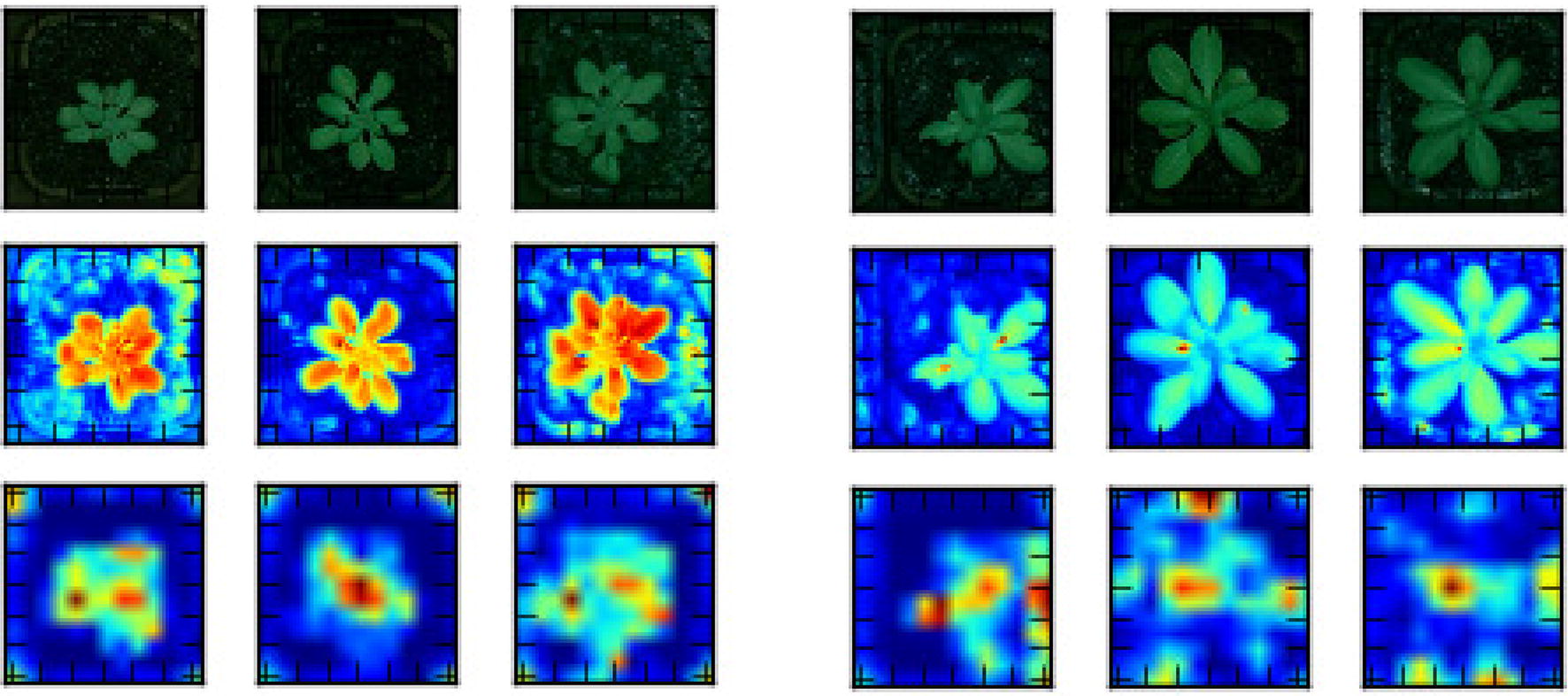

This layer is computed by applying multiple filters to the input image, i.e. sliding each filter window over the entire input image. Different filters can have different parameters, which lets them detect and learn different image features. For example, one filter could be in charge of spotting vertical edges, while another could detect horizontal edges [76]. The output of this layer is called a feature map, examples of which are depicted in Fig. 2; they show class activation maps that identify important image regions.
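To make the sliding-window computation concrete, here is a minimal pure-Python sketch of a single-channel convolution with stride 1 and no padding. The two 2×2 filters are hand-set for illustration (a CNN learns its filter values during training), and the function name `conv2d_valid` is hypothetical. Like most deep learning libraries, it actually computes a cross-correlation, i.e. the filter is not flipped.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Sum of element-wise products between the filter and the window under it
            s = 0
            for i in range(kh):
                for j in range(kw):
                    s += image[r + i][c + j] * kernel[i][j]
            row.append(s)
        out.append(row)
    return out

# Toy 4x4 grayscale image: dark left half, bright right half (a vertical edge)
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-set filters for illustration; a trained CNN would learn such values
vertical_edge = [[-1, 1], [-1, 1]]
horizontal_edge = [[-1, -1], [1, 1]]

vmap = conv2d_valid(image, vertical_edge)
hmap = conv2d_valid(image, horizontal_edge)
print(vmap)  # strong response only in the column where the edge lies
print(hmap)  # all zeros: the image has no horizontal edge
```

Each filter produces its own feature map, so a layer with many filters yields a stack of maps, one per detected pattern.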

Fig. 2.

Feature maps. The average feature maps of the first (row 2) and last (row 3) convolutional layers for three Col-0 (left) and also three Ler-1 (right); localized class activation maps are visible

Filters are normally designed to be small (e.g. 3×3, 5×5, 7×7, ...) to reduce the number of parameters in the system. As a result, regardless of the size of the input image, the parameter size remains limited. Moreover, multiple back-to-back small filters in successive layers can cover a larger receptive field, and consequently more context information can be encoded. This is in contrast to the design of a fully connected neural network, where every unit in the previous layer is connected to every unit in the next layer with unique parameters, which leads to a sizable parameter set.
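The parameter savings and the growth of the receptive field described above can be checked with a little arithmetic. The sketch below assumes stride-1 convolutions, one bias per filter or unit, and a hypothetical 224×224 RGB input; the helper names are illustrative only.

```python
def conv_params(k, c_in, c_out):
    # Each of the c_out filters has k*k*c_in weights plus one bias
    return (k * k * c_in + 1) * c_out

def dense_params(n_in, n_out):
    # Every input is connected to every output unit, plus one bias per unit
    return (n_in + 1) * n_out

def stacked_receptive_field(k, n_layers):
    # With stride-1 convolutions, each extra k x k layer widens the field by k - 1
    return 1 + n_layers * (k - 1)

# Hypothetical sizes: a 224x224 RGB image mapped to 64 channels / 64 units
print(conv_params(3, 3, 64))             # 1792, independent of the image size
print(dense_params(224 * 224 * 3, 64))   # 9633856 for a dense layer with 64 outputs
print(stacked_receptive_field(3, 2))     # 5: two 3x3 layers see a 5x5 window
print(stacked_receptive_field(3, 3))     # 7: three 3x3 layers see a 7x7 window
```

The convolutional layer needs 1,792 parameters whatever the image size, whereas a dense layer producing only 64 outputs from the same image already needs almost ten million.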

Max pooling layer

Each feature map obtained from the convolutional layer is an indicator of a particular feature at different locations of the input image. We normally want our descriptors to be robust against minor displacements of the input data. This is addressed by adding a max pooling layer to the network, which downsamples the feature maps. In other words, it reduces small patches of the feature map to single pixels: if a feature is detected anywhere within a patch, the downsampled patch fires a detection of that feature (local invariance).
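A minimal sketch of 2×2 max pooling with stride 2, written in plain Python for illustration (the function name is hypothetical). The two toy feature maps contain the same detection shifted by one pixel, and both pool to the same output, which is the local invariance described above.

```python
def max_pool2d(fmap, size=2, stride=2):
    """Downsample a 2D feature map by keeping the maximum of each size x size patch."""
    out = []
    for r in range(0, len(fmap) - size + 1, stride):
        row = []
        for c in range(0, len(fmap[0]) - size + 1, stride):
            # The patch "fires" if the feature was detected anywhere inside it
            row.append(max(fmap[r + i][c + j] for i in range(size) for j in range(size)))
        out.append(row)
    return out

# The same feature response (5) at two positions that differ by one pixel
a = [[0, 0, 0, 0],
     [0, 5, 0, 0],
     [0, 0, 0, 0],
     [0, 0, 0, 0]]
b = [[5, 0, 0, 0],
     [0, 0, 0, 0],
     [0, 0, 0, 0],
     [0, 0, 0, 0]]
print(max_pool2d(a))  # [[5, 0], [0, 0]]
print(max_pool2d(b))  # [[5, 0], [0, 0]]: the small shift is absorbed
```

Note that the 4×4 maps shrink to 2×2, a fourfold reduction in the values passed on to later layers.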

A more practical benefit of the pooling layer is that reducing the size of the feature maps leads to a significant decrease in the number of parameters (in the subsequent fully connected layers) and in computation, which in turn helps control overfitting and speeds up the training process. Another advantage of pooling is that it helps the network detect more meaningful, high-level features in the deeper layers. In such a structure, the first layer detects low-level features such as edges, the next layer can capture more sophisticated descriptors such as leaves or petioles, and the layers after that learn high-level features that are able to describe the whole plant.

Fully connected layer

After a sequence of multiple convolution and pooling layers, the size of the input data has shrunk dramatically, which makes it suitable as input to a fully connected (dense) layer. The resulting feature maps up to this point of the network are vectorized and fed into a multi-layer fully connected neural network, whose last layer (a.k.a. classification layer or softmax layer) outputs the scores of the class labels in our problem.

The last fully connected layer is in charge of computing the scores for each class label. Each neuron in this layer represents a category in the classification problem, and the class probabilities are computed by applying a softmax function to the outputs of these neurons, which turns them into positive values that sum to one.
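A small sketch of the softmax step, assuming four hypothetical raw scores, one per accession. Subtracting the maximum score before exponentiating is a standard numerical-stability trick that leaves the resulting probabilities unchanged.

```python
import math

def softmax(scores):
    # Shift by the max for numerical stability; the result is mathematically identical
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores from the last layer, one per accession
labels = ["Sf-2", "Cvi", "Ler-1", "Col-0"]
scores = [2.0, 1.0, 0.1, 3.0]
probs = softmax(scores)
print([round(p, 3) for p in probs])
print(labels[probs.index(max(probs))])  # Col-0
```

Because softmax is monotonic, the predicted class is always the one with the highest raw score; the normalization only matters for interpreting the outputs as probabilities and for the training loss.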

CNN structure

The structure of a CNN (number of different layers, size of the filters, size of the fully connected layers, etc.) may vary depending on the application and the size of the training data. During the past few years, several architectures have been proposed and shown to work quite well for image classification and segmentation problems, among which Alexnet [75], VggNet [77] and ResNet [78] are the most notable ones.

Figure 1 shows the schematic of Alexnet, which has five convolutional layers, three of which are followed by max pooling layers, and three fully connected layers. This is the network that first drew researchers' attention to the potential of CNNs, by winning the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) by a large margin [79] over models based on hand-crafted features.

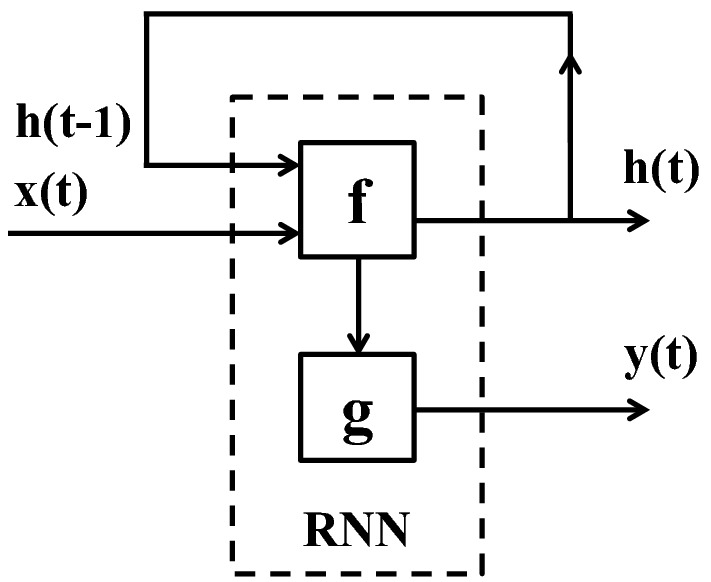

RNN

Figure 3 illustrates a simple RNN [80] that models temporal data with three time points. In this representation, each time step is portrayed by a block of neurons, which receives two inputs: the observed frame at that time, and the temporal cues propagated from previous time points. A fully connected neural network is embedded within each RNN cell to analyze the visual information of each frame together with the information received from previous times, to obtain the system state at each time frame. Let $x_t$, $h_t$ and $y_t$ denote the visual input data, the output of the RNN cell and the class label of the sequential data, respectively, at time $t$. Then the RNN can be expressed as

$$h_t = f\left(W_x x_t + W_h h_{t-1} + b\right) \tag{1}$$

$$y_t = g\left(W_y h_t\right) \tag{2}$$

where $W_x$, $W_h$ and $W_y$ are the neural network parameters, $b$ is a bias vector, and $f$ and $g$ are element-wise non-linear functions, which are often set to the hyperbolic tangent ($\tanh$) and sigmoid ($\sigma$), respectively.
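The following pure-Python sketch runs a simple RNN over three time points, assuming the common form $h_t = \tanh(W_x x_t + W_h h_{t-1} + b)$ and $y_t = \sigma(W_y h_t)$ with $h_0 = 0$. The weights are arbitrary toy values chosen for illustration, not trained parameters, and the helper names are hypothetical.

```python
import math

def matvec(W, v):
    # Matrix-vector product for lists of lists
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def rnn_forward(xs, W_x, W_h, W_y, b):
    """Apply the recurrence to a sequence xs, starting from a zero hidden state."""
    h = [0.0] * len(W_h)
    ys = []
    for x in xs:
        pre = [a + c + d for a, c, d in zip(matvec(W_x, x), matvec(W_h, h), b)]
        h = [math.tanh(p) for p in pre]                  # state update, f = tanh
        ys.append([sigmoid(z) for z in matvec(W_y, h)])  # output, g = sigmoid
    return h, ys

# Toy sizes: 2-dim inputs, 2 hidden units, 1 output, three time points (as in Fig. 3)
W_x = [[0.5, -0.3], [0.2, 0.4]]
W_h = [[0.1, 0.0], [0.0, 0.1]]
W_y = [[1.0, -1.0]]
b = [0.0, 0.0]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
h_T, ys = rnn_forward(xs, W_x, W_h, W_y, b)
print(len(ys), ys[-1])
```

The same weights are reused at every time step, so the number of parameters does not grow with the sequence length.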

Fig. 3.

The structure of an RNN. The system at each time point is updated based on the current input data and the status of the system at the previous time point. Here, f and g are element-wise non-linear functions, which are often set to the hyperbolic tangent ($\tanh$) and sigmoid ($\sigma$), respectively

What makes this structure more interesting is that an RNN can readily be integrated with a CNN, by using the pre-trained CNN features of the image frame at each time point as the visual input of the corresponding RNN cell.

LSTM

The main shortcoming of standard RNNs (Fig. 3) is that they cannot encode temporal dependencies that extend beyond a limited number of time steps [81]. To address this problem, a more sophisticated RNN cell named Long Short-Term Memory (LSTM) has been proposed to preserve useful temporal information for an extended period of time.

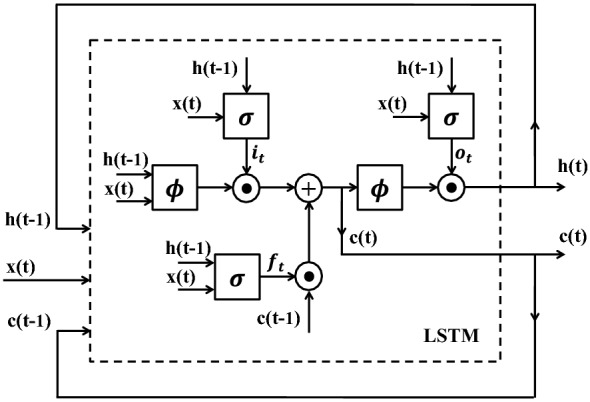

An LSTM [82], as depicted in Fig. 4, is equipped with a memory cell and a number of gates. The gates control when a new piece of information should be written to the memory and how much of the memory content should be erased. Similar to a standard RNN, the state of the system at each time point is computed by analyzing the visual input at that time point, together with the output of the previous cell and the content of the LSTM memory, which is referred to as $c_t$. Given $x_t$, $h_{t-1}$ and $c_{t-1}$, the LSTM updates are defined as

$$i_t = \sigma\left(W_{xi} x_t + W_{hi} h_{t-1} + b_i\right) \tag{3}$$

$$f_t = \sigma\left(W_{xf} x_t + W_{hf} h_{t-1} + b_f\right) \tag{4}$$

$$o_t = \sigma\left(W_{xo} x_t + W_{ho} h_{t-1} + b_o\right) \tag{5}$$

$$c_t = f_t \odot c_{t-1} + i_t \odot \tanh\left(W_{xc} x_t + W_{hc} h_{t-1} + b_c\right) \tag{6}$$

$$h_t = o_t \odot \tanh\left(c_t\right) \tag{7}$$

In these equations, $i_t$, $f_t$ and $o_t$ denote the input gate, forget gate and output gate, respectively. The input gate controls how much of the new input data should be recorded into the memory, whereas the forget gate decides how much of the old memory should be preserved at each time step. The output of the LSTM cell is computed by applying the output gate to the ($\tanh$-squashed) memory content. This structure enables the LSTM to perceive and learn long-term temporal dependencies. Note that $\odot$ in Eqs. 6 and 7 denotes element-wise multiplication.
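As a sketch under stated assumptions, the function below implements one LSTM update in plain Python, assuming the standard gate equations: sigmoid input, forget and output gates, a $\tanh$ candidate $\tilde{c}_t$, $c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$ and $h_t = o_t \odot \tanh(c_t)$. The toy example uses hand-set biases (hypothetical values, not learned ones) to show the forget gate at work: with the forget gate close to 1 and the input gate close to 0, the memory content survives three steps of unrelated input.

```python
import math

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, P):
    """One LSTM update. P maps each gate name ("i", "f", "o", "c") to (W_x, W_h, b)."""
    def affine(gate):
        W_x, W_h, b = P[gate]
        return [a + c + d for a, c, d in zip(matvec(W_x, x), matvec(W_h, h_prev), b)]
    i = [sigmoid(z) for z in affine("i")]    # input gate
    f = [sigmoid(z) for z in affine("f")]    # forget gate
    o = [sigmoid(z) for z in affine("o")]    # output gate
    g = [math.tanh(z) for z in affine("c")]  # candidate memory content
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]  # element-wise
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

# 1-dim toy cell with zero weights: only the (hand-set) biases drive the gates
zero = [[0.0]]
keep = {"i": (zero, zero, [-10.0]),  # input gate ~0: ignore new input
        "f": (zero, zero, [10.0]),   # forget gate ~1: keep old memory
        "o": (zero, zero, [10.0]),
        "c": (zero, zero, [0.0])}
h, c = [0.0], [0.8]
for x in ([1.0], [-2.0], [3.0]):
    h, c = lstm_step(x, h, c, keep)
print(c)  # stays close to 0.8: the memory is preserved over three steps
```

Flipping the forget-gate bias to a large negative value would instead erase the memory in a single step; during training, the network learns such gate settings from data rather than having them set by hand.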

Fig. 4.

The structure of an LSTM. The system at each time point is updated based on the current input data, the status of the system at the previous time point, and the content of the memory. Here, $\tanh$ and $\sigma$ are the hyperbolic tangent and sigmoid functions, respectively, and $\odot$ stands for element-wise multiplication. $i_t$, $f_t$, $o_t$ and $c_t$ denote the input gate, forget gate, output gate and memory cell, respectively

After seeing a sufficient number of data sequences in the training phase, the LSTM learns when to update the memory with new information and when to erase it, fully or partially. LSTMs can model various kinds of sequential data with a single trainable model, unlike more complicated multi-step pipelines. Furthermore, they can be fine-tuned similarly to CNNs. These benefits have made LSTMs very popular in recent years for modelling data sequences. In this paper, we propose a CNN-LSTM structure (Fig. 5) to build a plant classification system, which is explained in more detail in the "CNN-LSTM network" section.

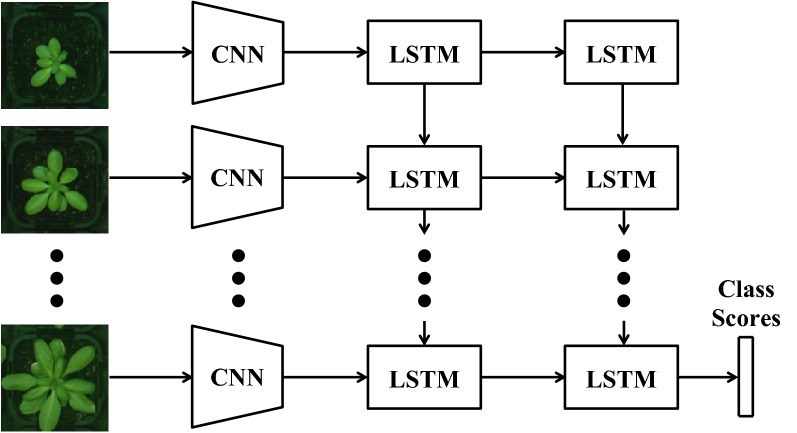

Fig. 5.

The CNN-LSTM structure. The CNNs extract deep features of the plant images, and then the growth pattern of the plant is modeled using LSTMs. Finally, the genotype with the highest class score is selected

Methods

We propose an automatic accession classification framework that uses the deep visual features of the plants (trained specifically for the accession categories) as well as the temporal cues of the plant growth sequences. In this section, we introduce the CNN-LSTM model and then explain how to train it.

CNN-LSTM network

In this section, we describe the proposed framework for genotype classification, which is composed of a deep visual descriptor (a CNN) and an LSTM that can recognize and synthesize temporal dynamics in an image sequence as well as texture changes. As depicted in Fig. 5, our approach first passes each individual frame of the plant image sequence through the deep visual descriptor (CNN) to produce a fixed-length vector representation. This fixed-length vector embodies the features of each individual plant, which are extracted after the fine-tuning step (as explained in the "CNN training" section). In this work, we have used Alexnet as our CNN.¹ The outputs of the CNN for the sequence of pot images are then passed to a sequence learning module (LSTM). At this stage, the LSTM attempts to classify the plants by analyzing the sequences of features extracted from the image frames and by taking into account their temporal variations. Although the deep features and their variations provide no explicit quantitative measurement of some important phenotypes, such as the number of leaves or growth rates, this information is implicitly encoded by the network over time to better distinguish different accessions. In other words, the proposed CNN-LSTM structure captures the activity of the plants during their growth period to model the relationships between their phenotypes and genotypes.

The proposed model can automatically classify plants into the desired categories, given only the plant images. Note that our approach can easily be extended to cases where more classes are involved, simply by repeating the training phase for the new set of classes. Extending the model to applications other than plant classification is just as easy: one can simply modify the target layer of the network to fit that particular problem. This contrasts with conventional phenotyping methods, where one is required to find relevant hand-crafted features for each individual application.

CNN training

The goal of training is to find values of the network parameters such that the predicted class labels for the input data are as close as possible to their ground truth class labels. This, however, is a very challenging task, since CNNs normally have a vast number of parameters to be learned. Alexnet, for instance, has more than 60 million parameters. Training a system with this many parameters requires a massive number of training images as well.
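The "more than 60 million" figure can be reproduced by counting weights and biases layer by layer. This sketch assumes the common single-stream Alexnet configuration (227×227 RGB input, so the last convolutional block outputs 6×6×256); the original two-GPU network splits some convolutions into groups and therefore has slightly fewer parameters. The helper names are illustrative.

```python
def conv(k, c_in, c_out):
    return (k * k * c_in + 1) * c_out   # weights + one bias per filter

def fc(n_in, n_out):
    return (n_in + 1) * n_out           # weights + one bias per unit

# Single-stream Alexnet layer sizes (assumed, see lead-in)
layers = {
    "conv1": conv(11, 3, 96),
    "conv2": conv(5, 96, 256),
    "conv3": conv(3, 256, 384),
    "conv4": conv(3, 384, 384),
    "conv5": conv(3, 384, 256),
    "fc6": fc(6 * 6 * 256, 4096),
    "fc7": fc(4096, 4096),
    "fc8": fc(4096, 1000),              # 1000 ImageNet classes
}
total = sum(layers.values())
print(total)  # 62378344
fc_share = 100 * (layers["fc6"] + layers["fc7"] + layers["fc8"]) / total
print(round(fc_share, 1))  # 94.0 (% of all parameters sit in fc6-fc8)
```

The count also shows why the fully connected layers dominate the model size: the five convolutional layers together hold fewer than 4 million parameters.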

There are a few publicly available datasets that provide a sufficient number of images for training CNN architectures, among which ImageNet-ILSVRC is very popular. It is a subset of the much larger ImageNet dataset and has about 1.2 million images from 1000 different categories. However, in many problems we do not have access to such a large dataset, which prevents us from properly training a CNN from scratch.

It has been shown that if we initialize the network using the parameters of a pre-trained CNN (a CNN that has already been trained on a big dataset like ImageNet), and then train it using the limited dataset in our problem, we can achieve very good performance. In particular, we can rely on the basic features that the CNN has learned in the first few layers of the network on ImageNet, and re-train the parameters in the last few layers (normally the fully connected layers) so that the network fits our specific problem. This method is often referred to as fine-tuning; it speeds up the training process and also prevents overfitting of the network to a relatively small dataset.

Note that in many image classification problems, it is very common to preserve all the layers and parameters of a pre-trained CNN, and only replace the last layer, which represents the 1000 class labels of ImageNet, with the class labels of the specific problem. Then only the parameters of the classification layer are learned in the training phase, and the rest of the network parameters are kept fixed at their pre-trained values. Here, we assume that the deep features previously learned on the ImageNet dataset describe our specific dataset quite well, which is often a reasonable assumption. The outputs of the layer before the classification layer of a CNN are sometimes referred to as pre-trained CNN features.
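To illustrate training only the classification layer, the sketch below keeps a stand-in "pre-trained" feature map fixed and learns just a linear softmax layer by stochastic gradient descent on the cross-entropy loss. The feature function, toy data and learning rate are hypothetical; in practice the frozen part would be the pre-trained CNN layers and the features would be much higher-dimensional.

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def frozen_features(x):
    # Stand-in for the pre-trained layers: a fixed map that is never updated
    return [x[0], x[1], 1.0]  # the constant 1.0 acts as a bias input

# Hypothetical toy data: (raw input, class index) for three classes
data = [([2.0, 0.0], 0), ([1.8, 0.2], 0),
        ([0.0, 2.0], 1), ([0.2, 1.8], 1),
        ([-2.0, -2.0], 2), ([-1.8, -2.2], 2)]

n_classes, n_feat = 3, 3
W = [[0.0] * n_feat for _ in range(n_classes)]  # the only trainable parameters
lr = 0.1
for epoch in range(200):
    for x, y in data:
        phi = frozen_features(x)
        p = softmax([sum(w * f for w, f in zip(row, phi)) for row in W])
        # Cross-entropy gradient w.r.t. W[k] is (p_k - [k == y]) * phi
        for k in range(n_classes):
            err = p[k] - (1.0 if k == y else 0.0)
            for j in range(n_feat):
                W[k][j] -= lr * err * phi[j]

def predict(x):
    phi = frozen_features(x)
    scores = [sum(w * f for w, f in zip(row, phi)) for row in W]
    return scores.index(max(scores))

print([predict(x) for x, _ in data])  # [0, 0, 1, 1, 2, 2]
```

Since the feature extractor is fixed, this step reduces to training a linear classifier, which is cheap and needs far fewer examples than training the whole network.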

In this work, we chose to fine-tune a pre-trained CNN using the top-view images of the plants, in order to learn more discriminant features for distinguishing different accessions.

Data augmentation

When a dataset has too few images to properly train the CNN, the network becomes vulnerable to overfitting. To synthetically increase the size of the training data, we can use a simple and common technique called data augmentation. In this procedure, we rotate each image in the dataset by 90°, 180° and 270° around its center and add the rotated copies to the dataset.
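Assuming the rotation angles are 90°, 180° and 270°, the augmentation can be sketched as follows. Images are represented as nested lists of pixels (each pixel may be any value, such as an RGB tuple), and the function names are hypothetical.

```python
def rotate90(img):
    # Rotate an image 90 degrees clockwise about its center
    return [list(row) for row in zip(*img[::-1])]

def augment_with_rotations(images):
    """Return each original image followed by copies rotated by 90, 180 and 270 degrees."""
    out = []
    for img in images:
        r90 = rotate90(img)
        r180 = rotate90(r90)
        r270 = rotate90(r180)
        out.extend([img, r90, r180, r270])
    return out

pot = [[1, 2],
       [3, 4]]
aug = augment_with_rotations([pot])
print(len(aug))  # 4: the dataset becomes four times larger
print(aug[1])    # [[3, 1], [4, 2]]
```

Rotations by multiples of 90° only permute pixels, so no interpolation artifacts are introduced; top-view plant images have no preferred orientation, which makes such rotations plausible label-preserving transformations.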

Deep feature extraction

Our goal is to classify plants into different genotypes (accessions), as depicted in Fig. 6. First, we need to train a CNN on our plant dataset to learn the deep features that are fed to the LSTM cells. We use Alexnet, pre-trained on ImageNet, to provide us with very descriptive features. Note that we choose Alexnet over deeper networks such as VggNet or ResNet because it has fewer parameters to learn, which better suits our limited dataset. We then replace the last layer of Alexnet with a layer of L neurons to adapt the network to our application, where L represents the number of classes, i.e., accessions.

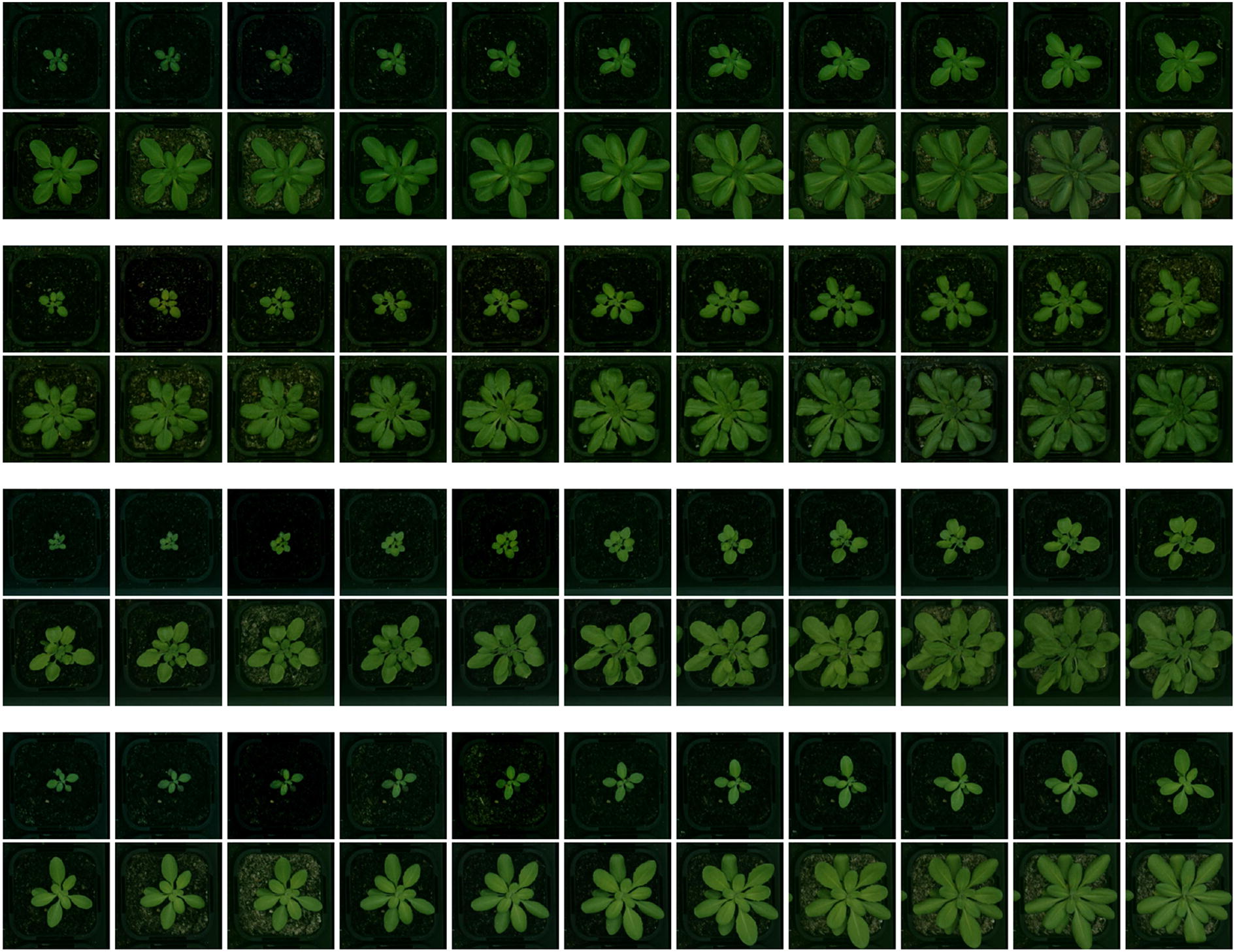

Fig. 6.

Samples of sequence data from various accessions. Examples of sequence data including 22 successive top-view images of 4 different categories of Arabidopsis thaliana. Successive images are recorded at 12:00 pm each day. From top to bottom, accessions are: Sf-2, Cvi, Landsberg (Ler-1), and Columbia (Col-0)

Our dataset is composed of sequences of images captured from the plants on different days as they grow. We initially break down the image sequences of the plants into individual images to build a CNN training dataset, and then use data augmentation to extend the size of this dataset, as explained in the "Data augmentation" section. However, since plants change considerably in size during their growth, the decomposed images from the plant sequences are not sufficiently consistent to form a proper training dataset for a genotype. This makes CNN training very difficult, if not impossible, particularly in our case where the total size of the training set is very limited.

We account for this intra-class variability by splitting each genotype class into a set of sub-classes of that genotype, one per range of plant area. The area is computed by segmenting the image and counting the total number of pixels that belong to the plant; the plant segmentation process is explained in the "Phenotyping using hand-crafted features" section. Another factor that could have been used to break down each genotype into smaller and more consistent categories is the day on which the plant is observed and its image is captured. However, this factor, which partly encodes the growth rate of the plant, does not depend purely on the genotype and is heavily affected by environment conditions, such as germination occurring on different days. Note that even though the experiments are conducted inside growth chambers where environment conditions are controlled, the plants still show variability.

Given the area as a proper class divider, each genotype category is split into five sub-classes based on the plant areas, which means the CNN training is performed on 4 × 5 = 20 classes. Once the CNN is trained, for each plant image we can use the output of the last fully connected layer before the classification layer as the deep features of the plant, and feed them into the corresponding time point of the LSTM in our CNN-LSTM structure.
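The split into area-based sub-classes can be sketched as below. The pixel-count thresholds are hypothetical (the text does not give the cut points), the mask is assumed to be a binary segmentation with 1 for plant pixels, and the sub-class labels are simply numbered accession by accession.

```python
def plant_area(mask):
    # Area = number of pixels labelled as plant (1) in a binary segmentation mask
    return sum(sum(row) for row in mask)

def area_subclass(accession_idx, area, thresholds):
    """Map (accession, area) to a sub-class label.

    thresholds: hypothetical increasing cut points; 4 cut points give 5 area bins.
    """
    bin_idx = sum(area >= t for t in thresholds)  # how many cut points are exceeded
    return accession_idx * (len(thresholds) + 1) + bin_idx

accessions = ["Sf-2", "Cvi", "Ler-1", "Col-0"]
thresholds = [500, 2000, 5000, 10000]  # hypothetical pixel-count cut points
n_cnn_classes = len(accessions) * (len(thresholds) + 1)
print(n_cnn_classes)  # 20

mask = [[0, 1, 1],
        [0, 1, 0],
        [0, 0, 0]]
print(plant_area(mask))                     # 3
print(area_subclass(3, 2500, thresholds))  # Col-0, third area bin -> 17
```

Since the sub-classes only serve to train more consistent CNN features, the accession can always be recovered from a sub-class label by integer division by the number of bins.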

LSTM training

In order to train the LSTM, we feed it with sequences of deep features that are computed by applying the approach in "Deep feature extraction" section to the training image sequences. The system is then optimized to predict the true class label of the plants based on the information of the entire sequence. Note that we deepen the sequence learning module by adding another layer of LSTM to the structure (Fig. 5). This enhances the ability of the proposed system to learn more sophisticated sequence patterns and in turn, improves the classification accuracy.
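The forward pass of the two-layer sequence module can be sketched in plain Python as follows. This is an illustration under several assumptions: toy sizes (8-dimensional stand-in features and 4 hidden units instead of Alexnet features and 256 units), random untrained weights, and a prediction read from the last time step through a dense softmax layer. All names are hypothetical, and training (backpropagation through time) is not shown.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_lstm(n_in, n_hid, rng):
    """Small random (W_x, W_h, b) for each gate: i, f, o and candidate c."""
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    return {g: (mat(n_hid, n_in), mat(n_hid, n_hid), [0.0] * n_hid) for g in "ifoc"}

def affine(P, g, x, h):
    # W_x x + W_h h + b for gate g
    W_x, W_h, b = P[g]
    return [sum(w * v for w, v in zip(W_x[k], x)) +
            sum(w * v for w, v in zip(W_h[k], h)) + b[k] for k in range(len(b))]

def lstm_layer(xs, P):
    """Run one LSTM layer over a sequence; return the hidden state at every step."""
    n_hid = len(P["i"][2])
    h, c, outputs = [0.0] * n_hid, [0.0] * n_hid, []
    for x in xs:
        i = [sigmoid(z) for z in affine(P, "i", x, h)]
        f = [sigmoid(z) for z in affine(P, "f", x, h)]
        o = [sigmoid(z) for z in affine(P, "o", x, h)]
        cand = [math.tanh(z) for z in affine(P, "c", x, h)]
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, cand)]
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        outputs.append(h)
    return outputs

def classify(seq, P1, P2, W_out, b_out):
    """Two stacked LSTM layers, then a dense softmax layer on the last time step."""
    top = lstm_layer(lstm_layer(seq, P1), P2)  # layer 2 reads layer 1's outputs
    last = top[-1]
    scores = [sum(w * v for w, v in zip(row, last)) + bk for row, bk in zip(W_out, b_out)]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

rng = random.Random(0)
n_feat, n_hid, n_classes, n_days = 8, 4, 4, 22  # toy sizes; 22 daily images per plant
P1 = init_lstm(n_feat, n_hid, rng)
P2 = init_lstm(n_hid, n_hid, rng)
W_out = [[rng.uniform(-0.1, 0.1) for _ in range(n_hid)] for _ in range(n_classes)]
b_out = [0.0] * n_classes
seq = [[rng.random() for _ in range(n_feat)] for _ in range(n_days)]  # stand-in deep features
probs = classify(seq, P1, P2, W_out, b_out)
print(len(probs), round(sum(probs), 6))  # 4 1.0
```

Stacking works because the first layer emits one hidden vector per day, which forms a new sequence for the second layer to model at a higher level of abstraction.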

Experiments and results

In this section, we first introduce the dataset and then explain the pre-processing and plant segmentation steps. Next, we report the accession classification results using the proposed CNN-LSTM method. To evaluate this method more thoroughly, we extract a set of hand-crafted features and compare their performance in the accession classification task with that of our CNN-LSTM framework, which uses deep features. Furthermore, we report the results of a variant of our approach in which the LSTM is replaced by a CRF, to provide a more thorough temporal analysis of the proposed model. To the best of our knowledge, our dataset is the first publicly available dataset that provides successive daily images of plants as they grow, together with their accession class information. Therefore, we did not have access to other temporal data to further evaluate our model. We hope this dataset will help other researchers in the field study the temporal variations of different accessions in more depth.

Our dataset

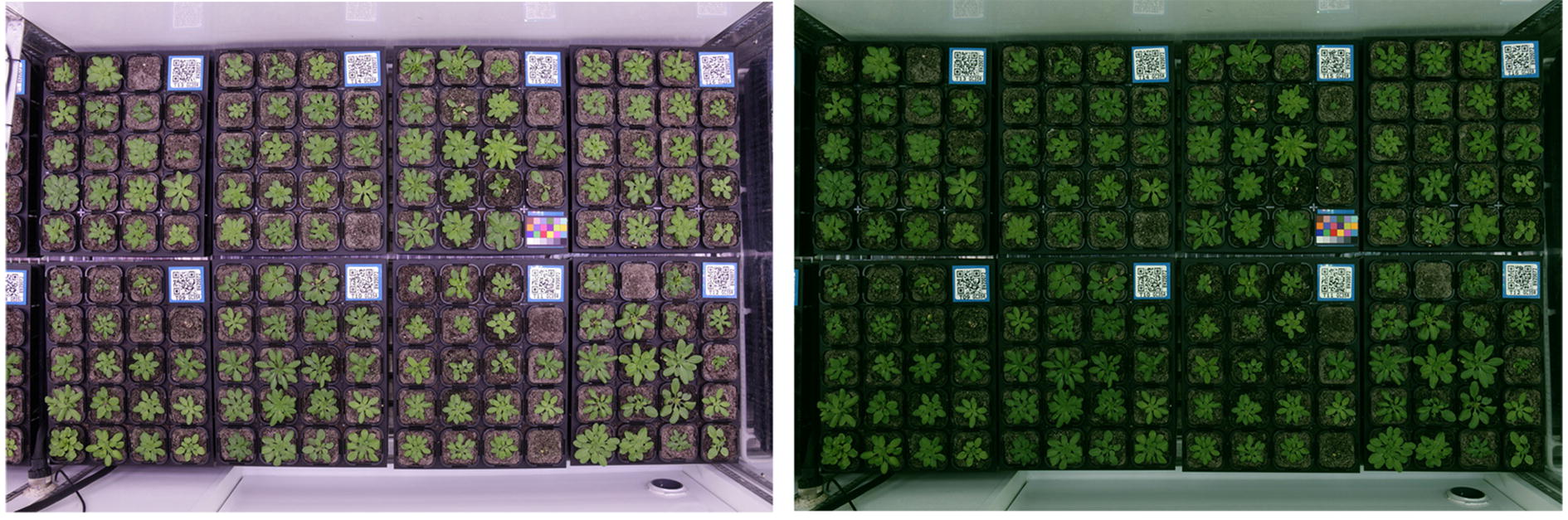

We present a plant dataset comprising successive top-view images of four accessions of Arabidopsis thaliana, namely Sf-2, Cvi, Landsberg (Ler-1) and Columbia (Col-0), as depicted in Fig. 6. An example growth chamber used in our experiments is depicted in Fig. 7; it contains a color card for color correction, and each tray in the chamber is accompanied by a QR code. Every pot is constantly monitored via a Canon EOS 650D, which is installed above the chamber.

Fig. 7.

Growth chamber. Left: the original picture of a growth chamber; right: the result of camera distortion removal and color correction step

In this work, we use the pot images recorded at 12:00 pm each day to build the data sequence of each plant. We do not include more than one image per day: additional images would make the sequences longer and the classification process more computationally expensive, without adding significant temporal information. The resulting sequence for each plant consists of 22 successive top-view images.
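Selecting one frame per day can be sketched as below. The `(timestamp, image_id)` input format and the rule of keeping the capture closest to 12:00 when no frame was taken exactly at noon are assumptions for illustration; the dates and image IDs are hypothetical.

```python
from datetime import datetime

def daily_noon_sequence(captures):
    """Keep one capture per day (the one closest to 12:00), ordered by date.

    captures: iterable of (datetime, image_id) pairs (hypothetical format).
    """
    best = {}
    for ts, img in captures:
        noon = ts.replace(hour=12, minute=0, second=0, microsecond=0)
        gap = abs((ts - noon).total_seconds())
        day = ts.date()
        if day not in best or gap < best[day][0]:
            best[day] = (gap, img)
    return [best[d][1] for d in sorted(best)]

captures = [
    (datetime(2016, 5, 1, 8, 0), "d1_0800"),
    (datetime(2016, 5, 1, 12, 0), "d1_1200"),
    (datetime(2016, 5, 2, 11, 50), "d2_1150"),
    (datetime(2016, 5, 2, 16, 0), "d2_1600"),
]
print(daily_noon_sequence(captures))  # ['d1_1200', 'd2_1150']
```

Sampling at a fixed time of day also keeps the diurnal lighting and leaf position roughly constant across the sequence.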

A number of pre-processing steps are applied to the captured images before the classification task. The first step is camera distortion removal, which flattens the image so that all pots appear the same size. The images then undergo color correction using the color cards included in the chambers; this step transforms the plant colors so that they appear as close as possible to their real colors (Fig. 7). Finally, we use a temporal matching approach to detect the trays and the individual pots inside them, in order to extract the image of each pot and, in turn, generate the image sequence of each growing plant.
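The paper does not state how the color card is used. A common choice, shown here only as a sketch under that assumption, is to fit a 3×3 color-correction matrix by least squares so that the measured mean RGB values of the card patches map onto their reference values, and then apply the matrix to every pixel. `fit_color_matrix`, `apply_color_matrix` and the synthetic patch data are hypothetical; distortion removal and tray/pot detection are not shown.

```python
import numpy as np


def fit_color_matrix(measured, reference):
    """Least-squares 3x3 matrix M such that measured @ M approximates reference.

    measured, reference: (n_patches, 3) arrays of mean RGB values per card patch.
    """
    M, *_ = np.linalg.lstsq(measured, reference, rcond=None)
    return M


def apply_color_matrix(image, M):
    """Apply M to every pixel of an (H, W, 3) float image and clip to [0, 1]."""
    corrected = image.reshape(-1, 3) @ M
    return np.clip(corrected, 0.0, 1.0).reshape(image.shape)


# Synthetic check: a camera color cast applied to 24 reference patches.
rng = np.random.default_rng(0)
reference = rng.uniform(0.05, 0.95, size=(24, 3))  # hypothetical "true" patch colors
cast = np.array([[0.9, 0.05, 0.0],
                 [0.1, 0.8, 0.05],
                 [0.0, 0.1, 1.1]])
measured = reference @ cast                          # what the camera records
M = fit_color_matrix(measured, reference)
print(np.round(M @ cast, 6))                         # recovers the inverse of the cast
```

A purely linear map has no offset term; appending a constant column to `measured` would give an affine variant if the camera's black level also needs correcting.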

Another public dataset, the Ara-2013 dataset [83], contains 165 single images of 5 accessions of Arabidopsis and has been used for accession classification with a CNN [57]. Unlike in our dataset, the images in Ara-2013 were not captured under similar conditions: images of different accessions vary significantly in size, quality and background. These calibration inconsistencies can provide the CNN with cues that are irrelevant to the phenotypes. We addressed this issue in our dataset by capturing all plant images under similar imaging conditions. Our dataset also enables researchers to study the growth of the plants and their dynamic behaviors. Ara-2013 does include eight temporal stacks of plant images, but these are prepared only for segmentation and tracking tasks and come without accession class information, which makes them unsuitable for our problem. Hence, we apply our CNN model only to the single plant images of Ara-2013 and report the corresponding results.

CNN-LSTM

We implemented our deep structure using Theano [84] and Keras [85]. We trained the parameters of the CNN using Stochastic Gradient Descent (SGD) in mini-batches of size 32, with a fixed learning rate of 0.001, a momentum of 0.9 and a weight decay of 1e-6. Similarly, we trained the LSTM with SGD in mini-batches of size 32, with a fixed learning rate of 0.01, a momentum of 0.9 and a weight decay of 0.005. The LSTM has 256 hidden neurons. Table 2 shows the results of our CNN-LSTM structure for accession classification, compared with the case where only the CNN is used for classification and temporal information is ignored. Adding the LSTM to our structure led to a significant accuracy boost (from 76.8% to 93.0%), which demonstrates the impact of temporal cues on accession classification. Table 2 also reports comparisons with other baselines, which are explained in more detail in the following sections.
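To make the temporal part concrete, the NumPy sketch below runs an LSTM with 256 hidden units over one CNN feature vector per day (22 days) and feeds its final hidden state to a 4-way softmax over the accessions, together with an SGD-with-momentum update using the LSTM hyperparameters stated above. The feature size of 4096 (as for an AlexNet fully connected layer), the initialization, and classifying from the final hidden state are assumptions, not details from the authors' Theano/Keras code. "Weight decay" is interpreted here as an L2 term added to the gradient; this too is an assumption, since optimizers of that era (e.g. the `decay` argument of Keras's `SGD`) used the word for learning-rate decay.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def init_params(feat_dim, hidden=256, n_classes=4, seed=0):
    """Small random weights; gates stacked as input, forget, cell, output."""
    rng = np.random.default_rng(seed)
    return {
        "W": rng.normal(0.0, 0.01, (feat_dim, 4 * hidden)),   # input-to-gates
        "U": rng.normal(0.0, 0.01, (hidden, 4 * hidden)),     # hidden-to-gates
        "b": np.zeros(4 * hidden),
        "W_out": rng.normal(0.0, 0.01, (hidden, n_classes)),  # final state -> classes
        "b_out": np.zeros(n_classes),
    }


def lstm_classify(features, p):
    """features: (T, feat_dim), one CNN feature vector per day. Returns class probabilities."""
    n = p["U"].shape[0]
    h = np.zeros(n)
    c = np.zeros(n)
    for x_t in features:
        z = x_t @ p["W"] + h @ p["U"] + p["b"]
        i = sigmoid(z[:n])             # input gate
        f = sigmoid(z[n:2 * n])        # forget gate
        g = np.tanh(z[2 * n:3 * n])    # candidate cell update
        o = sigmoid(z[3 * n:])         # output gate
        c = f * c + i * g              # memory cell carries information across days
        h = o * np.tanh(c)
    logits = h @ p["W_out"] + p["b_out"]
    e = np.exp(logits - logits.max())  # numerically stable softmax
    return e / e.sum()


def sgd_momentum_step(param, grad, velocity, lr=0.01, momentum=0.9, weight_decay=0.005):
    """One SGD-with-momentum update; weight decay treated as an L2 term on the gradient."""
    velocity = momentum * velocity - lr * (grad + weight_decay * param)
    return param + velocity, velocity


params = init_params(feat_dim=4096)
days = np.random.default_rng(1).normal(size=(22, 4096))  # stand-in for 22 daily CNN features
probs = lstm_classify(days, params)
print(probs.round(3))  # untrained weights, so roughly uniform over the 4 accessions
```

For the CNN, the same update would be used with `lr=0.001` and `weight_decay=1e-6`; gradients would come from backpropagation through time, which is omitted here.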

Table 2.

The performance of our deep phenotyping system (CNN + LSTM) compared with baseline methods: hand-crafted features with an SVM classifier, hand-crafted features with an LSTM to model temporal information, a CNN without temporal information, and a CNN with a CRF in place of the LSTM

| Method | Sf-2 | Cvi | Ler-1 | Col-0 | Avg. |
|---|---|---|---|---|---|
| Hand-crafted features using SVM | 58.4 | 83.9 | 65.1 | 35.7 | 60.8 |
| Hand-crafted features + LSTM | 61.3 | 90.2 | 68.2 | 52.4 | 68.0 |
| CNN | 71.0 | 86.0 | 74.4 | 76.0 | 76.8 |
| CNN + CRF | 84.3 | *96.1* | 90.4 | 89.4 | 87.6 |
| CNN + LSTM | *89.6* | 93.8 | *94.2* | *94.2* | *93.0* |

Results are reported in percent (%)

The best performing method for each category is italicized

We also applied our baseline CNN-only model to the Ara-2013 dataset. Using a cross-validation protocol similar to that of [57], we achieved 96% classification accuracy, which is on par with the result reported by Ubbens and Stavness [57].

Phenotyping using hand-crafted features

We conduct an experiment in which hand-crafted features extracted from the plant images are fed to the LSTM instead of the deep CNN features. This allows us to evaluate the contribution of the deep features in our framework. To extract the hand-crafted features, the following plant segmentation method is used.

Plant segmentation

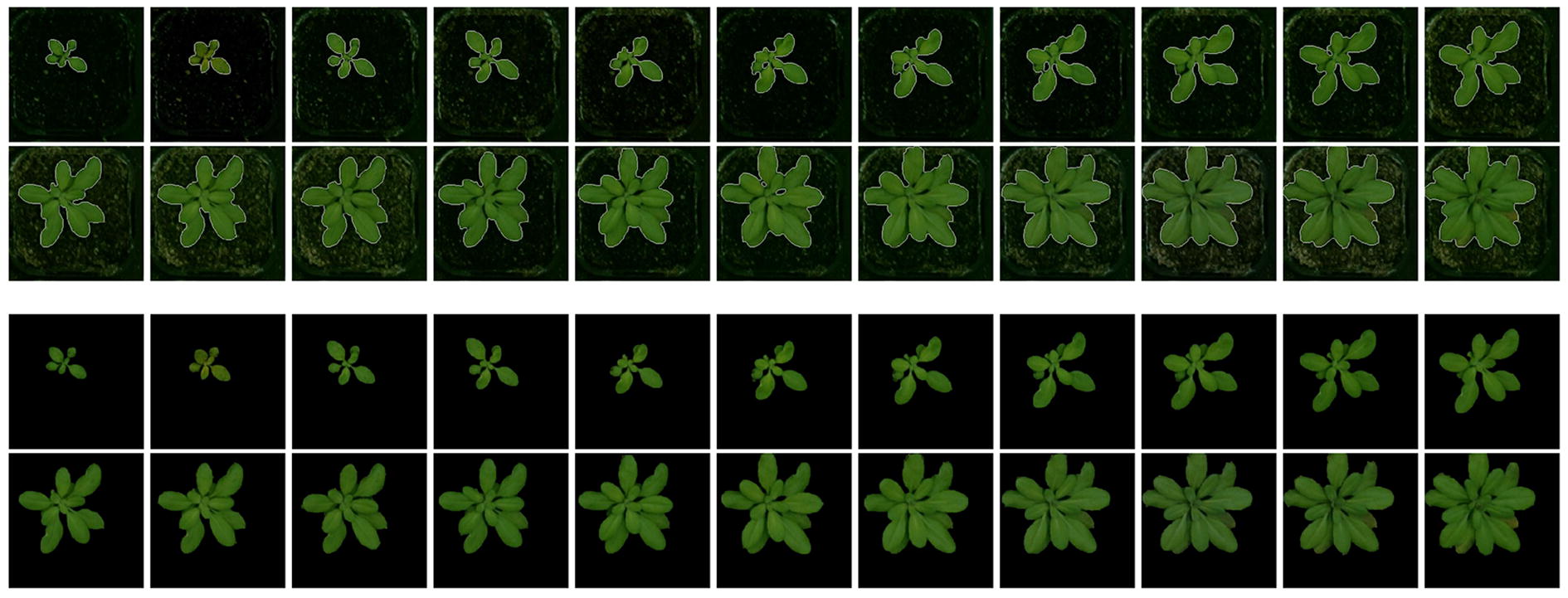

To segment the plants, we use the GrabCut algorithm [86], a method for separating the foreground from the background based on graph cuts [87]. In addition to the input image, this algorithm requires a bounding box that encompasses the foreground object. Furthermore, a mask image with four intensity levels, representing definite background (0), definite foreground (1), probable background (2) and probable foreground (3), can be provided as an auxiliary input to improve the segmentation.

Since the plants can be anywhere in the pots, especially once they grow large, we choose the bounding box to be as large as the input image to ensure that no part of a plant is missed. To generate the four-level mask, we use the following approach. First, the image is transformed from RGB into the L*a*b* color space, as plants and background are more easily distinguished in the a and b channels. Then, each of the a and b channels is binarized using Otsu's method [88]; the outcome is two binary masks that highlight candidate foreground and background points for each channel. To ensure that no part of a plant is mistakenly labeled as definite background, especially leaf borders that may fade into the soil in the images, we then apply morphological dilation to expand each binary mask and add the dilated mask to the original binary mask. This yields two masks, each with three intensity levels: 0: definite background, 1: probable background/foreground and 2: foreground.
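The per-channel mask construction can be sketched in NumPy as below. It assumes the a or b channel has already been computed (for example with scikit-image's `rgb2lab`, not shown), that the caller knows on which side of the Otsu threshold the plant lies (`plant_is_low`), and that dilation uses a 3×3 square structuring element; the function names and the number of dilation iterations are hypothetical.

```python
import numpy as np


def otsu_threshold(channel, bins=256):
    """Threshold that maximizes the between-class variance (Otsu's method)."""
    hist, edges = np.histogram(channel.ravel(), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist).astype(float)        # pixel count in bins 0..k
    w1 = w0[-1] - w0                          # pixel count above bin k
    s0 = np.cumsum(hist * centers)
    mean0 = s0 / np.maximum(w0, 1.0)
    mean1 = (s0[-1] - s0) / np.maximum(w1, 1.0)
    between = w0 * w1 * (mean0 - mean1) ** 2
    return edges[1:][np.argmax(between)]      # values below this fall in the lower class


def dilate(mask, iterations=1):
    """Binary dilation with a 3x3 square structuring element."""
    out = np.asarray(mask, dtype=bool)
    h, w = out.shape
    for _ in range(iterations):
        padded = np.pad(out, 1, constant_values=False)
        grown = np.zeros_like(out)
        for dr in (0, 1, 2):
            for dc in (0, 1, 2):
                grown |= padded[dr:dr + h, dc:dc + w]
        out = grown
    return out


def three_level_mask(channel, plant_is_low=True, iterations=1):
    """0: definite background, 1: probable background/foreground, 2: foreground."""
    t = otsu_threshold(channel)
    fg = channel < t if plant_is_low else channel >= t
    # Plant pixels are in both masks (2); the dilated border ring only in one (1).
    return fg.astype(np.uint8) + dilate(fg, iterations).astype(np.uint8)


# Synthetic a channel: soil at +20, an 8x8 green "plant" at -30 (green is negative in a).
a_chan = np.full((20, 20), 20.0)
a_chan[6:14, 6:14] = -30.0
mask_a = three_level_mask(a_chan, plant_is_low=True, iterations=1)
print(np.bincount(mask_a.ravel()))
```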

The two masks are then combined into the final mask using the mapping in Table 1. This mask is then used in the GrabCut algorithm to segment the plants. Finally, morphological opening and closing operations are applied to remove unwanted holes and blobs. The segmentation results for a sample sequence are shown in Fig. 8.

Table 1.

Combining the two three-level masks computed from the a and b color channels to produce the final mask for the GrabCut segmentation algorithm

| Mask (a) / Mask (b) | 0 | 1 | 2 |
|---|---|---|---|
| 0 | Definite background | Probable background | Probable foreground |
| 1 | Probable background | Probable background | Probable foreground |
| 2 | Probable foreground | Probable foreground | Definite foreground |
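Table 1 can be applied as a lookup table indexed by the two three-level masks. Because the table is symmetric, it does not matter which mask indexes the rows. The numeric labels below are the four levels listed in the GrabCut description above; the names `COMBINE` and `combine_masks` are hypothetical.

```python
import numpy as np

# GrabCut label values as listed in the text: definite background (0),
# definite foreground (1), probable background (2), probable foreground (3).
BGD, FGD, PR_BGD, PR_FGD = 0, 1, 2, 3

# Table 1 as a lookup table: row = level in one mask, column = level in the other.
COMBINE = np.array([
    [BGD,    PR_BGD, PR_FGD],
    [PR_BGD, PR_BGD, PR_FGD],
    [PR_FGD, PR_FGD, FGD],
], dtype=np.uint8)


def combine_masks(mask_a, mask_b):
    """Map two three-level masks (values 0, 1, 2) to a four-level GrabCut mask."""
    return COMBINE[mask_a, mask_b]


mask_a = np.array([[0, 1, 2], [2, 2, 0]])
mask_b = np.array([[0, 2, 2], [1, 0, 2]])
print(combine_masks(mask_a, mask_b))
```

These values coincide with OpenCV's `GC_BGD`, `GC_FGD`, `GC_PR_BGD` and `GC_PR_FGD` constants, so such an array could, for example, be passed as the `mask` argument of `cv2.grabCut` with the `cv2.GC_INIT_WITH_MASK` mode; the paper does not say which GrabCut implementation was used.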

Fig. 8.

Plant segmentation. Results of the segmentation step; top: plant contours, bottom: plant segments

Hand-crafted features

The features extracted from the segmented plant images are as follows: the mean, maximum and minimum of the RGB image; the mean of the HSV image; the area and perimeter of the plant; the roundness of the plant, which is the ratio between its area and perimeter; compactness, the ratio between the area and the convex-hull area; eccentricity, the ratio between the major and minor axes of the convex hull; the major-axis length of the ellipse with the same second moments as the region; and extent, the ratio between the area and the area of the bounding box.
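Several of these shape features can be computed directly from a binary plant mask. The NumPy sketch below assumes a boundary-pixel count as the perimeter estimate, a convex hull taken over pixel corners, and the moment-based major-axis definition used by common region-property tools; it follows the text's definition of roundness as area divided by perimeter. The function names are hypothetical, and the color statistics and eccentricity are omitted for brevity.

```python
import numpy as np


def _cross(o, a, b):
    """z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain convex hull of a list of (x, y) tuples."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(poly):
    """Shoelace formula for a polygon given as ordered vertices."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(poly, poly[1:] + poly[:1]):
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0


def shape_features(mask):
    mask = np.asarray(mask, dtype=bool)
    rows, cols = np.nonzero(mask)
    area = float(mask.sum())
    # Boundary pixels: plant pixels with at least one 4-connected background neighbour.
    padded = np.pad(mask, 1, constant_values=False)
    interior = (padded[:-2, 1:-1] & padded[2:, 1:-1]
                & padded[1:-1, :-2] & padded[1:-1, 2:])
    boundary = mask & ~interior
    perimeter = float(boundary.sum())
    # Hull over the corners of boundary pixels, so a filled rectangle has hull area == area.
    br, bc = np.nonzero(boundary)
    corners = [(int(c) + dc, int(r) + dr)
               for r, c in zip(br, bc) for dr in (0, 1) for dc in (0, 1)]
    hull_area = polygon_area(convex_hull(corners))
    bbox_area = float((np.ptp(rows) + 1) * (np.ptp(cols) + 1))
    # Major axis of the ellipse with the same second central moments as the region.
    cov = np.cov(np.vstack([rows, cols]), bias=True)
    major_axis = 4.0 * np.sqrt(np.linalg.eigvalsh(cov).max())
    return {
        "area": area,
        "perimeter": perimeter,
        "roundness": area / perimeter,      # area / perimeter, as defined in the text
        "compactness": area / hull_area,
        "extent": area / bbox_area,
        "major_axis_length": major_axis,
    }


plant = np.zeros((30, 40), dtype=np.uint8)
plant[5:15, 10:30] = 1                      # a 10 x 20 rectangular "plant"
print(shape_features(plant))
```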

Furthermore, we compute a set of Fourier descriptors [89] to describe the shapes of the leaves in terms of their contours. We make the Fourier features invariant to translation by setting the zero-frequency (centre) element of the Fourier transform of the image contours to zero. In total, a vector of 1024 elements (the 512 real and 512 imaginary components of the Fourier transform) is extracted to represent the contour shape of each plant.
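A minimal sketch of such a descriptor: the closed contour is resampled to 512 points by arc length (an assumption, since the paper does not say how the 512 samples are obtained), treated as the complex signal x + iy, and transformed with an FFT. Without `fftshift` the zero-frequency term sits at index 0 rather than at the centre, so that is the element set to zero. `fourier_descriptor` is a hypothetical name.

```python
import numpy as np


def fourier_descriptor(contour, n_points=512):
    """contour: (N, 2) array of ordered (x, y) points on a closed boundary.

    Returns 2 * n_points values: real parts followed by imaginary parts.
    """
    pts = np.asarray(contour, dtype=float)
    closed = np.vstack([pts, pts[:1]])                 # close the curve
    seg = np.linalg.norm(np.diff(closed, axis=0), axis=1)
    s = np.concatenate([[0.0], np.cumsum(seg)])        # arc length at each vertex
    t = np.linspace(0.0, s[-1], n_points, endpoint=False)
    x = np.interp(t, s, closed[:, 0])                  # resample uniformly by arc length
    y = np.interp(t, s, closed[:, 1])
    coeffs = np.fft.fft(x + 1j * y)                    # complex contour signal
    coeffs[0] = 0.0                                    # drop DC term: translation invariance
    return np.concatenate([coeffs.real, coeffs.imag])


square = np.array([[0, 0], [10, 0], [10, 10], [0, 10]])
d1 = fourier_descriptor(square)
d2 = fourier_descriptor(square + np.array([5.0, -3.0]))  # same shape, shifted
print(d1.shape, np.allclose(d1, d2))
```

Translation adds the same constant to every complex sample, which changes only the zero-frequency coefficient, so zeroing that coefficient makes the two descriptors match.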

In addition, we employ a set of texture features based on the Gray-Level Co-occurrence Matrix (GLCM) [90, 91]. These features are extracted from the segmented plant images, so that the texture information of the different accessions is taken into account in the classification process. Features obtained this way are independent of the gray-level scaling of the images and are therefore invariant to varying illumination and lighting conditions [91, 92]. Each element of a GLCM indicates how often a particular pair of gray-level intensities occurs in adjacent pixels. In this experiment, we considered adjacency in four directions, 0°, 45°, 90° and 135°, computed a GLCM for each direction, and extracted three texture properties, Energy, Contrast and Homogeneity, from each of them. In total, this method provides 12 texture descriptors for each segmented plant.
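A compact NumPy sketch of the 12 GLCM descriptors follows. It assumes 8 gray levels, distance-1 offsets for 0°, 45°, 90° and 135° (negative row offsets point upward, as in MATLAB's `graycomatrix` convention), non-symmetric normalized matrices, and MATLAB-style `graycoprops` definitions of energy (sum of squared entries), contrast and homogeneity; the paper does not specify these settings. The optional mask restricts counting to pixel pairs inside the segmented plant. Function names are hypothetical.

```python
import numpy as np

# (row, col) offsets for 0, 45, 90 and 135 degrees at distance 1.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]


def glcm(q, dr, dc, levels, mask=None):
    """Normalized co-occurrence matrix of quantized image q for one offset."""
    h, w = q.shape
    r0, r1 = max(0, -dr), h - max(0, dr)
    c0, c1 = max(0, -dc), w - max(0, dc)
    ref = q[r0:r1, c0:c1]                          # reference pixels
    nbr = q[r0 + dr:r1 + dr, c0 + dc:c1 + dc]      # their neighbours at (dr, dc)
    keep = np.ones(ref.shape, dtype=bool)
    if mask is not None:                           # only pairs fully inside the plant
        m = np.asarray(mask, dtype=bool)
        keep = m[r0:r1, c0:c1] & m[r0 + dr:r1 + dr, c0 + dc:c1 + dc]
    P = np.zeros((levels, levels))
    np.add.at(P, (ref[keep], nbr[keep]), 1)
    return P / max(P.sum(), 1.0)


def texture_features(gray, mask=None, levels=8):
    """12 descriptors: energy, contrast, homogeneity for each of the 4 directions."""
    q = (gray.astype(np.int64) * levels) // 256    # quantize 0..255 into `levels` bins
    i, j = np.indices((levels, levels))
    feats = []
    for dr, dc in OFFSETS:
        P = glcm(q, dr, dc, levels, mask)
        feats += [
            (P ** 2).sum(),                        # energy
            (P * (i - j) ** 2).sum(),              # contrast
            (P / (1.0 + np.abs(i - j))).sum(),     # homogeneity
        ]
    return np.array(feats)


stripes = np.tile(np.array([0, 255], dtype=np.uint8), (16, 8))  # vertical stripes
print(texture_features(stripes).reshape(4, 3).round(3))
```

For vertical stripes, neighbours along 0° and 45° always differ (high contrast), whereas along 90° they are always equal (zero contrast).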

The results of using hand-crafted features are reported in Table 2 and can be compared with the results of the proposed system (68.0% compared to 93.0%). Note that the quality of the extracted hand-engineered features depends on how well the segmentation step is performed. If the plants are not segmented properly, we may not obtain a reliable set of hand-crafted features, which in turn further degrades the system performance.

The experimental results indicate the superiority of deep features over the above hand-engineered descriptors for accession classification. Although we attempted to include a large array of hand-crafted features in this experiment, the classification system built on these descriptors was outperformed by our CNN-based classifier. Even a pure CNN-based classifier with no sequence learning module (no LSTM) achieved a classification accuracy of 76.8%. This configuration outperforms the hand-crafted-feature system even when the latter uses an LSTM (68.0%), which clearly indicates the benefit of using deep features over hand-engineered descriptors.

In addition, we perform another experiment with hand-crafted features in which the temporal information of the plants is discarded and the LSTM is dropped from the structure. A Support Vector Machine (SVM) classifier is then applied to the hand-crafted features to predict the accession of each plant. As shown in Table 2, this further degrades the classification performance of the system (from 68.0% to 60.8%).

CNN-CRF

The Conditional Random Field (CRF) is a popular probabilistic graphical model for encoding the structural and temporal information of sequential data [93], and it has been widely used in the computer vision community [15, 66–68, 94, 95]. In its simplest form, this model encourages adjacent elements in the spatial or temporal structure to take similar or compatible class labels, and hence yields a more consistent labeling of the whole structure (sequence).
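To illustrate how a chain-structured model encourages adjacent elements to take compatible labels, the sketch below performs MAP inference (Viterbi) in a linear chain whose unary scores are per-day log-probabilities (for instance from a CNN) and whose pairwise term is a Potts bonus for equal labels on consecutive days. The Potts form, its weight, and labeling the whole sequence by majority vote are assumptions made for illustration; the paper does not detail its CRF potentials here, and the function names are hypothetical.

```python
import numpy as np


def viterbi_potts(unary, weight):
    """MAP labeling of a chain. unary: (T, K) scores, higher is better."""
    T, K = unary.shape
    pairwise = weight * np.eye(K)            # bonus when consecutive labels agree
    score = unary[0].copy()
    back = np.zeros((T, K), dtype=int)
    for t in range(1, T):
        cand = score[:, None] + pairwise     # cand[j, k]: label j on day t-1, k on day t
        back[t] = cand.argmax(axis=0)
        score = cand.max(axis=0) + unary[t]
    labels = [int(score.argmax())]
    for t in range(T - 1, 0, -1):            # follow back-pointers to day 0
        labels.append(int(back[t, labels[-1]]))
    return labels[::-1]


def sequence_label(labels, n_classes):
    """Assign the whole sequence the most frequent per-day label."""
    return int(np.bincount(labels, minlength=n_classes).argmax())


probs = np.array([[0.1, 0.1, 0.8],
                  [0.1, 0.1, 0.8],
                  [0.5, 0.1, 0.4],           # one ambiguous day
                  [0.1, 0.1, 0.8],
                  [0.1, 0.1, 0.8]])
unary = np.log(probs)
print(viterbi_potts(unary, weight=0.0))      # independent per-day decisions
print(viterbi_potts(unary, weight=1.0))      # smoothed by the pairwise term
```

With `weight=0.0` each day is labeled independently and the ambiguous day flips to class 0; with the pairwise bonus, switching labels costs more than the small unary gain, so the whole sequence stays in class 2.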

In this work, we studied the potential of the CRF for sequence analysis and compared it with the LSTM in our sequence learning and accession classification experiment. To this end, we fed the CRF with the previously computed deep features and evaluated its performance in the sequence classification task. Table 2 demonstrates the potential of CRFs for encoding the temporal dependencies in sequential data (87.6% average accuracy, compared with 76.8% for the CNN alone), although they are still outperformed by our CNN-LSTM framework (93.0%).

Conclusion

In this paper, we proposed a framework for automatic plant phenotyping that uses deep visual features of the plants together with temporal cues from their growth patterns to classify them according to their genotypes. That accessions can be classified from their images implies that they differ in appearance, and shows that deep learning based methods are able to capture these differences. Moreover, to the best of our knowledge, this is the first work that studies the temporal characteristics and behaviors of plants using LSTMs and shows their potential for the accession classification task. Our experiments provide evidence of the benefits of using deep features over hand-crafted features, and indicate the significance of temporal information in a plant classification task.

Despite the large amount of input data that deep learning typically demands and our limited sequential data from different accessions, we presented a sophisticated deep network and an efficient method to train it. In the future, we plan to augment our dataset with more varied visual and sequential data to enhance the robustness of our system when dealing with more challenging classifications.

The model obtained in this study can be used to analyze unseen accessions, e.g. to find their behavioral similarities to the accessions used in training, which could reveal relationships between phenotypes and genotypes (our ongoing work). In fact, probabilistic classification against reference accessions is a holistic approach to plant phenotyping, in which unknown accessions can be characterized by their similarity to multiple references. This goes beyond traditional hand-crafted measures of leaf size, shape and color. One example is the classification of progeny accessions based on their similarity to their parental reference accessions. We plan to apply our trained classifier to a large set of accessions. The probability of each genotype state (Sf-2, Cvi, Ler-1, Col-0) is a multivariate growth-pattern phenotype of each accession, which can be decomposed into its causal genetic factors using Genome Wide Association Studies.

Furthermore, owing to the generality of the proposed model, it can be used without major modification for other tasks, such as disease detection or the analysis of different environmental conditions (e.g. soil, temperature, humidity and light) for plants. Studying the temporal behavior of plants with our CNN-LSTM model, using image sequences recorded over their first few days of growth, could also make it possible to predict crop yield and plant health (our future work).

Authors' contributions

STN, ME and MN carried out the model design and implementation and drafted the main manuscript. TB contributed to designing the experiment, operating the high-throughput system and collecting the data. JB was the project leader, with technical knowledge in plant biology and phenotype/genotype interactions, and contributed to designing the experiment and preparing the manuscript. All authors read and approved the final manuscript.

Acknowledgements

We thank our funding sources, including the Australian Research Council (ARC) Centre of Excellence in Plant Energy Biology CE140100008, ARC Linkage Grant LP140100572 and the National Collaborative Research Infrastructure Scheme - Australian Plant Phenomics Facility. All research carried out in this manuscript follows the local, national or international guidelines and legislation and the required or appropriate permissions and/or licences for the study.

Competing interests

The authors declare that they have no competing interests.

Availability of data and materials

Data is publicly available at https://figshare.com/s/e18a978267675059578f or http://phenocam.anu.edu.au/cloud/a_data/_webroot/published-data/2017/2017-Namin-et-al-DeepPheno.zip

Plant licences

The local, national, or international guidelines and legislation relating to plants and the required or appropriate permissions and/or licences for the study have been obtained.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Footnotes

We also investigated more complex networks, such as VGG-16, but their parameters could not be trained properly due to our limited data, and we achieved better results with AlexNet.

Contributor Information

Sarah Taghavi Namin, Email: sarah.taghavi-namin@anu.edu.au.

Mohammad Esmaeilzadeh, Email: mohammad.esmaeilzadeh@anu.edu.au.

Mohammad Najafi, Email: mohammad.najafi@anu.edu.au.

Tim B. Brown, Email: tim.brown@anu.edu.au

Justin O. Borevitz, Email: justin.borevitz@anu.edu.au

References

- 1.Rivers J, Warthmann N, Pogson B, Borevitz J. Genomic breeding for food, environment and livelihoods. Food Secur. 2015;7:375–382. doi: 10.1007/s12571-015-0431-3.

- 2.Brown T, Cheng R, Sirault X, Rungrat T, Murray K, Trtilek M, Furbank R, Badger M, Pogson B, Borevitz J. Traitcapture: genomic and environment modelling of plant phenomic data. Curr Opin Plant Biol. 2014;18:73–79. doi: 10.1016/j.pbi.2014.02.002.

- 3.Nordborg M, Weigel D. 1,135 genomes reveal the global pattern of polymorphism in Arabidopsis thaliana. Cell. 2016;166:481–491. doi: 10.1016/j.cell.2016.05.063.

- 4.Vanhaeren H, Gonzalez N, Inzé D. A journey through a leaf: phenomics analysis of leaf growth in Arabidopsis thaliana. Rockville: The Arabidopsis Book; 2015.

- 5.Monsalve D, Trujillo M, Chaves D. Automatic classification of nutritional deficiencies in coffee plants. In: LACNEM. 2015

- 6.Camargo A, Papadopoulou D, Spyropoulou Z, Vlachonasios K, Doonan JH, Gay AP. Objective definition of rosette shape variation using a combined computer vision and data mining approach. PLoS One. 2014;9(5):e96889. doi: 10.1371/journal.pone.0096889.

- 7.Kadir A, Nugroho LE, Susanto A, Santosa PI. A comparative experiment of several shape methods in recognizing plants. In: IJCSIT. 2011

- 8.PlantScreen Phenotyping Systems, Photon Systems Instruments (PSI). www.psi.cz. Accessed 2 Aug 2018.

- 9.Antipov G, Berrani S-A, Ruchaud N, Dugelay J-L. Learned vs. hand-crafted features for pedestrian gender recognition. In: ACM multimedia. 2015

- 10.Krizhevsky A, Sutskever I, Hinton G. Imagenet classification with deep convolutional neural networks. In: NIPS. 2012

- 11.LeCun Y, Boser B, Denker J, Henderson D, Howard R, Hubbard W, Jackel L. Handwritten digit recognition with a back-propagation network. In: Advances in neural information processing systems. 1990

- 12.Donahue J, Jia Y, Vinyals O, Hoffman J, Zhang N, Tzeng E, Darrel T. Decaf: a deep convolutional activation feature for generic visual recognition. In: ICML. 2014

- 13.Razavian A, Azizpour H, Sullivan J, Carlsson S. Cnn features off-the-shelf: an astounding baseline for recognition. In: CVPR. 2014

- 14.Xia F, Zhu J, Wang P, Yuille A. Pose-guided human parsing by an and/or graph using pose-context features. In: AAAI. 2016

- 15.Donahue J, Hendricks LA, Guadarrama S, Rohrbach M. Long-term recurrent convolutional networks for visual recognition and description. In: CVPR. 2015

- 16.Akbarian MSA, Saleh F, Fernando B, Salzmann M, Petersson L, Andersson L. Deep action- and context-aware sequence learning for activity recognition and anticipation. In: CoRR. 2016

- 17.Mahasseni B, Todorovic S. Regularizing long short term memory with 3d human-skeleton sequences for action recognition. In: CVPR. 2016

- 18.Singh B, Marks TK, Jones M, Tuzel O, Shao M. A multi-stream bi-directional recurrent neural network for fine-grained action detection. In: CVPR. 2016

- 19.Srivastava N, Mansimov E, Salakhutdinov R. Unsupervised learning of video representations using lstms. In: CoRR. 2015

- 20.Rahaman MM, Chen D, Gillani Z, Klukas C, Chen M. Advanced phenotyping and phenotype data analysis for the study of plant growth and development. Front Plant Sci. 2015;6:619. doi: 10.3389/fpls.2015.00619.

- 21.Dee H, French A. From image processing to computer vision: plant imaging grows up. Funct Plant Biol. 2015;42:1–2. doi: 10.1071/FPv42n5_FO.

- 22.Minervini M, Scharr H, Tsaftaris S. Image analysis: the new bottleneck in plant phenotyping. IEEE Signal Process Mag. 2015;32:126–131. doi: 10.1109/MSP.2015.2405111.

- 23.Granier C, Vile D. Phenotyping and beyond: modelling the relationships between traits. Curr Opin Plant Biol. 2014;18:96–102. doi: 10.1016/j.pbi.2014.02.009.

- 24.Bell J, Dee HM. Watching plants grow–a position paper on computer vision and Arabidopsis thaliana. IET Comput Vis. 2016;11:113–121. doi: 10.1049/iet-cvi.2016.0127.

- 25.Dhondt S, Wuyts N, Inze D. Cell to whole-plant phenotyping: the best is yet to come. Trends Plant Sci. 2013;18:428–439. doi: 10.1016/j.tplants.2013.04.008.

- 26.Singh A, Ganapathysubramanian B, Singh AK, Sarkar S. Machine learning for high-throughput stress phenotyping in plants. Trends Plant Sci. 2016;21:110–124. doi: 10.1016/j.tplants.2015.10.015.

- 27.Tsaftaris SA, Minervini M, Scharr H. Machine learning for plant phenotyping needs image processing. Trends Plant Sci. 2016;21:989–991. doi: 10.1016/j.tplants.2016.10.002.

- 28.Furbank RT, Tester M. Phenomics–technologies to relieve the phenotyping bottleneck. Trends Plant Sci. 2011;16:635–44. doi: 10.1016/j.tplants.2011.09.005.

- 29.Yang W, Duan L, Chen G, Xiong L, Liu Q. Plant phenomics and high-throughput phenotyping: accelerating rice functional genomics using multidisciplinary technologies. Curr Opin Plant Biol. 2013;16:180–187. doi: 10.1016/j.pbi.2013.03.005.

- 30.Minervini M, Giuffrida MV, Perata P, Tsaftaris SA. Phenotiki: an open software and hardware platform for affordable and easy image-based phenotyping of rosette-shaped plants. Plant J. 2017;90:204–216. doi: 10.1111/tpj.13472.

- 31.Fahlgren N, Feldman M, Gehan MA, Wilson MS, Shyu C, Bryant DW, Hill ST, McEntee CJ, Warnasooriya SN, Kumar I, Ficor T, Turnipseed S, Gilbert KB, Brutnell TP, Carrington JC, Mockler TC, Baxter I. A versatile phenotyping system and analytics platform reveals diverse temporal responses to water availability in setaria. Mol Plant. 2015;8:1520–1535. doi: 10.1016/j.molp.2015.06.005.

- 32.Hartmann A, Czauderna T, Hoffmann R, Stein N, Schreiber F. Htpheno: an image analysis pipeline for high-throughput plant phenotyping. BMC Bioinform. 2011;12:148. doi: 10.1186/1471-2105-12-148.

- 33.Knecht AC, Campbell MT, Caprez A, Swanson DR, Walia H. Image harvest: an open-source platform for high-throughput plant image processing and analysis. J Exp Bot. 2016;67:3587–3599. doi: 10.1093/jxb/erw176.

- 34.Backhaus A, Kuwabara A, Bauch M, Monk N, Sanguinetti G, Fleming A. Leafprocessor: a new leaf phenotyping tool using contour bending energy and shape cluster analysis. New Phytol. 2010;187:251–261. doi: 10.1111/j.1469-8137.2010.03266.x.

- 35.Yin X, Liu X, Chen J, Kramer D. Multi-leaf tracking from fluorescence plant videos. In: ICIP. 2014

- 36.Wu SG, Bao FS, Xu EY, Wang Y-X, Chang Y-F, Xiang Q-L. A leaf recognition algorithm for plant classification using probabilistic neural network. In: Signal processing and information technology. 2007

- 37.Aakif A, Khan MF. Automatic classification of plants based on their leaves. Biosyst Eng. 2015;139:66–75. doi: 10.1016/j.biosystemseng.2015.08.003.

- 38.Wang Z, Li H, Zhu Y, Xu T. Review of plant identification based on image processing. Comput Methods Eng. 2016;24:637–654. doi: 10.1007/s11831-016-9181-4.

- 39.Amean ZM, Low T, McCarthy C, Hancock N. Automatic plant branch segmentation and classification using vesselness measure. In: ACRA. 2013

- 40.Pahikkala T, Kari K, Mattila H, Lepistö A, Teuhola J, Nevalainen O, Tyystjärvi E. Classification of plant species from images of overlapping leaves. Comput Electron Agric. 2015;118:186–192. doi: 10.1016/j.compag.2015.09.003.

- 41.Dey D, Mummert L, Sukthankar R. Classification of plant structures from uncalibrated image sequences. In: WACV. 2012

- 42.Mouine S, Yahiaoui I, Verroust-Blondet A. A shape-based approach for leaf classification using multiscale triangular representation. In: ICMR (2013)

- 43.Goëau H, Bonnet P, Joly A, Boujemaa N, Barthelemy D, Molino J-F, Birnbaum P, Mouysset E, Picard M. The clef 2011 plant images classification task. In: CLEF. 2011

- 44.Fiel S, Sablatnig R. Leaf classification using local features. In: Workshop of the Austrian association for pattern recognition. 2010

- 45.Rashad MZ, Desouky BS, Khawasik M. Plants images classification based on textural features using combined classifier. In: IJCSIT. 2011

- 46.Schikora M, Schikora A, Kogel K, Koch W, Cremers D. Probabilistic classification of disease symptoms caused by salmonella on arabidopsis plants. GI Jahrestag (2). 2010;10:874–879.

- 47.Schikora M, Neupane B, Madhogaria S, Koch W, Cremers D, Hirt H, Kogel K, Schikora A. An image classification approach to analyze the suppression of plant immunity by the human pathogen salmonella typhimurium. BMC Bioinform. 2012;13:171. doi: 10.1186/1471-2105-13-171.

- 48.Chen D, Neumann K, Friedel S, Kilian B, Chen M, Altmann T, Klukas C. Dissecting the phenotypic components of crop plant growth and drought responses based on high-throughput image analysis. Plant Cell. 2014;26(12):4636–4655. doi: 10.1105/tpc.114.129601.

- 49.Lottes P, Höferlin M, Sander S, Stachniss C. Effective vision-based classification for separating sugar beets and weeds for precision farming. J Field Robotics. 2016;34:1160–1178. doi: 10.1002/rob.21675.

- 50.Haug S, Michaels A, Biber P, Ostermann J. Plant classification system for crop/weed discrimination without segmentation. In: WACV. 2014

- 51.Plantix. https://plantix.net. Accessed 2 Aug 2018.

- 52.Lee SH, Chan CS, Wilkin P, Remagnino P. Deep-plant: plant identification with convolutional neural networks. In: ICIP. 2015

- 53.Lee SH, Chang YL, Chan CS, Remagnino P. Plant identification system based on a convolutional neural network for the lifeclef 2016 plant classification task. In: LifeClef. 2016

- 54.Pound MP, Burgess AJ, Wilson MH, Atkinson JA, Griffiths M, Jackson AS, Bulat A, Tzimiropoulos Y, Wells DM, Murchie EH, Pridmore TP, French AP. Deep machine learning provides state-of-the-art performance in image-based plant phenotyping. In: Biorxiv. 2016

- 55.Reyes A, Caicedo J, Camargo J. Fine-tuning deep convolutional networks for plant recognition. In: Working notes of CLEF 2015 conference. 2015

- 56.Lee SH, Chan CS, Mayo SJ, Remagnino P. How deep learning extracts and learns leaf features for plant classification. Pattern Recognit. 2017;71:1–13.

- 57.Ubbens JR, Stavness I. Deep plant phenomics: a deep learning platform for complex plant phenotyping tasks. Front Plant Sci. 2017;8:1190. doi: 10.3389/fpls.2017.01190.

- 58.DeChant C, Wiesner-Hanks T, Chen S, Stewart E, Yosinski J, Gore M, Nelson R, Lipson H. Automated identification of northern leaf blight-infected maize plants from field imagery using deep learning. Phytopathology. 2017;107:1426–1432. doi: 10.1094/PHYTO-11-16-0417-R.

- 59.Mohanty SP, Hughes D, Salathe M. Using deep learning for image-based plant disease detection. Front Plant Sci. 2016;7:1419. doi: 10.3389/fpls.2016.01419.

- 60.Vezzani R, Baltieri D, Cucchiara R. Hmm based action recognition with projection histogram features. In: ICPR. 2010

- 61.Lv F, Nevatia R. Recognition and segmentation of 3-d human action using hmm and multi-class adaboost. In: ECCV. 2006

- 62.Wu D, Shao L. Leveraging hierarchical parametric networks for skeletal joints based action segmentation and recognition. In: ICCV. 2014

- 63.Bilen H, Fernando B, Gavves E, Vedaldi A, Gould S. Dynamic image networks for action recognition. In: CVPR. 2016

- 64.Fernando B, Anderson P, Hutter M, Gould S. Discriminative hierarchical rank pooling for activity recognition. In: CVPR. 2016

- 65.Fernando B, Gavves E, Oramas J, Ghodrati A, Tuytelaars T. Rank pooling for action recognition. In: TPAMI. 2016

- 66.Vail DL, Veloso MM, Lafferty JD. Conditional random fields for activity recognition. In: AAMAS. 2007

- 67.Wang Y, Mori G. Max-margin hidden conditional random fields for human action recognition. In: CVPR. 2009

- 68.Song Y, Morency LP, Davis R. Action recognition by hierarchical sequence summarization. In: CVPR. 2013

- 69.Du Y, Wang W, Wang L. Hierarchical recurrent neural network for skeleton based action recognition. In: CVPR. 2015

- 70.Baccouche M, Mamalet F, Wolf C, Garcia C, Baskurt A. Sequential deep learning for human action recognition. In: Human behavior understanding. 2011

- 71.Grushin A, Monner DD, Reggia JA, Mishra A. Robust human action recognition via long short-term memory. In: IJCNN. 2013

- 72.Lefebvre G, Berlemont S, Mamalet F, Garcia C. Blstm-rnn based 3d gesture classification. In: ICANN. 2013

- 73.Karpathy A, Fei-Fei L. Deep visual-semantic alignments for generating image descriptions. IEEE Trans Pattern Anal Mach Intell. 2017;39(4):664–76. doi: 10.1109/TPAMI.2016.2598339.

- 74.Wang J, Yang Y, Mao J, Huang Z, Huang C, Xu W. Cnn-rnn: a unified framework for multi-label image classification. In: CVPR. 2016

- 75.Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. In: NIPS. 2012

- 76.Zeiler MD, Fergus R. Visualizing and understanding convolutional networks. In: Fleet D, Pajdla T, Schiele B, Tuytelaars T, editors. ECCV. 2014

- 77.Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. In: ICLR. 2015

- 78.He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: CVPR. 2016

- 79.LSVRC 2012. http://www.image-net.org/challenges/LSVRC/. Accessed 2 Aug 2018.

- 80.Goodfellow I, Bengio Y, Courville A. Deep learning: sequence modelling. Cambridge: MIT Press; 2016.

- 81.Bengio Y, Simard P, Frasconi P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans Neural Netw. 1994;5(2):157–166. doi: 10.1109/72.279181.

- 82.Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. 1997;9(8):1735–80. doi: 10.1162/neco.1997.9.8.1735.

- 83.Minervini M, Fischbach A, Scharr H, Tsaftaris SA. Finely-grained annotated datasets for image-based plant phenotyping. Pattern Recognit Lett. 2015;81:80–89. doi: 10.1016/j.patrec.2015.10.013.

- 84.Theano Development Team. Theano: a Python framework for fast computation of mathematical expressions. arXiv e-prints. 2016. arXiv:1605.02688

- 85.Chollet F. Keras. San Francisco: GitHub; 2016.

- 86.Rother C, Kolmogorov V, Blake A. “Grabcut”: interactive foreground extraction using iterated graph cuts. ACM Trans Gr. 2004;23(3):309–14. doi: 10.1145/1015706.1015720.

- 87.Boykov Y, Veksler O, Zabih R. Fast approximate energy minimization via graph cuts. PAMI. 2001;23(11):1222–39. doi: 10.1109/34.969114.

- 88.Otsu N. A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern. 1979;9(1):62–6. doi: 10.1109/TSMC.1979.4310076.

- 89.Granlund GH. Fourier preprocessing for hand print character recognition. IEEE Trans Comput. 1972;21(2):195–201. doi: 10.1109/TC.1972.5008926.

- 90.Haralick RM. Statistical and structural approaches to texture. Proc IEEE. 1979;67(5):786–804. doi: 10.1109/PROC.1979.11328.

- 91.Taghavi Namin S, Petersson L. Classification of materials in natural scenes using multi-spectral images. In: IEEE/RSJ international conference on intelligent robots and systems (IROS). 2012, pp. 1393–1398

- 92.Douillard B, Fox D, Ramos F, Durrant-Whyte H. Classification and semantic mapping of urban environments. IJRR. 2011;30(1):5–32.

- 93.Lafferty JD, McCallum A, Pereira FCN. Conditional random fields: probabilistic models for segmenting and labeling sequence data. In: ICML. 2001

- 94.Ladicky L, Russell C, Kohli P, Torr PHS. Inference methods for crfs with co-occurrence statistics. IJCV. 2013;103(2):213–25. doi: 10.1007/s11263-012-0583-y.

- 95.Najafi M, Taghavi Namin S, Salzmann M, Petersson L. Sample and filter: nonparametric scene parsing via efficient filtering. In: CVPR. 2016
