Abstract

Introduction

Computerized assessments are becoming widely accepted in the clinical setting and as potential outcome measures in clinical trials. To understand patients' perspectives on this experience, the present study investigated patient attitudes toward and perceptions of the Cognigram [Cogstate], a computerized cognitive assessment.

Methods

Semi-structured interviews were conducted with 19 older adults undergoing a computerized cognitive assessment at the University of British Columbia Hospital Clinic for Alzheimer Disease and Related Disorders. Thematic analysis was applied to identify key themes and relationships within the data.

Results

The analysis yielded three categories: attitudes toward computers in healthcare, attitudes toward the cognitive assessment process, and evaluation of the computerized assessment experience. Participants shared views on the need for a balance between human and computer intervention and saw room for improvement in test design and utility.

Discussion

Careful design and user-testing should be made a priority in the development of computerized assessment interfaces, and the cognitive assessment process should be reevaluated to minimize patient anxiety and discomfort. Future research should move toward continuous data capture within clinical trials and ensure that instruments are highly reliable to reduce variance.

Keywords: Cognitive assessment, Patient experience, Qualitative interviews, Alzheimer's disease, Dementia, Computerized assessment

Highlights

- Computerized assessment can enhance the patient-clinician relationship.
- There is a need for balance between human and computer intervention.
- Strategies to ease anxiety in computerized assessment should be further explored.
- Careful design and user-testing should be a priority in computerized assessment.

1. Background

In response to the burden and rising costs associated with Alzheimer disease (AD), there is a policy consensus on the public health benefit of early intervention. By the time AD is diagnosed, many patients have suffered significant neuronal injury that is believed to be irreversible. With no preventive or disease-modifying therapies available [1], early detection of cognitive decline is becoming increasingly critical in parallel with advances in therapeutics [2]. As a result, cognitive assessments are being used to assist with earlier diagnosis, to evaluate treatments, and as potential outcome measures in clinical trials [3], [4].

Cognitive assessments are available in a range of settings, including at home and at the clinic [5]. Currently available methods of evaluation include the following: neuropsychological assessments conducted by a clinical neuropsychologist; pen-and-paper screening tools; and computerized versions of cognitive tests [2]. As technology advances, clinical trials are moving away from conventional methods and adopting validated computerized tests that can sensitively capture cognitive changes in secondary prevention cohorts [4]. Computerized cognitive assessments can offer novel benefits over traditional tests, such as precisely recording accuracy and speed, minimizing floor and ceiling effects, and offering a standardized format unaffected by examiner bias, all of which are critical in detecting AD-related cognitive change [2]. Furthermore, computerized cognitive assessments can reduce time and costs [2], [6].

While computerized assessments are increasingly accepted, several limitations to their utility remain. Computerized adaptations of existing tests may not have the same psychometric properties as pen-and-paper versions and thus require their own validation studies. In addition, few studies have found substantive evidence that patients experience computerized and noncomputerized test administration as equivalent [2], [7]. Finally, computerized assessments raise issues related to the impersonal nature of testing, particularly at a time when end-users may feel vulnerable.

Compounding these considerations, many older adults face unique barriers in adopting technology compared with their younger counterparts [8]. Multiple studies have cited age-related sensory, motor, and cognitive declines as the most common physical obstacles faced by older adults when using computers [9], [10]. These changes can limit the ability of older adults to perform computer-based tasks and thus have important implications for the design of computerized interfaces.

A growing body of research examines the broader social context in which older adults interact with computer technology. Resistance to using computers is associated with the perception that computer use is discordant with social and cultural values, such as freedom and the need for social interaction [11]. Older adults who regularly use computer technology tend to be younger, highly educated, and financially affluent, suggesting that older adults' experiences and comfort with computer technology may also be colored by social, cultural, and economic factors [8], [12]. Taken together, these findings related to physical and social barriers in using computer technology highlight the importance of considering the voices of end-users in the development and implementation of computerized assessments.

Despite the abundance of literature on aging and computer experience, little is known about how these factors intersect to affect individuals in a clinical setting. This study addressed this gap through a series of qualitative interviews with patients undergoing the Cognigram [Cogstate] test.

2. Methods

2.1. Setting and context

We conducted a qualitative study using semi-structured interviews to explore patient perspectives on the experience of a computerized cognitive assessment for AD. The study took place at the University of British Columbia Hospital Clinic for Alzheimer Disease and Related Disorders.

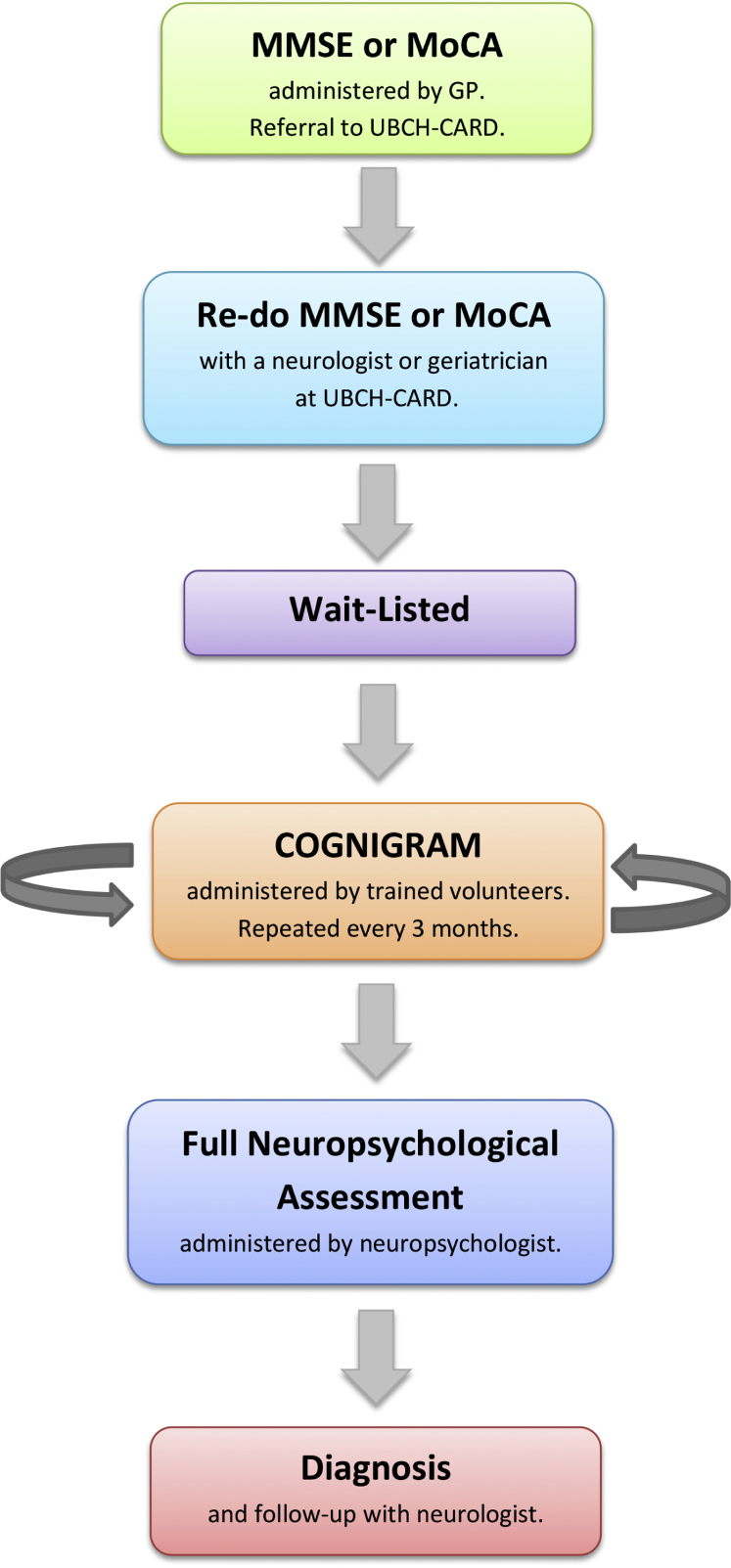

During the study period, clinicians at the University of British Columbia Hospital Clinic for Alzheimer Disease and Related Disorders were testing a computerized cognitive assessment called the Cognigram [Cogstate], a computerized test battery designed to detect and monitor changes in cognitive function over time (Fig. 1). Patients were asked to take the Cognigram test every 3 months until either their test scores declined or they reached the top of the waitlist.

Fig. 1.

Integration of the Cognigram test into the cognitive assessment and diagnostic process. Abbreviations: UBCH-CARD, University of British Columbia Hospital Clinic for Alzheimer Disease and Related Disorders; MMSE, Mini–Mental State Examination; MoCA, Montreal Cognitive Assessment.

Patients scheduled to undergo their first cognitive assessment using the Cognigram were invited to participate in our study. Patients were eligible if they (1) were English-speaking, (2) resided in British Columbia, (3) were taking the Cognigram test, and (4) were available for two interview segments. Patients with advanced cognitive impairment (scoring at or below the second percentile on the neuropsychological assessment) were excluded.

The interview guide was developed from the research questions and a review of the existing literature. The guide was then piloted, reviewed by colleagues for feedback, and modified through an iterative process to focus on four main topic areas: (1) expectations for the visit, (2) evaluation of the experience, (3) trust in the process, and (4) influence of computerized assessment on the patient-physician relationship (Table 1). Ethics approval was received from the University of British Columbia Office of Research Ethics, and written consent was obtained from each participant.

Table 1.

Research focus areas and example questions from the interview guide

| Focus area | Example question(s) from the interview guide |
|---|---|
| Expectations about the computerized assessment test | Why do you think you are taking this test today? What do you hope to gain from taking the test? |
| Perspectives/evaluations of the test-taking experience | Do you think that your performance on the test today reflects your true abilities? Why or why not? |
| Trust in the process | What do you think about the use of computers to detect or diagnose a health problem? |
| Computerized assessment and the patient-physician relationship | When it comes to evaluating your health, which would you trust more: a doctor's opinion OR a computer test result? |

2.2. Data collection

One-on-one, in-person interviews were conducted between January and October 2016. Each participant completed a pretest interview (10–20 minutes) immediately before the Cognigram assessment and a post-test interview (30–60 minutes). Interviews were audio-recorded and transcribed verbatim.

2.3. Data analysis

Thematic analysis was applied to the data through a constant comparison between the research questions, interview guide, and transcripts. To facilitate code development, sections of text were labeled with codes based on questions in the interview guide and emerging themes. A rich coding strategy was used to allow for a single segment of text to be labeled with more than one code. Interviews were coded in this manner by one to three investigators, and a preliminary coding guide was generated.

To ensure rigor within the analyses, 15% of the transcripts were independently coded by two investigators using the preliminary coding guide. Percentage agreement was calculated, and initial disagreements were resolved through discussion to achieve consensus. Subsequently, the coding guide was revised using an iterative process, in which three investigators collectively discussed modifications to reduce ambiguity and condense codes to generate the final guide.
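Percentage agreement is the proportion of coding decisions on which the two coders concur. The study does not state how decisions were counted under rich coding, where one segment can carry several codes, so the Python sketch below assumes one yes/no decision per (segment, code) pair. The function name, segment IDs, and code labels are hypothetical illustrations, not the study's data or procedure.

```python
from typing import Dict, List, Set


def percentage_agreement(
    coder_a: Dict[str, Set[str]],
    coder_b: Dict[str, Set[str]],
    codebook: List[str],
) -> float:
    """Return the percentage of (segment, code) decisions on which two coders agree.

    Hypothetical helper: each text segment is treated as one yes/no decision per
    code in the codebook, so a segment may carry several codes (rich coding).
    """
    segments = sorted(set(coder_a) | set(coder_b))
    decisions = 0
    agreements = 0
    for segment in segments:
        codes_a = coder_a.get(segment, set())
        codes_b = coder_b.get(segment, set())
        for code in codebook:
            decisions += 1
            # Agreement means both coders applied the code, or both left it off.
            if (code in codes_a) == (code in codes_b):
                agreements += 1
    return 100.0 * agreements / decisions if decisions else 0.0


# Hypothetical codebook and codings for three transcript segments.
codebook = ["acceptance", "balance", "anxiety", "design"]
coder_a = {"s1": {"acceptance"}, "s2": {"balance", "anxiety"}, "s3": {"design"}}
coder_b = {"s1": {"acceptance"}, "s2": {"balance"}, "s3": {"design", "anxiety"}}

# 3 segments x 4 codes = 12 decisions; the coders differ only on "anxiety"
# in s2 and s3, so agreement is 10/12.
print(f"{percentage_agreement(coder_a, coder_b, codebook):.1f}%")  # 83.3%
```

Under this convention, codes that both coders leave unapplied count as agreement, which tends to push the figure upward when the codebook is large; chance-corrected statistics such as Cohen's kappa are a common complement to raw percentage agreement.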

Following the coding of all transcripts, coded segments were reviewed in a process of constant comparison to generate overarching categories and concepts. A collective examination of the data was conducted to identify relationships between themes and provide answers to the research questions.

3. Results

3.1. Sociodemographic characteristics

Nineteen participants undergoing cognitive assessment at University of British Columbia Hospital Clinic for Alzheimer Disease and Related Disorders were interviewed (16 females; 3 males). The mean age of participants was 66 years, with a range of 60–73 years. Participants represented a diversity of sociodemographic characteristics (Table 2) and ethnic and cultural backgrounds (for full list see Supplementary Table 1).

Table 2.

Sociodemographic characteristics of interviewed participants

| Characteristic | Number of participants (n = 19) |
|---|---|
| Sex | |
| Female | 16 |
| Male | 3 |
| Age (years) | |
| 60–65 | 4 |
| 66–70 | 3 |
| 71–75 | 2 |
| Did not disclose | 10 |
| Education level | |
| High school (incomplete) | 1 |
| High school (complete) | 6 |
| College | 3 |
| University | 9 |
| English ability | |
| First language | 14 |
| Second language | 4 |
| Third language | 1 |

3.2. Attitudes toward computers in healthcare

In assessing participant views on the use of computers to diagnose or detect health conditions, two interrelated themes emerged: (1) growing acceptance of computers as a tool to improve healthcare and (2) the need for balance between human and computer intervention.

3.2.1. Acceptance of computers in healthcare

Participant responses reflected a growing acceptance of the use of computers to detect health conditions. Participants cited advantages associated with computerized diagnosis, such as increased speed, efficiency and accuracy, mitigation of human errors, and more frequent data capture.

Some participants expressed hope that computers would free up clinicians' time, allowing for longer in-person consultations, and saw potential for computers to reduce patient anxiety during the assessment, as illustrated in the following excerpt:

“I'm always nervous when I have to talk to people, especially since I forget words and then […] I know the person is waiting, doesn't have that much time and I'm frantically trying to remember the word to make a sentence. And then I get more upset, so maybe a computer is better.” (P19)

3.2.2. The need for balance between human and computer intervention

Positive attitudes toward the use of computers in healthcare were moderated by a desire for the human touch and an overarching trust in humans over machines. One participant's response captured the importance of maintaining a balance between human and computer intervention:

“Because when you're stressed and think you're going to lose your mind, it's helpful to have somebody […] asking the questions.” (P4)

Over half of the participants echoed this belief, noting lack of human perception and inability to provide comfort and communicate as potential drawbacks of using computer technology:

“Well, there are pluses and minuses. Eh, [a computer test] is unbiased right. But on the other hand, a perceptive interviewer will read more than just the strict answer, right. It will be the body language, will be the tone and whatever.” (P17)

In line with these findings, 63% of participants stated that they would trust their doctor's opinion over a computer test result, whereas 26% said they would trust the computer and the doctor equally. Common reasons for participants' preferential trust in physicians included (1) an existing patient-physician relationship, (2) the ability of physicians to engage in two-way communication and answer questions, and (3) a lack of understanding of, or familiarity with, computers.

Despite the unique advantages offered by computerized assessment tools, participants ultimately placed their trust in the physician to integrate and evaluate the raw data from diagnostic tests, analog or computerized, to inform their diagnosis.

3.3. Attitudes toward the cognitive assessment process

General attitudes toward the cognitive assessment process were mixed. When asked about their concerns about the test-taking process and its outcomes, the concept of “I'll do as well as I'll do” frequently featured in participant responses. For these individuals, viewing the test as one part of a larger process and acknowledging the impact of their attitude on performance helped them to navigate the experience and ameliorate negative feelings toward taking the test.

Empowerment also played a central role in people's expectations and attitudes. Some individuals expressed excitement or relief about undergoing the cognitive assessment, viewing it as a chance to address their concerns as noted by this participant:

“The outcomes, whatever they are, they just give you -from my perspective you've won the opportunity to decide how they're going to proceed. […] It gives them solid, concrete, objective information by which to make decisions.” (P3)

On the opposite end of the spectrum, others viewed the test as an obligation. Test-taking anxiety, the tendency to give up under stressful conditions, fear of performing poorly, and fear of being diagnosed with AD constituted negative attitudes toward the cognitive assessment process, as illustrated by the following excerpt:

“When I have an exam, some exams, I get very scared and I even forget the language sometimes. Even though I prepare, prepare, prepare, I'm just always scared.” (P19)

Although most participants reported that their attitudes toward the assessment were not affected by the computerized assessment modality, some believed taking a computerized test had the potential to affect their performance either negatively or positively. Participants with limited knowledge of computers or with frustrations with computers were more likely to worry about performing poorly. Conversely, those who had more positive experiences with computers were more likely to view computerized assessment methods as a way to improve the cognitive assessment process.

3.4. Evaluating the computerized assessment experience

Through our exploration of participant experiences with computerized cognitive testing, we identified several areas of interest including the following: self-perception of performance, praise and criticisms of the test, and trust in the test.

3.4.1. Self-perception of performance

Seventy-four percent of participants believed that their performance on the test was reflective of their true abilities. However, over half of the participants mentioned the presence of extraneous factors that may have affected their performance. Such factors included the presence of another health condition such as attention deficit hyperactivity disorder, as well as issues with motor control as described in the following quote:

“You just have to be fast enough, to grasp it. And sometimes you're fast, but you're hitting the wrong button. You're really wanting to hit this one, but you're automatically hitting the other -wrong.” (P2)

Perceptions of poor performance were often accompanied by self-blame and regret:

“I could have done better. I should have done better.” (P4)

One participant mentioned culture as a lens that affected their feelings toward the test:

“I come from a culture where tests are very important. And you're supposed to learn from every test and do better right? Well, there's no way I can do better, so that is frustrating. I cannot learn the facts and say that next time I will know better.” (P19)

3.4.2. Praise and criticisms of the test

Several participants were satisfied with the simple design of the Cognigram test and found the instructions easy to understand. However, most participants believed that there was room for improvement. The following quotes exemplify major criticisms of the testing experience:

Design: “Part of the problem is that it was cards and suits. I don't play cards, so diamonds and hearts I can sort of remember, but I can't really tell the difference between shamrocks and shovels -spades- and that made it difficult.” (P16)

Length/intensity: “I didn't like test three. I think it was too long. […] it was a little bit too intense and had the potential of producing anxiety […]” (P3)

Feedback: “[The feedback sound] makes you more aware, but it also makes you aware that right then you screwed up. So if you screw up two or three in a row, for me, I get flustered. So from that point, it wasn't beneficial.” (P6)

3.4.3. Trust in the test

When asked about their trust in the Cognigram test, 47% of the participants expressed uncertainty about the test's utility in detecting AD or related health conditions. Lack of trust in the test was frequently associated with motor control issues such as being “trigger happy” and impulsive:

“I do know that most of my failings were due to my impulsiveness. But impulsiveness, it is a trait; it is not a cognition thing. So it will not really, in my eyes, it will not really measure my cognition.” (P17)

No motor considerations were raised other than impulsiveness when clicking through the test. However, our sample did not include anyone in the “oldest old” age group (aged 80 years and older). Motor issues may be a key barrier for this age group and should be considered when evaluating computerized screening in this population.

4. Discussion

Findings from this study emphasize (1) the potential for computerized assessment to enhance the patient-clinician relationship, (2) the need to reframe the assessment process to reduce test-taking anxiety, (3) the importance of design and user-testing for computerized cognitive assessment interfaces, and (4) the implications of computerized assessments for reducing variance and increasing the reliability of clinical trials.

In a paper examining technology and humane care, Barnard and Sandelowski [13] argued that technology itself is not the reason for dehumanized care; instead, poor care stems from the manner in which care providers operate and interact with these technologies [10]. Our results are consistent with this view. Participants emphasized the need for balance between human and computer intervention, suggesting that the discordance between technology and person-focused care may be presumed rather than actual. Participants viewed the advantages and drawbacks of computerized cognitive assessments and the strengths and weaknesses of human-based methods as complementary. For example, participants felt the speed and efficiency afforded by computer tests could increase the time available for patient-physician communication. Participants also raised the mitigation of human error and bias, efficiency, and more frequent data capture as important means of improving the diagnostic process. Within clinical trials, these attributes of computerized testing can offer practical benefits when making statistical decisions about cognitive change, such as reducing error variance and increasing reliability [14]. This, in turn, can be important for early-stage clinical trials, which typically recruit only a small number of participants [15].

Research has shown that test anxiety can have a significant effect on cognitive performance [16], [17]. One potential shortcoming of computerized assessment of older patients is the anxiety individuals may experience when confronted with unfamiliar equipment [18]. When taking the Cognigram test, some participants gave up when the test became too hard. Others felt that stressful circumstances during the test, such as time pressure and error sounds, increased the number of errors they made. As a result, an individual's performance may not accurately reflect their cognitive ability. In our sample, although 74% of participants felt the test reflected their abilities, 47% expressed uncertainty about its usefulness in detecting AD. This disconnect between perceptions of performance and perceptions of usefulness may hinder the adoption and widespread use of cognitive assessment technologies, as users lack confidence both in the benefits of the tool and in their ability to realize those benefits. Ultimately, the success of computerized assessment depends on users' comfort with the technology. Reframing the assessment process so that patients are invited to provide information about their cognitive status through an activity, rather than a test, may benefit those who experience test-taking anxiety. Moreover, data captured in clinical trials must be valid and of high quality, as unfavorable testing conditions may limit opportunities to capture assessment data and may affect test results [19].

Participants' experiences of anxiety and stress during the Cognigram assessment highlight the importance of design and user-testing for computerized cognitive tests. While some participants found the error sounds anxiety-producing, others interpreted the sound as feedback confirming their personal health concerns. In previous studies, self-diagnosing behavior has been shown to guide health decision-making and can harm patient safety and well-being through delayed diagnosis, misdiagnosis, and inappropriate treatment [20]. In addition, 25% of participants were not familiar with playing cards, the testing paradigm used in the Cognigram, and felt that this may have affected their performance. Across repeated assessments, easing anxiety and growing familiarity with an initially unfamiliar format can themselves improve scores independently of cognition, producing learning effects [21]. Disregarding these learning effects in the development of computerized cognitive assessments can lead to incorrect conclusions about interventions and inaccurate cognitive evaluation within clinical trials [21], [22]. Because older adults typically use computers less frequently, they are at greater risk of test anxiety, and developers must be aware of the design implications of computerized assessments to ensure patient safety and optimize testing outcomes.

One proposed way to ensure that technologies for the assessment and diagnosis of AD maximize benefits for patients while minimizing potential harms is to engage end-users throughout the development process. Several models have been put forward to address this goal, such as patient engagement [23], user-centered design [24], and participatory design [25]. These user engagement strategies not only democratize the development process but also allow technology developers to benefit from the experience and knowledge of target end-users, whether patients, family members, caregivers, or healthcare professionals.

This study has several limitations related to the sample and setting. First, participants were recruited through convenience sampling. This may have limited cultural representation, as all participants were either born in Canada or immigrated more than 25 years ago, and many of those who identified as immigrants reported that assimilation into Canadian culture was important to them. Second, participants took the Cognigram test as part of a research study rather than as a standardized component of the clinical assessment process. Participants' attitudes toward the assessment experience and their motivation to perform well may differ in a research context compared with a diagnostic setting.

This study provides new insight into the attitudes and perceptions of individuals undergoing computerized cognitive assessment. Notably, participants expressed a growing acceptance of the use of computerized tests for detecting cognitive impairment, viewing it as a potential tool to improve quality of patient care and the patient-clinician relationship when used in conjunction with the “human touch.” Adverse effects impacting the patient experience of clinical testing can increase the risk of learning effects and lead to inaccurate intervention conclusions and assessment results. Results from this study highlight the need for careful design and user-testing of computerized assessment interfaces to minimize these effects.

To maximize the information obtained during early-stage clinical trials, changes in performance must be clearly distinguishable over time [26]. Future research should therefore move toward continuous data capture rather than capturing isolated data points. Implementing at-home cognitive testing could support continuous data capture, allowing older adults to complete testing more regularly and potentially reducing anxiety by letting them test in their preferred setting. In addition, using highly reliable computerized assessments would improve the interpretation of test results within clinical trials and help reduce “noise” and variability across instruments.

Research in Context.

1. Systematic review: The authors reviewed the literature using academic and medical databases (PubMed, Google Scholar, Cochrane Library, and Medline) and identified a lack of evidence on patient perspectives of computerized cognitive assessment.
2. Interpretation: Our study suggests that computer interventions can enhance the quality of patient care and the patient-clinician relationship when balanced with human interaction. Areas for improvement include remodeling the assessment process to reduce test-taking anxiety and minimize learning effects.
3. Future directions: Future work should explore strategies to minimize patient anxiety and discomfort in cognitive assessments and further investigate ways to improve the design and utility of computerized assessment tools in line with patient values. Moving toward continuous data capture and greater use of at-home cognitive assessment should also be explored.

Acknowledgments

The authors gratefully acknowledge Mannie Fan, Clinical Research Coordinator at UBCH CARD, for her invaluable assistance in scheduling participants and Dr. Jordan Tesluk and Dr. Daniel Buchman for their insightful comments on the interview guide.

This work was funded by the Canadian Consortium on Neurodegeneration in Aging, the Canadian Institutes of Health Research, the British Columbia Knowledge Development Fund, the Canada Foundation for Innovation, and the Vancouver Coastal Health Research Institute.

Footnotes

Supplementary data related to this article can be found at https://doi.org/10.1016/j.trci.2018.06.003.


References

1. Walsh D.M., Teplow D.B. Alzheimer's disease and the amyloid β-protein. Prog Mol Biol Transl Sci. 2012;107:101–124. doi: 10.1016/B978-0-12-385883-2.00012-6.

2. Wild K., Howieson D., Webbe F., Seelye A., Kaye J. The status of computerized cognitive testing in aging: a systematic review. Alzheimers Dement. 2008;4:428–437. doi: 10.1016/j.jalz.2008.07.003.

3. Jutten R.J., Harrison J., de Jong F.J., Aleman A., Ritchie C.W., Scheltens P. A composite measure of cognitive and functional progression in Alzheimer's disease: Design of the Capturing Changes in Cognition study. Alzheimers Dement (N Y). 2017;3:130–138. doi: 10.1016/j.trci.2017.01.004.

4. Buckley R.F., Sparks K.P., Papp K.V., Dekhtyar M., Martin C., Burnham S. Computerized cognitive testing for use in clinical trials: a comparison of the NIH toolbox and Cogstate C3 batteries. J Prev Alzheimers Dis. 2017;4:3–11. doi: 10.14283/jpad.2017.1.

5. Livingston G., Sommerlad A., Orgeta V., Costafreda S.G., Huntley J., Ames D. Dementia prevention, intervention, and care. Lancet. 2017;390:2673–2734. doi: 10.1016/S0140-6736(17)31363-6.

6. Wesnes K.A. Moving beyond the pros and cons of automating cognitive testing in pathological aging and dementia: the case for equal opportunity. Alzheimers Res Ther. 2014;6:58. doi: 10.1186/s13195-014-0058-1.

7. Wagner G.P., Trentini C.M. Assessing executive functions in older adults: a comparison between the manual and the computer-based versions of the Wisconsin Card Sorting Test. Psychol Neurosci. 2009;2:195–198.

8. Smith A. Older Adults and Technology Use. Pew Research Center: Internet, Science & Tech; 2014. Available at: http://www.pewinternet.org/2014/04/03/older-adults-and-technology-use

9. Williams D., Alam M.A.U., Ahamed S.I., Chu W. Considerations in designing human-computer interfaces for elderly people. In: 2013 13th International Conference on Quality Software; 2013:372–377.

10. Hawthorn D. Possible implications of aging for interface designers. Interact Comput. 2000;12:507–528.

11. Hakkarainen P. ‘No good for shovelling snow and carrying firewood’: social representations of computers and the internet by elderly Finnish non-users. New Media Soc. 2012;14:1198–1215.

12. Werner J.M., Carlson M., Jordan-Marsh M., Clark F. Predictors of computer use in community-dwelling, ethnically diverse older adults. Hum Factors. 2011;53:431–447. doi: 10.1177/0018720811420840.

13. Barnard A., Sandelowski M. Technology and humane nursing care: (ir)reconcilable or invented difference? J Adv Nurs. 2001;34:367–375. doi: 10.1046/j.1365-2648.2001.01768.x.

14. Collie A., Maruff P., Darby D.G., McStephen M. The effects of practice on the cognitive test performance of neurologically normal individuals assessed at brief test–retest intervals. J Int Neuropsychol Soc. 2003;9:419–428. doi: 10.1017/S1355617703930074.

15. Collie A., Darekar A., Weissgerber G., Toh M.K., Snyder P.J., Maruff P. Cognitive testing in early-phase clinical trials: development of a rapid computerized test battery and application in a simulated Phase I study. Contemp Clin Trials. 2007;28:391–400. doi: 10.1016/j.cct.2006.10.010.

16. Spielberger C.D. Test Anxiety Inventory. In: The Corsini Encyclopedia of Psychology. New Jersey, USA: John Wiley & Sons, Inc.; 2010.

17. Eysenck M.W., Calvo M.G. Anxiety and performance: the processing efficiency theory. Cogn Emot. 1992;6:409–434.

18. Cassady J. The impact of cognitive test anxiety on text comprehension and recall in the absence of external evaluative pressure. Appl Cogn Psychol. 2004;18:311–325.

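19. Institute of Medicine (US) Committee on Health Research and the Privacy of Health Information: The HIPAA Privacy Rule; Nass S.J., Levit L.A., Gostin L.O., editors. The value, importance, and oversight of health research. In: Beyond the HIPAA Privacy Rule: Enhancing Privacy, Improving Health Through Research. Washington (DC): National Academies Press (US); 2009.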

20. Liebert R.M., Morris L.W. Cognitive and emotional components of test anxiety: a distinction and some initial data. Psychol Rep. 1967;20:975–978. doi: 10.2466/pr0.1967.20.3.975.

21. Hausknecht J.P., Halpert J.A., Di Paolo N.T., Moriarty Gerrard M.O. Retesting in selection: a meta-analysis of coaching and practice effects for tests of cognitive ability. J Appl Psychol. 2007;92:373–385. doi: 10.1037/0021-9010.92.2.373.

22. Salthouse T.A., Toth J., Daniels K., Parks C., Pak R., Wolbrette M. Effects of aging on efficiency of task switching in a variant of the trail making test. Neuropsychology. 2000;14:102–111.

23. Silverberg N.B., Ryan L.M., Carrillo M.C., Sperling R., Petersen R.C., Posner H.B. Assessment of cognition in early dementia. Alzheimers Dement. 2011;7:e60–e76. doi: 10.1016/j.jalz.2011.05.001.

24. Ryan A., Wilson S. Internet healthcare: do self-diagnosis sites do more harm than good? Expert Opin Drug Saf. 2008;7:227–229. doi: 10.1517/14740338.7.3.227.

25. Hayden K.M., Makeeva O.A., Newby L.K., Plassman B.L., Markova V.V., Dunham A. A comparison of neuropsychological performance between US and Russia: preparing for a global clinical trial. Alzheimers Dement. 2014;10:760–768.e1. doi: 10.1016/j.jalz.2014.02.008.

26. Beglinger L.J., Gaydos B., Tangphao-Daniels O., Duff K., Kareken D.A., Crawford J. Practice effects and the use of alternate forms in serial neuropsychological testing. Arch Clin Neuropsychol. 2005;20:517–529. doi: 10.1016/j.acn.2004.12.003.
