Abstract

Current status data occur in many biomedical studies where we only know whether the event of interest occurs before or after a particular time point. In practice, some subjects may never experience the event of interest, i.e., a certain fraction of the population is cured or is not susceptible to the event of interest. We consider a class of semiparametric transformation cure models for current status data with a survival fraction. This class includes both the proportional hazards and the proportional odds cure models as two special cases. We develop efficient likelihood-based estimation and inference procedures. We show that the maximum likelihood estimators for the regression coefficients are consistent, asymptotically normal, and asymptotically efficient. Simulation studies demonstrate that the proposed methods perform well in finite samples. For illustration, we provide an application of the models to a study on the calcification of the hydrogel intraocular lenses.

Keywords: Box-Cox transformation, Cure fraction, Empirical process, NPMLE, Proportional hazards cure model, Proportional odds cure model, Semiparametric efficiency

1 Introduction

Current status data occur in many biomedical and health studies, where the exact onset of the event of interest cannot be observed directly and we only know whether or not the event has occurred at the time the sample is collected. For example, in a tumorigenicity experiment involving lung tumors in mice (Hoel and Walburg, 1972), only an indicator of lung tumor presence or absence at the time of death was observed. A second example is the Calcification study of hydrogel intraocular lenses (IOLs) (Yu et al, 2001); IOL calcification is an infrequently reported complication of cataract treatment. The exact time to the occurrence of calcification is not observable; instead, the calcification status was observed at the time of examination.

Current status data have been investigated extensively in the literature. Groeneboom and Wellner (1992) derived the nonparametric maximum likelihood estimator (NPMLE) of the survival function of the time to event. Methods for the regression analysis of current status data include the Cox proportional hazards model (Huang, 1996), the proportional odds model (Rossini and Tsiatis, 1996; Huang and Rossini, 1997), and the additive hazards model (Lin et al, 1998). More recently, partly linear accelerated failure time (AFT) models and partly linear transformation models were proposed by Xue et al (2004) and Ma and Kosorok (2005), respectively. All of the aforementioned methods implicitly assume that the event will eventually occur for every subject, given a sufficiently long follow-up.

In many studies, it is observed that a proportion of subjects never experiences the event of interest, i.e., they are cured or not susceptible to the event of interest. The aforementioned standard survival analysis techniques may no longer be appropriate in such situations. Alternatively, survival models that allow for a cured subgroup are more desirable. Such models generally are referred to as “cure models”. Under the cure model, the survival function, denoted by S, is allowed to be improper, i.e., S(∞) > 0. There is an abundant literature on cure rate models incorporating a cure fraction for right censored data. Perhaps the most commonly used cure rate model is the mixture model first introduced by Berkson and Gage (1952). This model assumes that the underlying population consists of a cured sub-population and a sub-population not being cured. This mixture cure model and its extensions have been studied by many investigators, including Farewell (1982, 1986), Gray and Tsiatis (1989), Kuk and Chen (1992), Taylor (1995), Sy and Taylor (2000), Peng and Dear (2000), Betensky and Schoenfeld (2001), and Lu and Ying (2004), among others. A comprehensive discussion of the mixture cure model is given in Maller and Zhou (1996).

The mixture cure model, however, has several drawbacks from both frequentist and Bayesian perspectives, as noted by Chen et al (1999) and Ibrahim et al (2001). One particular drawback is that the mixture cure model does not appear to describe the underlying biological process generating the failure time in the context of cancer relapse. Moreover, a test of the effect of a single covariate (on both the cure fraction and the hazard rate in the uncured sub-population) has two degrees of freedom, which can compromise the power of the test. Furthermore, the EM algorithm is often used to estimate the unknown parameters in mixture cure models. This may increase the computational burden, especially when the M-step has no closed-form solution. Additionally, in Bayesian inference, the mixture cure model can yield improper posterior distributions for many non-informative improper priors, including the uniform prior for the regression coefficients.

Alternatively, Yakovlev and Tsodikov (1996) proposed the promotion time cure model, which was further studied by Tsodikov (1998), Chen et al (1999), and Tsodikov et al (2003), among others. This model has several attractive features. It has a proportional hazards structure, which is desirable in survival models. It yields proper posterior distributions under a wide class of non-informative improper priors for the regression coefficients. It also has biological motivations (Chen et al, 1999). In this model, the population survival function for a subject with covariates Z is given by

$$S_{pop}(t \mid Z) = \exp\{-e^{\beta^T Z} F(t)\}, \tag{1}$$

where F(t) is a distribution function. This model integrates the survival functions of cured and non-cured subjects into a single formulation and assumes that cured subjects have a survival time equal to infinity. The hazard function corresponding to (1) is $\lambda_{pop}(t \mid Z) = e^{\beta^T Z} f(t)$, where f(t) = dF(t)/dt. The cure rate under model (1) is $\lim_{t\to\infty} S_{pop}(t \mid Z) = \exp(-e^{\beta^T Z})$. The promotion time cure model is also referred to as the proportional hazards cure model.

In fact, the promotion time cure model can be derived from a biological process generating the relapse time in cancer patients. For a cancer patient, let N denote the number of carcinogenic cells, which is assumed to follow a Poisson distribution with mean θ. Here θ may depend on covariates, e.g., $\theta = e^{\beta^T Z}$. We denote by Yi the random time for the ith carcinogenic cell to produce a detectable cancer mass. We assume that the Yi's are i.i.d. with distribution function F(·) and are independent of N. The patient is cancer-free by time t if and only if none of the N cells has produced a detectable mass by t. Hence the probability of being cancer-free by time t is $\sum_{k=0}^{\infty} P(N = k)\{1 - F(t)\}^k = \exp\{-\theta F(t)\}$, which takes the same form as the survival function in (1).
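The Poisson argument above can be checked numerically. The following minimal Monte Carlo sketch uses only the Python standard library. It draws N ~ Poisson(θ) carcinogenic cells and records whether any of them is promoted by time t. Only the event {Yj ≤ t}, which has probability F(t), matters, so the promotion times themselves are not drawn. The function names and the inputs in the usage note are illustrative assumptions, not quantities from any study.

```python
import math
import random


def poisson_draw(theta, rng):
    """Draw N ~ Poisson(theta) by Knuth's multiplication method (fine for small theta)."""
    limit = math.exp(-theta)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def cancer_free_prob_mc(theta, F, t, n_sim=100_000, seed=1):
    """Monte Carlo estimate of P(no carcinogenic cell produces a detectable mass by t).

    Each of the N ~ Poisson(theta) cells has promotion time Y_j with cdf F,
    so Y_j <= t happens with probability F(t); only that event is simulated.
    """
    rng = random.Random(seed)
    p_t = F(t)
    free = 0
    for _ in range(n_sim):
        n_cells = poisson_draw(theta, rng)
        # cancer-free by t iff no cell has Y_j <= t
        if all(rng.random() >= p_t for _ in range(n_cells)):
            free += 1
    return free / n_sim
```

As a usage example, take θ = e^{0.3}, F(s) = 1 − e^{−s}, and t = 1 (arbitrary values). The estimate `cancer_free_prob_mc(theta, F, 1.0)` should then be close to exp{−θF(1)} ≈ 0.43.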

To further increase the flexibility of the promotion time cure model, Zeng et al (2006b) proposed the following class of cure rate models based on Box-Cox-type transformations:

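$$S_{pop}(t \mid Z) = G_\gamma\{e^{\beta^T Z} F(t)\}, \tag{2}$$

where

$$G_\gamma(x) = \begin{cases} (1 + \gamma x)^{-1/\gamma}, & \gamma > 0, \\ e^{-x}, & \gamma = 0. \end{cases}$$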

This class of models includes the proportional hazards cure model and the proportional odds cure model as special cases. When γ = 0, model (2) reduces to the proportional hazards cure model (1); when γ = 1, model (2) has the proportional odds structure. This class of cure models has a sound biological basis and provides flexible alternatives to the mixture cure models.
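A minimal Python sketch of the transformation Gγ and of the population survival and cure rate implied by model (2) follows. The function names are illustrative. F is passed as any callable distribution function, which is a hypothetical input here.

```python
import math


def G(x, gamma):
    """Box-Cox-type transformation G_gamma(x) for x >= 0 and gamma >= 0."""
    if x < 0:
        raise ValueError("x must be non-negative")
    if gamma == 0:
        return math.exp(-x)                      # proportional hazards cure model
    return (1.0 + gamma * x) ** (-1.0 / gamma)   # gamma = 1: proportional odds


def linear_predictor(beta, z):
    return sum(b * zj for b, zj in zip(beta, z))


def population_survival(t, z, beta, F, gamma):
    """S_pop(t | Z) = G_gamma(exp(beta^T Z) F(t))."""
    return G(math.exp(linear_predictor(beta, z)) * F(t), gamma)


def cure_rate(z, beta, gamma):
    """Limit of S_pop(t | Z) as t -> infinity, i.e. G_gamma(exp(beta^T Z)), since F(inf) = 1."""
    return G(math.exp(linear_predictor(beta, z)), gamma)
```

For example, G(1, 0) = e^{−1}, G(1, 1) = 1/2, and G(2, 1/2) = (1 + 1)^{−2} = 1/4. As γ → 0, (1 + γx)^{−1/γ} tends to e^{−x}, which is why the γ = 0 case is defined separately.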

Studies of cure rate models for current status data are relatively limited. Lam and Xue (2005) considered a partly linear AFT model for susceptible subjects and used a sieve maximum likelihood approach. Ma (2008) and Ma (2009) used an additive risk model and linear or partly linear Cox models (Cox, 1972), respectively, to model the event time in the susceptible population. All three papers considered mixture cure rate models. Cook et al (2008) also considered parametric and semiparametric mixture cure models, but they focused only on estimating covariate effects on the cure rate. Liu and Shen (2009) considered the proportional hazards cure model for interval-censored data and proved the strong consistency of the maximum likelihood estimators under the Hellinger distance.

In this article, we investigate current status data with a cure fraction. In particular, we consider the class of transformation models (2). This class of models has been well studied for right-censored data, but it has not been used to analyze current status data, and the extension is far from trivial. Our research aims to fill this gap both theoretically and practically. In the next section, we derive the likelihood function and propose a maximum likelihood approach for estimation. In Section 3 we establish the asymptotic properties of the proposed maximum likelihood estimators (MLEs). In Section 4 we conduct simulation studies to evaluate the finite-sample properties of the estimators, and we also illustrate the proposed model with the Calcification data. We conclude with a brief discussion in Section 5 and provide technical details of the proofs in the Appendix.

2 Maximum Likelihood Estimation

Suppose that there are n i.i.d. observations. For i = 1, …, n, we observe {Yi, Δi = I(Ti ≤ Yi), Zi}. Here Ti is the failure time for subject i, Yi is the random censoring or inspection time, Zi is a vector of bounded covariates, and I(·) is the indicator function. The first component of Zi is 1. We assume that the inspection time Yi is conditionally independent of Ti given Zi.

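We consider model (2). For fixed γ, the observed-data likelihood function for the parameters (β, F) can be expressed as

$$L_n(\beta, F) = \prod_{i=1}^{n} \left[1 - G_\gamma\{e^{\beta^T Z_i} F(Y_i)\}\right]^{\Delta_i} \left[G_\gamma\{e^{\beta^T Z_i} F(Y_i)\}\right]^{1-\Delta_i}.$$
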
We wish to obtain the maximum likelihood estimators of (β, F) by maximizing the above likelihood function over the parameter space ℬ × ℱ, where ℬ and ℱ are the sets specified in conditions (C4) and (C5) of Section 3.

The MLEs exist because the likelihood function is bounded above by 1 and the parameter space is weakly compact. The MLEs, however, are not unique, because the likelihood function depends on F only through its values at the observed times Yi, i = 1, …, n. We therefore focus on maximizing Ln(β, F) over all nondecreasing step functions F with jumps at the Yi's. We denote the MLEs of β and F by β̂n and F̂n.

Furthermore, we claim that F̂n can only have jumps at those Yi's with Δi = 1. Let s1 < ··· < sm denote the ordered distinct values of the Yi's with Δi = 1. For a subject with Δi = 0 and sk < Yi < sk+1, consider two distribution functions F1 and F2 that take the same values at all time points except that F1(Yi) = F1(sk) and F2(Yi) > F1(sk). Since Gγ(·) is a decreasing function, this subject's contribution $G_\gamma\{e^{\beta^T Z_i}F(Y_i)\}$ is larger under F1, while all other contributions are unchanged; hence Ln(β, F1) > Ln(β, F2). Therefore, F̂n can have positive jumps only at sk, k = 1, …, m. Similarly, because F is a proper distribution function, F̂n(sm) = 1. We therefore maximize the likelihood function subject to the constraint 0 ≤ F(s1) ≤ ··· ≤ F(sm) = 1.
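The likelihood and the support argument above can be illustrated with a short Python sketch. It evaluates log Ln(β, F) from the values F(Yi), extracts the support points s1 < ··· < sm, and builds a right-continuous step function with jumps at those points. The toy data in the check are hypothetical. The check raises F at an inspection time with Δ = 0 and confirms that the log-likelihood decreases, as the argument predicts.

```python
import math


def G(x, gamma):
    """Box-Cox-type transformation G_gamma(x)."""
    return math.exp(-x) if gamma == 0 else (1.0 + gamma * x) ** (-1.0 / gamma)


def log_likelihood(beta, F_at_Y, Y, Delta, Z, gamma):
    """log L_n(beta, F); F enters only through its values F(Y_i), given in F_at_Y."""
    total = 0.0
    for fy, d, z in zip(F_at_Y, Delta, Z):
        s = G(math.exp(sum(b * zj for b, zj in zip(beta, z))) * fy, gamma)
        # Delta = 1 contributes 1 - S_pop(Y | Z); Delta = 0 contributes S_pop(Y | Z)
        total += math.log(1.0 - s) if d == 1 else math.log(s)
    return total


def support_points(Y, Delta):
    """Ordered distinct inspection times with Delta = 1, i.e. s_1 < ... < s_m."""
    return sorted({y for y, d in zip(Y, Delta) if d == 1})


def step_F(jumps, s):
    """Right-continuous step function with jump jumps[k] at s[k]."""
    def F(t):
        return sum(p for p, sk in zip(jumps, s) if sk <= t)
    return F
```

For example, with Y = (0.5, 1.0, 1.5, 2.0) and Δ = (1, 0, 1, 0), the support points are (0.5, 1.5). Moving F(1.0) from 0.5 up to 0.7 lowers the log-likelihood.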

The pool-adjacent-violators (PAV) algorithm (Barlow et al, 1972; Robertson et al, 1988) is often used in optimization problems with monotone constraints; see, e.g., Groeneboom and Wellner (1992), van der Laan and Jewell (2001), and Ma (2008, 2009). In this paper we instead use the following transformation:

$$F(s_k) = \frac{\sum_{j=1}^{k} e^{\alpha_j}}{1 + \sum_{j=1}^{m-1} e^{\alpha_j}}, \quad k = 1, \ldots, m-1, \qquad F(s_m) = 1,$$

and the constrained optimization problem reduces to an unconstrained maximization of the likelihood function over (β, α1, …, αm−1). For small m, the Newton-Raphson algorithm can be used to solve the score equations for β and {αk, k = 1, …, m − 1}. When m is large, we search for the optimum with the quasi-Newton method of Broyden, Fletcher, Goldfarb and Shanno (the BFGS algorithm), as described in Press et al (1992). At each iteration, the BFGS algorithm refines an approximation of the inverse Hessian matrix through a rank-two update that uses only gradient information. The Hessian therefore never has to be computed or inverted. Consequently, although the number of parameters under the proposed model increases with sample size, the computational burden for obtaining the NPMLEs remains manageable. In our experience, the BFGS algorithm performs well even when there are thousands of parameters. It has been applied successfully to optimization problems with a large number of parameters; see, e.g., Diao et al (2013) and Yuan and Diao (2014). It is well known that, for interval-censored data, the MLE of the distribution function may have zero mass at some inspection times even when Δ = 1. Numerically, the estimated jumps of F at those time points are negligible (< 10^{-6}). Zeng et al (2006a) used the same transformation technique to estimate the cumulative hazard function under the semiparametric additive risks model for interval-censored data.
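The following Python sketch shows one unconstrained reparameterization of this kind. It assumes a softmax-type form with αm fixed at 0, which is an assumption and not necessarily the exact map used by the authors. Any real vector (α1, …, αm−1) yields 0 < F(s1) < ··· < F(sm) = 1, and a very negative αk gives a near-zero jump at sk. The function names are hypothetical. The quasi-Newton optimization over (β, α) itself is not shown.

```python
import math


def alpha_to_F(alpha):
    """Map unconstrained (alpha_1..alpha_{m-1}) to (F(s_1), ..., F(s_m)).

    Assumed softmax-type form: jump at s_k is exp(alpha_k) / (1 + sum_j exp(alpha_j)),
    with alpha_m fixed at 0 so the last jump is 1 / (1 + sum_j exp(alpha_j)).
    """
    weights = [math.exp(a) for a in alpha] + [1.0]   # exp(alpha_m) = exp(0) = 1
    total = sum(weights)
    F, cum = [], 0.0
    for w in weights:
        cum += w
        F.append(cum / total)
    F[-1] = 1.0   # guard against rounding so that F(s_m) = 1 exactly
    return F


def F_to_alpha(F):
    """Inverse map; requires strictly positive jumps at every support point."""
    jumps = [F[0]] + [F[k] - F[k - 1] for k in range(1, len(F))]
    if min(jumps) <= 0:
        raise ValueError("all jumps must be positive")
    return [math.log(p / jumps[-1]) for p in jumps[:-1]]
```

Because the map is smooth and defined on all of ℝ^{m−1}, gradients with respect to α follow from the chain rule, which is what a BFGS-type routine needs.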

3 Asymptotic Properties

We first impose the following assumptions:

- (C1) Covariates Zi are bounded with probability one. Furthermore, if there exists a constant vector β such that $\beta^T Z_i = 0$ almost surely, then β = 0.

- (C2) Conditional on Zi, the inspection time Yi is independent of Ti.

- (C3) There exists a constant t0 < ∞ such that P(Ti > t0|Zi) = P(Ti = ∞|Zi) > 0 almost surely. Furthermore, the inspection time Yi is a random variable from a distribution with support [τl, τu], where 0 ≤ τl < t0 ≤ τu < ∞ and P(Yi ≥ t0|Zi) > 0.

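- (C4) The true value of β, denoted by β0, belongs to the interior of a known compact set $\mathcal{B} = \{\beta \in \mathbb{R}^d : \|\beta\| \le M\}$ for some known constant M > 0, where ||·|| is the Euclidean norm.

- (C5) The true promotion time cumulative distribution function F0 belongs to the space $\mathcal{F} = \{F : F \text{ is nondecreasing on } [0, \infty),\ F(0) = 0,\ F(t) = 1 \text{ for } t \ge t_0\}$.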

Remark 1

Condition (C1) is the usual linear independence condition of Zi in regression settings. Conditions (C2), (C4), and (C5) are standard assumptions in semiparametric inferences with failure time data. Condition (C3) assumes that no new cases occur after t0 and that t0 is in the support of inspection time. Under this assumption, all censored observations beyond t0 are treated as “Ti = ∞” (i.e., observed to be cured) and we can discriminate between cured and un-cured observations. The constant t0 serves as the “cure threshold” defined in Zeng et al (2006b). This condition is needed to ensure the identifiability of the unknown parameters (β, F). Note that for a parametric cure rate model, the condition that some subjects are observed to be cured is not needed. More details on the identifiability of cure models are provided in Li et al (2001). For practical data analysis, we can set t0 = sm or choose a threshold value suggested by clinicians. In the mixture cure rate model setting, Ma (2008, 2009) set Λ̂(t) = ∞ for t > max{Yi : i = 1, …, n}, where Λ is the baseline cumulative hazard function for the un-cured sub-population. This technique is essentially the same as setting t0 = sm.

Remark 2

For right-censored failure time data, Zeng et al (2006b) imposed the assumption P(Yi = ∞|Zi) > 0. With current status data, however, Yi = ∞ implies Δi = 1 regardless of whether Ti = ∞, so such observations are not informative.

Under conditions (C1)–(C3), the parameters β and F are identifiable. Suppose that two sets of parameters, (β, F) and (β̃, F̃), give the same likelihood for the observed data, i.e., for almost all (Z, Y),

$$G_\gamma\{e^{\beta^T Z} F(Y)\} = G_\gamma\{e^{\tilde\beta^T Z} \tilde F(Y)\}.$$

We claim that β = β̃ and F(t) = F̃(t) for every t ∈ [τl, t0]. By condition (C3), F(t) = F̃(t) = 1 for any t ≥ t0. Setting Y = t0, we have $G_\gamma(e^{\beta^T Z}) = G_\gamma(e^{\tilde\beta^T Z})$. Since Gγ is strictly decreasing, it follows that $\beta^T Z = \tilde\beta^T Z$ for almost all Z, and condition (C1) gives β = β̃. Furthermore, setting Y = t with τl ≤ t < t0, we have $G_\gamma\{e^{\beta^T Z} F(t)\} = G_\gamma\{e^{\beta^T Z} \tilde F(t)\}$. The strict monotonicity of Gγ then gives F(t) = F̃(t) for any t ∈ [τl, t0].

We establish the consistency of the MLEs in the following theorem.

Theorem 1. (Consistency)

Under conditions (C1), (C3) and (C4), ||β̂n−β0|| → 0 and supt∈[τl,t0] |F̂n(t) − F0(t)| → 0 almost surely.

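We outline the proof here and defer the details to Appendix A.1. Let

$$p(Y,Z,\Delta \mid \beta,F) = \left[1 - G_\gamma\{e^{\beta^T Z} F(Y)\}\right]^{\Delta} \left[G_\gamma\{e^{\beta^T Z} F(Y)\}\right]^{1-\Delta}$$

and let

$$m(Y,Z,\Delta \mid \beta,F) = \log \frac{p(Y,Z,\Delta \mid \beta,F)}{p(Y,Z,\Delta \mid \beta_0,F_0)}$$

be the log-likelihood ratio. Let d((β, F), (β0, F0)) = ||β − β0|| + sup_{t∈[τl,t0]} |F(t) − F0(t)|, and let ℳ = {m(Y,Z,Δ|β, F) : (β, F) ∈ ℬ×ℱ}. We show that ℳ is a P-Glivenko-Cantelli class, and that Pm(Y,Z,Δ|β, F) is continuous in (β, F) and uniquely maximized at (β0, F0). It follows from Theorem 5.8 in van der Vaart (2002) that

$$d\{(\hat\beta_n, \hat F_n), (\beta_0, F_0)\} \to 0 \quad \text{almost surely}.$$

This gives the desired result.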

With the consistency result, we can establish the following result on convergence rate of the MLEs.

Lemma 1. (Convergence rate)

Under conditions (C1), (C3) and (C4),

$$\|\hat\beta_n - \beta_0\| + \|\hat F_n - F_0\|_{L_2} = O_P(n^{-1/3}).$$

Groeneboom and Wellner (1992) established that the best convergence rate for estimators of the nonparametric distribution function with one-sample current status data is $n^{1/3}$. Huang (1996) and Ma (2008, 2009) obtained similar results for the estimators of the baseline cumulative hazard function in the Cox model (Cox, 1972) and the mixture cure rate model, respectively. Lemma 1 indicates that the optimal convergence rate can be achieved under the proposed cure rate model. The detailed proof of Lemma 1 is given in Appendix A.2.

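Before stating the result on the asymptotic normality of β̂n, we derive the efficient score function for β. The log-likelihood for a single observation X ≡ (Y, Δ, Z), denoted by l(β, F), takes the form

$$l(\beta, F) = \Delta \log\left[1 - G_\gamma\{e^{\beta^T Z} F(Y)\}\right] + (1 - \Delta) \log G_\gamma\{e^{\beta^T Z} F(Y)\}.$$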

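The score function for β is the first derivative of l(β, F) with respect to β and takes the form

$$\dot l_\beta(\beta, F) = Z e^{\beta^T Z} F(Y)\, \psi(X; \beta, F),$$

where

$$\psi(X; \beta, F) = \dot G_\gamma\{e^{\beta^T Z} F(Y)\} \left[\frac{1 - \Delta}{G_\gamma\{e^{\beta^T Z} F(Y)\}} - \frac{\Delta}{1 - G_\gamma\{e^{\beta^T Z} F(Y)\}}\right]$$

and $\dot G_\gamma$ is the first derivative of Gγ. For a function g on [τl, τu] with bounded total variation, the score function for F along the direction of g is given by

$$l_F(\beta, F)[g] = e^{\beta^T Z} g(Y)\, \psi(X; \beta, F).$$

For g = (g1, …, gd) with d = dim(β), write lF(β, F)[g] = (lF(β, F)[g1], …, lF(β, F)[gd])^T. Projecting the score function for β onto the space generated by lF(β, F)[g], we obtain the efficient score function for β at the true parameter:

$$l^*_\beta(\beta_0, F_0) = \dot l_\beta(\beta_0, F_0) - l_F(\beta_0, F_0)[g^*],$$

where g* minimizes $E\|\dot l_\beta(\beta_0, F_0) - l_F(\beta_0, F_0)[g]\|^2$ over g. Since $\dot l_\beta - l_F[g] = e^{\beta_0^T Z} \psi(X; \beta_0, F_0)\{Z F_0(Y) - g(Y)\}$, the minimizer is

$$g^*(y) = F_0(y)\, \frac{E\{Z e^{2\beta_0^T Z} \psi^2(X; \beta_0, F_0) \mid Y = y\}}{E\{e^{2\beta_0^T Z} \psi^2(X; \beta_0, F_0) \mid Y = y\}}.$$

Write $I_\beta(\beta_0, F_0) = E\{l^*_\beta(\beta_0, F_0)\, l^*_\beta(\beta_0, F_0)^T\}$. In the Appendix, we will show that Iβ(β0, F0) is positive definite and component-wise bounded.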
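The closed-form scores can be checked against central finite differences of the single-observation log-likelihood. The Python sketch below does this. The helper names and the numerical inputs in the check are illustrative assumptions. The derivative of (1 + γx)^{−1/γ} is −(1 + γx)^{−1/γ−1}, and the derivative of e^{−x} is −e^{−x}.

```python
import math


def G(x, gamma):
    """Box-Cox-type transformation G_gamma(x)."""
    return math.exp(-x) if gamma == 0 else (1.0 + gamma * x) ** (-1.0 / gamma)


def G_dot(x, gamma):
    """First derivative of G_gamma with respect to x."""
    return -math.exp(-x) if gamma == 0 else -(1.0 + gamma * x) ** (-1.0 / gamma - 1.0)


def _lin(beta, z):
    return sum(b * zj for b, zj in zip(beta, z))


def loglik_one(beta, fy, d, z, gamma):
    """l(beta, F) for one observation, with fy = F(Y) and d = Delta."""
    s = G(math.exp(_lin(beta, z)) * fy, gamma)
    return math.log(1.0 - s) if d == 1 else math.log(s)


def psi(beta, fy, d, z, gamma):
    """psi = G'(u) [(1 - Delta)/G(u) - Delta/(1 - G(u))], with u = exp(beta'Z) F(Y)."""
    u = math.exp(_lin(beta, z)) * fy
    g = G(u, gamma)
    return G_dot(u, gamma) * ((1 - d) / g - d / (1.0 - g))


def score_beta(beta, fy, d, z, gamma):
    """Score for beta: Z exp(beta'Z) F(Y) psi."""
    factor = math.exp(_lin(beta, z)) * fy * psi(beta, fy, d, z, gamma)
    return [zj * factor for zj in z]


def score_F(beta, fy, gy, d, z, gamma):
    """Score for F along direction g, given fy = F(Y) and gy = g(Y)."""
    return math.exp(_lin(beta, z)) * gy * psi(beta, fy, d, z, gamma)
```

Agreement with finite differences for both Δ = 0 and Δ = 1 and several γ values is a cheap safeguard before the scores are used in an optimizer or in an information-matrix estimate.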

With the derived convergence rate and the efficient score function for β, we can obtain the following asymptotic normality results for β̂n.

Theorem 2. (Asymptotic normality)

Under conditions (C1)–(C4), $\sqrt{n}(\hat\beta_n - \beta_0)$ converges weakly to a zero-mean normal distribution with variance $I_\beta^{-1}(\beta_0, F_0)$.

Theorem 2 states that although the convergence rate of (β̂n, F̂n) is considerably slower than the $n^{1/2}$ rate for right-censored data, β̂n is still $\sqrt{n}$-consistent and asymptotically normal. Furthermore, β̂n is the most efficient estimator of β0, because its asymptotic variance $I_\beta^{-1}(\beta_0, F_0)$ attains the semiparametric efficiency bound for β0. The proof of Theorem 2 is provided in Appendix A.3.

Let 𝔹(·) be the two-sided Brownian motion originating from zero, i.e., a mean-zero Gaussian process on ℝ with 𝔹(0) = 0 and $E\{\mathbb{B}(s) - \mathbb{B}(h)\}^2 = |s - h|$ for all s, h ∈ ℝ. Denote for convergence in distribution. Let wn be the random variable that, given the Zi's, takes with probability 1/n. Denote by w = w(Z) its asymptotic version conditional on Z. Let f0(·) be the derivative of F0(·).

To derive the asymptotic distribution of F̂n, we make two additional assumptions.

- (C6) The function Gγ satisfies .

- (C7) The density function g(t) of Y satisfies g(t) > 0 for t ∈ [τl, t0].

Theorem 3

Assume (C1)–(C7). Then, for all t ∈ [τl, t0],

where η²(t) = E[{I(T ≤ t)w(Z) − F0(t)}²].

The distribution of $\arg\min_{h \in \mathbb{R}}\{\mathbb{B}(h) + h^2\}$ is called the Chernoff distribution; its density function was derived by Groeneboom (1989). It has no closed form and is not easy to evaluate. Kosorok (2008) proposed a sampling method to evaluate this distribution. The proof of Theorem 3 is given in Appendix A.4.
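A crude way to get a feel for the Chernoff distribution is a grid-based Monte Carlo approximation. The Python sketch below simulates two-sided Brownian motion on a grid over [−L, L], adds h², and records the minimizing grid point. This is a swapped-in, plainly discretized technique, not the sampling method of Kosorok (2008). The grid settings are arbitrary choices. Truncating at |h| ≤ 3 and using a finite step introduce small approximation errors.

```python
import random
import statistics


def chernoff_draw(rng, half_width=3.0, step=0.01):
    """One approximate draw of argmin_h {B(h) + h^2} on a grid over [-L, L].

    The two sides of the two-sided Brownian motion are independent standard
    Brownian motions started at B(0) = 0, built from N(0, step) increments.
    """
    n = int(round(half_width / step))
    sd = step ** 0.5
    best_h, best_val = 0.0, 0.0          # value at h = 0 is B(0) + 0 = 0
    for direction in (1, -1):
        b = 0.0
        for k in range(1, n + 1):
            b += rng.gauss(0.0, sd)
            h = direction * k * step
            val = b + h * h
            if val < best_val:
                best_h, best_val = h, val
    return best_h


def chernoff_sample(size, seed=2024, **kwargs):
    rng = random.Random(seed)
    return [chernoff_draw(rng, **kwargs) for _ in range(size)]


def chernoff_moments(size, seed=2024, **kwargs):
    """Sample mean and variance of approximate Chernoff draws."""
    sample = chernoff_sample(size, seed=seed, **kwargs)
    return statistics.fmean(sample), statistics.variance(sample)
```

The sample mean should be near 0 by symmetry. The sample variance should be roughly 0.26, the variance reported in the literature for this distribution, though the grid approximation shifts it slightly.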

Remark 3

The inference above is based on the selected model with the transformation parameter γ fixed. To select the best model, we may choose the transformation that minimizes the Akaike information criterion (AIC), defined as twice the negative log-likelihood plus twice the number of parameters. It is theoretically possible to account for the variation due to the model selection procedure, but the computation may be demanding or unstable. Furthermore, whether this kind of variation should be accounted for is open to debate (Box and Cox, 1982). In the subsequent simulation studies we fix the transformation, whereas in the data application we use the AIC to select the best transformation.
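A minimal Python sketch of AIC-based selection of γ over a grid follows. It assumes that the maximized log-likelihood for each candidate γ has already been computed; the numbers in the check are hypothetical. For a fixed data set, the number of parameters (dim(β) plus the number of free jump parameters for F) does not depend on γ. The AIC comparison therefore reduces to comparing maximized log-likelihoods.

```python
def aic(loglik, n_params):
    """AIC = -2 * (maximized log-likelihood) + 2 * (number of parameters)."""
    return -2.0 * loglik + 2.0 * n_params


def select_gamma(fits):
    """fits: dict mapping gamma -> (maximized log-likelihood, number of parameters).

    Returns the gamma with the smallest AIC.
    """
    return min(fits, key=lambda g: aic(*fits[g]))
```

For example, with hypothetical fits {0: (−150.2, 40), 0.5: (−149.1, 40), 1: (−148.7, 40)}, the selected transformation is γ = 1.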

4 Numerical Studies

4.1 Simulation

We conducted simulation studies to examine the finite-sample performance of the proposed methodology. We generated data from model (2) with $Z = (1, Z_1, Z_2)^T$, where Z1 is a uniform random variable on [0, 1] and Z2 is a Bernoulli random variable with success probability 0.5. The true parameter values of β = (β0, β1, β2) were set to (−0.5, 1, −0.5), and F(t) = 1 − {exp(−t) − exp(−t0)}I(t ≤ t0)/{1 − exp(−t0)} with t0 = 4. The inspection time was set to the minimum of 4 and an exponential random variable with mean 2. We considered five models with γ = 0, 0.25, 0.5, 0.75, and 1. The average cure rate ranged from 0.45 to 0.56 as γ changed from 0 to 1, whereas the censoring proportion among uncured subjects was about 0.26 for all five models. We considered sample sizes of 200 and 400 for each model. For each simulation set-up, we generated 1,000 data sets. A quasi-Newton algorithm implemented in C was applied to maximize the log-likelihood function. The initial values of the regression parameters were set to 0, and the initial jump sizes of F were set to 1/m at s1, …, sm. The quasi-Newton algorithm converged quickly. Analyzing one data set with 400 subjects took about 0.3 seconds on a Dell PowerEdge 2900 server.
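The data-generating mechanism described above can be sketched in Python by inverse-transform sampling from Spop(t|Z) = Gγ{e^{βᵀZ}F(t)}. The sketch draws U uniform on (0, 1) and declares the subject cured (T = ∞) when U ≤ Gγ(e^{βᵀZ}). Otherwise it solves Gγ{e^{βᵀZ}F(T)} = U for T. This is an illustrative re-implementation under those stated steps, not the authors' C code. The function names are hypothetical. The latent T is returned only for checking, since it is not observed in current status data.

```python
import math
import random

T0 = 4.0


def G(x, gamma):
    return math.exp(-x) if gamma == 0 else (1.0 + gamma * x) ** (-1.0 / gamma)


def G_inv(u, gamma):
    """Inverse of G_gamma on (0, 1]."""
    return -math.log(u) if gamma == 0 else (u ** (-gamma) - 1.0) / gamma


def F_inv(p, t0=T0):
    """Inverse of F(t) = 1 - {exp(-t) - exp(-t0)} / {1 - exp(-t0)} on [0, t0]."""
    return -math.log(math.exp(-t0) + (1.0 - p) * (1.0 - math.exp(-t0)))


def simulate(n, gamma, beta=(-0.5, 1.0, -0.5), seed=0):
    """Return records (Y, Delta, Z, T); T is latent and kept only for checking."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        z = (1.0, rng.random(), 1.0 if rng.random() < 0.5 else 0.0)
        theta = math.exp(sum(b * zj for b, zj in zip(beta, z)))
        u = rng.random()
        if u <= G(theta, gamma):
            t = math.inf                          # cured: S_pop(t | Z) >= G(theta) for all t
        else:
            t = F_inv(G_inv(u, gamma) / theta)    # solve G(theta F(t)) = u
        y = min(T0, rng.expovariate(0.5))         # exponential with mean 2, capped at 4
        data.append((y, int(t <= y), z, t))
    return data
```

With γ = 0, the simulated cure proportion should be close to the value of about 0.45 reported above.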

Table 1 summarizes the results for each combination of γ and n. The column labeled "Estimate" gives the sample mean of the parameter estimates. "SE" is the sampling standard error of the parameter estimator. "SEE" is the mean of the standard error estimates, which are computed from the inverse of the observed information matrix. "CP(%)" is the coverage probability of the 95% confidence interval based on the asymptotic normal approximation. The proposed estimators are nearly unbiased, and their small biases decrease as n increases. The standard error estimates accurately reflect the true variation, and for moderate sample sizes the confidence intervals have proper coverage probabilities. As the sample size increases from 200 to 400, the standard errors of the estimates shrink at approximately the $\sqrt{n}$ rate (e.g., 0.436/0.302 ≈ 1.44, close to √2, for β1 with γ = 0).
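The four summary columns can be computed from the replicate estimates and their estimated standard errors with a short Python sketch. The function name and the toy numbers in the check are hypothetical. The coverage uses the 95% normal quantile 1.959964.

```python
import statistics


def summarize(estimates, std_errors, truth, z=1.959963984540054):
    """Return (mean estimate, empirical SE, mean SEE, 95% coverage in percent)."""
    mean_est = statistics.fmean(estimates)
    se = statistics.stdev(estimates)          # sampling standard error across replicates
    see = statistics.fmean(std_errors)        # average estimated standard error
    covered = sum(1 for b, s in zip(estimates, std_errors) if abs(b - truth) <= z * s)
    return mean_est, se, see, 100.0 * covered / len(estimates)
```

For example, the estimates (0.9, 1.1, 1.0, 1.3) with standard errors (0.2, 0.2, 0.2, 0.1) and true value 1 give a coverage of 75%, because only the last interval misses 1.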

Table 1.

Summary Statistics for the Maximum Likelihood Estimators

| Model | n | Parameter | True value | Estimate | SE | SEE | CP(%) |
|---|---|---|---|---|---|---|---|
| γ = 0 | 200 | β1 | 1 | 1.047 | 0.436 | 0.427 | 94.7 |
| | | β2 | −0.5 | −0.538 | 0.249 | 0.245 | 94.9 |
| | 400 | β1 | 1 | 1.029 | 0.302 | 0.294 | 94.1 |
| | | β2 | −0.5 | −0.522 | 0.168 | 0.169 | 94.9 |
| γ = 0.25 | 200 | β1 | 1 | 1.048 | 0.481 | 0.471 | 95.0 |
| | | β2 | −0.5 | −0.540 | 0.275 | 0.270 | 93.3 |
| | 400 | β1 | 1 | 1.031 | 0.334 | 0.325 | 94.5 |
| | | β2 | −0.5 | −0.523 | 0.183 | 0.187 | 95.2 |
| γ = 0.5 | 200 | β1 | 1 | 1.044 | 0.525 | 0.511 | 94.4 |
| | | β2 | −0.5 | −0.54 | 0.298 | 0.293 | 94.3 |
| | 400 | β1 | 1 | 1.026 | 0.367 | 0.353 | 94.0 |
| | | β2 | −0.5 | −0.524 | 0.199 | 0.203 | 95.7 |
| γ = 0.75 | 200 | β1 | 1 | 1.048 | 0.569 | 0.550 | 93.8 |
| | | β2 | −0.5 | −0.541 | 0.321 | 0.315 | 95.4 |
| | 400 | β1 | 1 | 1.030 | 0.395 | 0.379 | 94.1 |
| | | β2 | −0.5 | −0.525 | 0.215 | 0.218 | 95.0 |
| γ = 1 | 200 | β1 | 1 | 1.054 | 0.602 | 0.587 | 94.2 |
| | | β2 | −0.5 | −0.547 | 0.344 | 0.336 | 95.1 |
| | 400 | β1 | 1 | 1.032 | 0.417 | 0.404 | 94.4 |
| | | β2 | −0.5 | −0.529 | 0.234 | 0.232 | 94.3 |

The next set of simulation studies concerned the performance of the commonly used proportional hazards cure model and proportional odds cure model when data were generated from a different model. Specifically, we used the same data-generating setting as in the simulation studies described earlier. The model with γ = 1/2 lies between the proportional hazards cure model and the proportional odds cure model. For each simulation setting, we generated 1,000 replications with sample size n = 400. The results are summarized in Table 2. When the true model is the proportional hazards cure model, the proportional odds cure model overestimates the regression parameters in absolute value. Conversely, when the proportional odds cure model is true, the proportional hazards cure model underestimates them in absolute value. In general, when the transformation parameter is between 0 and 1, the proportional hazards cure model estimates tend to be biased toward 0, whereas the opposite is observed for the proportional odds cure model. Both models estimate the signs of the coefficients correctly under a misspecified transformation. We also observe that the standard error estimates of the regression coefficients agree well with the empirical standard errors, although the parameter estimates are biased.

Table 2.

Simulation Results Under Misspecified Transformation

| Model | Parameter | True value | Estimate | SE | SEE | CP(%) |
|---|---|---|---|---|---|---|
| True transformation: $G(x) = e^{-x}$ | | | | | | |
| Proportional odds model | β1 | 1 | 1.396 | 0.415 | 0.405 | 84.6 |
| | β2 | −0.5 | −0.706 | 0.228 | 0.231 | 86.7 |
| True transformation: $G(x) = (1+x)^{-1}$ | | | | | | |
| Proportional hazards model | β1 | 1 | 0.806 | 0.325 | 0.316 | 89.7 |
| | β2 | −0.5 | −0.414 | 0.185 | 0.183 | 92.9 |
| True transformation: $G(x) = (1+x/2)^{-2}$ | | | | | | |
| Proportional hazards model | β1 | 1 | 0.889 | 0.317 | 0.305 | 92.1 |
| | β2 | −0.5 | −0.455 | 0.174 | 0.176 | 95.2 |
| Proportional odds model | β1 | 1 | 1.168 | 0.420 | 0.402 | 92.6 |
| | β2 | −0.5 | −0.595 | 0.226 | 0.230 | 93.9 |

4.2 Application to the Calcification Study

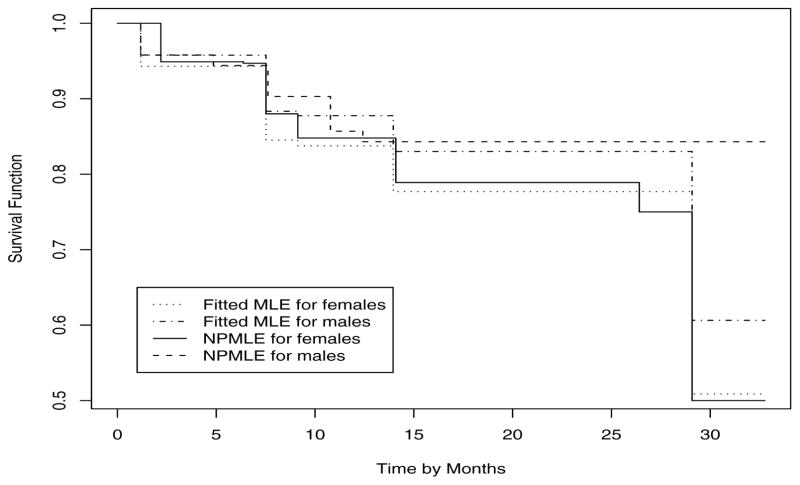

As an illustration, we applied our proposed model to the Calcification study of Yu et al (2001). The objective of the study was to investigate the effects of certain clinical factors on the time to IOL calcification after implantation. The status of calcification for each of the 379 patients in the study was determined by an experienced ophthalmologist at a random time ranging from 0 to 36 months after implantation. Covariates of interest include incision length, gender, and age at implantation. One individual with missing measurement on incision length was excluded from the analysis. Of the remaining 378 individuals, 48 experienced calcification before examination time. The nonparametric maximum likelihood estimates of the survival functions for males and females at the end of study were 0.844 and 0.5, respectively.

In our data analysis, we included incision length, gender, and age at implantation as covariates in the cure models. Let Z1 be the incision length, Z2 be gender, taking values 0 and 1 for female and male patients, respectively, and Z3 be the age at implantation divided by 10. We fit the proposed model (2) with $Z = (1, Z_1, Z_2, Z_3)^T$. We compared the proportional hazards cure model, the proportional odds cure model, and the models in between. As suggested by Zeng et al (2006b), we selected the transformation that minimizes the Akaike information criterion (AIC), defined as twice the negative log-likelihood plus twice the number of parameters; this was the proportional odds cure model. The results under the proportional odds cure model are presented in Table 3. Males and older patients are more likely to be cured, and an increase in incision length leads to a decrease in the cure rate. However, none of these effects is statistically significant at the 0.05 level. Our findings agree well with those of Lam and Xue (2005).

For a graphical comparison, Figure 1 plots the separate nonparametric estimates of the survival curve and the model-fitted survival curve for each gender group. The model-fitted survival function is calculated as the empirical average of the predicted survival functions. The dotted and dot-dashed lines in Figure 1 show the predicted survival functions, which in general agree quite well with the nonparametric estimates. For males, the fitted and nonparametric survival functions differ considerably in the tail. The reason is that the largest inspection time among male patients with calcification was 17.6 months. The nonparametric estimate of the survival function for males is therefore flat beyond that time point, whereas the MLE of F0 in the fitted model has jumps beyond it. Figure 1 indicates that the proportional odds cure model fits the data well.
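The model-fitted curve for a group is the empirical average of the predicted population survival functions, $n_g^{-1}\sum_{i} G_\gamma\{e^{\hat\beta^T Z_i}\hat F(t)\}$, taken over the subjects in that group. A minimal Python sketch follows. The coefficient values in the check are taken from Table 3, but the covariate rows and the estimate F̂ are hypothetical placeholders, since the data and the fitted F̂ are not reproduced here.

```python
import math


def G(x, gamma):
    return math.exp(-x) if gamma == 0 else (1.0 + gamma * x) ** (-1.0 / gamma)


def averaged_survival(t, Z_group, beta_hat, F_hat, gamma):
    """Empirical average over a group of the predicted S_pop(t | Z_i)."""
    preds = [
        G(math.exp(sum(b * z for b, z in zip(beta_hat, zi))) * F_hat(t), gamma)
        for zi in Z_group
    ]
    return sum(preds) / len(preds)
```

Evaluating this function on a grid of t values for each gender group gives curves of the kind plotted in Figure 1. With γ = 1, this corresponds to the proportional odds cure model.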

Table 3.

Maximum Likelihood Estimates of Regression Coefficients in the Proportional Odds Cure Model for the Calcification Data

| Covariate | Estimate | SE | Est/SE | p-value |
|---|---|---|---|---|
| Intercept | 0.354 | 0.075 | 4.689 | <0.001 |
| Incision length | 0.447 | 0.260 | 1.719 | 0.086 |
| Gender (male) | −0.310 | 0.343 | −0.906 | 0.365 |
| Age/10 | −0.136 | 0.216 | −0.626 | 0.531 |
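The "Est/SE" and "p-value" columns are Wald statistics with two-sided normal p-values. The Python sketch below shows the computation. Recomputing from the rounded estimates and standard errors in Table 3 reproduces the reported values only up to rounding of the displayed numbers.

```python
import math


def wald_test(estimate, se):
    """Wald statistic and two-sided p-value under the normal approximation."""
    z = estimate / se
    p = math.erfc(abs(z) / math.sqrt(2.0))   # equals 2 * (1 - Phi(|z|))
    return z, p
```

For example, `wald_test(0.447, 0.260)` gives z ≈ 1.719 and p ≈ 0.086, matching the incision-length row.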

Fig. 1.

Nonparametric estimates and proportional odds cure model-fitted survival functions of time to IOL calcification. The model-fitted survival function is calculated as the empirical average of the predicted survival functions for each gender group.

5 Discussion

We have considered a class of semiparametric cure models by imposing the Box-Cox type transformation on the population survival function for the analysis of current status data with a cured proportion in the population. This class includes the well-known proportional hazards and proportional odds structures as two special cases and has great potential in clinical trials and for modeling survival data with a cure fraction. We have developed efficient likelihood-based estimation and inference procedures and shown that the MLEs of the regression parameters are consistent, asymptotically normal and asymptotically efficient. The inference in this paper is based on the selected model with the transformation parameter fixed. As suggested by Zeng et al (2006b) we use the AIC to select the best model. It would be of interest to estimate the variances of the MLEs of the regression parameters accounting for the variation due to the model selection procedure in semiparametric inference. Future research is warranted.

We have developed an efficient algorithm implemented in C language for calculating the MLEs and standard error estimates of the MLEs under the proposed models. The computation is substantially faster than the PAV approach under the mixture cure model in Ma (2009). This significant improvement makes it possible to apply our methods to genetic studies in which linkage or association tests are performed at hundreds of thousands of genetic markers. Our computer program is available upon request.

As noted by Taylor (1995), Fang et al (2005), and Lam et al (2005), applications of cure models are restricted to problems in which there is strong scientific evidence for the existence of a cured population or a clear plateau of the survival function is observed. Identifiability problems may arise when the cure probability is very close to one or zero. In our experience, the numerical computation of the MLEs may be unstable if the cure fraction is small.

Acknowledgments

NIH grant 1R15CA150698-01

The authors wish to thank Dr. Donglin Zeng and the referees for their helpful comments and suggestions, which led to a considerable improvement in the presentation of this manuscript. The authors also would like to thank Drs. A. F. K. Yu, K. F. Lam, and HongQi Xue for providing the Calcification data.

Appendix

A.1. Proof of Theorem 1

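Let $p(Y,Z,\Delta \mid \beta,F) = [1 - G_\gamma\{e^{\beta^T Z} F(Y)\}]^{\Delta} [G_\gamma\{e^{\beta^T Z} F(Y)\}]^{1-\Delta}$, and let

$$q(Y,Z,\Delta \mid \beta,F) = \log \frac{p(Y,Z,\Delta \mid \beta,F)}{p(Y,Z,\Delta \mid \beta_0,F_0)}$$

be the log-likelihood ratio. Let P be the probability measure with density p(Y,Z,Δ|β0, F0). Let Pq(Y,Z,Δ|β, F) = ∫ q(Y,Z,Δ|β, F)dP(Y,Z,Δ) be the true mean of q, and let $P_n q(Y,Z,\Delta \mid \beta,F) = n^{-1}\sum_{i=1}^{n} q(Y_i,Z_i,\Delta_i \mid \beta,F)$ be the empirical mean of q based on the data {(Yi,Zi,Δi): i = 1, …, n}. Note that Pq(Y,Z,Δ|β, F) is the negative Kullback-Leibler divergence of p(Y,Z,Δ|β, F) from p(Y,Z,Δ|β0, F0). As a function of (β, F), it is always non-positive and attains its maximum value of 0 at (β, F) = (β0, F0). Let Θ be the range of β and ℱ be the collection of all distribution functions on ℝ+. Recall that (β̂n, F̂n) is the MLE of (β0, F0), i.e.,

$$(\hat\beta_n, \hat F_n) = \arg\max_{(\beta,F) \in \Theta \times \mathcal{F}} P_n q(Y,Z,\Delta \mid \beta,F).$$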

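Let d((β, F), (β0, F0)) be as defined in the outline of the proof of Theorem 1 (recall the definitions of τl and t0 in condition (C3)). By our model specification, Pq(Y,Z,Δ|β, F) is continuous with respect to (β, F). Also, by (C3), the model is identifiable, so (β0, F0) is the unique maximizer of Pq(Y,Z,Δ|β, F). Thus, for all η > 0,

$$\sup_{(\beta,F):\, d((\beta,F),(\beta_0,F_0)) \ge \eta} Pq(Y,Z,\Delta \mid \beta,F) < Pq(Y,Z,\Delta \mid \beta_0,F_0) = 0,$$

so if we show that 𝒬 = {q(Y,Z,Δ|β, F): (β, F) ∈ (Θ,ℱ)} is a P-Glivenko-Cantelli class, then by Theorem 5.8 in van der Vaart (2002, p.386),

$$d\{(\hat\beta_n, \hat F_n), (\beta_0, F_0)\} \to 0 \quad \text{almost surely}.$$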

This gives the desired result. Note that F̂n is estimated by data points s1, …, sm.

Now we show that 𝒬 is P-Glivenko-Cantelli. For any function g, let ||g||L1(P) = ∫ |g(y, z, δ)|dP(y, z, δ), and N[ ](ε,𝒬, L1(P)) be the minimum number of ε-brackets needed to cover 𝒬 under norm ||·||L1(P). We first show that N[ ](ε,𝒬, L1(P)) is finite ∀ε > 0.

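By our specification of p(Y,Z,Δ|β, F) and with (C1), it can be checked that q(Y,Z,Δ|β, F) is boundedly differentiable with respect to β, and boundedly Gâteaux differentiable with respect to F. Consequently, by Taylor expansion, there are constants 0 < Cj < ∞ (j = 1, 2) such that

$$|q(Y,Z,\Delta|\beta_1,F_1)-q(Y,Z,\Delta|\beta_2,F_2)| \le C_1\|\beta_1-\beta_2\| + C_2|F_1(Y)-F_2(Y)|.$$

By (C4), N[ ](ε/(2C1),Θ, ||·||) = O(ε^{−d}), with d = dim(Θ). Since ℱ is a collection of bounded monotone functions, by Theorem 2.7.5 in van der Vaart and Wellner (1996, p.159), for some constant 0 < C < ∞,

$$\log N_{[\,]}(\varepsilon/(2C_2),\mathcal{F},L_1(P)) \le C/\varepsilon.$$

Thus, for some generic constant 0 < C < ∞,

$$N_{[\,]}(\varepsilon,\mathcal{Q},L_1(P)) \le N_{[\,]}(\varepsilon/(2C_1),\Theta,\|\cdot\|)\,N_{[\,]}(\varepsilon/(2C_2),\mathcal{F},L_1(P)) \le C\varepsilon^{-d}\exp\{C/\varepsilon\} < \infty,$$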

and so by Theorem 2.4.1 in van der Vaart and Wellner (1996, p.122), 𝒬 is a Glivenko-Cantelli class with respect to P.

A.2 Proof of Lemma 1

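Denote S = (Y,Z,Δ); let p(S|β, F), P and ℙn be as given in the proof of Theorem 1, ℓ(β, F|S) = log p(S|β, F) be the log-likelihood, and Dn be the set of all the observed data. Define

$$\mathbb{M}_n(\beta,F) = \mathbb{P}_n\ell(\beta,F|S) = \frac{1}{n}\sum_{i=1}^n\ell(\beta,F|S_i), \qquad \mathbb{M}(\beta,F) = P\ell(\beta,F|S).$$

Since (β̂n, F̂n) ∈ (Θ,ℱ) is the MLE of (β0, F0), it is the M-estimator based on the log-likelihood ℓ(β, F|Dn) on the parameter space (Θ,ℱ), and so $\mathbb{M}_n(\hat\beta_n,\hat F_n) \ge \mathbb{M}_n(\beta,F) - O_p(r_n^{-2})$ for any (β, F) ∈ (Θ,ℱ) and any positive sequence rn → ∞.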

Let d(β̂n − β0, F̂n − F0) = ||β̂n − β0|| + ||F̂n − F0||L2. Since the conditions of Theorem 1 are satisfied, replacing supt∈[τl,t0] |F̂n(t) − F0(t)| by ||F̂n − F0||L2 in its proof, we get $d(\hat\beta_n-\beta_0,\hat F_n-F_0)\to 0$ almost surely.

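Note that (β0, F0) = arg sup(β,F)∈(Θ,ℱ) 𝕄(β, F). Denote by ℓβ(β, F|S) and ℓF(β, F|S)[g] the partial derivatives of ℓ(β, F|S) with respect to β and to F (in direction g), respectively; the derivative with respect to F is in the Gâteaux sense. By our specification of the model, these quantities exist. Note that Pℓβ(β0, F0|S) = 0 and PℓF(β0, F0|S)[F − F0] = 0. Denote by Q(2)(β, F|S)[F − F0, F − F0] the (d + 1) × (d + 1) matrix of all the second-order partial derivatives. Therefore,

$$\mathbb{M}(\beta,F)-\mathbb{M}(\beta_0,F_0) = \frac{1}{2}\begin{pmatrix}\beta-\beta_0\\ 1\end{pmatrix}^{T} P\,Q^{(2)}(\bar\beta,\bar F|S)[F-F_0,F-F_0]\begin{pmatrix}\beta-\beta_0\\ 1\end{pmatrix},$$

and the right-hand side is of order O(d²(β − β0, F − F0)), where (β̄, F̄) is an intermediate value between (β, F) and (β0, F0). Under some general conditions, the above can be upper bounded by −Cd²(β − β0, F − F0) for some 0 < C < ∞ in a small neighborhood of (β0, F0). So for any 0 < ηn → 0 and any τ with ηn < τ ≤ η < ∞, for some 0 < C < ∞,

$$\sup_{\tau/2<d(\beta-\beta_0,F-F_0)\le\tau}\{\mathbb{M}(\beta,F)-\mathbb{M}(\beta_0,F_0)\} \le -C\tau^2.$$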

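Next we show, with E* for outer expectation,

$$E^*\sup_{\tau/2<d(\beta-\beta_0,F-F_0)\le\tau}\sqrt{n}\,\big|(\mathbb{M}_n-\mathbb{M})(\beta,F)-(\mathbb{M}_n-\mathbb{M})(\beta_0,F_0)\big| \le C\phi_n(\tau) \qquad \text{(A.0)}$$

for some function ϕn(·), to be given, such that τ ↦ ϕn(τ)/τ^α is decreasing for some α < 2.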

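For this, let N[ ](ε, (Θ,ℱ), L2(P)) be defined as in the proof of Theorem 1; the same argument shows that N[ ](ε, (Θ,ℱ), L2(P)) = O(ε^{−d} exp{C/ε}) = O(exp{C/ε}), where the polynomial factor is absorbed by enlarging C. Let C be some generic finite positive constant, and, for 0 < τ ≤ 1, substitute ε = e^t to obtain

$$J_{[\,]}(\tau,(\Theta,\mathcal{F}),L_2(P)) = \int_0^\tau\sqrt{1+\log N_{[\,]}(\varepsilon,(\Theta,\mathcal{F}),L_2(P))}\,d\varepsilon \le \int_0^\tau\sqrt{1+C/\varepsilon}\,d\varepsilon = \int_{-\infty}^{\log\tau}\sqrt{1+Ce^{-t}}\,e^{t}\,dt \le \sqrt{1+C}\int_{-\infty}^{\log\tau}e^{t/2}\,dt = 2\sqrt{1+C}\,\tau^{1/2}.$$

In the above we used the fact that for small τ > 0, log τ < 0, so e^{−t} > 1 and 1 + Ce^{−t} ≤ (1 + C)e^{−t} on (−∞, log τ).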
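The bracketing-integral bound can be checked numerically. The sketch below assumes the entropy term is exactly C/ε and compares three quantities. The first is the closed form ∫_0^τ (1 + C/ε)^{1/2} dε = C asinh((τ/C)^{1/2}) + {τ(C + τ)}^{1/2}, obtained with ε = C sinh²u. The second is a midpoint-rule evaluation after the substitution ε = e^t used in the text. The third is the bound 2(1 + C)^{1/2}τ^{1/2}. The constants C and τ are arbitrary illustrative values, and the function names are hypothetical.

```python
import math

def bracketing_integral(tau, C):
    """Closed form of J(tau) = int_0^tau sqrt(1 + C/eps) d(eps), via eps = C sinh^2(u)."""
    return C * math.asinh(math.sqrt(tau / C)) + math.sqrt(tau * (C + tau))

def bracketing_integral_numeric(tau, C, lower=-60.0, steps=200_000):
    """Same integral after eps = e^t, t in (lower, log tau), by the midpoint rule.
    The neglected tail below `lower` is about 2 sqrt(C) e^{lower/2}."""
    upper = math.log(tau)
    h = (upper - lower) / steps
    total = 0.0
    for k in range(steps):
        t = lower + (k + 0.5) * h
        total += math.sqrt(1.0 + C * math.exp(-t)) * math.exp(t)
    return total * h

def bound(tau, C):
    """Bound 2 sqrt(1 + C) tau^{1/2} from the proof (valid for 0 < tau <= 1)."""
    return 2.0 * math.sqrt(1.0 + C) * math.sqrt(tau)

print(bracketing_integral(0.01, 2.0), bracketing_integral_numeric(0.01, 2.0))
for C in (0.5, 2.0, 10.0):
    for tau in (1e-4, 1e-2, 0.5, 1.0):
        print(C, tau, round(bracketing_integral(tau, C), 6), round(bound(tau, C), 6))
```

As τ → 0 the closed form behaves like 2(Cτ)^{1/2}, so the τ^{1/2} rate in the bound cannot be improved; only the constant is loose.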

Let 𝒬1 = {q(·|β, F): (β, F) ∈ (Θ,ℱ), τ/2 < d(β − β0, F − F0) ≤ τ}, 𝔾n = √n(ℙn − P), and ||𝔾n||𝒬1 = supq∈𝒬1 |𝔾nq|. Then the left-hand side of (A.0) is upper bounded by 2E*||𝔾n||𝒬1.

Since 𝒬1 is a subset of the class 𝒬 defined in the proof of Theorem 1, N[ ](ε,𝒬1, L2(P)) ≤ N[ ](ε,𝒬, L2(P)) ≤ N[ ](ε, (Θ,ℱ), L2(P)), and consequently J[ ](τ,𝒬1, L2(P)) ≤ J[ ](τ, (Θ,ℱ), L2(P)) ≤ Cτ^{1/2}.

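Also, it is easy to see that, with our specification of the likelihood, Pq² < Cτ² and ||q||∞ < C for all q ∈ 𝒬1, for some 0 < C < ∞. Thus, by Lemma 3.4.2 in van der Vaart and Wellner (1996, p.324),

$$E^*\|\mathbb{G}_n\|_{\mathcal{Q}_1} \le C\,J_{[\,]}(\tau,\mathcal{Q}_1,L_2(P))\Big(1+\frac{J_{[\,]}(\tau,\mathcal{Q}_1,L_2(P))}{\tau^2\sqrt{n}}\,C\Big) \le C\tau^{1/2}\big(1+\tau^{-3/2}n^{-1/2}\big),$$

which implies (A.0) with ϕn(τ) = Cτ^{1/2}(1 + τ^{−3/2}n^{−1/2}); note that ϕn(τ)/τ^α is decreasing for any α ∈ (1/2, 2). Take rn = n^{1/3}; then

$$r_n^2\,\phi_n(1/r_n) = n^{2/3}\cdot Cn^{-1/6}\big(1+n^{1/2}n^{-1/2}\big) = 2C\sqrt{n}.$$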

Now all conditions of Theorem 3.4.1 in van der Vaart and Wellner (1996, p.322) are satisfied, so by this theorem, rn d(β̂n − β0, F̂n − F0) = n^{1/3} d(β̂n − β0, F̂n − F0) = Op(1).
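The rate calculation can be reproduced with a few lines of Python. The sketch takes ϕn(τ) = Cτ^{1/2}(1 + τ^{−3/2}n^{−1/2}) with an arbitrary C = 1 and checks two things. First, that r_n²ϕn(1/r_n)/√n equals 2C for r_n = n^{1/3}. Second, on a grid, that τ ↦ ϕn(τ)/τ^α is decreasing for α = 1 while ϕn itself (α = 0) is not, consistent with the monotonicity requirement on ϕn(τ)/τ^α in Theorem 3.4.1. Function names are hypothetical.

```python
import math

def phi_n(tau, n, C=1.0):
    """phi_n(tau) = C tau^{1/2} (1 + tau^{-3/2} n^{-1/2}), as obtained from Lemma 3.4.2."""
    return C * math.sqrt(tau) * (1.0 + tau ** -1.5 / math.sqrt(n))

def rate_ratio(n, C=1.0):
    """r_n^2 phi_n(1/r_n) / sqrt(n) with r_n = n^{1/3}; Theorem 3.4.1 needs this bounded."""
    r = n ** (1.0 / 3.0)
    return r ** 2 * phi_n(1.0 / r, n, C) / math.sqrt(n)

def ratio_is_decreasing(n, alpha, taus):
    """True if tau -> phi_n(tau) / tau^alpha is nonincreasing along the increasing grid taus."""
    vals = [phi_n(t, n) / t ** alpha for t in taus]
    return all(u >= v for u, v in zip(vals, vals[1:]))

taus = [0.01 * k for k in range(1, 101)]
for n in (10, 10**3, 10**6):
    print(n, rate_ratio(n), ratio_is_decreasing(n, 1.0, taus), ratio_is_decreasing(n, 0.0, taus))
```

The ratio equals 2C exactly (up to rounding) for every n because both terms of ϕn contribute the same order at τ = n^{−1/3}; this balance is what pins the rate at n^{1/3}.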

A.3 Proof of Theorem 2

Recall that lβ(β, F) is the score for β and lF(β, F)[g] = ∂l(β, F + λg)/∂λ|λ=0 is the score operator for F in direction g. Let l*(β0, F0) = lβ(β0, F0) − lF(β0, F0)[g*] be the efficient score, where g* is determined by

$$P\big\{\big(l_\beta(\beta_0,F_0)-l_F(\beta_0,F_0)[g^*]\big)\,l_F(\beta_0,F_0)[g]\big\} = 0 \quad \text{for all } g. \qquad \text{(A.1)}$$

This gives g*(Y) = F0(Y)E[ZQ2(X;β0, F0)|Y]/E[Q2(X;β0, F0)|Y].

Let ln(β, F) = log Ln(β, F) be the log-likelihood, lβ,n(β, F) = ∂ln(β, F)/∂β, and lF,n(β, F)[g] be the sample score operator for F in direction g; define lF,n(β, F)[g*] similarly. Since (β̂n, F̂n) is the MLE, we have lβ,n(β̂n, F̂n) = 0 and lF,n(β̂n, F̂n)[g*] = 0. Additionally, Plβ(β0, F0) = 0 and PlF(β0, F0)[g*] = 0. Let ℳ1 = {lβ(β, F): β ∈ ℬ, F ∈ ℱ} and ℳ2 = {lF(β, F)[g*]: β ∈ ℬ, F ∈ ℱ}. Since ℬ is bounded and ℱ is a collection of bounded monotone functions, using the entropy computations in the proof of Theorem 1, it is easy to show that they form Donsker classes. It can be shown that for fixed X, lβ(β, F) and lF(β, F)[g*] are Lipschitz in (β, F), so ℳ1 and ℳ2 are also Donsker classes. By Theorem 1, ||β̂n − β0|| + supt∈[τl,t0] |F̂n(t) − F0(t)| → 0 almost surely, and condition (C1) and the given model imply that lβ(β, F) and lF(β, F)[g*] are continuous in (β, F) with finite second moments; thus, by Corollary 2.3.12 in van der Vaart and Wellner (1996), with 𝔾n = √n(ℙn − P), we have

$$\mathbb{G}_n\{l_\beta(\hat\beta_n,\hat F_n)-l_\beta(\beta_0,F_0)\} = o_p(1),$$

where the op(1) is in the vector sense, and

$$\mathbb{G}_n\{l_F(\hat\beta_n,\hat F_n)[g^*]-l_F(\beta_0,F_0)[g^*]\} = o_p(1).$$

From these facts, since ℙn lβ(β̂n, F̂n) = 0 and ℙn lF(β̂n, F̂n)[g*] = 0, we get

$$\sqrt{n}\,P\,l_\beta(\hat\beta_n,\hat F_n) = -\mathbb{G}_n l_\beta(\beta_0,F_0) + o_p(1) \qquad \text{(A.2)}$$

and

$$\sqrt{n}\,P\,l_F(\hat\beta_n,\hat F_n)[g^*] = -\mathbb{G}_n l_F(\beta_0,F_0)[g^*] + o_p(1). \qquad \text{(A.3)}$$

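Denote lβ,β(β, F) = ∂²l(β, F)/(∂β∂β^T),

$$l_{\beta,F}(\beta,F)[g] = \frac{\partial}{\partial\lambda}\,l_\beta(\beta,F+\lambda g)\Big|_{\lambda=0},$$

and define lF,β(β, F)[g] and lF,F(β, F)[g1, g2] similarly. We use a Taylor expansion and note that ||β̂n − β0||² + ||F̂n − F0||² is bounded, so that by Lemma 1 and dominated convergence E(||β̂n − β0||² + ||F̂n − F0||²) = O(n^{−2/3}). Hence

$$P\,l_\beta(\hat\beta_n,\hat F_n) = P\,l_{\beta,\beta}(\beta_0,F_0)(\hat\beta_n-\beta_0) + P\,l_{\beta,F}(\beta_0,F_0)[\hat F_n-F_0] + O_p(n^{-2/3}).$$

The above and (A.2) give

$$\sqrt{n}\big\{P\,l_{\beta,\beta}(\beta_0,F_0)(\hat\beta_n-\beta_0) + P\,l_{\beta,F}(\beta_0,F_0)[\hat F_n-F_0]\big\} = -\mathbb{G}_n l_\beta(\beta_0,F_0) + o_p(1). \qquad \text{(A.4)}$$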

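Similarly,

$$P\,l_F(\hat\beta_n,\hat F_n)[g^*] = P\,l_{F,\beta}(\beta_0,F_0)[g^*](\hat\beta_n-\beta_0) + P\,l_{F,F}(\beta_0,F_0)[g^*,\hat F_n-F_0] + O_p(n^{-2/3}).$$

The above and (A.3) give

$$\sqrt{n}\big\{P\,l_{F,\beta}(\beta_0,F_0)[g^*](\hat\beta_n-\beta_0) + P\,l_{F,F}(\beta_0,F_0)[g^*,\hat F_n-F_0]\big\} = -\mathbb{G}_n l_F(\beta_0,F_0)[g^*] + o_p(1). \qquad \text{(A.5)}$$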

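It is known that −Plβ,β(β0, F0) = P{lβ(β0, F0)lβ(β0, F0)^T}. In the same way, −Plβ,F(β0, F0)[g] = P(lβ(β0, F0)lF(β0, F0)[g]) for all g, and −P(lF,F(β0, F0)[g1, g2]) = P(lF(β0, F0)[g1]lF(β0, F0)[g2]) for all g1 and g2. Note that (A.1) holds for any g. Thus,

$$P\,l_{\beta,F}(\beta_0,F_0)[g] - P\,l_{F,F}(\beta_0,F_0)[g^*,g] = -P\big\{\big(l_\beta(\beta_0,F_0)-l_F(\beta_0,F_0)[g^*]\big)\,l_F(\beta_0,F_0)[g]\big\} = 0 \quad \text{for all } g.$$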

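Now, subtracting (A.4) from (A.5) and using (A.1) with g = F̂n − F0, we get

$$\sqrt{n}\big\{P\,l_{F,\beta}(\beta_0,F_0)[g^*] - P\,l_{\beta,\beta}(\beta_0,F_0)\big\}(\hat\beta_n-\beta_0) = \mathbb{G}_n\big\{l_\beta(\beta_0,F_0)-l_F(\beta_0,F_0)[g^*]\big\} + o_p(1). \qquad \text{(A.6)}$$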

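Note that, with the efficient score l*(β0, F0) = lβ(β0, F0) − lF(β0, F0)[g*], (A.1) gives P{l*(β0, F0) lF(β0, F0)[g*]^T} = 0, so

$$P\,l_{F,\beta}(\beta_0,F_0)[g^*] - P\,l_{\beta,\beta}(\beta_0,F_0) = P\big\{l^*(\beta_0,F_0)\,l_\beta(\beta_0,F_0)^T\big\} = P\big\{l^*(\beta_0,F_0)\,l^*(\beta_0,F_0)^T\big\}.$$

This and (A.6) give

$$\sqrt{n}(\hat\beta_n-\beta_0) = \big[P\{l^*(\beta_0,F_0)l^*(\beta_0,F_0)^T\}\big]^{-1}\mathbb{G}_n l^*(\beta_0,F_0) + o_p(1) \rightsquigarrow N\Big(0,\big[P\{l^*(\beta_0,F_0)l^*(\beta_0,F_0)^T\}\big]^{-1}\Big),$$

which gives the desired result.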
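The projection behind (A.1) and the identity P{l* lβ^T} = P{l* l*^T}, which converts (A.6) into the efficient variance, have a simple finite-dimensional analogue. In the toy sketch below, which illustrates only the linear algebra and is not the estimator of this paper, P is replaced by an empirical measure. The nuisance scores lF(β0, F0)[g1], lF[g2], lF[g3] are replaced by three simulated vectors, and the least-squares coefficients play the role of g*. The residual l* = lβ − lF[g*] is then orthogonal to every nuisance direction, and ℙn(l* lβ) = ℙn(l* l*). All data and function names are hypothetical.

```python
import random

def mean(xs):
    return sum(xs) / len(xs)

def inner(u, v):
    """Empirical L2(P_n) inner product P_n(u v)."""
    return mean([a * b for a, b in zip(u, v)])

def solve(A, b):
    """Solve the small dense system A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def efficient_score(l_beta, nuisance_scores):
    """Return (residual of l_beta after L2(P_n) projection onto the nuisance span, coefficients)."""
    k = len(nuisance_scores)
    gram = [[inner(nuisance_scores[i], nuisance_scores[j]) for j in range(k)] for i in range(k)]
    rhs = [inner(l_beta, nuisance_scores[i]) for i in range(k)]
    coef = solve(gram, rhs)  # finite-dimensional stand-in for the direction g*
    resid = [lb - sum(c * s[m] for c, s in zip(coef, nuisance_scores))
             for m, lb in enumerate(l_beta)]
    return resid, coef

rng = random.Random(7)
n = 2000
dirs = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(3)]  # toy nuisance scores
l_beta = [0.8 * a - 0.3 * b + 0.1 * c + rng.gauss(0.0, 1.0) for a, b, c in zip(*dirs)]
l_star, coef = efficient_score(l_beta, dirs)

print([round(inner(l_star, d), 12) for d in dirs])      # orthogonality, as in (A.1)
print(inner(l_star, l_beta), inner(l_star, l_star))    # P_n(l* l_beta) = P_n(l* l*)
```

Both printed identities hold exactly up to floating-point error because they follow from the normal equations alone; the simulation design only affects the values of the coefficients.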

A.4 Proof of Theorem 3

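Since (β̂n, F̂n) is the MLE, we have

$$\hat F_n = \arg\max_{F\in\mathcal{F}}\sum_{i=1}^n\log p(Y_i,Z_i,\Delta_i|\hat\beta_n,F).$$

Let R(y|z, F) = 1 − Gγ{e^{β̂n^T z}F(y)}; then R(·|z, F) is a distribution function. Let ℛ = {R(·|z, F): F ∈ ℱ}, and let R̂n(·|z) be the MLE of R(·|z, F), i.e.,

$$\hat R_n(\cdot|z) = \arg\max_{R\in\mathcal{R}}\sum_{i=1}^n\big[\Delta_i\log R(Y_i|Z_i) + (1-\Delta_i)\log\{1-R(Y_i|Z_i)\}\big],$$

and it is straightforward that R̂n(·|z) = R(·|z, F̂n).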

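Recall the standard current status model, with log-likelihood

$$\sum_{i=1}^n\big[\Delta_i\log F(Y_i) + (1-\Delta_i)\log\{1-F(Y_i)\}\big].$$

It is known (e.g., see Example 3.2.15 in van der Vaart and Wellner (1996)) that the NPMLE F̌n(·) of F0(·) is the slope function of the greatest convex minorant of Fn(·), the cumulative-sum diagram on [0, 1] with jump Δi/n at Yi, i.e., the piecewise-linear function through (0, 0) and the points (Gn(Y(i)), Vn(Y(i))), i = 1, …, n, where Y(1) < ⋯ < Y(n) are the ordered Yi's. Moreover, for a ∈ R,

$$\{\check F_n(t)\le a\} = \Big\{\arg\min_s\{V_n(s)-aG_n(s)\}\ge t\Big\},$$

where $V_n(s) = n^{-1}\sum_{i=1}^n\Delta_i I(Y_i\le s)$ and $G_n(s) = n^{-1}\sum_{i=1}^n I(Y_i\le s)$.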
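The characterization of the NPMLE for standard current status data can be checked directly. The following sketch computes F̌n at the ordered Yi's by the pool-adjacent-violators algorithm, that is, the isotonic regression of the Δ's sorted by Y, which coincides with the left derivative of the greatest convex minorant of the cumulative-sum diagram. It then verifies the switching relation in its discrete form: F̌n(Y(i)) ≤ a if and only if i ≤ k̂(a), where k̂(a) is the largest k ∈ {0, …, n} minimizing n{Vn(Y(k)) − aGn(Y(k))} = Σ_{j≤k} Δ(j) − ak. Exact rational arithmetic (Python's fractions.Fraction) avoids spurious floating-point ties. The simulated data (F0(t) = 1 − e^{−t/2}) and the function names are illustrative assumptions.

```python
import math
import random
from fractions import Fraction

def pav(values):
    """Pool-adjacent-violators: least-squares nondecreasing fit with equal weights."""
    blocks = []  # each block is [sum of values, number of values]
    for v in values:
        blocks.append([v, 1])
        # Pool while the previous block's mean exceeds the last block's mean.
        while len(blocks) > 1 and blocks[-2][0] * blocks[-1][1] > blocks[-1][0] * blocks[-2][1]:
            s, c = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += c
    fit = []
    for s, c in blocks:
        fit.extend([s / c] * c)
    return fit

def npmle_current_status(y, delta):
    """Sort by Y; return ordered Y's, sorted Deltas, and the NPMLE of F at the ordered Y's."""
    order = sorted(range(len(y)), key=lambda i: y[i])
    d_sorted = [Fraction(delta[i]) for i in order]
    return [y[i] for i in order], d_sorted, pav(d_sorted)

def largest_argmin(d_sorted, a):
    """Largest k in {0, ..., n} minimizing sum_{j<=k} Delta_(j) - a*k = n[V_n - a G_n](Y_(k))."""
    best_k, best_val, cum = 0, Fraction(0), Fraction(0)
    for k, d in enumerate(d_sorted, start=1):
        cum += d
        if cum - a * k <= best_val:  # '<=' keeps the largest minimizer
            best_k, best_val = k, cum - a * k
    return best_k

rng = random.Random(11)
n = 40
y = [rng.uniform(0.0, 5.0) for _ in range(n)]
delta = [int(rng.random() < 1.0 - math.exp(-yi / 2.0)) for yi in y]  # hypothetical F0
ys, d_sorted, fit = npmle_current_status(y, delta)

# Switching relation: F_n(Y_(i)) <= a  iff  i <= largest argmin, over a grid of a values.
grid = sorted(set(fit)) + [Fraction(k, 20) for k in range(-1, 22)]
switching_ok = all((fit[i - 1] <= a) == (i <= largest_argmin(d_sorted, a))
                   for a in grid for i in range(1, n + 1))
print(fit[:10], switching_ok)
```

The relation holds because subtracting the line a·k from the cumulative-sum diagram turns "left slope ≤ a" into "the convex minorant is still nonincreasing at k", which is exactly k ≤ k̂(a).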

In our case, similarly, R̂n(·|Z) is the slope of the greatest convex minorant of Rn(·|Z), a cumulative-sum diagram on [0, 1] with constant jumps of Δi/n. Thus the corresponding Fn(·) in this case must be a weighted cumulative-sum diagram with weight wi at the Yi's, where the wi's are determined by wi ≥ 0, . Then F̂n(Yi) = Σj≤i wj := Wi. Assume the Yi's are arranged in increasing order. Under (C6), 0 < limn Wn/n < ∞ in probability. Since Wi = 0 for Δi = 0, we can arrange the Wi's such that {wi/n = (Wi+1 − Wi)/n: i = 1, …, n} are probability weights. Then, as for the standard current status model, the log-likelihood in our case will be maximized with the Wi's satisfying

Thus, , and

In our case, the above equality may not be achieved for some of the Δi’s.

Let

then F̂n(·) is the slope of the greatest convex minorant of Fn(·), and for a ∈ R,

$$\{\hat F_n(t)\le a\} = \Big\{\arg\min_s\{V_n(s)-aG_n(s)\}\ge t\Big\},$$

where and .

Then as in Example 3.2.15 in van der Vaart and Wellner (1996), at every fixed point t,

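To evaluate the distribution of n^{1/3}(F̂n(t) − F0(t)), we need to compute the probability of the event {n^{1/3}(F̂n(t) − F0(t)) ≤ r} for each r ∈ R. Take a = F0(t) + rn^{−1/3}; then {n^{1/3}(F̂n(t) − F0(t)) ≤ r} = {F̂n(t) ≤ a} = {n^{1/3}(arg mins {Vn(s) − aGn(s)} − t) ≥ 0}, and by the change of variable s ↦ t + n^{−1/3}h,

$$\{n^{1/3}(\hat F_n(t)-F_0(t))\le r\} = \Big\{\arg\min_h\, n^{2/3}\big[V_n(t+n^{-1/3}h)-V_n(t) - a\{G_n(t+n^{-1/3}h)-G_n(t)\}\big]\ge 0\Big\}. \qquad \text{(A.6)}$$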

Let g(·) be the density function of Y,

As a random variable, given the Zi’s, w takes with probability 1/n. Without confusion, we also denote w = w(Z) for its asymptotic version conditioning on Z. Note that Y and (T,Z) are independent, and ||β̂n − β0|| = Op(n−1/2), and with condition (C6), we show that

and

Now we evaluate B1,n(h). For h < 0, the notation I(t ≤ Y ≤ t + hn−1/3) means I(t + hn−1/3 ≤ Y ≤ t). Let

Then

Let ℱn = {fn,h(·, ·): |h| ≤ K} for some K > 0. By (C1) and (C4), the w(Zi)'s are uniformly bounded; thus ℱn has an envelope function Fn(y, x, z) = Cn^{1/6} I(t − Kn^{−1/3} ≤ y ≤ t + Kn^{−1/3}) with some 0 < C < ∞. Obviously,

and for some 0 < C < ∞,

It follows that for any totally bounded semimetric ρ(·, ·) on [−K,K],

Thus the three conditions in Theorem 2.11.21 of van der Vaart and Wellner (1996) are satisfied.

Let ℱn,δ = {fn,s − fn,h: ρ(s, h) ≤ δ}. Then ℱn,δ and ℱ²n,∞ = {(fn,s − fn,h)²: s, h ∈ [−K, K]} are P-measurable in the sense of Definition 2.3.3 in van der Vaart and Wellner (1996). Furthermore, for fixed h,

and

Therefore, as n → ∞, Pfn,sfn,h − Pfn,sPfn,h → η²(t)g(t)(|s| ∧ |h|)I(sh > 0). Note that E[𝔹(s) − 𝔹(h)]² = |s − h|, where 𝔹(·) is a two-sided Brownian motion process originating from zero. It follows that Pfn,sfn,h − Pfn,sPfn,h converges to the covariance function of the process g^{1/2}(t)η(t)𝔹(·).

For a probability measure Q, let N(ε, ℱn, L2(Q)) be the minimum number of ε-balls needed to cover ℱn under the metric of L2(Q). It is easy to see that

where the supremum is over all probability measures Q. Thus, for all ηn → 0,

By Theorem 2.11.22 in van der Vaart and Wellner (1996), we obtain

where ℓ∞([−K, K]) is the space of all bounded real functions on [−K, K] equipped with the supremum metric.

Now collecting results from (A.6), we have that the event {n1/3(F̂n(t) − F0(t))≤ r} is asymptotically equivalent to

In the above we used the fact that . Using Problem 3.2.5 in van der Vaart and Wellner (1996), the above can be rewritten as

In the above we used the facts that −𝔹(·) and 𝔹(·) have the same distribution, and that W = arg minh {𝔹(h) + h²} is symmetrically distributed about 0; thus P(CW ≥ −b) = P(CW ≤ b). This gives the desired result for h ∈ [−K, K]. Below we prove that the result actually holds on R. Let ĥn = arg minh{Vn(h) − aGn(h)} − t. We need to show that ĥn is bounded in probability; then, for large n, ĥn lies in [−K, K] for some 0 < K < ∞ with probability arbitrarily close to one, and so the desired result holds on R. For this, we only need to show n^{1/3}d(ĥn, ĥ) = Op(1) for some distance d(·, ·). The method is similar to that in the proof of Lemma 1 and is omitted.
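The symmetry of W = arg minh{𝔹(h) + h²} used above can be illustrated by simulation. The sketch approximates a two-sided Brownian motion on a grid over [−K, K], built from two independent one-sided branches started at 𝔹(0) = 0; K = 3 and the grid step 0.02 are arbitrary choices. It records the grid minimizer of 𝔹(h) + h². Because the two branches are exchangeable and h² is even, the draws should have mean near 0 and P(W ≤ 0) near 1/2. This is a Monte Carlo illustration under a discretization assumption, not a proof, and the function name is hypothetical.

```python
import random

def argmin_bm_plus_parabola(rng, K=3.0, step=0.02):
    """Grid approximation of W = argmin_h {B(h) + h^2} on [-K, K], with B a two-sided
    Brownian motion started at B(0) = 0 (two independent one-sided branches)."""
    m = int(round(K / step))
    sd = step ** 0.5
    best_h, best_val = 0.0, 0.0  # value at h = 0 is B(0) + 0 = 0
    for sign in (1.0, -1.0):
        b = 0.0
        for k in range(1, m + 1):
            b += rng.gauss(0.0, sd)  # independent N(0, step) increments
            h = sign * k * step
            if b + h * h < best_val:
                best_h, best_val = h, b + h * h
    return best_h

rng = random.Random(123)
draws = [argmin_bm_plus_parabola(rng) for _ in range(1000)]
mean_w = sum(draws) / len(draws)
frac_nonpos = sum(w <= 0.0 for w in draws) / len(draws)
print(round(mean_w, 3), round(frac_nonpos, 3))
```

Restricting to [−K, K] mirrors the two-step argument in the text: the parabolic drift makes minimizers far from zero very unlikely, so a moderate K already captures essentially all of the distribution.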

Contributor Information

Guoqing Diao, Department of Statistics, George Mason University.

Ao Yuan, Department of Biostatistics, Bioinformatics and Biomathematics, Georgetown University.

References

- Barlow RE, Bartholomew DJ, Bremner JM, Brunk HD. Statistical Inference Under Order Restrictions. Wiley; New York: 1972.

- Berkson J, Gage RP. Survival curve for cancer patients following treatment. Journal of the American Statistical Association. 1952;47:501–515.

- Betensky RA, Schoenfeld DA. Nonparametric estimation in a cure model with random cure times. Biometrics. 2001;57(1):282–286. doi: 10.1111/j.0006-341x.2001.00282.x.

- Box GEP, Cox DR. An analysis of transformations revisited, rebutted. Journal of the American Statistical Association. 1982;77:209–210.

- Chen MH, Ibrahim JG, Sinha D. A new Bayesian model for survival data with a surviving fraction. Journal of the American Statistical Association. 1999;94:909–919.

- Cook RJ, White BJG, Yi GY, Lee KA, Warkentin TE. Analysis of a nonsusceptible fraction with current status data. Statistics in Medicine. 2008;27:2715–2730. doi: 10.1002/sim.3102.

- Cox DR. Regression models and life-tables (with Discussion). Journal of the Royal Statistical Society, Series B. 1972;34:187–220.

- Diao G, Zeng D, Yang S. Efficient semiparametric estimation of short-term and long-term hazard ratios with right-censored data. Biometrics. 2013;69(4):840–849. doi: 10.1111/biom.12097.

- Fang H, Li G, Sun J. Maximum likelihood estimation in a semiparametric logistic/proportional-hazards mixture model. Scandinavian Journal of Statistics. 2005;32(1):59–75.

- Farewell VT. The use of mixture models for the analysis of survival data with long-term survivors. Biometrics. 1982;38:1041–1046.

- Farewell VT. Mixture models in survival analysis: Are they worth the risk? Canadian Journal of Statistics. 1986;14:257–262.

- Gray RJ, Tsiatis AA. A linear rank test for use when the main interest is in differences in cure rates. Biometrics. 1989;45(3):899–904.

- Groeneboom P. Brownian motion with a parabolic drift and Airy functions. Probability Theory and Related Fields. 1989;81(1):79–109.

- Groeneboom P, Wellner JA. Information Bounds and Nonparametric Maximum Likelihood Estimation. Birkhäuser; Basel: 1992.

- Hoel DG, Walburg HE. Statistical analysis of survival experiments. Journal of the National Cancer Institute. 1972;49:361–372.

- Huang J. Efficient estimation for the proportional hazards model with interval censoring. Annals of Statistics. 1996;24(2):540–568.

- Huang J, Rossini AJ. Sieve estimation for the proportional-odds failure-time regression model with interval censoring. Journal of the American Statistical Association. 1997;92(439):960–967.

- Ibrahim JG, Chen MH, Sinha D. Bayesian Survival Analysis. Springer; New York: 2001.

- Kosorok MR. Beyond parametrics in interdisciplinary research: Festschrift in honor of Professor Pranab K. Sen. Institute of Mathematical Statistics; 2008. Bootstrapping the Grenander estimator; pp. 282–292.

- Kuk AYC, Chen CH. A mixture model combining logistic regression with proportional hazards regression. Biometrika. 1992;79(3):531–541.

- van der Laan MJ, Jewell NP. The NPMLE for doubly censored current status data. Scandinavian Journal of Statistics. 2001;28(3):537–547.

- Lam KF, Xue H. A semiparametric regression cure model with current status data. Biometrika. 2005;92(3):573–586. doi: 10.1093/biomet/92.3.573.

- Lam KF, Fong DYT, Tang O. Estimating the proportion of cured patients in a censored sample. Statistics in Medicine. 2005;24(12):1865–1879. doi: 10.1002/sim.2137.

- Li CS, Taylor JMG, Sy JP. Identifiability of cure models. Statistics & Probability Letters. 2001;54:389–395.

- Lin DY, Oakes D, Ying Z. Additive hazards regression with current status data. Biometrika. 1998;85(2):289–298.

- Liu H, Shen Y. A semiparametric regression cure model for interval-censored data. Journal of the American Statistical Association. 2009;104:1168–1178. doi: 10.1198/jasa.2009.tm07494.

- Lu W, Ying Z. On semiparametric transformation cure models. Biometrika. 2004;91(2):331–343.

- Ma S. Additive risk model for current status data with a cured subgroup. Annals of the Institute of Statistical Mathematics. 2008. In press.

- Ma S. Cure model with current status data. Statistica Sinica. 2009;19(1):233–249.

- Ma S, Kosorok MR. Penalized log-likelihood estimation for partly linear transformation models with current status data. Annals of Statistics. 2005;33(5):2256–2290. doi: 10.1214/009053605000000444.

- Maller R, Zhou X. Survival Analysis with Long-Term Survivors. Wiley; New York: 1996.

- Peng Y, Dear KBG. A nonparametric mixture model for cure rate estimation. Biometrics. 2000;56(1):237–243. doi: 10.1111/j.0006-341x.2000.00237.x.

- Press WH, Teukolsky SA, Vetterling WT, Flannery BP. Numerical Recipes in C: The Art of Scientific Computing. 2nd ed. Cambridge University Press; 1992.

- Robertson T, Wright F, Dykstra R. Order Restricted Statistical Inference. Wiley; New York: 1988.

- Rossini AJ, Tsiatis AA. A semiparametric proportional odds regression model for the analysis of current status data. Journal of the American Statistical Association. 1996;91(434):713–721.

- Sy JP, Taylor JMG. Estimation in a Cox proportional hazards cure model. Biometrics. 2000;56(1):227–236. doi: 10.1111/j.0006-341x.2000.00227.x.

- Taylor J. Semiparametric estimation in failure time mixture models. Biometrics. 1995;51(3):899–907.

- Tsodikov A. A proportional hazards model taking account of long-term survivors. Biometrics. 1998;54:1508–1516.

- Tsodikov AD, Ibrahim JG, Yakovlev AY. Estimating cure rates from survival data: An alternative to two-component mixture models. Journal of the American Statistical Association. 2003;98(464):1063–1078. doi: 10.1198/01622145030000001007.

- van der Vaart A, Wellner J. Weak Convergence and Empirical Processes: With Applications to Statistics. Springer-Verlag; New York: 1996.

- van der Vaart AW. Lectures on Probability Theory and Statistics. Lecture Notes in Mathematics. Vol. 1781. Springer; New York: 2002. Semiparametric statistics; pp. 331–457.

- Xue H, Lam K, Li G. Sieve maximum likelihood estimator for semiparametric regression models with current status data. Journal of the American Statistical Association. 2004;99(466):346–356. doi: 10.1198/016214504000000313.

- Yakovlev AY, Tsodikov AD. Stochastic Models of Tumor Latency and Their Biostatistical Applications. World Scientific; New Jersey: 1996.

- Yu A, Kwan K, Chan D, Fong D. Clinical features of 46 eyes with calcified hydrogel intraocular lenses. Journal of Cataract and Refractive Surgery. 2001;27:1596–1606. doi: 10.1016/s0886-3350(01)01038-0.

- Yuan M, Diao G. Semiparametric odds rate model for modeling short-term and long-term effects with application to a breast cancer genetic study. The International Journal of Biostatistics. 2014;10(2):231–249. doi: 10.1515/ijb-2013-0037.

- Zeng D, Cai J, Shen Y. Semiparametric additive risks model for interval-censored data. Statistica Sinica. 2006a;16:287–302.

- Zeng D, Yin G, Ibrahim J. Semiparametric transformation models for survival data with a cure fraction. Journal of the American Statistical Association. 2006b;101:670–684.