Abstract

In 2012, Access Community Health Network, a Federally Qualified Health Center (FQHC) network with 36 health centers serving the greater Chicago area, embarked on a 3-year initiative to improve patient access. “Dramatic Performance Improvement” (DPI™) included the adoption of modified open access scheduling and practice changes designed to improve capacity and the ability to balance supply and demand. This article describes DPI™ implementation, strategies, and associated outcomes, including a 20% decrease in the no-show rate, a 33% drop in time to the third next available appointment (TNAA), a 37% decrease in cycle time, and a 13-percentage-point increase in the share of patients rating their overall experience as excellent.

Keywords: Chicago, cycle time, Dramatic Performance Improvement (DPI™), Federally Qualified Health Center, no-show rate, open access scheduling, patient access, patient satisfaction, practice improvement, third next available appointment (TNAA)

TIMELY access to care is a key marker of health care quality (Institute of Medicine, 2001) and has been linked to patient satisfaction (Bundy et al., 2005; Harris et al., 1999; Kennedy & Hsu, 2003; Sans-Corrales et al., 2006; Tuli et al., 2010), continuity of care (Belardi et al., 2004; Tseng et al., 2015; Tuli et al., 2010), and care efficiency (Kennedy & Hsu, 2003; Tuli et al., 2010). Although timely access has always been a focus, its importance has grown with the expansion of Medicaid and insurance subsidies under the Patient Protection and Affordable Care Act (ACA) (Kaiser Family Foundation, 2015) and the resulting surge in newly insured individuals seeking regular care. Even with pending changes to the ACA, understanding how to improve timely access remains crucial, especially for populations whose access to care is limited by multiple barriers.

The issue is particularly challenging for Federally Qualified Health Centers (FQHCs). By definition, FQHCs receive federal funding to provide primary care services in underserved urban and rural communities (Centers for Medicare & Medicaid Services, 2013). In 2013, there were approximately 1202 federally supported health center grantees operating 9170 sites; 72% of FQHC patients had incomes at or below 100% of the federal poverty level, 35% were uninsured, and 49% received Medicaid or Medicare (National Association of Community Health Centers, 2013). Some observers have suggested that Medicaid expansion and the rise in the number of newly insured patients will increase demand for FQHC services (Adashi et al., 2010; Commonwealth Fund, 2014). Others believe that competition among FQHCs means they must focus on patient engagement and retention by strengthening referral networks, investing in infrastructure, and becoming primary care medical homes (Kulesher, 2013). Under either scenario, ensuring timely patient access is essential.

One strategy is to move from a first-come, first-served model to open, or “advanced,” appointment scheduling. Under the first-come, first-served approach, patients call for an appointment and are offered the first available slot (Murray & Berwick, 2003). Practices sometimes accommodate urgent appointments, but few, if any, slots are left available for same-day visits. Because appointments are often scheduled far in advance, the approach is associated with high no-show rates (Kopach et al., 2007; Lee et al., 2005). No-show rates are of special concern for FQHCs because they have been found to be higher among low-income patients and Medicaid recipients (Kaplan-Lewis & Percac-Lima, 2013; Miller et al., 2015).

With open access, patients call for an appointment when they want to see their physician and are scheduled that day or soon afterward (Kopach et al., 2007; Parente et al., 2005). Open access has been associated with significant reductions in the time to the third next available appointment (TNAA) (Belardi et al., 2004; Bundy et al., 2005; Mehrotra et al., 2008; Rose et al., 2011; Tseng et al., 2015) and in no-show rates (Bundy et al., 2005; Kennedy & Hsu, 2003; Rose et al., 2011), as well as with improved continuity (Belardi et al., 2004; Tseng et al., 2015). TNAA is a commonly used access metric: the average number of days between a patient's request for an appointment and the third next available appointment. The third, rather than the first, open slot is used because it is less affected by chance openings such as last-minute cancellations. The impact of open access on patient satisfaction is mixed, with some studies showing improvement and others finding no effect (Rose et al., 2011). For open access to succeed, practice capacity must be sufficient to meet demand (Murray & Berwick, 2003). Additional efforts to expand capacity and balance supply and demand are often needed to sustain improvements in access (Kopach et al., 2007; Safety Net Medical Home Initiative, n.d.). Such interventions may include efforts to improve patient flow (Bard et al., 2016) and to reduce no-shows.

In 2012, Access Community Health Network (ACCESS), an FQHC network with 36 health centers serving the greater Chicago area, embarked on a 3-year, multipronged initiative to improve patient access. The initiative, called “Dramatic Performance Improvement” (DPI™), included the adoption of modified open access scheduling, along with additional changes designed to improve capacity and the centers' ability to balance supply and demand. This article describes DPI™ implementation, strategies, and associated outcomes.

METHODS

DPI™ was launched at ACCESS in October 2012 and continued formally until June 2015. Although patient access had long been a focus, its importance increased as other area providers and new retail clinics entered the marketplace and began aggressively pursuing newly insured patients. The initiative was also prompted by the network's interest in obtaining Patient-Centered Medical Home recognition from the National Committee for Quality Assurance (NCQA, n.d.). In preparation, ACCESS conducted a readiness assessment to identify performance gaps relative to NCQA standards and determined that the greatest gaps involved patient access. Evidence included low patient satisfaction with reaching centers by phone, a networkwide no-show rate of 22%, a high volume of walk-in visits that congested waiting areas, and scheduling and care delivery practices that contributed to bottlenecks.

Multiple access-limiting, efficiency-related factors were identified. First-come, first-served scheduling left few same-day appointments available for urgent visits. Over the years, 83 different visit types had been created to accommodate provider preferences, which complicated efforts to adjust health center schedules. Phone systems could not handle the call volume. Some patients had limited access to phones and reliable phone service. Because of these problems, many ACCESS patients simply showed up at health centers when they needed care, which contributed to long waiting times, congested waiting rooms, and other operational inefficiencies.

ACCESS leaders decided that a systemwide patient access quality improvement (QI) initiative was needed and engaged a consulting firm. DPI™ was launched during a 1-day leadership conference facilitated by ACCESS' CEO with support from the consulting firm. The conference was attended by senior ACCESS leaders, regional medical directors and administrators, health center managers, representatives from support departments, and interdisciplinary improvement teams. The CEO stressed the importance of the initiative by linking it to the ACCESS strategic plan and financial and growth targets, and worked with conference attendees to establish QI goals.

Setting

ACCESS health centers are in urban and suburban locations in the city of Chicago and Cook and DuPage counties. In fiscal year 2016, ACCESS provided services to more than 181 000 patients with 608 480 encounters. Medicaid is the system's top payer, accounting for 67% of ACCESS patients, followed by commercial payers (11%), Medicare (6%), and self-pay patients (16%). Approximately 77% of ACCESS patients are part of a managed care plan. The ACCESS patient population is racially and ethnically diverse, with 51% of patients identifying as Hispanic/Latino, 39% African American, 18% Caucasian, and 1% Asian. Four percent of ACCESS patients are homeless and 14% live in public housing.

The health centers are embedded in the communities they serve, which increases visibility and understanding of community-specific challenges and characteristics. Each is managed by its own leadership team and maintains an interdisciplinary clinical governance model that engages clinical, administrative, and IT staff in cross-departmental planning and decision-making. All health center staff are ACCESS employees. Staff include physicians from internal medicine, family medicine, pediatrics, and obstetrics (OB); advanced practice providers (nurse practitioners [NPs], physician assistants [PAs], and certified nurse midwives); registered nurses (RNs); medical assistants (MAs); and receptionists. Three centers host teaching programs maintained by area medical schools and serve as clinical practice sites for residents in family medicine, internal medicine, and OB. Students from other clinical programs (eg, NP and PA programs) also complete clinical rotations at the health centers. The centers are supported by centralized departments, including human resources, finance, grants planning, quality monitoring, and information services.

At the time of the patient access improvement initiative, there were 34 FQHCs in ACCESS' network. They varied in size from small centers with a single provider to centers with multiple providers and up to 25 examination rooms. Staffing and hours of operation also varied depending on each center's size and the needs of its patient population.

Approach

Each center created a QI team to lead and coordinate DPI™-associated QI activities addressing 3 metrics: no-show rate, TNAA, and cycle time (the total time of an appointment, from check-in to check-out). Teams included a provider, a medical assistant (MA), and a receptionist, with additional staff added at the center's discretion. ACCESS' Chief Operating Officer (COO) oversaw the initiative with support from the consulting firm, which coordinated activities across the health centers and provided coaching.

The initiative was implemented in 4 waves, with 8 to 12 health centers in each wave. The waves consisted of three 6-week “learning action periods” sandwiched between four 1-day learning sessions. A collaborative design allowed the teams to learn from one another's experiences. During the learning sessions, the teams were taught about system redesign principles, quality measurement and improvement techniques, and evidence-based strategies for improving patient access. A key educational component was to have teams use newly learned concepts to design “rapid redesign tests” or “tests of change,” addressing problems related to the 3 metrics. Teams then implemented those tests of change in their centers during the learning action periods. The consultants met with the teams in weekly coaching sessions to assess progress, fine-tune improvements being tested, and identify new strategies to address identified barriers. Later, some ACCESS staff, who had received additional QI training, joined the coaching sessions as internal, peer consultants.

A first step for each team was to follow patients through the appointment process to understand the center's scheduling, registration, and patient care practices, which helped identify bottlenecks and inefficiencies. Tests of change were initially piloted with 1 provider. Based on the pilot results, a team either spread the change throughout the center or modified its approach and tested it again. Rather than following a prescribed sequence, each team chose which changes to pilot based on conditions at its health center. By each wave's midpoint, most teams were addressing all 3 metrics simultaneously through concurrent tests of change. The teams used e-mail and staff meetings to teach staff about QI concepts and techniques and to seek input on practice changes.

The teams calculated the 3 metrics daily and used the data to guide their efforts. Data were shared with other teams through a weekly newsletter that featured improvement tips and articles highlighting the work. Teams were encouraged to share successful strategies during the learning sessions.

Improvement strategies

Several strategies were implemented across the network. All centers replaced their first-come, first-served scheduling with a modified open access approach, in which a portion of each provider's day was left unscheduled to accommodate same-day appointments and walk-ins. Visit-type definitions were standardized across the network, and the number of allowable visit types was reduced from 83 to 10. A centralized call center was created to handle scheduling for the centers with the highest call volumes; its hours were later expanded to include evenings and weekends.
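The modified open access approach can be pictured as a daily slot template in which some slots are bookable in advance and others are held open. The sketch below is a minimal illustration under stated assumptions, not ACCESS's actual scheduling rules: the function `build_day_template`, the 15-minute slot length, and the held-open share are hypothetical, because the article does not report what portion of each provider's day was left unscheduled.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def build_day_template(start: str, end: str, slot_minutes: int,
                       same_day_share: float) -> List[Tuple[str, str]]:
    """Return (start time, kind) pairs, where kind is "prebook" or "same-day".

    Hypothetical sketch: `same_day_share` of the slots are held open for
    same-day appointments and walk-ins; the rest can be booked in advance.
    """
    if slot_minutes <= 0:
        raise ValueError("slot_minutes must be positive")
    if not 0.0 <= same_day_share <= 1.0:
        raise ValueError("same_day_share must be between 0 and 1")
    fmt = "%H:%M"
    t = datetime.strptime(start, fmt)
    stop = datetime.strptime(end, fmt)
    step = timedelta(minutes=slot_minutes)
    times = []
    while t + step <= stop:
        times.append(t.strftime(fmt))
        t += step
    n_held = round(len(times) * same_day_share)
    # Spread the held slots evenly across the session; the indices are
    # distinct because len(times) / n_held >= 1.
    held = {i * len(times) // n_held for i in range(n_held)} if n_held else set()
    return [(tm, "same-day" if i in held else "prebook")
            for i, tm in enumerate(times)]


template = build_day_template("08:00", "12:00", slot_minutes=15, same_day_share=0.25)
print([tm for tm, kind in template if kind == "same-day"])
# ['08:00', '09:00', '10:00', '11:00']
```

Spreading the held slots through the session, rather than reserving one block at the end of the day, is one way to let staff absorb walk-ins as they arrive; a real template would also need to reflect visit types and provider hours.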

The teams also targeted each metric with specific strategies. Strategies to reduce no-shows included multiple appointment confirmation calls, with the last call made 15 to 30 minutes before the patient was scheduled to arrive; a follow-up call after each no-show to determine the reason for the missed appointment and offer an opportunity to reschedule; transportation vouchers for patients who needed them; and changes to scheduling policies to better meet patient needs. Staff focused on building positive relationships in their communications with patients, with the goal of creating a welcoming community and encouraging mutual respect for one another's time and efforts.

Strategies to reduce days to TNAA focused on how to actively manage and adjust provider schedules and ensure no appointment slot went unused. For example, if a patient scheduled at 9:00 am was late and a patient scheduled for 9:15 was early, the staff could switch the appointment times, eliminating the unused slot and reducing later bottlenecks.
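The slot-swapping rule in this example can be expressed as a small function. The sketch is hypothetical (the `Slot` record and `fill_slot_with_early_arrival` are illustrative names, not part of any ACCESS or practice management system): when the patient booked into a slot has not checked in, the first patient from a later slot who has already arrived is moved forward, and the late patient takes the later time.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Slot:
    time: str               # scheduled start time, e.g. "09:00"
    patient: Optional[str]  # None means nobody is booked into the slot
    arrived: bool = False   # True once the patient has checked in


def fill_slot_with_early_arrival(schedule: List[Slot], idx: int) -> bool:
    """Hypothetical rule: keep schedule[idx] from going unused.

    If the patient booked at schedule[idx] has not arrived (or the slot is
    empty), swap in the first already-arrived patient from a later slot.
    Returns True if a swap was made.
    """
    current = schedule[idx]
    if current.arrived:
        return False  # the slot will be used as booked
    for later in schedule[idx + 1:]:
        if later.arrived and later.patient is not None:
            # Swap patients and arrival flags between the two slots.
            current.patient, later.patient = later.patient, current.patient
            current.arrived, later.arrived = later.arrived, current.arrived
            return True
    return False  # nobody has arrived early; the slot stays open for now


# The example from the text: the 9:00 patient is late, the 9:15 patient is early.
day = [Slot("09:00", "Patient A"), Slot("09:15", "Patient B", arrived=True)]
fill_slot_with_early_arrival(day, 0)
print([(s.time, s.patient) for s in day])
# [('09:00', 'Patient B'), ('09:15', 'Patient A')]
```

In practice staff made this judgment in real time; the function only makes the rule explicit, and it deliberately leaves the slot open when no one has arrived early so that a walk-in could still use it.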

Multiple strategies were used to reduce cycle time and eliminate steps that did not add value to the patient experience, including optimizing the MA role. Before the launch of DPI™, ACCESS had invested in standardizing role expectations and expanding MA skills and competencies in preparation for Joint Commission accreditation and the implementation of its new electronic health record (EHR) system. This work provided a foundation for the cycle time reduction strategies, which included pairing MAs with providers so that MAs understood each provider's preferences and patient panel; conducting daily morning huddles in which MAs, providers, support staff, case managers, behavioral health specialists, and others discussed information needed for the day's encounters and matched providers with potential walk-ins; stocking examination rooms with needed supplies and placing printers and other equipment close to support staff; escorting patients to examination rooms as soon as possible after check-in; localizing care, including immunizations and blood draws, in examination rooms rather than having patients move from place to place; and having MAs perform a “mid-way knock” during patient encounters to remind providers of the passing time and ask whether they needed assistance. Over time, providers and staff in all the centers moved from reacting to patient needs to anticipating them, often calling patients beforehand to obtain information useful in planning upcoming visits.

Data collection

The impact of DPI™ was measured from December 2013 through June 2015 by tracking no-show rate, TNAA, and cycle time. Patient satisfaction was measured over the same period.

The teams collected data and calculated each metric following instructions provided by the consultants. The no-show rate was calculated as the percentage of scheduled appointments that patients neither kept nor canceled in advance. TNAA was calculated by counting the number of days until the third next available appointment for each provider and then averaging across providers to obtain a center-level value. Cycle time was computed as the elapsed time, in minutes, from patient check-in to check-out; average cycle time was calculated from measurements taken for 1 hour in the morning and 1 hour in the afternoon. The teams submitted their center's results to the consultants, who calculated the 3 metrics for ACCESS overall.
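The three calculations described above can be sketched as follows. This illustrates the definitions under explicit assumptions rather than reproducing the consultants' instructions: the function names are hypothetical; the no-show denominator is taken to be appointments kept plus no-shows (advance cancellations excluded); TNAA counts calendar days from the measurement date, with a same-day opening counting as 0; and the sampled cycle times are summarized with the median, as reported in Table 1, although the text describes an average.

```python
from datetime import date, datetime
from statistics import mean, median
from typing import Dict, List


def no_show_rate(no_shows: int, kept: int) -> float:
    """Percentage of appointments still on the schedule that were not kept.

    Assumption: appointments canceled in advance are excluded from both counts.
    """
    scheduled = no_shows + kept
    if scheduled == 0:
        raise ValueError("no scheduled appointments")
    return 100.0 * no_shows / scheduled


def days_to_third_next_available(as_of: date, open_slots: List[date]) -> int:
    """Calendar days from `as_of` to a provider's third open appointment slot."""
    upcoming = sorted(d for d in open_slots if d >= as_of)
    if len(upcoming) < 3:
        raise ValueError("fewer than three open slots on the schedule")
    return (upcoming[2] - as_of).days


def center_tnaa(as_of: date, open_slots_by_provider: Dict[str, List[date]]) -> float:
    """Center-level TNAA: the mean of the per-provider values."""
    return mean(days_to_third_next_available(as_of, slots)
                for slots in open_slots_by_provider.values())


def cycle_time_minutes(check_in: str, check_out: str) -> float:
    """Elapsed minutes between same-day check-in and check-out ("HH:MM")."""
    fmt = "%H:%M"
    elapsed = datetime.strptime(check_out, fmt) - datetime.strptime(check_in, fmt)
    return elapsed.total_seconds() / 60


# Hypothetical sample: encounters observed in one morning hour and one afternoon hour.
sampled = [("09:00", "09:48"), ("09:20", "09:51"), ("14:05", "14:45")]
print(median(cycle_time_minutes(a, b) for a, b in sampled))
# 40.0
```

Using the third open slot per provider and then averaging, as the text describes, means one heavily booked provider raises the center's TNAA without masking open capacity elsewhere.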

Patient satisfaction was measured via a routinely administered survey that asks patients to rate (1) overall satisfaction with their health center experience, (2) ease of getting through to the center by telephone, (3) friendliness and helpfulness of the receptionist, (4) friendliness and helpfulness of the medical assistant, and (5) encounter with the provider (ie, whether the provider spent enough time with the patient and answered the patient's questions). Patients rate each item on a 5-point scale that ranges from “poor” (1) to “excellent” (5).
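Table 2 reports each survey item as a percentage rather than a mean score. A common way to turn 5-point ratings into such a percentage is a "top-box" score, the share of respondents choosing the highest category; for the OEE item this is the share rating their overall experience "excellent" (5). The sketch below assumes that convention; the article does not state how the other items in Table 2 were converted, and the function name is hypothetical.

```python
from typing import Iterable, Optional


def top_box_percent(ratings: Iterable[Optional[int]], top: int = 5) -> float:
    """Percentage of valid 1-5 ratings equal to `top` (5 = "excellent").

    Missing (None) or out-of-range responses are dropped before computing the share.
    """
    valid = [r for r in ratings if r is not None and 1 <= r <= 5]
    if not valid:
        raise ValueError("no valid ratings")
    return 100.0 * sum(r == top for r in valid) / len(valid)


# Hypothetical responses to the overall-satisfaction item.
print(top_box_percent([5, 4, 5, None, 5, 3]))
# 60.0
```

A top-box score is stricter than a mean: a shift from "very good" (4) to "excellent" (5) moves it, while a shift from "poor" to "fair" does not.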

Analysis

Data from the beginning of fiscal year 2013 (July 1, 2012) through the end of fiscal year 2016 (June 30, 2016) were analyzed to assess changes in the 3 DPI™ metrics and their association with patient satisfaction. Reliable data for all 3 metrics were not available for the entire DPI™ rollout period. This was particularly true for cycle time, because the reliability of those data depended on the phased rollout of the electronic health record. Baseline start dates therefore vary among the metrics, as noted in Table 1.

RESULTS

DPI™ metrics

Table 1 summarizes changes in average no-show rate, average TNAA, and median cycle time from the start of available data through the end of wave 4 in June 2016.

Table 1. ACCESS Overall Change in DPI™ Indicators: No-Show, TNAA, Cycle Time, and OEE During DPI™ Rollout.

| Indicator | At start of available data | End of wave 4 (FY 2016 Q4, June 2016) |
|---|---|---|
| Average no-show rate (goal: 5%) | 20% (FY 2013 Q3, March 2014) | 16% |
| Average TNAA (goal: 0 d) | 10.5 d (FY 2013 Q2, December 2012) | 7 dᵃ |
| Median cycle time (goal: 30 min) | 59 min (FY 2014 Q3, March 2015) | 37 min |

Abbreviations: ACCESS, Access Community Health Network; DPI™, Dramatic Performance Improvement™; OEE, overall experience excellent; TNAA, third next available appointment.

ᵃPrimary care TNAA: 4 days; obstetrics TNAA: 8 days.

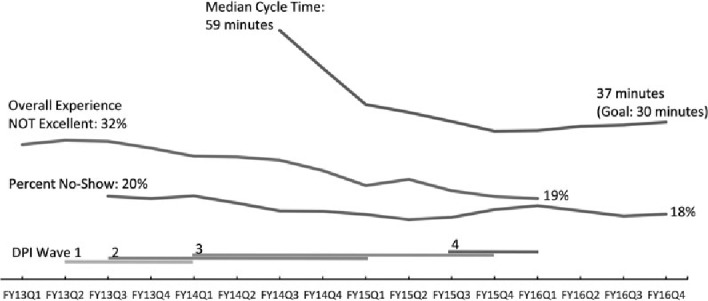

No-show

The average overall no-show rate decreased from 20% in March 2014 to 16% at the end of wave 4. Although the 5% goal was not achieved, this represents a 20% relative decrease (4 percentage points).

TNAA

Average TNAA dropped from 10.5 days in December 2012 to 7 days by June 2016, a decrease of 33%. Additional analyses revealed a difference between primary care and OB: at the end of wave 4, the average TNAA was 4 days for primary care versus 8 days for OB. Longer TNAA for OB is expected because most OB providers do not work full-time.

Cycle time

The median overall cycle time decreased from 59 minutes in March 2015 to 37 minutes at the end of wave 4, a 37% decrease; the target was 30 minutes. Recorded data show a median cycle time of 110 minutes in December 2012, but that estimate is unreliable given inconsistent cycle time reporting before DPI™ implementation. The actual baseline cycle time was probably higher than 59 minutes, and the percent decrease correspondingly larger.
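The percentage changes reported for the 3 metrics are relative changes, computed as (end value - start value) / start value × 100. A short sketch reproduces them from the start and end values in Table 1; it also shows why the OEE change in Table 2 (68% to 81%) is best described as 13 percentage points, which corresponds to a relative increase of about 19%. The function name is illustrative.

```python
def relative_change_pct(start: float, end: float) -> float:
    """Relative change from start to end, as a percentage of the start value."""
    return 100.0 * (end - start) / start


# Start and end values taken from Table 1 and Table 2.
metrics = {
    "Average no-show rate (%)": (20, 16),
    "Average TNAA (days)": (10.5, 7),
    "Median cycle time (min)": (59, 37),
    "Overall experience excellent (%)": (68, 81),
}
for name, (start, end) in metrics.items():
    print(f"{name}: {start} -> {end}, {relative_change_pct(start, end):+.1f}%")
# Average no-show rate (%): 20 -> 16, -20.0%
# Average TNAA (days): 10.5 -> 7, -33.3%
# Median cycle time (min): 59 -> 37, -37.3%
# Overall experience excellent (%): 68 -> 81, +19.1%
```

For metrics that are themselves percentages, stating both the relative change and the absolute change in percentage points avoids ambiguity: the no-show rate fell by 20% in relative terms but by 4 percentage points.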

Patient satisfaction

Patient satisfaction results are summarized in Table 2. Patient satisfaction scores on all items exceeded benchmarks established by the Midwest Clinicians' Network (2014), both at baseline and at the end of wave 4. (The Midwest Clinicians' Network is a not-for-profit corporation whose membership includes more than 100 community health centers and 10 primary care associations in 10 states.) The survey item with the most noteworthy improvement was the percentage of respondents reporting “overall experience excellent” (OEE).

Table 2. Patient Satisfaction Survey Results.

| Survey item | Baseline (FY 2013 Q1, July to Sept 2012) | End of wave 4 (FY 2016 Q4, April to June 2016) |
|---|---|---|
| Overall experience excellent (goal: 70%) | 68% | 81% |
| Calls get through easily (goal: 70%) | 53% | 63% |
| Friendliness and helpfulness of receptionist (goal: 85%) | 73% | 81% |
| Friendliness and helpfulness of medical assistant (goal: 85%) | 76% | 84% |
| Provider answers your questions and spends enough time with you (goal: 85%) | 77% | 79% |

Overall experience excellent

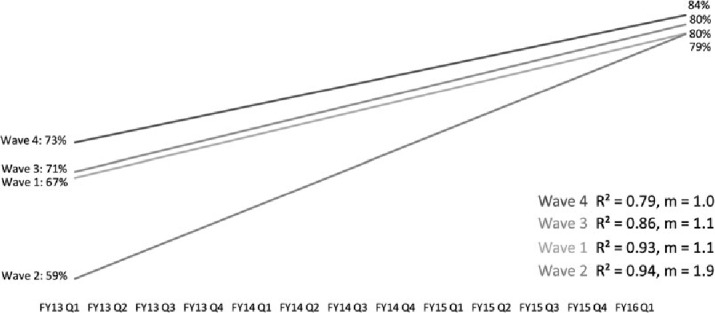

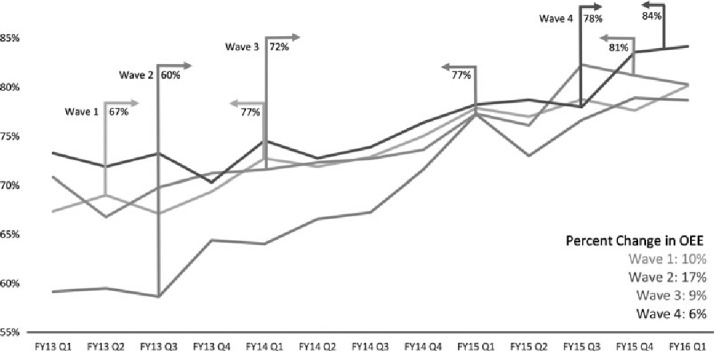

The percent OEE increased from 68% in the first quarter of fiscal year 2013 (July to September 2012) to 81% in the fourth quarter of fiscal year 2016 (April to June 2016). Figures 1 and 2 illustrate the improvement in average percent OEE during DPI™ rollout. Figure 1 fits a linear trend to the quarterly OEE scores for each wave. It shows that waves 1, 3, and 4 had nearly identical trajectories of improvement from the first quarter of fiscal year 2013 (July to September 2012) through the first quarter of fiscal year 2016 (July to September 2015). Wave 2 started with a lower average score but improved at a steeper rate; by the end of the evaluation period, its percent OEE approximately matched that of the other 3 waves.
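Linear trends like those in Figure 1 can be obtained with an ordinary least-squares fit of each wave's quarterly OEE percentages against quarter number. The sketch below assumes that is how the lines were fitted (the article does not name the method); the function name and the sample scores are hypothetical and are not ACCESS data. Comparing slopes and intercepts is what separates a wave that starts lower but improves faster, as wave 2 did, from the others.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit y = intercept + slope * x; returns (slope, intercept)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical quarterly OEE percentages (quarter 0 = FY13 Q1) for two made-up waves.
quarters = range(4)
steady_wave = [68, 70, 72, 74]  # higher start, 2 points per quarter
steep_wave = [60, 63, 66, 69]   # lower start, 3 points per quarter
print(fit_line(quarters, steady_wave))  # (2.0, 68.0)
print(fit_line(quarters, steep_wave))   # (3.0, 60.0)
```

With real quarterly data the points would scatter around the fitted line, so the slope summarizes the average rate of improvement per quarter rather than any single quarter's change.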

Figure 1.

Linear trends: Quarterly average percent “overall experience excellent” by DPI™ rollout wave clinic group (FY13 Q1 to FY16 Q1). DPI™ indicates Dramatic Performance Improvement™.

Figure 2.

Trend: Average percent “overall experience excellent” by DPI™ rollout wave clinic group (FY13 Q1 to FY16 Q1). DPI™ indicates Dramatic Performance Improvement™.

Figure 2 shows the same changes in average percent OEE in more detail. Upright bars with arrows mark the score at the beginning and end of each wave, and the solid trend lines show the change by quarter. Scores improved during each wave and, in general, continued to improve after the wave was completed. Improvement in waves 3 and 4 began before their official DPI™ rollout, which suggests possible “knowledge bleed” of practices from earlier waves within the system. It also suggests that factors other than DPI™ may have contributed to the improvement in overall patient satisfaction.

Figure 3 illustrates comparative trends in median cycle time, no-show rate, and OEE during DPI™ rollout, with the periods of the 4 DPI™ waves shown at the bottom. Because the trend lines in Figure 3 use different y-axes, the chart is intended only as an illustrative comparison of the overall declines in median cycle time, percent no-show, and the percentage of patients reporting their experience as not excellent.

Figure 3.

Comparative trends in cycle time, no-show rate, and overall experience during the DPI™ rollout. DPI™ indicates Dramatic Performance Improvement™.

DISCUSSION

The DPI™ initiative resulted in improved patient access, as indicated by marked reductions in the average no-show rate, days to TNAA, and median cycle time, along with improved patient satisfaction. Previously congested health center waiting rooms are now lightly occupied, prompting positive comments from patients and allowing ACCESS leaders to consider smaller waiting rooms in future facility planning. Feedback also suggests that the initiative advanced staff leadership skills and fostered a culture of teamwork and continuous improvement.

The initiative's multipronged approach reflected the understanding that problematic patient access is a function of multiple factors. Implementation of modified rather than complete open access was guided by patient needs. Allowing some advance scheduling ensured that patients with limited or no access to telephones, or who tended to forget to book appointments, could book follow-up appointments while still at the health center. Leaving some slots open ensured that same-day appointments would be available to patients seeking routine and urgent care, and that walk-in appointments could also be accommodated. The establishment of the centralized call center addressed longstanding problems associated with overloaded and outdated phone systems at certain high-volume health centers. The interventions targeting no-shows, TNAA, and cycle time helped further address access problems by expanding health center capacity, although all strategies used still required daily attention by staff, underscoring the ongoing nature of access management.

ACCESS and health center leaders laid the groundwork for success by specifying goals and metrics for the initiative, allowing sufficient time for training and QI activities, and setting an expectation that all employees support and participate in the initiative. ACCESS' CEO continued to highlight the initiative's importance and demonstrated high-level support by promoting DPI™ efforts and metrics in bimonthly organization-wide teleconference huddles. Equally important was pairing regional medical directors, as team leaders, with operations managers so that changes were not seen as separate from clinical care. The initiative was also emphasized at quarterly provider meetings, which all employed providers were required to attend. Health centers also scheduled team huddles 3 times, at set days and times, so that all managers and staff had an opportunity to participate.

Other factors aiding the initiative included the network's practice management system, which provided up-to-date information on each patient and made it easier to adjust providers' schedules; the data-driven process, in which teams used their center's data to guide tests of change and shared results with other centers; the buy-in of clinicians who were willing to test changes and then promote their adoption by colleagues; and the participation of support staff. Perhaps most importantly, the initiative challenged and empowered receptionists and MAs, whose leadership capabilities are often overlooked, to master complex skills and champion the initiative. Support staff became experts in QI techniques and served as role models and coaches. ACCESS leaders worked to remove hierarchical barriers so that staff could work as a team and use the health center as a testing ground without fear of failure. Support staff's understanding of system barriers was critical to improvement and to the development of new workflows. Staff were given a voice and pride of ownership, which led to the emergence of new leaders and a different kind of dialogue between staff and providers.

Table 3 summarizes the key steps described previously that organizations should consider when adopting a similar strategy for dramatic improvement. Discussions with DPI™ leaders identified a factor linking all of the steps: the teams set audacious goals, which were tracked weekly and reported regularly up the organizational chain to the board. Without this, success would likely have been much more muted and harder to sustain over time.

Table 3. Key Elements/Steps for Dramatic Performance Improvement™ Strategy.

| Step | Key element |
|---|---|
| 1 | Set the stage with leadership and staff for a long-term strategy and create mechanisms for ongoing communication |
| 2 | Allocate sufficient financial, time, and staff resources |
| 3 | Develop teams that include all levels of staff, including initial teams of “early adopters” |
| 4 | Identify the problem and set goals; create a timeframe and schedules for process improvement; teach teams how to measure progress toward goals regularly and report on results |
| 5 | Launch the process improvement, report on progress, and create plans for sustainability |

Abbreviations: ACCESS, Access Community Health Network; DPI™, Dramatic Performance Improvement™; PCMH, Patient-Centered Medical Home.

Efforts to improve patient access continue. No-show rates, TNAA, and cycle time are still tracked, and health center-based QI teams continue to work on improvements. New staff undergo DPI™ training as part of orientation. The CEO's staff teleconferences continue, and progress toward patient access goals is discussed at each ACCESS Board of Directors meeting. Although the overall no-show rate of 16% is in line with rates attained in other studies (Rose et al., 2011), health center staff and leaders continue to make changes in pursuit of a 5% no-show rate. TNAA has proved the most difficult metric to improve, suggesting that new strategies may be required to expand capacity. Health center leaders are examining provider staffing patterns to assess the need for additional providers, particularly in OB. For example, one health center used patient access data and evaluation results from ACCESS' Healthy Start program (a federal program that seeks to reduce infant morbidity and mortality by serving low-income, hard-to-reach women) to assess practice patterns associated with the program's 1 dedicated obstetrician. The center found that the obstetrician averaged only 6 to 8 prenatal visits per day and that patients waited more than 2 hours on average, largely because he was often called to deliveries during scheduled appointments. Working with the obstetrician, the center hired 2 advanced practice nurses for the OB practice and gave patients the option of going to other health centers with shorter TNAAs.

There are several limitations that may affect the validity or generalizability of the initiative's results. These include lack of control sites, use of assessment methods at the system level that did not allow the authors to link improvements to individual strategies, and ACCESS' geographic limitation to the greater Chicago area. The initiative also did not assess the impact of changes on continuity or clinical outcomes.

Even with these limitations, the authors conclude that the combination of modified open access and strategies to enhance capacity and match supply and demand was effective in improving patient access to care. The approach used at ACCESS highlights the feasibility and value of a multifaceted approach to improving patient access and offers a model for implementing such an approach in other settings.

Footnotes

The authors have disclosed that they have no significant relationships with, or financial interest in, any commercial companies pertaining to this article.

REFERENCES

- Adashi E. Y., Geiger J., Fine M. D. (2010). Health care reform and primary care: The growing importance of the community health center. New England Journal of Medicine, 362, 2047–2050. 10.1056/NEJMp1003729 [DOI] [PubMed] [Google Scholar]

- Bard J. F., Shu Z., Morrice D. J., Wang D. E., Poursani R., Leykum L. (2016). Improving patient flow at a family health clinic. Health Care Management Science, 19(2), 170–191. 10.10007/s10729-014-9294-y [DOI] [PubMed] [Google Scholar]

- Belardi F. G., Weir S., Craig F. W. (2004). A controlled trial of an advanced access appointment system in a residency family medicine center. Family Medicine, 36(5), 341–345. [PubMed] [Google Scholar]

- Bundy D. G., Randolph G. D., Murray M., Anderson J. B., Margolis P. A. (2005). Open access in primary care: Results of a North Carolina pilot project. Pediatrics, 116(1), 82–87. [DOI] [PubMed] [Google Scholar]

- Centers for Medicare & Medicaid Services. (2013). Federally qualified health center. Retrieved January 29, 2016, from https://www.cms.gov/Outreach-and-Education/Medicare-Learning-Network-MLN/MLNProducts/downloads/fqhcfactsheet.pdf

- Commonwealth Fund. (May 16, 2014). New survey: Community health centers make substantial gains in health information technology use, remain concerned about ability to meet increased demand following ACA coverage expansions. Retrieved from http://www.commonwealthfund.org/publications/press-releases/2014/apr/community-health-centers

- Harris L. E., Swindle W., Mungai S. M., Weinberger M., Tierney W. (1999). Measuring patient satisfaction for quality improvement. Medical Care, 37(12), 1207–1213. [DOI] [PubMed] [Google Scholar]

- Institute of Medicine. (2001). Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academy Press.

- Kaiser Family Foundation. (2015). Key facts about the uninsured population. Retrieved from http://kff.org/uninsured/fact-sheet/key-facts-about-the-uninsured-population/

- Kaplan-Lewis E., Percac-Lima S. (2013). No-show to primary care appointments: Why patients do not come. Journal of Primary Care and Community Health, 4(4), 241–244. 10.1177/2150131913498513

- Kennedy J. G., Hsu J. T. (2003). Implementation of an open access scheduling system in a residency training program. Family Medicine, 35(9), 666–670.

- Kopach R., Delaurentis P. C., Lawley M., Muthuraman K., Ozsen L., Rardin R., Willis D. (2007). Effects of clinical characteristics on successful open access scheduling. Health Care Management Science, 10(2), 111–124.

- Kulesher R. R. (2013). Health reform's impact on federally qualified community health centers: The unintended consequence of increased Medicaid enrollment on the primary care medical home. The Health Care Manager, 32(2), 99–106. 10.1097/HCM.0b013e31828ef5d5

- Lee V. J., Earnest A., Chen M. I., Krishnan B. (2005). Predictors of failed attendances in a multi-specialty outpatient centre using electronic databases. BMC Health Services Research, 5, 51.

- Mehrotra A., Keehl-Markowitz L., Ayanian J. (2008). Implementing open-access scheduling of visits in primary care practices: A cautionary tale. Annals of Internal Medicine, 148(12), 915–922.

- Midwest Clinicians' Network. (2014). Members of Midwest Clinicians' Network. Retrieved February 3, 2016, from http://www.midwestclinicians.org/#!member-organizations/c5x5

- Miller A. J., Chae E., Peterson E., Ko A. B. (2015). Predictors of repeated “no-showing” to clinic appointments. American Journal of Otolaryngology, 36(3), 411–414.

- Murray M., Berwick D. M. (2003). Advanced access: Reducing waiting and delays in primary care. Journal of the American Medical Association, 289(8), 1035–1040.

- National Association of Community Health Centers. (2013). United States health center fact sheet. Retrieved January 29, 2016, from http://www.nachc.com/client//United_States_FS_2014.pdf

- NCQA. (n.d.). NCQA Patient-Centered Medical Home: Improving experiences for patients, providers, and practice staff. Retrieved January 28, 2016, from https://www.ncqa.org/Portals/0/PCMH%20brochure-web.pdf

- Parente D. H., Pinto M. B., Barber J. C. (2005). A pre-post comparison of service operational efficiency and patient satisfaction under open access scheduling. Health Care Management Review, 30(3), 220–228.

- Rose K. D., Ross J. S., Horwitz L. (2011). Advanced access scheduling outcomes: A systematic review. Archives of Internal Medicine, 171(13), 1150–1159. 10.1001/archinternmed.2011.168

- Safety Net Medical Home Initiative. (n.d.). Enhanced access. Retrieved from http://www.safetynetmedicalhome.org/change-concepts/enhanced-access

- Sans-Corrales M., Pujol-Ribera E., Gené-Badia J., Pasarín-Rua M. I., Iglesias-Pérez B., Casajuana-Brunet J. (2006). Family medicine attributes related to satisfaction, health, and costs. Family Practice, 23(3), 308–316.

- Tseng A., Wiser E., Barclay E. (2015). Implementation of advanced access in a family medicine residency practice. Journal of Medical Practice Management, 31(2), 74–77.

- Tuli S. Y., Thompson L. A., Ryan K. A., Srinivas G. L., Fillips D. J., Young C. M., Tuli S. S. (2010). Journal of Graduate Medical Education, 2(2), 215–221. 10.4300/JGME-D-09-00087.1