Abstract

In social, personality and mental health research, the tendency to select absolute end-points on Likert scales has been linked to certain cultures, lower intelligence, lower income and personality/mental health disorders. It is unclear whether this response style reflects an absolutist cognitive style or is merely an experimental artefact. In this study, we introduce an alternative approach for estimating absolute responding that uses natural language markers and is more informative, flexible and ecologically valid. We focussed on ‘function words’ (e.g. particles, conjunctions, prepositions) because they do not depend on any specific context and are therefore more generalizable.

To identify such linguistic markers and test their generalizability, we conducted a text analysis of online reviews of films, tourist attractions and consumer products. Every written review was accompanied by a star rating scale (akin to a Likert scale), which allowed us to label text samples as absolute or moderate. The data were split into independent ‘training’ and ‘test’ sets. Using the training set, we identified a rank order of linguistic markers for absolute and moderate text, which were then evaluated in a classifier on the test set. The top three markers alone (“but”, “!” and “seem”) produced 88% classification accuracy, which increased to 91% using 31 linguistic markers.

Keywords: Extreme responding, Absolutism, Text analysis, Natural language, Machine learning

1. Introduction

In social, personality and mental health research, absolute responding (or ‘extreme’ responding) is a response style estimated using Likert-type scales. Selecting the absolute end-points of a scale (e.g. 1 and 5 on a 5-point scale) corresponds to absolute responding, while selecting any point in between corresponds to non-absolute, or moderate, responding. This study aimed to identify linguistic markers that act as surrogates for absolute and moderate responding on Likert scales. These markers could expand our understanding of both the language and the cognition associated with absolute and moderate responding; the language we use has previously been shown to relate to the way we think (e.g., Al-Mosaiwi & Johnstone, 2018). For measuring absolute and moderate responding, linguistic markers also offer a more informative and ecologically valid alternative, or addition, to Likert scales.

1.1. Absolute responding on Likert scales and its limitations

Absolute responding on Likert scales has been linked to a number of cognitive, social and cultural factors. Lower IQ and less education (e.g., Light, Zax, & Gardiner, 1965; Marin, Gamba, & Marin, 1992) have been associated with more absolute responding, as have personality characteristics such as intolerance of ambiguity and simplistic thinking (e.g., Naemi, Beal, & Payne, 2009).

Greater absolute responding has also been linked to ‘black’ and ‘Hispanic’ cultures (e.g., Bachman, O'Malley, & Freedman-Doan, 2010; Hui & Triandis, 1989; Marin, Gamba, & Marin, 1992); while lower absolute responding (more moderate responding) is linked to Japanese, Chinese (e.g., Chen, Lee, & Stevenson, 1995) and Korean cultures (e.g., Chun, Campbell, & Yoo, 1974). On closer inspection, these cultural findings often depend on the size of the scale used; an observed effect on a 5-point scale may not be apparent on a 10-point scale (e.g., Clarke, 2000; Hui & Triandis, 1989). Such inconsistencies naturally raise doubts about the veracity of the results.

Additionally, a series of studies with depressed participants has shown that both positive and negative absolute responses on Likert scales predict future relapse (e.g., de Graaf, Huibers, Cuijpers, & Arntz, 2010; Peterson et al., 2007; Teasdale et al., 2001). However, other studies have failed to find this effect (Ching & Dobson, 2010) or have raised methodological concerns about the use of Likert scales, specifically regarding the effect of item content on response style (Forand & DeRubeis, 2014). That is, the content of the questions and the labelling of the end-points (e.g. “Mostly agree”) could compromise the absolute nature of an end-point response. This moderating effect is not accounted for when simply counting end-point responses.

These previous findings have exclusively relied on observing an absolute response style on Likert scales. This simplistic method cannot be applied to qualitative data, it lacks ecological validity, and there is no evidence as to whether the findings generalize beyond Likert scales. That is, it is not clear whether the absolute responding of some groups relates to meaningful differences in absolutist thinking, or simply an experimental artefact specific to using Likert scales.

Our proposed method of measuring absolute responding through linguistic markers in natural language presents an alternative that avoids some of the limitations inherent to Likert scales. Being based on complex, naturalistic data (natural language), it offers greater flexibility and ecological validity because it is not reliant on structured response formats and can be used in an observational study of data acquired from a wide variety of sources.

1.2. Function word linguistic markers

To be generalizable, linguistic markers cannot depend on the content of any given subject (e.g. nouns, verbs, adjectives), as these will differ from one subject to another. Therefore, we restrict our feature selection to include only ‘function words’, which have a grammatical and structural role, but convey little to no content (e.g. particles, conjunctions, prepositions). Ordinarily, we attend to the content of language and have little conscious awareness of its functional style. For this reason, function words have previously been examined as implicit measures, particularly for differences in writing style (for review see Tausczik & Pennebaker, 2010).

Text analysis studies have associated specific classes of function words with particular writing styles. For example, conjunctions, negations, articles and prepositions have been associated with a categorical or formal language style (Chung & Pennebaker, 2007). Exclusive words (e.g. “but”, “except”, “without”), conjunctions and prepositions have been shown to be markers of greater ‘cognitive complexity’ (Pennebaker & King, 1999). Increased use of auxiliary verbs, pronouns and adverbs is characteristic of a narrative language style (Pennebaker, Chung, Frazee, Lavergne, & Beaver, 2014). First-person pronouns predictably indicate a self-focus, while it has been suggested that third-person pronouns (they, he, she) are a sign of wellbeing (Chung & Pennebaker, 2007).¹ We aim to extend this literature by identifying function words that correlate with absolute and moderate responding on Likert-type scales.

1.3. Machine learning classification

Text analysis combined with machine learning has regularly been used to classify natural language text linked to positive vs. negative ratings (e.g., Feldman, 2013); this is referred to as ‘sentiment analysis’. In this study, we followed the same process, except that we were interested in differences between absolute and moderate ratings rather than between positive and negative ones. The purpose of building a classifier, similar to those previously used for valence classification, was to demonstrate the predictive accuracy of the linguistic markers identified in the training set.

2. Methods and data analysis

2.1. Data collection

The internet is increasingly being used as a source of naturalistic writing for research in linguistics and psychology. Many websites allow users to leave lengthy comments in the form of personal narratives, requests for help, or reviews. In this study, we collected natural language text posts from three popular websites: IMDB, TripAdvisor and Amazon. All three websites combine a star rating system (akin to a Likert scale) with written natural language reviews of films, holiday destinations and products, respectively. Reviews paired with the lowest or highest (end-point) ratings were labelled absolute, and all other reviews were labelled non-absolute (or moderate). The valence of the reviews (positive or negative) was not factored into the analysis, so absolutely positive reviews were grouped with absolutely negative reviews, as both are absolute. Convergent validity in absolute responding between Likert scales and natural language was therefore estimated using the star rating scales and the text posts of these websites.
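The labelling rule above can be illustrated with a short Python sketch (the study itself was carried out in R, and the function name `label_style` is hypothetical). It assumes ratings are already on a 1–5 scale and deliberately ignores valence, so 1-star and 5-star reviews receive the same label.

```python
def label_style(stars: int) -> str:
    """Label a 1-5 star rating as 'absolute' (an end-point) or 'moderate'.

    Valence is ignored on purpose: 1 (absolutely negative) and
    5 (absolutely positive) are both labelled 'absolute'.
    """
    if stars not in (1, 2, 3, 4, 5):
        raise ValueError(f"expected a 1-5 rating, got {stars}")
    return "absolute" if stars in (1, 5) else "moderate"


print([label_style(s) for s in range(1, 6)])
```

Applied to the ratings 1 to 5 in turn, only the two end-points are labelled absolute.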

We selected IMDB, TripAdvisor and Amazon because they were large enough to provide sufficient data for training and testing our classifier. At the time of writing, each had the most web traffic in its respective domain (‘Arts and Entertainment’, ‘Travel’ and ‘Shopping’), as shown by www.similarweb.com. We chose websites from three completely different industries so that the linguistic markers identified would be less dependent on any particular context. On IMDB, users commented on films; on TripAdvisor, they wrote about tourist attractions; and on Amazon, they reviewed everyday products. From each website we selected 18 films, tourist attractions or products, respectively. Our general selection procedure was first to identify the films, tourist attractions and products with the largest overall number of reviews, and then to single out those with the broadest rating distributions (i.e. not predominantly positive or negative), so that a reasonable sample could be collected at each level of the star rating scale. We also aimed to select films, tourist attractions and products from a wide mix of genres, countries and categories, respectively.²

For each film, tourist attraction and product, we gathered the written text accompanying each star rating. We aimed to collect 15,000 words at each star-rating level for every film, attraction and product. Where this was not possible, we collected all available reviews, ensuring that a minimum of 3000 words was sampled. The reviews for each item at each star level were copied into a single text file. TripAdvisor and Amazon use a 5-point rating scale, which resulted in 90 text files (18 × 5) from each website. IMDB was an exception: its star rating scale ranges from 1 to 10 rather than 1 to 5, so we generated 180 text files (18 × 10) for this website.

To reduce the IMDB 10-point scale to match the 5-point scales of Amazon and TripAdvisor, we first aligned the absolute end-points. On both scales, 1 star means absolutely negative. Absolutely positive is 10 stars on IMDB, which was reassigned to 5 to match the TripAdvisor and Amazon 5-point scale (i.e. 1 star → 1 star; 10 stars → 5 stars). We next determined that the central values on the 10-point scale (those corresponding to ‘3’ on a 5-point scale) were 5 and 6, and these were reassigned to 3 (i.e. 5 stars → 3 stars; 6 stars → 3 stars). This meant that 2–4 stars on the 10-point scale, which are neither absolutely negative nor central, corresponded to 2 stars on the 5-point scale. Similarly, 7–9 stars on the 10-point scale, which are neither absolutely positive nor central, corresponded to 4 stars on the 5-point scale. This realignment achieved our main objective of preserving the integrity of the absolute end-points (e.g. 9 stars was not combined with 10 stars, as 9 stars is not an absolute rating).
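As a minimal sketch (in Python, with the hypothetical names `IMDB_TO_FIVE_POINT` and `imdb_to_five_point`), the realignment described above amounts to a fixed lookup table in which only 1 and 10 map onto the 5-point end-points.

```python
# Realignment described in the text: 1 -> 1, 2-4 -> 2, 5-6 -> 3, 7-9 -> 4, 10 -> 5.
IMDB_TO_FIVE_POINT = {1: 1, 2: 2, 3: 2, 4: 2, 5: 3, 6: 3, 7: 4, 8: 4, 9: 4, 10: 5}


def imdb_to_five_point(stars: int) -> int:
    """Map a 1-10 IMDB rating onto the 1-5 scale, keeping the end-points intact."""
    if stars not in IMDB_TO_FIVE_POINT:
        raise ValueError(f"expected a 1-10 rating, got {stars}")
    return IMDB_TO_FIVE_POINT[stars]
```

The design choice is visible in the table: 9 stars maps to 4, not 5, so no non-absolute rating is merged into an end-point.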

2.2. Data analysis in R

We used the R programming language (R Development Core Team, 2010) to conduct the text analysis, measuring function word usage by dividing text into unigrams (single words). In the training set, we identified the unigrams that best differentiated absolute from moderate natural language. These were then used in a machine learning classifier to automatically label text in an independent test set as either absolute or moderate.

2.2.1. Pre-processing data

Text analysis and pre-processing were performed using the quanteda package (Benoit & Nulty, 2016) in R. We first divided our data into a training set and a test set (70:30 split), using a stratified partition to ensure that the proportions of the different groups (i.e. absolute/moderate; positive/negative) were comparable in both sets. Both sets were then tokenized (separated into individual words), and all tokens were converted to lower case.
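A stratified 70:30 partition of this kind can be sketched in plain Python as below. The paper does not state which R function was used (caret's `createDataPartition` is one option); `stratified_split` and its arguments are hypothetical, and each stratum is assumed to be a hashable, sortable label such as an (absolute/moderate, positive/negative) pair.

```python
import random
from collections import defaultdict


def stratified_split(items, strata, train_frac=0.7, seed=0):
    """Split items into training and test lists so that every stratum is
    divided in (approximately) the same train_frac : 1 - train_frac ratio."""
    if len(items) != len(strata):
        raise ValueError("items and strata must have the same length")
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    groups = defaultdict(list)
    for item, stratum in zip(items, strata):
        groups[stratum].append(item)
    train, test = [], []
    for stratum in sorted(groups):  # sorted so the output order is deterministic
        members = list(groups[stratum])
        rng.shuffle(members)
        n_train = round(len(members) * train_frac)
        train.extend(members[:n_train])
        test.extend(members[n_train:])
    return train, test
```

Splitting within each stratum, rather than over the whole data set, is what keeps the group proportions comparable between the two sets.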

In text analysis, function words are typically termed ‘stop words’, as these are the words that data scientists traditionally remove from their analyses. Stop words are commonly viewed as unimportant because they convey little content, so R text-analysis packages provide standard procedures for removing them. By slightly altering these procedures, we retained the stop words and removed all other (content) words instead.
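The inversion of stop-word removal described above can be sketched as follows, in Python rather than the authors' R code. `FUNCTION_WORDS` is a small hypothetical subset chosen for illustration, not the stop-word list used in the study; exclamation marks are kept because they appear among the paper's features, and the simple tokenizer is an assumption (it does not handle curly apostrophes).

```python
import re

# Hypothetical, illustrative subset only; a real analysis would use a full
# English stop-word list rather than these few words.
FUNCTION_WORDS = {
    "the", "a", "of", "and", "but", "though", "however", "some",
    "never", "ever", "my", "you", "your", "can't", "doesn't",
}
# Words (optionally with a straight-apostrophe contraction) or a lone "!".
TOKEN_RE = re.compile(r"[a-z]+(?:'[a-z]+)?|!")


def function_word_tokens(text):
    """Lower-case and tokenize text, then keep only function words and '!'.

    This is the inverse of ordinary stop-word removal: content words are
    discarded and stop words are retained.
    """
    tokens = TOKEN_RE.findall(text.lower())
    return [t for t in tokens if t in FUNCTION_WORDS or t == "!"]


print(function_word_tokens("The plot was great, but I'd never watch it again!"))
```

In this example the content words (‘plot’, ‘great’, ‘watch’ and so on) are dropped, leaving only ‘the’, ‘but’, ‘never’ and the exclamation mark.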

Tokens were then stemmed, a process that reduces inflected words to a common stem; for example, ‘argued’, ‘argues’ and ‘arguing’ are all reduced to the same stem. Tokens were also normalized by converting the frequency count of each token type into a percentage prevalence value. Importantly, the features in the test and training sets must match; therefore, tokens that appeared only in the test set were removed, and tokens that appeared only in the training set were added to the test set with a percentage prevalence of 0.
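The normalization and feature-alignment steps can be sketched as below (stemming is omitted because it requires an external stemmer). The helper names are hypothetical, and each document is assumed to be a list of already filtered tokens.

```python
from collections import Counter


def percentage_prevalence(tokens):
    """Convert raw token counts for one document into percentages of all tokens."""
    counts = Counter(tokens)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {token: 100.0 * n / total for token, n in counts.items()}


def align_to_training(test_row, training_vocabulary):
    """Give a test document exactly the training features: tokens seen only
    in the test set are dropped, and training tokens absent here get 0."""
    return {token: test_row.get(token, 0.0) for token in training_vocabulary}


row = percentage_prevalence(["but", "but", "!", "seem"])
print(row)
print(align_to_training({"but": 50.0, "zzz": 10.0}, ["but", "seem"]))
```

Because alignment is driven by the training vocabulary alone, nothing about the test set leaks into feature selection.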

2.2.2. Feature selection and classification

Machine learning and classification were implemented with the caret package (Kuhn, 2008) in R. Other functions, including data manipulation and visualization tools, were retrieved from CRAN (R Core Team, 2014).

A Gaussian naive Bayes classifier was used to classify reviews labelled absolute and moderate. Naive Bayes is a probabilistic classifier that applies Bayes' theorem under the assumption that features are conditionally independent given the class. We chose it because it is simple, directly outputs a predicted category, and is particularly suited to high-dimensional inputs, as is the case in text analysis (Scikit-learn, 2016).
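To make the model concrete, here is a from-scratch Python sketch of a Gaussian naive Bayes classifier; it is not the caret model the authors trained (scikit-learn's `GaussianNB` is a production alternative in Python). Each class stores a prior plus a per-feature mean and variance, and prediction picks the class with the highest log prior plus summed Gaussian log-likelihoods. The class name and the fixed variance floor are assumptions of this sketch, and the toy data are invented.

```python
import math
from collections import defaultdict


class GaussianNaiveBayes:
    """Minimal Gaussian naive Bayes: features are treated as conditionally
    independent given the class, each modelled by a per-class normal."""

    def __init__(self, var_floor=1e-9):
        self.var_floor = var_floor  # small constant added to avoid zero variance

    def fit(self, X, y):
        by_class = defaultdict(list)
        for row, label in zip(X, y):
            by_class[label].append(row)
        n, n_features = len(X), len(X[0])
        self.params = {}
        for label, rows in by_class.items():
            means = [sum(r[j] for r in rows) / len(rows) for j in range(n_features)]
            variances = [
                sum((r[j] - means[j]) ** 2 for r in rows) / len(rows) + self.var_floor
                for j in range(n_features)
            ]
            self.params[label] = (math.log(len(rows) / n), means, variances)
        return self

    def _log_joint(self, row, label):
        # log P(class) + sum over features of log N(x | mean, variance)
        log_prior, means, variances = self.params[label]
        total = log_prior
        for x, m, v in zip(row, means, variances):
            total += -0.5 * math.log(2 * math.pi * v) - (x - m) ** 2 / (2 * v)
        return total

    def predict(self, X):
        return [max(self.params, key=lambda c: self._log_joint(row, c)) for row in X]


# Toy percentage-prevalence rows for two hypothetical features.
X = [[0.0, 1.0], [0.2, 1.2], [0.1, 0.9], [3.0, 0.1], [3.2, 0.0], [2.9, 0.2]]
y = ["absolute"] * 3 + ["moderate"] * 3
model = GaussianNaiveBayes().fit(X, y)
print(model.predict([[0.1, 1.0], [3.1, 0.1]]))
```

Working in log space avoids numerical underflow when many small per-feature likelihoods are multiplied.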

Each function word token was treated as an independent predictor, and its importance was evaluated individually. Receiver operating characteristic (ROC) curve analysis was conducted on each predictor, plotting its true positive rate against its false positive rate across a range of discrimination thresholds. The area under the ROC curve (AUC) was used as the measure of variable importance. Function words were then ranked by importance and incorporated into the classifier one at a time, to determine how many linguistic markers are required to discriminate satisfactorily between absolute and moderate natural language. This was done via hold-out validation, using the partition of the data into ‘training’ and ‘test’ sets: the training set was used to identify the most important features and to train the naive Bayes classifier, and the test set was used only to examine the predictive accuracy of the trained classifier. More informative than classification accuracy is Cohen's kappa statistic, which compares the observed accuracy with the accuracy expected by chance and thereby takes prior probabilities into account. Generally, a kappa >0.75 is considered ‘excellent’ (Fleiss, 1981). We thus obtained classification accuracies for models with increasing numbers of features.
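The ranking and evaluation metrics described above can be sketched in Python as follows; this is an illustration, not the caret implementation the authors used. Per-feature AUC is computed with the Mann–Whitney formulation, which equals the area under the empirical ROC curve. Because a token may mark either class, importance is taken as max(AUC, 1 − AUC), which is an assumption of this sketch. Cohen's kappa is (p_o − p_e) / (1 − p_e). All function names are hypothetical.

```python
def roc_auc(scores, labels, positive):
    """Area under the ROC curve for one predictor, computed as the
    Mann-Whitney probability that a randomly chosen positive document scores
    higher than a randomly chosen negative one (ties count as half)."""
    pos = [s for s, lab in zip(scores, labels) if lab == positive]
    neg = [s for s, lab in zip(scores, labels) if lab != positive]
    if not pos or not neg:
        raise ValueError("need at least one document from each class")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def rank_features(columns, labels, positive):
    """Rank tokens by direction-agnostic AUC importance.

    columns maps a token to its per-document prevalence values. Returns
    (token, importance, marks_positive) tuples, most important first.
    """
    ranked = []
    for token, values in columns.items():
        auc = roc_auc(values, labels, positive)
        ranked.append((token, max(auc, 1.0 - auc), auc > 0.5))
    ranked.sort(key=lambda entry: entry[1], reverse=True)  # stable for ties
    return ranked


def cohens_kappa(y_true, y_pred):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e), where p_e is the agreement
    expected by chance from the marginal label frequencies."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    p_o = sum(t == p for t, p in zip(y_true, y_pred)) / n
    classes = set(y_true) | set(y_pred)
    p_e = sum((y_true.count(c) / n) * (y_pred.count(c) / n) for c in classes)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1.0 - p_e)
```

To mirror the sequential procedure, one would take the top k tokens from `rank_features` on the training set for k = 1, 2, and so on, fit a classifier on those columns, and compute `cohens_kappa` on the test set for each k. For instance, predictions that agree on 3 of 4 documents with marginals (2, 2) versus (1, 3) give p_o = 0.75 and p_e = 0.5, hence a kappa of 0.5.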

2.2.3. Feature selection and classification of valence

Our main objective was to examine content-free function words as markers for the style of responses (absolute/moderate). For the purpose of comparison, we conducted an additional feature selection and classification analysis of the valence content of responses (positive/negative) using the same data and methodology. Reviews paired with 1–2 stars were labelled negative, and reviews with 4–5 stars were labelled positive.
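The valence labelling rule can be sketched in the same way (Python; the function name is hypothetical). The text specifies labels only for 1–2 and 4–5 stars, so returning no label for 3-star reviews, and thereby excluding them, is an assumption of this sketch.

```python
from typing import Optional


def label_valence(stars: int) -> Optional[str]:
    """Valence label for a 1-5 star rating: 1-2 negative, 4-5 positive.

    3-star ratings receive no valence label (None); treating them as
    excluded from the valence analysis is an assumption of this sketch.
    """
    if stars in (1, 2):
        return "negative"
    if stars in (4, 5):
        return "positive"
    if stars == 3:
        return None
    raise ValueError(f"expected a 1-5 rating, got {stars}")
```

Unlike the absolute/moderate labels, these labels split the two end-points apart, which is what makes the valence analysis a useful point of comparison.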

3. Results

3.1. Unigrams and classification

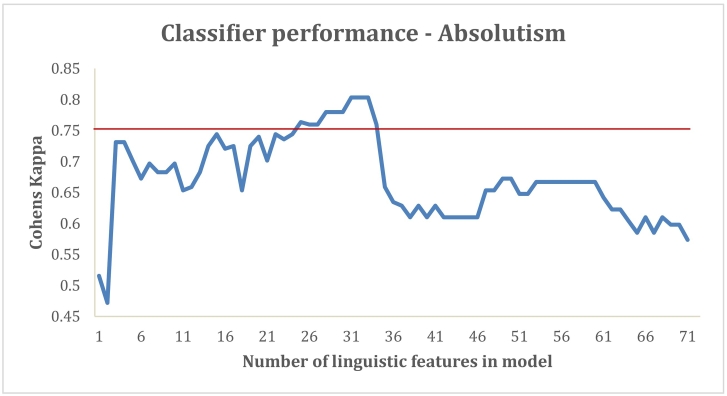

Based on ROC curves, we identified the tokens (unigrams) that were most predictive of moderate and absolute reviews (Fig. 1; for an extended list see Table S1 in the Supplementary materials). Kappa values for trained models with increasing numbers of linguistic features are shown in Fig. 2. Notably, the top three features alone (the words “but” and “seem” and exclamation marks) adequately distinguish absolute from moderate natural language in the test set (kappa = 0.73). The best classification performance is achieved with the top 25–34 features (kappa = 0.76–0.80). Classifier performance then drops precipitously when more than 34 features are added to the model; this is attributed to ‘over-fitting’, which occurs when new features add more noise than signal.

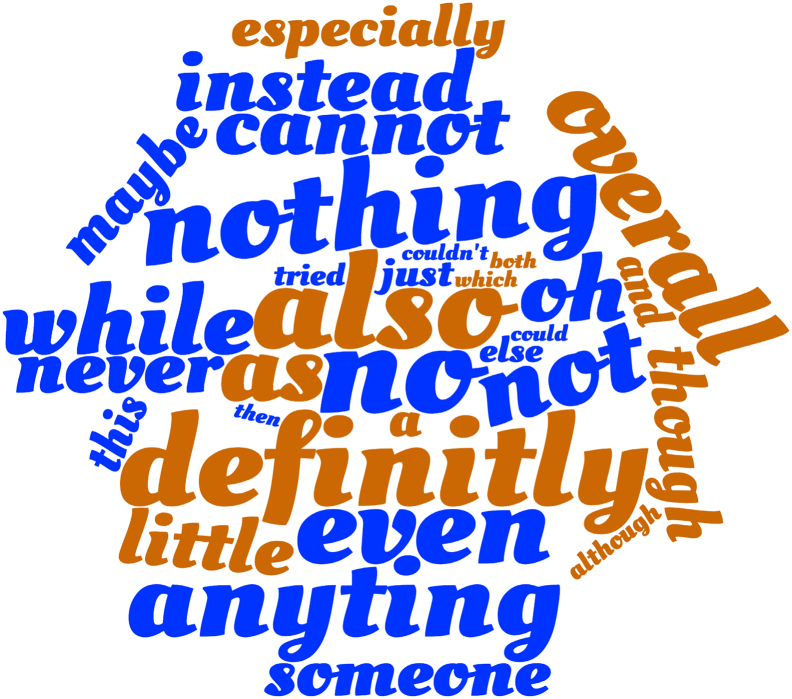

Fig. 1.

The top 31 tokens (unigrams) which are most predictive of absolute and moderate reviews. The size of font reflects the order of importance as designated by the ROC curve values for each unigram. The tokens specific to absolute reviews are in red, while the tokens specific to moderate reviews are in green. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

Fig. 2.

Cohen's kappa values for classifiers with increasing numbers of features, as ranked by receiver operating characteristic curve analysis.

3.2. Unigram natural language markers — absolutism

The highest kappa was obtained using the top 31 linguistic features (Fig. 2); of these, 11 are specific to absolute reviews and 20 are specific to moderate reviews (Fig. 1). We combined the absolute words into a single dictionary to analyse their distribution across the 5-point rating scale. This was done using the Linguistic Inquiry and Word Count software (LIWC; Pennebaker, Booth, Boyd, & Francis, 2015), which calculates the percentage prevalence of words. The data were analysed with a linear mixed-effects model (the SPSS syntax script can be found in the supplemental material online), the recommended method for this type of data structure (Baayen, Davidson, & Bates, 2008). Star rating was the fixed factor and website the random factor. Mixed-effects models consider both fixed and random effects and can be used to assess the influence of the fixed effects on the dependent variable after accounting for random effects (namely, correlated residuals in star ratings from the same website). We found a significant main effect of star rating on absolutist words, F(4, 327) = 40.01, p < .001. There was also a significant main effect of website, F(2, 327) = 216.97, p < .001, but no significant interaction between star rating and website, F(8, 327) = 1.12, p = .35. Pairwise comparisons with a Bonferroni correction for star ratings found that 1-star reviews (M = 2.07%, SD = 0.60) had significantly more absolutist words than 2-star (M = 1.39%, SD = 0.52, p < .001), 3-star (M = 1.46%, SD = 0.52, p < .001) and 4-star (M = 1.30%, SD = 0.54, p < .001) reviews but, crucially, were not significantly different from 5-star reviews (M = 2.12%, SD = 0.71, p = .74). Similarly, 5-star reviews had significantly more absolutist words than 2-star (p < .001), 3-star (p < .001) and 4-star (p < .001) reviews. There was no significant difference in the prevalence of classifier absolutist words between 2-, 3- and 4-star reviews (p's > .46; Fig. 3). Pairwise comparisons for the random factor of website found that the prevalence of classifier absolute words differed significantly between all three websites (p's < .001). However, there was no interaction between website and star rating (Fig. 3).
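A simplified version of the dictionary step can be sketched in Python: it computes the percentage of tokens matching a word list in each review and averages these by star rating. This is not a LIWC replica (LIWC supports wildcard entries and treats punctuation separately from its word count), and the mixed-effects model itself is not reproduced. `ABSOLUTE_WORDS` holds only the nine absolute features named in Section 4.1; the full set of 11 is shown in Fig. 1. Treating each review, rather than each text file, as the unit of analysis is an assumption.

```python
import re
from collections import defaultdict
from statistics import mean

# Nine of the 11 absolute features named in Section 4.1 (full set in Fig. 1).
ABSOLUTE_WORDS = {"ever", "never", "anyone", "my", "you", "your", "can't", "doesn't", "!"}
TOKEN_RE = re.compile(r"[a-z]+(?:'[a-z]+)?|!")


def dictionary_prevalence(text, dictionary):
    """Percentage of tokens in text that belong to dictionary (exact matches only)."""
    tokens = TOKEN_RE.findall(text.lower())
    if not tokens:
        return 0.0
    hits = sum(token in dictionary for token in tokens)
    return 100.0 * hits / len(tokens)


def mean_prevalence_by_rating(reviews, dictionary):
    """Average dictionary prevalence per star rating; reviews are (stars, text) pairs."""
    by_rating = defaultdict(list)
    for stars, text in reviews:
        by_rating[stars].append(dictionary_prevalence(text, dictionary))
    return {stars: mean(values) for stars, values in sorted(by_rating.items())}


reviews = [(1, "Never again!"), (1, "Best ever"), (3, "It was fine but slow")]
print(mean_prevalence_by_rating(reviews, ABSOLUTE_WORDS))
```

The per-rating means produced this way correspond to the quantities plotted in Fig. 3, although the study's means were computed by LIWC over its own text files.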

Fig. 3.

Prevalence of absolute and moderate words, across different star ratings for IMDB, Amazon and TripAdvisor websites. Error bars represent 95% confidence intervals.

3.3. Unigram natural language markers — moderation

We combined the 20 remaining classifier moderate words into a single dictionary to analyse their distribution across the 5-point rating scale using LIWC. We ran a linear mixed-effects model, with star rating as the fixed factor and website as a random factor. We found a significant main effect of star rating on classifier moderate words, F(4, 327) = 36.47, p < .001. There was also a significant main effect of website, F(1, 327) = 33.07, p < .001, and no significant interaction between star rating and website, F(8, 327) = 1.18, p = .31. Pairwise comparisons for star ratings found that 1-star reviews (M = 4.19%, SD = 0.52) had significantly fewer moderate words than 2-star (M = 4.97%, SD = 0.58, p < .001), 3-star (M = 5.15%, SD = 0.59, p < .001) and 4-star (M = 5.19%, SD = 0.65, p < .001) reviews but, crucially, were not significantly different from 5-star reviews (M = 4.36%, SD = 0.56, p = .91). Similarly, 5-star reviews had significantly fewer moderate words than 2-star (p < .001), 3-star (p < .001) and 4-star (p < .001) reviews (Fig. 3). Pairwise comparisons for the random factor of website found that the prevalence of classifier moderate words differed significantly between all three websites (p's < .001). However, there was no interaction between website and star rating (Fig. 3).

3.4. Unigrams and classification of valence

Based on ROC curves, we identified the tokens (unigrams) that were most predictive of positive and negative reviews (Fig. 4; for an extended list see Table S2 in the Supplementary Materials). Kappa values for trained models with increasing numbers of linguistic features are shown in Fig. 5. Overall, classification accuracy for valence was lower than for absolute and moderate ratings, and none of the models reached a kappa of 0.75, the threshold for ‘excellent’ set by Fleiss (1981). This suggests that function words are better markers of the style of responses (absolute/moderate) than of their content (positive/negative).

Fig. 4.

The top 30 tokens (unigrams) which are most predictive of positive and negative reviews. The size of font reflects the order of importance as designated by the ROC curve values for each unigram. The tokens specific to positive reviews are in orange, while the tokens specific to negative reviews are in blue. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

Fig. 5.

Cohen's kappa values for classifiers with increasing numbers of features, as ranked by receiver operating characteristic curve analysis.

4. Discussion

4.1. Unigrams and machine learning

Our feature selection process identified 31 unigrams that best distinguish absolute from moderate natural language in review comments. Of these, 11 were more prevalent in absolute review comments and 20 were more prevalent in moderate review comments. The classifier's absolutist words include ‘ever’, ‘never’ and ‘anyone’, which mean ‘at any time’, ‘at no time’ and ‘any person’, respectively, and therefore all denote absolutes. Also included are the determiners ‘my’ and ‘your’, which specify the reference of a noun phrase, and the pronoun ‘you’. There are two negations, ‘can't’ and ‘doesn't’, which are used in categorical imperatives. Finally, the absolute features include exclamation marks, which are used as intensifiers.

Among the moderate words included in the classifier, ‘but’, ‘though’, ‘despite’, ‘other’ and ‘however’ are all used to introduce nuance or exception. The words ‘much’ and ‘more’ both refer to large amounts. The words ‘rather’, ‘somewhat’, ‘sometime’ and ‘some’ all specify a moderate extent or amount. The words ‘seem’, ‘maybe’ and ‘probable’ have a vague, noncommittal quality, and the word ‘overall’ combines separate components. Finally, it was surprising to find that the word ‘certain’ is specific to moderate reviews, as certainty is absolutist. Examining ‘certain’ in context reveals that it is used to specify subcomponents (e.g. “certain aspects”) rather than to convey a state of total confidence (e.g. being certain).

Throughout, we have used the term absolute rather than ‘extreme’ because we believe, and have previously demonstrated (Al-Mosaiwi & Johnstone, 2018), that there is a qualitative difference between words that convey absolutes and words that convey large extents (or extremes). This is also evident here: the words ‘much’ and ‘more’, which denote large amounts, are markers specific to moderation rather than absolutism.

Using these 31 predictors, our classifier's test accuracy is >90% with a kappa >0.80, which is considered excellent by prominent guidelines for classifier accuracy (e.g., Fleiss, 1981). Good classifier performance, as defined by Fleiss (1981), was achieved with any number of features from the top 3 to the top 35. Researchers therefore have some flexibility in choosing which linguistic features to use when measuring absolute/moderate natural language in text.

In this study, we restricted our feature selection to stop words/function words, unlike most other text analysis classifiers. We expect this to improve the generalizability of our classifier, as it does not depend on subject-specific content or on sentiment.

For both absolutist and moderate words, we found an effect of website (random factor) but no interaction between website and star rating. This means that although the percentage frequency of these words varied between contexts (i.e. films, tourist attractions and products), their relative distribution across the rating scale remained the same. This similar distribution across the rating scale on all three websites supports our aim of identifying generalizable linguistic markers for absolute and moderate text. Moreover, there was no significant difference in the percentage prevalence of absolute or moderate words between absolutely positive (5-star) and absolutely negative (1-star) reviews. Our predictors therefore appear to be independent of valence, a necessary quality for generalizable absolute/moderate natural language markers. Finally, the percentage prevalence of absolute words was significantly elevated only in absolute end-point reviews, with no significant difference between the moderate 2–4-star ratings. This was not the case for moderate words, which were less discriminating.

As outlined in the introduction, these linguistic markers of absolute and moderate responding have practical applications. Researchers could use them to estimate absolute and moderate language in qualitative natural language data, for various groups of interest and possibly in an observational study design. Such an analysis would be more informative than counting absolute responses on Likert scales, and considerably more ecologically valid. In this way, previous findings on absolute and moderate response styles, which have relied exclusively on Likert scales, could be supported or challenged through linguistic analysis. This is especially important because many of these findings are contentious and consequential.

4.2. Limitations and future directions

In this study, we used review websites because they conveniently provide both natural language and a Likert-type rating scale, which allowed us to establish convergent validity. However, more work is needed to confirm or amend the features identified here using a wider variety of writing topics and formats (e.g. narrative writing). We employed a simple naive Bayes classifier because it is easy to train and produced excellent results; more sophisticated algorithms may further improve classification accuracy, although such models can be harder to interpret and more prone to over-fitting. In addition, we made no distinction between extreme (but non-absolute) and moderate ratings in the classification problem; future work may seek to classify absolute vs. extreme natural language. Finally, just as there may be cultural differences in response styles on Likert scales, there may also be cultural differences in absolute and moderate language use. Although the use of absolutist words has previously been shown to reflect absolutist thinking (Al-Mosaiwi & Johnstone, 2018), whether this is affected by cultural differences is not clear.

Footnotes

¹ More information on the grammatical and structural role of particular classes of function words is provided in the Supplementary Material.

² All data are available at 10.6084/m9.figshare.6199235

Supplementary data to this article can be found online at https://doi.org/10.1016/j.paid.2018.06.004.

Appendix A. Supplementary data

Supplementary material 1

Supplementary material 2

References

- Al-Mosaiwi M., Johnstone T. In an absolute state: Elevated use of absolutist words is a marker specific to anxiety, depression, and suicidal ideation. Clinical Psychological Science. 2018. doi: 10.1177/2167702617747074.

- Baayen R.H., Davidson D.J., Bates D. Mixed-effects modelling with crossed random effects for subjects and items. Journal of Memory and Language. 2008;59(4):390–412.

- Bachman J.G., O'Malley P.M., Freedman-Doan P. Response styles revisited: Racial/ethnic and gender differences in extreme responding. Monitoring the Future Occasional Paper No. 72. Institute for Social Research; Ann Arbor, MI: 2010. http://www.monitoringthefuture.org/

- Benoit K., Nulty P.P. Quanteda: Quantitative analysis of textual data. R package version 0.9.9-60. 2016. Available at: http://quanteda.io

- Chen C., Lee S.Y., Stevenson H.W. Response style and cross-cultural comparisons of rating scales among East Asian and North American students. Psychological Science. 1995;6(3):170–175.

- Ching L.E., Dobson K.S. An investigation of extreme responding as a mediator of cognitive therapy for depression. Behaviour Research and Therapy. 2010;48(4):266–274. doi: 10.1016/j.brat.2009.12.007.

- Chun K.T., Campbell J.B., Yoo J.H. Extreme response style in cross-cultural research: A reminder. Journal of Cross-Cultural Psychology. 1974;5(4):465–480.

- Chung C., Pennebaker J.W. The psychological functions of function words. In: Fiedler K., editor. Social communication. Psychology Press; New York: 2007. pp. 343–359.

- Clarke I. Extreme response style in cross-cultural research: An empirical investigation. Journal of Social Behavior and Personality. 2000;15(1):137.

- R Development Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing; 2010. http://www.R-project.org

- Feldman R. Techniques and applications for sentiment analysis. Communications of the ACM. 2013;56(4):82–89.

- Fleiss J.L. Balanced incomplete block designs for inter-rater reliability studies. Applied Psychological Measurement. 1981;5(1):105–112.

- Forand N.R., DeRubeis R.J. Extreme response style and symptom return after depression treatment: The role of positive extreme responding. Journal of Consulting and Clinical Psychology. 2014;82(3):500. doi: 10.1037/a0035755.

- de Graaf L.E., Huibers M.J., Cuijpers P., Arntz A. Minor and major depression in the general population: Does dysfunctional thinking play a role? Comprehensive Psychiatry. 2010;51(3):266–274. doi: 10.1016/j.comppsych.2009.08.006.

- Hui C.H., Triandis H.C. Effects of culture and response format on extreme response style. Journal of Cross-Cultural Psychology. 1989;20(3):296–309.

- Kuhn M. Caret package. Journal of Statistical Software. 2008;28(5):1–26.

- Light C.S., Zax M., Gardiner D.H. Relationship of age, sex, and intelligence level to extreme response style. Journal of Personality and Social Psychology. 1965;2(6):907. doi: 10.1037/h0022746.

- Marin G., Gamba R.J., Marin B.V. Extreme response style and acquiescence among Hispanics: The role of acculturation and education. Journal of Cross-Cultural Psychology. 1992;23(4):498–509.

- Naemi B.D., Beal D.J., Payne S.C. Personality predictors of extreme response style. Journal of Personality. 2009;77(1):261–286. doi: 10.1111/j.1467-6494.2008.00545.x.

- Pennebaker J.W., Booth R.J., Boyd R.L., Francis M.E. Linguistic inquiry and word count: LIWC 2015 [Computer software]. Pennebaker Conglomerates; Austin, TX: 2015. Retrieved from www.LIWC.net

- Pennebaker J.W., Chung C.K., Frazee J., Lavergne G.M., Beaver D.I. When small words foretell academic success: The case of college admissions essays. PLoS One. 2014;9(12). doi: 10.1371/journal.pone.0115844.

- Pennebaker J.W., King L.A. Linguistic styles: Language use as an individual difference. Journal of Personality and Social Psychology. 1999;77(6):1296. doi: 10.1037//0022-3514.77.6.1296.

- Peterson T.J., Feldman G., Harley R., Fresco D.M., Graves L., Holmes L. Extreme response style in recurrent and chronically depressed patients: Change with antidepressant administration and stability during continuation treatment. Journal of Consulting and Clinical Psychology. 2007;75(1):145–153. doi: 10.1037/0022-006X.75.1.145.

- Scikit-learn. Choosing the right estimator – Scikit-learn 0.18.1 documentation. 2016. Retrieved 02 November 2016 from http://scikit-learn.org/stable/tutorial/machine_learning_map/index.html

- Tausczik Y.R., Pennebaker J.W. The psychological meaning of words: LIWC and computerized text analysis methods. Journal of Language and Social Psychology. 2010;29(1):24–54.

- Teasdale J.D., Scott J., Moore R.G., Hayhurst H., Pope M., Paykel E.S. How does cognitive therapy prevent relapse in residual depression? Evidence from a controlled trial. Journal of Consulting and Clinical Psychology. 2001;69(3):347. doi: 10.1037//0022-006x.69.3.347.
