Abstract

Extraction of particles from cryo-electron microscopy (cryo-EM) micrographs is a crucial step in processing single-particle datasets. Although algorithms have been developed for automatic particle picking, these algorithms generally rely on two-dimensional templates for particle identification, which may exhibit biases that can propagate artifacts through the reconstruction pipeline. Manual picking is viewed as a gold-standard solution for particle selection, but it is too time-consuming to perform on data sets of thousands of images. In recent years, crowdsourcing has proven effective at leveraging the open web to manually curate datasets. In particular, citizen science projects such as Galaxy Zoo have shown the power of appealing to users’ scientific interests to process enormous amounts of data. To this end, we explored the possible applications of crowdsourcing in cryo-EM particle picking, presenting a variety of novel experiments including the production of a fully annotated particle set from untrained citizen scientists. We show the possibilities and limitations of crowdsourcing particle selection tasks, and explore further options for crowdsourcing cryo-EM data processing.

Keywords: Structural biology, Cryo-EM, Crowdsourcing, Computation, Data processing, Single-particle analysis

1. Introduction

In the past several years cryo-electron microscopy (cryo-EM) has become a powerful tool for elucidating the structures of macro-molecular complexes to near-atomic resolution, and has been effectively used to solve structures of membrane-bound and non-rigid proteins that are difficult to crystallize. Handling low signal-to-noise ratio cryo-EM data necessitates processing large amounts of data, involving thousands of individual micrographs each containing hundreds of particles. A crucial, early step in cryo-EM processing is the selection of individual protein particles from EM micrographs to be used in generating a 3D reconstruction. In the past, particles were hand-picked by a researcher after data collection, but since cryo-EM datasets can now consist of thousands of micrographs and hundreds of thousands of particles, manual picking has become viewed as an unnecessarily banal and time-consuming task for cryo-EM researchers (Scheres, 2015).

As a result, many algorithms have been developed to automate particle picking and reduce the time required for this crucial step in EM processing. Popular methods either identify features common to particles, such as particle size with DoG Picker, or use supplied templates to identify similar-looking subsections of a micrograph (Voss et al., 2009). Automatic methods are limited, however, in their ability to distinguish noise and contaminants from legitimate particles, and will sometimes misplace the center of particles in cases where they are closely packed. Inaccuracies in the collection of particle data can disrupt processing; in the challenging reconstruction of the HIV-1 envelope glycoprotein complex by Liao et al., Henderson noted that a lack of validation of the particle set picked via a template method begat a set of particles containing significant white noise, which nonetheless sufficiently matched the templates provided (Liao et al., 2013; Henderson, 2013). Indeed, manual selection by a trained microscopist is still viewed as an ideal strategy in many cases, especially when templates are not available or the protein particles are ill-defined in the micrographs. Implementing manual selection necessitates an immense amount of time and effort for this single processing step; as an example, Fan et al. manually boxed out 156,805 particles from 3743 micrographs when determining the structure of the InsP3R ion channel. The time needed to produce a manually-picked set precludes its adoption as a regular procedure for particle picking, and a method that reduced the temporal investment could prove valuable for researchers. In addition, scientists seeking particular idealized structures can consciously or subconsciously impart their own biases into manual picking, preferring certain angular views of the particle or omitting subsets of particles that do not exhibit anticipated features (Cheng et al., 2015).

This work examines an increasingly popular method of data processing, crowdsourcing. A term coined in 2006, crowdsourcing opens a task normally assigned to a specific worker to a wider, more generalized userbase (Good and Su, 2013). In recent years, crowdsourcing initiatives have come to rely on the ability of the internet to quickly disseminate data and recruit users to perform the necessary processing.

There are many approaches to crowdsourcing, including scientific games (e.g., Foldit, Eterna) and paid microtask services (e.g., Amazon Mechanical Turk, Crowdflower). Particularly intriguing is the emergence of ‘citizen science’ projects, which rely on community engagement and scientific intrigue to attract users to an otherwise menial task. Citizen science has proven extremely successful, with the project ‘Galaxy Zoo’ collecting classifications of more than 1 million images from more than 100,000 users over nine months (Lintott et al., 2008). In this paper, we present and analyze the results from a citizen science project, ‘Microscopy Masters’, which focused on crowdsourcing particle picking from single-particle cryo-EM micrographs. We examine the efficacy of crowdsourcing particle picking to lightly trained workers when compared to trained electron microscopists, and show that particle sets derived through crowdsourcing can yield robust and reliable 2D class averages and 3D reconstructions. The method presented here is not only a viable time-saving option for datasets that confound automatic pickers, but also shows promise for future applications of crowdsourcing to cryo-EM data processing.

2. Results

2.1. Production of gold standard

A ‘gold standard’ or ‘ground truth’ for evaluating annotated subjects is crucial for beginning any classification study. In the case of algorithmic particle picking, evaluation is typically performed relative to a set of manually picked micrographs. Although manually picked datasets are available from previous studies examining particle picking, they generally contain a small number of images and are only annotated by a single individual (Scheres, 2015). In order to create a richer gold standard for evaluating our crowdsourcing protocol, micrographs were chosen from a single-particle cryo-EM dataset of the 26S proteasome lid complex (Dambacher et al., 2016). Out of the 3,446 micrographs used in the published refinement, 190 were marked by at least two randomly-assigned cryo-EM experts, with a total of nine contributing participants.

In addition, intra-expert agreement was measured by requiring each expert to mark five randomly chosen images twice. The complete union of all marks by all experts totaled 13,028 particles and was used as the ground truth for all subsequent accuracy measurements in this paper.

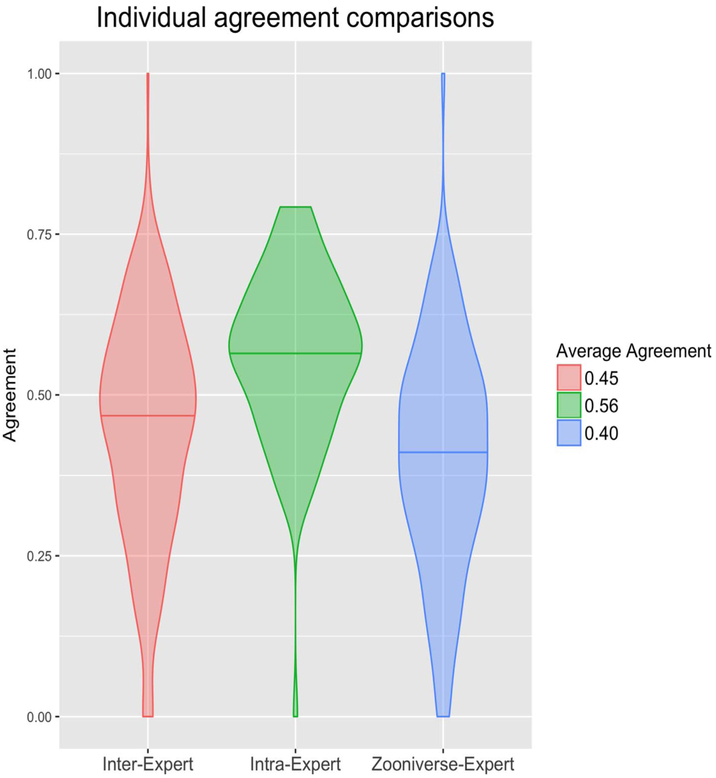

Agreement between two annotations was calculated using the Jaccard index, defined as the ratio of the size of the intersection of the particles picked in two annotations to the size of their union. Intra-expert agreement was found to be surprisingly low and only slightly higher than inter-expert agreement: intra-expert agreement averaged 0.56 across all experts, and inter-expert agreement averaged 0.45. This indicates that less than three quarters of particles picked by a single individual are picked again on reannotation by the same annotator on the same image. Among those annotators who completed all assigned micrographs, agreement was consistently higher among intra-expert comparisons (Table 1).
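The three-quarters figure can be checked with a short worked calculation. As a simplifying assumption (ours, not stated in the text), suppose both annotations of an image contain the same number of picks, $n$, of which $k$ coincide. The Jaccard index $J$ then relates to the repicked fraction $k/n$ as follows:

$$J = \frac{k}{2n - k} \quad\Longrightarrow\quad \frac{k}{n} = \frac{2J}{1 + J}, \qquad J = 0.56 \;\Longrightarrow\; \frac{k}{n} = \frac{1.12}{1.56} \approx 0.72$$

Under this assumption, a mean intra-expert agreement of 0.56 corresponds to roughly 72% of picks being reproduced on reannotation.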

Table 1.

Intra-expert and inter-expert agreement calculated using Jaccard index for experts who created the gold dataset. Of the nine experts who participated, one did not complete all assigned micrographs and so was not included in this table.

| Expert | Inter-expert agreement | Intra-expert agreement |
|---|---|---|
| Expert 1 | 0.45 | 0.64 |
| Expert 2 | 0.42 | 0.46 |
| Expert 3 | 0.54 | 0.65 |
| Expert 4 | 0.40 | 0.51 |
| Expert 5 | 0.44 | 0.64 |
| Expert 6 | 0.44 | 0.58 |
| Expert 7 | 0.45 | 0.61 |
| Expert 8 | 0.48 | 0.51 |

2.2. Initial testing

A chief concern for crowdsourcing, especially citizen science, is building a reliable userbase, either through accessing pre-existing groups of users or attracting users through social media and community engagement. Since we desired to annotate a large, fully manually-picked particle set, we hosted our experiment on an established crowdsourcing platform, Panoptes, a Zooniverse-run initiative for citizen science projects.

Initial testing for the crowdsourcing system was performed by paid workers recruited through Amazon Mechanical Turk (AMT). Workers were recruited, trained, and paid through AMT, while Panoptes hosted the particle selection tasks and stored the results (Fig. 1).

Fig. 1.

Screencap of picking interface hosted on Zooniverse.

Testing produced 16,562 particles chosen by 42 unique workers using the same set of 190 images as in the gold standard. Based on feedback from AMT workers, additional instructions, shown in Fig. S2, were added to the picking interface.

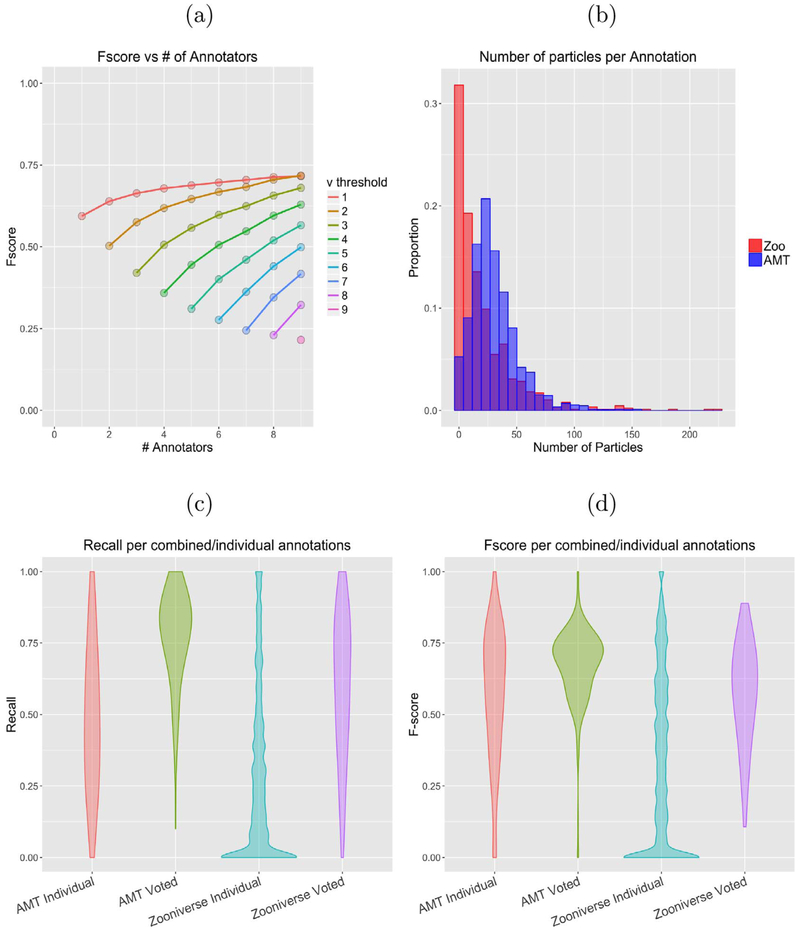

Importantly, this initial testing was used to determine the optimal number of people to assign to each image, as well as to establish a voting mechanism. To this end, at least 10 workers annotated each micrograph, and accuracy statistics were derived for randomized subsets of those workers using various voting thresholds (Fig. 2a). Because performance at the union threshold showed diminishing returns beyond five annotators, this number was chosen as optimal; in all subsequent experiments, each image was shown to five annotators and the “crowd” output was defined as the union of users’ annotations.
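The effect of the voting threshold can be illustrated with a minimal sketch. It assumes that picks from different annotators have already been matched into clusters, each carrying the number of annotators who selected it; the function name `apply_threshold` and the toy data are hypothetical and are not taken from the project's code.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def apply_threshold(clusters: List[Tuple[Point, int]], threshold: int) -> List[Point]:
    """Keep cluster centres supported by at least `threshold` annotators."""
    return [centre for centre, votes in clusters if votes >= threshold]


# Hypothetical matched clusters for one micrograph seen by five annotators:
# (centre coordinates in pixels, number of annotators who picked it).
clusters = [((100.0, 120.0), 5), ((340.0, 80.0), 3), ((510.0, 400.0), 1)]
n_annotators = 5

# Threshold 1 is the union of all picks; threshold 5 is full consensus.
by_threshold = {t: apply_threshold(clusters, t) for t in range(1, n_annotators + 1)}
```

With a threshold of one, every pick survives, which is the union setting adopted for the crowd output; raising the threshold trades recall for precision.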

Fig. 2.

(a) Number of annotators vs. performance, grouped by voting threshold. The top line represents union, while the bottom line represents consensus. (b) Histogram showing the distribution of the number of particles picked per person per image for both AMT and Zooniverse. Note the peak at zero for Zooniverse, comprising ‘low-effort’ annotations. Recall (c) and F-score (d) of individual classifications from Zooniverse and AMT workers compared to the gold standard. High numbers of low-quality, low-effort annotations from Zooniverse workers result in peaks at 0 for recall and F-score.

2.3. Zooniverse

After testing in Amazon Mechanical Turk, the project, dubbed Microscopy Masters, was launched on Panoptes in March 2016. A total dataset of 209,696 particle picks was produced over a year from 3,446 micrographs, with 2,108 unique volunteers. The parameters established in our initial testing were utilized, with each image being classified by five different users and the ‘voting threshold’ set to one, meaning the total union of all classifications performed on an image was used to generate the final data set of picks. For individual users, we observed a marked decrease in F-score and recall in the Zooniverse set, as shown in Fig. 2, which we attributed to differing incentives between paid workers on AMT and unpaid volunteers on Zooniverse. In particular, the number of particles selected in each image by Zooniverse volunteers is highly variable; a peak at zero in the distribution of particles picked per-user per-image resulted in a corresponding peak at zero for recall and F-score, as well as a peak at one for precision (Fig. 2b). The association of low recall with low-cardinality annotations implied a body of “low-effort” annotations, where a user did not fully complete the image before submission. Aggregation of the five user annotations per image mitigated the low individual accuracy, yielding an average aggregate F-score comparable to that of the AMT-annotated data, as shown in Fig. 2d.

Average agreement between the voted crowd annotations and individual expert annotations was found to be slightly less than inter-expert agreement, with the mean for inter-expert agreement at 0.45 and mean between the crowd and experts at 0.40 (Fig. 3).

Fig. 3.

Violin plots of agreement, measured as the Jaccard index, for inter-expert, intra-expert, and crowd-expert comparisons. Standard deviations (σ) and number of comparisons (n) for each distribution are as follows: Inter-Expert: σ = 0.18, n = 227; Intra-Expert: σ = 0.13, n = 90; Zooniverse-Expert: σ = 0.18, n = 382.

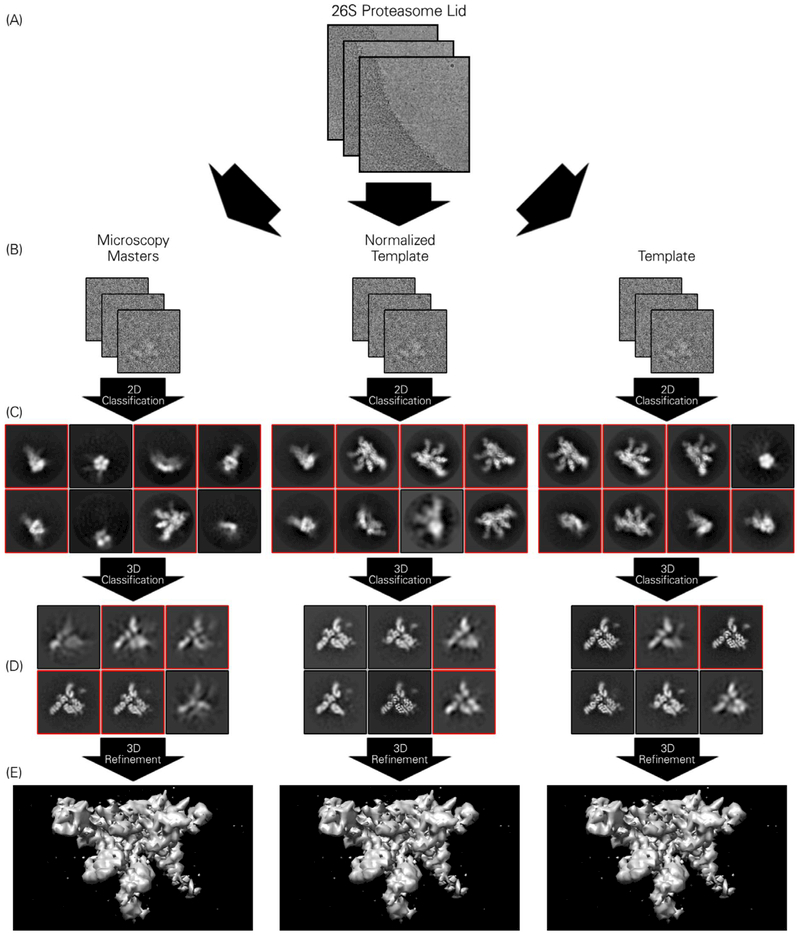

2.4. Reconstruction

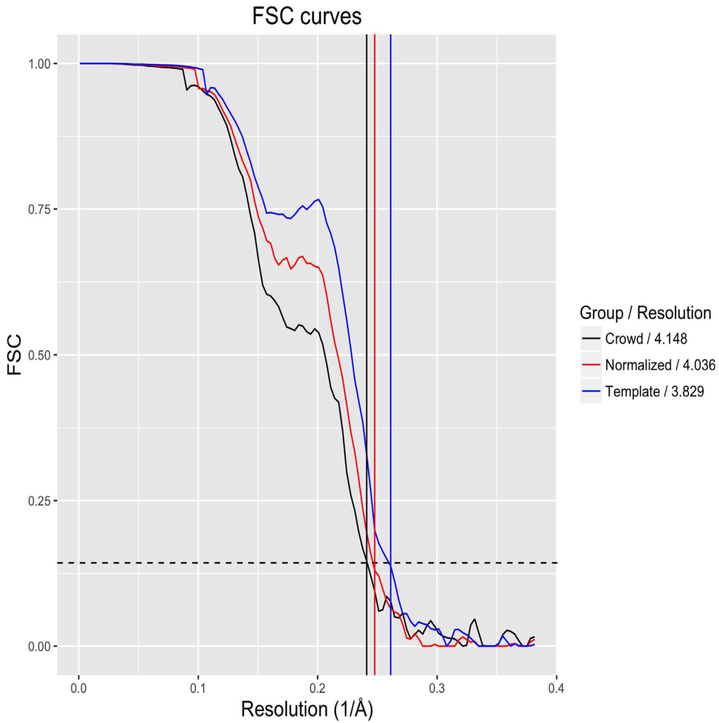

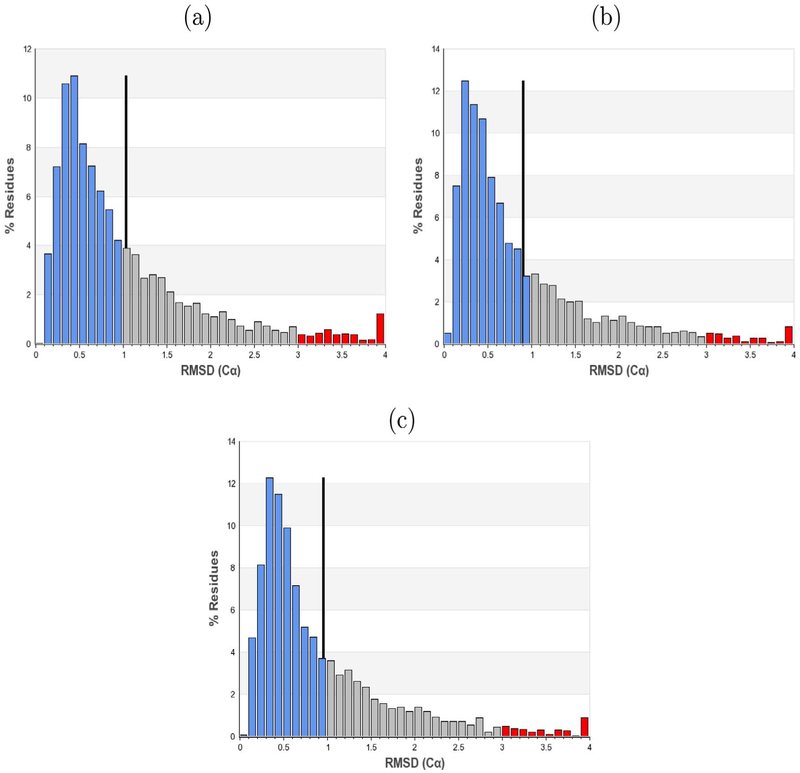

Refinement of the proteasome lid complex structure was performed using the crowdsourced dataset collected through Zooniverse, as well as the dataset used in Dambacher et al., which was picked using a template-based method (Dambacher et al., 2016). Since the resolution of a refined single-particle reconstruction generally correlates with the number of particles in the dataset, a reconstruction was also generated using a random subset of the template-picked particles with the same cardinality as the crowdsourced data, called the ‘normalized template’ set (Cheng, 2015). Particle stacks were extracted from the micrographs, and 2D reference-free classification was performed. “Junk” classes containing false particle picks or damaged/aggregated particles were manually selected and removed, following the same selection criteria used previously (Dambacher et al., 2016). A subset of homogeneous “high resolution” particles were identified through 3D classifications which were refined to yield the final reconstruction (Fig. 4). The number of particles remaining after each filtering step, as well as the final resolution estimates, indicate the template sets produced reconstructions with more internal structural consistency (Table 2). In each reconstruction, Fourier shell correlation (FSC) curves calculated from two independently determined half-maps indicated no irregularities in any of the particle sets (Fig. 5). Resolution, measured at the standard FSC value of 0.143, was found to be higher for the refinement produced from the automatically picked datasets, with the Zooniverse-produced refinement’s resolution at 4.1 Å and the template-picked refinement’s resolution at 3.8 Å. The refinement produced from the normalized template set was 4.0 Å, predictably lower than the template refinement and slightly higher than the crowdsourced refinement. As a further measure of resolution consistency, ten atomic models were independently built into the reconstructions (Herzik et al.). 
The root-mean-square deviation (RMSD) of each residue’s Cα position across the ten models was used as a measure of the local quality of the refinements, with a higher RMSD indicating a less convergent refinement (Fig. 6). While the distribution of RMSD values from the crowd set was significantly higher than those of the template-picked structures (p < 0.01 as assessed by a two-sample Kolmogorov-Smirnov test), the overall magnitude of the difference was relatively small. The normalized and full template reconstructions had 67.3% and 69.6% of residues with high convergence, respectively, while the crowd-produced reconstruction had 63.7% of residues with high convergence.
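A per-residue RMSD of this kind can be sketched as follows. This is an illustration only: it assumes the RMSD is taken about the mean position of each residue across the ensemble, which may differ from the exact procedure of Herzik et al., and the names `per_residue_rmsd` and `fraction_below` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Coord = Tuple[float, float, float]


def per_residue_rmsd(models: Sequence[Sequence[Coord]]) -> List[float]:
    """RMSD of each residue's position about its ensemble mean.

    `models[m][r]` is the (x, y, z) position of residue r in model m.
    Assumption: deviation is measured from the mean position over models.
    """
    n_models = len(models)
    n_res = len(models[0])
    if any(len(m) != n_res for m in models):
        raise ValueError("all models must contain the same residues")
    rmsds = []
    for r in range(n_res):
        mean = [sum(m[r][axis] for m in models) / n_models for axis in range(3)]
        sq = sum(
            sum((m[r][axis] - mean[axis]) ** 2 for axis in range(3)) for m in models
        )
        rmsds.append(math.sqrt(sq / n_models))
    return rmsds


def fraction_below(rmsds: Sequence[float], cutoff: float) -> float:
    """Fraction of residues whose RMSD is strictly below `cutoff`."""
    return sum(1 for v in rmsds if v < cutoff) / len(rmsds)


# Toy ensemble: two models, two residues (coordinates are made up).
toy = [[(0.0, 0.0, 0.0), (5.0, 5.0, 5.0)], [(2.0, 0.0, 0.0), (5.0, 5.0, 5.0)]]
toy_rmsd = per_residue_rmsd(toy)
```

The resulting list can be histogrammed or summarized with `fraction_below` to compare how tightly the models agree in each reconstruction.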

Fig. 4.

Reconstruction using crowdsourced, template-picked, and ‘normalized’ template-picked particle sets. (A) Micrographs from 26S are run through template and crowdsourcing particle picking procedures. (B) Particles are then extracted and run through 2D template-free class averaging. (C) Top 8 2D classes from each particle set. Classes chosen to continue through processing marked in red. (D) All 3D classes from 3D classification of particles chosen in step (C), with similar marking. (E) Final refinements for each particle set.

Table 2.

Number of particles left after each filtering step during single-particle reconstruction.

| Filtering step | Template | Normalized template | Crowd |
|---|---|---|---|
| Initial | 249,657 | 209,696 | 209,696 |
| 2D filtering | 184,200 | 138,928 | 120,058 |
| 3D filtering | 119,603 | 62,676 | 44,516 |
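The particle counts in Table 2 are easier to compare as retention fractions. The short sketch below computes them from the tabulated values; the function name `retention` is ours.

```python
# Particle counts from Table 2: (initial, after 2D filtering, after 3D filtering).
counts = {
    "Template": (249_657, 184_200, 119_603),
    "Normalized": (209_696, 138_928, 62_676),
    "Crowd": (209_696, 120_058, 44_516),
}


def retention(initial: int, after_2d: int, after_3d: int) -> tuple:
    """Fractions of the initial particle set surviving 2D and 3D filtering."""
    return after_2d / initial, after_3d / initial


for name, row in counts.items():
    frac_2d, frac_3d = retention(*row)
    print(f"{name:<10} 2D: {frac_2d:6.1%}  3D: {frac_3d:6.1%}")
```

Roughly 48% of the full template set, 30% of the normalized template set, and 21% of the crowd set survive both filtering steps.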

Fig. 5.

FSC curves for all reconstructions. The horizontal dashed line at y = 0.143 marks the value used for resolution estimation. The colors of the vertical lines correspond to the FSC curves, and their x-intercepts give each curve’s resolution.
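Reading a resolution off an FSC curve amounts to finding where the curve first drops below the threshold. The following is a minimal sketch that uses linear interpolation between neighboring shells; the function name and the toy curve are hypothetical, and refinement packages may use a different interpolation scheme.

```python
from typing import Optional, Sequence


def resolution_at_threshold(
    spatial_freq: Sequence[float], fsc: Sequence[float], threshold: float = 0.143
) -> Optional[float]:
    """Resolution (1 / spatial frequency) at the first FSC crossing of `threshold`.

    `spatial_freq` is in 1/Angstrom and increasing. Linear interpolation is
    used between the two shells that bracket the crossing. Returns None if
    the curve never drops below the threshold.
    """
    for i in range(1, len(fsc)):
        if fsc[i - 1] >= threshold > fsc[i]:
            f0, f1 = spatial_freq[i - 1], spatial_freq[i]
            c0, c1 = fsc[i - 1], fsc[i]
            freq = f0 + (threshold - c0) * (f1 - f0) / (c1 - c0)
            return 1.0 / freq
    return None


# Toy curve (made-up values) that crosses 0.143 midway between 0.2 and 0.3 1/A.
toy_resolution = resolution_at_threshold([0.1, 0.2, 0.3], [1.0, 0.243, 0.043])
```

For the toy curve the crossing lies at a spatial frequency of 0.25 Å⁻¹, corresponding to a resolution of 4.0 Å.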

Fig. 6.

RMSD histograms of Cα positions in the tenfold ensemble analysis. Blue, gray and red bars denote residues of low (<1), medium (>1, <3), and high (>3) convergence, respectively. The black line marks the mean of the distribution. (a) Ensemble based on the refinement from Zooniverse volunteers. (b) Ensemble from automated template-based method data. (c) Ensemble from normalized automated template-based method data.

3. Discussion

Our study has demonstrated the utility of crowdsourcing particle picking for single-particle cryo-EM to users with little to no experience in cryo-EM. Volunteer particle pickers created a sizeable, usable dataset through a popular citizen science site that produced a 3D reconstruction of comparable quality to the reconstruction generated from template-picked particles. The particles chosen by citizen scientists also compared favorably to those produced in paid scenarios, suggesting the use of a citizen science framework as a low-cost, low-effort alternative to producing high-quality datasets without the potential biases associated with template picking or manual selection by a single person.

Many automatic picking algorithms still rely on some amount of manual picking, since popular template-driven algorithms generally use 2D class averages generated from manually-picked particles as templates. The demonstrated lack of consistency of scientists trained in single-particle cryo-EM when manually picking calls into question the acceptance of manually-picked data as the ground truth when assessing particle-picking protocols. More generally, the noisy nature of micrographs can make it difficult for the human eye to reliably distinguish the multitude of patterns needed to create a robust single-particle dataset, particularly for smaller-sized complexes.

Key to the acceptance of manual selection as an optimal particle-picking method is the superior quality of resultant reconstructions. In our trials, reconstructions produced from the full and normalized template sets, as well as the manually picked set, showed no marked differences in resolution. Far more particles were filtered from the manually picked set through classification, likely a result of the template consistently picking particles with similar features, which results in better clustering of 2D classes. However, since manual picking does not rely on templates, 2D views of protein particles not present in the templates could be recovered from micrographs.

Manual picking through crowdsourcing approaches can produce a dataset of comparable quality to both manual picking by trained experts and computational particle picking methods, but the question remains as to whether manually picked data produces significantly better reconstructions.

Volunteer particle pickers produced a large body of “low-effort” annotations when they were asked to work for no pay. The deleterious effects of these annotations are successfully mitigated by using the union of particles from five separate annotations for each image. Since the low-accuracy annotations generally are those with few or no marks, the increased accuracy from combining all annotations can be inferred to come from users who contribute particles that generally agree with experts’ picks. However, relying upon a fraction of annotations is not ideal, and future attempts to crowdsource particle picking should focus on creating incentive structures that encourage a higher proportion of high-quality annotations. In fact, the lower resolution of the crowdsourced reconstruction is likely largely due to the smaller number of particles in the crowdsourced dataset. If more annotations per image were properly completed, the number of particles, and possibly the resolution, would increase.

A further limitation to crowdsourcing is the time needed to produce a manually picked dataset, with our project running over the course of a year before completion. The challenge to increasing user engagement and reducing the time needed to annotate a cryo-EM dataset is that particle picking competes with other citizen science projects such as wildlife photography, historical text annotation, and telescope imagery. Engaging users through gamification, more frequent updates to the user community, and providing users with information and statistics on their particle selections, such as feedback on gold standard images and creating 2D class averages as they pick, are possibilities that were considered when building this project. Implementing these would have required a platform and userbase separate from Zooniverse due to the current limitations of the platform, and so they were not pursued over the course of this experiment. However, the Zooniverse team is consistently implementing new features, and future iterations of the site could allow for more direct contact with users. Producing a fully crowdsourced particle set might be most effective for proteins where the particles are particularly difficult to identify with template pickers or other automatic methods.

As mentioned previously, automated template particle pickers generally require some initial manual selection of particles from micrographs in order to ‘seed’ the algorithm with 2D templates of the desired protein particle. In order to explore the potential for crowd-sourcing in accomplishing the preliminary manual task of generating such “seed” datasets of particle picks, three datasets were presented to untrained workers with the intent of creating templates suitable for automated particle picking programs. A small number of micrographs from datasets of the HIV-1 envelope trimer, and TRPV2 ion channel were presented to Zooniverse and AMT users (Lee et al., 2016; Zubcevic and Herzik, 2016).

It took two days for Microscopy Masters users to complete the desired one hundred HIV-1 trimer annotations, and eight days for the desired one hundred TRPV2 classifications, the discrepancy likely caused by stagnation in the userbase of Microscopy Masters. On AMT, users completed the TRPV2 ion channel tasks in under an hour, while the HIV trimer tasks, which offered much less pay, took around two weeks. The same classification aggregation criteria used in the full reconstruction were applied to the sprint datasets, yielding sets of 1863 and 3298 particles for the HIV-1 trimer and TRPV2 datasets, respectively. Reference-free 2D classification of the manually selected datasets yielded averages that were suitable for use as templates (Fig. S4). Assuming an engaged group of users can be accessed, either through a paid system or by cultivating a dedicated community, the relatively short turnaround time of creating templates via this method demonstrates its usefulness for cryo-EM researchers.

Another possible application for crowdsourcing can be found in cellular tomography, which requires manual curation in order to identify and link sub-cellular structures in multiple frames. Even newer machine-learning algorithms for automated segmentation require that a user initially identify features manually within tomograms (Chen et al.).

The results outlined in this manuscript showcase crowdsourcing as a useful new option for microscopists whose data resist selection by automated methods. Although the untrained workers demonstrated an ability to produce particle sets of acceptable quality, there is work to be done in creating viable incentive structures and encouraging user engagement. Despite the difficulties in producing a fully crowdsourced dataset, the body of work presented here is an encouraging first step in the novel application of crowdsourcing to cryo-EM data processing.

4. Methods and materials

4.1. Equations and definitions

In this subsection, the accuracy measures used in this manuscript are formally defined. Precision measures the fraction of picked particles that are correct, and recall measures the fraction of gold-standard particles that are recovered (the true positive rate). Precision (P) is defined as one minus the false discovery rate, more formally:

$$P = \frac{TP}{TP + FP} \tag{1}$$

where FP is the number of false positives and TP is the number of true positives. Recall (R) is the measured power of the classification, given as:

$$R = \frac{TP}{TP + FN} \tag{2}$$

where FN is the number of false negatives. F-score (F) takes both precision and recall into account for a general measure of the accuracy of a classification versus a gold standard; it is defined as:

$$F = \frac{2PR}{P + R} \tag{3}$$

The agreement, or Jaccard index, is used as a measure of consensus between two groups. It is defined as the ratio of the size of the intersection to the size of the union of two separate classifications. Given two sets of points $S_1$ and $S_2$, this is shown as

$$J(S_1, S_2) = \frac{|S_1 \cap S_2|}{|S_1 \cup S_2|} \tag{4}$$

In the above equation we also define $|\cdot|$, the cardinality of a set. Set cardinality is simply the number of members in a given set.
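The measures defined above can be sketched in a few lines of Python. The sketch assumes picks have already been matched to gold-standard particles, so that each annotation can be represented as a set of particle identifiers; the function names and toy identifiers are hypothetical. The handling of empty annotations (precision of one, Jaccard index of one for two empty sets) is a convention chosen here, the former being consistent with the peak at one for precision reported in Section 2.3.

```python
from typing import Hashable, Set


def precision(tp: int, fp: int) -> float:
    """TP / (TP + FP); an annotation with no picks is assigned precision 1."""
    return tp / (tp + fp) if tp + fp else 1.0


def recall(tp: int, fn: int) -> float:
    """TP / (TP + FN); defined as 0 when the gold standard is empty."""
    return tp / (tp + fn) if tp + fn else 0.0


def f_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


def jaccard(s1: Set[Hashable], s2: Set[Hashable]) -> float:
    """|S1 & S2| / |S1 | S2|; two empty sets are treated as fully agreeing."""
    union = s1 | s2
    return len(s1 & s2) / len(union) if union else 1.0


# Toy example with made-up particle identifiers.
gold = {1, 2, 3, 4}
picked = {3, 4, 5}
tp = len(picked & gold)   # picks that match a gold-standard particle
fp = len(picked - gold)   # picks with no gold-standard counterpart
fn = len(gold - picked)   # gold-standard particles that were missed
p, r = precision(tp, fp), recall(tp, fn)
f = f_score(p, r)
j = jaccard(gold, picked)
```

In the toy example, two of three picks are correct and two of four gold-standard particles are found, giving P = 2/3, R = 1/2, F = 4/7, and a Jaccard index of 2/5.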

4.2. Gold standard production

The gold standard was generated in a single, pizza-fueled afternoon with resident electron microscopy researchers at The Scripps Research Institute. Each researcher was randomly assigned forty-five images out of a 190-micrograph subset from the 26S proteasome lid complex dataset of Dambacher et al. (2016). Additionally, each expert marked five images twice in order to study the consistency of experts’ particle picking, making a total of fifty annotations for each expert. The union of the picks from these repeated images was used as the gold standard of a particular expert on the respective image, and the union of all experts’ picks was used as the gold standard for our entire study. The platform used by the experts was identical to that used by the AMT workers, although some features implemented in the Microscopy Masters project, such as the pop-up tutorial and the additional screen for marking unusable images, were absent for the experts.

4.3. Project hosting on Zooniverse

Initiation of our crowdsourcing project coincided with the launch of the Panoptes system on a popular crowdsourcing consortium called Zooniverse, creators of the successful Galaxy Zoo. Panoptes offered a customizable interface for allowing the Zooniverse userbase to actively work on projects outside of the usual Zooniverse scope. Since the Zooniverse userbase numbers in the millions, its use offered access to a much larger group of users than could have been achieved on an independent platform.

4.4. Initial user testing

In order to create a usable, easily understandable interface, the Panoptes workflow was initially tested on Amazon Mechanical Turk (AMT). Workers recruited via AMT were sent to single images on Panoptes and given a code unique to each image to verify that they completed the task. Before being allowed to work, workers were required to complete a short tutorial and multiple-choice quiz, with a minimum passing score of 5 out of 7 questions correct. Initially, instructions beyond the initial quiz and explanation were minimal, but this led to complaints from the userbase that it was too difficult to complete the task without any easily accessible instructions. Workers were paid variable amounts, from 10 cents to 25 cents per image, and generally averaged 20 s per image. Total cost for all rounds of user testing is included in Table S1. Predictably, lower payments resulted in a lower rate of participation; 10 cent payments took one week to complete at five annotations per image over 200 images, while 25 cent payments with the same amount of required work took 3 days. The tutorial and help text were tweaked using feedback from the AMT workers before a full launch on Panoptes.

4.5. Microscopy Masters

Initial testing was followed by a beta release of Microscopy Masters to select members of the Zooniverse community. Following feedback from the beta test, an additional screen allowing users to mark images as poor quality was placed before the picking interface. Some features were added to the interface during the middle of production; two versions were implemented over the course of the project. When compared to gold standard, the differing versions showed no marked dissimilarity in terms of F-score.

4.6. Voting protocol

Although the ‘voting threshold’ for points was set to one, meaning that a point only needed to be selected once for it to be included in the final set, an algorithm needed to be designed to combine picks from separate annotators. Two points in two separate annotations of the same image were combined into a vote if:

- They were within 20 pixels of one another

- They were mutual nearest neighbors, i.e., each point was the other’s nearest neighbor in the other annotation

If two points were combined, their averaged coordinates were used as a new particle.
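The matching rule above can be sketched for a pair of annotations as follows. This is a minimal illustration rather than the project's actual code: the function names are hypothetical, "within 20 pixels" is interpreted as a Euclidean distance of at most 20, and unmatched picks are kept because the voting threshold is one. How the pairwise rule is extended to five annotations is not specified in the text and is not addressed here.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def nearest_index(p: Point, candidates: Sequence[Point]) -> Optional[int]:
    """Index of the candidate closest to p, or None if there are no candidates."""
    if not candidates:
        return None
    return min(range(len(candidates)), key=lambda i: math.dist(p, candidates[i]))


def merge_annotations(
    a: Sequence[Point], b: Sequence[Point], max_dist: float = 20.0
) -> List[Point]:
    """Union of two annotations, averaging mutual nearest neighbours within max_dist."""
    merged: List[Point] = []
    matched_b = set()
    for i, p in enumerate(a):
        j = nearest_index(p, b)
        if (
            j is not None
            and nearest_index(b[j], a) == i      # mutual nearest neighbours
            and math.dist(p, b[j]) <= max_dist   # close enough to be one particle
        ):
            q = b[j]
            merged.append(((p[0] + q[0]) / 2, (p[1] + q[1]) / 2))
            matched_b.add(j)
        else:
            merged.append(p)  # voting threshold of one: unmatched picks are kept
    merged.extend(q for j, q in enumerate(b) if j not in matched_b)
    return merged


# Toy annotations (pixel coordinates are made up).
ann_a = [(10.0, 10.0), (100.0, 100.0)]
ann_b = [(14.0, 13.0), (300.0, 300.0)]
crowd = merge_annotations(ann_a, ann_b)
```

In the toy example the first pick of each annotation is 5 pixels from the other and the two are mutual nearest neighbors, so they are averaged into a single particle; the remaining picks have no partner and are carried over unchanged.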

4.7. 26S Proteasome lid complex dataset

A single-particle cryo-EM dataset for the ovine 26S proteasome lid complex was chosen as the primary test case for crowdsourced particle picking. The globular nature of the lid complex can confound often-used template pickers, making it an ideal choice to test the limitations of automatic picking procedures. Dambacher et al. imaged the dataset on a Titan Krios cryo-electron microscope; technical details are given in their text (Dambacher et al., 2016).

4.8. Reconstruction pipeline

Cryo-EM processing was performed in RELION v1.4 (Scheres, 2012). Processing followed the methods previously described (Dambacher et al., 2016) in order to produce comparable reconstructions. CTF parameters were estimated using CTFFIND3 (Mindell and Grigorieff, 2003). Particles were extracted from their respective particle coordinate files with a box size of 256 pixels. Choosing of class averages was performed through random selection by Gabriel Lander; classes from separate reconstructions were anonymously mixed and treated as a single dataset. Particles from chosen classes were passed on to the subsequent step. Final refinement was performed with the same initial model used in Dambacher et al. using default parameters in RELION.

4.9. Initial template construction

Images for the initial template construction were initially processed in a manner similar to that of the large 26S proteasome lid project. Five classifications were requested for the HIV trimer set on both platforms as an initial test; this was increased to fifteen classifications for TRPV2 on Zooniverse in order to attract more users for annotation. Costs for the AMT experiments are summarized in Table S1. In an attempt to make the task more visually engaging, micrographs were offered in various contrasting color schemes as well as in the traditional black and white. No marked decrease in quality was associated with the color changes. Upon release of the new datasets, Microscopy Masters users were notified of the additional datasets via an emailed newsletter.

4.10. Acknowledgements

We thank the members of the Hazen cryo-EM facility for their help in generating the gold standard dataset. We also thank J.C. Ducom at the High Performance Computing group for computational support. G.C.L. is supported as a Searle Scholar, a Pew Scholar, and by the National Institutes of Health (NIH) DP2EB020402. This work was also supported by the National Institutes of General Medical Sciences (U54GM114833 to A.I.S.). This publication uses data generated via the Zooniverse.org platform, development of which is funded by generous support, including a Global Impact Award from Google, and by a grant from the Alfred P. Sloan Foundation.

Footnotes

Appendix A. Supplementary data

Supplementary data associated with this article can be found, in the online version, at http://dx.doi.org/10.1016/j.jsb.2018.02.006.

Contributor Information

Jacob Bruggemann, Email: jbrugg@scripps.edu.

Gabriel C. Lander, Email: glander@scripps.edu.

Andrew I. Su, Email: asu@scripps.edu.

References

- Chen M, Dai W, Sun Y, Jonasch D, He CY, Schmid MF, Chiu W, Ludtke SJ. Convolutional neural networks for automated annotation of cellular cryo-electron tomograms. arXiv preprint arXiv:1701.05567.

- Cheng Y, 2015. Single-particle cryo-EM at crystallographic resolution. Cell 161 (3), 450–457. 10.1016/j.cell.2015.03.049. http://www.sciencedirect.com/science/article/pii/S0092867415003694.

- Cheng Y, Grigorieff N, Penczek PA, Walz T, 2015. A primer to single-particle cryo-electron microscopy. Cell 161 (3), 438–449.

- Dambacher CM, Worden EJ, Herzik MA Jr., Martin A, Lander GC, 2016. Atomic structure of the 26S proteasome lid reveals the mechanism of deubiquitinase inhibition. eLife 5, e13027.

- Good BM, Su AI, 2013. Crowdsourcing for bioinformatics. Bioinformatics btt333.

- Henderson R, 2013. Avoiding the pitfalls of single particle cryo-electron microscopy: Einstein from noise. Proc. Natl. Acad. Sci. 110 (45), 18037–18041.

- Herzik MA, Fraser J, Lander GC. A multi-model approach to assessing local and global cryo-EM map quality. bioRxiv. 10.1101/128561. http://www.biorxiv.org/content/early/2017/04/19/128561.

- Lee JH, Ozorowski G, Ward AB, 2016. Cryo-EM structure of a native, fully glycosylated, cleaved HIV-1 envelope trimer. Science 351 (6277), 1043–1048. 10.1126/science.aad2450. http://science.sciencemag.org/content/351/6277/1043.

- Liao H-X, Lynch R, Zhou T, Gao F, Alam SM, Boyd SD, Fire AZ, Roskin KM, Schramm CA, Zhang Z, et al., 2013. Co-evolution of a broadly neutralizing HIV-1 antibody and founder virus. Nature 496 (7446), 469–476.

- Lintott CJ, Schawinski K, Slosar A, Land K, Bamford S, Thomas D, Raddick MJ, Nichol RC, Szalay A, Andreescu D, et al., 2008. Galaxy Zoo: morphologies derived from visual inspection of galaxies from the Sloan Digital Sky Survey. Mon. Not. R. Astron. Soc. 389 (3), 1179–1189.

- Mindell JA, Grigorieff N, 2003. Accurate determination of local defocus and specimen tilt in electron microscopy. J. Struct. Biol. 142 (3), 334–347. 10.1016/S1047-8477(03)00069-8. http://www.sciencedirect.com/science/article/pii/S1047847703000698.

- Scheres SH, 2012. RELION: Implementation of a Bayesian approach to cryo-EM structure determination. J. Struct. Biol. 180 (3), 519–530. 10.1016/j.jsb.2012.09.006. http://www.sciencedirect.com/science/article/pii/S1047847712002481.

- Scheres SH, 2015. Semi-automated selection of cryo-EM particles in RELION-1.3. J. Struct. Biol. 189 (2), 114–122. 10.1016/j.jsb.2014.11.010. http://www.sciencedirect.com/science/article/pii/S1047847714002615.

- Voss N, Yoshioka C, Radermacher M, Potter C, Carragher B, 2009. DoG Picker and TiltPicker: software tools to facilitate particle selection in single particle electron microscopy. J. Struct. Biol. 166 (2), 205–213. 10.1016/j.jsb.2009.01.004. http://www.sciencedirect.com/science/article/pii/S1047847709000197.

- Zubcevic L, Herzik MA Jr., Chung BC, Liu Z, Lander GC, Lee S-Y, 2016. Cryo-electron microscopy structure of the TRPV2 ion channel. Nat. Struct. Mol. Biol. 23 (2), 180–186. 10.1038/nsmb.3159.
