Abstract

The social brain hypothesis posits that dedicated neural systems process social information. In support of this, neurophysiological data have shown that some brain regions are specialized for representing faces. It remains unknown, however, whether distinct anatomical substrates also represent more complex social variables, like the hierarchical rank of individuals within a social group. Here we show that the primate amygdala encodes the hierarchical rank of individuals in the same neuronal ensembles that encode the rewards associated with non-social stimuli. By contrast, orbitofrontal and anterior cingulate cortices lack strong representations of hierarchical rank while still representing reward values. These results challenge the conventional view that dedicated neural systems process social information. Instead, information about hierarchical rank – which contributes to the assessment of the social value of individuals within a group – is linked within the amygdala to representations of rewards associated with non-social stimuli.

Introduction

Human and non-human primates tend to live in large social groups that possess dominant and subordinate members1. In many settings, such as interviewing for a job or attending a family reunion, appropriate social interactions and emotional behavior depend upon an assessment of the hierarchical rank of both oneself and of the other people present. The hierarchical rank of individuals and the accurate evaluation of the hierarchical rank of others impact the ability to thrive, for example by influencing fluid and food access, mating priority, and defensive behavior2–5.

The assessment of hierarchical rank requires accurate identification of social group members. Primates are masters at recognizing identity based on faces6, 7. Brain areas engaged during face processing include the occipital, fusiform, and superior temporal sulcus (STS) face areas, as well as the amygdala and orbitofrontal cortex8–15. The STS is anatomically connected to brain areas implicated in social processing, including the anterior cingulate and orbitofrontal cortices (ACC and OFC) and the amygdala16–18. These connections may link representations of facial identity to neural circuits processing motivational and emotional information about non-social stimuli. For example, the amygdala, OFC and ACC all provide neural representations of the value of conditioned stimuli acquired through reinforcement learning19–21.

How and where neural representations of social variables more complex than facial identity - such as hierarchical rank - emerge in the brain is an enduring mystery. The Social Brain Hypothesis posits that the evolution of large brains in mammals reflects increasingly complex social demands22, a proposal consistent with the discovery in primates of brain areas specialized for representing faces. In further support of this hypothesis, gray matter brain volume in human and non-human primates varies as a function of social status and/or social experiences23–27. However, the extent to which more complex social variables like hierarchical rank are processed in specialized neural circuits remains unclear. Human neuroimaging studies indicate a role for the amygdala in establishing artificial hierarchies acquired during performance of a task in the laboratory28–32, but the amygdala’s role in representing naturally established hierarchies has not been demonstrated. It is possible that some social variables – such as the propensity to share rewards with a partner – may engage distinct neuronal ensembles14, 33–36, but other social variables may not engage neuronal circuits dedicated to processing only social information. Indeed, rewarding stimuli and stimuli that possess hierarchical information have been observed to modulate the same neuronal ensembles in brain areas such as OFC, striatum and the lateral intraparietal area (LIP)12, 14, 35, 37. In these experiments, however, neural responses to stimuli spanning a full social hierarchy were not examined, leaving unclear whether the studied neural ensembles in fact represent hierarchical rank per se.

Monkeys (and other primates) almost certainly learn the social rank of other group members through experience. This learning process may entail assigning group members different ‘values’ through experience, a process bearing resemblance to learning about the value of non-social stimuli. Here we provide evidence that the primate amygdala encodes the hierarchical rank of individuals in the same neuronal ensembles that also encode the rewards associated with non-social stimuli. By contrast, despite representing the rewards associated with non-social stimuli, OFC and ACC lack strong representations of hierarchical rank. These results challenge strong versions of the “Social Brain” hypothesis22, the traditional view that the processing of social stimuli occurs exclusively in dedicated neural systems. Instead, although some aspects of social processing may occur in distinct neural circuits, some complex social variables, like hierarchical rank, may be represented by neuronal ensembles that also process non-social stimuli.

Results

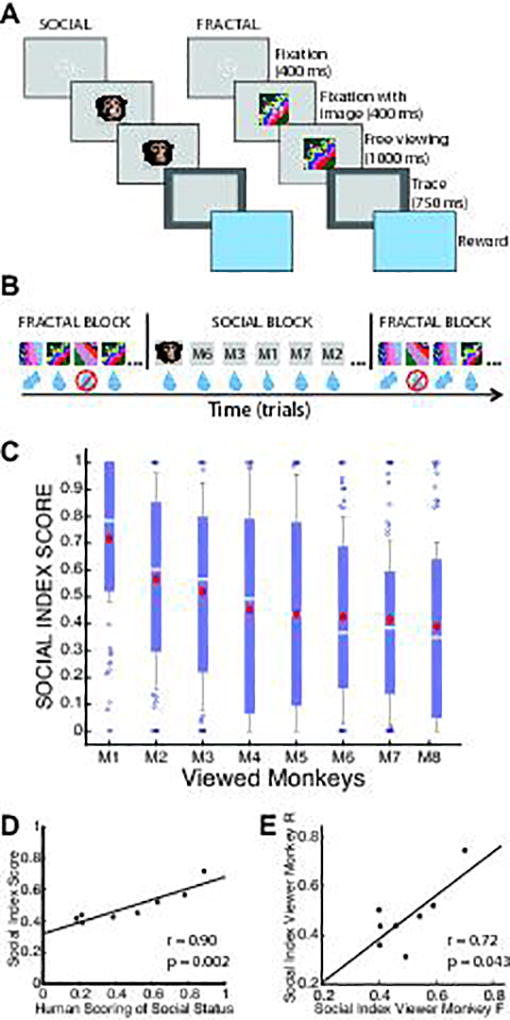

Two rhesus monkeys who had lived within the same stable group of 10 monkeys for many years performed a task in which they viewed, in different blocks of trials, images of the faces of other members of their group, or fractal images predicting different reward amounts (social and fractal trials, Fig. 1A, B). The face images were neutral in expression and did not depict emotionally relevant expressions (see Methods). “Viewer” monkeys fixated for the first 400 ms of image presentation for all trials, and then were permitted free viewing of the images for an additional 1000 ms. Successful fixation resulted in delivery of one drop of liquid reward after viewing a face image, or 0, 1 or 2 drops of liquid reward on fractal trials (depending upon the image). Anticipatory licking demonstrated that monkeys learned the different reward associations of the fractal images (Kruskal-Wallis test, p < 1e-05, Supplementary Fig.1).

Figure 1. Task and behavioral measures.

A, Sequence of events in behavioral task. Monkeys view images of the faces of other monkeys who reside within their housing room (left panels) or fractal images (right panels). Successful fixation results in reward delivery. B, Behavioral task interleaving social and non-social (fractal) trial blocks. In fractal blocks, different images are associated with different reward amounts, unlike in trials for the social block. C, Social Index from behavioral measures of hierarchical assessment observed during the recording sessions plotted for each viewed monkey, M1 through M8, where M1 is the Alpha monkey and M8 the most submissive one. Kruskal-Wallis test (Chi-sq(7,535)=47.11, p < 1e-07); Dunn’s post-hoc: M1 different from M3 (p = 0.023), M4 (p < 1e-03), M5 (p < 1e-03), M6 (p < 1e-04), M7 (p < 1e-04), M8 (p < 1e-04); M2 vs. M8, p = 0.074. Data represent the average of the 4 behavioral measures used to compute the social index across the sessions (n=17) (see Methods and Supp. Fig. 2). Red diamonds represent mean, white lines represent median, blue bars and whiskers represent 75th and 85th percentiles respectively, blue circles represent data points beyond the whisker limits. D, Social index score plotted as a function of the scoring of the colony hierarchy by human observers (see Methods; Pearson’s correlation coefficient (two-sided), r = 0.90, p=0.002). E, Index score for each viewer monkey plotted against each other (Pearson’s correlation coefficient (two-sided), r = 0.72, p=0.043).

Human observers scored hierarchical rank using established methodology that determined the “winner” of agonistic interactions between monkeys (Table 1 and Supplementary Table 1)38. We also used the oculomotor behavior of viewer monkeys during electrophysiological recording sessions to construct an index of the monkeys’ assessment of hierarchical rank of the face images. Several measures of viewer monkeys’ oculomotor behavior varied depending upon the face image being displayed (Fig. 1C and Methods)39. These measures capitalized on the fact that monkeys tended to look at the face images of dominant monkeys, that viewing dominant monkeys can be more arousing, and that the propensity to look at the eyes of an individual is modulated by hierarchical rank (see Methods, Supplementary Fig. 2)40. The social status indices for each viewer monkey were significantly correlated (r = 0.72, p = 0.043; Fig. 1E), perhaps reflecting that the two viewer monkeys both were in the middle of the hierarchy (Table 1). Furthermore, the index constructed from oculomotor measures was correlated with the human scoring of hierarchical rank (r = 0.90, p = 0.002; Fig. 1D), and was not significantly correlated with monkey age or weight (r = 0.41, p = 0.305 and r = 0.071, p = 0.867, respectively). A behavioral experiment showed that the oculomotor measures obtained from viewer monkeys did not distinguish dominant from submissive monkeys if the faces displayed on the screen were unfamiliar to the viewer monkeys (Supplementary Fig. 3). The correlation of the social status index with a traditional scoring method of hierarchical organization by human observers provides strong evidence that during the electrophysiological recordings, the viewer monkeys were actively assessing the hierarchical rank of the facial images.

Table 1.

Hierarchical rank determined by human observers. Summary of the overall observed social interactions used in the computation of the social status scored by human observer. MF and MR are the two viewer monkeys.

| Monkey | Number of won agonistic interactions observed | Number of agonistic interactions | Relative Dominance Status |
|---|---|---|---|
| Viewed M1 | 61 | 69 | 88.4 |
| Viewed M2 | 53 | 68 | 77.9 |
| Viewed M3 | 22 | 35 | 62.9 |
| Viewer MF | 45 | 83 | 54.2 |
| Viewed M4 | 27 | 51 | 51.9 |
| Viewer MR | 42 | 94 | 44.7 |
| Viewed M5 | 10 | 47 | 21.3 |
| Viewed M6 | 17 | 44 | 38.6 |
| Viewed M7 | 9 | 49 | 18.4 |
| Viewed M8 | 12 | 55 | 21.8 |

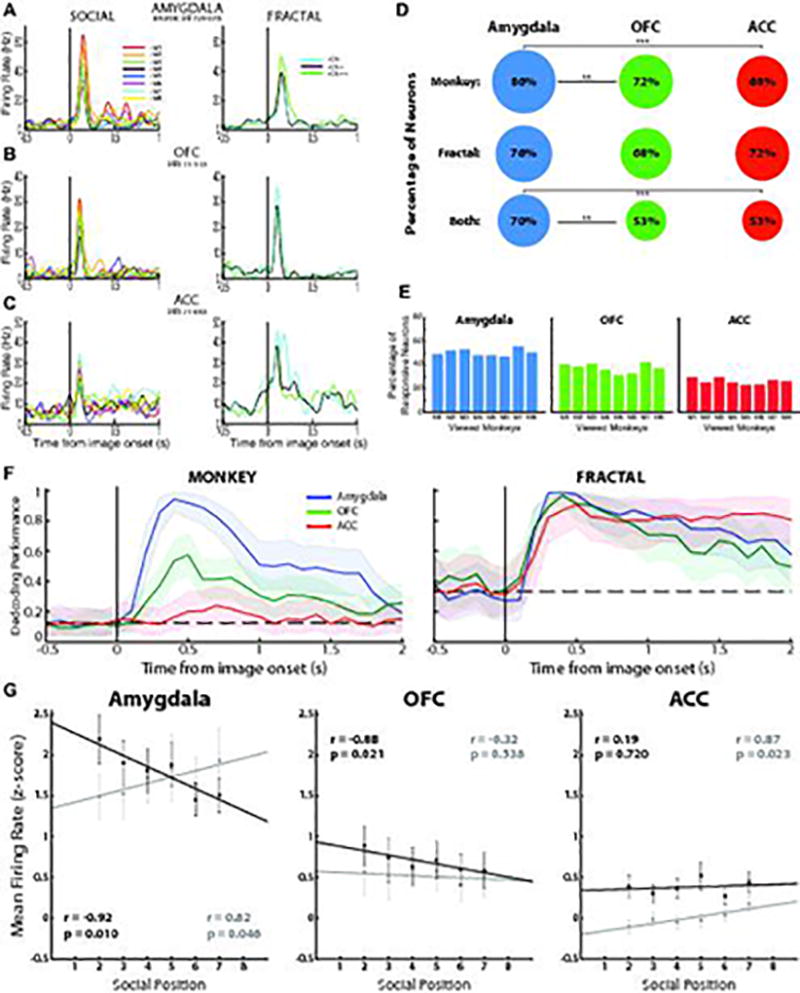

While monkeys performed the fixation task, we recorded the responses of individual neurons in the amygdala (196 neurons), OFC (134 neurons), and ACC (187 neurons) to face images (see Supplementary Fig. 4 for recording site examples). Figure 2A–C, left panels, depicts data from individual neurons in amygdala, OFC, and ACC. Across the populations of neurons, the proportion of neurons responsive to faces during fixation was higher in the amygdala than in OFC (z-test, z = 3.139, p = 0.002) or in ACC (z-test, z = 4.031, p < 1e-04) (Fig. 2D, top row). OFC and ACC were statistically indistinguishable for this measure (z-test, z = 0.614, p = 0.539). However, responses to faces were usually not restricted to a single face image: 85.7% of face-responsive neurons in the amygdala responded to more than one face (80.2% of neurons in OFC and 59.4% of neurons in ACC exhibited the same property; see Supplementary Fig. 5A). Only a minority of neurons responded to only one face image (e.g. Supplementary Fig. 5B). The proportion of neurons exhibiting a significant response to each face image did not differ across the face images for any brain area (chi-square test, Chi2 = 2.421, p>0.9 in amygdala; Chi2 = 3.930, p>0.5 in OFC; Chi2 = 2.964, p>0.5 in ACC; Fig. 2E).

Figure 2. The amygdala represents the hierarchical status of individuals.

A–C. Example neurons from the amygdala (A), OFC (B), and ACC (C) that respond to both face (left panels) and fractal images (right panels). The amygdala neuron responds most strongly to the dominant monkey face image and to the most highly rewarded fractal image, with responses decreasing monotonically with decreases in the hierarchical status of face images or the reward associated with fractals. D. The percentage of neurons in the 3 brain structures that respond to at least one monkey, at least one fractal, or at least one of both types of images. ** and *** indicate significant differences at p<0.01 and p<0.0001 (two-tailed z-test, top row: amygdala vs. OFC, z = 3.139, p = 0.002; amygdala vs. ACC, z = 4.031, p < 1e-04; OFC vs. ACC, z = 0.614, p = 0.539; middle row: amygdala vs. OFC, z = 1.517, p = 0.129; amygdala vs. ACC, z = 0.855, p = 0.393; OFC vs. ACC, z = −0.723, p = 0.470; bottom row: amygdala vs. OFC, z = 3.126, p = 0.002, amygdala vs. ACC, z = 3.411, p < 1e-03; OFC vs. ACC, z = 0.008, p = 0.994. Analyses were performed on the entire neuronal population for each brain area: Amygdala, n=196; OFC, n = 134; ACC, n=187; see Methods). E. The proportion of neurons in each brain area that exhibit a significant response to each of the 8 viewed faces. F, Average decoding performance for each brain area for classifying monkey image (left) or fractal (right) identity (n=1000 iterations). Left, curves show the decoding performance for an 8-way classification of monkey face images (equalized number of neurons for the 3 brain areas, n=110 neurons). Right, average decoding performance for each brain structure of discriminating between the 3 fractal images (3-way classifier) (equalized number of neurons for the 3 brain areas, n=131 neurons). Shading, 95% confidence intervals (bootstrap). Dotted lines, chance decoding level. G. Average z-scored firing rate plotted as a function of hierarchical status for neurons that respond more strongly (black line and dots) or weakly (grey line and dots) to a higher ranked monkey (M1) than to a lower ranked monkey (M8) in Amygdala (left panel), OFC (center) and ACC (right). Since neural responses to M1 and M8 are used to classify the cells, these data are excluded from the regression analyses. Pearson’s correlation coefficient (two-sided): amygdala, r = −0.92, p = 0.010, n = 112 neurons (black), r = 0.82, p = 0.046, n = 83 (grey); OFC, r = −0.88, p = 0.021, n = 79 (black), r = −0.32, p = 0.538, n = 49 (grey); ACC, r = 0.19, p = 0.720, n = 97 (black), r = 0.87, p = 0.023, n = 87 (grey). Error bars, SEM. Neural responses were analyzed during the time epoch extending from 100 – 400 ms after image onset for all panels in this figure (fixation was required during this interval).

Although neurons often responded to more than one face image, an analysis utilizing a linear decoder demonstrated that the population of amygdala neurons could be used to selectively discriminate which facial image is being viewed shortly after image onset (Fig. 2F, see Methods)41. Decoding performance for face image identity was markedly better in the amygdala than in OFC or ACC (Fig. 2F, left panel), suggesting that on average the amygdala communicated more facial identity information per neuron than the prefrontal areas.

We next explored whether a neural representation of hierarchical rank was present in each brain area. A regression analysis was used to quantify the relationship between neural responses during fixation of the face images (100 – 400 ms after image onset) and the social position (1–8) of the viewed face, where position 1 corresponds to the most dominant monkey (M1). The regression coefficient βsoc characterized neuronal tuning to the hierarchical rank of face images. For the amygdala neuron depicted in Fig. 2A, βsoc was significantly less than 0 (p < 0.05), indicating that the neuron tended to fire more strongly to face images from dominant monkeys. Overall, 30.6% of amygdala neurons, corresponding to 36% of face-responsive neurons, exhibited statistically significant selectivity for hierarchical rank (Supplementary Table 2). Of those rank-selective neurons, 63.6% responded more strongly to dominant face images, and 36.4% to submissive face images (Supplementary Table 2). Neurons in OFC and ACC exhibited selectivity for hierarchical rank much less frequently: 16.7% of OFC neurons (23% of face-responsive OFC neurons) and 6.3% of ACC neurons (9% of face-responsive ACC neurons).
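The regression described above can be illustrated with a minimal sketch. It assumes an ordinary least-squares fit of firing rate on social position for a single neuron; the exact regression model and significance test are given in Methods and may differ. The helper `ols_slope`, the simulated neuron, and all numbers are hypothetical and serve only to show how a negative βsoc corresponds to stronger firing for dominant faces.

```python
import random


def ols_slope(x, y):
    """Return the least-squares slope and intercept of y regressed on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical data: one simulated neuron, 20 trials per face image.
# Social position 1 is the most dominant monkey (M1), 8 the most submissive (M8).
rng = random.Random(0)
ranks, rates = [], []
for rank in range(1, 9):
    for _ in range(20):
        ranks.append(rank)
        # Simulated firing rate (spikes/s) in the 100-400 ms window;
        # the rate falls by 0.8 spikes/s per rank step, plus Gaussian noise.
        rates.append(12.0 - 0.8 * rank + rng.gauss(0.0, 1.0))

beta_soc, intercept = ols_slope(ranks, rates)
print(f"beta_soc = {beta_soc:.2f} spikes/s per rank step")
```

A negative `beta_soc` marks a dominant-preferring neuron and a positive value a submissive-preferring one. The sketch omits the p-value that decides whether a neuron counts as rank-selective.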

We confirmed the presence of a representation of hierarchical rank across the population of amygdala cells by performing an out-of-sample test: we analyzed separately the neurons that responded more strongly to M1 (most dominant monkey) than M8 (most submissive monkey), and those that responded more strongly to M8 than M1 (see Methods). We then assessed whether neural responses to faces from the remaining out-of-sample viewed monkeys (M2–M7) were correlated with hierarchical rank. For the amygdala, responses to monkey face images correlated significantly with the social position of viewed monkeys (p = 0.010 and p = 0.046 for dominant and submissive monkeys preferring neurons respectively, Fig.2G, left panel). These results indicate that neurons classified according to preferential responding to M1 or M8 exhibit correlated neural activity with hierarchical ranking when only considering responses to M2–M7. The relationship between neural activity and hierarchical rank is therefore not merely driven by responses to the most dominant (M1) and most submissive (M8) monkeys.
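The logic of this out-of-sample test can be sketched as follows. The simulated population, its tuning slopes, and the helper names are hypothetical; only the procedure (classify neurons using M1 and M8, then correlate responses to M2–M7 with rank) follows the text.

```python
import random


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical population: responses[i][k] is the mean z-scored response of
# neuron i to face M(k+1). Each simulated neuron has a linear rank tuning.
rng = random.Random(1)
responses = []
for _ in range(100):
    slope = rng.choice([-0.3, 0.3])
    responses.append(
        [slope * (rank - 4.5) + rng.gauss(0.0, 0.5) for rank in range(1, 9)]
    )

# Step 1: classify neurons using only their responses to M1 and M8.
dominant_pref = [r for r in responses if r[0] > r[7]]
submissive_pref = [r for r in responses if r[0] < r[7]]

# Step 2: test on the held-out faces M2-M7 only.
held_out_ranks = list(range(2, 8))


def group_mean(group):
    """Average response of a group of neurons to each held-out face."""
    return [sum(r[k - 1] for r in group) / len(group) for k in held_out_ranks]


r_dom = pearson_r(held_out_ranks, group_mean(dominant_pref))
r_sub = pearson_r(held_out_ranks, group_mean(submissive_pref))
print(f"dominant-preferring r = {r_dom:.2f}, submissive-preferring r = {r_sub:.2f}")
```

Because M1 and M8 are used only for classification, a correlation over M2–M7 cannot be produced by the selection step alone.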

Unlike the amygdala, OFC and ACC did not provide a compelling representation of social hierarchy (Fig. 2G, middle and right panels). Moreover, only the amygdala exhibited regressions with oppositely signed slopes for dominant and submissive preferring neurons. We used a Fisher's r to z transformation test to assess the statistical significance of the difference between the correlation coefficients in each brain area. This test revealed a statistically significant difference between the amygdala and ACC for dominant preferring neurons, and between the amygdala and OFC for submissive preferring neurons (difference between correlations for dominant preferring neurons: amygdala (r=−0.92) vs. OFC (r=−0.88), z=−0.26, p=0.398; amygdala (r=−0.92) vs. ACC (r=0.19), z=−2.18, p=0.015; OFC (r=−0.88) vs. ACC (r=0.19), z=−1.92, p=0.028; for submissive preferring neurons: amygdala (r=0.82) vs. OFC (r=−0.32), z=1.82, p=0.034; amygdala (r=0.82) vs. ACC (r=0.87), z=0.22, p=0.413; OFC (r=−0.32) vs. ACC (r=0.87), z=−2.04, p=0.021). We also combined the data from dominant-preferring and submissive-preferring neurons by sign-flipping the data from the submissive-preferring neurons and then averaging these responses with dominant-preferring neurons. A correlation coefficient of these combined data was computed for each area. The amygdala was the only area significantly correlated with the social position of the viewed monkeys (r = −0.91, p=0.013 for amygdala; r = −0.61, p=0.20 for OFC; and r = −0.60, p=0.21 for ACC).
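For readers unfamiliar with the Fisher r-to-z comparison, a minimal sketch follows. It rests on two assumptions that are inferred from the reported values rather than stated in this section: each correlation is computed over n = 6 data points (monkeys M2–M7), and the reported p-values are one-tailed. The function name `fisher_r_to_z_test` is illustrative.

```python
import math


def fisher_r_to_z_test(r1, n1, r2, n2):
    """Compare two independent Pearson correlations via Fisher's r-to-z transform.

    Returns the z statistic and a one-tailed p-value from the standard normal.
    """
    z1 = math.atanh(r1)  # Fisher transform of each correlation
    z2 = math.atanh(r2)
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))  # standard error of z1 - z2
    z = (z1 - z2) / se
    p_one_tailed = 0.5 * math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_one_tailed


# Dominant-preferring neurons: amygdala (r = -0.92) vs. ACC (r = 0.19).
# n = 6 is an assumption (one data point per held-out monkey, M2-M7).
z, p = fisher_r_to_z_test(-0.92, 6, 0.19, 6)
print(f"z = {z:.2f}, one-tailed p = {p:.3f}")
```

Under these assumptions the sketch gives z ≈ −2.18 and p ≈ 0.015 for the amygdala vs. ACC comparison, in line with the values reported above. With only six points per correlation the standard error is large, which is why even sizeable differences in r yield modest z values.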

Our electrophysiological recordings were obtained from the same parts of the amygdala, OFC, and ACC shown previously to contain individual neurons that respond differentially to a conditioned stimulus (CS) depending upon its reward association19, 20, 41, a finding we also observed in this study (Fig. 2A–C). We examined the encoding of reward magnitudes associated with fractal images by using a regression analysis to estimate a coefficient (βfrac) quantifying the relationship between neural activity and the reward associated with fractals (see Methods). βfrac was significantly different from 0 in 38.9%, 25.8%, and 39.2% of neurons in amygdala, OFC and ACC, respectively, with different neurons exhibiting positive or negative valence preference (Supplementary Table 2). The proportion of neurons responsive to one or more fractal images was not statistically distinguishable across the 3 brain structures (Fig. 2D, middle row, Amygdala vs. OFC, z = 1.517, p = 0.129; Amygdala vs. ACC, z = 0.855, p = 0.393; OFC vs. ACC, z = −0.723, p = 0.470; z-test for all comparisons), but the proportion of neurons responsive to one or more face and one or more fractal images was significantly greater in the amygdala than OFC and ACC, which were indistinguishable from each other (Fig. 2D, bottom row; z-test: amygdala vs. OFC, z = 3.126, p = 0.002, amygdala vs. ACC, z = 3.411, p < 1e-03; OFC vs. ACC, z = 0.008, p = 0.994). A linear decoder analysis revealed that neuronal populations in all 3 brain areas could be used to decode fractals associated with different rewards beginning shortly after image onset, consistent with prior observations (Fig. 2F, right panel)41.

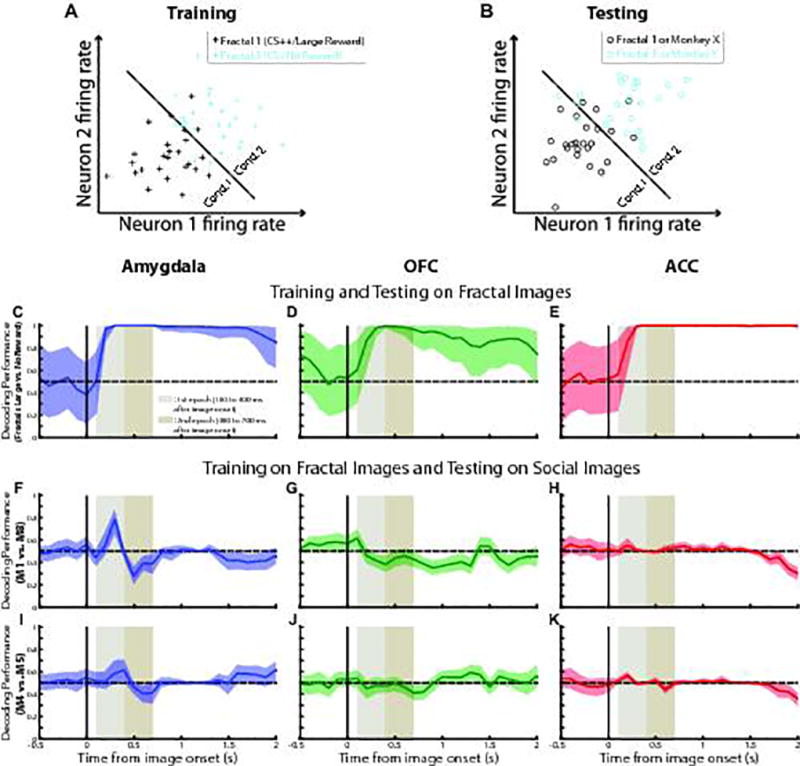

We next hypothesized that neural representations of hierarchical rank and of the rewards associated with non-social (fractal) images might be encoded by overlapping neuronal ensembles. To test this hypothesis, we trained a linear decoder to distinguish between trials when fractals were associated with large or no reward. The training procedure sets the weight of each neuron as well as a global threshold for distinguishing between the two conditions used for training (Fig. 3A). The decoder was then tested on held-out trials of the same types, as well as on trials in which monkeys viewed two different selected monkey face images (Fig. 3B). This decoding analysis was performed in a 300 ms sliding window (see Methods). As expected, decoding performance was high in all 3 brain areas when testing occurred on held-out fractal trials (Fig. 3C–E), consistent with previously described neural representations of expected reward in all 3 brain areas41. When the decoder was trained to distinguish between trials with fractals associated with large or no reward, and was tested to distinguish between trials in which two monkey faces were presented that differed greatly in hierarchical rank (M1 vs. M8), decoding performance in the amygdala was significantly above chance shortly after image onset and extending through the fixation interval (Fig. 3F). During the beginning of free viewing, however, decoding performance was significantly below chance, indicating that the opposite classification was made in this time interval, but that a relationship still existed between the encoding of fractals associated with different rewards and hierarchical rank. By contrast, when the same analysis was applied to neural responses to monkeys who had similar hierarchical rank (M4 and M5), decoding performance was barely different from chance (Fig. 3I). The same analysis performed on OFC and ACC neurons failed to classify hierarchical status when training the decoder on fractal image trials (Fig. 3G–H, J–K).
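The cross-condition decoding logic described above can be sketched as follows. This is a simplified illustration, not the decoder used in the study (see Methods): it substitutes a difference-of-means linear classifier, which also assigns one weight per neuron and a single global threshold. The population, its tuning, and all trial data are synthetic, and the simulated face responses share the reward tuning by construction.

```python
import random


def train_linear_decoder(class_a, class_b):
    """Fit per-neuron weights (difference of class means) and a global threshold."""
    n = len(class_a[0])
    mean_a = [sum(t[i] for t in class_a) / len(class_a) for i in range(n)]
    mean_b = [sum(t[i] for t in class_b) / len(class_b) for i in range(n)]
    weights = [a - b for a, b in zip(mean_a, mean_b)]
    # Threshold: projection of the midpoint between the two class means.
    threshold = sum(w * (a + b) / 2.0 for w, a, b in zip(weights, mean_a, mean_b))
    return weights, threshold


def accuracy(weights, threshold, class_a, class_b):
    """Fraction of trials assigned to the correct side of the threshold."""
    def project(trial):
        return sum(w * x for w, x in zip(weights, trial))
    correct = sum(project(t) > threshold for t in class_a)
    correct += sum(project(t) <= threshold for t in class_b)
    return correct / (len(class_a) + len(class_b))


def simulate_trials(rng, tuning, sign, n_trials):
    """Synthetic population responses: sign * tuning plus unit Gaussian noise."""
    return [[sign * g + rng.gauss(0.0, 1.0) for g in tuning] for _ in range(n_trials)]


rng = random.Random(0)
tuning = [rng.gauss(0.0, 0.5) for _ in range(50)]  # hypothetical per-neuron tuning

# Train on fractal trials: large reward vs. no reward.
weights, threshold = train_linear_decoder(
    simulate_trials(rng, tuning, +1, 40), simulate_trials(rng, tuning, -1, 40)
)

# Test 1: held-out fractal trials of the same two types.
acc_fractal = accuracy(
    weights, threshold,
    simulate_trials(rng, tuning, +1, 40), simulate_trials(rng, tuning, -1, 40),
)
# Test 2: face trials, M1 vs. M8 (simulated to share the reward tuning).
acc_m1_m8 = accuracy(
    weights, threshold,
    simulate_trials(rng, tuning, +1, 40), simulate_trials(rng, tuning, -1, 40),
)
# Test 3: face trials, M4 vs. M5 (simulated with no difference along this axis).
acc_m4_m5 = accuracy(
    weights, threshold,
    simulate_trials(rng, tuning, 0, 40), simulate_trials(rng, tuning, 0, 40),
)
print(acc_fractal, acc_m1_m8, acc_m4_m5)
```

In this sketch, above-chance performance on M1 vs. M8 arises only because the face responses were built to lie along the reward axis. Performance below chance would indicate that the two face conditions project onto that axis with the opposite sign.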

Figure 3. The same neuronal ensemble in the amygdala can decode reward value and differences in hierarchical status.

A, B. Procedure for determining whether the same neural ensemble represents the reward associated with fractals and the hierarchical status of viewed face images. A linear decoder is trained to discriminate between fractals associated with large and no reward on a subset of trials (A). Decoding performance was tested on held-out fractal trials, or on trials with any two monkey face images (e.g. monkey X and monkey Y) (B). C–E: Decoding performance plotted as a function of time for each brain area when testing the decoder on held-out large and no reward trials. F–H: Decoding performance for each brain area when testing the decoder on high vs. low ranked monkey image trials (M1 vs. M8, i.e. the most distant monkeys in terms of social status). I–K: Decoding performance when testing the decoder on two adjacent middle-ranked monkeys (M4 vs. M5). Shading, 95% confidence intervals (bootstrap). Dotted lines, chance decoding level. For all these analyses the decoder was trained and tested on the entire neuronal population (i.e. no down sampling, n = 1000 iterations, see Methods).

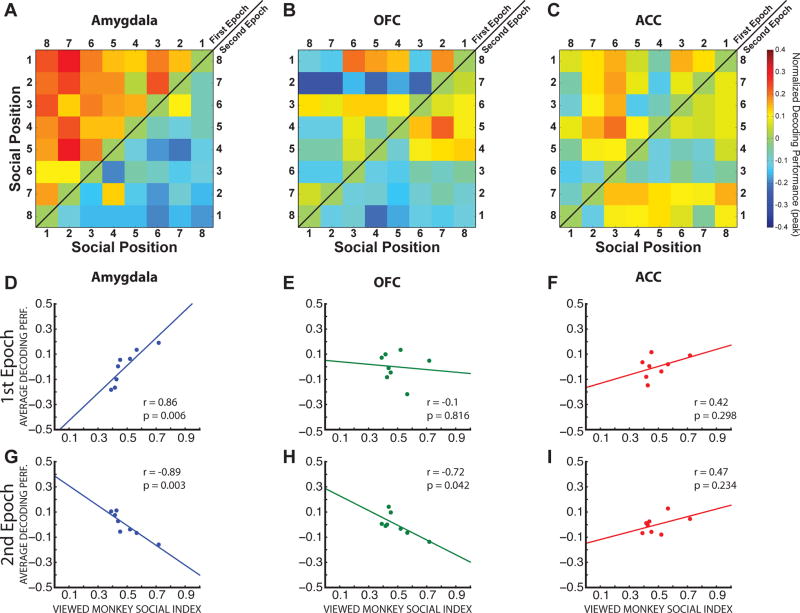

We used the analysis in Fig. 3F to define two time epochs: decoder above chance, 100 – 400 ms after image onset, which occurred during fixation; and decoder below chance, 400 – 700 ms after image onset (0–300 ms after FP extinction). We then trained the decoder on large and no reward fractal image trials separately during each epoch, and tested decoding for all pairs of face images during the corresponding time intervals, using the neuronal populations recorded from each brain area. In general, for the amygdala, decoding performance became increasingly different from chance the further apart the social positions of the two monkeys (Fig. 4A). Moreover, consistent with the analysis in which the decoder was tested on M1 vs. M8 (Fig. 3F), there was an opposite sign in decoding accuracy for the two time epochs (“hot” and “cool” colors above and below the identity diagonal reflect decoding above and below chance in the two time intervals, respectively). The same analysis applied to OFC and ACC demonstrated markedly less robust decoding of hierarchical status (Fig. 4B,C). These data indicate that the ability to decode the reward magnitude associated with an image does not necessarily result in the capacity to decode hierarchical rank, although it does so in the amygdala.

Figure 4. The relationship between the encoding of reward value and hierarchical status.

A–C. Peak decoding performance in the amygdala, OFC and ACC tested after training the decoder to classify large vs. no reward trials and testing the decoder for all pairs of monkey face images (e.g. M1 vs. M8, M1 vs. M7, etc., see Methods). Color scale indicates decoding performance minus 0.5, so a value of 0 corresponds to chance decoding. Decoding performance during fixation (100 – 400 ms after image onset), upper left of each plot; decoding performance during free viewing (400–700 ms after image onset), lower right of each plot. D–I, Average decoding performance for each monkey face image (across all comparisons) plotted as a function of that monkey’s social status index for the first (D–F) and second (G–I) time epochs in the 3 brain areas. Note the inversion of sign in the amygdala between the first (D) and second (G) time epochs. For each panel n = 8, Pearson’s correlation coefficient (two-sided): amygdala, r = 0.86, p = 0.006 (1st epoch), r = −0.89, p = 0.003 (2nd epoch); OFC, r = −0.1, p = 0.816 (1st epoch), r = −0.72, p = 0.042 (2nd epoch); ACC, r = 0.42, p = 0.298 (1st epoch), r = 0.47, p = 0.234 (2nd epoch).

Decoder performance was quantitatively related to the social status index calculated from oculomotor behavior (see Fig. 1C). For each face image, we computed the average decoding performance for that image compared to each of the other faces when the decoder was trained to classify fractal images associated with large or no reward. In the amygdala, this average decoding performance was correlated with the computed social status index for that face image (p = 0.006 and p = 0.003, Fig. 4D and G). The regression slopes were opposite in the two time epochs, consistent with a change in sign in relationship between reward value and hierarchical status encoding at the ensemble level. A significant relationship between average decoding performance and social status index was not observed for OFC and ACC (p = 0.816 for OFC-Fig. 4E, p = 0.298 for ACC-Fig. 4F and p = 0.234 for ACC-Fig. 4I), except for during the second time epoch in OFC (p = 0.042, Fig. 4H). In addition, decoding performance between monkey face images was linearly correlated with the social distance between monkeys in the amygdala for the first and second time epochs, again with opposite signs for the two time epochs (this analysis resampled the identities of the monkeys in the data points, Supplementary Fig. 6, r=0.84, p = 0.018 and r=−0.92, p = 0.003).

We considered two possible explanations for the intriguing change in sign of the relationship between the decoding of trials with different reward magnitudes and the decoding of hierarchical rank in the amygdala that occurs as a function of time (during and after required fixation). One possibility was that individual neurons may change their response preferences over time with respect to either hierarchical rank or expected reward magnitude. Alternatively, response preferences might be preserved, but instead different sub-ensembles of neurons might contribute to the relationship between hierarchical rank and reward expectation during the two time intervals. The evidence supports this latter possibility.

First, the preferred response selectivity of neurons tended to be preserved across time intervals for both reward value and hierarchical rank preference. Each of the regression coefficients βfrac and βsoc, which quantify the relationship between firing rate and either the reward associated with fractals or the hierarchical status of face images, were correlated across the two time intervals on a cell-by-cell basis (r= 0.49, p < 1e-06 for βfrac, Supplementary Fig. 7A, and r= 0.79, p < 1e-15 for βsoc, Supplementary Fig. 7B, regression analyses). A change in sign in the relationship between decoding reward magnitude and hierarchical rank is therefore not likely to be accounted for by a change in the underlying response preferences of individual neurons.

Second, in the first time epoch (during fixation), for the dominant-preferring amygdala neurons (βsoc<0), a significant correlation between the two regression coefficients βfrac and βsoc was observed, such that neurons that fired more for larger expected rewards fired more for dominant face images than submissive ones (p = 0.032, r = −0.21). This was not observed in OFC and ACC for the sub-populations of neurons exhibiting a weak correlation with rank (Fig. 2G) (p = 0.194, r = 0.17 for dominant-preferring neurons in OFC and p = 0.337, r = 0.11 for submissive-preferring neurons in ACC, see Supplementary Fig. 8 A–D). Consistent with this, dominant-preferring amygdala neurons responded significantly more to the large reward fractal than to the fractal predicting no reward (p = 0.045, paired t-test, Supplementary Fig. 9A), unlike submissive-preferring amygdala neurons (p = 0.202, paired t-test, Supplementary Fig. 9B), or the sub-populations of OFC and ACC neurons that exhibited a weak correlation with hierarchical rank (Fig. 2G) (p = 0.226 for OFC and p = 0.452 for ACC, paired t-test, Supplementary Fig. 9C,D).

Finally, in the second time epoch (after the fixation requirement), a significant relationship was observed between βfrac and βsoc, when considering all amygdala neurons (r= 0.15, p = 0.039, Supplementary Fig. 10A, black line). This relationship seemed to be driven predominantly by neurons responding most strongly to submissive monkeys (r=0.20, p = 0.066, Supplementary Fig. 10A, red line) as opposed to neurons that responded most strongly to dominant monkeys (r=0.04, p=0.694, Supplementary Fig. 10A, blue line), which were the sub-ensemble of neurons correlated with reward selectivity during the first time epoch (see above). Furthermore, neurons that responded more strongly to M8 than M1 (submissive-preferring neurons) during the second time epoch responded significantly more strongly to the large reward fractal than to the no reward fractal (p = 0.030, paired t-test, Supplementary Fig. 10C), a relationship not observed for the dominant-preferring neurons (p = 0.434, paired t-test, Supplementary Fig. 10B). Overall, therefore, the change in sign in decoding performance in the amygdala for hierarchical rank during the second compared to the first time epoch appears to be accounted for by a change in the relative contribution of dominant- and submissive-preferring sub-ensembles of neurons to decoding performance in the two time epochs. These results can also be observed at a qualitative level by examining average neural responses to dominant and submissive face images separately for populations of neurons that prefer large or no reward (Supplementary Fig. 11).

We considered the possibility that the differential engagement of sub-populations of neurons during the two time epochs was related to differences in oculomotor behavior that could emerge due to the initiation of a free viewing interval. However, we did not observe any differences in oculomotor metrics that were related to social hierarchy (Supplementary Fig. 12) or reward amount of the fractal images. Even during the time interval extending from 700–1000ms after image onset, significant differences in oculomotor behavior that were related to hierarchical rank were not observed (Supplementary Fig. 13). The time window used to compute the social index - where oculomotor differences emerged - was at the very end of the free viewing epoch, more than 1000 ms after image onset. Despite the fact that the observed patterns of neural activity could not be accounted for by oculomotor behavior, covert changes in cognitive processes – such as a desire to shift gaze or attention depending upon the hierarchical rank of a viewed face - may occur upon fixation point offset that could cause differential engagement of amygdala neuronal sub-ensembles.

Discussion

This study took advantage of the fact that 10 monkeys had lived together in the same social group for many years to study the neurophysiological encoding of social hierarchy. Human observers and a social status index computed from measures of monkeys’ oculomotor behavior while viewing face images of their social cohort indexed the social hierarchy within this group in a consistent manner (Fig. 1). Electrophysiological data revealed that the primate amygdala provided a neural representation of the hierarchical rank of individuals (Fig. 2G). As in prior studies, neurons in the amygdala also represented the reward magnitudes associated with different non-social (fractal) images (Figs. 2F, 3C–E). The representation of hierarchical rank was apparent in the same neuronal ensembles that represent rewards associated with non-social stimuli (Figs. 3F, 4). Training a linear decoder to discriminate between trials where different non-social stimuli predict large or no reward permitted decoding of the hierarchical relationship between individuals within monkeys’ social group. These data provide strong evidence that the same neuronal ensembles that encode expected reward magnitudes may mediate aspects of social behavior by representing a complex social variable, hierarchical rank42. The data therefore argue against strong versions of the Social Brain Hypothesis by demonstrating that, at least for the encoding of hierarchical rank, the same neuronal substrate in the amygdala appears to process social and non-social information.

Our results indicate that neuronal ensembles that represent reward magnitudes associated with non-social stimuli may also be utilized to represent the hierarchical ranks of individuals. Stimuli that depict individuals with known hierarchical rank convey motivational meaning to a subject, just as reward-predictive stimuli convey motivational meaning. Both social and non-social stimuli can influence subsequent decisions, actions, and emotional responses more broadly. However, two lines of evidence indicate that representations of the reward magnitudes associated with non-social stimuli do not inherently represent hierarchical rank in all brain structures. First, the OFC and ACC both represent expected reward magnitudes strongly, but neither structure provides a compelling representation of hierarchical rank; training a linear decoder to discriminate between large and no reward fractal image trials does not allow one to decode hierarchical rank in those structures (Figs. 2–4). Second, we observed that the relationship between representations of reward magnitude and hierarchical rank in the amygdala changed as a function of time during a trial (Figs. 3 and 4). This observation argues strongly that the results cannot be easily accounted for by neural signals that merely encode variables such as arousal, attention, valuation, or other related processes. It is very unlikely, for example, that an arousing and attention-grabbing alpha monkey becomes not arousing or attention-grabbing within a few hundred milliseconds, which would be required for signals encoding arousal or attention to account for our data. Instead, cell-by-cell regression analyses suggested that separate populations of amygdala neurons may be engaged at different times during a trial to represent hierarchical rank, and these sub-populations have differential relationships with the encoding of expected reward magnitudes (see Supplementary Figs. 7–10).

Our data are also unlikely to be accounted for by a low-level visual feature of face images that somehow conveys hierarchical rank. Training a decoder on neural responses to fractal images that predict different reward amounts should not be expected to allow decoding of hierarchical rank based on a low-level visual feature. In short, it is difficult to imagine that a particular sensory feature of face images that conveys hierarchical rank information has a consistent relationship with reward magnitudes associated with fractal images. On the other hand, the shared encoding within the amygdala of hierarchical rank and the rewards associated with non-social stimuli could reflect the provision of a common currency for stimuli of many different types that is present transiently in the amygdala (as the representation of social rank is not sustained across the entire trial, unlike reward value, see Fig. 3C,F)43, but further experiments are needed. To wit, the neuronal ensembles we have characterized may also represent other social variables, such as body gestures or complex facial expressions that convey emotional and motivational meaning, and it is unknown if the representations of these social variables are related to encoding of the reward values of non-social stimuli. It remains possible that some aspects of primate social behavior share the same neuronal encoding as non-social processes, with other aspects of social behavior mediated by different neural representations42, 44.

Monkeys almost certainly learn the hierarchical rank of other group members through experience, so the acquisition of a neural representation of hierarchical rank may occur in a similar manner as when a subject learns the relationship between non-social stimuli and rewards through reinforcement learning45. Of course, learning about hierarchical rank may also be facilitated through observational learning46. The sharing of a neuronal substrate for learning about non-social rewards and about social hierarchy information has mechanistic implications for understanding the pathophysiology and potential treatments for some patients with psychiatric disorders. For example, patients with autism or schizophrenia often exhibit deficits in social interactions, sometimes struggling to appropriately register social situations or cues, or to respond to such situations adaptively47, 48. Some of these patients may also possess deficits in learning about the significance (e.g. reward value) of non-social stimuli49. Indeed, Applied Behavior Analysis (ABA) treatments, which are based on reinforcing correct behavior with different types of reward, have been shown to benefit a large panel of ASD patients50, suggesting that focused behavioral manipulations may compensate for deficits in learning mechanisms in these patients. However, the neural underpinnings of deficits in social processing that also require learning have remained unclear, in large part because we have understood relatively little about how the brain represents and utilizes social information. The discovery of neural representations of hierarchical rank in the amygdala observed in the same neural ensembles that represent the rewards associated with non-social stimuli may thereby provide a significant step towards describing the neural networks critical for understanding psychiatric pathology and treatment.

Methods

Animals and Surgical Procedures

All experimental procedures were in accordance with the guidelines of the National Institutes of Health guide for the care and use of laboratory animals and the Animal Care and Use Committees at New York State Psychiatric Institute and Columbia University. Two adult male rhesus monkeys (Macaca mulatta) weighing 9.6 kg and 10.2 kg (monkey R and monkey F) underwent surgical sessions under isoflurane general anesthesia and using aseptic techniques to implant a plastic head post and an oval recording chamber positioned over a craniotomy that provided access to the right amygdala and prefrontal cortex. Analgesics and antibiotics were administered during recovery from surgery. The Brainsight 2 system (Rogue Research) was used in combination with pre-surgery magnetic resonance imaging (MRI) to localize the brain structures and determine coordinates of chamber placement. A second MRI was performed after surgery to verify correct placement of the recording chamber and to determine electrode coordinate locations using the Brainsight 2 software for recording from the amygdala, orbitofrontal cortex, and anterior cingulate cortex. Another MRI was performed on the monkeys at the end of the experimental sessions to validate localization of our recording sites.

These experiments involved electrophysiological recordings in two monkeys, each of whom had lived in the same social group of 10 male monkeys for more than 5 years. In addition, no monkeys entered or exited this stable social group of 10 monkeys. Monkeys were either housed individually, or, when possible, in pairs. In the present study, monkey F was paired with M5 and monkey R was occasionally paired with M3. Human observers have noted a consistent hierarchy within the group of 10 monkeys, with the same monkeys being viewed as most dominant or submissive. Their room was equipped with mirrors on the wall as well as Plexiglas panels dividing the different cages, allowing frequent visual contact between all members of the colony. Play cages were adjacent to all the apartment cages in the room, and every monkey had regular access to a play cage.

Experimental Procedures

The experimental sessions were conducted in a darkened, sound-attenuated and RF-shielded room. Throughout the session, the monkeys were seated in a primate chair with their head restrained. They viewed a 17-inch CRT screen (1024 × 800 pixels, 100 Hz refresh rate) placed 57 cm from the monkeys’ eyes. Eye movements were recorded at 1000 Hz using an optometric system (Eyelink I, SR Research). To measure licking behavior, a reward delivery spout was placed a few millimeters in front of the monkeys’ mouths. Receipt of liquid reward required extrusion of the tongue to the delivery spout, or the liquid reward would drop to the floor. Licking behavior was measured by using an infrared laser beam set between the monkey’s lips and the reward delivery spout. The interruption of this laser beam thereby demarcated anticipatory and consummatory approach behaviors to obtain reward.

Visual stimuli and the behavioral task were under the control of Expo software running on a dedicated Apple computer. Neuronal data, eye movements and licking behavior were stored using the Plexon Omniplex system (Plexon, Inc.). Synchronization between the Expo and Plexon systems was achieved by timestamps sent from Expo during each trial to the Omniplex system. Data collection and analysis were not performed blind to the conditions of the experiments. However, all well-isolated neurons were kept, and we did not choose the type of neurons we recorded during data acquisition based on their selectivity to the task events.

Behavioral Task

Animals performed a trace-conditioning task where a conditioned stimulus (CS) predicts delivery of an unconditioned stimulus (US); the task required fixation and actual reward receipt depended upon licking behavior. The 2 monkeys used in this study had previously been trained on a task using CS and US presentations, but the prior experience did not involve the presentation of social stimuli of any kind. The two monkeys experienced only 2 sessions on the specific task described below before recording sessions commenced.

In the present experiments, monkeys performed a trace conditioning task in which either the presentation of a fractal image or monkey face image predicted the delivery of reward. Fractal image CS and monkey face image CS trials were presented in separate blocks of trials. Monkeys initiated a trial by centering gaze on a fixation point (FP) at the center of the screen. The radius of the fixation window was 5 degrees. The average fixation position was not different between the fractal and social images, indicating that visual scanning of the images did not differ for the fractal vs. face images during fixation (Kruskal-Wallis test, p>0.05). Following 0.4 sec of fixation, we presented an image (CS) under the FP; images were 15 degrees square. Monkeys were required to maintain fixation for an additional 0.4 sec before FP disappearance. The CS remained on the screen for an additional 1 sec, providing a free viewing epoch. After image extinction, a 0.75 sec delay ensued before delivery of the unconditioned stimulus (US). The inter-trial interval (ITI) was 1 sec for all trial types. Failure to engage or maintain fixation for the entire 0.8 sec epoch led to the presentation of a black screen for a 1000 ms timeout followed by the ITI, and then trial repetition. All trials were randomly interleaved within a block.
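The trial timeline described above can be restated as event times relative to CS onset. The following is a minimal sketch of that arithmetic only; the durations come from the task description, while the event names are illustrative labels and not identifiers from the original task code.

```python
# Trial event times in milliseconds, relative to CS (image) onset.
# Durations are taken from the task description; names are illustrative labels.
FIXATION_BEFORE_CS_MS = 400  # fixation required before image onset
FIXATION_DURING_CS_MS = 400  # fixation required after image onset
FREE_VIEWING_MS = 1000       # image remains on screen after the FP disappears
TRACE_DELAY_MS = 750         # delay between image offset and US delivery


def trial_timeline():
    """Return a dict mapping event name to time (ms) relative to CS onset."""
    fp_off = FIXATION_DURING_CS_MS
    cs_off = fp_off + FREE_VIEWING_MS
    us_on = cs_off + TRACE_DELAY_MS
    return {
        "fixation_acquired": -FIXATION_BEFORE_CS_MS,
        "cs_on": 0,
        "fp_off": fp_off,
        "cs_off": cs_off,
        "us_on": us_on,
    }
```

Under these durations the image is extinguished 1400 ms after CS onset and the US is delivered 2150 ms after CS onset, so both analysis epochs (100–400 ms and 400–700 ms) fall well before image offset.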

The CSs were either a fractal or social image. Three different fractal images were presented to the viewer monkeys, one associated with 2 drops of liquid reward, one with one drop of reward, and one with no reward. Successful fixation therefore resulted in reward delivery for 2 of the fractals. Social images were pictures of the faces of 8 “viewed” rhesus monkeys (M1 to M8) who were all members of the same group of 10 primates (the 8 viewed monkeys and the 2 viewer monkeys who were the subject of electrophysiological recordings in this study) housed in the same room for more than 5 years. The position of the FP was between the superior lip and the nose of images. Face images were selected to be devoid of emotional expressions, such as lip smacking, aggressive open mouth, anxious bared-teeth grins or ear position. The gaze of the eyes of the face images was not directly toward the viewer monkeys. Successful fixation during trials in which social images were used as CSs resulted in one drop of reward. Note that reward delivery was not contingent on approach behavior to the reward tube, but that failure to approach the reward tube (e.g. with licking) resulted in the reward falling to the floor. Each experiment was composed of a fractal block, followed by a social block, followed by a fractal block, with 5–20 trials per condition per block.

Electrophysiological methods

The activity of single neurons was recorded extracellularly using U and V-probes (Plexon, Inc.) of 24 channels in the amygdala and 16 channels each in OFC and ACC. All the probes had 100 µm spacing between each contact channel. The 3 probes were lowered simultaneously through stainless steel guide tubes by means of a motorized multi-electrode drive (NaN instrument). Analog extracellular signals were amplified, bandpass filtered (250–8000 Hz), and digitized (40000 Hz) using the Plexon Omniplex system (Plexon, Inc.). Single units were isolated offline using the Plexon offline sorter 3.3 based on the 3 principal components of waveforms. Only well-isolated single cells with an adequate refractory period (1.5 ms) were kept. Cross-correlogram and selectivity analyses were performed across units in order to reject duplicate cells recorded on adjacent channels. 196 neurons were recorded in the basolateral amygdala (monkey F: 151 cells; monkey R: 45 cells), 134 in OFC (area 13m and 13l; monkey F: 78 cells; monkey R: 56 cells) and 187 in ACC (monkey F: 117 cells; monkey R: 70 cells; 61%, 21%, and 18% of ACC neurons were recorded in the ventral bank of ACC, area 24c, the dorsal bank of ACC, and the ACC gyrus, area 32, respectively). Different types of neural modulation by social experiences have been previously reported in these different parts of ACC34, but we did not observe differences between these sub-structures. Recording site reconstruction is shown in Supplementary Fig. 4.

Behavioral Analysis

Scoring of social hierarchy by human observer

A human observer scored the social status of each monkey of the colony based on the method described by Zumpe and Michael, which has successfully been applied in several studies23, 38. Aggressive and submissive agonistic behaviors were recorded during a dozen series of 5 to 15 minute observations, each of which was preceded by 5 minutes of habituation. These observations occurred prior to all the recording sessions with the 2 monkeys. Observation sessions were conducted either when 2 monkeys were in nearby cages such that they could interact socially, or when 2 monkeys were placed in chairs positioned to face each other. We scored behaviors that included escaping, lip smacking, chasing and aggressive behaviors such as an open mouth or the shaking/slapping of a cage wall adjacent to the other monkey. A submissive behavior was recorded as a win for the other monkey. When two monkeys were seated in primate chairs facing each other, we also conducted a food competition test. A piece of food was placed 2 to 4 consecutive times on a small plate between the 2 monkeys. Each time one of the monkeys grabbed the food, he was scored as the winner51, 52. Other agonistic behaviors (described above) were also scored during this test. If an aggressive behavior by one monkey induced a submissive behavior by another monkey, only the aggressive behavior was scored. Because grooming and mounting have no necessary relationships with dominance53, these behaviors were not classified as agonistic (Table 1 and Supplementary Table 1).

Analysis of behavior during electrophysiology experiments

All data analysis programs were written in MATLAB (Mathworks Inc, Natick, MA) and used its statistical toolbox. Since preliminary analyses did not reveal substantial differences between the patterns of neuronal activity, the oculomotor behavior and the valuation of the social hierarchy between the two monkeys, we conducted all subsequent analyses on data pooled from all recording sessions in the two viewer monkeys.

The assessment of social hierarchy when monkeys view face images

A social status index was created that characterized how the 2 experimental monkeys assessed the social status of the 8 monkeys whose faces they viewed. This index was computed using the oculomotor behavior of the 2 recorded (“viewer”) monkeys. The index was constructed from 4 measures, which were weighted equally: 1) trial completion rate for each viewed face image (successful completion of fixation); 2) the proportion of time a viewer monkey spends looking at the image of a monkey’s face during the last 1/3 of the free viewing interval; 3) the proportion of errors that are due to broken fixation during viewing of a monkey face, as opposed to breaking fixation before image presentation or not engaging fixation at all; and 4) the proportion of time during the last 1/3 of the free viewing interval that the monkey views the eyes of a face image divided by the time the subject spends looking at other parts of the same image. The social status index was computed as:

where A, B, C, and D are measures #1, 2, 3 and 4 described above.

In general, these measures of social status exploit the fact that monkeys tend to prefer viewing pictures of dominant monkeys40, 54, 55 (measures 1 and 2), but that viewing of a dominant monkey face can be more arousing, leading to more frequent reflexive broken fixations (measure 3). These broken fixations could reflect arousal or other motivated behaviors (e.g. gaze shifting) related to the social status of a viewed face. In support of this notion, measure 3 is strongly anti-correlated with propensity to re-engage fixation on the next trial after this type of error (r=−0.90, p = 0.002, Pearson's linear correlation test (two-sided)), suggesting that these broken fixations may not be volitional and instead may simply be automatic responses due to arousal. Finally, we employ eye gaze directed towards the eyes as a measure because such gaze is a threat in the primate world, and therefore there is a tendency not to look at the eyes of a dominant monkey56.

Analysis of Oculomotor Metrics

We also analyzed oculomotor metrics that included the number of saccades or micro-saccades, and the amplitude and orientation of these saccades during two time epochs: 100 – 400 ms after CS onset (when fixation was required) and 400–700 ms after CS onset (the first 300 ms of the free viewing epoch). Oculomotor parameters were examined for each of the 8 viewed monkey face images to determine if they varied as a function of the viewed monkey identity.

Statistical methods

No statistical methods were used to pre-determine sample sizes, but our sample sizes are similar to those reported in previous publications (e.g.41). Data distribution was assumed to be normal but this was not formally tested for all the analyses. Importantly, the decoder does not assume any normality in the data distribution. Behavioral analyses were carried out using the Kruskal-Wallis test for main and interaction effects between groups and post-hoc comparisons (Dunn’s multiple comparison test) or the Wilcoxon rank sum test, when appropriate. We used two-sided paired t-tests and Fisher's r to z transformation test to test for differences in neural activity between conditions, and Pearson's linear correlation test (two-sided) to compute linear correlation coefficients (see below).
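For readers unfamiliar with Fisher's r to z transformation test, the following is a minimal sketch of one common form of it, which compares two correlation coefficients obtained from independent samples. The text does not state which correlations were compared or whether the independent-samples form was the one used, so the function name, its arguments, and the independence assumption are ours.

```python
from math import atanh, erfc, sqrt


def fisher_r_to_z_test(r1, n1, r2, n2):
    """Compare two Pearson correlations from independent samples.

    Assumption: independent samples of sizes n1 and n2 (each > 3).
    Returns (z, p) where p is the two-sided p-value under a standard normal.
    """
    z1 = atanh(r1)  # Fisher transformation of each correlation
    z2 = atanh(r2)
    standard_error = sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = (z1 - z2) / standard_error
    p = erfc(abs(z) / sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return z, p
```

Identical correlations give z = 0 and p = 1; larger differences between r1 and r2, or larger samples, move z away from zero.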

Analysis of Neurophysiological Data

For all analyses described below, data were combined across monkeys because all key features of the data set were statistically indistinguishable between monkeys. In general, we required at least 5 trials per condition in order to consider a neuron for any analysis described below.

Single neuron analysis

The analysis of neural data largely focuses on a time epoch extending from 100 – 400 ms after CS onset, or on a time epoch spanning 400–700 ms after CS onset. The first time epoch was selected due to the known response latency of neurons in amygdala, OFC and ACC to visual stimuli of approximately 100 ms, and due to the fact that the fixation requirement terminated 400 ms after CS onset. The second time epoch was of the same length and corresponded to the first 300 ms of the free viewing epoch.

Normalization of neural activity

For some analyses, we normalized neural activity before averaging across neurons. In this case, for each session, the firing rate (FR) for different conditions was normalized with respect to baseline activity measured during the second half of the inter-trial interval (ITI), a time interval chosen because fixation point presentation can induce a visual response and a signal related to state value in the amygdala57:

FRn = (FRi − mu) / sd

where FRn represents the normalized FR for one condition in one epoch, FRi is the absolute FR for that condition in the same epoch, and mu and sd are the mean and standard deviation of the spike count across trials during the baseline interval.
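The normalization described above is a z-score of each condition's response relative to baseline activity. A minimal sketch follows; it assumes that the response and the baseline values are expressed in the same units and that the sample (n − 1) standard deviation is used, neither of which is specified in the text. The function and argument names are illustrative.

```python
from statistics import mean, stdev


def normalize_firing_rate(fr_condition, baseline_trials):
    """Z-score one condition's response against per-trial baseline activity.

    fr_condition: response for one condition in one epoch.
    baseline_trials: per-trial baseline values (second half of the ITI).
    Assumption: both are in the same units; sample standard deviation is used.
    """
    mu = mean(baseline_trials)
    sd = stdev(baseline_trials)
    if sd == 0:
        raise ValueError("baseline has zero variance; cannot normalize")
    return (fr_condition - mu) / sd
```

For example, with baseline values of 2, 4 and 6 (mean 4, standard deviation 2), a response of 10 is normalized to 3.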

Linear regression analysis quantifying reward value and social hierarchy tuning

Individual cells were characterized according to their tuning to reward value and social hierarchy in the following way. In order to quantify the tuning to the reward predicted by the fractals, the firing rate of each neuron during a time interval of interest, FR, was fitted to the value of the fractals V (the amount of reward predicted by a given fractal) in accordance with the linear regression:

FR = β0 + βfrac · V

Notice that we obtain one regression coefficient βfrac per cell (and per considered time interval). Analogously, we quantify neural tuning to social hierarchy by fitting the firing rate FR of each neuron with the linear regression:

FR = β0 + βsoc · R

where β0 is an intercept term and R indicates the rank of a given monkey face image in the social hierarchy as derived from the social status index (see above).

Correlations between regression coefficients (either of the same β computed in different time epochs, or between βfrac and βsoc in the same time epoch) quantify the consistency of the neural tuning (across time epochs, or between non-social and social value, respectively). This correlation was calculated by computing the Pearson’s correlation coefficient r between βfrac and βsoc for the whole population of recorded cells (or for sub-populations of cells, as indicated in the text).
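The two steps described in this section, a per-cell regression slope followed by a correlation of slopes across cells, can be sketched as follows. This is an illustration under the assumption of ordinary least squares with an intercept; the function names are ours and the original MATLAB routines are not reproduced here.

```python
from math import sqrt


def ols_slope(x, y):
    """Least-squares slope of y on x (with intercept), e.g. FR on V or FR on R."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx


def pearson_r(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((ai - mean_a) * (bi - mean_b) for ai, bi in zip(a, b))
    var_a = sum((ai - mean_a) ** 2 for ai in a)
    var_b = sum((bi - mean_b) ** 2 for bi in b)
    return cov / sqrt(var_a * var_b)


def tuning_consistency(beta_frac_per_cell, beta_soc_per_cell):
    """Correlation across cells between reward tuning and rank tuning."""
    return pearson_r(beta_frac_per_cell, beta_soc_per_cell)
```

In use, `ols_slope` would be called once per cell and epoch with the per-trial reward amounts (or ranks) as `x` and firing rates as `y`, and the resulting lists of slopes passed to `tuning_consistency`.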

Population Decoding

We used a standard linear classifier for the population decoding (see e.g.41). The decoding algorithm was based on a population decoder trained on pseudo-simultaneous population response vectors58. The components of these vectors corresponded to the spike counts of the recorded neurons in specific time bins computed in the following way. The activity of each neuron in every trial was aligned to CS onset, the event that in the analysis defines time zero. Within each trial we then computed the spike count over time bins. All analyses were then carried out independently in every time bin as explained below. For every neuron and every time bin, we z-scored the distribution of spike counts across all trials and all conditions (the mean and standard deviations used were always computed on the training data, see below). Given a task condition c (for instance, the fractal associated with large reward) and a time bin t (for instance, between 100 and 400 ms after CS presentation) we generated pseudo-simultaneous population response vectors by sampling, for every neuron i, the z-scored spike count in a randomly selected trial in condition c, that we indicate by nci(t). This procedure resulted in the single trial population response vector nc(t) = (nc1(t), nc2(t),…, ncN(t)), where N is the number of recorded neurons in the area under consideration.
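The construction of a pseudo-simultaneous population response vector can be sketched as below. The data layout (one dictionary per neuron, mapping a condition label to that neuron's z-scored spike counts in a given time bin) is an assumption made for illustration, as are the function and variable names.

```python
import random


def pseudo_population_vector(trials_by_neuron, condition, rng):
    """Build one pseudo-simultaneous population vector for a condition.

    trials_by_neuron: list with one entry per neuron; each entry maps a
        condition label to a list of z-scored spike counts (one per trial)
        for the time bin under analysis. This layout is hypothetical.
    rng: a random.Random instance, so that sampling is reproducible.
    Each component is drawn from a randomly selected trial of that neuron,
    independently across neurons.
    """
    return [rng.choice(neuron[condition]) for neuron in trials_by_neuron]
```

Because neurons were largely recorded in different sessions, each component is sampled independently, which is what makes the resulting vector "pseudo-simultaneous".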

Training and testing of the population decoder

The decoder analysis comprises a training phase for which (unless otherwise stated) we used 75% of the trials, and a testing (or evaluation) phase done over the 25% of trials that were held out from training. Training and testing were repeated 1000 times over different random partitions of the trials into training and test trials. We then report the mean performance and the associated confidence interval obtained from the resulting empirical distribution of decoding accuracy.
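The repeated 75%/25% partitioning can be sketched as follows. The text specifies only a "standard linear classifier", so a nearest-centroid rule (a linear boundary halfway between the two class means) is used here purely as a stand-in; it is not claimed to be the classifier used in the study. Inputs are assumed to be already z-scored population vectors, and all names are illustrative.

```python
import random


def split_trials(n_trials, train_fraction, rng):
    """Randomly partition trial indices into training and held-out sets."""
    indices = list(range(n_trials))
    rng.shuffle(indices)
    n_train = int(round(train_fraction * n_trials))
    return indices[:n_train], indices[n_train:]


def fit_centroid_classifier(train_a, train_b):
    """Stand-in linear classifier: w.x + b > 0 means class A."""
    dim = len(train_a[0])
    mean_a = [sum(x[i] for x in train_a) / len(train_a) for i in range(dim)]
    mean_b = [sum(x[i] for x in train_b) / len(train_b) for i in range(dim)]
    w = [ma - mb for ma, mb in zip(mean_a, mean_b)]
    midpoint = [(ma + mb) / 2.0 for ma, mb in zip(mean_a, mean_b)]
    b = -sum(wi * mi for wi, mi in zip(w, midpoint))
    return w, b


def predict_is_a(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b > 0


def repeated_holdout_accuracy(class_a, class_b, n_repeats=1000,
                              train_fraction=0.75, seed=0):
    """Return the held-out accuracy of each random train/test partition."""
    rng = random.Random(seed)
    accuracies = []
    for _ in range(n_repeats):
        train_a, test_a = split_trials(len(class_a), train_fraction, rng)
        train_b, test_b = split_trials(len(class_b), train_fraction, rng)
        w, b = fit_centroid_classifier([class_a[i] for i in train_a],
                                       [class_b[i] for i in train_b])
        correct = sum(predict_is_a(w, b, class_a[i]) for i in test_a)
        correct += sum(not predict_is_a(w, b, class_b[i]) for i in test_b)
        accuracies.append(correct / (len(test_a) + len(test_b)))
    return accuracies
```

The mean of the returned list corresponds to the reported mean performance, and its empirical distribution is what a confidence interval would be derived from.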

We discarded neurons with fewer than 5 repetitions in the training stage of the decoding analysis. For standard decoding (training and testing on same type of stimuli) the 2 conditions tested could be either 2 fractal images allowing 3 combinations (Large vs. No Reward, Medium vs. No Reward, Large vs. Medium Reward, i.e. 3-way classifier) or 2 social images (56 combinations, i.e. 8-way classifier). For some analyses, we trained the decoder to discriminate fractals associated with large vs. no reward, and we tested the decoder’s ability to discriminate between any 2 monkey face images.
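The pair counts quoted above follow from simple enumeration: 3 unordered pairs of fractals, and 56 ordered pairs of the 8 face images (28 unordered pairs, each counted in both directions). The condition labels below are illustrative.

```python
from itertools import combinations, permutations

FRACTALS = ["large", "medium", "none"]     # reward associated with each fractal
FACES = [f"M{i}" for i in range(1, 9)]     # viewed monkeys M1 to M8

fractal_pairs = list(combinations(FRACTALS, 2))      # unordered pairs
ordered_face_pairs = list(permutations(FACES, 2))    # (M1, M8) and (M8, M1) both counted
unordered_face_pairs = list(combinations(FACES, 2))  # each pair counted once
```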

We performed the decoding analyses using an equal number of neurons in the 3 brain areas to reject a potential confound in the results due to differences in the number of neurons in the population of each area (Figure 2). This was accomplished by randomly sub-sampling the recorded populations such that decoding was performed on 110 cells in each structure when the training procedure was performed on social images or 131 cells when the training occurred on fractal images (the number of neurons was equal to the number of OFC neurons passing our criterion for inclusion, see Figure 2F). The random subsampling meant that we randomly rejected a new set of excess amygdala and ACC neurons at each iteration of the decoder. For all decoding analyses, similar results were obtained if we did not equate the number of neurons from each brain area and instead included all neurons.
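Equating population sizes by random sub-sampling can be sketched as below, with a fresh subset drawn on every decoder iteration. The neuron identifiers and the function name are hypothetical; the count of 131 is the figure quoted above for training on fractal images.

```python
import random


def subsample_neurons(neuron_ids, n_keep, rng):
    """Draw n_keep distinct neurons at random (without replacement)."""
    if n_keep > len(neuron_ids):
        raise ValueError("cannot keep more neurons than were recorded")
    return rng.sample(neuron_ids, n_keep)


rng = random.Random(0)
amygdala_ids = list(range(196))  # hypothetical identifiers for recorded cells
subset_iteration_1 = subsample_neurons(amygdala_ids, 131, rng)
subset_iteration_2 = subsample_neurons(amygdala_ids, 131, rng)  # new draw per iteration
```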

When we examined decoding performance as a function of time across entire trials, we employed time bins of 300 ms stepped in 100 ms increments across the trial, with training and testing performed on each 300 ms time bin. We also performed decoding analyses focused on two time windows, from 100 – 400 ms after CS onset (during fixation) and from 400 – 700 ms after CS onset (the first 300 ms of free viewing) (see below). For these analyses, we used six 50 ms bins in each of the two 300 ms long epochs. We then located the peak of decoding within these two 300 ms windows (the 50 ms epoch with decoding performance most different from chance), and this peak value is plotted in Fig. 4. In Fig. 4A–C, although all paired combinations of monkey face images have been tested (56 pairs), we present only half of the comparisons as the other half of comparisons have the same results with an opposite sign (e.g. the comparison of M1 vs. M8 = −1*(M8 vs. M1)).
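The two binning schemes and the peak selection can be sketched as follows. The chance level of 0.5 assumes a two-class decoder whose performance is expressed as a proportion correct; that convention, like the function names, is our assumption for illustration.

```python
def sliding_windows(start_ms, stop_ms, width_ms=300, step_ms=100):
    """Windows of width_ms stepped by step_ms that fit within [start, stop]."""
    windows = []
    t = start_ms
    while t + width_ms <= stop_ms:
        windows.append((t, t + width_ms))
        t += step_ms
    return windows


def fine_bins(epoch_start_ms, epoch_stop_ms, bin_ms=50):
    """Non-overlapping bins tiling one analysis epoch."""
    return [(t, t + bin_ms)
            for t in range(epoch_start_ms, epoch_stop_ms, bin_ms)]


def peak_bin(performances, chance=0.5):
    """Index of the bin whose decoding performance is farthest from chance."""
    return max(range(len(performances)),
               key=lambda i: abs(performances[i] - chance))
```

For the 100–400 ms epoch, `fine_bins(100, 400)` yields six 50 ms bins, and `peak_bin` returns a below-chance bin as the peak if it deviates most from chance, which matters here because decoding performance changes sign between the two epochs.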

Justification of the neural windows selection (100–400ms and 400–700ms after image onset)

First, we selected the onset of the first window as 100ms after image onset due to the visual response latencies that have been observed in these brain areas41. This effect can be observed in Figure 2F (right and left panels) where the decoding performance starts to increase approximately 100ms after image presentation. The first time window is merely the remaining time in the fixation interval, which ends 400 ms after image onset. The second time window was set to 400–700ms for several reasons: 1) to mirror the length of the first window; 2) to be able to analyze neural data in an interval of the free viewing epoch where we didn’t observe differences in oculomotor behavior as a function of the viewed monkey face images (the differences in oculomotor behavior occur later, during the last third of the free viewing epoch), thus avoiding a contribution of explicit concurrent behavioral differences in our interpretation of the neural response properties; and 3) due to the fact that we observed a flip in decoding performance around FP extinction during the decoding analysis where we trained on fractal images differing in reward amount and tested the decoder on monkey face images that differed substantially in social rank (Fig. 3 F,I and Fig. 4A). This last analysis found that decoding different from chance occurred in a distinctive manner in each of these time windows, but that in later time intervals during free viewing, decoding performance was largely not different from chance.

Finally, as is evident in Fig. 2F, left panel, the peak of decoding in the 3 structures occurred between 300 and 700ms after image onset (350, 500 and 650ms in AMY, OFC and ACC respectively). This makes us confident that most of the neural signals of interest occur in the 100–700ms interval. Indeed, no clear modulation happens later in the trial (Fig. 3).

Statistical significance and estimate of confidence intervals

As described above, on every iteration of the training and testing procedure, we partitioned the trials into training and testing sets by random sampling without replacement. The average decoding performance, its statistical significance and confidence intervals were estimated by repeating the partitioning procedure 1,000 times59. The confidence intervals shown throughout the population analyses in this work correspond to the 95th percentiles of the distributions obtained by repeated partitioning.
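One way to derive a confidence interval from the empirical distribution of decoding accuracies is sketched below. It takes the central 95% of the distribution (2.5th to 97.5th percentile), which is one reading of the description above and should be treated as an assumption; the function name is ours.

```python
from math import ceil, floor


def central_interval(values, coverage=0.95):
    """Central percentile interval of an empirical distribution.

    Assumption: the interval spans the (1 - coverage) / 2 and
    1 - (1 - coverage) / 2 quantiles, rounded outward to observed values.
    """
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    tail = (1.0 - coverage) / 2.0
    last = len(ordered) - 1
    lower = ordered[floor(tail * last)]
    upper = ordered[ceil((1.0 - tail) * last)]
    return lower, upper
```

Applied to the 1,000 accuracies from repeated partitioning, decoding would be considered different from chance when the chance level falls outside the returned interval.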

Statistical methods summary for figures presenting neural data

Figure 2. Figure 2A–C are PSTHs for individual cells. For Figs. 2 D–E, we computed the percentage of neurons that had a statistically significant change in firing rate during the CS (fractal or face image) presentation during the time epoch spanning 100 to 400 ms after CS appearance (response significantly different from the ITI, p < 0.05, paired t-test). The percentage of neurons depicted in Figure 2D corresponds to the percentage of neurons that exhibited a significant response to at least one of the 8 viewed monkeys ('Monkey'), at least one of the fractals ('Fractal') or at least one of the monkeys and at least one of the fractals ('Both'). Figure 2F depicts decoding performance when using a linear decoder (see above). All neurons are included in this analysis (with at least 5 trials of data per condition). We then equalized the number of neurons in the 3 areas by sub-sampling. In Fig. 2G, neurons were categorized as preferring dominant or submissive monkey face images if their average response was at least 1 Hz greater to M1 than to M8 (dominant-preferring) or to M8 than to M1 (submissive-preferring). Neural responses to M2–M7 during the time epoch 100–400 ms after CS onset were then normalized and averaged across all dominant- or submissive-preferring neurons. A correlation analysis was then performed on the average normalized responses to M2–M7.
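The categorization rule used for Fig. 2G can be sketched as below. It assumes that the 1 Hz criterion is inclusive (a difference of exactly 1 Hz qualifies) and that neurons meeting neither criterion are left uncategorized; both points, and the function name, are our assumptions.

```python
def rank_preference(mean_fr_m1, mean_fr_m8, threshold_hz=1.0):
    """Categorize a neuron from its mean responses (Hz) to M1 and M8.

    Returns "dominant-preferring", "submissive-preferring", or None when the
    difference between the two responses is below the threshold.
    """
    difference = mean_fr_m1 - mean_fr_m8
    if difference >= threshold_hz:
        return "dominant-preferring"
    if -difference >= threshold_hz:
        return "submissive-preferring"
    return None
```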

Figure 3 presents data from a linear decoder used to analyze all neurons in our dataset (see above).

Figure 4A–C presents data from a linear decoder used to analyze all neurons in our dataset (see above). In Figs. 4D–I, correlation analyses are performed to determine whether, for each viewed monkey face image, there is a relationship between its social index score (see behavioral analysis methods) and the average decoding performance for distinguishing that face image from each of the other 7 face images. This analysis is performed in 2 different time epochs: 100–400 ms after CS onset (Figs. 4D–F) and 400–700 ms after CS onset (Figs. 4G–I), with the peak decoding performance in a 50 ms bin presented (see the above methods description for the decoding analyses). All neurons are included in the analysis.
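A minimal sketch of the per-face correlation is given below. It assumes that pairwise decoding performance has already been collected into an 8 × 8 matrix (one entry per pair of face images) and that a Pearson correlation is used; the function name and the matrix layout are illustrative assumptions, not the original code.

```python
import numpy as np

def face_decoding_vs_social_index(pairwise_acc, social_index):
    """pairwise_acc: (n, n) decoding performance for each pair of face images.
    social_index: (n,) social index score of each viewed monkey.

    Returns the mean performance for distinguishing each face from the
    other n - 1 faces, and its Pearson correlation with the social index.
    """
    acc = np.asarray(pairwise_acc, dtype=float)
    n = acc.shape[0]
    # Ignore the diagonal: a face is never decoded against itself
    masked = np.where(np.eye(n, dtype=bool), np.nan, acc)
    mean_acc = np.nanmean(masked, axis=1)
    r = np.corrcoef(np.asarray(social_index, dtype=float), mean_acc)[0, 1]
    return mean_acc, r
```

The same function would be applied separately to the matrices obtained in each of the two time epochs.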

Supp. Figure 5 depicts example PSTHs constructed from the response of 2 different individual neurons.

Supp. Fig. 6 depicts a correlation analysis that characterizes the relationship between the difference in social rank of any two face images and the average decoding performance for distinguishing between those face images, an analysis that captures how decoding performance depends upon social ‘distance’.
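The dependence of decoding performance on social 'distance' can be sketched in the same spirit. The example assumes a symmetric matrix of pairwise decoding performance and uses the absolute difference in rank as the distance; the function name and the use of a Pearson correlation are assumptions.

```python
import numpy as np

def decoding_vs_rank_distance(pairwise_acc, ranks):
    """pairwise_acc: (n, n) symmetric matrix of pairwise decoding performance.
    ranks: (n,) social rank of each face image (e.g. 1..8).
    """
    acc = np.asarray(pairwise_acc, dtype=float)
    ranks = np.asarray(ranks, dtype=float)
    i, j = np.triu_indices(len(ranks), k=1)   # each unordered pair counted once
    distance = np.abs(ranks[i] - ranks[j])    # difference in social rank
    pair_acc = acc[i, j]
    r = np.corrcoef(distance, pair_acc)[0, 1]
    return distance, pair_acc, r
```

With 8 face images this yields 28 pairs, each contributing one point to the correlation.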

In Supp. Fig. 7, we selected neurons with Beta coefficients significantly different from 0 in either of the two time epochs (100–400 ms or 400–700 ms after CS onset) when analyzing trials with fractals (A) or face images (B) (p < 0.05 using the linear regression analysis for single cells described above). We then used a correlation analysis to characterize the relationship between Beta coefficients for the same type of image presented (fractal or face image) in the two time epochs.

In Supp. Fig. 8, we categorized neurons as preferring dominant or submissive monkey face images by using the sign of the Beta coefficient from the linear regression analysis applied to data from the first time epoch, 100–400 ms after CS onset (see above). We did not impose a statistical threshold as an inclusion criterion in this analysis. We then asked whether Beta coefficients for fractal images and face images from each time epoch were correlated with each other.

In Supp. Fig. 9, for the groups of neurons identified in Fig. 2G (low- or high-rank-preferring neurons), we calculated the average firing rate during fractal image presentation in the 1st analysis epoch (100–400 ms after CS onset).

In Supp. Fig. 10A, we categorized neurons as preferring dominant or submissive monkey face images by using the sign of the Beta coefficient from the linear regression analysis for neural activity from the second time epoch, 400–700 ms after CS onset (see above). We did not impose a statistical threshold as an inclusion criterion in this analysis. We then asked whether Beta coefficients for fractal images and face images from each time epoch were correlated with each other. Supp. Fig. 10B–C shows the average normalized activity during fractal image presentation in the 2nd analysis epoch (400–700 ms) for neurons that prefer dominant or submissive monkey face images (as in Fig. 2G).

In Supp. Fig. 11, to compute the population PSTHs during the fractal and social trials, we partitioned neurons into two groups based on the preference of each neuron for Large or No Reward fractal images (unpaired t-test, p < 0.05) during the 1st epoch and 2nd epoch.

Supp. Table 2 reports the number and percentage of neurons with a significant regression factor (p < 0.05) for social and fractal images in each of the two analyzed time epochs.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code availability

No custom code has been used. Analyses were performed using MATLAB statistical toolboxes. The analysis code employed is available upon reasonable request.

Acknowledgments

We thank R. Adolphs, E. Issa, and members of the Salzman lab for critical comments on this work and the manuscript. This work was supported by the Fyssen Foundation and the Philippe Foundation (J.M.), and by the Simons Foundation and the National Institutes of Health grant R01 MH082017 (C.D.S.).

Footnotes

Author Contributions: J.M. and C.D.S. designed the study; J.M. performed the experiments; J.M., M.R., and C.D.S. designed analyses; J.M. and M.R. performed analyses; J.M., M.R., and C.D.S. wrote the manuscript.

Competing Financial Interests: The authors declare no conflict of interest.

References

- 1. Thierry B. Covariation of conflict management patterns across macaque species. In: Aureli F, de Waal FBM, editors. Natural Conflict Resolution. University of California Press; Berkeley: 2000. pp. 106–128.

- 2. Bercovitch FB. Social-Stratification, Social Strategies, and Reproductive Success in Primates. Ethology and Sociobiology. 1991;12:315–333.

- 3. Cowlishaw G, Dunbar R. Dominance rank and mating success in male primates. Animal Behaviour. 1991;41:1045–1056.

- 4. Sapolsky RM. The influence of social hierarchy on primate health. Science. 2005;308:648–652. doi: 10.1126/science.1106477.

- 5. Byrne R, Whiten A. Machiavellian intelligence: social expertise and the evolution of intellect in monkeys, apes and humans. Oxford Science Publications; Oxford: 1989.

- 6. Schell A, Rieck K, Schell K, Hammerschmidt K, Fischer J. Adult but not juvenile Barbary macaques spontaneously recognize group members from pictures. Animal Cognition. 2011;14:503–509. doi: 10.1007/s10071-011-0383-8.

- 7. Pfefferle D, Kazem AJ, Brockhausen RR, Ruiz-Lambides AV, Widdig A. Monkeys spontaneously discriminate their unfamiliar paternal kin under natural conditions using facial cues. Curr Biol. 2014;24:1806–1810. doi: 10.1016/j.cub.2014.06.058.

- 8. Tsao DY, Freiwald WA, Tootell RBH, Livingstone MS. A cortical region consisting entirely of face-selective cells. Science. 2006;311:670–674. doi: 10.1126/science.1119983.

- 9. Gothard KM, Battaglia FP, Erickson CA, Spitler KM, Amaral DG. Neural responses to facial expression and face identity in the monkey amygdala. J Neurophysiol. 2007;97:1671–1683. doi: 10.1152/jn.00714.2006.

- 10. Hoffman KL, Gothard KM, Schmid MC, Logothetis NK. Facial-expression and gaze-selective responses in the monkey amygdala. Curr Biol. 2007;17:766–772. doi: 10.1016/j.cub.2007.03.040.

- 11. Rutishauser U, et al. Single-Unit Responses Selective for Whole Faces in the Human Amygdala. Current Biology. 2011;21:1654–1660. doi: 10.1016/j.cub.2011.08.035.

- 12. Azzi JC, Sirigu A, Duhamel JR. Modulation of value representation by social context in the primate orbitofrontal cortex. Proc Natl Acad Sci U S A. 2012;109:2126–2131. doi: 10.1073/pnas.1111715109.

- 13. Tsao DY, Schweers N, Moeller S, Freiwald WA. Patches of face-selective cortex in the macaque frontal lobe. Nature Neuroscience. 2008;11:877–879. doi: 10.1038/nn.2158.

- 14. Watson KK, Platt ML. Social signals in primate orbitofrontal cortex. Curr Biol. 2012;22:2268–2273. doi: 10.1016/j.cub.2012.10.016.

- 15. Chang L, Tsao DY. The Code for Facial Identity in the Primate Brain. Cell. 2017;169:1013–1028. e1014. doi: 10.1016/j.cell.2017.05.011.

- 16. Amaral D, Price J, Pitkanen A, Carmichael S. Anatomical Organization of the Primate Amygdaloid Complex. In: Aggleton J, editor. The Amygdala: Neurobiological Aspects of Emotion, Memory, and Mental Dysfunction. Wiley-Liss, Inc.; New York: 1992. pp. 1–66.

- 17. Ghashghaei HT, Hilgetag CC, Barbas H. Sequence of information processing for emotions based on the anatomic dialogue between prefrontal cortex and amygdala. Neuroimage. 2007;34:905–923. doi: 10.1016/j.neuroimage.2006.09.046.

- 18. Stefanacci L, Amaral DG. Some observations on cortical inputs to the macaque monkey amygdala: an anterograde tracing study. Journal of Comparative Neurology. 2002;451:301–323. doi: 10.1002/cne.10339.

- 19. Paton J, Belova M, Morrison S, Salzman C. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–870. doi: 10.1038/nature04490.

- 20. Morrison SE, Saez A, Lau B, Salzman CD. Different time courses for learning-related changes in amygdala and orbitofrontal cortex. Neuron. 2011;71:1127–1140. doi: 10.1016/j.neuron.2011.07.016.

- 21. Cai X, Padoa-Schioppa C. Neuronal encoding of subjective value in dorsal and ventral anterior cingulate cortex. The Journal of neuroscience : the official journal of the Society for Neuroscience. 2012;32:3791–3808. doi: 10.1523/JNEUROSCI.3864-11.2012.

- 22. Dunbar RIM. The social brain hypothesis. Evolutionary Anthropology. 1998;6:178–190.

- 23. Sallet J, et al. Social network size affects neural circuits in macaques. Science. 2011;334:697–700. doi: 10.1126/science.1210027.

- 24. Noonan MP, et al. A neural circuit covarying with social hierarchy in macaques. PLoS Biol. 2014;12:e1001940. doi: 10.1371/journal.pbio.1001940.

- 25. Bickart KC, Wright CI, Dautoff RJ, Dickerson BC, Barrett LF. Amygdala volume and social network size in humans. Nat Neurosci. 2011;14:163–164. doi: 10.1038/nn.2724.

- 26. Powell JL, Lewis PA, Dunbar RIM, Garcia-Finana M, Roberts N. Orbital prefrontal cortex volume correlates with social cognitive competence. Neuropsychologia. 2010;48:3554–3562. doi: 10.1016/j.neuropsychologia.2010.08.004.

- 27. Kanai R, Bahrami B, Roylance R, Rees G. Online social network size is reflected in human brain structure. Proceedings of the Royal Society B-Biological Sciences. 2012;279:1327–1334. doi: 10.1098/rspb.2011.1959.

- 28. Watanabe N, Yamamoto M. Neural mechanisms of social dominance. Frontiers in neuroscience. 2015;9:154. doi: 10.3389/fnins.2015.00154.

- 29. Kumaran D, Melo HL, Duzel E. The emergence and representation of knowledge about social and nonsocial hierarchies. Neuron. 2012;76:653–666. doi: 10.1016/j.neuron.2012.09.035.

- 30. Zink CF, et al. Know your place: neural processing of social hierarchy in humans. Neuron. 2008;58:273–283. doi: 10.1016/j.neuron.2008.01.025.

- 31. Ligneul R, Obeso I, Ruff CC, Dreher JC. Dynamical Representation of Dominance Relationships in the Human Rostromedial Prefrontal Cortex. Curr Biol. 2016;26:3107–3115. doi: 10.1016/j.cub.2016.09.015.

- 32. Kumaran D, Banino A, Blundell C, Hassabis D, Dayan P. Computations Underlying Social Hierarchy Learning: Distinct Neural Mechanisms for Updating and Representing Self-Relevant Information. Neuron. 2016;92:1135–1147. doi: 10.1016/j.neuron.2016.10.052.

- 33. Chang SW, et al. Neural mechanisms of social decision-making in the primate amygdala. Proc Natl Acad Sci U S A. 2015;112:16012–16017. doi: 10.1073/pnas.1514761112.

- 34. Chang SW, Gariepy JF, Platt ML. Neuronal reference frames for social decisions in primate frontal cortex. Nat Neurosci. 2013;16:243–250. doi: 10.1038/nn.3287.

- 35. Klein JT, Platt ML. Social information signaling by neurons in primate striatum. Curr Biol. 2013;23:691–696. doi: 10.1016/j.cub.2013.03.022.

- 36. Yoshida K, Saito N, Iriki A, Isoda M. Social error monitoring in macaque frontal cortex. Nat Neurosci. 2012;15:1307–1312. doi: 10.1038/nn.3180.

- 37. Klein JT, Deaner RO, Platt ML. Neural correlates of social target value in macaque parietal cortex. Curr Biol. 2008;18:419–424. doi: 10.1016/j.cub.2008.02.047.

- 38. Zumpe D, Michael RP. Dominance Index - a Simple Measure of Relative Dominance Status in Primates. American Journal of Primatology. 1986;10:291–300. doi: 10.1002/ajp.1350100402.

- 39. Shepherd SV, Platt ML. Spontaneous social orienting and gaze following in ringtailed lemurs (Lemur catta). Anim Cogn. 2008;11:13–20. doi: 10.1007/s10071-007-0083-6.

- 40. Deaner RO, Khera AV, Platt ML. Monkeys pay per view: adaptive valuation of social images by rhesus macaques. Curr Biol. 2005;15:543–548. doi: 10.1016/j.cub.2005.01.044.

- 41. Saez A, Rigotti M, Ostojic S, Fusi S, Salzman CD. Abstract Context Representations in Primate Amygdala and Prefrontal Cortex. Neuron. 2015;87:869–881. doi: 10.1016/j.neuron.2015.07.024.

- 42. Dichter GS, Felder JN, Bodfish JW, Sikich L, Belger A. Mapping social target detection with functional magnetic resonance imaging. Soc Cogn Affect Neurosci. 2009;4:59–69. doi: 10.1093/scan/nsn037.

- 43. Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9:545–556. doi: 10.1038/nrn2357.

- 44. Behrens TE, Hunt LT, Rushworth MF. The computation of social behavior. Science. 2009;324:1160–1164. doi: 10.1126/science.1169694.

- 45. Sutton R, Barto A. Reinforcement Learning. MIT Press; Cambridge, Massachusetts: 1998.

- 46. Gariepy JF, et al. Social learning in humans and other animals. Frontiers in neuroscience. 2014;8:58. doi: 10.3389/fnins.2014.00058.