Abstract

Recently, there have been calls to develop ways of using a participatory approach when conducting interventions, including evaluating the process and context to improve and adapt the intervention as it evolves over time. The need to integrate interventions into daily organizational practices, thereby increasing the likelihood of successful implementation and sustainable changes, has also been highlighted. We propose an evaluation model—the Dynamic Integrated Evaluation Model (DIEM)—that takes this into consideration. In the model, evaluation is fitted into a co‐created iterative intervention process, in which the intervention activities can be continuously adapted based on collected data. By explicitly integrating process and context factors, DIEM also considers the dynamic sustainability of the intervention over time. It emphasizes the practical value of these evaluations for organizations, as well as the importance of their rigorousness for research purposes. © 2016 The Authors. Stress and Health published by John Wiley & Sons Ltd.

Keywords: process evaluation, organizational interventions, evaluation framework, implementation outcomes, occupational health

Introduction

The organizational change literature distinguishes between episodic and continuous change. Continuous change is emerging, ongoing and endless, involving constant modifications and stressing adaptability (Weick & Quinn, 1999). It can be seen as cumulative processes of small changes. Episodic changes, in contrast, are intentional, static (the content of the change stays constant), infrequent, interruptive, goal‐seeking and with a clear beginning and end (Weick & Quinn, 1999). Organizational interventions are planned actions designed to reach relatively large groups of individuals in a relatively uniform way by changing the way work is designed, organized or managed (cf. Nielsen, Taris, & Cox, 2010). They have traditionally been approached as episodic changes, set up as time‐limited projects with a separate, intervention‐specific organization (steering groups and change agents) and activities (e.g. screening, action planning and implementation). However, more dynamic organizational interventions have recently begun to be developed, emphasizing participatory approaches and integration of the intervention in organizational structures. We argue that in these types of interventions the change process becomes continuous rather than episodic, which has implications for how the interventions are evaluated.

During the last decade, it has been advocated that integration between organizational occupational health (OH) interventions, strategic management and everyday organizational practices may be a way to fit the intervention into the logic of the organizational system (Bauer & Jenny, 2012; Nielsen, Randall, Holten, & Gonzalez, 2010; Nielsen et al., 2010; von Thiele Schwarz, Augustsson, Hasson, & Stenfors‐Hayes, 2015). This approach ensures that the intervention will not become a sidelined, temporary activity (Bauer & Jenny, 2013). The goal of integrated interventions is to make the intervention part of the organization, owned and managed by it (Kristensen, 2005; von Thiele Schwarz & Hasson, 2013). In integrated approaches, the intervention's sustainability is in focus from the outset. Thus, integrated interventions do not always have a clear beginning and certainly not an end, and their activities may evolve and be adapted over time in unpredictable ways.

The recommended approach for organizational interventions is participatory (Lamontagne, Keegel, Louie, Ostry, & Landsbergis, 2007; Nielsen, 2013; Nielsen & Randall, 2012). This means that an organization and its employees cannot be passive recipients of an intervention, but are instead active, to varying degrees, in designing and carrying it out (McVicar, Munn‐Giddings, & Seebohm, 2013). However, participation is a wide and ill‐defined concept that can mean anything from the organization accepting the intervention to the organization driving the change (Kristensen, 2005). In the present paper, we focus on a specific form of participation: co‐creation. Co‐creation entails an interconnected, recursive set of interactions between stakeholders, such as researchers, consultants and organizational representatives (Payne, Storbacka, & Frow, 2008; Prahalad & Ramaswamy, 2000). Through co‐creation, employees and other organizational stakeholders are engaged in creating value rather than having it delivered to them (Payne et al., 2008). In sum, we propose that co‐created, integrated organizational interventions are continuous change processes, which calls for an alternative evaluation framework.

Evaluation of organizational interventions as continuous change

In many frameworks, evaluation is described as the final step of the intervention, and its independence from the intervention is generally stressed (Biron, Gatrell, & Cooper, 2010; Nielsen & Abildgaard, 2013). However, whereas separating the evaluation from the intervention makes it possible to disentangle the effect of the evaluation from that of the intervention, such a separation makes less sense for co‐created and integrated interventions. Therefore, in line with the call by Nielsen (2013) to use evaluation to help organizations improve the intervention process while it is under way, we propose using evaluation for all parts of an organizational intervention: its creation (i.e. design), the change (e.g. its integration into structures and processes) and its evolution over time (i.e. its sustainability).

In line with a developmental approach to evaluation (Patton, 2011), we introduce an evaluation model that supports innovation and change in complex systems by being sensitive to context (i.e. the conditions or surroundings in which the change occurs and that may influence how it plays out) and facilitating real‐time, continuous development loops (Patton, 2006). In this, we use evaluation to continuously improve interventions, as suggested in the dynamic sustainability framework (Chambers, Glasgow, & Stange, 2013). Our proposed model illustrates how data on context, process (defined as how and why the outcomes of an intervention are brought about (Nielsen et al., 2010)) and the intervention itself can be used to iteratively renegotiate the fit between an intervention and the context, to allow both the intervention and the organization to evolve through learning and continuous improvements. Thus, whereas the intervention may have the characteristics of an episodic change when it is first introduced, onwards it is approached as a continuous change.

Aim

This paper presents the Dynamic Integrated Evaluation Model (DIEM), a participatory evaluation model for continuous change interventions that integrates evaluation into an intervention through an iterative process. This model makes four main contributions to the current understanding of intervention evaluation methodology. First, DIEM is designed to create value for both research and practice by establishing a co‐creation process and continuously using data to learn and improve, not only for scientific evaluation. Thereby, it addresses the division between evaluation of organizational interventions on the one hand and achieving practical change on the other (Anderson, Krathwohl, & Bloom, 2001; Biron & Karanika‐Murray, 2014). Second, it goes beyond current models stating that process and context influence interventions (Bauer & Jenny, 2012; Biron & Karanika‐Murray, 2014; Nielsen & Abildgaard, 2013), by integrating process and context into the intervention. This is done both during the planning phase, when stakeholders are engaged in contextualizing the intervention (adapting it to fit the organizational context), and as the intervention is put into practice, when data on process and context are consciously used to improve the intervention as it unfolds. Third, it emphasizes the need to track the footprint of the intervention and makes suggestions for how this can be done. Knowing what has been implemented in practice is denoted implementation outcome, which can be formally defined as the effects of purposive actions to implement new practice. Thus, implementation outcome is the independent variable as it unfolds in practice. We make a fourth contribution by including comprehensive and structured guidance for how implementation outcomes can be assessed. In fields like implementation science (Damschroder et al., 2009; Proctor et al., 2011), measuring implementation outcomes is well established. Aspects of these outcomes have been included in previous evaluation models (Biron & Karanika‐Murray, 2014; Nielsen & Abildgaard, 2013; Nielsen & Randall, 2013; Randall & Nielsen, 2012), but have not been compiled in a taxonomy covering a broader range of such outcomes.

The Dynamic Integrated Evaluation Model

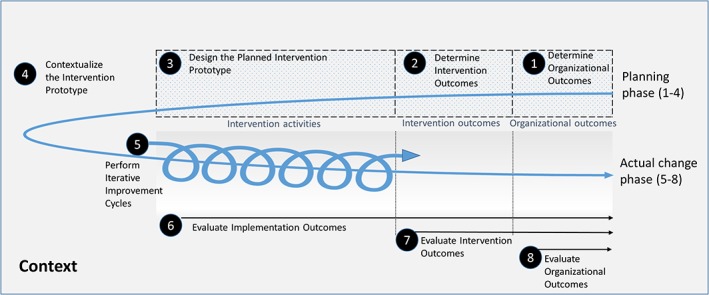

DIEM consists of eight steps (see below and Figure 1). The first four steps take place when the intervention is designed. These steps outline a co‐creation planning process whereby stakeholders are involved in identifying the burning questions in the organization, including what the most important outcomes are, and creating a contextualized intervention prototype. In Steps 5–8, we move to the actual change process and its evolution over time. This involves continuously evaluating how the intervention works in practice using data, first primarily on implementation outcomes and later also on intervention and possibly organizational outcomes. Thus, data are used to improve the intervention, meaning that the intervention prototype designed in Steps 1–4 is not static but rather evolves over time.

Figure 1.

The Dynamic Integrated Evaluation Model (DIEM). The process starts from the left upper side with a planning phase that ends with contextualization of the intervention prototype. Steps 5–8 outline the actual change phase, when the intervention prototype is iteratively tested and further contextualized as needed, guided by implementation, intervention and organizational outcomes.

Planning phase: Co‐creating the contextualized intervention prototype (steps 1–4)

Steps 1 and 2. Determine objectives and outcomes

The first step involves the determination of objectives and outcomes that are important to the organization. We argue that outcomes related to areas such as productivity and performance should be evaluated in conjunction with an OH intervention in order to ensure that outcomes important to the organization are targeted and evaluated. This concerns placing an intended change process in relation to the bigger picture (i.e. what the organization needs to accomplish in order to reach its objectives). In many frameworks this is described in terms of a risk assessment, or screening (Biron & Karanika‐Murray, 2014; Nielsen & Abildgaard, 2013). In DIEM such activities may take place at this stage, but may also be done during the later steps. Instead, DIEM emphasizes that data in the form of existing information and knowledge in the organization may be sufficient for determining what the overall organizational needs are and the direction of the change (i.e. objectives and target outcomes). For example, an organization may use information from annual risk assessments, or the information it usually uses to track how it is performing in relation to organizational objectives, such as performance benchmarks. In fact, such data may be the trigger for initiating a DIEM process in the first place. From this follows that the first two DIEM steps focus on gathering stakeholders so that the knowledge and data they have can be shared (and gaps in knowledge and data can be identified and later be added as an intervention activity), with the aim of reaching a shared understanding of the need for change and intervention objectives.

After the overall objectives are agreed on, these need to be operationalized into outcomes. We suggest that, in general, these outcomes should reflect both organizational and employee objectives. Considering multiple outcomes serves several purposes. It builds commitment among key organizational stakeholders by showcasing the ability of the intervention to meet different stakeholders' objectives (von Thiele Schwarz & Hasson, 2013). It may also decrease the risk of unintended consequences associated with changing one part of a system where different parts are interrelated (Bauer & Jenny, 2013; Semmer, 2006). This motivates measuring important outcomes even when they are not directly targeted by the intervention, for example measuring productivity along with safety outcomes in a safety intervention to ensure that the intervention does not have an adverse effect on productivity (von Thiele Schwarz, Hasson, & Tafvelin, 2016). In essence, considering multiple outcomes already in the intervention's design phase is a way to plan for sustainability.

Once the objectives and organizational outcomes have been determined, the second step is to determine the intervention outcomes; that is, the target and intermediate outcomes that are directly related to the intervention. These outcomes act like a bridge between the intervention and the organizational outcomes. Since the organizational outcomes determined in the first step are often distal, requiring long follow‐up times and large sample sizes, more proximal intervention outcomes provide important information about whether the result of the intervention is moving in the right direction (towards the more distal outcomes). In fact, if a relationship between intermediate and more distal outcomes has been theoretically and empirically established, for example the link between low job autonomy and adverse health events, measuring effects on intermediate outcomes may be sufficient (Biron et al., 2010).

Step 3. Design the planned intervention prototype

Theories of change are helpful in describing change processes, i.e. why the intervention activities have the proposed effect on the outcomes (Blamey & Mackenzie, 2007). However, with a few recent exceptions, there is a lack of such theories (Biron & Karanika‐Murray, 2014; Nielsen, 2013). We propose that programme logic (e.g. programme theory or logic model) can be used prior to the intervention to describe the potential relationship between intervention activities and a chain of intermediate and target outcomes, i.e. making the theory of change explicit and testable (Olsen, Legg, & Hasle, 2012; Rogers, 2008). The co‐creation process is essential for creating a programme logic. It helps establish a common view on the intervention activities and the hypothesized mechanisms and outcomes involved. Stakeholders who are involved in creating the programme logic are also better prepared to understand and accept conclusions drawn regarding the intervention's effectiveness (Blamey & Mackenzie, 2007; Leviton, Khan, Rog, Dawkins, & Cotton, 2010).

The traditional use of programme logic involves starting with an evidence‐based intervention and moving forward from the core activities through intermediate and increasingly distal outcomes (Saunders, Evans, & Joshi, 2005). In contrast, the starting point in DIEM is the outcomes, because achieving the intended outcome (e.g. increased job resources) is more important than implementing a specific intervention. This is similar to how programme theory is used in the field of quality improvement science (Reed, McNicholas, Woodcock, Issen, & Bell, 2014). By reasoning around ‘what will make this happen’ and ‘how will this happen’, stakeholders brainstorm to identify what current activities are done in relation to reaching the targeted outcome(s), what activities need to be increased or added and what activities need to be reduced or abolished. The stakeholders then prioritize among the activities, based on which ones they believe will (1) have the greatest impact; (2) be the most feasible to change; and (3) have the greatest positive spillover (Michie, Atkins, & West, 2015).

After agreeing on a few prioritized activities, these are then specified in terms of: What? When? Where? How often? and Involving Whom? (Michie et al., 2015). This is similar to action planning, which is part of the current evaluation frameworks (Randall & Nielsen, 2012), but uses a backward‐moving programme logic whereby outcomes are determined first and what needs to be changed in terms of activities thereafter, rather than starting with a problem or an activity. In the next steps the intervention prototype is contextualized and subsequently tested and improved.

Step 4. Contextualize the intervention prototype

Since organizational interventions are influenced by contextual and process‐related factors, the importance of understanding what works for whom under what circumstances (i.e. the influence of context and process) is highlighted in the literature (cf. Nielsen & Abildgaard, 2013). However, information about contextual factors is still primarily used to make sense of an intervention's outcomes after the fact, increasing the risk of drawing conclusions based on spurious findings. It also misses opportunities to use the knowledge to maximize the intervention's impact. Similarly, factors related to the process by which interventions are implemented, including activities like distribution of information and participatory workshops, are generally not described and evaluated as potential active ingredients with independent or moderating effects on outcomes, despite research showing that this is indeed the case (Nielsen & Randall, 2009; Nielsen, Randall, & Albertsen, 2007; Randall, Nielsen, & Tvedt, 2009). Overall, these shortcomings make replication and accumulation of knowledge across studies harder.

In DIEM, context and implementation factors that may influence the effectiveness of the intervention are managed up front by making adjustments to the intervention. Using contextual data in this way (e.g. increasing low readiness before an intervention rather than using it to explain lack of success later) is in line with recent suggestions (Nielsen, 2013). DIEM expands on this by stressing that contextual and implementation factors should be part of the intervention and evaluated. In this way, the effectiveness of an intervention embedded in a specific context can be studied based on testing predefined hypotheses (i.e. the programme logic), thus avoiding post‐hoc explanations. The aim of contextualization is twofold: to offer as complete a description of the independent variable as possible, and to design the most effective intervention considering the current context.

To contextualize the intervention, the starting point is the uncontextualized programme logic (from Step 3). Organizational stakeholders often have insight into what the influential factors are, making co‐creation essential. Stakeholders are engaged in conversations about whether the programme logic holds up in their local context, and reflect on what potential contextual and implementation factors may influence the relationship between intervention and outcomes. We suggest critically thinking through how each intervention activity will play out in practice, as well as what may interfere with it. This may lead to modifications, additions or omission of planned activities in order to make the intervention work in the current context. In essence, this means that the stakeholders identify potential moderators in the relationship between intervention and outcomes up front, and use this information to design supporting activities (Blamey & Mackenzie, 2007; Dahler‐Larsen, 2001). This may for example involve adding activities to another organizational level in order to maximize the intervention's impact; for instance, adding individual assertiveness training to a group‐level activity aimed at increasing job autonomy. In DIEM, all these supporting activities should be added to the intervention prototype, and subsequently evaluated.

Two interlinked approaches to contextualization merit specific attention. First, managerial activities may be added to support implementation of employee‐level intervention activities. Activities at the managerial level are motivated by managers' central role in the organization, which makes them potential makers or breakers of interventions (Nielsen, 2013). This may, for example, entail training in intervention‐specific leadership behaviours. Second, as outlined above, integration of the intervention with existing structures and processes is central for sustainability. Since integration generally involves using local staff, it also helps to integrate the know‐how into the organization, thereby avoiding knowledge ‘walking away’ with external agents. Integration can be regarded as a way to improve intervention–organization fit by making efficient use of resources by detecting and aligning conflicting goals, activities and processes (Randall & Nielsen, 2012). Changing existing routines to align the intervention with other processes is one integrative approach, including updating group and individual performance evaluation criteria so that they are aligned with the intervention.

One aspect of integration relates to the establishment of intervention‐specific steering groups. These are by definition temporary, and are often less well positioned to ensure the intervention activities align with other structures and processes. We suggest instead, when possible, that responsibilities in existing roles and groups be expanded (for example, management teams). This entails adding formal responsibilities to the job descriptions of local staff, for example to include monitoring and follow‐up, and having this as part of formal meeting agendas. In this way, sustainability beyond the project time is in focus. It also means that the performance of intervention‐related tasks, e.g. that they are attended to as planned and not down‐prioritized, is managed in the same way as other job tasks.

Actual change phase: continuous evaluation to achieve target outcomes

Step 5. Perform iterative improvement cycles

The literature on organizational interventions often stresses that such interventions are ongoing, cyclic processes, but the iterations are generally long, extending over years rather than days or weeks (Nielsen, Randall, & Christensen, 2010). We argue that one reason for these long cycles is that the evaluation approach is primarily set up to create value from a research perspective. From a practice perspective, evaluation may be more useful when conducted in shorter cycles and used for self‐reflection and continuous development (Jenny et al., 2014). This is a fundamental part of DIEM. Thus, after having planned the contextualized intervention prototype, the next step is to launch it and start the process of iteratively improving it, based on data. Steps 5 to 8 are interlinked but are presented separately for clarity.

Drawing on improvement science (Batalden & Stoltz, 1993) and recent developments in implementation and evaluation science (Chambers et al., 2013; Patton, 2011), DIEM builds on employing continuous, rapid improvement cycles. The idea is to approach interventions as a continuous, evolving change process, using data on implementation and context to improve the prototype so that the likelihood of the outcomes being achieved is increased. In this, sustainability is supported through a continual contextualization of the programme logic. In practice, starting with smaller groups is helpful, as the changes to the prototype are often greater in its first iterations. This may be thought of as pilot testing, although the change from prototype to pilot test to full scale is gradual in DIEM.

To monitor the change as it unfolds, data illuminating how the contextualized prototype is working are needed, as are data on process, context and intermediate intervention outcomes. Needs for improvement are identified based on the data, resulting in a revised plan that is put to test in iterative improvement cycles. Thus, Steps 5 and 6 are interlinked. Approaches such as Plan‐Do‐Study‐Act (PDSA) and agile methodology are well established in fields as diverse as chemistry, healthcare improvement and software development. We do not propose a DIEM‐specific approach to continuous improvements. Rather, in line with the emphasis on integration, we suggest that the organization's existing structures and processes for managing continuous improvements, such as Kaizen systems, be employed, as long as the system allows quick cycles (days or weeks rather than months or years). If no system is available, a simple solution, such as a paper‐and‐pen graph to monitor a few central data points and/or adding follow‐up as a bullet point to the agenda of an existing meeting, may be sufficient. Repeated measurement is more important than use of sophisticated systems.

Using data for improvement has obvious benefits for the organization. From a research perspective, using data for both evaluation and improvement in rapid cycles adds the challenge of researching an intervention (an independent variable) that is not static. However, designs exist that are reasonably well suited to such interventions. For example, a stepped wedge design, whereby the intervention is sequentially rolled out to different groups, may allow for systematic changes in the intervention between steps (Schelvis, Oude Hengel, Burdorf, Strijk, & van der Beek, 2015). Adapted study designs, whereby participants are assigned to different groups retrospectively based on participation rates, for instance, are another alternative (Jenny et al., 2014; Randall, Griffiths, & Cox, 2005). Using advanced statistical modelling to investigate individual trajectories is a third example (Hofmann, Griffin, & Gavin, 2000). Multiple baselines and interrupted time series may also be appropriate (Schelvis et al., 2015). An extension of these approaches, statistical process control, may be worth particular attention. This approach separates common‐cause variation (the natural, stable variation inherent in a certain process) from special‐cause variation (variation that is not typical of the process) (Benneyan, Lloyd, & Plsek, 2003). This has been suggested as a way to overcome some of the challenges of before‐after designs, and as a promising method for evaluating whether process changes are related to outcome changes (Benneyan et al., 2003). It is also valuable from a practical perspective, as it helps organizations visualize, and thus better understand and manage, changes. In sum, using data to learn and improve is an important part of creating sustained, efficient interventions. Therefore, researchers need to use evaluation approaches that do not prevent this.
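To make the distinction between common‐cause and special‐cause variation concrete, the following is a minimal sketch of one widely used statistical process control tool, the individuals (XmR) control chart. It is offered as an illustration only and is not a procedure prescribed by DIEM. The function names and the weekly ratings are hypothetical, and the sketch assumes that a stable baseline period is available and that a single signal rule (a point outside the control limits) is enough for a first look.

```python
from statistics import mean


def xmr_limits(baseline):
    """Return (centre, lower, upper) control limits for an individuals chart.

    The limits are the baseline mean plus/minus 2.66 times the average
    moving range, the conventional constant for XmR charts.
    """
    if len(baseline) < 2:
        raise ValueError("need at least two baseline points")
    centre = mean(baseline)
    # Absolute differences between consecutive measurements.
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    spread = 2.66 * mean(moving_ranges)
    return centre, centre - spread, centre + spread


def special_cause_points(values, lower, upper):
    """Indices of points outside the limits (one simple signal rule)."""
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical weekly self-ratings; illustrative numbers, not study data.
baseline = [3.1, 3.4, 3.0, 3.3, 3.2, 3.5, 3.1, 3.3]
after_change = [3.4, 3.2, 4.6, 4.8, 3.3]

centre, lower, upper = xmr_limits(baseline)
signals = special_cause_points(after_change, lower, upper)
print(f"centre={centre:.2f}, limits=({lower:.2f}, {upper:.2f}), signals={signals}")
```

Points inside the limits are treated as common‐cause variation, whereas flagged points suggest that something atypical happened and may be worth discussing in the next improvement cycle. In practice, additional run rules and a longer baseline would usually be considered.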

Step 6. Evaluate implementation outcomes for improvements

A number of frameworks have been developed to guide process evaluation (Biron & Karanika‐Murray, 2014; Nielsen & Abildgaard, 2013; Nielsen & Randall, 2013). However, even though these frameworks describe iterative intervention processes, they are mostly focused on evaluating episodic change. They do not primarily cover how to evaluate individual intervention activities repeatedly and use the result to improve the intervention over time. In DIEM, data on implementation outcomes are crucial both for understanding what changes actually took place (Kristensen, 2005) and for feeding the rapid improvement cycles, i.e. the developmental aspect.

One of the most comprehensive frameworks for implementation outcomes presents eight factors: Acceptability, Adoption, Appropriateness, Feasibility, Fidelity, Implementation Cost, Penetration and Sustainability (Proctor et al., 2011). Some of these, or analogues, have also been acknowledged in the organizational intervention literature, e.g. perceived fit of the intervention (see Table 1). Table 1 presents a taxonomy for implementation outcomes that suggests what, how and when to measure to inform a dynamic evaluation of organizational interventions. The taxonomy suggests key factors to consider so that each intervention activity can be repeatedly assessed as it is being implemented. Note that not all factors will be applicable to all intervention activities, and additional measures may be needed depending on the intervention's programme logic.

Table 1.

Taxonomy for implementation outcomes

| Outcome | Definition | Source | Sample questions |
|---|---|---|---|
| | | | To what extent: |
| Fit of the intervention | Appropriateness, Suitability, Perceived fit of both the co‐creation process and the planned programme logic to needed change | Biron and Karanika‐Murray (2014); Fridrich, Jenny, and Bauer (2015); Proctor et al. (2011); Randall and Nielsen (2012) | ‐ is the intervention relevant for solving important problems in your organization?<sup>a, b</sup> |
| | | | ‐ does the intervention meet your personal needs?<sup>a, b</sup> |
| Acceptability | Attitudes towards the intervention, satisfaction | Biron and Karanika‐Murray (2014); Fridrich et al. (2015); Proctor et al. (2011); Randall and Nielsen (2012) | ‐ do you look forward to the changes that the intervention will lead to?<sup>a, b</sup> |
| | | | ‐ do you expect the intervention to bring about positive outcomes?<sup>a, b</sup> |
| Direction | Knowledge of what activities to perform and how performance is related to the overall goals of the organization | Frykman et al. (2014); von Thiele Schwarz and Hasson (2013) | ‐ is it clear to you what you should do?<sup>a, c</sup> |
| | | | ‐ is it clear to you how the intervention is related to the organization's overall goals?<sup>a, c</sup> |
| Competence | Knowledge and skills to implement change, and to work in changed structures | Michie et al. (2015); Randall and Nielsen (2012) | ‐ do you have the knowledge and skills needed to participate in co‐creation/make the planned change happen/work as suggested? |
| Opportunity | Perceived time, space, tools and other resources to implement change and to work in changed structures | Biron and Karanika‐Murray (2014); Michie et al. (2015); Nielsen (2013); Randall and Nielsen (2012) | ‐ do you have the opportunity to participate in co‐creation/make the planned change happen/work as suggested? |
| | | | ‐ does the intervention collide with other routines and practices?<sup>b, c</sup> |
| | | | ‐ are you, in general, able to participate in activities without problems?<sup>a, b</sup> |
| Participation: frequency | Actual time spent on intervention activities, exposure | Biron and Karanika‐Murray (2014); Fridrich et al. (2015); Nielsen (2013) | ‐ have the participants spent time on co‐creation and intervention activities?<sup>a, b</sup> |
| Participation: quality | Influence and involvement | Biron and Karanika‐Murray (2014); Fridrich et al. (2015); Nielsen (2013) | ‐ have you been actively involved in co‐creating the intervention?<sup>a</sup> |
| | | | ‐ have you had the opportunity to influence intervention activities?<sup>a, c</sup> |
| Support | Managers', groups' and systems' continuous support | Biron and Karanika‐Murray (2014); Fridrich et al. (2015); Nielsen (2013); Nielsen and Abildgaard (2013) | ‐ have you been given the information and support needed to be able to participate in change activities?<sup>a, b, c</sup> |
| Integration | Level of initiated institutionalization, routinization, anchoring to organization activities | Fridrich et al. (2015) | ‐ have plans been made to integrate intervention activities into regular organizational activities/routines?<sup>a</sup> |
| | | | ‐ have the changes become part of the daily routine?<sup>c</sup> |
| Alterations and deviations | Translation of initial co‐created action plans into actual activities, changes made and reasons for changing initial plans | Biron and Karanika‐Murray (2014); Fridrich et al. (2015); Nielsen and Abildgaard (2013); Proctor et al. (2011) | ‐ are there differences between planned and enacted intervention activities?<sup>b, c</sup> |
| | | | ‐ are changes being made to the planned activities? At what level? Why?<sup>b, c</sup> |

Note. The taxonomy is based on evaluation studies and models of the mentioned authors. These authors, in turn, have based their work on theory, studies and models, to which they refer.

a = During prototyping.

b = During initial implementation.

c = Repeatedly during implementation.

Continuous measurements are central in DIEM. Such measurements can be time‐consuming and costly, which calls for creative and innovative data collection methods. These may include time‐ and event‐based diary data (Iida, Shrout, Laurenceau, & Bolger, 2012), for example short self‐ratings from staff, as in the web‐based tool HealthWatch (Hasson, von Thiele Schwarz, Villaume, & Hasson, 2014). Another example we have used is a verbal version of fixed schedule event diaries (Iida et al., 2012), in which teams were asked about their perceptions of the teamwork after each shift during a teamwork intervention (Frykman, Hasson, Muntlin Athlin, & von Thiele Schwarz, 2014). Simply asking what worked well and what can be done differently (WWDD) can also be used to detect needs for improvement. The benefit is that this question can easily be used at any meeting and can target different time ranges and activities (e.g. this meeting, this programme). Observations are usually time‐consuming, but can be performed by staff or managers as part of their daily routine, for example using checklists (Zohar & Luria, 2003). It is also worth considering more intense data collection on a subset of the population, selected through either purposeful or random sampling.
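As an illustration of the random sampling option mentioned above, the following is a minimal sketch in Python of drawing a simple random subset of staff for more intense data collection. It is not part of DIEM itself; the function name `draw_intensive_subset`, the roster of 40 staff identifiers, the subset size and the seed are all hypothetical choices made for the example.

```python
import random


def draw_intensive_subset(staff_ids, subset_size, seed=None):
    """Return a simple random sample of staff for more intense data collection."""
    if subset_size > len(staff_ids):
        raise ValueError("subset_size cannot exceed the number of staff")
    # A local generator keeps the draw reproducible when a seed is given.
    rng = random.Random(seed)
    return sorted(rng.sample(list(staff_ids), subset_size))


# Hypothetical roster of 40 staff members (illustrative identifiers only).
staff = [f"staff_{i:02d}" for i in range(1, 41)]
subset = draw_intensive_subset(staff, subset_size=8, seed=2016)
print(subset)
```

Fixing the seed makes the selection auditable, which may matter when the sampling needs to be documented for research purposes. Purposeful sampling, by contrast, relies on judgement and is not captured by this sketch.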

Steps 7 and 8. Evaluate intervention and organizational outcomes

In addition to tracking the footprint of the intervention through implementation outcomes, intervention and organizational outcomes also need to be monitored. Since such outcomes are intervention‐specific, we do not present them in detail, but emphasize that the programme logic can be used to determine the appropriate time points, and that continuous data or short time intervals are preferable, so that the rapid improvement cycles can be based on the data. In the spirit of integration and sustainability, we suggest using data that are already collected in the organization, for example on productivity or quality. Using existing data makes data collection cheaper and less invasive (Shadish, Cook, & Leviton, 1991). This is essential for sustainability, as it increases the likelihood that the organization will continue to monitor and wisely develop the intervention over time, based on data. The drawback is that existing data may be flawed, so the ambition to use them needs to be balanced against the data quality required. This balance has been called fitness for purpose, which entails using the correct approach to obtain data of appropriate quality, judged in relation to the purpose of obtaining these data (Cox, Karanika, Griffiths, & Houdmont, 2007).
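To make the idea of short time intervals concrete, the sketch below groups already collected, dated records into weekly means that could feed an improvement cycle. It is an assumption‐laden illustration rather than a prescribed DIEM procedure: the function `weekly_means`, the weekly interval and the example scores are hypothetical, and any real use would depend on the outcome and time points identified in the programme logic.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def weekly_means(records):
    """Group (date, score) pairs by ISO year and week and average each group."""
    by_week = defaultdict(list)
    for day, score in records:
        iso = day.isocalendar()  # (ISO year, ISO week number, ISO weekday)
        by_week[(iso[0], iso[1])].append(score)
    return {week: mean(scores) for week, scores in sorted(by_week.items())}


# Hypothetical existing data, e.g. a quality score logged on some working days.
records = [
    (date(2016, 1, 4), 3),
    (date(2016, 1, 6), 4),
    (date(2016, 1, 8), 5),
    (date(2016, 1, 11), 2),
    (date(2016, 1, 13), 4),
]
weekly = weekly_means(records)
for week, value in weekly.items():
    print(week, value)
```

Weekly aggregation is only one possible interval. Shorter or longer windows trade responsiveness against noise, which is one aspect of the fitness‐for‐purpose judgement described above.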

Conclusions

The DIEM offers support for the entire intervention process: from creating the intervention so that process and context factors are managed up front, to launching it into practice while carefully evaluating implementation outcomes in order to continuously adapt the intervention, process or context with the intervention and organizational outcomes in mind. This is done through a structured co‐creation process involving key stakeholders.

The model has primarily been developed for the evaluation of continuous change organizational interventions, but may also be used to guide the evaluation of implementation outcomes in more traditional, episodic types of interventions. The most crucial contribution of DIEM to organizational intervention evaluation is its use of evaluation as an integrated part of the intervention, aiming to create practical value for the organization while retaining the rigorousness of research approaches.

Conflict of interest

The authors have declared that there is no conflict of interest.

Acknowledgments

This work was supported by the Swedish Research Council for Health, Working Life and Welfare (FORTE) [grant 2012‐0215], the Karolinska Institutet Board of Doctoral Education [KID funding 3‐1818/2013], ERA‐AGE2 (FLARE)/Swedish Council for Working Life and Social Research through a post doc position awarded to Henna Hasson [grant 2010‐1849] and Vinnvård, through a fellowship in Improvement Science awarded to Ulrica von Thiele Schwarz [grant VF13‐008].

von Thiele Schwarz, U. , Lundmark, R. , and Hasson, H. (2016) The Dynamic Integrated Evaluation Model (DIEM): Achieving Sustainability in Organizational Intervention through a Participatory Evaluation Approach. Stress Health, 32: 285–293. doi: 10.1002/smi.2701.

[The copyright line for this article was changed on 14 August 2018 after original online publication]

References

- Anderson, L. W., Krathwohl, D. R., & Bloom, B. S. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom's taxonomy of educational objectives. Boston, MA: Allyn & Bacon (Pearson Education Group).

- Batalden, P. B., & Stoltz, P. K. (1993). A framework for the continual improvement of health care: Building and applying professional and improvement knowledge to test changes in daily work. The Joint Commission Journal on Quality Improvement, 19(10), 424–452.

- Bauer, G. F., & Jenny, G. J. (2012). Moving towards positive organisational health: Challenges and a proposal for a research model of organisational health development. Occupational Health Psychology: European Perspectives on Research, Education and Practice, 126–145.

- Bauer, G. F., & Jenny, G. J. (2013). Salutogenic organizations and change: The concepts behind organizational health intervention research. Dordrecht, Netherlands: Springer Science & Business Media.

- Benneyan, J. C., Lloyd, R. C., & Plsek, P. E. (2003). Statistical process control as a tool for research and healthcare improvement. Quality and Safety in Health Care, 12(6), 458–464.

- Biron, C., & Karanika‐Murray, M. (2014). Process evaluation for organizational stress and well‐being interventions: Implications for theory, method, and practice. International Journal of Stress Management, 21(2), 85–111. DOI: 10.1037/a0033227.

- Biron, C., Gatrell, C., & Cooper, C. L. (2010). Autopsy of a failure: Evaluating process and contextual issues in an organizational‐level work stress intervention. International Journal of Stress Management, 17(2), 135–158. DOI: 10.1037/a0018772.

- Blamey, A., & Mackenzie, M. (2007). Theories of change and realistic evaluation: Peas in a pod or apples and oranges? Evaluation, 13(4), 439–455.

- Chambers, D., Glasgow, R., & Stange, K. (2013). The dynamic sustainability framework: Addressing the paradox of sustainment amid ongoing change. Implementation Science, 8(1), 117.

- Cox, T., Karanika, M., Griffiths, A., & Houdmont, J. (2007). Evaluating organizational‐level work stress interventions: Beyond traditional methods. Work & Stress, 21(4), 348–362.

- Dahler‐Larsen, P. (2001). From programme theory to constructivism: On tragic, magic and competing programmes. Evaluation, 7(3), 331–349.

- Damschroder, L. J., Aron, D. C., Keith, R. E., Kirsh, S. R., Alexander, J. A., & Lowery, J. C. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4(1), 50.

- Fridrich, A., Jenny, G. J., & Bauer, G. F. (2015). The context, process, and outcome evaluation model for organisational health interventions. BioMed Research International, 2015.

- Frykman, M., Hasson, H., Muntlin Athlin, Å., & von Thiele Schwarz, U. (2014). Functions of behavior change interventions when implementing multi‐professional teamwork at an emergency department: A comparative case study. BMC Health Services Research, 14(1), 218.

- Hasson, H., von Thiele Schwarz, U., Villaume, K., & Hasson, D. (2014). eHealth interventions for organizations ‐ potential benefits and implementation challenges. In Burke R., Cooper C., & Biron C. (Eds.), Creating Healthy Workplaces: Stress Reduction, Improved Well‐being, and Organizational Effectiveness. New York, NY: Routledge.

- Hofmann, D. A., Griffin, M. A., & Gavin, M. B. (2000). The application of hierarchical linear modeling to organizational research. Multilevel Theory, Research, and Methods in Organizations, 467–511.

- Iida, M., Shrout, P. E., Laurenceau, J.‐P., & Bolger, N. (2012). Using diary methods in psychological research. In Cooper H., Camic P. M., Long D. L., Panter A. T., Rindskopf D., & Sher K. J. (Eds.), APA handbook of research methods in psychology, Vol 1: Foundations, planning, measures, and psychometrics (pp. 277–305). Washington, DC: American Psychological Association. DOI: 10.1037/13619-016.

- Jenny, G. J., Brauchli, R., Inauen, A., Füllemann, D., Fridrich, A., & Bauer, G. F. (2014). Process and outcome evaluation of an organizational‐level stress management intervention in Switzerland. Health Promotion International, dat091.

- Kristensen, T. S. (2005). Intervention studies in occupational epidemiology. Occupational and Environmental Medicine, 62(3), 205–210.

- Lamontagne, A. D., Keegel, T., Louie, A. M., Ostry, A., & Landsbergis, P. A. (2007). A systematic review of the job‐stress intervention evaluation literature, 1990–2005. International Journal of Occupational and Environmental Health, 13(3), 268–280.

- Leviton, L., Khan, L. K., Rog, D., Dawkins, N., & Cotton, D. (2010). Evaluability assessment to improve public health policies, programs, and practices. Annual Review of Public Health, 31, 213–233.

- McVicar, A., Munn‐Giddings, C., & Seebohm, P. (2013). Workplace stress interventions using participatory action research designs. International Journal of Workplace Health Management, 6(1), 18–37.

- Michie, S. F., Atkins, L., & West, R. (2015). The behaviour change wheel: A guide to designing interventions. Sutton, UK: Silverback Publishing.

- Nielsen, K., & Randall, R. (2009). Managers' active support when implementing teams: The impact on employee wellbeing. Applied Psychology: Health and Well Being, 1(3), 374–390.

- Nielsen, K., Randall, R., & Albertsen, K. (2007). Participants' appraisals of process issues and the effects of stress management interventions. Journal of Organizational Behavior, 28(6), 793–810.

- Nielsen, K. (2013). Review article: How can we make organizational interventions work? Employees and line managers as actively crafting interventions. Human Relations, 66(8), 1029–1050.

- Nielsen, K., & Abildgaard, J. S. (2013). Organizational interventions: A research‐based framework for the evaluation of both process and effects. Work and Stress, 27(3), 278–297. DOI: 10.1080/02678373.2013.812358.

- Nielsen, K., & Randall, R. (2012). The importance of employee participation and perceptions of changes in procedures in a teamworking intervention. Work and Stress, 26(2), 91–111. DOI: 10.1080/02678373.2012.682721.

- Nielsen, K., & Randall, R. (2013). Opening the black box: Presenting a model for evaluating organizational‐level interventions. European Journal of Work and Organizational Psychology, 22(5), 601–617. DOI: 10.1080/1359432x.2012.690556.

- Nielsen, K., Randall, R., & Christensen, K. (2010). Does training managers enhance the effects of implementing team‐working? A longitudinal, mixed methods field study. Human Relations, 63(11), 1719–1741. DOI: 10.1177/0018726710365004.

- Nielsen, K., Randall, R., Holten, A. L., & Gonzalez, E. R. (2010). Conducting organizational‐level occupational health interventions: What works? Work and Stress, 24(3), 234–259. DOI: 10.1080/02678373.2010.515393.

- Nielsen, K., Taris, T. W., & Cox, T. (2010). The future of organizational interventions: Addressing the challenges of today's organizations. Work and Stress, 24(3), 219–233. DOI: 10.1080/02678373.2010.519176.

- Olsen, K., Legg, S., & Hasle, P. (2012). How to use programme theory to evaluate the effectiveness of schemes designed to improve the work environment in small businesses. Work: A Journal of Prevention, Assessment and Rehabilitation, 41, 5999–6006.

- Patton, M. Q. (2006). Evaluation for the way we work. Nonprofit Quarterly, 13(1), 28–33.

- Patton, M. Q. (2011). Developmental evaluation: Applying complexity concepts to enhance innovation and use. New York, NY: Guilford Press.

- Payne, A. F., Storbacka, K., & Frow, P. (2008). Managing the co‐creation of value. Journal of the Academy of Marketing Science, 36(1), 83–96.

- Prahalad, C. K., & Ramaswamy, V. (2000). Co‐opting customer competence. Harvard Business Review, 78(1), 79–90.

- Proctor, E., Silmere, H., Raghavan, R., Hovmand, P., Aarons, G., et al. (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research, 38(2), 65–76.

- Randall, R., Nielsen, K., & Tvedt, S. D. (2009). The development of five scales to measure employees' appraisals of organizational‐level stress management interventions. Work & Stress, 23(1), 1–23.

- Randall, R., Griffiths, A., & Cox, T. (2005). Evaluating organizational stress‐management interventions using adapted study designs. European Journal of Work and Organizational Psychology, 14(1), 23–41.

- Randall, R., & Nielsen, K. (2012). Does the intervention fit? An explanatory model of intervention success and failure in complex organizational environments. In Improving organizational interventions for stress and well‐being. London: Routledge.

- Reed, J. E., McNicholas, C., Woodcock, T., Issen, L., & Bell, D. (2014). Designing quality improvement initiatives: The action effect method, a structured approach to identifying and articulating programme theory. BMJ Quality & Safety, 23, 1040–1048.

- Rogers, P. J. (2008). Using programme theory to evaluate complicated and complex aspects of interventions. Evaluation, 14(1), 29–48.

- Saunders, R. P., Evans, M. H., & Joshi, P. (2005). Developing a process‐evaluation plan for assessing health promotion program implementation: A how‐to guide. Health Promotion Practice, 6(2), 134–147.

- Schelvis, R., Oude Hengel, K., Burdorf, A., Strijk, J. E., & van der Beek, A. J. (2015). Evaluation of occupational health interventions using a randomized controlled trial: Challenges and alternative research designs. Scandinavian Journal of Work, Environment & Health, 41(5), 491–503.

- Semmer, N. K. (2006). Job stress interventions and the organization of work. Scandinavian Journal of Work, Environment & Health, 32(6), 515–527.

- Shadish, W. R., Cook, T. D., & Leviton, L. C. (1991). Foundations of program evaluation: Theories of practice. New York, NY: Sage.

- von Thiele Schwarz, U., & Hasson, H. (2013). Alignment for achieving a healthy organization. In Bauer G. F., & Jenny G. J. (Eds.), Salutogenic organizations and change (pp. 107–125). Springer Netherlands.

- von Thiele Schwarz, U., Augustsson, H., Hasson, H., & Stenfors‐Hayes, T. (2015). Promoting employee health by integrating health protection, health promotion, and continuous improvement: A longitudinal quasi‐experimental intervention study. Journal of Occupational and Environmental Medicine, 57(2), 217–225.

- von Thiele Schwarz, U., Hasson, H., & Tafvelin, S. (2016). Leadership training as an occupational health intervention: Improved safety and sustained productivity. Safety Science, 81, 35–45. DOI: 10.1016/j.ssci.2015.07.020.

- Weick, K. E., & Quinn, R. E. (1999). Organizational change and development. Annual Review of Psychology, 50(1), 361–386.

- Zohar, D., & Luria, G. (2003). The use of supervisory practices as leverage to improve safety behavior: A cross‐level intervention model. Journal of Safety Research, 34(5), 567–577. DOI: 10.1016/j.jsr.2003.05.006.