Significance

Bass sounds play a special role in conveying the rhythm and stimulating motor entrainment to the beat of music. However, the biological roots of this culturally widespread musical practice remain mysterious, despite its fundamental relevance in the sciences and arts, and also for music-assisted clinical rehabilitation of motor disorders. Here, we show that this musical convention may exploit a neurophysiological mechanism whereby low-frequency sounds shape neural representations of rhythmic input at the cortical level by boosting selective neural locking to the beat, thus explaining the privileged role of bass sounds in driving people to move along with the musical beat.

Keywords: EEG, rhythm, low-frequency sound, sensory-motor synchronization, frequency tagging

Abstract

Music makes us move, and using bass instruments to build the rhythmic foundations of music is especially effective at inducing people to dance to periodic pulse-like beats. Here, we show that this culturally widespread practice may exploit a neurophysiological mechanism whereby low-frequency sounds shape the neural representations of rhythmic input by boosting selective locking to the beat. Cortical activity was captured using electroencephalography (EEG) while participants listened to a regular rhythm or to a relatively complex syncopated rhythm conveyed either by low tones (130 Hz) or high tones (1236.8 Hz). We found that cortical activity at the frequency of the perceived beat is selectively enhanced compared with other frequencies in the EEG spectrum when rhythms are conveyed by bass sounds. This effect is unlikely to arise from early cochlear processes, as revealed by auditory physiological modeling, and was particularly pronounced for the complex rhythm requiring endogenous generation of the beat. The effect is likewise not attributable to differences in perceived loudness between low and high tones, as a control experiment manipulating sound intensity alone did not yield similar results. Finally, the privileged role of bass sounds is contingent on allocation of attentional resources to the temporal properties of the stimulus, as revealed by a further control experiment examining the role of a behavioral task. Together, our results provide a neurobiological basis for the convention of using bass instruments to carry the rhythmic foundations of music and to drive people to move to the beat.

Music powerfully compels humans to move, showcasing our remarkable ability to perceive and produce rhythmic signals (1). Rhythm is often considered the most basic aspect of music, and is increasingly regarded as a fundamental organizing principle of brain function. However, the neurobiological mechanisms underlying entrainment to musical rhythm remain unclear, despite the broad relevance of the question in the sciences and arts. Clarifying these mechanisms is also timely given the growing interest in music-assisted practices for the clinical rehabilitation of cognitive and motor disorders caused by brain damage (2).

Typically, people are attracted to move to music in time with a periodic pulse-like beat—for example, by bobbing the head or tapping the foot to the beat of the music. The perceived beat and meter (i.e., hierarchically nested periodicities corresponding to grouping or subdivision of the beat period) are thus used to organize and predict the timing of incoming rhythmic input (3) and to guide synchronous movement (4). Notably, the perceived beats sometimes coincide with silent intervals instead of accented acoustic events, as in syncopated rhythms (a hallmark of jazz), revealing remarkable flexibility with respect to the incoming rhythmic input in human perceptual-motor coupling (5). However, specific acoustic features such as bass sounds seem particularly well suited to convey the rhythm of music and support rhythmic motor entrainment (6, 7). Indeed, in musical practice, bass instruments are conventionally used as a rhythmic foundation, whereas high-pitched instruments carry the melodic content (8, 9). Bass sounds are also crucial in music that encourages listeners to move (10, 11).

There has been a recent debate as to whether evolutionarily shaped properties of the auditory system lead to superior temporal encoding for bass sounds (12, 13). One study using electroencephalography (EEG) recorded brain responses elicited by misaligned tone onsets in an isochronous sequence of simultaneous low- and high-pitched tones (12). Greater sensitivity to the temporal misalignment of low tones was observed when they were presented earlier than expected, which suggested better time encoding for low sounds. These results were replicated and extended by Wojtczak et al. (13), who showed that the effect was related to greater tolerance for low-frequency sounds lagging high-frequency sounds than vice versa. However, these studies only provide indirect evidence for the effect of low sounds on internal entrainment to rhythm, inferred from brain responses to deviant sounds. Moreover, they stop short of resolving the issue because, as noted by Wojtczak et al. (13), these brain responses are not necessarily informative about the processing of the global temporal structure of rhythmic inputs.

A promising approach to capture the internal representations of rhythm more directly involves the combination of EEG with frequency tagging. This approach involves measuring brain activity elicited at frequencies corresponding to the temporal structure of the rhythmic input (14–17). In a number of studies using this technique, an increase in brain activity has been observed at specific frequencies corresponding to the perceived beat and meter of musical rhythms (18–22). Evidence for the functional significance of this neural selectivity comes from work showing that the magnitude of beat- and meter-related brain responses correlates with individual differences in rhythmic motor behavior and is modulated by contextual factors influencing beat and meter perception (23–26).

The current study aimed to use this approach to provide evidence for a privileged effect of bass sounds in the neural processing of rhythm, especially in boosting cortical activity at beat- and meter-related frequencies. The EEG was recorded from human participants while they listened to isochronous and nonisochronous rhythms conveyed either by low (130 Hz) or high (1236.8 Hz) pure tones. The isochronous rhythm provided a baseline test of neural entrainment. The nonisochronous rhythms, which included a regular unsyncopated and a relatively complex syncopated rhythm, contained combinations of tones and silent intervals positioned to imply hierarchical metric structure. The unsyncopated rhythm was expected to induce the perception of a periodic beat that corresponded closely to the physical arrangement of sound onsets and silent intervals making up the rhythm, whereas the syncopated rhythm was expected to induce the perception of a beat that matched physical cues to a lesser extent, thus requiring more endogenous generation of the beat and meter (18, 19, 23, 24). Theoretical beat periods were confirmed in a tapping session conducted after the EEG session, in which participants were asked to tap along with the beat that they perceived in the rhythms, as a behavioral index of entrainment to the beat. Importantly, these different rhythms allowed us to test the low-tone benefit on cortical activity at the beat and meter frequencies even when the input lacked prominent acoustic energy at these frequencies. Frequency-domain analysis of the EEG was performed to obtain a direct fine-grained characterization of the mapping between rhythmic stimulus and EEG response. Additional analyses, including auditory physiological modeling, were performed to examine the degree to which the effect of bass sounds may be explained by cochlear properties.

Results

Sound Analysis.

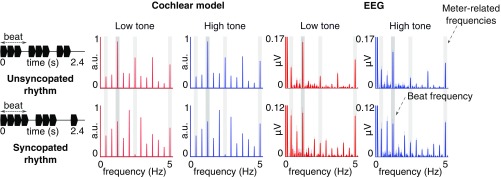

The isochronous rhythm and the two nonisochronous (unsyncopated and syncopated) rhythms carried by low or high tones were analyzed using a cochlear model to (i) determine frequencies that could be expected in the EEG response and (ii) estimate an early representation of the sound input. This model consisted of a gammatone auditory filter bank that converted acoustic input into a multichannel representation of basilar membrane motion (27), followed by a simulation of hair cell dynamics. The model yields an estimate of spiking responses in the auditory nerve with rate intensity functions and adaptation closely following neurophysiological data (28).

The envelope modulation spectrum obtained for the isochronous rhythms consisted of a peak at the frequency of single events (5 Hz) and its harmonics. For the unsyncopated and syncopated rhythms, the envelope spectra contained 12 distinct peaks, corresponding to the repetition frequency of the whole pattern (0.416 Hz) and its harmonics up to the repetition frequency of single events (5 Hz) (Fig. 1). The magnitudes of responses at these 12 frequencies were converted into z scores (see Materials and Methods). This standardization assessed the magnitude at each frequency relative to the other frequencies. It thereby allowed us to determine how much one frequency (here, the beat frequency at 1.25 Hz) or a subgroup of frequencies (meter-related frequencies at 1.25, 1.25/3, 1.25 × 2, and 1.25 × 4 Hz; see Materials and Methods) stood out relative to the entire set of frequencies (see, e.g., ref. 18). The procedure also makes it possible to objectively measure the degree of relative transformation, i.e., the distance between an input (the cochlear model output) and an output (the obtained EEG responses), irrespective of differences in their units and scale (15, 18, 24, 29).
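The standardization can be sketched in a few lines of Python with NumPy. This is a minimal illustration, not the authors' Matlab code: it assumes the sample standard deviation (`ddof=1`), and the function names and example amplitudes are hypothetical. It also shows why the scores are comparable across units. Any positive rescaling or offset of the 12 amplitudes leaves the z scores unchanged.

```python
import numpy as np

# The 12 frequencies tagged by a 2.4-s rhythm: k / 2.4 Hz for k = 1..12 (0.417 to 5 Hz).
FREQS = np.arange(1, 13) / 2.4

# Beat and meter-related frequencies as listed in the text (Hz).
BEAT_FREQ = 1.25
METER_FREQS = np.array([1.25 / 3, 1.25, 1.25 * 2, 1.25 * 4])


def zscore_across_frequencies(amplitudes):
    """(x - mean) / SD across the 12 amplitudes.

    Assumes the sample SD (ddof=1, like Matlab's std); the text only says "SD".
    """
    a = np.asarray(amplitudes, dtype=float)
    return (a - a.mean()) / a.std(ddof=1)


def index_of(freq):
    """Position of a frequency in FREQS, matched with a float tolerance."""
    return int(np.flatnonzero(np.isclose(FREQS, freq))[0])


def beat_and_meter_scores(amplitudes):
    """Return (z score at the beat frequency, mean z score at meter frequencies)."""
    z = zscore_across_frequencies(amplitudes)
    meter_idx = [index_of(f) for f in METER_FREQS]
    return z[index_of(BEAT_FREQ)], z[meter_idx].mean()


# Hypothetical amplitudes (arbitrary units), ordered as FREQS.
amps = np.array([0.20, 0.10, 0.90, 0.15, 0.10, 0.50,
                 0.05, 0.10, 0.12, 0.08, 0.10, 0.40])
beat_z, meter_z = beat_and_meter_scores(amps)
```

The same function can be applied to cochlear-model magnitudes and to EEG amplitudes in μV. Because z scoring removes both offset and scale, the resulting scores are directly comparable.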

Fig. 1.

Spectra of the acoustic stimuli (processed through the cochlear model) and EEG responses (averaged across all channels and participants; n = 14; shaded regions indicate SEMs; see ref. 30). The waveform of one cycle for each rhythm (2.4-s duration) is depicted in black (Left) with the beat period indicated. The rhythms were continuously repeated to form 60-s sequences, and these sequences were presented eight times per condition. The cochlear model spectrum contains peaks at frequencies related to the beat (1.25 Hz; dark-gray vertical stripes) and meter (1.25 Hz/3, ×2, ×4; light-gray vertical stripes), and also at frequencies unrelated to the beat and meter. The EEG response includes peaks at the frequencies contained in the cochlear model output; however, the difference between the average amplitude of peaks at frequencies related vs. unrelated to the beat and meter is increased in the low-tone compared with high-tone conditions (see Relative Enhancement at Beat and Meter Frequencies and Fig. 2). Note the scaling difference in plots of EEG responses for unsyncopated and syncopated rhythms.

Behavioral Tasks.

During the EEG session, participants were asked to detect and identify deviant tones whose duration was lengthened or shortened by 20% (40 ms), to encourage attentive listening specifically to the temporal structure of the auditory stimuli. While participants were generally able to identify the temporal deviants (SI Appendix, Table S1), there was a significant interaction between rhythm and tone frequency, F(2, 26) = 3.55, P = 0.04, η² = 0.04. Post hoc t tests revealed significantly lower performance in the low-tone than in the high-tone condition for the syncopated rhythm, t(13) = 2.97, P = 0.03, d = 0.79, suggesting higher task difficulty, especially for the syncopated rhythm delivered with low tones (31, 32).

The beat-tapping task performed after the EEG session (SI Appendix) generally confirmed the theoretical assumption about entrainment to the beat based on preferential grouping by four events (18, 23, 33). Moreover, there were no statistically significant differences in the mean intertap interval and its variability between conditions (SI Appendix).

Frequency-Domain Analysis of EEG.

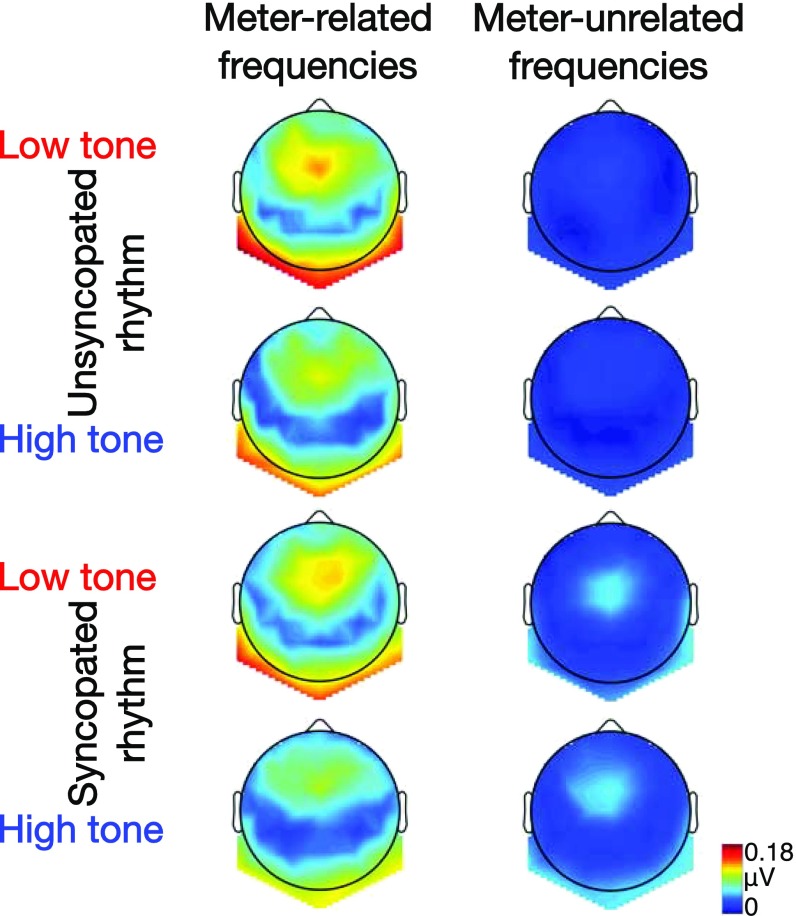

As shown in Fig. 1, the rhythmic stimuli elicited frequency-tagged EEG responses at the 12 frequencies expected based on the results of sound analysis with the cochlear model, with topographies similar to previous work (refs. 18 and 23 and Fig. 2).

Fig. 2.

Grand average topographies (n = 14) of neural activity measured at meter-related (Left column) and meter-unrelated (Right column) frequencies for the unsyncopated and syncopated rhythm conveyed by low or high tones.

Overall magnitude of the EEG response.

We first evaluated whether the overall magnitude (μV) of the EEG responses differed between low- and high-tone conditions for the three rhythms (SI Appendix, Table S1). Overall magnitude was computed for each participant and condition by summing the amplitudes of the frequency-tagged responses across the frequencies expected from the sound analysis of the rhythms. The resulting measure indexes the general capacity of the central nervous system to respond to the rhythms, and the modulation of this capacity by tone frequency, regardless of whether individual frequency-tagged components are related to the beat and meter. For the isochronous rhythm, the overall response was significantly larger in the low-tone condition, t(13) = 3.68, P = 0.008, d = 0.98, in line with previous work using isochronous trains of tones or sinusoidally amplitude-modulated tones (34, 35). In contrast, there were no significant differences between the high-tone and low-tone conditions for the unsyncopated and syncopated rhythms (Ps > 0.34). This suggests that the global enhancement of responses by low tones may be present only for isochronous rhythms.

Relative enhancement at beat and meter frequencies.

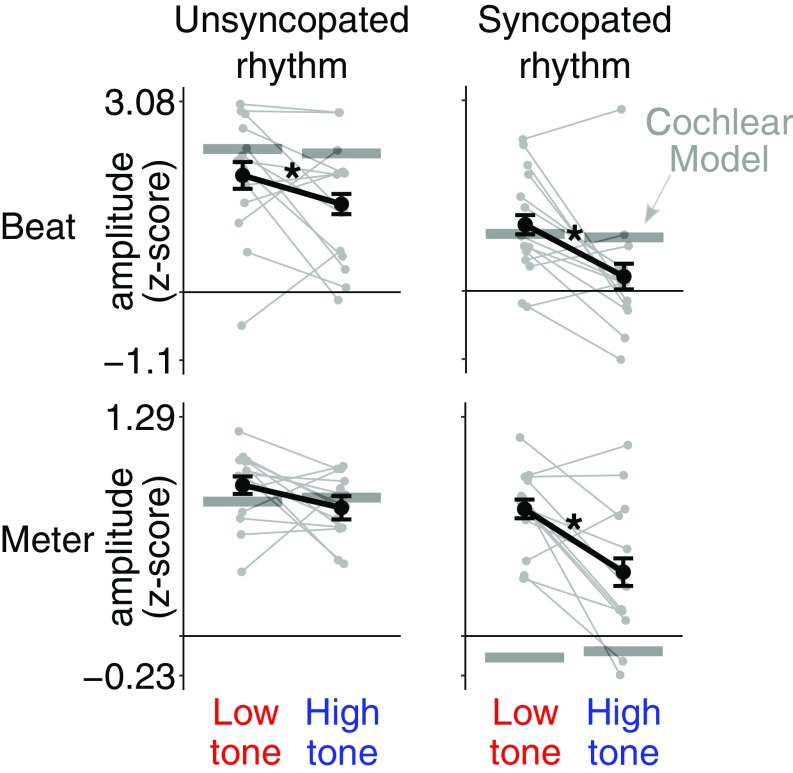

The main goal of the study was to examine the relative amplitude at specific beat- and meter-related frequency components for the unsyncopated and syncopated rhythms conveyed by high or low tones. A 2 × 2 repeated-measures ANOVA revealed greater relative amplitude at the beat frequency (z score of the amplitude at 1.25 Hz) in the low-tone condition for both types of rhythm (main effect of tone frequency; Fig. 3, Top), F(1, 13) = 9.46, P = 0.009, η² = 0.11 (see Materials and Methods and SI Appendix for tests of the validity of this standardization procedure). Furthermore, when all meter frequencies were taken into account (mean z-scored amplitude at 1.25, 1.25/3, 1.25 × 2, and 1.25 × 4 Hz), the 2 × 2 ANOVA revealed a significant interaction between tone frequency and rhythm, F(1, 13) = 5.23, P = 0.04, η² = 0.05. This interaction reflected greater relative amplitude at meter frequencies (Fig. 3, Bottom) in the low-tone condition for the syncopated rhythm, t(13) = 3.79, P = 0.004, d = 1.01, but not for the unsyncopated rhythm (P = 0.24, d = 0.45).

Fig. 3.

Effect of tone frequency on the selective enhancement of EEG activity at beat- and meter-related frequencies. Shown separately are z scores for the beat frequency (Top) and mean z scores for meter-related frequencies (Bottom) averaged across participants for the unsyncopated (Left) and syncopated (Right) rhythms. Error bars indicate SEMs (30). Asterisks indicate significant differences (P < 0.05). Responses from individual participants are shown as gray points linked by lines. The horizontal lines represent z score values obtained from the cochlear model. The low tone led to significant neural enhancement of the beat frequency in both rhythms. The low tone also elicited an enhanced EEG response at meter frequencies, but only in the syncopated rhythm. There was no significant modulation of meter-related responses by tone frequency for the unsyncopated rhythm.

Finally, we evaluated the extent to which early cochlear processes could potentially explain the relative enhancement of EEG response at the beat and meter frequencies elicited by low tones. The difference in z-scored EEG response amplitudes between the low- and high-tone conditions was compared with the corresponding difference in the z-scored cochlear model output. For the response at the beat frequency, difference scores were significantly larger in the EEG response compared with the cochlear model for the syncopated rhythm, t(13) = 1.4, P = 0.04, d = 0.8, but not for the unsyncopated rhythm (P = 0.73, d = 0.38). A similar pattern was revealed for the mean response at meter-related frequencies, with a significantly greater difference score for the syncopated rhythm, t(13) = 4.17, P = 0.004, d = 1.11, but not for the unsyncopated rhythm (P = 0.28, d = 0.53).
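A sketch of the comparison between EEG and cochlear-model difference scores follows. It assumes, since the text does not say, that each participant's EEG difference score (low minus high tone) was tested against the single model value with a one-sample t test. Cohen's d is taken as the mean deviation divided by the SD of the difference scores, so d = t/√n. This is consistent with, e.g., t(13) = 4.17 and d = 1.11 reported above for n = 14. Function names and example data are hypothetical. `scipy.stats.ttest_1samp` returns the same t together with a P value.

```python
import numpy as np


def difference_scores(z_low, z_high):
    """Low-tone minus high-tone z scores (per participant, or one value for the model)."""
    return np.asarray(z_low, dtype=float) - np.asarray(z_high, dtype=float)


def one_sample_t_and_d(eeg_diff, model_diff):
    """One-sample t and Cohen's d of EEG difference scores against a fixed model value.

    Returns (t, d, degrees of freedom). Treating the model value as a fixed
    reference is an assumption about the test used in the study.
    """
    x = np.asarray(eeg_diff, dtype=float)
    n = x.size
    deviation = x.mean() - model_diff
    sd = x.std(ddof=1)
    t = deviation / (sd / np.sqrt(n))
    d = deviation / sd  # equivalently t / sqrt(n)
    return t, d, n - 1


# Hypothetical z scores at the beat frequency for 14 participants.
eeg_low = np.array([1.9, 1.4, 2.2, 1.6, 1.2, 2.0, 1.7, 1.5, 2.1, 1.3, 1.8, 1.6, 1.7, 1.5])
eeg_high = np.array([1.3, 1.2, 1.3, 1.2, 1.1, 1.3, 1.2, 1.2, 1.3, 1.1, 1.2, 1.2, 1.2, 1.2])
model_diff = float(difference_scores(1.50, 1.40))  # hypothetical model z scores
t, d, df = one_sample_t_and_d(difference_scores(eeg_low, eeg_high), model_diff)
```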

Discussion

The results show that rhythmic stimulation by bass sounds leads to enhanced neural representation of the beat and meter. EEG and behavioral responses were collected from participants presented with auditory rhythms conveyed either by low- or high-frequency tones. As hypothesized, we observed a selective enhancement of neural activity at the beat frequency for rhythms conveyed by low tones compared with high tones. When taking into consideration all meter frequencies, this low-tone benefit was only significant for the syncopated rhythm requiring relatively more endogenous generation of meter. Moreover, the low-tone benefit was not attributable to differences in perceived loudness between low and high tones, as a control experiment manipulating sound intensity alone did not yield similar results (SI Appendix, Control Experiment 1: Effect of Sound Intensity). Finally, the low-tone benefit appears to require the allocation of attention to temporal features of the stimulus, as the effect did not occur in a control experiment where attention was directed to nontemporal features (SI Appendix, Control Experiment 2: Effect of Behavioral Task).

It has been proposed that the preference for low-register instruments in conveying the rhythm within multivoiced music derives from more precise temporal encoding of low-pitched sounds due to masking effects in the auditory periphery (12). An alternative account holds that lower-pitched sounds are not necessarily subject to more precise temporal encoding but to greater tolerance for perception of simultaneity when low sounds lag behind higher-pitched sounds (13). Here we demonstrate that the privileged status of rhythmic bass sounds is not exclusively dependent on multivoice factors, such as masking or perceptual simultaneity, as it can be observed even without the presence of other instrumental voices.

Analysis of our stimuli with a physiologically plausible cochlear model (27, 28) indicated that nonlinear processes at the early stages of the auditory pathway are unlikely to account for the observed low-tone benefit and the interaction with syncopation. Moreover, the effect is not explained by greater activation of auditory neurons due to greater loudness, as the intensity of low and high tones was adjusted to evoke similar loudness percepts (36). Possible residual loudness differences between low and high tones are also unlikely to account for the observed effect of tone frequency. This was confirmed in a control experiment (SI Appendix, Control Experiment 1: Effect of Sound Intensity) showing that manipulating sound intensity alone (70- vs. 80-dB sound pressure level) did not influence the neural representation of the beat and meter.

Instead, the low-tone benefit observed here could be explained by a greater recruitment of brain structures involved in movement planning and control, including motor cortical regions (37–42), the cerebellum, and basal ganglia (24). These structures may be recruited via functional interconnections between the ascending auditory pathway and a vestibular sensory-motor network (including a striatal circuit involved in beat-based processing) that is particularly responsive to bass acoustic frequencies (43–45). The involvement of these sensory-motor areas thus constitutes a plausible mechanism for the observed low-tone benefit, as these areas have been shown to be critically involved in predictive beat perception (42), to contribute to the selective enhancement of EEG responses at the beat frequency (24), and to be activated by vestibular input (43, 44).

It should be noted that direct activation of the human vestibular organ by bass sounds occurs only at higher intensities (above ∼95-dB sound pressure level) than those employed in the current study (46). However, functional interactions between auditory and vestibular sensory-motor networks in response to low-frequency rhythms can arise centrally (45). These neural connections presumably develop from the onset of hearing in the fetus through the continuous experience of correlated auditory and vestibular sensory-motor input (e.g., the sound of the mother’s footsteps coupled with walking motion) (45).

Low-Tone Benefit in Syncopated Rhythm.

In accordance with previous studies (34, 35), overall larger magnitudes of EEG response were obtained with low tones compared with high tones in the isochronous rhythm. This general effect was not observed in nonisochronous rhythms, suggesting that as the stimulus becomes temporally more complex there is no longer a simple relationship between the overall response magnitude and tone frequency. Therefore, to fully capture the effect of tone frequency on the neural activity to complex rhythms, higher-level properties of the stimulus such as onset structure, which plays a role in inducing the perception of beat and meter (33), need to be taken into account. This was achieved here through a finer-grained frequency analysis focused on neural activity elicited at beat- and meter-related frequencies. Enhanced activity at the beat frequency was observed with low tones, irrespective of the rhythmic complexity of the stimulus. However, when taking into consideration all meter frequencies, neural activity was enhanced with low tones only in the syncopated rhythm, whose envelope did not contain prominent peaks of energy at meter-related frequencies.

These findings corroborate the hypothesis that bass sounds stimulate greater involvement of top-down, endogenous processes, possibly via stronger engagement of motor brain structures (39, 41–43). Activation of a widely distributed sensory-motor network may thus have facilitated the selective neural enhancement of meter-relevant frequencies in the current study, especially when listening to the low-tone syncopated rhythm. This association between rhythmic syncopation, low-frequency tones, and recruitment of a sensory-motor network could explain why musical genres specifically tailored to induce a strong urge to move to the beat (i.e., groove-based music such as funk) often contain a syncopated bass line (e.g., ref. 47). Accordingly, syncopation is perceived as more prominent when produced by a bass drum than a hi-hat cymbal (48), and rhythmically complex bass lines are rated as increasingly likely to make people dance (49).

Critical Role of Temporal Attention.

The greater involvement of endogenous processes in the syncopated rhythm carried by low tones could be driven by an increase in endogenously generated predictions or in attention to stimulus timing, as required by the temporal deviant identification task. An internally generated periodic beat constitutes a precise reference used to encode temporal intervals in the incoming rhythmic stimulus (3, 33, 50), and therefore contributes to successful identification of changes in tone duration (31, 51). In the current study, such an endogenous mechanism might have been especially utilized in the condition where the rhythmic structure of the input matched the beat and meter percept to a lesser extent (31, 33), and where low tones made the identification of fine duration changes more difficult due to the lower temporal resolution of the auditory system for low-frequency tones (32). The relative contribution of these endogenous temporal processes to the low-tone benefit observed here was addressed in SI Appendix, Control Experiment 2: Effect of Behavioral Task, where participants were instructed to detect and identify any changes in broadly defined sound properties (pitch, tempo, and loudness) when, in fact, none were present. This experiment yielded no effect of tone frequency, suggesting that the low-tone benefit only occurred when the behavioral task required attention to be focused on temporal properties of the stimulus. Hence, even though a widespread sensory-motor network supporting endogenous meter generation can be directly activated by bass sounds when the intensities exceed the vestibular threshold (43), attending to temporal features of the sound is critical to the low-tone benefit at intensities beneath the vestibular threshold. A similar association between temporal attention and vestibular sensory-motor activation may arise in music and dance contexts aimed at encouraging people to move to music. Indeed, the intention to move along with a stimulus is likely to direct attention to stimulus timing, and the resulting body movement, in turn, enhances vestibular activation.

Conclusions

The present study provides direct evidence for selective brain processing of musical rhythm conveyed by bass sounds, thus furthering our understanding of the neurobiological bases of rhythmic entrainment. We propose that the selective increase in cortical activity at beat- and meter-related frequencies elicited by low tones may explain the special role of bass instruments for delivering rhythmic information and inducing sensory-motor entrainment in widespread musical traditions. Our findings also pave the way for future investigations of how acoustic frequency content, combined with other features such as timbre and intensity, may efficiently entrain neural populations by increasing functional coupling in a distributed auditory-motor network. A fruitful avenue for probing this network further is through techniques with greater spatial resolution, such as human intracerebral recordings (19). Ultimately, identifying sound properties that enhance neural tracking of the musical beat is timely, given the growing use of rhythmic auditory stimulation for the clinical rehabilitation of cognitive and motor neurological disorders (52).

Materials and Methods

Participants.

Fourteen healthy individuals (mean age = 28.4 y, SD = 6.1 y; 10 females) with various levels of musical training (mean = 6.9 y, SD = 5.6 y; range 0–14 y) participated in the study after providing written informed consent. All participants reported normal hearing and no history of neurological or psychiatric disease. The study was approved by the Research Ethics Committee of Western Sydney University.

Auditory Stimuli.

The auditory stimuli were created in Matlab R2016b (MathWorks) and presented binaurally through insert earphones with an approximately flat frequency response over the range of frequencies included in the stimuli (ER-2; Etymotic Research). The stimuli consisted of three different rhythms of 2.4-s duration looped continuously for 60 s. All rhythms comprised 12 events, with each individual event lasting 200 ms. The structure of each rhythm was based on a specific patterning of sound (pure tones; 10-ms rise and 50-ms fall linear ramps) and silent events (amplitude at 0), as depicted in Fig. 1. The carrier frequency of the pure tone was either 130 Hz (low-tone frequency) or 1236.8 Hz (high-tone frequency; 39 semitones higher than the low-tone frequency). These two frequencies were chosen to fall within spectral bands where rhythmic fluctuations either correlate (100–200 Hz) or do not correlate (800–1600 Hz) with sensory-motor entrainment to music, as indicated by previous research (7, 53). To take into account the differential sensitivity of the human auditory system across the frequency range, the loudness of low and high tones was equalized to 70 phons using the time-varying loudness model of Moore et al. (ref. 36; by matching the maximum short-term loudness of a single 200-ms high-tone and low-tone sound), and held constant across participants.
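The carrier frequencies and the event envelope described above can be reproduced with a short NumPy sketch. It checks that 1236.8 Hz lies 39 semitones above 130 Hz (12·log₂(1236.8/130) ≈ 39.0) and builds one 200-ms event with linear 10-ms rise and 50-ms fall ramps. The 44.1-kHz sampling rate and the function names are assumptions. The loudness equalization to 70 phons, which requires the Moore et al. loudness model, is not reproduced.

```python
import numpy as np

FS = 44100  # sampling rate in Hz (an assumption; not stated in the text)


def semitones_between(f_low, f_high):
    """Musical interval between two frequencies in equal-tempered semitones."""
    return 12 * np.log2(f_high / f_low)


def tone_event(carrier_hz, fs=FS, dur=0.200, rise=0.010, fall=0.050):
    """One pure-tone event with linear onset and offset ramps."""
    n = int(round(dur * fs))
    t = np.arange(n) / fs
    env = np.ones(n)
    n_rise = int(round(rise * fs))
    n_fall = int(round(fall * fs))
    env[:n_rise] = np.linspace(0.0, 1.0, n_rise, endpoint=False)  # 10-ms rise
    env[n - n_fall:] = np.linspace(1.0, 0.0, n_fall)               # 50-ms fall
    return env * np.sin(2 * np.pi * carrier_hz * t)


interval = semitones_between(130.0, 1236.8)
low_event = tone_event(130.0)
high_event = tone_event(1236.8)
silent_event = np.zeros_like(low_event)  # silent events have amplitude 0
```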

One stimulus rhythm consisted of an isochronous train of tones with no silent events. The two other rhythms were selected based on previous evidence that they induce a periodic beat based on grouping by four events (i.e., 4 × 200 ms = 800 ms = 1.25 Hz beat frequency) (18, 23, 29). Related metric levels corresponded to subdivisions of the beat period by 2 (2.5 Hz) and 4 (i.e., 200-ms single event = 5 Hz), and grouping of the beat period by 3 (i.e., 2.4-s rhythm = 0.416 Hz). One rhythm was designed to be unsyncopated, as a sound event coincided with every beat in almost all possible beat positions (syncopation score = 1; calculated as in ref. 54). The internal representation of beat and meter should thus match physical cues in this rhythm. The other rhythm was syncopated, as it involved some beat positions coinciding with silent events rather than sound events (syncopation score = 4). The internal representation of beat and meter should thus match external cues to a lesser extent than in the unsyncopated rhythm.
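Because every metric level is a simple multiple or fraction of the 200-ms event, the frequencies above can be derived exactly. The sketch below uses Python's `fractions` module to avoid rounding (the pattern rate written as 0.416 Hz is exactly 5/12 Hz). It expresses each level as a harmonic of the 2.4-s pattern rate, which shows why all meter frequencies fall among the 12 tagged frequencies. The loop prints harmonics 1, 3, 6, and 12.

```python
from fractions import Fraction

EVENT_S = Fraction(1, 5)        # one 200-ms event
CYCLE_S = 12 * EVENT_S          # whole rhythm: 12 events = 2.4 s
BEAT_S = 4 * EVENT_S            # beat from grouping by four events = 800 ms

# Metric levels as periods (s): beat grouped by 3, the beat, and its subdivisions.
LEVELS = {
    "beat x 3 (whole pattern)": 3 * BEAT_S,
    "beat": BEAT_S,
    "beat / 2": BEAT_S / 2,
    "beat / 4 (single event)": BEAT_S / 4,
}


def frequency_hz(period_s):
    return 1 / period_s


pattern_rate = frequency_hz(CYCLE_S)  # 5/12 Hz
for name, period in LEVELS.items():
    f = frequency_hz(period)
    # Each metric frequency is an integer harmonic of the pattern rate.
    print(f"{name:26s} {float(f):.4f} Hz  harmonic {f / pattern_rate}")
```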

Experimental Design and Procedure.

Crossing tone frequency (low, high) and rhythm (isochronous, unsyncopated, syncopated) yielded six conditions that were presented in separate blocks. The order of the six blocks was randomized, with the restriction that at least one high-tone and one low-tone block occurred within the first three blocks. These blocks were presented in an EEG session followed by a tapping session, with the same block order for the two sessions. Each block consisted of eight trials in the EEG session (2–4 s silence, followed by the 60-s stimulus) and two trials in the tapping session.
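The ordering constraint can be met by rejection sampling: shuffle the six conditions and redraw until the first three blocks contain both tone frequencies. The authors' actual procedure is not described, so this is only one way to satisfy the stated restriction. A shuffle fails only when the first three blocks share one tone frequency (probability 2 × 3/6 × 2/5 × 1/4 = 1/10), so few redraws are needed. Names are hypothetical.

```python
import random

TONES = ("low", "high")
RHYTHMS = ("isochronous", "unsyncopated", "syncopated")
CONDITIONS = [(tone, rhythm) for tone in TONES for rhythm in RHYTHMS]


def block_order(rng=random):
    """Shuffle the six blocks until the first three include both tone frequencies."""
    while True:
        order = list(CONDITIONS)
        rng.shuffle(order)
        if {tone for tone, _ in order[:3]} == set(TONES):
            return order


# The same order would be reused for the EEG session (8 trials per block)
# and the tapping session (2 trials per block).
order = block_order(random.Random(1))
for tone, rhythm in order:
    print(tone, rhythm)
```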

EEG Session and Behavioral Task.

In each trial of the EEG session, the duration of the steady-state portion of one randomly chosen sound event was either increased or decreased by 20% (40 ms), yielding four “longer” and four “shorter” deviant tones in each block. Participants were asked to detect the deviant tone and report after each trial whether it was longer or shorter than other tones comprising the rhythm. These deviants could appear only in the three repetitions of the rhythm before the last repetition and were restricted to three possible positions within each rhythm. In the unsyncopated and syncopated rhythms, these positions corresponded to sound events directly followed by a silent event. This was done to minimize the differences in task difficulty between unsyncopated and syncopated rhythms, as the perception of duration might differ according to the context in which a deviant tone appears (i.e., whether it is preceded and followed by tones or silences). For the isochronous rhythm, three random positions were chosen. The last four repetitions of the rhythms of all trials were excluded from further EEG analyses. The primary purpose of the deviant identification task was to ensure that participants were attending to the temporal properties of the auditory stimuli. To test whether the difficulty of deviant identification varied across conditions, percent-correct responses were compared using a repeated-measures ANOVA with the factors tone frequency (low, high) and rhythm (isochronous, unsyncopated, syncopated).
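The trial timing can be checked with simple arithmetic. A 60-s trial holds 25 repetitions of the 2.4-s rhythm, so deviants fall in repetitions 22 to 24. Discarding the last four repetitions leaves the 21 repetitions (50.4 s) analyzed in the EEG, which contain no deviant. The sketch assumes the 10-ms and 50-ms ramps were left unchanged, so that a whole deviant tone lasted 160 or 240 ms; variable names are illustrative.

```python
CYCLE_S = 2.4      # one rhythm cycle (12 x 200 ms)
TRIAL_S = 60.0     # looped stimulus per trial
EVENT_MS = 200     # nominal event duration
DELTA_MS = 40      # 20% of one event

n_cycles = round(TRIAL_S / CYCLE_S)                    # repetitions per trial
# 1-based repetition numbers in which a deviant may occur: the three before the last.
deviant_cycles = list(range(n_cycles - 3, n_cycles))
kept_cycles = n_cycles - 4                             # last four repetitions discarded
kept_s = kept_cycles * CYCLE_S                         # analyzed epoch length (s)
bin_spacing_hz = 1 / kept_s                            # FFT resolution of that epoch
# Assumes ramps are unchanged, so the whole tone shortens or lengthens by 40 ms.
deviant_ms = {"shorter": EVENT_MS - DELTA_MS, "longer": EVENT_MS + DELTA_MS}

print(n_cycles, deviant_cycles, kept_cycles, round(kept_s, 1), round(bin_spacing_hz, 4), deviant_ms)
```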

Participants were seated in a comfortable chair and asked to avoid any unnecessary movement or muscle contraction, and to keep their eyes fixated on a marker displayed on the wall ∼1 m in front of them. Examples of the “longer” and “shorter” deviant tones were provided before the session to ensure that all participants understood the task.

Stimulus Sound Analysis with Cochlear Model.

The cochlear model used to analyze the stimuli applied a Patterson-Holdsworth ERB filter bank with 128 channels (27), followed by Meddis’ (28) inner hair-cell model, as implemented in the Auditory Toolbox for Matlab (55). The output of the cochlear model was subsequently transformed into the frequency domain using the fast Fourier transform and averaged across channels. For the unsyncopated and syncopated rhythms, the magnitudes obtained from the resultant modulation spectrum were then expressed as z scores, as follows: (x − mean across the 12 frequencies)/SD across the 12 frequencies (18, 24–26, 29).
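The stimulus analysis pipeline (stimulus, peripheral representation, spectrum, z scores at the 12 frequencies) can be sketched with NumPy. Note the substitution: half-wave rectification stands in for the 128-channel Patterson-Holdsworth filter bank and Meddis hair-cell model, so the values differ from those of the study's cochlear model. The 8-kHz sampling rate, the 10-cycle length, and the nonisochronous pattern below are hypothetical; the study's rhythms are shown only in Fig. 1. For the isochronous rhythm, only the 5-Hz component stands out, as described in Results.

```python
import numpy as np

FS = 8000                           # Hz; assumed, comfortably above 2 x 1236.8 Hz
EVENT_S = 0.2                       # one event
CYCLE_S = 2.4                       # 12 events
FREQS = np.arange(1, 13) / CYCLE_S  # the 12 expected frequencies (0.417 to 5 Hz)


def rhythm_waveform(pattern, carrier_hz, n_cycles=10, fs=FS):
    """Tone (1) and silent (0) events with 10-ms rise / 50-ms fall ramps, looped."""
    n = int(round(EVENT_S * fs))
    t = np.arange(n) / fs
    env = np.ones(n)
    n_rise, n_fall = int(round(0.010 * fs)), int(round(0.050 * fs))
    env[:n_rise] = np.linspace(0.0, 1.0, n_rise, endpoint=False)
    env[n - n_fall:] = np.linspace(1.0, 0.0, n_fall)
    tone = env * np.sin(2 * np.pi * carrier_hz * t)
    cycle = np.concatenate([tone if event else np.zeros(n) for event in pattern])
    return np.tile(cycle, n_cycles)


def envelope_zscores(signal, fs=FS):
    """z-scored spectral magnitudes at FREQS after half-wave rectification.

    Half-wave rectification is a crude stand-in for the gammatone filter bank and
    Meddis hair-cell model; its slow component follows the amplitude envelope.
    """
    rectified = np.maximum(signal, 0.0)
    mag = np.abs(np.fft.rfft(rectified)) / rectified.size
    df = fs / rectified.size                  # bin spacing = 1 / duration
    bins = np.rint(FREQS / df).astype(int)    # exact bins: integer number of cycles
    m = mag[bins]
    return (m - m.mean()) / m.std(ddof=1)


z_iso = envelope_zscores(rhythm_waveform([1] * 12, carrier_hz=130.0))
# Hypothetical nonisochronous pattern (not one of the study's rhythms).
z_other = envelope_zscores(rhythm_waveform([1, 1, 1, 0, 1, 1, 0, 1, 1, 0, 0, 0], 1236.8))
```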

EEG Acquisition and Preprocessing.

The EEG was recorded using a Biosemi Active-Two system (Biosemi) with 64 Ag-AgCl electrodes placed on the scalp according to the international 10/20 system. The signals were referenced to the CMS (Common Mode Sense) electrode and digitized at a 2048-Hz sampling rate. Details of EEG data preprocessing are provided in SI Appendix. The cleaned EEG data were segmented from 0 to 50.4 s relative to trial onset (i.e., exactly 21 repetitions of the 2.4-s rhythm, thus excluding the repetitions in which deviant tones could appear), re-referenced to the common average, and averaged across trials in the time domain separately for each condition and participant (20, 24). EEG preprocessing was carried out using Letswave6 (www.letswave.org) and Matlab. Further statistical analyses were carried out using R (version 3.4.1; https://www.R-project.org), with Greenhouse-Geisser correction applied when the assumption of sphericity was violated and Bonferroni-corrected post hoc tests used to examine significant effects.
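The segmentation, re-referencing, and trial-averaging steps can be sketched as follows, with each epoch represented as a list of channels, each a list of samples. This is a minimal plain-Python illustration, not the Letswave6 pipeline; the function names are hypothetical, and the preprocessing described in SI Appendix is not modeled.

```python
import statistics

CYCLE_S = 2.4                      # one rhythm cycle
N_CYCLES = 21                      # analyzed repetitions
EPOCH_S = N_CYCLES * CYCLE_S       # 50.4 s


def segment(channels, fs):
    """Keep the first EPOCH_S seconds of each channel (rounded to whole samples)."""
    n = round(EPOCH_S * fs)
    return [ch[:n] for ch in channels]


def common_average_reference(epoch):
    """Subtract, at every sample, the mean across all channels."""
    means = [statistics.fmean(col) for col in zip(*epoch)]
    return [[v - m for v, m in zip(ch, means)] for ch in epoch]


def average_trials(trials):
    """Time-domain average across trials, per channel and sample."""
    n = len(trials)
    return [[sum(vals) / n for vals in zip(*chans)] for chans in zip(*trials)]


# Two toy trials, two channels x two samples each.
trial1 = [[1.0, 2.0], [3.0, 4.0]]
trial2 = [[2.0, 6.0], [2.0, 2.0]]
erp = average_trials([common_average_reference(t) for t in (trial1, trial2)])
print(erp)  # [[-0.5, 0.5], [0.5, -0.5]]
```

Averaging in the time domain before the Fourier transform keeps activity that is phase-locked to the rhythm across trials and attenuates activity that is not.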

Frequency-Domain Analysis of EEG Responses.

For each condition and participant, the averaged waveforms were transformed into the frequency domain using the fast Fourier transform, yielding a spectrum of signal amplitudes (in μV) ranging from 0 to 1024 Hz with a frequency resolution of 0.0198 Hz (i.e., 1/50.4 s). Within these frequency spectra, the signal amplitude can be expected to correspond to the sum of (i) EEG responses elicited by the stimulus and (ii) unrelated residual background noise. To obtain valid estimates of the responses, the contribution of noise was minimized by subtracting, at each frequency bin, the average amplitude at the neighboring bins (second to fifth on both sides) (18, 56). For each condition and participant, noise-subtracted spectra were then averaged across all channels to avoid electrode-selection bias (as the response may originate from a widespread cortical network) and to account for individual differences in response topography (23, 57). The noise-subtracted, channel-averaged amplitude of each expected response (based on the sound analysis with the cochlear model) was then measured for each condition and participant at the exact frequency bin of that response. Because each analyzed epoch contained an integer number of rhythm cycles, the frequency bins were centered exactly on the frequencies of the expected responses.
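The noise-subtraction and channel-averaging steps, and the mapping from an expected frequency to its bin, can be sketched as follows. The sketch assumes amplitude spectra stored as plain Python lists indexed by bin and, at the spectrum edges, uses whichever of the second to fifth neighbors exist; edge handling is not described in the text, and the function names are hypothetical.

```python
import statistics


def noise_subtract(spectrum, near=2, far=5):
    """Subtract from each bin the mean amplitude of its 2nd-5th neighbours on
    both sides. At the spectrum edges only the neighbours that exist are used
    (an assumption of this sketch)."""
    n = len(spectrum)
    out = []
    for i, amp in enumerate(spectrum):
        neigh = [spectrum[j]
                 for d in range(near, far + 1)
                 for j in (i - d, i + d)
                 if 0 <= j < n]
        out.append(amp - statistics.fmean(neigh) if neigh else amp)
    return out


def channel_average(spectra):
    """Average noise-subtracted spectra (one list per channel) across channels."""
    return [statistics.fmean(col) for col in zip(*spectra)]


def bin_index(freq_hz, epoch_s=50.4):
    """Bin of freq_hz when the frequency resolution is 1 / epoch_s Hz."""
    return round(freq_hz * epoch_s)


# Toy spectrum: flat noise floor of 1.0 with a 3.0 peak at bin 50.
spec = [1.0] * 100
spec[50] = 3.0
print(noise_subtract(spec)[50])          # 2.0
print(bin_index(1.25), bin_index(5.0))   # 63 252
```

Since every expected frequency is a multiple of 1/2.4 Hz and the epoch spans 21 cycles, frequency k/2.4 Hz lands exactly on bin 21k (e.g., 1.25 Hz on bin 63), so no interpolation between bins is needed.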

Overall Magnitude of the EEG Response.

The overall magnitude of the EEG response in each condition was measured as the sum of amplitudes at the 12 frequencies expected in response to the unsyncopated and syncopated rhythms, and at 5 Hz and its harmonics for the isochronous rhythm (for each condition, only harmonics up to 45 Hz with an amplitude significantly above 0 μV in the noise-subtracted EEG spectra were considered). The significance of the harmonics was assessed using the nonsubtracted amplitude spectra averaged over all electrodes and participants (58). Each harmonic was tested by z-scoring its amplitude relative to a baseline of 20 neighboring bins (second to 11th on each side), using the formula z(x) = (x − baseline mean)/baseline SD. With this test, eight successive harmonics were significant for the low-tone and nine for the high-tone isochronous condition, as their z scores exceeded 2.32 (i.e., P < 0.01, one-sample, one-tailed test; testing signal > noise). To test whether the overall response was enhanced when the same rhythm was conveyed by low vs. high tones, three separate paired-samples t tests were conducted, one each for the isochronous, unsyncopated, and syncopated rhythms.
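The harmonic significance test and the overall-magnitude sum can be sketched as follows. The sketch assumes the sample SD for the baseline (the text does not specify N or N − 1), requires each tested bin to be at least 11 bins from either edge, and simply keeps every candidate harmonic whose z score exceeds 2.32. Function names are illustrative.

```python
import statistics

Z_THRESHOLD = 2.32  # one-tailed P < 0.01


def harmonic_z(spectrum, i, near=2, far=11):
    """z = (x - baseline mean) / baseline SD, where the baseline is the
    2nd-11th bins on each side of bin i (20 bins). Sample SD assumed."""
    if i - far < 0 or i + far >= len(spectrum):
        raise ValueError("bin too close to the spectrum edge")
    base = [spectrum[j] for d in range(near, far + 1) for j in (i - d, i + d)]
    return (spectrum[i] - statistics.fmean(base)) / statistics.stdev(base)


def significant_bins(group_spectrum, candidate_bins, threshold=Z_THRESHOLD):
    """Candidate harmonic bins whose z score on the group-averaged,
    non-subtracted spectrum exceeds the threshold."""
    return [b for b in candidate_bins if harmonic_z(group_spectrum, b) > threshold]


def overall_magnitude(noise_subtracted, bins):
    """Sum of noise-subtracted amplitudes at the retained bins."""
    return sum(noise_subtracted[b] for b in bins)


# Toy group spectrum: alternating 1.0/2.0 noise with a peak at bin 100.
group = [1.0 if k % 2 == 0 else 2.0 for k in range(200)]
group[100] = 10.0
kept = significant_bins(group, [41, 100])
print(kept)  # [100]
```

Significance is decided once on the group-level, non-subtracted spectrum; the retained bins are then summed from each participant's noise-subtracted spectrum.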

Relative Amplitude at Beat and Meter Frequencies.

To assess the relative prominence of specific frequencies in the EEG response to the unsyncopated and syncopated rhythms, amplitudes at the 12 expected frequencies elicited by each rhythm were converted into z scores, as in the analysis of the cochlear model output (18, 25, 26) (see also SI Appendix for a control analysis using a different normalization method). The z score at the beat frequency (1.25 Hz) was taken as a measure of relative amplitude at the beat frequency: the greater this value, the more the beat frequency stood out relative to the entire set of frequency components elicited by the rhythm (23). Additionally, z scores were averaged across frequencies that were related (0.416, 1.25, 2.5, and 5 Hz) or unrelated (the remaining eight frequencies) to the theoretically expected beat and meter of these rhythms (18). The greater the average z score across meter-related frequencies, the more prominent the response at these frequencies relative to all elicited frequencies. We compared z-score values at beat and meter frequencies across conditions using a 2 × 2 repeated-measures ANOVA with the factors tone frequency (low, high) and rhythm (unsyncopated, syncopated).
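The beat and meter scores can be sketched as follows, assuming the 12 amplitudes are ordered by frequency as k/2.4 Hz for k = 1 to 12, so that 1.25 Hz is the third entry and the meter-related frequencies (0.416, 1.25, 2.5, and 5 Hz) are entries 1, 3, 6, and 12. The sample-SD z score, the function name, and the amplitude values are assumptions for illustration.

```python
import statistics

# Assumed ordering: amplitude i corresponds to (i + 1) / 2.4 Hz, i = 0..11.
FREQS_HZ = [k / 2.4 for k in range(1, 13)]
BEAT_INDEX = 2                     # 3 / 2.4 = 1.25 Hz
METER_INDICES = (0, 2, 5, 11)      # 0.416, 1.25, 2.5, 5 Hz


def zscore(values):
    """(x - mean) / SD across the 12 frequencies (sample SD assumed)."""
    mu = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [(v - mu) / sd for v in values]


def beat_meter_scores(amplitudes):
    """Return (beat z, mean z at meter-related, mean z at unrelated frequencies)."""
    if len(amplitudes) != len(FREQS_HZ):
        raise ValueError("expected 12 amplitudes")
    z = zscore(amplitudes)
    meter = statistics.fmean(z[i] for i in METER_INDICES)
    unrelated = statistics.fmean(z[i] for i in range(len(z)) if i not in METER_INDICES)
    return z[BEAT_INDEX], meter, unrelated


amps = [1.0, 2.0, 8.0, 1.0, 2.0, 3.0, 1.0, 2.0, 1.0, 2.0, 1.0, 4.0]  # made-up values
beat, meter, unrelated = beat_meter_scores(amps)
```

Because the 12 z scores sum to zero, the two averages are not independent: 4 × (meter-related mean) + 8 × (unrelated mean) = 0, so a higher meter-related mean implies a correspondingly lower unrelated mean.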

Finally, the z-scored EEG response at the beat frequency in the high-tone condition was subtracted from the response in the low-tone condition, separately for the unsyncopated and syncopated rhythms. Using one-sample t tests, these difference scores were compared with the corresponding difference scores calculated from the z-scored magnitudes of the cochlear model output. The same comparison was conducted with the averaged response at the meter-related frequencies. These comparisons rest on the assumption that, if the EEG response were driven solely by early cochlear processes, the change in relative prominence between the low- and high-tone conditions should be similar in the cochlear model output and in the EEG response.
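The comparison of EEG difference scores with the cochlear-model difference can be sketched as a one-sample t test against a fixed value, since the deterministic cochlear model yields a single difference score per rhythm. The sketch computes only the t statistic and degrees of freedom with the standard library; the function names and all numeric values are hypothetical, and a P value would additionally require the t distribution (for example, `scipy.stats.ttest_1samp(sample, popmean)` returns both).

```python
import math
import statistics


def difference_scores(z_low, z_high):
    """Per-participant difference: low-tone z minus high-tone z."""
    return [lo - hi for lo, hi in zip(z_low, z_high)]


def one_sample_t(sample, popmean):
    """t = (mean - popmean) / (SD / sqrt(n)) with n - 1 degrees of freedom."""
    n = len(sample)
    se = statistics.stdev(sample) / math.sqrt(n)
    return (statistics.fmean(sample) - popmean) / se, n - 1


# Hypothetical values for illustration only.
eeg_diff = difference_scores([1.4, 2.1, 1.8, 2.6], [0.9, 1.0, 1.2, 1.1])
cochlear_diff = 0.2  # hypothetical cochlear-model difference (low - high)
t, df = one_sample_t(eeg_diff, cochlear_diff)
```

A significant positive t indicates that the low-tone advantage in the EEG exceeds what the cochlear model output alone would predict.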


Acknowledgments

S.N. is supported by the Australian Research Council (Grant DE160101064). P.E.K. is supported by a Future Fellowship Grant from the Australian Research Council (Grant FT140101162). M.V. is supported by a Discovery Project Grant from the Australian Research Council (Grant DP170104322).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1801421115/-/DCSupplemental.

References

- 1. Phillips-Silver J, Aktipis CA, Bryant GA. The ecology of entrainment: Foundations of coordinated rhythmic movement. Music Percept. 2010;28:3–14. doi: 10.1525/mp.2010.28.1.3.

- 2. Thaut MH, McIntosh GC, Hoemberg V. Neurobiological foundations of neurologic music therapy: Rhythmic entrainment and the motor system. Front Psychol. 2015;5:1185. doi: 10.3389/fpsyg.2014.01185.

- 3. Essens PJ, Povel D-J. Metrical and nonmetrical representations of temporal patterns. Percept Psychophys. 1985;37:1–7. doi: 10.3758/bf03207132.

- 4. Toiviainen P, Luck G, Thompson MR. Embodied meter: Hierarchical eigenmodes in music-induced movement. Music Percept. 2010;28:59–70.

- 5. Large EW, Herrera JA, Velasco MJ. Neural networks for beat perception in musical rhythm. Front Syst Neurosci. 2015;9:159. doi: 10.3389/fnsys.2015.00159.

- 6. Hove MJ, Keller PE, Krumhansl CL. Sensorimotor synchronization with chords containing tone-onset asynchronies. Percept Psychophys. 2007;69:699–708. doi: 10.3758/bf03193772.

- 7. Burger B, London J, Thompson MR, Toiviainen P. Synchronization to metrical levels in music depends on low-frequency spectral components and tempo. Psychol Res. 2017:1–17. doi: 10.1007/s00426-017-0894-2.

- 8. Lerdahl F, Jackendoff R. A Generative Theory of Tonal Music. MIT Press; Cambridge, MA: 1983.

- 9. Trainor LJ, Marie C, Bruce IC, Bidelman GM. Explaining the high voice superiority effect in polyphonic music: Evidence from cortical evoked potentials and peripheral auditory models. Hear Res. 2014;308:60–70. doi: 10.1016/j.heares.2013.07.014.

- 10. Pressing J. Black Atlantic rhythm: Its computational and transcultural foundations. Music Percept. 2002;19:285–310.

- 11. Stupacher J, Hove MJ, Janata P. Audio features underlying perceived groove and sensorimotor synchronization in music. Music Percept. 2016;33:571–589.

- 12. Hove MJ, Marie C, Bruce IC, Trainor LJ. Superior time perception for lower musical pitch explains why bass-ranged instruments lay down musical rhythms. Proc Natl Acad Sci USA. 2014;111:10383–10388. doi: 10.1073/pnas.1402039111.

- 13. Wojtczak M, Mehta AH, Oxenham AJ. Rhythm judgments reveal a frequency asymmetry in the perception and neural coding of sound synchrony. Proc Natl Acad Sci USA. 2017;114:1201–1206. doi: 10.1073/pnas.1615669114.

- 14. Nozaradan S. Exploring how musical rhythm entrains brain activity with electroencephalogram frequency-tagging. Philos Trans R Soc Lond B Biol Sci. 2014;369:20130393. doi: 10.1098/rstb.2013.0393.

- 15. Nozaradan S, Keller PE, Rossion B, Mouraux A. EEG frequency-tagging and input-output comparison in rhythm perception. Brain Topogr. 2018;31:153–160. doi: 10.1007/s10548-017-0605-8.

- 16. Regan D. Evoked Potentials and Evoked Magnetic Fields in Science and Medicine. Elsevier; New York: 1989.

- 17. Picton TW, John MS, Dimitrijevic A, Purcell D. Human auditory steady-state responses. Int J Audiol. 2003;42:177–219. doi: 10.3109/14992020309101316.

- 18. Nozaradan S, Peretz I, Mouraux A. Selective neuronal entrainment to the beat and meter embedded in a musical rhythm. J Neurosci. 2012;32:17572–17581. doi: 10.1523/JNEUROSCI.3203-12.2012.

- 19. Nozaradan S, et al. Intracerebral evidence of rhythm transform in the human auditory cortex. Brain Struct Funct. 2017;222:2389–2404. doi: 10.1007/s00429-016-1348-0.

- 20. Nozaradan S, Peretz I, Missal M, Mouraux A. Tagging the neuronal entrainment to beat and meter. J Neurosci. 2011;31:10234–10240. doi: 10.1523/JNEUROSCI.0411-11.2011.

- 21. Tal I, et al. Neural entrainment to the beat: The “missing-pulse” phenomenon. J Neurosci. 2017;37:6331–6341. doi: 10.1523/JNEUROSCI.2500-16.2017.

- 22. Stupacher J, Wood G, Witte M. Neural entrainment to polyrhythms: A comparison of musicians and non-musicians. Front Neurosci. 2017;11:208. doi: 10.3389/fnins.2017.00208.

- 23. Nozaradan S, Peretz I, Keller PE. Individual differences in rhythmic cortical entrainment correlate with predictive behavior in sensorimotor synchronization. Sci Rep. 2016;6:20612. doi: 10.1038/srep20612.

- 24. Nozaradan S, Schwartze M, Obermeier C, Kotz SA. Specific contributions of basal ganglia and cerebellum to the neural tracking of rhythm. Cortex. 2017;95:156–168. doi: 10.1016/j.cortex.2017.08.015.

- 25. Chemin B, Mouraux A, Nozaradan S. Body movement selectively shapes the neural representation of musical rhythms. Psychol Sci. 2014;25:2147–2159. doi: 10.1177/0956797614551161.

- 26. Cirelli LK, Spinelli C, Nozaradan S, Trainor LJ. Measuring neural entrainment to beat and meter in infants: Effects of music background. Front Neurosci. 2016;10:229. doi: 10.3389/fnins.2016.00229.

- 27. Patterson RD, Holdsworth J. A functional model of neural activity patterns and auditory images. Adv Speech Hear Lang Process. 1996;3:547–563.

- 28. Meddis R. Simulation of mechanical to neural transduction in the auditory receptor. J Acoust Soc Am. 1986;79:702–711. doi: 10.1121/1.393460.

- 29. Nozaradan S, Schönwiesner M, Keller PE, Lenc T, Lehmann A. Neural bases of rhythmic entrainment in humans: Critical transformation between cortical and lower-level representations of auditory rhythm. Eur J Neurosci. 2018;47:321–332. doi: 10.1111/ejn.13826.

- 30. Morey RD. Confidence intervals from normalized data: A correction to Cousineau (2005). Tutor Quant Meth Psychol. 2008;4:61–64.

- 31. Grube M, Griffiths TD. Metricality-enhanced temporal encoding and the subjective perception of rhythmic sequences. Cortex. 2009;45:72–79. doi: 10.1016/j.cortex.2008.01.006.

- 32. Moore BC, Peters RW, Glasberg BR. Detection of temporal gaps in sinusoids: Effects of frequency and level. J Acoust Soc Am. 1993;93:1563–1570. doi: 10.1121/1.406815.

- 33. Povel D-J, Essens PJ. Perception of temporal patterns. Music Percept. 1985;2:411–440.

- 34. Wunderlich JL, Cone-Wesson BK. Effects of stimulus frequency and complexity on the mismatch negativity and other components of the cortical auditory-evoked potential. J Acoust Soc Am. 2001;109:1526–1537. doi: 10.1121/1.1349184.

- 35. Ross B, Draganova R, Picton TW, Pantev C. Frequency specificity of 40-Hz auditory steady-state responses. Hear Res. 2003;186:57–68. doi: 10.1016/s0378-5955(03)00299-5.

- 36. Moore BCJ, Glasberg BR, Varathanathan A, Schlittenlacher J. A loudness model for time-varying sounds incorporating binaural inhibition. Trends Hear. 2016;20:2331216516682698. doi: 10.1177/2331216516682698.

- 37. Burunat I, Tsatsishvili V, Brattico E, Toiviainen P. Coupling of action-perception brain networks during musical pulse processing: Evidence from region-of-interest-based independent component analysis. Front Hum Neurosci. 2017;11:230. doi: 10.3389/fnhum.2017.00230.

- 38. Merchant H, Grahn J, Trainor L, Rohrmeier M, Fitch WT. Finding the beat: A neural perspective across humans and non-human primates. Philos Trans R Soc Lond B Biol Sci. 2015;370:20140093. doi: 10.1098/rstb.2014.0093.

- 39. Kung S-J, Chen JL, Zatorre RJ, Penhune VB. Interacting cortical and basal ganglia networks underlying finding and tapping to the musical beat. J Cogn Neurosci. 2013;25:401–420. doi: 10.1162/jocn_a_00325.

- 40. Morillon B, Baillet S. Motor origin of temporal predictions in auditory attention. Proc Natl Acad Sci USA. 2017;114:E8913–E8921. doi: 10.1073/pnas.1705373114.

- 41. Todd NPM, Lee CS. Source analysis of electrophysiological correlates of beat induction as sensory-guided action. Front Psychol. 2015;6:1178. doi: 10.3389/fpsyg.2015.01178.

- 42. Patel AD, Iversen JR. The evolutionary neuroscience of musical beat perception: The action simulation for auditory prediction (ASAP) hypothesis. Front Syst Neurosci. 2014;8:57. doi: 10.3389/fnsys.2014.00057.

- 43. Todd NPM, Lee CS. The sensory-motor theory of rhythm and beat induction 20 years on: A new synthesis and future perspectives. Front Hum Neurosci. 2015;9:444. doi: 10.3389/fnhum.2015.00444.

- 44. Trainor LJ, Gao X, Lei JJ, Lehtovaara K, Harris LR. The primal role of the vestibular system in determining musical rhythm. Cortex. 2009;45:35–43. doi: 10.1016/j.cortex.2007.10.014.

- 45. Trainor LJ, Unrau A. Extracting the beat: An experience-dependent complex integration of multisensory information involving multiple levels of the nervous system. Empir Music Rev. 2009;4:32–36.

- 46. Todd NPM, Cody FWJ, Banks JR. A saccular origin of frequency tuning in myogenic vestibular evoked potentials? Implications for human responses to loud sounds. Hear Res. 2000;141:180–188. doi: 10.1016/s0378-5955(99)00222-1.

- 47. Witek MAG. Filling in: Syncopation, pleasure and distributed embodiment in groove. Music Anal. 2017;36:138–160.

- 48. Witek MAG, Clarke EF, Kringelbach ML, Vuust P. Effects of polyphonic context, instrumentation, and metrical location on syncopation in music. Music Percept. 2014;32:201–217.

- 49. Wesolowski BC, Hofmann A. There’s more to groove than bass in electronic dance music: Why some people won’t dance to techno. PLoS One. 2016;11:e0163938. doi: 10.1371/journal.pone.0163938.

- 50. Large EW, Jones MR. The dynamics of attending: How people track time-varying events. Psychol Rev. 1999;106:119–159.

- 51. Bergeson TR, Trehub SE. Infants’ perception of rhythmic patterns. Music Percept. 2006;23:345–360.

- 52. Hove MJ, Keller PE. Impaired movement timing in neurological disorders: Rehabilitation and treatment strategies. Ann N Y Acad Sci. 2015;1337:111–117. doi: 10.1111/nyas.12615.

- 53. Burger B, Thompson MR, Luck G, Saarikallio S, Toiviainen P. Music moves us: Beat-related musical features influence regularity of music-induced movement. In: Cambouropoulos E, Tsougras C, Mavromatis P, Pastiadis K, editors. Proceedings of the ICMPC-ESCOM 2012 Joint Conference: 12th Biennial International Conference for Music Perception and Cognition and the 8th Triennial Conference of the European Society for the Cognitive Sciences of Music. School of Music Studies, Aristotle University of Thessaloniki; Thessaloniki, Greece: 2012. pp. 183–187.

- 54. Longuet-Higgins HC, Lee CS. The rhythmic interpretation of monophonic music. Music Percept. 1984;1:424–440.

- 55. Slaney M. Auditory Toolbox, Version 2. Interval Research Corporation Technical Report 1998-010. Interval Research Corporation; Palo Alto, CA: 1998.

- 56. Mouraux A, et al. Nociceptive steady-state evoked potentials elicited by rapid periodic thermal stimulation of cutaneous nociceptors. J Neurosci. 2011;31:6079–6087. doi: 10.1523/JNEUROSCI.3977-10.2011.

- 57. Tierney A, Kraus N. Neural entrainment to the rhythmic structure of music. J Cogn Neurosci. 2015;27:400–408. doi: 10.1162/jocn_a_00704.

- 58. Rossion B, Torfs K, Jacques C, Liu-Shuang J. Fast periodic presentation of natural images reveals a robust face-selective electrophysiological response in the human brain. J Vis. 2015;15:18. doi: 10.1167/15.1.18.
