Abstract

A competitive scheme for economic storage of the informational content of an X-Ray image, as it can be used for further processing, is presented. It is demonstrated that sparse representation of that type of data can be encapsulated in a small file without affecting the quality of the recovered image. The proposed representation, which is inscribed within the context of data reduction, provides a format for saving the image information in a way that could assist methodologies for analysis and classification. The competitiveness of the resulting file is compared against the compression standards JPEG and JPEG2000.

1 Introduction

Sparse image representation refers to particular techniques for data reduction. Rather than representing the informational content of an image by the intensity of its pixels, the image is transformed with the aim of reducing the number of data points needed to reproduce equivalent information. Lessening the cardinality of medical data is crucial for remote diagnosis and treatment [1–4]. Interest in this matter within the area of medical technology has recently been invigorated by the prospect of earlier disease detection, using neural networks and deep learning methodologies for automatic analysis of X-Ray plates [5–7].

In recent work [8] we have demonstrated that sparse representation, obtained by a large dictionary and greedy algorithms, renders high quality approximation of X-Ray images. The framework was shown to produce approximations which are far sparser than those arising from traditional transformations such as the Cosine and Wavelet Transforms. Nevertheless, for the approximation to be useful within the context of automatic health care systems and remote reporting, it is necessary to ensure that the high levels of achieved sparsity are not affected by saving the reduced data in a small file. This paper follows on from the study in [8] by presenting a simple scheme to store the sparse approximation of an X-Ray medical image in a file of competitive size with respect to the most commonly used formats, namely JPEG and JPEG2000 (JPEG2).

Although in general X-Ray images are sparser if the approximation is performed in the wavelet domain, for analysis purposes the approximation in the pixel intensity domain may be needed. Hence, we consider here approximations in both domains. It is pertinent to stress that the aim of this work is not to propose yet one more method for image compression, but a method for economic storage of the informational content of an X-Ray image as it can be used for further processing [7, 9–15]. The comparison of the resulting file size, against those produced by JPEG and JPEG2, is carried out with the purpose of illustrating the effectiveness of the proposed scheme.

2 Sparse image representation

Throughout the paper $\mathbb{R}$ stands for the set of real numbers. Lower-case boldface letters are used to represent vectors and upper-case boldface letters to represent matrices. Standard mathematical fonts indicate their corresponding components, e.g., $\mathbf{d} \in \mathbb{R}^{N}$ is a vector of components d(i), i = 1, …, N and $\mathbf{I} \in \mathbb{R}^{N_x \times N_y}$ a matrix of elements I(i, j), i = 1, …, Nx, j = 1, …, Ny.

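Suppose that an image, given as a 2D array $\mathbf{I} \in \mathbb{R}^{N_x \times N_y}$ of intensity values, is to be approximated by the linear decomposition

$$\mathbf{I}^k = \sum_{n=1}^{k} c(n)\, \mathbf{D}_{\ell_n}, \tag{1}$$

where each c(n) is a scalar and each $\mathbf{D}_{\ell_n}$ is an element of $\mathbb{R}^{N_x \times N_y}$ normalized to unity, called an ‘atom’, to be selected from a redundant set, $\mathcal{D} = \{\mathbf{D}_n\}_{n=1}^{M}$, called a ‘dictionary’. The selection of the atoms in (1) is carried out according to an optimality criterion.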

The goal of a sparse representation is to produce an approximation Ik, of an image I, using as few atoms as possible. The mathematical methods for performing the task are either based on the minimization of the l1-norm [16, 17] or are greedy strategies for stepwise selection of atoms from the dictionary [18, 19]. When using large dictionaries, the latter are especially suited for practical applications. We focus here on the greedy algorithm known as Orthogonal Matching Pursuit (OMP), which is very effective at furnishing sparse solutions. In spite of the fact that the method is computationally intensive, the implementation described in the next section is very efficient in terms of processing time.

2.1 Orthogonal Matching Pursuit in 2D

The OMP method was introduced in [19] and has been implemented by a number of different algorithms. We describe a particular implementation for 2D, henceforth referred to as OMP2D. The dictionary is restricted to be separable, i.e. a 2D dictionary which corresponds to the tensor product of two 1D dictionaries, $\mathcal{D} = \mathcal{D}^x \otimes \mathcal{D}^y$. Our implementation of OMP2D is based on adaptive biorthogonalization and Gram-Schmidt orthogonalization procedures, as proposed in [20] for the one dimensional case. For the convenience of the interested researcher we include the description of its generalization to separable 2D dictionaries, as it is implemented by the MATLAB and C++ MEX functions we have made available.

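Given a gray level intensity image, $\mathbf{I} \in \mathbb{R}^{N_x \times N_y}$, and two 1D dictionaries $\mathcal{D}^x = \{\mathbf{d}^x_n \in \mathbb{R}^{N_x}\}_{n=1}^{M_x}$ and $\mathcal{D}^y = \{\mathbf{d}^y_m \in \mathbb{R}^{N_y}\}_{m=1}^{M_y}$, we approximate the array $\mathbf{I}$ by the atomic decomposition of the form:

$$\mathbf{I}^k = \sum_{n=1}^{k} c(n)\, \mathbf{d}^x_{\ell^x_n} \left(\mathbf{d}^y_{\ell^y_n}\right)^{\top}, \tag{2}$$

where $(\mathbf{d}^y)^{\top}$ indicates the transpose of the column vector $\mathbf{d}^y$. The OMP2D approach determines the atoms in (2) as follows: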

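On setting k = 0 and $\mathbf{R}^0 = \mathbf{I}$, at iteration k + 1 the algorithm selects the elements $\mathbf{d}^x_{\ell^x_{k+1}}$ and $\mathbf{d}^y_{\ell^y_{k+1}}$ corresponding to the indices obtained as

$$\ell^x_{k+1}, \ell^y_{k+1} = \underset{\substack{n = 1, \ldots, M_x \\ m = 1, \ldots, M_y}}{\arg\max} \left| \left\langle \mathbf{d}^x_n, \mathbf{R}^k \mathbf{d}^y_m \right\rangle \right|, \quad \text{with} \quad \mathbf{R}^k = \mathbf{I} - \sum_{n=1}^{k} c(n)\, \mathbf{d}^x_{\ell^x_n} \left(\mathbf{d}^y_{\ell^y_n}\right)^{\top}. \tag{3}$$

The coefficients in (3) are such that $\|\mathbf{R}^k\|_F$ is minimum ($\|\cdot\|_F$ being the Frobenius norm induced by the Frobenius inner product $\langle \cdot, \cdot \rangle_F$). This is guaranteed by calculating the coefficients as

$$c(n) = \left\langle \mathbf{B}^k_n, \mathbf{I} \right\rangle_F, \quad n = 1, \ldots, k, \tag{4}$$

where the matrices $\mathbf{B}^k_n$, n = 1, …, k, are recursively constructed, at each iteration, to account for each newly selected atom. Writing $\mathbf{A}_n = \mathbf{d}^x_{\ell^x_n} (\mathbf{d}^y_{\ell^y_n})^{\top}$ and starting from $\mathbf{B}^1_1 = \mathbf{W}_1 = \mathbf{A}_1$, the set of matrices is upgraded and updated through the formula:

$$\begin{aligned}
\mathbf{B}^{k+1}_n &= \mathbf{B}^k_n - \mathbf{B}^{k+1}_{k+1} \left\langle \mathbf{A}_{k+1}, \mathbf{B}^k_n \right\rangle_F, \quad n = 1, \ldots, k, \\
\mathbf{B}^{k+1}_{k+1} &= \frac{\mathbf{W}_{k+1}}{\|\mathbf{W}_{k+1}\|_F^2}, \\
\mathbf{W}_{k+1} &= \mathbf{A}_{k+1} - \sum_{n=1}^{k} \frac{\mathbf{W}_n}{\|\mathbf{W}_n\|_F^2} \left\langle \mathbf{W}_n, \mathbf{A}_{k+1} \right\rangle_F.
\end{aligned} \tag{5}$$

For numerical accuracy of the orthogonal set $\{\mathbf{W}_n\}$ at least one re-orthogonalization step is usually needed. It requires the recalculation of the element $\mathbf{W}_{k+1}$ as

$$\mathbf{W}_{k+1} \leftarrow \mathbf{W}_{k+1} - \sum_{n=1}^{k} \frac{\mathbf{W}_n}{\|\mathbf{W}_n\|_F^2} \left\langle \mathbf{W}_n, \mathbf{W}_{k+1} \right\rangle_F. \tag{6}$$

The algorithm iterates until, for a given parameter ρ, the stopping criterion $\|\mathbf{I} - \mathbf{I}^k\|_F < \rho$ is met.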
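For illustration only, the selection, orthogonalization and biorthogonal update steps of a greedy pursuit with a separable dictionary can be sketched in plain Python as below. This is not the MATLAB/C++ MEX implementation referred to above: the function names (`omp2d`, `reconstruct`), the list-based storage and the stopping test on the residual Frobenius norm are assumptions made for the sketch, and no attempt is made at efficiency.

```python
import math

def dot(u, v):
    """Frobenius inner product of two arrays stored as flat lists."""
    return sum(a * b for a, b in zip(u, v))

def rank_one(dx, dy):
    """Row-major flattening of the separable atom d^x (d^y)^T."""
    return [a * b for a in dx for b in dy]

def omp2d(image, Dx, Dy, rho, max_atoms=None):
    """Greedy atom selection with biorthogonal coefficient update (sketch)."""
    nx, ny = len(image), len(image[0])
    flat = [v for row in image for v in row]
    R = flat[:]                     # residual, R^0 = I
    W, B, pairs = [], [], []        # orthogonal set, biorthogonal set, index pairs
    limit = max_atoms if max_atoms is not None else nx * ny
    while math.sqrt(dot(R, R)) >= rho and len(pairs) < limit:
        # Selection: maximise |<d^x_n, R d^y_m>| using only the 1D dictionaries.
        rows = [R[i * ny:(i + 1) * ny] for i in range(nx)]
        R_dy = [[dot(row, dy) for row in rows] for dy in Dy]
        best, choice = 0.0, None
        for n, dx in enumerate(Dx):
            for m in range(len(Dy)):
                val = abs(dot(dx, R_dy[m]))
                if val > best and (n, m) not in pairs:
                    best, choice = val, (n, m)
        if choice is None:
            break
        A = rank_one(Dx[choice[0]], Dy[choice[1]])
        # Gram-Schmidt step followed by one re-orthogonalisation pass.
        w = A[:]
        for _ in range(2):
            for wn in W:
                f = dot(wn, w) / dot(wn, wn)
                w = [a - f * b for a, b in zip(w, wn)]
        norm2 = dot(w, w)
        if norm2 < 1e-20:
            break                   # atom numerically dependent on earlier ones
        b_new = [a / norm2 for a in w]
        # Update the earlier biorthogonal matrices, then append the new one.
        updated = []
        for b in B:
            g = dot(A, b)
            updated.append([bi - g * bn for bi, bn in zip(b, b_new)])
        B = updated + [b_new]
        W.append(w)
        pairs.append(choice)
        f = dot(w, R) / norm2       # remove the new orthogonal direction
        R = [r - f * a for r, a in zip(R, w)]
    coeffs = [dot(b, flat) for b in B]
    return pairs, coeffs

def reconstruct(pairs, coeffs, Dx, Dy, nx, ny):
    """Sum of the selected separable atoms weighted by their coefficients."""
    out = [0.0] * (nx * ny)
    for (n, m), c in zip(pairs, coeffs):
        out = [o + c * a for o, a in zip(out, rank_one(Dx[n], Dy[m]))]
    return [out[i * ny:(i + 1) * ny] for i in range(nx)]
```

Note that the selection step only uses products with the 1D atoms, so the 2D dictionary is never stored; the 2D atom is formed only for the pair that has been selected.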

2.2 Approximation by partitioning

The OMP2D approach described above is to be applied on an image partition. This implies the division of the image into small disjoint blocks $\mathbf{I}_q$, q = 1, …, Q, which without loss of generality we assume to be square of dimension Nb × Nb. The blocks are approximated, independently of each other, to produce the approximations $\mathbf{I}^{k_q}_q$, q = 1, …, Q, of the form (2). The superscript kq indicates the number of atoms intervening in the decomposition of the block q. As already mentioned, although the approximation of most X-Ray images is significantly sparser if performed in the wavelet domain, results in the pixel intensity domain may be required for particular applications.
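As a minimal sketch of the partition step, the following Python functions split an image into disjoint square blocks and join them back. The names `partition` and `join`, the row-major ordering of the blocks and the requirement that both image dimensions be multiples of the block size are assumptions of this example, not details taken from the provided software.

```python
def partition(image, nb):
    """Split a 2D list into disjoint nb x nb blocks, ordered row by row."""
    nx, ny = len(image), len(image[0])
    if nx % nb or ny % nb:
        raise ValueError("image dimensions must be multiples of the block size")
    return [[row[j:j + nb] for row in image[i:i + nb]]
            for i in range(0, nx, nb)
            for j in range(0, ny, nb)]

def join(blocks, nx, ny, nb):
    """Inverse of partition: place each block back at its original position."""
    image = [[0] * ny for _ in range(nx)]
    per_row = ny // nb
    for q, block in enumerate(blocks):
        i0, j0 = (q // per_row) * nb, (q % per_row) * nb
        for i, row in enumerate(block):
            image[i0 + i][j0:j0 + nb] = row
    return image
```

Because the blocks are disjoint, each one can be approximated independently of the others and the results joined afterwards.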

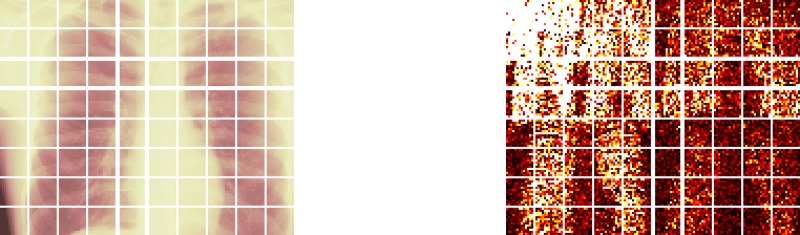

The approximation in the wavelet domain involves a transformation, via a Wavelet Transform, which converts the image into the array to be approximated. The inverse transformation is taken after the approximation is concluded. Fig 1 provides graphical illustrations of image partitions in the pixel intensity and the wavelet domain.

Fig 1. Portion of a chest image.

Illustration of an X-Ray image partition in the pixel intensity domain (left graph) and in the wavelet domain (right graph). The image size is 256 × 320 pixels and the partition is illustrated by blocks of 32 × 32 pixels. The colours are added for visual effect.

Remark 1: The OMP2D algorithm described in Sec. 2.1 is very effective up to some block size. While the actual size depends on the sparsity of the image, previous studies indicate that in general for block sizes greater than 24 × 24 an alternative implementation is advisable. The alternative implementation, called Self Projected Matching Pursuit (SPMP2D) [8, 21] is not only dedicated to tackling large dimensional problems, but also potentially suitable for implementation in Graphic Processing Unit (GPU) programming. However, because for X-Ray medical images a partition into blocks of size 16 × 16 is a good compromise between sparsity and processing time, we have focussed on the implementation of OMP2D as given in Sec. 2.1. Other possibilities for approximating a partition are discussed in [8, 22].

2.3 Mixed dictionaries

The available literature in relation to the construction of dictionaries for image representation is mainly concerned with methodologies for learning atoms from training data [23–28]. Those methodologies are not designed for learning the types of dictionaries of our interest though. We restrict the dictionary to be separable, in order to reduce the computational burden and memory requirements. In previous works [8, 21, 29] we have demonstrated that very large separable dictionaries, which are easy to construct, render high levels of sparsity. Such dictionaries are not specific to a particular class of images. A distinction is only made to take into account whether the approximation is carried out in the pixel intensity or in the wavelet domain. In each domain we use mixed dictionaries which are similar in nature, but not equal. They have the trigonometric dictionaries $\mathcal{D}^x_C$ and $\mathcal{D}^x_S$, defined below, as common components.

$$\mathcal{D}^x_C = \left\{ w_c(n) \cos\frac{\pi (2i - 1)(n - 1)}{2M},\; i = 1, \ldots, N \right\}_{n=1}^{M}, \qquad \mathcal{D}^x_S = \left\{ w_s(n) \sin\frac{\pi (2i - 1) n}{2M},\; i = 1, \ldots, N \right\}_{n=1}^{M},$$

where wc(n) and ws(n), n = 1, …, M are normalization factors, and usually M = 2N.
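As an illustrative sketch, redundant trigonometric dictionaries of this kind can be generated as follows. The sketch assumes cosine atoms of the form cos(π(2i − 1)(n − 1)/(2M)) and sine atoms of the form sin(π(2i − 1)n/(2M)), i = 1, …, N, n = 1, …, M, each scaled to unit Euclidean norm; the function name `trig_dictionaries` is hypothetical.

```python
import math

def trig_dictionaries(N, M=None):
    """Return (cosine_atoms, sine_atoms), each a list of M unit-norm vectors of length N."""
    M = 2 * N if M is None else M          # redundancy 2 by default

    def normalised(values):
        norm = math.sqrt(sum(v * v for v in values))
        return [v / norm for v in values]  # plays the role of w_c(n) or w_s(n)

    cosine = [normalised([math.cos(math.pi * (2 * i - 1) * (n - 1) / (2 * M))
                          for i in range(1, N + 1)])
              for n in range(1, M + 1)]
    sine = [normalised([math.sin(math.pi * (2 * i - 1) * n / (2 * M))
                        for i in range(1, N + 1)])
            for n in range(1, M + 1)]
    return cosine, sine
```

With N = 16 and the default M = 2N, each dictionary contains 32 atoms of length 16, so the concatenation of the two is already four times redundant before any further component is added.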

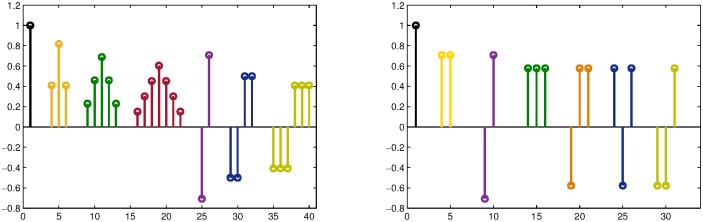

For approximations in the wavelet domain we add the dictionary , as proposed in [8], which is built by translation of the prototype atoms in the right graph of Fig 2. The mixed dictionary , to be used only in the wavelet domain, is formed as and .

Fig 2. Prototype atoms, which generate by translation the dictionaries (left) and (right).

For approximations in the pixel intensity domain we add the dictionary, , which is built by translation of the prototype atoms in the left graph of Fig 2. In this case the mixed dictionary is formed as and .

The corresponding 2D dictionaries and are very large, but not needed as such. All calculations are carried out using the 1D dictionaries.

3 Coding strategy

For the sparse representation of an X-Ray image to be useful within the current trend of medical technology developments it should be suitable to be encapsulated in a small file. Accordingly, the coefficients of the atomic decompositions need to be converted into integer numbers. This operation is known as quantization. We adopt a simple and commonly used uniform quantization technique. For q = 1, …, Q the absolute values of the coefficients, |cq(n)|, n = 1, …, kq, are converted to integers as follows:

$$c^{\Delta}_q(n) = \begin{cases} \left\lceil \dfrac{|c_q(n)|}{\Delta} - \dfrac{1}{2} \right\rceil & \text{if } |c_q(n)| \ge \theta, \\ 0 & \text{otherwise,} \end{cases} \tag{7}$$

where ⌈x⌉ indicates the smallest integer number greater than or equal to x, Δ is the quantization parameter, and θ the threshold to disregard coefficients of small magnitude. The signs of the coefficients are encoded separately, as a vector sq, using a binary alphabet.
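A minimal sketch of the quantization step is given below. It assumes that the quantizer maps |c| to ⌈|c|/Δ − 1/2⌉ when |c| ≥ θ and to zero otherwise, that coefficients mapped to zero are dropped, and that the sign is coded as 0 (positive) or 1 (negative). The function names are hypothetical.

```python
import math

def quantize(coeffs, delta, theta):
    """Return positions, integer magnitudes and binary signs of the kept coefficients."""
    kept, magnitudes, signs = [], [], []
    for n, c in enumerate(coeffs):
        if abs(c) >= theta:                       # small coefficients are disregarded
            kept.append(n)
            magnitudes.append(math.ceil(abs(c) / delta - 0.5))
            signs.append(1 if c < 0 else 0)
    return kept, magnitudes, signs

def dequantize(magnitudes, signs, delta):
    """Map integer magnitudes back to real coefficients and restore the signs."""
    return [(-1 if s else 1) * delta * m for m, s in zip(magnitudes, signs)]
```

With θ = 1.3Δ, as fixed later in the text, every kept coefficient satisfies |c|/Δ − 1/2 ≥ 0.8, so its quantized magnitude is at least 1 and never collides with the value 0.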

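In order to store the information about the particular atoms present in the approximation of each block, we proceed as follows. Firstly, each pair of indices $(\ell^{x,q}_n, \ell^{y,q}_n)$ corresponding to the atoms in the decomposition of the block $\mathbf{I}_q$ is mapped into a single index oq(n). Then the set oq(1), …, oq(kq) is sorted in ascending order, $o_q(n) \to \tilde{o}_q(n)$, n = 1, …, kq. This guarantees that, for each q-value, $\tilde{o}_q(n) < \tilde{o}_q(n+1)$, n = 1, …, kq − 1. The order of the indices induces an order in the unsigned quantized coefficients, $c^{\Delta}_q \to \tilde{c}^{\Delta}_q$, and in the corresponding signs, $s_q \to \tilde{s}_q$. The advantage introduced by the ascending order of the indices is that they can be stored as smaller positive numbers, by taking differences between two consecutive values. Indeed, by defining $\delta_q(n) = \tilde{o}_q(n) - \tilde{o}_q(n-1)$, n = 2, …, kq, the string $\tilde{o}_q(1), \delta_q(2), \ldots, \delta_q(k_q)$ stores the indices for the block q with unique recovery. The number 0 is then used to separate the strings corresponding to different blocks:

$$st_{\rm ind} = [\tilde{o}_1(1), \ldots, \delta_1(k_1), 0, \ldots, \tilde{o}_Q(1), \ldots, \delta_Q(k_Q)]. \tag{8}$$

The quantized magnitudes of the re-ordered coefficients are concatenated in the string $st_{\rm cf}$ as follows:

$$st_{\rm cf} = [\tilde{c}^{\Delta}_1(1), \ldots, \tilde{c}^{\Delta}_1(k_1), \ldots, \tilde{c}^{\Delta}_Q(1), \ldots, \tilde{c}^{\Delta}_Q(k_Q)]. \tag{9}$$

Using 0 if the sign is positive and 1 if it is negative, the signs of the coefficients are placed in the string $st_{\rm sg}$ as

$$st_{\rm sg} = [\tilde{s}_1(1), \ldots, \tilde{s}_1(k_1), \ldots, \tilde{s}_Q(1), \ldots, \tilde{s}_Q(k_Q)]. \tag{10}$$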
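The construction of the three strings can be sketched as follows. The mapping of a pair of 1-based indices (ℓx, ℓy) into the single index o = (ℓx − 1)·My + ℓy is an assumption of this example (the text does not specify the mapping); any one-to-one mapping producing values of at least 1 would serve, so that 0 remains free to act as separator. The function name `encode_blocks` is hypothetical.

```python
def encode_blocks(blocks, My):
    """Build the index, magnitude and sign strings.

    blocks: one list per block of tuples (lx, ly, magnitude, sign),
            with 1-based atom indices and sign 0 (positive) or 1 (negative).
    My:     number of atoms in the second 1D dictionary (assumed mapping).
    """
    st_ind, st_cf, st_sg = [], [], []
    for q, atoms in enumerate(blocks):
        # Map each index pair to a single index and sort in ascending order;
        # magnitudes and signs follow the order induced by the indices.
        ordered = sorted(((lx - 1) * My + ly, mag, sg) for lx, ly, mag, sg in atoms)
        if q > 0:
            st_ind.append(0)            # separator between consecutive blocks
        previous = 0
        for o, mag, sg in ordered:
            st_ind.append(o - previous)  # first entry is the index itself
            previous = o
            st_cf.append(mag)
            st_sg.append(sg)
    return st_ind, st_cf, st_sg
```

For example, with My = 4 a block containing the pairs (2, 3) and (1, 2) yields the single indices 7 and 2, which are stored as 2 followed by the difference 5.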

The next encoding/decoding scheme summarizes the above described procedure.

Encoding

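- Given an image partition $\mathbf{I}_q$, q = 1, …, Q, use OMP2D (or another method) to approximate each element of the partition by the atomic decomposition

  $$\mathbf{I}^{k_q}_q = \sum_{n=1}^{k_q} c_q(n)\, \mathbf{d}^x_{\ell^{x,q}_n} \left(\mathbf{d}^y_{\ell^{y,q}_n}\right)^{\top}. \tag{11}$$

  The approximation is carried out on each block, independently of the others, until the stopping criterion is reached.

- For each q, quantize as in (7) the magnitude of the coefficients in the decomposition (11) to obtain $c^{\Delta}_q(n)$, n = 1, …, kq. Store the signs of the non-zero coefficients as components of a vector sq.

- For each q, map the pair of indices $(\ell^{x,q}_n, \ell^{y,q}_n)$ in (11) into a single index oq(n), n = 1, …, kq, and sort these numbers in ascending order to have the re-ordered sets $\tilde{o}_q(1), \ldots, \tilde{o}_q(k_q)$; $\tilde{c}^{\Delta}_q(1), \ldots, \tilde{c}^{\Delta}_q(k_q)$; and $\tilde{s}_q(1), \ldots, \tilde{s}_q(k_q)$, and to create the strings $st_{\rm ind}$, as in (8), and $st_{\rm cf}$ and $st_{\rm sg}$, as in (9) and (10) respectively.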

Let us recall the content of the file encoded by the above steps:

- $st_{\rm ind}$ contains the differences of the indices corresponding to the atoms in the approximation of each of the blocks in the image partition.

- $st_{\rm cf}$ contains the magnitude of the corresponding coefficients (quantized to integer numbers).

- $st_{\rm sg}$ contains the signs of the coefficients in binary format.

The quantization parameter Δ also needs to be stored in the file. We fix θ = 1.3Δ for all the images.

Decoding

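- Recover the indices from their differences. This operation also gives the number of coefficients in each block.

- Read the quantized unsigned coefficients from the string $st_{\rm cf}$ and transform them into real numbers as $|\tilde{c}^{\,\rm r}_q(n)| = \Delta\, \tilde{c}^{\Delta}_q(n)$. Read the corresponding signs from the string $st_{\rm sg}$.

- Recover the approximated partition, for each block, through the linear combination

  $$\mathbf{I}^{{\rm r}, k_q}_q = \sum_{n=1}^{k_q} \tilde{c}^{\,\rm r}_q(n)\, \mathbf{d}^x_{\tilde{\ell}^{x,q}_n} \left(\mathbf{d}^y_{\tilde{\ell}^{y,q}_n}\right)^{\top},$$

  where $\tilde{c}^{\,\rm r}_q(n)$ denotes the recovered coefficient with its sign restored.

- Assemble the recovered image as

  $$\mathbf{I}^{\rm r} = \hat{\mathrm{J}}_{q=1}^{Q}\, \mathbf{I}^{{\rm r}, k_q}_q,$$

  where $\hat{\mathrm{J}}$ indicates the operation for joining the blocks to restore the image.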
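The first two decoding steps can be sketched as below. The sketch assumes that the index string stores, for each block, the first single index followed by consecutive differences, with 0 separating blocks; that a single index o corresponds to the 1-based pair (ℓx, ℓy) through o = (ℓx − 1)·My + ℓy; and that magnitudes are recovered by multiplying by Δ. The mapping and the function name `decode` are assumptions, not details of the provided scripts.

```python
def decode(st_ind, st_cf, st_sg, My, delta):
    """Return, for each block, a list of ((lx, ly), signed coefficient)."""
    blocks, current = [], []
    running, pos = 0, 0
    for value in st_ind:
        if value == 0:                   # separator: close the current block
            blocks.append(current)
            current, running = [], 0
            continue
        running += value                 # cumulative sum undoes the differences
        lx, ly = divmod(running - 1, My)
        magnitude = delta * st_cf[pos]   # back to a real number
        coeff = -magnitude if st_sg[pos] == 1 else magnitude
        current.append(((lx + 1, ly + 1), coeff))
        pos += 1
    blocks.append(current)
    return blocks
```

The length of each recovered list is the number of coefficients in the corresponding block, so that number does not need to be stored separately.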

Note: The MATLAB scripts for implementing the scheme and reproducing the results presented in the next section have been made available on [30].

3.1 Evaluation metrics

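The quality of the approximation is quantified by the Mean Structural SIMilarity (MSSIM) index [31, 32] and the classical Peak Signal-to-Noise Ratio (PSNR), calculated as

$$\mathrm{PSNR} = 10 \log_{10} \left( \frac{(2^{l_b} - 1)^2}{\mathrm{MSE}} \right), \qquad \mathrm{MSE} = \frac{\|\mathbf{I} - \mathbf{I}^K\|_F^2}{N_x N_y}, \tag{12}$$

where $l_b$ is the number of bits used to represent the intensity of a pixel and $\mathbf{I}^K$ is the approximation of the whole image.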

The required MSSIM is set to be MSSIM ≥ 0.997. This limit guarantees that the approximation is indistinguishable from the image, in the original size (it should be noticed that the MSSIM between an image and itself is 1). For the comparison with standard formats all the PSNRs are fixed as values for which JPEG and JPEG2 produce the required MSSIM. For producing a requested PSNR with the sparse representation approach we proceed as follows: the approximation routine is set to yield a slightly larger value of PSNR and the required one is then obtained by tuning the quantization parameter Δ.
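For reference, the PSNR computation can be sketched as follows, assuming the usual definition in terms of the mean squared error and a peak value of 2^bits − 1 (255 for 8-bit images). The function name `psnr` is hypothetical; the MSSIM index is not reproduced here.

```python
import math

def psnr(original, approximation, bits=8):
    """Peak Signal-to-Noise Ratio, in dB, between two images given as 2D lists."""
    count = sum(len(row) for row in original)
    squared_error = sum((a - b) ** 2
                        for row_a, row_b in zip(original, approximation)
                        for a, b in zip(row_a, row_b))
    mse = squared_error / count
    if mse == 0:
        return math.inf                 # identical images
    peak = 2 ** bits - 1
    return 10 * math.log10(peak ** 2 / mse)
```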

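As a measure of sparsity we use the Sparsity Ratio, which is defined as [33]:

$$\mathrm{SR} = \frac{\text{Number of pixels in the image}}{\text{Number of coefficients in the representation}}. \tag{13}$$

Accordingly, the sparsity of a representation is manifested as a high value of SR.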

In addition to the SR, which is a global measure of sparsity, a meaningful description of the variation of the image content throughout the partition is rendered by the local sparsity ratio, which is given as

$$sr(q) = \frac{N_b^2}{k_q}, \quad q = 1, \ldots, Q, \tag{14}$$

where kq is the number of coefficients in the decomposition of the q-block and $N_b^2$ is the number of pixels in the block.
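Both measures follow directly from the number of coefficients per block, as the short sketch below shows. It takes the global ratio as the number of pixels divided by the total number of coefficients and the local ratio as the number of pixels in a block divided by the number of coefficients of that block; the function names are hypothetical, and a block with no coefficients is assigned an infinite local ratio by convention of this example.

```python
import math

def sparsity_ratio(nx, ny, counts):
    """Global SR: pixels in the image over total number of coefficients."""
    return nx * ny / sum(counts)

def local_sparsity_ratio(nb, counts):
    """Local sr(q): pixels in a block over coefficients in that block."""
    return [nb * nb / k if k else math.inf for k in counts]
```

For a 32 × 32 image partitioned into four 16 × 16 blocks with 8, 16, 32 and 8 coefficients, the global SR is 1024/64 = 16, while the local values are 32, 16, 8 and 32.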

3.2 Data sets

We illustrate the effectiveness of the proposed encoding scheme by storing the outputs of high quality sparse approximation of two data sets: 1) the Lukas 2D 8 bit medical image corpus, available on [34], and 2) a sample of 25 X-Ray chest images taken randomly from the National Institutes of Health (NIH) dataset available on [35].

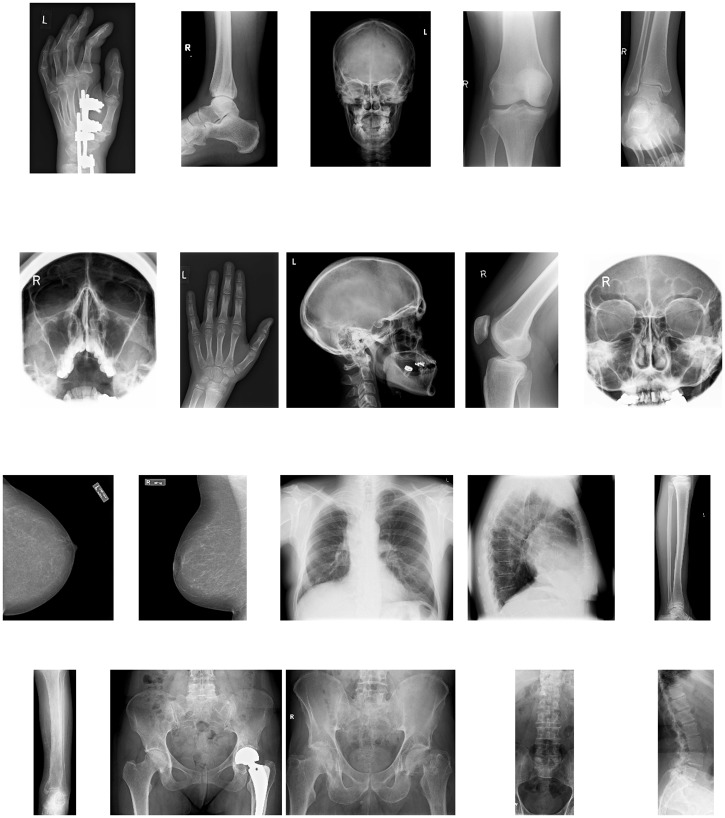

The Lukas corpus consists of 20 images which cover different parts of the human body, as shown in Fig 3. The average size of the images in the set is 1943 × 1364 pixels.

Fig 3. Lukas data set.

The 20 images in the Lukas Corpus [34] listed in Table 1. First row: Hand1, Foot0, Head0, Knee1, Foot1. Second row: Sinus0, Hand0, Head1, Knee0, Sinus1. Third row: Breast0, Breast1, Thorax0, Thorax1, Leg0. Fourth row: Leg1, Pelvis1, Pelvis0, Spine1, Spine0. The average size of these images is 1943 × 1364 pixels.

The chest X-Ray images are available in the raster graphics file format Portable Network Graphics (PNG). Information related to the NIH X-Ray collection is given in [36]. The 25 images used here have been placed on [30]. The sample consists of common views of the chest in radiology: the posteroanterior, anteroposterior, and lateral views, which are obtained by changing the relative orientation of the body and the direction of the X-Ray beam. The images in this set are of similar size and smaller than those in the Lukas corpus. The average size is 496 × 512 pixels.

4 Results

The approximations of all the images in the Lukas corpus are performed in both the pixel intensity and the wavelet domain. The size of the blocks in the image partition is fixed taking into account previously reported results [8, 21], which indicate that 16 × 16 is a good trade-off between the resulting sparsity and the processing time. The information about the sizes of the corresponding files is given in bits per pixel (bpp) in the third and fourth columns of Table 1. The results for JPEG and JPEG2 are placed in the fifth and sixth columns, respectively. The last two rows of the table are the mean value and standard deviation (std) of the corresponding columns.

Table 1. Comparison of size rate (in bpp) for the Lukas corpus, listed in the first column.

The third column shows the bpp values corresponding to the sparse representation in the pixel domain (Spd). The fourth column shows the corresponding results in the wavelet domain (Swd). The fifth and sixth columns are the bpp values for the formats JPEG and JPEG2, respectively. All the approaches render an MSSIM ≥ 0.997 and the values of PSNR listed in the second column.

| Image | PSNR (dB) | Spd (bpp) | Swd (bpp) | JPEG (bpp) | JPEG2 (bpp) |
|---|---|---|---|---|---|
| 1 Hand1 | 48.1 | 0.443 | 0.286 | 0.436 | 0.234 |
| 2 Foot0 | 48.6 | 0.462 | 0.306 | 0.449 | 0.247 |
| 3 Head0 | 47.4 | 0.441 | 0.320 | 0.419 | 0.244 |
| 4 Knee1 | 48.0 | 0.488 | 0.316 | 0.485 | 0.286 |
| 5 Foot1 | 48.1 | 0.624 | 0.391 | 0.566 | 0.335 |
| 6 Sinus0 | 47.1 | 0.676 | 0.416 | 0.575 | 0.334 |
| 7 Hand0 | 48.8 | 0.612 | 0.424 | 0.586 | 0.371 |
| 8 Head1 | 46.4 | 0.599 | 0.452 | 0.546 | 0.346 |
| 9 Knee0 | 49.1 | 0.612 | 0.419 | 0.573 | 0.364 |
| 10 Sinus1 | 45.8 | 0.697 | 0.453 | 0.594 | 0.358 |
| 11 Breast0 | 44.3 | 0.519 | 0.465 | 0.605 | 0.393 |
| 12 Breast1 | 44.3 | 0.691 | 0.629 | 0.832 | 0.521 |
| 13 Thorax0 | 44.1 | 0.827 | 0.686 | 0.874 | 0.629 |
| 14 Thorax1 | 43.4 | 0.816 | 0.693 | 0.924 | 0.619 |
| 15 Leg0 | 48.9 | 1.000 | 0.867 | 1.152 | 0.759 |
| 16 Leg1 | 49.2 | 1.384 | 1.240 | 1.584 | 1.056 |
| 17 Pelvis1 | 44.3 | 1.596 | 1.418 | 1.828 | 1.248 |
| 18 Pelvis0 | 44.4 | 1.606 | 1.435 | 1.827 | 1.310 |
| 19 Spine1 | 47.0 | 2.131 | 1.922 | 2.463 | 1.630 |
| 20 Spine0 | 47.4 | 2.395 | 2.298 | 2.764 | 1.902 |
| Mean Value | 46.7 | 0.938 | 0.727 | 1.004 | 0.659 |
| Std | 1.9 | 0.573 | 0.521 | 0.707 | 0.500 |

As shown in Table 1, all the files corresponding to approximations in the wavelet domain (Swd) are smaller than those corresponding to approximations in the pixel intensity domain (Spd), and also smaller than the JPEG ones. On average the files with the sparse representation in the wavelet domain are 22% smaller than those with the representation in the pixel domain, and 27% smaller than the JPEG files. In the present form JPEG2 produces the smallest files (on average 9% smaller than the files with the representation in the wavelet domain). However, both the JPEG and JPEG2 formats involve an entropy coding step, which is not included in our scheme. Instead, the outputs of our algorithm are stored in HDF5 format [37, 38]. In the provided software [30] this is implemented in a straightforward manner using the MATLAB function save. By contrast, adding an entropy coding step to the software would increase the processing time.

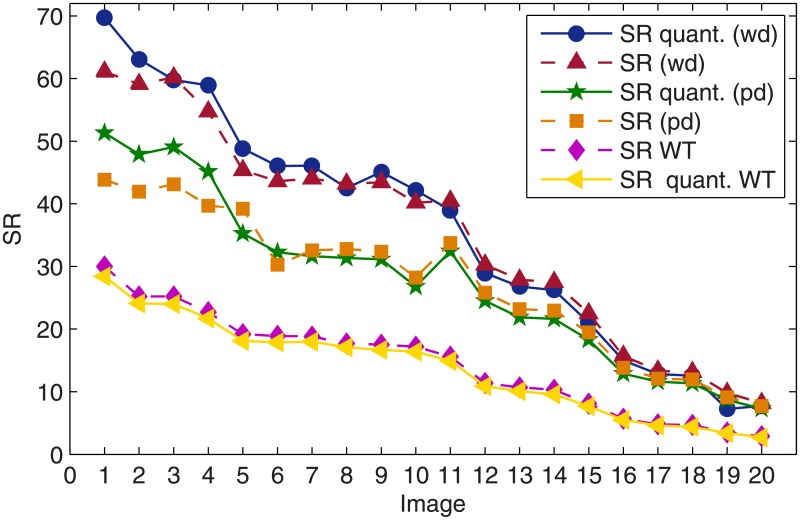

A very interesting feature of the numerical results is that the quantization process, intrinsic to the economic storage of the coefficients in the image approximation, does not reduce the sparsity. For the sake of comparison, in addition to calculating the SRs obtained with the dictionary approach in both domains, before and after quantization, we have also calculated the corresponding SRs produced by nonlinear thresholding of the wavelet coefficients. The results are shown in Fig 4.

Fig 4. Comparison of the SRs, before and after quantization, corresponding to the dictionary approach in both the pixel intensity and the wavelet domain and to the wavelet approximation by nonlinear thresholding.

As already discussed, since the quantization of coefficients degrades quality, to achieve the required PSNR the approximation of the image has to be carried out up to a higher PSNR value. Nevertheless, because the quantization process maps some coefficients to zero, for the corpus of 20 images in this study quantization does not affect sparsity. On the contrary, as can be observed in Fig 4, for the sparsest images (first 5 images in Table 1) sparsity actually benefits from quantization. It is also clear that for the images in the upper part of the table the SR in the wavelet domain is significantly larger than in the pixel intensity domain. However, the level of sparsity achieved by the dictionary approach is, in both domains, significantly higher than that achieved by nonlinear thresholding of the wavelet coefficients. If the dictionary approach operates in the wavelet domain, then after quantization the mean value gain in SR with respect to thresholding of the wavelet coefficients is 163%, with standard deviation of 17%. In the pixel intensity domain the corresponding gain is 113%, with standard deviation of 30%.

It is worth mentioning that the local sparsity ratio (cf. (14)) can be used to produce a digital summary of the images. Indeed, each of the graphs of Fig 5 depicts the inverse of the local sparsity ratio in the pixel domain, for nine of the images in the Lukas corpus. Each of the points in a graph is proportional to the number of coefficients in the atomic decomposition of the corresponding block in the image partition. Hence, the number of points in each of the graphs of Fig 5 is equal to the number of blocks in the corresponding image partition. Note that showing the inverse of the local sparsity ratio implies that the brightest points correspond to the least sparse blocks. By comparing the graphs in Fig 5 with the images in Fig 3 it is clear that each of the graphs in Fig 5 corresponds to one of the images in the Lukas corpus: Hand0, Foot1, Head1, Sinus1, Leg0, Thorax0, Breast0, Pelvis1 and Spine1. This suggests that the digital summary of the images could be of assistance to classification and feature extraction techniques. For recent publications in that area of application see [39–45].
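A digital summary of this kind can be sketched as a small 2D array with one entry per block. The example assumes that the blocks are listed row by row and that each entry is the inverse of the local sparsity ratio, i.e. the number of coefficients in the block divided by the number of pixels in the block; the function name `digital_summary` is hypothetical.

```python
def digital_summary(counts, blocks_per_row, nb):
    """Arrange the inverse local sparsity ratio of each block on the block grid.

    counts:         number of coefficients per block, in row-major block order
    blocks_per_row: number of blocks along the horizontal direction
    nb:             side of the square blocks, in pixels
    """
    inverse_sr = [k / (nb * nb) for k in counts]
    return [inverse_sr[i:i + blocks_per_row]
            for i in range(0, len(inverse_sr), blocks_per_row)]
```

The resulting array has as many entries as there are blocks, so for 16 × 16 blocks it contains 256 times fewer points than the image has pixels.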

Fig 5. Digital summary of nine of the images in the Lukas corpus.

The points in each graph are the inverse of the local sparsity ratio for nine of the images in Fig 3. The sizes of the graphs are (from top left to bottom right) 124 × 78, 140 × 86, 97 × 104, 99 × 88, 132 × 38, 107 × 128, 1138 × 105, 100 × 118 and 131 × 53 pixels.

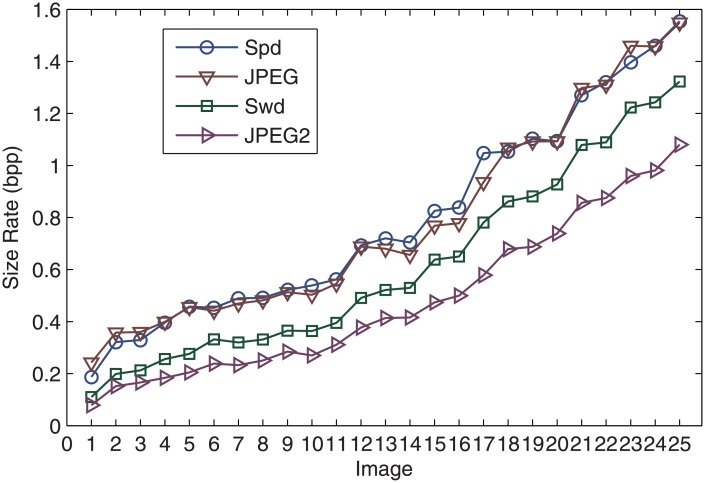

The approximation of the 25 chest X-Ray images is carried out to achieve a PSNR of 45 dB for all the images. This guarantees an MSSIM of at least 0.99 for every image. The resulting file sizes lead to the same remarks as the values in the previous example, displayed in Table 1. Indeed, as shown in Fig 6, the size rate produced by the dictionary approach in the pixel intensity domain (Spd) is competitive with the JPEG format for the same quality. The files produced in the wavelet domain (Swd) are smaller than the JPEG files but larger than the JPEG2 ones. However, let us recall that the entropy coding step, which is part of the JPEG and JPEG2 compression standards, is not included in our scheme. This results in larger files but fast processing. Indeed, using the MATLAB environment on a small notebook with a 2.9 GHz dual core i7 3520M CPU and 4 GB of memory, the average time for carrying out the approximation and creating the files of the 25 images with the dictionary approach is 1.7 s per image (with standard deviation 0.5 s). This time is the average of five independent runs in the pixel intensity domain and another five independent runs in the wavelet domain.

Fig 6. Comparison of the file size rate, in bpp, against the JPEG and JPEG2 formats for the 25 chest images.

Spd and Swd correspond to the files containing the representation of the dictionary approach in the pixel and wavelet domains, respectively.

In this case the sparsity of the image representation benefits from quantization even more than in the previous case: The dictionary approach in the pixel intensity domain yields a mean value SR () equal to 25.4 before quantization and and after quantization. In the wavelet domain before quantization, while after quantization.

5 Conclusions

A scheme for encapsulating the sparse representation of an X-Ray image in a small file has been proposed. The approach operates within the over-complete dictionary framework, which achieves a notable level of sparsity in the image representation. An interesting feature, emerging from the numerical results illustrating the approach, is that the process of devising the small file does not affect the sparsity of the representation. We consider this a very important outcome, because the central aim of the approach is to reduce, as much as possible, the cardinality of the data representing the informational content of the images. Additionally, the competitiveness of the file size was assessed against the standard formats JPEG and JPEG2. The MATLAB routines for implementing all the steps of the process, and for reproducing the results in Table 1 and Fig 6, have been made available on a dedicated website [30].

As a final remark it should be mentioned that the quality of the image representation in the present study is very high. A lower value of MSSIM might be acceptable as a diagnostically lossless representation of X-Ray images [32]. Within the proposed scheme the only effects of setting a lower value of MSSIM are: a) the approximation routine runs faster, since fewer atoms are chosen, and b) the storage file is smaller. The right value of MSSIM is to be set according to how the file is to be used, whilst taking into account specialized opinions.

Acknowledgments

Thanks are due to Prof. Rainer Köster and Dr. Jürgen Abel for making available the Lukas corpus of 8-bit 2D medical images [34].

Data Availability

All relevant data are available at https://doi.org/10.17036/researchdata.aston.ac.uk.00000370.

Funding Statement

There was no specific funding for this work.

References

- 1. J. Dheebaa, N. Albert Singhb, S. Tamil Selvi, “Computer-aided detection of breast cancer on mammograms: A swarm intelligence optimized wavelet neural network approach”, Journal of Biomedical Informatics, 49, 45–52 (2014). 10.1016/j.jbi.2014.01.010 [DOI] [PubMed] [Google Scholar]

- 2. Liu X., Zheng Z., “A new automatic mass detection method for breast cancer with false positive reduction”, Neurocomputing, 152, 388–402 (2015). 10.1016/j.neucom.2014.10.040 [DOI] [Google Scholar]

- 3. Zhang X., Dou H., Ju T., Xu J., Zhang S., “Fusing Heterogeneous Features From Stacked Sparse Autoencoder for Histopathological Image Analysis”, IEEE Journal of Biomedical and Health Informatics, 20, 1377–1383 (2016). 10.1109/JBHI.2015.2461671 [DOI] [PubMed] [Google Scholar]

- 4. Yoon J., Davtyan C., van der Schaar M, “Discovery and Clinical Decision Support for Personalized Healthcare”, IEEE Journal of Biomedical and Health Informatics, 1133–1145 (2016). 10.1109/JBHI.2016.2574857 [DOI] [PubMed] [Google Scholar]

- 5.Bar Y., Diamant I., Wolf L., Lieberman S., Konen E., Greenspan H., “Chest pathology detection using deep learning with non-medical training”, IEEE 12th International Symposium on Biomedical Imaging (ISBI), 2015.

- 6. Lakhani P., Sundaram B., “Deep Learning at Chest Radiography: Automated Classification of Pulmonary Tuberculosis by Using Convolutional Neural Networks”, Radiology, (2017). 10.1148/radiol.2017162326 [DOI] [PubMed] [Google Scholar]

- 7. Nithila E. E., Kumar S.S., “Automatic detection of solitary pulmonary nodules using swarm intelligence optimized neural networks on CT images”, Engineering Science and Technology, an International Journal, 20, 1192–1202 (2017). 10.1016/j.jestch.2016.12.006 [DOI] [Google Scholar]

- 8. Rebollo-Neira L., “Effective sparse representation of X-ray medical images” International Journal for Numerical Methods in Biomedical Engineering, (2017). 10.1002/cnm.2886 [DOI] [PubMed] [Google Scholar]

- 9. Pereira D. C., Ramos R. P., Zanchetta do Nascimento M., “Segmentation and detection of breast cancer in mammograms combining wavelet analysis and genetic algorithm”, Computer Methods and Programs in Biomedicine, 114, 88–101 (2014). 10.1016/j.cmpb.2014.01.014 [DOI] [PubMed] [Google Scholar]

- 10. Wang Z.,Yu G., Kang Y., Zhao Y., Qu Q., “Breast tumor detection in digital mammography based on extreme learning machine”, Neurocomputing, 128, 175–184 (2014). 10.1016/j.neucom.2013.05.053 [DOI] [Google Scholar]

- 11.Liu B., Wang M., Foroosh H., Tappen M., Penksy M., “Sparse Convolutional Neural Networks” IEEE Conference on Computer Vision and Pattern Recognition, 248–255, 2015 10.1109/CVPR.2015.7298681.

- 12. Summers R.M., “Deep Learning and Computer-Aided Diagnosis for Medical Image Processing: A Personal Perspective”, in Deep Learning and Convolutional Neural Networks for Medical Image Computing, 3–10 Eds: Lu L., Zheng Y., Carneiro G., Yang L, Springer International Publishing, 3–10, 2017. [Google Scholar]

- 13. Shen D., Wu G., and Suk H-Il, “Deep Learning in Medical Image Analysis”, Annual Review of Biomedical Engineering, 19, 221–248 (2017). 10.1146/annurev-bioeng-071516-044442 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Carneiro G., Zheng Y., F Xing. L Yang. “Review of Deep Learning Methods in Mammography, Cardiovascular, and Microscopy Image Analysis”, in Deep Learning and Convolutional Neural Networks for Medical Image Computing, 11–32, Eds: Lu L., Zheng Y., Carneiro G., Yang L, Springer International Publishing, 3–10, 2017. [Google Scholar]

- 15. Qayyum A., Anwar S.M., Awais M., Majid M., “Medical image retrieval using deep convolutional neural network”, Neurocomputing, 266, 8–20 (2017). 10.1016/j.neucom.2017.05.025 [DOI] [Google Scholar]

- 16. Chen S. S., Donoho D. L., Saunders M. A, “Atomic Decomposition by Basis Pursuit”, SIAM Journal on Scientific Computing, 20, 33–61 (1998). 10.1137/S1064827596304010 [DOI] [Google Scholar]

- 17. Elad M., Sparse and Redundant Representations: From Theory to Applications in Signal and Image Processing, Springer; (2010). [Google Scholar]

- 18. Mallat S., Zhang Z., “Matching pursuit with time-frequency dictionaries,” IEEE Transactions on Signal Processing, Vol (41,12) 3397–3415 (1993). 10.1109/78.258082 [DOI] [Google Scholar]

- 19.Pati Y.C., Rezaiifar R., and P.S. Krishnaprasad, “Orthogonal matching pursuit: recursive function approximation with applications to wavelet decomposition,” Proc. of the 27th ACSSC,1,40–44 (1993).

- 20. Rebollo-Neira L., Lowe D., “Optimized orthogonal matching pursuit approach”, IEEE Signal Processing Letters, 9, 137–140 (2002). 10.1109/LSP.2002.1001652 [DOI] [Google Scholar]

- 21. Rebollo-Neira L., Bowley J., “Sparse representation of astronomical images”, Journal of The Optical Society of America A,30, 758–768 (2013). 10.1364/JOSAA.30.000758 [DOI] [PubMed] [Google Scholar]

- 22. Rebollo-Neira L., Matiol R., Bibi S., “Hierarchized block wise image approximation by greedy pursuit strategies,” IEEE Signal Processing Letters, 20, 1175–1178 (2013). 10.1109/LSP.2013.2283510 [DOI] [Google Scholar]

- 23. Kreutz-Delgado K., Murray J. F., Rao B. D., Engan K., Lee Te-Won, and Sejnowski T. J., “Dictionary Learning Algorithms for Sparse Representation”, Neurocomputing, 15, 349–396 (2003). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24. Aharon M., Elad M., Bruckstein A., “K-SVD: An Algorithm for Designing Overcomplete Dictionaries for Sparse Representation”, IEEE Transactions on Signal Processing, 54, 4311–4322 (2006). doi:10.1109/TSP.2006.881199

- 25. Rubinstein R., Zibulevsky M., Elad M., “Double Sparsity: Learning Sparse Dictionaries for Sparse Signal Approximation”, IEEE Transactions on Signal Processing, 58, 1553–1564 (2010). doi:10.1109/TSP.2009.2036477

- 26. Tošić I., Frossard P., “Dictionary Learning: What is the right representation for my signal?”, IEEE Signal Processing Magazine, 28, 27–38 (2011). doi:10.1109/MSP.2010.939537

- 27. Zepeda J., Guillemot C., Kijak E., “Image Compression Using Sparse Representations and the Iteration-Tuned and Aligned Dictionary”, IEEE Journal of Selected Topics in Signal Processing, 5, 1061–1073 (2011). doi:10.1109/JSTSP.2011.2135332

- 28. Srinivas M., Naidu R.R., Sastry C.S., Krishna Mohana C., “Content based medical image retrieval using dictionary learning”, Neurocomputing, 168, 880–895 (2015). doi:10.1016/j.neucom.2015.05.036

- 29. Rebollo-Neira L., Bowley J., Constantinides A., Plastino A., “Self contained encrypted image folding”, Physica A, 391, 5858–5870 (2012). doi:10.1016/j.physa.2012.06.042

- 30. http://www.nonlinear-approx.info/examples/node08.html

- 31. Wang Z., Bovik A. C., Sheikh H.R., Simoncelli E. P., “Image quality assessment: From error visibility to structural similarity”, IEEE Transactions on Image Processing, 13, 600–612 (2004). doi:10.1109/TIP.2003.819861

- 32. Kowalik-Urbaniak I., Brunet D., Wang J., Koff D., Smolarski-Koff N., Vrscay E., Wallace B., Wang Z., “The quest for ‘diagnostically lossless’ medical image compression: a comparative study of objective quality metrics for compressed medical images”, Proc. SPIE 9037, Medical Imaging 2014: Image Perception, Observer Performance, and Technology Assessment, 903717 (March 11, 2014).

- 33. Bowley J., Rebollo-Neira L., “Sparsity and something else: an approach to encrypted image folding”, IEEE Signal Processing Letters, 18, 189–192 (2011). doi:10.1109/LSP.2011.2106496

- 34. http://www.data-compression.info/Corpora/LukasCorpus/

- 35. https://openi.nlm.nih.gov/imgs/collections/NLMCXR_png.tgz

- 36. Wang X., Peng Y., Lu L., Lu Z., Bagheri M., Summers R.M., “ChestX-ray8: Hospital-scale Chest X-ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases”, IEEE CVPR 2017, http://openaccess.thecvf.com/content_cvpr_2017/papers/Wang_ChestX-ray8_Hospital-Scale_Chest_CVPR_2017_paper.pdf

- 37. https://www.hdfgroup.org/

- 38. Wasser L. A., “Hierarchical Data Formats - What is HDF5?”, http://neondataskills.org/HDF5/About (2015).

- 39. Lin C., Chen W., Qiu C., Wu Y., Krishnan S., Zou Q., “LibD3C: ensemble classifiers with a clustering and dynamic selection strategy”, Neurocomputing, 123, 424–435 (2014). doi:10.1016/j.neucom.2013.08.004

- 40. Zou Q., Zeng J., Cao L., Ji R., “A novel features ranking metric with application to scalable visual and bioinformatics data classification”, Neurocomputing, 173, 346–354 (2016). doi:10.1016/j.neucom.2014.12.123

- 41. Zhu P., Zuo W., Zhang L., Hu Q., Shiu S.C.K., “Unsupervised feature selection by regularized self-representation”, Pattern Recognition, 48, 438–446 (2015). doi:10.1016/j.patcog.2014.08.006

- 42. Zhu P., Zhu W., Wang W., Zuo W., Hu Q., “Non-convex regularized self-representation for unsupervised feature selection”, Image and Vision Computing, 60, 22–29 (2017). doi:10.1016/j.imavis.2016.11.014

- 43. Zhu P., Hu Q., Han Y., Zhang C., Du Y., “Combining neighborhood separable subspaces for classification via sparsity regularized optimization”, Information Sciences, 370, 270–287 (2016). doi:10.1016/j.ins.2016.08.004

- 44. Zhu P., Zhu W., Hu Q., Zhang C., Zuo W., “Subspace clustering guided unsupervised feature selection”, Pattern Recognition, 60, 364–374 (2017). doi:10.1016/j.patcog.2017.01.016

- 45. Zhu P., Xu Q., Hu Q., Zhang C., Zhao H., “Multi-label feature selection with missing labels”, Pattern Recognition, 74, 488–502 (2018). doi:10.1016/j.patcog.2017.09.036

Data Availability Statement

All relevant data are available at https://doi.org/10.17036/researchdata.aston.ac.uk.00000370.