Abstract

Introduction

Internet interventions can reach large numbers of individuals. However, low levels of engagement and high rates of follow-up attrition are common, presenting major challenges to evaluation. This study investigated why registrants of an Internet smoking cessation intervention did not return after joining (“one hit wonders”), and explored the impact of graduated incentives on survey response rates and responder characteristics.

Methods

A sample of “one hit wonders” who registered on a free smoking cessation website between 2014 and 2015 was surveyed. The initial invitation contained no incentive. Subsequent invitations were sent to random subsamples of non-responders from each previous wave, offering $25 and $50, respectively. Descriptive statistics characterized respondents on demographic characteristics, reasons for not returning, and length of time since last visit. Differences were investigated with Fisher's Exact tests, Kruskal-Wallis tests, and logistic regression.

Results

Of 8779 users who received the initial invitation, 132 completed the survey (1.5%). Among those subsequently offered a $25 incentive, 127 (3.7%) responded. Among those offered a $50 incentive, 97 responded (5.7%). The most common reasons endorsed for not returning were being unable to quit (51%), not having enough time (33%), having forgotten about the website (28%), and not being ready to quit (21%). Notably, however, 23% reported not returning because they had successfully quit smoking. Paid incentives yielded a higher proportion of individuals who were still smoking than the $0 incentive (72% vs. 61%). Among $0 and $25 responders, likelihood of survey response decreased with time since registration; the $50 incentive removed the negative effect of time-since-registration on probability of response.

Conclusions

One third of participants who had disengaged from an Internet intervention reported abstinence at follow-up, suggesting that low levels of engagement are not synonymous with treatment failure in all cases. Paid incentives above $25 may be needed to elicit survey responses, especially among those with longer intervals of disengagement from an intervention.

Keywords: Smoking cessation, Engagement, Attrition, Incentive, Survey methods

Highlights

- Roughly a quarter of one-time users of a smoking cessation website may not be treatment failures, as commonly assumed.

- Graduated incentives produced higher response rates among Internet intervention registrants who disengaged after one visit.

- Larger incentives may be needed to elicit survey responses among those with longer periods of intervention disengagement.

1. Introduction

Internet interventions offer the promise of efficiently delivering health behavior change programs to large numbers of individuals (Fox, 2011, North American Quitline Consortium, 2014, Alere Wellbeing Inc., 2014, van Mierlo et al., 2012, Wangberg et al., 2011, Cobb and Graham, 2006). However, low levels of intervention engagement and high follow-up attrition are commonly observed in Internet studies (Strecher et al., 2008, Richardson et al., 2013, Eysenbach, 2005, Neve et al., 2010, Crutzen et al., 2011, Schwarzer and Satow, 2012). Each of these phenomena presents distinct challenges to developers attempting to optimize the impact of Internet interventions and to researchers attempting to measure their efficacy or effectiveness.

Engagement with Internet interventions has been conceptualized to include 1) amount of exposure or use, and 2) skills practice, or the completion of activities or exercises that teach or reinforce knowledge or behavior related to the outcome of interest (Danaher et al., 2009, Ritterband et al., 2009). Amount of exposure can be easily, unobtrusively, and directly measured using automated tracking mechanisms, whereas measurement of skills practice typically requires participant self-report (Danaher et al., 2009). Consequently, because measuring skills practice is more effortful for both the participant and the researcher, amount of exposure is frequently used as a proxy for overall engagement (Sawesi et al., 2016). Multiple measures for amount of exposure typically are available, but the most common are number of site visits, number of page views, and session duration. One study that measured skills practice as “number of modules completed per session” found that it significantly predicted outcomes, while amount of exposure did not (Donkin et al., 2013).

However engagement is defined and measured, low levels are commonly reported in studies of Internet interventions across numerous domains (Strecher et al., 2008, Richardson et al., 2013, Schwarzer and Satow, 2012, Cobb et al., 2005, Munoz et al., 2009, Pike et al., 2007, Saul et al., 2007, Nash et al., 2015, Kohl et al., 2013). Eysenbach has noted that this phenomenon of low engagement (or “non-usage attrition”) is so common it is “one of the fundamental characteristics and methodological challenges in the evaluation of eHealth applications” (p.2) (Eysenbach, 2005). Participants commonly fail to use interventions to the full extent intended, whether that is reflected as the duration of time, the proportion of the intervention they are exposed to, or completion of key activities. Many studies have reported that a large proportion of users make only one visit and never return (Farvolden et al., 2005), even after providing detailed personal information in a registration process (Nash et al., 2015, Etter, 2005) or completing an extensive battery of survey instruments (Christensen et al., 2004). Christensen et al. (2006) have referred to these users as “one hit wonders.”

The assumption regarding low rates of engagement with Internet interventions is that participants may fail to receive an adequate “dose” to promote behavior change. A substantial number of studies have demonstrated an association between greater use of Internet interventions and improved outcomes for general health behavior change (Schweier et al., 2014, Ware et al., 2008, Cobb and Poirier, 2014, Poelman et al., 2013), as well as for smoking cessation specifically (Cobb et al., 2005, Pike et al., 2007, Saul et al., 2007, Danaher et al., 2008, Rabius et al., 2008, Japuntich et al., 2006, Civljak et al., 2013), supporting the notion that “more is better.” Two recent reports used statistical methods to account for the possibility of self-selection bias that is inherent in these kinds of associations, and found that use of an Internet smoking cessation community predicted abstinence (Graham et al., 2015, Papandonatos et al., 2016). Several ongoing studies are investigating strategies to boost website engagement (Alley et al., 2014, Denney-Wilson et al., 2015, Graham et al., 2013, Ramo et al., 2015, Thrul et al., 2015), but an unanswered question is why many people who register for online interventions never come back after an initial visit. A better understanding of reasons why people do not return to online cessation interventions could provide clearer insights into this “law of attrition” (Eysenbach, 2005) and potentially aid efforts to target re-engagement strategies for those who might benefit most from them.

To investigate reasons why people do not return to online interventions after registering, however, it is necessary to reach them for data collection. High rates of follow-up attrition (low response rates) are also observed in Internet interventions (Murray et al., 2009, Mathieu et al., 2013), making this kind of inquiry inherently challenging. One problematic implication of follow-up attrition specific to smoking cessation relates to the evaluation of outcomes. Intervention effectiveness is commonly evaluated using the “intent to treat” approach in which all smokers randomized to treatment are counted in outcome analyses, with those lost to follow-up presumed to be smoking. In a scenario where follow-up attrition is high, quit rates may be grossly underestimated. Indeed, systematic reviews and meta-analyses of the effectiveness of Internet interventions for smoking cessation have reported only modest findings, noting the systemic problem of attrition (Civljak et al., 2013). Few studies have explicitly addressed abstinence outcomes among survey non-responders and findings have been mixed. In a small study out of Sweden, Tomson et al. (2005) made additional efforts to reach non-responders to a quitline follow-up survey. They found that 39% (18/46) of those reached through these additional efforts reported being abstinent compared to 31% (354/1131) of initial survey responders, a non-significant difference. In another quitline study, Lien et al. (2016) found that study participants who required the most contacts for follow-up survey completion were the least likely to be abstinent.

One effective strategy for increasing response rates to electronic health surveys is the use of monetary incentives (David and Ware, 2014). However, little is known about the optimal incentive level that maximizes response rates while making the best use of study resources, or about the characteristics of individuals that respond to varying incentive levels. Previous research has shown that for hard-to-reach populations or study topics involving social stigma, a higher incentive may be needed (Khosropour and Sullivan, 2011). An observational study by Cobb et al. (2005) surveyed 1501 users who had registered on an Internet smoking cessation program 3 months prior. Of the 1316 surveys that were delivered successfully, 181 were completed without an incentive, yielding an initial response rate of 13.8%. The use of graduated incentives ($20 for initial non-responders, $40 for non-responders to the $20 survey) increased the overall response rate to 29.3% (385/1316). Their results suggest that graduated incentives may be a useful strategy for recruitment when resources are limited, allowing larger incentives to be offered to more difficult to reach participants while avoiding compensating users who were willing to participate for free. However, no information was provided about the characteristics of responders at the different incentive levels or their smoking outcomes at the time of survey completion.

The primary purpose of this study was to gain insight into the reasons for low levels of engagement with an Internet smoking cessation intervention. Among the “one hit wonders” on an Internet smoking cessation program, we were interested in determining the reasons that users did not return after an initial visit, and whether these reasons were related to their smoking status and/or perceived quality of the intervention itself. In addition, we sought to investigate the impact of graduated monetary incentives in boosting response rates among individuals that had disengaged from the intervention. We were specifically interested in determining whether higher incentives would yield different types of respondents in terms of demographic or smoking characteristics. Given the ubiquity of low levels of engagement and high follow-up attrition across a range of Internet interventions, our aim was to add to the relatively scarce but growing literature about Internet intervention engagement and disengagement.

2. Methods

2.1. Research setting

BecomeAnEX.org is an evidence-based smoking cessation program run by Truth Initiative (formerly the American Legacy Foundation) (Richardson et al., 2013, McCausland et al., 2011). Launched in 2008, the website is grounded in principles from the U.S. Public Health Service Clinical Practice Guideline for Treating Tobacco Use and Dependence (Fiore et al., 2008) and Social Cognitive Theory (Bandura, 1977). Multiple rounds of usability testing informed the original version of the site (Graham et al., 2013), and a continuous quality improvement process has guided subsequent enhancements and modifications. The site is designed to educate smokers and enhance self-efficacy for quitting through didactic content designed to help smokers prepare for quit day, cope with slips, and prevent relapse; videos about addiction and medication; a series of interactive tools and exercises; a large online support community of current and former smokers; and a companion text message intervention. A checklist displays whether each of the site's core components has been used and allows users to access various components of the quit plan in the order they desire, rather than requiring them to complete the program in a step-wise fashion. This strategy was implemented to help ensure that users explore personally relevant sections more readily. The site can be browsed anonymously, but to save information, post to the community, or sign up for text messages, visitors must register.

2.2. Selection and recruitment of participants

This study involved BecomeAnEX registrants who joined the website between April 1, 2014 and August 13, 2015 but did not return after the day of registration. All invited participants had registered at least 2 weeks prior to the survey. To be eligible for the study, participants had to be age 18 or older and to have opted in to receive email from BecomeAnEX.

The initial pool of eligible participants was invited via email to participate in the survey on August 26, 2015. No financial incentive was offered with the initial invitation. The invitation stated that the purpose of the survey was to better understand why users had not returned to the website after they had joined, and indicated that the survey could be completed within 5 min. One week after the initial invitation, a random subsample of non-responders was sent a second invitation with a $25 incentive. The incentive was mentioned in both the subject line and body of the email. A subsampling approach was used to maximize study resources. Approximately one week after the second invitation, a final wave of invitations was sent to a random subsample of non-responders to the $25 survey invitation. The final invitation included an incentive of $50 to complete the survey. Individuals who partially completed a survey were not considered for inclusion in sampling for subsequent invitations. Electronic Amazon gift cards were used for the incentives and were emailed to recipients upon completion of the survey. The study protocol was reviewed by Chesapeake Institutional Review Board and determined to be exempt from IRB oversight.

2.3. Data sources and measures

Data sources included the date of registration on BecomeAnEX, automated tracking data of survey delivery (i.e. bounced email), and the survey responses themselves. The survey was developed based on published studies of website disengagement, expertise of the study team, and common issues discussed among members in the BecomeAnEX community. The survey was pilot tested by team members and response items were grouped to make it easier to see all response items on a single screen in accordance with standard web-based survey methods (Dillman et al., 2014). The survey was administered via Survey Monkey (2016) and included the following items: 1) demographic characteristics (gender, age, race/ethnicity); 2) tobacco use status at the time of the survey completion (“have you smoked a cigarette, even a puff, in the last seven days?”); and 3) reasons for not returning to the website (four categories: life circumstances, smoking-related factors, website issues, and using other methods to quit) each with multiple response options including “none of these” or an “other” option where respondents entered their own text. Respondents could select more than one answer for the reasons items. Demographic questions included a “prefer not to answer” response option.

2.4. Statistical analyses

First, a CONSORT diagram was created to examine response rates for the full sample and by incentive level. Survey response rates for each of the three incentive levels were calculated as the number of completed surveys divided by the number of valid (non-bouncing) email addresses to which the survey was sent. Next, descriptive statistics were used to characterize survey respondents on demographic characteristics. Differences in demographic characteristics by all three incentive levels ($0, $25, $50) were investigated with 2 × 3 Fisher's Exact tests, with the exception of age which was compared using a Kruskal-Wallis test (df = 2) because age was not normally distributed. Fisher's Exact test was also used to compare self-reported abstinence at follow-up across the three incentive levels.

Descriptive statistics were used to characterize reasons for not returning to the site. Because respondents at the $25 and $50 levels did not significantly differ in age, gender, race, or ethnicity, these groups were combined into a single “incentivized” group for comparison with $0 respondents' reasons for not returning, using 2 × 2 Fisher's Exact tests. Alternative analyses maintaining all 3 levels yielded similar results.

To further investigate potential differences in the populations who responded at different incentive levels, the number of months between a user's initial registration on the site and the first survey invitation was assessed using quasipoisson logistic regression, and presented as relative risk (RR) estimates with 95% confidence intervals.

3. Results

3.1. Survey responders and response rates

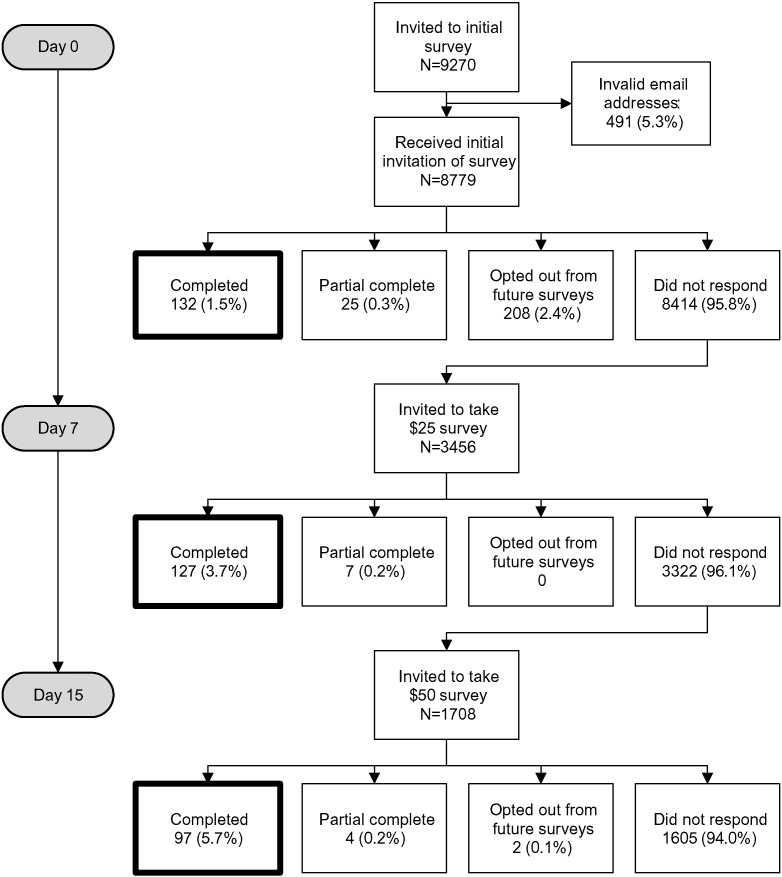

A total of N = 9270 registered users met study eligibility criteria and were sent the initial ($0) survey. Email addresses for 491 users (5.3%) were invalid and bounced, leaving a valid sample of 8779 potentially reachable users. The initial invitation yielded 132 completed surveys (1.5% of recipients), 25 partially completed surveys (0.3%), 208 opt-outs from future communication (2.4%), and 8414 non-responses (95.8%).

Of the 8414 non-respondents to the initial ($0) survey, 3456 were randomly selected to receive a second invitation with an offer of $25 as an incentive. This invitation yielded 127 (3.7%) completed surveys, 7 partially completed surveys (0.2%), and 3322 (96.1%) non-responses.

Of the 3322 non-respondents to the $25 invitation, 1708 were randomly selected to receive a third invitation with an offer of $50 as an incentive: this invitation yielded 97 (5.7%) completed surveys, 4 partially completed surveys (0.2%), 2 opt-outs (0.1%), and 1605 non-responses (94.0%). The study CONSORT is shown in Fig. 1.

Fig. 1. CONSORT diagram.
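The response rates above follow directly from the definition in Section 2.4 (completed surveys divided by valid invitations). A minimal sketch of that arithmetic, using the counts reported in this section; the dictionary layout and function name are illustrative, and the sketch assumes that no second- or third-wave invitations bounced, since none are reported:

```python
# Counts as reported in Section 3.1; the data layout is illustrative only.
# Assumption: all $25 and $50 invitations were delivered (no bounces reported).
waves = {
    "$0": {"valid_invites": 8779, "completed": 132},
    "$25": {"valid_invites": 3456, "completed": 127},
    "$50": {"valid_invites": 1708, "completed": 97},
}


def response_rate(completed: int, valid_invites: int) -> float:
    """Completed surveys as a percentage of valid (non-bouncing) invitations."""
    return 100.0 * completed / valid_invites


rates = {
    label: round(response_rate(w["completed"], w["valid_invites"]), 1)
    for label, w in waves.items()
}

for label, rate in rates.items():
    print(f"{label} invitation: {rate}% response rate")
```

Because each wave samples only non-responders from the previous wave, these are per-wave rates and are not additive across waves.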

3.2. Participant characteristics

Characteristics of all survey respondents are presented in Table 1. Respondents to the $0 survey were older (median age = 44 years, IQR = 32–56) than respondents to the $25 survey (median age = 32 years, IQR = 28–46) and the $50 survey (median age = 36 years, IQR = 27–47), H(2) = 15.69, p < 0.001. No significant differences were observed between incentive levels with respect to gender, race (white vs. non-white), or ethnicity.

Table 1. Participant characteristics by survey incentive level.

| | Overall (n = 356) | $0 incentive (n = 132) | $25 incentive (n = 127) | $50 incentive (n = 97) | p-Value [a] |
|---|---|---|---|---|---|
| Demographic characteristics [b] | | | | | |
| Age in years, median (IQR) | 37 (29–51) | 44 (32–56) | 32 (28–46) | 36 (28–47) | < 0.001 |
| Gender is female | 67% | 70% | 66% | 65% | 0.72 |
| Race is White [c] | 87% | 85% | 88% | 87% | 0.88 |
| Ethnicity is Hispanic | 7% | 4% | 8% | 9% | 0.30 |
| Smoking characteristics [b] | | | | | |
| Abstinent at follow-up [d] | 32% | 39% | 28% | 28% | 0.08 |

[a] Median age compared with Kruskal-Wallis test. All other characteristics compared with 2 × 3 Fisher's Exact test.

[b] Missing data rates for each dichotomized variable were as follows: Age 26% missing, gender 6% missing, race 8% missing, ethnicity 6% missing, abstinence 0% missing. “Prefer not to answer” was treated as missing for all variables presented here.

[c] Includes respondents who only selected “White”.

[d] Proportion of survey respondents reporting 7-day abstinence at survey completion.

At the time of survey completion, 32% of respondents reported not smoking in the previous 7 days. Rates of 7-day point prevalence abstinence (ppa) varied by incentive level, with a lower proportion of incentivized respondents reporting 7-day abstinence compared to $0 survey respondents (28% vs. 39%, p = 0.03). Abstinence rates among respondents incentivized at the $25 level and the $50 level did not differ (both at 28%).
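The unpaid versus incentivized comparison is a 2 × 2 Fisher's Exact test. The sketch below shows how such a test can be computed from a contingency table using only the Python standard library. The cell counts are an assumption: they are reconstructed from the rounded percentages (about 39% of 132 and 28% of 224) and are not reported in the text, so the resulting p-value is only a rough check against the reported one. The function name is hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more probable than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Probability that the top-left cell equals x given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / total

    observed = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # A small relative tolerance guards against floating-point ties.
    return sum(
        p for p in (prob(x) for x in range(lo, hi + 1))
        if p <= observed * (1 + 1e-9)
    )


# ASSUMED counts, reconstructed from rounded percentages (not reported data).
abstinent_unpaid, smoking_unpaid = 52, 80    # $0 respondents (n = 132)
abstinent_paid, smoking_paid = 62, 162       # $25 and $50 respondents (n = 224)

p_value = fisher_exact_two_sided(
    abstinent_unpaid, smoking_unpaid, abstinent_paid, smoking_paid
)
print(f"two-sided p = {p_value:.3f}")
```

Shifting a reconstructed count by one respondent in either group changes the p-value, so this illustrates the method and does not reproduce the published analysis.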

3.3. Reasons for not returning to the website

The most commonly endorsed life circumstance reason was “I have been too busy/I don't have enough time,” selected by one-third (33%; 116/356) of survey respondents (Table 2). Forgetting to come back (28%; 99/356) and forgetting that they had signed up on the website (21%; 74/356) were the next most common reasons selected.

Table 2. Reasons for not returning to an Internet-based smoking cessation intervention cited by registered users. [a]

| Reason | n (%) |
|---|---|
| Life circumstances | n = 356 |
| Too busy/not enough time | 116 (33%) |
| I forgot about it | 99 (28%) |
| I don't remember signing up for this website [b] | 74 (21%) |
| I lost my username and/or password | 55 (15%) |
| I no longer have Internet access | 7 (2%) |
| Smoking related reasons | n = 282 |
| I tried to quit but wasn't successful | 144 (51%) |
| I quit smoking | 64 (23%) |
| I wasn't ready to quit | 60 (21%) |
| I got the information I was looking for | 28 (10%) |
| Website reasons | n = 282 |
| No free medication | 39 (14%) |
| Did not have the resources I was looking for | 29 (10%) |
| Appeared to be trying to sell me something | 24 (9%) |
| Hard to use | 21 (7%) |
| Community support was not comfortable for me | 21 (7%) |
| Too much of a focus on medications | 15 (5%) |
| Looked outdated | 10 (4%) |
| I did not trust the information on the website | 6 (2%) |
| Used other cessation treatment methods | n = 282 |
| Quit on my own (did not use anything) | 51 (18%) |
| Quit smoking medications | 36 (13%) |
| A different quit smoking website | 12 (4%) |
| Telephone coaching | 6 (2%) |
| Text messaging program | 5 (2%) |
| Email program | 3 (1%) |
| Hypnosis | 1 (< 1%) |

[a] Survey responses not mutually exclusive; respondents could select more than one option.

[b] Survey skip logic did not ask respondents about other reasons for not returning if they selected this answer.

The most common smoking-related reason for not returning to the website was that participants had tried to quit but were not successful (51%; 144/282). Notably, 23% of respondents (64/282) reported that they did not come back because they had successfully quit smoking, and 10% (28/282) reported they had gotten the information they were looking for during their first visit. A total of 27% of all respondents endorsed either that they had quit or that they got the information they were looking for. Approximately one fifth (21%; 60/282) stated that they weren't ready to quit at the time of their visit to the site. The only website design reason to be endorsed by > 10% of respondents was that “the site did not provide free medication” (14%; 39/282). The most common other treatment method selected was “Quit on my own (did not use anything else)” (18%; 51/282). The only treatment method selected by > 5% of respondents was “Medications” (13%; 36/282). All other options were endorsed by < 5% of respondents.

Few differences were observed in the reasons for not returning endorsed by respondents at each incentive level. Compared to respondents to the $25 and $50 surveys, respondents to the $0 survey were less likely to remember signing up for the website (63% vs. 89%, p < 0.001) and less likely to report being “too busy” as a reason for not returning (21% vs. 39%, p < 0.001). Respondents at the $0 level were more likely than $25 and $50 survey responders to report that they did not return because they had quit smoking (31% vs. 19%, p = 0.03).

3.4. Impact of financial incentive in boosting response rates

The use of financial incentives raised survey response rates. The $0 survey invitation yielded a 1.5% response rate, the $25 invitation yielded a 3.7% response rate, and the $50 invitation yielded a 5.7% response rate (see Fig. 1).

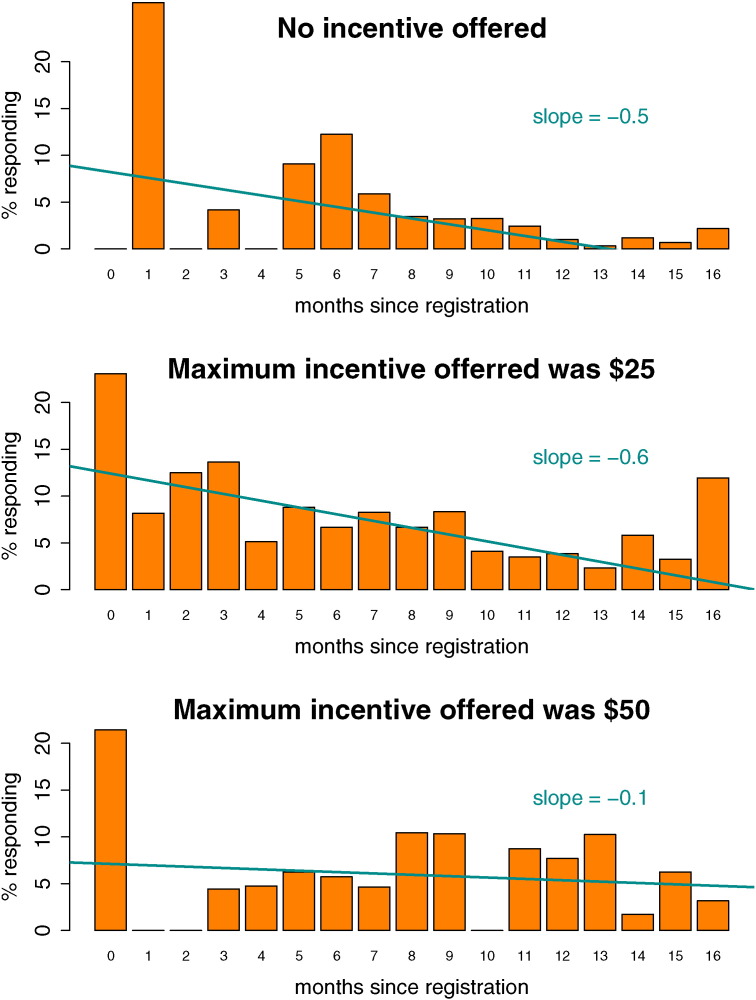

The likelihood of survey response decreased as a function of time-since-registration for users offered a maximum $0 or $25 incentive, but that relationship was eliminated by the $50 incentive. As shown in Fig. 2, among users who were not offered an incentive, the likelihood of survey response decreased with each additional month that had passed since their initial registration date (RR = 0.82, 95% CI = [0.77, 0.86]). A similar but weaker effect of time since registration was observed for users who received a maximum incentive of $25 (RR = 0.94, 95% CI = [0.89, 0.99]). The likelihood of response among participants offered a $50 incentive did not vary based on time since registration (RR = 1.00, 95% CI = [0.95, 1.06]).

Fig. 2. Survey response rate by number of months since website registration. (Slopes on the plot show fit line from linear regression of aggregated proportions by number of months, provided as a visual aid. Significance testing conducted with person-level logistic regression, reported in the text.)
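To make the per-month relative risks easier to interpret, the sketch below compounds them over several months. It assumes the reported RR applies multiplicatively to each additional month since registration, which is how a log-link model would usually be read but is an assumption about the authors' model. The six-month horizon and the function name are illustrative choices.

```python
# Per-month relative risks (point estimates) as reported in Section 3.4.
rr_per_month = {"$0": 0.82, "$25": 0.94, "$50": 1.00}


def relative_likelihood(rr: float, months: int) -> float:
    """Likelihood of response after `months`, relative to month zero,
    ASSUMING the per-month RR compounds multiplicatively."""
    return rr ** months


# Illustrative horizon: six months since registration.
six_month = {
    label: round(relative_likelihood(rr, 6), 2)
    for label, rr in rr_per_month.items()
}

for label, value in six_month.items():
    print(f"{label}: {value:.2f} times the month-zero likelihood of response")
```

Under this reading, a registrant six months out who was offered no incentive would be roughly 0.30 times as likely to respond as a new registrant, compared with about 0.69 at the $25 level and no decline at $50. These figures use point estimates only and ignore the confidence intervals.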

4. Discussion

Results from this study show that more than a quarter (27%) of “one hit wonders” did not return to the smoking cessation website because they quit smoking or found the information they needed. From this perspective, we may consider this subset of one-hit-wonders to be intervention “successes,” rather than failures of the website to retain them. Stated somewhat differently: for most users of Internet smoking cessation programs “more is better,” but for some smokers it may be that “once is enough.” This finding raises questions about the validity of relying exclusively on amount of exposure to an Internet intervention as a proxy for engagement, particularly using non-composite measures such as number of visits (Donkin et al., 2013). Internet trials that employ “modified intent to treat” analyses which report cessation outcomes for participants that meet a certain threshold of intervention dose may miss the opportunity to examine outcomes among the segment of users who do not meet this threshold, but for whom a more minimal level of exposure was sufficient.

Our findings also suggest that the reasons participants give for not continuing to engage with an intervention may be as important to consider as whether they return at all. A large number of participants reported that they did not come back because they forgot about the site (99/356), they tried to quit but were not successful (144/282), or they were not ready to quit (60/282). Additional reasons were provided as free responses to the “other” category, including the desire for more proactive reminders to return to the site. Understanding the reasons why tobacco users fail to return to an Internet intervention can help target re-engagement efforts. Further research is needed in this area. Additionally, 10% of participants reported that they did not return to the site because they did not find the resources they were looking for. As noted by Danaher et al. (2009), researchers may need to include measures of not only skills practice, but also of receipt of desired information, in evaluating the potential impact of Internet interventions.

Not surprisingly, paid incentives boosted our overall response rate and the highest level of incentive yielded the highest response rate. Age emerged as the only demographic characteristic that differed across incentive levels, with both incentive levels yielding responses from younger adults. Given that younger smokers may be more likely to disengage from an Internet smoking cessation intervention than older adults (Cantrell et al., 2016), reaching them for outcome evaluation may require greater study resources. What is noteworthy from these results is that paid incentives produced a higher proportion of respondents who were still smoking compared to invitations with no incentive. The 7-day ppa rate for the full sample of responders was 32%, ranging from 28% to 39% for the three incentive levels. This range suggests that a similar study conducted without incentives may have overestimated abstinence among responders by 7 percentage points, a clinically meaningful amount. These self-reported abstinence data were consistent with the reasons participants provided for not returning to the website: 31% of unpaid respondents endorsed having already quit as a reason for not returning to the website, compared to 19% of incentivized respondents. Finally, we observed a decline in the probability of survey response as the length of time from registration increased, but only at the $0 and $25 incentive levels; the $50 incentive removed the negative effect of time since registration on probability of response. This was not surprising, as the farther one gets from an intervention, the less inclined one may be to respond to a survey about it. Researchers wanting to conduct follow-up surveys long after an original event may need to use higher levels of incentives to do so.

These findings must be interpreted in the context of the following limitations. First, our results are based on a small proportion of the individuals who were invited to participate. Response rates were low, which was unsurprising given that we attempted to survey individuals who had disengaged from the intervention. The requirement that participants respond to the survey within a relatively short period (i.e., 7 days) may also have contributed to low response rates. The results presented may not generalize to the full sample of those we attempted to reach. Second, our study design co-varied incentive level with the number of emails participants received. As a result, we cannot determine the independent effects of graduated incentives from the effects of repeated study invitations. Both strategies have been shown to increase survey response rates in previous research (Rodgers, 2002, McGonagle et al., 2011). Future research is needed to determine the optimal combination of invitations and incentives and their relative impact on overall and subgroup response rates. Third, we deliberately kept our survey brief to maximize the likelihood of responding. As a result, our ability to characterize survey respondents is limited, and we may have omitted important items related to reasons for not returning to the website. For example, several respondents mentioned “other” reasons for not returning, including the desire to engage via smart phone without having to log in to the site with a computer, and the lack of personalization. Finally, given the observational nature of our study, we are not able to make causal statements about the links between intervention use and abstinence.

Despite these limitations, this is one of the first Internet-based studies to explore the reasons for disengagement from a smoking cessation intervention, as well as the role of graduated incentives in maximizing response rates. Our findings provide an important foundation for future research to better understand the complex patterns of both engagement and follow-up attrition. Given the impact that both these phenomena have on the science and practice of Internet interventions, more work in this area is needed.

5. Conclusions

We found that more than a quarter of “one-hit-wonders” reported not coming back to a smoking cessation website because they had quit smoking or found the information they needed. This finding suggests that efforts to assess the impact of Internet interventions should include measures of engagement that go beyond simple metrics of exposure like website visits. Attempts to measure the effectiveness of Internet cessation interventions should consider using sufficiently large paid incentives to reach the largest number of participants possible so as not to underestimate program impact under the assumption that all non-responders are still smoking. Substantive incentives may also be important to attenuate the decline in probability of response as a function of time since intervention.

Acknowledgements

The authors acknowledge the contributions of Mark Leta, Miro Kresonja and Sara Franco of Beaconfire-Red Engine, LLC.

Funding for the research reported in this manuscript was provided by Truth Initiative.

References

- Alere Wellbeing Inc. American Cancer Society Quit For Life Program. 2014. http://www.webcitation.org/6VmBNqJAr

- Alley S., Jennings C., Plotnikoff R.C., Vandelanotte C. My Activity Coach - using video-coaching to assist a web-based computer-tailored physical activity intervention: a randomised controlled trial protocol. BMC Public Health. 2014;14:738. doi: 10.1186/1471-2458-14-738.

- Bandura A. Self-efficacy: toward a unifying theory of behavioral change. Psychol. Rev. 1977;84(2):191–215. doi: 10.1037//0033-295x.84.2.191.

- Cantrell J., Ilakkuvan V., Graham A.L., Xiao J.H., Richardson A., Xiao H., Mermelstein R.J., Curry S.J., Sporer A.K., Vallone D.M. Young Adult Utilization of a Smoking Cessation Website: an Observational Study Comparing Young and Older Adult Patterns of Use. JMIR Res. Protoc. 2016;5(3):e142. doi: 10.2196/resprot.4881.

- Christensen H., Griffiths K.M., Korten A.E., Brittliffe K., Groves C. A comparison of changes in anxiety and depression symptoms of spontaneous users and trial participants of a cognitive behavior therapy website. J. Med. Internet Res. 2004;6(4):e46. doi: 10.2196/jmir.6.4.e46. (Dec 22)

- Christensen H., Griffiths K., Groves C., Korten A. Free range users and one hit wonders: community users of an Internet-based cognitive behaviour therapy program. Aust. N. Z. J. Psychiatry. 2006;40(1):59–62. doi: 10.1080/j.1440-1614.2006.01743.x. (Jan)

- Civljak M., Stead L.F., Hartmann-Boyce J., Sheikh A., Car J. Internet-based interventions for smoking cessation. Cochrane Database Syst. Rev. 2013;7:CD007078. doi: 10.1002/14651858.CD007078.pub4.

- Cobb N.K., Graham A.L. Characterizing Internet searchers of smoking cessation information. J. Med. Internet Res. 2006;8(3):e17. doi: 10.2196/jmir.8.3.e17.

- Cobb N.K., Poirier J. Effectiveness of a multimodal online well-being intervention: a randomized controlled trial. Am. J. Prev. Med. 2014;46(1):41–48. doi: 10.1016/j.amepre.2013.08.018. (Jan)

- Cobb N.K., Graham A.L., Bock B.C., Papandonatos G., Abrams D.B. Initial evaluation of a real-world Internet smoking cessation system. Nicotine Tob. Res. 2005;7(2):207–216. doi: 10.1080/14622200500055319. (Apr)

- Crutzen R., de Nooijer J., Brouwer W., Oenema A., Brug J., de Vries N.K. Strategies to facilitate exposure to internet-delivered health behavior change interventions aimed at adolescents or young adults: a systematic review. Health Educ. Behav. 2011;38(1):49–62. doi: 10.1177/1090198110372878. (Feb)

- Danaher B.G., Smolkowski K., Seeley J.R., Severson H.H. Mediators of a successful web-based smokeless tobacco cessation program. Addiction. 2008;103(10):1706–1712. doi: 10.1111/j.1360-0443.2008.02295.x. (Oct)

- Danaher B.G., Lichtenstein E., Andrews J.A., Severson H.H., Akers L., Barckley M. Women helping chewers: effects of partner support on 12-month tobacco abstinence in a smokeless tobacco cessation trial. Nicotine Tob. Res. 2009;11(3):332–335. doi: 10.1093/ntr/ntn022. (Mar)

- David M.C., Ware R.S. Meta-analysis of randomized controlled trials supports the use of incentives for inducing response to electronic health surveys. J. Clin. Epidemiol. 2014;67(11):1210–1221. doi: 10.1016/j.jclinepi.2014.08.001. (Nov)

- Denney-Wilson E., Laws R., Russell C.G. Preventing obesity in infants: the growing healthy feasibility trial protocol. BMJ Open. 2015;5(11):e009258. doi: 10.1136/bmjopen-2015-009258.

- Dillman D.A., Smyth J.D., Christian L.M. fourth ed. John Wiley & Sons, Inc.; Hoboken, NJ: 2014. Internet, Phone, Mail, and Mixed-mode Surveys: The Tailored Design Method.

- Donkin L., Hickie I.B., Christensen H. Rethinking the dose-response relationship between usage and outcome in an online intervention for depression: randomized controlled trial. J. Med. Internet Res. 2013;15(10):e231. doi: 10.2196/jmir.2771.

- Etter J.F. Comparing the efficacy of two Internet-based, computer-tailored smoking cessation programs: a randomized trial. J. Med. Internet Res. 2005;7(1):e2. doi: 10.2196/jmir.7.1.e2.

- Eysenbach G. The law of attrition. J. Med. Internet Res. 2005;7(1):e11. doi: 10.2196/jmir.7.1.e11.

- Farvolden P., Denisoff E., Selby P., Bagby R.M., Rudy L. Usage and longitudinal effectiveness of a Web-based self-help cognitive behavioral therapy program for panic disorder. J. Med. Internet Res. 2005;7(1):e7. doi: 10.2196/jmir.7.1.e7.

- Fiore M., Jaén C., Baker T. U.S. Department of Health and Human Services. Public Health Service; Rockville, MD: 2008. Treating Tobacco Use and Dependence: 2008 Update. 2008 update ed.

- Fox S. Pew Research Center's Internet and American Life Project; Washington, DC: 2011. Health Topics: 80% of Internet Users Look for Health Information Online.

- Graham A.L., Cha S., Papandonatos G.D. Improving adherence to web-based cessation programs: a randomized controlled trial study protocol. Trials. 2013;14:48. doi: 10.1186/1745-6215-14-48.

- Graham A.L., Papandonatos G.D., Erar B., Stanton C.A. Use of an online smoking cessation community promotes abstinence: results of propensity score weighting. Health Psychol. 2015;34:1286–1295. doi: 10.1037/hea0000278. (Dec, Suppl.)

- Japuntich S.J., Zehner M.E., Smith S.S. Smoking cessation via the internet: a randomized clinical trial of an internet intervention as adjuvant treatment in a smoking cessation intervention. Nicotine Tob. Res. 2006;8(Suppl. 1):S59–S67. doi: 10.1080/14622200601047900. (Dec)

- Khosropour C.M., Sullivan P.S. Predictors of retention in an online follow-up study of men who have sex with men. J. Med. Internet Res. 2011;13(3):e47. doi: 10.2196/jmir.1717.

- Kohl L.F., Crutzen R., de Vries N.K. Online prevention aimed at lifestyle behaviors: a systematic review of reviews. J. Med. Internet Res. 2013;15(7):e146. doi: 10.2196/jmir.2665.

- Lien R.K., Schillo B.A., Goto C.J., Porter L. The effect of survey nonresponse on quitline abstinence rates: implications for practice. Nicotine Tob. Res. 2016;18(1):98–101. doi: 10.1093/ntr/ntv026. (Jan)

- Mathieu E., McGeechan K., Barratt A., Herbert R. Internet-based randomized controlled trials: a systematic review. J. Am. Med. Inform. Assoc. 2013;20(3):568–576. doi: 10.1136/amiajnl-2012-001175. (May 1)

- McCausland K.L., Curry L.E., Mushro A., Carothers S., Xiao H., Vallone D.M. Promoting a web-based smoking cessation intervention: implications for practice. Cases Public Health Commun. Mark. 2011;5 (Proc:3–26)

- McGonagle K., Couper M., Schoeni R.F. Keeping track of panel members: an experimental test of a between-wave contact strategy. J. Off. Stat. 2011;27(2):319–338.

- Munoz R.F., Barrera A.Z., Delucchi K., Penilla C., Torres L.D., Perez-Stable E.J. International Spanish/English Internet smoking cessation trial yields 20% abstinence rates at 1 year. Nicotine Tob. Res. 2009;11(9):1025–1034. doi: 10.1093/ntr/ntp090. (Sep)

- Murray E., Khadjesari Z., White I.R. Methodological challenges in online trials. J. Med. Internet Res. 2009;11(2):e9. doi: 10.2196/jmir.1052.

- Nash C.M., Vickerman K.A., Kellogg E.S., Zbikowski S.M. Utilization of a Web-based vs integrated phone/Web cessation program among 140,000 tobacco users: an evaluation across 10 free state quitlines. J. Med. Internet Res. 2015;17(2):e36. doi: 10.2196/jmir.3658.

- Neve M.J., Collins C.E., Morgan P.J. Dropout, nonusage attrition, and pretreatment predictors of nonusage attrition in a commercial Web-based weight loss program. J. Med. Internet Res. 2010;12(4):e69. doi: 10.2196/jmir.1640.

- North American Quitline Consortium Web-based services in the U.S. and Canada 2014. 2014. http://www.webcitation.org/6VmCxFNsj

- Papandonatos G.D., Erar B., Stanton C.A., Graham A.L. Online community use predicts abstinence in combined Internet/phone intervention for smoking cessation. J Consult Clin Psychol. 2016 Jul;84(7):633. doi: 10.1037/ccp0000099. Epub 2016 Apr 21.

- Pike K.J., Rabius V., McAlister A., Geiger A. American Cancer Society's QuitLink: randomized trial of Internet assistance. Nicotine Tob. Res. 2007;9(3):415–420. doi: 10.1080/14622200701188877. (Mar)

- Poelman M.P., Steenhuis I.H., de Vet E., Seidell J.C. The development and evaluation of an Internet-based intervention to increase awareness about food portion sizes: a randomized, controlled trial. J. Nutr. Educ. Behav. 2013;45(6):701–707. doi: 10.1016/j.jneb.2013.05.008. (Nov-Dec)

- Rabius V., Pike K.J., Wiatrek D., McAlister A.L. Comparing internet assistance for smoking cessation: 13-month follow-up of a six-arm randomized controlled trial. J. Med. Internet Res. 2008;10(5):e45. doi: 10.2196/jmir.1008.

- Ramo D.E., Thrul J., Chavez K., Delucchi K.L., Prochaska J.J. Feasibility and quit rates of the tobacco status project: a Facebook smoking cessation intervention for young adults. J. Med. Internet Res. 2015;17(12):e291. doi: 10.2196/jmir.5209.

- Richardson A., Graham A.L., Cobb N. Engagement promotes abstinence in a web-based cessation intervention: cohort study. J. Med. Internet Res. 2013;15(1):e14. doi: 10.2196/jmir.2277.

- Ritterband L.M., Thorndike F.P., Cox D.J., Kovatchev B.P., Gonder-Frederick L.A. A behavior change model for internet interventions. Ann. Behav. Med. 2009;38(1):18–27. doi: 10.1007/s12160-009-9133-4. (Aug)

- Rodgers W. Proceedings of the Survey Research Methods Section of the American Statistical Association. American Statistical Association; Washington, DC: 2002. Size of incentive effects in a longitudinal study; pp. 2930–2935.

- Saul J.E., Schillo B.A., Evered S. Impact of a statewide Internet-based tobacco cessation intervention. J. Med. Internet Res. 2007;9(3):e28. doi: 10.2196/jmir.9.4.e28.

- Sawesi S., Rashrash M., Phalakornkule K., Carpenter J.S., Jones J.F. The impact of information technology on patient engagement and health behavior change: a systematic review of the literature. JMIR Med. Inform. 2016;4(1):e1. doi: 10.2196/medinform.4514.

- Schwarzer R., Satow L. Online intervention engagement predicts smoking cessation. Prev. Med. 2012;55(3):233–236. doi: 10.1016/j.ypmed.2012.07.006. (Sep)

- Schweier R., Romppel M., Richter C. A web-based peer-modeling intervention aimed at lifestyle changes in patients with coronary heart disease and chronic back pain: sequential controlled trial. J. Med. Internet Res. 2014;16(7):e177. doi: 10.2196/jmir.3434.

- Strecher V.J., McClure J., Alexander G. The role of engagement in a tailored web-based smoking cessation program: randomized controlled trial. J. Med. Internet Res. 2008;10(5):e36. doi: 10.2196/jmir.1002.

- Survey Monkey Survey monkey. 2016. www.surveymonkey.com (Accessed January 19, 2016)

- Thrul J., Klein A.B., Ramo D.E. Smoking cessation intervention on Facebook: which content generates the best engagement? J. Med. Internet Res. 2015;17(11):e244. doi: 10.2196/jmir.4575.

- Tomson T., Bjornstrom C., Gilljam H., Helgason A. Are non-responders in a quitline evaluation more likely to be smokers? BMC Public Health. 2005;5:52. doi: 10.1186/1471-2458-5-52. (May 23)

- van Mierlo T., Voci S., Lee S., Fournier R., Selby P. Superusers in social networks for smoking cessation: analysis of demographic characteristics and posting behavior from the Canadian Cancer Society's smokers' helpline online and StopSmokingCenter.net. J. Med. Internet Res. 2012;14(3):e66. doi: 10.2196/jmir.1854.

- Wangberg S.C., Nilsen O., Antypas K., Gram I.T. Effect of tailoring in an internet-based intervention for smoking cessation: randomized controlled trial. J. Med. Internet Res. 2011;13(4):e121. doi: 10.2196/jmir.1605.

- Ware L.J., Hurling R., Bataveljic O. Rates and determinants of uptake and use of an internet physical activity and weight management program in office and manufacturing work sites in England: cohort study. J. Med. Internet Res. 2008;10(4):e56. doi: 10.2196/jmir.1108.