Abstract

Stochastic models are of fundamental importance in many scientific and engineering applications. For example, stochastic models provide valuable insights into the causes and consequences of intra-cellular fluctuations and inter-cellular heterogeneity in molecular biology. The chemical master equation can be used to model intra-cellular stochasticity in living cells, but analytical solutions are rare and numerical simulations are computationally expensive. Inference of system trajectories and estimation of model parameters from observed data are important tasks and are even more challenging. Here, we consider the case where the observed data are aggregated over time. Aggregation of data over time is required in studies of single cell gene expression using a luciferase reporter, where the emitted light can be very faint and is therefore collected for several minutes for each observation. We show how an existing approach to inference based on the linear noise approximation (LNA) can be generalised to the case of temporally aggregated data. We provide a Kalman filter (KF) algorithm which can be combined with the LNA to carry out inference of system variable trajectories and estimation of model parameters. We apply and evaluate our method on both synthetic and real data scenarios and show that it is able to accurately infer the posterior distribution of model parameters in these examples. We demonstrate how applying standard KF inference to aggregated data without accounting for aggregation will tend to underestimate the process noise and can lead to biased parameter estimates.

Keywords: Linear noise approximation, Stochastic systems biology, Time aggregation, Kalman filter

Introduction

Stochastic differential equations (SDEs) are used to model the dynamics of processes that evolve randomly over time. SDEs have found a range of applications in finance (e.g. stock markets, Hull 2009), physics (e.g. statistical physics, Gardiner 2004) and biology (e.g. biochemical processes, Wilkinson 2011). Usually, the coefficients (model parameters) of SDEs are unknown and have to be inferred using observations from the systems of interest. Observations are typically partial (e.g. collected at discrete times for a subset of variables), corrupted by measurement noise, and may also be aggregated over time and/or space. Given these observed data, our task is to infer the process trajectory and estimate the model parameters.

A motivating example of stochastic aggregated data comes from biology and more specifically from luminescence bioimaging, where a luciferase reporter gene is used for studying gene expression inside a cell (Spiller et al. 2010). The luminescence intensity emitted from the luciferase experiments is collected from single cells and is integrated over a time period (in certain cases up to 30 min, Harper et al. 2011) and then recorded as a single data point. In this paper, we consider the problem of inferring SDE model parameters given temporally aggregated data of this kind.

Imaging data from single cells are highly stochastic due to the low number of reactant molecules and the inherent stochasticity of cellular processes such as gene transcription or protein translation. The chemical master equation (CME) is widely used to describe the evolution of biochemical reactions inside cells stochastically (Gillespie 1992). Exact inference with the CME is rare and, even when possible, computationally prohibitive. In Golightly and Wilkinson (2005), the authors perform inference using a diffusion approximation of the CME, resulting in a nonlinear SDE. The linear noise approximation (LNA) (Kampen 2007) has been used as an alternative approximation of the CME which is valid for a sufficiently large system (Komorowski et al. 2009; Fearnhead et al. 2014). According to the LNA, the system is decomposed into a deterministic and a stochastic part. The latter is described by a linear SDE of the following form:

| 1 |

where is a d-dimensional process, is a -matrix-valued function, is an m-dimensional Wiener process, and a matrix-valued function.

Given an initial condition , Eq. (1) has the following known solution (Arnold 1974):

| 2 |

where is the fundamental matrix of the homogeneous equation . Note that the right integral in Eq. (2) is a Gaussian process, as it is an integral of a non-random function with respect to (Arnold 1974). If we further assume that the initial condition c is normally distributed or constant, Eq. (2) gives rise to a Gaussian process. Additionally, the solution of a (linear) SDE is a Markov process (Arnold 1974). These properties of linear SDEs (of the form of Eq. (1)) are highly desirable when carrying out inference.

The approaches above do not treat the aggregated nature of luciferase data in a principled way but instead assume that the data are proportional to the quantity of interest at the measurement time (Harper et al. 2011; Komorowski et al. 2009). Here, we build on the work of Komorowski et al. (2009) and Fearnhead et al. (2014) and extend it to the case of aggregated data. Since we are using the LNA, the problem is equivalent to a parameter inference problem for the time integral of a linear SDE as in Eq. (1): . We follow a Bayesian approach, where the likelihood of our model is computed using a continuous-discrete Kalman filter (Särkkä 2006) and parameter inference is achieved using an MCMC algorithm. The paper is structured as follows: we first provide a description of the LNA as an approximation of the CME and introduce the integral of the LNA for treating temporally aggregated observations. We then describe a Kalman filter framework for performing inference with the LNA and its integral. Finally, we apply our method in three different examples. The Ornstein–Uhlenbeck process has been picked as a system where we can study its exact solutions. The Lotka–Volterra model was selected as an example of a nonlinear system with partial observations. The translation inhibition model was used to demonstrate our method with real data.

The linear noise approximation and its integral

The CME can be used to model biochemical reactions inside a cell. It is essentially a forward Kolmogorov equation for a Markov process that describes the evolution of a spatially homogeneous biochemical system over time.

Assume a biochemical reaction network consisting of N chemical species in a volume and v reactions . The usual notation for such a network is given below:

: + + : + + ... : + +

where = represents the number of chemical species (we assume molecules) and = is the concentration of molecules. We denote with P the matrix whose elements are given by and Q the matrix with elements . We define the stoichiometry matrix S as . The probability of a reaction taking place in is given by the vector of reaction rates .

The probability that the system is in state at time t is given by the CME:

| 3 |

However, as mentioned before, exact inference with the CME, even when possible, is computationally prohibitive. We use the LNA as an approximation of the CME due to its successful application in Komorowski et al. (2009) and Fearnhead et al. (2014). The state of the system is expected to have a peak around the macroscopic value of order and fluctuations of order such that . This way the system is decomposed to the deterministic part and the stochastic part . The LNA arises as a Taylor expansion of the CME in powers of the volume ; for a detailed derivation the reader is referred to Kampen (2007) and Elf and Ehrenberg (2003). By collecting terms of order , we obtain the deterministic part of the system, namely the macroscopic rate equations , where i stands for the ith species:

| 4 |

Terms of order give us the stochastic part of the system:

| 5 |

where, and , while . Equation (5) is a linear SDE of the form of Eq. (1). Its solution is a Gaussian Markov process, provided that we have an initial condition that is a constant or a Gaussian random variable. The ordinary differential equations (ODEs) that describe the mean and variance of this Gaussian process are given by Arnold (1974):

| 6 |

| 7 |

Note that if we set the initial condition of , then Eq. (6) will lead to at all times. We will make the assumption that, at each observation point, is reset to zero since it can be beneficial for inference as discussed in Fearnhead et al. (2014) and Giagos (2010).

In what follows we will assume, without loss of generality, that the volume , i.e. the number of molecules equals the concentration of molecules and thus,

| 8 |

Equation (8) is the sum of a deterministic and a Gaussian term; consequently, it will also be normally distributed. By taking its expectation and variance, we have that which, according to the initial condition , leads to .

We are now interested in the integral of Eq. (8), as this will allow us to model the aggregated data,

| 9 |

The deterministic part of this aggregated process is given by I(t), and the stochastic part is given by Q(t). Subsequently, we have the following ODEs:

| 10 |

| 11 |

Here, will also follow a Gaussian process (as it is the integral of a Gaussian process) so we need to compute its mean and variance. The ODEs for the mean, variance and are given below; their proofs can be found in “Appendix A.1”:

| 12 |

| 13 |

| 14 |

Note that is not Markovian since knowledge of its history is not sufficient to determine its current state. However, jointly with it forms a bivariate Gaussian Markov process, that is characterised by the following linear SDE:

| 15 |

From Eq. (15) we have that are jointly Gaussian and, consequently, their marginals are also normally distributed. Thus, according to (9) with and .

Kalman filter for the LNA and its integral

The classical filtering problem is concerned with the problem of estimating the state of a linear system given noisy, indirect or partial observations (Kalman 1960). In our case, the state is continuous and is described by Eq. (8) while the observations are collected at discrete time points with or without Gaussian noise. For this reason, we refer to it as the continuous-discrete filtering problem (Jazwinski 1970; Särkkä 2006).

First, we consider the case where observations are taken from the process and not from its integral . In that case, the observation process is given by where and accounts for technical noise. The observability matrix is used to deal with the partial observability of the system, for example, if we have two species , and we observe only , .

Following the Kalman filter (KF) methodology, we need to define the following quantities:

Prior: .

Predictive distribution: , where refers to the observations at discrete points up to time .

Posterior or Update distribution: .

The predictive distribution is given by , where and are found by integrating forward for Eqs. (4) and (7) initialised at the posterior mean and variance . In our case, the mean of the stochastic part is initialised at 0, so corresponds to the deterministic part . By updating the deterministic solution at each observation point, we achieve a better estimate, as the ODE solution can become a poor approximation over long periods of time. The posterior distribution corresponds to the standard posterior distribution of a discrete KF and the updated and are given in “Appendix A.3”. This case has been thoroughly studied in Fearnhead et al. (2014).

We consider now the case where the state is being observed through the integrated process , such that the observation process is given by and . Again, we need to define a prior distribution as well as calculate the predictive and posterior distributions for the system that we are studying.

The predictive distribution of our system is given by , where . For this step, we need to integrate forward the ODEs (4), (10), (7), (13) and (14) with the appropriate initial conditions as seen in Algorithm 1. Note that the integrated process needs to be reset to 0 at each observation point in order to capture correctly the ‘area under graph’ of the underlying process .

To compute the posterior distribution , we look at the joint distribution of conditioned on :

| 16 |

By using the lemma in “Appendix A.2” and using the corresponding blocks of the joint distribution (16), we can calculate the posterior mean and variance of :

| 17 |

Since we are interested in parameter inference, we will need to compute the likelihood of the system, where represents the parameter vector of the system:

| 18 |

The individual terms of the likelihood are given by . Parameter inference is then straightforward either by using a numerical technique such as the Nelder–Mead algorithm to obtain the maximum likelihood (ML) parameters or using a Bayesian method such as a Metropolis-Hastings (MH) algorithm. The general procedure for performing inference using aggregated data is summarised in Algorithm 1.

The Ornstein–Uhlenbeck process

We first investigate the effect of integration in a one-dimensional, zero-mean OU process of the following form:

| 19 |

where is the drift or decay rate of the process and is the diffusion constant. Both of these parameters are assumed to be unknown, and we will try to infer them using the KF scheme that we have developed.

The OU process is a special case of a linear SDE (Eq. (1)), since its coefficients are time invariant, resulting in a stationary Gaussian–Markov process. Analytical solutions for both the OU and its integral exist (Gillespie 1996) and are presented in “Appendix A.4”. The results for the mean and variance of the OU, where , are given below:

| 20a |

| 20b |

The integral of Eq. (19) is given by , and the mean, variance and covariance are given below,

| 21a |

| 21b |

| 21c |

We are interested in inferring the parameters and given observations from at discrete times, where the interval between two observations is constant. We will compare two approaches. First, we will assume that the data come directly from ignoring their aggregated nature and use the standard discrete–continuous KF, referred to as KF1. To make the comparison of this scenario fairer, we will normalise the observations by dividing with , which brings the observation close to an average value of the process, in an attempt to match the observations to data generated from the process . In the second case, we will use the KF on the integrated process in analogy with Algorithm 1, which we will refer to as KF2. The case of inferring the parameters of an OU process using non-aggregated data with an MCMC algorithm has already been studied in Mbalawata et al. (2013).

will reach its stationary distribution after a time of order , which is given by (Gillespie 1992). However, the integrated process is non-stationary since as . This already shows us that the two processes behave differently.

Since we are going to use the normalised observations from with KF1, we will take a look at the normalised process :

| 22a |

| 22b |

By taking the limit as in Eq. (22) and using L’Hospital’s rule we can show that and . So, the normalised process is again not approaching the stationary distribution of .

We have generated aggregated data from the integral of an OU process with and . To simulate data from , we need to first simulate data from . This can be done in general by discretising the process and using the Euler–Maruyama algorithm. However, in the case of the OU process, we can also use an exact updating formula (see “Appendix A.6”). The aggregated data can then be collected using the discretised form or a numerical integration method such as the trapezoidal rule over the indicated integration period. In “Appendix A.12” we have included plots of the OU process and the corresponding aggregated process.

We tested inference using KF1 with normalised data and KF2 with aggregated data. Results of parameter estimation using a standard random walk MH algorithm are presented in Table 1. Improper uniform priors over infinite range have been used on the log parameters, while different time intervals have been considered. For each interval , we have sampled 100 observations from a single trajectory of an OU process with and aggregated over the specified . For this example, we have assumed no observation noise. MCMC traceplots of and can be found in “Appendix A.13” (Figs. 6, 7) which indicate a good mixing of the chain and fast convergence. All chains were run for 50K iterations and 30K were discarded as burn-in. To verify the validity of the results, we have run nine more datasets, separately each time. An average over the ten datasets can be found in “Appendix A.7” (Table 5). As we can see, the estimates for KF1 deteriorate for larger . This is expected since the aggregated process diverges further from the OU process as increases. Estimates remain good for KF2 even when is large, although they become more uncertain, as can be witnessed by the increased standard deviations. Filtering results for KF1 and KF2 with aggregated data using the estimated parameter results for are given in “Appendix A.14”.

Table 1.

Mean posterior ± 1 s.d. for and using a Metropolis-Hastings algorithm

| KF | |||

|---|---|---|---|

| 0.1 | KF1 | ||

| 0.5 | KF1 | ||

| 1.0 | KF1 | ||

| 2.0 | KF1 | ||

| 0.1 | KF2 | ||

| 0.5 | KF2 | ||

| 1.0 | KF2 | ||

| 2.0 | KF2 |

Data were simulated from an OU process with and

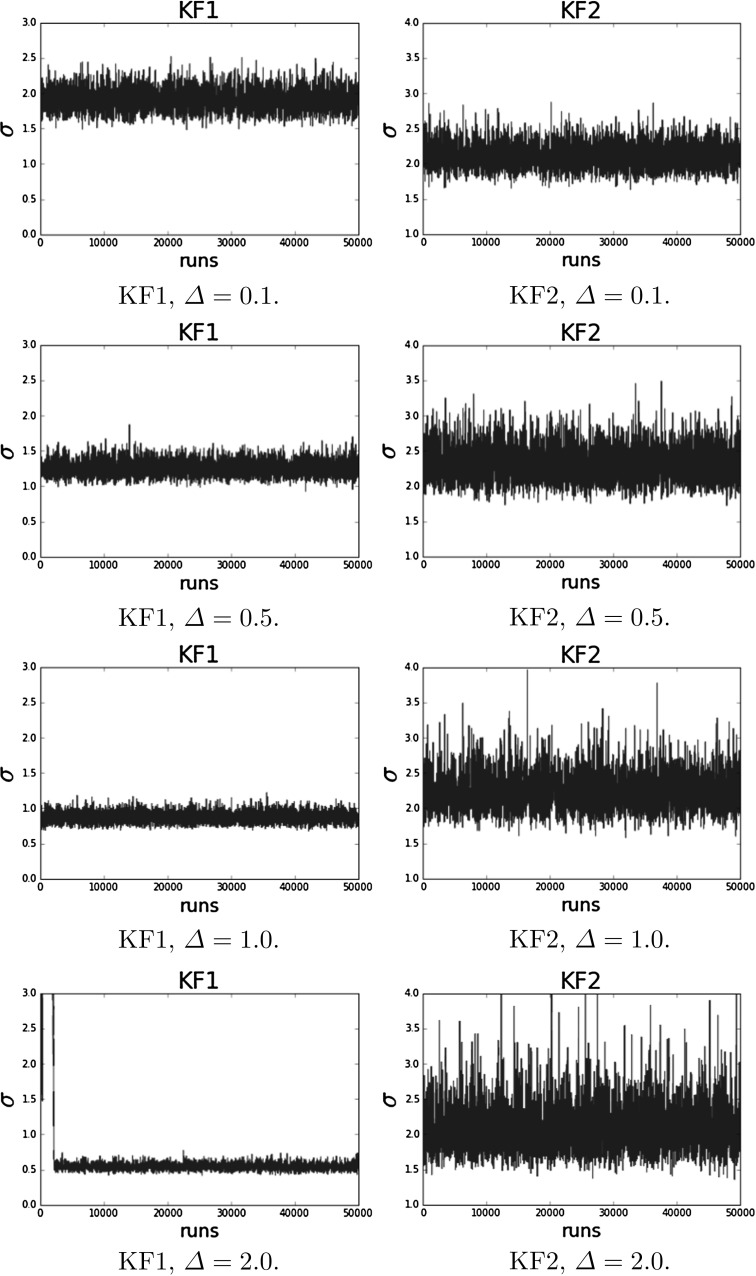

Fig. 6.

MCMC traces of the posterior of using a random walk MH for both KF1 and KF2. Ground truth for

Fig. 7.

MCMC traces of the posterior of using a random walk MH for both KF1 and KF2. Ground truth for

Table 5.

Average of mean posterior ± 1 s.d. over 10 different datasets for and using a Metropolis–Hastings algorithm

| KF | |||

|---|---|---|---|

| 0.1 | KF1 | ||

| 0.5 | KF1 | ||

| 1.0 | KF1 | ||

| 2.0 | KF1 | ||

| 0.1 | KF2 | ||

| 0.5 | KF2 | ||

| 1.0 | KF2 | ||

| 2.0 | KF2 |

Data were simulated from an OU process with and

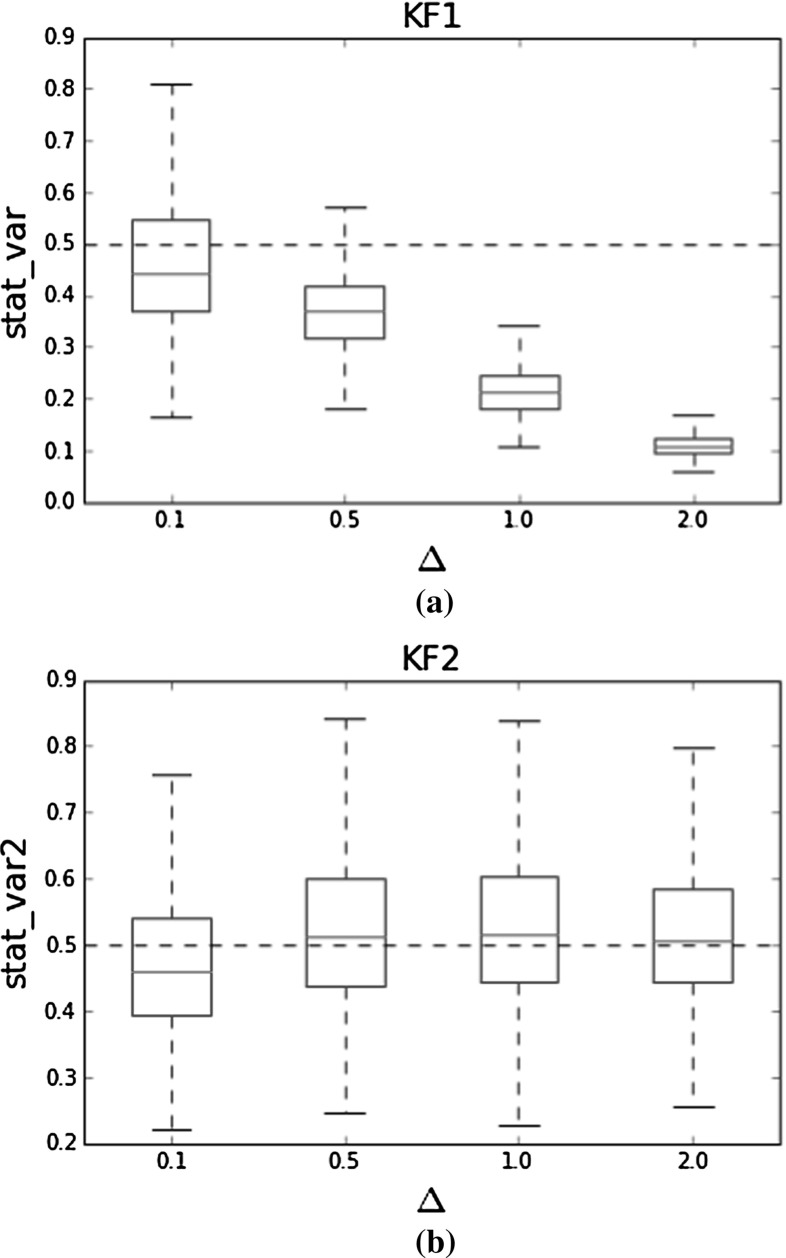

It is of interest to investigate the inferred stationary variance of the OU process using KF1 and KF2. We have plotted the inferred stationary variances obtained by the MH for both KF1 and KF2 in Fig. 1. The boxplots are obtained using the average of 10 different datasets and correspond again to an OU process with and , thus giving rise to a stationary variance of . When using the normalised aggregated data directly with KF1, we infer the wrong stationary variance of the underlying OU process which tends to zero as becomes larger, consistent with the theoretical results from Eq. (22). Intuitively, we can attribute this behaviour to the fact that aggregated data have relatively smaller fluctuations, so that KF1 will tend to underestimate the process variance.

Fig. 1.

Boxplots of inferred stationary variance of the OU process for different . The simulated OU process has and corresponding to a stationary variance of 0.5, as indicated by the dotted horizontal line. The inferred stationary variance using KF1 tends to zero as grows, but the stationary variance from KF2 is inferred correctly at all . a Boxplots of inferred stationary variance for different using KF1. b Boxplots of inferred stationary variance for different using KF2

In this section, we have looked at an example of inferring the parameters of an SDE using aggregated data, and we have found that to obtain accurate results we need to explicitly model the aggregated process. As the observation intervals become larger, there is a greater mismatch between KF1 and KF2. In the next two sections, we will look at examples of more complex stochastic systems that must be approximated by the LNA and compare again inference results using KF1 and KF2.

Lotka–Volterra model

We are now going to look at a system of two species that interact with each other according to three reactions

| 23a |

| 23b |

| 23c |

The model represented by the biochemical reaction network (23) is known as the Lotka–Volterra model, with representing prey species and predator species. Although a simple model, it has been used as a reference model (Boys et al. 2008; Fearnhead et al. 2014) since it consists of two species, making it possible to observe it partially through one of the species and also provides a simple example of a nonlinear system.

The LNA can be used to approximate the dynamics and the resulting ODEs can be found in “Appendix A.8”. We want to compare parameter estimation results using KF1 and KF2. We collected aggregated data from a Lotka–Volterra model using the Gillespie algorithm. We assumed a known initial population of 10 prey species and 100 predator species. The parameters of the system for producing the synthetic data were set to , following (Boys et al. 2008). We have added Gaussian noise with standard deviation set to 3.0, and we assumed that the noise level was known for inference. Our goal was to infer the three parameters of the system using aggregated observations solely from the predator population.

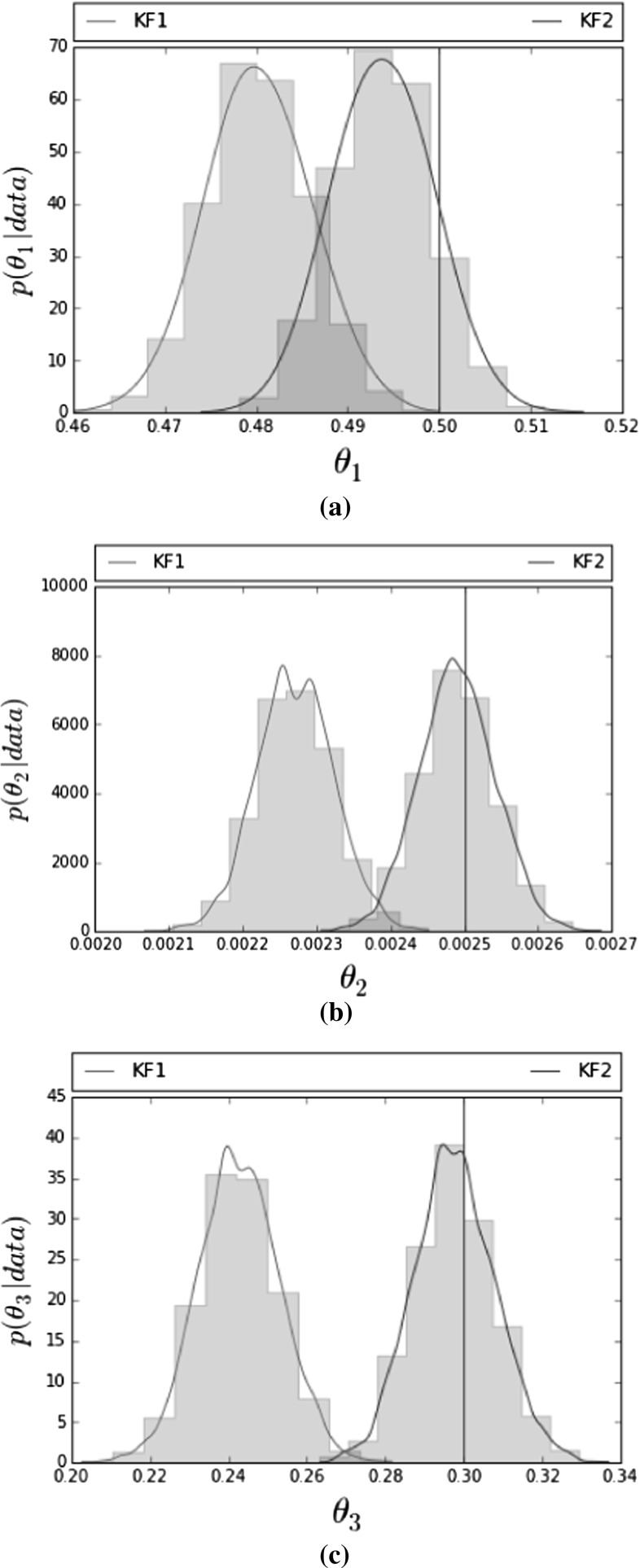

The Gillespie algorithm was run for 20 min. Data were aggregated and collected every 2 min resulting in 10 observations per sample. To infer the parameters, we assumed that we had 40 independent samples available. Since we assumed independence between the samples, we worked with the product of their likelihoods. In the ideal case of having complete data of a stochastic kinetic model the likelihood is conjugate to an independent gamma prior for the rate constants (Wilkinson 2011). The choice of Ga(2,10) with shape and rate gives a reasonable range for all three parameters and has also been used by Fearnhead et al. (2014). However, in this case the choice of prior is not important as the data dominate the posterior. We have run the same experiment using uninformative exponential priors Exp() that resulted in equivalent posterior distributions. Since we know that we want all parameters to be positive, we worked with a log transformation. MCMC convergence in this example is relatively slow and adaptive MCMC (Sherlock et al. 2010) was found to speed up convergence (see “Appendix A.9” for details). The adaptive MCMC was run for 30K iterations with 10K regarded as burn-in. The MCMC was initialised at random values sampled from uniform distributions. Parameter estimation results for all three parameters using adaptive MCMC are shown in Table 2, while Fig. 2 shows histograms of their posterior densities. The ground truth value for each parameter is indicated by a vertical blue line. We can see that only the posterior histograms corresponding to KF2 include the correct estimate for all three parameters in their support. In “Appendix A.15”, we have included traceplots of the MCMC runs for all three parameters, where we can see that the adaptive MCMC leads to a fast convergence for both KF1 and KF2. In order to verify the validity of our results, we have run an extra 100 datasets, each consisting of 40 independent samples and obtained point estimates from KF1 and KF2 using the Nelder–Mead algorithm. The results can be found in “Appendix A.10” and agree with our previous conclusion that inference with KF1 gives inaccurate estimates.

Table 2.

Mean posterior ± 1 s.d. for using an adaptive MCMC

| Ground truth | KF1 | KF2 | |

|---|---|---|---|

| 0.5 | |||

| 0.0025 | |||

| 0.3 |

Data were simulated from a Lotka–Volterra model according to the ground truth values

Fig. 2.

Posterior densities of from aggregated data using KF1 (red histogram) and KF2 (green histogram). a Posterior density of . b Posterior density of . c Posterior density of

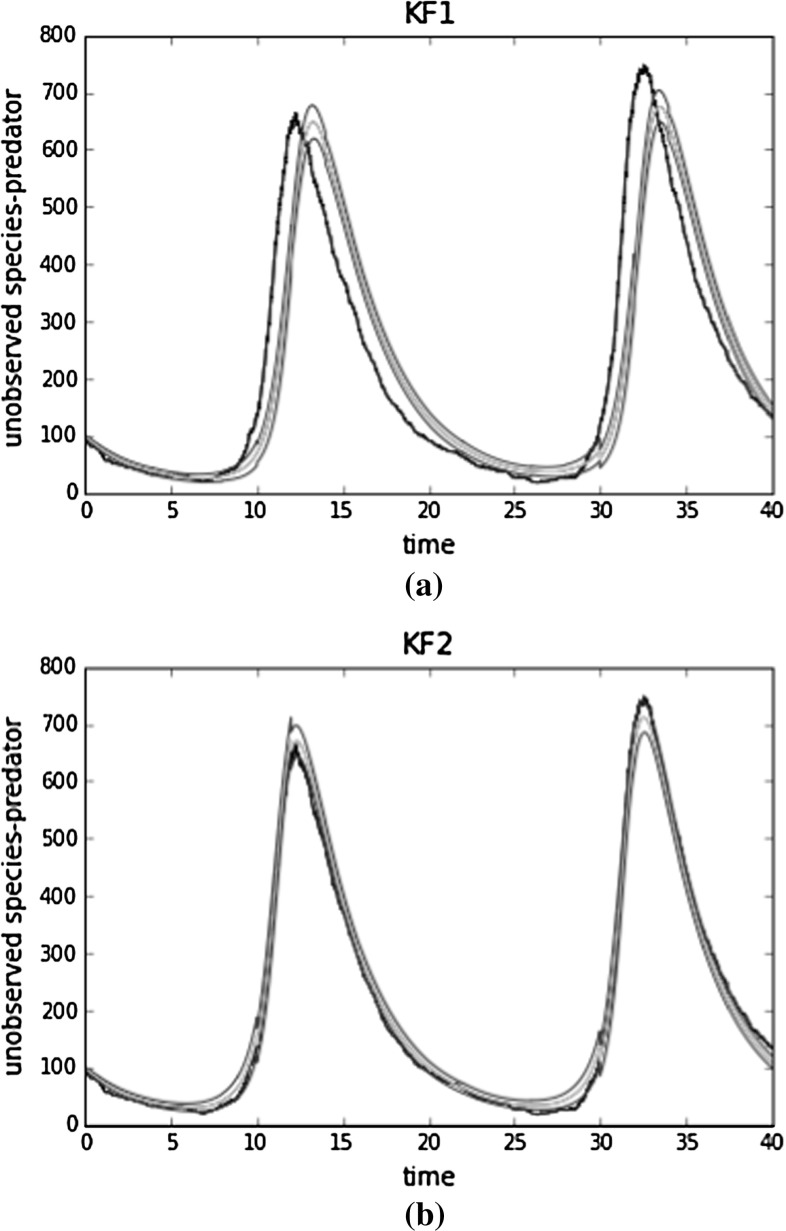

Assuming knowledge of the parameter values, we can also use the KF for trajectory inference. In Fig. 3, we demonstrate filtering results for the prey population assuming that we have aggregated data. We simulated a trajectory using and sampled aggregated data every 2 min. Black lines represent the true trajectory of the populations. We see that the inferred credible region with KF1 does not contain the true underlying trajectory in many places. Note that red dots correspond to normalised (aggregated) observations for KF1 and aggregated observations for KF2, so they do not have the same values. In “Appendix A.16”, we include filtering results for the unobserved predator population.

Fig. 3.

Filtering results for the prey population. Red dots correspond to aggregated observations for KF2 and normalised observations for KF1. The black line represents the actual process. Purple lines represent the mean estimate and green 1 s.d. . a Filtering results for the prey population using KF1. b Filtering results for the prey population using KF2

Translation inhibition model

In this example, we are interested in inferring the degradation rate of a protein from a translation inhibition experiment. We model the translation inhibition experiment by the following set of reactions where R stands for mRNA and P for protein:

| 24a |

| 24b |

The LNA is used, again, as an approximation of the dynamics and the resulting system of ODEs can be found in “Appendix A.11”. Before applying our method to real data from this system, we test the performance on synthetic data simulated using the Gillespie algorithm. We simulated 30 time series (corresponding to 30 different cells), assuming the following values as the ground truth for the kinetic parameters: and . We further set the initial protein abundance of to 400 molecules. We have scaled the data by a factor , so that they are proportional to the original synthetic data and added Gaussian noise with a variance of . For this study, we have assumed that data were integrated over 30 min.

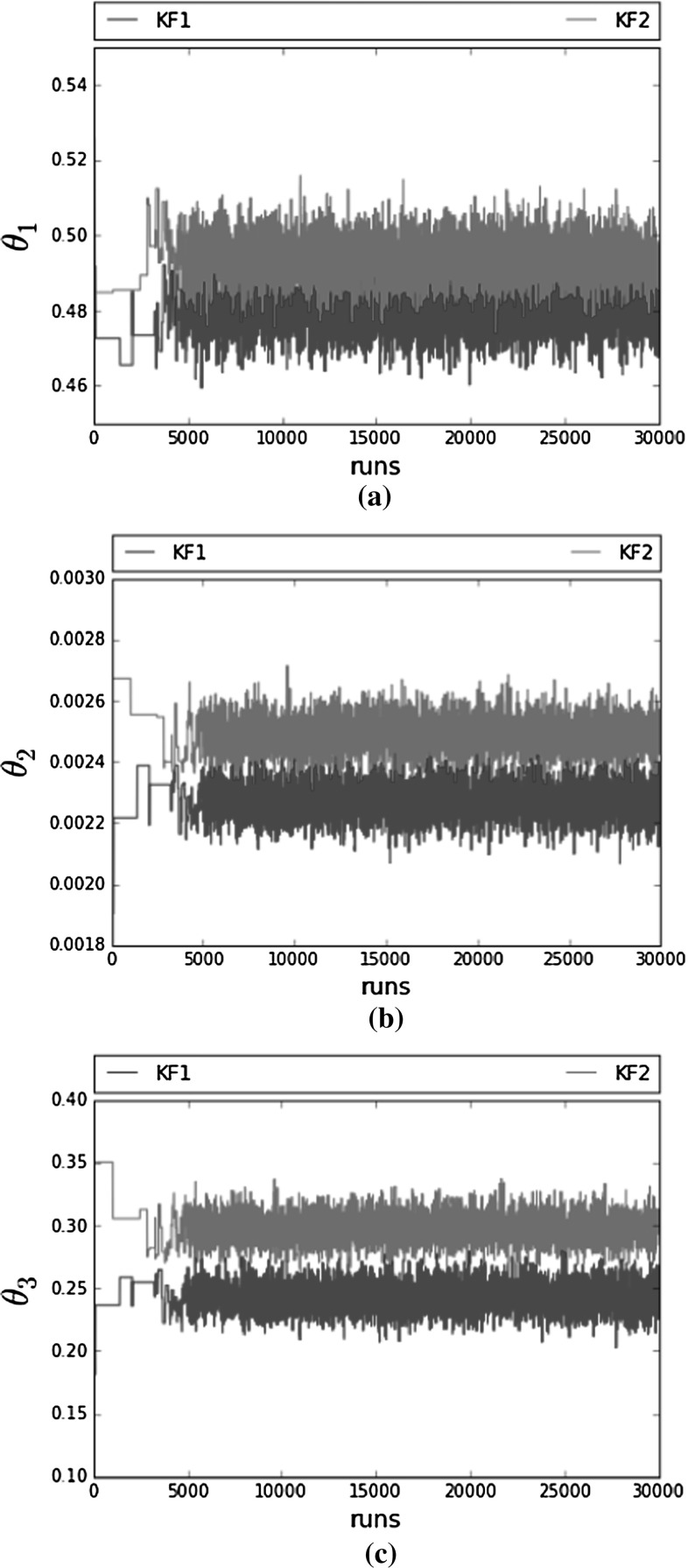

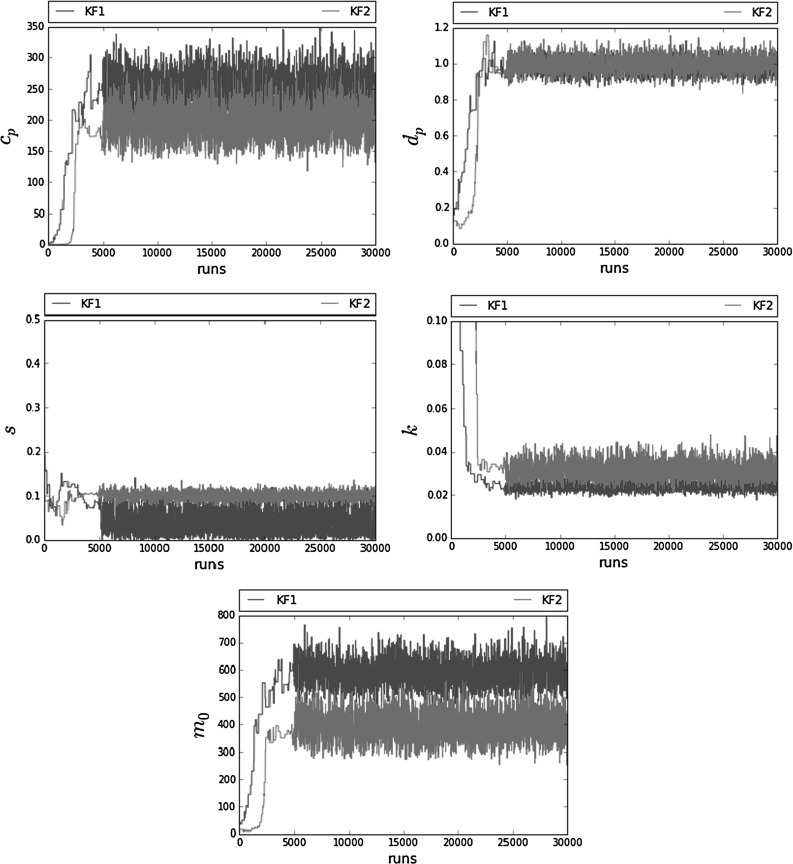

Again we use an adaptive MCMC algorithm (Sherlock et al. 2010). Non-informative exponential priors with mean were placed on all parameters. We have adopted the parametarisation used in Komorowski et al. (2009) and Finkenstädt et al. (2013) such as and and worked in the log parameter space. Parameter estimation results for the vector using KF1 and KF2 are summarised in Table 3. As we can see, the degradation rates are successfully inferred by both approaches. However, using KF1 leads to an overestimation of and an underestimation of the noise level s, which corresponds to a smoother process than the underlying one. MCMC traces from both KF1 and KF2 are presented in Fig. 11.

Table 3.

Mean posterior ± 1 s.d. for using an adaptive MCMC

| c | GT | KF1 | KF2 |

|---|---|---|---|

| 200 | |||

| 0.97 | |||

| s | 0.1 | ||

| k | 0.03 | ||

| 400 |

Data were simulated from a translation inhibition model according to the ground truth (GT) values

Fig. 11.

Adaptive MCMC traces of the posterior vector using synthetic data with KF1 and KF2

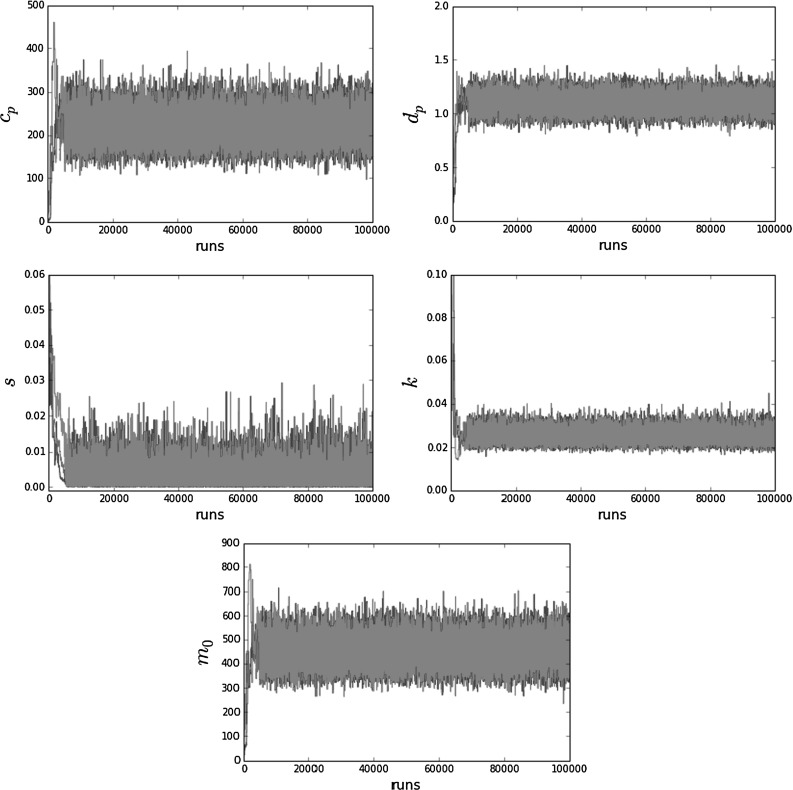

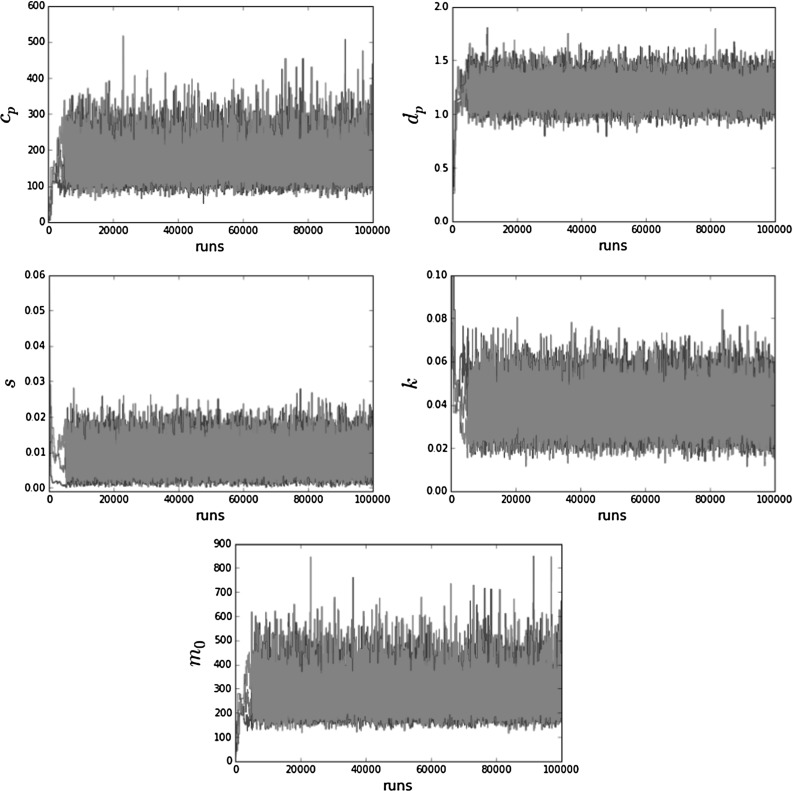

We then applied our model to single cell luciferase data from a subset of 11 pituitary cells (Harper et al. 2011). Parameter estimation results using the same adaptive MCMC are summarised in Table 4. The MCMC was run for 100K iterations out of which 60K were discarded as burn-in. Again, we observe that, using KF1, we get a higher and a slightly lower noise level s. Posterior histograms of the degradation rates are shown in Fig. 4. A deterministic approach for fitting the data would give a degradation rate of around 1.02 and, as we can see, this value is included in both histograms of Fig. 4. To check convergence using the Gelman–Rubin statistic, we have run 3 different chains with different initialisations. MCMC traces for both KF1 and KF2 are shown in “Appendix A.18” (Fig. 12 and 13) where we can see that the three chains are very close to each other, corresponding to a Gelman–Rubin statistic close to 1.

Table 4.

Mean posterior ± 1 s.d. for using adaptive MCMC with single cell data obtained from a subset of 11 pituitary cells from a translation inhibition experiment (Harper et al. 2011)

| c | KF1 | KF2 |

|---|---|---|

| s | ||

| k | ||

Fig. 4.

Posterior histograms of degradation rate using KF1 and KF2

Fig. 12.

Three MCMC chains of the posterior vector using single cell data with KF1

Fig. 13.

Three MCMC chains of the posterior vector using single cell data with KF2

Discussion

We have presented a Bayesian framework for doing inference using aggregated observations from a stochastic process. Motivated by a systems biology example, we chose to use the LNA to approximate the dynamics of the stochastic system, leading to a linear SDE. We then developed a Kalman filter that can deal with integrated, partial and noisy data. We have compared our new inference procedure to the standard Kalman filter which has previously been applied in systems biology applications approximated using the LNA. Overall, we conclude that the aggregated nature of data should be considered when modelling data, as aggregation will tend to reduce fluctuations and therefore the stochastic contribution of the process may be underestimated.

In Sect. 4, we described the different properties of a stochastic process and its integral in the case of the Ornstein–Uhlenbeck process. We showed that one cannot simply treat the integrated observations as proportional to observations coming from the underlying unintegrated process when carrying out inference. As the aggregation time window increases, parameter estimates using this approach become less accurate and the inferred stationary variance of the process is underestimated. In contrast, our modified KF is able to accurately estimate the model parameters and stationary variance of the process.

In Sect. 5, we have demonstrated the ability of our method to give more accurate results in a Lotka–Volterra model given synthetic aggregated data. In Sect. 6, we looked at a real-world application with data from a translation inhibition experiment carried out in single cells. As the LNA depends on its deterministic part, and in a deterministic system integration is dealt with reasonably well using the simple proportionality constant approach, some of the system parameters, such as the degradation rate, can be inferred reasonably well by the standard non-aggregated data approach. However, neglecting the aggregated nature of the data does lead to a significantly larger estimate of the initial population of molecules even in this simple application. This is consistent with our observation that neglecting aggregation will tend to underestimate the scale of fluctuations as it is the number of molecules that determines the size of fluctuations in this example. In models where noise plays a more critical role, e.g. systems with noise-induced oscillations, the effect of parameter misspecification could have more serious consequences on model-based inferences.

Our proposed inference method can deal with the intrinsic noise inside a cell, measurement noise and temporal aggregation. However, cell populations are highly heterogeneous, and cell-to-cell variability has not been considered in our current inference scheme. It would be possible to deal with cell-to-cell variability using a hierarchical model (Finkenstädt et al. 2013) which could be combined with the integrated data Kalman Filter developed here.

All experiments were carried out on a cluster of 64bit Ubuntu machines with an i5-3470 CPU @ 3.20 GHz x 4 processor and 8 GB RAM. All scripts were run in Spyder (Anaconda 2.5.0, Python 2.7.11, Numpy 1.10.4). Code reproducing the results of the experiments can be found on GitHub https://github.com/maria-myrto/inference-aggregated.

Acknowledgements

MR was funded by the UK’s Medical Research Council (award MR/M008908/1).

Appendix

Mean and variance of the integrated process

We start by computing , i.e. the mean of . We know that:

| 25 |

| 26 |

Averaging Eq. (26), dividing by and letting , gives us:

| 27 |

The mean of is set to zero, as we have chosen to use the Restarting LNA.

We now need to compute the covariance between and . Again and since we are using the Restarting LNA and thus, the covariance is given by . For our derivation, we need to use:

| 28 |

By multiplying Eqs. (26) and (28) we get:

| 29 |

Averaging the result (29), retaining terms up to first order in , dividing by and letting , we get:

| 30 |

The variance of is given by since . We have that,

| 31 |

By averaging (31), retaining terms up to first order in , dividing by and letting , we get:

| 32 |

Useful Gaussian identities

Let x and y be jointly Gaussian random vectors:

| 33 |

Then, the marginal and conditional distributions of x (equivalently for y) are, respectively (Bishop 2007):

| 34 |

| 35 |

Update equations of a discrete Kalman Filter

Using the Gaussian Identities in A.2 we have

| 36 |

Since we are working with Gaussians, we know that , and the updated and are given by:

| 37 |

Analytical solutions for the OU process and its integral

Given an OU process of the following form:

| 38 |

we can derive its solution according to the general theory for linear SDEs. Since the solution is a Gaussian process, we will only need to define its mean and variance which are given by Eqs. (6, 7). All the ODEs in this case are first-order linear ODEs with constant coefficients, so using for example an integrating factor, we can derive the following solution for an ODE of the form :

| 39 |

For the mean we get from Eq. (6):

| 40 |

For the variance we have the following:

| 41 |

For the solution of the integrated OU process , we need to calculate its mean, covariance and variance given by Eqs. (12), (13) and (14). The initial conditions for these ODEs will be set to 0, since at each observation point the integrated process starts from 0. For clarity, we will use the results A, B, C from “Appendix A.5”.

First we find the mean:

| 42 |

For the covariance, we first calculate from Eq. (13):

| 43 |

Now the covariance can be calculated from:

| 44 |

For the variance, we need to calculate:

| 45 |

Now we can derive the variance:

| 46 |

Frequently used integrals for part (A.4)

| 47 |

| 48 |

| 49 |

Exact updating formula of OU process

The OU process admits an exact update formula given by Gillespie (1992):

| 50 |

Average over 10 datasets—OU example

See Table 5.

LNA for Lotka–Volterra model

The Lotka–Volterra model (23) gives rise to the stoichiometry matrix,

| 51 |

with transition rates,

| 52 |

The following matrices need to be computed:

| 53 |

| 54 |

| 55 |

The macroscopic rate equations are now given by:

| 56 |

| 57 |

For the diffusion terms, we only need to compute the variance of the resulting Gaussian process since we restart the stochastic part at each observation point in accordance with (Fearnhead et al. 2014).

| 58 |

V is a symmetric matrix so . So:

| 59 |

| 60 |

| 61 |

The integrated process follows Eqs. (10),(13),(14). The deterministic part is given by:

| 62 |

The ODEs for its integrated variance and covariance with the underline process are given below, where and :

| 63 |

such as,

| 64 |

| 65 |

| 66 |

| 67 |

| 68 |

Adaptive MCMC

According to the specific adaptive MH (Sherlock et al. 2010), the new state is sampled from a mixture of Gaussians:

| 69 |

corresponds to the sampled variance up to iteration t and is estimated after enough samples have been accepted. The parameter and is defined by the user, we have chosen a value of 0.05. The scaling factor can either be fixed (Roberts and Rosenthal 2009) or be tuned (Sherlock et al. 2010). This algorithm targets an acceptance rate of .

Nelder Mead results for LV model

See Table 6.

Table 6.

Nelder–Mead results for . The median values across 100 datasets are shown in the third and fourth column for KF1 and KF2, respectively

| Ground truth | KF1 Median[LQ,UQ] | KF2 Median[LQ,UQ] | |

|---|---|---|---|

| 0.5 | 0.48160 [0.47770,0.48651] | 0.49746 [0.49278,0.50122] | |

| 0.0025 | 0.00227 [0.00222,0.00232] | 0.00248[0.00244,0.00254] | |

| 0.3 | 0.24773 [0.23927,0.25797] | 0.30047[0.29320,0.31061] |

Lower and upper quartiles are shown in brackets

LNA for translation inhibition model

The following model is being assumed where R and P stand for the (numbers of) gene mRNA and protein, respectively:

| 70a |

| 70b |

The above equations result in the following stoichiometry matrix:

| 71 |

and the transition rates are :

| 72 |

The required matrices are calculated below:

| 73 |

| 74 |

| 75 |

The deterministic part is now given by:

| 76 |

The stochastic part is given by the (restarting) LNA where we have dropped the dependency of from time:

| 77 |

resulting in the following ODE for the stochastic variance:

| 78 |

For the integrated process, we get the following according to Eqs. (10),(13) and (14) (Fig. 5). First the deterministic part is given by:

| 79 |

The stochastic part is given by:

| 80 |

resulting in the following ODEs for its integrated variance and covariance with the unintegrated process:

| 81 |

| 82 |

Fig. 5.

Simulated trajectories from an OU process with and , along with the corresponding aggregated process with an integration period of 2 min. For the aggregated process, we assumed observations every 2 min, which are indicated by red crosses. a OU process. b Aggregated process

OU and aggregated OU process

See Fig. 5.

OU traceplots

Figures 6 and 7 show samples of the OU parameters during the MCMC runs.

Filtering results for OU process using aggregated data

See Fig. 8.

Fig. 8.

Filtering results from KF1 and KF2 for an OU process with and (blue trace) using aggregated data over an integration period of . Black lines correspond to the posterior mean estimate and green lines to 1 s.d.. For inference, we used the estimated parameters from A.7. a KF1 (). b KF2 (). (Color figure online)

MCMC traces from LV experiment

See Fig. 9.

Fig. 9.

MCMC traceplots for the LV experiment using an adaptive MCMC algorithm. a MCMC traceplots for . b MCMC traceplots for . c MCMC traceplots for

Filtering results for the predator population

See Fig. 10.

Fig. 10.

Filtering results for the predator population. Red dots correspond to aggregated observations, and the black line represents the actual process. Purple lines represent the mean estimate and green 1 s.d. . a Filtering results for the predator population using KF1. b Filtering results for the predator population using KF2

MCMC traces for Translation inhibition example with synthetic data

See Fig. 11.

MCMC traces for translation inhibition example with single cell data

References

- Arnold L. Stochastic Differential Equations Theory and Applications. [S.l.] Hoboken: Wiley; 1974. [Google Scholar]

- Bishop C. Pattern Recognition and Machine Learning (Information Science and Statistics) New York: Springer; 2007. [Google Scholar]

- Boys R, Wilkinson D, Kirkwood T. Bayesian inference for a discretely observed stochastic kinetic model. Stat. Comput. 2008;18(2):125–135. doi: 10.1007/s11222-007-9043-x. [DOI] [Google Scholar]

- Elf J, Ehrenberg M. Fast evaluation of fluctuations in biochemical networks with the linear noise approximation. Genome Res. 2003;13(11):2475–2484. doi: 10.1101/gr.1196503. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fearnhead P, Giagos V, Sherlock C. Inference for reaction networks using the linear noise approximation. Biometrics. 2014;70(2):457–466. doi: 10.1111/biom.12152. [DOI] [PubMed] [Google Scholar]

- Finkenstädt B, Woodcock DJ, Komorowski M, Harper CV, Davis JRE, White MRH, Rand DA. Quantifying intrinsic and extrinsic noise in gene transcription using the linear noise approximation: an application to single cell data. Ann. Appl. Stat. 2013;7(4):1960–1982. doi: 10.1214/13-AOAS669. [DOI] [Google Scholar]

- Gardiner C. Handbook of Stochastic Methods: for Physics, Chemistry and the Natural Sciences (Springer Series in Synergetics) 3. Berlin: Springer; 2004. [Google Scholar]

- Giagos, V.: Inference for auto-regulatory genetic networks using diffusion process approximations. Ph.D. Thesis, Lancaster University (2010)

- Gillespie DT. Markov Processes : An Introduction for Physical Scientists. Boston: Academic Press; 1992. [Google Scholar]

- Gillespie DT. A rigorous derivation of the chemical master equation. Phys. A Stat. Mech. Appl. 1992;188:404–425. doi: 10.1016/0378-4371(92)90283-V. [DOI] [Google Scholar]

- Gillespie DT. Exact numerical simulation of the Ornstein-Uhlenbeck process and its integral. Phys. Rev. E. 1996;54:2084–2091. doi: 10.1103/PhysRevE.54.2084. [DOI] [PubMed] [Google Scholar]

- Golightly A, Wilkinson DJ. Bayesian inference for stochastic kinetic models using a diffusion approximation. Biometrics. 2005;61(3):781–788. doi: 10.1111/j.1541-0420.2005.00345.x. [DOI] [PubMed] [Google Scholar]

- Harper CV, Finkenstädt B, Woodcock DJ, Friedrichsen S, Semprini S, Ashall L, Spiller DG, Mullins JJ, Rand DA, Davis JRE, White MRH. Dynamic analysis of stochastic transcription cycles. PLoS Biol. 2011;9(4):e1000607. doi: 10.1371/journal.pbio.1000607. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hull J. Futures and Other Derivatives. Options, Options, Futures and Other Derivatives. Upper Saddle River: Pearson/Prentice Hall; 2009. [Google Scholar]

- Jazwinski AH. Stochastic Processes and Filtering Theory. Cambridge: Academic Press; 1970. [Google Scholar]

- Kalman RE. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960;82(1):35–45. doi: 10.1115/1.3662552. [DOI] [Google Scholar]

- Komorowski M, Finkenstädt B, Harper CV, Rand DA. Bayesian inference of biochemical kinetic parameters using the linear noise approximation. BMC Bioinform. 2009;10(1):1–10. doi: 10.1186/1471-2105-10-343. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mbalawata IS, Särkkä S, Haario H. Parameter estimation in stochastic differential equations with Markov chain Monte Carlo and non-linear Kalman filtering. Comput. Stat. 2013;28(3):1195–1223. doi: 10.1007/s00180-012-0352-y. [DOI] [Google Scholar]

- Roberts GO, Rosenthal JS. Examples of adaptive MCMC. J. Comput. Graph. Stat. 2009;18(2):349–367. doi: 10.1198/jcgs.2009.06134. [DOI] [Google Scholar]

- Särkkä, S.: Recursive Bayesian inference on stochastic differential equations. Ph.D. Thesis, Helsinki University of Technology (2006)

- Sherlock C, Fearnhead P, Roberts GO. The random walk metropolis: linking theory and practice through a case study. Stat. Sci. 2010;25(2):172–190. doi: 10.1214/10-STS327. [DOI] [Google Scholar]

- Spiller DG, Wood CD, Rand DA, White MRH. Measurement of single-cell dynamics. Nature. 2010;465(7299):736–745. doi: 10.1038/nature09232. [DOI] [PubMed] [Google Scholar]

- van Kampen N. Stochastic Processes in Physics and Chemistry. 3. Amsterdam: Elsevier; 2007. [Google Scholar]

- Wilkinson, D.J.: Stochastic modelling for systems biology. In: Chapman & Hall/CRC Mathematical and Computational Biology, 2nd edn. Taylor & Francis (2011)