Abstract

Addiction has long been characterized by diminished executive function, including impaired behavioral control and poor management of impulsivity. These deficits often manifest as impairments in reversal learning, delay discounting, and response inhibition. Identifying the neurobiological substrates of these behavioral deficits is therefore central to understanding addiction. Within the cycle of addiction, the periods during and after withdrawal are a particularly difficult point of intervention: the negative physical symptoms associated with drug removal, together with drug craving, increase the likelihood that the patient will relapse and return to drug use to abate these symptoms. Moreover, it is often during this time that drug-induced deficits in executive function hinder the patient's ability to refrain from drug use. Thus, developing more effective treatment strategies requires understanding the physiological and behavioral changes associated with withdrawal and drug craving, which largely manifest as deficits in executive control. In this review, we address the long-term impact of drugs of abuse on the behavioral and neural correlates of executive control, as measured by reversal learning, delay discounting, and stop-signal tasks, focusing particularly on our work using rats as a model system.

Drug addiction encompasses a repetitive cycle of intoxication, withdrawal, and craving that persists despite adverse consequences. Implicit in the definition of drug addiction is a failure to control drug intake, due in part to deficits in impulse management and executive control. Impulsivity, response inhibition, and the tendency to engage in risky and premature behaviors are all components of the psychological construct of executive control. Not surprisingly, executive control mechanisms are often impaired in drug-addicted patients (Morein-Zamir and Robbins 2015; Dalley and Robbins 2017). This highlights the importance of understanding the neural processes underlying executive control and response inhibition, both for addiction and for the adaptive control of everyday behavior.

In both human and animal models, much research has focused on the dysregulation of midbrain dopamine and its striatal targets, given the importance of these pathways in reward learning and habit formation (Di Chiara et al. 1999; Di Chiara 2002; Goldstein and Volkow 2011; Volkow and Morales 2015). Changes in these areas are detectable almost immediately, as consumption of drugs of abuse increases dopamine release in the nucleus accumbens (Di Chiara 2002). Moreover, years of research examining the biochemical mechanisms of drugs of abuse in areas like the nucleus accumbens have shown that these drugs typically induce phasic changes in dopamine firing, which are necessary for activating D1R-expressing neurons associated with reward learning, while suppressing D2R-expressing projection neurons associated with aversive learning (Smith et al. 2013; Volkow and Morales 2015). This insidious combination, enhancing the reward associated with drug consumption while suppressing learning about the aversive consequences of drug use, is thought to contribute to the development of drug dependence and addiction.

While changes in reward processes may partially explain the development of addiction, they do not fully account for its complexity. Addiction develops through a gradual pathological process influenced by a variety of factors, including the type of drug used (Gossop et al. 1992; Koob and Le Moal 1997), the frequency and route of exposure (Gossop et al. 1992; Strang et al. 1998), and the developmental timing of exposure (Schramm-Sapyta et al. 2009; Wong et al. 2013). Beyond the disruption of reward processes, the etiology of addiction encompasses the dysregulation of higher order frontal areas thought to be important for cognitive flexibility and executive control, such as the prefrontal cortex (PFC), orbitofrontal cortex (OFC), and anterior cingulate cortex (ACC) (Schoenbaum et al. 2006; Goldstein and Volkow 2011; Volkow and Morales 2015; Everitt and Robbins 2016; Dalley and Robbins 2017). Although it is unclear whether these deficits are caused by drugs of abuse and addiction, reflect a preexisting vulnerability (i.e., an endophenotype), or both, understanding how these psychological constructs are disrupted is critical for developing effective behavioral and pharmacological treatments for addiction.

Given the multidimensional nature of impulsivity, the behavioral tests commonly used to characterize deficits in patient populations and animal models often differ in the type(s) of impulsivity they measure (Dalley et al. 2011; Dalley and Robbins 2017). Despite this complication, reversal learning, delay discounting, stop-signal, and 5-choice serial reaction time (5-CSRT) tasks have been instrumental in identifying top-down regions that regulate several aspects of impulsivity, from outcome expectancies to response conflict to motor control (Schoenbaum et al. 2006; Eagle and Baunez 2010; Morein-Zamir and Robbins 2015; Dalley and Robbins 2017). These tasks are also advantageous because they can be adapted relatively easily for both animal and human subjects, allowing for a more comprehensive understanding of addiction, from molecules to behavior (Schoenbaum et al. 2006; Eagle and Baunez 2010; Morein-Zamir and Robbins 2015; Dalley and Robbins 2017). However, one difficulty in studying top-down processes of cognitive control in the context of addiction is that functional differences may not be detectable during early drug use. Deficits in these abilities become more pronounced as dependency increases; it is therefore imperative to study these functions once addiction has developed, such as during and after periods of withdrawal and craving.

Within the cycle of addiction, withdrawal is one of the most pernicious and difficult aspects to combat. Withdrawal is often characterized by a period of negative affect or emotional pain that accompanies the body's attempt to reach a euthymic state in the absence of drugs (Koob and Le Moal 1997; Koob 2013). During this time, the negative physical symptoms of withdrawal are often compounded by alterations in the brain regions and circuits implicated in executive control. Consequently, individuals in withdrawal may return to drugs in an effort to abate these symptoms. Further, even after withdrawal symptoms subside, patients with addiction remain susceptible to relapse and may return to drug use after many years of abstinence. This return to drug use often occurs when individuals lack the cognitive control needed to prevent and properly contextualize their own behavior (Koob 2013). Understanding how deficits in top-down processes such as response inhibition contribute to, and are affected by, the cycle of addiction is paramount to developing better pharmacological and behavioral interventions. This review highlights the impact of drugs of abuse on executive function, drawing on the reversal learning, delay discounting, and stop-signal task literature, with a special focus on our recent work characterizing long-term changes in the neural correlates of reward-based decision-making in brain regions routinely altered by chronic drug use and withdrawal (see Fig. 1).

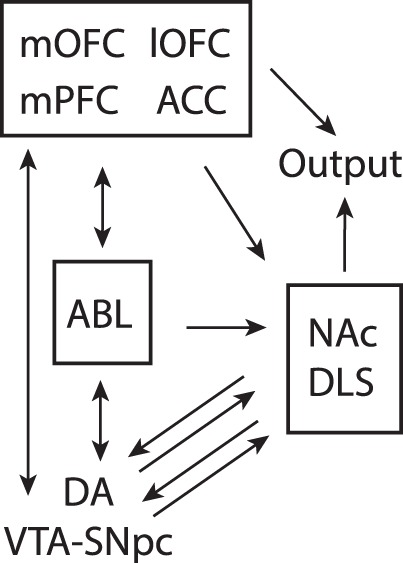

Figure 1.

Circuit diagram showing connectivity between brain regions involved in reward-guided decision-making for delayed rewards, reversal learning, and the stop-signal task. Arrows represent the direction of information flow; single-headed arrows are unidirectional and double-headed arrows are reciprocal. (lOFC) Lateral Orbitofrontal Cortex, (mOFC) Medial Orbitofrontal Cortex, (ABL) Basolateral Amygdala, (ACC) Anterior Cingulate Cortex, (NA) Nucleus Accumbens, (DLS) Dorsolateral Striatum, (VTA) Ventral Tegmental Area, (DA) Dopamine, (SNc) Substantia Nigra pars compacta.

Fractionating impulsive behavior through task design

Impulsive behavior is a multifaceted trait generally defined as a tendency to act prematurely or to engage in risky or inappropriate behavior without foresight (Dalley and Robbins 2017). Behavioral measures of impulsivity often discriminate between “waiting impulsivity” and “stopping impulsivity.” Deficits in one type of impulsivity often do not generalize to the other, although this may be a product of task design (Dalley et al. 2011; Everitt and Robbins 2016; Dalley and Robbins 2017). Waiting impulsivity is typically tested using delay discounting tasks, which, as the name suggests, measure a subject's willingness to wait for a larger reward when given the option of a smaller, immediate reward. Stopping impulsivity reflects response inhibition and describes a subject's ability to disengage from an already selected action in the presence of a “stop” cue. This form of impulsivity requires the cancellation of an action policy and is typically tested using either stop-signal or Go/NoGo tasks. Importantly, performance on stop-signal tasks can be dissociated from performance on Go/NoGo tasks, suggesting that, while similar, these tasks test different forms of response inhibition (Eagle et al. 2008).

On both stop-signal and Go/NoGo tasks, difficulty arises on trials that require inhibition of an automatic response (Verbruggen and Logan 2008). Both tasks are structured so that on ∼80% of trials subjects receive a “GO” cue requiring them to make an instrumental response (e.g., pressing the left lever in the presence of a light) to receive a reward (e.g., sucrose). On the remaining ∼20% of trials, subjects receive either a “GO” cue followed by a “STOP” cue (stop-signal tasks) or a “NoGo” cue alone (Go/NoGo tasks), requiring them to refrain from making the prepotent GO response (Fig. 2). The percentage of correct trials by trial type is a common dependent variable for these tasks, as is the number of errors made. For stop-signal tasks, the stop-signal reaction time (SSRT) is another common measure of response inhibition; it captures the latency of the stopping process as estimated by a stochastic model (Verbruggen and Logan 2008). Generally speaking, deficits in stopping impulsivity manifest on both types of task as increased error commission on STOP/NoGo trials and, in the case of stop-signal tasks, specifically as longer SSRTs, suggesting disinhibition of executive control and increased impulsivity (Verbruggen and Logan 2008; Eagle and Baunez 2010; Dalley et al. 2011; Dalley and Robbins 2017).
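Because the SSRT cannot be observed directly, it has to be inferred. One common estimator under the independent race model is the integration method, sketched minimally below: the GO reaction times are ranked, the nth value is taken where n is the probability of responding on STOP trials times the number of GO reaction times, and the mean stop-signal delay (SSD) is subtracted. This is a simplified illustration (it ignores GO omissions and other refinements) and not necessarily the estimator used in the studies cited above; the function name and the timing values are hypothetical.

```python
import math
from statistics import mean


def ssrt_integration(go_rts, stop_delays, p_respond_given_stop):
    """Simplified integration-method SSRT estimate (hypothetical helper).

    go_rts: reaction times (msec) from GO trials on which a response was made.
    stop_delays: stop-signal delays (msec) used on STOP trials.
    p_respond_given_stop: fraction of STOP trials with an erroneous response.
    """
    if not go_rts or not stop_delays:
        raise ValueError("need GO reaction times and stop-signal delays")
    if not 0.0 <= p_respond_given_stop <= 1.0:
        raise ValueError("p_respond_given_stop must lie in [0, 1]")
    ordered = sorted(go_rts)
    # nth GO RT: the point at which, under the race model, the stop process
    # is assumed to finish; n is clamped to a valid 1-based rank.
    n = min(len(ordered), max(1, math.ceil(p_respond_given_stop * len(ordered))))
    # SSRT = nth GO RT minus the mean stop-signal delay.
    return ordered[n - 1] - mean(stop_delays)


go_rts = [300, 320, 340, 360, 380, 400, 420, 440, 460, 480]  # hypothetical
stop_delays = [150, 200, 250]                                  # hypothetical
print(ssrt_integration(go_rts, stop_delays, 0.5))  # 380 - 200 = 180
```

A subject who fails to stop more often (a higher p(respond | stop) at the same delays) yields a longer SSRT, which is the pattern read as weaker response inhibition.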

Figure 2.

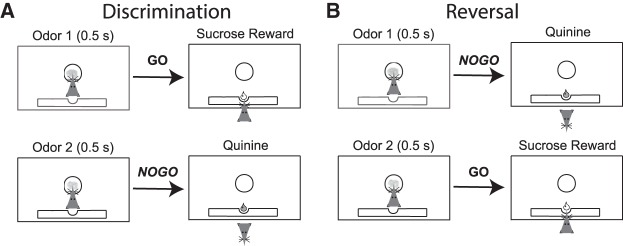

Previous cocaine self-administration impairs reversal learning. Illustration of a GO/NOGO reversal paradigm used in rats. (A) During initial discrimination learning, rats learn that odor 1 predicts sucrose and odor 2 predicts quinine. (B) During reversal learning, the odor–outcome relationships reverse (i.e., odor 1 = quinine; odor 2 = sucrose). Prior cocaine exposure makes rats faster to respond on both GO and NOGO trials and increases the number of trials to criterion during reversal blocks, but not during discrimination learning. For a comprehensive review of the neural correlates of performance on this task and their disruption after cocaine exposure, please see Stalnaker et al. 2009.

A third measure of impulsivity is reversal learning. Although reversal learning involves several different cognitive processes, one key component of adaptive and flexible behavior engaged during reversal is the ability to control the impulse to act on previously learned associations when the meaning of conditioned stimuli changes. In these tasks, animals first learn an initial discrimination in which one stimulus or behavior produces an appetitive outcome, whereas a second stimulus or behavior yields no outcome or an aversive one. After the animal learns to discriminate between the two stimuli (∼80% accuracy), the contingencies are reversed. Learning the reversal takes longer than the initial discrimination because previously learned associations interfere. The animal must therefore inhibit responses that are no longer appropriate and make decisions flexibly as contingencies change.
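Reversal impairments are typically quantified as the number of trials needed to reach the accuracy criterion after contingencies change. The sketch below assumes that criterion is evaluated over a sliding window of recent trials; the 80% value comes from the text, while the 10-trial window, the function name, and the example sessions are hypothetical.

```python
def trials_to_criterion(outcomes, criterion=0.8, window=10):
    """Return the trial count at which accuracy over the last `window` trials
    first reaches `criterion`, or None if it never does (hypothetical helper).

    outcomes: sequence of booleans in trial order, True for a correct trial.
    """
    correct_in_window = 0
    for i, correct in enumerate(outcomes):
        correct_in_window += int(correct)
        if i >= window:
            # Remove the trial that has just slid out of the window.
            correct_in_window -= int(outcomes[i - window])
        if i + 1 >= window and correct_in_window / window >= criterion:
            return i + 1
    return None


# Hypothetical post-reversal sessions: the second learner keeps responding to
# the old contingency for longer before switching.
fast = [False] * 5 + [True] * 10
slow = [False] * 15 + [True] * 10
print(trials_to_criterion(fast), trials_to_criterion(slow))  # 13 23
```

In this framing, the cocaine-related deficit described for Fig. 2 would appear as a larger trials-to-criterion value in reversal blocks but not in the initial discrimination.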

In summary, impulse control takes multiple forms, each assessed by different measures and each engaging executive and cognitive control mechanisms that allow animals to appropriately inhibit and accurately guide flexible behavior. Importantly, as discussed below, chronic drug exposure impairs performance on all three tasks, as well as the neural signals that give rise to impulse control and executive function.

Reversal learning

Understanding the disruptive impact of cocaine exposure on reversal learning using animal models

The ability to obtain reward and avoid aversive consequences requires learning the contingencies between environmental stimuli or behaviors and subsequent events (e.g., odor A predicts reward, and odor B predicts punishment). In the real world, however, these contingencies can change, and it sometimes becomes necessary to reverse learned associations (e.g., odor B now predicts reward, and odor A predicts punishment). Reversal learning is an essential component of executive function that is often disrupted in cocaine and alcohol abusers, as well as in nonhuman primate and rodent models of addiction (Stalnaker et al. 2009). The negative impact of cocaine exposure on reversal learning in humans and animal models has been reviewed extensively (Takahashi et al. 2008; Stalnaker et al. 2009). Here, we highlight major findings from rodent models that explore the negative consequences of cocaine exposure on flexible decision-making from a systems neuroscience perspective.

Reversal learning relies in part on cooperation among OFC, ventral striatum, dorsolateral striatum, and basolateral amygdala (Schoenbaum et al. 2006; Takahashi et al. 2008; Stalnaker et al. 2009), and this proposed network has been used to conceptualize computational accounts of decision-making based on the Actor-Critic model (Takahashi et al. 2008). In this framework, phasic dopamine release from the ventral tegmental area (VTA) carries a prediction error signal that trains the Critic (nucleus accumbens core (NAc) and OFC) and simultaneously reinforces the action-selection policies of the Actor (dorsolateral striatum). This circuitry is corrupted by cocaine exposure (Takahashi et al. 2007). Rats previously exposed to cocaine show impaired reversal learning on an odor-based GO/NOGO task (Takahashi et al. 2007) and respond more rapidly on both GO and NOGO trials (Stalnaker et al. 2006). In this task (Fig. 2), novel odors were paired with either a sucrose reward or a quinine punishment. Animals were required to learn these contingencies, which were systematically reversed once the discrimination criterion (80% correct) was reached (Takahashi et al. 2007). Single-unit recordings in both dorsolateral striatum (Actor) and nucleus accumbens (Critic) of cocaine-sensitized rats revealed increased activation of dorsolateral striatum and diminished activation of nucleus accumbens. This imbalance leads to perseveration, making it harder for cocaine-exposed rats to reverse previously learned associations.

While these findings support disruption of the actor-critic circuit after cocaine exposure, the interaction between the nucleus accumbens and dorsolateral striatum alone does not fully account for the deficits in reversal learning. OFC has been implicated in signaling the value of expected outcomes, a key feature that helps define its role as part of the Critic, and OFC function is also impaired by cocaine exposure (Stalnaker et al. 2007b). There is evidence that OFC disruptions resulting from lesions resemble deficits that arise from drug exposure (Schoenbaum and Shaham 2008). Lesions of OFC not only impair reversal learning on the task described above but also produce encoding errors in the basolateral amygdala that suggest a failure to track cue significance when contingencies change (Saddoris et al. 2005; Stalnaker et al. 2007a,b). This apparent inflexibility is mediated by the basolateral amygdala, as bilateral lesions of the basolateral amygdala reverse the negative impact of bilateral OFC lesions (Stalnaker et al. 2007b). This suggests that the basolateral amygdala is necessary for the significance of cues to be learned and encoded in other regions such as OFC. Moreover, the inflexibility produced by cocaine exposure during reversal learning can be prevented by lesioning the basolateral amygdala, suggesting that abnormal basolateral amygdala encoding is the proximal cause of cocaine-induced impairment of reversal learning (Takahashi et al. 2008; Stalnaker et al. 2009).

In summary, reversal learning tasks such as the one described here offer a clear approach to understanding the circuitry mediating addiction-induced deficits in inhibitory control. Moreover, pairing behavioral and neural data with computational neuroscience may provide a powerful pipeline for generating novel theoretical approaches to the treatment of drug addiction, particularly with regard to withdrawal and relapse (Takahashi et al. 2008; Mainen et al. 2016; International Brain Laboratory 2017; Paninski and Cunningham 2017).

Delay discounting

Addiction-related deficits in delay discounting behavior in humans

Delay discounting tests the degree to which the value of a reward diminishes with the delay to its delivery. These decision tasks typically require the subject to choose between a smaller, more immediate reward and a larger, more delayed reward. The degree to which a subject discounts the larger reward, indicated either by a calculated “indifference point” between the two choices or by directly comparing how often each option is selected (Odum 2011), can indicate choice impulsivity and how well the subject is able to delay gratification.
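One simple way to derive an indifference point from choice data is to find where the proportion of larger-later choices crosses 50% as the delay grows. The sketch below does this by linear interpolation between the two tested delays that bracket the crossing; it assumes choice proportions decline with delay, and the function name and example data are hypothetical.

```python
def indifference_delay(delays, p_larger):
    """Delay at which P(choose larger-later) crosses 0.5, by linear
    interpolation, or None if no crossing occurs in the tested range.
    Hypothetical helper; assumes p_larger falls as delay increases."""
    points = sorted(zip(delays, p_larger))
    for (d0, p0), (d1, p1) in zip(points, points[1:]):
        if p0 >= 0.5 >= p1 and p0 != p1:
            # Interpolate linearly between the two bracketing delays.
            return d0 + (p0 - 0.5) * (d1 - d0) / (p0 - p1)
    return None


# Hypothetical choice proportions at four delays (days).
print(indifference_delay([0, 7, 14, 30], [0.95, 0.7, 0.4, 0.1]))  # ~11.67
```

A subject who discounts more steeply reaches indifference at a shorter delay, so lower values of this quantity correspond to greater choice impulsivity.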

Given the importance of impulsivity in the study of addiction, delay discounting tasks have been used extensively to examine the impact of different drugs of abuse on choice behavior. Human studies have shown that nicotine, alcohol, heroin, and cocaine addiction, and even gambling addiction, all alter delay discounting performance, with addicted individuals selecting the smaller, immediate reward more often than matched controls (Madden et al. 1997; Vuchinich and Simpson 1998; Mitchell 1999; Petry 2001; Coffey et al. 2003; Yi et al. 2010). The rewards in these studies typically take the form of hypothetical monetary rewards with different delivery times (e.g., subjects choose between $10 now or $20 in 1 wk), but in some cases hypothetical drug rewards have been used instead. Interestingly, hypothetical drug rewards are often discounted very steeply, even compared with equivalent hypothetical monetary rewards (Petry 2001; Johnson et al. 2015).
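The $10-now versus $20-in-1-wk example can be made concrete with the widely used hyperbolic discounting model, V = A / (1 + kD), where A is the reward amount, D the delay, and k a discount rate. The sketch below treats this model as an assumption (the studies above may fit different functions), and the two k values are hypothetical, chosen only to contrast a shallow and a steep discounter.

```python
def discounted_value(amount, delay, k):
    """Hyperbolic subjective value: amount / (1 + k * delay), delay in days."""
    return amount / (1.0 + k * delay)


def choose(small_now, large_later, delay, k):
    """Pick the option with the higher subjective value (ties go to 'now')."""
    if discounted_value(large_later, delay, k) > small_now:
        return "larger-later"
    return "smaller-sooner"


# $10 now versus $20 in 7 days, for two hypothetical discount rates.
print(choose(10, 20, 7, k=0.05))  # shallow discounter: larger-later
print(choose(10, 20, 7, k=0.5))   # steep discounter: smaller-sooner
# Indifference occurs where 20 / (1 + 7k) = 10, that is, k = 1/7.
```

Under this model, "more steeply discounted" drug rewards correspond to a larger fitted k for drug than for money.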

Greater discounting in drug users can also be seen when they choose outcomes that pose a potential risk to their health. Smokers, for example, discount future health consequences when considering their continued nicotine use (Odum et al. 2002). Similarly, heroin users with higher rates of delay discounting are more likely to share needles rather than wait for new ones if sharing means faster access to the drug (Odum et al. 2000). These and other studies provide considerable evidence of a correlation between greater impulsivity and drug addiction. Relatedly, higher delay discounting among addicts may also predict relapse or poorer outcomes in addiction treatment, as seen in both nicotine and cocaine users. Women who had stopped smoking during pregnancy were more likely to relapse if they displayed higher rates of delay discounting, and several studies have found that higher impulsivity in cocaine users is inversely related to abstinence success (Washio et al. 2011; Verdejo-Garcia et al. 2014).

Functional imaging correlates of impaired delay discounting performance

Imaging studies have isolated distinct regions that are activated during delay discounting, although there is some debate about how exactly these regions contribute to choice behavior. Much work has supported the possibility of opposing systems involved in both normal and impaired decision-making: an “executive system” that is active in favor of future gains, and an opposing “impulsive system” that reacts more strongly to immediate reward (Jentsch and Taylor 1999; Bechara 2005; Bickel et al. 2007, 2011; Kahneman 2011). Several fMRI studies have found greater activation in limbic and paralimbic regions, particularly the ventral striatum, medial orbitofrontal cortex, medial prefrontal cortex, and posterior cingulate cortex, during selection of immediate rewards (McClure et al. 2004a; Kable and Glimcher 2007; Bickel et al. 2009). The proposed executive system, on the other hand, may involve activity in the dorsolateral PFC, ventrolateral PFC, and lateral OFC when one selects the delayed but higher-valued reward (McClure et al. 2004b, 2007). Gray matter volume studies and transcranial magnetic stimulation (TMS) studies have also associated greater volume or activation of these regions with more optimal decision-making (Bjork et al. 2009; Cho et al. 2010), while the inverse appears to be related to enhanced impulsivity and more frequent selection of immediate reward (Lyoo et al. 2006). As compelling as this theory is, several fMRI studies have been unable to replicate these results during delay discounting in either abstinent (Kable and Glimcher 2010) or methamphetamine-dependent individuals (Monterosso et al. 2007). One TMS study even found opposing effects following stimulation of regions making up the proposed executive system (Figner et al. 2010). One possible explanation for these discrepancies is an overgeneralization of the functions attributed to the implicated brain regions. Knutson and colleagues, for example, suggest that lateral prefrontal regions may actually be associated with reward delay, while limbic areas represent the absolute value of a reward regardless of the subject's choice (Knutson et al. 2005; Ballard and Knutson 2009). Additional research will be needed to refine this proposal in the context of the broader delay discounting literature. Taken together, these findings suggest that distinct regions contribute to complex decision-making, although further work in both control and substance-abusing populations is needed to delineate them.
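The opposing-systems idea is often formalized with the quasi-hyperbolic (beta-delta) model, in which a factor beta penalizes any delay at all, loosely standing in for the "impulsive" system, while delta discounts exponentially per unit of delay, loosely standing in for the "executive" system. The sketch below is an illustration of that model under hypothetical parameter values; it is not a fit to any dataset discussed here.

```python
def beta_delta_value(amount, delay, beta, delta):
    """Quasi-hyperbolic subjective value; delay in arbitrary units (e.g., days).

    beta < 1 penalizes any delayed reward (stand-in for the 'impulsive' system);
    delta < 1 discounts exponentially per unit delay (stand-in for the
    'executive' system). Parameter values used below are hypothetical.
    """
    if delay == 0:
        return float(amount)  # immediate rewards escape the beta penalty
    return beta * (delta ** delay) * amount


# Same delta, different beta: only the weight on "now versus later" changes.
patient = beta_delta_value(20, 7, beta=0.9, delta=0.99)
impulsive = beta_delta_value(20, 7, beta=0.4, delta=0.99)
print(patient > 10, impulsive > 10)  # True False
```

Separating the two parameters is what allows, in principle, an immediate-reward bias (beta) to be distinguished from a general sensitivity to delay length (delta).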

Evidence from animal models on the negative impact of chronic drug use and withdrawal on delay discounting behavior

The abstinence studies mentioned above and studies of higher self-reported impulsivity among drug users (Patton et al. 1995; Hulka et al. 2015) have been useful in establishing a correlation between impulsivity and drug abuse. Animal studies, in turn, have extended these findings, suggesting that this relationship may be bidirectional and may contribute to the cyclical nature of addiction. Indeed, rats classified as highly impulsive in a delay discounting task tend to acquire cocaine-seeking behavior faster and extinguish it more slowly than rats classified as low-impulsive (Perry et al. 2005; Broos et al. 2012). Conversely, chronic exposure to cocaine can produce lasting changes in impulsive choice during delay discounting tasks, regardless of whether cocaine is administered by the experimenter (Roesch et al. 2007a; Simon et al. 2007) or self-administered (Mendez et al. 2010). At first, these findings may seem at odds with the simple idea that cocaine-treated animals show general perseveration or an inability to inhibit or switch actions. However, it is important to note that in the discounting paradigms referenced above, the intertrial interval was adjusted from trial to trial to normalize trial length, so that responding for the more immediate reward was not advantageous to the rat in the long run. Further, although cocaine-exposed rats were more sensitive to delays to reward, we have found in our own work that they did not switch faster after reversals, and in some cases were slower to switch when delays were lengthened (Burton et al. 2017, 2018). Only at the end of trial blocks did rats that had self-administered cocaine choose the more immediate reward significantly more often (Burton et al. 2017, 2018).
It is also worth noting that this bias toward more immediate rewards was not ultimately adaptive, as it generalized to forced-choice trials as well, resulting in a lower percentage of correct trials (Burton et al. 2017, 2018).
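The point about normalized trial length can be checked with simple arithmetic: if the intertrial interval (ITI) is shortened or lengthened so that delay plus ITI is constant, both wells deliver the same reward per unit time, so choosing the short-delay well earns nothing extra. In the sketch below the 10-sec trial length and the 3-sec fixed ITI are hypothetical round numbers, not the parameters of the cited studies; only the 500-msec and 7000-msec delays come from the text.

```python
TRIAL_LENGTH_MS = 10_000  # hypothetical fixed total trial duration


def reward_rate(boluses, delay_ms, iti_ms):
    """Boluses earned per second of total trial time (delay + ITI)."""
    return boluses / ((delay_ms + iti_ms) / 1000.0)


def normalized_iti(delay_ms):
    """ITI that makes delay + ITI equal TRIAL_LENGTH_MS on every trial."""
    return TRIAL_LENGTH_MS - delay_ms


short_delay, long_delay = 500, 7000
# Fixed (hypothetical) 3-sec ITI: the short-delay well pays more per second.
fixed = [reward_rate(1, d, 3000) for d in (short_delay, long_delay)]
# Normalized ITI: both wells pay the same per second.
normed = [reward_rate(1, d, normalized_iti(d)) for d in (short_delay, long_delay)]
print(fixed)   # first value ~0.286, second 0.1
print(normed)  # both 0.1
```

With trial length equated, a persistent preference for the short-delay well reflects the delay itself being aversive rather than any gain in overall reward rate.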

In addition to replicating the dysfunction observed after chronic drug use in humans, animal self-administration studies have been valuable in allowing researchers to examine how varying degrees of drug consumption relate to impulsivity. For example, one study divided rats into “high cocaine intake” and “low cocaine intake” groups based on mean cumulative intake during self-administration. Only the high-intake animals showed changes in impulsivity, whereas low-intake animals resembled controls on the delay discounting task (Mitchell et al. 2014). These results suggest that drug exposure impairs delay discounting performance in both humans and animals in an intake-dependent manner.

Single neuron activity related to delay discounting that is impacted by drugs of abuse

Understanding how addiction alters the neural correlates of delay discounting behavior is critical to our knowledge of the underlying mechanism(s) of addiction and, more specifically, of the brain's regulation of impulsive behavior. Although many studies have examined the neural correlates of discounting (Kalenscher et al. 2005; Fiorillo et al. 2008; Kim et al. 2008; Cai et al. 2011; Hosokawa et al. 2013), few have studied discounting in the context of prior drug exposure. Further, most tasks do not clearly dissociate neural correlates related to the delay and the size of a future reward, two critical components of delay discounting that might be encoded separately in the brain and affected differently by chronic drug use. To address this, we designed a version of the delay discounting task in which reward size and delay are manipulated independently, and we have used it to ask how neural correlates of these value parameters are affected by prior cocaine exposure. Combined with single-unit recordings, this size-delay task has allowed us to begin characterizing activity in brain regions implicated in decision-making that are impaired after chronic drug use and withdrawal (see Fig. 3) (for comprehensive reviews, please see Roesch and Bryden 2011; Bissonette and Roesch 2015). Below, we describe the results of several studies from our laboratory that focused on the same four regions shown to be disrupted during reversal learning as described above: OFC, ABL, ventral striatum (VS), and dorsal striatum (DS). In addition, we describe results from dopamine (DA) neurons in VTA, as the role of DA and the VTA in learning has been well studied and is thought to be readily altered by chronic drug use.

Figure 3.

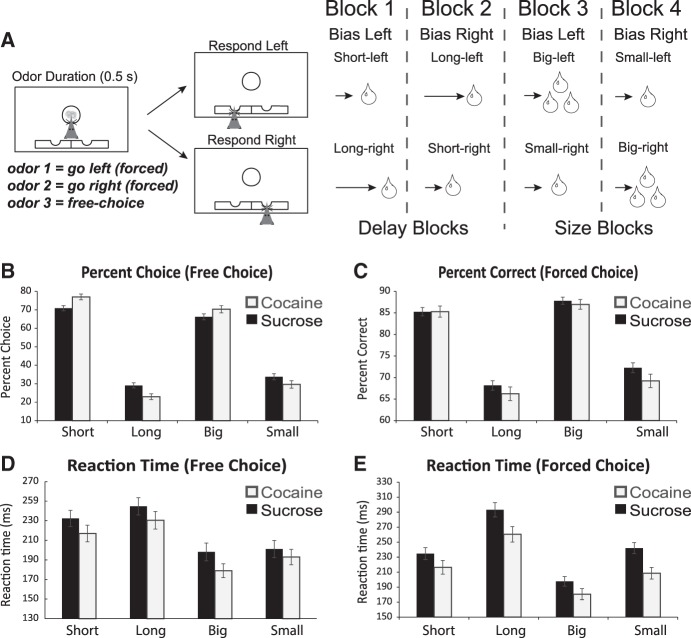

Previous cocaine self-administration makes rats hypersensitive to delays to reward during discounting. Illustration of our reward-guided decision-making task, which manipulates delay to and size of reward across trial blocks. (A) Task schematic, showing the sequence of events in one trial (left panels) and the sequence of blocks in a session (right). Rats were required to nose-poke in the odor port for 0.5 sec before the odor turned on for 0.5 sec, instructing them to respond at one of the adjacent fluid wells below, where they would receive liquid sucrose reward after 500–7000 msec. For each recording session, one fluid well was arbitrarily designated as short (a 500-msec delay before reward) and the other as long (a relatively long 1- to 7-sec delay before reward) (Block 1). After the first block of trials (∼60 trials), contingencies unexpectedly reversed (Block 2). With the transition to Block 3, the delays to reward were held constant across wells (500 msec), but the size of the reward was manipulated. The well designated as long during the previous block now offered an additional fluid bolus (i.e., large reward), whereas the opposite well offered 1 bolus (i.e., small reward). The reward contingencies reversed again in Block 4. In three different publications, we have found that 12 d of cocaine self-administration ∼1 mo before task performance increases rats' sensitivity to delayed rewards. That is, rats exposed to cocaine choose the short-delay option more often than control rats. We also observed that cocaine-exposed rats have a stronger preference for larger reward, are worse on forced-choice trials, and respond faster (reaction time) than controls that had self-administered sucrose. Data shown are the average across two different studies in which we recorded from NAc or DLS during task performance in rats that had self-administered either cocaine or sucrose.
(B) Percent choice on free-choice trials in each value manipulation (controls, black bars; cocaine, gray bars). (C) Percent correct on forced-choice trials in the same manner as B and D. (D) Reaction time (odor port exit minus odor offset) on all free-choice trials for each value manipulation. (E) Reaction time (odor port exit minus odor offset) on forced-choice trials in the same manner as B and C. Error bars indicate SEM. Asterisks (*) indicate significance (P < 0.05) in multifactor ANOVA and/or post hoc t-tests. For comprehensive reviews of the neural correlates of performance on this task, please see Roesch and Bryden 2011; Bissonette and Roesch 2015; Burton et al. 2015. Figure modified from Burton et al. (2017, 2018).

During each session of our delay discounting task, rats perform four blocks of ∼60 correct trials each, in which either the delay to reward delivery or the size of a liquid sucrose reward is independently manipulated. On each trial, rats first nose-poke into a central port and remain there until a directional odor cue is delivered. Each trial presents one of three possible odor cues, instructing the animal either to go to the left or right fluid well to receive reward (forced-choice) or to choose freely between the two fluid wells (free-choice). In the first block of trials, one well is randomly designated to deliver reward after a short delay (500 msec), while the other well delivers reward after gradually increasing delays (1000–7000 msec). Upon completion of the first block of ∼60 trials, the delay-well contingencies are switched, and rats learn that the previously higher-valued well (short delay) now carries the longer delay to reward (long delay). During the third block of trials, delays at both wells are held constant at 500 msec, and reward value is manipulated by having one well deliver a larger reward than the other. In the fourth block, the size-direction contingencies are reversed one last time.
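The block structure described above can be summarized as a small data structure. In the sketch below, the block length (∼60 trials), the 500-msec short delay, the 1000 to 7000 msec long-delay range, the one- versus two-bolus size manipulation, and the rule that the well that was long in Block 2 becomes the large well in Block 3 all follow the text and the Fig. 3 caption. The rule for growing the long delay (+1000 msec each time the long well is chosen) and all names are hypothetical, since the text says only that long delays increase gradually.

```python
from dataclasses import dataclass

SHORT_DELAY_MS = 500
LONG_DELAY_START_MS = 1000
LONG_DELAY_MAX_MS = 7000
LONG_DELAY_STEP_MS = 1000  # hypothetical increment; text says only "gradually"


@dataclass
class Block:
    manipulation: str         # "delay" or "size"
    delay_ms: dict            # well -> delay to reward (long well: starting delay)
    boluses: dict             # well -> reward size in boluses
    correct_trials: int = 60  # approximate block length from the text


def session_blocks(first_short_well="left"):
    """Return the four blocks of one session (hypothetical helper)."""
    a = first_short_well
    b = "right" if a == "left" else "left"
    return [
        # Block 1: well a is short, well b is long.
        Block("delay", {a: SHORT_DELAY_MS, b: LONG_DELAY_START_MS}, {a: 1, b: 1}),
        # Block 2: delay contingencies reverse, so well a is now long.
        Block("delay", {a: LONG_DELAY_START_MS, b: SHORT_DELAY_MS}, {a: 1, b: 1}),
        # Block 3: equal short delays; well a (long in Block 2) gives 2 boluses.
        Block("size", {a: SHORT_DELAY_MS, b: SHORT_DELAY_MS}, {a: 2, b: 1}),
        # Block 4: size contingencies reverse.
        Block("size", {a: SHORT_DELAY_MS, b: SHORT_DELAY_MS}, {a: 1, b: 2}),
    ]


def next_long_delay(current_ms, chose_long_well):
    """Hypothetical rule: lengthen the long delay after each long-well choice."""
    if chose_long_well:
        return min(current_ms + LONG_DELAY_STEP_MS, LONG_DELAY_MAX_MS)
    return current_ms


for i, block in enumerate(session_blocks("left"), start=1):
    print(i, block.manipulation, block.delay_ms, block.boluses)
```

Keeping delay and size in separate blocks is what lets neural activity be related to each value parameter independently.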

During performance of this task, two systems signal block transitions when unexpected rewards are delivered or omitted: DA neurons in VTA and ABL neurons. DA and ABL neurons carry signals tracking signed and unsigned prediction errors, respectively (Roesch et al. 2010a). Cue-responsive DA neuron activity reflects reward prediction errors, increasing in response to cues and rewards that are better than expected (i.e., more immediate and larger rewards) and decreasing when outcomes are worse than expected (i.e., when a reward is delayed or smaller than expected; Roesch et al. 2007a). ABL neurons, on the other hand, increase firing to both unexpectedly early delivery of reward and larger rewards (Roesch et al. 2010b). Considering the critical roles these systems play in discounting behavior (i.e., pharmacological manipulation impairs discounting) and reports of disrupted signals in other tasks (Saddoris et al. 2005, 2016, 2017; Stalnaker et al. 2007b; Roesch et al. 2010b; Saddoris and Carelli 2014), these regions are likely targets of drugs of abuse.
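The difference between signed and unsigned prediction errors can be shown with a single subtraction. The sketch below assumes the simplest expected-value scheme (prediction error = received minus expected, in arbitrary units); the signed quantity is the one the text associates with DA firing and its absolute value is the unsigned quantity associated with ABL. The function name and event values are hypothetical.

```python
def prediction_errors(expected, received):
    """Return (signed, unsigned) prediction errors for one outcome.

    Assumes a simple expected-value scheme in arbitrary units.
    """
    signed = received - expected
    return signed, abs(signed)


# Hypothetical block transitions, expressed as reward value in arbitrary units.
events = {
    "larger reward than expected": (1.0, 2.0),
    "smaller reward than expected": (2.0, 1.0),
}
for label, (expected, received) in events.items():
    signed, unsigned = prediction_errors(expected, received)
    print(f"{label}: signed={signed:+.1f}, unsigned={unsigned:.1f}")
```

A signed signal distinguishes better-than-expected from worse-than-expected outcomes, whereas an unsigned signal registers only that something unexpected happened, which is why the two are thought to play different roles in learning.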

For DA and ABL neurons to compute prediction error signals, they must receive information about predicted rewards. As mentioned above, two brain areas thought to be critical in reinforcement learning circuitry are OFC and NAc, both of which contribute to the function ascribed to the “Critic” in the “Actor-Critic” model (Barto 1995; Houk and Wise 1995; Haber et al. 2000; Joel et al. 2002; O'Doherty et al. 2004; Redish 2004; Ikemoto 2007; Niv and Schoenbaum 2008; Takahashi et al. 2008; Padoa-Schioppa 2011; van der Meer and Redish 2011). In this model, the “Actor” chooses actions according to a behavioral policy, and the “Critic” provides feedback that updates the Actor, allowing actions to be reevaluated in a way that should ultimately improve performance. Under this model, if the actual outcome deviates from the expected outcome (a prediction error), action policies and future reward predictions are updated so that subsequent behavior approaches optimal. In the brain, connections of OFC and NAc with midbrain DA neurons are thought to contribute to the Critic, and sensorimotor regions of dorsolateral striatum (DLS) are thought to constitute the Actor. Consistent with these proposed roles, we have shown that activity in DLS represents action policies, that NAc and OFC represent expected outcomes, and that activity in midbrain DA neurons is modulated by errors in reward prediction (Roesch et al. 2006; Roesch et al. 2007b; Roesch et al. 2009; Takahashi et al. 2009; Goldstein et al. 2011; Roesch et al. 2012; Bissonette et al. 2013; Bissonette and Roesch 2015; Burton et al. 2015). Further, we have shown that DA reward prediction error signals depend on NAc and OFC (i.e., lesions disrupt the computation of prediction errors by DA neurons) and that NAc lesions impact encoding in DLS.
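
The Actor-Critic division of labor can be illustrated with a minimal tabular sketch. It assumes a single trial state, two actions (left and right wells), deterministic hypothetical rewards, and a softmax policy; none of these parameters come from the studies above, and the code is a generic textbook-style actor-critic, not a model fit to the recorded data.

```python
import math
import random


def softmax(prefs: dict[str, float]) -> dict[str, float]:
    """Convert action preferences into choice probabilities."""
    m = max(prefs.values())  # subtract the max for numerical stability
    exps = {a: math.exp(p - m) for a, p in prefs.items()}
    z = sum(exps.values())
    return {a: e / z for a, e in exps.items()}


def run_actor_critic(rewards: dict[str, float], n_trials: int = 500,
                     alpha_critic: float = 0.1, alpha_actor: float = 0.1,
                     seed: int = 0) -> tuple[float, dict[str, float]]:
    """Train a one-state actor-critic; return (critic value, final policy)."""
    rng = random.Random(seed)
    value = 0.0                             # Critic: expected reward for the trial
    prefs = {a: 0.0 for a in rewards}       # Actor: action preferences (the policy)
    for _ in range(n_trials):
        policy = softmax(prefs)
        actions = list(policy)
        action = rng.choices(actions, weights=[policy[a] for a in actions])[0]
        delta = rewards[action] - value     # prediction error (trial ends, no next state)
        value += alpha_critic * delta       # Critic updates its prediction
        prefs[action] += alpha_actor * delta  # the Critic's error signal updates the Actor
    return value, softmax(prefs)
```

Calling `run_actor_critic({"left": 1.0, "right": 0.2})` should yield a policy that strongly favors the left well, because choosing left produces positive prediction errors while choosing right produces negative ones once the Critic's expectation exceeds 0.2.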

A slightly less computational view of the circuit is that OFC and NAc guide behavior based on expectations about future outcomes, while DLS guides behavior based on learned associations that drive more automatic decisions, with less regard for the value of the predicted outcome. When outcomes are not quite as expected, prediction errors are generated and predictions are updated in OFC and NAc, which, via spiraling connectivity with DA neurons, alter DLS encoding, leading to more automatic behaviors or action policies. It is important to note that, although the Critic can update action policies via the Actor, it also has strong connections to motor regions (i.e., the limbic–motor interface); thus, most decisions are likely guided by a mixture of the two systems.

With a basic understanding of the model and the circuits and signals involved, we have begun to determine how these neural signals might be disrupted after chronic cocaine use, and how such changes in signaling might in turn disrupt decision-making and impulsive choice. To accomplish this goal, we recorded single neuron activity from NAc and DLS ∼1 mo after 12 d of cocaine self-administration. In the NAc of cocaine-exposed rats, we found a significant reduction in the number of neurons that were responsive to odor cues and rewards. In addition, directional selectivity and value encoding were diminished in the neurons that maintained firing at the time of reward. Lastly, we have shown that cocaine self-administration reduces the ability of NAc to maintain expectations over longer delays. Thus, after cocaine exposure, neurons in NAc that typically encode the value and direction of expected outcomes do so at a reduced capacity. Given the circuit delineated above, these changes should disrupt the processing of cues, rewards, and associated actions in downstream areas. Indeed, after cocaine self-administration, DLS correlates related to response-outcome (R-O) encoding were enhanced and divorced from actions (Burton et al. 2017). That is, after cocaine exposure, correlates in DLS better reflected the contingencies available during decision-making (action-outcome encoding) than a representation of what would ultimately be selected (chosen-outcome encoding). Notably, these correlates were amplified after chronic cocaine self-administration at the expense of correlates signaling the outcome predicted by the actual decision, ultimately resulting in failed modifications of action selection (Burton et al. 2018). Further, increases in neural activity were observed prior to odor onset, suggesting that they represent the animals’ biases even before the rat was fully informed of reward availability (Burton et al. 2017).
This loss of direction and value processing in DLS may result from the impaired encoding in NAc described above, and would correspond to the faster and more frequent responding for more immediate reward seen in the behavioral task.
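
The text does not commit to a particular discounting function, but the idea of "steeper discounting" can be illustrated with the commonly used hyperbolic form V = A / (1 + kD), where a larger k means value falls off faster with delay D. In this sketch, k values are hypothetical, and the ratio rule used as a stand-in for choice preference is an illustrative assumption rather than the analysis used in the studies described above.

```python
def hyperbolic_value(amount: float, delay_s: float, k: float) -> float:
    """Subjective value of a reward delivered after delay_s seconds: A / (1 + k*D)."""
    if delay_s < 0 or k < 0:
        raise ValueError("delay and k must be non-negative")
    return amount / (1.0 + k * delay_s)


def share_for_short(short_s: float, long_s: float, k: float, amount: float = 1.0) -> float:
    """Relative value of the short-delay well, V_short / (V_short + V_long).

    A simple ratio rule, used here only as an illustrative proxy for preference.
    """
    v_short = hyperbolic_value(amount, short_s, k)
    v_long = hyperbolic_value(amount, long_s, k)
    return v_short / (v_short + v_long)
```

Comparing `share_for_short(0.5, 7.0, k)` for a small and a large k (using the 0.5 sec and 7 sec delays from the task) shows that a steeper discount rate shifts relative value toward the immediate well, which is one way to describe the increased delay sensitivity seen after cocaine exposure.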

In conclusion, cocaine self-administration alters both value and directional response encoding in the striatal circuit. In the NAc of cocaine-exposed rats, fewer neurons accurately represented the value of expected outcomes, and encoding of the response necessary to obtain those outcomes was attenuated. Of those neurons that did encode reward value and response direction, representations of rewards after longer delays were steeply discounted. We predict that this loss of information leads to altered encoding in DLS, such that neurons fail to represent the selected behavior and its associated expected outcome. Instead, after cocaine self-administration, DLS signals overrepresented the location of expected rewards in a given block context, biasing behavior toward the higher valued reward irrespective of reward availability and resulting in inflexible decision-making. Although we describe our results in terms of “S-R/R-O” and “action-outcome/chosen-outcome” encoding, which accurately describes their relationships to task contingencies, we cannot unequivocally link them to goal-directed and habit-driven behaviors because we did not incorporate contingency degradation or devaluation (Balleine and O'Doherty 2010), as others have done (Gremel and Costa 2013). That said, other work has suggested that in choice paradigms with multiple action-outcome contingencies (as in our task), behaviors remain goal-directed even after extensive training (Colwill and Rescorla 1985; Dickinson et al. 2000; Colwill and Triola 2002; Holland 2004; Kosaki and Dickinson 2010), and that exposure to drugs of abuse can leave these goal-directed mechanisms intact and, in some cases, enhanced (Phillips and Vugler 2011; Son et al. 2011; Halbout et al. 2016).
Together, these results suggest that drugs of abuse alter the cortico-striatal circuit in ways that go beyond classic models of addiction, and that an expedited transition from goal-directed to habit-driven behavior might not adequately explain drug-induced alterations in brain and behavior in animals performing more complicated decision-making paradigms.

Stop-signal

Evidence for impaired response inhibition among drug users

In humans, differing effects of drug intoxication on stopping impulsivity have been observed in users of alcohol (De Wit et al. 2000; Kamarajan et al. 2005; Noël et al. 2007), cocaine (Fillmore and Rush 2002; Kaufman et al. 2003; Hester and Garavan 2004; Fillmore et al. 2005b; Kelley et al. 2005; Li et al. 2008), methamphetamine (Fillmore et al. 2003, 2005a; Monterosso et al. 2005), and nicotine (Harrison et al. 2009; Wignall and de Wit 2011; Ashare and Hawk 2012). These differences largely reflect the biochemical nature of the drug of interest. Administration of stimulants such as cocaine (Fillmore et al. 2005b), methamphetamine (De Wit et al. 2000; Fillmore et al. 2005b), and nicotine (Wignall and de Wit 2011) just prior to testing generally improves impulsivity measures, consistent with the effects of stimulants on top-down systems; that is, subjects actually show greater impulse control immediately following drug intoxication. However, there is evidence that, at least for cocaine (Fillmore and Rush 2002; Fillmore et al. 2005b) and methamphetamine (De Wit et al. 2000; Fillmore et al. 2003, 2005a), these improvements may be dose dependent, with lower doses impairing impulsivity measures and higher doses facilitating performance. Although dose-response curves mapping impairment of STOP trial performance as a function of drug concentration are lacking, it has been suggested that the effect of stimulants on behavior may be modeled as an inverted U-shaped curve (Fillmore et al. 2005b). Not surprisingly, alcohol intoxication impairs performance on STOP tasks (De Wit et al. 2000; Kamarajan et al. 2005; Noël et al. 2007), in line with the effects of depressants on brain function and top-down control of behavior.

In contrast to the ambiguous effects of drug intoxication on task performance, the consequences of withdrawal from drugs of abuse on measures of response inhibition are clearer. Patients experiencing withdrawal from cocaine (Kaufman et al. 2003; Hester and Garavan 2004; Kelley et al. 2005), methamphetamine (Monterosso et al. 2005), and nicotine (Harrison et al. 2009; Ashare and Hawk 2012) generally exhibit significant impairments on stop-signal task measures. It is during this time of impaired top-down control and decreased physical health that the body struggles to reach a euthymic state, at which point increased feelings of craving and drug consumption are often reported (Koob and Le Moal 1997; Everitt and Robbins 2005, 2016; Koob 2013). Withdrawal represents a unique point of intervention, but long-term treatment requires a more nuanced understanding of the neural correlates that support these deficits in top-down control.

Neural correlates of deficits in stop-signal performance

Functional magnetic resonance imaging (fMRI) studies have been useful in mapping the neural basis of inhibitory behavioral deficits, although to date relatively few studies have examined these deficits in the context of stop tasks. Impaired performance on STOP/NoGo trials has been correlated with hypoactivity in ACC in studies comparing cocaine users (∼12 h to 2 wk since last use) with healthy controls (Kaufman et al. 2003; Hester and Garavan 2004; Li et al. 2008; Morein-Zamir et al. 2013). Deficits in ACC function are commonly reported in conditions where response inhibition on a stop task is impaired, such as ADHD and OCD (Dalley et al. 2011; Dalley and Robbins 2017). Hypoactivity following cocaine withdrawal has also been reported in other higher-order cognitive areas, such as the presupplementary motor area (pre-sMA) and the insula (Kaufman et al. 2003; Hester and Garavan 2004; Morein-Zamir and Robbins 2015). However, unlike in the ACC, these effects may be less robust and in some cases fail to remain significant when controlling for attentional monitoring, post-error behavioral adjustment, and task-related frustration (Li et al. 2008). ACC hypoactivity and behavioral deficits on STOP trials similar to those seen in cocaine users have also been reported in methamphetamine users (Morein-Zamir et al. 2013), although, to our knowledge, no fMRI studies have examined the effects of nicotine withdrawal on STOP task performance.

Individual variability in addiction and stop-signal performance

Reports of hypoactivity in frontal regions supporting top-down control are relatively common in substance abuse disorders, as well as in patients with attention-deficit hyperactivity disorder (ADHD), for which deficits in impulse control are one of the more prominent symptoms (Milberger et al. 1996, 1998; Banerjee et al. 2007). However, it is unclear whether hypoactivity in these regions is brought on by the use of illicit substances or whether biological vulnerabilities and individual variation play a role. Research into individual differences may provide useful clues.

Individual susceptibility to addiction likely plays a key role in modulating changes in brain activation. A study examining stimulant users (cocaine and methamphetamine), their nondrug-using siblings, and healthy controls confirmed hypoactivity in prefrontal regions and impaired STOP task performance associated with stimulant use (Morein-Zamir et al. 2013). In contrast to stimulant users, the siblings of stimulant users exhibited hyperactivation of these regions relative to controls (Morein-Zamir et al. 2013). The authors suggest that this hyperactivation may reflect a compensatory mechanism(s) that could be protective, or a coping strategy that confers resilience to the deficits in top-down control seen in their stimulant-using siblings (Morein-Zamir et al. 2013).

While these results may speak against endophenotype hypotheses about drug susceptibility based on brain activity levels, they do highlight the complexity of individual differences in neural functioning. Understanding these individual variations in susceptibility to addiction and, more generally, to drug use is of critical importance to the development of a more dimensional approach to the treatment of these disorders. Further, they highlight the need for animal models that can better control for genetic factors and drug history.

Exploring single neuron activity using stop tasks

One major advantage of stop-signal tasks is that they can be easily adapted for testing animal models, which makes it possible to study their neural correlates at the level of single units (Eagle and Baunez 2010; Dalley and Robbins 2017). Use of the stop-signal task has shown that increased striatal input creates a point of no return that requires stop-cue information to be transmitted from the subthalamic nucleus (STN) to the substantia nigra pars reticulata (SNr) in order to cancel the prepotent response (Freeze et al. 2013; Schmidt et al. 2013). This is consistent with single unit recordings showing that dorsal medial striatum (DMS) mediates directional selectivity during stop task performance (Bryden et al. 2012). Moreover, recent evidence suggests that arkypallidal cells in the globus pallidus fire rapidly and robustly to stop signals and provide the cellular substrate for relaying the stop signal to the subthalamic-nigral pathway (Mallet et al. 2016). Examination of executive systems involved in the top-down control of response inhibition has shown that putative noncholinergic neurons in the basal forebrain are completely inhibited by stop-cues, and that brief electrical microstimulation of the basal forebrain is sufficient to induce abrupt stopping behavior in rodents (Mayse et al. 2015). Collectively, these results suggest that behavioral inhibition is regulated by multiple brain regions, and they highlight the power of adapting stop-signal tasks for use in rodent models.

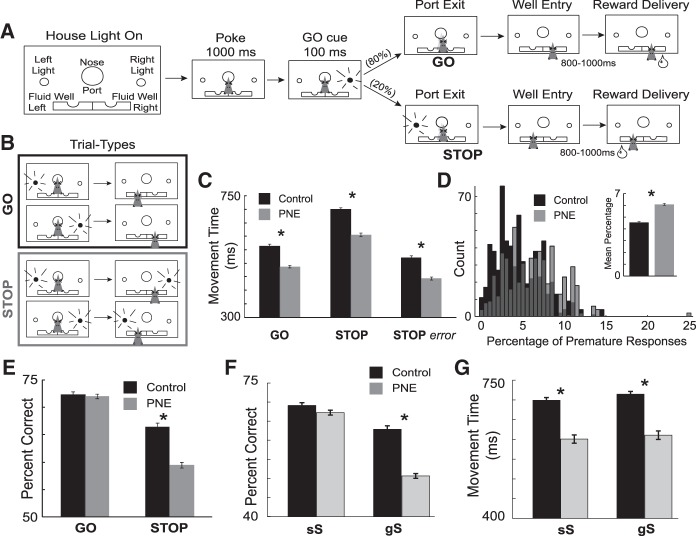

One major hurdle in adapting traditional stop-signal tasks for animal studies using single unit recordings is that on correct STOP trials (i.e., trials where the animal correctly refrains from making a response), it is difficult to ascertain the behavioral state and location of the animal because the rat is not required to perform a measurable action. To address this problem, we modified the traditional stop-signal task to require the animal not only to refrain from responding when presented with a STOP cue, but also to redirect its behavior to the opposite well (Fig. 4). This stop-change task eliminates the ambiguity associated with correct STOP trials and ensures that we are measuring neural activity while the animal is actively displaying response inhibition. Importantly, our task captures the hallmark features of response conflict tasks described in other species: high conflict on STOP trials following GO trials, reduced conflict after errors and STOP trials (conflict adaptation), modulation of behavior by stop-signal delay (SSD), and a speed-accuracy tradeoff (Fig. 4).

Figure 4.

Prenatal nicotine exposure (PNE) makes rats more impulsive during performance of a stop-change task. (A) Illustration of our stop-change task. Rats were required to nose poke and remain in the port for 1000 msec before one of the two directional lights (left or right) was illuminated for 100 msec. The cue-light indicated the response direction in which the animal could retrieve fluid reward (GO trials). On 20% of trials, after port exit, the light opposite the first was illuminated to instruct the rat to inhibit the current action and redirect behavior to the corresponding well under the second light (STOP trials). (B) Schematic representation of GO and STOP trial types. (C) Session-averaged movement times (msec). Error bars indicate standard error of the mean. Asterisks indicate group comparisons (Wilcoxon; P < 0.05). (D) Histogram of the proportion of premature responses (withdrawal from the nose-poke prior to GO light offset) for control and PNE animals per session. Inset shows the average proportion of premature responses for control and PNE rats. (E) Percent of correct responses by trial type and condition. As before, error bars indicate standard error of the mean. Asterisks indicate group comparisons (Wilcoxon; P < 0.05). (F) Percent of correct responses per session for sS (STOP trial preceded by STOP trial; low response conflict) and gS (STOP trial preceded by GO trial; high response conflict) trials. Asterisks indicate significant mean differences (Wilcoxon; P < 0.05). (G) Movement times, calculated as the latency from port exit to well entry. Asterisks indicate significant mean differences (Wilcoxon; P < 0.05). Figure modified from Bryden et al. (2016).

In rats, we have already used the stop-change task to characterize single unit activity in several brain regions thought to be important for modulating response inhibition (Bryden et al. 2012, 2016, 2018; Bryden and Roesch 2015; Tennyson et al. 2018). In our task, rats are required to nose poke and remain in the port for 1000 msec before one of two directional lights (left or right) is illuminated for 100 msec (Fig. 4). The cue-light indicates the response direction in which the animal can retrieve fluid reward (GO trials). On 20% of trials, after port exit, the light opposite the first illuminated light instructs the rat to inhibit the current action and redirect behavior to the corresponding well under the second light (STOP trials). For single neuron recordings, this setup is particularly advantageous because the task is well controlled from start to finish. Our task provides two measures of speed, reaction time (GO cue onset to port exit) and movement time (port exit to well entry), which are within-trial measures of processing time related to behavioral redirection and the degree of conflict induced on a given trial. The use of two directions affords us the opportunity to directly compute the stop-change reaction time (SCRT) in addition to the more commonly used stop-signal reaction time (SSRT) (Verbruggen and Logan 2008), thus providing multiple measures of the time needed to inhibit a response.
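
The two within-trial speed measures defined above, and a standard SSRT estimate, can be sketched as follows. The SSRT function uses the generic integration method for stop-signal tasks: the nth fastest GO reaction time, with n equal to the probability of responding on STOP trials times the number of GO trials, minus the mean stop-signal delay. Rounding n up is one of several conventions. This is a sketch under those assumptions; in the stop-change task the STOP cue is triggered at port exit, so the exact definitions of SSD and SCRT used in our studies may differ, and SCRT is not reproduced here. All function names are hypothetical.

```python
import math
from statistics import mean


def reaction_time(go_cue_on_ms: float, port_exit_ms: float) -> float:
    """Reaction time as defined in the text: GO cue onset to port exit."""
    return port_exit_ms - go_cue_on_ms


def movement_time(port_exit_ms: float, well_entry_ms: float) -> float:
    """Movement time as defined in the text: port exit to well entry."""
    return well_entry_ms - port_exit_ms


def ssrt_integration(go_rts_ms: list[float], ssds_ms: list[float],
                     p_respond_on_stop: float) -> float:
    """Integration-method SSRT: nth fastest GO RT minus the mean SSD."""
    if not go_rts_ms or not ssds_ms:
        raise ValueError("need at least one GO RT and one SSD")
    if not 0.0 <= p_respond_on_stop <= 1.0:
        raise ValueError("p_respond_on_stop must be a probability")
    ordered = sorted(go_rts_ms)
    # n = p(respond | stop) * number of GO trials, rounded up and clamped to [1, len]
    n = math.ceil(p_respond_on_stop * len(ordered))
    n = min(max(n, 1), len(ordered))
    return ordered[n - 1] - mean(ssds_ms)
```

For example, with ten GO reaction times from 300 to 390 msec in 10 msec steps, a 50% failure-to-stop rate, and SSDs of 100 and 150 msec, the estimate is the fifth fastest RT (340 msec) minus the mean SSD (125 msec), or 215 msec.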

Using this task, we have shown that single units in the DMS are important for “response selection” (i.e., left or right), meaning that units in the DMS fire more strongly for one response than the other (Bryden et al. 2012). On GO trials, this selectivity emerges quickly; however, on STOP trials directional tuning is conflicted, initially encoding the incorrect direction and only slowly signaling the correct response, paralleling the behavioral output of the animal (Bryden et al. 2012). Only on correct trials is this conflict resolved, with activity correctly reflecting inhibition of the unwanted behavior and redirection of the response to the appropriate location.

Resolution of conflict at the behavioral and neural level requires detecting, monitoring, and adapting to response conflict so that unwanted behavior can be inhibited. “Response conflict” refers to the simultaneous activation of two competing behaviors (in this case, GO and STOP), requiring the override of an initial response. We have recently shown that these functions map onto neural correlates identified in ACC, mPFC, and lOFC. In ACC, neurons fire strongly to STOP cues prior to resolution of response conflict, at both the neural and behavioral level (Bryden et al. 2018). It is likely that these neurons signal the need to inhibit behavior and redirect it (Bryden et al. 2018). In mPFC, similar correlates have been observed; however, stronger firing emerged only after resolution of conflict and on trials that required the most cognitive control (i.e., STOP trials preceded by GO trials). These neurons likely monitor the degree of conflict and the direction of the response so that more appropriate actions can be taken (Bryden et al. 2016; Bissonette and Roesch 2015, 2016). Finally, after the system has detected conflict, successful performance on stop-signal tasks requires “conflict adaptation” (Botvinick et al. 2001), or the ability to slow down and respond more accurately following high-conflict STOP trials. Using our stop-change task, we have shown that the activity of single units in OFC is enhanced when rats exhibit cognitive control during conflict (Bryden and Roesch 2015). These findings are consistent with a conflict adaptation function for OFC and map onto behavioral data suggesting improved accuracy following high levels of activity in OFC (Mansouri et al. 2014; Bryden and Roesch 2015).
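
Conflict adaptation is typically assessed by sorting STOP trials by the type of trial that preceded them, as in the gS (STOP after GO; high conflict) and sS (STOP after STOP; low conflict) comparison of Figure 4F. The sketch below assumes a hypothetical session format, a sequence of `(trial_type, correct)` pairs, and computes percent correct for each STOP-trial history; it is an illustration, not the analysis code used in the cited studies.

```python
from collections import defaultdict


def accuracy_by_history(trials: list[tuple[str, bool]]) -> dict[str, float]:
    """Percent correct on STOP trials, split by the preceding trial type.

    trials: hypothetical session format, a list of (trial_type, correct) pairs
    with trial_type "GO" or "STOP". Labels follow Fig. 4F: "gS" = STOP preceded
    by GO (high conflict), "sS" = STOP preceded by STOP (low conflict).
    """
    counts = defaultdict(lambda: [0, 0])  # label -> [n_correct, n_total]
    for (prev_type, _), (cur_type, cur_correct) in zip(trials, trials[1:]):
        if cur_type != "STOP":
            continue  # only STOP trials are scored here
        label = "gS" if prev_type == "GO" else "sS"
        counts[label][0] += int(cur_correct)
        counts[label][1] += 1
    return {label: 100.0 * n_ok / n for label, (n_ok, n) in counts.items()}
```

Higher accuracy on sS than on gS trials in such a summary is the behavioral signature of conflict adaptation described above.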

To the best of our knowledge, no study has asked whether chronic drug abuse disrupts stop-signal measures in experimental animals or has determined which neural correlates are disrupted by drugs of abuse, even though considerable work has shown deficits in human addicts. However, there is emerging evidence that prenatal drug exposure can increase impulsivity and impair executive control in offspring once they reach adulthood (Schneider et al. 2011; Zhu et al. 2012, 2014). The effect of in utero exposure to drugs of abuse on the development of impulsivity is an understudied question of great importance, given that the 2013 National Survey on Drug Use and Health (SAMHSA) found that 15.4% of women continue to smoke during pregnancy. Prenatal nicotine exposure has been linked to the development of ADHD (Milberger et al. 1996, 1998; Banerjee et al. 2007; Biederman et al. 2012) and to impaired impulse control in rats (Bryden et al. 2016). It is well known that patients with ADHD often exhibit impulsive behavior and impaired performance on STOP tasks (Dalley and Robbins 2017). Whether prenatal nicotine exposure increases the risk of developing ADHD or promotes the development of a phenotype with marked similarity to ADHD is not fully understood (Biederman et al. 2012); in either case, the effect of prenatal nicotine exposure on STOP task performance is almost completely unexplored.

Recently, using a prenatal nicotine exposure model in rats in conjunction with our stop-change task, we have shown that prenatal nicotine exposure is associated with altered task performance. Adult rats exposed to nicotine prenatally exhibited faster responding on all trial types (GO and STOP), increased premature responding, and greater difficulty inhibiting behavior on STOP trials (Bryden et al. 2016). Rats were exposed to nicotine in utero by supplementing the drinking water of dams with nicotine bitartrate over the course of 3 wk, a procedure reported to mimic plasma nicotine levels in habitual smokers (Schneider et al. 2011; Zhu et al. 2012, 2014; Bryden et al. 2016). We also recorded single unit activity from neurons in the medial prefrontal cortex of rats exposed to nicotine prenatally as they performed the stop-change task. We found that single units encoding the conflict monitoring signals described above were significantly decreased on STOP trials that immediately followed low-conflict GO trials, suggesting that prenatal nicotine exposure in rats increases impulsivity by disrupting the firing of mPFC neurons involved in response planning and conflict monitoring (Bryden et al. 2016). While work examining the role of activity in frontal regions on stop-signal performance following drug withdrawal is relatively limited, these results may generalize to other cases in which impaired stop-signal performance is found. Moreover, they highlight the importance of understanding the circuitry underlying top-down control in order to better inform future research seeking to develop and characterize therapeutic interventions.

These findings provide some of the first evidence, at the level of the single neuron, that prenatal exposure to drugs of abuse alters behavioral impulsivity and top-down control. The prenatal period is a developmental window that is particularly susceptible to drugs of abuse. Recently, the nationwide surge in individuals afflicted with opioid use disorder has reached epidemic levels, prompting numerous local, state, and federal agencies in the United States to declare a state of emergency, with the CDC reporting that the number of deaths due to opioid overdose exceeds those caused by guns and breast cancer. The opioid crisis has affected not only adult users; there have also been reports of a surge in the number of infants suffering from neonatal abstinence syndrome (NAS). The effects of prenatal exposure to opiates on response inhibition and behavioral control are not well understood, although there is evidence that, in adult users, early withdrawal from opiates is associated with impaired executive function (Rapeli et al. 2006; Befort et al. 2011). Future research should use tasks of stopping impulsivity to begin examining how exposure to opiates in utero alters the neural processes associated with the development of executive control.

Conclusions

Impulsivity and maladaptive decision-making are defining features of addiction and can be characterized by deficits in reversal learning, delay discounting, and response inhibition. To assess the physiological and behavioral changes brought about by drug use, we have to determine how drugs of abuse alter the neural signals that give rise to different forms of impulsivity and deficits in executive control. During reversal learning, OFC and ABL bidirectionally encode predictions about expected outcomes and are mutually dependent. Chronic cocaine exposure and OFC lesions disrupt associative encoding in the ABL, which results in inflexible learning. ABL lesions ameliorate this inflexibility in cocaine-exposed rats. In striatum, NAc and DLS govern goal-directed (response-outcome) and habitual (S-R) behavior, respectively, during learning. After chronic cocaine exposure, there is a behavioral shift from goal-directed to habitual responding and a corresponding transition in neural encoding from NAc to DLS. In a more complicated choice paradigm involving manipulations of the delay to and size of reward, we find that reward expectancy signals that precede reward delivery are disrupted in NAc after cocaine self-administration, and that response-outcome encoding in DLS no longer reflects the nature of the upcoming behavioral response. Finally, prenatal nicotine exposure can enhance motor impulsivity during performance of a stop-change task and disrupt mPFC signals related to redirection and conflict monitoring. Figure 1 illustrates how heavily interconnected these regions are, indicating the need to further assess deficits that occur across the circuit and to determine how changes in signaling alter activity patterns.

Above we present evidence suggesting that drugs of abuse, such as cocaine and nicotine, have negative effects on executive control, translating into many forms of impulsive behavior. Although there are some similarities, drugs of abuse often have different modes of action and alter brain chemistry in subtly different ways; consequently, it is often difficult to draw conclusions across studies. Moreover, neuroscience has developed nuanced hypotheses specific to a laboratory or an investigator's drug of choice and behavior of interest. While understandable, this leaves gaps in which certain brain regions go relatively unstudied in comparison to others. Despite this, we search for commonalities with the hope of targeting a specific node within the decision-making circuit that might alleviate dysfunction observed after chronic self-administration of drugs of abuse.

Across studies, we see that prior exposure to drugs of abuse increases latency to respond and makes it difficult for animals to override and revise initial responses. Additionally, cocaine-exposed rats are more sensitive to delays to reward. From these results, we cannot determine whether the behavioral changes observed across tasks reflect a common node of dysfunction. However, during both reversal and size/delay experiments, we observed reduced processing in NAc and enhanced processing in DS. In the context of the reversal task, correlates that closely mapped onto goal-directed (R-O) and habit learning (S-R) systems were decreased and increased, respectively, consistent with the general idea that addiction reflects the transition from goal-directed (NAc, DMS) to habit-driven (DLS) behavior. In the context of the odor-guided size/delay task, value and response information was lost and heavily discounted in NAc, while DLS encoding of distinctive features of expected outcomes was enhanced (Stalnaker et al. 2010; Burton et al. 2017). Thus, in two different decision-making paradigms, common alterations in the striatal circuit were observed. How this maps onto the neural circuits that give rise to performance on stop-signal tasks is unclear, but NAc and DS function are thought to be more closely tied to waiting and stopping impulsivity (Dalley and Robbins 2017). Thus, we would predict that a similar pattern of reduced and enhanced activity might emerge in our stop-change task after chronic cocaine self-administration; however, this remains to be tested.

Another similarity that prevails across studies is the importance of the reward system in training upstream brain regions, such as OFC, on the specifics of the task, sometimes referred to as task space (Wilson et al. 2014; Wikenheiser and Schoenbaum 2016; Wikenheiser et al. 2017). Across the three aforementioned behavioral tasks, we have seen that the OFC tracks reward outcomes and is critical for modifying behavior following errors or reversal of contingencies. During discrimination and reversal learning, as well as during the odor-guided directional size/delay task, we see OFC firing to cues and during outcome anticipation. In the context of reversal learning, we know that this outcome-expectancy signal is disrupted after chronic cocaine exposure (Schoenbaum et al. 2006). The OFC's role in modifying behavior during reversal learning, conflict adaptation, and other contexts likely reflects its role in managing task space (Wilson et al. 2014; Wikenheiser and Schoenbaum 2016; Wikenheiser et al. 2017). In this view, it is possible that one commonality among all drugs of abuse, at least during withdrawal, is their disruption of the OFC's ability to accurately represent the task at hand. Careful experiments assessing how the OFC develops these representations are needed; one possibility is that during training, dopaminergic input teaches the OFC (and likely other brain regions, such as mPFC and ACC) the specifics of the task. Once an animal achieves a certain level of proficiency, defined by some degree of automaticity, dopamine signaling switches to a more error-detection-like role. An error at this point (i.e., failure to inhibit a prepotent response) then changes dopamine firing in a way that alerts OFC to quickly assess the animal's behavior in terms of the task goals and direct the animal accordingly. In all of our single unit studies, our animals are trained prior to task performance.
However, their failure to appropriately inhibit behavior after chronic self-administration across the reversal, delay discounting, and stop-change tasks may reflect a compromised ability to accurately represent the task at hand. This failure has been described in the OFC but is likely present across many frontal and executive brain regions, where it may support the development of abnormal and maladaptive behavior. This hypothesis is currently largely conjecture, but it is supported by recent computational work suggesting a role for dopamine in training higher-order systems (Wang et al. 2018). It highlights the need to look across drugs of abuse for convergent mechanisms that would allow us to identify critical points of disruption along the decision-making circuit.
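
One way to picture the proposed shift in dopamine function, from a teaching signal early in training to an error signal once behavior is proficient, is a simple delta-rule (Rescorla-Wagner) learner, a simplified relative of the temporal-difference models used to describe dopamine prediction errors. This is a toy sketch under stated assumptions, not a model of the OFC or of our recordings: a single cue is always followed by reward during training, the learning rate `alpha` is a hypothetical value, and the prediction error `r - V` stands in for the dopamine-like signal. Errors are large early in training, shrink toward zero as the cue's value is learned, and reappear abruptly when reward is omitted, as it would be after an error or a change in contingencies.

```python
from typing import List


def delta_rule_errors(rewards: List[float], alpha: float = 0.2, v0: float = 0.0) -> List[float]:
    """Return the trial-by-trial prediction errors of a Rescorla-Wagner learner.

    `value` is the learned value of a single cue. On each trial the prediction
    error delta = r - value is recorded, then value is updated by alpha * delta.
    Treating delta as a 'dopamine-like' signal is an illustrative assumption.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    value = v0
    errors = []
    for r in rewards:
        delta = r - value       # prediction error on this trial
        errors.append(delta)
        value += alpha * delta  # move the cue's value toward the obtained reward
    return errors


if __name__ == "__main__":
    # Hypothetical schedule: 30 rewarded training trials, then 10 trials with reward omitted.
    schedule = [1.0] * 30 + [0.0] * 10
    deltas = delta_rule_errors(schedule, alpha=0.2)
    print(f"first training trial error:  {deltas[0]:+.4f}")
    print(f"last training trial error:   {deltas[29]:+.4f}")
    print(f"first omission trial error:  {deltas[30]:+.4f}")
```

In this toy model the change in the error signal's role is emergent rather than an explicit switch: once the value estimate is accurate, errors carry little new information unless an outcome departs from expectation.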

Acknowledgments

National Institute on Drug Abuse (NIDA) grants DA031695 and DA040993 to M.R.R.

Footnotes

Article is online at http://www.learnmem.org/cgi/doi/10.1101/lm.047001.117.

References

- Ashare RL, Hawk LW. 2012. Effects of smoking abstinence on impulsive behavior among smokers high and low in ADHD-like symptoms. Psychopharmacology (Berl) 219: 537–547.

- Ballard K, Knutson B. 2009. Dissociable neural representations of future reward magnitude and delay during temporal discounting. Neuroimage 45: 143–150.

- Balleine BW, O'Doherty JP. 2010. Human and rodent homologies in action control: corticostriatal determinants of goal-directed and habitual action. Neuropsychopharmacology 35: 48–69.

- Banerjee TD, Middleton F, Faraone SV. 2007. Environmental risk factors for attention-deficit hyperactivity disorder. Acta Paediatr 96: 1269–1274.

- Barto AG. 1995. Adaptive critics and the basal ganglia. In Computational neuroscience. Models of information processing in the basal ganglia (ed. Houk JC, Davis JL, Beiser DG), pp. 215–232. The MIT Press, Cambridge, MA.

- Bechara A. 2005. Decision making, impulse control and loss of willpower to resist drugs: a neurocognitive perspective. Nat Neurosci 8: 1458–1463.

- Befort K, Mahoney MK, Chow C, Hayton SJ, Kieffer BL, Olmstead MC. 2011. Effects of delta opioid receptors activation on a response inhibition task in rats. Psychopharmacology (Berl) 214: 967–976.

- Bickel WK, Miller ML, Yi R, Kowal BP, Lindquist DM, Pitcock JA. 2007. Behavioral and neuroeconomics of drug addiction: competing neural systems and temporal discounting processes. Drug Alcohol Depend 90S: S85–S91.

- Bickel WK, Pitcock JA, Yi R, Angtuaco EJ. 2009. Equivalent neural correlates across intertemporal choice conditions. Neuroimage 47(Supplement 1): S39–S41.

- Bickel WK, Yi R, Landes RD, Hill PF, Baxter C. 2011. Remember the future: working memory training decreases delay discounting among stimulant addicts. Biol Psychiatry 69: 260–265.

- Biederman J, Petty CR, Bhide PG, Woodworth KY, Faraone S. 2012. Does exposure to maternal smoking during pregnancy affect the clinical features of ADHD? Results from a controlled study. World J Biol Psychiatry 13: 60–64.

- Bissonette GB, Roesch MR. 2015. Neural correlates of rules and conflict in medial prefrontal cortex during decision and feedback epochs. Front Behav Neurosci 9: 266.

- Bissonette GB, Roesch MR. 2016. Neurophysiology of reward-guided behavior: correlates related to predictions, value, motivation, errors, attention, and action. Curr Top Behav Neurosci 27: 199–230.

- Bissonette GB, Burton AC, Gentry RN, Goldstein BL, Hearn TN, Barnett BR, Kashtelyan V, Roesch MR. 2013. Separate populations of neurons in ventral striatum encode value and motivation. PLoS One 8: e64673.

- Bjork JM, Momenan R, Hommer DW. 2009. Delay discounting correlates with proportional lateral frontal cortex volumes. Biol Psychiatry 65: 710–713.

- Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD. 2001. Conflict monitoring and cognitive control. Psychol Rev 108: 624–652.

- Broos N, Diergaarde L, Schoffelmeer AN, Pattij T, De Vries TJ. 2012. Trait impulsive choice predicts resistance to extinction and propensity to relapse to cocaine seeking: a bidirectional investigation. Neuropsychopharmacology 37: 1377–1386.

- Bryden DW, Roesch MR. 2015. Executive control signals in orbitofrontal cortex during response inhibition. J Neurosci 35: 3903–3914.

- Bryden DW, Burton AC, Kashtelyan V, Barnett BR, Roesch MR. 2012. Response inhibition signals and miscoding of direction in dorsomedial striatum. Front Integr Neurosci 6: 69.

- Bryden DW, Burton AC, Barnett BR, Cohen VJ, Hearn TN, Jones EA, Kariyil RJ, Kunin A, Kwak S, Lee J, et al. 2016. Prenatal nicotine exposure impairs executive control signals in medial prefrontal cortex. Neuropsychopharmacology 41: 716–725.

- Bryden DW, Brockett AT, Blume E, Heatley K, Zhao A, Roesch MR. 2018. Single neurons in anterior cingulate cortex signal the need to change action during performance of a stop-change task that induces response competition. Cereb Cortex 10.1093/cercor/bhy008.

- Burton AC, Nakamura K, Roesch MR. 2015. From ventral-medial to dorsal-lateral striatum: neural correlates of reward-guided decision-making. Neurobiol Learn Mem 117: 51–59.

- Burton AC, Bissonette GB, Zhao AC, Pooja PK, Roesch MR. 2017. Prior cocaine self-administration increases response-outcome encoding that is divorced from actions selected in dorsolateral striatum. J Neurosci 37: 7737–7747.

- Burton AC, Bissonette GB, Vazquez D, Blume EM, Donnelly M, Heatley KC, Hinduja A, Roesch MR. 2018. Previous cocaine self-administration disrupts reward expectancy encoding in ventral striatum. Neuropsychopharmacology 10.1038/s41386-018-0058-0.

- Cai X, Kim S, Lee D. 2011. Heterogeneous coding of temporally discounted values in the dorsal and ventral striatum during intertemporal choice. Neuron 69: 170–182.

- Cho SS, Ko JH, Pellecchia G, Van Eimeren T, Cilia R, Strafella AP. 2010. Continuous theta burst stimulation of right dorsolateral prefrontal cortex induces changes in impulsivity level. Brain Stimul 3: 170–176.

- Coffey SF, Gudleski GD, Saladin ME, Brady KT. 2003. Impulsivity and rapid discounting of delayed hypothetical rewards in cocaine-dependent individuals. Exp Clin Psychopharmacol 11: 18–25.

- Colwill RM, Rescorla RA. 1985. Instrumental responding remains sensitive to reinforcer devaluation after extensive training. J Exp Psychol Anim Behav Process 11: 520–536.

- Colwill RM, Triola SM. 2002. Instrumental responding remains under the control of the consequent outcome after extended training. Behav Processes 57: 51–64.

- Dalley JW, Robbins TW. 2017. Fractionating impulsivity: neuropsychiatric implications. Nat Rev Neurosci 18: 158–171.

- Dalley JW, Everitt BJ, Robbins TW. 2011. Impulsivity, compulsivity, and top-down cognitive control. Neuron 69: 680–694.

- De Wit H, Crean J, Richards JB. 2000. Effects of d-amphetamine and ethanol on a measure of behavioral inhibition in humans. Behav Neurosci 114: 830–837.

- Di Chiara G. 2002. Nucleus accumbens shell and core dopamine: differential role in behavior and addiction. Behav Brain Res 137: 75–114.

- Di Chiara G, Tanda G, Bassareo V, Pontieri F, Acquas E, Fenu S, Cadoni C, Carboni E. 1999. Drug addiction as a disorder of associative learning. Role of nucleus accumbens shell/extended amygdala dopamine. Ann N Y Acad Sci 877: 461–485.

- Dickinson A, Smith J, Mirenowicz J. 2000. Dissociation of Pavlovian and instrumental incentive learning under dopamine antagonists. Behav Neurosci 114: 468–483.

- Eagle DM, Baunez C. 2010. Is there an inhibitory-response-control system in the rat? Evidence from anatomical and pharmacological studies of behavioral inhibition. Neurosci Biobehav Rev 34: 50–72.

- Eagle DM, Bari A, Robbins TW. 2008. The neuropsychopharmacology of action inhibition: cross-species translation of the stop-signal and go/no-go tasks. Psychopharmacology (Berl) 199: 439–456.

- Everitt BJ, Robbins TW. 2005. Neural systems of reinforcement for drug addiction: from actions to habits to compulsion. Nat Neurosci 8: 1481–1489.

- Everitt BJ, Robbins TW. 2016. Drug addiction: updating actions to habits to compulsions ten years on. Annu Rev Psychol 67: 23–50.

- Figner B, Knoch D, Johnson EJ, Krosch AR, Lisanby SH, Fehr E, et al. 2010. Lateral prefrontal cortex and self-control in intertemporal choice. Nat Neurosci 13: 538–539.

- Fillmore MT, Rush CR. 2002. Impaired inhibitory control of behavior in chronic cocaine users. Drug Alcohol Depend 66: 265–273.

- Fillmore MT, Rush CR, Marczinski CA. 2003. Effects of d-amphetamine on behavioral control in stimulant abusers: the role of prepotent response tendencies. Drug Alcohol Depend 71: 143–152.

- Fillmore MT, Kelly TH, Martin CA. 2005a. Effects of d-amphetamine in human models of information processing and inhibitory control. Drug Alcohol Depend 77: 151–159.

- Fillmore MT, Rush CR, Hays L. 2005b. Cocaine improves inhibitory control in a human model of response conflict. Exp Clin Psychopharmacol 13: 327–335.

- Fiorillo CD, Newsome WT, Schultz W. 2008. The temporal precision of reward prediction in dopamine neurons. Nat Neurosci 11: 966–973.

- Freeze BS, Kravitz AV, Hammack N, Berke JD, Kreitzer AC. 2013. Control of basal ganglia output by direct and indirect pathway projection neurons. J Neurosci 33: 18531–18539.

- Goldstein RZ, Volkow ND. 2011. Dysfunction of the prefrontal cortex in addiction: neuroimaging findings and clinical implications. Nat Rev Neurosci 12: 652–669.

- Goldstein BL, Barnett BR, Vasquez G, Tobia SC, Kashtelyan V, Burton AC, Bryden DW, Roesch MR. 2012. Ventral striatum encodes past and predicted value independent of motor contingencies. J Neurosci 32: 2027–2036.

- Gossop M, Griffiths P, Powis B, Strang J. 1992. Severity of dependence and route of administration of heroin, cocaine and amphetamines. Br J Addict 87: 1527–1536.

- Gremel CM, Costa RM. 2013. Orbitofrontal and striatal circuits dynamically encode the shift between goal-directed and habitual actions. Nat Commun 4: 2264.

- Haber SN, Fudge JL, McFarland NR. 2000. Striatonigrostriatal pathways in primates form an ascending spiral from the shell to the dorsolateral striatum. J Neurosci 20: 2369–2382.

- Halbout B, Liu AT, Ostlund SB. 2016. A closer look at the effects of repeated cocaine exposure on adaptive decision making under conditions that promote goal-directed control. Front Psychiatry 7: 44.

- Harrison ELR, Coppola S, McKee SA. 2009. Nicotine deprivation and trait impulsivity affect smokers’ performance on cognitive tasks of inhibition and attention. Exp Clin Psychopharmacol 17: 91–98.

- Hester R, Garavan H. 2004. Executive dysfunction in cocaine addiction: evidence for discordant frontal, cingulate, and cerebellar activity. J Neurosci 24: 11017–11022.

- Holland PC. 2004. Relations between Pavlovian-instrumental transfer and reinforcer devaluation. J Exp Psychol Anim Behav Process 30: 104–117.

- Hosokawa T, Kennerley SW, Sloan J, Wallis JD. 2013. Single-neuron mechanisms underlying cost-benefit analysis in frontal cortex. J Neurosci 33: 17385–17397.

- Houk JC, Wise SP. 1995. Distributed modular architectures linking basal ganglia, cerebellum, and cerebral cortex: their role in planning and controlling action. Cereb Cortex 5: 95–110.

- Hulka LM, Vonmoos M, Preller KH, Baumgartner MR, Seifritz E, Gamma A, Quednow BB. 2015. Changes in cocaine consumption are associated with fluctuations in self-reported impulsivity and gambling decision-making. Psychol Med 45: 3097–3110.

- Ikemoto S. 2007. Dopamine reward circuitry: two projection systems from the ventral midbrain to the nucleus accumbens-olfactory tubercle complex. Brain Res Rev 56: 27–78.

- International Brain Laboratory. 2017. An international laboratory for systems and computational neuroscience. Neuron 96: 1213–1218.