SUMMARY

Background

The ability to distinguish sensory signals that register unexpected events (exafference) from those generated by voluntary actions (reafference) during self-motion is essential for accurate perception and behavior. The cerebellum is most commonly considered in relation to its contributions to the fine-tuning of motor commands and sensori-motor calibration required for motor learning. During unexpected motion, however, the sensory prediction errors that drive motor learning potentially provide a neural basis for the computation underlying the distinction between reafference and exafference.

Results

Recording from monkeys during voluntary and applied self-motion, we demonstrate that individual cerebellar output neurons encode an explicit and selective representation of unexpected self-motion by means of an elegant computation that cancels the reafferent sensory effects of self-generated movements. During voluntary self-motion, the sensory responses of neurons that robustly encode unexpected movement are cancelled. Neurons with vestibular and proprioceptive responses to applied head and body movements are unresponsive when the same motion is self-generated. When sensory reafference and exafference are experienced simultaneously, individual neurons provide a precise estimate of the detailed time course of exafference.

Conclusions

These results provide an explicit solution to the longstanding problem of understanding mechanisms by which the brain anticipates the sensory consequences of our voluntary actions. Specifically, by revealing a striking computation of a sensory prediction error signal that effectively distinguishes between the sensory consequences of self-generated and externally produced actions, our findings overturn the conventional thinking that the sensory errors coded by the cerebellum principally contribute to the fine-tuning of motor activity required for motor learning.

Keywords: sensory coding, self-motion, response selectivity, cerebellum, fastigial nucleus, efference copy, voluntary movement, vestibular, neck proprioception

INTRODUCTION

The cerebellum has been proposed as a likely candidate site for a forward model that predicts the sensory consequences of self-generated action. Such a representation of the expected consequences of movement has emerged as an important theoretical concept in motor control [reviewed in 1, 2]. To date, this hypothesis has been chiefly considered in relation to the fine-tuning of motor commands and sensori-motor calibration required for motor learning. In this context, the cerebellum computes a prediction of the sensory consequences of an action. This prediction is then compared to the sensory stimulation produced by the actual movement to compute an error signal that in turn guides the updating of the motor program. Indeed, there is evidence that sensory prediction errors drive motor learning [3, 4], and studies of neurologic patients [5, 6] and brain stimulation [7] suggest a role for cerebellum-dependent mechanisms.

However, sensory prediction errors arise not only as a result of changes in the motor apparatus and/or environment (i.e., conditions that drive motor learning), but also whenever we experience externally produced sensory stimuli. If externally imposed stimulation is systematically paired with voluntary movement, motor learning occurs [8]. In contrast, when sensory stimulation is unexpected, the computation of sensory prediction errors effectively enables the brain to distinguish between the consequences of our self-generated actions (sensory reafference) and stimulation that is externally produced (sensory exafference). Work in the vestibular system has provided evidence that such a computation is indeed performed. While afferents similarly encode vestibular reafference and exafference during self-motion [9, 10], neurons at the next stage of sensory processing preferentially respond to vestibular exafference [11, 12], indicating that the brain computes a cancellation signal required to suppress self-generated vestibular signals and thus selectively encode sensory exafference.

Despite long-standing interest in the computations required for accurate motor control, the neural mechanisms underlying the computation of sensory exafference remain unclear. It has been proposed that our brain constructs an internal model of the expected sensory consequences of movement based on an efference copy of the self-produced motor command [13–15]. Studies in the electrosensory system of fish have shown that its cerebellum-like circuitry computes predictions about the sensory consequences of the animals’ own behavior [16]. However, while single unit recording studies in primates [17] and imaging studies in normal subjects show increases in cerebellar activity when sensory feedback does not match what is expected [18, 19], the proposal that the cerebellum computes an explicit estimate of the sensory consequences of unexpected movement has not, to our knowledge, been tested. Moreover, because exafference and reafference are often experienced simultaneously, it is crucial to address whether neurons encode sensory prediction errors demonstrating the anticipation and cancellation of reafferent effects under conditions in which active and passive stimulation is concurrent.

Here we recorded from individual neurons in the most medial of the deep cerebellar nuclei (rostral fastigial nucleus (rFN)), which constitutes a major output target of the cerebellar cortex [20] and sends strong projections to the vestibular nuclei, reticular formation, and spinal cord [20–23] to regulate postural control. We show for the first time that two separate processing streams within the cerebellum encode unexpected movements of the head (i.e., vestibular exafference) and body (i.e., combining vestibular and proprioceptive exafference) - even when applied concomitantly with voluntary motion. Our findings overturn the common assumption that the sensory errors coded by the cerebellum principally contribute to the fine-tuning of motor activity required for motor learning by demonstrating the contribution of sensory prediction errors in the cerebellum to the computation of sensory exafference.

RESULTS

All neurons included in the present report (n=41) were sensitive to passive vestibular stimulation and were insensitive to eye movements, consistent with previous characterizations of rFN neurons [24–26]. Neurons were further characterized as either unimodal or bimodal based on their sensitivity to applied stimulation of the neck proprioceptors. Notably, 52% of our sample (unimodal neurons; n=21) were insensitive to proprioceptive stimulation. The other 48% (bimodal neurons; n=20) were responsive to passive proprioceptive as well as vestibular stimulation. As a result of their vestibular sensitivity, unimodal neurons encode passive head motion, while bimodal neurons combine vestibular and neck proprioceptive inputs to encode passive body motion [24]. Thus, as expected, only the unimodal neurons responded during a third passive protocol in which the head was rotated on a stationary body.

rFN neurons encode unexpected but not self-produced sensory signals during self-motion

To date, investigations have focused exclusively on understanding how these neurons, which constitute a major output target of the cerebellar cortex, encode sensory information under the passive (i.e., exafferent) stimulation conditions described above. However, in everyday life, vestibular and proprioceptive stimulation is the result of our own actions as well as motion resulting from external events. Accordingly, we performed a series of experiments to test the hypothesis that these neurons selectively encode unexpected, passively applied stimuli.

We first compared neuronal responses to passively applied and self-produced (i.e., active) stimuli with similar profiles. In the head-restrained condition, we applied vestibular and proprioceptive stimuli with a velocity profile designed to mimic that produced during active movement (i.e., ‘active-like’ motion profiles; see S1A1–3 and EXPERIMENTAL PROCEDURES). The monkey’s head was then released allowing the generation of actual active head/body movements. Figure 1 illustrates the striking difference in the responses of representative unimodal and bimodal neurons during passive (A) versus active (B) motion. The example cells were strongly modulated during passive head and body motion, respectively. However, when the monkey voluntarily produced motion that resulted in comparable sensory stimulation, the example neurons were virtually unresponsive to the same motion (87% and 86% response attenuation, respectively). For completeness, analyses comparing responses to active versus passive motion were also carried out on unimodal neurons during body motion (Fig. S1B1) and bimodal neurons during head motion (Fig. S1B2) confirming that the expected lack of response remained in the active condition.

Figure 1. rFN neuron responses encode unexpected but not self-produced sensory inputs during self-motion.

A-B, Responses of example unimodal (A1 and B1) and bimodal (A2 and B2) neurons during passive (blue traces, A) and voluntary (red traces, B) motion paradigms. Top row: Average head (unimodal neurons) and body (bimodal neurons) velocities for 10 movements. The thickness of the shaded traces corresponds to the standard deviation across trials. Raster plots illustrating the example neurons’ responses for each trial are shown below. Bottom row: Dark and lighter grey shading correspond to the average firing rates and standard deviations for the same 10 movements. Overlaying blue (A1–2) and red (B1–2) lines show the estimated best fit to the firing rate based on a bias term and sensitivity to head (unimodal neurons) or body (bimodal neurons) motion. Note, both neurons showed robust modulation for passive motion (unimodal neuron, head velocity sensitivity: 0.41 (sp/s)/(°/s) and bimodal neuron, body velocity sensitivity: 0.36 (sp/s)/(°/s)), but responses were minimal when the same motion was self-produced (0.07 (sp/s)/(°/s) versus 0.05 (sp/s)/(°/s), respectively). Superimposed dashed red lines in the active condition (B1–2) show predicted responses based on each neuron’s sensitivity to passive motion. Dashed black arrows on the cartoons show where the force was applied to generate passive motion. Raster plots were down sampled to improve visibility of the spikes. See also supplemental Figure S1.

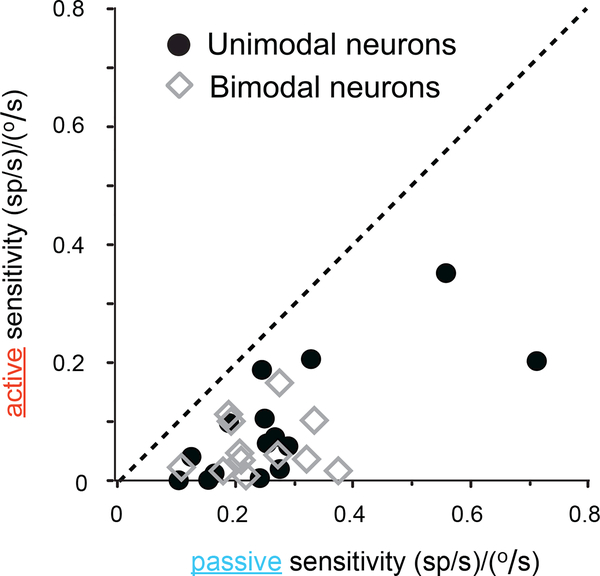

The observations shown in Figure 1 are summarized for the population in Figure 2. Neuronal responses in the active condition were not well predicted by sensitivities to vestibular and/or proprioceptive inputs in the passive condition. All data points fall well below the unity line indicating that responses were suppressed during self-generated motion. Overall, for our entire population of neurons, modulation was reduced by ~70% when stimulation was self-produced (paired t-test p<0.05 for both populations). This result suggests that rFN neurons do not provide a veridical representation of head (unimodal neurons) or body (bimodal neurons) motion during everyday activities as their sensitivities to self-produced vestibular and/or proprioceptive input are attenuated.

Figure 2. Population analysis: cerebellar neurons preferentially respond to passive stimulation.

Scatter plot showing a cell-by-cell comparison of response sensitivities to active and passive motion for the populations of unimodal (black circles) and bimodal (grey diamonds) neurons. The dotted line represents unity. Mean neuronal sensitivities= 0.13±0.03 versus 0.32±0.05 (sp/s)/(°/s) for unimodal neurons and 0.11±0.01 versus 0.31±0.04 (sp/s)/(°/s) for bimodal neurons, during active and passive motion, respectively. See also supplemental Figure S2.

The question thus arises: What accounts for the observed attenuation? During passive motion, rFN neuron responses can be predicted based on a linear summation of each neuron’s sensitivity to vestibular and neck proprioceptor stimulation [24]. Accordingly, we tested whether this might also be the case during active motion. In contrast to the passive condition, neuronal responses could not be predicted based on a linear summation of their sensitivities to vestibular and proprioceptive stimulation (see Fig. S2), indicating that an additional inhibitory signal is necessary to account for the attenuation observed in rFN neuron responses to self-generated sensory stimuli. Additionally, we tested the possibility that the production of a motor command alone can account for the attenuation of responses during active motion but found that this was not the case. Specifically, monkeys were unexpectedly restrained as they oriented to an eccentric target, where the intended but unrealized movement was demonstrated by the production of neck torque (Fig. 3A1,A2). The responses of unimodal and bimodal neuron populations during attempted movements in either direction did not differ from resting rate (Fig. 3B1,B2; paired t-test; p>0.05 for both neuronal populations).

Figure 3. The production of motor commands does not account for neuronal response attenuation.

A1 and A2, The example unimodal (A1) and bimodal (A2) neurons did not display any related modulation for torques produced either in the ipsilateral (i.e., ipsi; left) or contralateral (i.e., contra; right) directions. Traces are aligned on torque onset and show eye position (green), torque (blue), and firing rates (grey) averaged (±SD) over 10 movements in each direction. B1 and B2, Population analysis: Mean firing rates of unimodal (B1) and bimodal (B2) neurons for periods of ipsilaterally (light blue symbols) and contralaterally (dark blue symbols) directed torque plotted as a function of resting rate. The slope of the regression line was not different from 1 (p>0.05), indicating that torque production did not influence neuronal activity. Insets: mean response rates of both neuron populations do not differ across conditions (paired t-test; p>0.05 for both neuronal populations).

Neurons selectively respond to passive motion when experienced concurrently with active motion

Thus far we have only considered active or passive stimulation in isolation; during daily activities we commonly experience simultaneous active and passive motion. Thus the question remains: What information do rFN neurons encode when unexpected sensory signals are experienced concurrently with self-produced ones? We predicted that unimodal and bimodal neurons solve this problem by each selectively responding to passively applied stimulation thereby providing representations of unexpected motion (i.e., passive head and body motion, respectively).

To test this proposal, neuronal activity was recorded as monkeys generated voluntary movements of their heads and bodies while simultaneously undergoing passive whole-body rotation. First, we considered unimodal neurons. If a given neuron selectively responded to passively applied vestibular stimulation, one would expect that during combined active-passive motion it should (i) remain unresponsive to any actively generated motion of the head relative to space, but (ii) continue to robustly encode head motion due to the passively applied rotation. Figure 4 illustrates the response of an example unimodal neuron during passive whole-body rotation alone (Fig. 4A) and combined with simultaneous active head (Fig. 4B-C, open boxes) and body (Fig. 4C, shaded box) movements. Comparison across panels reveals that the neuron selectively encoded only the passive component of motion (passive head motion only prediction, blue trace), rather than absolute head-in-space motion (total head motion prediction, black trace). Thus, consistent with our prediction, our example unimodal neuron faithfully encoded passively applied vestibular stimulation regardless of whether it occurred in isolation or simultaneously with self-generated vestibular stimulation.

Figure 4. Unimodal neurons selectively respond to passive head motion when experienced concurrently with active motion.

A, Activity of an example unimodal neuron during passive sinusoidal whole-body rotation applied alone. B-C, Response of the same unimodal neuron during a paradigm where the monkey generated voluntary head (B) or body (C) movements while being simultaneously passively rotated. Superimposed on the firing rates are predictions based on passive head motion only (blue traces), and total head motion (black traces).
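
The logic of the two superimposed predictions can be illustrated with a minimal sketch. It assumes a purely velocity-based response (bias plus sensitivity times velocity) and uses simulated data; the bias, the sensitivity, and the shape of the active head movement are hypothetical values chosen for illustration, and the "observed" rate is simulated under the hypothesis that the neuron encodes only the passive component. The function names are not taken from the original analysis.

```python
import math

def predict_rate(bias, sensitivity, velocity):
    """Predicted firing rate (sp/s) from a bias term and a velocity sensitivity."""
    return [bias + sensitivity * v for v in velocity]

def mean_squared_error(observed, predicted):
    """Mean squared difference between observed and predicted firing rates."""
    return sum((o - p) ** 2 for o, p in zip(observed, predicted)) / len(observed)

dt = 0.001                                   # 1 kHz sampling
time = [k * dt for k in range(2000)]         # 2 s of data

# Passive whole-body rotation: 1 Hz sinusoid, +/-40 deg/s peak velocity
passive_velocity = [40.0 * math.sin(2.0 * math.pi * t) for t in time]

# Hypothetical active head movement: brief bell-shaped velocity pulse
active_velocity = [150.0 * math.exp(-0.5 * ((t - 0.75) / 0.05) ** 2) for t in time]
total_velocity = [p + a for p, a in zip(passive_velocity, active_velocity)]

bias, sensitivity = 50.0, 0.4                # hypothetical values

# Simulated neuron that encodes only the passive component of head motion
observed = predict_rate(bias, sensitivity, passive_velocity)

passive_only_prediction = predict_rate(bias, sensitivity, passive_velocity)
total_motion_prediction = predict_rate(bias, sensitivity, total_velocity)

mse_passive_only = mean_squared_error(observed, passive_only_prediction)
mse_total = mean_squared_error(observed, total_motion_prediction)
print(mse_passive_only, mse_total)
```

For a neuron that selectively encodes exafference, the passive-only prediction yields the smaller error; a neuron encoding absolute head-in-space motion would show the opposite pattern.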

Using the same approach, we next tested bimodal neurons. In this case, if a given neuron selectively responded to passively applied vestibular and proprioceptive stimulation, one would expect that during combined active and passive motion it should (i) remain unresponsive to active body motion relative to space but (ii) robustly encode body motion due to the passively applied rotation. Figure 5 illustrates the response of an example bimodal neuron during passive whole-body rotation alone (Fig. 5A) and combined with active body (Fig. 5B, shaded box) and head (Fig. 5B-C, open boxes) movements. Comparison across panels reveals that the neuron selectively encoded only the passive component of the motion (passive body motion only prediction, blue trace), rather than absolute body in space motion (total body motion prediction, dashed black trace). Furthermore, this neuron’s response was also not altered by concurrent active head movements (Fig. 5B-C, open boxes). Thus, in agreement with our prediction, our example bimodal neuron faithfully encoded passively applied vestibular and proprioceptive stimulation regardless of whether it occurred in isolation or simultaneously with self-generated stimulation.

Figure 5. Bimodal neurons selectively respond to passive body motion when experienced concurrently with active motion.

A, Activity of an example bimodal neuron during passive sinusoidal whole-body rotation applied alone. B-C, Response of the same bimodal neuron during a paradigm where the monkey generated voluntary body (B) or head (C) movements while being simultaneously passively rotated. Superimposed on the firing rates are predictions based on passive body motion only (blue traces) and total body motion (dashed black traces).

What signal do cerebellar neurons encode during self-motion?

Cerebellum-dependent mechanisms are thought to play a central role in computing sensory prediction errors, a signal that would represent the sensory consequences of unexpected sensory stimulation (i.e., exafference) during self-generated actions. Given that the rFN receives direct input from the output cells of the cerebellar cortex (i.e., Purkinje cells), we hypothesized that rFN neurons would explicitly encode exafference. To directly test this hypothesis, we next compared the sensitivities of each unimodal and bimodal neuron in our population during combined active and passive motion of the head (Fig. 4) or body (Fig. 5), respectively, to their sensitivity to the same motion passively applied in isolation. If a given neuron explicitly encodes exafference, then its sensitivity to sensory stimulation resulting from the passive component of motion should be the same regardless of whether it occurred concurrently with active motion or was applied in isolation. Additionally, its sensitivity to sensory stimulation resulting from the active component of motion should be markedly reduced relative to its passive sensitivity.

Figure 6 shows a comparison of each neuron’s sensitivity to the passive (A) and active (B) components of motion to the same neuron’s sensitivity to passive motion applied alone. Consistent with the first part of our hypothesis, unimodal and bimodal neurons responded similarly to passive motion in both conditions (Fig. 6A); the slopes of the regression lines were not different from unity (p=0.63 and 0.95, respectively). Thus, rFN neurons faithfully encoded exafference during passive motion, whether it occurred concurrently with active movements or in isolation. Figure 6B shows that, consistent with the second part of our hypothesis, the responses of unimodal and bimodal neurons were significantly (p<0.05) attenuated during active as compared to passive motion (68% and 71% attenuation, respectively). All points fell below the unity line, clearly demonstrating that rFN neurons remain unresponsive to sensory stimulation that is the result of active motion, even when it occurs concurrently with passively applied motion. Taken together, our results not only demonstrate for the first time that unimodal and bimodal neurons differentiate between the sensory consequences of active and passive motion, but also show that this facilitates the selective and explicit encoding of the passive components of head and body motion, respectively. Thus, our findings are consistent with the proposal that the responses of individual rFN neurons reveal the output of a computation providing an explicit estimate of the detailed time course of exafference (e.g., blue fits in Fig. 4 and 5).

Figure 6. rFN neurons encode an explicit estimate of exafference.

A, Scatter plot of unimodal (black circles) and bimodal (open diamonds) neuron sensitivities to the passive component of simultaneously occurring active and passive rotation, versus neuronal sensitivities to passive rotations occurring alone. The sensitivity of rFN neurons to passive motion was the same whether it occurred in isolation or in combination with active movements (slope=0.91; p=0.67 and slope=0.99; p=0.95 for unimodal and bimodal neurons, respectively). B, Scatter plot of unimodal (black circles) and bimodal (open diamonds) neuron sensitivities to the active component of simultaneously occurring active and passive rotation, versus sensitivities to passive rotations occurring alone. Comparison with panel A indicates that neuronal responses to active motion were significantly and selectively suppressed in this condition.
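
A comparison of a regression slope against unity can be sketched as follows. The text does not state which procedure was used to test the slopes, so this sketch assumes ordinary least-squares regression and a t-statistic of the form (slope − 1)/SE; the sensitivity values are hypothetical and the function name is illustrative. The p-value would then be obtained from a t-distribution with n − 2 degrees of freedom, which is omitted here.

```python
import math

def slope_vs_unity(x, y):
    """Fit y = intercept + slope * x by ordinary least squares and return
    (slope, standard error of the slope, t-statistic for slope == 1)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares around the fitted line
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    slope_se = math.sqrt(sse / (n - 2) / sxx)
    t_stat = (slope - 1.0) / slope_se
    return slope, slope_se, t_stat

# Hypothetical sensitivities in (sp/s)/(deg/s): passive motion alone (x) versus
# the passive component during combined active and passive motion (y)
passive_alone = [0.1, 0.2, 0.3, 0.4, 0.5]
passive_combined = [0.12, 0.18, 0.31, 0.41, 0.48]

slope, slope_se, t_stat = slope_vs_unity(passive_alone, passive_combined)
print(slope, slope_se, t_stat)
```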

DISCUSSION

To date, all previous single unit recording studies in the vestibular cerebellum have exclusively focused on understanding how passively applied stimuli are encoded during motion [reviewed in 27, 28]. However, during daily activities we commonly experience simultaneous active and passive motion. Our data clearly show that two separate processing streams within the macaque cerebellum selectively encode sensory information arising from externally applied motion. Specifically, unimodal neurons selectively respond to vestibular exafference to encode unexpected head-in-space velocity, while bimodal neurons respond to both vestibular and proprioceptive exafference to encode unexpected body-in-space velocity. Thus, taken together, our findings reveal for the first time the output of an elegant computation in which two distinct neuron populations selectively and dynamically encode unexpected head versus body motion relative to space.

Theoretically, the computation of exafference requires a comparison between an internal estimate of the sensory consequences of self-generated action (i.e., forward model) and the actual sensory feedback [14, 29]. There is accumulating evidence that this comparison is made, at least in part, at the level of the cerebellar cortex. Notably, complex and simple spike responses decrease and increase, respectively, during active versus passive wrist movements [17], consistent with the idea that reafferent sensory feedback is suppressed. Additionally, the results of human imaging studies are consistent with the proposal that cerebellar cortex is involved in the suppression of tactile reafference during self-produced ‘tickle’ [30], and in signaling the discrepancy between predicted and actual sensory feedback when subjects encounter unexpected load changes in a voluntary lifting task [19].

The ability to control posture and estimate self-motion depends strongly on the integration of vestibular and proprioceptive information [31–34], and is profoundly disrupted in cerebellar patients [35]. The rFN is ideally situated anatomically to contribute to postural control as it receives descending projections from the anterior vermis of the cerebellum [20] as well as ascending neck proprioceptive input via the central cervical nucleus (CCN) and the external cuneate nucleus [36], and in turn projects to vestibular neurons, reticular formation, and spinal cord [20–23]. Accordingly, our data (Fig. 4 and 5) can account for how the brain selectively responds to adjust postural tone in response to unexpected motion experienced during voluntary motion. Notably, our findings also provide significant insight into the neural mechanisms underlying the suppression of sensory reafference in vestibular and proprioceptive processing. In primates, vestibular afferents do not make the distinction between active and passive motion [e.g., 9, 10]. Similarly, proprioceptive and somatosensory inputs are largely intact during active motion at the level of the periphery and spinal cord [37, 38]. Reafferent signals are however suppressed at subsequent stages of processing. For example, reafferent vestibular input is suppressed at the first central stage of vestibular processing (i.e., neurons in the vestibular nuclei). Prior to this study, the source of the suppression signal required to suppress vestibular afferent input at this first stage of sensory processing was unknown [11, 12]. The rostral fastigial nucleus sends a strong and direct projection to the vestibular nuclei [20–23], and thus we speculate based on our current results that the unimodal neurons of the rFN contribute to the selective processing of exafference in early vestibular processing.

Ultimately, further studies in the cerebellar cortex and deep nuclei are required to provide a deeper understanding of the mechanism underlying the suppression of sensory reafference. An interesting parallel can be found in the electrosensory system of fish, in which the cerebellum-like circuitry computes a negative image of the predicted sensory consequences of the animals’ own behavior to suppress reafference [39, 40]. Interestingly, however, our results show that the rules that govern the cancellation of self-generated vestibular signals in the primate cerebellum differ from those in the fish electrosensory system. In the latter case, a cancellation signal is produced to eliminate self-generated inputs regardless of whether or not the fish’s motor command to elicit an electric organ discharge is actually executed. In contrast, reafference cancellation in the primate cerebellum appears to be characterized by a more sophisticated computation in which the differences between expected and actual sensory inputs are used to selectively modulate sensory processing of self-motion: when motor output is blocked, there is no negative image evident in the firing of rFN neurons (Fig. 3).

The cerebellum is most commonly considered in relation to its contributions to the fine-tuning of motor commands and the sensori-motor calibration required for motor learning. In this context, there is considerable debate whether cerebellar activity is consistent with an inverse or forward model [reviewed in 41]. Support for the latter view is provided by recent evidence that cerebellar Purkinje cell responses encode the predicted consequence of movement, rather than actual movement [42, 43]. The difference between this prediction of the forward model and actual sensory feedback is proposed to drive motor learning. In the current study, we found that neurons in the cerebellar deep nuclei reveal the output of such a computation, namely an explicit estimate of the detailed time course of unexpected sensory input (i.e., sensory prediction error). Notably, however, this signal was encoded in conditions that did not require motor learning. Thus, our results support the view that the output of the cerebellum is consistent with a forward model, and further suggest that this computation underlies the brain’s ability to distinguish between expected and unexpected sensory inputs.

EXPERIMENTAL PROCEDURES

Surgical procedures and data acquisition

All experimental protocols were approved by the McGill University Animal Care Committee and were in compliance with Canadian Council on Animal Care guidelines. Two rhesus monkeys (Macaca mulatta) were prepared for extracellular recording using aseptic surgical techniques as previously described by Brooks and Cullen [24].

During experiments, monkeys trained to follow a target light sat in a primate chair attached to a vestibular turntable. Single-unit activity was recorded using enamel-insulated tungsten microelectrodes, and gaze, head, and body positions were measured using the search coil technique [44, 45]. Target position and motor velocity were controlled on-line [46], and recorded on DAT tape for later playback with concurrent gaze, head, body position, and unit activity. During playback, action potentials were discriminated (BAK), and recorded position and table velocity signals were low-pass filtered at 250 Hz (8 pole anti-aliasing Bessel filter) and sampled at 1 kHz.

Head-restrained paradigms

Passive vestibular (i.e., whole-body rotations) and proprioceptive (body-under-head rotations) stimuli were first applied as described by Brooks and Cullen [24]. Stimuli included: i) 1 Hz sinusoidal (±40°/s) and ii) ‘active-like motion’ trajectories corresponding to those produced during active head-unrestrained gaze shifts (see “Head-Free paradigms” below). Units were classified as bimodal if they responded to proprioceptive stimulation (sensitivity >0.1 (sp/s)/(°/s)), and unimodal if insensitive.

To quantify the integration of vestibular and proprioceptive inputs, neuronal responses were next characterized during head-on-body rotations. A torque motor (Kollmorgen) attached to the monkey’s head delivered sinusoidal and ‘active-like motion’ stimuli. Note that sensitivities to sinusoidal (Brooks and Cullen 2009) and active-like motion (Fig. S1A-3) were not statistically different during passive whole-body, body-under-head, and head-on-body rotation paradigms (p>0.05 for both neuron types during these three paradigms).

Finally, neuronal responses were recorded while each monkey attempted to orient to a target, but its head and body were restrained. The large torques measured during this paradigm (>1 Nm) verified that monkeys generated motor commands that were unrealized due to the restraint.

Head-Free paradigms

After a neuron was fully characterized in the head-restrained condition, the monkey’s head was carefully released to maintain neuronal isolation. Once released, the monkey was able to rotate its head freely in the yaw axis. The same neuron was then recorded while monkeys made voluntary i) eye-head movements (necessitating neck muscle activation) with the body stationary and ii) eye-head-body movements (necessitating both neck and axial muscle activation). Additionally, to allow the characterization of responses to simultaneous voluntary and passive movements, head- and body-unrestrained monkeys were passively rotated (1 Hz, ±40°/s peak velocity).

Analysis of neuronal discharges

Data were imported into the Matlab (The MathWorks) programming environment for analysis, filtering, and processing as previously described [14]. Neural firing rate was represented using a spike density function in which a Gaussian was convolved with the spike train [SD of 5 ms; 47].
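
The spike density estimate described above can be sketched in a few lines. The sketch below is written in Python rather than Matlab and is not the original analysis code; it assumes a binned spike train at the 1 kHz sampling rate given earlier, a Gaussian kernel truncated at ±4 SD and normalized to unit area, and illustrative function names.

```python
import math

def gaussian_kernel(sd_ms, dt_ms=1.0, n_sd=4):
    """Discrete Gaussian kernel, truncated at +/- n_sd standard deviations
    and normalized so that its weights sum to 1."""
    half_width = int(round(n_sd * sd_ms / dt_ms))
    weights = [math.exp(-0.5 * ((k * dt_ms) / sd_ms) ** 2)
               for k in range(-half_width, half_width + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def spike_density(spike_train, sd_ms=5.0, dt_ms=1.0):
    """Convolve a binned spike train (spike counts per dt_ms bin) with a
    Gaussian kernel and return the firing rate in spikes/s."""
    kernel = gaussian_kernel(sd_ms, dt_ms)
    half_width = len(kernel) // 2
    n = len(spike_train)
    rate = [0.0] * n
    for t, count in enumerate(spike_train):
        if count == 0:
            continue
        # Spread each spike over neighbouring bins according to the kernel
        for k, w in enumerate(kernel):
            j = t + k - half_width
            if 0 <= j < n:
                rate[j] += count * w
    bins_per_second = 1000.0 / dt_ms
    return [r * bins_per_second for r in rate]

# Example: a single spike at 50 ms in a 100 ms window sampled at 1 kHz
train = [0] * 100
train[50] = 1
rate = spike_density(train)
```

Because the kernel has unit area, the integral of the resulting rate over time equals the number of spikes whenever the kernel lies fully inside the analysis window.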

We first verified that each neuron neither paused nor burst during saccades, and was unresponsive to changes in eye position during fixation [11, 48]. A least-squares regression analysis was then used to describe each unit’s response to whole-body and body-under-head rotations:

$$\hat{fr}(t) = b + C_{v,i}\,\dot{X}_i(t) + C_{a,i}\,\ddot{X}_i(t) \qquad (1)$$

where $\hat{fr}$ is the estimated firing rate, $b$ is a bias term, $C_{v,i}$ and $C_{a,i}$ are coefficients representing velocity and acceleration sensitivities, respectively, to head (i=1) or body (i=2) motion, and $\dot{X}_i$ and $\ddot{X}_i$ are head (i=1) velocity and acceleration, and body (i=2) velocity and acceleration (during whole-body and body-under-head rotations), respectively.
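
A least-squares fit of this form (a bias term plus velocity and acceleration sensitivities) can be sketched as follows. This is an illustration in Python, not the original Matlab analysis; it solves the normal equations directly, and the stimulus and the "true" coefficients used to generate the synthetic firing rate are hypothetical. Function names are illustrative.

```python
import math

def solve_linear_system(A, y):
    """Solve A x = y by Gaussian elimination with partial pivoting."""
    n = len(y)
    M = [row[:] + [y[i]] for i, row in enumerate(A)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):                      # back substitution
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def fit_rate_model(rate, velocity, acceleration):
    """Least-squares estimate of (b, C_v, C_a) in
    rate ~ b + C_v * velocity + C_a * acceleration."""
    X = [[1.0, v, a] for v, a in zip(velocity, acceleration)]
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(3)] for i in range(3)]
    Xty = [sum(row[i] * r for row, r in zip(X, rate)) for i in range(3)]
    return solve_linear_system(XtX, Xty)

# Synthetic example: 1 Hz sinusoidal rotation, +/-40 deg/s, sampled at 1 kHz
time = [k / 1000.0 for k in range(1000)]
velocity = [40.0 * math.sin(2.0 * math.pi * t) for t in time]
acceleration = [40.0 * 2.0 * math.pi * math.cos(2.0 * math.pi * t) for t in time]

# Hypothetical coefficients used only to generate the synthetic firing rate
true_b, true_cv, true_ca = 50.0, 0.4, 0.01
rate = [true_b + true_cv * v + true_ca * a for v, a in zip(velocity, acceleration)]

b, c_v, c_a = fit_rate_model(rate, velocity, acceleration)
print(b, c_v, c_a)
```

On noise-free synthetic data the fit recovers the generating coefficients; with recorded data the residual variance is nonzero and is what the variance-accounted-for measure summarizes.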

A similar approach was used to estimate sensitivities to passive and active head-on-body movements. In these conditions, neck proprioceptive and vestibular sensitivities cannot be dissociated and are estimated as a single coefficient. Estimated sensitivities were compared to those predicted from the linear summation of the vestibular and proprioceptive sensitivities estimated for the same neuron during passive whole-body and body-under-head rotations, respectively (termed the linear model). During active body movements, where the head and body moved together in space, the data were fit with the estimated vestibular coefficients (eq. 1).

To quantify the ability of the linear regression analysis to model neuronal discharges, the variance-accounted-for (VAF) for each regression equation was determined as previously described [14]. Values are expressed as mean ± SEM, and Student’s t-tests were used to assess differences between conditions.
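The goodness-of-fit and summary statistics mentioned above can be sketched as below. The text does not spell out the VAF formula, so the form used here, 1 − var(observed − estimated) / var(observed), is an assumption based on the common definition; the function names and the sample values are hypothetical.

```python
import math
import statistics

def vaf(observed, estimated):
    """Variance-accounted-for, assuming VAF = 1 - var(residual) / var(observed)."""
    residual = [o - e for o, e in zip(observed, estimated)]
    return 1.0 - statistics.pvariance(residual) / statistics.pvariance(observed)

def mean_sem(values):
    """Mean and standard error of the mean (sample SD divided by sqrt(n))."""
    return statistics.mean(values), statistics.stdev(values) / math.sqrt(len(values))

# Hypothetical firing rates (spikes/s) and model estimates for one neuron.
observed = [10.0, 20.0, 30.0, 40.0]
estimated = [12.0, 18.0, 32.0, 38.0]
fit_quality = vaf(observed, estimated)

# Hypothetical VAF values across a small population of neurons.
population_mean, population_sem = mean_sem([0.9, 0.8, 0.7])
```

Under this definition a VAF of 1 indicates a perfect fit, and values near 0 indicate that the model explains little of the variance in firing rate.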

Supplementary Material

HIGHLIGHTS

- The cerebellum distinguishes the exafferent and reafferent effects of self-motion.

- Cerebellar output neurons dynamically encode sensory prediction errors in monkeys.

- During self-motion, neurons provide an explicit representation of unexpected motion.

- The neuronal computation ensures accurate spatial orientation and postural control.

Acknowledgments:

We thank M. Jamali, D. Mitchell and A. Dale for critically reading this manuscript and S. Nuara and W. Kucharski for excellent technical assistance.

This research was supported by FQRNT (J.X.B.), CIHR (K.E.C.), as well as NIH grant DC002390 (K.E.C.).

References

- 1. Wolpert DM, Miall RC, and Kawato M (1998). Internal models in the cerebellum. Trends Cogn Sci 2, 338–347.

- 2. Krakauer JW, and Mazzoni P Human sensorimotor learning: adaptation, skill, and beyond. Curr Opin Neurobiol 21, 636–644.

- 3. Mazzoni P, and Krakauer JW (2006). An implicit plan overrides an explicit strategy during visuomotor adaptation. J Neurosci 26, 3642–3645.

- 4. Wong AL, and Shelhamer M Exploring the fundamental dynamics of error-based motor learning using a stationary predictive-saccade task. PLoS One 6, e25225.

- 5. Taylor JA, Klemfuss NM, and Ivry RB An explicit strategy prevails when the cerebellum fails to compute movement errors. Cerebellum 9, 580–586.

- 6. Tseng YW, Diedrichsen J, Krakauer JW, Shadmehr R, and Bastian AJ (2007). Sensory prediction errors drive cerebellum-dependent adaptation of reaching. J Neurophysiol 98, 54–62.

- 7. Galea JM, Vazquez A, Pasricha N, de Xivry JJ, and Celnik P Dissociating the roles of the cerebellum and motor cortex during adaptive learning: the motor cortex retains what the cerebellum learns. Cereb Cortex 21, 1761–1770.

- 8. Davidson PR, and Wolpert DM (2003). Motor learning and prediction in a variable environment. Curr Opin Neurobiol 13, 232–237.

- 9. Cullen KE, and Minor LB (2002). Semicircular canal afferents similarly encode active and passive head-on-body rotations: implications for the role of vestibular efference. J Neurosci 22, RC226.

- 10. Jamali M, Sadeghi SG, and Cullen KE (2009). Response of vestibular nerve afferents innervating utricle and saccule during passive and active translations. J Neurophysiol 101, 141–149.

- 11. Roy JE, and Cullen KE (2001). Selective processing of vestibular reafference during self-generated head motion. J Neurosci 21, 2131–2142.

- 12. Roy JE, and Cullen KE (2004). Dissociating self-generated from passively applied head motion: neural mechanisms in the vestibular nuclei. J Neurosci 24, 2102–2111.

- 13. Sperry R (1950). Neural basis of the spontaneous optokinetic response produced by visual inversion. J Comp Physiol Psychol 43, 482–489.

- 14. von Holst E, and Mittelstaedt H (1950). Das Reafferenzprinzip. Naturwissenschaften 37, 464–476.

- 15. Jeannerod M, Kennedy H, and Magnin M (1979). Corollary discharge: its possible implications in visual and oculomotor interactions. Neuropsychologia 17, 241–258.

- 16. Sawtell NB, and Bell CC (2008). Adaptive processing in electrosensory systems: links to cerebellar plasticity and learning. J Physiol Paris 102, 223–232.

- 17. Bauswein E, Kolb FP, Leimbeck B, and Rubia FJ (1983). Simple and complex spike activity of cerebellar Purkinje cells during active and passive movements in the awake monkey. J Physiol 339, 379–394.

- 18. Blakemore SJ, Frith CD, and Wolpert DM (1999). Spatio-temporal prediction modulates the perception of self-produced stimuli. J Cogn Neurosci 11, 551–559.

- 19. Jenmalm P, Schmitz C, Forssberg H, and Ehrsson HH (2006). Lighter or heavier than predicted: neural correlates of corrective mechanisms during erroneously programmed lifts. J Neurosci 26, 9015–9021.

- 20. Batton RR 3rd, Jayaraman A, Ruggiero D, and Carpenter MB (1977). Fastigial efferent projections in the monkey: an autoradiographic study. J Comp Neurol 174, 281–305.

- 21. Homma Y, Nonaka S, Matsuyama K, and Mori S (1995). Fastigiofugal projection to the brainstem nuclei in the cat: an anterograde PHA-L tracing study. Neurosci Res 23, 89–102.

- 22. Carleton SC, and Carpenter MB (1983). Afferent and efferent connections of the medial, inferior and lateral vestibular nuclei in the cat and monkey. Brain Res 278, 29–51.

- 23. Shimazu H, and Smith CM (1971). Cerebellar and labyrinthine influences on single vestibular neurons identified by natural stimuli. J Neurophysiol 34, 493–508.

- 24. Brooks JX, and Cullen KE (2009). Multimodal integration in rostral fastigial nucleus provides an estimate of body movement. J Neurosci 29, 10499–10511.

- 25. Shaikh AG, Meng H, and Angelaki DE (2004). Multiple reference frames for motion in the primate cerebellum. J Neurosci 24, 4491–4497.

- 26. Gardner EP, and Fuchs AF (1975). Single-unit responses to natural vestibular stimuli and eye movements in deep cerebellar nuclei of the alert rhesus monkey. J Neurophysiol 38, 627–649.

- 27. Angelaki DE, Yakusheva TA, Green AM, Dickman JD, and Blazquez PM (2010). Computation of egomotion in the macaque cerebellar vermis. Cerebellum 9, 174–182.

- 28. Barmack NH, and Yakhnitsa V Topsy turvy: functions of climbing and mossy fibers in the vestibulo-cerebellum. Neuroscientist 17, 221–236.

- 29. Miall RC, Weir DJ, Wolpert DM, and Stein JF (1993). Is the cerebellum a Smith predictor? J Mot Behav 25, 203–216.

- 30. Blakemore SJ, Wolpert DM, and Frith CD (1999). The cerebellum contributes to somatosensory cortical activity during self-produced tactile stimulation. Neuroimage 10, 448–459.

- 31. Mergner T, Anastasopoulos D, Becker W, and Deecke L (1981). Discrimination between trunk and head rotation; a study comparing neuronal data from the cat with human psychophysics. Acta Psychol (Amst) 48, 291–301.

- 32. Mergner T, Nardi GL, Becker W, and Deecke L (1983). The role of canal-neck interaction for the perception of horizontal trunk and head rotation. Exp Brain Res 49, 198–208.

- 33. Mergner T, Siebold C, Schweigart G, and Becker W (1991). Human perception of horizontal trunk and head rotation in space during vestibular and neck stimulation. Exp Brain Res 85, 389–404.

- 34. Horak FB, Shupert CL, Dietz V, and Horstmann G (1994). Vestibular and somatosensory contributions to responses to head and body displacements in stance. Exp Brain Res 100, 93–106.

- 35. Kammermeier S, Kleine J, and Buttner U (2009). Vestibular-neck interaction in cerebellar patients. Ann N Y Acad Sci 1164, 394–399.

- 36. Voogd J, Gerrits NM, and Ruigrok TJ (1996). Organization of the vestibulocerebellum. Ann N Y Acad Sci 781, 553–579.

- 37. Jones KE, Wessberg J, and Vallbo AB (2001). Directional tuning of human forearm muscle afferents during voluntary wrist movements. J Physiol 536, 635–647.

- 38. Seki K, and Fetz EE (2012). Gating of sensory input at spinal and cortical levels during preparation and execution of voluntary movement. J Neurosci 32, 890–902.

- 39. Bell CC, Han V, and Sawtell NB (2008). Cerebellum-like structures and their implications for cerebellar function. Annu Rev Neurosci 31, 1–24.

- 40. Requarth T, and Sawtell NB (2011). Neural mechanisms for filtering self-generated sensory signals in cerebellum-like circuits. Curr Opin Neurobiol 21, 602–608.

- 41. Medina JF The multiple roles of Purkinje cells in sensori-motor calibration: to predict, teach and command. Curr Opin Neurobiol 21, 616–622.

- 42. Pasalar S, Roitman AV, Durfee WK, and Ebner TJ (2006). Force field effects on cerebellar Purkinje cell discharge with implications for internal models. Nat Neurosci 9, 1404–1411.

- 43. Hewitt AL, Popa LS, Pasalar S, Hendrix CM, and Ebner TJ (2011). Representation of limb kinematics in Purkinje cell simple spike discharge is conserved across multiple tasks. J Neurophysiol 106, 2232–2247.

- 44. Fuchs AF, and Robinson DA (1966). A method for measuring horizontal and vertical eye movement chronically in the monkey. J Appl Physiol 21, 1068–1070.

- 45. Judge SJ, Richmond BJ, and Chu FC (1980). Implantation of magnetic search coils for measurement of eye position: an improved method. Vision Res 20, 535–538.

- 46. Hayes AV, Richmond BJ, and Optican LM (1982). A UNIX-based multiple process system for real-time data acquisition and control. Western Electronic Show and Conference Proceedings 2, 1–10.

- 47. Cullen KE, Rey CG, Guitton D, and Galiana HL (1996). The use of system identification techniques in the analysis of oculomotor burst neuron spike train dynamics. J Comput Neurosci 3, 347–368.

- 48. Roy JE, and Cullen KE (1998). A neural correlate for vestibulo-ocular reflex suppression during voluntary eye-head gaze shifts. Nat Neurosci 1, 404–410.
