Abstract

Healthcare providers who use peripheral vascular and cardiac ultrasound require specialized training to develop the technical and interpretive skills necessary to perform accurate diagnostic tests. Assessment of competence is a critical component of training that documents a learner’s progress and is a requirement for competency-based medical education (CBME) as well as specialty certification or credentialing. The use of simulation for CBME in diagnostic ultrasound is particularly appealing since it incorporates both the psychomotor and cognitive domains while eliminating dependency on the availability of live patients with a range of pathology. However, successful application of simulation in this setting requires realistic, full-featured simulators and appropriate standardized metrics for competency testing. The principal diagnostic parameter in peripheral vascular ultrasound is measurement of peak systolic velocity (PSV) on Doppler spectral waveforms, and simulation of Doppler flow detection presents unique challenges. The computer-based duplex ultrasound simulator developed at the University of Washington uses computational fluid dynamics modeling and presents real-time color-flow Doppler images and Doppler spectral waveforms along with the corresponding B-mode images. This simulator provides a realistic scanning experience that includes measuring PSV in various arterial segments and applying actual diagnostic criteria. Simulators for echocardiography have been available since the 1990s and are currently more advanced than those for peripheral vascular ultrasound. Echocardiography simulators are now offered for both transesophageal echo and transthoracic echo. These computer-based simulators have 3D graphic displays that provide feedback to the learner and metrics for assessment of technical skill that are based on transducer tracking data. Such metrics provide a motion-based or kinematic analysis of skill in performing cardiac ultrasound. 
The use of simulation in peripheral vascular and cardiac ultrasound can provide a standardized and readily available method for training and competency assessment.

Keywords: Doppler ultrasound, duplex scanning, echocardiography, medical education, simulation

Introduction

In the past three decades, medical education has undergone two revolutions that arose in different disciplines but converge on a common goal: improving patient safety, an effort spurred by revelations of medical error.1 The need to focus on competency-based medical education (CBME) was voiced in 1978 by McGaghie in a World Health Organization report: ‘The intended output of a competency-based program is a health professional who can practice medicine at a defined level of proficiency, in accord with local conditions, to meet local needs’.2 At about the same time, simulation technology, which was initially developed for aviation and industrial safety, was adapted for medical applications.3 This review reports on the use of simulation to enable CBME in diagnostic ultrasound of blood vessels and the heart.

Competency-based medical education (Table 1)

Table 1.

Competency-based medical education.


Methods suggested by Accreditation Council for Graduate Medical Education (ACGME) for assessment of medical procedures:5


To evaluate competence based on proficiency rather than case volume it is necessary to define competence and describe how it should be assessed. The Accreditation Council for Graduate Medical Education (ACGME) defined six core competencies common to all programs, developed detailed Milestones to be achieved in each specialty and subspecialty program, and provided a collection of assessment tools to assist program directors in implementing the core competencies.4,5 Organizations in other countries worked in parallel.6

Assessment may be summative (judging a candidate’s ability to perform for certification or promotion) or formative (providing feedback on progress, accomplishment, and areas needing further practice). In addition to providing feedback to learners and progress reports to program directors, assessment metrics are valuable for assessing or comparing new curricula. In diagnostic ultrasound, assessment must encompass technical (psychomotor) skill in image acquisition in addition to cognitive skill in image interpretation. There are many tools for assessing cognitive skill,7 but technical skill competency has always been difficult to assess in a manner that is objective and reproducible between instructors when there is no standardized assessment method. Instead, certification has traditionally been, and still is, based on faculty observation of performance. The ACGME ranked several evaluation methods for performance of medical procedures.5 The methods have advantages as well as limitations.8–16 For diagnostic ultrasound the pertinent question is how best to assess technical skill in image acquisition.

Simulation (Table 2)

Table 2.

Advantages of simulation for competency-based medical education.


The ACGME requires simulation in multiple specialties due to its many important advantages in medical training, which include enabling practice in a safe environment and providing exposure to rare conditions that might not be encountered clinically.17–20 Since the Agency for Healthcare Research and Quality’s report in 2007 that simulation is especially effective for training in psychomotor skills,21 many studies have confirmed that simulation with deliberate practice is more effective in improving skills than the traditional ‘watch one, do one, teach one’ approach, and improves clinical outcomes.22,23

Simulation is also a very useful tool for assessment. During training, incorporation of metrics for competency testing can provide formative assessment or feedback and thereby help trainees reach proficiency. The ability to provide feedback was cited as a key educational feature of simulation in two reviews.24,25 This is an important advantage of simulation because feedback leads to the most effective learning and ‘appears to slow the decay of acquired skills’.24 Frequent feedback was recommended as a key component of CBME.2 Collection of skill metric data can inform program directors of the rate at which trainees reach competence in specific procedures.26 Training time can be increased in areas where poorly answered questions reflect potential learning needs. Skill metrics have shown that some trainees may never achieve competence.27 Simulation may also assist in training to proficiency, which is advocated by some educators because the traditional approach penalizes fast learners.28

Simulation provides an opportunity to perform rigorous competency testing on cases that have suitable pathology and are standardized not only for all trainees in a program, but for all trainees being considered for certification within a specialty. A survey by the Association of American Medical Colleges in 2011 found that most medical schools and teaching hospitals use simulation for education and/or assessment of psychomotor tasks.29 However, the employment of simulation for summative assessment or certification, credentialing, and accreditation remains in its infancy despite the clear theoretical advantages. ‘Only two specialty boards, anesthesiology and surgery, have required the use of simulation for primary certification.’30 Some authors criticize validity evidence for simulation-based assessments as being sparse and suboptimal.31 However, 28 studies that evaluated associations between simulator-based performance and performance with real patients showed that ‘higher simulator scores were associated with higher performance in clinical practice’.31

Simulation of vascular Doppler ultrasound

The need for skills assessment

In most applications of medical ultrasound the diagnosis is made from two-dimensional (2D) B-mode (gray scale) images alone. However, classification of disease severity in peripheral vascular applications is based primarily on alterations in velocities and flow patterns detected in the imaged vessels. In duplex ultrasound scanning, these flow patterns are characterized by Doppler spectral waveforms and color-flow imaging.32 The criteria for classification of arterial stenosis are based on peak systolic velocity (PSV) obtained from the Doppler spectral waveforms.33

Because the Doppler flow detector can only measure the component of velocity that is parallel to the ultrasound beam, the true velocity is calculated by assuming a flow-to-insonation angle. Accurate velocity calculation is therefore dependent on proper imaging technique by the examiner. Errors in Doppler velocity measurements can be introduced and exacerbated by improper alignment of the angle cursor, incorrect placement of the Doppler sample volume within the vessel lumen, utilizing too large a Doppler angle, or changing the Doppler angle between examinations.32 Since duplex ultrasound interpretation criteria for stenosis are based primarily on velocities, errors in the Doppler velocity measurements can lead to errors in diagnosis and treatment. The sensitivity of carotid duplex scanning in grading stenosis is only 65% to 71%.34 One study reported erroneous assignment of 15 of 35 lesions to non-surgical intervention solely on the basis of incorrect Doppler insonation angle.35 These findings are of particular concern because Doppler ultrasound is used as the primary, and sometimes the only, diagnostic test for vascular pathology.36
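The dependence of velocity error on the Doppler angle can be made concrete with the standard Doppler equation, v = c·Δf / (2·f0·cos θ). The following is a minimal sketch and is not taken from the cited studies; the function names, the 1540 m/s soft-tissue sound speed, and the example angles are illustrative assumptions.

```python
import math

SPEED_OF_SOUND_M_S = 1540.0  # conventional soft-tissue value; an assumption here


def velocity_from_shift(shift_hz, transmit_hz, angle_deg, c=SPEED_OF_SOUND_M_S):
    """Angle-corrected velocity (m/s) from the standard Doppler equation."""
    return c * shift_hz / (2.0 * transmit_hz * math.cos(math.radians(angle_deg)))


def velocity_error_fraction(true_angle_deg, assumed_angle_deg):
    """Fractional velocity error when the angle cursor is set to
    assumed_angle_deg but the true flow-to-beam angle is true_angle_deg."""
    true_cos = math.cos(math.radians(true_angle_deg))
    assumed_cos = math.cos(math.radians(assumed_angle_deg))
    # The measured shift scales with cos(true); the correction divides by cos(assumed).
    return true_cos / assumed_cos - 1.0


# Hypothetical example: the same 5-degree cursor error at two Doppler angles.
error_at_30 = velocity_error_fraction(30.0, 35.0)
error_at_60 = velocity_error_fraction(60.0, 65.0)
print(f"{error_at_30:.1%} at 30 degrees, {error_at_60:.1%} at 60 degrees")
```

Under these assumed angles, the same 5-degree cursor error overestimates velocity by roughly 6% at 30 degrees and roughly 18% at 60 degrees, which illustrates why a large Doppler angle amplifies errors in technique.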

Skilled use of Doppler ultrasound by sonographers, physicians, and nurses for evaluating vascular access sites is an essential component of caring for patients on hemodialysis.37 Duplex ultrasound, which displays the B-mode tissue image together with Doppler spectral waveforms and color-flow, plays a major role in minimizing the morbidity and costs of hemodialysis access by determining the adequacy of arterial inflow and venous outflow, assessing fistula maturation, and determining the reasons for maturation failure.38 Duplex scanning can also diagnose problems related to maintenance of fistulas, including arterial steal, pseudoaneurysms, and stenoses in prosthetic or native venous conduits.38–40 Accurate assessment of dialysis access fistula patency is important because as many as 60% of access sites require an intervention within 1 year to maintain functional patency.41,42

Several studies have highlighted the crucial need for improved training methods in Doppler ultrasound. The Intersocietal Accreditation Commission (IAC, formerly ICAVL) reported that up to 35% of the applications for vascular laboratory accreditation demonstrated improper Doppler angle correction techniques.43 Stenosis classification error has been linked to lack of experience in Doppler ultrasound,44 and clinical management decisions differed between examiners in 45% of severe internal carotid artery stenoses.45 Accredited vascular laboratories that adhere to training and practice guidelines have been shown to perform better than non-accredited laboratories in terms of accuracy of treatment decisions. Stanley showed that IAC-accredited vascular laboratories correlated 83% of the time with reference studies performed at a central site, while facilities without IAC accreditation had only 45% correlation.46 Disagreement in the examination outcome indicated cases for which an incorrect treatment decision would have been made based solely on the non-accredited laboratory examination. Similarly, Brown et al. found that 35% of vascular examinations from non-accredited vascular laboratories had clinically significant errors when compared with reference studies at an accredited facility.47 These variations in practice outcome underline the need to not only improve the initial training of physicians and sonographers but also to monitor skill retention in the clinical setting.

Current Doppler ultrasound simulation technology (Table 3)

Table 3.

Current Doppler ultrasound simulators with skill metrics.

| Type | Doppler source | Metrics |
|---|---|---|
| Flow phantom | Real ultrasound machine | |
| Mannequin with mock transducer | Generated in real time from the computational flow model as the mannequin is scanned | |

There are several types of vascular ultrasound simulators that provide representations of the color-flow Doppler images and Doppler spectral waveforms with varying realism. Flow phantoms have a tissue-mimicking substrate with one or more vessels connected to a pump containing blood-mimicking fluid. An advantage for training is that the learner can acquire skill and experience with the operation (sometimes referred to as ‘knobology’) of the duplex ultrasound system because the phantom is examined with an actual duplex scanner. These simulation products can also be used to practice ultrasound-guided vascular access. However, flow phantoms present vascular flow abnormalities in an idealized fashion, and the number of cases is limited by the need for a separate physical model for each flow abnormality.

Some commercial vascular ultrasound simulators provide case review from recorded images when a mannequin is scanned (UltraSim, MedSim, Inc., Kfar Sava, Israel; SonoSim, SonoSim Inc., Santa Monica, CA). One product includes a simulated ultrasound machine for training in ‘knobology’ (MedSim, Inc.). Another product enables hands-on practice without a mannequin, either by tracking the mock transducer alone as it is moved in the air, or by tracking the transducer on a live subject instrumented with skin tags (SonoSim, Inc.). None of these vascular ultrasound simulators measures technical skill.

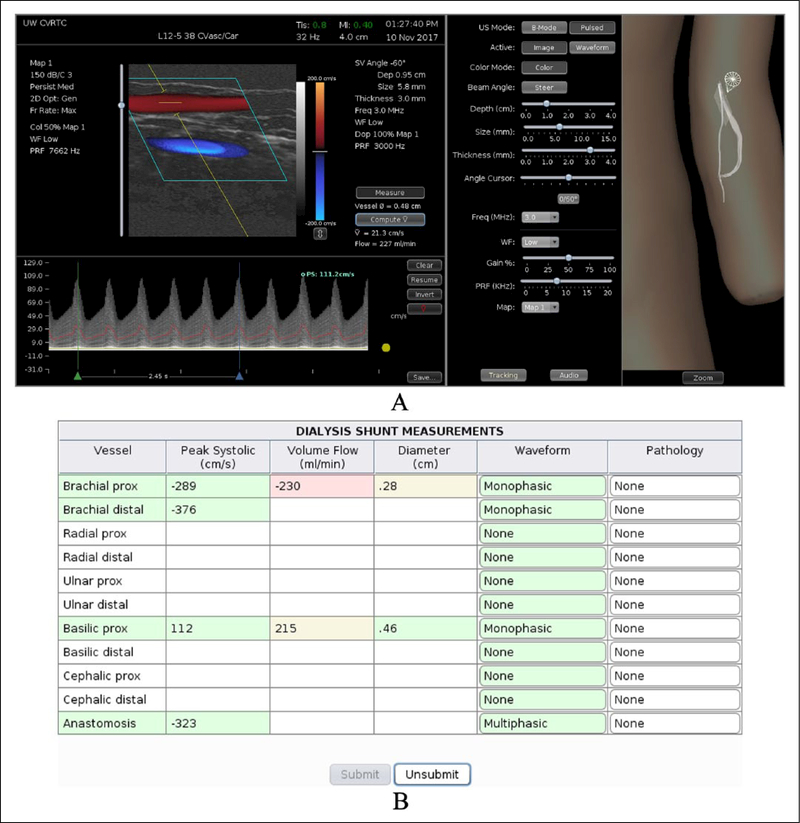

The University of Washington and Sheehan Medical LLC (Mercer Island, WA, USA) jointly developed a computer-based duplex ultrasound simulator that presents real-time color-flow Doppler images and Doppler spectral waveforms along with the corresponding B-mode images. The hardware consists of a personal computer, a mannequin, and a mock transducer whose spatial location and orientation are measured using a tracking device. As the examiner scans a mannequin the computer displays real patient B-mode images that morph according to the position and orientation of the mock transducer. The simulated B-mode images are derived from a three-dimensional (3D) volume of ultrasound image data previously acquired from a normal volunteer or patient. The vessel is reconstructed as a 3D lumen volume and computational fluid dynamics modeling is used to populate the lumen with time-varying velocity vectors that define the blood flow at all points within the vessel. This velocity field is sampled along with the image data to create a color-flow and spectral waveform display that responds in real time to the control panel settings selected by the examiner, including beam steering, pulse repetition frequency, Doppler angle, and sample volume size and depth (Figure 1).

Figure 1.

Computer display of the interface for the duplex ultrasound simulator developed at the University of Washington showing an examination of a dialysis access fistula in the left arm. (A) Left panel: duplex display showing the B-mode image with color-flow Doppler on top and Doppler spectral waveforms on the bottom. The Doppler spectral waveform has been acquired at a beam angle of 60 degrees and the cursor has been placed on the waveform for measurement of peak systolic velocity. Middle panel: controls for the ultrasound display mode and Doppler settings (including Doppler beam angle, sample volume depth, sample volume size, and PRF or scale). Right panel: the 3D display shows the locations of the transducer (‘star’ and cone) and B-mode image (translucent rectangle) on the vessel in the mannequin; this display can be zoomed. (B) Report page. The learner performs a scan and measures velocities that are entered on this table. When the learner ‘submits’ his/her answers, the cells are colored to indicate whether each response is correct (green), close (yellow), or definitely wrong (red). As the mouse is hovered over each cell, the correct value is displayed for feedback. In practice mode, the learner can ‘unsubmit’ the answers and try again. (Note: figure is in color online.)

Technical skill is not assessed from the measured PSV alone, because the correct value could be obtained despite incorrect technique. Instead, the trainee is presented with case studies on which his/her skill is assessed based on correctly identifying vessel anatomy, selecting appropriate ultrasound parameter settings, measuring blood flow velocity (and, when indicated, measuring volume flow), and making the diagnosis. The validity of the simulation has been demonstrated based on the accuracy of blood flow velocity measurements by examiners compared to the correct values in the flow model. When three experts made 36 PSV measurements on two carotid artery models, the mean deviation from the actual PSV was 8 ± 5%, and was similar between vessel segments and between examiners.48 Measurements of PSV in dialysis access shunt models had similar accuracy.49 These results demonstrate that an examiner can measure PSV from the spectral waveforms using the settings on the simulator with a mean absolute error in the velocity measurement of less than 10%.
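The deviation statistic quoted above can be computed in a few lines when the reference velocities are known, as they are in a computational flow model. This is a minimal sketch only; the function name and the PSV values are hypothetical and are not data from the cited validation studies.

```python
from statistics import mean, stdev


def percent_deviations(measured, reference):
    """Absolute deviation of each measurement from its reference value, in percent."""
    if len(measured) != len(reference):
        raise ValueError("measured and reference must have the same length")
    return [abs(m - r) / r * 100.0 for m, r in zip(measured, reference)]


# Hypothetical PSV values in cm/s, for illustration only (not study data).
model_psv = [100.0, 250.0, 400.0, 80.0]
examiner_psv = [92.0, 265.0, 380.0, 84.0]

deviations = percent_deviations(examiner_psv, model_psv)
summary = (mean(deviations), stdev(deviations))  # mean and sample SD
print(f"mean deviation {summary[0]:.1f}% (SD {summary[1]:.1f}%)")
```

Because the reference values come from the model rather than from a second examiner, a statistic of this kind measures absolute accuracy rather than inter-observer agreement.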

The simulator developed at the University of Washington provides an absolute measure of examiner performance because the blood velocity values are known at all locations in the computer models. This is not possible with the scanning of live subjects or even with flow phantoms because their true velocity values are not precisely known. With the addition of cases with a range of pathologies, this duplex ultrasound simulator may become a useful tool for training healthcare providers in vascular ultrasound applications and for assessing their skills in an objective and quantitative manner.

Simulation of cardiac ultrasound

The need for skills assessment

In the past, echocardiography (echo) was only performed by cardiologists with the assistance of cardiac sonographers. With the advent of low-cost, portable ultrasound machines, physicians in many specialties are adopting bedside or ‘point of care’ ultrasound, and medical schools and residencies are now adding ultrasound training to their curricula.50,51 Because physicians performing a focused cardiac ultrasound (FoCUS) examination acquire the images themselves rather than relying on a sonographer, they require hands-on training to develop the requisite skill. Assessment during training is essential to provide feedback, since frequent feedback is one of the pillars of CBME.2 Indeed, the American Society of Echocardiography (ASE) guidelines recommend that a portion of hands-on studies be proctored in real time to provide this important feedback.52

Assessment of competence is important because ‘Optimization of cardiac views is critical to obtaining a correct diagnosis’.52 There is concern that diagnostic ultrasound will be substandard when performed by practitioners with only brief training compared with dedicated ultrasound physicians or experienced sonographers.53–55 Recent ASE guidelines emphasize the breadth of the experience, that the trainees have ‘acquired and interpreted ultrasound images that represent the full range of diagnostic possibilities’, rather than just the number of patients scanned.56 At the time these guidelines were published (2013), objective metrics of competence in cardiac ultrasound were just being developed.

Current cardiac ultrasound simulation technology (Table 4)

Table 4.

Current echocardiography simulators with skill metrics.

| Image source | Mode | Feedback to trainee | Metrics |
|---|---|---|---|
| Synthetic | TEE | Visual | |
| Patients | TEE and TTE | Quantitative and visual | |

TEE, transesophageal echocardiography; TTE, transthoracic echocardiography.

Echo simulators, which typically consist of a mannequin, mock transducer, and computer, were first marketed in the 1990s, before computer technology could provide realistic assessment of technical skill employing simulation. Modern echo simulators offer 3D graphic displays to assist the learner in visualizing the relationship between the transducer position, the location of the image sector in the heart’s anatomy, and the resulting 2D image. In January 2017, one of us (FHS) invited each simulation vendor to submit publications on research utilizing their echo simulators for skills assessment for inclusion in a presentation at the American Institute of Ultrasound in Medicine. The replies of vendors who responded are presented here. There are three echo simulators with the technology necessary to measure the technical skill of the examiner: one simulates transthoracic echo (TTE) and two simulate transesophageal echo (TEE). All of them generate skill metrics using transducer tracking data collected during scanning. The simulators differ in realism of the images, with some displaying synthetic images and some displaying real patient images.

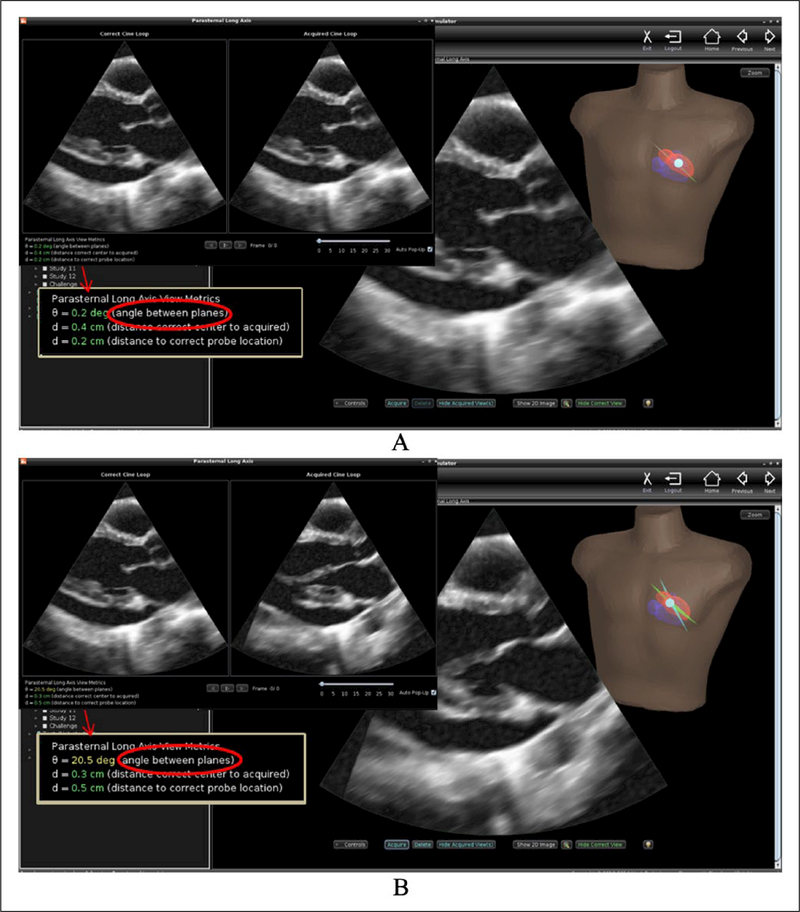

Investigators at the University of Washington were the first to develop an echo simulator with metrics of technical skill. Working initially with a graphic interface intended for tele-ultrasound applications, they showed that novices performed significantly better at visualizing specified anatomy with rather than without the visual guidance afforded by 3D reconstruction of the organ being imaged.57 In the echo simulator, numeric as well as visual feedback is provided to trainees (Figure 2). As the mock transducer is manipulated over the mannequin, the computer displays ultrasound images from a previously acquired 3D data set in a 2D view that changes in real time appropriately for the transducer’s position and orientation. The anatomically correct plane can be displayed during training as a guide. When the trainee ‘acquires’ a cine loop, its plane is also displayed for comparison, and the trainee’s technical skill is measured in terms of the angular deviation between his/her acquired view plane and the correct plane.58 The metric was validated by demonstrating its ability to discriminate trained novices from experts, and is applicable to TEE as well as TTE training.59
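An angular deviation between two view planes can be sketched as the angle between their normal vectors. The code below is an illustration under stated assumptions and is not the simulator's implementation: the function name is hypothetical, each plane is represented only by a normal vector, and the planes are treated as unoriented so the result falls between 0 and 90 degrees.

```python
import math


def angular_deviation_deg(acquired_normal, correct_normal):
    """Angle in degrees between two planes, each given by a normal vector."""
    dot = sum(a * c for a, c in zip(acquired_normal, correct_normal))
    norm_a = math.sqrt(sum(a * a for a in acquired_normal))
    norm_c = math.sqrt(sum(c * c for c in correct_normal))
    if norm_a == 0.0 or norm_c == 0.0:
        raise ValueError("plane normals must be non-zero")
    # abs() ignores which side of the plane the normal points to (an assumption);
    # min() guards against rounding slightly above 1.0 before acos.
    cosine = min(1.0, abs(dot) / (norm_a * norm_c))
    return math.degrees(math.acos(cosine))


# Hypothetical example: the acquired plane is tilted 20 degrees about the x axis.
tilt = math.radians(20.0)
correct = (0.0, 0.0, 1.0)
acquired = (0.0, math.sin(tilt), math.cos(tilt))
deviation = angular_deviation_deg(acquired, correct)
print(f"angular deviation: {deviation:.1f} degrees")
```

A single angle of this kind does not capture translation of the image plane, so a practical metric would likely combine it with other measures.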

Figure 2.

User interface for the echocardiography simulator developed at the University of Washington. (A) The image ‘acquired’ by the trainee appears in a dual display (upper inset) and appears side by side and beating in synchrony with the anatomically correct view. The angular deviation and other metrics are displayed for additional feedback (arrow indicates the enlarged metrics in the inset). This image was very close to correct. (B) Acquisition of a poorly positioned image in which the aortic valve is obscured and the left ventricle is foreshortened; note the difference in visualization of anatomy and the magnitude of the angular deviation.

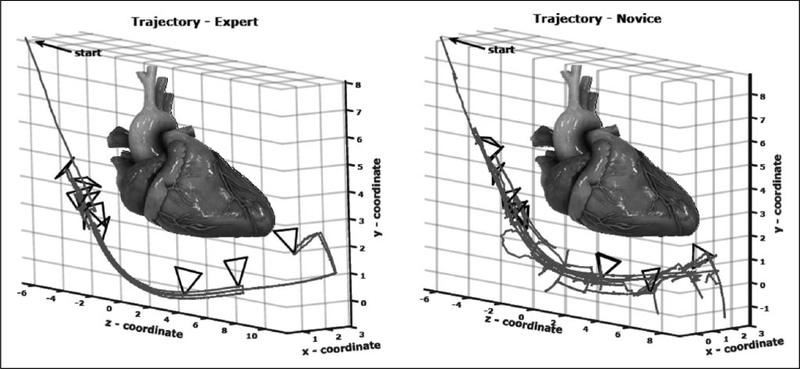

Both the CAE Healthcare (Sarasota, FL, USA) and HeartWorks (Inventive Medical Ltd, London, UK; recently acquired by Medaphor) simulators perform kinematic or motion-based analysis of TEE performance. CAE’s metrics measure the smoothness and fluidity of transducer manipulation. Five of their metrics differed significantly between experts and untrained novices on univariate analysis; two (peak movement and path length) showed independent discriminatory value.60 HeartWorks’ metrics ‘represent economy and efficiency … as well as motion smoothness and fluidity’ (Figure 3). Six of their eight metrics showed significant differences between experts and untrained novices.61
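Kinematic metrics of this kind are derived from the sequence of tracked transducer positions. The sketch below shows two simple examples; it is not either vendor's algorithm. The function names are hypothetical, and the definition of peak movement as the largest displacement between consecutive tracking samples is an assumption made here for illustration.

```python
import math


def step_lengths(positions):
    """Euclidean distance between each pair of consecutive tracked positions."""
    return [math.dist(a, b) for a, b in zip(positions, positions[1:])]


def path_length(positions):
    """Total distance travelled by the probe tip over the recording."""
    return sum(step_lengths(positions))


def peak_movement(positions):
    """Largest single displacement between consecutive samples (assumed definition)."""
    steps = step_lengths(positions)
    return max(steps) if steps else 0.0


# Hypothetical probe-tip positions in millimetres, for illustration only.
samples = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0), (3.0, 4.0, 12.0)]
total = path_length(samples)
peak = peak_movement(samples)
print(f"path length {total:.1f} mm, peak movement {peak:.1f} mm")
```

A shorter path and smaller peak displacements for the same task would be consistent with the more economical, smoother probe handling that these metrics are intended to reward.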

Figure 3.

Kinematic metrics of skill in transesophageal echo (TEE) in the HeartWorks simulator.61 Probe tip trajectory and depth of the transesophageal echo probe are smoother and more fluid for the expert than for the novice. Reproduced from ref. 61 (© Springer International Publishing Switzerland 2016) with permission of Springer.

In summary, metrics for simulator-based assessment of technical skill have been developed and validated for both TTE and TEE. All three of these simulators display 3D graphic feedback, and all provide metrics that can be applied to inform program directors of trainee progress. The simulator developed at the University of Washington is the only one to provide immediate quantitative feedback to learners.

Conclusion

In medical ultrasound, accuracy of diagnosis is critically dependent on the skill of the examiner. Thus, a standardized and readily available method for competency assessment provides an important link between efforts to improve patient safety and the need for better training of health professionals. Simulator-based competency testing in diagnostic ultrasound is likely to contribute to the paradigm change in medical education referred to as CBME because it can: (1) permit quantitative and objective assessment of skill competence for training, certification, and continuing education; (2) promote standardization of examinations; (3) remove the dependency of testing and training on availability of live patients; and (4) improve training efficiency by giving immediate and frequent feedback to the trainee and by informing the instructor on areas of inadequacy.

Simulators have been developed for both vascular and cardiac ultrasound applications. For peripheral vascular ultrasound, the measurement of flow velocities is critical for diagnosis, and realistic display of Doppler spectral waveforms presents unique technical challenges. Flow phantoms allow use of a standard duplex ultrasound system but depict flow abnormalities in an idealized manner that does not provide a realistic scanning experience. The computer-based duplex ultrasound simulator developed at the University of Washington uses a 3D model of the vessel lumen and computational fluid dynamics to create a realistic color-flow Doppler image and Doppler spectral waveforms. At this time, simulators for echocardiography are generally more advanced than those for peripheral vascular ultrasound. Computer-based echocardiography simulators are available that offer 3D graphic displays that provide feedback to the learner as well as metrics for assessment of technical skill.

Acknowledgments

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: supported in part by funding from the Edward J Stemmler Medical Education Research Fund, the Wallace H Coulter Foundation, the John L Locke Jr Charitable Trust, the National Institute of Biomedical Imaging and Bioengineering, and the National Institute of Environmental Health Sciences, Bethesda, MD, USA.

Footnotes

Declaration of conflicting interests

The authors declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: Dr Sheehan is the founder of VentriPoint, Inc., of which she is a major equity holder. VentriPoint markets a product for measuring right heart function, which is not the subject of the present report. Dr Sheehan is also the Founder and President of Sheehan Medical LLC, which markets the transthoracic echocardiography (TTE) simulator that she and co-investigators developed and validated at the University of Washington. Dr Sheehan supports research in medical education by lending TTE simulators from her laboratory at the University of Washington to investigators for up to 6 months. Neither the co-author of the present report nor the University of Washington has any involvement in Sheehan Medical LLC, and neither receives any benefit from simulator sales.

References

- 1. Institute of Medicine. To Err is Human: Building a Safer Health System. Washington, DC: National Academy Press, 1999.

- 2. McGaghie WC, Sajid AW, Miller GE, et al. Competency-Based Curriculum Development in Medical Education. Geneva: World Health Organization, 1978, p.91.

- 3. Satava RM. Historical review of surgical simulation: A personal perspective. World J Surg 2007; 32: 141–148.

- 4. Accreditation Council for Graduate Medical Education. Outcome Project: http://njms.rutgers.edu/culweb/medical/documents/ToolboxofAssessmentMethods.pdf (2000, accessed 14 January 2018).

- 5. Accreditation Council for Graduate Medical Education and American Board of Medical Specialties Joint Initiative. ACGME Competencies: Suggested Best Methods for Evaluation. Attachment/ Toolbox of Assessment Methods: https://www.partners.org/Assets/Documents/Graduate-Medical-Education/ToolTable.pdf (2000, accessed 14 January 2018).

- 6. Frank JR, Snell LS, Ten Cate FJ, et al. Competency-based medical education: Theory to practice. Med Teach 2010; 32: 638–645.

- 7. Epstein RM. Assessment in medical education. N Engl J Med 2007; 356: 387–396.

- 8. Bahner DP, Adkins EJ, Nagel R, et al. Brightness Mode Quality Ultrasound Imaging Examination Technique (B-QUIET): Quantifying quality in ultrasound imaging. J Ultrasound Med 2011; 30: 1649–1655.

- 9. Nielsen DG, Gotzsche L, Eika B. Objective structured assessment of technical competence in transthoracic echocardiography: A validity study in a standardised setting. BMC Med Educ 2013; 13: 47.

- 10. Sohmer B, Hudson C, Hudson J, et al. Transesophageal echocardiography simulation is an effective tool in teaching psychomotor skills to novice echocardiographers. Can J Anaesth 2014; 61: 235–241.

- 11. Goff BA, Lentz GM, Lee D, et al. Structured assessment of technical skills for obstetric and gynecology residents. Obstet Gynecol 2000; 96: 146–150.

- 12. Martin JA. Objective structured assessment of technical skill (OSATS) for surgical residents. Br J Surg 1997; 84: 273–278.

- 13. Hodges B, Regehr G, McNaughton N, et al. OSCE checklists do not capture increasing levels of expertise. Acad Med 1999; 74: 1129–1134.

- 14. Van Hove PD, Tuijthof GJM, Verdaasdonk EGG, et al. Objective assessment of technical surgical skills. Br J Surg 2010; 97: 972–987.

- 15. Ilgen JS, Ma IWY, Hatala R, et al. A systematic review of validity evidence for checklists versus global rating scales in simulation-based assessment. Med Educ 2015; 49: 161–173.

- 16. American College of Emergency Physicians. Emergency ultrasound guidelines. Ann Emerg Med 2009; 53: 550–570.

- 17.Accreditation Council for Graduate Medical Education. Program requirements for resident education in internal medicine: https://www.acgme.org/Portals/0/PFAssets/ProgramRequirements/140_internal_medicine_2017-07-01.pdf (2017, accessed 14 January 2018).

- 18.Accreditation Council for Graduate Medical Education. Program requirements for graduate medical education in cardiovascular disease: https://www.acgme.org/Portals/0/PFAssets/ProgramRequirements/141_cardiovascular_disease_2017-07-01.pdf (2017, accessed 14 January 2018).

- 19.Accreditation Council for Graduate Medical Education. Program requirements for graduate medical education in general surgery: https://www.acgme.org/Portals/0/PFAssets/ProgramRequirements/440_general_surgery_2017-07-01.pdf (2017, accessed 14 January 2018).

- 20.Gregoratos G, Miller AB. 30th Bethesda Conference: The future of academic cardiology: Task Force 3: Teaching. J Am Coll Cardiol 1999; 33: 1120–1127. [PubMed] [Google Scholar]

- 21.Marinopoulos SS, Dorman T, Ratanawongsa N, et al. Effectiveness of continuing medical education. Evidence Report/Technology Assessment No. 149 (prepared by the Johns Hopkins Evidence-based Practice Center, under Contract No. 290–02-0018). AHRQ Publication No. 07-E006. Rockville, MD: Agency for Healthcare Research and Quality, January 2007. [Google Scholar]

- 22.McGaghie WC, Issenberg SB, Cohen ER, et al. Does simulation-based medical education with deliberate practice yield better results than traditional clinical education? A meta-analytic comparative review of the evidence. Acad Med 2011; 86: 706–711.

- 23.McGaghie WC, Draycott TJ, Dunn WF, et al. Evaluating the impact of simulation on translational patient outcomes. Simul Healthc 2011; 6(Suppl): S42–S47.

- 24.Issenberg SB, McGaghie WC, Petrusa ER, et al. Features and uses of high-fidelity medical simulations that lead to effective learning: A BEME systematic review. Med Teach 2005; 27: 10–28.

- 25.Cook DA, Hamstra SJ, Brydges R, et al. Comparative effectiveness of instructional design features in simulation-based education: Systematic review and meta-analysis. Med Teach 2013; 35: e867–e898.

- 26.Barrington MJ, Wong DM, Slater B, et al. Ultrasound-guided regional anesthesia: How much practice do novices require before achieving competency in ultrasound needle visualization using a cadaveric model. Reg Anesth Pain Med 2012; 37: 334–339.

- 27.Grantcharov TP, Funch-Jensen P. Can everyone achieve proficiency with the laparoscopic technique? Learning curve patterns in technical skills acquisition. Am J Surg 2009; 197: 447–449.

- 28.Grantcharov TP, Reznick RK. Training tomorrow’s surgeons: What are we looking for and how can we achieve it? Aust N Z J Surg 2009; 79: 104–107.

- 29.Passiment M, Sacks H, Huang G. Medical simulation in medical education: Results of an AAMC survey, https://www.aamc.org/download/259760/data (2011, accessed 30 January 2014).

- 30.Levine AI, Schwartz AD, Bryson EO, et al. Role of simulation in U.S. physician licensure and certification. Mt Sinai J Med 2012; 79: 140–153.

- 31.Cook DA, Brydges R, Zendejas B, et al. Technology-enhanced simulation to assess health professionals: A systematic review of validity evidence, research methods, and reporting quality. Acad Med 2013; 88: 872–883.

- 32.Beach KW, Bergelin RO, Leotta DF, et al. Standardized ultrasound evaluation of carotid stenosis for clinical trials: University of Washington Ultrasound Reading Center. Cardiovasc Ultrasound 2010; 8: 39.

- 33.Beach KW, Leotta DF, Zierler RE. Carotid Doppler velocity measurements and anatomic stenosis: Correlation is futile. Vasc Endovascular Surg 2012; 46: 466–474.

- 34.NASCET: North American Symptomatic Carotid Endarterectomy Trial Steering Committee. North American Symptomatic Carotid Endarterectomy Trial: Methods, patient characteristics, and progress. Stroke 1991; 22: 711–720.

- 35.Logason K, Baerlin T, Jonsson ML, et al. The importance of Doppler angle of insonation on differentiation between 50–69% and 70–99% carotid artery stenosis. Eur J Vasc Endovasc Surg 2001; 21: 311–313.

- 36.Grant EG, Benson CB, Moneta GL, et al. Carotid artery stenosis: Gray-scale and Doppler US diagnosis – Society of Radiologists in Ultrasound Consensus Conference. Radiology 2003; 229: 340–346.

- 37.Grogan J, Castilla M, Lozanski L, et al. Frequency of critical stenosis in primary arteriovenous fistulae before hemodialysis access: Should duplex ultrasound surveillance be the standard of care? J Vasc Surg 2005; 41: 1000–1006.

- 38.Dossabhoy NR, Ram SJ, Nassar R, et al. Stenosis surveillance of hemodialysis grafts by duplex ultrasound reduces hospitalizations and costs of care. Semin Dial 2005; 18: 550–557.

- 39.Back MR, Bandyk DF. Current status of surveillance of hemodialysis access grafts. Ann Vasc Surg 2001; 15: 491–502.

- 40.Sidawy AN, Spergel LM, Besarab A, et al. The Society for Vascular Surgery: Clinical practice guidelines for the surgical placement and maintenance of arteriovenous hemodialysis access. J Vasc Surg 2008; 48: 2S–25S.

- 41.Gibson KD, Gillen DL, Caps MT, et al. Vascular access survival and incidence of venous transposition fistulas from the United States Renal Data System Dialysis Morbidity and Mortality Study. J Vasc Surg 2001; 34: 694–700.

- 42.Lauvao LS, Ihnat DM, Goshima KR, et al. Vein diameter is the major predictor of fistula maturation. J Vasc Surg 2009; 49: 1499–1504.

- 43.DeJong MR. Developing angle correction methods for the laboratory. ICAVL Newsletter, 2000, Spring Issue.

- 44.Khaw KT. Does carotid duplex imaging render angiography redundant before carotid endarterectomy? Br J Radiol 1997; 70: 235–238.

- 45.Mead GE, Lewis SC, Wardlaw JM. Variability in Doppler ultrasound influences referral of patients for carotid surgery. Eur J Ultrasound 2000; 12: 137–143.

- 46.Stanley DG. The importance of Intersocietal Commission for the Accreditation of Vascular Laboratories (ICAVL) certification for noninvasive peripheral vascular tests: The Tennessee experience. J Vasc Ultrasound 2004; 28: 65–69.

- 47.Brown OW, Bendick PJ, Bove PG, et al. Reliability of extracranial carotid artery duplex ultrasound scanning: Value of vascular laboratory accreditation. J Vasc Surg 2004; 39: 366–371.

- 48.Zierler RE, Leotta DF, Sansom K, et al. Development of a duplex ultrasound simulator and preliminary validation of velocity measurements in carotid artery models. Vasc Endovascular Surg 2016; 50: 309–316.

- 49.Zierler RE, Leotta DF, Sansom K, et al. Simulation of duplex ultrasound for assessment of dialysis access sites (abstr.). J Ultrasound Med 2017; 36(Suppl): S62–S63.

- 50.Bahner DP, Goldman E, Way D, et al. The state of ultrasound education in U.S. medical schools: Results of a national survey. Acad Med 2014; 89: 1681–1686.

- 51.Hall JWW, Holman H, Bornemann P, et al. Point of care ultrasound in family medicine residency programs. Fam Med 2015; 47: 706–711.

- 52.Spencer KT, Kimura BJ, Korcarz CE, et al. Focused cardiac ultrasound: Recommendations from the American Society of Echocardiography. J Am Soc Echocardiogr 2013; 26: 567–581.

- 53.Melamed R, Sprenkle MD, Ulstad VK, et al. Assessment of left ventricular function by intensivists using hand-held echocardiography. Chest 2009; 135: 1416–1420.

- 54.Martin LD, Howell EE, Ziegelstein RC, et al. Hospitalist performance of cardiac hand-carried ultrasound after focused training. Am J Med 2007; 120: 1000–1004.

- 55.DeCara JM, Lang RM, Koch R, et al. The use of small personal ultrasound devices by internists without formal training in echocardiography. Eur J Echocardiogr 2003; 4: 141–147.

- 56.Labovitz AJ, Noble V, Bierig M, et al. Focused cardiac ultrasound in the emergent setting: A consensus statement of the American Society of Echocardiography and American College of Emergency Physicians. J Am Soc Echocardiogr 2010; 23: 1225–1230.

- 57.Sheehan FH, Ricci MA, Murtagh C, et al. Expert visual guidance of ultrasound for telemedicine. J Telemed Telecare 2010; 16: 77–82.

- 58.King DL, Harrison MR, King DL Jr, et al. Ultrasound beam orientation during standard two-dimensional imaging: Assessment by three-dimensional echocardiography. J Am Soc Echocardiogr 1992; 5: 569–576.

- 59.Sheehan FH, Otto CM, Freeman RV. Echo simulator with novel training and competency testing tools. Stud Health Technol Inform 2013; 184: 397–403.

- 60.Matyal R, Mitchell JD, Hess PE, et al. Simulator-based transesophageal echocardiographic training with motion analysis. Anesthesiology 2014; 121: 389–399.

- 61.Mazomenos EB, Vasconcelos F, Smelt J, et al. Motion-based technical skills assessment in transoesophageal echocardiography. In: Zheng G, Liao H, Jannin P, et al. (eds) Medical Imaging and Augmented Reality. MIAR 2016. Lecture Notes in Computer Science, vol 9805. Cham: Springer, pp. 97–103.

- 62.Leung W-C. Competency based medical training: Review. BMJ 2002; 325: 693–696.