Abstract

Bayesian models have advanced the idea that humans combine prior beliefs and sensory observations to optimize behavior. How the brain implements Bayes-optimal inference, however, remains poorly understood. Simple behavioral tasks suggest that the brain can flexibly represent probability distributions. An alternative view is that the brain relies on simple algorithms that can implement Bayes-optimal behavior only when the computational demands are low. To distinguish between these alternatives, we devised a task in which Bayes-optimal performance could not be matched by simple algorithms. We asked subjects to estimate and reproduce a time interval by combining prior information with one or two sequential measurements. In the domain of time, measurement noise increases with duration. This property takes the integration of multiple measurements beyond the reach of simple algorithms. We found that subjects were able to update their estimates using the second measurement but their performance was suboptimal, suggesting that they were unable to update full probability distributions. Instead, subjects’ behavior was consistent with an algorithm that predicts upcoming sensory signals, and applies a nonlinear function to errors in prediction to update estimates. These results indicate that the inference strategies employed by humans may deviate from Bayes-optimal integration when the computational demands are high.

Introduction

Sensorimotor control depends on accurate estimation of internal state variables1–5. Numerous experiments have used Bayesian estimation theory to demonstrate that humans estimate internal states by integrating multiple sources of information including prior beliefs and sensory cues from various modalities6–16. Bayesian estimation is typically formulated in terms of three components: prior distributions representing a priori beliefs about state variables, likelihood functions derived from noisy sensory measurements, and cost functions that characterize reward contingencies17. In this formulation, the likelihood function and prior distribution are combined to compute a posterior distribution and the cost function is used to extract an estimate that maximizes expected reward. This formulation is the basis of most psychophysical studies of Bayesian integration9–15,18–20.

Implicit in this formulation is the assumption that the brain has access to priors, likelihoods, and cost functions. Access to these quantities is appealing as it could support rapid and optimal state estimation without the need to learn new policies for novel behavioral contexts21,22. However, in most experiments, Bayes-optimal behavior can also be achieved by simpler algorithms that do not depend on direct access to likelihoods, priors and cost functions21–23. For example, optimal cue combination in the presence of Gaussian noise may be implemented by a weighted sum of measurements6. Similarly, integration of noisy evidence with prior beliefs may be implemented by a suitable functional mapping between measurements and estimates24,25. Finally, online estimation of a variable from sequential measurements that are subject to Gaussian noise can be achieved by a Kalman filter that only keeps track of the mean and variance26 without representing and updating the full posterior distribution.

In contrast to simple laboratory tasks, optimal inference in natural settings is often intractable and involves approximations that may deviate from optimality27. Therefore, it is critical to go beyond statements of optimality and suboptimality, and assess the inference algorithms humans use during sensorimotor and cognitive tasks28,29. Further, as articulated by Marr30, characterization of the underlying algorithms could establish a bridge between behaviorally relevant computations and neurobiological mechanisms.

We devised an experiment in which the computational demands for optimal Bayesian estimation were incompatible with simple algorithmic solutions. Subjects had to reproduce an interval by integrating their prior belief with one or two measurements of the interval. Several previous experiments have reported a decrease in perceptual or motor variability when subjects are given multiple intervals to measure31–40, but the underlying algorithms are not characterized. An important constraint for developing a suitable algorithmic model is that noise associated with measurement and production of time intervals scales with duration41. A consequence of this so-called scalar property of noise is that simple algorithms that only update certain parameters of the posterior (e.g., mean and/or variance) cannot emulate Bayes-optimal behavior. Therefore, optimal behavior in this paradigm would provide strong evidence that the underlying inference algorithm involves updating probability distributions. Conversely, suboptimal behavior would suggest that subjects rely on a simpler algorithm. We found that when subjects made two measurements their performance was suboptimal. Furthermore, comparison of behavior with various models indicated that subjects relied on an inference algorithm that used measurements to update point estimates using point nonlinearities.

Results

Subjects integrate interval measurements with prior knowledge

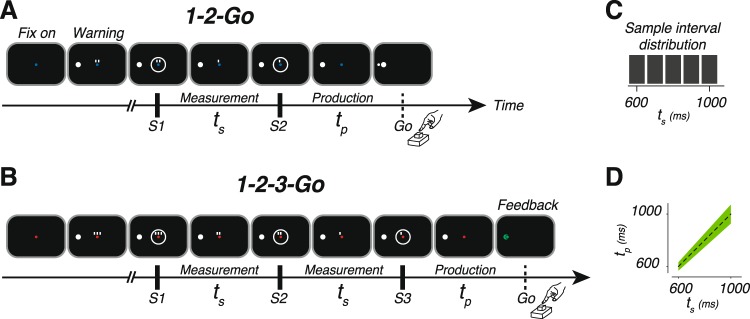

Subjects performed an interval reproduction task consisting of two randomly interleaved trial types (Fig. 1A,B). In “1-2-Go” trials, two flashes (S1 followed by S2) demarcated a sample interval (ts). Subjects had to reproduce ts immediately after S2. The interval between the onset of S2 and when the keyboard was pressed was designated as the production interval (tp). In “1-2-3-Go” trials, ts was presented twice, demarcated once by S1 and S2 and once by S2 and S3, providing the opportunity to make two measurements (Fig. 1B). Similar to 1-2-Go, subjects had to match tp (the interval between S3 and keyboard press) to ts. Across trials, ts was drawn from a discrete uniform distribution ranging between 600 and 1000 ms (Fig. 1C). Subjects received two forms of trial-by-trial feedback based on the magnitude and sign of the error. First, a feedback stimulus was presented whose location relative to the warning stimulus reflected the magnitude and sign of the error (Fig. 1A,B; see Methods). Second, if the error exceeded a threshold window (Fig. 1D), stimuli remained white and a tone denoting incorrect response was presented. Otherwise, the stimuli turned green and a tone denoting correct was presented. The threshold window for correct performance was proportionally larger for longer ts to accommodate the scalar variability of timing due to signal-dependent noise34,42–46. The threshold was adjusted adaptively and on a trial-by-trial basis according to performance (see Methods).

Figure 1.

The 1-2-Go and 1-2-3-Go interval reproduction task. (A,B) Task design. Each trial began with the appearance of a fixation spot (Fix on). The color of the fixation spot informed the subject of the trial type: blue for 1-2-Go, and red for 1-2-3-Go. After a random delay, a warning stimulus (large white circle) appeared. Additionally, two or three small white rectangles were presented above the fixation spot. The number of rectangles was associated with the number of upcoming flashes. After another random delay, two (S1 and S2 for 1-2-Go) or three (S1, S2 and S3 for 1-2-3-Go) white annuli were flashed for 100 ms in a sequence around the fixation spot. Consecutive flashes were separated by the duration of the sample interval (ts). With the disappearance of each flash, one of the small rectangles also disappeared (rightmost first and leftmost last). The white rectangles were provided to help subjects keep track of events during the trial. Subjects had to press a button after the last flash to produce an interval (tp) that matched ts. Immediately after button press, subjects received feedback. The feedback was a small circle that was presented to the left or right of the warning stimulus depending on whether tp was larger or smaller than ts, respectively. The distance of the feedback circle to the center of the warning stimulus was proportional to the magnitude of the error (tp − ts). (C) Experimental distribution of sample intervals. (D) Feedback. Subjects received positive feedback if production times fell within the green region. The width of the positive feedback window was scaled with ts.

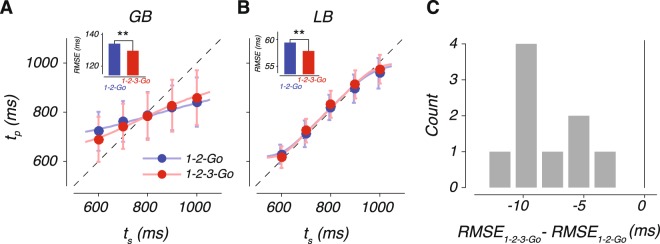

Subjects’ timing behavior exhibited three characteristic features (Fig. 2). First, tp increased monotonically with ts. Second, tp was systematically biased toward the mean of the prior, as evident from the tendency of responses to deviate from ts (diagonal) and gravitate toward the mean ts. As proposed previously24,47–49, this so-called regression to the mean indicated that subjects relied on their knowledge of the prior distribution of ts. Third, performance was better in the 1-2-3-Go condition, in which subjects made two measurements, as evidenced by a lower root-mean-square error (RMSE) in the 1-2-3-Go condition than in the 1-2-Go condition (Fig. 2C; permutation test; p-value < 0.01 for all subjects; see Supplementary Table 1 for a summary of RMSE data by subject and condition). This observation indicates that subjects combined the two measurements to improve their estimates by decreasing variability and systematic biases, corroborating reports from other behavioral paradigms31–40,50,51. Combined with the systematic bias toward the mean of ts, these results indicated that subjects integrated prior information with one or two measurements to improve their performance.

Figure 2.

Performance in the interval reproduction task. (A) Production interval (tp) as a function of sample interval (ts) for a low sensitivity subject (GB). Filled circles and error bars show the mean and standard deviation of tp for each ts in the 1-2-Go (blue) and 1-2-3-Go (red) conditions. The dotted unity line represents perfect performance and the colored lines show the expected tp from a Bayes Least-Squares (BLS) model fit to the data. Inset: root-mean-square error (RMSE) in the 1-2-Go (blue) and 1-2-3-Go (red) conditions differed significantly (asterisk, p-value < 0.01; permutation test). (B) Same as (A) for a high sensitivity subject (LB). (C) The histogram of changes in RMSE across conditions for all subjects. See also Supplementary Table 1.

A Bayesian model of behavior

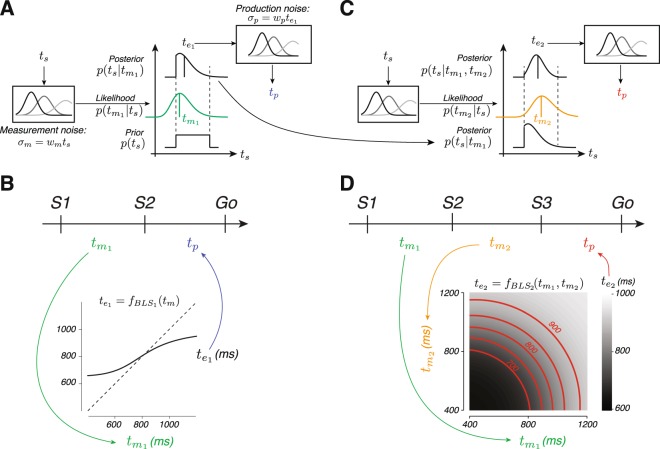

Building on previous work11,18,22,52, we asked whether subjects’ behavior could be accounted for by a Bayesian observer model based on the Bayes Least-Squares (BLS) estimator. For the 1-2-Go trials, the observer model (1) makes a noisy measurement of $t_s$, which we denote by $t_{m_1}$, (2) combines the likelihood function associated with $t_{m_1}$, $p(t_{m_1} \mid t_s)$, with the prior distribution of $t_s$, $p(t_s)$, to compute the posterior, $p(t_s \mid t_{m_1})$, and (3) uses the mean of the posterior as the optimal estimate, $t_{e_1}$. We modeled $p(t_{m_1} \mid t_s)$ as a Gaussian distribution centered at $t_s$ with standard deviation, $\sigma_m$, proportional to $t_s$ with constant of proportionality, $w_m$; i.e., $\sigma_m = w_m t_s$ (Fig. 3A, left box). We assumed that the production process was also perturbed by noise and modeled $t_p$ as a sample from a Gaussian distribution centered at $t_{e_1}$ with standard deviation, $\sigma_p$, proportional to $t_{e_1}$ with constant of proportionality, $w_p$; i.e., $\sigma_p = w_p t_{e_1}$ (Fig. 3A, right box). Note that the entire operation of the BLS estimator can be described in terms of a deterministic mapping of $t_{m_1}$ to $t_{e_1}$ using a nonlinear function, which we denote as $f_{BLS_1}(t_{m_1})$ (Fig. 3B)24.
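The single-measurement BLS mapping described above can be sketched numerically. This is a minimal illustration, not the authors' fitting code. It assumes a continuous uniform prior on 600 to 1000 ms approximated on a grid (the experiment drew intervals from a discrete uniform distribution), and the function name `bls_estimate` and the example value `w_m = 0.1` are hypothetical.

```python
import numpy as np

def bls_estimate(t_m, w_m, t_min=600.0, t_max=1000.0, n_grid=401):
    """Posterior mean of the sample interval given one measurement t_m.

    Assumption: uniform prior on [t_min, t_max], approximated on a grid.
    """
    t_s = np.linspace(t_min, t_max, n_grid)    # candidate sample intervals
    sigma_m = w_m * t_s                        # scalar noise: sd proportional to t_s
    # Likelihood p(t_m | t_s) viewed as a function of t_s. The 1/sqrt(2*pi)
    # constant is dropped because it cancels in the normalization below.
    likelihood = np.exp(-0.5 * ((t_m - t_s) / sigma_m) ** 2) / sigma_m
    posterior = likelihood / likelihood.sum()  # flat prior: posterior is the normalized likelihood
    return float(np.sum(t_s * posterior))      # BLS estimate = posterior mean
```

Evaluating `bls_estimate` over a range of measurements traces out a sigmoidal-looking curve that stays inside the prior range, which is the regression toward the mean discussed in the text.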

Figure 3.

BLS model of interval integration. (A) BLS model for 1-2-Go trials. The left panel illustrates the measurement process. The measured interval, $t_{m_1}$, is perturbed by zero-mean Gaussian noise whose standard deviation is proportional to the sample interval, $t_s$, with constant of proportionality $w_m$ ($\sigma_m = w_m t_s$). The middle panel illustrates the estimation process. The model multiplies the likelihood function associated with $t_{m_1}$ (middle panel, green) with the prior (bottom), and uses the mean of the posterior (top) to derive an interval estimate ($t_{e_1}$, black vertical line on the posterior). The right panel illustrates the production process. The produced interval, $t_p$, is perturbed by zero-mean Gaussian noise with standard deviation proportional to $t_{e_1}$, with constant of proportionality $w_p$ ($\sigma_p = w_p t_{e_1}$). (B) The effective mapping function ($f_{BLS_1}$, black curve) from the first measurement, $t_{m_1}$, to the optimal estimate, $t_{e_1}$. The dashed line indicates unity. (C) BLS model for 1-2-3-Go trials. The model uses the posterior after the first measurement, $p(t_s \mid t_{m_1})$, as the prior and combines it with the likelihood of the second measurement ($p(t_{m_2} \mid t_s)$, orange) to compute an updated posterior, $p(t_s \mid t_{m_1}, t_{m_2})$. The mean of the updated posterior is taken as the interval estimate ($t_{e_2}$). (D) The effective mapping function ($f_{BLS_2}$, grayscale) from each combination of measurements, $t_{m_1}$ and $t_{m_2}$, to the optimal estimate, $t_{e_2}$. Red lines indicate combinations of measurements that lead to identical estimates (shown for 700, 750, 800, 850, and 900 ms).

For the 1-2-3-Go trials, the observer model (1) makes two measurements, $t_{m_1}$ and $t_{m_2}$, (2) combines the likelihood, $p(t_{m_1}, t_{m_2} \mid t_s)$, with the prior, $p(t_s)$, to compute the posterior, $p(t_s \mid t_{m_1}, t_{m_2})$, and (3) uses the mean of the posterior to derive an optimal estimate, $t_{e_2}$. When the measurements are conditionally independent, the posterior is proportional to $p(t_{m_1} \mid t_s)\,p(t_{m_2} \mid t_s)\,p(t_s)$, which, up to a normalizing constant, can be rewritten as $p(t_{m_2} \mid t_s)\,p(t_s \mid t_{m_1})$. This revised formulation can be interpreted in terms of an updating strategy in which the observer uses the posterior after one measurement, $p(t_s \mid t_{m_1})$, as the prior for the second measurement (Fig. 3C; see Methods). In these trials, the mapping from $t_{m_1}$ and $t_{m_2}$ to $t_{e_2}$ can be described in terms of a two-dimensional nonlinear function, denoted by $f_{BLS_2}(t_{m_1}, t_{m_2})$ (Fig. 3D). Note that the iso-estimate contours of $f_{BLS_2}$ are nonlinear and convex (Fig. 3D, red). The nonlinearity indicates that the effect of $t_{m_1}$ and $t_{m_2}$ on $t_{e_2}$ is non-separable, and the convexity indicates that $t_{e_2}$ is more strongly influenced by the larger of the two measurements. These features are direct consequences of scalar noise and are not present when measurements are perturbed by signal-independent Gaussian noise (see Appendix). Note that the BLS model for the 1-2-3-Go task reduces RMSE by reducing both prior-induced biases and variability, as previous studies have reported31–40,50,51.
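The equivalence between joint and sequential integration of two measurements can be checked numerically. The sketch below is illustrative only and rests on assumptions not taken from the paper's Methods: a continuous uniform prior on 600 to 1000 ms approximated on a grid, and hypothetical function names (`likelihood`, `bls2_joint`, `bls2_sequential`).

```python
import numpy as np

# Grid over the support of the (assumed continuous) uniform prior, in ms.
T_S = np.linspace(600.0, 1000.0, 401)

def likelihood(t_m, w_m):
    """p(t_m | t_s) on the grid, with sd proportional to t_s (scalar noise)."""
    sigma_m = w_m * T_S
    return np.exp(-0.5 * ((t_m - T_S) / sigma_m) ** 2) / sigma_m

def bls2_joint(t_m1, t_m2, w_m):
    """Posterior mean from the product of both likelihoods and a flat prior."""
    post = likelihood(t_m1, w_m) * likelihood(t_m2, w_m)
    post = post / post.sum()
    return float(T_S @ post)

def bls2_sequential(t_m1, t_m2, w_m):
    """Same estimate, using the first posterior as the prior for the second update."""
    prior2 = likelihood(t_m1, w_m)
    prior2 = prior2 / prior2.sum()            # posterior after the first measurement
    post = likelihood(t_m2, w_m) * prior2     # update with the second likelihood
    post = post / post.sum()
    return float(T_S @ post)
```

Under these assumptions the two functions agree to numerical precision. Comparing `bls2_joint(700, 900, w_m)` with `bls2_joint(800, 800, w_m)` also shows that two measurements with the same average need not yield the same estimate, which is what the curved iso-estimate contours express.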

We fit the model to each subject’s data assuming that responses in both 1-2-Go and 1-2-3-Go conditions were associated with the same wm and wp (Methods). The model was augmented in two ways to ensure that estimates of wm and wp were accurate. First, we included an offset parameter to absorb interval-independent biases (e.g., consistently pressing the button too early or too late). Second, trials in which tp grossly deviated from ts were designated as “lapse” trials (see Methods).

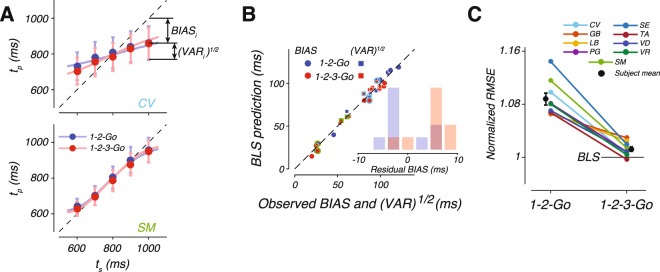

Model fits captured subjects’ behavior for both conditions as shown by a few representative subjects (Figs 2A,B, 4A; see Supplementary Fig. 1 for fits to all the subjects). Following previous work24, we evaluated model fits using two statistics, an overall bias, BIAS, and an overall variability, $\sqrt{\mathrm{VAR}}$ (see Methods). As shown in Fig. 4B, the model broadly captured the bias and variance for all subjects in both 1-2-Go and 1-2-3-Go conditions. We did not find any systematic difference in $\sqrt{\mathrm{VAR}}$ between the model and data (see Supplementary Fig. 2). In contrast, the observed BIAS was significantly larger than predicted by the model fits in the 1-2-3-Go condition (Fig. 4B, inset; two tailed t-test, t(8) = 4.6982, p-value = 0.0015), but not in the 1-2-Go condition (two tailed t-test, t(8) = −0.3236, p-value = 0.7546).
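Summary statistics of this kind can be computed as sketched below. The exact definitions are given in the Methods, which are not reproduced here, so this sketch assumes the convention of the cited earlier work: overall bias is the root mean square of the per-interval biases, and overall variance is the mean of the per-interval variances. The function name `bias_var_rmse` is hypothetical.

```python
import numpy as np

def bias_var_rmse(t_s, t_p):
    """Return (BIAS, sqrt(VAR), RMSE) for paired sample and production intervals.

    Assumed definitions (not confirmed by the text above):
      BIAS = sqrt(mean over sample intervals of (mean t_p - t_s)^2)
      VAR  = mean over sample intervals of the variance of t_p
    """
    t_s = np.asarray(t_s, dtype=float)
    t_p = np.asarray(t_p, dtype=float)
    intervals = np.unique(t_s)
    # Per-interval bias and (population) variance of the produced intervals.
    biases = np.array([t_p[t_s == s].mean() - s for s in intervals])
    variances = np.array([t_p[t_s == s].var() for s in intervals])
    bias = float(np.sqrt(np.mean(biases ** 2)))
    sqrt_var = float(np.sqrt(np.mean(variances)))
    rmse = float(np.sqrt(np.mean((t_p - t_s) ** 2)))
    return bias, sqrt_var, rmse
```

With these definitions and an equal number of trials per sample interval, RMSE² equals BIAS² + VAR, so a change in RMSE can be attributed to a change in bias, in variability, or in both.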

Figure 4.

BLS model fits to data. (A) Behavior of two subjects and the corresponding BLS model fits with the same format as in Fig. 2A,B. (B) BIAS (circles) and $\sqrt{\mathrm{VAR}}$ (squares) of each subject (abscissa) and the corresponding values computed from simulations of the fitted BLS model (ordinate). Blue and red points correspond to 1-2-Go and 1-2-3-Go, respectively. The dotted line plots unity. Data points corresponding to subjects SM and CV are marked by light green and light blue, respectively. Inset: difference between the BIAS observed from data and that expected by the BLS model fit for 1-2-Go (blue) and 1-2-3-Go (red) conditions. (C) Comparison of behavioral performance to model predictions. Each line connects the RMSE for 1-2-Go (left) and 1-2-3-Go (right) conditions for one subject. To facilitate comparison across subjects, RMSE values for each subject were normalized by the RMSE of the BLS model in the 1-2-3-Go condition. The black circles and error bars correspond to the mean and standard error of the normalized RMSE across subjects. See also Supplementary Figs 1, 2, and 3.

We quantified this observation across subjects by normalizing each subject’s RMSE in the 1-2-Go and 1-2-3-Go conditions to the RMSE expected from the BLS model in the 1-2-3-Go condition (Fig. 4C). We found that the observed RMSE in the 1-2-3-Go condition was significantly larger than expected (two tailed t-test, t(8) = 3.5484, p-value = 0.007). Further, the drop in observed RMSE in the 1-2-3-Go was significantly less than expected by the BLS model (see Supplementary Fig. 3). These analyses indicate that subjects were able to integrate the two measurements but failed to optimally update the posterior by the likelihood information associated with the second measurement.

Our original BLS model assumed that the noise statistics for $t_{m_1}$ and $t_{m_2}$ were identical. However, for two reasons, the noise statistics of the two measurements may differ. First, the internal representation of the first measurement may be subject to additional noise since it has to be held longer in working memory. Second, after the first measurement is made, subjects may be able to benefit from anticipatory and attentional mechanisms to make a more accurate second measurement. Both possibilities can be straightforwardly modeled by a modified BLS model that accommodates different levels of noise for the two measurements. Therefore, we also formulated an optimal estimator, BLSmem, in which the two measurements were associated with different noise statistics (see Methods, Supplementary Fig. 4). Despite having additional parameters, BLSmem failed to capture the BIAS observed from data in 1-2-3-Go trials (two-tailed t-test, t(8) = 4.9690, p-value = 0.0011; Supplementary Fig. 4).

An algorithmic view of Bayesian integration

The success of the BLS model in capturing behavior in the 1-2-Go condition24,47,49 and its failure in the 1-2-3-Go condition suggest that subjects were unable to update the posterior by the second measurement. We examined a number of simple inference algorithms that could account for this limitation. One of the simplest algorithms proposed for integrating sequential measurements is the Kalman filter. The Kalman filter only updates the mean and variance of the posterior26. This strategy is optimal when measurement noise is Gaussian because a Gaussian distribution is fully determined by its mean and variance. More generally, when integrating the likelihood function leaves the parametric form of the posterior distribution unchanged, a simple inference algorithm that updates those parameters can implement optimal integration.
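For reference, the mean-and-variance update of a Kalman filter for a static variable can be sketched as follows. This is the textbook form under two assumptions that do not hold in the experiment: a Gaussian prior and measurement noise of fixed variance. The function name `kalman_update` and all numerical values are illustrative.

```python
def kalman_update(mean, var, measurement, meas_var):
    """One Kalman update of a Gaussian belief about a static variable.

    Assumes a Gaussian belief N(mean, var) and a measurement corrupted by
    zero-mean Gaussian noise with fixed variance meas_var.
    """
    gain = var / (var + meas_var)                  # weight given to the new measurement
    new_mean = mean + gain * (measurement - mean)  # correct the estimate by the scaled error
    new_var = (1.0 - gain) * var                   # uncertainty shrinks after each update
    return new_mean, new_var

# Usage: two sequential updates starting from a hypothetical Gaussian prior.
mean, var = 800.0, 100.0 ** 2
for t_m in (700.0, 760.0):
    mean, var = kalman_update(mean, var, t_m, meas_var=80.0 ** 2)
```

Because the belief stays Gaussian after every update, tracking only these two numbers loses no information in this setting. The gain also shrinks from one update to the next as the variance of the belief decreases.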

First, we asked whether there exists a similarly simple and optimal updating algorithm when the noise is signal-dependent (i.e., scalar noise). For the posterior to have the same parametric form after one and two measurements, it is necessary that the product of two likelihood functions have the same parametric form as a single likelihood function. We tested this property analytically and verified that the parametric form of the likelihood function associated with scalar noise was not invariant under multiplication (see Appendix). As a result, the updating algorithm requires adjustment within each trial depending on $t_{m_1}$ (Supplementary Fig. 5). In other words, any inference algorithm that only updates certain statistics of the posterior (e.g., mean and variance) is expected to behave suboptimally when multiple time intervals have to be integrated. Therefore, we hypothesized that subjects might have used a simple updating algorithm analogous to the Kalman filter to integrate multiple measurements.
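The lack of invariance can be seen from a short calculation. The following is a sketch under the model's stated assumptions (Gaussian measurement noise with standard deviation $w_m t_s$), not a reproduction of the Appendix; the symbols $t_{m_1}$ and $t_{m_2}$ for the two measurements and $\bar{t}_m$ for their average are notation introduced here. As a function of $t_s$, a single likelihood has the form

$$p(t_{m_1} \mid t_s) \propto \frac{1}{t_s} \exp\!\left(-\frac{(t_{m_1} - t_s)^2}{2 w_m^2 t_s^2}\right),$$

whereas the product of two likelihoods is

$$p(t_{m_1} \mid t_s)\, p(t_{m_2} \mid t_s) \propto \frac{1}{t_s^{2}} \exp\!\left(-\frac{(\bar{t}_m - t_s)^2}{w_m^2 t_s^2} - \frac{(t_{m_1} - t_{m_2})^2}{4 w_m^2 t_s^2}\right), \qquad \bar{t}_m = \frac{t_{m_1} + t_{m_2}}{2}.$$

The product carries a different power of $t_s$ in the prefactor and an extra term that depends on both $t_s$ and the difference between the measurements, so it is not a rescaled copy of the single-measurement form. With signal-independent noise of standard deviation $\sigma$, the same product reduces to $\exp\!\left(-(\bar{t}_m - t_s)^2 / \sigma^2\right)$ up to a factor that does not depend on $t_s$, which is again Gaussian in $t_s$.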

A linear-nonlinear estimator (LNE) model for approximate Bayesian inference

The first algorithm we tested was one in which the observer combines the last estimate, $\bar{t}_{m_{n-1}}$, with the current measurement, $t_{m_n}$, using a linear updating strategy. If we denote the corresponding weights by $1 - k_n$ and $k_n$ and set $k_n = 1/n$, this algorithm tracks the running average of the measurements, $\bar{t}_{m_n} = (1 - k_n)\,\bar{t}_{m_{n-1}} + k_n t_{m_n}$ ($k_1$ and $k_2$ are 1 and 0.5, respectively). This and similar models with linear updating schemes32,53–60 would certainly fail to account for the observed nonlinearities in subjects’ behavior (Supplementary Fig. 6). Therefore, we constructed a linear-nonlinear estimator (LNE) that augmented the linear updating by a point nonlinearity that could account for the observed prior-dependent biases in $t_p$ (Fig. 5A). The nonlinear function, $f_{BLS_1}$, was chosen to match the BLS estimator for a single measurement ($n = 1$), which is determined by $w_m$.
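The LNE scheme can be sketched in a few lines. This is an illustration under stated assumptions rather than the fitted model: the point nonlinearity is the single-measurement BLS mapping computed for a continuous uniform prior on 600 to 1000 ms (approximated on a grid), and the function names `f_bls1` and `lne_estimate` are hypothetical.

```python
import numpy as np

def f_bls1(t_m, w_m, t_min=600.0, t_max=1000.0, n_grid=401):
    """Single-measurement BLS mapping (posterior mean under an assumed flat prior)."""
    t_s = np.linspace(t_min, t_max, n_grid)
    sigma_m = w_m * t_s                                    # scalar noise
    like = np.exp(-0.5 * ((t_m - t_s) / sigma_m) ** 2) / sigma_m
    return float(np.sum(t_s * like) / np.sum(like))

def lne_estimate(measurements, w_m):
    """Running average with gains k_n = 1/n, followed by a point nonlinearity."""
    avg = 0.0
    for n, t_m in enumerate(measurements, start=1):
        k_n = 1.0 / n                                      # k_1 = 1, k_2 = 0.5, ...
        avg = (1.0 - k_n) * avg + k_n * t_m                # linear update of the average
    return f_bls1(avg, w_m)                                # nonlinearity applied once, at the end
```

Because the nonlinearity sees only the average, any two measurement pairs with the same mean, for example (700, 900) and (800, 800), map to the same estimate.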

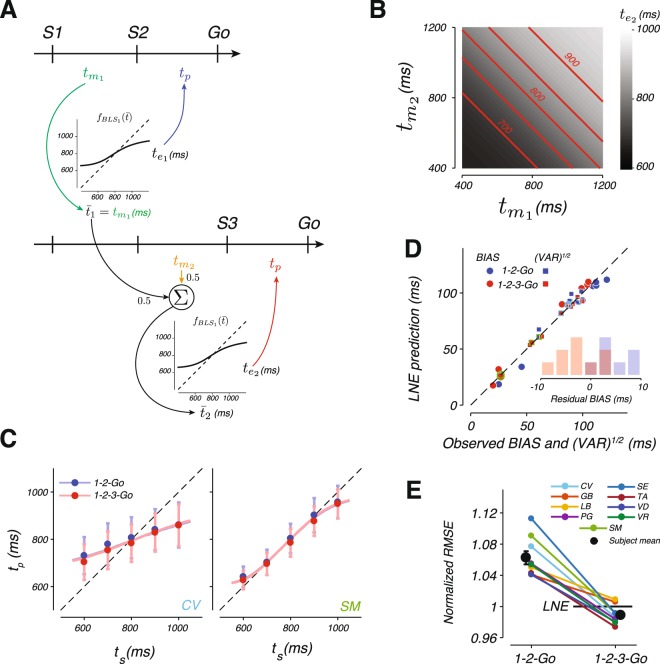

Figure 5.

A linear-nonlinear estimator (LNE) model and its fits to the data. (A) LNE algorithm. LNE derives an estimate by applying a nonlinear function, $f_{BLS_1}$, to the average of the measurements. In the 1-2-Go trials (top), the average, $\bar{t}_{m_1}$, is the same as the first measurement, $t_{m_1}$, and the estimate, $t_{e_1}$, is $f_{BLS_1}(\bar{t}_{m_1})$. In 1-2-3-Go trials (bottom), the average, $\bar{t}_{m_2}$, is updated by the second measurement, $t_{m_2}$ ($\bar{t}_{m_2} = 0.5\,\bar{t}_{m_1} + 0.5\,t_{m_2}$), and the estimate, $t_{e_2}$, is $f_{BLS_1}(\bar{t}_{m_2})$. In both conditions, the produced interval, $t_p$, is perturbed by zero-mean Gaussian noise with standard deviation proportional to the final estimate ($t_{e_1}$ for 1-2-Go and $t_{e_2}$ for 1-2-3-Go) with the constant of proportionality $w_p$, as in the BLS model. (B) The mapping from measurements to estimates (grayscale) for the LNE estimator in the 1-2-3-Go trials. Red lines indicate combinations of measurements that lead to identical estimates (shown for 700, 750, 800, 850, and 900 ms). (C) Mean and standard deviation of $t_p$ as a function of $t_s$ for two example subjects (circles and error bars) along with the corresponding fits of the LNE model (lines). (D) BIAS (circles) and $\sqrt{\mathrm{VAR}}$ (squares) of each subject (abscissa) and the corresponding values computed from simulations of the fitted LNE model (ordinate). Conventions match Fig. 4B. (E) The RMSE in the 1-2-Go and 1-2-3-Go conditions relative to the corresponding predictions from the LNE model (conventions as in Fig. 4C). See also Supplementary Figs 2, 3, 7, and 8.

Simulation of LNE verified that it could indeed integrate multiple measurements and exhibit prior-dependent biases (see Supplementary Fig. 7). However, the behavior of LNE was qualitatively different from BLS. The contrast between the two models was evident from a comparison of the relationship between measurements and estimates. Unlike BLS (Fig. 3D), estimates derived from LNE are linear with respect to $t_{m_1}$ and $t_{m_2}$, a feature that can be visualized by the linear iso-estimate contours of the LNE model (Fig. 5B).

We fitted LNE to each subject independently and asked how well it accounted for the observed statistics. The LNE model broadly captured the observed regression to the mean (Fig. 5C,D; see Supplementary Fig. 8 for fits to all subjects), but had a qualitative failure: subjects’ behavior exhibited significantly more BIAS in the 1-2-Go condition (Fig. 5D, inset; two tailed t-test, t(8) = 4.9304, p-value = 0.001) and significantly less BIAS in the 1-2-3-Go condition (Fig. 5D, inset; two tailed t-test, t(8) = −2.3782, p-value = 0.045) than the biases predicted by the model. This failure can be readily explained in terms of how LNE functions. Since the static nonlinearity in LNE is the same for one and two measurements, the bias LNE generates is the same for the 1-2-Go and 1-2-3-Go conditions and improvements in estimation are achieved mainly through a reduction in VAR. Therefore, when we fitted LNE to data from both conditions, the model consistently underestimated BIAS for the 1-2-Go condition, and overestimated BIAS for the 1-2-3-Go condition (Fig. 5C, red and blue lines nearly overlap). Further, the LNE model made systematic errors in predicting subjects’ VAR in 1-2-Go trials (Supplementary Fig. 2).

We further evaluated LNE by asking how it accounted for the observed performance improvement in the 1-2-3-Go condition compared to the 1-2-Go condition. We normalized each subject’s RMSE from the 1-2-Go and 1-2-3-Go conditions to the RMSE expected from the behavior of the fitted LNE model in the 1-2-3-Go condition (Fig. 5E). Most subjects surpassed the predictions of the LNE model (horizontal line) for the 1-2-3-Go condition, and average RMSE reached values that were significantly lower than expected (0.990; two tailed t-test, t(8) = −2.463, p-value = 0.039). Based on these results, we concluded that LNE fails to capture subjects’ behavior both qualitatively and quantitatively.

An extended Kalman filter (EKF) model for approximate Bayesian inference

We considered a moderately more sophisticated algorithm inspired by the extended Kalman filter (EKF)61. This algorithm is shown in Fig. 6A. Upon each new measurement, EKF uses the error between the previous estimate and the current measurement to generate a new estimate. The difference between EKF and the Kalman filter is that the error is subjected to a nonlinear function before being used to update the previous estimate. This nonlinearity is necessary for the algorithm to be able to account for the nonlinear prior-dependent biases observed in behavior.

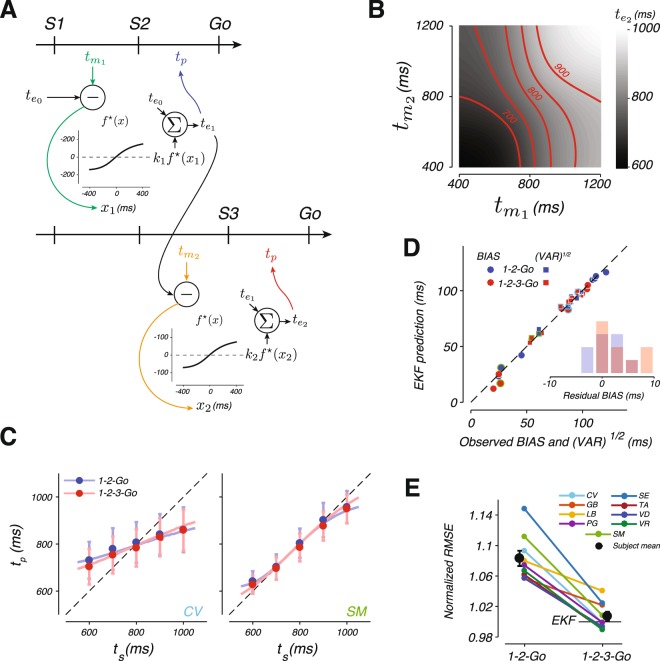

Figure 6.

An extended Kalman filter (EKF) model and its fits to the data. (A) EKF algorithm. EKF is a real-time inference algorithm that uses each measurement to update the estimate. After the first flash, EKF uses the mean of the prior as its initial estimate, $t_{e_0}$. The second flash furnishes the first measurement, $t_{m_1}$. EKF computes a new estimate, $t_{e_1}$, using the following procedure: (1) it measures the difference between $t_{m_1}$ and $t_{e_0}$ to compute an error, $x_1$, (2) it applies a nonlinear function, $f^{*}$, to $x_1$, (3) it scales $f^{*}(x_1)$ by a gain factor, $k_1$, whose magnitude depends on the relative reliability of $t_{e_0}$ and $t_{m_1}$, and (4) it adds $k_1 f^{*}(x_1)$ to $t_{e_0}$ to compute $t_{e_1}$. In the 1-2-Go condition (top), $t_{e_1}$ is the final estimate used for the production of $t_p$. In the 1-2-3-Go condition (bottom), the updating procedure is repeated to compute a new estimate, $t_{e_2}$, by adding $k_2 f^{*}(x_2)$ to $t_{e_1}$, where $x_2$ is the difference between the second measurement, $t_{m_2}$, and $t_{e_1}$, and $k_2$ is the scale factor determined by the relative reliability of $t_{e_1}$ and $t_{m_2}$. $t_{e_2}$ is then used as the final estimate for the production of $t_p$. We assumed that the produced interval, $t_p$, is perturbed by zero-mean Gaussian noise with standard deviation proportional to the final estimate ($t_{e_1}$ for 1-2-Go and $t_{e_2}$ for 1-2-3-Go) with the constant of proportionality $w_p$, as in the BLS model. (B) The mapping from measurements to estimates (grayscale) for the EKF estimator in the 1-2-3-Go condition. Red lines indicate combinations of measurements that lead to identical estimates (shown for 700, 750, 800, 850, and 900 ms). (C) Mean and standard deviation of $t_p$ as a function of $t_s$ for two example subjects (circles and error bars) along with the corresponding fits of the EKF model (lines). (D) BIAS (circles) and $\sqrt{\mathrm{VAR}}$ (squares) of each subject (abscissa) and the corresponding values computed from simulations of the fitted EKF model (ordinate). Conventions match Fig. 4B. (E) The RMSE in the 1-2-Go and 1-2-3-Go conditions relative to the corresponding predictions from the EKF model (conventions as in Fig. 4C). See also Supplementary Figs 2, 3, 9, and 10.

In our experiment, immediately after the first flash, the only information about the sample interval, $t_s$, comes from the prior distribution. Accordingly, we set the initial estimate, $t_{e_0}$, to the mean of the prior distribution. After the first measurement, EKF computes an “innovation” term by applying a static nonlinearity, $f^{*}$, to the error, $x_1$, between $t_{m_1}$ and $t_{e_0}$. This innovation is multiplied by a gain, $k_1$, and added to $t_{e_0}$ to compute the new estimate, $t_{e_1}$. In the 1-2-Go condition, in which only one measurement is available, $t_{e_1}$ serves as the final estimate that the model aims to reproduce.

For the 1-2-3-Go condition, EKF repeats the updating procedure after the second measurement, $t_{m_2}$. It computes the difference between $t_{m_2}$ and $t_{e_1}$ to derive a prediction error, $x_2$, which is subjected to the same nonlinear function, $f^{*}$, to yield a second innovation. This innovation is then scaled by an appropriate gain, $k_2$, and added to $t_{e_1}$ to generate an updated estimate, $t_{e_2}$, which the model aims to reproduce.

The two important elements that determine the overall behavior of EKF are the nonlinear function and the gain factor(s) applied to the innovation(s) ($k_1$ and $k_2$) to update the estimate(s). We set the form of the nonlinear function such that biases in $t_{e_1}$ after one measurement are the same between EKF and BLS models. This ensures that EKF and BLS behave identically in the 1-2-Go condition. Note that our implementation of EKF assumes that the same nonlinear function is applied after every measurement. If one allows this nonlinear function to be optimized separately for each measurement, EKF would be able to replicate the behavior of BLS exactly (Supplementary Fig. 5).

For the gain factors, we reasoned that the most rational choice is to set the weight of each innovation based on the expected reliability of the corresponding estimate, $t_{e_{n-1}}$, relative to the new measurement, $t_{m_n}$, as in the Kalman filter (see Methods). This causes the gain factor to decrease with the number of measurements, and ensures that the influence of each new measurement is appropriately titrated. With these assumptions, EKF remains suboptimal for the 1-2-3-Go condition. However, it captures certain aspects of the nonlinearities associated with the optimal BLS estimator as shown by Fig. 6B (compare to Fig. 3D).
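The updating loop described above can be sketched as follows. Several details here are assumptions made for illustration and are not taken from the Methods: the nonlinearity is defined as the single-measurement BLS mapping re-centered on the prior mean, so that one update with a gain of 1 reproduces the BLS estimate; the gain values `(1.0, 0.5)` are placeholders rather than the reliability-based gains the text refers to; and the names `f_bls1`, `f_star`, and `ekf_estimate` are hypothetical.

```python
import numpy as np

PRIOR_MEAN = 800.0  # mean of a uniform prior on 600 to 1000 ms

def f_bls1(t_m, w_m, t_min=600.0, t_max=1000.0, n_grid=401):
    """Single-measurement BLS mapping (posterior mean under an assumed flat prior)."""
    t_s = np.linspace(t_min, t_max, n_grid)
    sigma_m = w_m * t_s                                    # scalar noise
    like = np.exp(-0.5 * ((t_m - t_s) / sigma_m) ** 2) / sigma_m
    return float(np.sum(t_s * like) / np.sum(like))

def f_star(x, w_m):
    """Assumed error nonlinearity: BLS mapping expressed relative to the prior mean."""
    return f_bls1(PRIOR_MEAN + x, w_m) - PRIOR_MEAN

def ekf_estimate(measurements, w_m, gains=(1.0, 0.5)):
    """Update a point estimate once per measurement (gains are placeholder values)."""
    t_e = PRIOR_MEAN                      # initial estimate: mean of the prior
    for t_m, k in zip(measurements, gains):
        x = t_m - t_e                     # prediction error for this measurement
        t_e = t_e + k * f_star(x, w_m)    # add the scaled, nonlinearly transformed error
    return t_e
```

With these choices the estimate after one measurement equals `f_bls1(t_m, w_m)`, matching the statement that EKF and BLS coincide in the 1-2-Go condition. The second update carries only a point estimate forward, not a full posterior.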

The algorithm implemented by EKF is appealing as it uses a simple updating strategy that can be straightforwardly extended to multiple sequential measurements and is a nonlinear version of error correcting mechanisms proposed for related synchronization tasks54,56,58. Furthermore, EKF captures important features of human behavior. First, integration of each new measurement causes a reduction in RMSE, as seen in 1-2-3-Go compared to 1-2-Go condition. Second, the nonlinear function applied to innovations allows EKF to incorporate prior information and capture prior-dependent biases. Third, since the nonlinearity is applied to each innovation (as opposed to the final estimate), EKF, unlike LNE, is able to capture the reduction in BIAS in 1-2-3-Go compared to 1-2-Go condition.

After simulating the model to ensure it integrates measurements and exhibits prior-dependent biases (Supplementary Fig. 9), we fitted EKF to each subject independently and asked how well it accounted for the observed statistics. Similar to BLS and LNE, EKF broadly captured the observed regression to the mean in the 1-2-Go trials (Fig. 6C,D, blue). This is not surprising since the EKF algorithm is identical to BLS when the prior is integrated with a single measurement. EKF was also able to capture the mean tp as a function of ts in the 1-2-3-Go trials (Fig. 6C,D red). Across subjects, EKF provided a better match to the data when compared to BLS and LNE although it modestly underestimated the BIAS in 1-2-3-Go condition (Fig. 6D, inset; two tailed t-test, t(8) = 4.6055, p-value = 0.02639). See Supplementary Fig. 10 for fits of the EKF model to all subjects.

We also asked if EKF could account for the observed RMSEs. To do so, we performed the same analysis we used to evaluate the BLS and LNE models. We normalized each subject’s RMSE from the 1-2-Go and 1-2-3-Go conditions to the RMSE expected from the EKF model for 1-2-3-Go (Fig. 6E). We found no significant difference between observed and predicted RMSEs for the 1-2-3-Go condition (two-tailed t-test, t(8) = 1.5506, p-value = 0.160), and no significant difference between the observed and predicted change in RMSE from the 1-2-Go to the 1-2-3-Go condition (Supplementary Fig. 3). These results indicate that subjects’ suboptimal behavior is consistent with the approximate Bayesian integration implemented by the EKF algorithm.

To further validate the superiority of the EKF model, we directly compared various models to BLS using the log likelihood ratio. Specifically, for each subject, we computed the logarithm of the ratio between the likelihood of the data given each model with its maximum likelihood parameters (see Methods) and the likelihood of the data given the BLS model. We found that EKF provided the best fit for 8 out of 9 subjects (Table 1). For the remaining subject, the fits were poor for all models, but LNE provided the best fit.

Table 1. Predictive log likelihood ratio for each model and subject. Columns correspond to individual subjects.

| Model | CV | GB | LB | PG | SM | SE | TA | VR | VD |
|---|---|---|---|---|---|---|---|---|---|
| LNE | 1.1678 (0.5012) | −1.8267 (0.2976) | −0.3094 (0.2020) | 0.1516 (0.3407) | 0.4896 (0.3427) | −1.7151 (0.3493) | −0.0365 (0.2806) | 0.3456 (0.3593) | 0.3793 (0.2175) |
| EKF | 1.1900 (0.1998) | 0.0380 (0.1326) | 0.7168 (0.2234) | 0.3828 (0.1428) | −1.4262 (0.2755) | 1.0909 (0.1543) | 0.2358 (0.1250) | 0.3623 (0.1581) | 0.6537 (0.1002) |
| BLSmem | 0.4621 (0.1832) | −0.0821 (0.0267) | −0.2445 (0.0743) | 0.0086 (0.0518) | −0.0637 (0.2714) | 0.0440 (0.0876) | −0.0191 (0.0195) | −0.0359 (0.0249) | −0.0421 (0.0268) |

Discussion

The neural systems implementing sensorimotor transformations must rapidly compute state estimates to effectively implement online control of behavior. Behavioral studies indicate that, at a computational level, state estimation may be described in terms of Bayesian integration6–15. However, describing behavior with a Bayesian model does not necessarily indicate that the brain implements these computations by representing probability distributions21,22,62. Here, we focused on integration of multiple time intervals and found evidence that the brain relies on simpler algorithms that approximate optimal Bayesian inference.

We demonstrated that humans integrate prior knowledge with one or two measurements to improve their performance. A key observation was that the integration was nearly optimal for one measurement but not for two measurements. In particular, when two measurements were provided, subjects systematically exhibited more BIAS toward the mean of the prior than expected from an optimal Bayesian model. This observation motivated us to investigate various algorithms that could lead to similar patterns of behavior.

Analytical and numerical analyses suggested that simple inference algorithms that update certain parameters of the posterior instead of the full distribution cannot integrate multiple measurements optimally when the noise is signal-dependent. We then systematically explored simple inference algorithms that could perform sequential updating and account for the behavioral observations. One of the simplest updating algorithms is the Kalman filter63. However, this algorithm updates estimates linearly and thus cannot account for the nonlinearities in subjects’ behavior, even for a single measurement (Supplementary Fig. 6). The LNE model augmented the Kalman filter such that the final estimate was subjected to a point-nonlinearity. This allowed LNE to generate nonlinear biases, but since the nonlinearity was applied only to the final estimate, LNE failed to capture the decrease in bias observed in the 1-2-3-Go compared to the 1-2-Go condition. Finally, we adapted the EKF, which is a more sophisticated variant of the Kalman filter that applies a static nonlinearity to the errors in estimation at every stage of updating. This algorithm accounted for optimal behavior in the 1-2-Go condition and exhibited the same patterns of suboptimality observed in humans in the 1-2-3-Go condition. Therefore, EKF provides a good characterization of the algorithm the brain uses when there is a need to integrate multiple pieces of information presented sequentially. This finding implies that subjects may rely only on the first few moments of a distribution and use a nonlinear updating strategy to track those instead of updating the entire posterior. This strategy is simple and in many scenarios could lead to optimal behavior with little computational cost. Moreover, the recursive nature of EKF’s updating strategy allows it to readily generalize to scenarios in which estimates must be updated in real time, even when the number of available samples is not known a priori, which could be tested by extensions of our experiment. Finally, the error-correcting nature of the EKF updates is consistent with the correlations between response intervals observed in synchronization and continuation tasks53,57,64–66, corrections to timing perturbations during synchronization53,58,67, and the influence of recent temporal inputs on the perception of interval duration68–70. Therefore, EKF may provide an algorithmic understanding across a range of timing tasks including interval estimation, synchronization, discrimination, and reproduction.

Maintaining and updating probability distributions is computationally expensive. Moreover, it is not currently known how neural networks might implement such operations22. In contrast, EKF is relatively simple to implement. The only requirement is to use the current estimate to predict the next sample, and use a nonlinear function of the error in prediction to update estimates sequentially. Predictive mechanisms that EKF relies on are thought to be an integral part of how brain circuits support perception and sensorimotor function1,5,8,71–74. As such, the relative success of EKF may be in part due to its compatibility with predictive mechanisms that the brain uses to perform sequential updating. This observation makes the following intriguing prediction: when performing 1-2-3-Go task, subjects do not make two measurements; instead, they use the prior to predict the time of the second flash, use the prediction error to update their estimate, and the new estimate to predict the third flash. Two lines of evidence from recent physiological experiments support this prediction. First, individual neurons in the primate medial frontal cortex encode interval duration similarly for single and multiple interval tasks75, providing a basis for maintaining the current interval estimate during a given task. Second, neural signals in several regions of the brain encode intervals prospectively76–80, providing a basis for predicting the timing of upcoming sensory inputs. Future modeling efforts and electrophysiological experiments will be required to link neural signals to the implementation of the EKF algorithm.

While EKF provides a better account of the observed data in our experiments, it may be that our specific formulation of the Bayesian model did not capture the underlying process. Our BLS model was based on three assumptions: (1) that the likelihood function is characterized by signal-dependent noise, (2) that the subjective prior matches the experimentally imposed uniform prior distribution, and (3) that the final estimates are derived from the mean of the posterior, which implicitly assumes that subjects rely on a quadratic cost function, as was previously demonstrated24. Our formulation of the likelihood function is particularly important, as it is the key factor that prevents simple algorithms such as EKF from optimally integrating multiple measurements. The inherent signal-dependent noise in timing causes the likelihood function to be skewed toward longer intervals (see Appendix). This characteristic feature was particularly important for explaining human behavior in a task requiring interval estimation following several measurements40. Moreover, it has been shown that subjects exhibit larger biases for longer intervals within the domain of the prior, indicating that the brain has an internal model of this signal-dependent noise24,49,81,82. These results support our formulation of the likelihood function. However, one aspect of our formulation that deserves further scrutiny is the assumption that the noise perturbing the two measurements was independent. This may need revision given the long-range positive autocorrelations in behavioral variability83–85, and because S2 is shared between the two measurements, which may lead to correlations between the first and second measurements.

Our formulation of the prior and cost function should also be further evaluated. For example, humans may not be able to correctly encode a uniform prior probability distribution for interval estimation47,49. Similarly, the cost function may not be quadratic86. However, since the prior and the cost function impact both the 1-2-Go and 1-2-3-Go conditions, moderate inaccuracies in modeling these components are unlikely to explain optimal behavior in the 1-2-Go condition and suboptimal behavior in the 1-2-3-Go condition simultaneously. Finally, recent results suggest that performance may be limited by imperfect integration87,88 and imperfect memory38,89, which future models of sequential updating should incorporate.

Methods

Subjects and apparatus

All experiments were performed in accordance with relevant regulations and guidelines for the ethical treatment of subjects, as approved by the Committee on the Use of Humans as Experimental Subjects at MIT, after receiving informed consent. Eleven human subjects (6 male and 5 female) between 18 and 33 years of age participated in the interval reproduction experiment. Of the 11 subjects, 10 were naive to the purpose of the study.

Subjects sat in a dark, quiet room at a distance of approximately 50 cm from a display monitor. The display monitor had a refresh rate of 60 Hz and a resolution of 1920 by 1200, and was controlled by custom software (MWorks; http://mworks-project.org/) on an Apple Macintosh platform.

Interval reproduction task

The experiment consisted of several 1-hour sessions in which subjects performed an interval reproduction task (Fig. 1). The task consisted of two randomly interleaved trial types referred to as “1-2-Go” and “1-2-3-Go”. On 1-2-Go trials, two flashes (S1 followed by S2) demarcated a sample interval (ts) that subjects had to measure24. On 1-2-3-Go trials, ts was presented twice, once demarcated by the S1 and S2 flashes, and once by the S2 and S3 flashes. For both trial types, subjects had to reproduce ts immediately after the last flash (S2 for 1-2-Go and S3 for 1-2-3-Go) by pressing a button on a standard Apple keyboard. On all trials, subjects had to initiate their response proactively and without any additional cue (no explicit Go cue was presented). Subjects received graded feedback on their accuracy.

Each trial began with the presentation of a 0.5 deg circular fixation point at the center of a monitor display. The color of the fixation point was blue or red for the 1-2-Go and 1-2-3-Go trials, respectively. Subjects were asked to shift their gaze to the fixation point and maintain fixation throughout the trial. Eye movements were not monitored. After a random delay with a uniform hazard (100 ms minimum plus an interval drawn from an exponential distribution with a mean of 300 ms), a warning stimulus and a trial cue were presented. The warning stimulus was a white circle that subtended 1.5 deg and was presented 10 deg to the left of the fixation point. The trial cue consisted of 2 or 3 small rectangles 0.6 deg above the fixation point (subtending 0.2 × 0.4 deg, 0.5 deg apart) for the 1-2-Go and 1-2-3-Go trials, respectively. After a random delay with a uniform hazard (250 ms minimum plus an interval drawn from an exponential distribution with a mean of 500 ms), flashes demarcating ts were presented. Each flash (S1 and S2 for 1-2-Go and S1, S2, and S3 for 1-2-3-Go) lasted for 6 frames (100 ms) and was presented as an annulus around the fixation point with an inside and outside diameter of 2.5 and 3 deg, respectively (Fig. 1A,B). The time between consecutive flashes, which determined ts, was sampled from a discrete uniform distribution ranging between 600 and 1000 ms with 5 samples (Fig. 1C). To help subjects track the progression of events throughout the trial, after each flash, one rectangle from the trial cue would disappear (starting from the rightmost).

The produced interval (tp) was measured as the interval between the time of the last flash and the time when the subject pressed a designated key on the keyboard (Fig. 1A,B). Subjects received trial-by-trial visual feedback based on the magnitude and sign of the relative error, (tp − ts)/ts. A 0.5 deg circle (“analog feedback”) was presented to the right (for error < 0) or left (for error > 0) of the warning stimulus at a distance that scaled with the magnitude of the error. Additionally, when the error was smaller than a threshold, both the warning stimulus and the analog feedback turned green and a tone denoting “correct” was presented. If the production error was larger than the threshold, the warning stimulus and analog feedback remained white and a tone denoting “incorrect” was presented. The threshold was constant as a function of the relative error and therefore scaled with the sample interval (Fig. 1D). This accommodated the scalar variability of timing that leads to more variable production intervals for longer sample intervals. The scaling factor was initialized at 0.15 at the start of every session and adjusted adaptively using a one-up, one-down scheme that added or subtracted 0.001 to the scaling factor for incorrect or correct responses, respectively. These manipulations ensured that the performance across conditions, subjects, and trials remained approximately at a steady state of 50% correct trials.
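The adaptive feedback rule described above can be sketched in a few lines of Python. This is a minimal illustration rather than the MWorks implementation: the function names `is_correct` and `update_scale` are hypothetical, the example trials are made up, and the sketch assumes that a response counts as correct when the absolute relative error is strictly below the current scaling factor.

```python
def is_correct(tp, ts, scale):
    """Return True when the absolute relative error |tp - ts| / ts is below the threshold."""
    return abs(tp - ts) / ts < scale


def update_scale(scale, correct, step=0.001):
    """One-up, one-down rule: tighten after a correct response, loosen after an error."""
    return scale - step if correct else scale + step


scale = 0.15  # value at the start of every session
for ts, tp in [(600, 650), (1000, 1200), (800, 790)]:  # made-up (ts, tp) pairs in ms
    correct = is_correct(tp, ts, scale)
    scale = update_scale(scale, correct)
    print(ts, tp, correct, round(scale, 3))
```

Because the upward and downward steps are equal, the scaling factor stops drifting only when correct and incorrect responses are equally frequent, which is the 50% steady state mentioned in the text.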

To ensure subjects understood the task design, the first session included a number of training blocks. Training blocks were conducted with the supervision of an experimenter. Training trials were arranged in 25-trial blocks. In the first block, the subjects performed the 1-2-Go condition with the sample interval fixed at 600 ms. In the second block, we fixed the interval at 1000 ms. In the third block, the subject performed the 1-2-3-Go task with the interval fixed at 1000 ms. In the fourth block, the subject continued to perform the 1-2-3-Go task, but with the intervals chosen at random from the experimental distribution. In the final training block, the task condition and sample intervals were fully randomized, as in the main experiment. The subject then performed 400 trials of the main experiment. Subjects completed 10 sessions in total, performing 800 trials in each of the remaining 9 experimental sessions. To ensure subjects were adapted to the statistics of the prior24, we discarded the first 99 trials of each session. We also discarded any trial in which the subject responded before S2 (for 1-2-Go) or S3 (for 1-2-3-Go), or more than 1000 ms after the veridical ts. Supplementary Table 2 summarizes the number of completed trials for each subject. Data from two subjects were not included in the analyses because they were not sensitive to the range of sample intervals we tested and their production interval distributions were not significantly different for the longest and shortest sample intervals.

Models

We considered several models for interval estimation: (1) an optimal Bayes Least-Squares model (BLS), (2) an optimal Bayes Least-Squares model that allowed different noise levels for the two measurements in 1-2-3-Go trials (BLSmem), (3) an extended Kalman filter model (EKF), and (4) a linear-nonlinear estimation model (LNE). All models were designed to be identical for the 1-2-Go task, where only one measurement was available, but differed in their predictions for the 1-2-3-Go trials.

BLS model

We used the Bayesian integration model that was previously shown to capture behavior in the 1-2-Go task24. This model assumes that subjects combine the measurements and the prior distribution probabilistically according to Bayes’ rule:

$$p(t_s \mid t_m) = \frac{\lambda(t_m \mid t_s)\,p(t_s)}{p(t_m)} \tag{1}$$

$$\lambda(t_m \mid t_s) = \frac{1}{\sqrt{2\pi (w_m t_s)^2}} \exp\left(-\frac{(t_m - t_s)^2}{2 (w_m t_s)^2}\right) \tag{2}$$

where $p(t_s)$ represents the prior distribution of the sample intervals and $p(t_m)$ the probability distribution of the measurements. The likelihood function, $\lambda(t_m \mid t_s)$, was formulated based on the assumption that measurement noise was Gaussian and had zero mean. To incorporate scalar variability into our model, we further assumed that the standard deviation of the noise scales with $t_s$, with the constant of proportionality $w_m$ representing the Weber fraction for measurement.

Following previous work24, we further assumed that subjects’ behavior can be described by a BLS estimator that minimizes the expected squared error, and uses the expected value of the posterior distribution as the optimal estimate:

$$t_{e_1} = f_{BLS_1}(t_{m_1}) = E[t_s \mid t_{m_1}] \tag{3}$$

where $f_{BLS_1}$ denotes the BLS function that maps the measurement ($t_{m_1}$) to the Bayesian estimate after one measurement ($t_{e_1}$). The subscript 1 is added to clarify that this equation corresponds to the condition with a single measurement (i.e., 1-2-Go). The notation E[•] denotes expected value. Given a uniform prior distribution with a range from $t_s^{min}$ to $t_s^{max}$, the BLS estimator can be written as:

$$f_{BLS_1}(t_{m_1}) = \frac{\int_{t_s^{min}}^{t_s^{max}} t_s\,\lambda(t_{m_1} \mid t_s)\,dt_s}{\int_{t_s^{min}}^{t_s^{max}} \lambda(t_{m_1} \mid t_s)\,dt_s} \tag{4}$$

We assume that $t_s^{min}$ and $t_s^{max}$ match the minimum and maximum of the experimentally imposed sample interval distribution. We extended this model for the 1-2-3-Go task to two measurements. To do so, we incorporated two likelihood functions in the derivation of the posterior. Assuming that the two measurements are conditionally independent, the posterior can be written as:

$$p(t_s \mid t_{m_1}, t_{m_2}) = \frac{\lambda(t_{m_1} \mid t_s)\,\lambda(t_{m_2} \mid t_s)\,p(t_s)}{p(t_{m_1}, t_{m_2})} \tag{5}$$

where $t_{m_1}$ and $t_{m_2}$ denote the first and second measurements, respectively, and the likelihood function, λ, is from Eq (2). Because measurements are taken in a sequence, it is intuitive to rewrite Eq (5) in a recursive form:

$$p(t_s \mid t_{m_1}, t_{m_2}) = \frac{1}{Z}\,\lambda(t_{m_2} \mid t_s)\,p(t_s \mid t_{m_1}) \tag{6}$$

where $p(t_s \mid t_{m_1})$ is the posterior as specified in Eq (1) and Z is a normalization factor that ensures the density integrates to one. Note that Z includes terms related to the first measurement, allowing it to appropriately normalize the density after propagating the posterior related to the first measurement forward. Therefore, although the posteriors in Eqs (5) and (6) are identical, specifying the posterior in this way allows the algorithm to be updated sequentially.

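The corresponding BLS estimator can again be written as the expected value of the posterior:

$$t_{e_2} = f_{BLS_2}(t_{m_1}, t_{m_2}) = E[t_s \mid t_{m_1}, t_{m_2}] \tag{7}$$

$$f_{BLS_2}(t_{m_1}, t_{m_2}) = \frac{\int_{t_s^{min}}^{t_s^{max}} t_s\,\lambda(t_{m_1} \mid t_s)\,\lambda(t_{m_2} \mid t_s)\,dt_s}{\int_{t_s^{min}}^{t_s^{max}} \lambda(t_{m_1} \mid t_s)\,\lambda(t_{m_2} \mid t_s)\,dt_s} \tag{8}$$

where $f_{BLS_2}$ denotes the BLS function that uses two measurements ($t_{m_1}$ and $t_{m_2}$) to compute $t_{e_2}$. The subscript 2 indicates the mapping function is for two measurements (i.e., 1-2-3-Go). We performed the integrations for the BLS model numerically using Simpson’s quadrature.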
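As an illustration of the BLS estimator for one or two measurements, the following Python sketch computes the posterior mean under a uniform prior with a composite Simpson's rule. It is a minimal sketch, not the MATLAB code used for the analyses: the function names, the number of quadrature intervals, and the example Weber fraction of 0.1 are assumptions chosen for illustration, and the prior range of 600 to 1000 ms is taken from the task description.

```python
import math


def likelihood(tm, ts, wm):
    """Gaussian likelihood of measurement tm given interval ts, with SD = wm * ts."""
    sd = wm * ts
    return math.exp(-(tm - ts) ** 2 / (2 * sd ** 2)) / math.sqrt(2 * math.pi * sd ** 2)


def simpson(f, a, b, n=200):
    """Composite Simpson's rule on [a, b] with n (even) sub-intervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def f_bls(measurements, wm, ts_min=600.0, ts_max=1000.0):
    """Posterior mean of ts under a uniform prior, given independent measurements."""
    def joint(ts):
        p = 1.0
        for tm in measurements:
            p *= likelihood(tm, ts, wm)
        return p

    numerator = simpson(lambda ts: ts * joint(ts), ts_min, ts_max)
    denominator = simpson(joint, ts_min, ts_max)
    return numerator / denominator


print(f_bls([600.0], wm=0.1))         # one measurement (1-2-Go)
print(f_bls([600.0, 600.0], wm=0.1))  # two measurements (1-2-3-Go)
```

With a measurement at the short end of the prior, the estimate is pulled toward the prior mean, and the pull is weaker when a second, identical measurement is available.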

BLSmem model

We also considered the possibility that the brain may not be able to hold representations of the first measurement or the associated posterior perfectly over time until the time for integration. To model this we assumed two Weber fractions − wm as formulated in the BLS model and wmem which adjusts the Weber fraction of the first measurement in 1-2-3-Go trials to account for noisy memory or inference processes. In 1-2-Go trials, the posterior was set according to Eq (1) with wm controlling the signal dependent noise. In 1-2-3-Go trials, the posterior was set according to:

| 9 |

With the likelihood function associated with the first measurement defined as:

| 10 |

This formulation allows the measurement noise to be different for the two measurements. The optimal estimator was then calculated as:

| 11 |

EKF model

EKF implements an updating algorithm in which, after each flash, the observer updates the estimate, , based on the previous estimate, , and the current measurement, . The updating rule changes by a nonlinear function of the error between and , which we denote by xn.

| 12 |

| 13 |

is a nonlinear function based on the BLS estimator, :

| 14 |

kn is a gain factor that controls the magnitude of the update and is set by the relative reliability of and , which were formulated in terms of two Weber fractions, wn−1 and wm, respectively:

| 15 |

To track the reliability of we used a formulation based on optimal cue combination under Gaussian noise. For Gaussian likelihoods, the reliability of the estimate is related to the inverse of the variance of the posterior. Similarly, the reliability of the interval estimate is related to the inverse of the Weber fraction. Therefore, we used the following algorithm to track the Weber fraction of the estimate, wn:

| 16 |

As in the case of Gaussians, this algorithm ensures that wn decreases with each additional measurement, reflecting the increased reliability of the estimate relative to the measurement. This ensures that the weight of the innovation respects information already integrated into the estimate by previous iterations of the EKF algorithm.

At S1, no measurements are available. Therefore, we set the initial estimate, to the mean of the prior, and its reliability, w0, to ∞. After S2 (one measurement), the EKF estimate is identical to the BLS model. For two measurements, the process is repeated to compute , but the estimate is suboptimal. This formulation can be readily extended to more than two measurements.
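The sequential logic of this kind of update can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the fitted model: the nonlinearity `f_star` is assumed to be the one-measurement BLS map recentered on the prior mean, and the gain and reliability updates are assumed to follow the Gaussian cue-combination formulas applied to squared Weber fractions. All function names and the example Weber fraction of 0.1 are hypothetical.

```python
import math


def likelihood(tm, ts, wm):
    """Gaussian likelihood of measurement tm given interval ts, with SD = wm * ts."""
    sd = wm * ts
    return math.exp(-(tm - ts) ** 2 / (2 * sd ** 2)) / math.sqrt(2 * math.pi * sd ** 2)


def simpson(f, a, b, n=200):
    """Composite Simpson's rule on [a, b] with n (even) sub-intervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def f_bls1(tm, wm, ts_min=600.0, ts_max=1000.0):
    """One-measurement BLS estimate (posterior mean under a uniform prior)."""
    num = simpson(lambda ts: ts * likelihood(tm, ts, wm), ts_min, ts_max)
    den = simpson(lambda ts: likelihood(tm, ts, wm), ts_min, ts_max)
    return num / den


def ekf_estimate(measurements, wm, ts_min=600.0, ts_max=1000.0):
    """Sequentially update an estimate with a nonlinear function of each prediction error."""
    prior_mean = 0.5 * (ts_min + ts_max)
    te, w = prior_mean, math.inf  # initial estimate and its (infinite) Weber fraction
    for tm in measurements:
        x = tm - te  # prediction error (innovation)
        # ASSUMPTION: static nonlinearity = BLS map recentered on the prior mean
        f_star = f_bls1(x + prior_mean, wm, ts_min, ts_max) - prior_mean
        if math.isinf(w):
            k, w = 1.0, wm  # limit of the formulas below as w -> infinity
        else:
            # ASSUMPTION: Gaussian cue-combination gain and reliability update
            k = w ** 2 / (w ** 2 + wm ** 2)
            w = math.sqrt(w ** 2 * wm ** 2 / (w ** 2 + wm ** 2))
        te = te + k * f_star
    return te


print(ekf_estimate([600.0], wm=0.1))
print(ekf_estimate([600.0, 600.0], wm=0.1))
```

Under these assumptions, the estimate after one measurement coincides with the one-measurement BLS estimate, and a second measurement moves the estimate further from the prior mean.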

LNE model

LNE uses a linear updating strategy similar to a Kalman filter to update estimates by measurements as follows:

$$\bar{t}_{m_n} = \bar{t}_{m_{n-1}} + k_n\,(t_{m_n} - \bar{t}_{m_{n-1}}) \tag{17}$$

where $\bar{t}_{m_n}$ denotes the linear estimate after n measurements and $t_{m_n}$ the n-th measurement. We chose the weighting to be $k_n = 1/n$, so that the first update ($k_1 = 1$) replaces the initial value with the first measurement. This choice minimizes the squared errors in 1-2-3-Go trials. Note that any other choice for $k_n$ would deteriorate LNE’s performance. Following this sequential and linear updating scheme, LNE passes the final estimate through a nonlinear transfer function specified by the BLS model for one measurement ($f_{BLS_1}$):

$$t_{e_n} = f_{BLS_1}(\bar{t}_{m_n}) \tag{18}$$

where $t_{e_n}$ denotes the linear-nonlinear estimate after n measurements. This formulation ensures that LNE is identical to BLS in 1-2-Go trials.
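The linear-then-nonlinear structure of LNE can be sketched in Python as a running average followed by the one-measurement BLS map. This is a minimal sketch under the description above; the function names, the quadrature settings, and the example Weber fraction of 0.1 are illustrative assumptions.

```python
import math


def likelihood(tm, ts, wm):
    """Gaussian likelihood of measurement tm given interval ts, with SD = wm * ts."""
    sd = wm * ts
    return math.exp(-(tm - ts) ** 2 / (2 * sd ** 2)) / math.sqrt(2 * math.pi * sd ** 2)


def simpson(f, a, b, n=200):
    """Composite Simpson's rule on [a, b] with n (even) sub-intervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def f_bls1(tm, wm, ts_min=600.0, ts_max=1000.0):
    """One-measurement BLS estimate (posterior mean under a uniform prior)."""
    num = simpson(lambda ts: ts * likelihood(tm, ts, wm), ts_min, ts_max)
    den = simpson(lambda ts: likelihood(tm, ts, wm), ts_min, ts_max)
    return num / den


def lne_estimate(measurements, wm):
    """Average the measurements with gain k_n = 1/n, then apply the BLS nonlinearity once."""
    avg = 0.0  # the initial value is irrelevant because k_1 = 1
    for n, tm in enumerate(measurements, start=1):
        avg = avg + (1.0 / n) * (tm - avg)
    return f_bls1(avg, wm)


print(lne_estimate([650.0], wm=0.1))
print(lne_estimate([650.0, 750.0], wm=0.1))
```

Because the nonlinearity is applied only once, to the averaged measurement, two measurements of 650 and 750 ms yield the same estimate as a single measurement of 700 ms.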

Interval production model

In all models, the final estimate is used for the production phase. Following previous work24, we assumed that the production of an interval is perturbed by Gaussian noise whose standard deviation scales with the estimated interval. The model was additionally augmented by an offset term to account for stimulus-independent biases observed in responses:

$$p(t_p \mid t_e) = \frac{1}{\sqrt{2\pi (w_p t_e)^2}} \exp\left(-\frac{(t_p - t_e - b)^2}{2 (w_p t_e)^2}\right) \tag{19}$$

where $w_p$ is the Weber fraction for production, b is the offset term, and $t_e$ can refer to the estimate for either 1-2-Go or 1-2-3-Go trials.

All models accommodated “lapse trials” in which the produced interval was outside the mass of the production interval distribution. The lapse trials were modeled as trials in which the production interval was sampled from a fixed uniform distribution, p(tp|lapse), independent of ts. With this modification, the production interval distribution can be written as:

$$p(t_p \mid t_e, \gamma) = (1 - \gamma)\,p(t_p \mid t_e) + \gamma\,p(t_p \mid \mathrm{lapse}) \tag{20}$$

where γ represents the lapse rate. With this formulation, we could identify lapse trials as those for which the likelihood of a lapse exceeded the likelihood of a nonlapse. To limit cases of falsely identified lapse trials, we set the width of this uniform distribution conservatively to the range of possible production intervals (between 0 and 2000 ms).
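The production model with lapses can be illustrated with a short Python sketch. It assumes that the offset b shifts the mean of the Gaussian production density and that a trial is labeled a lapse when the lapse component, weighted by the lapse rate, exceeds the weighted nonlapse component; both choices, along with the function names and example parameter values, are assumptions made for illustration.

```python
import math


def p_production(tp, te, wp, b=0.0):
    """Gaussian production density with mean te + b and SD = wp * te (assumed form)."""
    sd = wp * te
    return math.exp(-(tp - te - b) ** 2 / (2 * sd ** 2)) / (sd * math.sqrt(2 * math.pi))


def p_lapse(tp, lo=0.0, hi=2000.0):
    """Uniform lapse density over the range of possible production intervals (ms)."""
    return 1.0 / (hi - lo) if lo <= tp <= hi else 0.0


def p_mixture(tp, te, wp, gamma, b=0.0):
    """Production density including lapses, with lapse rate gamma."""
    return (1 - gamma) * p_production(tp, te, wp, b) + gamma * p_lapse(tp)


def is_lapse(tp, te, wp, gamma, b=0.0):
    """Label a trial as a lapse when the weighted lapse term dominates (assumed rule)."""
    return gamma * p_lapse(tp) > (1 - gamma) * p_production(tp, te, wp, b)


print(is_lapse(810.0, te=800.0, wp=0.1, gamma=0.02))   # response near the estimate
print(is_lapse(1800.0, te=800.0, wp=0.1, gamma=0.02))  # response far from the estimate
```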

Using simulations, we verified that our model was able to detect lapses for the range of wm, wp, and γ values inferred from the behavior of our subject pool. Most subjects had a small probability of a lapse trial that was consistent with previous reports24. Two subjects had relatively unreliable performance with a larger number of lapse trials. However, our conclusions do not depend on the inclusion of these two subjects.

Analysis and model fitting

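All analyses were performed using MATLAB R2014b or MATLAB R2017a (The MathWorks, Inc., Natick, Massachusetts, United States). We used a predictive maximum likelihood procedure to fit each model to the data. The likelihood of tp given ts and a set of parameters Θ (specific to each model) was defined as:

$$p(t_p \mid t_s, \Theta) = \int p(t_p \mid f(t_{m_1}), \Theta)\,\lambda(t_{m_1} \mid t_s, \Theta)\,dt_{m_1} \tag{21}$$

for 1-2-Go trials and

$$p(t_p \mid t_s, \Theta) = \iint p(t_p, t_{m_1}, t_{m_2} \mid t_s, \Theta)\,dt_{m_1}\,dt_{m_2} \tag{22}$$

for 1-2-3-Go trials. The integrand for Equation 22 is

$$p(t_p, t_{m_1}, t_{m_2} \mid t_s, \Theta) = p(t_p \mid f(t_{m_1}, t_{m_2}), \Theta)\,\lambda(t_{m_1} \mid t_s, \Theta)\,\lambda(t_{m_2} \mid t_s, \Theta) \tag{23}$$

with the output of $f$ corresponding to the equivalent mapping function for $f_{BLS}$, $f_{LNE}$, $f_{EKF}$, or $f_{BLS_{mem}}$. $\lambda(\cdot \mid t_s, \Theta)$ corresponds to the likelihood function as defined above, with its dependence on the parameters Θ made explicit.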

Assuming that production intervals are conditionally independent across trials, the log likelihood of model parameters can be formulated as:

$$\log L(\Theta) = \sum_{i=1}^{N} \log p(t_p^{i} \mid t_s^{i}, \Theta) \tag{24}$$

where the superscripts denote trial number. Maximum likelihood fits were derived from N-100 trials and cross validated on the remaining 100 trials (leave N out cross validation, LNOCV). This process was performed iteratively until all the data was fit. The final model parameters were taken as the average of parameter values across all the fits to the data. Fits were robust to changes in the amount of left out data. See Supplementary Figs 1, 8, and 10 for a summary of maximum likelihood parameters and predictions of each model fit to our subjects. We also computed the maximum likelihood parameters using the full data set for each subject. Parameters found using either the full data set or LNOCV were nearly identical (see Supplementary Table 3).
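To make the likelihood computation concrete, the following Python sketch evaluates the likelihood of a produced interval on a 1-2-Go trial by numerically marginalizing over the unobserved measurement, and sums log likelihoods across trials. It is a simplified sketch, not the MATLAB fitting code: the lapse term is omitted, the integration limits of ±5 standard deviations around ts and the quadrature resolution are assumptions, and all function names and parameter values are hypothetical.

```python
import math


def gauss(x, mean, sd):
    """Gaussian density with the given mean and standard deviation."""
    return math.exp(-(x - mean) ** 2 / (2 * sd ** 2)) / (sd * math.sqrt(2 * math.pi))


def simpson(f, a, b, n=100):
    """Composite Simpson's rule on [a, b] with n (even) sub-intervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def f_bls1(tm, wm, ts_min=600.0, ts_max=1000.0):
    """One-measurement BLS estimate (posterior mean under a uniform prior)."""
    num = simpson(lambda ts: ts * gauss(tm, ts, wm * ts), ts_min, ts_max)
    den = simpson(lambda ts: gauss(tm, ts, wm * ts), ts_min, ts_max)
    return num / den


def trial_likelihood(tp, ts, wm, wp, b=0.0):
    """p(tp | ts) for a 1-2-Go trial, integrating over the measurement tm."""
    sd = wm * ts

    def integrand(tm):
        te = f_bls1(tm, wm)
        return gauss(tp, te + b, wp * te) * gauss(tm, ts, sd)

    return simpson(integrand, ts - 5 * sd, ts + 5 * sd)


def log_likelihood(trials, wm, wp, b=0.0):
    """Sum of log likelihoods over (ts, tp) pairs, assuming independent trials."""
    return sum(math.log(trial_likelihood(tp, ts, wm, wp, b)) for ts, tp in trials)


print(log_likelihood([(800.0, 790.0), (600.0, 640.0)], wm=0.1, wp=0.1))
```

A fitting routine would maximize `log_likelihood` over the parameters on the training trials and evaluate it on the held-out trials.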

We evaluated model fits by generating simulated data from that model and comparing various summary statistics (BIAS, $\sqrt{\mathrm{VAR}}$, and RMSE) observed for each subject to those generated by model simulations. For the observed data, summary statistics were computed for non-lapse trials and after removing the offset (b). Model simulations were performed without the lapse term and after setting the offset to zero. The summary statistics were computed as follows:

$$\mathrm{BIAS}^2 = \frac{1}{N} \sum_{i=1}^{N} \left(\bar{t}_{p_i} - t_{s_i}\right)^2 \tag{25}$$

$$\mathrm{VAR} = \frac{1}{N} \sum_{i=1}^{N} \sigma_{p_i}^2 \tag{26}$$

$$\mathrm{RMSE} = \sqrt{\mathrm{BIAS}^2 + \mathrm{VAR}} \tag{27}$$

$\mathrm{BIAS}^2$ and VAR represent the average squared bias and average variance over the N distinct ts’s of the prior distribution. The terms $\bar{t}_{p_i}$ and $\sigma_{p_i}^2$ represent the mean and variance of production intervals for the i-th sample interval ($t_{s_i}$). The overall RMSE was computed as the square root of the sum of $\mathrm{BIAS}^2$ and VAR. To find the $\mathrm{BIAS}^2$ and VAR of each model we took the mean value of each after 1000 simulations of the model with the trial number matched to each subject. This ensured an accurate estimate of these quantities that includes the systematic deviations from the true model behavior due to a finite number of trials.
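The summary statistics can be computed directly from a list of (ts, tp) pairs, as in the Python sketch below. The function name `summary_stats` and the toy data are hypothetical, and the use of the population variance (rather than the sample variance) within each sample interval is an assumption.

```python
import math
from statistics import mean, pvariance


def summary_stats(trials):
    """Return (BIAS, sqrt(VAR), RMSE) from an iterable of (ts, tp) pairs."""
    by_ts = {}
    for ts, tp in trials:
        by_ts.setdefault(ts, []).append(tp)
    n = len(by_ts)  # number of distinct sample intervals
    bias2 = sum((mean(tps) - ts) ** 2 for ts, tps in by_ts.items()) / n
    var = sum(pvariance(tps) for tps in by_ts.values()) / n
    return math.sqrt(bias2), math.sqrt(var), math.sqrt(bias2 + var)


# Toy data: responses regress toward the middle of the 600-1000 ms range.
trials = [(600, 640), (600, 660), (1000, 940), (1000, 960)]
bias, sd, rmse = summary_stats(trials)
print(bias, sd, rmse)
```

In this toy example the mean responses are 650 and 950 ms, so the bias term dominates the RMSE.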

To perform model comparison, we measured the likelihood of the data given a model and the maximum likelihood model parameters fit to training data. We then computed the ratio of this likelihood to the likelihood of the data given the BLS model, and took the logarithm of that ratio to obtain the log likelihood ratio. To generate confidence intervals, we evaluated the likelihoods using 100 trials of test data that were left out of model fitting. We iterated this process until all the data was used as training data, allowing us to measure the variability of the log likelihood ratio for each subject.
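The bookkeeping for this comparison can be sketched in Python as follows. The sketch assumes that held-out sets are consecutive blocks of 100 trials, which is one possible way to partition the data and not necessarily the scheme used here; the function names are hypothetical, and the per-trial likelihoods passed to `log_likelihood_ratio` would come from the fitted models.

```python
import math


def held_out_folds(n_trials, test_size=100):
    """Yield (train_idx, test_idx) pairs, holding out consecutive blocks of trials."""
    for start in range(0, n_trials, test_size):
        test = list(range(start, min(start + test_size, n_trials)))
        held = set(test)
        train = [i for i in range(n_trials) if i not in held]
        yield train, test


def log_likelihood_ratio(p_model, p_bls):
    """Log of the ratio between the test-data likelihoods of a model and of BLS."""
    return sum(math.log(pm) - math.log(pb) for pm, pb in zip(p_model, p_bls))


folds = list(held_out_folds(250))
print([len(test) for _, test in folds])
print(log_likelihood_ratio([0.2, 0.2], [0.1, 0.1]))  # positive values favor the model over BLS
```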

Finally, we further validated our fitting procedure, analyses, and model comparison on data simulated using a generative process that emulates each model. Using this as ground truth data, we confirmed that our analyses identify the correct model and parameters using similar numbers of trials and subjects (Supplementary Figs 7, 9, and 11).

Acknowledgements

We would like to thank Christopher Stawarz for his help in programming the psychophysical paradigm. We also thank Evan Remington, Jing Wang, Hansem Sohn, Devika Narain, and Eghbal Hosseini for their helpful discussions of experimental results. Finally, we would like to thank Joshua Tenenbaum and Evan Remington for helpful comments on earlier versions of this manuscript, and Rossana Chung for her editorial assistance.

Author Contributions

S.W.E. and M.J. conceived of and designed the tasks. S.W.E. performed the experiments and analyzed the data. S.W.E. and M.J. interpreted the results and wrote the paper.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Supplementary information accompanies this paper at 10.1038/s41598-018-30722-0.

References

- 1.Wolpert DM, Ghahramani Z, Jordan MI. An internal model for sensorimotor integration. Sci. 1995;269:1880–1882. doi: 10.1126/science.7569931. [DOI] [PubMed] [Google Scholar]

- 2.Ariff G, Donchin O, Nanayakkara T, Shadmehr R. A real-time state predictor in motor control: study of saccadic eye movements during unseen reaching movements. J. Neurosci. 2002;22:7721–7729. doi: 10.1523/JNEUROSCI.22-17-07721.2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Shadmehr R, Smith MA, Krakauer JW. Error correction, sensory prediction, and adaptation in motor control. Annu. Rev. Neurosci. 2010;33:89–108. doi: 10.1146/annurev-neuro-060909-153135. [DOI] [PubMed] [Google Scholar]

- 4.Franklin DW, Wolpert DM. Computational mechanisms of sensorimotor control. Neuron. 2011;72:425–442. doi: 10.1016/j.neuron.2011.10.006. [DOI] [PubMed] [Google Scholar]

- 5.Todorov E, Jordan MI. Optimal feedback control as a theory of motor coordination. Nat. Neurosci. 2002;5:1226–1235. doi: 10.1038/nn963. [DOI] [PubMed] [Google Scholar]

- 6.Landy MS, Maloney LT, Johnston EB, Young M. Measurement and modeling of depth cue combination - in defense of weak fusion. Vis. Res. 1995;35:389–412. doi: 10.1016/0042-6989(94)00176-M. [DOI] [PubMed] [Google Scholar]

- 7.Mamassian P, Landy MS. Observer biases in the 3D interpretation of line drawings. Vis. Res. 1998;38:2817–2832. doi: 10.1016/S0042-6989(97)00438-0. [DOI] [PubMed] [Google Scholar]

- 8.van Beers RJ, Sittig AC, Gon JJ. Integration of proprioceptive and visual position-information: An experimentally supported model. J. Neurophysiol. 1999;81:1355–1364. doi: 10.1152/jn.1999.81.3.1355. [DOI] [PubMed] [Google Scholar]

- 9.Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nat. 2002;415:429–433. doi: 10.1038/415429a. [DOI] [PubMed] [Google Scholar]

- 10.Battaglia PW, Jacobs RA, Aslin RN. Bayesian integration of visual and auditory signals for spatial localization. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 2003;20:1391–1397. doi: 10.1364/JOSAA.20.001391. [DOI] [PubMed] [Google Scholar]

- 11.Körding KP, Wolpert DM. Bayesian integration in sensorimotor learning. Nat. 2004;427:244–247. doi: 10.1038/nature02169. [DOI] [PubMed] [Google Scholar]

- 12.Adams WJ, Graf EW, Ernst MO. Experience can change the’light-from-above’ prior. Nat. Neurosci. 2004;7:1057–1058. doi: 10.1038/nn1312. [DOI] [PubMed] [Google Scholar]

- 13.Alais D, Burr D. The ventriloquist effect results from near-optimal bimodal integration. Curr. Biol. 2004;14:257–262. doi: 10.1016/j.cub.2004.01.029. [DOI] [PubMed] [Google Scholar]

- 14.Oruç I, Maloney LT, Landy MS. Weighted linear cue combination with possibly correlated error. Vis. Res. 2003;43:2451–2468. doi: 10.1016/S0042-6989(03)00435-8. [DOI] [PubMed] [Google Scholar]

- 15.Fetsch CR, Pouget A, DeAngelis GC, Angelaki DE. Neural correlates of reliability-based cue weighting during multisensory integration. Nat. Neurosci. 2012;15:146–154. doi: 10.1038/nn.2983. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Knill, D. C. & Richards, W. Perception as Bayesian Inference (Cambridge University Press, 1996).

- 17.Blackwell, D. A. & Girshick, M. A. Theory of Games and Statistical Decisions (Courier Corporation, 1979).

- 18.Stocker AA, Simoncelli EP. Noise characteristics and prior expectations in human visual speed perception. Nat. Neurosci. 2006;9:578–585. doi: 10.1038/nn1669. [DOI] [PubMed] [Google Scholar]

- 19.Narain D, van Beers RJ, Smeets JBJ, Brenner E. Sensorimotor priors in nonstationary environments. J. Neurophysiol. 2013;109:1259–1267. doi: 10.1152/jn.00605.2012. [DOI] [PubMed] [Google Scholar]

- 20.Brainard DH, et al. Bayesian model of human color constancy. J. Vis. 2006;6:1267–1281. doi: 10.1167/6.11.10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Maloney LT, Mamassian P. Bayesian decision theory as a model of human visual perception: testing bayesian transfer. Vis. Neurosci. 2009;26:147–155. doi: 10.1017/S0952523808080905. [DOI] [PubMed] [Google Scholar]

- 22.Ma WJ, Jazayeri M. Neural coding of uncertainty and probability. Annu. Rev. Neurosci. 2014;37:205–220. doi: 10.1146/annurev-neuro-071013-014017. [DOI] [PubMed] [Google Scholar]

- 23.Raphan, M., Simoncelli, E. P., Scholkopf, B., Platt, J. & Hoffman, T. Learning to be bayesian without supervision. In Neural Information Processing Systems, 1145–1152 (2006).

- 24.Jazayeri M, Shadlen MN. Temporal context calibrates interval timing. Nat. Neurosci. 2010;13:1020–1026. doi: 10.1038/nn.2590.

- 25.Simoncelli EP. Optimal estimation in sensory systems. The Cogn. Neurosci. 2009;IV:525–535.

- 26.Kalman RE. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960;82:35–45. doi: 10.1115/1.3662552.

- 27.Simon HA. A behavioral model of rational choice. Q. J. Econ. 1955;69:99–118. doi: 10.2307/1884852.

- 28.Griffiths TL, Vul E, Sanborn AN. Bridging levels of analysis for probabilistic models of cognition. Curr. Dir. Psychol. Sci. 2012;21:263–268. doi: 10.1177/0963721412447619.

- 29.Griffiths TL, Lieder F, Goodman ND. Rational use of cognitive resources: levels of analysis between the computational and the algorithmic. Top. Cogn. Sci. 2015;7:217–229. doi: 10.1111/tops.12142.

- 30.Marr, D. Vision: A computational approach (1982).

- 31.Keele SW, Nicoletti R, Ivry RI, Pokorny RA. Mechanisms of perceptual timing: Beat-based or interval-based judgements? Psychol. Res. 1989;50:251–256. doi: 10.1007/BF00309261.

- 32.Schulze HH. The perception of temporal deviations in isochronic patterns. Percept. Psychophys. 1989;45:291–296. doi: 10.3758/BF03204943.

- 33.Drake C, Botte MC. Tempo sensitivity in auditory sequences: evidence for a multiple-look model. Percept. Psychophys. 1993;54:277–286. doi: 10.3758/BF03205262.

- 34.Ivry RB, Hazeltine RE. Perception and production of temporal intervals across a range of durations: evidence for a common timing mechanism. J. Exp. Psychol. Hum. Percept. Perform. 1995;21:3–18. doi: 10.1037/0096-1523.21.1.3.

- 35.Burr D, Banks MS, Morrone MC. Auditory dominance over vision in the perception of interval duration. Exp. Brain Res. 2009;198:49–57. doi: 10.1007/s00221-009-1933-z.

- 36.Ogden RS, Jones LA. More is still not better: testing the perturbation model of temporal reference memory across different modalities and tasks. Q. J. Exp. Psychol. 2009;62:909–924. doi: 10.1080/17470210802329201.

- 37.Hartcher-O’Brien J, Di Luca M, Ernst MO. The duration of uncertain times: audiovisual information about intervals is integrated in a statistically optimal fashion. PLoS One. 2014;9:e89339. doi: 10.1371/journal.pone.0089339.

- 38.Cai, M. B. & Eagleman, D. M. Duration estimates within a modality are integrated sub-optimally. Front. Psychol. 6 (2015).

- 39.Shi Z, Ganzenmüller S, Müller HJ. Reducing bias in auditory duration reproduction by integrating the reproduced signal. PLoS One. 2013;8:e62065. doi: 10.1371/journal.pone.0062065.

- 40.Di Luca M, Rhodes D. Optimal perceived timing: Integrating sensory information with dynamically updated expectations. Sci. Rep. 2016;6:28563. doi: 10.1038/srep28563.

- 41.Gallistel, C. R. Mental magnitudes. In Space, Time and Number in the Brain, 3–12 (Elsevier, 2011).

- 42.Gallistel CR, Gibbon J. Time, rate, and conditioning. Psychol. Rev. 2000;107:289–344. doi: 10.1037/0033-295X.107.2.289.

- 43.Merchant H, Zarco W, Prado L. Do we have a common mechanism for measuring time in the hundreds of millisecond range? Evidence from multiple-interval timing tasks. J. Neurophysiol. 2008;99:939–949. doi: 10.1152/jn.01225.2007.

- 44.Rakitin BC, et al. Scalar expectancy theory and peak-interval timing in humans. J. Exp. Psychol. Anim. Behav. Process. 1998;24:15–33. doi: 10.1037/0097-7403.24.1.15.

- 45.Gibbon J. Scalar expectancy theory and Weber’s law in animal timing. Psychol. Rev. 1977;84:279–325. doi: 10.1037/0033-295X.84.3.279.

- 46.Getty DJ. Discrimination of short temporal intervals: A comparison of two models. Percept. Psychophys. 1975;18:1–8. doi: 10.3758/BF03199358.

- 47.Acerbi L, Wolpert DM, Vijayakumar S. Internal representations of temporal statistics and feedback calibrate motor-sensory interval timing. PLoS Comput. Biol. 2012;8:e1002771. doi: 10.1371/journal.pcbi.1002771.

- 48.Miyazaki M, Nozaki D, Nakajima Y. Testing Bayesian models of human coincidence timing. J. Neurophysiol. 2005;94:395–399. doi: 10.1152/jn.01168.2004.

- 49.Cicchini GM, Arrighi R, Cecchetti L, Giusti M, Burr DC. Optimal encoding of interval timing in expert percussionists. J. Neurosci. 2012;32:1056–1060. doi: 10.1523/JNEUROSCI.3411-11.2012.

- 50.Miller NS, McAuley JD. Tempo sensitivity in isochronous tone sequences: the multiple-look model revisited. Percept. Psychophys. 2005;67:1150–1160. doi: 10.3758/BF03193548.

- 51.Elliott MT, Wing AM, Welchman AE. Moving in time: Bayesian causal inference explains movement coordination to auditory beats. Proc. Royal Soc. Lond. B: Biol. Sci. 2014;281:20140751. doi: 10.1098/rspb.2014.0751.

- 52.Knill DC, Pouget A. The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 2004;27:712–719. doi: 10.1016/j.tins.2004.10.007.

- 53.Michon, J. A. Timing in temporal tracking (Institute for Perception RVO-TNO Soesterberg, The Netherlands, 1967).

- 54.Mates J. A model of synchronization of motor acts to a stimulus sequence I. Timing and error corrections. Biol. Cybern. 1994;70:463–473. doi: 10.1007/BF00203239.

- 55.Mates J. A model of synchronization of motor acts to a stimulus sequence II. Stability analysis, error estimation and simulations. Biol. Cybern. 1994;70:475–484. doi: 10.1007/BF00203240.

- 56.Pressing J. Error correction processes in temporal pattern production. J. Math. Psychol. 1998;42:63–101. doi: 10.1006/jmps.1997.1194.

- 57.Semjen A, Schulze HH, Vorberg D. Timing precision in continuation and synchronization tapping. Psychol. Res. 2000;63:137–147. doi: 10.1007/PL00008172.

- 58.Repp BH. Sensorimotor synchronization: a review of the tapping literature. Psychon. Bull. Rev. 2005;12:969–992. doi: 10.3758/BF03206433.

- 59.Schulze H-H, Vorberg D. Linear phase correction models for synchronization: Parameter identification and estimation of parameters. Brain Cogn. 2002;48:80–97. doi: 10.1006/brcg.2001.1305.

- 60.Vorberg D, Schulze H-H. Linear Phase-Correction in synchronization: Predictions, parameter estimation, and simulations. J. Math. Psychol. 2002;46:56–87. doi: 10.1006/jmps.2001.1375.

- 61.Stengel, R. F. Optimal control and estimation (Dover Publications Inc., 1994).

- 62.Bowers JS, Davis CJ. Bayesian just-so stories in psychology and neuroscience. Psychol. Bull. 2012;138:389–414. doi: 10.1037/a0026450.

- 63.Shi Z, Burr D. Predictive coding of multisensory timing. Curr. Opin. Behav. Sci. 2016;8:200–206. doi: 10.1016/j.cobeha.2016.02.014.

- 64.Wing AM, Kristofferson AB. Response delays and the timing of discrete motor responses. Percept. Psychophys. 1973;14:5–12. doi: 10.3758/BF03198607.

- 65.Hary D, Moore GP. Synchronizing human movement with an external clock source. Biol. Cybern. 1987;56:305–311. doi: 10.1007/BF00319511.

- 66.Schulze, H.-H. The error correction model for the tracking of a random metronome: Statistical properties and an empirical test. In Macar, F., Pouthas, V. & Friedman, W. J. (eds) Time, Action and Cognition: Towards Bridging the Gap, 275–286 (Springer Netherlands, Dordrecht, 1992).

- 67.Repp BH. Phase correction following a perturbation in sensorimotor synchronization depends on sensory information. J. Mot. Behav. 2002;34:291–298. doi: 10.1080/00222890209601947.

- 68.Barnes R, Jones MR. Expectancy, attention, and time. Cogn. Psychol. 2000;41:254–311. doi: 10.1006/cogp.2000.0738.

- 69.Taatgen N, van Rijn H. Traces of times past: representations of temporal intervals in memory. Mem. Cogn. 2011;39:1546–1560. doi: 10.3758/s13421-011-0113-0.

- 70.Burr D, Della Rocca E, Morrone MC. Contextual effects in interval-duration judgements in vision, audition and touch. Exp. Brain Res. 2013;230:87–98. doi: 10.1007/s00221-013-3632-z.

- 71.Rao RPN, Ballard DH. Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat. Neurosci. 1999;2:79–87. doi: 10.1038/4580.

- 72.Vaziri S, Diedrichsen J, Shadmehr R. Why does the brain predict sensory consequences of oculomotor commands? Optimal integration of the predicted and the actual sensory feedback. J. Neurosci. 2006;26:4188–4197. doi: 10.1523/JNEUROSCI.4747-05.2006.

- 73.Stevenson IH, Fernandes HL, Vilares I, Wei K, Körding KP. Bayesian integration and non-linear feedback control in a full-body motor task. PLoS Comput. Biol. 2009;5:e1000629. doi: 10.1371/journal.pcbi.1000629.

- 74.Friston K. The free-energy principle: a unified brain theory? Nat. Rev. Neurosci. 2010;11:127–138. doi: 10.1038/nrn2787.

- 75.Merchant H, Pérez O, Zarco W, Gámez J. Interval tuning in the primate medial premotor cortex as a general timing mechanism. J. Neurosci. 2013;33:9082–9096. doi: 10.1523/JNEUROSCI.5513-12.2013.

- 76.Wang J, Narain D, Hosseini EA, Jazayeri M. Flexible timing by temporal scaling of cortical responses. Nat. Neurosci. 2018;21:102–110. doi: 10.1038/s41593-017-0028-6.

- 77.Merchant H, Averbeck BB. The computational and neural basis of rhythmic timing in medial premotor cortex. J. Neurosci. 2017;37:4552–4564. doi: 10.1523/JNEUROSCI.0367-17.2017.

- 78.Komura Y, et al. Retrospective and prospective coding for predicted reward in the sensory thalamus. Nature. 2001;412:546–549. doi: 10.1038/35087595.

- 79.Mello GBM, Soares S, Paton JJ. A scalable population code for time in the striatum. Curr. Biol. 2015;25:1113–1122. doi: 10.1016/j.cub.2015.02.036.

- 80.Xu M, Zhang S-Y, Dan Y, Poo M-M. Representation of interval timing by temporally scalable firing patterns in rat prefrontal cortex. Proc. Natl. Acad. Sci. 2014;111:480–485. doi: 10.1073/pnas.1321314111.

- 81.Petzschner FH, Glasauer S. Iterative Bayesian estimation as an explanation for range and regression effects: A study on human path integration. J. Neurosci. 2011;31:17220–17229. doi: 10.1523/JNEUROSCI.2028-11.2011.

- 82.Hudson TE, Maloney LT, Landy MS. Optimal compensation for temporal uncertainty in movement planning. PLoS Comput. Biol. 2008;4:e1000130. doi: 10.1371/journal.pcbi.1000130.

- 83.Kwon O-S, Knill DC. The brain uses adaptive internal models of scene statistics for sensorimotor estimation and planning. Proc. Natl. Acad. Sci. USA. 2013;110:E1064–73. doi: 10.1073/pnas.1214869110.

- 84.Gilden DL, Thornton T, Mallon MW. 1/f noise in human cognition. Science. 1995;267:1837–1839. doi: 10.1126/science.7892611.

- 85.Farrell S, Wagenmakers E-J, Ratcliff R. 1/f noise in human cognition: is it ubiquitous, and what does it mean? Psychon. Bull. Rev. 2006;13:737–741. doi: 10.3758/BF03193989.

- 86.Körding KP, Wolpert DM. The loss function of sensorimotor learning. Proc. Natl. Acad. Sci. USA. 2004;101:9839–9842. doi: 10.1073/pnas.0308394101.

- 87.Acerbi L, Vijayakumar S, Wolpert DM. On the origins of suboptimality in human probabilistic inference. PLoS Comput. Biol. 2014;10:e1003661. doi: 10.1371/journal.pcbi.1003661.

- 88.Drugowitsch, J., Wyart, V., Devauchelle, A.-D. & Koechlin, E. Computational precision of mental inference as critical source of human choice suboptimality. Neuron (2016).

- 89.Ma WJ, Husain M, Bays PM. Changing concepts of working memory. Nat. Neurosci. 2014;17:347–356. doi: 10.1038/nn.3655.

Associated Data
