Abstract.

Magnetic resonance imaging (MRI) provides a number of advantages over computed tomography (CT) for radiation therapy treatment planning; however, MRI lacks the electron density information necessary for accurate dose calculation. We propose a dictionary-learning-based method to derive electron density information from MR images. Specifically, we first partition a given MR image into a set of patches, for which we use a joint dictionary learning method to directly predict a CT patch as a structured output. A feature selection method is then used to ensure prediction robustness. Finally, we combine all the predicted CT patches to obtain the final prediction for the given MR image. This prediction technique was validated for a clinical application using 14 patients with brain MR and CT images. The peak signal-to-noise ratio (PSNR), mean absolute error (MAE), normalized cross-correlation (NCC), and similarity index (SI) for air, soft-tissue, and bone regions were used to quantify the prediction accuracy. The mean ± std of PSNR, MAE, and NCC were 22.4 ± 1.9 dB, 82.6 ± 26.1 HU, and 0.91 ± 0.04 for the 14 patients. The SIs for air, soft-tissue, and bone regions were 0.98 ± 0.01, 0.88 ± 0.03, and 0.69 ± 0.08. These indices demonstrate the CT prediction accuracy of the proposed learning-based method. This CT image prediction technique could be used as a tool for MRI-based radiation treatment planning, or for PET attenuation correction in a PET/MRI scanner.

Keywords: MRI-based treatment planning, pseudo computed tomography, dictionary learning, feature selection

1. Introduction

Magnetic resonance imaging (MRI) has several important advantages over computed tomography (CT) for radiation treatment planning.1 Chiefly, MRI offers significantly better soft-tissue contrast than CT, which increases the accuracy and reliability of target delineation in multiple anatomic sites. A treatment planning process that uses MRI as the sole imaging modality could eliminate systematic MRI-CT coregistration errors, reduce cost, minimize radiation exposure to the patient, and simplify clinical workflow.2 Despite these advantages, MRI contains neither unique nor quantitative information on electron density, which is needed for accurate dose calculations and for generating reference images for patient setup. Therefore, in the proposed MRI-only treatment planning process, the electron density information otherwise obtained from CT must be derived from the MRI by synthesizing a so-called pseudo CT (PCT).3,4

Accurate methods for estimating PCT from MRI are crucially needed. The existing estimation methods can be broadly classified into the following four categories:

Atlas-based methods: These methods use single or multiple atlases with deformable registrations.5–8 They can also incorporate pattern recognition techniques to estimate electron density.9,10 The main drawback is that their accuracy depends on that of the intersubject registration.

Classification-based methods: These methods first manually or automatically segment MR images into bone, air, fat and soft-tissue classes, and then assign a uniform electron density to each class.11–16 However, these methods may not reliably be able to differentiate bone from air due to the ambiguous intensity relationship between bone and air using MRI.

Sequence-based methods: These methods create PCTs by using intensity information from standard MR, specialized MR sequences such as the ultrashort echo time (UTE), or a combination of the two.17–25 However, the current image quality of UTE sequences is unsatisfactory for accurate delineation of blood vessels from bone; both similarly appear dark.14,25

Learning-based methods: In these methods, a map between CT and MRI is learned from a training data set and then used to predict the PCT for the target MRI.4,26–32 Recent work has proposed a Gaussian mixture regression (GMR) method for learning from UTE MR sequences.19,33–36 Owing to the lack of spatial information, the quality of the GMR method was suboptimal. Li et al.37 proposed using convolutional neural networks. Random forests have also been used for learning the map.38–41 Huynh et al.41 used the structural random forest to estimate a PCT image from its corresponding MRI. However, redundant features often contain several biases, which may affect the training of the decision trees and thus cause prediction errors. More recently, dictionary-learning-based methods have emerged as another group of learning-based methods.3,42,43 Andreasen et al.3,42 used a patch-based method to generate PCTs based on conventional T1-weighted MRI sequences. They refined this method for use in MR-based pelvic radiotherapy. Because the iteration is parallelizable, a fast patch-based PCT synthesis accelerated by a graphics processing unit (GPU) was proposed for PET/MR attenuation correction.43 Aouadi et al.44 proposed a sparse patch-based method applied to MR-only radiotherapy planning.

Since recent dictionary-learning-based methods have not taken patient-specific features into consideration when representing an image patch, the purpose of this work is to address this issue through the following improvements.

1. Position and deformation balance: Three-dimensional (3-D) rotation invariant local binary pattern (3-D RILBP) features are designed with multiradii level sensitivity and multiderivate image modeling to balance robustness to image deformation against sensitivity to small position differences.

2. Anatomic signature: A feature selection method is introduced to identify the informative and salient features that are discriminative for clustering each voxel into bone, air, and soft tissue, by minimizing the least absolute shrinkage and selection operator (LASSO) energy function. The identified features, known as the anatomic signature, are used to perform dictionary learning.

3. Joint dictionary learning: To address the challenge of training on large amounts of data, local self-similarity is applied to restrict the training domain for each patch of a new arrival MRI. Then, a joint dictionary learning model is proposed to sparsely represent the new MRI patch by simultaneously training the dictionaries of MRI anatomic signatures and CT intensities. The alternating direction method (ADM)45 is introduced to train the coupled dictionaries of MR and CT by feeding both to distributed optimization. The basis pursuit (BP)46 method combined with the well-trained dictionaries is utilized for the sparse representation of a new MRI patch.

This paper is organized as follows: in the methods, we provide an overview of the proposed MRI-based PCT framework, followed by details on the anatomic signature, the construction of the coupled similar patch-based dictionary via joint dictionary learning, and the prediction of the target CT by sparse representation reconstruction. In the results, we compare our approach with state-of-the-art methods based on dictionary learning, and we conclude that our dictionary-learning-based PCT estimation framework could be a useful tool for MRI-based radiation treatment planning for rigid structures.

2. Methods

2.1. System Overview

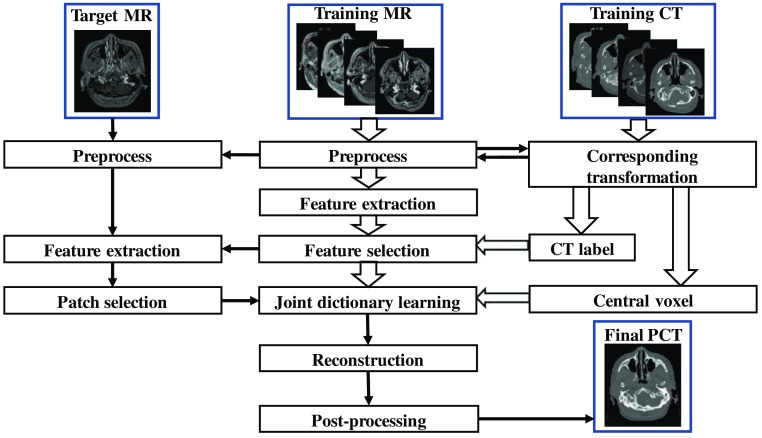

For a given pair of MR and CT training images, the CT image was used as the regression target of the MR image. We assumed that the training data had been preprocessed by removing noise and uninformative regions and had been aligned and normalized using the mean and standard deviation. In the training stage, for each 3-D patch of the MR image, we extracted 3-D RILBP features with multiradii-level sensitivity and multiderivate image modeling, which includes the original MR image and the derivate images filtered through standard deviation and mean filters. The mean-filtered and standard-deviation-filtered images were obtained by assigning each voxel the mean and standard deviation, respectively, of the voxels in a spherical neighborhood around it. The CT label of each patch’s central voxel was obtained using fuzzy C-means labeling. The most informative features for clustering the labels were identified by a LASSO operator; their statistical discriminative power was evaluated by each feature’s Fisher’s score.47 For each patch of a new arrival MRI, the training data were collected by searching for similar neighbors within a bounded region. Then, we used a joint dictionary learning method to simultaneously train the sparse representation dictionaries of both the anatomic signatures and the CT intensities within the searched region. Next, the sparse coefficients of this patch were generated by the BP46 method under the dictionary of MRI features. Finally, we used these coefficients to reconstruct the PCT image under the well-trained dictionary of CT. A brief workflow of our method is shown in Fig. 1.
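The mean and standard deviation filtering over a spherical neighborhood described above can be sketched as follows. This is a minimal NumPy illustration, not the implementation used in this work: the function names are hypothetical, the radius is given in voxels (converting the millimeter radii to voxels depends on the image spacing), and edge replication at the borders is an assumption.

```python
import numpy as np


def spherical_offsets(radius):
    """Integer voxel offsets (dz, dy, dx) that fall inside a sphere."""
    r = int(np.floor(radius))
    grid = np.arange(-r, r + 1)
    dz, dy, dx = np.meshgrid(grid, grid, grid, indexing="ij")
    inside = dz**2 + dy**2 + dx**2 <= radius**2
    return np.stack([dz[inside], dy[inside], dx[inside]], axis=1)


def spherical_mean_std(volume, radius):
    """Return (mean_map, std_map) over a spherical neighborhood.

    Borders are padded by edge replication (an assumption of this sketch).
    """
    volume = np.asarray(volume, dtype=float)
    offsets = spherical_offsets(radius)
    r = int(np.floor(radius))
    padded = np.pad(volume, r, mode="edge")
    nz, ny, nx = volume.shape
    total = np.zeros_like(volume)
    total_sq = np.zeros_like(volume)
    for dz, dy, dx in offsets:
        # Shifted view of the padded volume for this neighbor offset.
        shifted = padded[r + dz:r + dz + nz,
                         r + dy:r + dy + ny,
                         r + dx:r + dx + nx]
        total += shifted
        total_sq += shifted**2
    mean = total / len(offsets)
    # Clamp tiny negative values caused by floating-point rounding.
    var = np.maximum(total_sq / len(offsets) - mean**2, 0.0)
    return mean, np.sqrt(var)
```

The two returned maps play the role of the derivate images from which features are extracted alongside the original MR image.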

Fig. 1.

The brief architecture of the proposed method.

2.2. Preprocessing

A rigid intrasubject registration was used to align each pair of brain CT and MR images of the same subject. All pairs were aligned onto a common space by applying a rigid-body intersubject registration. All the training pairs were further registered to a new test MR image. We performed denoising, inhomogeneity correction, and histogram matching for the MR images. To reduce the computational cost of training the models, a mask was applied to all MR images to remove the uninformative regions, i.e., the air region outside the body contour.

2.3. Feature Extraction

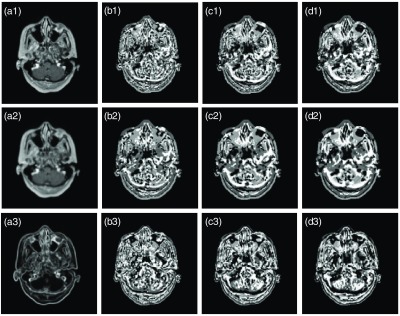

Based on the approach proposed by Pauly et al.,48 in which two-dimensional (2-D) LBP-like features were used for predicting organ bounding boxes in Dixon MR image sets, a 3-D rotation invariant version was implemented for this task. The 3-D version differs from the 2-D version in that the neighborhood voxels are defined not by a circular neighborhood but by a spherical one, which can be handled by the RILBP operator proposed by Fehr and Burkhardt.49 To address MRI artifacts, we used multiderivate image modeling to extract features from different image models, and also used multiple spatial scales with fixed patch size to achieve the feature’s multiradius level sensitivity, as recommended previously.41 For 3-D RILBP feature extraction, we used a cube with size of and a sphere with a 3-mm radius for the first scale and then added 2 and 4 mm to those values for the second and third scales, respectively. The 3-D RILBP feature maps of multilevel and multiderivate image modeling in the transverse plane are shown in Fig. 2, where each voxel of a feature map is the central feature of the feature vector of the 3-D patch centered at that voxel’s position.

Fig. 2.

Example of 3-D RILBP feature maps. (a1–a3) The image sets, including the original MR image, the mean of the MR image, and the standard deviation of the MR image. The corresponding feature maps are shown in (b1–b3), (c1–c3), and (d1–d3). In (b1–b3), a sphere with radius 3 mm and cubes of size were used. In (c1–c3), the sphere radius was 5 mm and cube size was . In (d1–d3), the sphere radius was 7 mm and cube size was .

2.4. Feature Selection

We denote as the extracted features of MR image patches centered at each position , is the set of CT voxels at each position . Recent studies50–52 have shown the potential drawback of using extremely high-dimensional features for existing learning methods. One major concern is that high-dimensional training data may contain bias and redundant components, which could overfit the learning models and in turn affect the final learning robustness. To address this challenge, we propose a feature selection method using a logistic regression function, as feature selection can be regarded as a binary regression task with respect to each dimension of the original feature.50,51 Further, the goal of feature selection is to identify and group a small subset of the most informative features. Thus, our feature selection is accomplished by implementing the sparsity constraint in the logistic regression, i.e., minimizing the following LASSO energy loss function:

| (1) |

where denotes the original feature, is a sparse coefficient vector that denotes a binary coefficient, with nonzero denoting that the corresponding features are relevant to the anatomic classification, and zero denoting that the nonrelevant features are eliminated during the classifier learning process. denotes the intercept scalar. is an anatomical binary labeling function. is an optimization scalar and is a regularization parameter. denotes the number of voxel samples and denotes the length of vector . is computed from the discriminative power, i.e., Fisher’s score,47 of each feature in the feature vector.

The optimization tries to punish the features that have smaller discriminative power, i.e., uninformative features are eliminated from training the learning-based model. To implement this punishment, is used to be divided by . The optimal solution of intercept scalar and can be estimated by Nesterov’s53 method. Denoted as , the informative features corresponding to the nonzero entries in were selected, which have superior discriminatory power in distinguishing bone, air, and soft-tissues from each other. We defined as the MRI patch’s anatomic signature.
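To make the selection step concrete, the following sketch fits a sparse logistic regression in which each coefficient's penalty is divided by its normalized Fisher's score, so that weakly discriminative features are penalized more heavily. It is an illustration under stated assumptions rather than the solver used in this work: plain proximal gradient descent is substituted for Nesterov's method, the exact weighting form, the small stabilizing constants, and the names `fisher_score` and `select_features` are hypothetical, and only the default regularization value of 0.05 is taken from Table 1.

```python
import numpy as np


def fisher_score(X, y):
    """Two-class Fisher's score per feature: (mu1 - mu0)^2 / (var1 + var0)."""
    X0, X1 = X[y == 0], X[y == 1]
    num = (X1.mean(axis=0) - X0.mean(axis=0)) ** 2
    den = X1.var(axis=0) + X0.var(axis=0) + 1e-12
    return num / den


def select_features(X, y, lam=0.05, n_iter=500):
    """Weighted-L1 logistic regression solved by proximal gradient descent.

    Returns (beta, intercept, omega); nonzero entries of beta mark the
    selected features.
    """
    n, d = X.shape
    omega = fisher_score(X, y)
    omega = omega / (omega.max() + 1e-12)      # normalize scores to (0, 1]
    penalty = lam / (omega + 1e-3)             # low score -> large penalty
    Xa = np.hstack([X, np.ones((n, 1))])       # last column is the intercept
    # Step size 1/L, with L the Lipschitz constant of the mean logistic loss.
    step = 4.0 * n / (np.linalg.norm(Xa, 2) ** 2)
    w = np.zeros(d + 1)
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(Xa @ w)))
        w = w - step * (Xa.T @ (p - y) / n)
        # Soft-threshold the feature weights; the intercept is not penalized.
        w[:d] = np.sign(w[:d]) * np.maximum(np.abs(w[:d]) - step * penalty, 0.0)
    return w[:d], w[d], omega
```

In the two-round scheme, such a routine would be run once with air versus nonair labels and once with bone versus soft-tissue labels, and the features with nonzero coefficients would form the anatomic signature.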

Two-step feature selection was applied by first labeling air and nonair materials in the first round and then labeling bone and soft-tissue in the second round. The air, soft-tissue, and bone labels, as shown in Fig. 3, were roughly segmented by the fuzzy C-means clustering method. Structures adjacent to both bone and air are more difficult to disambiguate accurately than voxels of other regions. Therefore, more samples were drawn from the bone and air regions during the feature selection process. To balance the three regional samples, ratio parameters were used to control the ratio of the number of samples drawn from air, bone, and soft-tissue regions.
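The rough three-class labeling of CT intensities can be illustrated with a compact fuzzy C-means routine. This is a sketch on one-dimensional intensities only; the fuzzifier of 2, the evenly spaced initial centers, and the function name `fuzzy_c_means_1d` are assumptions rather than settings reported here.

```python
import numpy as np


def fuzzy_c_means_1d(values, n_clusters=3, m=2.0, n_iter=100, tol=1e-6):
    """Cluster scalar intensities; labels are ordered by ascending center.

    With three clusters on CT values, label 0 is the lowest-intensity class
    (air), label 1 the middle class (soft tissue), label 2 the highest (bone).
    """
    x = np.asarray(values, dtype=float).ravel()
    # Deterministic initialization between the intensity extremes (assumption).
    centers = np.linspace(x.min(), x.max(), n_clusters)
    for _ in range(n_iter):
        dist = np.abs(x[:, None] - centers[None, :]) + 1e-12
        inv = dist ** (-2.0 / (m - 1.0))
        u = inv / inv.sum(axis=1, keepdims=True)   # memberships, rows sum to 1
        um = u ** m
        new_centers = (um * x[:, None]).sum(axis=0) / um.sum(axis=0)
        converged = np.max(np.abs(new_centers - centers)) < tol
        centers = new_centers
        if converged:
            break
    order = np.argsort(centers)
    labels = np.argmax(u[:, order], axis=1)
    return labels, centers[order]
```

The hard labels are taken as the class of maximum membership; the memberships themselves could also be kept if a softer labeling is preferred.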

Fig. 3.

Example of the CT image label. (a) and (c) The different slices of CT image, (b) and (d) the corresponding labels, where black area denotes the air label, the white area is the bone label and the gray area is the soft-tissue label.

An example is given in Fig. 4, in which (a) and (b) show a paired training MR and CT image with two types of samples. The samples from the bone region are indicated by red asterisks, whereas those selected from soft-tissue regions are indicated by green circles. Figure 4(c) shows a scatter plot of the samples for two randomly selected features without feature selection, whereas Fig. 4(d) shows a scatter plot of the samples for the two top-ranked features, as evaluated by Fisher’s score,47 after feature selection. It can be seen that, with feature selection, bone regions can be distinguished from soft tissue.

Fig. 4.

An example of feature selection.

2.5. Pseudo Computed Tomography Synthesis

After preselecting the most informative features as an anatomic signature, we propose a joint dictionary learning framework to handle the challenge of large-scale data training. The aim was to estimate the target CT intensity from an MRI in a patch-wise (i.e., per group of voxels) manner based on local self-similarity, using the joint dictionary learning and sparse representation technique. If a patch (with a size of voxels) in the new arrival MR image is similar to a certain patch of the training MR images, then the corresponding CT central voxels for these two MR patches are highly correlated. Each patch of the new arrival MR image can identify several similar instances among the properly selected training MR patches via a sparse learning technique when the training data set is sufficiently large. Therefore, in this paper the correlation between MR anatomic signatures and CT central voxels is constructed by joint dictionary learning, which tracks the same sparse coefficients for both MR patches and CT intensities. To this end, the framework involves a training stage and a prediction stage. In the training stage, the following two steps are used to efficiently learn the correlation between the MR anatomic signature and the CT central voxel within the selected similar templates.

1. We selected a small number of similar local training MR patches and their corresponding CT central voxels in distinctive regions as the dataset for our next training step.

2. For the small set of training data, the coupled dictionaries were adaptively trained to sparsely represent the MR anatomic signature and CT intensity by joint dictionary learning, where each dictionary column is initialized by an MR signature and its corresponding CT intensity.

The prediction stage consists of the following two steps:

1. For each target MR patch, we extracted similar training MR patches and the corresponding coupled dictionary so that the target patch can be sparsely represented by the selected dictionary.

2. The target CT voxel was predicted by applying sparse reconstruction with the dictionary of training CT intensities (i.e., a linear combination of CT dictionary columns) using the previously obtained sparse coefficients.

2.5.1. Patch selection based on local similarity

Before constructing the coupled dictionary, patch selection is needed among all candidate MR patches to reduce the computational complexity and to enhance the prediction accuracy by excluding irrelevant patches based on reasonable similarity metrics. Specifically, the criterion for patch similarity is defined between two MRI patches and by a reflecting weight , which can be computed by calculating distance between both CT intensity and MRI anatomic signature

| (2) |

where is the number of voxels in the target patch, is a smoothing parameter, is the standard deviation of all the patches and features, and denotes the balance parameter of the similarity between anatomic signature and image intensity. First, the new arrival MRI is normalized by mean and standard deviation. Then, the training set of the new MRI patch’s central position can be obtained by sorting the -nearest patches within a specific searching region

| (3) |

where reflecting the local similarities between new MRI patch and training MRI patch . is utilized in our next step of joint dictionary learning.
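A possible shape of this selection step is sketched below. Because the weight expression of Eq. (2) is not reproduced in this text, the Gaussian-type weight used here is only one plausible form consistent with the description (a smoothing parameter, a balance parameter, and normalization by patch size and standard deviation); it should not be read as the paper's formula. The function names are hypothetical, and the defaults of 1, 0.5, and 25 follow Table 1.

```python
import numpy as np


def similarity_weights(target_sig, target_patch, train_sigs, train_patches,
                       h=1.0, alpha=0.5):
    """Weight in (0, 1] for every training patch (assumed Gaussian form).

    train_sigs:    (n_train, n_features) anatomic signatures
    train_patches: (n_train, n_voxels) flattened MR patches
    """
    sigma_sig = train_sigs.std() + 1e-12
    sigma_patch = train_patches.std() + 1e-12
    # Squared distances, normalized by length and spread of each descriptor.
    d_sig = ((train_sigs - target_sig) ** 2).sum(axis=1)
    d_sig = d_sig / (target_sig.size * sigma_sig**2)
    d_patch = ((train_patches - target_patch) ** 2).sum(axis=1)
    d_patch = d_patch / (target_patch.size * sigma_patch**2)
    return np.exp(-(alpha * d_sig + (1.0 - alpha) * d_patch) / h**2)


def k_most_similar(weights, k=25):
    """Indices of the k training patches with the largest weights."""
    k = min(k, len(weights))
    return np.argsort(weights)[::-1][:k]
```

In practice the candidate patches passed to `similarity_weights` would be restricted to the search window around the target position, which keeps the per-patch training set small.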

2.5.2. Joint dictionary learning

Let denote a database containing a set of anatomic signatures, MRI patches, and corresponding CT central voxels: where denotes the anatomic signature, denotes the multidimensional space, and denotes the corresponding MRI patch and CT central voxel intensity value, respectively.

To train the locally adaptive coupled dictionary for each preselected training database, we initialized the first iteration of the dictionary with each coupled column comprising a pair of similar MRI anatomic signature and a corresponding central voxel of CT. Let denote the set of weighted anatomic signatures of training MRI patches and denote the set of corresponding CT intensities. The element-wise product of is obtained by Fisher’s score47 as shown in Sec. 2.4. The goal of applying omega is to further enforce the influence of the most discriminative signatures by an adaptive weighting parameter. Then, the initialization of the coupled dictionary is generated as .

In the following iterations, supposing we have the roughly constructed dictionary of MRI signatures and of CT intensities on training data from the previous iteration, we utilize the joint dictionary learning method to simultaneously update and with the core idea that the sparse representation tracks the same sparse coefficients for both MR signatures and CT intensities. Let denote the weights corresponding to each MRI patch computed by local self-similarity in Eq. (2), where . Then, each training MRI anatomic signature and its corresponding CT intensity can be sparsely represented by a pair of coupled and , respectively. Let denote the set of sparse coefficients. Then the joint dictionary learning framework can be described as follows:

| (4) |

where is the regularization parameter of column sparse term, denotes the parameter of penalization, is the joint -norm, and is ’th row of the matrix . Equation (4) can be solved by the ADM.45
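A row-wise joint norm of this kind is commonly the mixed norm that sums the Euclidean norms of the rows of the coefficient matrix, and alternating-direction solvers typically handle it through its proximal operator, which shrinks whole rows toward zero. The sketch below shows both pieces in NumPy under that assumption; it is not the full solver for Eq. (4), and the function names are hypothetical.

```python
import numpy as np


def l21_norm(A):
    """Sum of the Euclidean norms of the rows of A."""
    return float(np.sqrt((A**2).sum(axis=1)).sum())


def prox_l21(A, t):
    """Row-wise shrinkage: proximal operator of t * l21_norm at A.

    Rows whose norm is at most t are set to zero; the other rows are
    scaled by (1 - t / norm), so entire rows are kept or discarded together.
    """
    norms = np.sqrt((A**2).sum(axis=1, keepdims=True))
    scale = np.maximum(1.0 - t / np.maximum(norms, 1e-12), 0.0)
    return A * scale
```

Because a row of the coefficient matrix corresponds to one dictionary column used across all training samples, shrinking whole rows encourages the MR signatures and CT intensities to share the same small set of active columns.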

2.5.3. Prediction

For each patch of a new arrival MRI, the sparse representation of its anatomic signature under dictionary can be calculated by a BP optimization46

| (5) |

where denotes the anatomic signature of each new MRI patch. Finally, with the corresponding dictionary of CT , we utilized the coefficient to reconstruct the PCT intensity by a linear combination of dictionary columns

| (6) |
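The two prediction steps, sparse coding of the MR signature followed by a linear combination of CT dictionary entries with the same coefficients, can be sketched as follows. As an assumption of this sketch, the basis pursuit problem is replaced by its penalized (LASSO) form and solved with plain iterative soft thresholding; the penalty value and the names `sparse_code_ista` and `predict_ct` are hypothetical.

```python
import numpy as np


def sparse_code_ista(D, x, lam=0.1, n_iter=300):
    """Minimize 0.5 * ||x - D a||^2 + lam * ||a||_1 by soft thresholding."""
    step = 1.0 / (np.linalg.norm(D, 2) ** 2)   # 1 / Lipschitz constant
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        a = a - step * (D.T @ (D @ a - x))      # gradient step on the data term
        a = np.sign(a) * np.maximum(np.abs(a) - step * lam, 0.0)
    return a


def predict_ct(D_mr, D_ct, signature, lam=0.1):
    """Code the MR signature on D_mr, then reconstruct CT from D_ct.

    D_mr: (n_features, K) dictionary of MR anatomic signatures
    D_ct: (K,) CT intensities paired column by column with D_mr
    """
    a = sparse_code_ista(D_mr, signature, lam)
    return float(D_ct @ a), a
```

The key design point is that the coefficients are estimated from the MR dictionary alone and then reused unchanged on the CT dictionary, which is what the coupled training is meant to make valid.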

Our proposed algorithm is summarized in Algorithm 1. The default parameter setting of Algorithm 1 is shown in Table 1.

Algorithm 1.

Pseudo CT prediction using anatomic signature and joint dictionary learning.

1. For each training MR image patch , extract the multiscale and multilevel 3-D RILBP feature .

2. Use fuzzy C-means to segment each corresponding CT into three labels that represent the CT values in the range of bone, soft-tissue and air.

3. For the coupled training MRI features and corresponding CT labels, apply LASSO logistic regression Eq. (1) to select the informative features as an anatomic signature.

4. Use Fisher’s score47 to measure the discriminant power of the anatomic signature to separate bone, air and soft-tissue, and normalize to such that .

5. For each new arrival MRI image patch , select its similar patches from the training patches by computing the weight from Eq. (2), and sorting the K-nearest patches by Eq. (3).

6. For the anatomic features of and corresponding CT voxels , initialize the coupled dictionaries: and .

7. Use joint dictionary learning [Eq. (4)] to train the coupled dictionary .

8. For each new arrival MRI patch, use Eq. (5) to obtain the sparse representation of learned dictionary , and then use Eq. (6) to reconstruct the pseudo CT intensity.

Table 1.

Default parameter setting.

| Parameter | Default value | Meaning |
|---|---|---|
| Window size | | MRI searching region size |
| Patch size | | MRI patch size (for feature extraction) |
| | 25 | Number of similar patches |
| | 63 | Length of anatomic signature |
| | 0.05 | Regularization parameter in Eq. (1) |
| | 1 | Smoothing parameter of weight estimation in Eq. (2) |
| | 0.5 | Balancing parameter in Eq. (2) |
| | 1 | Penalty parameter of joint dictionary learning in Eq. (4) |
| | 0.5 | Regularization parameter of joint dictionary learning in Eq. (4) |

3. Experiments

3.1. Datasets and Quantitative Measurements

To test our prediction method, we applied it to 14 paired brain MR and CT images. All patients’ MRI () and CT () data were acquired using Siemens MR and CT scanners. To quantitatively characterize the prediction accuracy, we used four widely used metrics: mean absolute error (MAE), peak signal-to-noise ratio (PSNR), normalized cross correlation (NCC), and similarity index (SI)54 for air, soft-tissue, and bone regions. MAE measures how close predictions are to the actual outcomes. PSNR is an engineering term for the ratio between the maximum possible power of a signal and the power of the corrupting noise that affects the fidelity of its representation. NCC is a measure of similarity between two series as a function of the displacement of one relative to the other. SI has been used to evaluate the image quality of nonrigid image registration. The four metrics are defined as

| (7) |

| (8) |

| (9) |

| (10) |

where is the ground-truth CT image, is the corresponding PCT image, is the maximal intensity value of and , and is the number of voxels in the image. and are the mean of CT and PCT image, respectively. and are the standard deviation of CT and PCT image, respectively. and denote the binary mask (air, soft-tissue, and bone) of CT and PCT image, respectively. We set intensity value HU as air, HU as bone, and the rest as soft-tissue. is the number of elements in set . In general, a better prediction has lower MAE, and higher PSNR, NCC, and SI values.
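For readers who want to reproduce these measurements, the sketch below computes the four metrics with NumPy. Since Eqs. (7)–(10) are not reproduced in this text, the formulas are the conventional definitions that match the symbol descriptions above (PSNR from the mean squared error with the maximal intensity as peak, NCC from mean-centered products scaled by the standard deviations, and SI as twice the mask overlap over the summed mask sizes); treat them as assumptions rather than the paper's exact expressions. The tissue masks are passed in directly because the HU thresholds are not restated here.

```python
import numpy as np


def mae(ct, pct):
    """Mean absolute error in the units of the inputs (HU)."""
    return float(np.mean(np.abs(ct - pct)))


def psnr(ct, pct):
    """Peak signal-to-noise ratio in dB, peak = max intensity of both images."""
    peak = max(float(ct.max()), float(pct.max()))
    mse = float(np.mean((ct - pct) ** 2))
    return float(10.0 * np.log10(peak**2 / mse))


def ncc(ct, pct):
    """Normalized cross-correlation at zero displacement."""
    num = np.mean((ct - ct.mean()) * (pct - pct.mean()))
    return float(num / (ct.std() * pct.std()))


def similarity_index(mask_ct, mask_pct):
    """Overlap of two binary masks: 2 |A and B| / (|A| + |B|)."""
    inter = np.logical_and(mask_ct, mask_pct).sum()
    return float(2.0 * inter / (mask_ct.sum() + mask_pct.sum()))
```

A tissue-specific SI would be obtained by thresholding both the CT and the PCT with the same HU limits and passing the two resulting masks to `similarity_index`.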

3.2. Parameter Setting

To assess the influence of the parameters of our proposed algorithm (shown in Table 1), we evaluated how varying one parameter affects performance while fixing the other parameters to their default settings in Table 1. The parameter testing experiment was performed by testing on one patient and training on the other 13 patients, and by comparing the three metrics in Eqs. (7)–(9). We tested the regularization parameter of Eq. (1) over the range of 0.01 to 0.1 and fixed the other parameters to the default settings in Table 1. The performance was not sensitive around . The smoothing parameter and balancing parameter in Eq. (2) were empirically set to 1 and 0.5, respectively, based on their performance in our experiments. In Eq. (4), the parameters and were initialized to 1 and 0.5; in the following iterations, these two parameters were updated by ADM as previously recommended.45

We also fixed the patch size and tested different window sizes, and vice versa. As seen in Table 2, the lowest MAE (75.9 HU), together with an NCC of 0.93, was obtained with a patch size of 5 × 5 × 5 and a window size of 9 × 9 × 9.

Table 2.

The performance with different patch size and window size.

| Patch size | Window size 5 × 5 × 5 | Window size 7 × 7 × 7 | Window size 9 × 9 × 9 |
|---|---|---|---|
| 3 × 3 × 3 | PSNR = 22.7 dB; NCC = 0.93; MAE = 76.1 HU | PSNR = 22.9 dB; NCC = 0.93; MAE = 79.3 HU | PSNR = 22.7 dB; NCC = 0.92; MAE = 83.6 HU |
| 5 × 5 × 5 | N/A | PSNR = 23.7 dB; NCC = 0.93; MAE = 76.1 HU | PSNR = 23.7 dB; NCC = 0.93; MAE = 75.9 HU |
| 7 × 7 × 7 | N/A | N/A | PSNR = 23.1 dB; NCC = 0.93; MAE = 76.9 HU |

Figures 5(a1)–5(a3) show the PSNR, MAE, and NCC metrics as a function of the number of similar patches, with the other parameters fixed to the default settings in Table 1. These curves indicate that 23 similar patches are sufficient for a good prediction. However, because MAE was the metric given the highest priority, the change in PSNR from 23 to 25 similar patches carries less weight than the change in MAE, and 25 similar patches was therefore chosen as the optimal number for prediction.

Fig. 5.

(a1–a3) The MAE, PSNR, and NCC performance with different number of similar patches and (b1–b3) the MAE, PSNR, and NCC performance with different number of selected features.

The number of selected features also influences the learning performance. If most features are eliminated by feature selection, the precision of PCT prediction may be diminished due to insufficient information. Conversely, if the majority of features are selected, uninformative data may also affect the accuracy of the proposed method. To measure the performance as a function of the number of selected features, we evaluated the PSNR, NCC, and MAE metrics as shown in Figs. 5(b1)–5(b3), with the other parameters fixed as shown in Table 1. One can observe that the optimal number of selected features is 63.

3.3. Contribution of Feature Selection and Joint Dictionary Learning

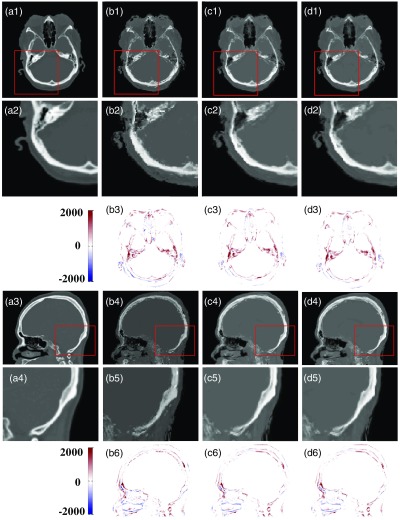

In Sec. 2, we introduced two enhancements over the classic feature-based method, i.e., feature selection and joint dictionary learning. Figure 6 shows detailed visual comparisons between the classic feature-based (CF), feature selection-based (FS), and feature selection with joint dictionary learning-based (FSJDL) methods. Table 3 quantifies these observations using leave-one-out experiments on the 14 pairs of MR-CT images. It can be seen in Fig. 6 that the FSJDL method best preserves continuity, coalition, and smoothness in the prediction results. As the prediction results of the methods differ primarily in regions where bone is adjacent to air, visual results within such regions are shown in Figs. 6(b2)–6(d2) and 6(b5)–6(d5). Table 3 shows the consistent improvement of FS over the CF method, and the further improvement of FSJDL over the FS and CF methods. The prediction estimated by FS is statistically superior to that of the CF method for MAE, NCC, and PSNR (t-test, ). Also, the prediction estimated by FSJDL is significantly superior to that of the FS method for MAE and NCC ().
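As an illustration of how such a comparison can be computed from per-patient metrics, the sketch below evaluates a paired t statistic using only the Python standard library. Whether the test used here was paired is not stated, so the pairing is an assumption, the function name is hypothetical, and the numbers in the usage lines are made-up placeholders rather than study data.

```python
import math
from statistics import mean, stdev


def paired_t_statistic(a, b):
    """Paired t statistic and degrees of freedom for matched samples.

    a, b: per-patient values of one metric for two methods, in the same
    patient order. The p-value is then read from a t distribution with
    the returned degrees of freedom.
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Placeholder values for illustration only (not results from this study).
method_a = [1.0, 2.0, 3.0, 4.0]
method_b = [0.0, 0.0, 2.0, 2.0]
t_value, dof = paired_t_statistic(method_a, method_b)
```

With 14 patients, the statistic would be compared against a t distribution with 13 degrees of freedom.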

Fig. 6.

Comparison of the PCT estimated by CF, FS, and FSJDL, (a1) and (a3) ground truth CT, (b1–d1) and (b4–d4) are the PCT result generated by CF, FS, and FSJDL, respectively. (a2–d2) The zoomed regions indicated by solid red boxes in (a1–d1); (a4–d4) are the zoomed regions indicated by solid red boxes in (a3–d3). (b3–d3) are difference image between ground truth (a1) and the PCT estimated by CF, FS, and FSJDL. (b6–d6) Difference image between ground truth (a3) and the PCT generated by CF, FS, and FSJDL.

Table 3.

Numerical results with compared methods.

| Method | MAE (HU) | PSNR (dB) | NCC |
|---|---|---|---|
| CF | 102.8 ± 22.7 | 21.6 ± 1.2 | 0.87 ± 0.04 |
| FS | 96.4 ± 23.8 | 22.0 ± 1.4 | 0.88 ± 0.04 |
| FSJDL | 82.6 ± 26.1 | 22.4 ± 1.9 | 0.91 ± 0.04 |

Table 4.

Numerical results of the proposed and state-of-the-art dictionary learning methods.

| Method | MAE (HU) | PSNR (dB) | NCC | SI (Air) | SI (Soft-tissue) | SI (Bone) |
|---|---|---|---|---|---|---|
| Int | 148.9 ± 42.3 | 18.3 ± 0.9 | 0.80 ± 0.05 | 0.80 ± 0.09 | 0.57 ± 0.12 | 0.51 ± 0.13 |
| FP | 125.5 ± 24.5 | 19.1 ± 1.1 | 0.80 ± 0.05 | 0.93 ± 0.02 | 0.72 ± 0.07 | 0.56 ± 0.18 |
| FSJDL | 82.6 ± 26.1 | 22.4 ± 1.9 | 0.91 ± 0.04 | 0.98 ± 0.01 | 0.88 ± 0.03 | 0.69 ± 0.08 |
| FSJDL versus Int (p-value) | 0.002 | | | | | |
| FSJDL versus FP (p-value) | 0.036 | | | | | |

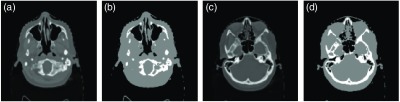

3.4. Comparison of State-of-the-Art Dictionary Learning-Based Methods

We compared our method with two state-of-the-art dictionary-based approaches: an intensity-based method (Int)42 and a fast patch-based method (FP).43 The parameters used in these two methods were set based on their best performance. The prediction results are shown in Fig. 7. The CT images generated by our method are similar to the ground truth. Our method outperforms these two methods, specifically with higher similarity in shape and lower difference values. The Int method often results in blurred predictions due to simple averaging of the similar patches. Although the FP method works better than the Int method, its prediction remains noisy. Table 4 quantifies the superiority of our method over the other two in terms of MAE, PSNR, NCC, and SI. Our method’s average MAE of 82.6 HU is significantly better than the 148.9 and 125.5 HU generated by the Int and the FP methods, respectively. Figure 8 shows the per-patient prediction performance of the compared methods across the entire leave-one-out experiment. It can be seen that for some patients, overall performance is inferior to that of others, presumably owing to a lack of database diversity.

Fig. 7.

Comparison with two state-of-the-art methods: (a1–a2) ground truth, (b1–b4) intensity-based method (Int), (c1–c4) fast patch-based method (FP), (d1–d4) classic feature-based method (CF), (e1–e4) feature selection-based method (FS), and (f1–f4) feature selection with joint dictionary learning-based method (FSJDL).

Fig. 8.

(a–c) The MAE, PSNR, and NCC of different methods for each enrolled patient, respectively.

4. Discussion

In our study, we have presented a dictionary-learning-based method of estimating a PCT image from a standard MR image. We introduced an anatomic signature based on feature selection into a dictionary-learning-based method to improve the prediction accuracy of the resultant PCT. Local self-similarity was incorporated to address the challenge of large-scale data training. Joint dictionary learning was applied to learn the coupled dictionary of MRI anatomic signatures and CT intensities. We evaluated the clinical application of our method on MR-CT brain datasets and compared its performance with the true CT image (ground truth) and two state-of-the-art dictionary-learning-based methods.

The efficacy of using 3-D RILBP was visually demonstrated in Figs. 7(d1)–7(d4) when compared with Figs. 7(c1)–7(c4) and numerically demonstrated by the detailed metrics comparing the FP and CF methods in Fig. 8. We observed that 3-D RILBP features notably improved the prediction accuracy of the PCT. Figure 2 shows that multiradii level sensitivity and multiderivate image modeling provided features containing local texture, edges, and spatial information derived from a 3-D MR image patch. Thus, we concluded that 3-D RILBP features can balance image deformation invariance and sensitivity to small structural changes.

The efficacy of the anatomic signature was demonstrated by comparing Figs. 6(c1)–6(c6) with Figs. 6(b1)–6(b6) and Figs. 7(e1)–7(e4) with Figs. 7(d1)–7(d4), and by Table 4. The PCT generated by the FS method was superior to that generated by the CF method. Our results showed that the anatomic signature substantially improved the prediction accuracy, both qualitatively and quantitatively. We conclude that an anatomic signature identified by feature selection can diminish the influence of bias and redundant information in high-dimensional training data while preserving the informative and highly relevant features for prediction.
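Fisher's score is one of the relevance measures this work uses when building the anatomic signature. A minimal sketch of the standard Fisher score for a single feature follows, assuming each training patch carries a discrete label such as a tissue class. The labels, feature values, and function name are hypothetical, and this is the textbook form of the score rather than necessarily the exact variant used in the experiments.

```python
def fisher_score(values, labels):
    """Between-class scatter over within-class scatter for one feature."""
    overall_mean = sum(values) / len(values)
    between = 0.0
    within = 0.0
    for cls in set(labels):
        group = [v for v, lab in zip(values, labels) if lab == cls]
        class_mean = sum(group) / len(group)
        # Class size times squared distance of the class mean from the global mean.
        between += len(group) * (class_mean - overall_mean) ** 2
        # Class size times the (population) variance inside the class.
        within += sum((v - class_mean) ** 2 for v in group)
    return between / within if within > 0 else float("inf")

# Hypothetical labels and two candidate features for four patches.
labels = ["air", "air", "bone", "bone"]
discriminative = [0.0, 0.2, 1.0, 1.2]   # separates the two classes well
uninformative = [0.0, 1.0, 0.2, 1.2]    # mostly within-class spread

print(fisher_score(discriminative, labels))
print(fisher_score(uninformative, labels))
```

A feature with a high score separates the classes well relative to its spread within each class, which is the sense in which redundant or uninformative features can be ranked low and discarded.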

The efficacy of the local self-similarity and joint dictionary learning framework was validated by comparing Figs. 6(d1)–6(d6) with Figs. 6(c1)–6(c6), Figs. 7(f1)–7(f4) with Figs. 7(e1)–7(e4), and the FSJDL and FS methods in Fig. 8. The FSJDL method was a significant improvement over the FS method. Because training was based on a small set of similar patches, a CT image could be predicted in about 2 h on a 3.00 GHz Intel Xeon 8-core processor, compared with about 1 week for competing methods in the same experimental environment. Thus, joint dictionary learning on locally similar patches drastically reduces the computational cost.
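The idea of training on only a small set of similar patches can be sketched as a nearest-neighbor selection. The similarity measure is not specified in this section, so squared Euclidean distance between patch feature vectors is an assumption here, and the function name, the neighbor count `k`, and the toy vectors are hypothetical.

```python
def most_similar_patches(query, candidates, k):
    """Return indices of the k candidates closest to `query` (squared L2)."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    # Rank every candidate in the search region by its distance to the query.
    ranked = sorted(range(len(candidates)),
                    key=lambda i: squared_distance(query, candidates[i]))
    return ranked[:k]

# Toy feature vectors (hypothetical) for one query patch and four
# training patches drawn from a bounded search region.
query = [1.0, 2.0, 3.0]
candidates = [
    [1.0, 2.0, 3.1],
    [5.0, 5.0, 5.0],
    [0.9, 2.1, 3.0],
    [9.0, 0.0, 1.0],
]

selected = most_similar_patches(query, candidates, k=2)
print(selected)
```

Only the selected patches would then feed the patch-specific dictionary, which keeps the training set small for each query patch.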

Our method was substantially better than two state-of-the-art dictionary learning methods, owing to the anatomic signature that captures local texture, edge, and spatial information, the discriminative feature selection, and the joint dictionary learning framework. As shown in Fig. 7, our system could predict PCT images that are similar to the ground truth and could effectively capture minute changes in electron density. As shown in Table 4 and Fig. 8, our proposed method significantly improved PCT prediction performance. The average MAE between our generated PCT and the ground-truth CT is 82.6 Hounsfield units (HU). The previous work14 has shown that an HU error on the order of did not affect the dosimetric accuracy of intensity modulated radiation treatment planning based on PCT. This suggests that PCT generation from MRI is clinically viable and could be applied in the current workflow of patient treatment planning.

Although we have proposed an accurate method for estimating PCTs from brain T1-weighted MR images, this work still has several limitations. First, we did not apply this method to other body sites, such as the abdomen and lung. For nonrigid structures, we will have to use deformable MRI-CT registration to deal with the intrasubject mismatch between MRI and CT. Second, a dosimetric comparison between plans generated from the simulation CT and from its corresponding predicted CT has not yet been explored. Third, we did not compare our algorithm with other state-of-the-art machine-learning-based methods; our goals here were to demonstrate the efficacy of feature selection in a handcrafted-feature-based dictionary learning method and to improve on recent dictionary-learning-based methods for PCT estimation. Last, we tested our algorithm on brain images from only 14 patients; testing on a larger population is needed to establish the robustness of our algorithm.

5. Conclusion

Here, we proposed an anatomic signature and joint dictionary learning-based method to improve the accuracy of pseudo CT prediction. The anatomic signature was generated by identifying the most informative and discriminative features among the 3-D RILBP features extracted from MR images using multiradii level sensitivity and multiderivate image modeling. The feature selection was implemented by LASSO, and Fisher’s score was then adopted to evaluate the relevance of each feature anatomically and statistically. To make patch-specific dictionary learning feasible for each patch of a new MRI, a bounded search region containing the most similar neighbors was obtained by computing the local self-similarity. Then, a joint dictionary learning method was used to simultaneously train coupled sparse-representation dictionaries for the MRI anatomic signatures and the CT intensities. Afterward, the sparse coefficients of the new MRI patch signature were optimized by BP methods under the well-trained MRI dictionary. Finally, we used these coefficients to reconstruct the pseudo CT intensity under the well-trained CT dictionary. Experimental results showed that our method can accurately predict pseudo CT images in various scenarios, even in the context of large shape variation, and outperforms two state-of-the-art dictionary-learning-based methods.
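The final two steps of this pipeline, sparse coding under the MRI dictionary and reconstruction under the CT dictionary, can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the two coupled dictionaries are hand-specified rather than learned, an iterative soft-thresholding (ISTA) solver for the L1-regularized least-squares problem stands in for the BP solver, and the names `D_mri`, `D_ct`, `sparse_code`, `lam`, and `step` are hypothetical.

```python
def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def soft_threshold(x, t):
    """Proximal operator of t * |x|."""
    if x > t:
        return x - t
    if x < -t:
        return x + t
    return 0.0

def sparse_code(D, y, lam=0.01, step=0.5, iters=200):
    """ISTA for min_a 0.5 * ||y - D a||^2 + lam * ||a||_1.

    `step` must not exceed 1 / (largest eigenvalue of D^T D).
    """
    Dt = [list(col) for col in zip(*D)]
    a = [0.0] * len(D[0])
    for _ in range(iters):
        residual = [p - yi for p, yi in zip(matvec(D, a), y)]
        grad = matvec(Dt, residual)
        a = [soft_threshold(ai - step * g, step * lam)
             for ai, g in zip(a, grad)]
    return a

# Toy coupled dictionaries (hypothetical): columns are atoms, and atom j of
# D_mri is paired with atom j of D_ct. D_mri has orthonormal columns.
D_mri = [[0.6, -0.8],
         [0.8, 0.6]]
D_ct = [[1000.0, -1000.0]]      # one CT value (HU) per atom

signature = [0.6, 0.8]          # new MRI patch signature, equal to atom 0
alpha = sparse_code(D_mri, signature)   # sparse coefficients under D_mri
ct_patch = matvec(D_ct, alpha)          # pseudo CT value under D_ct

print(alpha)
print(ct_patch)
```

In this toy case the signature coincides with the first MRI atom, so only the first coefficient is nonzero and the reconstructed value is close to 990 HU, slightly below the paired CT atom's 1000 HU because the L1 penalty shrinks the coefficient.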

Acknowledgments

This research was supported in part by the National Cancer Institute of the National Institutes of Health under Award No. R01CA215718, the Department of Defense (DoD) Prostate Cancer Research Program (PCRP) Award No. W81XWH-13-1-0269 and Dunwoody Golf Club Prostate Cancer Research Award, a philanthropic award provided by the Winship Cancer Institute of Emory University.

Biography

Biographies for the authors are not available.

Disclosures

The author declares no conflicts of interest.

References

- 1. Edmund J. M., Nyholm T., “A review of substitute CT generation for MRI-only radiation therapy,” Radiat. Oncol. 12, 28 (2017). 10.1186/s13014-016-0747-y

- 2. Devic S., “MRI simulation for radiotherapy treatment planning,” Med. Phys. 39(11), 6701–6711 (2012). 10.1118/1.4758068

- 3. Andreasen D., et al., “Patch-based generation of a pseudo CT from conventional MRI sequences for MRI-only radiotherapy of the brain,” Med. Phys. 42(4), 1596–1605 (2015). 10.1118/1.4914158

- 4. Kapanen M., Tenhunen M., “T1/T2*-weighted MRI provides clinically relevant pseudo-CT density data for the pelvic bones in MRI-only based radiotherapy treatment planning,” Acta Oncol. 52(3), 612–618 (2013). 10.3109/0284186X.2012.692883

- 5. Uha J., et al., “MRI-based treatment planning with pseudo CT generated through atlas registration,” Med. Phys. 41(5), 051711 (2014). 10.1118/1.4873315

- 6. Sjolund J., et al., “Generating patient specific pseudo-CT of the head from MR using atlas-based regression,” Phys. Med. Biol. 60(2), 825–839 (2015). 10.1088/0031-9155/60/2/825

- 7. Schreibmann E., et al., “MR-based attenuation correction for hybrid PET-MR brain imaging systems using deformable image registration,” Med. Phys. 37(5), 2101–2109 (2010). 10.1118/1.3377774

- 8. Dowling J. A., et al., “An atlas-based electron density mapping method for magnetic resonance imaging (MRI)-alone treatment planning and adaptive MRI-based prostate radiation therapy,” Int. J. Radiat. Oncol. 83(1), E5–E11 (2012). 10.1016/j.ijrobp.2011.11.056

- 9. Sjolund J., et al., “Skull segmentation in MRI by a support vector machine combining local and global features,” in 22nd Int. Conf. on Pattern Recognition, pp. 3274–3279 (2014). 10.1109/ICPR.2014.564

- 10. Hofmann M., et al., “MRI-based attenuation correction for PET/MRI: a novel approach combining pattern recognition and atlas registration,” J. Nucl. Med. 49(11), 1875–1883 (2008). 10.2967/jnumed.107.049353

- 11. Khateri P., et al., “A novel segmentation approach for implementation of MRAC in head PET/MRI employing short-TE MRI and 2-point Dixon method in a fuzzy C-means framework,” Nucl. Instrum. Methods Phys. Res. Sect. A 734, 171–174 (2014). 10.1016/j.nima.2013.09.006

- 12. Khateri P., et al., “Generation of a four-class attenuation map for MRI-based attenuation correction of PET Data in the head area using a novel combination of STE/Dixon-MRI and FCM clustering,” Mol. Imaging Biol. 17(6), 884–892 (2015). 10.1007/s11307-015-0849-1

- 13. Yu H., et al., “Toward magnetic resonance-only simulation: segmentation of bone in MR for radiation therapy verification of the head,” Int. J. Radiat. Oncol. 89(3), 649–657 (2014). 10.1016/j.ijrobp.2014.03.028

- 14. Gudur M. S. R., et al., “A unifying probabilistic Bayesian approach to derive electron density from MRI for radiation therapy treatment planning,” Phys. Med. Biol. 59(21), 6595–6606 (2014). 10.1088/0031-9155/59/21/6595

- 15. Siversson C., et al., “Technical note: MRI only prostate radiotherapy planning using the statistical decomposition algorithm,” Med. Phys. 42(10), 6090–6097 (2015). 10.1118/1.4931417

- 16. Yang X. F., Fei B. W., “Multiscale segmentation of the skull in MR images for MRI-based attenuation correction of combined MR/PET,” J. Am. Med. Inf. Assoc. 20(6), 1037–1045 (2013). 10.1136/amiajnl-2012-001544

- 17. Aitken A. P., et al., “Improved UTE-based attenuation correction for cranial PET-MR using dynamic magnetic field monitoring,” Med. Phys. 41(1), 012302 (2014). 10.1118/1.4837315

- 18. Buerger C., et al., “Investigation of MR-based attenuation correction and motion compensation for hybrid PET/MR,” IEEE Trans. Nucl. Sci. 59(5), 1967–1976 (2012). 10.1109/TNS.2012.2209127

- 19. Edmund J. M., et al., “A voxel-based investigation for MRI-only radiotherapy of the brain using ultra short echo times,” Phys. Med. Biol. 59(23), 7501–7519 (2014). 10.1088/0031-9155/59/23/7501

- 20. Keereman V., et al., “MRI-based attenuation correction for PET/MRI using ultrashort echo time sequences,” J. Nucl. Med. 51(5), 812–818 (2010). 10.2967/jnumed.109.065425

- 21. Aasheim L. B., et al., “PET/MR brain imaging: evaluation of clinical UTE-based attenuation correction,” Eur. J. Nucl. Med. Mol. Imaging 42(9), 1439–1446 (2015). 10.1007/s00259-015-3060-3

- 22. Cabello J., et al., “MR-based attenuation correction using ultrashort-echo-time pulse sequences in dementia patients,” J. Nucl. Med. 56(3), 423–429 (2015). 10.2967/jnumed.114.146308

- 23. Berker Y., et al., “MRI-based attenuation correction for hybrid PET/MRI systems: a 4-class tissue segmentation technique using a combined ultrashort-echo-time/dixon MRI sequence,” J. Nucl. Med. 53(9), 796–804 (2012). 10.2967/jnumed.111.092577

- 24. Ladefoged C. N., et al., “Region specific optimization of continuous linear attenuation coefficients based on UTE (RESOLUTE): application to PET/MR brain imaging,” Phys. Med. Biol. 60(20), 8047–8065 (2015). 10.1088/0031-9155/60/20/8047

- 25. Hsu S. H., et al., “Investigation of a method for generating synthetic CT models from MRI scans of the head and neck for radiation therapy,” Phys. Med. Biol. 58(23), 8419–8435 (2013). 10.1088/0031-9155/58/23/8419

- 26. Rank C. M., et al., “MRI-based treatment plan simulation and adaptation for ion radiotherapy using a classification-based approach,” Radiat. Oncol. 8, 51 (2013). 10.1186/1748-717X-8-51

- 27. Rank C. M., et al., “MRI-based simulation of treatment plans for ion radiotherapy in the brain region,” Radiother. Oncol. 109(3), 414–418 (2013). 10.1016/j.radonc.2013.10.034

- 28. Kim J., et al., “Implementation of a novel algorithm for generating synthetic CT images from magnetic resonance imaging data sets for prostate cancer radiation therapy,” Int. J. Radiat. Oncol. 91(1), 39–47 (2015). 10.1016/j.ijrobp.2014.09.015

- 29. Korhonen J., et al., “Influence of MRI-based bone outline definition errors on external radiotherapy dose calculation accuracy in heterogeneous pseudo-CT images of prostate cancer patients,” Acta Oncol. 53(8), 1100–1106 (2014). 10.3109/0284186X.2014.929737

- 30. Korhonen J., et al., “A dual model HU conversion from MRI intensity values within and outside of bone segment for MRI-based radiotherapy treatment planning of prostate cancer,” Med. Phys. 41(1), 011704 (2014). 10.1118/1.4842575

- 31. Yang X. F., Fei B. W., “A skull segmentation method for brain MR images based on multiscale bilateral filtering scheme,” Proc. SPIE 7623, 76233K (2010). 10.1117/12.844677

- 32. Navalpakkam B. K., et al., “Magnetic resonance-based attenuation correction for PET/MR hybrid imaging using continuous valued attenuation maps,” Invest. Radiol. 48(5), 323–332 (2013). 10.1097/RLI.0b013e318283292f

- 33. Johansson A., Karlsson M., Nyholm T., “CT substitute derived from MRI sequences with ultrashort echo time,” Med. Phys. 38(5), 2708–2714 (2011). 10.1118/1.3578928

- 34. Johanson A., et al., “Improved quality of computed tomography substitute derived from magnetic resonance (MR) data by incorporation of spatial information: potential application for MR-only radiotherapy and attenuation correction in positron emission tomography,” Acta Oncol. 52(7), 1369–1373 (2013). 10.3109/0284186X.2013.819119

- 35. Jonsson J. H., et al., “Treatment planning of intracranial targets on MRI derived substitute CT data,” Radiother. Oncol. 108(1), 118–122 (2013). 10.1016/j.radonc.2013.04.028

- 36. Jonsson J. H., et al., “Accuracy of inverse treatment planning on substitute CT images derived from MR data for brain lesions,” Radiat. Oncol. 10, 13 (2015). 10.1186/s13014-014-0308-1

- 37. Li R. J., et al., “Deep learning based imaging data completion for improved brain disease diagnosis,” Lect. Notes Comput. Sci. 8675, 305–312 (2014). 10.1007/978-3-319-10443-0

- 38. Jog A., Carass A., Prince J. L., “Improving magnetic resonance resolution with supervised learning,” in IEEE 11th Int. Symp. on Biomedical Imaging (ISBI), pp. 987–990 (2014). 10.1109/ISBI.2014.6868038

- 39. Andreasen D., et al., “Computed tomography synthesis from magnetic resonance images in the pelvis using multiple random forests and auto-context features,” Proc. SPIE 9784, 978417 (2016). 10.1117/12.2216924

- 40. Chan S. L. S., et al., “Automated classification of bone and air volumes for hybrid PET-MRI brain imaging,” in Int. Conf. on Digital Image Computing: Techniques and Applications (Dicta), pp. 1–8 (2013). 10.1109/DICTA.2013.6691483

- 41. Huynh T., et al., “Estimating CT image from MRI data using structured random forest and auto-context model,” IEEE Trans. Med. Imaging 35(1), 174–183 (2016). 10.1109/TMI.2015.2461533

- 42. Andreasen D., Van Leemput K., Edmund J. M., “A patch-based pseudo-CT approach for MRI-only radiotherapy in the pelvis,” Med. Phys. 43(8), 4742–4752 (2016). 10.1118/1.4958676

- 43. Torrado-Carvajal A., et al., “Fast patch-based pseudo-CT synthesis from T1-weighted MR images for PET/MR attenuation correction in brain studies,” J. Nucl. Med. 57(1), 136–143 (2016). 10.2967/jnumed.115.156299

- 44. Aouadi S., et al., “Sparse patch-based method applied to MRI-only radiotherapy planning,” Phys. Med. 32, 309 (2016). 10.1016/j.ejmp.2016.07.173

- 45. Boyd S., et al., “Distributed optimization and statistical learning via the alternating direction method of multipliers,” Found. Trends Mach. Learn. 3(1), 1–122 (2010). 10.1561/2200000016

- 46. Deng W., Yin W., Zhang Y., “Group sparse optimization by alternating direction method,” Proc. SPIE 8858, 88580R (2013). 10.1117/12.2024410

- 47. Ayech M. W., Ziou D., “Automated feature weighting and random pixel sampling in k-means clustering for Terahertz image segmentation,” in IEEE Conf. on Computer Vision and Pattern Recognition Workshops (CVPRW) (2015). 10.1109/CVPRW.2015.7301294

- 48. Pauly O., et al., “Fast multiple organ detection and localization in whole-body MR dixon sequences,” Lect. Notes Comput. Sci. 6893, 239–247 (2011). 10.1007/978-3-642-23626-6

- 49. Fehr J., Burkhardt H., “3D rotation invariant local binary patterns,” in 19th Int. Conf. on Pattern Recognition, Vol. 1–6, pp. 616–619 (2008). 10.1109/ICPR.2008.4761098

- 50. Avalos M., et al., “Sparse conditional logistic regression for analyzing large-scale matched data from epidemiological studies: a simple algorithm,” BMC Bioinf. 16, S1 (2015). 10.1186/1471-2105-16-S6-S1

- 51. Aseervatham S., et al., “A sparse version of the ridge logistic regression for large-scale text categorization,” Pattern Recognit. Lett. 32(2), 101–106 (2011). 10.1016/j.patrec.2010.09.023

- 52. Yaqub M., et al., “Investigation of the role of feature selection and weighted voting in random forests for 3-D volumetric segmentation,” IEEE Trans. Med. Imaging 33(2), 258–271 (2014). 10.1109/TMI.2013.2284025

- 53. Nesterov Y., Introductory Lectures on Convex Optimization: A Basic Course, Springer US, New York (2003).

- 54. Tang F. H., Ip H. S. H., “Image fusion enhancement of deformable human structures using a two-stage warping-deformable strategy: a content-based image retrieval consideration,” Inf. Syst. Front. 11(4), 381–389 (2009). 10.1007/s10796-009-9151-6