Abstract

The prefrontal cortex (PFC) plays an important role in several forms of cost-benefit decision making. Its contributions to decision making under risk of explicit punishment, however, are not well understood. A rat model was used to investigate the role of the medial PFC (mPFC) and its monoaminergic innervation in a Risky Decision-making Task (RDT), in which rats chose between a small, “safe” food reward and a large, “risky” food reward accompanied by varying probabilities of mild footshock punishment. Inactivation of mPFC increased choice of the large, risky reward when the punishment probability increased across the session (“ascending RDT”), but decreased choice of the large, risky reward when the punishment probability decreased across the session (“descending RDT”). In contrast, enhancement of monoamine availability via intra-mPFC amphetamine reduced choice of the large, risky reward only in the descending RDT. Systemic administration of amphetamine reduced choice of the large, risky reward in both the ascending and descending RDT; however, this reduction was not attenuated by concurrent mPFC inactivation, indicating that mPFC is not a critical locus of amphetamine’s effects on risk taking. These findings suggest that mPFC plays an important role in adapting choice behavior in response to shifting risk contingencies, but not necessarily in risk-taking behavior per se.

Keywords: medial prefrontal cortex, decision making, risk, punishment, monoamine

1. Introduction

Adaptive decision making requires weighing the relative costs and benefits associated with available options and arriving at a choice that is beneficial in the long term. Perturbations in decision making involving risks of adverse consequences are characteristic of several psychiatric disorders (Crowley et al., 2010; Ernst et al., 2003; Gowin et al., 2013; Linnet et al., 2011). For example, individuals with substance use disorder (SUD) display exaggerated risk taking, tending to prefer more immediately rewarding options even though they may be accompanied by adverse consequences (Gowin et al., 2013). In contrast, individuals with anorexia nervosa display pathological risk aversion and may fail to take even low or moderate risks to obtain desirable outcomes (Kaye et al., 2013). Given the central role of decision-making deficits in these and other psychiatric disorders, it is important to understand their underlying neural mechanisms.

Human neuroimaging experiments and studies of patients with focal brain damage have provided considerable insight into the neural correlates of risk-based decision making. The majority of these studies have used the Iowa Gambling Task (IGT) or similar simulated gambling tasks to assess risky decision making. In the IGT, subjects choose between four decks of cards, two of which yield large payoffs but even larger losses, and two of which yield small payoffs but even smaller losses (Brand et al., 2007). As subjects learn the task, they develop a strategy in which “risky” decks are avoided in favor of the smaller, but “safer” decks. Patients with lesions of either the ventromedial prefrontal cortex (vmPFC) (Bechara et al., 1994) or dorsolateral prefrontal cortex (dlPFC) consistently choose the risky decks (Clark et al., 2003; Fellows and Farah, 2005; Manes et al., 2002). Neuroimaging studies support these findings and show that the PFC is recruited during the IGT and similar tasks (Ernst et al., 2002; Fukui et al., 2005; Lawrence et al., 2009; Lin et al., 2008). Together, these studies suggest that the PFC is critical for maintaining optimal decision-making behavior.

Experiments in animal models corroborate findings from human lesion and imaging studies. For example, lesions or inactivation of the medial PFC [mPFC, the rodent homologue of human dlPFC (Uylings et al., 2003)] cause rats to make fewer advantageous choices in several tasks that model the human IGT (de Visser et al., 2011; Paine et al., 2015; Zeeb et al., 2015). Notably, however, findings from a different rodent risky decision-making task suggest that the mPFC may not necessarily influence risk taking per se. St. Onge and Floresco (2010) employed a task in which rats chose between a small, certain reward and a large, uncertain reward, the probability of which changed across blocks of trials within a test session. In this task, mPFC inactivation increased choice of the large reward when the probability of large reward delivery decreased across a test session, but decreased choice of the large reward when the order of probability changes was reversed (St Onge and Floresco, 2010). These findings suggest that mPFC inactivation interfered with the ability to update reward value representations as the contingencies changed across the session, rather than directly influencing risk preferences. This conclusion is consistent with the well-documented role of the mPFC in cognitive flexibility (Birrell and Brown, 2000; Floresco et al., 2008; Floresco et al., 2006; Ragozzino, 2007; Ragozzino et al., 1999a; Ragozzino et al., 2003; Ragozzino et al., 1999b).

Monoamine signaling in the mPFC has been implicated in both risky decision making and cognitive flexibility (Dalley et al., 2001; Fitoussi et al., 2015; Floresco and Magyar, 2006; Floresco et al., 2006; McGaughy et al., 2008; St Onge et al., 2011; St Onge and Floresco, 2010). Both dopamine (DA) and norepinephrine (NE) are elevated in the mPFC in the presence of stimuli that predict aversive outcomes such as footshock (Feenstra et al., 1999). This increase in monoamine signaling may indicate a role for NE and DA in ascribing salience to stimuli predictive of aversive outcomes, as NE depletion in the mPFC blocks aversive place conditioning (Ventura et al., 2008; Ventura et al., 2007) and DA receptor blockade impairs the ability to use conditioned punishers to guide instrumental behavior (Floresco and Magyar, 2006). Several studies have also implicated mPFC monoamine neurotransmission in other types of decision making and cognitive flexibility. For example, both DA and serotonin (5-HT) in the mPFC contribute to intertemporal decision making, which involves choices between a small, more immediate reward and a large, delayed reward (Loos et al., 2010; Winstanley et al., 2006; Yates et al., 2014). With respect to cognitive flexibility, DA and NE in the mPFC contribute to the ability to shift behavior when choice contingencies change (Dalley et al., 2001; Mingote et al., 2004; van der Meulen et al., 2007). For instance, depletion of mPFC NE impairs performance on an attentional set shifting task (McGaughy et al., 2008) and DA D2 receptor (D2R) mRNA expression in the mPFC is associated with greater flexibility in shifting choice behavior during risky decision making (Simon et al., 2011). 
Considered together, these data suggest that during decision making involving risk of punishment, the mPFC, and specifically mPFC monoamine transmission, could be important not only for signaling the motivational value of risky choices, but also for adjusting the salience attributed to these choices as task contingencies change.

Most of the aforementioned studies (both human and rodent) used decision-making tasks in which the “costs” associated with the large reward or net gain consisted of reward omission or a timeout period during which rewards were unavailable. Many real-world decisions, however, involve the possibility of actual harmful consequences. As the neural mechanisms of risky decision making can differ depending on the type of “cost” involved (Orsini et al., 2015a), it is important to determine how the mPFC contributes to decision making under risk of explicit punishment. Previous work from our lab employed correlational approaches to address this issue by evaluating relationships between expression of several neurobiological markers in mPFC and performance on a decision making task that incorporates both rewards (food) and risks of adverse consequences (footshock punishment) (Deng et al., 2018; Simon et al., 2011). Because the results of these studies were correlational in nature, however, the current experiments employed behavioral pharmacological manipulations to more directly address the role of mPFC in this form of decision making. The first goal was to use a pharmacological inactivation approach to determine how the mPFC is involved in decision making involving risk of explicit punishment (Simon et al., 2009; Simon and Setlow, 2012). The second goal was to use a combination of behavioral pharmacological approaches to assess the involvement of mPFC monoamine transmission.

2. Materials and Methods

2.1. Subjects

Male Long Evans rats (60 days of age upon arrival from Charles River Laboratories; n = 71) were housed individually and maintained on a 12 hour light/dark cycle (lights on at 0700) throughout all experiments. Rats were allowed ad libitum access to water, but during behavioral testing, were food restricted to 85% of their free feeding weight, with target weights adjusted upward by 5 g every week to account for growth. All procedures were conducted in accordance with the University of Florida Institutional Animal Care and Use Committee and adhered to the guidelines of the National Institutes of Health.

2.2. Apparatus

For all behavioral sessions, rats were tested in twelve computer-controlled operant test chambers (Coulbourn Instruments), each of which was housed in an individual sound-attenuating cabinet. Every chamber was equipped with a recessed food delivery trough with a photobeam to detect nosepokes and a 1.12-W lamp to illuminate the trough. Each trough was connected to a food hopper, from which 45 mg grain-based food pellets (Test Diet; 5TUM) were delivered into the trough. The food trough was located 2 cm above the floor in the center of the front wall of the chamber and was flanked by two retractable levers. The floor of each test chamber consisted of stainless steel rods connected to a shock generator (Coulbourn Instruments), which delivered scrambled footshocks. An activity monitor was mounted on the ceiling of each chamber to record locomotor activity during behavioral sessions. The activity monitor used an array of infrared detectors focused over the test chamber to measure movement, which was defined as a relative change in infrared energy falling on the different detectors in the array. Finally, a 1.12-W houselight was affixed to the rear wall of the sound-attenuating cabinet. Test chambers were connected to a computer running Graphic State 3.0 software (Coulbourn Instruments), which simultaneously controlled task events and collected behavioral data.

2.3. Surgical Procedures

Upon arrival, rats were given one week to acclimate to the vivarium on a free feeding regimen before undergoing stereotaxic surgery. Rats were anesthetized with isoflurane gas (1-5% in O2) and were given subcutaneous injections of buprenorphine (0.05 mg/kg), meloxicam (1 mg/kg) and sterile saline (10 mL). After being placed in a stereotaxic frame (David Kopf), the scalp was disinfected with a chlorhexidine/isopropyl alcohol swab and an adhesive surgical drape was placed over the rat’s body. The scalp was incised and retracted, and three small burr holes were drilled into the skull for jeweler’s screws: two were placed such that they would be anterior to the guide cannulae and the third was placed such that it would be posterior to the guide cannulae. After securing the screws in place, the skull was leveled by adjusting the dorsal/ventral position on the stereotaxic toothbar, to ensure that the DV coordinates at bregma and lambda were within 0.03 mm of one another. Two additional burr holes were then drilled for bilateral implantation of guide cannulae (Plastics One; double cannulae, 22 gauge, 1.5 mm center to center) directly above the mPFC (AP: +2.7 from bregma; ML: ±0.7 from bregma; DV: −3.6 from skull). The guide cannulae were cemented into place with dental cement and sterile stylets were inserted into the cannulae to prevent clogging. Rats were given an additional subcutaneous injection of 10 mL of sterile saline and placed on a heating pad to recover from surgery. Rats were allowed one week to recover before being food restricted in preparation for behavioral testing.

2.4. Behavioral Procedures

2.4.1. Overview of experimental design.

In all experiments, rats first underwent surgery to implant guide cannulae targeting the mPFC as described above, followed by training in the RDT.

The goal of Experiment 1 was to determine how risky decision making is affected by mPFC inactivation. In Experiment 1.1, rats were trained in the ascending version of the RDT wherein the probabilities of punishment increased across the session, and the effects of mPFC inactivation on risky decision making were evaluated. In Experiment 1.2, rats were trained in the descending version of the RDT wherein the probabilities of punishment decreased across the session, and the effects of mPFC inactivation on risky decision making were evaluated.

Experiment 2 was conducted to ascertain whether broad monoamine neurotransmission in the mPFC contributes to risky decision making. In Experiment 2.1, rats were trained in the ascending RDT and the effects of intra-mPFC amphetamine (which increases availability of dopamine, norepinephrine, and serotonin) on risky decision making were evaluated. In Experiment 2.2, the procedures were identical to those in Experiment 2.1 except that rats were trained in the descending RDT.

Previous work in our lab showed that systemic administration of amphetamine decreases rats’ preference for large, risky rewards. The goal of Experiment 3 was to determine whether the mPFC is a critical locus of action for amphetamine’s effect on risky decision making. In Experiment 3.1, rats were trained in the ascending RDT and the effects of mPFC inactivation on systemic amphetamine-induced decreases in risk taking were evaluated. In Experiment 3.2, the procedures were identical to those in Experiment 3.1 except that rats were trained in the descending RDT.

2.4.2. Risky Decision-making Task Procedures:

Rats were first trained to perform the basic components of the RDT (nosepoking and lever pressing for food delivery) as described previously (Mitchell et al., 2014; Orsini et al., 2015b; Simon et al., 2009). Each RDT session was 60 min in duration and comprised five 18-trial blocks. Each trial was 40 s in duration and began with illumination of the house light and food trough light. A nosepoke into the food trough extinguished the trough light and caused the extension of either one lever (forced choice trials) or both levers (free choice trials). If a rat failed to nosepoke within 10 s, the food trough light and house light were extinguished and the trial was scored as an omission. A press on one lever resulted in a small, safe food reward (1 pellet) and a press on the other lever resulted in a large food reward (2 pellets) that was accompanied by a possible 1 s footshock. The probability of receiving a footshock was contingent upon a preset probability that was specific to each trial block: in the ascending RDT, the probability of footshock increased across successive blocks (0, 25, 50, 75 and 100%) whereas in the descending RDT, the probability of footshock decreased across successive blocks (100, 75, 50, 25 and 0%). Shock intensities (0.15–0.5 μA) were adjusted individually for each rat over the course of training to attempt to ensure that their mean baseline performance remained as close as possible to the center of the parametric space. These adjustments were made to ensure there would be sufficient parametric space to observe either increases or decreases in risk taking in each rat as a consequence of pharmacological manipulations. Importantly, however, shock intensities were not adjusted once drug microinjections began. Although the probability of footshock delivery was variable, the large reward was always delivered upon every choice of the large, risky lever.
The left/right position of the small, safe and large, risky levers was counterbalanced across rats; however, for the entirety of the experiment, the lever identities remained the same for each rat.

Each block of trials began with eight forced choice trials in which the shock contingencies for that block were established (four presentations of each lever alone, in pseudorandom order). During these forced choice trials, the probability of shock following a lever press for the large reward was dependent across the four trials in that block. For example, in the 25% risk block, one and only one of the four presses on the large reward lever resulted in footshock delivery; similarly, in the 75% risk block, three and only three of the four presses on the large reward lever resulted in footshock delivery. The forced choice trials were followed by 10 free choice trials in which both levers were extended and rats were able to freely choose between them. In contrast to the forced choice trials, the probability of shock on the free choice trials was independent of the other trials in that block. In other words, the probability of shock on each free choice trial was the same, irrespective of shock deliveries on previous trials. If rats failed to lever press after 10 s on either forced or free choice trials, the levers were retracted, the food trough light and house light were extinguished, and the trial was scored as an omission. Finally, food delivery was accompanied by illumination of both the food trough light and house light, which were extinguished after collection of the food or after 10 s, whichever occurred first. Rats were trained in the task until stable choice performance emerged (see Section 2.7 below), at which point they were ready for microinjections.
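The block structure described above can be sketched in code. The following Python snippet is only an illustration of the stated contingencies (five blocks, eight forced choice trials followed by 10 free choice trials, dependent shock outcomes on forced choice trials and independent outcomes on free choice trials). It is not the Graphic State program used in the experiments, and all function and variable names are hypothetical.

```python
import random

# Values taken from the task description; names are hypothetical.
ASCENDING_PROBS = [0.0, 0.25, 0.50, 0.75, 1.0]
N_FORCED_PER_LEVER = 4   # four presentations of each lever alone
N_FREE = 10              # free choice trials per block
TRIAL_DURATION_S = 40


def build_forced_trials(p_shock, rng):
    """Forced choice trials for one block, in pseudorandom order.

    Shock outcomes on the large lever are dependent: exactly
    p_shock * 4 of the four large-lever presses are shocked.
    """
    n_shocked = round(p_shock * N_FORCED_PER_LEVER)
    large = [True] * n_shocked + [False] * (N_FORCED_PER_LEVER - n_shocked)
    rng.shuffle(large)
    trials = [("small", False)] * N_FORCED_PER_LEVER
    trials += [("large", shocked) for shocked in large]
    rng.shuffle(trials)
    return trials


def shock_on_free_choice(p_shock, rng):
    """Free choice trials: each large-lever choice is an independent draw."""
    return rng.random() < p_shock


def build_session(descending=False, seed=0):
    """Return the five-block schedule for an ascending or descending session."""
    rng = random.Random(seed)
    probs = ASCENDING_PROBS[::-1] if descending else ASCENDING_PROBS
    return [
        {"p_shock": p, "forced": build_forced_trials(p, rng), "n_free": N_FREE}
        for p in probs
    ]


if __name__ == "__main__":
    session = build_session(descending=False, seed=1)
    total_trials = sum(len(b["forced"]) + b["n_free"] for b in session)
    # 5 blocks x 18 trials x 40 s gives the 60 min session length.
    print(total_trials, total_trials * TRIAL_DURATION_S / 60)
```

In this sketch the forced choice outcomes are drawn without replacement, whereas free choice outcomes are sampled independently, which mirrors the distinction drawn in the text.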

2.4.3. Microinjection procedures:

Rats initially underwent mock microinjections, in which the injectors were inserted into the cannulae but no drug was administered, and were then immediately tested in the RDT. This was done to ensure that the mere insertion of the microinjectors (and any damage associated with this procedure) did not affect choice behavior. Forty-eight hours later, rats received intra-mPFC microinjections (0.5 μl at a rate of 0.5 μl/min in each hemisphere) of either baclofen/muscimol (Experiments 1 and 3) or d-amphetamine (Experiment 2) five minutes before testing in the RDT. In each experiment, microinjections of the different doses of each drug occurred in a randomized, counterbalanced order such that each rat received each dose of drug (as well as their respective vehicles), with a 48 h washout period between successive microinjections. Microinjectors (Plastics One; 28 gauge) extended 1 mm beyond the tip of the guide cannulae and were connected via polyethylene tubing to 10 μl Hamilton syringes mounted on an infusion pump (Harvard Apparatus). After each microinjection, the injectors remained in place for an additional minute to allow for diffusion of the drugs, after which the injectors were removed and sterile stylets were inserted into the guide cannulae.

2.5. Drugs

In Experiment 1, the mPFC was inactivated with a combination of the GABAA receptor agonist muscimol (Tocris Biosciences) and the GABAB receptor agonist baclofen (Sigma-Aldrich). Each drug was dissolved separately in DMSO and then combined 50:50 with physiological saline for an initial concentration of 500 ng/μl. Muscimol and baclofen were then combined in equal volumes so that the final concentration of each drug was 250 ng/μl. These concentrations microinjected in the mPFC have been shown to be effective in disrupting decision making performance in other tasks (Floresco et al., 2008; St Onge and Floresco, 2010). In experiments in which the mPFC was inactivated, the corresponding vehicle consisted of a 50:50 saline:DMSO solution. In Experiment 2, d-amphetamine (2.0, 10.0, 20.0 μg; NIDA Drug Supply Program) was dissolved in physiological saline, which was also used as the vehicle. These doses of amphetamine were chosen based on their efficacy in modulating cost/benefit decision making when administered intra-cerebrally (Orsini et al., 2017). Finally, in Experiment 3, the mPFC was inactivated using the same baclofen/muscimol combination used in Experiment 1. Five minutes after mPFC microinjections, rats received systemic injections of d-amphetamine (1.5 mg/kg) 10 min prior to behavioral testing. This dose of amphetamine reliably decreases choice of the large, risky reward in the RDT (Orsini et al., 2016; Simon et al., 2011).
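The dilution arithmetic for the baclofen/muscimol mixture can be made explicit. The short Python sketch below uses only the stock concentration stated here and the infusion volume and rate stated in Section 2.4.3. The per-hemisphere mass and the infusion duration are derived values that are not reported in the text, and the function and variable names are hypothetical.

```python
# Stated values: 500 ng/ul stock of each drug, mixed in equal volumes,
# infused at 0.5 ul per hemisphere at a rate of 0.5 ul/min.
STOCK_NG_PER_UL = 500.0
INFUSION_VOLUME_UL = 0.5
INFUSION_RATE_UL_PER_MIN = 0.5


def concentration_after_equal_mix(stock_ng_per_ul):
    """Mixing two solutions in equal volumes halves each drug's concentration."""
    return stock_ng_per_ul / 2.0


final_conc = concentration_after_equal_mix(STOCK_NG_PER_UL)

# Derived (not stated in the text): mass of each drug per hemisphere.
mass_per_hemisphere_ng = final_conc * INFUSION_VOLUME_UL

# Derived (not stated in the text): duration of the infusion itself.
infusion_minutes = INFUSION_VOLUME_UL / INFUSION_RATE_UL_PER_MIN

print(final_conc, mass_per_hemisphere_ng, infusion_minutes)
```

The first printed value reproduces the 250 ng/μl final concentration given above; the remaining two follow from the stated volume and rate.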

2.6. Histology

At the completion of each experiment, rats were overdosed with Euthasol and were perfused intracardially with 0.1M PBS followed by 4% paraformaldehyde. Brains were extracted and post-fixed in paraformaldehyde for 24 h, after which they were transferred to a 20% sucrose in 0.1M PBS solution for 2 days before being sectioned. Brains were sectioned (45 μm) on a cryostat maintained at −19°C and sections were mounted on electrostatic slides (Fisherbrand). Brain sections were subsequently stained with 0.25% thionin and coverslipped with Permount (Fisher Scientific).

2.7. Data analysis

Raw data files were extracted from Graphic State 3.0 software and compiled using a custom macro written for Microsoft Excel (Dr. Jonathan Lifshitz, University of Kentucky). All data were analyzed with SPSS 22.0. Choice performance in the RDT was defined as the percentage of free choice trials in each block on which rats chose the large, risky reward. In all statistical analyses, a p-value of 0.05 or less was considered statistically significant. Stable choice behavior was determined by analyzing the free choice trials from the last five consecutive test sessions using a two-factor repeated measures analysis of variance (ANOVA) (Simon and Setlow, 2012; Winstanley et al., 2004). Only when there was neither a main effect of day nor a significant day X trial block interaction was choice behavior considered stable. To evaluate the effects of the pharmacological manipulations, a two-factor repeated measures ANOVA (dose X block) was conducted on choice performance. If this parent ANOVA revealed a significant main effect of dose and/or a significant dose X block interaction, additional repeated measures ANOVAs were used to compare individual doses with the vehicle condition. Choice latencies were defined as the interval between lever extension and lever press, excluding trials on which rats failed to lever press altogether (omissions). To analyze choice latencies, only forced choice trials were used due to insufficient data from free choice trials [i.e., some rats chose one lever exclusively on some blocks; (Orsini et al., 2016; Shimp et al., 2015)]. Choice latencies were analyzed using a three-factor repeated measures ANOVA (lever identity X dose X block). If this analysis yielded significant main effects and/or interactions, additional repeated measures ANOVAs were employed to compare individual doses with the vehicle condition for each lever.
Baseline locomotor activity (in arbitrary units) was averaged across intertrial intervals (ITIs; the period in which neither lights nor levers were present) across all blocks for each dose and was analyzed with either a paired t-test (Experiment 1) or a repeated measures ANOVA (Experiments 2-3) with drug or dose as the within subjects factors. Locomotor activity during the 1 s shock delivery was used as a measure of shock reactivity and was averaged across blocks 2-5 for each dose. These data were then analyzed with either a paired t-test (Experiment 1), a one-factor repeated measures ANOVA (Experiment 2) or a two-factor repeated measures ANOVA (Experiment 3), with dose(s) as the within subjects factor(s). Finally, omissions were defined as the percentage of forced choice trials (out of the maximum of 40) or free choice trials (out of the maximum of 50), on which a rat failed to lever press. Omissions were analyzed using the same methods used for locomotor activity.
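The two percentage measures defined in this section can be illustrated with a minimal Python sketch. It is not the Excel macro used for the actual analyses, and the function names and input encoding are hypothetical. One point is an assumption rather than something stated in the text: omitted trials are excluded from the denominator when computing percent choice of the large, risky reward.

```python
def percent_large_choice(block_choices):
    """Percent of free choice trials in a block on which the large lever was chosen.

    block_choices holds "large", "small", or None (omission) for each trial.
    ASSUMPTION: omissions are dropped from the denominator.
    """
    completed = [c for c in block_choices if c is not None]
    if not completed:
        return float("nan")  # no completed trials in this block
    n_large = sum(1 for c in completed if c == "large")
    return 100.0 * n_large / len(completed)


def percent_omitted(n_omitted, max_trials):
    """Omissions as a percentage of the session maximum (40 forced, 50 free)."""
    return 100.0 * n_omitted / max_trials


# Example with made-up data for one rat in one block.
block = ["large"] * 7 + ["small"] * 3
print(percent_large_choice(block))   # 7 of 10 completed trials
print(percent_omitted(3, 50))        # 3 of 50 free choice trials omitted
```

Returning NaN for a block with no completed trials keeps such blocks from being silently scored as 0% risky choice.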

3. Results

3.1. Histology

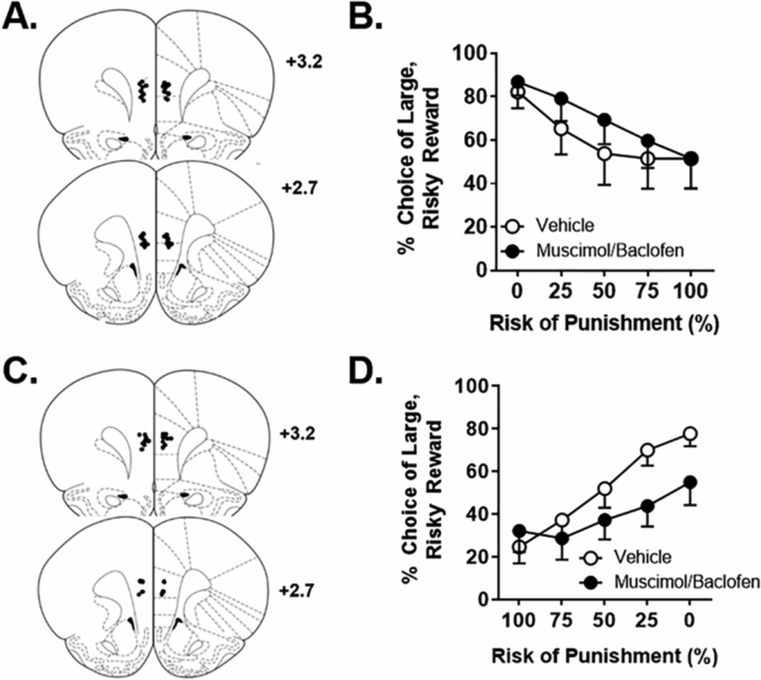

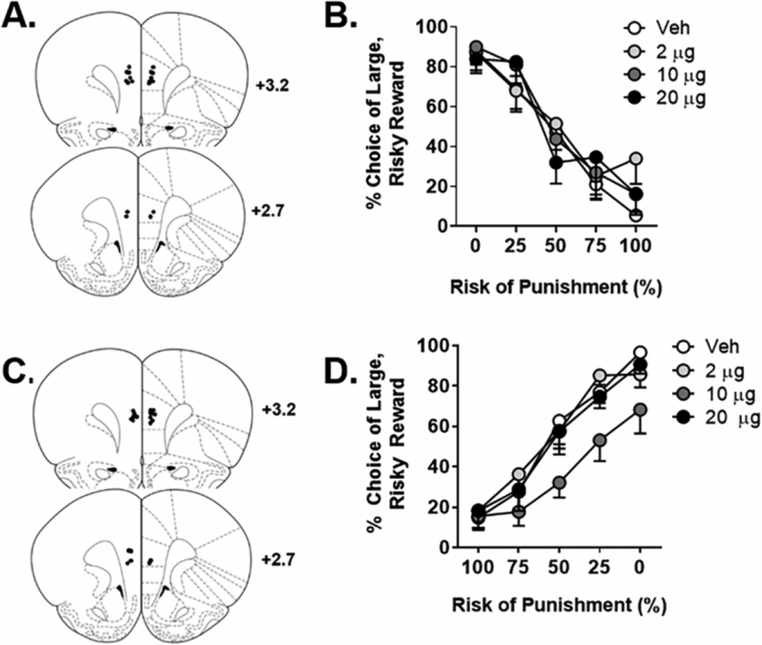

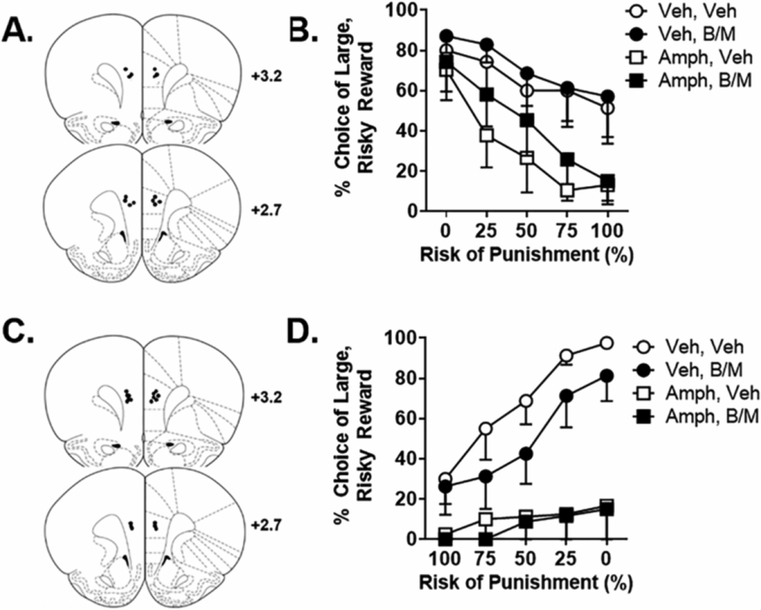

Figures 1A and C display the placements of the intra-mPFC guide cannulae for rats included in Experiment 1. In Experiment 1.1, three out of an initial sixteen rats were excluded due to misplaced cannulae (final n = 13). In Experiment 1.2, there were initially sixteen rats, but one died in surgery and three were excluded due to misplaced cannulae (final n = 12). In Experiment 2.1 (Figure 2A), sixteen rats underwent surgery, but one died during the course of recovery. Three additional rats were excluded due to loss of headcaps during behavioral testing, and two were excluded because of misplaced cannulae, resulting in a final n = 10. The rats in Experiment 2.2 were the same rats used in Experiment 1.2, but between the end of Experiment 1.2 and the start of Experiment 2.2, three rats lost their headcaps, resulting in a final n = 9. In Experiment 3.1 (Figure 3A), out of an initial 9 rats, two were excluded due to misplaced cannulae, yielding a final n = 7. Finally, in Experiment 3.2 (Figure 3C), out of an initial 14 rats, three lost their headcaps during training, one became ill during microinjections and three were excluded due to misplaced cannulae, leaving a final n = 7.
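The attrition described above can be tallied as a consistency check. The Python sketch below encodes only the counts given in this paragraph; the data structure and names are hypothetical. Treating Experiment 2.2 as the only reused cohort is an inference from the text, under which the initial cohorts sum to the 71 rats listed in Section 2.1.

```python
# (initial n, exclusions by reason, reported final n, reuses an earlier cohort?)
cohorts = {
    "1.1": (16, {"misplaced cannulae": 3}, 13, False),
    "1.2": (16, {"died in surgery": 1, "misplaced cannulae": 3}, 12, False),
    "2.1": (16, {"died in recovery": 1, "lost headcap": 3,
                 "misplaced cannulae": 2}, 10, False),
    # Same rats as Experiment 1.2, so the initial n is that final n.
    "2.2": (12, {"lost headcap": 3}, 9, True),
    "3.1": (9, {"misplaced cannulae": 2}, 7, False),
    "3.2": (14, {"lost headcap": 3, "became ill": 1,
                 "misplaced cannulae": 3}, 7, False),
}


def final_n(initial, exclusions):
    """Final sample size after subtracting every listed exclusion."""
    return initial - sum(exclusions.values())


for name, (initial, exclusions, reported, _) in cohorts.items():
    assert final_n(initial, exclusions) == reported, name

# Count each animal once by skipping the reused cohort.
unique_rats = sum(initial for initial, _, _, reused in cohorts.values()
                  if not reused)
print(unique_rats)
```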

Figure 1. Experiment 1: Effects of mPFC inactivation on risky decision making.

A. Cannula placements in the mPFC in Experiment 1.1. Black circles denote locations of the tips of the injectors used for microinjections. B. Inactivation of the mPFC with microinjection of baclofen/muscimol during the RDT with ascending punishment probabilities caused a significant increase in choice of the large, risky reward. C. Cannula placements in the mPFC in Experiment 1.2. D. Inactivation of the mPFC during the RDT with descending punishment probabilities caused a significant decrease in choice of the large, risky reward. Data are represented as the mean ± SEM (within subjects) percent choice of the large, risky reward.

Figure 2. Experiment 2: Effects of mPFC amphetamine on risky decision making.

A. Cannula placements in the mPFC in Experiment 2.1. Black circles denote locations of the tips of the injectors used for microinjections. B. Microinjections of amphetamine in the mPFC had no effect on choice of the large, risky reward in the RDT with ascending punishment probabilities. C. Cannula placements in the mPFC in Experiment 2.2. D. Microinjections of amphetamine in the mPFC caused a significant decrease in choice of the large, risky reward in the RDT with descending punishment probabilities, with the 10 μg dose differing significantly from vehicle. Data are represented as the mean ± SEM (within subjects) percent choice of the large, risky reward.

Figure 3. Experiment 3: Effects of mPFC inactivation on systemic amphetamine-induced risk aversion.

A. Cannula placements in the mPFC in Experiment 3.1. Black circles denote locations of the tips of the injectors used for microinjections. B. In the RDT with ascending punishment probabilities, mPFC inactivation caused a significant increase in choice of the large, risky reward and systemic amphetamine caused a significant decrease in choice of the large, risky reward, but mPFC inactivation did not alter the effects of amphetamine. C. Cannula placements in the mPFC in Experiment 3.2. D. In the RDT with descending punishment probabilities, systemic amphetamine caused a significant decrease in choice of the large, risky reward, but mPFC inactivation did not alter the effects of amphetamine. Data are represented as the mean ± SEM (within subjects) percent choice of the large, risky reward.

3.2. Experiment 1: Effects of mPFC inactivation on risky decision making

In Experiment 1.1, rats were trained in the ascending RDT for 30 days, at which point they reached a stable baseline, and then received intra-mPFC microinjections of vehicle or baclofen/muscimol prior to testing. Analysis of choice performance yielded a main effect of drug [F (1, 12) = 6.36, p = 0.03; η2p = 0.35], but no significant drug X trial block interaction [F (4, 48) = 1.48, p = 0.22]. Hence, inactivation of the mPFC caused an increase in choice of the large, risky reward (Figure 1B). Note that the main effect of trial block was significant in this [F (4, 48) = 7.13, p < 0.01; η2p = 0.37] and all subsequent experiments (at least p < 0.01) and thus main effects of trial block are not reported for subsequent experiments. The analysis of response latencies during forced choice trials (Table 2) revealed no main effect of lever identity [F (1, 12) = 0.86, p = 0.37] and no main effect of inactivation [F (1, 12) = 0.02, p = 0.88]. Further, there were no significant lever identity X inactivation [F (1, 12) = 0.15, p = 0.71], inactivation X block [F (4, 48) = 0.29, p = 0.89] or lever identity X inactivation X block [F (4, 48) = 0.64, p = 0.64] interactions. There was, however, a significant lever identity X block interaction [F (4, 48) = 3.46, p = 0.02; η2p = 0.22], which reflected that, across both vehicle and inactivation conditions, there was an increase in latency to press the large reward lever as the risk of punishment increased across the test session (as in our previous work; Orsini et al., 2016). These latency data suggest that even though mPFC inactivation alters choice behavior, it does not affect the rats’ perception or valuation of the large, risky vs. the small, safe reward. Finally, for this and all subsequent experiments, locomotor activity, shock reactivity and trial omissions are displayed in Table 1, with asterisks indicating significant differences from vehicle.
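The partial eta squared values reported above can be recovered from the F statistics and their degrees of freedom using the standard identity η2p = (F × df_effect) / (F × df_effect + df_error). The Python sketch below applies that identity to the three significant effects in this paragraph. The function name is hypothetical, and the analyses themselves were run in SPSS, not with this code.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared computed from an F statistic and its degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# (label, F, df_effect, df_error) as reported for Experiment 1.1.
effects = [
    ("drug", 6.36, 1, 12),
    ("trial block", 7.13, 4, 48),
    ("lever identity X block", 3.46, 4, 48),
]

for label, f_value, df_effect, df_error in effects:
    print(label, round(partial_eta_squared(f_value, df_effect, df_error), 2))
```

Rounded to two decimals, the three values agree with the effect sizes reported in the text (0.35, 0.37 and 0.22).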

Table 2.

Data are represented as mean (± SEM) seconds. Column titles refer to the trial block number in the RDT. B/M refers to baclofen/muscimol administration. Note that there were insufficient data in Experiment 3 to calculate the means for latencies to press the small, safe or large, risky lever on forced choice trials.

| Experiment | Small lever, block 1 | Small lever, block 2 | Small lever, block 3 | Small lever, block 4 | Small lever, block 5 | Large lever, block 1 | Large lever, block 2 | Large lever, block 3 | Large lever, block 4 | Large lever, block 5 |
|---|---|---|---|---|---|---|---|---|---|---|
| Intra-mPFC inactivation | | | | | | | | | | |
| Experiment 1.1 | | | | | | | | | | |
| Vehicle | 1.10 (0.17) | 1.06 (0.08) | 1.04 (0.12) | 1.14 (0.12) | 1.25 (0.15) | 0.85 (0.16) | 1.10 (0.25) | 1.47 (0.41) | 2.35 (0.99) | 0.88 (0.47) |
| B/M | 1.07 (0.12) | 1.08 (0.12) | 1.17 (0.13) | 1.01 (0.10) | 1.11 (0.11) | 0.77 (0.06) | 1.02 (0.22) | 1.14 (0.27) | 2.43 (0.66) | 1.63 (0.66) |
| Experiment 1.2 | | | | | | | | | | |
| Vehicle | 1.07 (0.17) | 0.89 (0.14) | 0.92 (0.11) | 0.91 (0.11) | 1.24 (0.28) | 2.43 (0.67) | 2.08 (0.62) | 1.64 (0.31) | 1.68 (0.39) | 1.10 (0.12) |
| B/M | 0.94 (0.12) | 1.12 (0.27) | 1.14 (0.27) | 0.92 (0.10) | 1.04 (0.09) | 1.26 (0.16) | 1.42 (0.22) | 1.41 (0.21) | 1.33 (0.22) | 1.12 (0.11) |
| Intra-mPFC amphetamine | | | | | | | | | | |
| Experiment 2.1 | | | | | | | | | | |
| 0 μg | 0.61 (0.06) | 0.71 (0.08) | 0.80 (0.09) | 0.81 (0.20) | 0.60 (0.05) | 0.69 (0.14) | 0.92 (0.16) | 1.21 (0.11) | 1.20 (0.09) | 1.34 (0.10) |
| 2.0 μg | 0.80 (0.18) | 0.75 (0.08) | 0.72 (0.09) | 0.94 (0.26) | 0.90 (0.24) | 0.91 (0.15) | 0.86 (0.18) | 1.13 (0.17) | 1.30 (0.15) | 1.29 (0.09) |
| 10.0 μg | 1.04 (0.22) | 0.70 (0.08) | 0.73 (0.09) | 0.81 (0.15) | 0.67 (0.12) | 1.15 (0.53) | 0.81 (0.07) | 1.10 (0.25) | 1.53 (0.18) | 2.59 (0.20) |
| 20.0 μg | 0.75 (0.12) | 0.94 (0.17) | 0.68 (0.09) | 0.67 (0.10) | 0.64 (0.04) | 0.67 (0.12) | 0.86 (0.18) | 1.10 (0.13) | 1.54 (0.35) | 1.19 (0.10) |
| Experiment 2.2 | | | | | | | | | | |
| 0 μg | 0.87 (0.16) | 0.69 (0.05) | 0.80 (0.08) | 0.93 (0.11) | 1.07 (0.11) | 1.04 (0.19) | 1.25 (0.06) | 1.14 (0.07) | 0.91 (0.09) | 0.80 (0.05) |
| 2.0 μg | 0.82 (0.12) | 0.66 (0.05) | 0.80 (0.08) | 0.90 (0.10) | 0.92 (0.07) | 2.26 (0.36) | 1.29 (0.15) | 1.22 (0.12) | 1.04 (0.13) | 0.98 (0.11) |
| 10.0 μg | 1.33 (0.37) | 0.62 (0.04) | 0.68 (0.04) | 0.76 (0.06) | 0.94 (0.10) | 2.67 (0.66) | 2.00 (0.41) | 2.08 (0.43) | 1.74 (0.34) | 1.31 (0.31) |
| 20.0 μg | 0.73 (0.12) | 0.67 (0.05) | 0.72 (0.08) | 0.90 (0.07) | 0.99 (0.12) | 1.53 (0.24) | 1.98 (0.60) | 1.70 (0.59) | 1.11 (0.16) | 1.05 (0.13) |

Table 1.

Asterisks indicate a significant difference (p < 0.05) from vehicle condition on omissions, locomotor activity, or shock reactivity (as indicated at the top of each column). Data are represented as mean (± SEM). Veh refers to vehicle administration, B/M refers to baclofen/muscimol administration, and amph refers to amphetamine administration. There were insufficient data in Experiment 3.2 to calculate the means for shock reactivity for all conditions in which amphetamine was administered (most rats never chose the large, risky reward).

| Experiment | % Omitted Trials (Free) | % Omitted Trials (Forced) | Locomotion (locomotor units/ITI) | Shock reactivity (locomotor units/shock) |
|---|---|---|---|---|
| Intra-mPFC inactivation | | | | |
| Experiment 1.1 | | | | |
| Vehicle | 0.92 (0.62) | 12.50 (4.05) | 17.16 (2.63) | 2.76 (0.30) |
| B/M | 6.0 (1.94)* | 8.84 (2.56) | 18.30 (2.21) | 2.77 (0.25) |
| Experiment 1.2 | | | | |
| Vehicle | 0.83 (0.52) | 4.79 (2.49) | 18.78 (1.86) | 3.30 (0.30) |
| B/M | 1.67 (0.64) | 1.88 (1.07) | 20.32 (2.41) | 2.85 (0.40) |
| Intra-mPFC amphetamine | | | | |
| Experiment 2.1 | | | | |
| 0 μg | 5.40 (1.61) | 6.75 (2.50) | 23.02 (2.49) | 3.14 (0.29) |
| 2.0 μg | 8.40 (4.48) | 10.50 (3.14) | 12.68 (2.04) | 3.20 (0.19) |
| 10.0 μg | 7.00 (2.67) | 6.00 (2.26) | 23.98 (2.04) | 2.93 (0.15) |
| 20.0 μg | 10.80 (4.70) | 15.15 (4.65) | 24.61 (1.65) | 3.03 (0.28) |
| Experiment 2.2 | | | | |
| 0 μg | 4.67 (2.47) | 8.86 (4.16) | 17.76 (1.54) | 3.24 (0.32) |
| 2.0 μg | 3.17 (0.87) | 7.05 (1.01) | 17.12 (1.46) | 3.19 (0.45) |
| 10.0 μg | 12.67 (8.22) | 12.64 (4.63) | 17.82 (1.34) | 3.06 (0.13) |
| 20.0 μg | 2.17 (0.67) | 6.27 (3.03) | 20.71 (1.50) | 3.50 (0.62) |
| Intra-mPFC inactivation plus systemic amphetamine (1.5 mg/kg) | | | | |
| Experiment 3.1 | | | | |
| Veh, veh | 0.0 (0.0) | 5.71 (4.90) | 16.89 (1.93) | 2.68 (0.65) |
| B/M, veh | 4.29 (4.29) | 2.50 (2.50) | 16.21 (2.48) | 2.51 (0.55) |
| Veh, amph | 27.43 (6.64)* | 41.43 (4.94)* | 26.46 (3.14)* | 2.35 (1.05) |
| B/M, amph | 16.86 (4.97)* | 30.36 (6.65)* | 24.83 (3.18) | 3.04 (0.60) |
| Experiment 3.2 | | | | |
| Veh, veh | 0.29 (0.29) | 2.86 (2.07) | 22.97 (2.33) | 4.02 (0.28) |
| B/M, veh | 2.86 (2.86) | 5.07 (2.16) | 20.94 (2.88) | 3.67 (0.25) |
| Veh, amph | 22.29 (9.91) | 53.93 (3.89)* | 33.84 (2.22)* | |
| B/M, amph | 18.57 (6.32) | 53.21 (3.36)* | 28.67 (2.75) | |

The increase in choice of the large, risky reward in Experiment 1.1 induced by mPFC inactivation could reflect an increase in preference for larger but riskier options (i.e., increased risk-taking behavior). Alternatively, it could be interpreted as a deficit in shifting choice behavior from the large to the small reward across trial blocks, suggesting an impairment in behavioral flexibility. To address this possibility, rats in Experiment 1.2 were trained in the descending RDT, wherein the probabilities of punishment decreased across the test session. After training for 26 days, rats reached stable baseline choice performance. As in Experiment 1.1, rats then received intra-mPFC microinjections of vehicle or baclofen/muscimol prior to testing. While there was no main effect of inactivation [F (1, 11) = 2.04, p = 0.18], there was a significant inactivation X block interaction [F (4, 44) = 7.29, p < 0.01; η2p = 0.40]. Inspection of Figure 1D suggests that, in contrast to vehicle conditions, mPFC inactivation impaired rats’ ability to shift their choice preference as risk of punishment decreased (i.e., they perseverated in choice of the small reward despite the decreasing risk of punishment). The analyses of latencies to press each lever on forced choice trials (Table 2) revealed a main effect of lever identity [F (1, 11) = 4.94, p = 0.05; η2p = 0.31] and a significant lever identity X block interaction [F (4, 44) = 2.97, p = 0.03; η2p = 0.21]. As in Experiment 1.1, this reflects the fact that relative to the small, safe lever, latencies to press the large, risky lever were longer during the higher risk blocks and shorter during lower risk blocks. Unless otherwise noted, in all subsequent experiments and their respective analyses, this same pattern was observed and thus will not be discussed further. 
There was neither a main effect of inactivation [F (1, 11) = 3.09, p = 0.27] nor significant lever identity X inactivation [F (1, 11) = 2.72, p = 0.13], inactivation X block [F (4, 44) = 0.89, p = 0.48] or lever identity X inactivation X block [F (4, 44) = 1.444, p = 0.24] interactions. Overall, these latency analyses indicate that, relative to the small, safe lever, rats took longer to press the large, risky lever when risk of punishment was highest, and that these latencies decreased as risk of punishment decreased. This pattern of behavior, however, was not affected by mPFC inactivation, again suggesting that the valuation of each choice remained intact.
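
The latency pattern described above can be checked directly against the group means in Table 2. The following is a minimal sketch, not part of the original analysis: it takes the vehicle means for Experiment 1.2 from Table 2 and computes the large-minus-small latency difference in each block. The function name `latency_difference` is hypothetical, and the sketch uses only the reported means (no SEMs or per-rat data), so it illustrates the direction of the effect rather than reproducing the ANOVA.

```python
# Mean forced-choice latencies copied from Table 2 (Experiment 1.2, vehicle).
# Blocks run 1 -> 5; in the descending RDT, punishment risk falls across blocks.
small_lever = [1.07, 0.89, 0.92, 0.91, 1.24]
large_lever = [2.43, 2.08, 1.64, 1.68, 1.10]


def latency_difference(large, small):
    """Return per-block (large - small) latency differences, rounded to 2 places."""
    return [round(l - s, 2) for l, s in zip(large, small)]


differences = latency_difference(large_lever, small_lever)
for block, diff in enumerate(differences, start=1):
    # A positive value means rats were slower to press the large, risky lever.
    print(f"block {block}: large - small = {diff:+.2f}")
```

On these means the difference is largest in the first, highest-risk block and changes sign by the last block, which matches the lever identity X block interaction reported in the text.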

When considered with the results from Experiment 1.1, these findings suggest that rather than affecting risk taking per se (i.e., preference for large, risky vs. small, safe rewards), mPFC inactivation impaired behavioral flexibility such that rats were slower to shift away from their initial choice preference as risk contingencies changed across the test session. Thus, the mPFC seems to be necessary for the ability to rapidly adjust choice behavior in response to shifts in risk contingencies.

3.2. Experiment 2. Effects of intra-mPFC amphetamine on risky decision making

Experiment 2 evaluated the effects of intra-mPFC microinjections of amphetamine, which enhances NE, DA and 5-HT availability through actions at their respective transporters (Heal et al., 2013). In Experiment 2.1, rats were trained for 28 days in the ascending RDT, at which point stable baseline performance was reached, and then received intra-mPFC microinjections of amphetamine (0, 2.0, 10.0 and 20.0 μg). There was no main effect of dose [F (3, 27) = 0.40, p = 0.75] on choice behavior, but there was a dose X block interaction [F (12, 108) = 1.82, p = 0.05; η2p = 0.17] (Figure 2B). While this hints at an effect of intra-mPFC amphetamine on choice behavior, additional analyses revealed that it was driven by a significant dose X block [F (4, 36) = 2.62, p = 0.05; η2p = 0.23] interaction only when comparing the low to the high dose of amphetamine. A similar pattern of results emerged when analyzing latencies to press each lever on the forced choice trials (Table 2). There were significant interactions between dose and block [F (12, 36) = 2.92, p = 0.01; η2p = 0.49] and lever identity, dose and block [F (12, 36) = 3.63, p < 0.01; η2p = 0.55]. There was not, however, a main effect of dose [F (3, 9) = 2.28, p = 0.15]. To determine the basis for the lever identity X dose X block interaction, subsequent three factor repeated measures ANOVAs (dose X lever identity X block) were performed to compare latencies for each dose against latencies under vehicle conditions. These analyses, however, revealed neither significant main effects of dose nor any interactions between dose, lever identity and/or block (ps > 0.05), indicating that the significant interaction in the initial parent ANOVA was not driven by effects of amphetamine relative to vehicle on latencies to press the small and large reward lever. 
Given the lack of differences between vehicle and the amphetamine doses in the latency analyses, these findings, together with those from the analysis of choice behavior, suggest that intra-mPFC amphetamine did not strongly modulate risk taking in the ascending RDT.
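
For readers who want to cross-check the reported effect sizes, partial eta squared can be recovered from an F ratio and its degrees of freedom through the standard identity η2p = (F × df_effect) / (F × df_effect + df_error). The sketch below is offered only as a reader-side check under that identity; the function name `partial_eta_squared` is hypothetical, and the statistics software used in the study presumably computed η2p from sums of squares rather than in this way.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F ratio and its degrees of freedom.

    Uses the identity eta2p = (F * df_effect) / (F * df_effect + df_error).
    """
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# (label, F, df_effect, df_error) as reported for Experiment 2.1 in the text.
reported = [
    ("choice: dose X block", 1.82, 12, 108),
    ("choice: dose X block, low vs. high dose", 2.62, 4, 36),
    ("latency: dose X block", 2.92, 12, 36),
    ("latency: lever identity X dose X block", 3.63, 12, 36),
]

recovered = [round(partial_eta_squared(f, d1, d2), 2) for _, f, d1, d2 in reported]
for (label, *_), value in zip(reported, recovered):
    print(f"{label}: eta2p = {value:.2f}")
```

The recovered values agree, to two decimal places, with the η2p values given in the paragraph above.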

Consistent with the design of Experiment 1, Experiment 2.2 tested the effects of intra-mPFC amphetamine in the descending RDT. For this experiment, the rats used in Experiment 1.2 were re-trained until choice performance re-stabilized (20 days) then received intra-mPFC microinjections of amphetamine before testing. In contrast to Experiment 2.1, there was a main effect of dose [F (3, 24) = 3.3, p =0.04; η2p = 0.29], but no dose X block interaction [F (12, 96) = 1.60, p = 0.10]. Subsequent two factor repeated measures ANOVAs were then performed to compare each dose to vehicle. There were no differences between vehicle and the low dose [dose, F (1, 8) = 0.03, p = 0.87; dose X block, F (4, 32) = 1.55, p = 0.21] or vehicle and the high dose [dose, F (1, 8) = 0.10, p = 0.76; dose X block, F (4, 32) = 0.32, p = 0.86]; however, there was a significant main effect of dose [F (1, 8) = 5.45, p = 0.05; η2p = 0.41] and a significant dose X block interaction [F (4, 32) = 3.14, p = 0.03; η2p = 0.28] when the medium dose was compared to vehicle. Hence, intra-mPFC microinjection of the medium (10 μg) dose of amphetamine significantly decreased choice of the large, risky reward in the descending RDT (Figure 2D). Analyses of latencies on forced choice trials (Table 2) also revealed effects of intra-mPFC amphetamine. While there were only trends toward a main effect of dose [F (3, 24) = 2.76, p = 0.06] and a dose X block interaction [F (12, 96) = 1.70, p = 0.08], there was a significant lever identity X dose interaction [F (3, 24) = 4.00, p = 0.02; η2p = 0.33]. There was not, however, a significant interaction between lever identity, dose and block [F (12, 96) = 1.29, p = 0.24]. To determine the basis for the lever identity X dose interaction, additional two factor repeated measures ANOVAs were conducted comparing latencies to press each lever between vehicle and each dose of amphetamine. 
These analyses revealed that only the low and medium doses of amphetamine selectively increased latencies to press the large, risky lever relative to vehicle (all ps < 0.05), results that are consistent with the effects of the medium dose of amphetamine on choice performance. Collectively, these data indicate that intra-mPFC amphetamine, particularly the medium dose, decreases preference for the large, risky option in the descending RDT.

3.3. Experiment 3. Effects of mPFC inactivation on amphetamine-induced alterations in risky decision making

Experiment 2 showed that increasing monoamine neurotransmission in the mPFC via amphetamine can decrease choice of the large, risky reward, and prior work from our lab shows that systemic amphetamine has similar effects in the ascending RDT (Orsini et al., 2015b; Orsini et al., 2016; Simon et al., 2009; Simon et al., 2011). These data suggest that the mPFC could be a locus of the effects of systemic amphetamine on risk-taking behavior. To determine whether the mPFC is critical for amphetamine-induced risk aversion, Experiment 3 employed inactivation of mPFC via baclofen/muscimol in combination with systemic amphetamine prior to testing in the RDT. In Experiment 3.1, rats were trained for 20 days in the ascending RDT, at which point stable performance emerged. Rats then received intra-mPFC microinjections of baclofen/muscimol (or vehicle) 5 min prior to receiving systemic injections of vehicle or amphetamine [1.5 mg/kg, a dose that robustly reduced risk taking in prior studies (Orsini et al., 2015b; Orsini et al., 2016; Simon et al., 2009)]. A three factor repeated measures ANOVA (inactivation X amphetamine X block) revealed a main effect of inactivation [F (1, 5) = 26.29, p < 0.01; η2p = 0.84] and a main effect of amphetamine [F (1, 5) = 8.17, p = 0.04; η2p = 0.62]; however, there were no significant interactions among the variables [inactivation X amphetamine, F (1, 5) = 0.95, p = 0.37; inactivation X block, F (4, 20) = 0.87, p = 0.50; amphetamine X block, F (4, 20) = 0.91, p = 0.48; inactivation X amphetamine X block, F (4, 20) = 0.58, p = 0.68]. These results indicate that, consistent with Experiment 1.1, mPFC inactivation increases choice of the large, risky reward, and that systemic amphetamine decreases choice of the large, risky reward as in previous studies (Orsini et al., 2016; Simon et al., 2011), but mPFC inactivation did not influence amphetamine’s effects on choice of the large, risky reward (Figure 3B). 
It was not possible to analyze lever press latencies on forced choice trials due to insufficient data (missing data for at least two-thirds of the rats in the analyses). This was driven mainly by the high percentage of omissions on the forced choice trials [inactivation, F (1, 6) = 5.54, p = 0.06; amphetamine, F (1, 6) = 55.91, p < 0.01].

Experiment 3.2 was identical to Experiment 3.1 except that rats were trained in the descending RDT until reaching stable performance (25 days). In Experiment 3.2, a three factor repeated measures ANOVA yielded only a trend toward a main effect of mPFC inactivation [F (1, 5) = 5.01, p = 0.08; η2p = 0.50], as well as a main effect of amphetamine [F (1, 5) = 9.17, p = 0.03; η2p = 0.65] and an amphetamine X block interaction [F (4, 20) = 9.38, p < 0.01; η2p = 0.65]. Although it did not quite reach significance, the trend for mPFC inactivation to decrease choice of the large, risky reward was in the same direction as that in Experiment 1.2 and thus provides a modicum of confirmation for these findings. This difference between the two experiments may be due to the smaller sample size in Experiment 3.2 compared to Experiment 1.1 (n = 8 vs. n = 13), thus limiting the power to detect a main effect of the inactivation. The significant effects of amphetamine manifested as a decrease in choice of the large, risky reward, a finding that is consistent with the effects of amphetamine in Experiment 3.1 as well as those reported previously (Orsini et al., 2016; Simon et al., 2011). There were no significant interactions between inactivation and amphetamine [F (1, 5) = 2.43, p = 0.18], inactivation and block [F (4, 20) = 0.59, p = 0.68] or inactivation, amphetamine, and block [F (4, 20) = 0.61, p = 0.66]. These results indicate that, consistent with Experiment 3.1, mPFC inactivation does not attenuate the effects of amphetamine on choice of the large, risky reward (Figure 3D). As in Experiment 3.1, the high percentage of omissions during the forced choice trials precluded analysis of response latencies during these trials. Although there was no effect of mPFC inactivation on omissions on forced choice trials [F (1, 6) = 0.03, p = 0.88], amphetamine significantly increased omissions on these trials [F (1, 6) = 120.17, p < 0.01].

4. Discussion

The current experiments yielded several findings with respect to the role of mPFC in decision making involving risk of explicit punishment. First, the effects of mPFC inactivation on choice of the large, risky reward were dependent upon the order in which probabilities of punishment were presented. When probabilities of punishment increased across the session, mPFC inactivation caused an increase in choice of the large, risky reward. When probabilities decreased across the session, however, mPFC inactivation caused a decrease in choice of the large, risky reward. Subsequent experiments focused on contributions of mPFC monoamine neurotransmission to risky decision making. Microinjection of amphetamine, which blocks NET, serotonin transporters (SERT) and dopamine transporters (DAT), decreased choice of the large, risky reward in the descending RDT, although it had only minimal effects in the ascending RDT. Finally, in both the ascending and descending RDT, systemic amphetamine administration reduced choice of the large, risky reward, but this effect was not attenuated by mPFC inactivation.

4.1. The role of mPFC in risky decision making

Previous research from our lab showed that mPFC expression of both D2 mRNA and the epigenetic factor MeCP2 is associated with preference for the large, risky reward in the RDT (Deng et al., 2018; Simon et al., 2011). These data are correlational in nature, however, and thus do not indicate a clear role of the mPFC in decision making. Hence, the current study was conducted to more directly test the role of the mPFC in decision making involving risk of explicit punishment. In Experiment 1.1, mPFC inactivation increased choice of the large, risky reward when probabilities of punishment increased across the test session (the “ascending RDT”). This apparent increase in risk taking is, at face value, consistent with previous human and rodent studies. Patients with damage to the dlPFC preferentially choose disadvantageous options in the IGT (Clark et al., 2003; Fellows and Farah, 2005; Manes et al., 2002). Similarly, lesions or inactivation of the mPFC in rodents cause an increase in disadvantageous choices in gambling tasks modeled after the IGT (de Visser et al., 2011; Paine et al., 2013; Zeeb et al., 2015). Considered together, these data suggest that one function of the mPFC is to bias choices away from risky options.

An alternative interpretation of these findings, however, is that these deficits reflect an inability to disengage from disadvantageous patterns of choice behavior established during early stages of the tasks. To determine if this accounted for the pattern of data from Experiment 1.1, another cohort of rats (Experiment 1.2) was trained in the “descending RDT” in which probabilities of punishment decreased across the session. This manipulation resulted in a significant decrease in choice of the large, risky reward, suggesting that, rather than mediating risk-taking behavior per se, the mPFC is important for flexibly shifting choice behavior as the punishment contingencies change. This latter result also indicates that the increase in choice of the large, risky reward in Experiment 1.1 was not due to reduced sensitivity to punishment, as the same manipulation had the opposite effect when the order of probabilities was reversed. Further support for this interpretation stems from the fact that even though mPFC inactivation altered choice behavior, it did not affect latencies to choose the large, risky lever vs. small, safe lever. This suggests that the perception or valuation of each choice, a process tightly coupled to value-based decision making, remained unaffected despite the alterations in choice behavior. Thus, mPFC inactivation may have resulted in a dissociation of motivational value attribution and behavioral flexibility, such that rats were less able to shift their choices across blocks of trials in a manner congruent with their changes in response latencies. A similar effect of mPFC inactivation on behavioral flexibility during decision making has been observed in a “probability discounting task”, another rodent model of risky decision making that is similar to the RDT but in which the probability of large reward omission changes across blocks. Using this task, St. Onge et al. 
(2010) showed that, as with the current findings, the effect of mPFC inactivation on choice behavior depended on the order in which omission probabilities were presented. Similar data were obtained using a rodent gambling task, in which mPFC lesions cause perseverative choice of one option to the exclusion of other options (Rivalan et al., 2011). Thus, it appears that during decision making, the mPFC is critical for the ability to alter choice behavior in response to changes in risk contingencies. Although this notion is largely consistent with findings from human studies, there is evidence that in contrast to vmPFC damage, dlPFC damage increases disadvantageous choice behavior in a manner that is not easily explained by flexibility deficits (Fellows and Farah, 2005). This suggests that future work is needed to establish whether, perhaps, separate subregions of the mPFC contribute differently to risk taking, as a similar dichotomy may exist with respect to decision making involving risk of punishment.

If the mPFC is critical for behavioral flexibility, it may mediate its effects through dopamine D2 receptors (D2R), as previous work from our lab has shown that greater flexibility in choice performance in the RDT is associated with greater expression of D2R mRNA in the mPFC (Simon et al., 2011). Interestingly, recent work suggests that one mechanism by which D2Rs in the mPFC regulate the cognitive flexibility required for decision making is through their modulation of mPFC projections to the basolateral amygdala (Jenni et al., 2017). Finally, the conclusion that mPFC mediates cognitive flexibility in decision making is consistent with an abundance of work demonstrating the importance of the mPFC for performance of strategy-shifting tasks (Beas et al., 2017; Ragozzino, 2007; Ragozzino et al., 1999a; Ragozzino et al., 2003; Ragozzino et al., 1999b).

Reductions in flexible shifts in reward choice following mPFC inactivation may reflect an impaired ability to use action-outcome associations to guide behavior. Indeed, the mPFC is important for goal-directed behavior (Balleine and Dickinson, 1998; Corbit and Balleine, 2003; Coutureau et al., 2009; Laskowski et al., 2016; Ostlund and Balleine, 2005) and more specifically, for encoding the relationship between a response and the consequences of that response [e.g., damage to the mPFC impairs instrumental reward devaluation (Balleine and Dickinson, 1998; Corbit and Balleine, 2003; Coutureau et al., 2009; Killcross and Coutureau, 2003)]. Thus, an intact mPFC may enable feedback about previous outcomes of actions (i.e., receipt of the large reward plus footshock after selecting the large reward lever when the risk of punishment is high) to guide future choices (i.e., a shift toward selection of the small, safe reward). When the mPFC is offline, however, representations of the outcomes of past actions cannot be accessed and thus, behavior may be rendered more habitual and reflexive (Balleine and Dickinson, 1998). In the case of the RDT, it may be that in the absence of the mPFC, rats choose the optimal option in the first block of the session, but as the session progresses, are less able to alter their choices as the probability of punishment changes, because they are unable to use information about the outcomes of past trials to inform current choices. For example, in Experiment 1.2, rats showed a strong aversion to the large reward in the first block of trials in which the probability of punishment was 100%. As the probability of punishment decreased, rats increased their choice of the large reward under vehicle conditions. In contrast, when the mPFC was inactivated, they were more likely to continue to avoid the large, risky reward, despite the decreasing probability of punishment.

4.2. The role of mPFC monoamine neurotransmission in risky decision making

Monoamine neurotransmission in the mPFC has been implicated in aversively-motivated behavior, behavioral flexibility, and cost/benefit decision making (Floresco and Magyar, 2006; Floresco et al., 2006; Latagliata et al., 2010; McGaughy et al., 2008; Robbins and Arnsten, 2009; St Onge et al., 2012). To test whether mPFC monoamine neurotransmission contributes to decision making involving risk of punishment, amphetamine, which increases availability of DA, NE and 5-HT via DAT, NET and SERT, respectively (Heal et al., 2013), was microinjected directly into the mPFC prior to testing in either the ascending or descending RDT. Intra-mPFC amphetamine had no effect on choice of the large, risky reward compared to vehicle when the order of punishment probabilities increased across the session (ascending RDT). In contrast, intra-mPFC amphetamine (specifically at 10 μg) caused a decrease in choice of the large, risky reward when the order of punishment probabilities decreased across the session (descending RDT). At first glance, these results appear to contradict one another. Alternatively, however, they may indicate that intra-mPFC amphetamine affects both risk taking and behavioral flexibility. The effects of intra-mPFC amphetamine in the descending RDT are consistent with this interpretation. A decrease in choice of the large, risky reward could reflect both an increase in risk aversion (an effect also observed with systemic amphetamine administration in Experiment 3.2), and a decrease in behavioral flexibility similar to the effects of mPFC inactivation in Experiment 1.2. In the ascending RDT, however, these two effects of intra-mPFC amphetamine would negate each other (i.e., impaired behavioral flexibility would cause greater choice of the large, risky reward, whereas an increase in risk aversion would manifest as a decrease in choice of the large, risky reward). 
Considered together, monoamine transmission in the mPFC may be important in using risk-related information to flexibly alter choice behavior in the face of shifting choice contingencies.
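
The logic of this two-process account can be laid out as simple sign arithmetic. The sketch below is a toy illustration only: the unit magnitudes (+1, -1) are arbitrary assumptions chosen to show direction, not estimates from the data, and the names `RISK_AVERSION`, `INFLEXIBILITY` and `net_effect` are hypothetical.

```python
# Toy sign model of the two putative effects of intra-mPFC amphetamine on
# choice of the large, risky reward. Unit magnitudes are illustrative
# assumptions, not values estimated from the experiments.

# Greater risk aversion lowers risky choice regardless of task order.
RISK_AVERSION = -1

# Reduced flexibility holds choice near the first-block preference:
# ascending RDT starts at low risk, so inflexibility raises risky choice (+1);
# descending RDT starts at high risk, so inflexibility lowers risky choice (-1).
INFLEXIBILITY = {"ascending": +1, "descending": -1}


def net_effect(task):
    """Sum the two hypothesized effects for the given task order."""
    return RISK_AVERSION + INFLEXIBILITY[task]


for task in ("ascending", "descending"):
    print(f"{task} RDT: net change in risky choice = {net_effect(task):+d}")
```

Under these assumed equal magnitudes the two effects cancel in the ascending RDT and add in the descending RDT, which is the qualitative pattern the interpretation above is meant to explain; unequal magnitudes would leave a residual effect in the ascending task.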

These effects of amphetamine are likely due to increases in extracellular monoamines such as NE, DA and 5-HT, each of which has been implicated in decision making (Fitoussi et al., 2015; St Onge et al., 2012; Winstanley et al., 2006). For example, previous work showed that blockade of DA receptors in the mPFC impairs the ability to guide behavior away from choices that result in punishment (Floresco and Magyar, 2006), consistent with the potential risk aversion-enhancing effects of amphetamine in the present study. Similarly, NE release in the mPFC signals the salience of aversive stimuli (Ventura et al., 2008; Ventura et al., 2007) and appears to be involved in food-seeking behavior in the face of punishment (Latagliata et al., 2010). The fact that amphetamine affects several monoamine transporters may also explain why only the middle dose of amphetamine altered choice performance in the descending RDT. The distribution and affinity of NET and DAT in the mPFC are unusual, with a low density of DAT on dopaminergic terminals (Ciliax et al., 1995; Hitri et al., 1991; Sesack et al., 1998) and a greater affinity of NET for DA (Moron et al., 2002). Thus, the middle dose may have been sufficient to exert its effects through DAT, but the highest dose may have preferentially saturated NET, which, based on the absence of an effect, could indicate that NE transmission in the mPFC is not involved in risky decision making. Future studies employing more selective pharmacological manipulations will be necessary to reveal whether and how these neurotransmitters are critical for decision making under risk of punishment.

Previous work has shown that systemic amphetamine administration causes risk aversion in the RDT (Orsini et al., 2016; Simon et al., 2011). Given that intra-mPFC amphetamine caused a similar decrease in choice of the large, risky reward (at least in the descending RDT), it is conceivable that the effects of systemic amphetamine on risky choice are mediated through increased monoamine neurotransmission in the mPFC. To test this hypothesis, the mPFC was inactivated prior to systemic amphetamine administration, followed by testing in either the ascending or descending RDT. Both mPFC inactivation and systemic amphetamine altered choice behavior independently, but mPFC inactivation did not alter the ability of amphetamine to decrease choice of the large, risky reward. These results demonstrate that although amphetamine can have actions directly on the mPFC under some conditions (i.e., in Experiment 2), the mPFC is not a critical neural substrate through which systemic amphetamine causes risk aversion, and suggest that other brain regions are more likely candidates. One possibility is the nucleus accumbens (NAc) (Mitchell et al., 2014; Stopper and Floresco, 2011), which encodes aversive information (Roitman et al., 2005; Schoenbaum and Setlow, 2003; Setlow et al., 2003; Wenzel et al., 2015) and is critical for some aspects of avoidance behavior (Lichtenberg et al., 2014; Oleson et al., 2012; Ramirez et al., 2015). Further, DA transmission (Badrinarayan et al., 2012; Fernando et al., 2014; Oleson et al., 2012) and D2 DA receptor (D2R) function (Boschen et al., 2011; Danjo et al., 2014; Hikida et al., 2010; Hikida et al., 2013) within the NAc are necessary for some forms of aversively-motivated behavior. Most importantly, greater preference for the large, risky reward in the RDT is associated with lower D2R mRNA expression in the NAc, and direct pharmacological activation of NAc D2/3Rs decreases such risky choices (Mitchell et al., 2014). 
Collectively, these data suggest that the NAc is a likely neural locus through which systemic amphetamine acts to increase risk aversion.

Related to the effects of systemic amphetamine on risk taking, the current results also show that, in addition to decreasing risky choice as risk of punishment increases across a session, systemic amphetamine similarly decreases risky choice when such risk contingencies are reversed. This finding, which has not been demonstrated previously, suggests that the effects of amphetamine on risky choice are likely due to increased sensitivity to punishment rather than increased flexibility in choice behavior (as previous data from our lab could have suggested). Future experiments are now needed to identify the locus of amphetamine’s action (e.g., NAc) on sensitivity to punishment during decision making.

Considered together, the findings from the current study provide greater understanding of how the mPFC contributes to decision making involving risk of punishment. Based on the results of Experiments 1 and 3, it is clear that the mPFC is necessary for the flexibility required to adjust ongoing choice behavior in the face of changing task conditions. The results of Experiment 2, however, could suggest that, in addition to mediating the behavioral flexibility required for decision making, the mPFC may also process some risk-related information, and that this cognitive process may be mediated by monoamine neurotransmission within the mPFC. The mPFC, however, is clearly not critical for the risk aversion produced by systemic amphetamine, highlighting the need to characterize the roles of other brain regions in processing punishment-related information inherent to risky decision making.

Several limitations of this study are worth noting. One is that only male subjects were used. This is a particularly important consideration for several reasons. First, there is growing evidence for significant sex differences in decision making (Bolla et al., 2004b; Kerstetter et al., 2012; Orsini and Setlow, 2017; Orsini et al., 2016; Pellman et al., 2017; Perry et al., 2007; van den Bos et al., 2013; van den Bos et al., 2012). Indeed, we recently showed that females are significantly more risk averse than males in the RDT (Orsini et al., 2016). Second, in humans, the lateralization of vmPFC function in decision making differs between males and females. Across a series of elegant studies, Tranel and colleagues have shown that bilateral vmPFC damage impairs decision making in both males and females. However, when vmPFC damage is unilateral, these impairments in decision making only appear in males with lesions in the right vmPFC and in females with lesions in the left vmPFC (Reber and Tranel, 2017; Sutterer et al., 2015; Tranel et al., 2002; Tranel et al., 2005). This is consistent with a PET imaging study showing that during the IGT, males exhibit greater activation in the right orbitofrontal cortex, whereas females exhibit greater activation in the left dlPFC (Bolla et al., 2004b). Should similar sex differences in PFC laterality be evident in rats, more targeted approaches than those used in the current study would be necessary to fully investigate differences between male and female PFC function in risk-taking behavior. A second limitation is that mPFC pharmacological manipulations were not targeted to distinguish between the prelimbic (PL) and infralimbic (IL) subregions of the mPFC. Such a distinction may be important as these subregions have been shown to subserve different functions. 
For example, the PL is thought to control execution of goal-directed behavior while the IL is important for suppression of such behavior (Moorman et al., 2015; Peters et al., 2009). Given this, further investigation of mPFC function in decision making should aim to distinguish between the two subregions as it is conceivable that manipulations may yield distinct, subregion-dependent effects.

Individuals with SUD display impaired decision making and elevated risk-taking behavior (Gowin et al., 2013), as well as diminished dlPFC activity (Bolla et al., 2004a; Tanabe et al., 2007). Findings from the current study suggest that elevated risk taking in substance users may result from the impact of dlPFC impairments on their ability to shift choices away from disadvantageous options. Results from the current experiments also have implications for psychiatric diseases characterized by pathological risk aversion, such as anorexia nervosa (Kaye et al., 2013). Neuroimaging studies have shown that anorexia is associated with reduced function in the dlPFC (Pietrini et al., 2011). Consequently, this pattern of risk-taking behavior in individuals with anorexia may be due to inflexible decision making, biasing them away from options that could ultimately prove beneficial. These findings highlight the utility of preclinical models for determining the neurobiology underlying decision making, and suggest several avenues for future research in this area.

Highlights:

It is unknown how mPFC contributes to decision making involving risk of punishment

mPFC inactivation impairs flexible shifts in risky decision making

Intra-mPFC amphetamine reduces risk taking under some conditions

mPFC is not critical for reductions in risk taking caused by systemic amphetamine

mPFC regulates shifting choice patterns in response to changing cost contingencies

Acknowledgements:

We thank the Drug Supply Program at the National Institute on Drug Abuse for kindly providing D-amphetamine, and Ms. Bonnie McLaurin and Mr. Matt Bruner for technical assistance.

Funding: This work was supported by the National Institutes of Health (DA024671 and DA036534 to B.S.) and by a McKnight Brain Institute Fellowship and a Thomas H. Maren Fellowship (to C.A.O.).

References:

- Badrinarayan A, Wescott SA, Vander Weele CM, Saunders BT, Couturier BE, Maren S, Aragona BJ, 2012. Aversive stimuli differentially modulate real-time dopamine transmission dynamics within the nucleus accumbens core and shell. J Neurosci 32, 15779–15790.

- Balleine BW, Dickinson A, 1998. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology 37, 407–419.

- Beas BS, McQuail JA, Bañuelos C, Setlow B, Bizon JL, 2017. Prefrontal cortical GABAergic signaling and impaired behavioral flexibility in aged F344 rats. Neuroscience 345, 274–286.

- Bechara A, Damasio AR, Damasio H, Anderson SW, 1994. Insensitivity to future consequences following damage to human prefrontal cortex. Cognition 50, 7–15.

- Birrell JM, Brown VJ, 2000. Medial frontal cortex mediates perceptual attentional set shifting in the rat. J Neurosci 20, 4320–4324.

- Bolla K, Ernst M, Kiehl K, Mouratidis M, Eldreth D, Contoreggi C, Matochik J, Kurian V, Cadet J, Kimes A, Funderburk F, London E, 2004a. Prefrontal cortical dysfunction in abstinent cocaine abusers. J Neuropsychiatry Clin Neurosci 16, 456–464.

- Bolla KI, Eldreth DA, Matochik JA, Cadet JL, 2004b. Sex-related differences in a gambling task and its neurological correlates. Cereb Cortex 14, 1226–1232.

- Boschen SL, Wietzikoski EC, Winn P, Da Cunha C, 2011. The role of nucleus accumbens and dorsolateral striatal D2 receptors in active avoidance conditioning. Neurobiol Learn Mem 96, 254–262.

- Brand M, Recknor EC, Grabenhorst F, Bechara A, 2007. Decisions under ambiguity and decisions under risk: correlations with executive functions and comparisons of two different gambling tasks with implicit and explicit rules. J Clin Exp Neuropsychol 29, 86–99.

- Ciliax BJ, Heilman C, Demchyshyn LL, Pristupa ZB, Ince E, Hersch SM, Niznik HB, Levey AI, 1995. The dopamine transporter: immunochemical characterization and localization in brain. J Neurosci 15, 1714–1723.

- Clark L, Manes F, Antoun N, Sahakian BJ, Robbins TW, 2003. The contributions of lesion laterality and lesion volume to decision-making impairment following frontal lobe damage. Neuropsychologia 41, 1474–1483.

- Corbit LH, Balleine BW, 2003. The role of prelimbic cortex in instrumental conditioning. Behav Brain Res 146, 145–157.

- Coutureau E, Marchand AR, Di Scala G, 2009. Goal-directed responding is sensitive to lesions to the prelimbic cortex or basolateral nucleus of the amygdala but not to their disconnection. Behav Neurosci 123, 443–448.

- Crowley TJ, Dalwani MS, Mikulich-Gilbertson SK, Du YP, Lejuez CW, Raymond KM, Banich MT, 2010. Risky decisions and their consequences: neural processing by boys with Antisocial Substance Disorder. PLoS One 5, e12835.

- Dalley JW, McGaughy J, O'Connell MT, Cardinal RN, Levita L, Robbins TW, 2001. Distinct changes in cortical acetylcholine and noradrenaline efflux during contingent and noncontingent performance of a visual attentional task. J Neurosci 21, 4908–4914.

- Danjo T, Yoshimi K, Funabiki K, Yawata S, Nakanishi S, 2014. Aversive behavior induced by optogenetic inactivation of ventral tegmental area dopamine neurons is mediated by dopamine D2 receptors in the nucleus accumbens. Proc Natl Acad Sci U S A 111, 6455–6460.

- de Visser L, Baars AM, van 't Klooster J, van den Bos R, 2011. Transient inactivation of the medial prefrontal cortex affects both anxiety and decision-making in male Wistar rats. Front Neurosci 5, 102.

- Deng JV, Orsini CA, Shimp KG, Setlow B, 2018. MeCP2 Expression in a Rat Model of Risky Decision Making. Neuroscience 369, 212–221.

- Ernst M, Bolla K, Mouratidis M, Contoreggi C, Matochik JA, Kurian V, Cadet JL, Kimes AS, London ED, 2002. Decision-making in a risk-taking task: a PET study. Neuropsychopharmacology 26, 682–691.

- Ernst M, Kimes AS, London ED, Matochik JA, Eldreth D, Tata S, Contoreggi C, Leff M, Bolla K, 2003. Neural substrates of decision making in adults with attention deficit hyperactivity disorder. Am J Psychiatry 160, 1061–1070.

- Feenstra MG, Teske G, Botterblom MH, De Bruin JP, 1999. Dopamine and noradrenaline release in the prefrontal cortex of rats during classical aversive and appetitive conditioning to a contextual stimulus: interference by novelty effects. Neurosci Lett 272, 179–182.

- Fellows LK, Farah MJ, 2005. Different underlying impairments in decision-making following ventromedial and dorsolateral frontal lobe damage in humans. Cereb Cortex 15, 58–63.

- Fernando AB, Urcelay GP, Mar AC, Dickinson TA, Robbins TW, 2014. The role of the nucleus accumbens shell in the mediation of the reinforcing properties of a safety signal in free-operant avoidance: dopamine-dependent inhibitory effects of d-amphetamine. Neuropsychopharmacology 39, 1420–1430.

- Fitoussi A, Le Moine C, De Deurwaerdere P, Laqui M, Rivalan M, Cador M, Dellu-Hagedorn F, 2015. Prefronto-subcortical imbalance characterizes poor decision-making: neurochemical and neural functional evidences in rats. Brain Struct Funct 220, 3485–3496.

- Floresco SB, Block AE, Tse MT, 2008. Inactivation of the medial prefrontal cortex of the rat impairs strategy set-shifting, but not reversal learning, using a novel, automated procedure. Behav Brain Res 190, 85–96.

- Floresco SB, Magyar O, 2006. Mesocortical dopamine modulation of executive functions: beyond working memory. Psychopharmacology (Berl) 188, 567–585.

- Floresco SB, Magyar O, Ghods-Sharifi S, Vexelman C, Tse MT, 2006. Multiple dopamine receptor subtypes in the medial prefrontal cortex of the rat regulate set-shifting. Neuropsychopharmacology 31, 297–309.

- Fukui H, Murai T, Fukuyama H, Hayashi T, Hanakawa T, 2005. Functional activity related to risk anticipation during performance of the Iowa Gambling Task. Neuroimage 24, 253–259.

- Gowin JL, Mackey S, Paulus MP, 2013. Altered risk-related processing in substance users: Imbalance of pain and gain. Drug Alcohol Depend 132, 13–21.

- Heal DJ, Smith SL, Gosden J, Nutt DJ, 2013. Amphetamine, past and present--a pharmacological and clinical perspective. J Psychopharmacol 27, 479–496.

- Hikida T, Kimura K, Wada N, Funabiki K, Nakanishi S, 2010. Distinct roles of synaptic transmission in direct and indirect striatal pathways to reward and aversive behavior. Neuron 66, 896–907.

- Hikida T, Yawata S, Yamaguchi T, Danjo T, Sasaoka T, Wang Y, Nakanishi S, 2013. Pathway-specific modulation of nucleus accumbens in reward and aversive behavior via selective transmitter receptors. Proc Natl Acad Sci U S A 110, 342–347.

- Hitri A, Venable D, Nguyen HQ, Casanova MF, Kleinman JE, Wyatt RJ, 1991. Characteristics of [3H]GBR 12935 binding in the human and rat frontal cortex. J Neurochem 56, 1663–1672.

- Jenni NL, Larkin JD, Floresco SB, 2017. Prefrontal Dopamine D1 and D2 Receptors Regulate Dissociable Aspects of Decision Making via Distinct Ventral Striatal and Amygdalar Circuits. J Neurosci 37, 6200–6213.

- Kaye WH, Wierenga CE, Bailer UF, Simmons AN, Bischoff-Grethe A, 2013. Nothing tastes as good as skinny feels: the neurobiology of anorexia nervosa. Trends Neurosci 36, 110–120.

- Kerstetter KA, Ballis MA, Duffin-Lutgen S, Carr AE, Behrens AM, Kippin TE, 2012. Sex differences in selecting between food and cocaine reinforcement are mediated by estrogen. Neuropsychopharmacology 37, 2605–2614.

- Killcross S, Coutureau E, 2003. Coordination of actions and habits in the medial prefrontal cortex of rats. Cereb Cortex 13, 400–408.

- Laskowski CS, Williams RJ, Martens KM, Gruber AJ, Fisher KG, Euston DR, 2016. The role of the medial prefrontal cortex in updating reward value and avoiding perseveration. Behav Brain Res 306, 52–63.

- Latagliata EC, Patrono E, Puglisi-Allegra S, Ventura R, 2010. Food seeking in spite of harmful consequences is under prefrontal cortical noradrenergic control. BMC Neurosci 11, 15.

- Lawrence NS, Jollant F, O'Daly O, Zelaya F, Phillips ML, 2009. Distinct roles of prefrontal cortical subregions in the Iowa Gambling Task. Cereb Cortex 19, 1134–1143.

- Lichtenberg NT, Kashtelyan V, Burton AC, Bissonette GB, Roesch MR, 2014. Nucleus accumbens core lesions enhance two-way active avoidance. Neuroscience 258, 340–346.

- Lin CH, Chiu YC, Cheng CM, Hsieh JC, 2008. Brain maps of Iowa gambling task. BMC Neurosci 9, 72.

- Linnet J, Moller A, Peterson E, Gjedde A, Doudet D, 2011. Inverse association between dopaminergic neurotransmission and Iowa Gambling Task performance in pathological gamblers and healthy controls. Scand J Psychol 52, 28–34.

- Loos M, Pattij T, Janssen MC, Counotte DS, Schoffelmeer AN, Smit AB, Spijker S, van Gaalen MM, 2010. Dopamine receptor D1/D5 gene expression in the medial prefrontal cortex predicts impulsive choice in rats. Cereb Cortex 20, 1064–1070.

- Manes F, Sahakian B, Clark L, Rogers R, Antoun N, Aitken M, Robbins T, 2002. Decision-making processes following damage to the prefrontal cortex. Brain 125, 624–639.

- McGaughy J, Ross RS, Eichenbaum H, 2008. Noradrenergic, but not cholinergic, deafferentation of prefrontal cortex impairs attentional set-shifting. Neuroscience 153, 63–71.

- Mingote S, de Bruin JP, Feenstra MG, 2004. Noradrenaline and dopamine efflux in the prefrontal cortex in relation to appetitive classical conditioning. J Neurosci 24, 2475–2480.

- Mitchell MR, Weiss VG, Beas BS, Morgan D, Bizon JL, Setlow B, 2014. Adolescent risk taking, cocaine self-administration, and striatal dopamine signaling. Neuropsychopharmacology 39, 955–962.

- Moorman DE, James MH, McGlinchey EM, Aston-Jones G, 2015. Differential roles of medial prefrontal subregions in the regulation of drug seeking. Brain Res 1628, 130–146.

- Moron JA, Brockington A, Wise RA, Rocha BA, Hope BT, 2002. Dopamine uptake through the norepinephrine transporter in brain regions with low levels of the dopamine transporter: evidence from knock-out mouse lines. J Neurosci 22, 389–395.

- Oleson EB, Gentry RN, Chioma VC, Cheer JF, 2012. Subsecond dopamine release in the nucleus accumbens predicts conditioned punishment and its successful avoidance. J Neurosci 32, 14804–14808.

- Orsini CA, Mitchell MR, Heshmati SC, Shimp KG, Spurrell MS, Bizon JL, Setlow B, 2017. Effects of nucleus accumbens amphetamine administration on performance in a delay discounting task. Behav Brain Res 321, 130–136.