Introduction

The Accreditation Council for Graduate Medical Education (ACGME) established the Clinical Learning Environment Review (CLER) Program [1,2] to provide graduate medical education (GME) leaders and executive leaders of hospitals, medical centers, and other clinical settings with formative feedback in the 6 CLER Focus Areas [3]. This feedback is aimed at improving patient care while optimizing the clinical learning environment (CLE). This report details findings from the second set of CLER Site Visits, which the CLER Program conducted from March 31, 2015, to June 10, 2017.

The aggregated findings in this report reflect a mixed methods approach (ie, both quantitative and qualitative information gathering and analysis) that the CLER Program used to build a comprehensive base of evidence on how the nation's CLEs engage residents and fellows in the CLER Focus Areas.

In addition to findings from the second set of CLER visits, this report includes an initial look at changes in a selected set of measures in each of the CLER Focus Areas since the first set of CLER visits (2012–2015). This 2-point analysis highlights both progress and challenges in CLEs. These findings can enhance and extend understanding of the complex and dynamic nature of CLEs and help inform conversations on how to continually improve physician training to ensure high-quality patient care within these learning environments.

Selection of Clinical Learning Environments

In 2015, there were 725 ACGME-accredited Sponsoring Institutions (SIs) and nearly 1800 major participating sites, which are the hospitals, medical centers, ambulatory units, and other clinical settings where residents and fellows train. This report contains findings from 287 CLEs that had 3 or more ACGME-accredited core residency programs. These CLEs were affiliated with 287 SIs that collectively oversaw 9167 residency and fellowship programs (89.1% of all ACGME programs) and 111 455 residents and fellows (87.1% of all residents and fellows in ACGME-accredited programs).[a] Appendix A provides additional information on the general characteristics of these SIs (eg, type of SI, number of programs) compared with all ACGME-accredited SIs.

For SIs with 2 or more clinical sites that served as participating sites, resource limitations allowed the CLER Program to visit only 1 site. Site selection was based on 2 factors: (1) which CLE served the largest number of programs for that SI, and (2) whether both the designated institutional official (DIO) and the chief executive officer (CEO) were available at that CLE for the opening and exit interviews.
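The 2 selection factors can be read as a filter followed by a maximum. The sketch below assumes that interpretation (DIO and CEO availability as a hard requirement, then the largest number of programs served); the `Site` record and `select_site` function are hypothetical illustrations, not part of any CLER tooling, and the source does not state how ties were broken.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Site:
    """Hypothetical record for one participating site of an SI."""
    name: str
    programs_served: int
    dio_available: bool
    ceo_available: bool


def select_site(sites: List[Site]) -> Optional[Site]:
    """Pick 1 site per SI (assumed reading of the 2 selection factors).

    Sites where the DIO or CEO is unavailable are dropped first; among the
    rest, the site serving the most programs is chosen. max() keeps the first
    site on a tie, which is an arbitrary choice made only for this sketch.
    """
    eligible = [s for s in sites if s.dio_available and s.ceo_available]
    if not eligible:
        return None
    return max(eligible, key=lambda s: s.programs_served)
```

For example, a site serving 20 programs whose CEO is unavailable would lose to an eligible site serving 15.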

CLER Site Visit Protocol

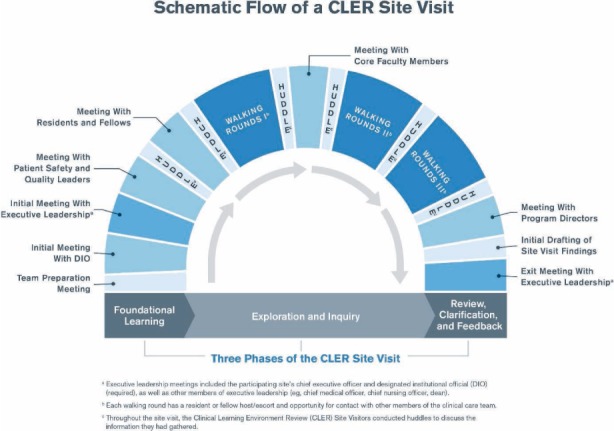

The CLER Site Visit protocol included a structured schedule of events for each visit (FIGURE 1).

FIGURE 1.

Schematic Flow of a Clinical Learning Environment Review (CLER) Site Visit

CLER Program staff notified clinical sites of their CLER Site Visit at least 10 days in advance. This relatively short notice was intended to maximize the likelihood of gathering real-time information from interviewees.

The number of site visitors and visit length varied according to the number of programs and residents and fellows at the site, with teams comprising 2 to 4 CLER Site Visitors and visits lasting 2 to 3 days. A full- or part-time salaried employee of the ACGME led each CLER Site Visit team. Additional team members included other CLER Site Visitors, ACGME staff, or trained volunteers from the GME community.

For each site visit, the CLER Site Visitors conducted group interviews in the same order: (1) an interview with the DIO[b]; (2) an initial group interview with the CEO, members of the executive team (eg, chief medical officer, chief nursing officer), the DIO, and a resident representative; (3) a short interview with patient safety and quality leaders; (4) a group interview with residents and fellows; (5) a group interview with faculty members; (6) a group interview with program directors; (7) a second interview with patient safety and quality leaders; and (8) an exit meeting with the CEO, members of the executive team, the DIO, and a resident representative. Before the site visit, each clinical site followed specific guidelines to provide the site visitors with a list of all individuals attending the group interviews. The CLER team conducted all interviews in a quiet location without interruption and ensured that the interviews did not exceed 90 minutes.
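Because every visit followed the same fixed sequence with a 90-minute cap per interview, the protocol can be encoded as data and a planned agenda checked against it. This is a hypothetical sketch: the labels in `INTERVIEW_ORDER` paraphrase the 8 steps listed above, and `check_schedule` is an illustrative helper, not an ACGME tool.

```python
# Hypothetical encoding of the fixed CLER interview sequence described above.
INTERVIEW_ORDER = [
    "DIO interview",
    "Initial meeting: CEO, executive team, DIO, resident representative",
    "Patient safety and quality leaders (short interview)",
    "Residents and fellows group interview",
    "Faculty members group interview",
    "Program directors group interview",
    "Patient safety and quality leaders (second interview)",
    "Exit meeting: CEO, executive team, DIO, resident representative",
]
MAX_INTERVIEW_MINUTES = 90


def check_schedule(planned: list[tuple[str, int]]) -> list[str]:
    """Return a list of problems with a planned (interview, minutes) agenda."""
    problems = []
    if [name for name, _ in planned] != INTERVIEW_ORDER:
        problems.append("interviews are missing or out of the required order")
    for name, minutes in planned:
        if minutes > MAX_INTERVIEW_MINUTES:
            problems.append(f"{name} exceeds {MAX_INTERVIEW_MINUTES} minutes")
    return problems
```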

The purpose of the initial meetings with executive and patient safety and quality leaders was to familiarize the CLER team with the CLE's terminology, culture, and current activities in the 6 CLER Focus Areas. This information helped inform subsequent interviews and observations during the CLER visit.

The resident and fellow group interviews comprised 6 to 32 peer-selected participants per session. Specifically, residents and fellows at the SI, excluding chief residents, voted for their peers to attend the group interviews. The participants broadly represented ACGME-accredited programs at the clinical site with proportionally more individuals from larger programs. The CLER team primarily interviewed residents and fellows who were in their postgraduate year 2 (PGY-2) or higher to ensure that interviewees had sufficient clinical experience to assess the learning environment. PGY-1 residents in a transitional year residency program were permitted to attend.
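The source says only that participants came proportionally more from larger programs; it does not say how seats were apportioned. One common way to make such an allocation explicit is the largest-remainder (Hamilton) method, sketched below as an assumption. The program names and sizes in the example are invented, and the function does not guarantee every program at least 1 seat.

```python
import math

MAX_SEATS = 32  # upper bound on participants per session, from the protocol


def allocate_seats(program_sizes: dict[str, int], total_seats: int) -> dict[str, int]:
    """Split session seats across programs in proportion to program size.

    Uses the largest-remainder (Hamilton) method: each program first gets the
    floor of its exact quota, and leftover seats go to the largest fractions.
    """
    if not 1 <= total_seats <= MAX_SEATS:
        raise ValueError(f"total_seats must be between 1 and {MAX_SEATS}")
    total = sum(program_sizes.values())
    if total == 0:
        raise ValueError("program sizes must sum to more than 0")
    quotas = {p: total_seats * n / total for p, n in program_sizes.items()}
    seats = {p: math.floor(q) for p, q in quotas.items()}
    leftover = total_seats - sum(seats.values())
    by_remainder = sorted(quotas, key=lambda p: quotas[p] - seats[p], reverse=True)
    for p in by_remainder[:leftover]:
        seats[p] += 1
    return seats
```

With hypothetical program sizes of 90, 40, 30, and 16 trainees and 20 seats, the floors give 10, 4, 3, and 1, and the 2 leftover seats go to the 2 largest fractional remainders.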

For the group interviews with faculty members and program directors, the CLER Program instructed the DIO to invite participants to attend the group interviews. In the faculty member group interviews, each session comprised 5 to 32 clinical faculty members who broadly represented the residency and fellowship programs at the CLE. Program directors were not permitted to attend the faculty member meetings. Group interviews with program directors comprised 3 to 32 leaders of ACGME-accredited core residency programs at each clinical site; sessions included associate program directors when program directors were not available.

For CLEs with more than 30 programs, 2 separate sets of interviews were conducted with residents and fellows, faculty members, and program directors, with no more than 32 participants attending an individual session.
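The session rule combines 2 thresholds: more than 30 programs triggers a second set of interviews, and no session may exceed 32 participants. The sketch below applies both to one interview group. Splitting invitees evenly between the 2 sets is an assumption made for illustration, and `plan_sessions` is a hypothetical name.

```python
MAX_PARTICIPANTS_PER_SESSION = 32
SECOND_SET_THRESHOLD = 30  # a second set is held when programs exceed this


def plan_sessions(num_programs: int, invitees: int) -> list[int]:
    """Return the participant count for each session of one interview group.

    Assumes invitees are divided as evenly as possible across sets.
    """
    n_sets = 2 if num_programs > SECOND_SET_THRESHOLD else 1
    base, extra = divmod(invitees, n_sets)
    sizes = [base + (1 if i < extra else 0) for i in range(n_sets)]
    if max(sizes) > MAX_PARTICIPANTS_PER_SESSION:
        raise ValueError("too many invitees for the permitted number of sessions")
    return sizes
```

A CLE with 45 programs and 51 resident and fellow invitees would, under this assumption, hold sessions of 26 and 25.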

Additionally, the CLER Site Visit team conducted a set of walking rounds, escorted by senior or chief residents and fellows, to observe various patient floors, units, and service areas. The CLER Program asked the DIO to select residents and fellows, preferably from a range of different specialties, to guide each CLER Site Visitor. Residents and fellows who participated in the resident and fellow group meetings or who served as the resident representative in the executive leadership meeting were not permitted to serve as escorts for the walking rounds.

The walking rounds enabled the CLER Site Visit team to gather feedback from physicians, nurses, and other health care professionals (eg, pharmacists, radiology technicians, social workers) in the clinical setting. Each CLER Site Visitor conducted at least 3 sets of walking rounds per clinical site, with each walking round lasting 90 minutes. For larger CLEs, site visitors conducted an additional fourth walking round lasting 60 minutes.
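The stated durations imply a simple time budget: 3 rounds of 90 minutes is 270 minutes per site visitor, or 330 minutes when the fourth 60-minute round is added. The sketch below scales this to a team of 2 to 4 visitors. The `large_cle` flag is a hypothetical input, since the source does not define what counted as a larger CLE.

```python
STANDARD_ROUNDS = 3
STANDARD_ROUND_MINUTES = 90
FOURTH_ROUND_MINUTES = 60  # additional round at larger CLEs


def walking_round_minutes(large_cle: bool) -> int:
    """Walking-round minutes for one site visitor."""
    total = STANDARD_ROUNDS * STANDARD_ROUND_MINUTES
    if large_cle:
        total += FOURTH_ROUND_MINUTES
    return total


def team_walking_round_hours(large_cle: bool, team_size: int) -> float:
    """Total walking-round hours across a team of 2 to 4 site visitors."""
    if not 2 <= team_size <= 4:
        raise ValueError("teams comprised 2 to 4 CLER Site Visitors")
    return team_size * walking_round_minutes(large_cle) / 60
```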

Throughout each visit, the CLER team conducted huddles to discuss the information they had gathered. Later in the visit, they held a team meeting to synthesize their findings, reach consensus, and prepare both an oral report and a draft of a written narrative report. At the exit meeting, the CLER team shared with executive leadership its oral report, which covered initial feedback on the 6 CLER Focus Areas. The written report, delivered approximately 6 to 8 weeks after the visit, addressed the same topics but with a more comprehensive and detailed set of observations. The intention of both the oral and written reports was to provide formative information that would help executive leadership assess their practices in the 6 CLER Focus Areas, inform resident and fellow training, and guide improvements in the CLE to ensure high-quality patient care.

Data Sources

Survey instruments. To conduct the group interviews, the CLER Site Visitors used structured questionnaires developed under the guidance of experts in GME and/or the 6 CLER Focus Areas. The questionnaires contained both closed- and open-ended questions. After expert review established initial content validity, the CLER Program field tested the instruments during 4 CLER Site Visits. At the conclusion of each of these visits, the items were refined as part of an iterative design process; with each iteration, the CLER Program reviewed and revised the items as necessary based on feedback from interviewees and interviewers.

Walking rounds. The CLER Program designed the walking rounds to facilitate random, impromptu interviews with residents, fellows, nurses, and other health care professionals in a range of clinical areas (eg, inpatient and outpatient areas, emergency departments) where residents and fellows trained, as determined by the SI's ACGME-accredited specialty and subspecialty programs.

The aims of the walking rounds were to (1) triangulate, confirm, and cross-check findings from the group interviews and (2) glean new information on residents' and fellows' experiences across the 6 CLER Focus Areas. The walking rounds provided important information that could either confirm or conflict with the information gathered in group interviews.

CLER Site Visit reports. The CLER Site Visitors synthesized findings from each visit in a written report, working from a formal template developed and refined in the early stages of the CLER Program. The template assisted the CLER Site Visit team in ensuring that each of the 6 CLER Focus Areas was fully addressed in the oral and written reports for each clinical site. The reports also included a brief description of the clinical site and any of its notable aspects. All members of the CLER Site Visit team reviewed and edited each report for accuracy and to achieve consensus on the findings.

Other sources of data. Several other sources of data were used to augment the site visit data, including the ACGME annual data reports[c] and the 2015 American Hospital Association (AHA) Annual Survey Database.[d] The ACGME reports provided information on the SIs, programs, and physicians in GME, including the number of ACGME-accredited programs, number of residents and fellows matriculated, and university affiliation. The AHA data offered CLE information, including type of ownership (eg, nongovernment, not-for-profit versus investor-owned, for-profit) and size, as measured by the number of staffed acute care beds.

Data Collection

Group interviews with an audience response system (ARS). CLER Site Visitors conducted group interviews with residents and fellows, faculty members, and program directors using a computerized ARS (Keypoint Interactive version 2.6.6, Innovision Inc, Commerce, MI) that allowed for anonymous answers to closed-ended questions. The ARS data were exported into a Microsoft Excel spreadsheet and then into a software package for statistical analysis. Site visitors documented responses to open-ended questions qualitatively. The 3 surveys—1 each for residents and fellows, faculty members, and program directors—consisted of 45, 35, and 36 closed-ended questions and 25, 25, and 27 open-ended questions, respectively.

Group interviews with no ARS. CLER Site Visitors documented all responses qualitatively for group interviews with the DIO (39 questions); with the CEO, members of the executive team, the DIO, and the resident representative (38 questions); and with patient safety and quality leadership (70 questions).

Data Analysis

Descriptive statistics. Descriptive statistics were used to summarize and describe the distribution and general characteristics of SIs, CLEs, and physician groups interviewed. For SIs, characteristics included SI type (eg, teaching hospital, medical school) and the number of ACGME-accredited residency and fellowship programs per institution. CLE characteristics included type of ownership (eg, nongovernment, not-for-profit), number of licensed beds, and total staff count. Demographic information included gender and medical specialty of physicians who participated in the group interviews.

Analysis of ARS data. Analyses were conducted at both the individual (eg, resident and fellow) and the CLE level. For the individual-level analyses, results are based on the total sample of individuals surveyed, presented as percentages. For CLE-level analyses, results show differences between CLEs after individual responses were aggregated at the CLE level and are presented as medians and interquartile ranges. These 2 levels of analysis provided a national overview of the state of CLE engagement in the 6 CLER Focus Areas and revealed how CLEs compared on these outcomes.
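The 2 levels of analysis can give different answers for the same data: a pooled percentage weights large CLEs more heavily, whereas a median of CLE-level percentages counts each CLE once. The sketch below, using invented yes/no answers to one closed-ended question, computes both. It uses Python's `statistics.quantiles` (default "exclusive" method) for the interquartile range; the report's SPSS percentile settings are not stated, so quartiles could differ slightly.

```python
from collections import defaultdict
from statistics import median, quantiles


def individual_level_pct(responses):
    """Pooled percentage of 'yes' answers across all respondents.

    responses: list of (cle_id, answered_yes) pairs, one per respondent.
    """
    return 100 * sum(yes for _, yes in responses) / len(responses)


def cle_level_summary(responses):
    """Aggregate to one percentage per CLE, then return (median, (Q1, Q3))."""
    by_cle = defaultdict(list)
    for cle_id, yes in responses:
        by_cle[cle_id].append(yes)
    cle_pcts = [100 * sum(answers) / len(answers) for answers in by_cle.values()]
    q1, _, q3 = quantiles(cle_pcts, n=4)  # default "exclusive" method
    return median(cle_pcts), (q1, q3)
```

With 4 hypothetical CLEs at 87.5% (8 respondents), 100% (2), 25% (4), and 0% (2), the pooled figure is 62.5% while the CLE-level median is 56.25%.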

Chi-square analysis was used to compare resident and fellow responses and to identify any relationships in responses by (1) gender; (2) residency year; and (3) specialty grouping. Chi-square analysis was also used to explore whether differences were associated with the following CLE characteristics: (1) regional location; (2) bed size; and (3) type of ownership. Categories in the annual AHA survey informed grouping of CLE-specific variables (eg, bed size). P values of .05 or less were considered statistically significant. All statistical analyses were conducted using SPSS Statistics version 22.0 (IBM Corp, Armonk, NY).
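For readers unfamiliar with the test, the sketch below computes a Pearson chi-square test of independence for a 2×2 table, such as gender by a yes/no answer. It is a textbook formulation without continuity correction, not the SPSS implementation, and the counts in the example are invented. With 1 degree of freedom, the upper-tail probability reduces to `erfc(sqrt(x / 2))`, so only the standard library is needed.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table.

    table: [[a, b], [c, d]] observed counts. No continuity correction.
    Returns (statistic, p_value) with 1 degree of freedom.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column must have a nonzero total")
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    # For 1 df, P(chi-square > x) equals P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))
```

For a hypothetical table of 60 of 100 women and 45 of 100 men answering "yes," the statistic is about 4.51 and P is about .034, which would meet the report's .05 threshold.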

Analysis of CLER Site Visit reports. Specific findings based on responses to non-ARS questions and interviews on walking rounds were systematically coded in NVivo qualitative data analysis software version 11 (QSR International Pty Ltd, Doncaster, Victoria, Australia) following the principles of content analysis. Three members of the CLER Program staff, trained in qualitative data analysis, generated a master codebook through an iterative process by (1) independently applying codes to the data; (2) peer-reviewing coding; (3) discussing coding discrepancies; and (4) reaching agreement on the codes through consensus. The results were recorded as frequency counts for further descriptive analysis. Overall percentages and percentages stratified by CLE region, bed size, and type of ownership are reported.
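Once reports are coded, overall and stratified percentages are simple frequency counts. The sketch below assumes the unit of analysis is the CLE (a code counts once if it appears anywhere in that CLE's report). The code labels such as `"safety_reporting"` and the region assignments are hypothetical; they do not come from the CLER codebook.

```python
from collections import Counter


def coded_percentages(cle_codes, cle_strata, code):
    """Percentage of CLEs whose report carries `code`, overall and by stratum.

    cle_codes: dict mapping CLE id -> set of codes applied to its report.
    cle_strata: dict mapping CLE id -> stratum label (eg, region or bed size).
    """
    overall = 100 * sum(code in codes for codes in cle_codes.values()) / len(cle_codes)
    totals = Counter(cle_strata[cle] for cle in cle_codes)
    hits = Counter(cle_strata[cle] for cle, codes in cle_codes.items() if code in codes)
    by_stratum = {stratum: 100 * hits[stratum] / n for stratum, n in totals.items()}
    return overall, by_stratum
```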

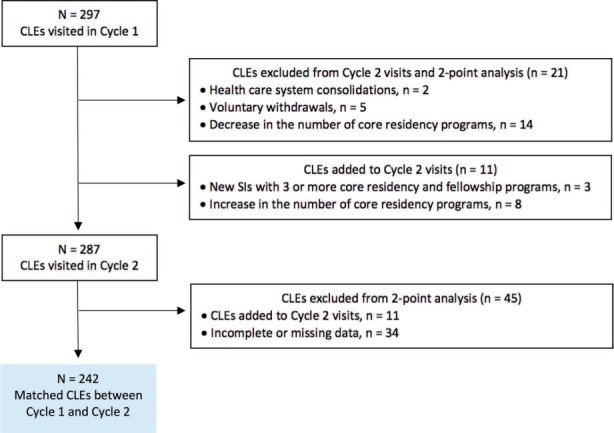

Two-point analysis of selected measures in the CLER Focus Areas. For this report, a selected set of measures in each of the CLER Focus Areas was examined to explore change over time for matched observations (ie, the same CLEs in both sets of visits). The final data set for this 2-point analysis comprised 242 CLEs; reasons for exclusion included health care system consolidations, changes in accreditation status (eg, voluntary withdrawal), changes in the number of core residency programs (eg, fewer than 3 core programs), and incomplete or missing data (see FIGURE 2). The measures examined for this section were the same in both sets of visits (ie, the questions remained constant between Cycle 1 and Cycle 2 of CLER visits).

FIGURE 2.

Matched Clinical Learning Environments (CLEs) Between Cycle 1 and Cycle 2 of Clinical Learning Environment Review Site Visits

Abbreviation: SI, Sponsoring Institution.
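The matching step amounts to a set intersection followed by removal of excluded CLEs. The sketch below assumes each CLE has a stable identifier across cycles, which the source does not describe. The identifiers and exclusion reasons in the example are invented; the actual counts appear in FIGURE 2.

```python
from collections import Counter


def match_cles(cycle1_ids, cycle2_ids, excluded):
    """CLE ids visited in both cycles, minus those excluded for any reason.

    excluded: dict mapping CLE id -> exclusion reason.
    """
    return sorted((set(cycle1_ids) & set(cycle2_ids)) - set(excluded))


def exclusion_summary(excluded):
    """Tally exclusion reasons, as in a flow diagram."""
    return Counter(excluded.values())
```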

The Kolmogorov-Smirnov test was used to test for normality in the data. Based on the results of the Kolmogorov-Smirnov test and tests of symmetry, nonparametric tests were employed in the 2-point analysis. The Wilcoxon signed rank test (and the sign test when the data were nonsymmetrical) was conducted to compare changes in median percentage based on responses to closed-ended questions (ie, ARS data) that were aggregated at the CLE level. Based on coded extractions from the CLER Site Visit reports, the McNemar and marginal homogeneity tests were conducted to compare changes in the qualitative findings.

P values of .05 or less were considered statistically significant. SPSS Statistics version 22.0 was used to conduct statistical analyses.
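The paired tests named above can be sketched with the standard library. The versions below are textbook formulations, not the SPSS implementation: the Wilcoxon signed rank test uses the tie-corrected normal approximation, while the sign test and McNemar test use exact binomial probabilities. The McNemar test on discordant pairs is the sign test applied to those pairs. SPSS defaults (eg, exact vs asymptotic P values) may differ. The marginal homogeneity test, which extends McNemar to more than 2 categories, is not shown. The example data are invented CLE-level percentages.

```python
import math
from collections import Counter


def _average_ranks(values):
    """Rank values 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # 0-based positions -> 1-based ranks
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def wilcoxon_signed_rank(before, after):
    """Two-sided Wilcoxon signed rank test (normal approximation, tie-corrected).

    Pairs with zero difference are dropped. Returns (W_plus, z, p).
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 1.0
    ranks = _average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    ties = Counter(abs(d) for d in diffs).values()
    var = n * (n + 1) * (2 * n + 1) / 24 - sum(t**3 - t for t in ties) / 48
    z = (w_plus - mean) / math.sqrt(var)
    return w_plus, z, math.erfc(abs(z) / math.sqrt(2))


def binomial_two_sided(k, n):
    """Exact two-sided binomial P value for k of n successes under p = 0.5."""
    tail = sum(math.comb(n, i) for i in range(min(k, n - k) + 1)) / 2**n
    return min(1.0, 2 * tail)


def sign_test(before, after):
    """Exact sign test on paired values; zero differences are dropped."""
    diffs = [a - b for b, a in zip(before, after) if a != b]
    return binomial_two_sided(sum(d > 0 for d in diffs), len(diffs))


def mcnemar_exact(b, c):
    """Exact McNemar test from discordant counts.

    b: CLEs with the coded finding in Cycle 1 only; c: in Cycle 2 only.
    """
    return binomial_two_sided(b, b + c)
```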

Development of overarching themes and findings in the CLER Focus Areas. The overarching themes and findings by CLER Focus Area were determined in 3 stages. First, through a key informant survey, the CLER Program staff asked each CLER Site Visitor to identify, based on their overall experiences and observations, the overarching themes (ie, broad, high-level observations) and the challenges and opportunities in each of the CLER Focus Areas. The CLER Program staff systematically analyzed the content of all responses to discern common themes and note salient concepts. The analysis was inductive: the themes emerged from the content of the responses.

Next, the CLER Site Visitors reviewed and commented on the results and offered additional findings by consensus. Based on feedback from the site visitors, the CLER Program staff revised the summary of results and presented them to the CLER Evaluation Committee. Lastly, the members of the CLER Evaluation Committee reviewed the results and developed a set of commentaries on the importance of the findings and their impact on patient care and physician training. The work of the committee was achieved by consensus.

Use of terms to summarize quantitative and qualitative results. For the purposes of this report, a specific set of descriptive terms is used to summarize quantitative results from both the ARS and the site visit reports: few (< 10%), some (10%–49%), most (50%–90%), and nearly all (> 90%).

The summary of qualitative data (ie, responses to open-ended questions during group interviews and conversations on walking rounds) is based on the site visitors' assessment of the relative magnitude of responses. The following set of terms is intended to approximate the quantitative terms above: uncommon or limited, occasionally, many, and generally.
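The descriptive terms work as a lookup from a percentage to a word. The sketch below encodes the quantitative bands and pairs each with the qualitative term listed in the same position. The published bands leave values between 49% and 50% (eg, 49.5%) unassigned; treating them as "some" is an assumption of this sketch.

```python
# Qualitative terms paired with the quantitative bands in the order listed.
QUALITATIVE_EQUIVALENT = {
    "few": "uncommon or limited",
    "some": "occasionally",
    "most": "many",
    "nearly all": "generally",
}


def describe_percentage(pct: float) -> str:
    """Map a percentage to the report's quantitative descriptive term.

    Assumption: values in the unspecified 49%-50% gap are labeled "some".
    """
    if not 0 <= pct <= 100:
        raise ValueError("percentage must be between 0 and 100")
    if pct < 10:
        return "few"
    if pct < 50:
        return "some"
    if pct <= 90:
        return "most"
    return "nearly all"
```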

Triangulation and Cross-Validation

Triangulation of the findings enhanced the overall accuracy of the conclusions. The findings were cross-validated for consistency and corroboration using multiple sources of complementary evidence and analytic techniques. For example, the ARS results were more meaningful when supplemented by critical qualitative information, and vice versa. When a finding was supported in multiple places, the multiple data sources provided greater insight and minimized the inadequacies of any individual source. This mixed methods approach provided a richer, more balanced, and more comprehensive perspective by allowing deeper dimensions of the data to emerge.

Limitations

As with any formative learning process, limitations to the CLER Program warrant consideration in using the information in this report. First, and perhaps most important, these findings do not suggest cause and effect.

Second, although this aggregated set of findings is designed to be highly representative, it is based on a series of sampled populations and thus may not be generalizable to all CLEs. As previously mentioned, the CLER teams interviewed a sample of residents, fellows, faculty members, program directors, and other clinical and administrative staff at each visit, aiming for broad representation across all programs (eg, proportionally more individuals from larger programs). Despite this goal, the sample may not reflect the entire population. Because the CLER Program is a formative assessment, this approach to sampling allowed for a broad and in-depth understanding of socially complex systems such as CLEs. The CLEs that were not included in this sample may have different experiences and consequently could yield different conclusions as CLER goes on to consider them in the future.

Footnotes

a Source: The ACGME annual data report. The ACGME annual data report contains the most recent data on the programs, institutions, and physicians in graduate medical education as reported by all medical residency SIs and ACGME-accredited programs.

b A formal interview with the DIO was added to the second set of visits to the CLEs to further explore the CLE's engagement in the CLER Focus Areas.

c The ACGME annual data report contains the most recent data on the programs, institutions, and physicians in GME as reported by all medical residency SIs and ACGME-accredited programs.

d The AHA Annual Survey Database includes data from the AHA Annual Survey of Hospitals, the AHA registration database, the US Census Bureau population data, and information from hospital accrediting bodies and other organizations.

References

1. Weiss KB, Bagian JP, Nasca TJ. The clinical learning environment: the foundation of graduate medical education. JAMA. 2013;309(16):1687–1688. doi:10.1001/jama.2013.1931

2. Weiss KB, Wagner R, Nasca TJ. Development, testing, and implementation of the ACGME Clinical Learning Environment Review (CLER) Program. J Grad Med Educ. 2012;4(3):396–398. doi:10.4300/JGME-04-03-31

3. Weiss KB, Bagian JP, Wagner R. CLER Pathways to Excellence: expectations for an optimal clinical learning environment [executive summary]. J Grad Med Educ. 2014;6(3):610–611. doi:10.4300/JGME-D-14-00348.1