Abstract

The digitalization of modern imaging has led radiologists to become very familiar with computers and their user interfaces (UI). New options for display and command offer expanded possibilities, but for usability reasons the mouse and keyboard remain the most commonly used. In this work, we review and discuss different UI and their possible application in radiology. We consider two-dimensional and three-dimensional imaging displays in the context of interventional radiology, and discuss the potential of touchscreens, kinetic sensors, eye detection, and augmented or virtual reality. We show that UI designed specifically for radiologists is key for the future use and adoption of such new interfaces. Next-generation UI must fulfil professional needs while taking contextual constraints into account.

Teaching Points

• The mouse and keyboard remain the most utilized user interfaces for radiologists.

• Touchscreens, holographic displays, kinetic sensors and eye tracking offer new possibilities for interaction.

• 3D and 2D imaging require specific user interfaces.

• Holographic display and augmented reality provide a third dimension to volume imaging.

• Good usability is essential for adoption of new user interfaces by radiologists.

Keywords: Computer user interface, Computed tomodensitometry, Virtual reality, Volume rendering, Interventional radiology

Introduction

The digitalization of modern imaging facilitates exchange and archiving, and enables the application of advanced image analysis solutions such as computer-aided detection (CAD) for identifying small lesions in several organs. The shift from analog to digital imaging should have increased radiologists’ efficiency by reducing the time needed for interpretation and image manipulation. This has not been clearly demonstrated, and one limiting factor may be the computer user interface. Recent advances have made touchscreens and new sensor devices for eye, kinetic or voice commands available at low cost, expanding the possibilities for human–computer interaction.

Terminology and concepts

The user interface (UI), also known as the human–machine interface, is defined as all the mechanisms (hardware or software) that supply information and commands to a user in order to accomplish a specific task within an interactive system. All machines (e.g. cars, phones, hair dryers) have a UI. A computer has a global UI provided by its operating system, such as Windows or Mac OS X. A web browser has its own UI, and so does each web site. In practice, the UI is the link between the machine and the operator. In computing, the UI includes inputs and outputs. Inputs communicate a user’s needs to the machine; the most common are the keyboard, mouse, touch interface and voice recognition. Recently developed sensor devices for eye and kinetic commands offer greater sophistication at low cost, enhancing the potential of human–computer interaction [1]. Outputs communicate the results of a computer’s calculations to the user. The most common output is a display screen, but sound and haptic feedback are sometimes used.

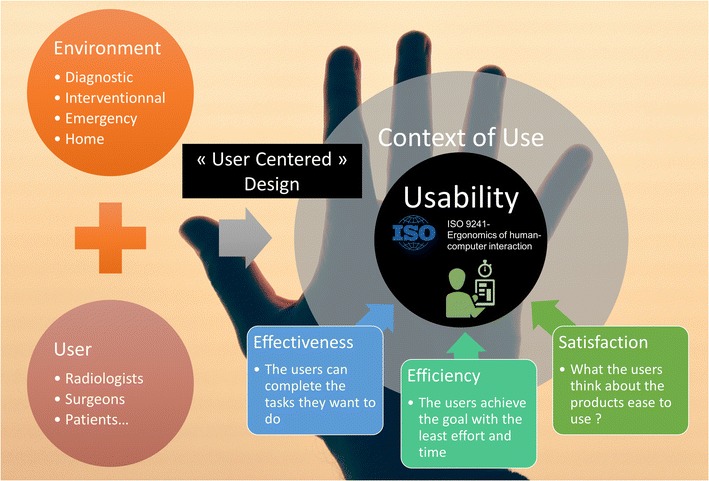

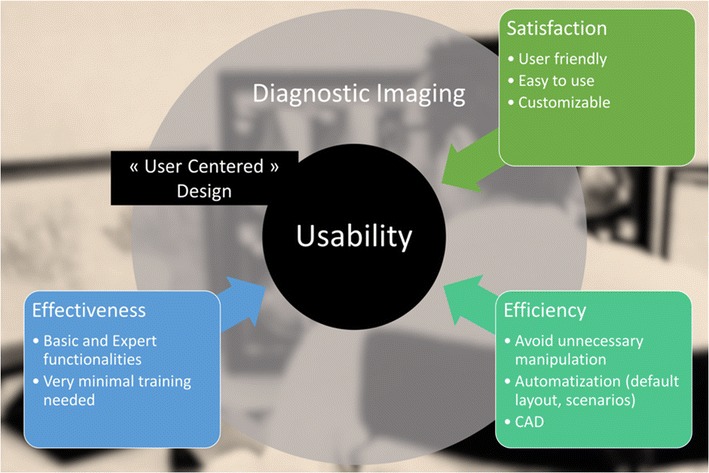

The user experience (UX) is the outcome of the UI. The objective is to provide the best usability in order to achieve a good UX. Usability depends on both the user and the specific context of use. For these reasons, UX is evaluated on the basis of both psychology and ergonomics. Ergonomic requirements are defined by the International Organization for Standardization (ISO) 9241 standard, which aims to ensure the operator’s comfort and productivity and to prevent stress and accidents (Fig. 1) [2]. Usability is high when efficacy (termed “effectiveness” in ISO 9241-11), efficiency and satisfaction are high. Efficacy is the user’s ability to complete the planned task. Efficiency is measured by the time to completion. Satisfaction is the user’s subjective evaluation of comfort of use. The UI therefore needs to be developed and designed specifically for its context of use. This “user-centred design” aims to maximize usability.
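In a usability test, the three indicators can be computed from logged sessions. The sketch below assumes a hypothetical log format in which each session records whether the task was completed, the time spent and a satisfaction rating on an assumed 1 to 5 scale; efficacy is taken as the completion rate, efficiency as the mean time among completed sessions, and satisfaction as the mean rating. The names and numbers are illustrative only.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    """One user attempting one task with a given UI (hypothetical log format)."""
    completed: bool    # was the planned task completed?
    seconds: float     # time spent on the task
    satisfaction: int  # subjective comfort rating, assumed 1 (poor) to 5 (excellent)


def usability_summary(sessions: list) -> dict:
    """Summarize the three usability indicators for a set of test sessions."""
    done = [s for s in sessions if s.completed]
    return {
        # Efficacy: proportion of sessions in which the task was completed.
        "efficacy": len(done) / len(sessions),
        # Efficiency: mean time to completion, over completed sessions only.
        "mean_time_s": mean(s.seconds for s in done) if done else float("nan"),
        # Satisfaction: mean subjective rating over all sessions.
        "satisfaction": mean(s.satisfaction for s in sessions),
    }


# Made-up sessions for one interface, for illustration only.
mouse_sessions = [
    Session(True, 42.0, 4),
    Session(True, 38.0, 5),
    Session(False, 90.0, 2),
    Session(True, 40.0, 4),
]
print(usability_summary(mouse_sessions))
```

Comparing two interfaces then amounts to running the same tasks on both and comparing the three summaries side by side, rather than collapsing them into a single score.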

Fig. 1.

The usability of a computer user interface (CUI) in radiology is evaluated by three indicators. The UI is designed to maximize the usability in a specified context of use. In medical imaging, the usage context can be defined as a user (the reader of the images) inside his environment

Computed radiology and specific needs

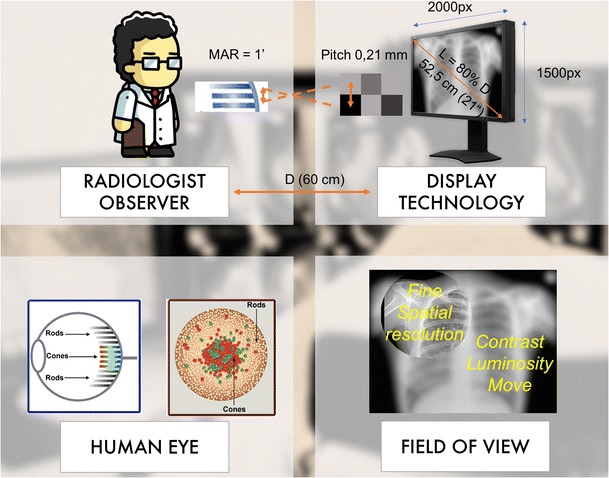

With regard to medical imaging, the UI is specifically constrained by human and contextual factors [3]. The interaction occurs between a human observer and a display technology (Fig. 2). With respect to human visual ability, the retina contains cones and rods. The cones are concentrated in the macula, at the centre of the field of vision, and provide the best spatial resolution; in the periphery of the visual field, the image becomes blurry. The physiological focal point of the eye is around 60 cm from the viewer, and at this distance the eye’s maximum power of discrimination is 0.21 mm. These values underlie the technical standards for the practice of radiology. The display diagonal should be about 80% of the viewing distance, which corresponds to a screen of approximately 50 cm (about 21 in.). For this size, the resolution providing a pixel pitch of 0.21 mm is 1500×2000 pixels [4]. Diagnosis is performed using “macular vision”, so the radiologist needs to explore the whole image by moving the eyes. This justifies the need for pan and zoom tools to study a particular region of interest.
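These figures fit together as simple geometry, which the sketch below checks (the function name is our own). At 60 cm, 80% gives a target diagonal of 48 cm; 2000×1500 pixels at a 0.21 mm pitch cover 420×315 mm, a diagonal of 525 mm (about 20.7 in., close to the quoted 21 in.) and 3 megapixels, as in Fig. 2; and one 0.21 mm pixel subtends roughly 1.2 arcminutes at 60 cm.

```python
import math

MM_PER_INCH = 25.4


def check_display(viewing_distance_mm: float, pitch_mm: float, cols: int, rows: int) -> dict:
    """Check a display geometry against the rules of thumb quoted in the text."""
    width_mm, height_mm = cols * pitch_mm, rows * pitch_mm  # active image area
    diagonal_mm = math.hypot(width_mm, height_mm)
    return {
        # Rule of thumb: diagonal of about 80% of the viewing distance.
        "target_diagonal_mm": 0.8 * viewing_distance_mm,
        "diagonal_mm": diagonal_mm,
        "diagonal_in": diagonal_mm / MM_PER_INCH,
        "megapixels": cols * rows / 1e6,
        # Angle subtended by one pixel at the viewing distance, in arcminutes.
        "pixel_arcmin": math.degrees(math.atan(pitch_mm / viewing_distance_mm)) * 60,
    }


print(check_display(viewing_distance_mm=600, pitch_mm=0.21, cols=2000, rows=1500))
```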

Fig. 2.

The human–machine interaction is constrained by human and contextual factors. Typically, the display is around 60 cm from the radiologist. At this distance, the field of view is around 50 cm (around 21 in.). Considering the maximum angular resolution of the eye, the display can have a maximum pixel pitch of 0.21 mm. This corresponds to a 3-megapixel screen. The human retina contains two types of photoreceptors, rods and cones. The cones are densely packed in a central yellow spot called the “macula” and provide maximum visual acuity. Visual examination of small detail involves focusing light from that detail onto the macula. Rods, which predominate in peripheral vision, are responsible for night vision and motion detection

With regard to contextual constraints, we differentiate diagnostic from interventional radiology. In diagnostic radiology, UX constraints relate mainly to managing the workflow for maximum productivity; one challenge is the integration of different commands and information into a common UI. In interventional radiology, the main limitation is maintaining operator sterility.

In this paper, we review and discuss different UI tools available for radiology over time. We also try to provide an outlook for the future and suggestions for improvements.

Review of the literature

Interfaces for 2D images

Imaging devices have largely provided two-dimensional images: X-ray planar imaging at the beginning of the twentieth century, and then computed tomography (CT) or magnetic resonance (MR) sliced imaging in the 1970s. Initially, the image was printed like a photo, and there was no machine interaction. Today, if we look at any interpretation room around the world, chances are good that we will find the same setup, combining a chair, a desk and one or more computers with a keyboard, a mouse and a screen. The picture of modern radiology can be understood through the evolution of the computer UI, as the image has become digital and is displayed on computers.

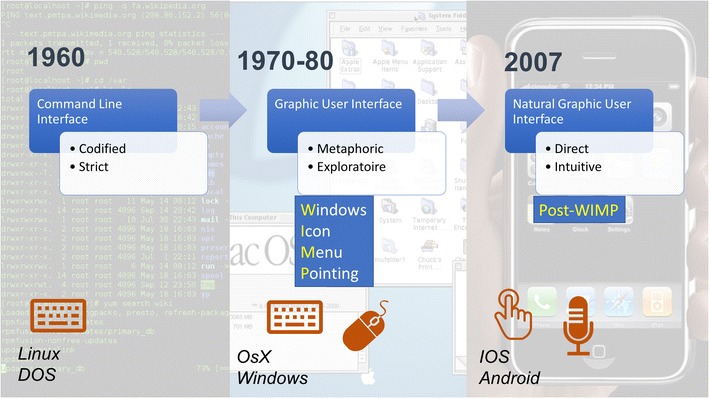

In the 1960s, the command-line interface (CLI) was the only way to communicate with computers. The keyboard was the only input, and a strict computer language had to be known to operate the system. In the mid-1960s, Douglas Engelbart invented the computer mouse. Building on this work, Xerox and then Apple (with the Macintosh) and Microsoft (with Windows) developed the graphical user interface (GUI), known as “WIMP” (windows, icons, menus and pointing device) [5], which vastly improved the user experience. This system made computers accessible to everyone with minimal skill. Today, the WIMP UI remains nearly unchanged, and it is the most commonly used UI for personal computers. In 2007, the post-WIMP era took off with the “natural user interface” (NUI), based on touchscreens and speech recognition, introduced by Apple iOS and followed by Google Android, and used mainly on tablet personal computers (PCs) and smartphones (Fig. 3) [6].

Fig. 3.

History of common computer user interfaces. The most common user interface (UI) is the graphical UI (GUI) used by operating systems (OS) of popular personal computers in the 1980s. It is designed with a mouse input to point to icons, menus and windows. Recently, new OS with specific interfaces for touchscreens have emerged, known as natural user interfaces (NUI)

Digital radiology and the current workstation were introduced during the WIMP era. Their specific UI was designed around a keyboard and a mouse, and this setup has remained in use for approximately 30 years. Resistance to change and the “chasm”, or delay, in the technology adoption curve explain the global stagnation of UI in the field of radiology [7].

However, is there really a better alternative to a mouse and a keyboard? Weiss et al. tried to answer this question by comparing five different setups of UI devices among six PACS users over a 2-week period [8]. The study did not include post-WIMP UI. The authors concluded that no single device was able to replace the keyboard and mouse pairing. Users also considered the use of both hands to be a good combination. However, the evaluation focused on image manipulation and did not consider the one-handed control required when the other hand holds the microphone for reporting.

The authors proposed an interesting efficacy marker for radiology UI: the ratio of “eyes-to-image” time to “eyes-to-interface-device” time, which should be as high as possible.
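This marker is straightforward to compute once gaze is recorded. A minimal sketch, assuming an eye tracker that samples at a fixed rate (so sample counts are proportional to time) and gaze samples already labelled by target; the labels and the function name are hypothetical.

```python
from collections import Counter


def eyes_to_image_ratio(gaze_targets: list) -> float:
    """Ratio of 'eyes-to-image' time to 'eyes-to-interface-device' time.

    Assumes one label per eye-tracker sample taken at a fixed rate, so sample
    counts are proportional to time. Hypothetical labels: "image" (gaze on the
    displayed image) and "device" (gaze on keyboard, mouse or other input
    device); any other label (e.g. the report) is ignored.
    """
    counts = Counter(gaze_targets)
    if counts["device"] == 0:
        return float("inf")  # the reader never had to look at the input device
    return counts["image"] / counts["device"]


# 100 samples: 90 on the image, 6 on the input device, 4 on the report.
samples = ["image"] * 90 + ["device"] * 6 + ["report"] * 4
print(eyes_to_image_ratio(samples))  # 15.0
```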

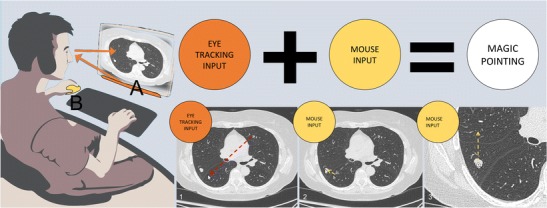

Some solutions for improving the WIMP UI have been tested. They combine eye-tracking technology with manual pointing [9]. The objective is to eliminate a large portion of cursor movement by warping the cursor to the area of eye gaze [10]. Manual pointing is still used for fine image manipulation and selection. Manual and gaze input cascaded (MAGIC) pointing can be adapted to computer operating systems using a single device (Fig. 4).
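The cascade can be sketched in a few lines. This loosely follows the “conservative” variant of MAGIC pointing, in which the cursor is warped to the gaze point only once the manual device starts moving; the threshold, names and pixel coordinates are illustrative assumptions, not a published implementation.

```python
import math
from dataclasses import dataclass


@dataclass
class Cursor:
    x: float
    y: float


def magic_step(cursor: Cursor, gaze: tuple, mouse_dx: float, mouse_dy: float,
               warp_threshold_px: float = 120.0) -> Cursor:
    """One update of a gaze-assisted pointer, loosely following conservative MAGIC.

    If the mouse starts moving while the cursor is far from the current gaze
    point, the cursor first jumps to the gaze point (coarse positioning by the
    eyes); the mouse movement is then applied for fine positioning. Without
    mouse movement the cursor never moves, so merely looking around does not
    disturb it. The 120-pixel threshold is an arbitrary illustrative value.
    """
    moving = mouse_dx != 0 or mouse_dy != 0
    far_from_gaze = math.dist((cursor.x, cursor.y), gaze) > warp_threshold_px
    if moving and far_from_gaze:
        cursor = Cursor(gaze[0], gaze[1])  # warp: skips most of the manual travel
    return Cursor(cursor.x + mouse_dx, cursor.y + mouse_dy)


# Cursor at the top left, radiologist looking at a nodule near (900, 600).
print(magic_step(Cursor(100, 100), gaze=(900, 600), mouse_dx=5, mouse_dy=-3))
```

Requiring manual actuation before warping trades a little speed for predictability: the cursor does not jump every time the reader glances across the image.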

Fig. 4.

Potential use of manual and gaze input cascaded (MAGIC) pointing for diagnostic radiology. The radiologist is examining lung parenchyma. When he focuses on an anomaly, the eye tracking device automatically moves the pointer around the region of interest. A large amount of mouse movement is eliminated (dotted arrow), and is limited to fine pointing and zooming. This cascade follows the observer's examination, making the interaction more natural

Regarding post-WIMP UI, and especially touchscreens, there is abundant literature, most of which deals with emergency settings, including non-radiologist readers [11]. Indeed, the tablet PC offers greater portability and teleradiology possibilities. Tewes et al. showed no diagnostic difference between high-resolution tablets and PACS reading for interpreting emergency CT scans [12]. However, touchscreens have not yet been widely adopted, even though full high-definition screens fulfil quality assurance guidelines. Users have found the windowing function less efficient than with the mouse, and have also noted screen degradation due to repeated manipulation. Technically, portable tablets are too small and their hardware is not powerful enough for image post-processing. However, cloud computing and streaming can provide processing power similar to that of a stand-alone workstation (Fig. 5), and the portability of tablets makes them well suited to teleradiology and non-radiology departments. One possible solution discussed recently is a hybrid type of professional tablet PC for imaging professionals [13]. The interface is designed to enable direct interaction on the screen using a stylus and a separate wheel device. Microsoft and Dell currently offer designs of this kind for photo and painting software. With a few UX-specific design modifications, these desktops could be used as radiology workstations (Fig. 6).
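Windowing, the function users found less efficient by touch, maps a range of stored values (the window, defined by a centre and a width) onto the grey levels of the display, so on a touchscreen it has to be driven by a continuous two-parameter gesture. Below is a minimal sketch of the linear window function as described in the DICOM standard, followed by a hypothetical drag-to-window mapping; the gain and the assignment of drag axes to centre and width are assumptions, and viewers differ on both.

```python
def window_level(value: float, center: float, width: float,
                 out_min: int = 0, out_max: int = 255) -> int:
    """Map a stored pixel value (e.g. CT Hounsfield units) to a display grey level.

    Implements the linear VOI LUT function described in DICOM PS3.3: values at
    or below the window map to out_min, values above it map to out_max, and
    values inside it are scaled linearly in between.
    """
    if width < 1:
        raise ValueError("window width must be at least 1")
    lower = center - 0.5 - (width - 1) / 2
    upper = center - 0.5 + (width - 1) / 2
    if value <= lower:
        return out_min
    if value > upper:
        return out_max
    fraction = (value - (center - 0.5)) / (width - 1) + 0.5
    return round(fraction * (out_max - out_min) + out_min)


def drag_to_window(center: float, width: float, dx_px: float, dy_px: float,
                   gain: float = 2.0) -> tuple:
    """Hypothetical gesture mapping: horizontal drag widens or narrows the
    window, vertical drag shifts its centre (level)."""
    return center + gain * dy_px, max(1.0, width + gain * dx_px)


# Soft-tissue window (centre 40 HU, width 400 HU), illustrative values.
for hu in (-1000, 0, 40, 200, 1000):
    print(hu, window_level(hu, center=40, width=400))
```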

Fig. 5.

Tactile version of the Anywhere viewer (Therapixel, France). Cloud computing allows powerful post-processing with online PACS

Fig. 6.

Potential use of Surface Studio® (Microsoft, Redmond, WA, USA). The screen is touch-sensitive (a). The stylus would be handy for direct measurement and annotation of the image (b). The wheel could be used to select functions such as windowing and to scroll through images (c). A properly designed software interface could replace the traditional mouse and computer workstation

Interventional radiology is a specific process with specific needs, the most important of which is maintaining the sterility of the operating site while manipulating the images. Ideally, the operator should be autonomous for at least basic features such as selecting series, reformatting, slicing, and pan and zoom manipulation. Some have proposed bringing a mouse or trackpad inside the sterile protected area, or even using a tablet PC to visualize images. However, the most efficient setup in these conditions is touchless interaction [14], which minimizes the risk of contamination.

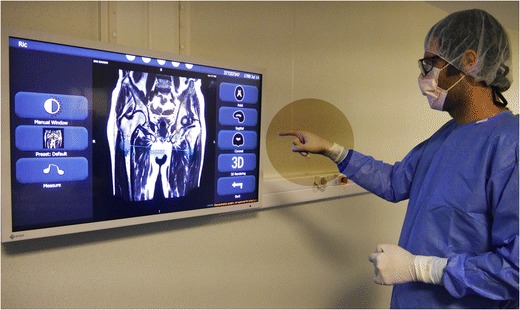

Iannessi et al. developed and tested a touchless UI for interventional radiology [15]. Unlike previous efforts, the authors redesigned a specific UI without a pointer, adapted to the kinetic recognition sensor (Fig. 7). The user experience was clearly improved compared with simple gesture control of the mouse pointer [16]. This is also a good example of environmental constraints and user-centred design: the amplitude of arm movements had to be reduced to a minimum, given the high risk of contamination inside a narrow operating room.
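Pointer-free selection of this kind can be illustrated with a sketch that cycles through tools on small lateral hand movements, as in Fig. 7. It is not the implementation of the viewer described above: the tool list, the 8 cm amplitude threshold, the window length and the class name are hypothetical, and hand positions are assumed to come from a depth sensor in metres.

```python
from collections import deque

TOOLS = ["scroll", "zoom", "pan", "window"]  # hypothetical tool ring


class LateralSwipeSelector:
    """Pointer-free tool selection from small lateral hand movements (sketch).

    Hand x-positions (metres) are buffered over a short window of samples; a
    net lateral displacement of at least `min_amplitude_m` within that window
    counts as one swipe and moves the selection one tool right or left. The
    threshold is kept small so that arm movements stay minimal.
    """

    def __init__(self, min_amplitude_m: float = 0.08, window: int = 10):
        self.min_amplitude_m = min_amplitude_m
        self.xs = deque(maxlen=window)  # most recent hand x-positions
        self.index = 0                  # currently selected tool

    def update(self, hand_x_m: float) -> str:
        self.xs.append(hand_x_m)
        shift = self.xs[-1] - self.xs[0]
        if abs(shift) >= self.min_amplitude_m:
            step = 1 if shift > 0 else -1
            self.index = (self.index + step) % len(TOOLS)
            self.xs.clear()  # require a fresh movement for the next swipe
        return TOOLS[self.index]


selector = LateralSwipeSelector()
for x in [0.00, 0.03, 0.06, 0.09]:  # a 9 cm movement to the right
    tool = selector.update(x)
print(tool)  # zoom
```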

Fig. 7.

Touchless image viewer for operating rooms, Fluid (Therapixel, Paris, France). The surgeon or the interventional radiologist interacts in sterile conditions with gloved hands. The viewer interface is redesigned without a pointer; the tools are selected with lateral movements

Interfaces for 3D images

Three-dimensional imaging volumes began to be routinely produced in the 1990s. They were originally acquired on MRI or reconstructed from multi-slice helical CT acquisitions; volume acquisition later became available from rotational angiography and ultrasound as well [17]. With the exception of basic X-ray studies, almost all medical imaging examinations now include 3D images.

Acquired volumes can now be printed in three dimensions, just as 2D images were printed on medical film [18]. Obviously, this option can be considered only for selected cases such as preoperative planning, prosthesis design or education. It is expensive and poorly suited to a productive workflow [19].

Some authors dispute the added value of 3D representations. Indeed, radiology explores the inside of organs, and except in rare situations, 2D slices give more information than a 3D representation of the surfaces.

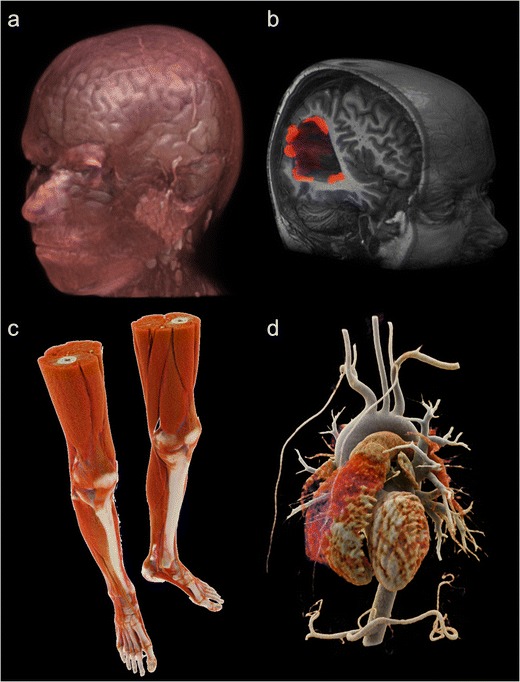

However, the mental transformation from 2D to 3D can be difficult. For example, when scoliosis needs to be understood and measured, a 3D UI appears to be more efficient [20]. Some orthopedic visualization, cardiovascular diagnoses and virtual colonoscopic evaluations are also improved by 3D UI [21–23]. For the same reasons, 3D volume representations are appreciated by surgeons and interventional radiologists, as they help to guide complex surgical or endovascular procedures [24–26]. Three-dimensional reconstruction of preoperative images has been reported to improve surgical success in laparoscopy [27]. Moreover, advanced volume rendering provides more realistic representations, making medical images a powerful tool for communicating with patients (Fig. 8) [28, 29].

Fig. 8.

Current possibility for 3D volume rendering (VR). VR post-processed from MRI acquisition (a, b). Hyper-realistic cinematic VR processed from CT scan acquisition (c, d)

However, both the use and the usability of such acquired volumes remain poor. There are many reasons for the non-use of 3D images, including the lack of full automation of the required post-processing. Exploitation of 3D volumes is also hindered by the lack of adapted display and command UI [30]. When 3D images are displayed on 2D screens, part of the added information provided by the 3D volume is lost [31].

With regard to inputs, touchless interfaces have been shown to be an interesting option. A kinetic sensor placed in front of a screen senses 3D directional movements, allowing the user to manipulate the virtual object with almost natural gestures [14, 32].
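One simple way to turn sensed hand movements into manipulation of a volume is to map horizontal and vertical hand displacement to rotations about the vertical and horizontal screen axes. The sketch below does this with plain 3×3 rotation matrices; the gain (a 30 cm sweep gives a half turn) and all names are assumptions for illustration, and real systems typically add smoothing and dead zones.

```python
import math

Matrix = list  # 3x3 matrix stored as a list of three rows


def rot_y(a: float) -> Matrix:
    """Rotation by angle a (radians) about the vertical y axis (yaw)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_x(a: float) -> Matrix:
    """Rotation by angle a (radians) about the horizontal x axis (pitch)."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def apply_hand_motion(orientation: Matrix, dx_m: float, dy_m: float,
                      gain_rad_per_m: float = math.pi / 0.3) -> Matrix:
    """Rotate the volume in response to a hand displacement (sketch).

    Horizontal hand motion turns the volume about the vertical axis, vertical
    motion tilts it about the horizontal axis. Pre-multiplying applies the
    increment in screen (viewer) coordinates rather than object coordinates.
    """
    delta = matmul(rot_y(gain_rad_per_m * dx_m), rot_x(gain_rad_per_m * dy_m))
    return matmul(delta, orientation)


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# A 15 cm sweep to the right turns the volume a quarter turn about the vertical axis.
orientation = apply_hand_motion(identity, dx_m=0.15, dy_m=0.0)
print([[round(v, 3) for v in row] for row in orientation])
```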

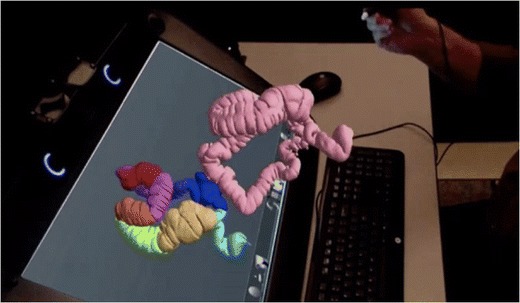

For displays, some authors have explored the use of holographic imaging in radiology, especially in orthopedic diagnostic imaging [23, 33, 34]. In 2015, the first holographic medical display received FDA approval. It includes 3D glasses and a stylus for manipulation (Fig. 9).
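Displays that rely on 3D glasses generally work stereoscopically, presenting a slightly different image to each eye. As a hedged illustration of the underlying geometry, not of the approved device itself, the horizontal screen parallax of a virtual point follows from similar triangles: P = IPD × (z − D) / z, where D is the screen distance and z the distance of the point from the eyes. The 63 mm interpupillary distance (IPD) is an assumed typical adult value, and the 600 mm default echoes the viewing distance discussed earlier.

```python
def screen_parallax_mm(point_distance_mm: float,
                       screen_distance_mm: float = 600.0,
                       ipd_mm: float = 63.0) -> float:
    """Horizontal offset between the left- and right-eye images of a virtual point.

    Similar-triangles model: eyes ipd_mm apart, screen at screen_distance_mm,
    virtual point straight ahead at point_distance_mm from the eyes.
    Zero parallax places the point on the screen plane, positive (uncrossed)
    parallax behind it, and negative (crossed) parallax in front of it.
    """
    if point_distance_mm <= 0:
        raise ValueError("the virtual point must be in front of the viewer")
    return ipd_mm * (point_distance_mm - screen_distance_mm) / point_distance_mm


for z in (400, 600, 1200):
    print(z, screen_parallax_mm(z))  # prints 400 -31.5, then 600 0.0, then 1200 31.5
```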

Fig. 9.

True 3D viewer (EchoPixel, Mountain View, CA, USA). This is the first holographic imaging viewer approved by the FDA as a tool for diagnosis as well as surgical planning. A stylus is used to interact with the displayed volume of the colon, providing an accurate three-dimensional representation of patient anatomy

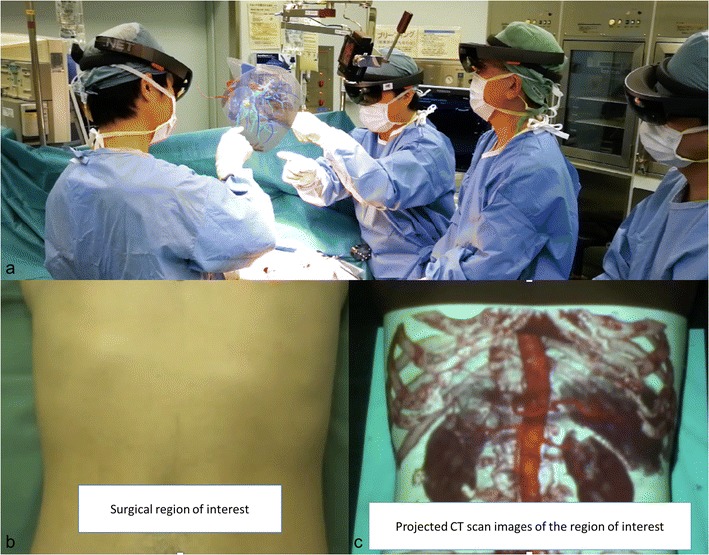

Another possibility for displaying 3D volumes is augmented reality. The principle is to display the images with real-time adjustment to the observer’s head movements. This can be done using a head-mounted device such as Google Glass, a handheld device such as a smartphone, or a fixed device. Nakata et al. studied recent developments in 3D medical image manipulation and demonstrated improved efficiency of such a UI compared with two-button mouse interaction [35]. Augmented reality and 3D images have also been used in surgical practice for image navigation [36, 37]. Conventional registration requires a specific acquisition, and the process is time-consuming [38]. Sugimoto et al. proposed a markerless surface registration, which may improve the user experience and encourage the use of 3D medical images (Fig. 10) [39]. The recent promotion of the HoloLens (Microsoft, Redmond, WA, USA), a mixed-reality headset with an efficient UI controlled by voice, gaze and gesture, may help to accelerate radiological applications of augmented reality, especially for surgery (Fig. 10) [40].
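Registration aligns the image volume with the patient so that the overlay lands in the right place. The core step can be illustrated with paired-point rigid registration, shown here in 2D for brevity (the 2D form of the least-squares Procrustes/Kabsch solution). This is not the markerless method cited above: correspondences are assumed known, whereas surface-based methods such as iterative closest point (ICP) repeat a step like this while re-estimating correspondences. Function names are our own.

```python
import math

Point = tuple  # (x, y)


def rigid_register_2d(source: list, target: list) -> tuple:
    """Least-squares rotation angle and translation mapping source onto target.

    Both point sets are centred on their centroids; the best rotation angle is
    atan2 of the summed cross and dot products of corresponding centred points,
    and the translation carries the rotated source centroid onto the target one.
    """
    n = len(source)
    sx, sy = sum(p[0] for p in source) / n, sum(p[1] for p in source) / n
    tx, ty = sum(q[0] for q in target) / n, sum(q[1] for q in target) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(source, target):
        px, py, qx, qy = px - sx, py - sy, qx - tx, qy - ty
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    return theta, (tx - (c * sx - s * sy), ty - (s * sx + c * sy))


def transform(p: Point, theta: float, t: Point) -> Point:
    """Apply rotation by theta followed by translation t to point p."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])


# Landmarks in image space, and the same landmarks as located on the patient.
image_pts = [(0.0, 0.0), (10.0, 0.0), (0.0, 5.0), (3.0, 7.0)]
patient_pts = [transform(p, 0.3, (4.0, -2.0)) for p in image_pts]
print(rigid_register_2d(image_pts, patient_pts))  # recovers about 0.3 rad and (4, -2)
```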

Fig. 10.

Augmented reality using 3D medical images is employed for planning and guiding surgical procedures. Surgeons wear a head-mounted optical device to create augmented reality (a). They can interact with the volume of the patient’s liver during surgery. Spatial augmented reality obtained by projection of the volume rendering on the patient (b, c). This see-through visualization helps in guiding surgery

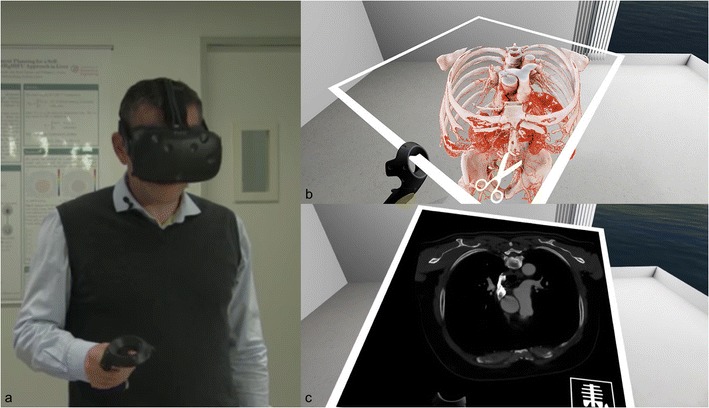

Another UI for displaying 3D medical images is virtual reality, which provides a completely immersive experience. The operator wears a device, and the environment is artificially created around him. Some authors have proposed including a 3D imaging volume inside this environment to give the user the opportunity to interact with it (Fig. 11).

Fig. 11.

Virtual reality headset with medical images. The user wears a head-mounted display that immerses him in the simulated environment. A hand device allows him to interact with the virtual objects (a). The user has a first-person view inside the environment and interacts with the 3D volume of the medical images (a). The volume can be sliced, like a CT scan, along any reformatted axis (b)
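Slicing a volume along an arbitrary axis, as in panel (b), is multiplanar reformation: each output pixel is sampled from the volume at a point on the chosen plane. A minimal sketch with nearest-neighbour sampling on plain nested lists; the function name, argument layout and zero fill outside the volume are assumptions, and real viewers often interpolate (e.g. trilinearly) and account for anisotropic voxel spacing.

```python
def oblique_slice(volume, origin, u, v, size):
    """Sample a plane through a volume (multiplanar reformation sketch).

    volume: nested lists indexed volume[z][y][x].
    origin: (x, y, z) of the slice's first pixel, in voxel coordinates.
    u, v:   step vectors between neighbouring output columns and rows
            (orthonormal for an undistorted reformat).
    size:   (rows, cols) of the output image.
    Nearest-neighbour sampling; points outside the volume read as 0.
    """
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    rows, cols = size
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            x = origin[0] + c * u[0] + r * v[0]
            y = origin[1] + c * u[1] + r * v[1]
            z = origin[2] + c * u[2] + r * v[2]
            i, j, k = round(x), round(y), round(z)  # nearest voxel
            inside = 0 <= i < nx and 0 <= j < ny and 0 <= k < nz
            row.append(volume[k][j][i] if inside else 0)
        out.append(row)
    return out


# Toy 3x3x3 volume whose voxel value encodes its position: 100*z + 10*y + x.
vol = [[[100 * z + 10 * y + x for x in range(3)] for y in range(3)] for z in range(3)]
sagittal = oblique_slice(vol, origin=(2, 0, 0), u=(0, 1, 0), v=(0, 0, 1), size=(3, 3))
print(sagittal)  # [[2, 12, 22], [102, 112, 122], [202, 212, 222]]
```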

Outlook for the future

We believe that UX and UI specifically designed for radiology are the key to the future use and adoption of new computer interface devices. A recent survey of 336 radiologists revealed that almost one-third were dissatisfied with their computing workflow and setup [41]. In addition to innovative hardware devices, efforts should focus on efficient software interfaces. In this discussion, we are mainly concerned with PACS software. Indeed, a powerful specific UI has to meet the radiologist’s needs, and these needs are demanding (Fig. 12).

Fig. 12.

User-centred design for diagnostic imaging viewer. To maximize usability, the design of the interface needs to integrate the user and environmental requirements and constraints. Automation and CAD have to facilitate use in order to minimize the time and effort needed for image analysis

Regarding image manipulation, Digital Imaging and Communications in Medicine (DICOM) viewers are typically built from two blocks: the browser for study and series selection, and the image viewer with manipulation tools. The key elements of the PACS UI architecture are hanging protocols and icons, image manipulation, computer-aided diagnosis and visualization features [42]. The goal of a hanging protocol is to present specific types of studies in a consistent manner and to reduce the number of manual image-ordering adjustments performed by the radiologist [43]. Automated scenarios should therefore be promoted in order to present the maximum information by default at initial presentation [44]. In addition, hanging protocols and icons should be user-friendly, intuitive and customizable. Visualization features can be incorporated into a stand-alone facility and integrated with the workstation. The software requires expert functionality that goes beyond simple scrolling, magnification and windowing.
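A hanging protocol can be thought of as a set of rules that match a study and then place its series into viewports. The sketch below matches on the values of the DICOM Modality and Body Part Examined attributes and assigns series by keywords found in Series Description. The rule table, the keyword matching and the 2×2 layout are hypothetical simplifications; the DICOM standard defines a much richer Hanging Protocol object.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Series:
    modality: str     # value of the DICOM Modality attribute, e.g. "CT"
    body_part: str    # value of Body Part Examined, e.g. "CHEST"
    description: str  # value of Series Description


# Hypothetical site rules: for each (modality, body part), the keyword that
# selects the series shown in each of four viewports (2x2 layout).
HANGING_RULES = {
    ("CT", "CHEST"): ["LUNG", "MEDIASTINUM", "CORONAL", "SAGITTAL"],
    ("MR", "BRAIN"): ["T1", "T2", "FLAIR", "DWI"],
}


def hang(series: List[Series]) -> List[Optional[Series]]:
    """Place the series of one study into viewports according to HANGING_RULES."""
    if not series:
        return []
    key = (series[0].modality.upper(), series[0].body_part.upper())
    keywords = HANGING_RULES.get(key)
    if keywords is None:
        return list(series[:4])  # no rule: fall back to acquisition order
    layout = []
    for keyword in keywords:
        # First series whose description contains the keyword; None leaves the viewport empty.
        found = next((s for s in series if keyword in s.description.upper()), None)
        layout.append(found)
    return layout


study = [
    Series("CT", "CHEST", "Sagittal MPR"),
    Series("CT", "CHEST", "Axial Lung 1mm"),
    Series("CT", "CHEST", "Axial Mediastinum 3mm"),
    Series("CT", "CHEST", "Coronal MPR"),
]
print([s.description for s in hang(study)])
```

Keeping the rules in a table rather than in code is one way to make them customizable per radiologist or per site, as the text recommends.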

For diagnostic imaging, in addition to the UI for image manipulation, radiologists need a UI for workflow management that includes medical records and worklists [42]. As teleradiology evolves, the concept of “SuperPACS” will probably drive the next UI towards an integrated imaging viewer [45]. Indeed, accessing medical information is tedious and labor-intensive when it is not integrated on the same interface and/or computer. The interface should aggregate all the information needed for the reporting task, and the same applies to reporting and scheduling systems. Improved automated voice recognition should enable real-time dictation while the radiologist fully interacts with the images [41].
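As a small illustration of aggregation, the sketch below merges worklists from several sites into one reading queue. The priority names, fields and ordering rule are hypothetical, and treating the accession number as a cross-site identifier is an assumption: in practice accession numbers are often local to an institution, so a shared identifier scheme would be needed.

```python
from dataclasses import dataclass

# Hypothetical priority ranking; lower rank is read first.
PRIORITY_RANK = {"STAT": 0, "URGENT": 1, "ROUTINE": 2}


@dataclass
class WorkItem:
    accession: str     # study identifier, assumed shared across systems
    site: str          # where the study was acquired
    priority: str      # one of the PRIORITY_RANK keys
    received_min: int  # arrival time, in minutes since midnight


def unified_worklist(*site_worklists: list) -> list:
    """Merge per-site worklists into a single reading queue (sketch).

    A study received twice (same accession from two systems) is kept once,
    from the first list that contains it; the queue is ordered by priority,
    then by arrival time.
    """
    unique = {}
    for worklist in site_worklists:
        for item in worklist:
            unique.setdefault(item.accession, item)
    return sorted(unique.values(), key=lambda w: (PRIORITY_RANK[w.priority], w.received_min))


hospital = [WorkItem("A1", "hospital", "ROUTINE", 480), WorkItem("A2", "hospital", "STAT", 530)]
clinic = [WorkItem("B1", "clinic", "URGENT", 500), WorkItem("A2", "clinic", "STAT", 530)]
print([w.accession for w in unified_worklist(hospital, clinic)])  # ['A2', 'B1', 'A1']
```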

As explained above, 3D manipulation and display must be promoted for the added value they provide. Even though the technology may be ready for robust use, there is an unavoidable delay in adoption by radiologists [7]. Radiologists, like any customers, are resistant to change, and the design of radiology-specific UI will hasten this revolution [14, 30].

Conclusion

Since the digitalization of radiology, UI for radiologists have followed the evolution of common interfaces in computer science. The mouse and the keyboard remain the most widely adopted UI.

We highlight the importance of designing a specific UI dedicated to the radiologist, in terms of both hardware and software, in order to make the experience more efficient, especially with the evolution of teleradiology and the need for increased productivity. Touch technology (finger or stylus) is promising, but requires careful customization to achieve good usability for radiologists.

Algorithmic advances will facilitate the uptake of 3D imaging through automated, detailed and informative volume rendering. However, specific UI will be needed for 3D image display, and augmented and virtual reality are promising candidates to fill this gap. With regard to image manipulation, contactless interfaces, which have already reached a good level of usability, appear well suited to interventional radiology units.

Acknowledgments

We thank Andrea Forneris and Dario Baldi for their assistance in providing post-processing volume and cinematic rendering images.

We thank Sergio Aguirre, CEO of EchoPixel, Inc., for his assistance in providing material for holographic medical images.

We thank Pr Philippe Cattin, Head of the Department of Biomedical Engineering at the University of Basel, for his support in providing images of VR use in medicine.

Compliance with ethical standards

Competing interest

Antoine Iannessi is co-founder of Therapixel SA, therapixel.com. Therapixel is a medical imaging company developing custom user interfaces dedicated to surgeons.

Olivier Clatz is CEO and co-founder of Therapixel SA, therapixel.com. Therapixel is a medical imaging company developing custom user interfaces dedicated to surgeons.

Maki Sugimoto is COO and co-founder of Holoeyes Inc., Holoeyes.jp. Holoeyes is a medical imaging company specialized in virtual reality and 3D imaging user interfaces.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Berman S, Stern H. Sensors for gesture recognition systems. IEEE Trans Syst Man Cybern Part C Appl Rev. 2012;42(3):277–290. doi: 10.1109/TSMCC.2011.2161077.

- 2. International Organization for Standardization. ISO 9241-11:1998 Ergonomic requirements for office work with visual display terminals (VDTs), part 11: guidance on usability. 1998.

- 3. Krupinski EA. Human factors and human-computer considerations in teleradiology and telepathology. Healthcare (Basel). 2014;2(1):94–114. doi: 10.3390/healthcare2010094.

- 4. Norweck JT, Seibert JA, Andriole KP, Clunie DA, Curran BH, Flynn MJ, et al. ACR-AAPM-SIIM technical standard for electronic practice of medical imaging. J Digit Imaging. 2013;26(1):38–52. doi: 10.1007/s10278-012-9522-2.

- 5. Engelbart DC, English WK. A research center for augmenting human intellect. Paper presented at the Proceedings of the December 9–11, 1968, fall joint computer conference, part I; San Francisco; 1968.

- 6. van Dam A. Post-WIMP user interfaces. Commun ACM. 1997;40(2):63–67. doi: 10.1145/253671.253708.

- 7. Kenn H, Bürgy C. “Are we crossing the chasm in wearable AR?”: 3rd workshop on wearable systems for industrial augmented reality applications. Paper presented at the Proceedings of the 2014 ACM International Symposium on Wearable Computers: Adjunct Program; Seattle; 2014.

- 8. Weiss DL, Siddiqui KM, Scopelliti J. Radiologist assessment of PACS user interface devices. J Am Coll Radiol. 2006;3(4):265–273. doi: 10.1016/j.jacr.2005.10.016.

- 9. Zhai S, Morimoto C, Ihde S. Manual and gaze input cascaded (MAGIC) pointing. Paper presented at the Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; Pittsburgh; 1999.

- 10. Fares R, Fang S, Komogortsev O. Can we beat the mouse with MAGIC? Paper presented at the Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; Paris; 2013.

- 11. John S, Poh AC, Lim TC, Chan EH, Chong le R. The iPad tablet computer for mobile on-call radiology diagnosis? Auditing discrepancy in CT and MRI reporting. J Digit Imaging. 2012;25(5):628–634. doi: 10.1007/s10278-012-9485-3.

- 12. Tewes S, Rodt T, Marquardt S, Evangelidou E, Wacker FK, von Falck C. Evaluation of the use of a tablet computer with a high-resolution display for interpreting emergency CT scans. Rofo. 2013;185(11):1063–1069. doi: 10.1055/s-0033-1350155.

- 13. Jo J, L'Yi S, Lee B, Seo J. TouchPivot: blending WIMP & post-WIMP interfaces for data exploration on tablet devices. Paper presented at the Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems; Denver; 2017.

- 14. Reinschluessel AV, Teuber J, Herrlich M, Bissel J, van Eikeren M, Ganser J, et al. Virtual reality for user-centered design and evaluation of touch-free interaction techniques for navigating medical images in the operating room. Paper presented at the Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems; Denver; 2017.

- 15. Iannessi A, Marcy PY, Clatz O, Fillard P, Ayache N. Touchless intra-operative display for interventional radiologist. Diagn Interv Imaging. 2014;95(3):333–337. doi: 10.1016/j.diii.2013.09.007.

- 16. Mewes A, Hensen B, Wacker F, Hansen C. Touchless interaction with software in interventional radiology and surgery: a systematic literature review. Int J Comput Assist Radiol Surg. 2017;12(2):291–305. doi: 10.1007/s11548-016-1480-6.

- 17. Huang Q, Zeng Z. A review on real-time 3D ultrasound imaging technology. Biomed Res Int. 2017;2017:6027029. doi: 10.1155/2017/6027029.

- 18. Bucking TM, Hill ER, Robertson JL, Maneas E, Plumb AA, Nikitichev DI. From medical imaging data to 3D printed anatomical models. PLoS One. 2017;12(5):e0178540. doi: 10.1371/journal.pone.0178540.

- 19. Kim GB, Lee S, Kim H, Yang DH, Kim YH, Kyung YS, et al. Three-dimensional printing: basic principles and applications in medicine and radiology. Korean J Radiol. 2016;17(2):182–197. doi: 10.3348/kjr.2016.17.2.182.

- 20. Mandalika VBH, Chernoglazov AI, Billinghurst M, et al. A hybrid 2D/3D user interface for radiological diagnosis. J Digit Imaging. 2017. doi: 10.1007/s10278-017-0002-6.

- 21. Rowe SP, Fritz J, Fishman EK. CT evaluation of musculoskeletal trauma: initial experience with cinematic rendering. Emerg Radiol. 2018;25(1):93–101. doi: 10.1007/s10140-017-1553-z.

- 22. Kmietowicz Z. NICE recommends 3D heart imaging for diagnosing heart disease. BMJ. 2016;354:i4662. doi: 10.1136/bmj.i4662.

- 23. Drijkoningen T, Knoter R, Coerkamp EG, Koning AH, Rhemrev SJ, Beeres FJ. Inter-observer agreement between 2-dimensional CT versus 3-dimensional I-space model in the diagnosis of occult scaphoid fractures. Arch Bone Joint Surg. 2016;4(4):343–347.

- 24. Schnetzke M, Fuchs J, Vetter SY, Beisemann N, Keil H, Grutzner PA, et al. Intraoperative 3D imaging in the treatment of elbow fractures: a retrospective analysis of indications, intraoperative revision rates, and implications in 36 cases. BMC Med Imaging. 2016;16:24. doi: 10.1186/s12880-016-0126-z.

- 25. Stengel D, Wich M, Ekkernkamp A, Spranger N. Intraoperative 3D imaging: diagnostic accuracy and therapeutic benefits. Unfallchirurg. 2016;119(10):835–842. doi: 10.1007/s00113-016-0245-6.

- 26. Nicolau S, Soler L, Mutter D, Marescaux J. Augmented reality in laparoscopic surgical oncology. Surg Oncol. 2011;20(3):189–201. doi: 10.1016/j.suronc.2011.07.002.

- 27. Simpfendorfer T, Li Z, Gasch C, Drosdzol F, Fangerau M, Muller M, et al. Three-dimensional reconstruction of preoperative imaging improves surgical success in laparoscopy. J Laparoendosc Adv Surg Tech A. 2017;27(2):181–185. doi: 10.1089/lap.2016.0424.

- 28. Dappa E, Higashigaito K, Fornaro J, Leschka S, Wildermuth S, Alkadhi H. Cinematic rendering: an alternative to volume rendering for 3D computed tomography imaging. Insights Imaging. 2016;7(6):849–856. doi: 10.1007/s13244-016-0518-1.

- 29. Kroes T, Post FH, Botha CP. Exposure render: an interactive photo-realistic volume rendering framework. PLoS One. 2012;7(7):e38586. doi: 10.1371/journal.pone.0038586.

- 30. Sundaramoorthy G, Higgins WE, Hoford J, Hoffman EA. Graphical user interface system for automatic 3-D medical image analysis. Paper presented at the Proceedings of the Fifth Annual IEEE Symposium on Computer-Based Medical Systems; 1992.

- 31. Venson JE, Albiero Berni JC, Edmilson da Silva Maia C, Marques da Silva AM, Cordeiro d'Ornellas M, Maciel A. A case-based study with radiologists performing diagnosis tasks in virtual reality. Stud Health Technol Inform. 2017;245:244–248.

- 32. Åkesson D, Mueller C. Using 3D direct manipulation for real-time structural design exploration. Comput-Aided Des Applic. 2018;15(1):1–10. doi: 10.1080/16864360.2017.1355087.

- 33. Di Segni R, Kajon G, Di Lella V, Mazzamurro G. Perspectives and limits of holographic applications in radiology (author's transl). Radiol Med. 1979;65(4):253–259.

- 34. Redman JD. Medical applications of holographic visual displays. J Sci Instrum. 1969;2(8):651–652. doi: 10.1088/0022-3735/2/8/311.

- 35. Nakata N, Suzuki N, Hattori A, Hirai N, Miyamoto Y, Fukuda K. Informatics in radiology: intuitive user interface for 3D image manipulation using augmented reality and a smartphone as a remote control. Radiographics. 2012;32(4):E169–E174. doi: 10.1148/rg.324115086.

- 36. Elmi-Terander A, Skulason H, Soderman M, Racadio J, Homan R, Babic D, et al. Surgical navigation technology based on augmented reality and integrated 3D intraoperative imaging: a spine cadaveric feasibility and accuracy study. Spine (Phila Pa 1976). 2016;41(21):E1303–E1311. doi: 10.1097/BRS.0000000000001830.

- 37. Grant EK, Olivieri LJ. The role of 3-D heart models in planning and executing interventional procedures. Can J Cardiol. 2017;33(9):1074–1081. doi: 10.1016/j.cjca.2017.02.009.

- 38. Guha D, Alotaibi NM, Nguyen N, Gupta S, McFaul C, Yang VXD. Augmented reality in neurosurgery: a review of current concepts and emerging applications. Can J Neurol Sci. 2017;44(3):235–245. doi: 10.1017/cjn.2016.443.

- 39. Sugimoto M, Yasuda H, Koda K, Suzuki M, Yamazaki M, Tezuka T, et al. Image overlay navigation by markerless surface registration in gastrointestinal, hepatobiliary and pancreatic surgery. J Hepatobiliary Pancreat Sci. 2010;17(5):629–636. doi: 10.1007/s00534-009-0199-y.

- 40. Pratt P, Ives M, Lawton G, Simmons J, Radev N, Spyropoulou L, et al. Through the HoloLens™ looking glass: augmented reality for extremity reconstruction surgery using 3D vascular models with perforating vessels. Eur Radiol Exp. 2018;2(1):2. doi: 10.1186/s41747-017-0033-2.

- 41. Sharma A, Wang K, Siegel E. Radiologist digital workspace use and preference: a survey-based study. J Digit Imaging. 2017;30(6):687–694. doi: 10.1007/s10278-017-9971-8.

- 42. Joshi V, Narra VR, Joshi K, Lee K, Melson D. PACS administrators' and radiologists' perspective on the importance of features for PACS selection. J Digit Imaging. 2014;27(4):486–495. doi: 10.1007/s10278-014-9682-3.

- 43. Luo H, Hao W, Foos DH, Cornelius CW. Automatic image hanging protocol for chest radiographs in PACS. IEEE Trans Inf Technol Biomed. 2006;10(2):302–311. doi: 10.1109/TITB.2005.859872.

- 44. Lindskold L, Wintell M, Aspelin P, Lundberg N. Simulation of radiology workflow and throughput. Radiol Manage. 2012;34(4):47–55.

- 45. Benjamin M, Aradi Y, Shreiber R. From shared data to sharing workflow: merging PACS and teleradiology. Eur J Radiol. 2010;73(1):3–9. doi: 10.1016/j.ejrad.2009.10.014.