Abstract

Rising US health care costs have led to the creation of alternative payment and care delivery models designed to maximize outcomes and/or minimize costs through changes in reimbursement and care delivery. The impact of these interventions in cancer care is unclear. We performed a systematic review to describe the landscape of new alternative payment and care delivery models in cancer care. In this systematic review, 22 alternative payment and/or care delivery models in cancer care were identified. These included six bundled payments, four accountable care organizations, nine patient-centered medical homes, and three other interventions. Only 12 interventions reported outcomes; the majority (n=7, 58%) improved value, four had no impact, and one reduced value, but only initially. Heterogeneity of outcomes precluded a meta-analysis. Despite growth in alternative payment and delivery models in cancer, there is limited evidence to evaluate their efficacy.

Keywords: Healthcare Delivery, Alternative Payment Models, Cancer, Cancer Delivery, Value-Based Payment Models, Value-Based Healthcare Delivery, Value-Based Cancer Care, Alternative Care Delivery Models, Affordable Care Act

INTRODUCTION

The annual cost of cancer care is particularly high and expected to approach $173 billion by 2020,1 which has important implications for patients as financial toxicity has been shown to disproportionately affect cancer patients2 and lead to increased mortality.3 In response to rising US healthcare costs, there has been an increased emphasis on optimizing value, defined as health outcomes achieved per dollar spent.4 Components of the Affordable Care Act (ACA) further catalyzed the move towards value, and the Centers for Medicare and Medicaid Services (CMS) intends to tie 50% of traditional fee-for-service (FFS) payments to value by 2018.5

The innovative care delivery and reimbursement models rolled out in the ACA have recently been applied to cancer care and are now reported in the literature. The most widespread alternative payment models are bundled payments and accountable care organizations (ACOs), each of which utilizes changes in reimbursement methods to incentivize improvements in care delivery. The most common new care delivery model is the patient-centered medical home (PCMH), which is centered upon enhanced care coordination to control costs of care. Despite representing a large portion of overall healthcare costs,1 cancer care has been largely excluded from initial experiments with alternative payment and care delivery models. For example, large analyses of ACOs have not addressed cancer6,7 and the largest federal bundled payment initiative excluded cancer care.8

We conducted a systematic review to identify alternative payment and delivery models that have been tested in cancer care since the passage of the ACA. The purpose of this review was to describe the landscape of alternative payment and delivery models in oncology, to evaluate the efficacy of these models on value in cancer care, and to critically examine the quality of available evidence.

METHODS

This systematic review adheres to the guidelines set by the PRISMA standards for systematic reviews of studies that evaluate healthcare interventions.9

Definitions

The ideal value-based intervention should be directed at improving the balance between the quality of health outcomes achieved and the costs to achieve those outcomes.4 Although we believe that many of the new payment and delivery models were intended to improve value, we felt that it would be difficult to confirm whether or not interventions met this strict definition. Therefore, we defined interventions as alternative payment models or care delivery models, which we deemed more accurate descriptions.

We defined alternative payment models as interventions that involved changing the financing of care delivery with an expressed goal of incentivizing improved clinical outcomes as well as reduced utilization and cost of care. Specifically, we defined a bundled payment model as an alternative payment model that replaces traditional FFS with a single payment to providers and/or facilities for all services a beneficiary receives during a pre-determined episode of care to treat a given condition, with or without performance accountability.10 We defined an ACO as an alternative payment model that involves a network of health care providers that share accountability for the cost, quality, and coordination of care to a population of patients who are enrolled in a traditional FFS program with opportunities for shared savings to incentivize improved care coordination.11 In contrast, we defined a care delivery model as an intervention that primarily focuses on changing the way care is delivered, instead of how care is reimbursed. Specifically, we defined a PCMH as a care delivery model that adheres to the standards set by the National Committee for Quality Assurance, which include having a physician-led care team to direct disease management, care coordination, a standardized evidence base, patient engagement and patient education with funding to support care enhancements in addition to traditional payment mechanisms.12 Lastly, we defined interventions in oncology as those that affect patients with a current diagnosis of cancer; interventions focused on screening only were excluded.

When reporting the impact of each intervention on value in cancer care, we defined value as health outcomes achieved over the costs to achieve those outcomes. For example, if costs or utilization were reduced with no reported effect on outcomes, the impact would be positive. Similarly, if costs or utilization were increased with no effect on outcomes, the impact would be negative.
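The rule in the preceding paragraph can be written out as a small decision function. This is only an illustrative sketch: the function name `classify_value_impact` and the string labels are hypothetical, and the handling of cases the text does not spell out (for example, outcomes improving at unchanged cost, or cost and outcomes moving in the same direction) is an assumption derived from treating value as outcomes per dollar spent.

```python
def classify_value_impact(cost_change: str, outcome_change: str = "none") -> str:
    """Classify an intervention's impact on value (outcomes per dollar spent).

    Both arguments take "decreased", "none", or "increased".
    cost_change covers cost or utilization; outcome_change covers health outcomes.
    """
    score = {"decreased": -1, "none": 0, "increased": 1}
    if cost_change not in score or outcome_change not in score:
        raise ValueError("changes must be 'decreased', 'none', or 'increased'")
    cost, outcome = score[cost_change], score[outcome_change]

    if cost == 0 and outcome == 0:
        return "neutral"
    if cost <= 0 and outcome >= 0:
        return "positive"       # cheaper and/or better, nothing worse
    if cost >= 0 and outcome <= 0:
        return "negative"       # costlier and/or worse, nothing better
    return "indeterminate"      # trade-off; not defined in the text (assumption)


# The two cases stated in the text:
print(classify_value_impact("decreased", "none"))   # positive
print(classify_value_impact("increased", "none"))   # negative
```

Returning "indeterminate" for trade-offs keeps the sketch from assigning a direction that the stated definition cannot support without magnitudes.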

Search Strategy

Because many current alternative payment and care delivery models were designed in response to components of the Affordable Care Act (ACA), the search was limited to articles in the English language that were published in or after 2010, the year the ACA was enacted. We systematically searched PubMed/MEDLINE, EMBASE, CINAHL, and Cochrane Central Register of Controlled Trials (January 2010 to March 2017) using terminology describing alternative payment and care delivery models in cancer care. We included these four databases to identify relevant publications from the peer-reviewed journal literature, non-peer-reviewed professional news publications, meeting abstracts, dissertations, and book sections. The final search strategy was developed using a combination of Medical Subject Headings (MeSH) and keyword terms in PubMed/MEDLINE (Table 1) and was adapted for use in the other databases. Additional relevant references were harvested from the bibliographies of eligible publications.

Table 1.

PubMed/MEDLINE Search Strategy

| 1 | (“patient centered medical home” OR PCMH OR “Patient-Centered Care/economics”[Mesh]) OR ((“Patient-Focused” OR “Patient-Centered”) AND (“Medical Home” OR “Medical Homes”)) |

| 2 | “Pay for Performance” OR “Pay for performance” OR “Paying for performance” OR “Reimbursement, Incentive”[Mesh] OR “Incentive Reimbursement” OR “Incentive Reimbursements” |

| 3 | “accountable care organizations”[Mesh] OR “accountable care organizations” OR “accountable care organization” |

| 4 | (“compensation” OR “payment” OR “payments” OR “purchasing” OR “reimbursement” OR “reimbursements” OR “spending” OR “funding”) AND (“ budget-based” OR “value-based” OR “Episode-Based” OR “bundle” OR “bundles” OR “bundled” OR “bundling” OR “Capitation Fee”[Mesh] OR “capitation” OR “capitated” OR “cap” OR “caps”) |

| 5 | “Alternative payment models” OR “Alternative payment model” OR “Value-Based Purchasing”[Mesh] OR “Value-Based Purchasing” |

| 6 | 1 OR 2 OR 3 OR 4 OR 5 |

| 7 | (“2010/01/01”[PDat]: “2017/12/31”[PDat]) |

| 8 | English[lang] |

| 9 | 6 AND 7 AND 8 |

Abbreviations: Mesh = Medical Subject Heading; PDat = Publication Date; lang = language

Study Selection

We used the EndNote X8 reference management software package to aggregate citations from all search results. All article titles and abstracts were reviewed by one investigator (EMA, SMS), and all full-text articles were reviewed in duplicate by two investigators (EMA, SMS; kappa 0.94) for the decision to include the article in the review. Studies were eligible for inclusion if they were original reports, described an alternative payment or care delivery model in cancer, and were conducted in the United States. We excluded studies of interventions addressing cancer screening alone, those that measured value in the absence of an intervention, those in which the intervention did not have the goal of decreasing costs or improving outcomes, and those utilizing theoretical models to estimate the potential impact of an alternative payment or care delivery model. When multiple publications reported redundant outcomes for a given intervention, we included only the most comprehensive article(s). However, if multiple publications reported unique outcomes for a given intervention, all were included.
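For readers unfamiliar with the agreement statistic reported for full-text review, the sketch below shows how Cohen's kappa is computed from two reviewers' include/exclude decisions. The source does not state which kappa variant was used, so Cohen's kappa is an assumption here, and the decision lists are hypothetical rather than the review's data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)

    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of each rater's marginal label frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)

    if expected == 1:
        return 1.0  # both raters used one identical label for every item
    return (observed - expected) / (1 - expected)


# Hypothetical full-text decisions from two reviewers (not the review's data).
reviewer_1 = ["include", "include", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude"]
print(cohens_kappa(reviewer_1, reviewer_2))  # 0.5
```

Kappa corrects raw agreement for the agreement expected by chance, which is why three matches out of four yield 0.5 rather than 0.75 in this toy example.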

Data Extraction

Data extraction was performed by two investigators (EMA,SMS) and checked by one additional investigator (SM), with differences resolved by discussion and consensus. We collected data on study and intervention characteristics including: first author, intervention name, payer or funder, clinical setting, cancer type(s), intervention type (bundled payment, ACO, PCMH, other), brief description of intervention, publication type, and whether the study was peer-reviewed or not peer-reviewed. For studies that reported results, we collected information on study design, the number of patients included in study and control populations, specific outcomes measured, and results reported. In terms of study design, we defined a pre-post study as a study comparing outcomes in a patient population before and after an intervention. We defined a concurrent comparator study as a study comparing outcomes in two patient groups over the same time horizon, where only one group was exposed to the intervention. Lastly, we defined a pre-post with concurrent control study as a study comparing differences between a study population and a control population over the same time period before and after an intervention.
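The three study designs defined above differ in which comparison they make. The following sketch expresses each as a simple contrast of group-level summary values. The function names and the numbers are hypothetical, and the included studies may have used more elaborate estimators (for example, regression adjustment) that this sketch does not attempt to reproduce.

```python
def pre_post(pre: float, post: float) -> float:
    """Change in one population from before to after the intervention."""
    return post - pre


def concurrent_comparator(intervention: float, control: float) -> float:
    """Difference between exposed and unexposed groups over the same period."""
    return intervention - control


def pre_post_with_concurrent_control(
    intervention_pre: float,
    intervention_post: float,
    control_pre: float,
    control_post: float,
) -> float:
    """Change in the intervention group minus the change in the control group."""
    return pre_post(intervention_pre, intervention_post) - pre_post(control_pre, control_post)


# Hypothetical mean ER visits per patient-year (illustrative values only).
effect = pre_post_with_concurrent_control(2.0, 1.5, 2.0, 1.75)
print(effect)  # -0.25
```

In this toy example the intervention group improved by 0.5 visits, but 0.25 of that change also occurred in the control group, so only the remaining 0.25 is attributed to the intervention.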

Assessment of Study Quality

In studies with results, we examined the quality of evidence using the Effective Public Health Practice Project Quality Assessment Tool for Quantitative Studies, which provides an overall methodological rating of strong, moderate or weak based on evaluation of six categories: selection bias, study design, confounders, blinding, data collection methods, and withdrawals and dropouts.13 Quality assessments were performed independently by two authors (EMA and SMS) with differences resolved by discussion and consensus.

Data Analysis

Given the heterogeneity of interventions, study populations, outcome definitions, and the large proportion of interventions without results, we did not pool outcomes in a meta-analysis.

RESULTS

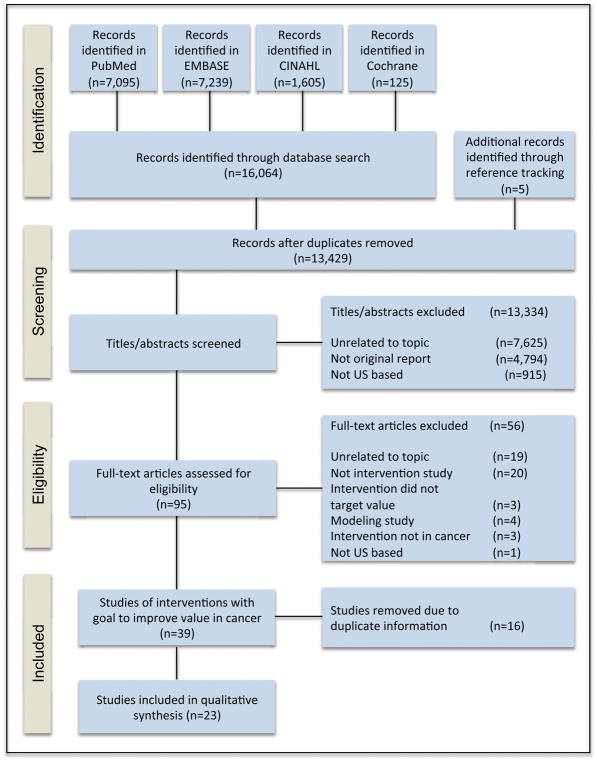

Figure 1 illustrates the selection process for studies included in this systematic review. Our search identified 16,064 articles, with an additional five identified through reference tracking. After removing duplicates, we screened 13,429 titles and abstracts for eligibility and excluded 13,334. Fifty-six articles were excluded during full-text review and 16 redundant articles were excluded during data abstraction in favor of more comprehensive reports describing the same intervention, leaving 23 studies describing 22 unique alternative payment or care delivery models in cancer included in the review.

Figure 1.

PRISMA diagram.
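The counts reported for the selection process can be checked with a few lines of arithmetic. The sketch below uses only the figures given in the text; the variable names are illustrative, and the number of duplicates removed is derived under the assumption that the five reference-tracked articles were pooled with the search results before de-duplication.

```python
# Counts as reported in the text.
identified_by_search = 16_064
identified_by_reference_tracking = 5
screened_titles_abstracts = 13_429
excluded_at_screening = 13_334
excluded_at_full_text = 56
excluded_as_redundant = 16

# Derived quantities (duplicates_removed relies on the pooling assumption above).
duplicates_removed = (
    identified_by_search + identified_by_reference_tracking - screened_titles_abstracts
)
full_text_reviewed = screened_titles_abstracts - excluded_at_screening
included_articles = full_text_reviewed - excluded_at_full_text - excluded_as_redundant

print(duplicates_removed)   # 2640
print(full_text_reviewed)   # 95
print(included_articles)    # 23
```

The final count of 23 matches the number of included articles reported in the text.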

Characteristics and results from the included studies are presented in Table 2. The 23 articles that met inclusion criteria described 22 unique interventions including six (27%) bundled payments,14–19 four (18%) ACOs,20–23 nine (41%) PCMHs,12,24–32 and three (14%) other alternative payment or care delivery models.33–35 The majority of interventions that reported practice setting were implemented in the community (n=16 of 21, 76%) and most that reported payer type involved private payers (n= 13 of 15, 87%). Approximately half (n=13, 57%) of the articles reported results regarding the impact on value.
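The proportions quoted in this paragraph follow from the stated counts and denominators, as the short check below illustrates. The helper name `pct` is hypothetical, and rounding to the nearest whole percent is an assumption about how the figures were reported.

```python
def pct(numerator: int, denominator: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


# Intervention types out of 22 unique interventions.
by_type = {"bundled payment": 6, "ACO": 4, "PCMH": 9, "other": 3}
assert sum(by_type.values()) == 22
for name, count in by_type.items():
    print(name, pct(count, 22))       # 27, 18, 41, 14

print("community setting", pct(16, 21))   # 76
print("private payer", pct(13, 15))       # 87
print("articles with results", pct(13, 23))  # 57
```

Note that the denominators differ: setting and payer percentages are based on the interventions that reported those characteristics, while the share with results is based on the 23 included articles.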

Table 2.

Summary of alternative payment and care delivery models in cancer care

| First Author | Intervention | Payer type | Study Design | Setting | Cancer studied | Peer-reviewed | Patients in intervention | Patients in control | Brief Description | Outcomes Measured | Results | Impact on Value |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Bundled Payments | ||||||||

| Bandell14 | BCBS of Florida Radical Prostatectomy Bundle | Private | NA | Community | Prostate | No | NA | BCBS partnered with Mobile Surgery International to develop prostatectomy bundle for early stage prostate cancer. | NA | NA | NA |

| Butcher15 | CTCA Bundle | Private | NA | Community | prostate, breast, lung and colorectal | No | NA | CTCA developed a bundle for the diagnosis and care planning of 4 cancer types. Episodes included medical, surgical and radiation oncologist consults, imaging and pathology services, and other consult services. | NA | NA | NA |

| Castelluci16 | BCBS of CA Breast Cancer Bundle | Private | NA | Community | Breast | No | NA | BCBS partnered with Valley Radiotherapy Associated Medical Group to develop a radiation bundle for early stage breast cancer. | NA | NA | NA |

| Feeley17 | MD Anderson Head and Neck Bundle | Private | NA | NCI designated cancer center | Head and neck | No | NA | UHC partnered with MD Anderson to develop a bundle covering comprehensive services to manage head and neck cancer. Episode length of 12 months. | NA | NA | NA |

| Loy18 | EBRT Bundle | Private | Pre-post with concurrent control | Community | Breast, Lung, Skin, Prostate cancer, and Bone metastases | Yes | 515 | 433 | Humana partnered with 21st Century Oncology practice to form a bundle for EBRT. Episode length of 90 days. | Guideline concordance, under- and over- treatment. | No change in guideline concordant care for breast, lung, and skin cancers. Improvements in guideline care for bone metastases and prostate cancer. Under-treatment declined from 4% to 0%. Over-treatment was unchanged. | Neutral |

| Newcomer19 | UHC Bundle Pilot | Private | Pre-post with concurrent control | Community | Breast, lung, colon | Yes | 810 | NA | UHC partnered with 5 medical oncology groups. Episodes included hospital care, hospice, case management, and eliminated % based chemotherapy drug incentive. Episode length of 4–12 months. | Hospitalizations, therapeutic radiology use, chemotherapy drug cost, net savings. | Decreased hospitalization and therapeutic radiology use. Increase in chemotherapy drug costs. Net savings of $33.36 million. | Increased |

| Accountable Care Organizations | ||||||||

| Butcher20 | Aetna Cancer-Specific ACO | Private | Pre-post for costs, Cohort study for utilization | Community | Breast, Lung, Colon | No | 184 | NA | Aetna partnered with Texas Oncology to form ACO pilot. | ER visits, hospitalizations, LOS, costs. | 40% fewer emergency room visits. 16.5% fewer hospitalizations. 36% fewer inpatient days. 10% lower 1-year costs, 12% lower 2-year costs. | Increased |

| Colla21 | PGPD Project | Medicare | Pre-post with concurrent control | Community | All | Yes | 104,766 | 727,969 | Medicare claims analysis of cancer outcomes after PGPD. | Hospitalizations, costs. | PGPD enrollment was associated with a $721 (3.9%) reduction in annual Medicare spend per patient, which was driven exclusively by decreased inpatient stays. | Increased |

| Herrel22 | MSSP ACO Analysis | Medicare | Pre-post with concurrent control | Mixed settings | Colorectal, bladder, esophageal, kidney, liver, ovarian, pancreatic, lung, or prostate cancer | Yes | 19,439 | 365,080 | Medicare claims analysis of surgical cancer outcomes after MSSP enrollment. | Mortality, readmissions, complications, LOS. | ACO enrollment had no effect on 30-day mortality, readmissions, complications, or LOS. | Neutral |

| Mehr23 | BCBS Cancer-Specific ACOs | Private | NA | NCI designated cancer center, Community | All | Yes | NA | BCBS partnered with Moffitt Cancer Center, Baptist Health South Florida and Advanced Medical Specialties, to form a cancer ACO. | NA | NA | NA |

| Patient Centered Medical Homes | ||||||||

| Bosserman24 | Wilshire Oncology Pilot | Private | NA | Community | All | Yes | NA | Wilshire Oncology partnered with CA Anthem Blue Cross WellPoint to form an oncology medical home pilot. | NA | NA | NA |

| Butcher25 | COA Initiative | Mixed | NA | Community | All | No | NA | COA approached CMMI and private payers about a medical home demonstration project. | NA | NA | NA |

| Goyal27 | North Carolina Medicaid PCMH | Medicaid | Concurrent comparator | Mixed settings | Breast | Yes | 308 | 262 | Medicaid claims analyses of breast cancer patients enrolled in the Community Care of North Carolina PCMH. | Outpatient or ER visits for chemo-related adverse event. | PCMH enrollment was not associated with differences in outpatient or ER visits for chemo-related adverse events. | Decreased |

| Kohler26 | North Carolina Medicaid PCMH | Medicaid | Concurrent comparator | Mixed settings | Breast | Yes | 3,857 person-months | 5,550 person-months | Medicaid claims analyses of breast cancer patients enrolled in the Community Care of North Carolina PCMH. | Outpatient service use, ER visits, hospitalizations, costs. | PCMH enrollment was associated with higher monthly outpatient service utilization, no effect on ER visits or hospitalizations, and a $429 per month increase in expenditures for the first 15 months. This effect was not significant at 24–36 months. | Decreased |

| Kuntz28 | Michigan Oncology Medical Home | Private | Pre-Post | Community | All | Yes | 85 | 485 | 4 oncology practices partnered with Priority Health to develop a model that reimbursed for chemo and treatment planning and advanced care planning consultation with a shared savings opportunity. | ER visits, hospitalizations, costs. | Reduced ER visits by 47% and hospitalizations by 68%. Estimated savings per patient was $550. | Increased |

| Reinke29 | CareFirst | Private | Pre-post with concurrent control | NA | NA | No | 8 pilot practices | 7 control practices | A Maryland/Washington BCBS plan that created a voluntary medical home model based on its pathway program. | Office visits, # cycles per patient, % receiving chemo, % receiving all-generic chemotherapy. | No effect on office visits, average number of cycles given per patient, proportion of patients receiving chemotherapy, and proportion of patients receiving all-generic chemotherapy. | Neutral |

| Shah30 | National PCMH | NA | Concurrent comparator | Mixed settings | All | No* | NA | NA | Survey analysis of PCMH access and outcomes for cancer survivors. | ER visits, prescribing behavior, outpatient visits, hospitalizations, costs. | Reduced ER visits and prescription medications for cancer survivors with access to PCMH. No effect on outpatient visits, hospitalizations, or total costs. | Neutral |

| Sprandio12 | CMOH | Private | Pre-post | Community | All | Yes | NA | NA | CMOH, a nine-oncologist physician practice, formed the first oncology medical home. | ER visits, hospitalizations, LOS, costs. | Reduced ER visits by 68%, hospitalizations by 51%, and LOS by 21%. Approximate aggregate savings of $1 million per physician per year to insurers. | Increased |

| Tirodkar31 | Patient-centered oncology practice standards pilot | NA | NA | Mixed | NA | Yes | NA | Pilot of incorporating patient-centered oncology practice standards at 5 oncology practices. Standards included improved communication, care coordination, and performance measurement. | NA | NA | NA |

| Waters32 | COME HOME | Medicare | Pre-Post | Community | Breast, Lung, or Colon | Yes | 16,353 | NA | Received a grant from CMMI to replicate and scale a patient centered cost-reduction program across 7 oncology practices. | ER visits, hospitalizations, patient satisfaction. | Participating sites reduced ER visits by 23% and hospitalizations by 28%. Patient satisfaction rates remained >90%. | Increased |

| Other Alternative Payment or Care Delivery Interventions | ||||||||

| Kwon33 | Glioma IPU | NA | NA | Community | Glioma, metastatic cancer to the brain, meningioma | No* | NA | Virtual IPU including neurosurgery, neuro oncology, radiation oncology, neuroradiology, and neuropathology. | NA | NA | NA |

| Sharma34 | The Diabetes Oncology Program | NA | Pre-post | Community | All | No× | 98 | 383 | Integrated care model including oncologist, endocrinologist, primary care physician, nursing staff, and diabetes educator. | ER visits, hospitalizations. | Reduced aggregate ER visits, observation stays, and hospitalizations by 3.4% in cancer patients with diabetes. | Increased |

| Timmins35 | Gynecologic Oncology capitated model | Private | NA | Community | Gynecologic cancers | No* | NA | Private practice group partnered with health plan to create a capitated annual payment per patient (excluding drug costs). | NA | NA | NA |

Abbreviations: BCBS = Blue Cross Blue Shield; NA = Not available; CTCA = Cancer Treatment Centers of America; CA = California; NCI = National Cancer Institute; UHC = United Healthcare; EBRT = External beam radiation therapy; ER = Emergency room; LOS = length of stay; PGPD = Physician Group Practice Demonstration; MSSP = Medicare Shared Savings Program; COA = Community Oncology Alliance; CMMI = Center for Medicare & Medicaid Innovation; CMOH = Consultants in Medical Oncology and Hematology; COME HOME = Community Oncology Medical Home; IPU = Integrated Practice Unit.

Abstract Only

Graduate School Dissertation

Quality of Evidence

Of the 23 articles included, 12 (52%) were published in the peer-reviewed literature and 13 (57%) published results and thus could be assessed for quality. Table 3 summarizes the quality of evidence assessments conducted on the 13 studies that reported outcomes. Over half (n=7, 54%) received a weak global rating and the remaining (n=6, 46%) received a moderate global rating. No studies evaluating alternative payment or care delivery models in cancer received a strong global rating.

Table 3.

Component and Global Assessment of Study Quality for Studies with Published Results

| First Author | Intervention | Selection Bias | Study Design | Confounders | Blinding | Data Collection | Withdrawals and Dropouts | Global Rating |

|---|---|---|---|---|---|---|---|---|

| Loy18 | EBRT Bundle | 3 | 2 | 3 | 2 | 2 | 3 | 3 |

| Newcomer19 | UHC Bundle Pilot | 2 | 2 | 1 | 2 | 2 | 3 | 2 |

| Butcher20 | Aetna Cancer-Specific ACO | 3 | 2 | 3 | 2 | 3 | 3 | 3 |

| Colla21 | PGPD Project | 2 | 2 | 1 | 2 | 1 | 3 | 2 |

| Herrel22 | MSSP ACO Analysis | 2 | 2 | 1 | 2 | 1 | 3 | 2 |

| Kohler26 | NC Medicaid PCMH | 2 | 2 | 1 | 2 | 1 | 3 | 2 |

| Goyal27 | NC Medicaid PCMH | 2 | 2 | 1 | 2 | 1 | 2 | 2 |

| Kuntz28 | Michigan Oncology Medical Home | 2 | 2 | 3 | 2 | 3 | 3 | 3 |

| Reinke29 | CareFirst | 3 | 2 | 3 | 2 | 3 | 3 | 3 |

| Shah30 | National PCMH* | 2 | 3 | 3 | 2 | 3 | 3 | 3 |

| Sprandio12 | CMOH | 2 | 2 | 3 | 2 | 3 | 3 | 3 |

| Waters32 | COME HOME | 2 | 2 | 3 | 2 | 3 | 3 | 3 |

| Sharma34 | The Diabetes Oncology Program× | 2 | 2 | 3 | 2 | 2 | 2 | 2 |

Scores of 1, 2, and 3 correspond to strong, moderate, and weak quality, respectively, using the Effective Public Health Practice Project Quality Assessment Tool for Quantitative Studies.13

Abbreviations: EBRT = external beam radiation therapy; UHC = United Healthcare; ACO = Accountable care organization; PGPD = Physician Group Practice Demonstration; MSSP = Medicare Shared Savings Program; NC = North Carolina; PCMH = Patient Centered Medical Home; CMOH = Consultants in Medical Oncology and Hematology; COME HOME = Community Oncology Medical Home.

Abstract Only

Graduate School Dissertation Thesis
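The summary of global ratings given in the text can be reproduced by tallying the last column of Table 3. The sketch below transcribes those global ratings as printed; the dictionary keys and variable names are illustrative only.

```python
from collections import Counter

# Global ratings transcribed from Table 3 (1 = strong, 2 = moderate, 3 = weak).
global_ratings = {
    "Loy18": 3, "Newcomer19": 2, "Butcher20": 3, "Colla21": 2,
    "Herrel22": 2, "Kohler26": 2, "Goyal27": 2, "Kuntz28": 3,
    "Reinke29": 3, "Shah30": 3, "Sprandio12": 3, "Waters32": 3,
    "Sharma34": 2,
}
labels = {1: "strong", 2: "moderate", 3: "weak"}

tally = Counter(labels[rating] for rating in global_ratings.values())
total = len(global_ratings)

for label in ("strong", "moderate", "weak"):
    share = round(100 * tally[label] / total)
    print(f"{label}: n={tally[label]} ({share}%)")
# strong: n=0 (0%)
# moderate: n=6 (46%)
# weak: n=7 (54%)
```

The tally agrees with the text: seven weak, six moderate, and no strong global ratings among the 13 studies with results.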

Bundled Payment Models

We identified six bundled payment interventions. Five were tested in the community setting; all six were developed in partnership with private payers. Notably, there was considerable heterogeneity in episode definition. The Blue Cross Blue Shield (BCBS) of Florida radical prostatectomy bundle for early stage prostate cancer was the only bundle that covered exclusively surgical therapy.14 Two bundles covered radiation therapy: the BCBS of California bundle covered radiation therapy for early stage breast cancer16 and the 21st Century Oncology external beam radiation therapy (EBRT) bundle covered radiation therapy for 13 common cancers with an episode duration of 90 days.18 The Cancer Treatment Centers of America (CTCA) bundle included services associated with diagnosis and care planning, but excluded treatment services.15 The United Healthcare (UHC) bundle included physician hospital care, hospice services, and case management for breast, colon, and lung cancer with chemotherapy medications reimbursed at average sales price and all other physician services reimbursed as fee-for-service.19 The MD Anderson head and neck bundle included all services associated with the treatment and management of newly diagnosed head and neck cancer for a duration of 12 months, making it the most comprehensive bundle in cancer to date.17

Only two bundled payment interventions reported results. The 21st Century Oncology EBRT bundle demonstrated improved guideline adherence for patients with bone metastases and prostate cancer but no effect for patients with breast, lung, and skin cancer.18 Costs were not assessed. The UHC bundle decreased utilization of inpatient hospitalization and therapeutic radiology and paradoxically increased chemotherapy drug costs.19 Overall, there was a net savings to UHC of $33.36 million.

Accountable Care Organizations

Four studies related to ACOs in cancer care. Two described cancer-specific ACOs20,23 and two described the impact of general ACO enrollment on the cost and quality of care in Medicare patients with a cancer diagnosis.21,22

The two cancer-specific ACOs were developed in partnership with private payers; one took place in the community setting20 and the other took place both at an NCI-designated cancer center and within the community.23 The BCBS of Florida cancer-specific ACO was developed in partnership with Baptist Health South Florida, Advanced Medical Specialties, and Moffitt Cancer Center and focused on “common cancers”.23 Results of this intervention have not yet been published. The Aetna cancer-specific ACO was developed in partnership with US Oncology’s Texas affiliate with results published after enrollment of 184 patients diagnosed with breast, lung, or colon cancers.20 A concurrent comparator study design was used to compare emergency room (ER) visits, inpatient admissions, and length of stay (LOS) between patients in the ACO and all other patients with newly diagnosed cancer covered by Aetna in Texas. A pre-post design was used to compare costs between ACO-enrolled patients and a cohort of “identical patients” in the year before ACO implementation. ACO enrollment led to approximately 40 percent fewer ER visits, 16.5 percent fewer inpatient admissions, and 36 percent fewer inpatient days for patients; overall costs were reduced by 10 percent after year one.20

The remaining two studies described the impact of patient enrollment in the Medicare Physician Group Practice Demonstration (PGPD) and the Medicare Shared Savings Program (MSSP) ACO on financial and clinical outcomes for patients with cancer, with mixed results.21,22 The Medicare PGPD was a precursor to modern ACOs and represented Medicare’s first physician pay-for-performance (P4P) initiative at the level of the physician group practice.37 The PGPD study used Medicare FFS claims to compare cancer patients enrolled in PGPD to local controls pre- and post-PGPD implementation.21 PGPD enrollment was associated with a Medicare spending reduction of $721 (3.9%) per cancer patient annually. Savings were derived entirely from reductions in inpatient stays and notably there were no reductions in cancer-specific procedures or chemotherapy administration. The MSSP ACO study used Medicare claims to compare costs and outcomes associated with major surgical oncology procedures for nine solid organ cancers performed at MSSP ACO hospitals versus controls before and after participation in MSSP ACOs.22 This study found no difference in perioperative outcomes including 30-day mortality, readmissions, complications, and inpatient LOS.

Oncology Patient Centered Medical Homes

We identified seven unique Oncology PCMHs and two studies that evaluated the impact of primary care focused PCMHs on cancer care. Five of the seven Oncology PCMHs took place in community settings and one in mixed settings; one PCMH did not report setting information. Four of the Oncology PCMHs contracted with private payers,12,24,28,29 one with Medicare,32 and one with mixed payers;25 one did not report payer type.31

There were five Oncology PCMHs with outcomes published, among which results were mixed. The CareFirst, Maryland-Washington BCBS Oncology PCMH had neutral effects on value, with no difference in office visits, average chemotherapy cycles per patient, proportion of patients receiving chemotherapy, or percentage of patients receiving all-generic chemotherapy in a difference-in-differences analysis of eight intervention practices compared to seven controls before and after the PCMH was formed.29

Three analyses showed improved value associated with enrollment in an Oncology PCMH.12,28,32 The Michigan Oncology Medical Home, a four oncology practice partnership with Priority Health, documented fewer ER visits, decreased hospitalizations, and an estimated savings of $550 per patient.28 The Consultants in Medical Oncology and Hematology (CMOH), a nine clinician oncology practice in Pennsylvania, developed the first Oncology PCMH and showed a reduction in annual ER visits, hospital admissions, and LOS in patients treated with chemotherapy, reporting an aggregate savings of $1 million per physician per year to insurers.12 The Community Oncology Medical Home (COME HOME) program based out of New Mexico Cancer Center and developed by Innovative Oncology Business Solutions received a three-year award from CMS to replicate and scale their Oncology medical home to seven oncology practices across the country. Participating sites reduced ER visits by 23 percent and inpatient hospitalizations by 28 percent.32 Patient satisfaction rates remained greater than 90 percent.

The two analyses of the impact of primary care-focused PCMHs on cancer care found that one reduced value27 while the other was value neutral.30 Specifically, an analysis of utilization and costs associated with breast cancer patients in the North Carolina (NC) Medicaid PCMH found reduced value through increased monthly outpatient service utilization, no effect on ER visits or hospitalizations, and a $429 per month increase in expenditure for the first 15 months.27 Notably, this increase in cost was no longer significant at 24–36 months post-diagnosis. In a national patient survey that assessed the impact of PCMH access on outcomes for cancer survivors, PCMH access was associated with fewer ER visits and less prescription medication use, with no effect on outpatient visits, admissions, or total costs.30

Other Alternative Payment or Care Delivery Models

We identified three alternative payment or care delivery models in cancer care that could not be categorized as bundles, ACOs, or medical homes.33–35 Examples included the Glioma integrated practice unit (IPU),33 a virtual IPU for the management of patients with glioma, the Diabetes Oncology Program,34 an integrated care model to enhance coordination of care for cancer patients with diabetes, and a year-long capitated payment for patients with gynecologic malignancies.35

DISCUSSION

To our knowledge, this is the most comprehensive review of alternative payment and delivery models in cancer to date. Our systematic review included both peer-reviewed and non-peer-reviewed literature and identified 22 interventions, including six bundled payments, two cancer-specific ACOs, two non-specialty-specific ACOs, seven Oncology PCMHs, two primary care-focused PCMHs, and three non-categorized alternative payment or care delivery models. Of the 12 interventions that reported results, the majority (n=7, 58%) improved value,12,19–21,28,32,34 four were value neutral,18,22,29,30 and one initially reduced value,27 though this effect was no longer significant at the two- to three-year time point.

Almost all interventions with published results focused solely on reducing healthcare utilization and/or costs of care (n=10 of 12, 83%). In contrast, only two studies investigated the impact of the intervention on measures of care quality, an important component of the value equation.4 The 21st Oncology EBRT bundle18 measured guideline concordance (a process measure), and Herrel et al. studied the impact of Medicare ACOs on 30-day mortality and surgical complications.22 No interventions included an analysis of patient-reported outcomes, the collection of which has recently been shown to improve cancer patient survival.36 The cancer community should not ignore the importance of measuring outcomes as a means to improve value and to ensure that quality of care is not sacrificed for the sake of reducing costs.

Our findings regarding clinical setting and payer/sponsor are also notable. The majority of interventions were conducted in the community setting (n=16 of 21, 76%). Since approximately three-quarters of oncologists practice in non-academic settings,38 the testing of alternative payment and care delivery models in the community promises broad applicability to the larger oncology care delivery system. Additionally, most interventions that reported payer involvement were performed through commercial insurance contracts (n=13 of 15, 87%). Our finding of published results for only about half (n=12 of 22, 55%) of interventions may be related to a tendency of commercial insurers to keep data proprietary as they compete in the commercial insurer marketplace. The dominance of commercially funded interventions may impede transparency and limit the ability of the broader cancer community to learn from practice innovations that improve value. Commercial insurers and community practices may also be more likely than academics to publish results only for interventions that improve value (i.e., publication bias), absent a scholarly mission and an incentive structure that rewards publishing for its own sake. In contrast, results of publicly funded interventions are easily accessed, both because Medicare claims data are publicly available and because the Centers for Medicare & Medicaid Services often publishes its own analyses of projects for public access. Similar transparency and reduced publication bias in the cancer world will be critical for enhancing our understanding of which models improve value. Moving forward, there is reason to expect growth in federal involvement in alternative payment and care delivery models in cancer, which may lead to increased transparency and reduction in publication bias. In 2016, CMS began piloting the voluntary Oncology Care Model (OCM), a FFS payment model with additional monthly per-member care coordination payments and pay-for-performance (P4P) incentives.39 Results of the OCM, when available, are likely to be informative.

We found that a large number of interventions were described only in the non-peer-reviewed literature (n=10, 45%) and that studies reporting results were generally of poor methodological quality. Selective publication in the non-peer-reviewed literature may limit dissemination of information about alternative payment and care delivery models to clinicians, who may have less exposure to business publications than to medical journals. Independent of peer review, the low quality of evaluations is also of concern. A lack of rigor in evaluations of alternative payment and care delivery models in cancer may imply to clinicians that understanding approaches to improving value is less important than understanding other aspects of care. Ultimately, amid calls for greater physician engagement in care value,40 clinician access to descriptions of alternative payment and care delivery models and to reliable, unbiased estimates of their impact is critical.

The optimal study design for assessing interventions to improve value is not clear. Agreement on a trusted approach to measure the impact of alternative payment and care delivery models41 will be critical for efforts to improve value in cancer care. Outside of randomized controlled trials, which are seldom feasible, the next most rigorous analytical technique to study the impact of a healthcare intervention is the pre-post study design with a concurrent control. This difference-in-differences technique reduces bias from concurrent changes in the healthcare system that affect both groups and are not attributable to the intervention itself, as well as from time-invariant differences between the groups. Large analyses of this type have been performed for alternative payment and care delivery models outside of cancer,6,7,42 and trust in the quality of these results has provoked meaningful debate about how the healthcare system should move forward to improve value.43,44 We found five examples of alternative payment and care delivery models in cancer that used variations of this technique18,19,21,22,29 and hope that use of this methodology increases alongside the implementation of government initiatives such as OCM.
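
As a minimal illustration of the pre-post design with a concurrent control described above, the following Python sketch computes the basic estimate: the change in the intervention group minus the change in the control group. The function name and all cost figures are hypothetical and chosen only for illustration; they are not drawn from any study included in this review, and a real evaluation would typically use regression with covariate adjustment rather than raw means.

```python
from statistics import mean


def difference_in_differences(treated_pre, treated_post, control_pre, control_post):
    """Return the change in the treated group minus the change in the control group."""
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical monthly cost per patient (USD); illustrative values only.
treated_pre = [1000, 1100, 900]    # intervention practices, before the model
treated_post = [950, 1050, 850]    # intervention practices, after the model
control_pre = [1000, 1200, 1100]   # comparison practices, same "before" period
control_post = [1100, 1300, 1200]  # comparison practices, same "after" period

estimate = difference_in_differences(
    treated_pre, treated_post, control_pre, control_post
)
print(estimate)
```

In this made-up example, mean cost fell by $50 in the intervention group while it rose by $100 in the control group, so the estimate is a $150 reduction per patient per month. A simple pre-post comparison without the control group would have reported only the $50 reduction.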

Our study has several important limitations. First, due to the heterogeneity of intervention type, populations, pilot maturity, and outcome measurement, we were unable to pool data or perform a meta-analysis to estimate the overall impact of alternative payment and care delivery models in cancer. For example, within bundled payment interventions, episode definitions varied widely from including comprehensive services17 to including only services related to diagnosis and care planning.15 Second, the lack of consistent reporting of results and the poor quality of the literature limit our ability to accurately estimate the effect of individual interventions. However, our inclusion of any type of system-level alternative payment or care delivery model with any degree of reporting allows us to present a complete picture of new payment and delivery models affecting the delivery of cancer care. In addition, our careful attention to study quality allows for transparency and full understanding of the spectrum of literature. Third, our review may have inadvertently excluded ongoing or past alternative payment or care delivery models in cancer. However, our search of multiple electronic databases and our inclusion of both peer-reviewed and non-peer-reviewed literature make it unlikely that we missed any large alternative payment or care delivery model experiments. Finally, we classified intervention types using pre-defined criteria independent of each intervention’s self-identified type. For this reason our categorizations may differ from the intervention’s self-description, but we offer consistency that will facilitate comparisons as the field moves forward.

Conclusion

Despite growth in alternative payment and delivery models in cancer care since passage of the ACA, our systematic review found that there is limited evidence to evaluate their efficacy. Reports of outcomes are often lacking and are of variable quality when available, so the overall efficacy of alternative payment and delivery models in cancer remains unclear. Moving forward, there is a need for both payers and providers to participate in alternative payment and care delivery models in cancer and to publish their impact using methodological rigor and standardized reporting of outcomes. Rigorous evaluations and increased transparency will allow for continued innovation in cancer care and the highest possible value for our patients and society.

Acknowledgments

Funding:

Dr. Korenstein’s work on this project was supported in part by a Cancer Center Support Grant from the National Cancer Institute to Memorial Sloan Kettering Cancer Center (P30 CA008748).

Footnotes

Conflicts of Interest:

There are no conflicts of interest to report.

References

- 1.Mariotto AB, Yabroff KR, Shao Y, et al. Projections of the cost of cancer care in the United States: 2010–2020. J Natl Cancer Inst. 2011;103:117–28. doi: 10.1093/jnci/djq495.

- 2.Zafar SY. Financial Toxicity of Cancer Care: It’s Time to Intervene. J Natl Cancer Inst. 2016;108(5):djv370. doi: 10.1093/jnci/djv370.

- 3.Ramsey SD, Bansal A, Fedorenko CR, et al. Financial Insolvency as a Risk Factor for Early Mortality Among Patients With Cancer. J Clin Oncol. 2016;34:980–6. doi: 10.1200/JCO.2015.64.6620.

- 4.Porter ME. What is value in health care? N Engl J Med. 2010;363:2477–81. doi: 10.1056/NEJMp1011024.

- 5.Burwell SM. Setting value-based payment goals--HHS efforts to improve U.S. health care. N Engl J Med. 2015;372:897–9. doi: 10.1056/NEJMp1500445.

- 6.McWilliams JM, Chernew ME, Landon BE, et al. Performance differences in year 1 of pioneer accountable care organizations. N Engl J Med. 2015;372:1927–36. doi: 10.1056/NEJMsa1414929.

- 7.McWilliams JM, Hatfield LA, Chernew ME, et al. Early Performance of Accountable Care Organizations in Medicare. N Engl J Med. 2016;374:2357–66. doi: 10.1056/NEJMsa1600142.

- 8.Dummit LA, Kahvecioglu D, Marrufo G, et al. Association Between Hospital Participation in a Medicare Bundled Payment Initiative and Payments and Quality Outcomes for Lower Extremity Joint Replacement Episodes. JAMA. 2016;316:1267–78. doi: 10.1001/jama.2016.12717.

- 9.Shamseer L, Moher D, Clarke M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015: elaboration and explanation. BMJ. 2015. doi: 10.1136/bmj.g7647.

- 10.Centers for Medicare and Medicaid Services. Bundled Payments for Care Improvement Initiative (BPCI): General Information. https://innovation.cms.gov/initiatives/bundled-payments/

- 11.Centers for Medicare & Medicaid Services. Accountable Care Organizations (ACO). https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/ACO/

- 12.Sprandio JD. Oncology patient-centered medical home. J Oncol Pract. 2012;8:47s–9s. doi: 10.1200/JOP.2012.000590.

- 13.Effective Public Health Practice Project. Quality Assessment Tool For Quantitative Studies. Hamilton, ON: Effective Public Health Practice Project; 1998. http://www.ephpp.ca/index.html.

- 14.Bandell B. Blue Cross signs first bundled payment deal with Miami firm. South Florida Business Journal. 2011.

- 15.Butcher L. Flat Fee for Cancer Treatment Plan Introduced. Oncology Times. 2011;33(23):14–16.

- 16.Castelluci M. Anthem Blue Cross of California pilots bundled payments on breast cancer treatment. Modern Healthcare. 2016.

- 17.Feeley TW, Spinks TE, Guzman A. Developing bundled reimbursement for cancer care. N Engl J Med Catalyst. 2016.

- 18.Loy BA, Shkedy CI, Powell AC, et al. Do Case Rates Affect Physicians’ Clinical Practice in Radiation Oncology?: An Observational Study. PLoS One. 2016;11:e0149449. doi: 10.1371/journal.pone.0149449.

- 19.Newcomer LN, Gould B, Page RD, et al. Changing physician incentives for affordable, quality cancer care: results of an episode payment model. J Oncol Pract. 2014;10:322–6. doi: 10.1200/JOP.2014.001488.

- 20.Butcher L. How Oncologists Are Bending the Cancer Cost Curve. Oncology Times. 2013;35:2.

- 21.Colla CH, Lewis VA, Gottlieb DJ, et al. Cancer spending and accountable care organizations: Evidence from the Physician Group Practice Demonstration. Healthc (Amst). 2013;1:100–107. doi: 10.1016/j.hjdsi.2013.05.005.

- 22.Herrel LA, Norton EC, Hawken SR, et al. Early impact of Medicare accountable care organizations on cancer surgery outcomes. Cancer. 2016;122:2739–46. doi: 10.1002/cncr.30111.

- 23.Mehr SR. Applying accountable care to oncology: developing an oncology ACO. Am J Manag Care. 2013;19(Spec No 3):E3.

- 24.Bosserman LD, Verrilli D, McNatt W. Partnering With a Payer to Develop a Value-Based Medical Home Pilot: A West Coast Practice’s Experience. J Oncol Pract. 2012;8:38s–40s. doi: 10.1200/JOP.2012.000591.

- 25.Butcher L. Inside look: first oncology medical home. Oncology Times. 2012;34:12.

- 26.Goyal RK, Wheeler SB, Kohler RE, et al. Health care utilization from chemotherapy-related adverse events among low-income breast cancer patients: effect of enrollment in a medical home program. N C Med J. 2014;75:231–8. doi: 10.18043/ncm.75.4.231.

- 27.Kohler RE, Goyal RK, Lich KH, et al. Association between medical home enrollment and health care utilization and costs among breast cancer patients in a state Medicaid program. Cancer. 2015;121:3975–81. doi: 10.1002/cncr.29596.

- 28.Kuntz G, Tozer JM, Snegosky J, et al. Michigan Oncology Medical Home Demonstration Project: first-year results. J Oncol Pract. 2014;10:294–7. doi: 10.1200/JOP.2013.001365.

- 29.Reinke T. Oncology medical home study examines physician payment models. Manag Care. 2014;23:9–10.

- 30.Shah AB, Li C. Access to a medical home and its impact on Healthcare Utilization and medical expenditure amongst Cancer survivors. Value in Health. 2015;18:A217.

- 31.Tirodkar MA, Acciavatti N, Roth LM, et al. Lessons From Early Implementation of a Patient-Centered Care Model in Oncology. J Oncol Pract. 2015;11:456–61. doi: 10.1200/JOP.2015.006072.

- 32.Waters TM, Webster JA, Stevens LA, et al. Community Oncology Medical Homes: Physician-Driven Change to Improve Patient Care and Reduce Costs. J Oncol Pract. 2015;11:462–7. doi: 10.1200/JOP.2015.005256.

- 33.Kwon I, Ahn C, White P, et al. Development of an integrated practice unit: Utilizing a lean approach to impact value of care for brain tumor patients. Journal of Clinical Oncology. 2016;34.

- 34.Sharma J. Integrated care of the diabetic-oncology patient. DeSales University, ProQuest Dissertations Publishing; 2013.

- 35.Timmins P, Panneton K. A new model for provider/insurer relations in gyn oncology. Gynecologic Oncology. 2013;130:e96–e97.

- 36.Basch E, Deal AM, Dueck AC, et al. Overall survival results of a trial assessing patient-reported outcomes for symptom monitoring during routine cancer treatment. JAMA. 2017;318(2):197–198. doi: 10.1001/jama.2017.7156.

- 37.Kautter J, Pope GC, Leung M, et al. Center for Medicare Services. Evaluation of Medicare Physician Group Practice Demonstration: Final Report. 2012 https://downloads.cms.gov/files/cmmi/medicare-demonstration/PhysicianGroupPracticeFinalReport.pdf.

- 38.The State of Cancer Care in America, 2017: A Report by the American Society of Clinical Oncology. J Oncol Pract. 2017;13:e353–e394. doi: 10.1200/JOP.2016.020743.

- 39.Centers for Medicare and Medicaid Services. Oncology Care Model. 2016 https://innovation.cms.gov/initiatives/oncology-care/

- 40.Greenberg J, Dudley J. Engaging Medical Specialists in Improving Health Care Value. Harv Bus Rev. 2015.

- 41.Mandelblatt JS, Ramsey SD, Lieu TA, Phelps CE. Evaluating frameworks that provide value measures for health care interventions. Value Health. 2017. doi: 10.1016/j.jval.2016.11.013.

- 42.McWilliams JM, Landon BE, Chernew ME. Changes in healthcare spending and quality for Medicare beneficiaries associated with a commercial ACO contract. JAMA. 2013. doi: 10.1001/jama.2013.276302.

- 43.Schulman KA, Richman DB. Reassessing ACOs and Healthcare Reform. JAMA. 2016;316(7):707–8. doi: 10.1001/jama.2016.10874.

- 44.Song Z, Fisher ES. The ACO experiment in infancy – looking back and looking forward. JAMA. 2016;316(7):705–6. doi: 10.1001/jama.2016.9958.