Significance

Partial differential equations (PDEs) are among the most ubiquitous tools used in modeling problems in nature. However, solving high-dimensional PDEs has been notoriously difficult due to the “curse of dimensionality.” This paper introduces a practical algorithm for solving nonlinear PDEs in very high (hundreds and potentially thousands of) dimensions. Numerical results suggest that the proposed algorithm is quite effective for a wide variety of problems, in terms of both accuracy and speed. We believe that this opens up a host of possibilities in economics, finance, operational research, and physics, by considering all participating agents, assets, resources, or particles together at the same time, instead of making ad hoc assumptions on their interrelationships.

Keywords: partial differential equations, backward stochastic differential equations, high dimension, deep learning, Feynman–Kac

Abstract

Developing algorithms for solving high-dimensional partial differential equations (PDEs) has been an exceedingly difficult task for a long time, due to the notoriously difficult problem known as the “curse of dimensionality.” This paper introduces a deep learning-based approach that can handle general high-dimensional parabolic PDEs. To this end, the PDEs are reformulated using backward stochastic differential equations and the gradient of the unknown solution is approximated by neural networks, very much in the spirit of deep reinforcement learning with the gradient acting as the policy function. Numerical results on examples including the nonlinear Black–Scholes equation, the Hamilton–Jacobi–Bellman equation, and the Allen–Cahn equation suggest that the proposed algorithm is quite effective in high dimensions, in terms of both accuracy and cost. This opens up possibilities in economics, finance, operational research, and physics, by considering all participating agents, assets, resources, or particles together at the same time, instead of making ad hoc assumptions on their interrelationships.

Partial differential equations (PDEs) are among the most ubiquitous tools used in modeling problems in nature. Some of the most important ones are naturally formulated as PDEs in high dimensions. Well-known examples include the following:

- i) The Schrödinger equation in the quantum many-body problem. In this case the dimensionality of the PDE is roughly three times the number of electrons or quantum particles in the system.

- ii) The nonlinear Black–Scholes equation for pricing financial derivatives, in which the dimensionality of the PDE is the number of underlying financial assets under consideration.

- iii) The Hamilton–Jacobi–Bellman equation in dynamic programming. In a game theory setting with multiple agents, the dimensionality goes up linearly with the number of agents. Similarly, in a resource allocation problem, the dimensionality goes up linearly with the number of devices and resources.

As elegant as these PDE models are, their practical use has proved to be very limited due to the curse of dimensionality (1): The computational cost for solving them goes up exponentially with the dimensionality.

Another area where the curse of dimensionality has been an essential obstacle is machine learning and data analysis, where the complexity of nonlinear regression models, for example, goes up exponentially with the dimensionality. In both cases the essential problem we face is how to represent or approximate a nonlinear function in high dimensions. The traditional approach, by building functions using polynomials, piecewise polynomials, wavelets, or other basis functions, is bound to run into the curse of dimensionality problem.

In recent years a new class of techniques, the deep neural network model, has shown remarkable success in artificial intelligence (e.g., refs. 2–6). The neural network is an old idea but recent experience has shown that deep networks with many layers seem to do a surprisingly good job in modeling complicated datasets. In terms of representing functions, the neural network model is compositional: It uses compositions of simple functions to approximate complicated ones. In contrast, the approach of classical approximation theory is usually additive. Mathematically, there are universal approximation theorems stating that a single hidden-layer neural network can approximate a wide class of functions on compact subsets (see, e.g., survey in ref. 7 and the references therein), even though we still lack a theoretical framework for explaining the seemingly unreasonable effectiveness of multilayer neural networks, which are widely used nowadays. Despite this, the practical success of deep neural networks in artificial intelligence has been very astonishing and encourages applications to other problems where the curse of dimensionality has been a tormenting issue.

In this paper, we extend the power of deep neural networks to another dimension by developing a strategy for solving a large class of high-dimensional nonlinear PDEs using deep learning. The class of PDEs that we deal with is (nonlinear) parabolic PDEs. Special cases include the Black–Scholes equation and the Hamilton–Jacobi–Bellman equation. To do so, we make use of the reformulation of these PDEs as backward stochastic differential equations (BSDEs) (e.g., refs. 8 and 9) and approximate the gradient of the solution using deep neural networks. The methodology bears some resemblance to deep reinforcement learning, with the BSDE playing the role of model-based reinforcement learning (or control theory models) and the gradient of the solution playing the role of the policy function. Numerical examples show that the proposed algorithm performs well in terms of both accuracy and computational cost.

Due to the curse of dimensionality, there are only a very limited number of cases where practical high-dimensional algorithms have been developed in the literature. For linear parabolic PDEs, one can use the Feynman–Kac formula and Monte Carlo methods to develop efficient algorithms to evaluate solutions at any given space–time locations. For a class of inviscid Hamilton–Jacobi equations, Darbon and Osher (10) recently developed an effective algorithm in the high-dimensional case, based on the Hopf formula for the Hamilton–Jacobi equations. A general algorithm for nonlinear parabolic PDEs based on the multilevel decomposition of Picard iteration is developed in ref. 11 and has been shown to be quite efficient on a number of examples in finance and physics. The branching diffusion method is proposed in refs. 12 and 13, which exploits the fact that solutions of semilinear PDEs with polynomial nonlinearity can be represented as an expectation of a functional of branching diffusion processes. This method does not suffer from the curse of dimensionality, but still has limited applicability due to the blow-up of approximated solutions in finite time.

The starting point of the present paper is deep learning. It should be stressed that even though deep learning has been a very successful tool for a number of applications, adapting it to the current setting with practical success is still a highly nontrivial task. Here by using the reformulation of BSDEs, we are able to cast the problem of solving PDEs as a learning problem and we design a deep-learning framework that fits naturally to that setting. This has proved to be quite successful in practice.

Methodology

We consider a general class of PDEs known as semilinear parabolic PDEs. These PDEs can be represented as

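$$\frac{\partial u}{\partial t}(t,x) + \frac{1}{2}\operatorname{Tr}\Big(\sigma\sigma^{\mathrm T}(t,x)\,(\operatorname{Hess}_x u)(t,x)\Big) + \nabla u(t,x)\cdot\mu(t,x) + f\big(t,x,u(t,x),\sigma^{\mathrm T}(t,x)\nabla u(t,x)\big) = 0 \tag{1}$$

with some specified terminal condition $u(T,x) = g(x)$. Here $t$ and $x$ represent the time and $d$-dimensional space variable, respectively, $\mu$ is a known vector-valued function, $\sigma$ is a known $d \times d$ matrix-valued function, $\sigma^{\mathrm T}$ denotes the transpose associated to $\sigma$, $\nabla u$ and $\operatorname{Hess}_x u$ denote the gradient and the Hessian of function $u$ with respect to $x$, $\operatorname{Tr}$ denotes the trace of a matrix, and $f$ is a known nonlinear function. To fix ideas, we are interested in the solution at $t = 0$, $x = \xi$ for some vector $\xi \in \mathbb{R}^d$.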

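Let $\{W_t\}_{t \in [0,T]}$ be a $d$-dimensional Brownian motion and $\{X_t\}_{t \in [0,T]}$ be a $d$-dimensional stochastic process which satisfies

$$X_t = \xi + \int_0^t \mu(s, X_s)\,ds + \int_0^t \sigma(s, X_s)\,dW_s. \tag{2}$$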

Then the solution of Eq. 1 satisfies the following BSDE (cf., e.g., refs. 8 and 9):

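$$u(t, X_t) - u(0, X_0) = -\int_0^t f\big(s, X_s, u(s, X_s), \sigma^{\mathrm T}(s, X_s)\nabla u(s, X_s)\big)\,ds + \int_0^t [\nabla u(s, X_s)]^{\mathrm T}\sigma(s, X_s)\,dW_s. \tag{3}$$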

We refer to Materials and Methods for further explanation of Eq. 3.

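To derive a numerical algorithm to compute $u(0, X_0)$, we treat $u(0, X_0) \approx \theta_{u_0}$ and $\nabla u(0, X_0) \approx \theta_{\nabla u_0}$ as parameters in the model and view Eq. 3 as a way of computing the values of $u$ at the terminal time $T$, knowing $u(0, X_0)$ and $\nabla u(t, X_t)$. We apply a temporal discretization to Eqs. 2 and 3. Given a partition of the time interval $[0, T]$: $0 = t_0 < t_1 < \dots < t_N = T$, we consider the simple Euler scheme for $n = 0, \dots, N-1$:

$$X_{t_{n+1}} - X_{t_n} \approx \mu(t_n, X_{t_n})\,\Delta t_n + \sigma(t_n, X_{t_n})\,\Delta W_n \tag{4}$$

and

$$u(t_{n+1}, X_{t_{n+1}}) - u(t_n, X_{t_n}) \approx -f\big(t_n, X_{t_n}, u(t_n, X_{t_n}), \sigma^{\mathrm T}(t_n, X_{t_n})\nabla u(t_n, X_{t_n})\big)\,\Delta t_n + [\nabla u(t_n, X_{t_n})]^{\mathrm T}\sigma(t_n, X_{t_n})\,\Delta W_n, \tag{5}$$

where

$$\Delta t_n = t_{n+1} - t_n, \qquad \Delta W_n = W_{t_{n+1}} - W_{t_n}. \tag{6}$$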
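The forward sampling step can be sketched in a few lines of NumPy. This is a minimal illustration of an Euler scheme of the kind described above on an equidistant grid, not the authors' implementation; the function name `sample_paths`, the array layout, and the example coefficients (zero drift, diffusion matrix $\sqrt{2}$ times the identity) are assumptions made for the sake of the example.

```python
import numpy as np

def sample_paths(mu, sigma, x0, T, N, batch, rng):
    """Euler sampling of the forward process on an equidistant time grid.

    mu(t, x)    -> array of shape (batch, d)     drift
    sigma(t, x) -> array of shape (batch, d, d)  diffusion matrix
    Returns X of shape (batch, N + 1, d) and dW of shape (batch, N, d).
    """
    d = x0.shape[0]
    dt = T / N
    # Brownian increments: independent N(0, dt) in every coordinate.
    dW = rng.normal(0.0, np.sqrt(dt), size=(batch, N, d))
    X = np.empty((batch, N + 1, d))
    X[:, 0, :] = x0
    for n in range(N):
        t = n * dt
        x = X[:, n, :]
        drift = mu(t, x) * dt
        diffusion = np.einsum("bij,bj->bi", sigma(t, x), dW[:, n, :])
        X[:, n + 1, :] = x + drift + diffusion
    return X, dW

# Illustrative coefficients (hypothetical, chosen only for this example).
d = 4
mu = lambda t, x: np.zeros_like(x)
sigma = lambda t, x: np.broadcast_to(np.sqrt(2.0) * np.eye(d), (x.shape[0], d, d))
X, dW = sample_paths(mu, sigma, np.zeros(d), T=1.0, N=20, batch=64,
                     rng=np.random.default_rng(0))
```

Returning the increments alongside the paths is a convenience: the same increments are needed again when the solution values are propagated forward in time.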

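Given this temporal discretization, the path $\{X_{t_n}\}_{0 \le n \le N}$ can be easily sampled using Eq. 4. Our key step next is to approximate the function $x \mapsto \sigma^{\mathrm T}(t, x)\nabla u(t, x)$ at each time step $t = t_n$ by a multilayer feedforward neural network

$$\sigma^{\mathrm T}(t_n, X_{t_n})\nabla u(t_n, X_{t_n}) = (\sigma^{\mathrm T}\nabla u)(t_n, X_{t_n}) \approx (\sigma^{\mathrm T}\nabla u)(t_n, X_{t_n} \mid \theta_n) \tag{7}$$

for $n = 1, \dots, N-1$, where $\theta_n$ denotes parameters of the neural network approximating $x \mapsto \sigma^{\mathrm T}(t, x)\nabla u(t, x)$ at $t = t_n$.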

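Thereafter, we stack all of the subnetworks in Eq. 7 together to form a deep neural network as a whole, based on the summation of Eq. 5 over $n = 0, \dots, N-1$. Specifically, this network takes the paths $\{X_{t_n}\}_{0 \le n \le N}$ and $\{W_{t_n}\}_{0 \le n \le N}$ as the input data and gives the final output, denoted by $\hat{u}(\{X_{t_n}\}_{0 \le n \le N}, \{W_{t_n}\}_{0 \le n \le N})$, as an approximation of $u(t_N, X_{t_N})$. We refer to Materials and Methods for more details on the architecture of the neural network. The difference in the matching of a given terminal condition can be used to define the expected loss function

$$l(\theta) = \mathbb{E}\Big[\big|g(X_{t_N}) - \hat{u}\big(\{X_{t_n}\}_{0 \le n \le N}, \{W_{t_n}\}_{0 \le n \le N}\big)\big|^2\Big]. \tag{8}$$

The total set of parameters is $\theta = \{\theta_{u_0}, \theta_{\nabla u_0}, \theta_1, \dots, \theta_{N-1}\}$.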

We can now use a stochastic gradient descent-type (SGD) algorithm to optimize the parameter $\theta$, just as in the standard training of deep neural networks. In our numerical examples, we use the Adam optimizer (14). See Materials and Methods for more details on the training of the deep neural networks. Since the BSDE is used as an essential tool, we call the methodology introduced above the deep BSDE method.
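To make the structure of the loss concrete, the sketch below propagates the solution value along sampled paths with an Euler step and evaluates a Monte Carlo estimate of the mean-squared mismatch at the terminal time. It is an illustration under stated assumptions rather than the paper's code: the callable `z_fn` stands in for the trained subnetworks (and for the initial-gradient parameter at the first step), and the test problem, a linear heat-type equation with $f = 0$, $\sigma = \sqrt{2}\,\mathrm{Id}$, and $g(x) = \|x\|^2$, is chosen only because its exact solution $u(t,x) = \|x\|^2 + 2d(T-t)$ is known.

```python
import numpy as np

def deep_bsde_loss(y0, z_fn, f, g, X, dW, dt):
    """Propagate Y forward with an Euler step and return the terminal mismatch.

    y0   : scalar guess for u(0, X_0)
    z_fn : z_fn(n, x) -> (batch, d), stand-in for sigma^T grad u at step n
    f    : f(t, x, y, z) -> (batch,), the nonlinearity
    g    : g(x) -> (batch,), the terminal condition
    X    : paths of shape (batch, N + 1, d); dW : increments of shape (batch, N, d)
    """
    batch, N, _ = dW.shape
    Y = np.full(batch, float(y0))
    for n in range(N):
        x = X[:, n, :]
        z = z_fn(n, x)
        Y = Y - f(n * dt, x, Y, z) * dt + np.sum(z * dW[:, n, :], axis=1)
    return np.mean((g(X[:, -1, :]) - Y) ** 2)

# Hypothetical test problem with a known solution (not one of the paper's examples).
rng = np.random.default_rng(0)
d, N, T, batch = 10, 100, 1.0, 4096
dt = T / N
dW = rng.normal(0.0, np.sqrt(dt), size=(batch, N, d))
X = np.concatenate([np.zeros((batch, 1, d)),
                    np.sqrt(2.0) * np.cumsum(dW, axis=1)], axis=1)
f = lambda t, x, y, z: np.zeros(x.shape[0])
g = lambda x: np.sum(x ** 2, axis=1)
z_exact = lambda n, x: 2.0 * np.sqrt(2.0) * x        # sigma^T grad u for u = |x|^2 + 2d(T - t)

loss_true = deep_bsde_loss(2.0 * d * T, z_exact, f, g, X, dW, dt)        # correct u(0, 0)
loss_off = deep_bsde_loss(2.0 * d * T + 5.0, z_exact, f, g, X, dW, dt)   # wrong initial value
```

With the exact gradient supplied, the loss is small when the initial value is correct and grows roughly by the squared offset when it is not, which is the signal that gradient-based training exploits. The residual at the correct value comes from the time discretization.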

Examples

Nonlinear Black–Scholes Equation with Default Risk.

A key issue in the trading of financial derivatives is to determine an appropriate fair price. Black and Scholes (15) illustrated that the price of a financial derivative satisfies a parabolic PDE, nowadays known as the Black–Scholes equation. The Black–Scholes model can be augmented to take into account several important factors in real markets, including defaultable securities, higher interest rates for borrowing than for lending, transaction costs, uncertainties in the model parameters, etc. (e.g., refs. 16–20). Each of these effects results in a nonlinear contribution in the pricing model (e.g., refs. 17, 21, and 22). In particular, the credit crisis and the ongoing European sovereign debt crisis have highlighted the most basic risk that has been neglected in the original Black–Scholes model, the default risk (21).

Ideally the pricing models should take into account the whole basket of underlyings that the financial derivatives depend on, resulting in high-dimensional nonlinear PDEs. However, existing pricing algorithms are unable to tackle these problems generally due to the curse of dimensionality. To demonstrate the effectiveness of the deep BSDE method, we study a special case of the recursive valuation model with default risk (16, 17). We consider the fair price of a European claim based on 100 underlying assets conditional on no default having occurred yet. When default of the claim’s issuer occurs, the claim’s holder receives only a fraction $\delta \in [0, 1)$ of the current value. The possible default is modeled by the first jump time of a Poisson process with intensity $Q$, a decreasing function of the current value; i.e., the default becomes more likely when the claim’s value is low. The value process can then be modeled by Eq. 1 with the generator

$$f\big(t, x, u(t,x), \sigma^{\mathrm T}(t,x)\nabla u(t,x)\big) = -(1-\delta)\,Q(u(t,x))\,u(t,x) - R\,u(t,x) \tag{9}$$

(16), where $R$ is the interest rate of the risk-free asset. We assume that the underlying asset price moves as a geometric Brownian motion and choose the intensity function $Q$ as a piecewise-linear function of the current value with three regions ($v^h < v^l$, $\gamma^h > \gamma^l$):

$$Q(y) = \mathbf{1}_{(-\infty, v^h)}(y)\,\gamma^h + \mathbf{1}_{[v^l, \infty)}(y)\,\gamma^l + \mathbf{1}_{[v^h, v^l)}(y)\left[\frac{\gamma^h - \gamma^l}{v^h - v^l}\,(y - v^h) + \gamma^h\right] \tag{10}$$

(17). The associated nonlinear Black–Scholes equation in $[0, T] \times \mathbb{R}^{100}$ becomes

$$\frac{\partial u}{\partial t}(t,x) + \bar{\mu}\,x\cdot\nabla u(t,x) + \frac{\bar{\sigma}^2}{2}\sum_{i=1}^{d}|x_i|^2\,\frac{\partial^2 u}{\partial x_i^2}(t,x) - (1-\delta)\,Q(u(t,x))\,u(t,x) - R\,u(t,x) = 0. \tag{11}$$

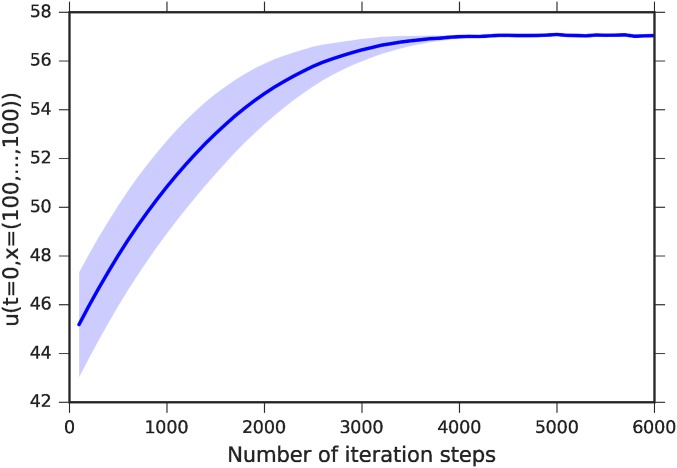

We choose , and the terminal condition for . Fig. 1 shows the mean and the SD of as an approximation of , with the final relative error being . The not explicitly known “exact” solution of Eq. 11 at has been approximately computed by means of the multilevel Picard method (11): . In comparison, if we do not consider the default risk, we get . In this case, the model becomes linear and can be solved using straightforward Monte Carlo methods. However, neglecting default risks results in a considerable error in the pricing, as illustrated above. The deep BSDE method allows us to rigorously incorporate default risks into pricing models. This in turn makes it possible to evaluate financial derivatives with substantially lower risks for the involved parties and for society.

Fig. 1.

Plot of as an approximation of against the number of iteration steps in the case of the 100-dimensional nonlinear Black–Scholes equation with equidistant time steps () and learning rate . The shaded area depicts the mean ± the SD of as an approximation of for five independent runs. The deep BSDE method achieves a relative error of size in a runtime of s.

Hamilton–Jacobi–Bellman Equation.

The term curse of dimensionality was first used explicitly by Richard Bellman in the context of dynamic programming (1), which has now become the cornerstone in many areas such as economics, behavioral science, computer science, and even biology, where intelligent decision making is the main issue. In the context of game theory where there are multiple players, each player has to solve a high-dimensional Hamilton–Jacobi–Bellman (HJB) type equation to find his/her optimal strategy. In a dynamic resource allocation problem involving multiple entities with uncertainty, the dynamic programming principle also leads to a high-dimensional HJB equation (23) for the value function. Until recently these high-dimensional PDEs have basically remained intractable. We now demonstrate below that the deep BSDE method is an effective tool for dealing with these high-dimensional problems.

We consider a classical linear-quadratic Gaussian (LQG) control problem in 100 dimensions,

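$$dX_t = 2\sqrt{\lambda}\,m_t\,dt + \sqrt{2}\,dW_t \tag{12}$$

with $t \in [0, T]$, $X_0 = x$, and with the cost functional $J(\{m_t\}_{0 \le t \le T}) = \mathbb{E}\big[\int_0^T \|m_t\|^2\,dt + g(X_T)\big]$. Here $\{X_t\}_{t \in [0,T]}$ is the state process, $\{m_t\}_{t \in [0,T]}$ is the control process, $\lambda$ is a positive constant representing the “strength” of the control, and $\{W_t\}_{t \in [0,T]}$ is a standard Brownian motion. Our goal is to minimize the cost functional through the control process.

The HJB equation for this problem is given by

$$\frac{\partial u}{\partial t}(t,x) + \Delta u(t,x) - \lambda\,\|\nabla u(t,x)\|^2 = 0 \tag{13}$$

[e.g., Yong and Zhou (ref. 24, pp. 175–184)]. The value of the solution $u(t,x)$ of Eq. 13 at $t = 0$ represents the optimal cost when the state starts from $x$. Applying Itô’s formula, one can show that the exact solution of Eq. 13 with the terminal condition $u(T,x) = g(x)$ admits the explicit formula

$$u(t,x) = -\frac{1}{\lambda}\,\ln\Big(\mathbb{E}\Big[\exp\big(-\lambda\,g(x + \sqrt{2}\,W_{T-t})\big)\Big]\Big). \tag{14}$$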

This can be used to test the accuracy of the proposed algorithm.
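A reference value of this kind can be estimated by plain Monte Carlo. The sketch below assumes that the explicit formula has the Cole–Hopf form $u(t,x) = -\frac{1}{\lambda}\ln \mathbb{E}[\exp(-\lambda\,g(x + \sqrt{2}\,W_{T-t}))]$, which is what one obtains for an HJB equation of the form $\partial_t u + \Delta u - \lambda\|\nabla u\|^2 = 0$. The function name `hjb_value_mc` and the linear terminal condition used for the sanity check are hypothetical and serve only to validate the estimator against a closed form.

```python
import numpy as np

def hjb_value_mc(g, x, t, T, lam, n_samples, rng):
    """Monte Carlo estimate of -(1/lam) * log E[exp(-lam * g(x + sqrt(2) * W_{T-t}))]."""
    d = x.shape[0]
    # W_{T-t} is Gaussian with mean 0 and variance (T - t) in every coordinate.
    W = rng.normal(0.0, np.sqrt(T - t), size=(n_samples, d))
    a = -lam * g(x + np.sqrt(2.0) * W)        # exponents, shape (n_samples,)
    a_max = np.max(a)                         # log-sum-exp shift for numerical stability
    log_mean = a_max + np.log(np.mean(np.exp(a - a_max)))
    return -log_mean / lam

# Sanity check with a hypothetical linear terminal condition g(x) = a . x,
# for which the formula evaluates in closed form to a . x - lam * |a|^2 * (T - t).
a_vec = 0.1 * np.ones(5)
g_lin = lambda x: x @ a_vec
x0 = np.ones(5)
u_mc = hjb_value_mc(g_lin, x0, t=0.0, T=1.0, lam=1.0,
                    n_samples=200_000, rng=np.random.default_rng(0))
u_exact = a_vec @ x0 - 1.0 * (a_vec @ a_vec) * 1.0
```

The log-sum-exp shift matters in practice: for large $\lambda$ or large terminal values the raw exponentials can underflow, while the shifted version stays well conditioned.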

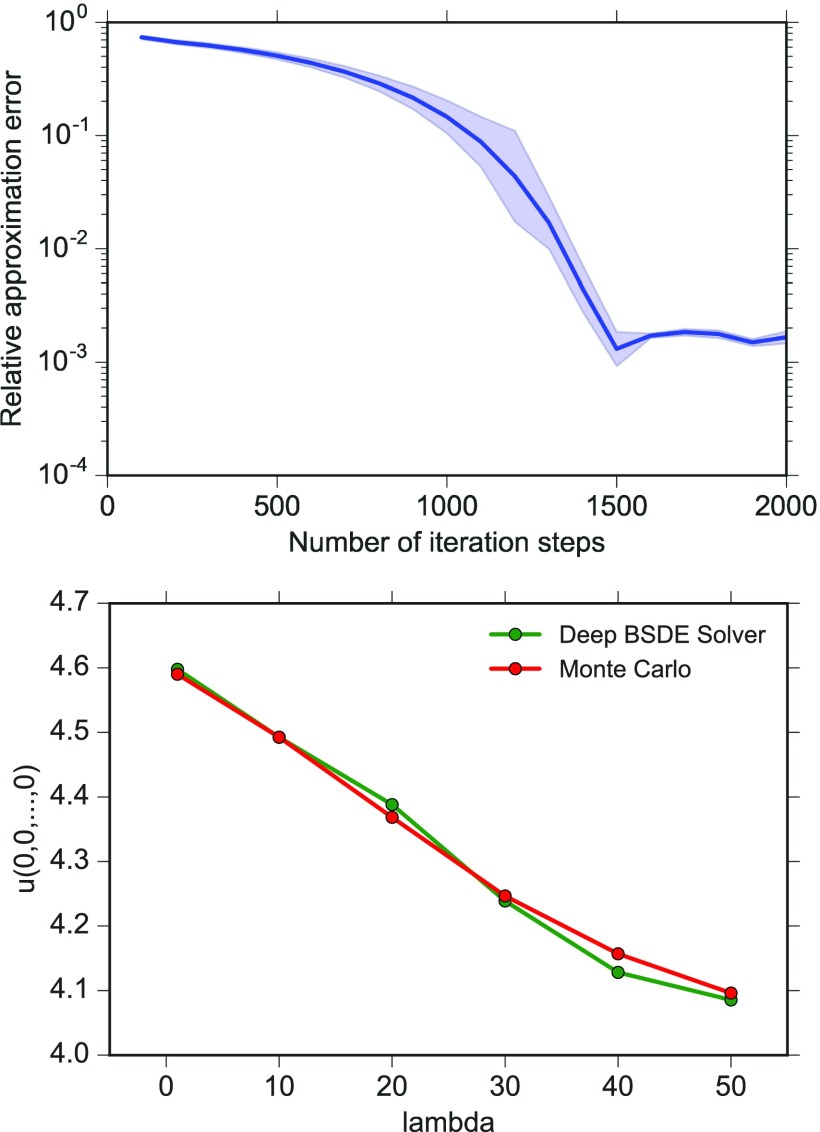

We solve the HJB Eq. 13 in the 100-dimensional case with for . Fig. 2, Top shows the mean and the SD of the relative error for in the case . The deep BSDE method achieves a relative error of in a runtime of s on a Macbook Pro. We also use the BSDE method to approximatively calculate the optimal cost against different values of (Fig. 2, Bottom). The curve in Fig. 2, Bottom clearly confirms the intuition that the optimal cost decreases as the control strength increases.

Fig. 2.

(Top) Relative error of the deep BSDE method for when against the number of iteration steps in the case of the 100-dimensional HJB Eq. 13 with equidistant time steps () and learning rate . The shaded area depicts the mean ± the SD of the relative error for five different runs. The deep BSDE method achieves a relative error of size in a runtime of s. (Bottom) Optimal cost against different values of in the case of the 100-dimensional HJB Eq. 13, obtained by the deep BSDE method and classical Monte Carlo simulations of Eq. 14.

Allen–Cahn Equation.

The Allen–Cahn equation is a reaction–diffusion equation that arises in physics, serving as a prototype for the modeling of phase separation and order–disorder transition (e.g., ref. 25). Here we consider a typical Allen–Cahn equation with the “double-well potential” in 100-dimensional space,

$$\frac{\partial u}{\partial t}(t,x) = \Delta u(t,x) + u(t,x) - [u(t,x)]^3 \tag{15}$$

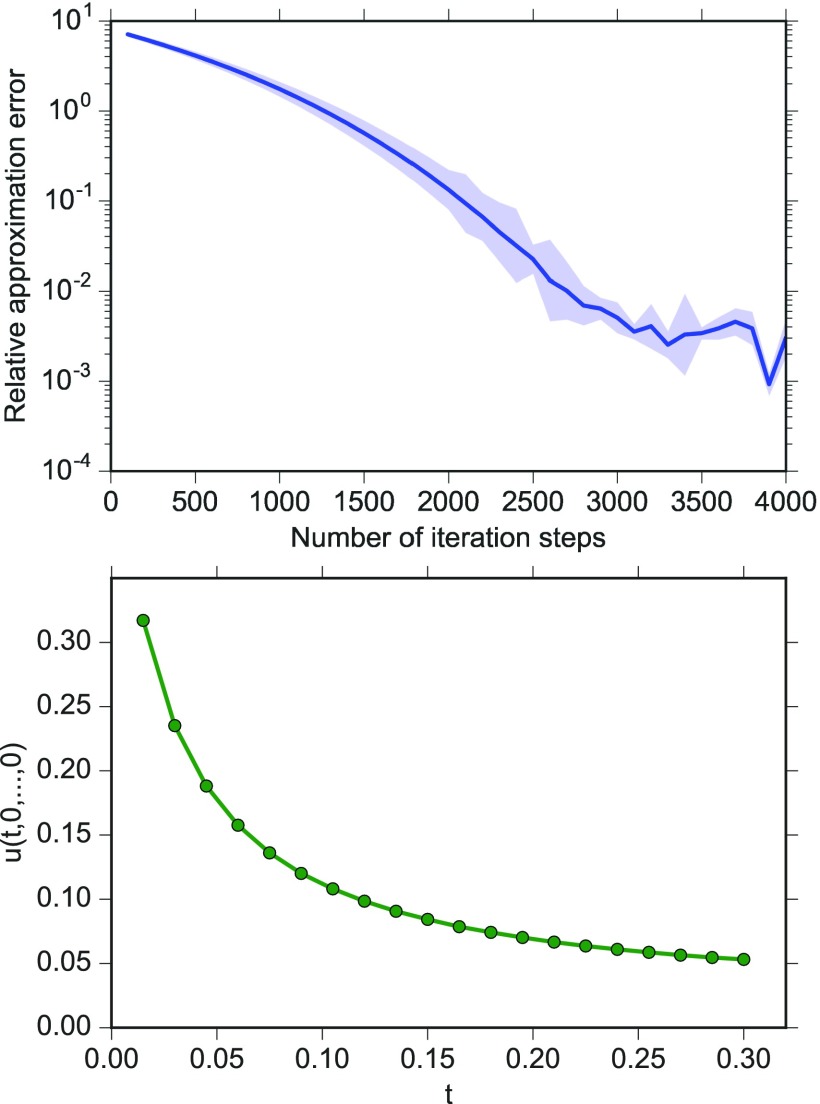

with the initial condition , where for . By applying a transformation of the time variable , we can turn Eq. 15 into the form of Eq. 1 such that the deep BSDE method can be used. Fig. 3, Top shows the mean and the SD of the relative error of . The not explicitly known exact solution of Eq. 15 at , has been approximatively computed by means of the branching diffusion method (e.g., refs. 12 and 13): . For this -dimensional example PDE, the deep BSDE method achieves a relative error of in a runtime of s on a Macbook Pro. We also use the deep BSDE method to approximatively compute the time evolution of for (Fig. 3, Bottom).
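The time transformation can be written out explicitly. The following is a short worked derivation under the assumption that the Allen–Cahn equation is taken in the standard form $\partial_t u = \Delta u + u - u^3$ with initial condition $u(0, x) = g(x)$; the auxiliary function $v$ is introduced here only for illustration.

$$
\begin{aligned}
v(t, x) &:= u(T - t, x), \\
\frac{\partial v}{\partial t}(t, x) &= -\frac{\partial u}{\partial t}(T - t, x) = -\Delta v(t, x) - v(t, x) + [v(t, x)]^3, \\
\text{so}\quad &\frac{\partial v}{\partial t}(t, x) + \Delta v(t, x) + v(t, x) - [v(t, x)]^3 = 0, \qquad v(T, x) = g(x).
\end{aligned}
$$

This is a terminal-value problem of the semilinear parabolic type with zero drift, diffusion matrix $\sqrt{2}\,\mathrm{Id}$ (so that $\tfrac{1}{2}\operatorname{Tr}(\sigma\sigma^{\mathrm T}\operatorname{Hess}_x v) = \Delta v$), and nonlinearity $f(t, x, y, z) = y - y^3$. The value of the original solution at time $T$ is then recovered as $u(T, x) = v(0, x)$.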

Fig. 3.

(Top) Relative error of the deep BSDE method for against the number of iteration steps in the case of the 100-dimensional Allen–Cahn Eq. 15 with equidistant time steps () and learning rate . The shaded area depicts the mean ± the SD of the relative error for five different runs. The deep BSDE method achieves a relative error of size in a runtime of s. (Bottom) Time evolution of for in the case of the 100-dimensional Allen–Cahn Eq. 15 computed by means of the deep BSDE method.

Conclusions

The algorithm proposed in this paper opens up a host of possibilities in several different areas. For example, in economics one can consider many different interacting agents at the same time, instead of using the “representative agent” model. Similarly in finance, one can consider all of the participating instruments at the same time, instead of relying on ad hoc assumptions about their relationships. In operational research, one can handle the cases with hundreds and thousands of participating entities directly, without the need to make ad hoc approximations.

It should be noted that although the methodology presented here is fairly general, we are so far not able to deal with the quantum many-body problem due to the difficulty in dealing with the Pauli exclusion principle.

Materials and Methods

BSDE Reformulation.

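The link between (nonlinear) parabolic PDEs and BSDEs has been extensively investigated in the literature (e.g., refs. 8, 9, 26, and 27). In particular, Markovian BSDEs give a nonlinear Feynman–Kac representation of some nonlinear parabolic PDEs. Let $(\Omega, \mathcal{F}, \mathbb{P})$ be a probability space, $W\colon [0, T] \times \Omega \to \mathbb{R}^d$ be a $d$-dimensional standard Brownian motion, and $\{\mathcal{F}_t\}_{t \in [0,T]}$ be the normal filtration generated by $\{W_t\}_{t \in [0,T]}$. Consider the following BSDEs,

$$X_t = \xi + \int_0^t \mu(s, X_s)\,ds + \int_0^t \sigma(s, X_s)\,dW_s, \tag{16}$$

$$Y_t = g(X_T) + \int_t^T f(s, X_s, Y_s, Z_s)\,ds - \int_t^T (Z_s)^{\mathrm T}\,dW_s, \tag{17}$$

for which we are seeking a $\{\mathcal{F}_t\}_{t \in [0,T]}$-adapted solution process $\{(X_t, Y_t, Z_t)\}_{t \in [0,T]}$ with values in $\mathbb{R}^d \times \mathbb{R} \times \mathbb{R}^d$. Under suitable regularity assumptions on the coefficient functions $\mu$, $\sigma$, and $f$, one can prove existence and up-to-indistinguishability uniqueness of solutions (cf., e.g., refs. 8 and 26). Furthermore, we have that the nonlinear parabolic PDE is related to the BSDEs 16 and 17 in the sense that for all $t \in [0, T]$ it holds $\mathbb{P}$-a.s. that

$$Y_t = u(t, X_t) \quad\text{and}\quad Z_t = \sigma^{\mathrm T}(t, X_t)\,\nabla u(t, X_t) \tag{18}$$

(cf., e.g., refs. 8 and 9). Therefore, we can compute the quantity $u(0, X_0)$ associated to Eq. 1 through $Y_0$ by solving the BSDEs 16 and 17. More specifically, we plug the identities in Eq. 18 into Eq. 17 and rewrite the equation forwardly to obtain the formula in Eq. 3.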

Then we discretize the equation temporally and use neural networks to approximate the spatial gradients and finally the unknown function, as introduced in the methodology of this paper.
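The forward rewriting can be spelled out in two steps. The derivation below assumes the backward equation is written in the standard form $Y_t = g(X_T) + \int_t^T f\,ds - \int_t^T (Z_s)^{\mathrm T} dW_s$ and that the identification with the PDE solution is $Y_t = u(t, X_t)$, $Z_t = \sigma^{\mathrm T}(t, X_t)\nabla u(t, X_t)$.

$$
\begin{aligned}
Y_t - Y_0 &= \Big[g(X_T) + \int_t^T f(s, X_s, Y_s, Z_s)\,ds - \int_t^T (Z_s)^{\mathrm T}\,dW_s\Big] - \Big[g(X_T) + \int_0^T f(s, X_s, Y_s, Z_s)\,ds - \int_0^T (Z_s)^{\mathrm T}\,dW_s\Big] \\
&= -\int_0^t f(s, X_s, Y_s, Z_s)\,ds + \int_0^t (Z_s)^{\mathrm T}\,dW_s, \\
u(t, X_t) - u(0, X_0) &= -\int_0^t f\big(s, X_s, u(s, X_s), \sigma^{\mathrm T}(s, X_s)\nabla u(s, X_s)\big)\,ds + \int_0^t [\nabla u(s, X_s)]^{\mathrm T}\sigma(s, X_s)\,dW_s.
\end{aligned}
$$

The terminal value $g(X_T)$ cancels in the subtraction, which is why the forward form involves only the unknown initial value and the gradient along the path.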

Neural Network Architecture.

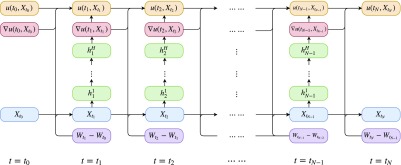

In this subsection we briefly illustrate the architecture of the deep BSDE method. To simplify the presentation we restrict ourselves in these illustrations to the case where the diffusion coefficient $\sigma$ in Eq. 1 satisfies that $\sigma(x) = \mathrm{Id}_{\mathbb{R}^d}$ for all $x \in \mathbb{R}^d$. Fig. 4 illustrates the network architecture for the deep BSDE method. Note that $\nabla u(t_n, X_{t_n})$ denotes the variable we approximate directly by subnetworks and $u(t_n, X_{t_n})$ denotes the variable we compute iteratively in the network. There are three types of connections in this network:

Fig. 4.

Illustration of the network architecture for solving semilinear parabolic PDEs with $H$ hidden layers for each subnetwork and $N$ time intervals. The whole network has $(H+1)(N-1)$ layers in total that involve free parameters to be optimized simultaneously. Each column for $t = t_1, t_2, \dots, t_{N-1}$ corresponds to a subnetwork at time $t$. $h_n^1, \dots, h_n^H$ are the intermediate neurons in the subnetwork at time $t = t_n$ for $n = 1, 2, \dots, N-1$.

- i) $X_{t_n} \to h_n^1 \to h_n^2 \to \dots \to h_n^H \to \nabla u(t_n, X_{t_n})$ is the multilayer feedforward neural network approximating the spatial gradients at time $t = t_n$. The weights $\theta_n$ of this subnetwork are the parameters we aim to optimize.

- ii) $(u(t_n, X_{t_n}), \nabla u(t_n, X_{t_n}), W_{t_{n+1}} - W_{t_n}) \to u(t_{n+1}, X_{t_{n+1}})$ is the forward iteration giving the final output of the network as an approximation of $u(t_N, X_{t_N})$, completely characterized by Eqs. 5 and 6. There are no parameters to be optimized in this type of connection.

- iii) $(X_{t_n}, W_{t_{n+1}} - W_{t_n}) \to X_{t_{n+1}}$ is the shortcut connecting blocks at different times, which is characterized by Eqs. 4 and 6. There are also no parameters to be optimized in this type of connection.

If we use $H$ hidden layers in each subnetwork, as illustrated in Fig. 4, then the whole network has $(H+1)(N-1)$ layers in total that involve free parameters to be optimized simultaneously.
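The layer count is easy to check against Table 1. The snippet below assumes the count is $(H+1)(N-1)$, i.e., $H$ hidden layers plus one output layer in each of the $N-1$ subnetworks; with $N = 30$ time steps this reproduces the layer numbers reported there. The function name is hypothetical.

```python
def total_parameter_layers(hidden_layers: int, time_intervals: int) -> int:
    """Layers with free parameters: (H + 1) per subnetwork, one subnetwork per interior time step."""
    return (hidden_layers + 1) * (time_intervals - 1)

# Zero to four hidden layers per subnetwork, N = 30 time steps (the setting of Table 1).
counts = [total_parameter_layers(H, 30) for H in range(5)]
print(counts)  # [29, 58, 87, 116, 145]
```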

It should be pointed out that the proposed deep BSDE method can also be used if we are interested in values of the PDE solution $u$ in a region $D \subset \mathbb{R}^d$ at time $t = 0$ instead of at a single space-point $\xi \in \mathbb{R}^d$. In this case we choose $X_0 = \xi$ to be a nondegenerate $D$-valued random variable and we use two additional neural networks parameterized by $\{\theta_{u_0}, \theta_{\nabla u_0}\}$ for approximating the functions $D \ni x \mapsto u(0, x) \in \mathbb{R}$ and $D \ni x \mapsto \nabla u(0, x) \in \mathbb{R}^d$. Upper and lower bounds for approximation errors of stochastic approximation algorithms for PDEs and BSDEs, respectively, can be found in refs. 27–29 and the references therein.

Implementation.

We describe in detail the implementation for the numerical examples presented in this paper. Each subnetwork is fully connected and consists of four layers (except the example in the next subsection), with one input layer ( dimensional), two hidden layers (both dimensional), and one output layer ( dimensional). We choose the rectifier function (ReLU) as our activation function. We also adopt the technique of batch normalization (30) in the subnetworks, right after each linear transformation and before activation. This technique accelerates the training by allowing a larger step size and easier parameter initialization. All of the parameters are initialized through a normal or a uniform distribution without any pretraining.
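A forward pass through one such subnetwork can be sketched in NumPy as follows. This is a simplified illustration, not the TensorFlow implementation used for the experiments: the hidden width, the weight initialization, and the helper names are assumptions, batch normalization is applied with batch statistics only (no learned scale and shift, no running averages), and biases are omitted because the normalization would cancel them.

```python
import numpy as np

def batch_norm(h, eps=1e-6):
    """Normalize each feature over the batch (training-mode statistics only)."""
    return (h - h.mean(axis=0)) / np.sqrt(h.var(axis=0) + eps)

def init_subnetwork(d, width, rng):
    """Weights for input (d) -> hidden (width) -> hidden (width) -> output (d)."""
    sizes = [d, width, width, d]
    return [rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, k))
            for m, k in zip(sizes[:-1], sizes[1:])]

def subnetwork_forward(weights, x):
    """Hidden layers: linear -> batch norm -> ReLU. Output layer: linear -> batch norm."""
    h = x
    for W in weights[:-1]:
        h = np.maximum(batch_norm(h @ W), 0.0)
    return batch_norm(h @ weights[-1])

rng = np.random.default_rng(0)
d, width, batch = 100, 110, 64      # width is a hypothetical choice for this sketch
weights = init_subnetwork(d, width, rng)
z = subnetwork_forward(weights, rng.normal(size=(batch, d)))   # shape (batch, d)
```

The output has one row per sample and $d$ columns, matching the dimension of the gradient it is meant to approximate.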

We use TensorFlow (31) to implement our algorithm with the Adam optimizer (14) to optimize parameters. Adam is a variant of the SGD algorithm, based on adaptive estimates of lower-order moments. We set the default values for corresponding hyperparameters as recommended in ref. 14 and choose the batch size as 64. In each of the presented numerical examples the means and the SDs of the relative -approximation errors are computed approximatively by means of five independent runs of the algorithm with different random seeds. All of the numerical examples reported are run on a Macbook Pro with a 2.9-GHz Intel Core i5 processor and 16 GB memory.
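For readers unfamiliar with Adam, a single update can be sketched as below. The default values $\beta_1 = 0.9$, $\beta_2 = 0.999$, and $\varepsilon = 10^{-8}$ are those recommended in ref. 14; the function name, the quadratic test objective, and the step size used in the loop are assumptions chosen only to demonstrate the update rule.

```python
import numpy as np

def adam_step(theta, grad, m, v, k, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; k is the 1-based iteration counter used for bias correction."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad ** 2     # second-moment (uncentered variance) estimate
    m_hat = m / (1 - beta1 ** k)                # bias-corrected moments
    v_hat = v / (1 - beta2 ** k)
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v

# Toy objective (hypothetical): minimize |theta|^2, whose gradient is 2 * theta.
theta = np.array([5.0, -3.0])
m = np.zeros_like(theta)
v = np.zeros_like(theta)
for k in range(1, 3001):
    theta, m, v = adam_step(theta, 2.0 * theta, m, v, k, lr=0.01)
```

Because each coordinate is rescaled by its own second-moment estimate, the early steps have size close to the learning rate regardless of the gradient's magnitude, which is one reason the method is forgiving about parameter initialization.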

Effect of Number of Hidden Layers.

The accuracy of the deep BSDE method certainly depends on the number of hidden layers in the subnetwork approximation Eq. 7. To test this effect, we solve a reaction–diffusion-type PDE with a different number of hidden layers in the subnetwork. The PDE is a high-dimensional version () of the example analyzed numerically in Gobet and Turkedjiev (32) (),

| [19] |

in which is the explicit oscillating solution

| [20] |

Parameters are chosen in the same way as in ref. 32: . A residual structure with skip connection is used in each subnetwork with each hidden layer having neurons. We increase the number of hidden layers in each subnetwork from zero to four and report the relative error in Table 1. It is evident that the approximation accuracy increases as the number of hidden layers in the subnetwork increases.

Table 1.

The mean and SD of the relative error for the PDE in Eq. 19, obtained by the deep BSDE method with different numbers of hidden layers

| No. of hidden layers in each subnetwork | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| No. of layers† | 29 | 58 | 87 | 116 | 145 |
| Relative error: mean, % | 2.29 | 0.90 | 0.60 | 0.56 | 0.53 |
| Relative error: SD | 0.0026 | 0.0016 | 0.0017 | 0.0017 | 0.0014 |

The PDE is solved until convergence with 30 equidistant time steps (N=30) and 40,000 iteration steps. Learning rate is 0.01 for the first half of iterations and 0.001 for the second half.

† We count only the layers that have free parameters to be optimized.

Acknowledgments

The work of J.H. and W.E. is supported in part by National Natural Science Foundation of China (NNSFC) Grant 91130005, US Department of Energy (DOE) Grant DE-SC0009248, and US Office of Naval Research (ONR) Grant N00014-13-1-0338.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

References

- 1. Bellman RE. Dynamic Programming. Princeton Univ Press; Princeton: 1957.

- 2. Goodfellow I, Bengio Y, Courville A. Deep Learning. MIT Press; Cambridge, MA: 2016.

- 3. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 4. Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. In: Bartlett P, Pereira F, Burges CJC, Bottou L, Weinberger KQ, editors. Advances in Neural Information Processing Systems. Vol 25. Curran Associates, Inc.; Red Hook, NY: 2012. pp. 1097–1105.

- 5. Hinton G, et al. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process Mag. 2012;29:82–97.

- 6. Silver D, Huang A, Maddison CJ, Guez A, et al. Mastering the game of Go with deep neural networks and tree search. Nature. 2016;529:484–489. doi: 10.1038/nature16961.

- 7. Pinkus A. Approximation theory of the MLP model in neural networks. Acta Numerica. 1999;8:143–195.

- 8. Pardoux É, Peng S. 1992. Backward stochastic differential equations and quasilinear parabolic partial differential equations. Stochastic Partial Differential Equations and Their Applications (Charlotte, NC, 1991), Lecture Notes in Control and Information Sciences, eds Rozovskii BL, Sowers RB (Springer, Berlin), Vol 176, pp 200–217.

- 9. Pardoux É, Tang S. Forward-backward stochastic differential equations and quasilinear parabolic PDEs. Probab Theor Relat Fields. 1999;114:123–150.

- 10. Darbon J, Osher S. Algorithms for overcoming the curse of dimensionality for certain Hamilton–Jacobi equations arising in control theory and elsewhere. Res Math Sci. 2016;3:19.

- 11. E W, Hutzenthaler M, Jentzen A, Kruse T. 2016. On multilevel Picard numerical approximations for high-dimensional nonlinear parabolic partial differential equations and high-dimensional nonlinear backward stochastic differential equations. arXiv:1607.03295.

- 12. Henry-Labordère P. 2012. Counterparty risk valuation: A marked branching diffusion approach. arXiv:1203.2369.

- 13. Henry-Labordère P, Tan X, Touzi N. A numerical algorithm for a class of BSDEs via the branching process. Stoch Proc Appl. 2014;124:1112–1140.

- 14. Kingma D, Ba J. 2015. Adam: A method for stochastic optimization. arXiv:1412.6980. Preprint, posted December 22, 2014.

- 15. Black F, Scholes M. 1973. The pricing of options and corporate liabilities. J Polit Econ 81:637–654; reprinted in Black F, Scholes M (2012) Financial Risk Measurement and Management, International Library of Critical Writings in Economics (Edward Elgar, Cheltenham, UK), Vol 267, pp 100–117.

- 16. Duffie D, Schroder M, Skiadas C. Recursive valuation of defaultable securities and the timing of resolution of uncertainty. Ann Appl Probab. 1996;6:1075–1090.

- 17. Bender C, Schweizer N, Zhuo J. A primal–dual algorithm for BSDEs. Math Finance. 2017;27:866–901.

- 18. Bergman YZ. Option pricing with differential interest rates. Rev Financial Stud. 1995;8:475–500.

- 19. Leland H. Option pricing and replication with transaction costs. J Finance. 1985;40:1283–1301.

- 20. Avellaneda M, Levy A, Parás A. Pricing and hedging derivative securities in markets with uncertain volatilities. Appl Math Finance. 1995;2:73–88.

- 21. Crépey S, Gerboud R, Grbac Z, Ngor N. Counterparty risk and funding: The four wings of the TVA. Int J Theor Appl Finance. 2013;16:1350006.

- 22. Forsyth PA, Vetzal KR. Implicit solution of uncertain volatility/transaction cost option pricing models with discretely observed barriers. Appl Numer Math. 2001;36:427–445.

- 23. Powell WB. Approximate Dynamic Programming: Solving the Curses of Dimensionality. Wiley; New York: 2011.

- 24. Yong J, Zhou X. Stochastic Controls. Springer; New York: 1999.

- 25. Emmerich H. The Diffuse Interface Approach in Materials Science: Thermodynamic Concepts and Applications of Phase-Field Models. Vol 73. Springer; New York: 2003.

- 26. El Karoui N, Peng S, Quenez MC. Backward stochastic differential equations in finance. Math Finance. 1997;7:1–71.

- 27. Gobet E. Monte-Carlo Methods and Stochastic Processes: From Linear to Non-Linear. Chapman & Hall/CRC; Boca Raton, FL: 2016.

- 28. Heinrich S. The randomized information complexity of elliptic PDE. J Complexity. 2006;22:220–249.

- 29. Geiss S, Ylinen J. 2014. Decoupling on the Wiener space, related Besov spaces, and applications to BSDEs. arXiv:1409.5322.

- 30. Ioffe S, Szegedy C. 2015. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv:1502.03167. Preprint, posted February 11, 2015.

- 31. Abadi M, et al. 12th USENIX Symposium on Operating Systems Design and Implementation. USENIX Association; Berkeley, CA: 2016. Tensorflow: A system for large-scale machine learning; pp. 265–283.

- 32. Gobet E, Turkedjiev P. Adaptive importance sampling in least-squares Monte Carlo algorithms for backward stochastic differential equations. Stoch Proc Appl. 2017;127:1171–1203.