Abstract

Objective.

To develop and field test an Implementation Assessment Tool for assessing the progress of hospital Units in implementing improvements for prevention of ventilator-associated pneumonia (VAP) in a two-state collaborative, including data on actions implemented by participating teams and contextual factors that may influence their efforts; and to use the data collected to learn how implementation actions can be improved and to analyze effects of implementation progress on outcome measures.

Design.

We developed the tool as an interview protocol that included quantitative and qualitative items addressing actions on CUSP and clinical interventions, for use in guiding data collection via telephone interviews.

Setting/Participants/Patients.

We conducted interviews with leaders of improvement teams from Units that participated in the two-state VAP prevention initiative.

Methods/Interventions.

We collected data from 43 hospital Units as they implemented actions for the VAP initiative, and performed descriptive analyses of the data with comparisons across the two states.

Results.

Early in the VAP prevention initiative, most Units had made only moderate progress overall in using many of the CUSP actions known to support their improvement processes. For contextual factors, a relatively small number of barriers were found to have important negative effects on implementation progress, in particular, barriers related to workload and time issues. We modified coaching provided to the participating Unit teams to reinforce training in weak spots the interviews identified.

Conclusion.

These assessments provided important new knowledge regarding the implementation science of quality improvement projects, for feedback during implementation and to better understand which factors most affect implementation.

INTRODUCTION

The experiences of healthcare organizations that have undertaken quality improvement initiatives have yielded very mixed results, highlighting how difficult it is to achieve sustainable, improved outcomes.1–2 Many initiatives to implement best practices have faltered during the implementation phase. It is increasingly apparent that implementation components and contextual factors influence providers’ ability to translate interventions into the clinical setting.3–4

Multiple factors can challenge efforts of organizations to make sustainable changes in healthcare practices, including not only the interventions themselves but also the implementation actions taken and external forces within which they operate. When assessing quality improvement initiatives, greater attention needs to be paid to the actions required to make improvements, and to situational factors that can affect those actions.5 Such information can be used (1) to learn how implementation actions themselves can be improved in the future, and (2) to analyze effects of the implementation interventions on measures of resulting outcomes.

In this paper, we describe the development, application, and evaluation of an Implementation Assessment Tool used as part of a two-state quality improvement collaborative for the prevention of ventilator-associated pneumonia (VAP). This tool was used to collect data on the actions implemented by teams participating in the collaborative and the contextual factors that may have influenced their implementation efforts. As part of this assessment, we make a clear distinction between these “implementation components” and “contextual factors”.6–7

The collaborative, called the Comprehensive Unit-based Safety Program (CUSP) to Eliminate Ventilator-Associated Pneumonia (VAP), was funded by the National Institutes of Health (NIH) and the Agency for Healthcare Research and Quality (AHRQ). Forty-three Units from Maryland and Pennsylvania hospitals are participating in this 3-year collaborative cohort, from August 2012 to December 2015. Units included intensive care units (ICUs), adult long-term care facilities, and rehabilitation facilities. The hospitals included both teaching and non-teaching facilities located across a range of urban, suburban, and rural areas. Participation in the collaborative and in the interviews was voluntary, and no financial support was provided for participation.

The VAP prevention initiative is a multifaceted intervention with two components, a VAP intervention and CUSP. The VAP intervention was based on a conceptual model to increase caregivers’ use of 6 evidence-based therapies for the prevention of VAP: maintaining elevation of the head of the bed to ≥30 degrees, performing oral care 6 times daily, using chlorhexidine 2 times daily while performing oral care, using subglottic suctioning endotracheal tubes for patients ventilated >72 hours, using spontaneous awakening trials (sedation vacation), and using spontaneous breathing trials.8–9

CUSP is a 5-step iterative and validated process to improve safety culture.10–13 CUSP focuses on the intrinsic versus extrinsic motivation of front-line staff, provides tools to help teams investigate when something goes wrong in care or there is an infection, and educates staff on why that is important. CUSP is designed to tap into the wisdom and experience of front-line staff and to create partnerships with senior executives who support staff in their efforts to improve patient safety and quality of care. Participating teams were trained on the VAP intervention and CUSP implementation through monthly conference calls and coaching by members of the research team, including the Armstrong Institute for Patient Safety and Quality, Hospital and Healthsystem Association of Pennsylvania, and Maryland Hospital Association.

METHODS

Implementation Assessment Tool Development

The Implementation Assessment Tool is an interview protocol developed to collect data via telephone interviews with the team leaders of each of the units participating in the VAP prevention collaborative. Results from these interviews, reported here, describe the early implementation progress and experiences of the participating units.

We drew upon three key sources to develop the contents of the Implementation Assessment Tool. The first was the content of CUSP as applied in this VAP prevention collaborative, which was the primary topic of interest for implementation experiences. The other two sources were the contents of the Team Checkup Tool (TCT),14 developed by Johns Hopkins researchers in 2005, and a success factor survey developed by RAND researchers in 2006.15–17 These two instruments addressed similar sets of factors that are well documented in the literature as influencing experiences in implementing quality improvement initiatives.

We used an iterative process to develop the contents of the interview protocol, drawing from these three sources. Questions were developed to address both implementation components and contextual factors relevant to implementation of VAP interventions in hospital ICUs. Some questions were designed to gather quantitative data, which we used directly in descriptive analyses and other statistical modeling. Others were designed to collect qualitative data, which we used to capture the experiences reported by the team leaders. In this paper we report results based on the quantitative data collected.

A draft of the written protocol was tested by interviewing a small number of team leaders and getting feedback from those interviews. Revisions were made in response to lessons from this test before extending the interviews to the full number of team leaders.

The Johns Hopkins University School of Medicine Institutional Review Board reviewed and approved this research. Informed consent was obtained from participants at the onset of interviews.

Measures Defined

The quantitative measures collected in the Implementation Assessment Tool consist of 33 items grouped into 4 composite measures (Table 1). Three of the composite measures address implementation components: training on patient safety, implementation of CUSP tools, and leadership commitment and support. The fourth composite measure addresses barriers to progress as key contextual factors.

Table 1:

Four Quantitative Measures of the Implementation Assessment Tool

| Measure | Interview Question | Response Options |
|---|---|---|
| 1. Number of patient safety training actions taken by the Units | (a) Since you began participating in the project, how many of your staff have viewed a video or presentation on the science of safety? (b) Is a patient safety presentation now part of new staff orientation for your unit? | (a) None/few, less than one half, one half or more, almost all/all (1–4 scale); (b) Yes or No responses |
| 2. Implementation of the CUSP Tools by the Units | What CUSP tools are you implementing? | Yes or No responses for each tool |
| 3. Number of leadership support actions taken by the organization | Is the organization leadership taking the following steps to reinforce its support for the work? | Yes or No responses for each action |
| 4. Reported barriers to progress | In the past six months, how often did each of the following factors slow your CUSP team’s progress in implementing the CUSP and VAP interventions? | Barriers were each scored on a 1–4 scale (1 = Never/Rarely to 4 = Almost Always) |

Measure 1: Patient safety training actions.

This composite measure consists of the sum of dichotomous responses to two interview items. Responses to the first item (scale of 1–4) were re-coded as dichotomous responses where 1= “almost all/all” response and 0 = any other response. The second item had yes/no responses, coded yes=1 and no=0. The two dichotomous variables were summed, yielding a response range from 0 to 2.

Measure 2: Implementation of the CUSP tools.

This composite measure aggregates the yes/no responses to eight items about use of specific CUSP tools, where yes=1 and no=0. It is measured as the sum of responses to these items, yielding a response range from 0 to 8.

Measure 3: Leadership support actions.

This composite measure aggregates the yes/no responses to six items about leadership support actions reported by the Units, where yes=1 and no=0. It is measured as the sum of responses to these items, yielding a response range of 0 to 6.
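The scoring rules for Measures 1 to 3 can be illustrated with a minimal Python sketch. The function and parameter names are hypothetical and are not part of the study's instruments; only the coding rules (top-box recoding of the 1–4 item, yes=1/no=0, and summation) come from the descriptions above.

```python
from typing import Sequence


def score_training_actions(staff_viewed_scale: int, in_orientation: bool) -> int:
    """Measure 1: sum of two dichotomous items, range 0 to 2.

    staff_viewed_scale is the 1-4 response; only 4 ("almost all/all") counts as 1.
    in_orientation is the yes/no item (yes=1, no=0).
    """
    if staff_viewed_scale not in (1, 2, 3, 4):
        raise ValueError("scale response must be 1, 2, 3, or 4")
    return int(staff_viewed_scale == 4) + int(in_orientation)


def score_yes_no_items(responses: Sequence[bool], expected_items: int) -> int:
    """Measures 2 and 3: count of 'yes' responses across a fixed set of items."""
    if len(responses) != expected_items:
        raise ValueError(f"expected {expected_items} responses, got {len(responses)}")
    return sum(1 for r in responses if r)


# Illustrative (made-up) responses for one Unit.
training = score_training_actions(staff_viewed_scale=3, in_orientation=True)
cusp_tools = score_yes_no_items([True, False, False, True, True, False, False, False], 8)
leadership = score_yes_no_items([True, True, False, True, False, True], 6)
print(training, cusp_tools, leadership)
```

Checking the item count before summing keeps a missing response from being silently scored as "no"; how the study handled missing items is not stated, so that check is an assumption of this sketch.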

Measure 4: Barriers to progress.

Responses for each of 18 possible barriers that affected Units’ implementation progress were obtained using Likert-scale items for each barrier, with response options of never/rarely, occasionally, frequently, or almost all/all (scale of 1–4). To identify important barriers, responses for each barrier were re-coded to a dichotomous variable where 1 = “almost all/all” response and 0 = any other response. These re-coded data were used to develop three measures. The first was the number of important barriers overall, and the second was the number of important barriers by barrier category. These composite measures were calculated as the sum of the 1/0 values for individual barriers (across all the barriers and across barriers within each category). The third measure was the rank ordering of each individual barrier based on the percentage of Units rating it as an important barrier (coded 1 on the dichotomous variable), sorted in descending order.
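The three barrier measures can be sketched as follows. This is an illustration under stated assumptions: only four of the 18 barriers are shown, the barrier keys are hypothetical identifiers, and the assignment of barriers to groups is assumed for the example because the text does not list which barriers belong to which group.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical barrier-to-group mapping (illustrative subset, not the study's list).
BARRIER_GROUP: Dict[str, str] = {
    "competing_priorities": "workload_and_time",
    "not_enough_time": "workload_and_time",
    "confusion_on_cusp": "team_skills_and_cohesion",
    "leader_support_exec": "leadership_support",
}


def is_important(response: int) -> int:
    """Re-code a 1-4 response: 1 if the top category (4) was chosen, else 0."""
    return 1 if response == 4 else 0


def unit_barrier_counts(responses: Dict[str, int]) -> Tuple[int, Dict[str, int]]:
    """Return (number of important barriers overall, number per barrier group)."""
    by_group: Counter = Counter()
    for barrier, response in responses.items():
        by_group[BARRIER_GROUP[barrier]] += is_important(response)
    return sum(by_group.values()), dict(by_group)


def rank_barriers(units: List[Dict[str, int]]) -> List[Tuple[str, float]]:
    """Percentage of Units rating each barrier as important, in descending order."""
    n = len(units)
    pct = {
        barrier: 100.0 * sum(is_important(u[barrier]) for u in units) / n
        for barrier in BARRIER_GROUP
    }
    return sorted(pct.items(), key=lambda kv: kv[1], reverse=True)


# Two made-up Units for demonstration.
units = [
    {"competing_priorities": 4, "not_enough_time": 4, "confusion_on_cusp": 2, "leader_support_exec": 1},
    {"competing_priorities": 4, "not_enough_time": 3, "confusion_on_cusp": 4, "leader_support_exec": 1},
]
print(unit_barrier_counts(units[0]))
print(rank_barriers(units))
```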

Data Collection

Interviews using the Implementation Assessment Tool were conducted with the team leaders of the participating Units from January to July 2013. The team leaders, who were the interviewees of interest, received a copy of the interview protocol for review in advance of the interviews. We allowed them to include other team members in the interview if they wished, but few decided to do so. Two members of the research team took part in the telephone interview with each quality improvement team leader: one conducted the interview, and both took notes. The interviews took an average of one hour to conduct.

Data Analysis

Descriptive analyses were performed for each of the four composite measures as well as for the individual items within each of the measures. These included estimated means, medians, and standard deviations for each measure. Distributions of responses also were developed. Comparisons were made for Units in each of the two states in the collaborative (States 1 and 2 for presenting results). Alternative approaches were explored for presenting the data for each composite variable, to identify the most effective way to do so for different uses of the data. Aggregated results were presented to the participating teams via a webinar presentation, and were available for download, to allow them to compare their experiences with those of other teams.
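As a rough sketch of the descriptive analyses described above, the following Python code computes the mean, median, standard deviation, and percentage distribution of a composite score, overall and by state. The data are made up, the function names are hypothetical, and the use of the sample standard deviation is an assumption because the text does not say which form was used.

```python
from collections import Counter, defaultdict
from statistics import mean, median, stdev
from typing import Dict, List, Tuple


def describe(scores: List[int]) -> Dict[str, object]:
    """Summary statistics and percentage distribution for one composite measure."""
    n = len(scores)
    counts = Counter(scores)
    return {
        "n": n,
        "mean": mean(scores),
        "median": median(scores),
        # Sample standard deviation (assumption); undefined for a single value.
        "sd": stdev(scores) if n > 1 else 0.0,
        "distribution_pct": {k: 100.0 * counts[k] / n for k in sorted(counts)},
    }


def describe_by_state(records: List[Tuple[str, int]]) -> Dict[str, Dict[str, object]]:
    """Apply describe() to all Units together and to each state separately."""
    by_state: Dict[str, List[int]] = defaultdict(list)
    for state, score in records:
        by_state[state].append(score)
    result = {"Overall": describe([score for _, score in records])}
    for state in sorted(by_state):
        result[state] = describe(by_state[state])
    return result


# Made-up Measure 1 scores (0-2) for a handful of Units.
records = [("State 1", 2), ("State 1", 2), ("State 1", 1), ("State 2", 0), ("State 2", 1), ("State 2", 1)]
for group, summary in describe_by_state(records).items():
    print(group, summary)
```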

RESULTS

A total of 43 interviews were conducted; 23 interviews in hospitals in State 1 and 20 interviews in hospitals in State 2. The backgrounds of the leads varied, including nurses (most respondents), nurse managers, CUSP team leaders, infection preventionists, respiratory therapists and physicians.

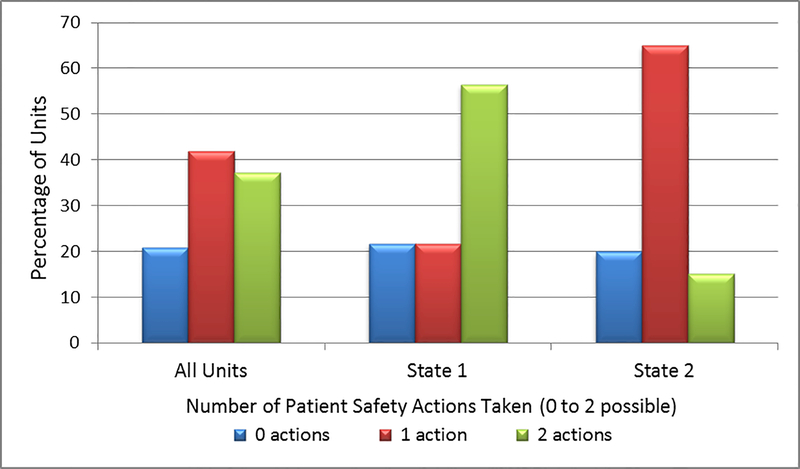

For the composite variable of Patient Safety Training Actions Taken, the 43 Units took an average of 1.2 of the 2 possible patient safety training actions, and 37.2% of the Units took both actions (Table 2). Across both states, 74.4% of the Units used the video for new staff orientation, whereas 41.9% had shown it to all or almost all of their existing staff. At the other extreme, 20.9% of the Units took neither training action. By state, 56.5% of the Units in State 1 took both actions, compared with 15.0% of the State 2 Units (Figure 1).

Table 2:

Implementation Components: Summary Results for Measures 1–3

| Composite Measures | Responses (n=43) |

|---|---|

| Measure 1: Number of Patient Safety Training Actions Taken by the Units | |

| Mean number of actions (composite, possible number = 2) | 1.2 |

| Standard deviation | 0.8 |

| Distribution of Actions (composite) (%) | |

| No action | 20.9 |

| One action | 41.9 |

| Two actions | 37.2 |

| Individual items: Percentage of Units taking each action | |

| All/almost all staff view video on safety (4 on a 1–4 scale) | 41.9 |

| Video used for new staff orientation (Y/N) | 74.4 |

| Measure 2: Implementation of CUSP Tools | |

| Mean number used (composite, possible number = 8) | 3.0 |

| Standard deviation | 1.8 |

| Individual items: Units using each CUSP tool (%) | |

| Daily Goals checklist | 32.6 |

| Culture checkup tool | 27.9 |

| Learning from Defects tool | 23.3 |

| Shadowing | 23.3 |

| Morning briefing | 60.5 |

| Barrier identification and mitigation | 20.9 |

| Observing rounds (fly on the wall) | 60.5 |

| Structured communications | 48.8 |

| Measure 3: Number of Leadership Support Actions Taken | |

| Mean # of actions (possible number = 6) | 3.5 |

| Standard deviation | 1.6 |

| Individual items: Units reporting each leadership support action (%) | |

| Ensures employee training on the science of safety | 65.1 |

| Meets with the team on the unit at least monthly | 41.9 |

| Makes VAP prevention an organization-wide goal and in strategic plan | 74.4 |

| Fosters learning by disseminating CUSP teams successes and lessons | 60.5 |

| Provides protected time for VAP prevention team leaders | 32.6 |

| Reviews VAP rates at least quarterly at board meetings | 74.4 |
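Because each composite in Table 2 is a sum of 0/1 items, its mean should equal the sum of the item-level proportions. The short Python check below applies that identity to the percentages reported in Table 2; the variable and function names are illustrative, and small differences are expected because the published percentages are rounded.

```python
from typing import Dict, List

# Item-level percentages copied from Table 2.
TABLE2_ITEM_PCT: Dict[str, List[float]] = {
    "training_actions": [41.9, 74.4],
    "cusp_tools": [32.6, 27.9, 23.3, 23.3, 60.5, 20.9, 60.5, 48.8],
    "leadership_actions": [65.1, 41.9, 74.4, 60.5, 32.6, 74.4],
}

# Composite means as reported in Table 2.
REPORTED_MEAN: Dict[str, float] = {
    "training_actions": 1.2,
    "cusp_tools": 3.0,
    "leadership_actions": 3.5,
}


def implied_mean(item_pcts: List[float]) -> float:
    """Mean of a sum of 0/1 items equals the sum of the item proportions."""
    return sum(item_pcts) / 100.0


def mean_from_distribution(dist_pct: Dict[int, float]) -> float:
    """Mean of a composite computed from its percentage distribution."""
    return sum(score * pct for score, pct in dist_pct.items()) / 100.0


for name, pcts in TABLE2_ITEM_PCT.items():
    print(f"{name}: implied {implied_mean(pcts):.2f}, reported {REPORTED_MEAN[name]}")

# Distribution of Measure 1 from Table 2 (no action, one action, two actions).
print(f"{mean_from_distribution({0: 20.9, 1: 41.9, 2: 37.2}):.2f}")
```

For all three measures the implied mean rounds to the reported value, and the Measure 1 distribution gives the same mean as its two item percentages.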

Figure 1.

Number of Patient Safety Training Actions Taken by the Units, Overall and by State (2 possible actions)

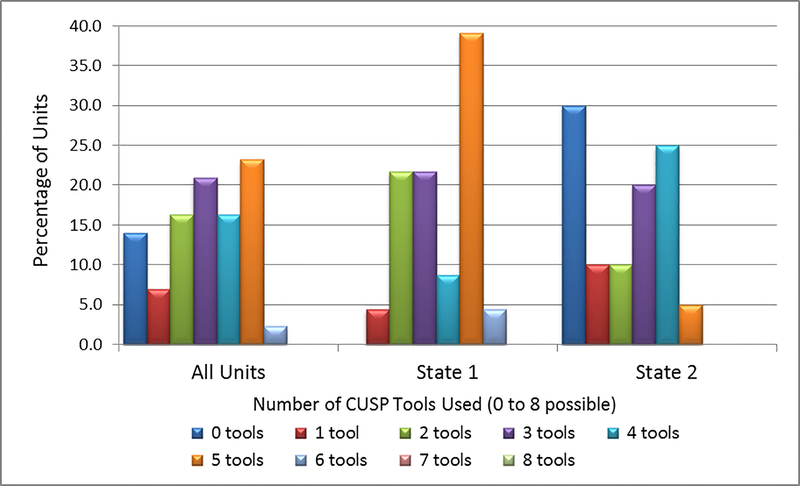

For the composite variable of Number of CUSP Tools Used, the 43 Units used a mean of 3.0 of the 8 available tools (Table 2), and the number of tools used per Unit ranged from 0 to 6. State 1 and State 2 Units differed substantially in their distributions (Figure 2). While 45% of the Units in State 1 reported using 5 or more tools, only 5% of the Units in State 2 did so. Conversely, none of the State 1 Units and 30% of the State 2 Units reported using no tools at all (Figure 2).

Figure 2. Percentage Distribution of ICU Units by the Number of CUSP Tools Used, Overall and by State (8 possible tools).

Note: No Units used more than 6 of the 8 available CUSP tools.

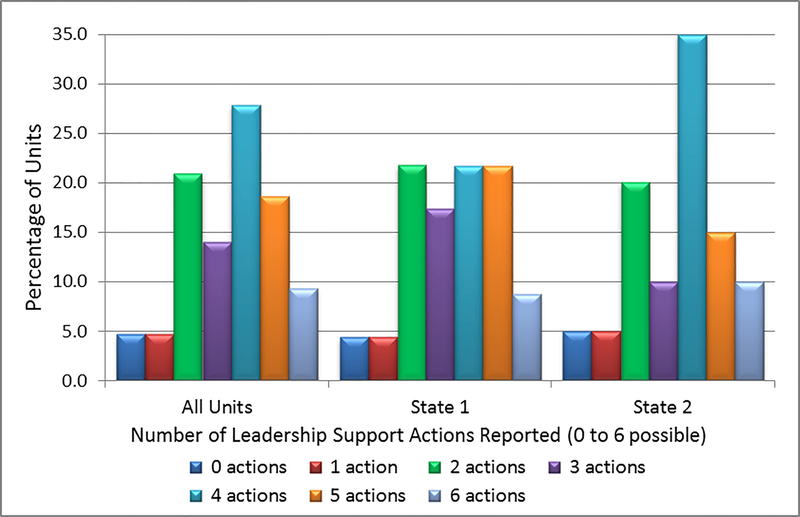

For the composite variable of Number of Leadership Support Actions Taken, an average of 3.5 leadership actions out of 6 possible actions was taken, as measured by the “strongest support” measure (Table 2). The Units varied widely in the number of actions they took, as well as in which specific leadership support actions they were likely to use. The distribution in the number of actions taken differed for the two states (Figure 3). A more even distribution is observed in State 1 Units, whereas the distribution of State 2 units showed a clear peak at 4 actions taken.

Figure 3.

Percentage Distribution of Units by the Number of Leadership Support Actions Taken, Overall and by State (6 possible actions)

For the composite variable of Barriers to Progress Encountered, out of the 18 potential barriers listed, the 43 Units identified a mean of 3.3 barriers that most frequently affected their implementation progress (Table 3). “Workload and time issues” were the biggest barriers encountered, with an average of 2.1 barriers identified, whereas fewer than one barrier was identified, on average, for each of the other three groups.

Table 3:

Contextual Factors: Number of Barriers by Group Reported by Units

| All Units (n=43) | Number of Possible Barriers | Barriers Experienced – Mean Number (Standard Deviation) |

|---|---|---|

| All Barriers | 18 | 3.28 (2.87) |

| Barrier group: | | |

| Leadership support issues | 4 | 0.35 (0.78) |

| Team skills and cohesion issues | 6 | 0.58 (1.03) |

| Stakeholder push-back issues | 3 | 0.28 (0.59) |

| Workload and time issues | 5 | 2.07 (1.53) |

Overall, the State 2 Units reported being affected by more barriers than did the State 1 Units. The Units in the two states were found to share issues with some barriers, and they differed on other barriers (Table 4). The barrier of “competing priorities” was the most frequently reported barrier by Units in both states, although the percentages reporting it differed by state (47.8% in State 1 and 70% in State 2). The barriers of “data collection burden” and “not enough time” also were in the top 5 barriers for Units in both states, and “data system problems,” “staff turnover on unit,” and “lack of physician leadership support” were in the top 7 for both states. The states differed markedly for some other barriers: “confusion on CUSP” was a top-five barrier for State 2 Units, but a low priority for State 1 Units, while “executive leadership support” was a top-five barrier for State 1 units but a low priority for State 2 Units.

Table 4:

Contextual Factors: Top Barriers Reported by Units, Overall and by State

| All Units (n=43) | | State 1 (n=23) | | State 2 (n=20) | |

|---|---|---|---|---|---|

| Barrier | Percent | Barrier | Percent | Barrier | Percent |

| Competing priorities | 58.1 | Competing priorities | 47.8 | Competing priorities | 70.0 |

| Data collection burden | 48.8 | Data collection burden | 43.5 | Data collection burden | 55.0 |

| Not enough time | 39.5 | Not enough time | 39.1 | Staff turnover on unit | 45.0 |

| Data system problems | 32.6 | Data system problems | 26.1 | Confusion on CUSP | 40.0 |

| Staff turnover on unit | 27.9 | Leader support - exec | 13.0 | Not enough time | 40.0 |

| Leader support - MDs | 18.6 | Leader support - MDs | 13.0 | Data system problems | 40.0 |

| Confusion on CUSP | 18.6 | Staff turnover on unit | 13.0 | Leader support - MDs | 25.0 |

| Poor buy-in- MD staff | 16.3 | Knowledge of evidence | 8.7 | Turnover CUSP team | 25.0 |

| Turnover CUSP team | 14.0 | Poor buy-in- MD staff | 8.7 | Poor buy-in- MD staff | 25.0 |

| Leader support - exec | 9.3 | Lack team agree on goals | 4.3 | Lack team agree on goals | 15.0 |

| Lack team agree on goals | 9.3 | Turnover CUSP team | 4.3 | Poor team teamwork | 15.0 |

| Poor buy-in - nurse staff | 9.3 | Poor buy-in - nurse staff | 4.3 | Poor buy-in - nurse staff | 15.0 |

| Poor team teamwork | 7.0 | Leader support - nurses | 0.0 | Leader support - nurses | 10.0 |

| Knowledge of evidence | 4.7 | Little autonomy | 0.0 | Lack QI skills | 10.0 |

| Leader support - nurses | 4.7 | Lack QI skills | 0.0 | Leader support - exec | 5.0 |

| Lack QI skills | 4.7 | Confusion on CUSP | 0.0 | Little autonomy | 5.0 |

| Little autonomy | 2.3 | Poor team teamwork | 0.0 | Poor buy-in - other staff | 5.0 |

| Poor buy-in - other staff | 2.3 | Poor buy-in - other staff | 0.0 | Knowledge of evidence | 0.0 |
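The "All Units" percentages in Table 4 can be reproduced from the state-level percentages by converting each to a count of Units and pooling. The Python sketch below does this for several barriers; the function names are illustrative, and recovering counts by rounding assumes every Unit answered every barrier item, which the text does not state explicitly.

```python
from typing import Dict, Tuple

N_STATE1, N_STATE2 = 23, 20  # Units interviewed in each state

# (State 1 %, State 2 %) copied from Table 4.
STATE_PCT: Dict[str, Tuple[float, float]] = {
    "Competing priorities": (47.8, 70.0),
    "Data collection burden": (43.5, 55.0),
    "Not enough time": (39.1, 40.0),
    "Staff turnover on unit": (13.0, 45.0),
    "Confusion on CUSP": (0.0, 40.0),
    "Leader support - exec": (13.0, 5.0),
}


def units_reporting(pct: float, n_units: int) -> int:
    """Recover the number of Units from a rounded percentage."""
    return round(pct * n_units / 100.0)


def pooled_percent(pct_state1: float, pct_state2: float) -> float:
    """Percentage of all Units reporting a barrier, pooled across both states."""
    count = units_reporting(pct_state1, N_STATE1) + units_reporting(pct_state2, N_STATE2)
    return 100.0 * count / (N_STATE1 + N_STATE2)


for barrier, (p1, p2) in STATE_PCT.items():
    print(f"{barrier}: {pooled_percent(p1, p2):.1f}%")
```

The pooled value is a count-weighted average, so it sits closer to the State 1 figure than a simple average of the two percentages would, because State 1 contributed more Units.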

DISCUSSION

The first step for improving healthcare outcomes is the documentation, through clinical research, of best practices for various aspects of care. The next step is the translation from research to practice – to cross the bridge to health care delivery in the field. Many efforts by health care organizations to implement best practices have faltered, highlighting how difficult it is to achieve sustainable improvements in outcomes. With awareness of this issue, we pursued development and application of the Implementation Assessment Tool to assess the progress of participating Units as they implemented a multifaceted intervention for VAP prevention. We had two aims for this work: to learn how implementation actions themselves can be improved, and to analyze effects of the implementation interventions on measures of resulting outcomes.

What we learned was that, early in the implementation of the VAP prevention initiative, many units had made only moderate progress overall in taking many of the CUSP actions known to support their improvement processes, and they varied widely in the extent of the efforts taken. It was clear that more work was needed, which led our project team to modify the coaching provided to the participating Unit teams to reinforce training on the principles and practices of CUSP and other weak areas identified from the interviews.

For the contextual factors we examined, the Unit team leaders identified a relatively small number of barriers as having important negative effects on their implementation progress. Many of them shared some of the same barriers as being important, but they also identified a variety of other barriers, reflecting the unique circumstances under which each Unit operated.

These quantitative results were reinforced by the qualitative data collected in the interviews, highlighting that the Units lacked a cogent understanding of what is involved in CUSP and what actions they should take to create an environment sensitive to patient safety. These findings showed the value of having a combination of quantitative and qualitative data on implementation experiences, where the qualitative data provide more nuanced information that can be used on its own merits as well as to help interpret the quantitative data.

As we communicated the interview results to the participating Units, they learned that they shared many of the same implementation successes and challenges, which also reinforced the need for additional training. To that end, we could work together to advance the science of improved patient care.

A key success in using the Implementation Assessment Tool was the positive responses by team leaders to the opportunity to share during the telephone interviews. We observed a level of energy and enthusiasm by the team leaders that was not apparent on many of the group conference coaching calls conducted during the collaborative. The interviewees welcomed having this forum to communicate successes and barriers, and they appreciated the ability to offer feedback on the tools and provide input that may contribute to evolution of the interventions and the project as a whole. Due to federal restrictions, we could not hold face-to-face collaborative meetings, making it difficult to build synergy among participants; these interviews helped to build that sense of community.18

We note, however, several challenges that are inherent to collecting data on implementation experiences, largely because it is resource-intensive to do so. From past experience, we knew that asking participating entities to complete information logs yields high rates of incomplete data because of non-compliance.8,19 We opted to use an interview approach instead, which avoided that problem but also required substantial researcher time to conduct the interviews and enter data. Using the interview protocol developed, we found that the interviews lasted an hour or longer.

Additional limitations included the question of how to time the interviews relative to implementation status, the need to validate the predictive power of the implementation measures with respect to effects on outcomes, and the need to conduct additional interviews later in the collaborative to track changes in implementation status over time. To be fully comparable, the data should be collected from all participants at approximately the same time relative to their start of improvement work, which we were not able to achieve. We have not yet tested the predictive power of these measures because the two-state project is still generating the outcome data. We plan to perform those analyses when we have complete outcome data, which also will inform future modifications to the Implementation Assessment Tool. We are also conducting a second set of interviews so that we can compare interview data at two points in time during implementation.

The results of these interviews are currently being used to modify and improve the approach for the two-state VAP prevention collaborative, as well as to inform the AHRQ-funded national initiative to eliminate VAP.20 These modifications include changes addressing the limitations described here, with the goal of shortening interviews while preserving the richness of data generated in the two-state project.

These assessments provide important new knowledge regarding the implementation science of quality improvement projects, both for feedback during implementation and to better understand which implementation factors most affect resulting healthcare outcomes. The lessons learned can likely be generalized to other efforts focused on eliminating healthcare-associated infections.

ACKNOWLEDGMENTS

We thank Christine G. Holzmueller, BLA, for her thoughtful review and assistance in editing the manuscript. We acknowledge the tremendous efforts of the state hospital associations, state lead partners, and participating teams in Maryland and Pennsylvania hospitals. Their leadership and courage in this innovative effort reflect an unrelenting passion and dedication to improve quality and safety for their patients. This publication is derived from work supported under a contract with the Agency for Healthcare Research and Quality.

Financial support. This research was supported by a contract with the Agency for Healthcare Research and Quality (RFTO #9) and the National Institutes of Health (Contract # HHSA2902010000271).

Footnotes

Potential conflicts of interest. All authors report no conflicts of interest relevant to this article. Address correspondence to Sean M. Berenholtz, MD, MHS, Armstrong Institute for Patient Safety and Quality, Johns Hopkins University, 750 East Pratt Street, 15th Floor, Baltimore, MD 21202 (sberenho@jhmi.edu).

Contributor Information

Kisha Jezel Ali, Armstrong Institute for Patient Safety and Quality, Johns Hopkins Medicine, Baltimore, Maryland, USA.

Donna O. Farley, Adjunct Staff with RAND Corporation; McMinnville, Oregon, USA.

Kathleen Speck, Armstrong Institute for Patient Safety and Quality, Johns Hopkins Medicine, Baltimore, Maryland, USA.

Mary Catanzaro, Hospital and Healthsystem Association of Pennsylvania, Harrisburg, Pennsylvania, USA.

Karol G. Wicker, Maryland Hospital Association, Elkridge, Maryland, USA.

Sean M. Berenholtz, Armstrong Institute for Patient Safety and Quality, Johns Hopkins Medicine, Baltimore, Maryland, USA.

REFERENCES

- 1. Hughes RG. Tools and Strategies for Quality Improvement and Patient Safety. In: Hughes RG, editor. Patient Safety and Quality: An Evidence-Based Handbook for Nurses. Rockville (MD): Agency for Healthcare Research and Quality (US); 2008. Apr. Chapter 44.

- 2. Wiltsey SS, Kimberly J, Cook N, Calloway A, Castro F, Charns M. The sustainability of new programs and innovations: a review of the empirical literature and recommendations for future research. Implement Sci 2012; 7:17.

- 3. Marsteller JA, Sexton JB, Hsu YJ, Hsaio CJ, Pronovost PJ, Thompson D. A multicenter phased cluster-randomized controlled trial to reduce central line-associated blood-stream infections. [published online ahead of print August 10, 2012] Critical Care Med.

- 4. Helfrich CD, Damschroder LJ, Hagedorn HJ, et al. A critical synthesis of literature on the promoting action on research implementation in health services (PARIHS) framework. Implement Sci 2010; 5:82.

- 5. Schyve PM. Leadership in Healthcare Organizations: A guide to Joint Commission Leadership Standards. California: Governance Institute; 2009.

- 6. Merriam Webster Dictionary. Implement. Available at: http://www.merriam-webster.com/dictionary/implement. Accessed November 1, 2013.

- 7. Merriam Webster Dictionary. Context. Available at: http://www.merriam-webster.com/dictionary/context. Accessed November 1, 2013.

- 8. Berenholtz SM, Pham JC, Thompson DA, et al. An Intervention to Reduce Ventilator-Associated Pneumonia in the ICU. Infect Control Hosp Epidemiol 2011; 32(4): 305–314.

- 9. Pronovost PJ, Berenholtz SM, Needham DM. Translating evidence into practice: a model for large scale knowledge translation. BMJ 2008; 337:963–5.

- 10. Using a comprehensive unit-based safety program to prevent healthcare-associated infections. Agency for Healthcare Research and Quality. http://www.ahrq.gov/professionals/quality-patient-safety/cusp/using-cusp-prevention/index.html. Updated 2013. Accessed Aug 16, 2013.

- 11. Sexton JB, Berenholtz SM, Goeschel CA. Assessing and improving safety climate in a large cohort of intensive care units. Crit Care Med 2011; 39(5): 934–9.

- 12. Timmel J, Kent PS, Holzmueller CG, Paine L, Schulick RD, Pronovost PJ. Impact of the Comprehensive Unit-Based Safety Program (CUSP) on Safety Culture in a Surgical Inpatient Unit. Jt Comm J Qual Patient Saf 2010; 36(6): 252–260.

- 13. Weaver SJ, Lubomski LH, Wilson RF, Pfoh ER, Martinez KA, Dy SM. Promoting a Culture of Safety as a Patient Safety Strategy: A Systematic Review. Ann of Internal Med 2013; 158: 369–374.

- 14. Lubomski LH, Marsteller JA, Hsu YJ, et al. The team checkup tool: evaluating QI team activities and giving feedback to senior leaders. Jt Comm J Qual Patient Saf 2008; 34 (10): 619–23, 561.

- 15. Farley DO, Damberg CL, Ridgely MS, et al. Assessment of the AHRQ Patient Safety Initiative: Final Report, Evaluation Report IV (CA): RAND Corporation; 2008.

- 16. Morganti KG, Lovejoy S, Haviland AM, Haas AC, Farley DO. Measuring Success for Health Care Quality Improvement Interventions. Medical Care 2012; 50(12): 1086–92.

- 17. Morganti KG, Lovejoy S, Beckjord EB, Haviland AM, Haas AC, Farley DO. A Retrospective Evaluation of the Perfecting Patient Care University Training Program for Health Care Organizations. Am J Med Qual 2014; 29(1): 30–8.

- 18. Aveling E, Martin GP, Armstrong N, Banerjee J, Dixon-Woods M. Quality improvement through clinical communities: Eight lessons for practice. J Health Organ Manage 2012; 26:158–74.

- 19. Berenholtz SM, Lubomski LH, Weeks K, et al. Eliminating Central Line-Associated Bloodstream Infections: A National Patient Safety Imperative. Infect Control Hosp Epidemiol 2014; 35(1):56–62.

- 20. Johns Hopkins Awarded $7.3 Million to Prevent Ventilator-Associated Pneumonia. Johns Hopkins Medicine Web Site. http://www.hopkinsmedicine.org/news/media/releases/johns_hopkins_awarded_73_million_to_prevent_ventilator_associated_pneumonia. Updated September 24, 2013. Accessed January 30, 2014.