Abstract

For humans and for non-human primates heart rate is a reliable indicator of an individual’s current physiological state, with applications ranging from health checks to experimental studies of cognitive and emotional state. In humans, changes in the optical properties of the skin tissue correlated with cardiac cycles (imaging photoplethysmogram, iPPG) allow non-contact estimation of heart rate by its proxy, pulse rate. Yet, there is no established simple and non-invasive technique for pulse rate measurements in awake and behaving animals. Using iPPG, we here demonstrate that pulse rate in rhesus monkeys can be accurately estimated from facial videos. We computed iPPGs from eight color facial videos of four awake head-stabilized rhesus monkeys. Pulse rate estimated from iPPGs was in good agreement with reference data from a contact pulse-oximeter: the error of pulse rate estimation was below 5% of the individual average pulse rate in 83% of the epochs; the error was below 10% for 98% of the epochs. We conclude that iPPG allows non-invasive and non-contact estimation of pulse rate in non-human primates, which is useful for physiological studies and can be used toward welfare-assessment of non-human primates in research.

Introduction

Heart rate is an important indicator of the functional status and psycho-emotional state for humans, non-human primates (NHP) [1–3] and other animals [4, 5]. A common and the most direct tool for measuring heart rate is the electrocardiogram (ECG) [6]. ECG acquisition requires application of several cutaneous electrodes, which is not always possible even for human subjects [7]. ECG acquisition in NHP is complicated by the fact that conventional ECG electrodes require shaved skin and that awake animals do not easily tolerate skin-attachments unless extensively trained to do so. Hence, ECG in NHPs is either collected from sedated animals [6, 8, 9] or by implanting a telemetry device in combination with highly invasive intracorporal sensors [10–12]. For a non-invasive ECG acquisition from non-sedated monkeys a wearable jacket can be used [1, 13, 14], but this also requires extensive training and physical contact when preparing the animal, which may affect the physiological state.

If heart rate is of interest, but not other ECG parameters, a low-cost alternative to ECG is provided by the photoplethysmogram (PPG). PPG utilizes variations of the light reflected by the skin which correlate with changes of blood volume in the microvascular bed of the skin tissue [15]. PPG allows quite accurate estimation of pulse rate [16–18]. For many applications, pulse rate is a sufficient proxy for heart rate, the latter of which can be, strictly speaking, only assessed from direct cardiac measurements, such as ECG. Conventional PPG still requires a contact sensor comprising a light source to illuminate the skin and a photodetector to measure changes in the reflected light intensity, as used for example in medical-purpose pulse oximeters.

Imaging photoplethysmogram (iPPG) has been proposed [19–21] as a remote and non-contact alternative to the conventional PPG in humans. iPPG is acquired using a video camera instead of a photodetector, under dedicated [19, 22–24] or ambient [21, 25] light. The video is usually recorded from palm or face regions [21–25].

Pilot studies have demonstrated the possibility of extracting iPPG from anesthetized animals, specifically pigs [26, 27]. Since iPPG allows easy and non-invasive estimation of pulse rate, this technique would be very useful for NHP studies. If applicable in non-sedated and behaving animals, it could, for instance, contribute to the welfare of NHP used in research. To the best of our knowledge, there have been no previous attempts to acquire iPPG from NHPs. In this paper, we demonstrate iPPG extraction from NHP facial videos and provide the first empirical evidence that the pulse rate of rhesus monkeys can be successfully estimated from iPPG.

Materials and methods

Animals and animal care

Research with non-human primates represents a small but indispensable component of neuroscience research. The scientists in this study are aware of, and committed to, the great responsibility they have in ensuring the best possible science with the least possible harm to the animals [28].

Four adult male rhesus monkeys (Macaca mulatta) participated in the study (Table 1). All animals had been previously implanted with cranial titanium or plastic “head-posts” under general anesthesia and aseptic conditions, for participating in neurophysiological experiments. The surgical procedures and purpose of these implants were described previously in detail [29, 30]. Animals were extensively trained with positive reinforcement training [31] to climb into and stay seated in a primate chair, and to have their head position stabilized via the head-post implant. This allows implant cleaning, precise recordings of gaze and neurophysiological recordings while the animals work on cognitive tasks in front of a computer screen. Here, we made opportunistic use of these situations to record facial videos in parallel. The experimental procedures were approved by the responsible regional government office (Niedersaechsisches Landesamt fuer Verbraucherschutz und Lebensmittelsicherheit (LAVES)). The animals were pair- or group-housed in facilities of the German Primate Center (DPZ) in accordance with all applicable German and European regulations. The facility provides the animals with an enriched environment (incl. a multitude of toys and wooden structures [32, 33]), natural as well as artificial light and access to outdoor space, exceeding the size requirements of European regulations. The animals’ psychological and veterinary welfare is monitored daily by the DPZ’s veterinarians, the animal facility staff and the lab’s scientists (see also [34]).

Table 1. Subjects data and illumination conditions of experimental sessions.

For illumination we used either fluorescent lamps mounted at the room ceiling (Philips Master Tl-D 58W/840, 4000K cold white) or setup halogen lamps (Philips Brilliantline 20W 12V 36D 4000K). Luminance was measured by luminance meter LS-100 (Minolta) with close-up lens No 135 aiming at the ridge of the monkey nose (settings: calibration preset; measuring mode abs.; response slow; measurement distance ≈ 55 cm).

| Animal | Sessions | Age, full years | Weight, kg | Illumination | Luminance, cd/m2 |
|---|---|---|---|---|---|
| Sun | 1, 2 | 16 | 12.0 | fluorescent | 87.5 |
| Fla | 3, 4 | 8 | 14.4 | fluorescent+daylight | 12.9 |
| Mag | 5, 6, 9, 10 | 11 | 10.6 | fluorescent+daylight | 12.8 |
| Mag | 7 | 12 | 10.1 | halogen+daylight | 4.0 |
| Lin | 8 | 10 | 9.6 | fluorescent+daylight | 12.7 |

During the video recordings, the animals were head-stabilized and sat in the primate chair in their familiar experimental room, facing the camera. Recording sessions were performed during the preparation phase for the cognitive task. Animals were not rewarded during the video recording to minimize facial motion from sucking, chewing or swallowing.

Materials and set-up

Our study of pulse rate estimation consisted of ten experimental sessions. Video acquisition parameters for Sessions 1–8 where RGB video was recorded are provided in Table 2. We have tried several acquisition parameters to see whether they affect the iPPG quality, but did not observe any considerable difference. Most methods and results are reported for the RGB video. In addition, monochrome near-infrared (NIR) video was acquired during Session 2 (simultaneously with RGB) and in Sessions 9 and 10, as a control to compare pulse rate estimation from NIR and RGB video, see Section Estimation of pulse rate from near-infrared video for details. All videos were acquired at ambient (non-dedicated) light, see Table 1 for details. We used different illumination conditions since setting a particular illumination is often not possible in everyday situations, and we wanted our video-based approach to be able to cope with this variability.

Table 2. Video acquisition parameters for Sessions 1–8.

Kinect here abbreviates the RGB sensor of a Microsoft Kinect for Xbox One; Chameleon stands for Chameleon 3 U3-13Y3C (FLIR Systems, OR, USA), with either 10-30mm Varifocal Lens H3Z1014 (Computar, NC, USA) set approximately to 15 mm, or 3.8-13mm Varifocal Lens Fujinon DV3.4x3.8SA-1 (Fujifilm, Japan) set to 13 mm. Color resolution for both cameras is 8 bit per channel. For Chameleon 3 aperture was fully opened, automatic white-balance was disabled.

| Session | Sensor | Frame rate, fps | Video resolution, pixels | Distance to monkey face, cm | Session duration, s | Epoch duration, s |
|---|---|---|---|---|---|---|
| 1 | Kinect | 30 | 1920 × 1080 | 25 | 420 | 34.1 |
| 2 | Kinect | 30 | 1920 × 1080 | 50 | 307 | 34.1 |
| 3 | Chameleon, 10-30mm | 50 | 1280 × 1024 | 30 | 275 | 20.5 |
| 4 | Chameleon, 10-30mm | 100 | 640 × 512 | 30 | 160 | 20.5 |
| 5 | Chameleon, 10-30mm | 50 | 1280 × 1024 | 30 | 204 | 20.5 |
| 6 | Chameleon, 10-30mm | 50 | 640 × 512 | 30 | 93 | 20.5 |
| 7 | Chameleon, 10-30mm | 50 | 1280 × 1024 | 30 | 500 | 20.5 |
| 8 | Chameleon, 3.8-13mm | 50 | 1280 × 1024 | 30 | 140 | 20.5 |

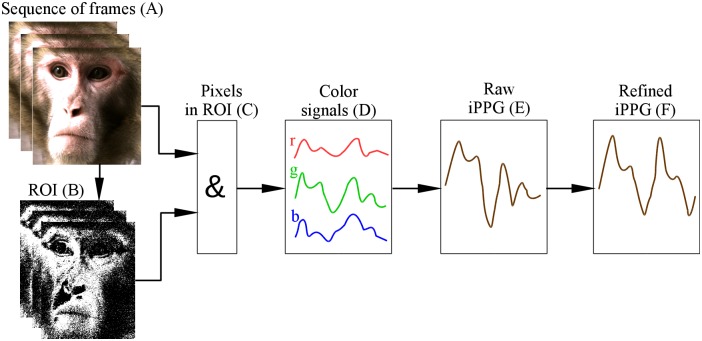

Facial videos of rhesus monkeys were processed to compute iPPG signals. Fig 1 illustrates the main steps of iPPG extraction, which we will consider below in detail: selection of pixels that might contain pulse-related information is described in Section Selecting and refining the region of interest, computation and processing of iPPG is considered in Section Extraction and processing of imaging photoplethysmogram.

Fig 1. Flowchart of iPPG extraction.

(A) We start from a sequence of RGB frames and define for them a region of interest (ROI). (B) For each frame we select ROI pixels containing pulse-related information (shown in white). (C) For these pixels we (D) compute across-pixel averages of unit-free non-calibrated values for red, green and blue color channels. (E) iPPG signal is computed as a combination of three color signals and then (F) refined by using several filters (see main text for detail).

To estimate pulse rate from iPPG (videoPR) we computed the discrete Fourier transform (DFT) of iPPG signal in sliding overlapping windows; for each window pulse rate is estimated by the frequency with highest amplitude in the heart-rate bandwidth, which is 90–300 BPM (1.5–5 Hz) for rhesus monkeys [2, 35–37]. This approach is commonly used for human iPPG [22, 24, 25], so we applied it here to make our results comparable. The length of DFT window was 1024 points for all sessions except Session 4 where a window length of 2048 points was used due to higher video frame rate. This window length corresponds to 34.1 s for Sessions 1, 2 and 20.5 s for other sessions, see Table 2. A larger window leads to poor temporal resolution, while a smaller window results in crude frequency resolution (≥ 4 BPM).
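The window-wise estimation described above can be illustrated with a short Python sketch. It is not the Matlab implementation used in the study: the function name `estimate_pulse_rate`, its parameters, and the choice of a rectangular (unweighted) DFT window are assumptions made for illustration only.

```python
import numpy as np

def estimate_pulse_rate(ippg, fps, n_window=1024, overlap=0.5,
                        band_bpm=(90.0, 300.0)):
    """Return one pulse-rate estimate (in BPM) per sliding DFT window.

    Hypothetical helper: for each window the estimate is the frequency
    with the highest DFT amplitude inside the heart-rate band.
    """
    ippg = np.asarray(ippg, dtype=float)
    step = max(1, int(n_window * (1.0 - overlap)))
    # Frequencies of the DFT bins, converted from Hz to beats per minute.
    freqs_bpm = np.fft.rfftfreq(n_window, d=1.0 / fps) * 60.0
    in_band = (freqs_bpm >= band_bpm[0]) & (freqs_bpm <= band_bpm[1])
    rates = []
    for start in range(0, len(ippg) - n_window + 1, step):
        segment = ippg[start:start + n_window]
        amplitude = np.abs(np.fft.rfft(segment - segment.mean()))
        # Ignore bins outside the heart-rate band when searching the peak.
        peak = np.argmax(np.where(in_band, amplitude, -np.inf))
        rates.append(freqs_bpm[peak])
    return np.array(rates)
```

With a 1024-point window the spacing of DFT bins is 60 · fps / 1024 BPM, that is about 1.8 BPM at 30 fps and about 2.9 BPM at 50 fps; the 2048-point window at 100 fps gives the same 2.9 BPM spacing.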

Video processing, ROI selection and computation of color signals are implemented in C++ using OpenCV (opencv.org). iPPG extraction and processing, as well as pulse rate estimation, are implemented in Matlab 2016a (www.mathworks.com).

Reference pulse rate (refPR) values were obtained by a pulse-oximeter CAPNOX (Medlab GmbH, Stutensee) using a probe attached to the monkey’s ear. This pulse-oximeter does not provide a data recording option, but it detects pulses and displays on the screen a value of pulse rate computed as an inverse of the average inter-pulse interval over the last 5 s. The pulse-oximeter measures pulse rate within the range 30–250 BPM with a documented accuracy of ± 3 BPM. The pulse-oximeter screen was recorded simultaneously with the monkeys’ facial video and pulse rate was extracted off-line from the video.

To compare videoPR with refPR, we used epochs of length equal to the length of DFT windows with 50% overlap (see Table 2). For this we averaged the refPR values over the epochs. Note that the quality of the raw iPPG signal was low compared to the contact PPG acquired by the pulse-oximeter. Therefore we were unable to directly detect pulses in the signal, which would provide better temporal resolution (see Section Pulse rate estimation for details).

Selecting and refining the region of interest

To reduce spatially uncorrelated noise and enhance the pulsatile signal, we averaged values of color channels over the ROI [21, 38]. In order to select pixels containing maximal amount of pulsatile information, we used the following three-step algorithm:

-

Step 1

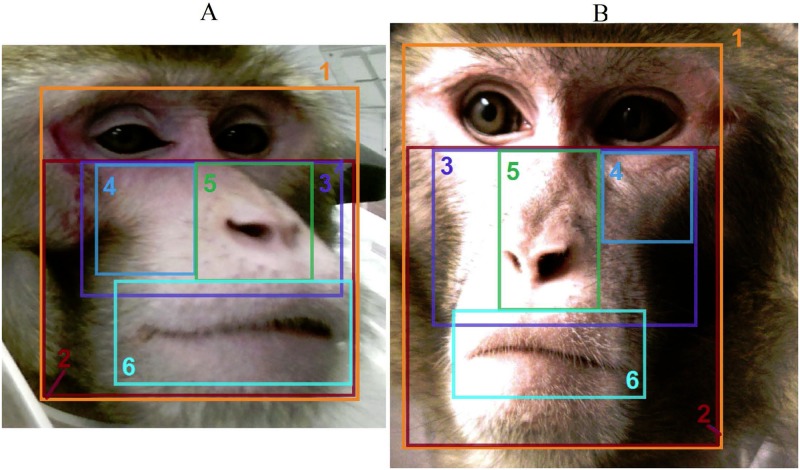

A rectangular boundary for the ROI was selected manually for the first frame of the video. Since no prominent motion was expected from a head-stabilized monkey, this boundary remained the same for the whole video. We tried six heuristic regions shown in Fig 2; the best iPPG extraction for most sessions was achieved for the nose-and-cheeks region 3 (Fig 3).

-

Step 2

Since hair, eyes, teeth, etc. provide no pulsatile information and deteriorate quality of the acquired iPPG, only skin pixels should constitute the ROI. To distinguish between skin and non-skin pixels, we transformed frames from the RGB to the HSV (Hue-Saturation-Value) color model as recommended in [39, 40]. We excluded all pixels having either H, S or V value outside of a specified range. Three HSV ranges describing monkey skin for different illumination conditions (Table 1) were selected by manual adjustment under visual control of the resulting pixel area, see Table 3.

-

Step 3In addition, for each frame we excluded all outlier pixels that differed significantly from other pixels in the ROI. The aim of this step was to eliminate pixels corrupted by artifacts. Namely, we excluded pixel (i, j) in the k-th frame if for it the value of any color channel did not satisfy the inequality

where mk and σk are the mean and standard deviation of color channel c for pixels included in the ROI of the k-th frame at steps 1-2; the coefficient 1.5 was selected based on our previous work [41].
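Steps 2 and 3 of the ROI refinement can be sketched for a single frame as follows. This is an illustrative Python sketch rather than the C++/OpenCV code used in the study; the function names `rgb_to_hsv` and `refine_roi` are hypothetical, and the frame is assumed to be already cropped to the rectangular boundary of Step 1 and scaled to floating-point values in [0, 1] (8-bit values divided by 255).

```python
import numpy as np

def rgb_to_hsv(rgb):
    """Vectorized RGB -> HSV for float arrays in [0, 1] of shape (..., 3)."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    v = rgb.max(axis=-1)
    delta = v - rgb.min(axis=-1)
    s = np.where(v > 0, delta / np.where(v > 0, v, 1.0), 0.0)
    safe = np.where(delta > 0, delta, 1.0)  # avoids division by zero
    h = np.where(v == r, ((g - b) / safe) % 6.0,
                 np.where(v == g, (b - r) / safe + 2.0,
                          (r - g) / safe + 4.0))
    h = np.where(delta > 0, h / 6.0, 0.0)   # hue in normalized units
    return np.stack([h, s, v], axis=-1)

def refine_roi(frame_rgb, hsv_low, hsv_high, n_sigma=1.5):
    """Return a boolean mask of the pixels kept after Steps 2 and 3."""
    frame = np.asarray(frame_rgb, dtype=float)
    hsv = rgb_to_hsv(frame)
    # Step 2: keep pixels whose H, S and V all lie inside the given range.
    mask = np.all((hsv >= hsv_low) & (hsv <= hsv_high), axis=-1)
    if not mask.any():
        return mask
    # Step 3: per-channel mean and SD over the pixels that passed Step 2,
    # then drop pixels deviating by n_sigma SDs or more in any channel.
    mean = frame[mask].mean(axis=0)
    std = frame[mask].std(axis=0)
    inlier = np.all(np.abs(frame - mean) < n_sigma * std, axis=-1)
    return mask & inlier
```

For example, the HSV range listed for Sessions 1 and 2 in Table 3 corresponds to `hsv_low = np.array([0.0, 0.039, 0.0])` and `hsv_high = np.array([0.167, 0.431, 0.941])`.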

Fig 2. Regions of interest.

Regions used for iPPG extraction from the video of Sessions 1 (A) and 5 (B): (1) full face, (2) face below eyes, (3) nose and cheeks, (4) cheek, (5) nose, (6) mouth with lips.

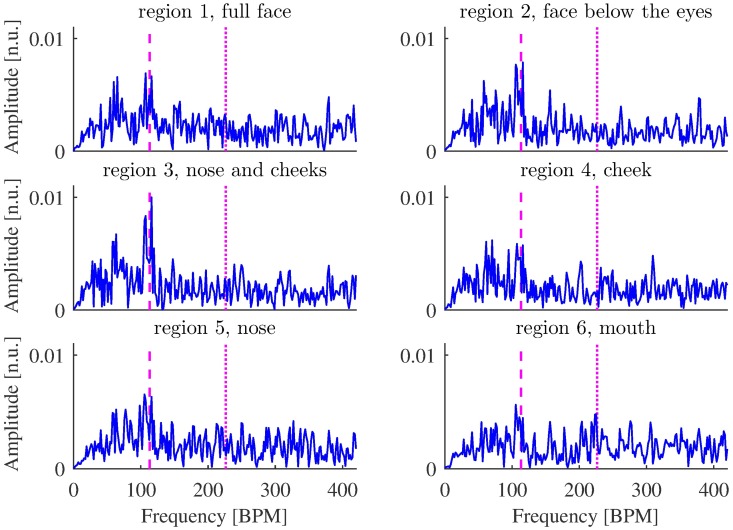

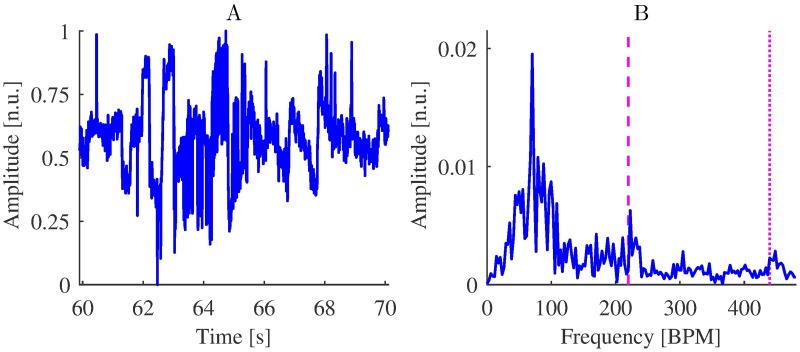

Fig 3. Amplitude spectrum densities of raw iPPG acquired from regions 1-6 (Fig 2) from video of Session 1, 315-349 s.

The frequency corresponding to the refPR value and its second harmonic are indicated by dashed and dotted lines, respectively. Although the peak corresponding to the pulse rate is most prominent for region 3, it is distinguishable for other regions as well.

Table 3. HSV ranges used for skin detection in RGB videos.

All values are provided in normalized units (n.u.).

| Session | Hue (H), n.u. | Saturation (S), n.u. | Value (V), n.u. |
|---|---|---|---|
| 1, 2 | [0.000, 0.167] | [0.039, 0.431] | [0.000, 0.941] |
| 3, 4, 5, 6, 8 | [0.000, 0.278] | [0.039, 0.569] | [0.000, 0.941] |
| 7 | [0.000, 0.278] | [0.039, 0.569] | [0.392, 0.941] |

Extraction and processing of imaging photoplethysmogram

Color signals $R_k$, $G_k$ and $B_k$ were computed as averages of the respective color channel over the ROI obtained by refining the nose-and-cheeks region 3 (Fig 3) for every frame $k$. Then, prior to iPPG extraction, we mean-centered and scaled each color signal to make it independent of the brightness level and spectrum of the light source, which is a standard procedure in iPPG analysis [22, 24]:

$$r_k = \frac{R_k - m_{k,M}}{m_{k,M}},$$

and likewise for $g_k$ and $b_k$, where $m_{k,M}$ is an $M$-point running mean of the corresponding color signal. For $k < M$ we use $m_{1,M}$. We followed [24] in taking $M$ corresponding to 1 s.

For iPPG extraction we used the “green-red difference” (G-R) method [42], which is simple and effective for computing human iPPG [24, 41]. This method is based on the assumption that the green color signal carries the maximal amount of pulsatile information, while the red color signal contains little relevant information but makes it possible to compensate for artifacts common to both color channels (see Section Imaging photoplethysmography under visible and near-infrared light for a discussion of pulsatile information provided by different colors). Thus iPPG was computed as the difference of the green and red color signals:

$$p_k = g_k - r_k,$$

where $p_k$ denotes the iPPG signal and $g_k$ and $r_k$ are the mean-centered and scaled green and red color signals, respectively.
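The normalization and the G-R combination can be sketched in Python as follows. The sketch is illustrative and not the Matlab code used in the study: the function names are hypothetical, and the running mean is assumed to be a trailing window whose first full-window value is reused for the initial frames.

```python
import numpy as np

def running_mean(x, n):
    """Trailing n-point running mean (assumed window alignment).

    The first n - 1 outputs reuse the mean of the first full window.
    """
    x = np.asarray(x, dtype=float)
    csum = np.cumsum(np.insert(x, 0, 0.0))
    full = (csum[n:] - csum[:-n]) / n  # means of all complete windows
    return np.concatenate([np.full(n - 1, full[0]), full])

def normalize(color, fps, window_s=1.0):
    """Mean-center and scale a raw color signal with a 1 s running mean."""
    color = np.asarray(color, dtype=float)
    m = running_mean(color, int(round(fps * window_s)))
    return (color - m) / m

def green_red(red, green, fps):
    """G-R iPPG: difference of the normalized green and red signals."""
    return normalize(green, fps) - normalize(red, fps)
```

Because each color signal is divided by its own running mean, multiplying a raw channel by a constant gain (for example, a brighter lamp) leaves the result unchanged.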

We have also employed two other methods for iPPG extraction, CHROM [22] and POS [24], which are most effective for computing human iPPG [24, 41]. POS computes iPPG as a combination of color signals:

$$p_k = x_k + \frac{\sigma^{x}_{k,L}}{\sigma^{y}_{k,L}}\, y_k,$$

where $p_k$ denotes the iPPG signal, and $\sigma^{x}_{k,L}$ and $\sigma^{y}_{k,L}$ are $L$-point running standard deviations of $x_k = g_k - b_k$ and $y_k = g_k + b_k - 2r_k$, respectively.

CHROM combines color signals in a slightly different way:

$$p_k = x_k - \frac{\sigma^{x}_{k,L}}{\sigma^{y}_{k,L}}\, y_k,$$

where $\sigma^{x}_{k,L}$ and $\sigma^{y}_{k,L}$ are $L$-point running standard deviations of $x_k = 0.77r_k - 0.51g_k$ and $y_k = 0.77r_k + 0.51g_k - 0.77b_k$. For both methods we took $L$ corresponding to 1 s so that the time window captured at least one cardiac cycle as recommended in [24].

Note that our implementation of POS and CHROM methods is slightly different from the original in [22, 24]: to ensure signal smoothness, we compute running means and standard deviations instead of computing segments of iPPG in several overlapped windows and then gluing them by the overlap-add procedure described in [22]. Processing of iPPG signal for POS and CHROM methods was the same as for G-R method.
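The running-statistics variants of POS and CHROM described above can be sketched as follows. This is an illustrative Python sketch under stated assumptions, not the Matlab code used in the study: the function names are hypothetical, the inputs are assumed to be already mean-centered and scaled color signals, and the running standard deviation is assumed to use a trailing window in its population form.

```python
import numpy as np

def running_std(x, n):
    """Trailing n-point running standard deviation (assumed alignment).

    The first n - 1 outputs reuse the value of the first full window.
    """
    x = np.asarray(x, dtype=float)
    windows = np.lib.stride_tricks.sliding_window_view(x, n)
    full = windows.std(axis=1)
    return np.concatenate([np.full(n - 1, full[0]), full])

def pos(r, g, b, fps, window_s=1.0):
    """POS-style combination of normalized color signals."""
    n = int(round(fps * window_s))
    x = g - b
    y = g + b - 2.0 * r
    return x + running_std(x, n) / running_std(y, n) * y

def chrom(r, g, b, fps, window_s=1.0):
    """CHROM-style combination of normalized color signals."""
    n = int(round(fps * window_s))
    x = 0.77 * r - 0.51 * g
    y = 0.77 * r + 0.51 * g - 0.77 * b
    return x - running_std(x, n) / running_std(y, n) * y
```

Note that in `pos` both projections cancel any disturbance that is added equally to all three normalized channels, since the coefficients of `x` and of `y` each sum to zero.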

Since we used low-cost cameras, we expected a poor quality of the raw iPPG signal compared to contact PPG. Therefore we post-processed iPPG using the following three steps, which are typical for iPPG signal processing [22, 25, 42, 43].

- Step 1. We suppressed frequency components outside of the heart-rate bandwidth 90–300 BPM (1.5–5 Hz) using a finite impulse response filter of the 127th order with a Hamming window as suggested in [25].

- Step 2. We suppressed outliers in the iPPG signal by cropping the amplitude of narrow high peaks that could deteriorate performance in the next step:

  $$\hat{p}_k = \begin{cases} m_{k,W} + 3\sigma_{k,W}, & p_k > m_{k,W} + 3\sigma_{k,W}, \\ m_{k,W} - 3\sigma_{k,W}, & p_k < m_{k,W} - 3\sigma_{k,W}, \\ p_k, & \text{otherwise}, \end{cases}$$

  where $p_k$ and $\hat{p}_k$ denote the iPPG signal before and after cropping, the coefficient 3 was selected based on the classical three-sigma rule, and $m_{k,W}$ and $\sigma_{k,W}$ are the $W$-point running mean and standard deviation of the iPPG signal, respectively. We took $W$ corresponding to 2 s to have estimates of mean and standard deviation over several cardiac cycles.

- Step 3. We applied the wavelet filtering proposed in [44] to suppress secondary frequency components. This filtering consists of two steps: first a wide Gaussian window suppresses frequency components remote from the frequency corresponding to the maximum of average power over 30 s (global filtering for suppressing side bands that could cause ambiguities in local filtering). Then a narrow Gaussian window is applied for each individual time-point’s maximum (local filtering to emphasize the “true” maximum). As suggested in the original article, we employed Morlet wavelets. We used scaling factors 2 and 5 for global and local filtering, respectively (see [44] for details), since these parameters provided the best pulse rate estimation in our case. We implemented wavelet filtering using Matlab functions cwtft/icwtft from Wavelet Toolbox.
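The first two post-processing steps (band-pass filtering and three-sigma cropping) can be sketched in Python as follows; the wavelet filtering of the third step is not covered. The sketch is illustrative and not the Matlab code used in the study: the function names are hypothetical, the filter is designed here by the windowed-sinc method, and the running mean and standard deviation are assumed to use trailing windows.

```python
import numpy as np

def bandpass_fir(fps, low_hz=1.5, high_hz=5.0, order=127):
    """Windowed-sinc band-pass FIR taps with a Hamming window.

    Returns order + 1 coefficients (difference of two low-pass filters).
    """
    n = np.arange(order + 1) - order / 2.0

    def lowpass(cutoff_hz):
        fc = cutoff_hz / fps  # cutoff as a fraction of the sampling rate
        return 2.0 * fc * np.sinc(2.0 * fc * n)

    return (lowpass(high_hz) - lowpass(low_hz)) * np.hamming(order + 1)

def apply_fir(x, taps):
    """Filter x; mode='same' roughly compensates the filter delay."""
    return np.convolve(np.asarray(x, dtype=float), taps, mode="same")

def clip_outliers(x, fps, window_s=2.0, n_sigma=3.0):
    """Crop samples lying outside running mean +/- n_sigma running SDs."""
    x = np.asarray(x, dtype=float)
    n = int(round(fps * window_s))
    windows = np.lib.stride_tricks.sliding_window_view(x, n)
    mean, std = windows.mean(axis=1), windows.std(axis=1)
    # Reuse the first full window for the initial n - 1 samples.
    mean = np.concatenate([np.full(n - 1, mean[0]), mean])
    std = np.concatenate([np.full(n - 1, std[0]), std])
    return np.clip(x, mean - n_sigma * std, mean + n_sigma * std)
```

A typical call sequence would be `clip_outliers(apply_fir(raw_ippg, bandpass_fir(fps)), fps)`, where `raw_ippg` stands for the extracted iPPG signal. Samples near both ends of the filtered signal are affected by edge effects of the convolution.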

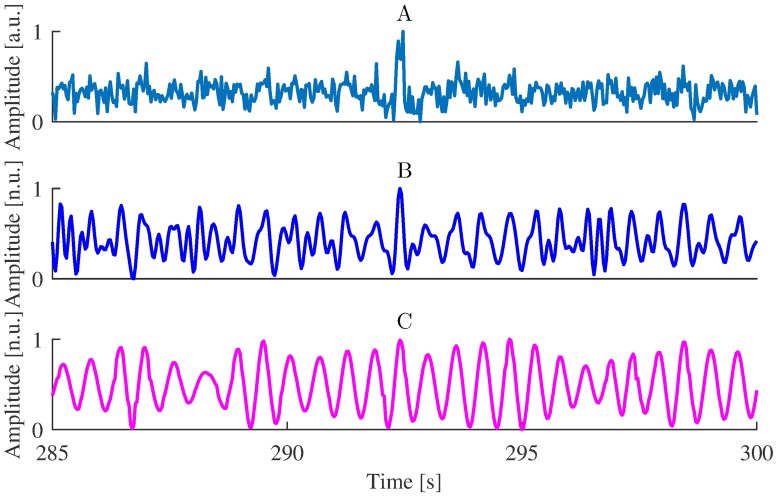

To demonstrate the effect of the multi-step iPPG processing, we show in Fig 4 how each processing step magnifies the pulse-related component.

Fig 4. Effect of iPPG processing.

(A) Raw iPPG extracted using G-R method from the video of Session 1, (B) iPPG after band-pass filtering, (C) iPPG after wavelet filtering.

Estimation of pulse rate from near-infrared video

To check the possibility of iPPG extraction from monochrome near-infrared (NIR) video in NHP, we recorded video using the Kinect NIR sensor during Session 2. Additionally, we conducted Sessions 9 and 10 (see Table 1) using the monochrome camera Chameleon 3 U3-13Y3M (FLIR Systems, OR, USA) with ultraviolet/visual cut-off filter R-72 (Edmund Optics, NJ, USA) blocking light with wavelengths below 680 nm. Details of the recorded video are presented in Table 4. Extraction of iPPG from monochrome NIR video was similar to that from RGB video: we computed raw iPPG as average intensity over all pixels in a ROI (monochrome NIR video provides a single intensity channel, so we could not use G-R method in this case) and then processed the signal as described in Section Extraction and processing of imaging photoplethysmogram. For ROI selection we determined skin pixels as having intensity within the range specified in Table 4.

Table 4. Description of experimental sessions with monochrome near-infrared video recording.

| Session | Sensor | Frame rate, fps | Video resolution, pixels | Distance to monkey face, cm | Session duration, s | Pixel intensity range, n.u. |
|---|---|---|---|---|---|---|
| 2 | Kinect NIR | 30 | 512 × 424 | 50 | 307 | [0.39, 0.99] |
| 9 | Chameleon, 10-30mm | 100 | 1280 × 1024 | 30 | 133 | [0.31, 0.94] |
| 10 | Chameleon, 10-30mm | 50 | 1280 × 1024 | 30 | 184 | [0.31, 0.94] |

Data analysis

To assess the quality of pulse rate estimation, we compared iPPG-based pulse rate estimates (videoPR) with the reference pulse rate estimates by contact pulse oximetry (refPR). For each session we computed the mean absolute error (as the absolute difference between videoPR and refPR, averaged across all epochs) and the Pearson correlation coefficient. To facilitate the interpretation of the results we also present percentages of epochs with estimation error in a certain range.
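The per-session quality metrics can be computed along the following lines. This is an illustrative Python sketch; the function name `quality_metrics` and the dictionary keys are hypothetical, and the percentage thresholds are assumed here to be taken relative to the refPR value of each epoch.

```python
import numpy as np

def quality_metrics(video_pr, ref_pr, thresholds_bpm=(3.5, 7.0),
                    thresholds_pct=(5.0, 10.0)):
    """Compare per-epoch videoPR and refPR values (both in BPM)."""
    video_pr = np.asarray(video_pr, dtype=float)
    ref_pr = np.asarray(ref_pr, dtype=float)
    abs_err = np.abs(video_pr - ref_pr)
    metrics = {
        "mean_absolute_error": abs_err.mean(),
        # Pearson correlation between the two series of epoch values.
        "correlation": np.corrcoef(video_pr, ref_pr)[0, 1],
    }
    for thr in thresholds_bpm:
        # Share of epochs with absolute error below a fixed BPM threshold.
        metrics[f"below_{thr:g}_bpm"] = np.mean(abs_err < thr)
    for pct in thresholds_pct:
        # Share of epochs with error below pct percent of refPR (assumed).
        metrics[f"below_{pct:g}_pct"] = np.mean(abs_err < pct / 100.0 * ref_pr)
    return metrics
```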

Additionally, in Section Effects of motion on iPPG quality, we assessed the quality of iPPG by computing the signal-to-noise ratio as suggested in [22] (we considered frequency components as contributing to the signal if they were in the range [refPR − 9 BPM, refPR + 9 BPM] or [2 ⋅ refPR − 18 BPM, 2 ⋅ refPR + 18 BPM]). The signal-to-noise ratio is a better indicator of iPPG quality than the error of pulse rate estimation, since pulse rate can be estimated correctly even from a low-quality iPPG. In the same section we estimated the amount of motion that may affect iPPG acquisition by averaging over time the mean squared differences between successive frames. Here the squared differences between frames k and k − 1 are computed from the values of color channel c of each pixel (i, j), averaged over the W × H pixels of the region of interest (W and H being its width and height), and then averaged over all pairs of successive frames among the K frames of the video.
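Both measures can be sketched in Python as follows. The sketch rests on assumptions that are not taken from the study: the function names are hypothetical, the noise term of the signal-to-noise ratio is taken over all remaining DFT bins, and the motion measure averages the squared differences over color channels as well as over pixels and frame pairs.

```python
import numpy as np

def snr_db(ippg, fps, ref_pr_bpm):
    """Signal-to-noise ratio (dB) of an iPPG epoch.

    Signal bins: within 9 BPM of refPR or within 18 BPM of 2 * refPR.
    Noise bins: all other bins (an assumption of this sketch).
    """
    ippg = np.asarray(ippg, dtype=float)
    power = np.abs(np.fft.rfft(ippg - ippg.mean())) ** 2
    freqs_bpm = np.fft.rfftfreq(len(ippg), d=1.0 / fps) * 60.0
    signal = ((np.abs(freqs_bpm - ref_pr_bpm) <= 9.0)
              | (np.abs(freqs_bpm - 2.0 * ref_pr_bpm) <= 18.0))
    return 10.0 * np.log10(power[signal].sum() / power[~signal].sum())

def interframe_motion(frames):
    """Mean squared difference between successive frames.

    frames: array of shape (K, H, W, C) holding the ROI of K frames.
    """
    frames = np.asarray(frames, dtype=float)
    diff = np.diff(frames, axis=0)  # K - 1 difference images
    return np.mean(diff ** 2)
```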

Results

Main result: Estimation of pulse rate from RGB video

To check the usefulness of the iPPG signal for pulse rate estimation in rhesus monkeys, we computed several quality metrics characterizing values of pulse rate derived from the video (videoPR) in comparison with the reference pulse rate from the pulse-oximeter (refPR).

The values of quality metrics presented in Table 5 show that pulse rate estimation from iPPG was successful. Bearing in mind that accuracy of refPR is ± 3 BPM, obtained values of mean absolute error for videoPR are rather good. Altogether, for 80% of epochs error of pulse rate estimation was below 7 BPM (which is 5% of rhesus monkey average heart rate 140 BPM), and for 83% of epochs error of pulse rate estimation was below 5% of refPR value. The low correlation between videoPR and refPR for Session 4 is explained by two outlier data-points for which refPR is above average while videoPR is well below average (estimation errors for these epochs are 18 and 11 BPM); after excluding these two points the correlation for Session 4 was 0.58.

Table 5. Quality metrics for iPPG-based pulse rate estimates.

Error was calculated as the difference between videoPR and refPR; the Pearson correlation coefficient was computed between videoPR and refPR across epochs in each session. To facilitate interpretation of the results and comparison with previous studies [41, 45–47], we also present percentages of epochs with estimation error in certain range.

| Session | Animal | Number of epochs | Mean absolute error, BPM | Epochs with absolute error below 3.5 BPM | Epochs with absolute error below 7 BPM | Epochs with absolute error below 5% | Epochs with absolute error below 10% | Correlation |
|---|---|---|---|---|---|---|---|---|
| 1 | Sun | 23 | 2.32 | 74% | 100% | 87% | 100% | 0.88 |
| 2 | Sun | 16 | 3.95 | 69% | 75% | 75% | 94% | 0.52 |
| 3 | Fla | 26 | 2.81 | 69% | 96% | 96% | 100% | 0.74 |
| 4 | Fla | 15 | 4.32 | 60% | 80% | 80% | 93% | 0.02 |
| 5 | Mag | 19 | 3.78 | 58% | 89% | 95% | 100% | 0.92 |
| 6 | Mag | 8 | 2.16 | 75% | 100% | 100% | 100% | 0.98 |
| 7 | Mag | 48 | 7.01 | 31% | 56% | 67% | 98% | 0.85 |
| 8 | Lin | 12 | 3.98 | 50% | 83% | 92% | 100% | 0.88 |

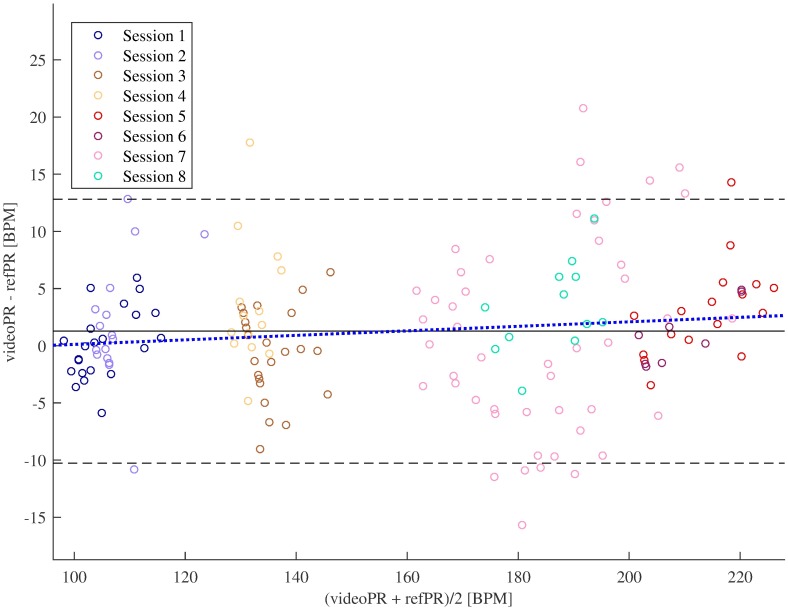

Fig 5 shows the Bland-Altman plots for all sessions (in four monkeys). One can see that for most epochs the error of pulse rate estimation was low; it was slightly higher for high pulse rate.

Fig 5. Bland-Altman plots of pulse rate estimates.

Solid line shows mean error (videoPR − refPR), dashed lines indicate mean error ± 1.96 times standard deviation of the error. Dotted line represents the regression line; the regression coefficient was not statistically significant (p = 0.085).

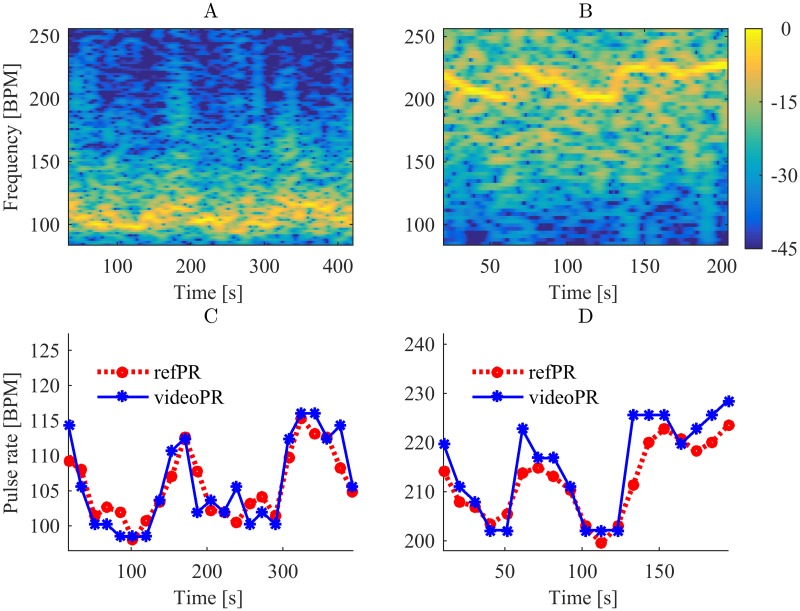

Fig 6 shows power spectrograms of the processed iPPG and average values of videoPR for Sessions 1 and 5 in comparison with the reference pulse rate from the pulse-oximeter (refPR). For both sessions, videoPR tracks the pulse rate changes comparably to the data from the pulse-oximeter (see also S1 Fig).

Fig 6. Power spectrograms of iPPG (A, B) and pulse rate values (C, D) for Sessions 1 and 5 respectively.

Multi-step iPPG processing results in better pulse rate estimation

In this study we used a multi-step procedure for iPPG signal processing. To demonstrate that all the steps are important for the quality of pulse rate estimation, we estimated pulse rate omitting certain processing steps. Average quality metrics for these estimates are presented in Table 6. As one can see, only a combination of filters improves the pulse rate estimation.

Table 6. Quality metrics averaged over all sessions for different variants of iPPG processing.

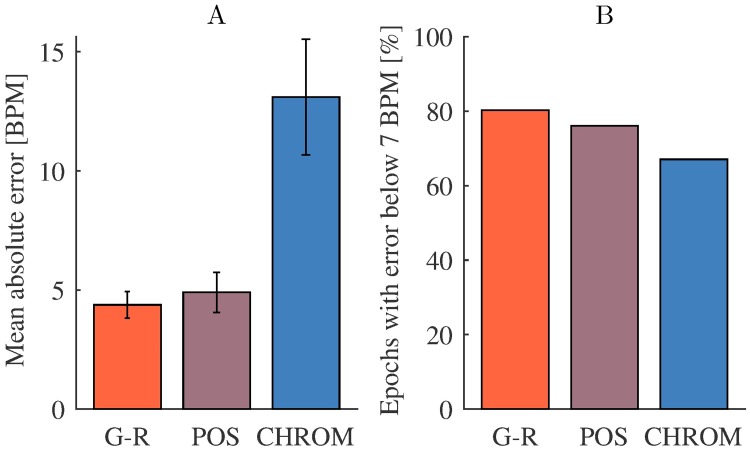

Comparison of methods for iPPG extraction

All results reported so far were obtained using the G-R method [42] for iPPG extraction. We have additionally estimated pulse rate from the iPPG signal extracted by the CHROM [22] and POS [24] methods (iPPG processing was the same as for the G-R method). Fig 7 shows that the performance of the G-R and POS methods was rather similar. The worse performance of CHROM was likely due to the fact that it is based on a model of human skin [24]; thus additional research is required to adjust it for iPPG extraction in NHP.

Fig 7. Comparison of iPPG extraction methods.

(A) Mean values with 95% confidence intervals (estimated as 1.96 times standard error) of absolute pulse rate estimation error and (B) share of epochs with absolute error below 7 BPM for G-R, POS and CHROM methods across Sessions 1-8. Note that the large error of CHROM method apparent in panel A is mainly caused by several epochs with high error, as evident from only a minor reduction of percent epochs with error < 7 BPM.

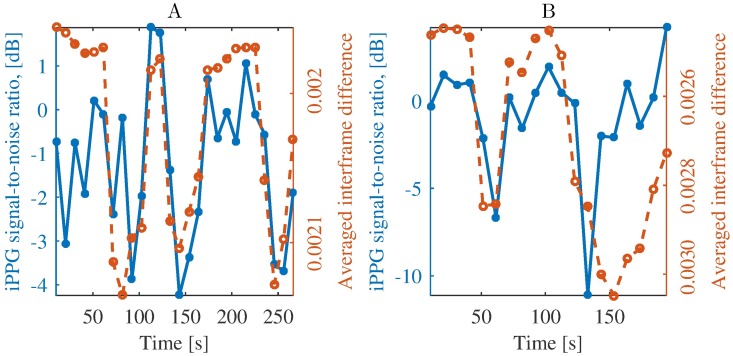

Effects of motion on iPPG quality

Although we have considered here iPPG extraction for head-stabilized monkeys, our subjects exhibited a considerable number of facial movements. Fig 8 illustrates their negative impact on iPPG extraction quality: the more prominent the movements during an epoch, the lower the quality of the extracted iPPG.

Fig 8. Influence of facial movements on quality of iPPG signal from Sessions 3 (A) and 5 (B).

We assessed quality of iPPG by signal-to-noise ratio and estimated amount of motion by averaged interframe difference, see Section Data analysis. In epochs with more motion, signal-to-noise ratio decreases, which reflects the adverse influence of motion on iPPG signal quality. Note the inverted y-axis for motion (averaged interframe difference).

Pulse rate estimation from near-infrared video was not successful

As indicated by the quality metrics in Table 7, pulse rate estimation from near-infrared (NIR) video was not successful. The low quality of estimation reflects that pulse rate had most of the time lower power than other frequencies related to movements, lighting variations, other artifacts or noise (see Fig 9).

Table 7. Quality metrics for pulse rate estimates computed from monochrome NIR video.

| Session | Number of epochs | Mean absolute error, BPM | Epochs with absolute error below 3.5 BPM | Epochs with absolute error below 7 BPM | Correlation |
|---|---|---|---|---|---|
| 2 | 16 | 52.5 | 13% | 19% | -0.02 |
| 9 | 12 | 37.4 | 25% | 42% | -0.13 |
| 10 | 17 | 97.3 | 6% | 6% | -0.01 |

Fig 9. iPPG extraction from near-infrared video.

(A) Raw iPPG extracted from the video of Session 9 (compare with Fig 4A) and (B) amplitude spectrum densities of the iPPG signal 55-75 s (compare with Fig 3). The frequencies corresponding to the refPR value and its second harmonic are indicated by dashed and dotted lines, respectively. While the peak corresponding to the pulse rate is distinguishable, the signal is strongly contaminated with noise that hampers correct pulse rate estimation.

Discussion

Imaging photoplethysmography under visible and near-infrared light

Quality of the photoplethysmogram (PPG) acquisition in general strongly depends on the light wavelength. The PPG signal is generated by variations of the reflected light intensity due to changes in scattering and absorption of skin tissue. In the ideal case, light would be only absorbed by blood haemoglobin, which would make PPG a perfect indicator of the blood volume changes [16, 48]. However, light is also absorbed and scattered by water, melanin, skin pigments and other substances [16].

There is no general consensus about the optimal wavelength for PPG acquisition, but the trend is in favor of employing visible light [18, 49]. Although traditionally PPG was acquired at near-infrared (NIR) wavelengths [15, 16], experiments have shown that light from the visible spectral range makes it possible to acquire comparably accurate PPG [48, 50, 51] or even to reach higher accuracy [52, 53]. Theoretical studies and simulations in [42] indicate that the best signal-to-noise ratio in iPPG should be obtained for wavelengths in the ranges of 420–455 and 525–585 nm, with peaks at 430, 540 and 580 nm corresponding to violet, green and yellow light, respectively (see [42, Section 3.5] for details). Passing through epidermis and bloodless skin is optimal for green (510–570 nm), red (710–770 nm) and NIR light (770–1400 nm) [54, 55]. However, absorption of haemoglobin is maximal for the green and yellow (570–590 nm) light [52, 56, 57]. This results in a better signal-to-noise ratio for these wavelengths [18, 49, 52].

Our results show that monochrome NIR video acquired with single non-specialized cameras and without dedicated illumination was not suitable for iPPG extraction, while RGB visible-light video was suitable. The striking difference between the results is not altogether surprising. Combining several color channels when extracting iPPG from RGB video is more effective than considering data from a single wavelength [21, 22]. Specifically, it makes iPPG extraction from RGB video less sensitive to movements and lighting variations, whereas for NIR video these disturbances are typically compensated by simultaneous video acquisition from several cameras [58, 59].

Additional research is, however, required to check whether visible or NIR light is generally preferable for iPPG extraction in NHP. In particular, the following two obstacles may hinder accurate iPPG extraction from RGB video of NHP faces under visible light:

- High melanin concentration. Melanin strongly absorbs visible light with wavelengths below 600 nm [16], which degrades the quality of PPG for humans with a high melanin concentration [43, 47]. This often motivated using red or NIR light for PPG acquisition [16, 17], but some modern methods for iPPG extraction successfully alleviate this problem [47]. To the best of our knowledge, melanin concentration in monkey facial skin has not been compared to that of humans, and the question of how melanin concentration influences iPPG extraction in NHP remains open.

- Insufficient light penetration depth. For light of wavelength λ within 380–950 nm (visible and NIR light) with fixed intensity, the longer the wavelength λ, the deeper the light penetrates the tissue [51, 60]. This is important since pulsatile variations are more prominent in deeper lying blood vessels. For instance, the penetration depth of blue light (400–495 nm) is only sufficient to reach the human capillary level [42, 61], providing little pulsatile information [23]. Green light penetrates up to 1 mm below the human skin surface, which makes the reflected light sensitive to the changes in the upper blood net plexus and in the reticular dermis [42, 60]. To assess blood flow in the deep blood net plexus, one uses red or NIR light with penetration depth above 2 mm [21, 60]. Insufficient penetration depth of visible light may hinder iPPG acquisition from certain parts of the human body [19, 42], but human faces allow extraction of accurate iPPG from the green and even the blue light component [21, 51].

For rhesus monkeys iPPG extraction is simplified by the fact that their facial skin has a dense superficial plexus of arteriolar capillaries [62]. It has been previously demonstrated [63] that subtle changes in facial color of rhesus monkeys caused by blood flow variations can be detected using a camera sensor. For other NHP it is not clear which wavelength if any would be sufficient to penetrate the skin and systematic studies are required to answer this question.

Video acquisition and region of interest selection

A good video sensor is necessary for successful iPPG extraction. In this study we used three different sensors: RGB and NIR sensors of a Microsoft Kinect for Xbox One and Chameleon 3 1.3 MP (color and monochrome models); details are provided in Table 8.

Table 8. Characteristics of sensors used for the experiments (according to [64–66]).

| Camera | Max. video resolution, pixels | Pixel size, μm | Max. frame rate, fps |
|---|---|---|---|
| Kinect RGB | 1920 × 1080 | 3.1 | 30 |
| Kinect NIR | 512 × 424 | 10 | 30 |
| Chameleon 3 1.3 MP | 1280 × 1024 | 4.8 | 149 |

Although all these CMOS sensors have moderate characteristics, they are better than the sensors of most general-purpose cameras used for iPPG extraction in humans (see, for instance, [25, 67]). Still, specialized cameras, like those used in [23, 42], could provide more robust and precise iPPG extraction in NHP. For the choice of camera, characteristics such as pixel noise level and sensitivity are crucial, since iPPG extraction implies detection of minor color changes. In addition, a sufficient frame rate is required to capture heart cycles. For humans the minimal frame rate for iPPG extraction is 15 fps [25], though in most cases 30 fps and higher are used [67]. Since heart rate for rhesus monkeys is almost twice as high as for humans, a higher frame rate is required. In our study a frame rate of 30 fps (provided by the Microsoft Kinect) was sufficient to estimate pulse rate from iPPG.
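The frame-rate requirement can be illustrated with the sampling theorem. The sketch below is not part of the study's processing pipeline; it only computes the theoretical lower bound on the frame rate needed to resolve the fundamental pulse frequency. The function name and the 250 BPM upper limit (the top of the heart-rate range cited for chair-seated rhesus monkeys) are assumptions chosen for illustration. In practice a considerably higher frame rate is desirable, because noise and the harmonics of the pulse wave are not covered by this bound.

```python
def min_frame_rate_fps(max_pulse_bpm: float) -> float:
    """Nyquist lower bound (fps) for sampling the fundamental pulse frequency.

    Illustrative helper, not taken from the paper: a signal component at
    f Hz needs a sampling rate above 2 * f to avoid aliasing.
    """
    max_pulse_hz = max_pulse_bpm / 60.0
    return 2.0 * max_pulse_hz


# Assumed upper limit of the expected pulse rate for rhesus monkeys, in BPM.
MAX_EXPECTED_BPM = 250.0

bound = min_frame_rate_fps(MAX_EXPECTED_BPM)
for fps in (15, 30):
    # Ratio > 1 means the frame rate exceeds the theoretical minimum.
    print(f"{fps} fps is {fps / bound:.1f} times the Nyquist bound of {bound:.2f} fps")
```

Under these assumptions, both 15 fps and 30 fps exceed the bound for the fundamental frequency, with 30 fps leaving a larger margin.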

For practical applications, one needs a criterion for rejecting video frames if they contain too little reliable pulsatile information for accurate iPPG extraction. One possible solution is to reject video frames as invalid if the signal-to-noise ratio of the acquired iPPG is not above a threshold computed from ROIs that do not contain exposed skin (e.g. regions covered by hair). See also [68] for a method of rejecting invalid video based on spectral analysis.
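A rejection criterion of this kind could be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure used in the study: the signal-to-noise ratio is defined here as the spectral power within ±0.2 Hz of the strongest peak divided by the remaining power in an assumed pulse band of 1.5–4.5 Hz, and the threshold is taken as the largest such ratio found in non-skin ROIs. The function names, the band limits, and the synthetic traces are all hypothetical.

```python
import cmath
import math
import random


def band_power(signal, fps, f_lo, f_hi):
    """Return (freqs_hz, power) of the mean-removed signal for DFT bins in [f_lo, f_hi]."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]
    freqs, power = [], []
    for k in range(1, n // 2 + 1):
        f = k * fps / n
        if f_lo <= f <= f_hi:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                        for i, x in enumerate(centered))
            freqs.append(f)
            power.append(abs(coeff) ** 2)
    return freqs, power


def pulse_snr(signal, fps, f_lo=1.5, f_hi=4.5, half_width_hz=0.2):
    """Power near the strongest in-band peak divided by the remaining in-band power."""
    freqs, power = band_power(signal, fps, f_lo, f_hi)
    peak = max(range(len(power)), key=power.__getitem__)
    near_peak = [abs(f - freqs[peak]) <= half_width_hz for f in freqs]
    signal_power = sum(p for p, m in zip(power, near_peak) if m)
    noise_power = sum(p for p, m in zip(power, near_peak) if not m)
    return signal_power / noise_power if noise_power > 0 else math.inf


def is_valid_epoch(skin_trace, non_skin_traces, fps):
    """Accept the epoch only if the skin ROI beats every non-skin ROI (assumed rule)."""
    threshold = max(pulse_snr(t, fps) for t in non_skin_traces)
    return pulse_snr(skin_trace, fps) > threshold


# Synthetic demonstration: a 2.5 Hz (150 BPM) pulse in noise versus pure noise.
rng = random.Random(0)
fps, n = 30, 600  # 20 s at 30 fps
skin = [math.sin(2 * math.pi * 2.5 * i / fps) + rng.gauss(0, 0.5) for i in range(n)]
hair = [[rng.gauss(0, 0.5) for _ in range(n)] for _ in range(3)]
print(is_valid_epoch(skin, hair, fps))
```

Using the maximum over non-skin ROIs is only one possible choice; a percentile or a fixed margin above the non-skin level would be equally plausible and would need to be validated on real data.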

In this paper we have only considered manual selection of ROI, which is acceptable for head-stabilized NHPs. In the general case, automatic face tracking would be of interest [69]. In this study, a cascade classifier using the Viola-Jones algorithm [70] allowed detection of monkey faces in recorded video. Furthermore, techniques for automatic selection of regions providing most pulsatile information in humans [38, 46, 71, 72] can be adopted. This might improve quality of iPPG extraction in NHPs, since application of adaptive model-based techniques to ROI refinement results in better iPPG quality and more accurate pulse rate estimation in humans [46, 72].

For non-head-stabilized NHP, robustness of the iPPG extraction algorithm to motion is especially important. For humans this problem can be successfully solved by separating pulse-related variations from image changes reflecting the motion [73], but for NHP additional research is required. In particular, hair covering parts of NHP face can provide references for successful compensation of small motion (see [42], where similar methods are proposed for humans).

An even more challenging task is iPPG extraction for freely moving NHP. In this case the distance from the animal's face to the camera sensor and the relative angles to the sensor and to the light sources are time dependent. For humans, a model taking this into account was suggested in [24]; however, its stable performance has only been demonstrated for relatively short (several meters) and nearly constant distances from the face to the camera. Nevertheless, recent progress in iPPG extraction from long-distance video [67] and in compensation of variable illumination angle and intensity [24, 74] gives hope that the required models might be developed in the future.

Pulse rate estimation

In our study estimated pulse rates were in the range of 95–125 BPM for monkey Sun, 125–150 BPM for Fla and 160–230 BPM for Mag, which agrees with previously reported values of heart rate (120–250 BPM) for rhesus monkeys sitting in a primate chair [2, 35–37]. Performance of our method for pulse rate estimation (Table 5) was only slightly worse than that reported for humans: the mean absolute error obtained in [75] was 2.5 BPM; for the best method considered in [45], the error was below 6 BPM (about 8% of the average human pulse rate) in 87% of epochs; reported values of the Pearson correlation coefficient vary from 0.87 in [45] to 1.00 in [25]. When comparing our results with outcomes of human studies, one should also allow for the imperfect reference, since data from a pulse oximeter are not as reliable as ECG.
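The agreement measures mentioned above (mean absolute error, fraction of epochs with error below a percentage of the average pulse rate, and Pearson correlation) can be computed as in the following sketch. It is an illustration only: the function name is hypothetical, the percentage thresholds are taken relative to the mean of the reference values (an assumption about how "average pulse rate" is defined), and the four example values are made up, not data from the study.

```python
import math


def agreement_metrics(video_pr, ref_pr):
    """Compare per-epoch pulse rates (BPM) from video with reference values."""
    n = len(ref_pr)
    errors = [abs(v - r) for v, r in zip(video_pr, ref_pr)]
    mean_ref = sum(ref_pr) / n
    mean_vid = sum(video_pr) / n
    # Pearson correlation from centered sums (population form; scale cancels).
    cov = sum((v - mean_vid) * (r - mean_ref) for v, r in zip(video_pr, ref_pr))
    var_v = sum((v - mean_vid) ** 2 for v in video_pr)
    var_r = sum((r - mean_ref) ** 2 for r in ref_pr)
    return {
        "mae_bpm": sum(errors) / n,
        # Thresholds are relative to the mean reference rate (assumption).
        "frac_below_5pct": sum(e < 0.05 * mean_ref for e in errors) / n,
        "frac_below_10pct": sum(e < 0.10 * mean_ref for e in errors) / n,
        "pearson_r": cov / math.sqrt(var_v * var_r),
    }


# Made-up example values for four epochs (not data from the study).
video = [100.0, 102.0, 98.0, 110.0]
reference = [101.0, 100.0, 99.0, 120.0]
metrics = agreement_metrics(video, reference)
print(metrics)
```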

In this study we used the discrete Fourier transform (DFT) for pulse rate estimation. Despite its popularity, this method is often criticized as being imprecise [19, 45]. Indeed, application of DFT implies analysis of the iPPG signal in a rather long time window (20–40 s), while pulse rate is non-stationary and scarcely remains constant for several heart beats in a row [19]. These fluctuations blur the iPPG spectrum and may hinder pulse rate estimation.
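The trade-off described here can be made concrete with a small sketch. It is not the implementation used in the study: it evaluates the DFT directly on a mean-removed window, takes the strongest bin in an assumed pulse band of 1.5–4.5 Hz (90–270 BPM), and reports the bin spacing, which is the finest pulse-rate step the plain DFT can distinguish for a given window length. Function names and band limits are hypothetical.

```python
import cmath
import math


def pulse_rate_dft(ippg, fps, f_lo=1.5, f_hi=4.5):
    """Pulse rate (BPM) as the frequency of the largest DFT bin in [f_lo, f_hi] Hz."""
    n = len(ippg)
    mean = sum(ippg) / n
    centered = [x - mean for x in ippg]
    best_f, best_p = None, -1.0
    for k in range(1, n // 2 + 1):
        f = k * fps / n
        if not (f_lo <= f <= f_hi):
            continue
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(centered))
        p = abs(coeff) ** 2
        if p > best_p:
            best_f, best_p = f, p
    if best_f is None:
        raise ValueError("window too short: no DFT bin falls inside the pulse band")
    return 60.0 * best_f


def bin_spacing_bpm(window_s):
    """Spacing between adjacent DFT bins, in BPM, for a window of window_s seconds."""
    return 60.0 / window_s


# Synthetic 20 s window at 30 fps containing a 2.5 Hz (150 BPM) oscillation.
fps = 30
window = [math.sin(2 * math.pi * 2.5 * i / fps) for i in range(20 * fps)]
print(pulse_rate_dft(window, fps), bin_spacing_bpm(20), bin_spacing_bpm(40))
```

A 20 s window gives a bin spacing of 3 BPM and a 40 s window 1.5 BPM, so finer frequency resolution comes at the price of averaging over more heart beats, during which the pulse rate may drift.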

As an alternative to using DFT, we estimated instantaneous pulse rate from inter-beat intervals, defined either as the interval between adjacent systolic peaks (maxima of the iPPG signal) or as the interval between adjacent diastolic minima (troughs of the signal) [7]. Since the wavelet filter greatly smoothed the signal and modified its shape (see Fig 4), we excluded this processing step. We tested several methods for peak detection in PPG [76, 77], but the results were inconclusive and highly dependent on the quality of the iPPG signal; therefore we do not present them here. Further research is required to find suitable algorithms for estimation of inter-beat intervals from iPPG in NHP. Specifically, the choice of methods for iPPG signal processing prior to peak detection seems to be important. Note that the post-processing applied here destroys the shape of pulse waves (see Fig 4). However, in this study the quality of the iPPG signal was only sufficient for a rough frequency-domain analysis, thus the shape of the iPPG signal was not critical.
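For illustration, the inter-beat-interval approach can be sketched with a deliberately naive peak detector. This is not one of the methods from [76, 77] and not the procedure tested in the study: it marks local maxima, enforces a minimum spacing derived from an assumed maximum plausible pulse rate of 270 BPM, and converts intervals between successive peaks to instantaneous pulse rate. On noisy iPPG such a detector is expected to be unreliable, which is consistent with the inconclusive results mentioned above. All names and parameters are hypothetical.

```python
import math


def detect_peaks(signal, min_distance):
    """Indices of local maxima that are at least min_distance samples apart."""
    peaks = []
    for i in range(1, len(signal) - 1):
        if signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]:
            if peaks and i - peaks[-1] < min_distance:
                # Too close to the previous peak: keep only the higher one.
                if signal[i] > signal[peaks[-1]]:
                    peaks[-1] = i
            else:
                peaks.append(i)
    return peaks


def instantaneous_pulse_rate(ippg, fps, max_bpm=270.0):
    """Instantaneous pulse rate (BPM) from intervals between successive peaks."""
    min_distance = max(1, int(fps * 60.0 / max_bpm))  # in samples
    peaks = detect_peaks(ippg, min_distance)
    intervals_s = [(b - a) / fps for a, b in zip(peaks, peaks[1:])]
    return [60.0 / ibi for ibi in intervals_s]


# Synthetic noise-free 2.5 Hz oscillation sampled at 30 fps for 4 s.
fps = 30
trace = [math.sin(2 * math.pi * 2.5 * i / fps) for i in range(120)]
rates = instantaneous_pulse_rate(trace, fps)
print(rates)
```

The same function applied to the negated signal would give intervals between troughs instead of peaks.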

Another possible method for pulse rate estimation is provided by the wavelet transform [19, 43], which can be naturally applied after wavelet filtering (without reconstructing the filtered iPPG signal by inverse wavelet transform).

Limitations

In this study we have acquired data from four adult male rhesus monkeys. Since our subjects were of various ages (8-16 years) and had pulse rate values from a wide range, we consider this sample size sufficient to demonstrate feasibility of iPPG extraction for pulse rate estimation in adult male rhesus monkeys. However, a study with a larger number of subjects, also including females and juveniles, might help to determine a more general applicability of this method.

Since non-invasive ECG acquisition from non-sedated monkeys requires extensive training (similar to the surface electromyography, see [78, 79]), we used pulse-oximetry to obtain reference pulse rate values. However, ECG provides a more accurate reference and is less affected by motion.

Finally, here we used only relatively simple methods of iPPG extraction and processing. Meanwhile, several advanced methods were suggested recently for human iPPG, especially for ROI selection and iPPG extraction. Using these methods (probably with certain modifications for the NHP data) should enhance iPPG extraction quality and allow iPPG extraction in NHP without head-stabilization.

Summary

Here we evaluated and documented the feasibility of imaging photoplethysmogram extraction from RGB facial video of head-stabilized macaque monkeys. Our results show that one can estimate, accurately and non-invasively, the pulse rate of awake non-human primates without special hardware for illumination and video acquisition and using only standard algorithms of signal processing. This makes imaging photoplethysmography a promising tool for non-contact remote estimation of pulse rate in non-human primates, suitable for example for behavioral and physiological studies of emotion and social cognition, as well as for the welfare-assessment of animals in research.

Supporting information

Comparison of imaging photoplethysmogram with a contact photoplethysmogram. (A) Imaging photoplethysmogram (iPPG) aligns with contact photoplethysmogram (PPG) recorded using pulse oximeter P-OX100L (Medlab GmbH, Stutensee; documented accuracy ± 1%) for Session 8 (in addition to the basic reference pulse oximetry, which was the same as for other sessions). (B) Pulse rate estimated from this more precise PPG (refPR2) has very good agreement with the reference pulse rate refPR used in all sessions (mean absolute error 1.28 BPM, Pearson correlation 0.99). Notably, videoPR computed from iPPG has even better agreement with refPR2 than with refPR (mean absolute error 3.24 BPM, Pearson correlation 0.90, cf. metrics of correspondence for videoPR and refPR in Table 5).

(TIF)

Acknowledgments

The authors would like to thank Dr. D. Pfefferle for the help with data acquisition.

Data Availability

All data files are available from the figshare public repository (DOI: https://doi.org/10.6084/m9.figshare.5818101).

Funding Statement

We acknowledge funding from Hermann and Lilly Schilling Foundation (Germany), https://www.deutsches-stiftungszentrum.de/stiftungen/hermann-und-lilly-schilling-stiftung-f%C3%BCr-medizinische-forschung (received by IK). We acknowledge funding from the Ministry for Science and Education of Lower Saxony and the Volkswagen Foundation through the program “Niedersächsisches Vorab,” https://www.volkswagenstiftung.de/unsere-foerderung/unser-foerderangebot-im-ueberblick/vorab.html. We acknowledge funding from the DFG research unit 2591 “Severity assessment in animal based research,” http://gepris.dfg.de/gepris/projekt/321137804 (received by AG, ST). We acknowledge additional support by the Leibniz Association through funding for the Leibniz ScienceCampus Primate Cognition and the Max Planck Society, https://www.leibniz-gemeinschaft.de/en/home/. The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Hassimoto M, Kuwahara M, Harada T, Kaga N, Obata M, Mochizuki H, et al. Diurnal variation of the QT interval in rhesus monkeys. International Journal of Bioelectromagnetism. 2002;4(2).

- 2. Grandi LC, Ishida H. The physiological effect of human grooming on the heart rate and the heart rate variability of laboratory non-human primates: a pilot study in male rhesus monkeys. Frontiers in Veterinary Science. 2015;2. doi:10.3389/fvets.2015.00050

- 3. Downs ME, Buch A, Sierra C, Karakatsani ME, Chen S, Konofagou EE, et al. Long-term safety of repeated blood-brain barrier opening via focused ultrasound with microbubbles in non-human primates performing a cognitive task. PloS one. 2015;10(5):e0125911. doi:10.1371/journal.pone.0125911

- 4. van Hoof RHM, Hermeling E, Sluimer JC, Salzmann J, Hoeks APG, Roussel J, et al. Heart rate lowering treatment leads to a reduction in vulnerable plaque features in atherosclerotic rabbits. PloS one. 2017;12(6):e0179024. doi:10.1371/journal.pone.0179024

- 5. Gacsi M, Maros K, Sernkvist S, Farago T, Miklosi A. Human analogue safe haven effect of the owner: behavioural and heart rate response to stressful social stimuli in dogs. PLoS One. 2013;8(3):e58475. doi:10.1371/journal.pone.0058475

- 6. Yamaoka A, Koie H, Sato T, Kanayama K, Taira M. Standard electrocardiographic data of young Japanese monkeys (Macaca fusucata). Journal of the American Association for Laboratory Animal Science. 2013;52(4):491–494.

- 7. Schäfer A, Vagedes J. How accurate is pulse rate variability as an estimate of heart rate variability?: A review on studies comparing photoplethysmographic technology with an electrocardiogram. International journal of cardiology. 2013;166(1):15–29. doi:10.1016/j.ijcard.2012.03.119

- 8. Sun X, Cai J, Fan X, Han P, Xie Y, Chen J, et al. Decreases in electrocardiographic r-wave amplitude and QT interval predict myocardial ischemic infarction in Rhesus monkeys with left anterior descending artery ligation. PloS one. 2013;8(8):e71876. doi:10.1371/journal.pone.0071876

- 9. Huss MK, Ikeno F, Buckmaster CL, Albertelli MA. Echocardiographic and Electrocardiographic Characteristics of Male and Female Squirrel Monkeys (Saimiri spp.). Journal of the American Association for Laboratory Animal Science. 2015;54(1):25–28.

- 10. Chui RW, Derakhchan K, Vargas HM. Comprehensive analysis of cardiac arrhythmias in telemetered cynomolgus monkeys over a 6-month period. Journal of pharmacological and toxicological methods. 2012;66(2):84–91. doi:10.1016/j.vascn.2012.05.002

- 11. Hoffmann P, Bentley P, Sahota P, Schoenfeld H, Martin L, Longo L, et al. Vascular origin of vildagliptin-induced skin effects in cynomolgus monkeys pathomechanistic role of peripheral sympathetic system and neuropeptide Y. Toxicologic pathology. 2014;42(4):684–695. doi:10.1177/0192623313516828

- 12. Niehoff M, Sternberg J, Niggemann B, Sarazan R, Holbrook M. Pimobendan, etilefrine, moxifloxacine and esketamine as reference compounds to validate the DSI PhysioTel® system in cynomolgus monkeys. Journal of Pharmacological and Toxicological Methods. 2014;70:338. doi:10.1016/j.vascn.2014.03.102

- 13. Kremer JJ, Foley CM, Xiang Z, Lemke E, Sarazan RD, Osinski MA, et al. Comparison of ECG signals and arrhythmia detection using jacketed external telemetry and implanted telemetry in monkeys. Journal of Pharmacological and Toxicological Methods. 2011;64(1):e47. doi:10.1016/j.vascn.2011.03.164

- 14. Derakhchan K, Chui RW, Stevens D, Gu W, Vargas HM. Detection of QTc interval prolongation using jacket telemetry in conscious non-human primates: comparison with implanted telemetry. British journal of pharmacology. 2014;171(2):509–522. doi:10.1111/bph.12484

- 15. Challoner AVJ. Photoelectric plethysmography for estimating cutaneous blood flow. Non-invasive physiological measurements. 1979;1:125–51.

- 16. Kamal AAR, Harness JB, Irving G, Mearns AJ. Skin photoplethysmography—a review. Computer methods and programs in biomedicine. 1989;28(4):257–269. doi:10.1016/0169-2607(89)90159-4

- 17. Allen J. Photoplethysmography and its application in clinical physiological measurement. Physiological measurement. 2007;28(3):R1. doi:10.1088/0967-3334/28/3/R01

- 18. Tamura T, Maeda Y, Sekine M, Yoshida M. Wearable photoplethysmographic sensors—past and present. Electronics. 2014;3(2):282–302. doi:10.3390/electronics3020282

- 19. Hülsbusch M, Blazek V. Contactless mapping of rhythmical phenomena in tissue perfusion using PPGI. In: Medical Imaging 2002. International Society for Optics and Photonics; 2002. p. 110–117.

- 20. Takano C, Ohta Y. Heart rate measurement based on a time-lapse image. Medical engineering & physics. 2007;29(8):853–857. doi:10.1016/j.medengphy.2006.09.006

- 21. Verkruysse W, Svaasand LO, Nelson JS. Remote plethysmographic imaging using ambient light. Optics express. 2008;16(26):21434–21445. doi:10.1364/OE.16.021434

- 22. de Haan G, Jeanne V. Robust pulse rate from chrominance-based rPPG. IEEE Transactions on Biomedical Engineering. 2013;60(10):2878–2886. doi:10.1109/TBME.2013.2266196

- 23. Kamshilin AA, Nippolainen E, Sidorov IS, Vasilev PV, Erofeev NP, Podolian NP, et al. A new look at the essence of the imaging photoplethysmography. Scientific reports. 2015;5. doi:10.1038/srep10494

- 24. Wang W, den Brinker A, Stuijk S, de Haan G. Algorithmic principles of remote-PPG. IEEE Transactions on Biomedical Engineering. 2017;64(7):1479–1491. doi:10.1109/TBME.2016.2609282

- 25. Poh MZ, McDuff DJ, Picard RW. Advancements in noncontact, multiparameter physiological measurements using a webcam. IEEE Transactions on Biomedical Engineering. 2011;58(1):7–11. doi:10.1109/TBME.2010.2086456

- 26. Blanik N, Pereira C, Czaplik M, Blazek V, Leonhardt S. Remote Photopletysmographic Imaging of Dermal Perfusion in a porcine animal model. In: The 15th International Conference on Biomedical Engineering. Springer; 2014. p. 92–95.

- 27. Addison PS, Foo DMH, Jacquel D, Borg U. Video monitoring of oxygen saturation during controlled episodes of acute hypoxia. In: Engineering in Medicine and Biology Society (EMBC), 2016 IEEE 38th Annual International Conference of the. IEEE; 2016. p. 4747–4750.

- 28. Roelfsema PR, Treue S. Basic neuroscience research with nonhuman primates: a small but indispensable component of biomedical research. Neuron. 2014;82(6):1200–1204. doi:10.1016/j.neuron.2014.06.003

- 29. Dominguez-Vargas AU, Schneider L, Wilke M, Kagan I. Electrical Microstimulation of the Pulvinar Biases Saccade Choices and Reaction Times in a Time-Dependent Manner. Journal of Neuroscience. 2017;37(8):2234–2257. doi:10.1523/JNEUROSCI.1984-16.2016

- 30. Schwedhelm P, Baldauf D, Treue S. Electrical stimulation of macaque lateral prefrontal cortex modulates oculomotor behavior indicative of a disruption of top-down attention. Scientific reports. 2017;7(1):17715. doi:10.1038/s41598-017-18153-9

- 31. Prescott MJ, Buchanan-Smith HM. Training nonhuman primates using positive reinforcement techniques. Journal of Applied Animal Welfare Science. 2003;6(3):157–161. doi:10.1207/S15327604JAWS0603_01

- 32. Calapai A, Berger M, Niessing M, Heisig K, Brockhausen R, Treue S, et al. A cage-based training, cognitive testing and enrichment system optimized for rhesus macaques in neuroscience research. Behavior research methods. 2017;49(1):35–45. doi:10.3758/s13428-016-0707-3

- 33. Berger M, Calapai A, Stephan V, Niessing M, Burchardt L, Gail A, et al. Standardized automated training of rhesus monkeys for neuroscience research in their housing environment. Journal of neurophysiology. 2018;119(3):796–807. doi:10.1152/jn.00614.2017

- 34. Pfefferle D, Plümer S, Burchardt L, Treue S, Gail A. Assessment of stress responses in rhesus macaques (Macaca mulatta) to daily routine procedures in system neuroscience based on salivary cortisol concentrations. PloS one. 2018;13(1):e0190190. doi:10.1371/journal.pone.0190190

- 35. Tatsumi T, Koto M, Komatsu H, Adachi J. Effects of repeated chair restraint on physiological values in the rhesus monkey (Macaca mulatta). Experimental animals. 1990;39(3):361–369. doi:10.1538/expanim1978.39.3_353

- 36. Clarke AS, Mason WA, Mendoza SP. Heart rate patterns under stress in three species of macaques. American Journal of Primatology. 1994;33(2):133–148. doi:10.1002/ajp.1350330207

- 37. Hassimoto M, Harada T, Harada T. Changes in hematology, biochemical values, and restraint ECG of rhesus monkeys (Macaca mulatta) following 6-month laboratory acclimation. Journal of medical primatology. 2004;33(4):175–186. doi:10.1111/j.1600-0684.2004.00069.x

- 38. Kumar M, Veeraraghavan A, Sabharwal A. DistancePPG: Robust non-contact vital signs monitoring using a camera. Biomedical optics express. 2015;6(5):1565–1588. doi:10.1364/BOE.6.001565

- 39. Zarit BD, Super BJ, Quek FKH. Comparison of five color models in skin pixel classification. In: Proceedings of the International Workshop on Recognition, Analysis, and Tracking of Faces and Gestures in Real-Time Systems. IEEE; 1999. p. 58–63.

- 40. Vezhnevets V, Sazonov V, Andreeva A. A survey on pixel-based skin color detection techniques. In: Proceedings Graphicon. vol. 3. Moscow, Russia; 2003. p. 85–92.

- 41. Unakafov AM. Pulse rate estimation using imaging photoplethysmography: generic framework and comparison of methods on a publicly available dataset. arXiv preprint arXiv:1710.08369. 2017.

- 42. Hülsbusch M. An image-based functional method for opto-electronic detection of skin-perfusion. RWTH Aachen (in German); 2008.

- 43. Bousefsaf F, Maaoui C, Pruski A. Continuous wavelet filtering on webcam photoplethysmographic signals to remotely assess the instantaneous heart rate. Biomedical Signal Processing and Control. 2013;8(6):568–574. doi:10.1016/j.bspc.2013.05.010

- 44. Bousefsaf F, Maaoui C, Pruski A. Peripheral vasomotor activity assessment using a continuous wavelet analysis on webcam photoplethysmographic signals. Bio-Medical Materials and Engineering. 2016;27(5):527–538. doi:10.3233/BME-161606

- 45. Holton BD, Mannapperuma K, Lesniewski PJ, Thomas JC. Signal recovery in imaging photoplethysmography. Physiological measurement. 2013;34(11):1499. doi:10.1088/0967-3334/34/11/1499

- 46. Stricker R, Müller S, Gross HM. Non-contact video-based pulse rate measurement on a mobile service robot. In: Robot and Human Interactive Communication, 2014 RO-MAN: The 23rd IEEE International Symposium on. IEEE; 2014. p. 1056–1062.

- 47. Wang W, Stuijk S, de Haan G. A Novel Algorithm for Remote Photoplethysmography: Spatial Subspace Rotation. IEEE Transactions on Biomedical Engineering. 2016;63(9):1974–1984. doi:10.1109/TBME.2015.2508602

- 48. Lindberg LG, Öberg PÅ. Photoplethysmography: Part 2. Influence of light source wavelength. Medical and Biological Engineering and Computing. 1991;29(1):48–54.

- 49. Sun Y, Thakor N. Photoplethysmography revisited: from contact to noncontact, from point to imaging. IEEE Transactions on Biomedical Engineering. 2016;63(3):463–477. doi:10.1109/TBME.2015.2476337

- 50. Spigulis J, Gailite L, Lihachev A, Erts R. Simultaneous recording of skin blood pulsations at different vascular depths by multiwavelength photoplethysmography. Applied optics. 2007;46(10):1754–1759. doi:10.1364/AO.46.001754

- 51. Vizbara V, Solosenko A, Stankevicius D, Marozas V. Comparison of green, blue and infrared light in wrist and forehead photoplethysmography. Biomedical Engineering. 2013;17(1).

- 52. Damianou D. The wavelength dependence of the photoplethysmogram and its implication to pulse oximetry. University of Nottingham; 1995.

- 53. Maeda Y, Sekine M, Tamura T, Moriya A, Suzuki T, Kameyama K. Comparison of reflected green light and infrared photoplethysmography. In: 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE; 2008. p. 2270–2272.

- 54. Blazek V, Schultz-Ehrenburg U. Quantitative Photoplethysmography: Basic Facts and Examination Tests for Evaluating Peripheral Vascular Functions. VDI-Verlag; 1996.

- 55. Wu T, Blazek V, Schmitt HJ. Photoplethysmography imaging: a new noninvasive and noncontact method for mapping of the dermal perfusion changes. In: EOS/SPIE European Biomedical Optics Week. International Society for Optics and Photonics; 2000. p. 62–70.

- 56. Smith AM, Mancini MC, Nie S. Second window for in vivo imaging. Nature nanotechnology. 2009;4(11):710. doi:10.1038/nnano.2009.326

- 57. Lee J, Matsumura K, Yamakoshi K, Rolfe P, Tanaka S, Yamakoshi T. Comparison between red, green and blue light reflection photoplethysmography for heart rate monitoring during motion. In: 35th annual international conference of the IEEE engineering in medicine and biology society (EMBC). IEEE; 2013. p. 1724–1727.

- 58. van Gastel M, Stuijk S, de Haan G. Motion robust remote-PPG in infrared. IEEE Transactions on Biomedical Engineering. 2015;62(5):1425–1433. doi:10.1109/TBME.2015.2390261

- 59. Lindqvist A, Lindelöw M. Remote Heart Rate Extraction from Near Infrared Videos. Chalmers University of Technology, Gothenburg, Sweden; 2016.

- 60. Bashkatov AN, Genina EA, Kochubey VI, Tuchin VV. Optical properties of human skin, subcutaneous and mucous tissues in the wavelength range from 400 to 2000 nm. Journal of Physics D: Applied Physics. 2005;38(15):2543.

- 61. Kviesis-Kipge E, Curkste E, Spigulis J, Eihvalde L. Real-time analysis of skin capillary-refill processes using blue LED. In: SPIE Photonics Europe. International Society for Optics and Photonics; 2010. p. 771523–771523.

- 62. Montagna W, Yun JS, Machida H. The skin of primates XVIII. The skin of the rhesus monkey (Macaca mulatta). American journal of physical anthropology. 1964;22(3):307–319. doi:10.1002/ajpa.1330220317

- 63. Higham JP, Brent LJN, Dubuc C, Accamando AK, Engelhardt A, Gerald MS, et al. Color signal information content and the eye of the beholder: a case study in the rhesus macaque. Behavioral Ecology. 2010;21(4):739–746. doi:10.1093/beheco/arq047

- 64. Bamji CS, O’Connor P, Elkhatib T, Mehta S, Thompson B, Prather LA, et al. A 0.13 μm CMOS system-on-chip for a 512×424 time-of-flight image sensor with multi-frequency photo-demodulation up to 130 MHz and 2 GS/s ADC. IEEE Journal of Solid-State Circuits. 2015;50(1):303–319. doi:10.1109/JSSC.2014.2364270

- 65. Pagliari D, Pinto L. Calibration of Kinect for Xbox one and comparison between the two generations of Microsoft sensors. Sensors. 2015;15(11):27569–27589. doi:10.3390/s151127569

- 66. Chameleon3 USB 3.0 Digital Camera, Technical Reference. Point Grey; 2016.

- 67. Blackford EB, Estepp JR, Piasecki AM, Bowers MA, Klosterman SL. Long-range non-contact imaging photoplethysmography: cardiac pulse wave sensing at a distance. In: SPIE BiOS. International Society for Optics and Photonics; 2016. p. 971512–971512.

- 68. Zhao F, Li M, Qian Y, Tsien JZ. Remote measurements of heart and respiration rates for telemedicine. PLoS One. 2013;8(10):e71384. doi:10.1371/journal.pone.0071384

- 69. Witham CL. Automated Face Recognition of Rhesus Macaques. Journal of Neuroscience Methods. 2017. doi:10.1016/j.jneumeth.2017.07.020

- 70. Viola P, Jones M. Rapid object detection using a boosted cascade of simple features. In: Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. vol. 1. IEEE; 2001. p. I–511.

- 71. Feng L, Po LM, Xu X, Li Y, Ma R. Motion-resistant remote imaging photoplethysmography based on the optical properties of skin. IEEE Transactions on Circuits and Systems for Video Technology. 2015;25(5):879–891. doi:10.1109/TCSVT.2014.2364415

- 72. Bousefsaf F, Maaoui C, Pruski A. Automatic Selection of Webcam Photoplethysmographic Pixels Based on Lightness Criteria. Journal of Medical and Biological Engineering. 2017;37(3):374–385. doi:10.1007/s40846-017-0229-1

- 73. Moço AV, Stuijk S, de Haan G. Motion robust PPG-imaging through color channel mapping. Biomedical optics express. 2016;7(5):1737–1754. doi:10.1364/BOE.7.001737

- 74. McDuff DJ, Estepp JR, Piasecki AM, Blackford EB. A survey of remote optical photoplethysmographic imaging methods. In: 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE; 2015. p. 6398–6404.

- 75. Lewandowska M, Rumiński J, Kocejko T, Nowak J. Measuring pulse rate with a webcam—a non-contact method for evaluating cardiac activity. In: Federated Conference on Computer Science and Information Systems (FedCSIS); 2011. p. 405–410.

- 76. Shin HS, Lee C, Lee M. Adaptive threshold method for the peak detection of photoplethysmographic waveform. Computers in Biology and Medicine. 2009;39(12):1145–1152. doi:10.1016/j.compbiomed.2009.10.006

- 77. Elgendi M, Norton I, Brearley M, Abbott D, Schuurmans D. Systolic peak detection in acceleration photoplethysmograms measured from emergency responders in tropical conditions. PLoS One. 2013;8(10):e76585. doi:10.1371/journal.pone.0076585

- 78. Lewis S, Russold MF, Dietl H, Ruff R, Dörge T, Hoffmann KP, et al. Acquisition of muscle activity with a fully implantable multi-channel measurement system. In: Instrumentation and Measurement Technology Conference (I2MTC), 2012 IEEE International. IEEE; 2012. p. 996–999.

- 79. Morel P, Ferrea E, Taghizadeh-Sarshouri B, Audí JMC, Ruff R, Hoffmann KP, et al. Long-term decoding of movement force and direction with a wireless myoelectric implant. Journal of neural engineering. 2015;13(1):016002. doi:10.1088/1741-2560/13/1/016002
