Significance

Head-mounted video display systems and image processing as a means of enhancing low vision are ideas that have been around for over 20 years. Recent developments in virtual and augmented reality technology and software have opened up new research opportunities that will lead to benefits for low vision patients.

Since the Visionics low vision enhancement system (LVES), the first head-mounted video display LVES, was engineered 20 years ago, various other devices have come and gone with a recent resurgence of the technology over the past few years. In this paper, we discuss the history of the development of LVESs, describe the current state of available technology by outlining existing systems, and explore future innovation and research in this area. While LVESs have now been around for more than two decades, there is still much that remains to be explored. With the growing popularity and availability of virtual reality and augmented reality technologies, we can now integrate these methods within low vision rehabilitation to conduct more research on customized contrast enhancement strategies, image motion compensation, image remapping strategies, and binocular disparity, all while incorporating eye tracking capabilities. Future research should utilize this available technology and knowledge to learn more about the visual system in the low vision patient and extract this new information to create prescribable vision enhancement solutions for the visually impaired individual.

Keywords: low vision rehabilitation, head-mounted video display, low vision enhancement system, vision impairment, assistive technology

Low vision refers to chronic disabling vision impairment caused by disorders of the visual system that cannot be corrected with glasses/contact lenses, medical treatment, or surgery. The types of vision impairments that come under the rubric of low vision include reductions in visual acuity, loss of contrast sensitivity, central scotomas, peripheral visual field loss, night blindness, slow glare recovery, photophobia, metamorphopsia, and oscillopsia. Often low vision patients have combinations of these impairments. As a result of their vision impairments, low vision patients have difficulty with or are unable to perform valued activities.1,2 Consequently, low vision can have a significant impact on patients’ daily functioning, independence, social interactions, quality of life, and ultimately physical and mental health.3–5

Low vision rehabilitation focuses on maximizing visual function through the use of adaptive strategies and accommodations and with vision enhancing assistive technology. Linear magnification (e.g., CCTV, large print), relative distance magnification (e.g., high add, microscope, hand magnifier), and angular magnification (e.g., telescopes, binoculars, bioptics, head-mounted video display systems) are the primary low vision enhancement strategies used to compensate for reduced visual acuity.6 Other common low vision enhancement strategies include illumination control with filters (e.g., sunglasses and color-tinted lenses), task lighting (e.g., high intensity light sources and illuminated magnifiers) and head-mounted video display systems that employ automatic gain control, which compensate for abnormal light and dark adaptation and glare recovery.6,7 Contrast enhancement (e.g., contrast stretching, contrast reversal, edge enhancement, and color and luminance contrast substitution), which is implemented primarily with CCTV magnifiers, computer accommodation software, colored filters (to transform color contrast to luminance contrast), and head-mounted video display systems, is employed to compensate for reduced contrast sensitivity.8,9

Traditionally, magnification has been accomplished using conventional optics; however, there are limitations, including fixed level of magnification and reduced field of view, as well as narrow depth of field and close working distance for higher levels of near magnification. Furthermore, other than color filters, optical devices cannot be used to enhance image contrast. Head-mounted video display systems equipped with optical or digital zoom magnification from system-mounted forward-looking video cameras, illumination control, and contrast enhancement capabilities have been used in low vision rehabilitation for more than twenty years to overcome the limitations of conventional optical devices.7,10–12 These systems are intended to enable hands-free functioning, provide binocular or bi-ocular (same image presented to each eye without retinal disparity) viewing, and modify the image presented to the retina in real-time to compensate for the patient’s specific visual limitations under changing viewing conditions.13,14 In principle, there is an image processing strategy for each type of vision impairment that will optimally enhance the patient’s perception of visual information in the retinal image. The ideal head-mounted low vision enhancement system (LVES) would be able to compensate for each type of vision impairment, without compromising field of view, binocularity, working distance and resolution, and more importantly aid in the effective and efficient performance of the varied tasks of daily living without forcing the user to accept performance trade-offs in order to accommodate system limitations.15 In recent years, there have been remarkable advances in personal computing (e.g., smartphones) making customized real-time digital image processing to optimally enhance low vision realizable and affordable. The big question now is whether we know enough about visual impairments and their relation to daily functioning to design customized low vision enhancement algorithms that would be optimal for the individual patient.

Over the past 25 years, progress has been relatively slow in developing and implementing image processing algorithms that can provide customizable enhancement of the patient’s view of the environment. For example, customized contrast enhancement and image remapping strategies first demonstrated in the laboratory more than three decades ago16,17 have not yet been implemented in commercial head-mounted LVES systems. Although the past rate of progress has been limited by the capabilities and cost of enabling technology, recent advances in cameras, displays, and computing power now make adoption of vision enhancement strategies demonstrated in the laboratory feasible, if not imminent. The purpose of this paper is to provide a review of the current state of head-mounted LVES technology and to suggest areas of research and development in personalized digital image processing that could be translated to practice in the very near future.

Head-mounted Low Vision Enhancement Systems

The Visionics Low Vision Enhancement System was the first commercially available LVES based on a head-mounted video display system.18 The displays were two 19mm diameter black and white cathode ray tubes mounted in the temple arms of the headset and imaged at the user’s far point for each eye through exit pupils in the plane of the user’s entrance pupils by field-correcting relay optics and final aspheric mirrors. The display images were 50°×40° with 40° binocular overlap, yielding a 60° horizontal binocular field of view. Display resolution was 5 arcmin/pixel (equivalent to 20/100 visual acuity). A monochrome CCD video camera in front of each eye provided unmagnified stereo video images of the environment for orientation. A single, tiltable (level to 45° down gaze), center-mounted, “cyclopean” video camera equipped with motor-driven optical zoom magnification (1.5X to 12X) and autofocus (with an auxiliary flip-up macro lens) provided the same magnified video image to both eyes. All video cameras had automatic gain control to maintain constant average display luminance. The Visionics LVES was battery-powered with user controls for switching between orientation and zoom cameras or external video and switching between manual focus control and autofocus. The user also could control magnification, contrast stretching, and contrast reversal.
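The relation between display resolution and equivalent visual acuity quoted above can be checked with a short calculation. The sketch below assumes the usual convention that 20/20 acuity corresponds to 1 arcmin of detail and that one display pixel sets the smallest resolvable detail; the function names are illustrative and do not come from any LVES software.

```python
def arcmin_per_pixel(fov_degrees: float, pixels: int) -> float:
    """Angular size of one display pixel, in minutes of arc."""
    return fov_degrees * 60.0 / pixels


def snellen_denominator(arcmin: float, numerator: float = 20.0) -> float:
    """Snellen denominator for a minimum angle of resolution given in arcmin.

    Assumption: 20/20 corresponds to 1 arcmin, so the denominator scales
    linearly with the angular size of the smallest displayable detail.
    """
    return numerator * arcmin


if __name__ == "__main__":
    # 5 arcmin/pixel, the figure quoted for the Visionics LVES display
    print(f"20/{snellen_denominator(5.0):g}")
```

Under these assumptions, a display that spreads 640 pixels across a 30° horizontal field has pixels of about 2.8 arcmin, which corresponds to roughly 20/56.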

Visionics LVES competitors that came on the market in the late 1990s and had similar features to the Visionics LVES included the Enhanced Vision Systems V-max, the Keeler NuVision, the Innoventions Magnicam, and the Bartimaeus Clarity TravelViewer-to-go; the latter two of which employed stand-mounted or hand-held instead of head-mounted video cameras.13,14 The most enduring head-mounted video display LVES was the Enhanced Vision Systems Jordy, the successor to the V-max.

Several evaluative studies were conducted with these early LVESs. The use of these devices resulted in significantly better distance and intermediate task performance than did previously prescribed optical aids.19 The head-mounted LVES technology provided some improvement in home performance of activities of daily living (ADLs), but optical aids remained optimal for the majority of those tasks. Younger patients performed better overall.20 Newly diagnosed patients responded most positively to the technology; otherwise, preference could not be predicted by age, gender, diagnosis, or previous electronic magnification experience.21 No significant differences in outcomes between LVES devices were reported.20,21

All of the early head-mounted LVESs eventually faded from the marketplace. In order to incorporate the computing power needed for image enhancement strategies, large computer processing systems and hardware were needed. At the time, these were expensive components and the companies serving the boutique low vision market did not have the resources to advance the technology to the next level. But recently, head-mounted LVES technology has made a comeback. Products currently available include the eSight Eyewear, NuEyes Pro Smartglasses, CyberTimez Cyber Eyez, Evergaze seeBOOST, IrisVision, and the return of a redesigned Enhanced Vision Systems Jordy. These new systems employ more modern color micro- or cell phone displays (LCD or OLED) and smaller, higher resolution color video cameras with cell phone camera optics. Table 1 compares the specifications of these new head-mounted LVESs to each other and to the specifications of the original Visionics LVES. With the exception of the IrisVision, they all have smaller fields of view than did the original Visionics LVES. Some devices like the NuEyes, Cyber Eyez, and eSight also incorporate non-vision features such as optical character recognition for text-to-speech and speech-output artificial intelligence software for face and object recognition. With major investments in technology development to serve the consumer virtual and augmented reality and personal theater markets, feature-rich enabling technology for head-mounted LVESs undoubtedly will continue to evolve and advance to become more powerful and more attractive to wear at lower cost. To take advantage of these trends, attention now has to be focused on developing and testing image-processing strategies to optimize low vision enhancement.

Table 1.

Manufacturer specifications of head-mounted video display low vision enhancement systems.

| Specification | Visionics LVES | Jordy | SeeBOOST | eSight 3 | NuEyes | Cyber Eyez App | Iris Vision |
|---|---|---|---|---|---|---|---|
| Price (USD) | $5,000 (in 1994) | $3,620 | $3,500 | $9,995 | $5,995 | $1,997 (software only) | $2,500 |
| Weight (grams) | 992.2 | 236 | 25 (plus the weight of eyeglasses) | 104 | 125 | 371.4 | 425.2 |
| Binocularity | Binocular | Cyclopean\* | Monocular | Cyclopean\* | Cyclopean\* | Monocular | Cyclopean\* |
| Display resolution | 640 × 480; 5 arcmin/pixel; 20/100 Snellen equivalent | 640 × 480; 2.8 arcmin/pixel; 20/56 Snellen equivalent | 800 × 600; 2.25 arcmin/pixel; 20/45 Snellen equivalent | 1024 × 728; 2.2 arcmin/pixel; 20/44 Snellen equivalent | 1280 × 720; 1.4 arcmin/pixel; 20/28 Snellen equivalent | 640 × 360; 1.9 arcmin/pixel; 20/37.5 Snellen equivalent | 1210 × 920; 3.3 arcmin/pixel; 20/65 Snellen equivalent |
| Magnification | 1.5–12X | 1–14X | 1.4–7X | 1–24X | 0.6–12X (with additional optical lens) | 1–15X | 1–8X |
| Field of view (horizontal × vertical) | 50 × 40 degrees | 30 × 17 degrees | 30 × 22.5 degrees | 37.5 × 28 degrees | 30 × 17 degrees | 20 degrees | 70 × 50 degrees |
| Picture of device | | | | | | | |

\*Viewing the same image with both eyes, no disparity.

Pictures are the authors’ photographs unless otherwise referenced below:

Visionics LVES: https://er.jsc.nasa.gov/seh/pg66s95.html

SeeBoost: SeeBoost product brochure

Cyber Eyez App M300 package: https://cybertimez.com/?product=cyber-eyez-m300-complete-package

Contrast Enhancement Strategies

Reduced contrast sensitivity is common among low vision patients and is often cited as a major contributor to reductions in the patient’s ability to function visually.22,23 Patients with low vision often report difficulty seeing facial features, interpreting facial expressions, and recognizing familiar people. Significant contrast sensitivity loss can make seeing facial details, which are already low in contrast, even more difficult.24–26 Difficulties with orientation and mobility also are attributed frequently to reductions in contrast sensitivity in this population.27,28 To generalize, one might expect any activity that depends on recognition, identification, and interpretation of visual information depends heavily on the person’s ability to see varying levels of detail in the image.

Most vision scientists prefer to describe images and image processing in the spatial frequency domain (sums of sinusoidal spatial modulations of luminance along each meridian with the amplitude and phase varying as a function of spatial frequency and of orientation). In this framework, contrast sensitivity at each spatial frequency can be interpreted as how much the amplitude at each frequency is reduced by the visual system. Thus, the visual system is characterized as a linear filter for which the contrast sensitivity function (CSF) is analogous to a modulation transfer function (MTF).

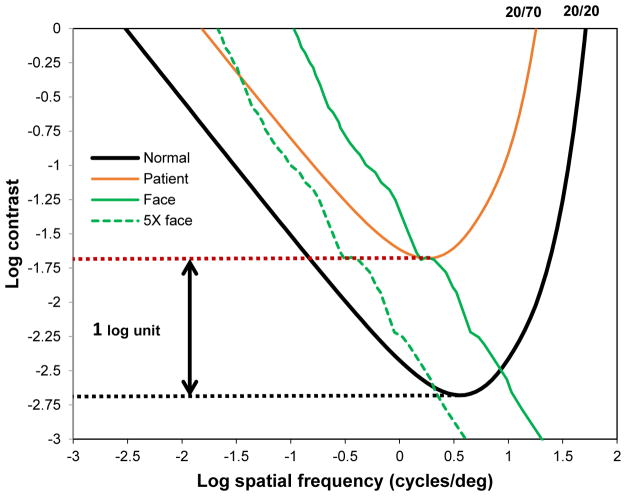

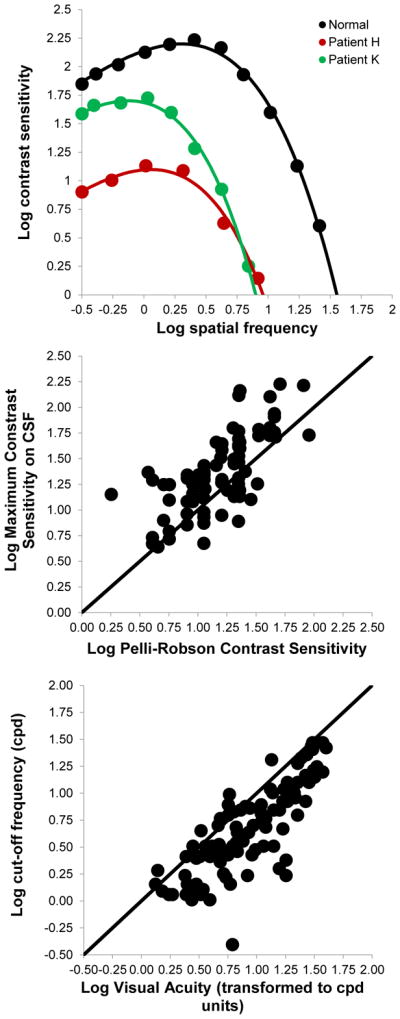

As shown with the y-intercepts in the top panel and the scatter plot in the middle panel of Figure 1, the maximum height of the contrast sensitivity function corresponds to contrast sensitivity measured with a Pelli-Robson, Mars, or other letter chart, and the cut-off frequency (the spatial frequency that requires 100% contrast to be visible, as shown with the x-intercepts in the top panel of Figure 1) corresponds to visual acuity measured with an ETDRS or other high contrast letter chart (shown in the bottom panel of Figure 1).29 As illustrated in the top panel of Figure 1, Chung and Legge demonstrated that to a good approximation, the contrast sensitivity function has the same shape for people with low vision as it does for normally sighted people when plotted on log contrast sensitivity versus log spatial frequency coordinates.30

Figure 1.

The top figure compares log contrast sensitivity as a function of log spatial frequency for the average of 5 normally sighted subjects (black points) and two low vision patients (green points and red points) from Chung and Legge.30 Y-intercept values are the log contrast sensitivity at the peak of the CSF for normal (black) and low vision patients (green and red) and x-intercept values are the respective cut-off frequencies. The middle figure is log contrast sensitivity at the peak of the contrast sensitivity vs spatial frequency function (CSF) vs log contrast sensitivity measured with the Pelli-Robson chart. The bottom figure illustrates log cut-off frequency for the CSF vs log visual acuity measured with the ETDRS chart expressed in units of log spatial frequency (unpublished data from 80 low vision patients obtained in 1994). The solid line in the middle and bottom figures is the identity line (slope=1 and intercept=0).

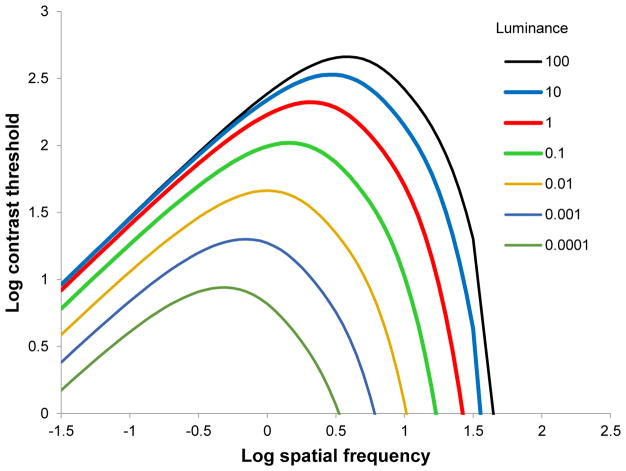

The contrast threshold function is the inverse of the contrast sensitivity function (−log contrast sensitivity). As illustrated by the area within the red and black curves in Figure 2, only contrasts versus spatial frequency in the image that fall above the contrast threshold function will be visible to the person. For most natural images, including images of faces, contrast decreases in inverse proportion to spatial frequency (1/f contrast spectrum, which when plotted on log contrast versus log spatial frequency coordinates is a line with negative slope).31,32 As shown by the solid green line in Figure 2, a normal face at 10 feet has high contrast at low spatial frequencies and a 1/f drop in contrast with increasing spatial frequency. At about 10 cycles/degree, contrast in the face image is too low to be visible to the normally sighted person (solid line falls below the normal contrast threshold function). For the low vision patient, all information in the face image above 3 cycles/degree is invisible. If the face image is magnified by 5X, its contrast spectrum will be shifted to the left by 0.7 log cycles/degree (dashed green line). But, because this patient’s contrast thresholds are elevated overall by 1 log unit, magnification would not improve the visibility of the part of the face contrast spectrum that was visible to the normally sighted person but below the patient’s contrast threshold in the unmagnified image (falls within the black curve but not the red curve). Indeed, in this example, magnification could make the visibility of the face image even worse for the patient. Contrast at spatial frequencies between 0.5 and 1 log cycles/degree on the display has to be increased by at least an amount ranging from 0.1 to 0.75 log unit over the frequencies of interest in order to optimize the visibility of the unmagnified face image. Peli et al. first demonstrated the feasibility of this frequency selective contrast enhancement strategy in 1984, but a considerable amount of work still needs to be done to develop and test the optimal contrast enhancement algorithm for low vision users representing a wide variety of visual impairment.16,33

Figure 2.

Log contrast threshold vs log spatial frequency for a normally sighted person (black curve) and a low vision patient (red curve). The area within the red curve represents contrasts that are visible to the patient. The area within the black curve represents contrasts that are visible to a normally sighted person.
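The effect of magnification on a 1/f contrast spectrum can be worked through numerically. The following sketch assumes an idealized spectrum in which contrast is exactly inversely proportional to spatial frequency; the anchor value `contrast_at_1_cpd` is hypothetical and is not taken from Figure 2.

```python
import math


def log_frequency_shift(magnification: float) -> float:
    """Leftward shift of the image spectrum in log10 cycles/degree."""
    return math.log10(magnification)


def one_over_f_contrast(frequency_cpd: float, contrast_at_1_cpd: float) -> float:
    """Contrast of an idealized 1/f spectrum at a given spatial frequency."""
    return contrast_at_1_cpd / frequency_cpd


def contrast_after_magnification(retinal_frequency_cpd: float,
                                 magnification: float,
                                 contrast_at_1_cpd: float) -> float:
    """Contrast found at a retinal frequency once the image is magnified.

    Content seen at retinal frequency f after magnification M originated at
    frequency f * M in the unmagnified image, where a 1/f spectrum carries
    M times less contrast.
    """
    return one_over_f_contrast(retinal_frequency_cpd * magnification,
                               contrast_at_1_cpd)


if __name__ == "__main__":
    print(round(log_frequency_shift(5.0), 2))  # shift for 5X magnification
```

For a pure 1/f spectrum, shifting the line left by log M is equivalent to lowering it by log M at every retinal frequency, which is one way to see why magnification alone may not help a patient whose contrast thresholds are elevated across all frequencies.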

As shown in Figure 3, for a normally sighted person both the maximum contrast sensitivity and the cut-off frequency increase with mean luminance.34,35 The same luminance dependence of the contrast sensitivity function has been shown for patients with retinal diseases.36 The increase in these two parameters with increasing luminance explains why increasing ambient light can be helpful to low vision patients, although manipulating the ambient light level alone does nothing to change image contrast, which in the environment is determined by the ratio of reflectances. Even though visual acuity/cut-off frequency increases with luminance for both normally sighted and low vision observers, that occurs only over a limited range, after which it asymptotes at a maximum value. Therefore, contrast enhancement alone is not sufficient compensation for most patients, and it also is necessary to magnify the image to compensate for the loss of resolution (no benefit is gained from enhancing contrast of spatial frequencies that exceed the cut-off). However, as illustrated in Figure 2, middle spatial frequency bands (e.g., 3 to 7 cycles/degree) are often above the resolution limit but still below contrast threshold for the patient.30 Using digital image processing, with current technology we can now enhance the contrast of selected spatial frequency bands in live video images without significant frame delays.

Figure 3.

Normal log contrast sensitivity for different average luminance levels (see legend). Cut-off frequency (corresponds to visual acuity) increases with luminance. Contrast sensitivity at the peak also increases with luminance.

It has been demonstrated in past studies that individuals with low vision can recognize frequency-selective contrast-enhanced images of faces better than unprocessed images of faces.16,33,37 In addition, removing the image detail may actually improve recognition by reducing crowding in the image for the low vision patient.9 Because of between-patient variations in the contrast sensitivity function,30 it most likely will be necessary to custom-prescribe the optimal parameters for contrast enhancement.
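A minimal sketch of frequency-selective contrast enhancement on a one-dimensional luminance profile is given below. It is not the algorithm of Peli et al.; it simply scales the Fourier amplitudes inside a chosen band and leaves the mean luminance untouched. The band limits and gain are the hypothetical "prescribable" parameters, and frequencies are expressed in cycles per profile (converting to cycles/degree would require the angular size of a pixel). Clipping to the display range is omitted.

```python
import cmath
import math


def dft(signal):
    """Discrete Fourier transform of a real or complex sequence."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def inverse_dft(spectrum):
    """Inverse transform, returning the real part of each sample."""
    n = len(spectrum)
    return [(sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
                 for k in range(n)) / n).real
            for t in range(n)]


def enhance_band(luminance, low_cycles, high_cycles, gain):
    """Scale amplitudes of components with low_cycles <= frequency <= high_cycles."""
    n = len(luminance)
    spectrum = dft(luminance)
    for k in range(1, n):  # k = 0 is the mean luminance and is left unchanged
        cycles = min(k, n - k)  # treat positive and negative frequencies alike
        if low_cycles <= cycles <= high_cycles:
            spectrum[k] *= gain
    return inverse_dft(spectrum)
```

As a usage example, a profile containing a 4-cycle and a 10-cycle grating passed through `enhance_band(profile, 3, 5, 2.0)` has the amplitude of the 4-cycle component doubled while the 10-cycle component and the mean are preserved.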

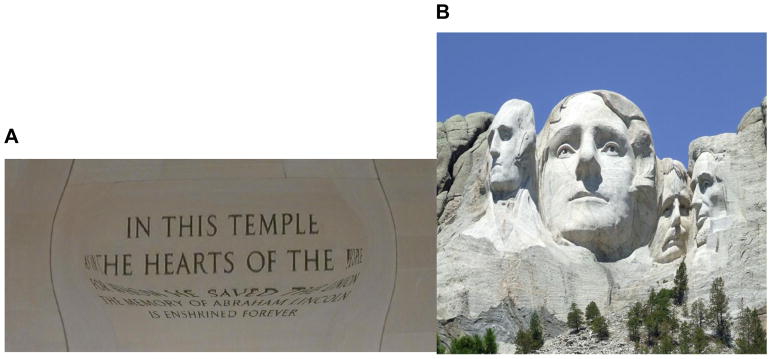

Most video magnifiers incorporate contrast stretching. As illustrated in the middle panel of Figure 4, contrast stretching consists of mapping pixel intensities above a criterion value to the maximum intensity, mapping pixel intensities below another criterion to the minimum intensity, and linearly rescaling the pixel intensities that fall between the two criteria. To minimize distorting the color or introducing color artifacts, contrast stretching should be limited to the luminance component of the video signal (L channel in the Lab color space), as was done in the middle panel of Figure 4. However, this type of contrast stretching at the pixel level does not take spatial frequency information into account.

Figure 4.

Left picture: original unprocessed image. Middle picture: contrast stretched in the luminance (L) channel of the original image and combined with unaltered color channel images (a,b). Right picture: unsharp mask applied to luminance (L) channel of original picture and combined with unaltered color channel images (a,b).
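The contrast stretching operation described above can be sketched as a piecewise-linear mapping applied to the luminance channel only. The example assumes the L channel has already been separated from the color channels and is stored as a list of rows with values between 0 and 100; the two criterion values `low` and `high` are chosen by the caller.

```python
def stretch_contrast(luminance, low, high, out_min=0.0, out_max=100.0):
    """Piecewise-linear contrast stretch of a 2D luminance (L) channel.

    Values at or below `low` map to out_min, values at or above `high` map
    to out_max, and values in between are rescaled linearly.
    """
    if high <= low:
        raise ValueError("high criterion must exceed low criterion")
    scale = (out_max - out_min) / (high - low)
    stretched = []
    for row in luminance:
        new_row = []
        for value in row:
            if value <= low:
                new_row.append(out_min)
            elif value >= high:
                new_row.append(out_max)
            else:
                new_row.append(out_min + (value - low) * scale)
        stretched.append(new_row)
    return stretched
```

Because each pixel is mapped independently of its neighbors, the operation changes contrast equally at all spatial frequencies, which is the limitation noted in the text.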

Edge enhancement is another contrast enhancement strategy that many of the current head-mounted LVESs use to compensate for reduced contrast sensitivity. Edge enhancement selectively stretches the contrast at sharp luminance gradients in the image (at edges of objects and features).38,39 Usually edges are defined by high spatial frequencies. One very old photographic method that could be applied to enhancing contrast of digital video images in a defined frequency band is unsharp masking. For this technique, the video image is masked with a blurred negative copy of the image (low frequencies only), which leaves only frequencies that are higher than the cut-off frequency of the mask. The contrast of the masked image is stretched and then multiplied with the original image. The right-most panel of Figure 4 illustrates the result of unsharp masking applied only to the luminance channel of the original image in the left-most panel. The technology now exists to implement these contrast enhancement strategies, but our knowledge of what constitutes optimal contrast enhancement for different patients and how effective contrast enhancement can be for different patients is still inadequate.
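A minimal sketch of unsharp masking on the luminance channel follows. It uses the common additive formulation (original plus a scaled high-frequency residual), which may differ from the exact combination step used to produce Figure 4, and it substitutes a simple box blur for whatever low-pass filter a production system would use. The parameters `radius` and `amount` are illustrative.

```python
def box_blur(image, radius):
    """Mean filter over a (2*radius+1)-square window, clamping at the borders."""
    height, width = len(image), len(image[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy = min(max(y + dy, 0), height - 1)
                    xx = min(max(x + dx, 0), width - 1)
                    total += image[yy][xx]
                    count += 1
            row.append(total / count)
        blurred.append(row)
    return blurred


def unsharp_mask(luminance, radius=1, amount=1.0, out_min=0.0, out_max=100.0):
    """Add the scaled difference between the image and its blurred copy."""
    blurred = box_blur(luminance, radius)
    return [[min(max(v + amount * (v - b), out_min), out_max)
             for v, b in zip(row, blurred_row)]
            for row, blurred_row in zip(luminance, blurred)]
```

Uniform regions are left unchanged, while pixels on either side of a luminance step are pushed further apart; the blur radius sets the lowest spatial frequency that is boosted.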

Image Remapping Strategies

Typically, there is one-to-one, one-to-many (in the case of magnification), or many-to-one (in the case of minification) mapping of camera pixels to display pixels. Loshin and Juday suggested that pixel mapping could be customized to distort images in order to prevent visual information from falling in the patient’s scotoma.17 As shown in Figure 5, the image can be torn and stretched around the scotoma. This form of local image remapping produces distortions in the image. Some demonstration studies have been reported that suggest such remapping could be beneficial to the patient, especially for reading.40–42 However, to properly implement this remapping strategy, the tear and distortion of the image on the display would have to be stabilized on the retina to keep it registered with the patient’s scotoma regardless of the direction of gaze. This approach requires eye tracking built into the head-mounted system with real time image remapping occurring at video frame rates.

Figure 5.

Image remapped around scotoma (black area) with a tear in the image and distortions to prevent any information from being lost.

We developed an image remapping method for magnification, which could best be described as a virtual bioptic telescope. This new magnification strategy, which is now incorporated in the IrisVision, consists of a magnified region of interest, called the “magnification bubble” that is embedded in a larger unmagnified field of view. Our approach borrows from some of the earlier ideas of Loshin and Juday, but like a bioptic telescope, head movements rather than eye movements are used to relocate the bubble to a new location. This approach avoids inherent problems associated with current eye tracking systems such as poor accuracy and precision, poor reliability, time lags because of frame delays, and difficulty with calibrations for users who cannot fixate reliably. The size and shape of the magnification bubble can be manipulated by the user to accommodate individual preferences and the requirements of specific tasks. For example, as shown in Figure 6A and 6B, a rectangular view might be optimal for reading and a circular view for seeing facial expressions. The amount of magnification within the bubble also can be controlled by the user. Unlike a conventional bioptic telescope, which overlays a magnified image on the unmagnified field of view and creates an artifactual ring scotoma, the magnification bubble distorts the image by remapping the transition from the magnified image in the bubble to the unmagnified surrounding field so as not to overlay and lose any visual information.

Figure 6.

Two magnification bubbles of different shape. 6A depicts a rectangular bubble potentially more helpful for reading tasks, while 6B depicts a circular bubble that may be used for object and facial recognition. Note the distortion around margins of the bubble to prevent information from being lost due to image overlap.
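One way to embed a magnified region in an unmagnified field without discarding information is a continuous, monotonic radial remapping. The sketch below is a hypothetical illustration for a circular bubble and is not the IrisVision implementation: inside the bubble the display samples the camera image at a radius reduced by the magnification, outside an outer radius the mapping is the identity, and a linear transition zone in between compresses the intervening image content.

```python
def source_radius(display_radius, bubble_radius, outer_radius, magnification):
    """Camera-image radius to sample for a given display radius.

    Radii are measured in pixels from the bubble centre. The mapping is
    continuous and strictly increasing, so every camera radius appears
    somewhere on the display.
    """
    if not 0 < bubble_radius < outer_radius:
        raise ValueError("require 0 < bubble_radius < outer_radius")
    if magnification < 1:
        raise ValueError("magnification must be at least 1")
    inner_source = bubble_radius / magnification
    if display_radius <= bubble_radius:
        return display_radius / magnification  # magnified interior
    if display_radius >= outer_radius:
        return display_radius  # unmagnified surround
    # Transition zone: interpolate so both boundaries join without a gap.
    t = (display_radius - bubble_radius) / (outer_radius - bubble_radius)
    return inner_source + t * (outer_radius - inner_source)
```

With a 100-pixel bubble, a 200-pixel outer radius, and 4X magnification, the transition zone displays camera radii 25 to 200 within display radii 100 to 200, which is the distortion at the bubble margin that replaces the ring scotoma of an optical bioptic.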

Presumably low vision patients fixate the bubble with the macula, or with a preferred retinal locus (PRL) if the macula is impaired, to look at the magnified area of interest.43–45 Experiments simulating scotomas in normally sighted subjects imply that training can influence development and location of the PRL.46,47 Because there are currently no eye tracking capabilities incorporated into the system, head movements alone change the visual information that falls in the bubble. Users can accomplish this movement of the bubble to a new region of interest quite comfortably and with little training. In addition to being able to manipulate magnification within the bubble and to change the size and shape of the bubble, the user has the option of manually relocating the bubble to any part of the video display. With an integrated eye tracking system in the future, it might be feasible to use eye movements to relocate the bubble on the display so that it always stays superposed on the part of the retina used for fixation (assuming the PRL is consistent under different viewing conditions and directions of gaze). Saunders and Woods have discussed system requirements that must be met to make such gaze-controlled image processing acceptable to the user.48 The development of accurate, precise, and reliable gaze-controlled real-time image processing in head-mounted displays, which is being pursued for many different applications, creates research opportunities for problems that have received relatively little attention in the low vision rehabilitation field.

One recent study completed by Aguilar and Castet tested a gaze-controlled system which magnified a portion of text while maintaining global viewing of the rest of the text. Their results suggest that user preference and reading speed were greater for the gaze-controlled condition than for uniformly applied magnification without any specificity to region of interest (mimicking commercial CCTVs), but there was no significant difference between the gaze-controlled system and a system with zoom-induced text reformatting.49 We know that limitations exist in eye pointing and visual search with this type of gaze-contingent system from experiments described by Ashmore et al. using a fisheye magnification system in normally sighted observers. Based on information they gathered, hiding this magnification bubble during visual search leads to improvement in speed and accuracy over eye pointing with no bubble or with a bubble that is continually attached to the user’s gaze.50 We also know that fixation stability in patients with central vision impairment is generally poor,51,52 which may complicate eye tracking during calibration and subsequently during image remapping. More research is needed to determine the most effective way to incorporate eye tracking and gaze-control with magnification within LVESs when performing various tasks.

Conceptually, one could extend the image remapping strategy to produce custom distortions in images that are designed to undo the effects of, and thereby correct, metamorphopsia. This approach also would require precise eye tracking to register the compensating image distortions with the part of the retina experiencing metamorphopsia. Virtual and augmented reality systems are creating a demand for high performance eye tracking systems in head-mounted displays in order to increase computing efficiency with gaze-referenced high level of detail rendering of graphical objects, so ongoing research and development is soon likely to produce the enabling eye tracking technology at a price consumers can afford.

Motion Compensation Strategies

The visual vestibulo-ocular reflex (VOR) produces eye movements that compensate for head movements to keep the image stationary on the retina (i.e., “doll’s eye” phenomenon). When viewing an angularly magnified image, the velocity of image motion relative to head motion is magnified by the same factor.53 When there is a mismatch between velocities recorded by the visual system and those recorded by the vestibular system, the individual can adapt to a limited extent with neural changes in the gain of the VOR. However, the range of physiological gain change is too small to be useful for the levels of magnification used for low vision enhancement, and this results in image slip on the retina.53,54 With increased levels of magnification, the image presented to the retina spans a larger field of view, and the magnitude of VOR eye movements needed to properly compensate for image motion eventually falls outside the range of gain control of the reflex. Image motion velocities greater than 20 degrees per second (which, for example, are commonly experienced when walking) progressively decrease contrast sensitivity and visual acuity with increasing velocity,55 which often is described clinically as dynamic visual acuity.7,14 Because the camera incorporated in a head-mounted LVES moves with the user’s head movements, magnified image motion not only decreases image resolution, but also increases the risk for motion sickness and other symptoms of visual discomfort as the user intentionally or inadvertently moves his/her head.56 Embedding the magnification bubble in a bioptic design allows for the visual information outside of the bubble to be presented with no added magnification, which minimizes the overall image motion experienced with increased levels of magnification. Although this strategy reduces the susceptibility to motion sickness, magnified image motion within the bubble still imposes limits on the user’s performance because of dynamic visual acuity limitations on resolution.14
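The mismatch between magnified image motion and the VOR can be illustrated with a simplified linear model, in which the image moves at the magnification times the head velocity and the eye counter-rotates at the VOR gain times the head velocity. This model and the default gain of 1.0 are assumptions made for illustration; only the 20 degrees per second figure comes from the text.

```python
def retinal_slip_deg_per_s(head_velocity, magnification, vor_gain=1.0):
    """Residual image velocity on the retina under a simplified linear model."""
    return abs(magnification - vor_gain) * abs(head_velocity)


def max_head_velocity_deg_per_s(magnification, vor_gain=1.0, slip_limit=20.0):
    """Fastest head rotation that keeps retinal slip at or below slip_limit."""
    mismatch = abs(magnification - vor_gain)
    if mismatch == 0:
        return float("inf")  # image motion fully compensated by the reflex
    return slip_limit / mismatch
```

Under these assumptions, 4X magnification with an unadapted VOR turns a 10 degrees per second head rotation into 30 degrees per second of retinal slip, and head velocity would have to stay below about 6.7 degrees per second to remain under the 20 degrees per second limit.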

Mismatches between head and image motion beyond the range of VOR adaptation are a problem that has plagued virtual and augmented reality systems for decades.57–59 But advances in angular and linear motion sensor technology, now routinely incorporated in smartphones and modern virtual and augmented reality systems, and increases in computer graphics speed and power have made it possible to inexpensively match image motion to head motion plus the VOR. Improvements in the accuracy and precision of MEMS accelerometers and gyroscopes aid in better calculation of linear and angular motion needed to compensate for magnified image motion. For example, with the current capabilities of a smartphone, it is now possible to convert angular magnification to linear magnification in head-mounted LVESs. This strategy has been implemented in the IrisVision by texture mapping a high resolution image from the camera, or from stored or streamed media content, onto a virtual screen that moves in virtual reality at the negative of the velocity of natural head movements. This strategy eliminates artifactual image motion from the VVOR when viewing snapshot images and media content. With further development, this approach has the potential of eliminating magnified image motion from head-mounted video camera movements. This strategy makes any amount of magnification practical in an arbitrarily large field of view that can be explored with head movements.
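The idea of a virtual screen that moves at the negative of head velocity can be sketched for a single rotation axis. The example below is a hypothetical illustration rather than the IrisVision implementation: it integrates gyroscope yaw-rate samples into a head orientation and then computes where a world-fixed screen should be drawn relative to the line of sight. Sensor drift correction and the other rotation axes are ignored.

```python
def integrate_yaw(gyro_rates_deg_per_s, dt_s, initial_yaw_deg=0.0):
    """Accumulate yaw-rate samples into a head yaw angle for each sample."""
    yaw = initial_yaw_deg
    yaws = []
    for rate in gyro_rates_deg_per_s:
        yaw += rate * dt_s
        yaws.append(yaw)
    return yaws


def screen_offset_in_view(screen_yaw_world_deg, head_yaw_deg):
    """Angular position of a world-fixed virtual screen relative to the line of sight.

    As the head turns by some angle, the screen is drawn displaced by the
    opposite angle, so it appears stationary in the world.
    """
    return screen_yaw_world_deg - head_yaw_deg
```

Because the screen is stationary in the virtual world regardless of how large it is drawn, enlarging it acts like moving closer to a physical screen, and the normal VOR remains appropriate for stabilizing gaze on it.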

When coupled with eye tracking, image motion compensation strategies could be employed to neutralize oscillopsia in conditions like nystagmus or bilateral vestibular loss. One could also consider extending motion compensation strategies to enhancement of visual flow fields, which are important to perceiving self-motion in the environment, maintaining balance and preventing falls, and to judging closing velocities to prevent collisions. Such an extension is likely to press the state of current technology. With the constantly accelerating rate of technology development, it is not too early to begin investigating these possibilities.

Augmented Reality Strategies

Unlike virtual reality (VR), which refers to a computer-generated environment in which the user is immersed and with which the user can interact, augmented reality (AR) refers to graphic overlays on, or graphic objects inserted in, live images of the real environment. Eli Peli’s strategy of vision multiplexing, which can be implemented optically, digitally, or with hybrid technology, is a pioneering application of augmented reality to low vision enhancement.60 The term “multiplexing” is used when multiple streams of information share a single mode of transmission. In the case of vision, multiplexing refers to a controlled form of spatial diplopia (superposed semi-transparent images that are visible simultaneously) or temporal diplopia (alternate presentation or alternate suppression of superposed images).60,61 Two examples of optical strategies for implementing vision multiplexing are Peli prisms,62 which in the case of hemianopic visual fields superimpose images from unseen portions of the peripheral field onto non-fixating seeing areas of the remaining field, and the intraocular telescope, which magnifies a wide-field, centrally viewed image in one eye only. Both methods can be implemented in AR with a head-mounted (e.g., SeeBoost) or heads-up (e.g., Google Glass or Cyber Eyez) display.60
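A digital version of the two forms of multiplexing described above can be sketched as follows. This is only an illustration under stated assumptions: images are small grayscale arrays held as nested lists with values from 0 to 1, spatial multiplexing is modeled as simple alpha blending, and temporal multiplexing as frame alternation. The function names and parameters are hypothetical and are not drawn from any cited device.

```python
# Illustrative spatial and temporal vision multiplexing on toy images.


def spatial_multiplex(primary, overlay, alpha=0.5):
    """Superpose two equally sized grayscale images as semi-transparent
    layers; alpha is the weight given to the overlay."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return [
        [(1.0 - alpha) * p + alpha * o for p, o in zip(p_row, o_row)]
        for p_row, o_row in zip(primary, overlay)
    ]


def temporal_multiplex(primary_frames, overlay_frames, period=2):
    """Alternate presentation: show the overlay on the last frame of every
    `period` frames and the primary stream otherwise."""
    if period < 1:
        raise ValueError("period must be at least 1")
    return [
        o if i % period == period - 1 else p
        for i, (p, o) in enumerate(zip(primary_frames, overlay_frames))
    ]


if __name__ == "__main__":
    scene = [[0.0, 1.0], [0.5, 0.5]]
    minified_wide_view = [[1.0, 1.0], [0.0, 0.0]]
    print(spatial_multiplex(scene, minified_wide_view, alpha=0.25))
    print(temporal_multiplex(["p0", "p1", "p2", "p3"], ["o0", "o1", "o2", "o3"]))
```

The blending weight and the alternation period are the kinds of parameters that would need to be set per patient; the values shown here are arbitrary.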

Considerable basic research has been conducted on perceptual “filling-in” phenomena with artificial scotomas and the physiological blind spot.63,64 Most low vision patients with central scotomas are unaware of blind areas in their vision because the visual system covers them over with images that blend in with the background.65,66 This filling-in phenomenon causes objects, words, facial features, etc. to unexpectedly vanish or be replaced with incomprehensible patterns manufactured by the visual system. Consequently, the scotoma interferes with reading, visual search, face recognition, reaching for and grasping objects, and detecting obstacles. Making the scotoma visible by controlling the filling-in (e.g., with stabilized graphics annuli that are distinct from the rest of the background)67 may prove to be helpful in that at least the person would know where the blind spot is and how it has to be moved in order to look behind it.68 Currently, we do not know what kind of filling-in will occur with augmented reality graphic images (or image remapping around scotomas as discussed earlier) and whether or not it will cause confusion. Much research still needs to be done to further understand the filling-in process and to determine if and how we can control the image that fills in the blind spot.
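As a rough illustration of a gaze-stabilized annulus, the sketch below draws a ring around the scotoma location on each frame. Everything specific here is an assumption: the gaze position is taken to come from a hypothetical eye tracker in pixel coordinates, the scotoma is assumed to sit at a fixed pixel offset from the gaze position, and the image is a toy grayscale array held as nested lists.

```python
# Toy sketch of a gaze-stabilized ring marking the scotoma border.


def annulus_mask(width, height, center_xy, inner_r, outer_r):
    """Boolean mask that is True for pixels whose distance from the center
    lies between inner_r and outer_r (inclusive)."""
    cx, cy = center_xy
    return [
        [inner_r ** 2 <= (x - cx) ** 2 + (y - cy) ** 2 <= outer_r ** 2
         for x in range(width)]
        for y in range(height)
    ]


def draw_scotoma_marker(image, gaze_xy, scotoma_offset_xy,
                        inner_r, outer_r, marker_value=1.0):
    """Return a copy of the image with a ring drawn around the scotoma.

    gaze_xy is the tracked gaze position (hypothetical eye tracker output)
    and scotoma_offset_xy is the assumed offset of the scotoma center from
    it. Re-running this on every frame keeps the ring stabilized on the
    retina as the eye moves.
    """
    height = len(image)
    width = len(image[0])
    center = (gaze_xy[0] + scotoma_offset_xy[0],
              gaze_xy[1] + scotoma_offset_xy[1])
    mask = annulus_mask(width, height, center, inner_r, outer_r)
    return [
        [marker_value if mask[y][x] else image[y][x] for x in range(width)]
        for y in range(height)
    ]


if __name__ == "__main__":
    frame = [[0.0] * 7 for _ in range(7)]
    marked = draw_scotoma_marker(frame, gaze_xy=(2, 3), scotoma_offset_xy=(1, 0),
                                 inner_r=2, outer_r=3)
    for row in marked:
        print("".join("#" if v else "." for v in row))
```

Whether such a marker resists filling-in, and what ring width or contrast would be needed, are exactly the open questions raised above; the sketch only shows the bookkeeping.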

Combined with object and face recognition artificial intelligence software (e.g., OrCam MyEye and Microsoft Seeing AI application), augmented reality strategies could be employed to assist low vision patients by augmenting visible, but uninterpretable visual information (e.g., highlighting obstacles to assist mobility, highlighting scan paths or fixation history to assist visual search, and tagging or captioning objects and faces to assist with recognition). Combined with GPS-based navigation software, augmented reality strategies also could be employed to assist low vision patients with wayfinding. Many such systems undoubtedly will be developed for the normally-sighted consumer, so the implementation of such hybrid AR strategies might be more of an issue of adaptation or accommodation, rather than an independent effort to develop a dedicated LVES product.

Future Innovation

The low vision field needs to prepare to realize the promise of head-mounted LVESs as virtual reality and augmented reality technology development expands. This preparation can be accomplished only with innovative low vision research. We need to learn more about eye movements in the visually impaired population, how the neural visual system adapts to visual impairments, and how people with different types and degrees of visual impairment respond to various image processing strategies that are now possible to implement at video frame rates with head-mounted computer technology. Using these strategies to simplify and create improved fixation patterns may, for example, enhance reading performance in patients with central vision impairment.69 Future advances in head-mounted display technology that can be used as a platform for the ultimate head-mounted LVES still need to address the issues of size, weight, field of view, resolution, battery life, user interfacing, and attractive design while also having the computing power to integrate customizable low vision enhancement operations. The development of display technology with improved diffractive and lightweight optics has helped in the advancement of these systems, but in order to increase both resolution and field of view, even better high-density displays must be developed along with high-density drivers. Fortunately, the requirements for low vision applications are less demanding in this regard than are the requirements of the general augmented reality and virtual reality consumer market, so the burden of enabling hardware development does not fall on the low vision industry.

Arguably the single most important required innovation still outstanding is to provide binocular disparity information to the patient under conditions of magnification. Because scotomas are rarely binocularly symmetric, binocular viewing, with its increased field of view and accompanying depth cues, offers potential advantages to the patient. However, diplopia and rivalry have negative consequences, and constant suppression would defeat attempts to gain a binocular advantage.70,71 Also, scotomas and monocularly-measured preferred retinal locations are not likely to fall on corresponding points.72 We do not know how patients respond when this happens, but it is certainly an area of needed research and exploration. Binocular disparity of objects in different depth planes drives vergence, which will alter the overlap of scotomas in the two eyes, resulting in a change in the binocular scotoma.73 This result has consequences for avoiding diplopia in remapped images. Diplopia also is a concern for magnification within a region of interest centered on the preferred retinal locus because angular magnification (pixel magnification) is employed, which also magnifies binocular disparity. Thus, the unmagnified part of the scene might be fused, though not necessarily at zero disparity because of Panum’s area, and angular magnification in the region of interest then magnifies the disparity angle beyond the Panum tolerance limit, causing diplopia.
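The disparity argument in the preceding paragraph reduces to simple arithmetic, sketched below. The model assumes that angular magnification scales the disparity angle linearly and that fusion fails once the magnified disparity exceeds a single tolerance value. The tolerance is passed in as a parameter because its size varies with retinal location and stimulus; the 10 arcmin figure in the example is purely illustrative, and the function names are hypothetical.

```python
# Arithmetic sketch: angular magnification scales binocular disparity.


def magnified_disparity(disparity_arcmin, magnification):
    """Disparity angle after angular (pixel) magnification."""
    return magnification * disparity_arcmin


def exceeds_fusion_limit(disparity_arcmin, magnification, panum_limit_arcmin):
    """True if the magnified disparity falls outside the assumed fusion
    tolerance, which in this model predicts diplopia."""
    return abs(magnified_disparity(disparity_arcmin, magnification)) > panum_limit_arcmin


def max_fusible_magnification(disparity_arcmin, panum_limit_arcmin):
    """Largest magnification that keeps a given disparity within the limit."""
    if disparity_arcmin == 0:
        return float("inf")
    return panum_limit_arcmin / abs(disparity_arcmin)


if __name__ == "__main__":
    limit = 10.0  # illustrative tolerance in arcmin, not a measured value
    for m in (1.0, 2.0, 4.0):
        print(m, magnified_disparity(5.0, m), exceeds_fusion_limit(5.0, m, limit))
```

With these illustrative numbers, a 5 arcmin disparity that is fused without magnification reaches the limit at 2x and exceeds it at 4x, which is the situation the paragraph describes for a magnified region of interest embedded in an unmagnified scene.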

The next generation of low vision enhancement will employ image processing algorithms individually prescribed to optimize each patient’s vision. While there is some existing research highlighting the benefits of image remapping and contrast enhancement image processing techniques,9 additional research on optimizing algorithm parameters and implementing them in commercially available technology is needed to increase the potential benefit of low vision enhancement systems for individual patients. These new devices and low vision enhancement strategies require skilled rehabilitation services, and the field therefore has to be prepared to train the patient.

Eye care professionals will likely soon have to learn new methods of vision rehabilitation. A similar situation was created on a small scale with the introduction of the implantable miniature telescope74 and with various competing versions of phosphene-based prosthetic vision systems.75,76 While optics and CCTVs are still the convention, with new head-mounted LVES and artificial intelligence products we are experiencing the vanguard of a new wave of technology that soon will demand significant changes in how low vision patients are evaluated and in the rehabilitation services that are provided to them. The limitations of optical aids point to the potential gaps that electronic systems may fill across all individual users within the low vision population. With growing technology, we move closer to truly customizing products for each individual visual impairment.

Acknowledgments

National Eye Institute grant R01EY026617 (Massof – PI). National Eye Institute grant R44EY028077 (Werblin – PI).

References

1. Rovner BW, Casten RJ. Activity Loss and Depression in Age-Related Macular Degeneration. Am J Geriatr Psychiatry. 2002;10:305–10.

2. West SK, Rubin GS, Broman AT, et al. How Does Visual Impairment Affect Performance on Tasks of Everyday Life? The See Project. Salisbury Eye Evaluation. Arch Ophthalmol. 2002;120:774–80. doi: 10.1001/archopht.120.6.774.

3. Rovner BW, Casten RJ, Tasman WS. Effect of Depression on Vision Function in Age-Related Macular Degeneration. Arch Ophthalmol. 2002;120:1041–4. doi: 10.1001/archopht.120.8.1041.

4. Salive ME, Guralnik J, Glynn RJ, et al. Association of Visual Impairment with Mobility and Physical Function. J Am Geriatr Soc. 1994;42:287–92. doi: 10.1111/j.1532-5415.1994.tb01753.x.

5. Rubin GS, Bandeen-Roche K, Huang GH, et al. The Association of Multiple Visual Impairments with Self-Reported Visual Disability: See Project. Invest Ophthalmol Vis Sci. 2001;42:64–72.

6. Dickinson C. Low Vision: Principles and Practice. Oxford: Butterworth; 1988.

7. Genensky S, Baran P, Moshin H, Steingold H. A closed circuit TV system for the visually handicapped. Am Found Blind Res Bull. 1969;19:191.

8. Moshtael H, Aslam T, Underwood I, Dhillon B. High tech aids low vision: a review of image processing for the visually impaired. Transl Vis Sci Technol. 2015;4:6. doi: 10.1167/tvst.4.4.6.

9. Wolffsohn JS, Peterson RC. A Review of Current Knowledge on Electronic Vision Enhancement Systems for the Visually Impaired. Ophthalmic Physiol Opt. 2003;23:35–42. doi: 10.1046/j.1475-1313.2003.00087.x.

10. Vargas-Martin F, Peli E. Augmented-view for Restricted Visual Field: Multiple Device Implementations. Optom Vis Sci. 2002;79:715–23. doi: 10.1097/00006324-200211000-00009.

11. Peli E. Head mounted display as a low vision aid. Proceedings of the Second International Conference on Virtual Reality and Persons with Disabilities; Northridge, CA: Center on Disabilities, California State University, Northridge; 1994. pp. 115–22.

12. Leat SJ, Mei M. Custom-Devised and Generic Digital Enhancement of Images for People with Maculopathy. Ophthalmic Physiol Opt. 2009;29:397–415. doi: 10.1111/j.1475-1313.2008.00633.x.

13. Massof R. Electro-optical head-mounted low vision enhancement. Practical Optom. 1998;9:214–20.

14. Harper R, Culham L, Dickinson C. Head Mounted Video Magnification Devices for Low Vision Rehabilitation: A Comparison with Existing Technology. Br J Ophthalmol. 1999;83:495–500. doi: 10.1136/bjo.83.4.495.

15. Ehrlich JR, Ojeda LV, Wicker D, et al. Head-Mounted Display Technology for Low-Vision Rehabilitation and Vision Enhancement. Am J Ophthalmol. 2017;176:26–32. doi: 10.1016/j.ajo.2016.12.021.

16. Peli E, Peli T. Image-enhancement for the Visually Impaired. Opt Eng. 1984;23:47–51.

17. Loshin DS, Juday RD. The Programmable Remapper: Clinical Applications for Patients with Field Defects. Optom Vis Sci. 1989;66:389–95. doi: 10.1097/00006324-198906000-00009.

18. Massof RW, Rickman DL, Lalle PA. Low-Vision Enhancement System. J Hopkins Apl Tech D. 1994;15:120–5.

19. Massof R, Baker FH, Dagnelie G, et al. Low Vision Enhancement System: Improvements in Acuity and Contrast Sensitivity. Optom Vis Sci. 1995;72(Suppl):20.

20. Culham LE, Chabra A, Rubin GS. Clinical Performance of Electronic, Head-Mounted, Low-Vision Devices. Ophthalmic Physiol Opt. 2004;24:281–90. doi: 10.1111/j.1475-1313.2004.00193.x.

21. Culham LE, Chabra A, Rubin GS. Users’ Subjective Evaluation of Electronic Vision Enhancement Systems. Ophthalmic Physiol Opt. 2009;29:138–49. doi: 10.1111/j.1475-1313.2008.00630.x.

22. Colenbrander A, Fletcher D. Contrast Sensitivity and ADL Performance. Invest Ophthalmol Vis Sci. 2006;47(Suppl):5834.

23. Legge GE, Rubin GS, Luebker A. Psychophysics of Reading. V. The Role of Contrast in Normal Vision. Vision Res. 1987;27:1165–77. doi: 10.1016/0042-6989(87)90028-9.

24. Fiorentini A, Maffei L, Sandini G. The Role of High Spatial Frequencies in Face Perception. Perception. 1983;12:195–201. doi: 10.1068/p120195.

25. Hayes T, Morrone MC, Burr DC. Recognition of Positive and Negative Bandpass-Filtered Images. Perception. 1986;15:595–602. doi: 10.1068/p150595.

26. Nasanen R. Spatial Frequency Bandwidth Used in the Recognition of Facial Images. Vision Res. 1999;39:3824–33. doi: 10.1016/s0042-6989(99)00096-6.

27. Marron JA, Bailey IL. Visual Factors and Orientation-Mobility Performance. Am J Optom Physiol Opt. 1982;59:413–26. doi: 10.1097/00006324-198205000-00009.

28. Pelli DG. The visual requirements of mobility. In: Woo GC, editor. Low Vision. New York: Springer; 1987. pp. 134–46.

29. Robson JG. Spatial and temporal contrast-sensitivity functions of the visual system. J Opt Soc Am. 1966;56:1141–2.

30. Chung ST, Legge GE. Comparing the Shape of Contrast Sensitivity Functions for Normal and Low Vision. Invest Ophthalmol Vis Sci. 2016;57:198–207. doi: 10.1167/iovs.15-18084.

31. Field DJ. Relations between the Statistics of Natural Images and the Response Properties of Cortical Cells. J Opt Soc Am (A) 1987;4:2379–94. doi: 10.1364/josaa.4.002379.

32. Geisler WS. Visual Perception and the Statistical Properties of Natural Scenes. Annu Rev Psychol. 2008;59:167–92. doi: 10.1146/annurev.psych.58.110405.085632.

33. Peli E, Goldstein RB, Young GM, et al. Image Enhancement for the Visually Impaired. Simulations and Experimental Results. Invest Ophthalmol Vis Sci. 1991;32:2337–50.

34. Barten PG. Formula for the Contrast Sensitivity of the Human Eye. In: Miyake Y, Rasmussen DR, editors. Proc. SPIE 5294, Image Quality and System Performance; December 18, 2003; Bellevue, WA: International Society for Optics and Photonics; 2003. pp. 231–9.

35. Vanmeeteren A, Vos JJ. Resolution and Contrast Sensitivity at Low Luminances. Vision Res. 1972;12:825–33. doi: 10.1016/0042-6989(72)90008-9.

36. Alexander KR, Derlacki DJ, Fishman GA. Contrast Thresholds for Letter Identification in Retinitis Pigmentosa. Invest Ophthalmol Vis Sci. 1992;33:1846–52.

37. Peli E, Lee E, Trempe CL, et al. Image Enhancement for the Visually Impaired: The Effects of Enhancement on Face Recognition. J Opt Soc Am (A) 1994;11:1929–39. doi: 10.1364/josaa.11.001929.

38. Dawson BM. Image Filtering for Edge Enhancement. Technol Trends. 1986;20:93–8.

39. Peli E, Goldstein RB, Woods RL, et al. Wide-band Enhancement of TV Images for the Visually Impaired. Invest Ophthalmol Vis Sci. 2004;45(Suppl):4355.

40. Loshin DS, Juday RD, Barton RS. Design of a Reading Test for Low-Vision Image Warping. Visual Inform Process II. 1993;1961:67–72.

41. Ho JS, Loshin DS, Barton RS, et al. Testing of Remapping for Reading Enhancement for Patients with Central Visual Field Losses. Visual Inform Process IV. 1995;2488:417–24.

42. Gupta A, Mesik J, Engel SA, Smith R, et al. Beneficial effects of spatial remapping for reading with simulated central field loss. Invest Ophthalmol Vis Sci. 2018;59:1105–12. doi: 10.1167/iovs.16-21404.

43. Crossland MD, Engel SA, Legge GE. The Preferred Retinal Locus in Macular Disease: Toward a Consensus Definition. Retina. 2011;31:2109–14. doi: 10.1097/IAE.0b013e31820d3fba.

44. Fuchs W. Pseudo-fovea. In: Ellis WD, editor. A Source Book of Gestalt Psychology. London, England: Kegan Paul, Trench, Trubner & Company; 1938. pp. 357–61.

45. Timberlake GT, Mainster MA, Peli E, et al. Reading with a Macular Scotoma. I. Retinal Location of Scotoma and Fixation Area. Invest Ophthalmol Vis Sci. 1986;27:1137–47.

46. Barraza-Bernal MJ, Rifai K, Wahl S. A Preferred Retinal Location of Fixation Can Be Induced When Systematic Stimulus Relocations Are Applied. J Vis. 2017;17:11. doi: 10.1167/17.2.11.

47. Liu R, Kwon M. Integrating Oculomotor and Perceptual Training to Induce a Pseudofovea: A Model System for Studying Central Vision Loss. J Vis. 2016;16:10. doi: 10.1167/16.6.10.

48. Aguilar C, Castet E. Evaluation of a Gaze-Controlled Vision Enhancement System for Reading in Visually Impaired People. PLoS One. 2017;12:e0174910. doi: 10.1371/journal.pone.0174910.

49. Ashmore M, Duchowski AT, Shoemaker G. Efficient eye pointing with a fisheye lens. Proceedings of Graphics Interface; May 7, 2005; Toronto: Canadian Human-Computer Communications Society; 2005. pp. 203–10.

50. Culham LE, Fitzke FW, Timberlake GT, et al. Assessment of Fixation Stability in Normal Subjects and Patients Using a Scanning Laser Ophthalmoscope. Clin Vision Sci. 1993;8:551–61.

51. Rohrschneider K, Becker M, Kruse FE, et al. Stability of Fixation: Results of Fundus-Controlled Examination Using the Scanning Laser Ophthalmoscope. Ger J Ophthalmol. 1995;4:197–202.

52. Demer JL, Porter FI, Goldberg J, et al. Dynamic Visual Acuity with Telescopic Spectacles: Improvement with Adaptation. Invest Ophthalmol Vis Sci. 1988;29:1184–9.

53. Demer JL, Porter FI, Goldberg J, et al. Predictors of Functional Success in Telescopic Spectacle Use by Low Vision Patients. Invest Ophthalmol Vis Sci. 1989;30:1652–65.

54. Kelly DH. Visual Processing of Moving Stimuli. J Opt Soc Am (A) 1985;2:216–25. doi: 10.1364/josaa.2.000216.

55. Peli E. Visual, Perceptual, and Optometric Issues with Head-mounted Displays (HMD). Playa del Rey, CA: Society for Information Display; 1996.

56. Kennedy RS, Drexler J, Kennedy RC. Research in Visually Induced Motion Sickness. Appl Ergon. 2010;41:494–503. doi: 10.1016/j.apergo.2009.11.006.

57. Hettinger LJ, Riccio GE. Visually induced motion sickness in virtual environment. Presence. 1992;1:306–10.

58. Akiduki H, Nishiike S, Watanabe H, et al. Visual-Vestibular Conflict Induced by Virtual Reality in Humans. Neurosci Lett. 2003;340:197–200. doi: 10.1016/s0304-3940(03)00098-3.

59. Saunders DR, Woods RL. Direct Measurement of the System Latency of Gaze-Contingent Displays. Behav Res Methods. 2014;46:439–47. doi: 10.3758/s13428-013-0375-5.

60. Peli E. Vision Multiplexing: An Engineering Approach to Vision Rehabilitation Device Development. Optom Vis Sci. 2001;78:304–15. doi: 10.1097/00006324-200105000-00014.

61. Apfelbaum H, Apfelbaum D, Woods R, Peli E. The Effect of Edge Filtering on Vision Multiplexing. Digest of Technical Papers - SID International Symposium. 2005;36:1398–1401.

62. Peli E, Jung JH. Multiplexing Prisms for Field Expansion. Optom Vis Sci. 2017;94:817–29. doi: 10.1097/OPX.0000000000001102.

63. Andrews PR, Campbell FW. Images at the Blind Spot. Nature. 1991;353:308. doi: 10.1038/353308a0.

64. Ramachandran VS, Gregory RL. Perceptual Filling in of Artificially Induced Scotomas in Human Vision. Nature. 1991;350:699–702. doi: 10.1038/350699a0.

65. Schuchard RA. Perception of Straight Line Objects across a Scotoma. Invest Ophthalmol Vis Sci. 1991;32(Suppl):816.

66. Zur D, Ullman S. Filling-in of Retinal Scotomas. Vision Res. 2003;43:971–82. doi: 10.1016/s0042-6989(03)00038-5.

67. Spillmann L, Otte T, Hamburger K, et al. Perceptual Filling-in from the Edge of the Blind Spot. Vision Res. 2006;46:4252–7. doi: 10.1016/j.visres.2006.08.033.

68. Pratt JD, Stevenson SB, Bedell HE. Scotoma Visibility and Reading Rate with Bilateral Central Scotomas. Optom Vis Sci. 2017;94:279–89. doi: 10.1097/OPX.0000000000001042.

69. Calabrese A, Bernard JB, Faure G, et al. Clustering of Eye Fixations: A New Oculomotor Determinant of Reading Speed in Maculopathy. Invest Ophthalmol Vis Sci. 2016;57:3192–202. doi: 10.1167/iovs.16-19318.

70. Ross N, Goldstein J, Massof R. Association of Self-reported Task Difficulty with Binocular Central Scotoma Locations. Invest Ophthalmol Vis Sci. 2013;54 E-Abstract 2188.

71. Tarita-Nistor L, Gonzalez EG, Markowitz SN, et al. Binocular Interactions in Patients with Age-Related Macular Degeneration: Acuity Summation and Rivalry. Vision Res. 2006;46:2487–98. doi: 10.1016/j.visres.2006.01.035.

72. Tarita-Nistor L, Eizenman M, Landon-Brace N, et al. Identifying Absolute Preferred Retinal Locations During Binocular Viewing. Optom Vis Sci. 2015;92:863–72. doi: 10.1097/OPX.0000000000000641.

73. Arditi A. The Volume Visual-Field: A Basis for Functional Perimetry. Clin Vision Sci. 1988;3:173–83.

74. Hau VS, London N, Dalton M. The Treatment Paradigm for the Implantable Miniature Telescope. Ophthalmol Ther. 2016;5:21–30. doi: 10.1007/s40123-016-0047-5.

75. Chen SC, Suaning GJ, Morley JW, Lovell NH. Rehabilitation Regimes Based upon Psychophysical Studies of Prosthetic Vision. J Neural Eng. 2009;6:035009. doi: 10.1088/1741-2560/6/3/035009.

76. Xia P, Hu J, Peng Y. Adaptation to Phosphene Parameters Based on Multi-Object Recognition Using Simulated Prosthetic Vision. Artif Organs. 2015;39:1038–45. doi: 10.1111/aor.12504.