Abstract

Objective

With the increasing amount of information presented on current human–computer interfaces, eye‐controlled highlighting has been proposed, as a new display technique, to optimise users’ task performances. However, it is unknown to what extent the eye‐controlled highlighting display facilitates visual search performance. The current study examined the facilitative effect of eye‐controlled highlighting display technique on visual search with two major attributes of visual stimuli: stimulus type and the visual similarity between targets and distractors.

Method

In Experiment 1, we used digits and Chinese words as materials to explore the generalisation of the facilitative effect of the eye‐controlled highlighting. In Experiment 2, we used Chinese words to examine the effect of target‐distractor similarity on the facilitation of eye‐controlled highlighting display.

Results

The eye‐controlled highlighting display improved visual search performance when words were used as search targets and when the target‐distractor similarity was high. No facilitative effect was found when digits were used as search targets or when target‐distractor similarity was low.

Conclusions

The effectiveness of the eye‐controlled highlighting on a visual task was influenced by both stimulus type and target‐distractor similarity. These findings provided guidelines for modern interface design with eye‐based displays implemented.

Keywords: eye‐controlled, highlighting, stimulus type, target‐distractor similarity, visual search

What is already known about this topic

The eye‐controlled highlighting technique, in which an array of nine search items is highlighted based on the current gaze position of the user, has been shown to improve search performance when using digits and icons as the stimuli compared with a non‐highlighting condition.

Previous research has demonstrated the effects of a traditional highlighting technique while searching for a target among digits or Chinese characters.

Search efficiency decreases as the level of discriminability between the target and distractors decreases in a traditional interface.

What this topic adds

An eye‐controlled highlighting condition, adopting one item as a basic highlighting unit, could also improve search performance compared with a non‐highlighting condition.

Eye‐controlled highlighting technique was suitable for searching for targets among Chinese characters but not for targets among digits.

Eye‐controlled highlighting technique was more suitable when searching Chinese characters with greater similarity but was not suitable for distinct words.

Computer visual interface plays a crucial role in presenting information so as to support human–computer interactions (HCIs). With the increasing complexity of these interactions, the amount of information on computer visual interface becomes massive. It not only impairs users’ operation performance but also confuses users’ understanding of the presented information.

To enhance the effectiveness of information presentation, researchers developed dynamic techniques to present information based on users’ eye movement. Techniques of this kind are called gaze‐contingent displays (GCDs). A typical application of GCDs is the multi‐resolution display, whose display resolution can be dynamically changed according to users’ gaze (Duchowski, Cournia, & Murphy, 2004). The attended region will become sharp and clear (i.e., high resolution), while the un‐attended region will become blurry (i.e., low resolution). Currently, GCDs have been used in various HCI scenarios (Mauderer, 2017), including visual research (Geisler, Perry, & Najemnik, 2006), reading (Rayner, Castelhano, & Yang, 2009), virtual reality (Vinnikov & Allison, 2014), user interface design (Klauck, Sugano, & Bulling, 2017), and even in the area of user interaction (Stellmach & Dachselt, 2012).

Inspired by the early GCDs research, we have proposed a novel dynamic computer highlighting display named eye‐controlled highlighting display, which combines traditional static highlighting technique with eye tracking technique (Li, Jiang, Wang, & Ge, 2017). Using the eye‐controlled highlighting, information could be highlighted based on the users’ look. When users attend to a computer screen, the contents (e.g., texts, images, and icons) within the looked‐at region would be enlarged. By contrast, the traditional highlighting display is static in nature: the saliency of one or more items has already been enhanced regardless of observers’ look (Fisher & Tan, 1989; Li, Tseng, & Chen, 2016; Scheiter & Eitel, 2015).

Both the eye‐controlled and traditional highlighting display techniques increase the saliency of a particular item on screens to facilitate information process. However, the traditional highlighting display cannot be adapted to users’ constantly updated interests and needs in real‐life scenarios. By contrast, the eye‐controlled highlighting display dynamically changes content saliency according to users’ eye movements. The gaze‐based dynamic information display is user friendly and easy to interact with.

In our previous study, we showed that the eye‐controlled highlighting display could facilitate visual search performance in the context of a massive amount of information (Li et al., 2017). Participants were required to search for a target among 100 small‐sized items. With the eye‐controlled highlighting display, an array of nine search items was simultaneously highlighted based on the participants’ gaze position. Compared with a non‐highlighting condition, the eye‐controlled highlighting improved users’ search performance when they searched for digits and icons. This finding confirmed the validity of the technique. However, it is still unclear to what extent the eye‐controlled highlighting display can facilitate visual search. It is therefore necessary to investigate the applicability of the eye‐controlled highlighting display with a typical HCI visual search task.

To address this question, we conducted two experiments to examine the applicability of the eye‐highlighting display technique with a visual search task. They were designed to investigate two important attributes of a visual search: stimulus type and the visual similarity between targets and distractors.

The effects of highlighting on visual search may vary when different types of stimuli are used (e.g., digits, icons, and words). Take the blinking highlighting technique as an example. Wang et al. (2015) reported that when colourful icons were used as search targets, blinking the words beneath the icons was the most effective highlighting technique to facilitate search performance. By contrast, this facilitative effect of the blinking highlighting technique disappeared when grey icons were to be searched. When only digits were the search targets, Fisher and Tan (1989) found that the blinking highlighting method failed to improve search performance and suggested that colour change highlighting might be the most appropriate method to assist digit searching. In accord with Fisher and Tan (1989), Wu and Yuan (2003) found that the blinking highlighting method was worse than the colour change method in facilitating searching for words and digits (in a table format). Together, these findings indicated that the effectiveness of a given highlighting technique is contingent on stimulus type. However, these findings were derived from the traditional highlighting display technique, which is static. It remains unknown whether the eye‐controlled highlighting display technique can facilitate visual search performance regardless of stimulus type.

In addition, the current study attempted to explore the facilitative effect of the eye‐controlled highlighting technique by examining the role of target‐distractor similarity. This is motivated by findings that task difficulty affects the effectiveness of the traditional highlighting technique. For instance, Ge, Xu, and Zhong (2001) found that the benefit of highlighting digits with an underline was greater when there were more distractors. However, it is unclear whether task difficulty also affects the current eye‐controlled highlighting display technique in the same way as the traditional one.

One way to manipulate task difficulty is to change the similarity between the search target and distractors (i.e., stimuli similarity). This is because previous studies have consistently shown that stimuli similarity affects search performance. For example, Duncan and Humphreys (1989) argued that the extent of similarity between targets and distractors plays a major role in visual search performance. Wolfe and Horowitz (2004) showed that the ratio of distractor‐target saliency increases as the target shifts away from the distractors in the colour space, suggesting the crucial role of similarity in a visual search task. Using closed structure materials, Han and Cao (2010) reported findings that were also consistent with the stimuli similarity hypothesis. Godwin, Hout, and Menneer (2014) investigated the effect of target‐distractor similarity on visual search performance by recording participants’ eye movements. The current study therefore used target‐distractor similarity to examine how task difficulty influences the effectiveness of the eye‐controlled highlighting display.

With the purpose of exploring the effect of eye‐controlled highlighting in a realistic context, this study adopted digits and Chinese words as search items. Both items are commonly seen among Chinese users and have also been adopted as materials in many studies (e.g., Godwin et al., 2014; Liu, Yu, & Zhang, 2016; Yu, Zhang, Priest, Reichle, & Sheridan, 2017). Therefore, Experiment 1 used digits and Chinese words as two types of stimuli. Furthermore, Chinese characters, unlike digits and English letters, are square or rectangular and have a varying number of strokes with the size equally spaced (Goonetilleke, Lau, & Shih, 2002). This characteristic may be more likely to cause problems for users when searching for Chinese characters relative to alphanumeric characters (Wang, 2013). While Goonetilleke et al. (2002) found no impact of the visual complexity of Chinese texts on search performance, target‐distractor similarity is likely a factor that should be accounted for in visual search. We used Chinese words with different levels of target‐distractor similarity as stimuli in Experiment 2. In addition, in contrast to our earlier study (Li et al., 2017), this study adopted one search item as a basic highlighting unit as opposed to nine items. Previous evidence has shown that participants tended to spend longer time examining each fixation in small‐sized central regions than that in large‐sized central regions (Parkhurst, Culurciello, & Niebur, 2000). Therefore, we used a relatively small‐sized highlighting region in this study, since Chinese words are complex in visual structure and are likely to require longer fixations.

In sum, the present study aimed to investigate the specific application of the eye‐controlled highlighting display technique to visual searching. Experiment 1, using digits and Chinese words as materials, explored the effect of stimulus type on the eye‐controlled highlighting display technique. Experiment 2, using Chinese words as the material, further examined the influence of target‐distractor similarity on the eye‐controlled highlighting display technique.

EXPERIMENT 1

The purpose of Experiment 1 was to investigate the effect of stimulus type on the effectiveness of the eye‐controlled highlighting. We used digits and Chinese words as stimuli with distinct features.

Method

Participants

A total of 64 college participants (28 males, 36 females) aged 18–24 years old were recruited for this experiment. All the participants were right‐handed and normally sighted. These participants were randomly assigned to four groups: digits with no highlighting, digits with eye‐controlled highlighting, words with no highlighting, and words with eye‐controlled highlighting. Oral informed consent was obtained from each participant, following a research protocol approved by the Institutional Review Board of Zhejiang Sci‐Tech University. All experimental methods were conducted in accordance with approved guidelines regarding all relevant aspects, including the recruitment, experimental process information, compensation, and debriefing of participants.

Materials and design

A 2 (stimulus type: digits and words) × 2 (mode of highlighting: no highlighting and eye‐controlled highlighting) between‐subject design was adopted.

The visual display was equally divided into a 10 × 10 grid. Each resultant box (144 × 90 pixels) was an independent unit for interaction. Search items were placed in the centre of these boxes, with one item in each box. For each trial, 100 digits/words were randomly selected as stimuli from a pool of 300 digits/words. The items were initially presented with a font size of 7 points (FOV: 0.23° × 0.09°) and grey colour (RGB (200, 200, 200)). Digits ranging from 100 to 999 (e.g., 156) were randomly generated by the program without repetition. As for Chinese words, non‐repetitive Chinese two‐character words were used (e.g., 投资).
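The stimulus selection described above can be sketched roughly as follows. This is only an illustration, not the authors' program (which was written in Visual C#): the function names, the fixed seed, and the reading that the 300‐item pool is itself drawn from the numbers 100 to 999 are all assumptions.

```python
import random

GRID_ROWS, GRID_COLS = 10, 10  # 10 x 10 grid described in the text


def make_digit_pool(rng, pool_size=300):
    """Draw a pool of distinct three-digit numbers (100-999).

    ASSUMPTION: the pool of 300 is sampled from 100-999 without repetition.
    """
    return rng.sample(range(100, 1000), pool_size)


def make_trial_grid(pool, rng):
    """Pick 100 distinct items from the pool and lay them out row by row."""
    items = rng.sample(pool, GRID_ROWS * GRID_COLS)
    return [items[r * GRID_COLS:(r + 1) * GRID_COLS] for r in range(GRID_ROWS)]


rng = random.Random(0)  # fixed seed only so that the sketch is reproducible
pool = make_digit_pool(rng)
grid = make_trial_grid(pool, rng)
```

Sampling without replacement at both steps guarantees that no item appears twice in a display, which matches the "without repetition" constraint in the text.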

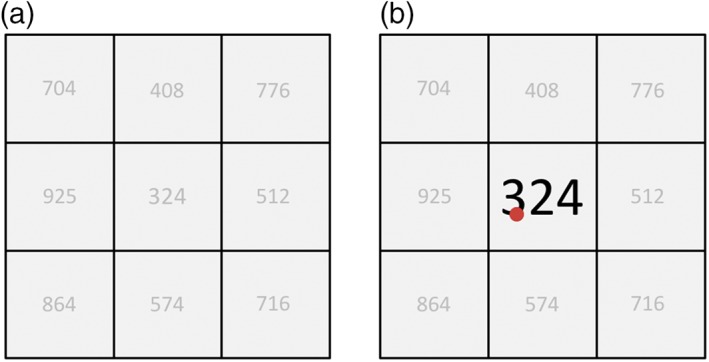

In the no highlighting condition, the font size of the stimuli remained the same during search (Fig. 1a). In the eye‐controlled highlighting condition, the size of the stimuli changed according to the location of the current gaze (Fig. 1b). When the gaze was located within one box, the item in this box became highlighted: its size was doubled (14 points, FOV: 0.46° × 0.18°) and its colour changed to black (RGB (0, 0, 0)). When participants’ gaze moved out of the previously fixated box, the size and colour of the item returned to their initial state (7 points and grey).

Figure 1.

A schematic illustration of search displays under two conditions. (a) No highlighting condition: the font size of the stimuli remained the same during search; (b) eye‐controlled highlighting condition: the size of the stimuli changed according to the location of the current gaze. When the gaze was located within one box, the item in this box became highlighted: its size was doubled (14 points, FOV: 0.46° × 0.18°) and its colour changed to black (RGB (0, 0, 0)) (Note. The borders of the rectangles were not visible in the actual experiment).
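The highlighting rule can be illustrated with a minimal sketch that maps a gaze sample to its grid box and assigns styles accordingly. The function names and the style dictionaries are hypothetical, and the sketch ignores gaze filtering and eye‐tracker noise, which a real implementation would need to handle.

```python
SCREEN_W, SCREEN_H = 1440, 900  # monitor resolution reported in the Apparatus section
COLS, ROWS = 10, 10
BOX_W, BOX_H = SCREEN_W // COLS, SCREEN_H // ROWS  # 144 x 90 pixels per box

# Font sizes and colours as described in the text (hypothetical dict layout).
BASE_STYLE = {"font_size": 7, "rgb": (200, 200, 200)}
HIGHLIGHT_STYLE = {"font_size": 14, "rgb": (0, 0, 0)}


def box_for_gaze(x, y):
    """Return (row, col) of the box containing the gaze sample, or None if off-screen."""
    if not (0 <= x < SCREEN_W and 0 <= y < SCREEN_H):
        return None
    return int(y // BOX_H), int(x // BOX_W)


def styles_for_gaze(x, y):
    """Map every box to its style; only the currently fixated box is highlighted."""
    fixated = box_for_gaze(x, y)
    return {
        (r, c): HIGHLIGHT_STYLE if (r, c) == fixated else BASE_STYLE
        for r in range(ROWS)
        for c in range(COLS)
    }
```

Because the styles are recomputed from the current gaze sample alone, an item returns to its initial state as soon as the gaze leaves its box, as the description requires.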

Apparatus

The SMI‐iView X RED eye‐tracker system was used in the current study to track users’ eye movements with a sampling rate of 120 Hz. In addition, a computer was used to run the experimental program and record data. The eye‐tracking system is contact free and thus was placed at the bottom of a monitor (1,440 × 900 pixels). The participants were seated 60 cm from the monitor (FOV: 37.7° × 24.5°). A bracket was set up on the desk to support the participant's head to prevent inaccuracies in eye tracking caused by head movement. The lighting in the lab was provided by fluorescent lamps with low intensity. The experimental program was written in Visual C# 2010 and used the API functions provided by SMI to connect with the eye‐tracking system.
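The reported field of view follows from the standard visual angle relation, angle = 2 · atan(size / (2 · distance)). The sketch below is a rough check only: the physical screen size is not stated in the paper, so the 41.0 × 26.0 cm figure is an assumption chosen to be plausible for a 1,440 × 900 monitor.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Visual angle (degrees) subtended by an extent viewed head-on at a given distance."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


VIEW_DISTANCE_CM = 60.0  # viewing distance from the text
# ASSUMPTION: physical screen size is not reported in the paper.
SCREEN_W_CM, SCREEN_H_CM = 41.0, 26.0

fov_w = visual_angle_deg(SCREEN_W_CM, VIEW_DISTANCE_CM)
fov_h = visual_angle_deg(SCREEN_H_CM, VIEW_DISTANCE_CM)
print(f"FOV: {fov_w:.1f} x {fov_h:.1f} degrees")
```

Under this assumed screen size the result lies close to the reported 37.7° × 24.5°.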

Procedure

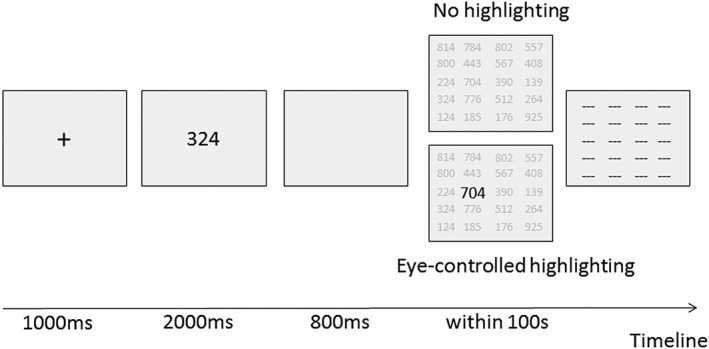

As shown in Fig. 2, on each trial, a black ‘+’ was presented at the centre of the display with a light grey background colour (RGB (240, 240, 240)) for 1,000 ms. The target was then presented for 2,000 ms to inform participants what the target was. After an 800‐ms blank, the search field with 100 items (embedded in the 10 × 10 grid) was displayed. Participants indicated that they had found the target by clicking the mouse. If a participant failed to finish searching within 100 s, the program automatically ended that trial and proceeded to the next one.

Figure 2.

Illustration of one trial in the visual search task. First, a black ‘+’ was presented at the centre of the display with a light grey background colour (RGB (240, 240, 240)) for 1,000 ms. The target was then presented for 2,000 ms to inform participants what the target was. After an 800‐ms blank, the search field with 100 items (embedded in the 10 × 10 grid) was displayed. Participants indicated that they had found the target by clicking the mouse. Right after that, all stimuli on the display were masked with ‘‐‐‐’. Participants then indicated the target location by moving the cursor to it and clicking the mouse again. If a participant failed to finish searching within 100 s, the program automatically ended that trial and proceeded to the next one. The program recorded the search time and accuracy for every trial.

To measure the accuracy of participants’ search, we asked participants to indicate the target location. Specifically, right after participants clicked the mouse to report that they had found the target, all stimuli on the display were masked with ‘‐‐‐’. Participants then indicated the target location by moving the cursor to it and clicking the mouse again. The program recorded the search time and accuracy for every trial. Feedback was provided to participants in the practice session but not in the experimental session.

Before the experiment, participants were required to read the instructions to become familiar with the task, and then the experimenter conducted a five‐point calibration. The calibration ended when the errors on the X and Y axes were smaller than 0.5°. After calibration, participants were asked to complete five practice trials followed by the formal experimental session. There were 20 trials for each condition, and one short break was scheduled in the middle of the experiment.

Results and discussion

We analysed search time as the primary dependent variable and search accuracy as a supplemental dependent variable. The search time was the average time of all successful trials (trials that were completed correctly and within the time limit).

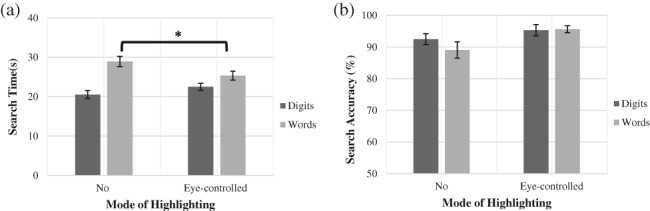

Table 1 shows the average search time and search accuracy under four conditions. A 2 (stimulus type: digits vs words) × 2 (mode of highlighting: no highlighting vs eye‐controlled highlighting) two‐way analysis of variance (ANOVA) was conducted. The results revealed that participants found the target faster with digits (M = 21.55, SD = 3.85) than with words (M = 27.15, SD = 5.12), which was supported by a significant main effect of stimulus type (F(1, 60) = 26.55, p < .001, η² = .31). However, the main effect of highlighting mode on search time was not significant (F(1, 60) = 0.55, p = .46). Importantly, the interaction between stimulus type and highlighting mode on search time was significant (F(1, 60) = 6.51, p < .05, η² = .10). Further simple effect analysis indicated that, as shown in Fig. 3a, when words were used as stimuli, the search time was significantly shorter in the eye‐controlled condition (M = 25.36, SD = 4.54) than in the no highlighting condition (M = 28.95, SD = 5.16), t(30) = −2.09, p < .05. However, there was no significant difference between the two highlighting conditions when digits were used as stimuli (t(30) = 1.47, p = .15).
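The simple effect tests can be approximately reproduced from the reported means and standard deviations alone. The sketch below assumes a pooled‐variance (Student's) independent‐samples t test and 16 participants per group; the group size is inferred from 64 participants in four groups and the reported 30 degrees of freedom, not stated directly.

```python
import math


def independent_t(m1, sd1, n1, m2, sd2, n2):
    """Student's t (pooled variance) from summary statistics; returns (t, df)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


N_PER_GROUP = 16  # ASSUMPTION: 64 participants split evenly over four groups

# Eye-controlled highlighting minus no highlighting, search time in seconds.
t_words, df_words = independent_t(25.36, 4.54, N_PER_GROUP, 28.95, 5.16, N_PER_GROUP)
t_digits, df_digits = independent_t(22.53, 3.55, N_PER_GROUP, 20.56, 3.99, N_PER_GROUP)
print(f"words:  t({df_words}) = {t_words:.2f}")
print(f"digits: t({df_digits}) = {t_digits:.2f}")
```

Small discrepancies in the second decimal place are expected, because the inputs are themselves rounded to two decimals.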

Table 1.

Mean (standard deviation) of search time and search accuracy in four conditions (Experiment 1, N = 64)

| Measure | Number: no highlighting | Number: eye‐controlled highlighting | Word: no highlighting | Word: eye‐controlled highlighting |
|---|---|---|---|---|
| Search time (s) | 20.56 (3.99) | 22.53 (3.55) | 28.95 (5.16) | 25.36 (4.54) |
| Search accuracy (%) | 92.50 (6.83) | 95.31 (7.18) | 89.06 (10.36) | 95.63 (4.43) |

Note. Search Accuracy = ((the number of total trials − the number of incorrect trials − the number of time‐out trials)/the number of total trials) × 100%; the search time was the average time of all successful trials.
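The two measures defined in the note can be written out as a short sketch. The function names and the per‐trial dictionary keys (`time_s`, `correct`, `timed_out`) are hypothetical and used only for illustration.

```python
def search_accuracy(total, incorrect, timed_out):
    """Percentage of trials that were neither incorrect nor timed out."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100 * (total - incorrect - timed_out) / total


def mean_search_time(trials):
    """Mean search time (s) over successful trials only.

    Each trial is a dict with hypothetical keys 'time_s', 'correct', 'timed_out'.
    Returns NaN when there is no successful trial.
    """
    ok = [t["time_s"] for t in trials if t["correct"] and not t["timed_out"]]
    return sum(ok) / len(ok) if ok else float("nan")
```

For example, a participant with 20 trials, one incorrect and one timed out, would score 90% accuracy, and only the remaining 18 trials would enter the search time average.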

Figure 3.

Means of target searching time (a) and accuracy (b) in no highlighting or eye‐controlled highlighting conditions with digits or words (Experiment 1). Each error bar represents a unit of standard error. The asterisk indicates a significant difference in the searching time of words between the no highlighting and eye‐controlled highlighting conditions (independent sample t‐test, p < .05).

The ANOVA results on search accuracy showed that the effect of highlighting mode was significant (F(1, 60) = 6.24, p < .05, η² = .09), suggesting that the eye‐controlled highlighting led to higher search accuracy (M = .95, SD = .06) than no highlighting (M = .91, SD = .09). However, neither the main effect of stimulus type (F(1, 60) = 0.69, p = .41) nor the interaction effect (F(1, 60) = 1.00, p = .32) was significant.

In sum, these findings indicated that the facilitative effect of eye‐controlled highlighting on visual search performance was dependent on stimulus type. When stimuli were two‐character Chinese words, eye‐controlled highlighting decreased the search time. This effect, however, was not significant when stimuli were digits. In addition, eye‐controlled highlighting consistently helped participants find the target more accurately, whether the stimuli were digits or words.

EXPERIMENT 2

Experiment 2 aimed to investigate the effect of target‐distractor similarity on the effectiveness of eye‐controlled highlighting display. We used Chinese words with different levels of target‐distractor similarity as stimuli.

Method

Participants

A total of 48 college participants (24 males, 24 females), 18–24 years old were recruited for the current experiment. All of the participants were right‐handed and normally sighted. These participants were randomly assigned to two groups: no highlighting and the eye‐controlled highlighting.

Materials and design

A 2 (stimulus similarity: low vs high) × 2 (mode of highlighting: no highlighting vs eye‐controlled highlighting) mixed design was adopted in Experiment 2 with the stimulus similarity as a within‐subject factor and the mode of highlighting as a between‐subject factor.

The setup of the visual display was identical to that in Experiment 1 except for the following. A total of 119 non‐repetitive Chinese two‐character words were used as stimuli. Among them, 20 words were targets (10 for the low‐similarity condition and the other 10 for the high‐similarity condition) and 99 were distractors. The distractors were the same across trials and conditions, but the positions of the distractors were randomised. For each trial, one word was randomly selected from ten words as the target. On the high‐similarity condition, the target word shared one character with all distractors (e.g., 糖果‐糖原). On the low‐similarity condition, both characters were different between the target and distractors (e.g., 西瓜‐糖原).
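The similarity manipulation can be expressed as a simple character‐overlap check. This is a sketch under an assumption: the text does not say whether the shared character must occupy the same position in both words, so the check below accepts a shared character in any position. The function names are hypothetical.

```python
def shares_character(word_a, word_b):
    """True if the two words have at least one character in common (any position)."""
    return bool(set(word_a) & set(word_b))


def similarity_condition(target, distractors):
    """Label a target 'high' if it shares a character with every distractor,
    'low' if it shares none with any distractor, and 'mixed' otherwise."""
    flags = [shares_character(target, d) for d in distractors]
    if all(flags):
        return "high"
    if not any(flags):
        return "low"
    return "mixed"


print(shares_character("糖果", "糖原"))  # high-similarity example pair from the text
print(shares_character("西瓜", "糖原"))  # low-similarity example pair from the text
```

A check of this kind could be used to verify a stimulus list before running the experiment, although the visual similarity ratings reported next remain the actual manipulation check.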

The visual similarity (e.g., shape) of the stimuli was assessed by 15 participants. Each participant was asked to rate word pairs that consisted of the target and distractor stimuli on a 7‐point Likert scale. A paired t‐test showed that the average rating of the word pairs from the low‐similarity condition (M = 1.31, SD = 0.37) was significantly lower than that from the high‐similarity condition (M = 3.94, SD = 1.24), t(14) = 9.62, p < .001, Cohen's d = 2.57.

There were 20 trials for each condition. Each participant was required to complete 40 trials from two conditions that were presented in random order.

Apparatus

The apparatus was identical to that used in Experiment 1.

Procedure

The procedure was identical to that used in Experiment 1.

Results and discussion

The dependent variables in Experiment 2 were identical to those in Experiment 1.

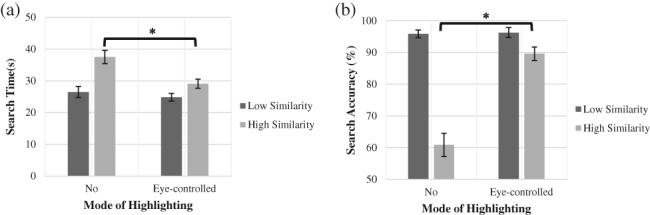

Table 2 shows the average search time and search accuracy under four conditions. A 2 (stimulus similarity: low vs high) × 2 (mode of highlighting: no highlighting vs eye‐controlled highlighting) mixed ANOVA was conducted. The mode of highlighting had a significant effect on search time (F(1, 46) = 6.64, p < .05, η² = .126), suggesting that participants found the target faster with eye‐controlled highlighting (M = 26.96, SD = 5.39) than with no highlighting (M = 31.99, SD = 7.89). Moreover, with increased target‐distractor similarity, participants exhibited increased search time (low: M = 25.65, SD = 7.28; high: M = 33.29, SD = 9.67), which was supported by a significant main effect of stimulus similarity (F(1, 46) = 35.68, p < .001, η² = .44). Furthermore, the interaction between highlighting mode and stimulus similarity was significant (F(1, 46) = 7.02, p < .05, η² = .132). Simple effect analysis indicated that, as shown in Fig. 4a, in the high‐similarity condition, the search time was significantly shorter in the eye‐controlled highlighting condition (M = 29.09, SD = 7.10) than in the no highlighting condition (M = 37.50, SD = 10.19), t(46) = −3.32, p < .05. In the low‐similarity condition, there was no significant difference between the two highlighting conditions (t(46) = −0.78, p = .44).
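The effect sizes for search time can be related to the F statistics with a short calculation. The paper labels these values η² without saying which variant was computed; the sketch assumes partial eta squared, F · df_effect / (F · df_effect + df_error), with which the three search time values above are consistent.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values for search time in Experiment 2, each with df = (1, 46).
eta_highlighting = partial_eta_squared(6.64, 1, 46)
eta_similarity = partial_eta_squared(35.68, 1, 46)
eta_interaction = partial_eta_squared(7.02, 1, 46)
print(f"{eta_highlighting:.3f} {eta_similarity:.2f} {eta_interaction:.3f}")
```

Because the F values are rounded to two decimals, a recomputed effect size may differ from a reported one in the last digit.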

Table 2.

Mean (standard deviation) of search time and search accuracy in four conditions (Experiment 2, N = 48)

| Measure | Low similarity: no highlighting | Low similarity: eye‐controlled highlighting | High similarity: no highlighting | High similarity: eye‐controlled highlighting |
|---|---|---|---|---|
| Search time (s) | 26.47 (8.52) | 24.83 (5.85) | 37.50 (10.19) | 29.09 (7.10) |
| Search accuracy (%) | 95.83 (5.84) | 96.25 (7.70) | 60.83 (17.92) | 89.60 (10.42) |

Figure 4.

Means of target searching time (a) and accuracy (b) in no highlighting or eye‐controlled highlighting conditions with low‐similarity words or high‐similarity words (Experiment 2). Each error bar represents a unit of standard error. The asterisk indicates a significant difference in the searching time (a)/accuracy (b) of high‐similarity words between the no highlighting and eye‐controlled highlighting conditions (independent sample t‐test, p < .05).

We also found main effects on search accuracy of both highlighting mode (F(1, 46) = 27.76, p < .001, η² = .38) and stimulus similarity (F(1, 46) = 134.35, p < .001, η² = .75), suggesting that the eye‐controlled highlighting display improved search accuracy (no highlighting: M = .78, SD = .11; eye‐controlled highlighting: M = .93, SD = .08), and that participants exhibited decreased search accuracy as the level of similarity increased (low: M = .96, SD = .07; high: M = .75, SD = .21). Moreover, a significant interaction indicated that the effect of highlighting mode differed between the high‐ and low‐similarity conditions (F(1, 46) = 62.12, p < .01, η² = .58). A series of t tests revealed that, as shown in Fig. 4b, when words with high similarity were used as stimuli, the search accuracy was significantly higher in the eye‐controlled highlighting condition (M = .90, SD = .10) than in the no highlighting condition (M = .61, SD = .18), t(46) = 6.80, p < .001. However, when low‐similarity words were used as stimuli, no significant difference was found between the two highlighting modes (t(46) = 0.21, p = .83).

In sum, these findings indicated that the facilitative effect of eye‐controlled highlighting on visual search performance was further dependent on target‐distractor similarity. When stimuli were highly similar words, eye‐controlled highlighting augmented visual searching and improved searching accuracy. This effect, however, was not significant when target and distractors shared low visual similarity.

GENERAL DISCUSSION

The present study examined how the facilitative effect of eye‐controlled highlighting display on visual search performance was affected by stimulus type (Experiment 1) and target‐distractor similarity (Experiment 2). The results showed that the facilitation of eye‐controlled highlighting on a visual search task was influenced by both the stimulus type and target‐distractor similarity. Specifically, the eye‐controlled highlighting was beneficial when words were used as stimuli, especially words with high similarity. When digits or words with low similarity were used, there was no evidence that the eye‐controlled highlighting would enhance searching performance.

Together, the findings of the two experiments indicated that our eye‐controlled highlighting method (tracking the observer's eye movement and highlighting search items accordingly) was proven to be useful in assisting target searching as compared with a baseline condition, where no highlighting was implemented. This finding was consistent with our early work on this display technique (Li et al., 2017).

Moreover, we found that stimulus type interacted with eye‐controlled highlighting: the highlighting decreased search time for two‐character Chinese words, but not for three‐digit numbers. This is consistent with previous studies examining how traditional highlighting display facilitated searching when Chinese characters were used as stimuli (So & Chan, 2009; Wang, 2013; Wang et al., 2015). However, the current finding is to some extent different from findings obtained with traditional static highlighting. When using digits as search items, several studies have demonstrated the benefit of highlighting (e.g., Fisher & Tan, 1989; Wu & Yuan, 2003). In addition, our previous study showed that eye‐controlled highlighting enhanced search performance when three‐digit numbers were used (Li et al., 2017). However, the size of the highlighted region in that study was much larger than that in the current study. These inconsistent findings suggest that the size of the highlighted region may be a critical factor that modulates the effectiveness of eye‐controlled highlighting. To further understand the effectiveness of the eye‐controlled highlighting display, future studies should examine the interaction between stimulus type and the size of the highlighted region. The comparison between our findings and previous evidence suggests that eye‐controlled highlighting makes a unique contribution to augmenting visual search performance.

Regarding the different facilitation of the eye‐controlled highlighting on searching for digits versus words in Experiment 1, we assume the reason for this difference may be related to the different strategies adopted by participants in the two conditions. Research has suggested that visual searching is a parallel process when the target is highly distinct from distractors. Therefore, while using digits as stimuli, parallel processing was an effective and achievable strategy for participants in the no‐highlighting condition. When eye‐controlled highlighting is added to the search task, a parallel strategy may not be achieved, since the dynamic highlighting technology could invoke exogenous saccades and narrow the attention of participants, consequently leading to a serial search. However, this might not be the case for word searching. Evidence from Chinese characters research suggests that visual search was serially conducted when stimuli were Chinese characters (Zhang & Jin, 1995). Therefore, the disadvantages brought on by the eye‐controlled highlighting were not observed when words were used as stimuli. In contrast, the words presented in this experiment were small in size, making them difficult to recognise by participants. The presence of the eye‐controlled highlighting facilitates the process of perception and recognition, therefore leading to faster target searching.

This searching strategy assumption, however, needs to be further verified with additional studies. It should be noted that digits are visually less complex than words. Future studies could use more complex stimuli (e.g., six‐digit numbers), which are more difficult to distinguish and may show a similar facilitative effect of the eye‐controlled highlighting.

We also found that target‐distractor similarity interacted with eye‐controlled highlighting: the highlighting was more beneficial when the searched materials had greater similarity (i.e., when the search was more difficult). The effect of target‐distractor similarity on visual search performance has been demonstrated in a great number of studies (e.g., Duncan & Humphreys, 1989; Wolfe & Horowitz, 2004). We hypothesised that target‐distractor similarity modulates the impact of eye‐controlled highlighting by affecting the difficulty of the search task. When the level of target‐distractor similarity was low, the target became salient due to its uniqueness of form, making it easier for participants to find the target even without highlighting. In contrast, the condition of high similarity required more detailed recognition and processing. In this circumstance, the involvement of eye‐controlled highlighting assisted the participant in processing the target and distinguishing it from distractors more rapidly and accurately. We argue that eye‐controlled highlighting has the benefit of facilitating the processing of the attended item, but it may cause a slower attentional switch between items and lead to a serial search strategy. Accordingly, if the target is distinct from the distractors, the disadvantages of eye‐controlled highlighting may outweigh its benefits.

The biggest difference between eye‐controlled highlighting and static highlighting is that the former is user‐centred, while the latter is system‐centred. In a visual search with traditional static highlighting, the attention of the user is guided to the highlighted items, resulting in more efficient searching when the highlighted item is relevant to the task (Ozcelik, Arslan‐Ari, & Cagiltay, 2010). In a realistic context, however, the interests and demands of the user are commonly unknown or change over time, which may lead to a mismatch between ‘what they want’ and ‘what they get’. Eye‐controlled highlighting addresses this problem to some degree, since the eye movements of the user are closely related to his or her interest or attention. From the perspective of visual cognition, eye‐controlled highlighting combines top‐down with bottom‐up processing by utilising gaze information. Furthermore, the present study focused on the suitability of eye‐controlled highlighting in visual search tasks with different stimulus types and similarities, and on how this technique influences visual processing and attention. We hope these findings lay some empirical foundation for interface design when implementing eye‐based displays.

To implement the eye‐controlled highlighting display technique widely, future studies need to focus on optimising the display system to make it more flexible and adaptable. In the current study, a search item was highlighted instantaneously after the onset of an eye movement, on the assumption that shifts of attention depend on saccades. However, studies suggest that saccades are neither necessary nor sufficient for the control of attention (Zhao, Gersch, Schnitzer, Dosher, & Kowler, 2012). Thus, the eye‐controlled display may highlight an item that observers do not intend to attend to, which may interrupt and hinder visual search. To obtain a more accurate analysis of users’ intentions and behaviours, information obtained from other modalities, such as EEG, could be collected and incorporated to achieve a more accurate dynamic display system.
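As an illustration of the gaze‐to‐highlight mapping discussed above, the following minimal sketch selects nine search items around the current gaze position. It is not the display software used in the present study. The regular grid layout, the pixel cell sizes, the 3 × 3 shape of the nine‐item array, the shifting of the block at the display edges, and the function names `gaze_to_cell` and `highlighted_block` are all assumptions made for illustration.

```python
from typing import List, Tuple

Cell = Tuple[int, int]  # (row, col) index of a search item in the grid


def gaze_to_cell(gaze_x: float, gaze_y: float,
                 cell_w: float, cell_h: float,
                 n_rows: int, n_cols: int) -> Cell:
    """Map a gaze sample in pixels to the grid cell under it.

    Samples that fall outside the display are clamped to the nearest cell.
    """
    col = min(max(int(gaze_x // cell_w), 0), n_cols - 1)
    row = min(max(int(gaze_y // cell_h), 0), n_rows - 1)
    return row, col


def highlighted_block(row: int, col: int,
                      n_rows: int, n_cols: int,
                      size: int = 3) -> List[Cell]:
    """Return the cells of a size x size block around (row, col).

    Assumption: near an edge the block is shifted inwards so that it
    always contains size * size items (nine items when size is 3).
    """
    if n_rows < size or n_cols < size:
        raise ValueError("grid is smaller than the highlighted block")
    half = size // 2
    top = min(max(row - half, 0), n_rows - size)
    left = min(max(col - half, 0), n_cols - size)
    return [(r, c)
            for r in range(top, top + size)
            for c in range(left, left + size)]


if __name__ == "__main__":
    # Hypothetical display: 6 rows x 8 columns of items, 100 x 50 px cells.
    fixated = gaze_to_cell(250.0, 130.0, 100.0, 50.0, n_rows=6, n_cols=8)
    print(fixated)
    print(highlighted_block(*fixated, n_rows=6, n_cols=8))
```

A system that aims to avoid highlighting unattended items could place an additional decision step between these two functions, for example one that draws on the other modalities mentioned above. Such a step is not specified here.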

CONCLUSION

In summary, the present study showed that, compared with the non‐highlighting condition, the eye‐controlled highlighting display technique can improve visual search performance under certain conditions. Specifically, eye‐controlled highlighting benefited visual search performance when words, and in particular high‐similarity words, were used as stimuli. When digits or low‐similarity words were searched, eye‐controlled highlighting produced no significant improvement.

ACKNOWLEDGEMENTS

Support for this work was provided by the National Natural Science Foundation of China (NSFC: 31671146), the Program of Department of Education of Zhejiang Province (Y201635486), and the foundation of Equipment pre‐research of 2015 (9140C770202150C77317) in China Astronaut Research and Training Center.

Contributor Information

Qijun Wang, Email: wqj-zstu@zstu.edu.cn, wqijun1981@163.com.

Liezhong Ge, Email: 0816211@zju.edu.cn.

REFERENCES

- Duchowski, A. T., Cournia, N., & Murphy, H. (2004). Gaze‐contingent displays: A review. CyberPsychology & Behavior, 7(6), 621–634. https://doi.org/10.1089/cpb.2004.7.621

- Duncan, J., & Humphreys, G. W. (1989). Visual search and stimulus similarity. Psychological Review, 96(3), 433–458. https://doi.org/10.1037/0033-295X.96.3.433

- Fisher, D. L., & Tan, K. C. (1989). Visual displays: The highlighting paradox. Human Factors, 31(1), 17–30. https://doi.org/10.1177/001872088903100102

- Ge, L., Xu, W., & Zhong, J. (2001). An study on underline highlighting in difference display backgrounds and difficulty levels. Chinese Journal of Ergonomics, 7(4), 6–8. https://doi.org/10.3969/j.issn.1006-8309.2001.04.002

- Geisler, W. S., Perry, J. S., & Najemnik, J. (2006). Visual search: The role of peripheral information measured using gaze‐contingent displays. Journal of Vision, 6(9), 858–873. https://doi.org/10.1167/6.9.1

- Godwin, H. J., Hout, M. C., & Menneer, T. (2014). Visual similarity is stronger than semantic similarity in guiding visual search for numbers. Psychonomic Bulletin and Review, 21(3), 689–695. https://doi.org/10.3758/s13423-013-0547-4

- Goonetilleke, R. S., Lau, W. C., & Shih, H. M. (2002). Visual search strategies and eye movements when searching Chinese character screens. International Journal of Human‐Computer Studies, 57(6), 447–468. https://doi.org/10.1006/ijhc.2002.1027

- Han, Z., & Cao, L. (2010). Stimulus similarity and closed structure material in visual search procession. Journal of Psychological Science, 33(2), 361–363. https://doi.org/10.16719/j.cnki.1671-6981.2010.02.026

- Klauck, M., Sugano, Y., & Bulling, A. (2017). Noticeable or distractive?: A design space for gaze‐contingent user interface notifications. Proceedings of the 2017 CHI conference extended abstracts on human factors in computing systems (pp. 1779–1786). New York, NY: ACM. https://doi.org/10.1145/3027063.3053085

- Li, H., Jiang, K., Wang, Q., & Ge, L. (2017). Research on interactive highlighting based on eye tracking technology. Journal of Psychological Science, 40(2), 269–276. https://doi.org/10.16719/j.cnki.1671-6981.20170203

- Li, L. Y., Tseng, S. T., & Chen, G. D. (2016). Effect of hypertext highlighting on browsing, reading, and navigational performance. Computers in Human Behavior, 54, 318–325. https://doi.org/10.1016/j.chb.2015.08.012

- Liu, N., Yu, R., & Zhang, Y. (2016). Effects of font size, stroke width, and character complexity on the legibility of Chinese characters. Human Factors and Ergonomics in Manufacturing & Service Industries, 26(3), 381–392. https://doi.org/10.1002/hfm.20663

- Mauderer, M. (2017). Augmenting visual perception with gaze‐contingent displays (unpublished doctoral dissertation). University of St Andrews.

- Ozcelik, E., Arslan‐Ari, I., & Cagiltay, K. (2010). Why does signaling enhance multimedia learning? Evidence from eye movements. Computers in Human Behavior, 26(1), 110–117. https://doi.org/10.1016/j.chb.2009.09.001

- Parkhurst, D., Culurciello, E., & Niebur, E. (2000, November). Evaluating variable resolution displays with visual search: Task performance and eye movements. Proceedings of the 2000 symposium on eye tracking research & applications (pp. 105–109). New York, NY: ACM. https://doi.org/10.1145/355017.355033

- Rayner, K., Castelhano, M. S., & Yang, J. (2009). Eye movements and the perceptual span in older and younger readers. Psychology and Aging, 24(3), 755–760. https://doi.org/10.1037/a0014300

- Scheiter, K., & Eitel, A. (2015). Signals foster multimedia learning by supporting integration of highlighted text and diagram elements. Learning and Instruction, 36, 11–26. https://doi.org/10.1016/j.learninstruc.2014.11.002

- So, J. C., & Chan, A. H. (2009). Influence of highlighting validity on dynamic text comprehension performance. Proceedings of the international multiconference of engineers and computer scientists (Vol. 2, pp. 18–20). Hong Kong: Newswood Limited. https://doi.org/10.1063/1.3256250

- Stellmach, S., & Dachselt, R. (2012, May). Look & touch: Gaze‐supported target acquisition. Proceedings of the SIGCHI conference on human factors in computing systems (pp. 2981–2990). New York, NY: ACM. https://doi.org/10.1145/2207676.2208709

- Vinnikov, M., & Allison, R. S. (2014, March). Gaze‐contingent depth of field in realistic scenes: The user experience. Proceedings of the symposium on eye tracking research and applications (pp. 119–126). New York, NY: ACM. https://doi.org/10.1145/2578153.2578170

- Wang, H. F. (2013). Influence of highlighting, columns, and font size on visual search performance with respect to on‐screen Chinese characters. Perceptual and Motor Skills, 117(2), 528–541. https://doi.org/10.2466/24.26.PMS.117x17z3

- Wang, L., Ge, L., Jiang, T., Liu, H., Li, H., Hu, X., & Zheng, H. (2015). Influence of highlighting words beneath icon on performance of visual search in tablet computer. In R. Shumaker & S. Lackey (Eds.), International conference on virtual, augmented and mixed reality (pp. 81–87). Cham: Springer. https://doi.org/10.1007/978-3-319-21067-4_10

- Wolfe, J. M., & Horowitz, T. S. (2004). What attributes guide the deployment of visual attention and how do they do it? Nature Reviews Neuroscience, 5(6), 495–501. https://doi.org/10.1038/nrn1411

- Wu, J. H., & Yuan, Y. (2003). Improving searching and reading performance: The effect of highlighting and text color coding. Information & Management, 40(7), 617–637. https://doi.org/10.1016/S0378-7206(02)00091-5

- Yu, L., Zhang, Q., Priest, C., Reichle, E. D., & Sheridan, H. (2017). Character‐complexity effects in Chinese reading and visual search: A comparison and theoretical implications. The Quarterly Journal of Experimental Psychology, 1–12. https://doi.org/10.1080/17470218.2016.1272616

- Zhang, Y., & Jin, Z. (1995). Attention in encoding and integration of visual feature information of Chinese character. Chinese Journal of Ergonomics, 1(1), 51–54. https://doi.org/10.13837/j.issn.1006-8309.1995.01.011

- Zhao, M., Gersch, T. M., Schnitzer, B. S., Dosher, B. A., & Kowler, E. (2012). Eye movements and attention: The role of pre‐saccadic shifts of attention in perception, memory and the control of saccades. Vision Research, 74, 40–60. https://doi.org/10.1016/j.visres.2012.06.017