Abstract

Clinical decision making is driven by information in the form of patient data and clinical knowledge. Currently prevalent systems used to store and retrieve this information have high failure rates, which can be traced to well-established system constraints. The authors use an industrial process model of clinical decision making to expose the role of these constraints in increasing variability in the delivery of relevant clinical knowledge and patient data to decision-making clinicians. When combined with nonmodifiable human cognitive and memory constraints, this variability in information delivery is largely responsible for the high variability of decision outcomes. The model also highlights the supply characteristics of information, a view that supports the application of industrial inventory management concepts to clinical decision support. Finally, the clinical decision support literature is examined from a process-improvement perspective with a focus on decision process components related to information retrieval. Considerable knowledge gaps exist related to clinical decision support process measurement and improvement.

Health care can be considered an industrial process, a view which has become more acceptable lately due to the successful application of industrial quality improvement methods in health care settings1,2 and the increasing recognition of the role of systems in producing variation and error.3,4 If delivering health care is likened to an industrial production, then the main production process is clinical decision making and the main products are clinical decisions. A key ingredient required to fuel decision production is information in the form of clinical knowledge and patient medical history data.5,6 The production worker is the clinical decision maker who brings memory-based expertise and knowledge to the process.*

Yet, from a systems perspective, human memory is largely nonmodifiable. Efforts to improve decision production processes should thus focus on non-memory-based, or external, information storage and delivery systems that supplement clinician memory-based information. Adopting a production view of decision making with an emphasis on externally stored information sources has important implications for clinical guideline implementation, error reduction, clinician workflow and productivity, and justification of computerized patient-record systems. Such a view is not intended to diminish the essential role of individual clinician expertise and skill in making clinical decisions. Rather, it is meant to explain the high decision variability associated with current levels of process dependence on individual memory and serves to focus attention on information retrieval aspects of decision processing that are more amenable to improvement than human memory.

In spite of the critical role of information in producing decisions, systems that support its efficient and effective delivery to clinician decision makers have generally been lacking in health care production environments, with demonstrable adverse effects.7 Surprisingly, the process of delivering basic clinical knowledge and patient data to clinical decision makers has received relatively little attention from informatics researchers.8,9 The informatics literature also sheds little light on information delivery process variables and methods to measure them. For instance, most of the published evaluative work related to the retrieval of clinical knowledge and patient data by clinical decision makers focuses on decision outcome† rather than decision process components.10,11,12,13 While the problem of limited retrievability of patient data and essential clinical knowledge to support clinical decision making is widely acknowledged, and while the need for computerized patient record (CPR) systems to address this is regarded as a fundamental truth in informatics,14 there is little empirical data available to permit an estimation of decision-making process gains that could be expected to result from implementation of such systems.9,15

This article addresses these problems. A simple model of the health care encounter as an industrial process is presented that, because of the central role played by decision making in that process, incorporates a clinical decision making model. This combined model is used to demonstrate how system constraints result in highly variable information delivery to decision-making clinicians. When incomplete data is combined with the limitations and variability of human memory and cognitive processing, high variability in decision outcomes is the expected result. Since outcome variability is usually attributable to process variability,16 methods to measure and reduce decision-support process variability are explored. In particular, relevant prior work is discussed from a process improvement perspective, and significant knowledge gaps related to monitoring decision support processes are exposed. In so doing, we hope to highlight the need for further decision-support research focused on the delivery of appropriately selected and displayed patient data and clinical knowledge to clinicians. Finally, the combined model is used to help develop the concept of information as a production supply to which industrial inventory management principles can be applied.

A Process View of Clinical Decision Making

A Decision-making Model

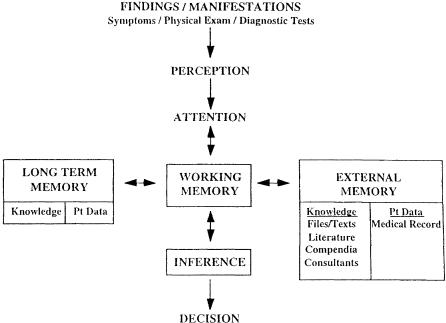

A simple model of clinical decision making, patterned after the more general “information processing system” model for the human decision maker put forward by Newell and Simon over 20 years ago, is presented in Figure 1.17,18 According to this model, physicians pay attention to clinical information that is perceived (presenting complaints, medical history, physical examination, and laboratory findings), combine this information with medical domain knowledge stored in their own memory or available in some form of “external” memory (e.g., reference texts, journal articles, personal compendia), and then make inferences that lead to decisions or conclusions. The general relevance of the model to clinical decision support, including specific process weaknesses with corresponding opportunities for computers to assist with decision making, has been discussed elsewhere.19

Figure 1. Decision-making model.

The model's relevance to this article lies in its explicit representation of the role of internal (i.e., memory-based) and external knowledge and patient data sources in decision making. Other decision-making models, which tend to focus on inference, handle the concept of information supply implicitly via the consideration of decision making under conditions of uncertainty.20,21,22 These models generally consider uncertainty to arise as a result of a clinical situation for which there is no sound scientific evidence available to help guide decision making. The information-availability issue addressed by this article, on the other hand, relates to facilitating access to pertinent patient data and/or relevant clinical knowledge that exists but is frequently inaccessible when needed for decision making because of system constraints.5,8 The model's representation of inference as an undefined process also fits nicely with the present focus, which is the supply of information to support inference rather than inference itself.

A Process Model of Decision-based Health Care Encounters

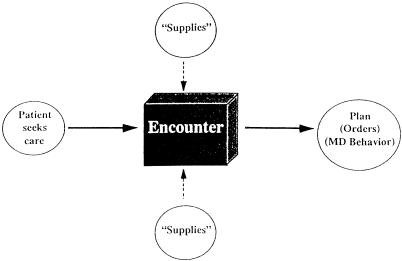

Clinical decision making can be characterized by an industrial process model with process inputs and outputs and supplies needed to fuel the process (Fig. 2).‡ While such a characterization is hardly new,23 it is resisted by clinicians who believe that every encounter is unique and that medicine, which routinely deals with life and death rather than nuts and bolts, is fundamentally different from manufacturing. Yet it is precisely because the stakes are so high that such a process orientation is warranted, as error in medicine is usually a result of systems or process failures rather than the shortcomings of individuals.7,24 Besides, clinical encounters are not really all that unique. Most primary care encounters can be attributed to a limited number of complaints,25 and many decision tasks are routine and recurring (e.g., deciding whether or not to order imaging studies for a patient with back pain). Although the combination of decision inputs and the information supplies necessary to process them may be unique for any given encounter, the types and sources of these inputs and supplies and the processes involved in acquiring them are limited. For instance, the types of patient data of most importance to clinical decision making are easily defined,26 and generic “prototypical questions” commonly posed by clinical decision makers have been described.9 In essence, much of the patient data and many of the clinical knowledge needs associated with recurring decision tasks can be anticipated.

Figure 2. Process view of encounters.

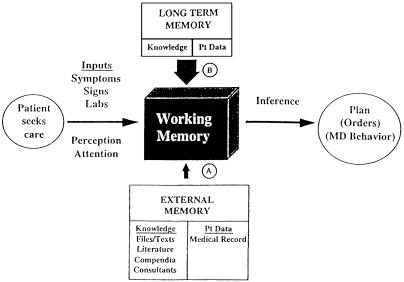

As seen in Figure 2, the decision-producing process begins following a patient-generated service request that leads to an encounter with a clinician.§ As a result of that encounter, decisions are made that lead to recommendations for treatment and/or further diagnostic testing, the principal outputs of the process. Since decision making constitutes the most visible portion of the encounter process, the decision-making model presented earlier can be substituted for the black process box in Figure 2, with the process inputs and supplies defined by the decision-making model. The resultant combined model (Fig. 3) is created by rotating the decision-making model 90 degrees counterclockwise and superimposing it onto the encounter process model. In so doing, it becomes clear that long-term memory-based and externally stored information are key supplies that must be delivered to working memory during the encounter process.

Figure 3. Process view of decision-producing encounter. System constraints operating at A force increased information supply dependence at B.

In addition to highlighting the role of information as a production supply, the combined model helps to demonstrate the effect of constraining access to information sources on process output variability (i.e., decision variability). Limited access to externally stored information, a result of system constraints described later, forces increased process dependence on memory-based information. Yet interclinician memory content is highly variable due to variability in combinations of medical school, residency, and practice experience. Variable retention in memory and variable retrieval efficiency from memory further compound this exposure-related memory content variability. Given the highly variable nature of memory-based information, decision process dependence on memory as the information source is likely to be associated with a higher level of decision variability than when the information source is more stable and predictable. Since the large variability in interclinician memory is not likely reducible, the model suggests that attempts to reduce decision variability should focus instead on reducing variability in the content and availability of externally stored information.
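The variance argument above can be illustrated with a toy calculation. The sketch below is not part of the combined model itself. It assumes, purely for illustration, that each clinician's memory covers some fraction of the information a decision requires, that an external source covers a fixed fraction that is the same for everyone, and that the clinician uses the external source whenever it is reachable and falls back on memory otherwise. The function names and all numbers are hypothetical.

```python
from statistics import pvariance


def expected_coverage(memory_coverage, external_coverage, access_prob):
    """Expected fraction of needed information available to one clinician.

    Assumption (illustrative only): the external source is used when it is
    reachable (probability access_prob); otherwise memory is the only source.
    """
    return access_prob * external_coverage + (1 - access_prob) * memory_coverage


def coverage_variance(memory, external_coverage, access_prob):
    """Variance of expected coverage across a group of clinicians."""
    return pvariance(
        [expected_coverage(m, external_coverage, access_prob) for m in memory]
    )


# Hypothetical memory coverage for five clinicians; it differs with training
# and practice experience.
memory = [0.40, 0.55, 0.70, 0.85, 0.95]
external = 0.90  # hypothetical, identical for every clinician

for access in (0.1, 0.5, 0.9):
    variance = coverage_variance(memory, external, access)
    print(f"access={access:.1f}  variance across clinicians={variance:.4f}")
```

Under these assumptions the spread across clinicians shrinks as access to the stable external source improves, and it grows as constraints push clinicians back toward memory.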

System Constraints on Information Access

Individual Cognitive Constraints, Human Error, and Systems

Clinicians operate under well-recognized cognitive constraints.20,27 Given these cognitive limitations, the need for clinicians to simultaneously manage the myriad decision inputs and information supplies typical of many clinical decisions presents a serious problem. Cognitive constraints have traditionally been blamed for many errors in medicine and are responsible for the so-called “knowledge-performance gap,” the mismatch between what physicians know and how they actually behave in practice.28 Cognitive constraints are inherent to the human condition, however, and are not correctable (i.e., the “nonperfectability of man”27); results are a property of the system, and individuals operating within that system cannot improve their performance on demand.4 Efforts to improve decision making must therefore focus on improving systems rather than individuals.24

If the system within which decision making occurs determines the extent to which fixed individual cognitive processing constraints ultimately impact decision outcomes, then systems designed to compensate for these constraints should improve decision performance. This has, indeed, been convincingly proven to be true. Decision support systems that incorporate computerized reminders and alerts, for example, have repeatedly been demonstrated to improve decision compliance with accepted standards,28,29,30,31,32 so much so that inclusion of control groups in the design of future randomized trials to study reminder system effectiveness may be unethical.33 On the other hand, decision-improvement strategies that do little to alter the system within which clinicians make decisions have generally not been shown to be successful.34 For instance, traditional guideline implementation strategies (e.g., dissemination, education, incentives) may actually increase cognitive burden and usually do not produce significant sustainable changes in clinician decision-making behavior unless accompanied by a system improvement such as an alternative information delivery strategy.35,36

System Constraints Related to Information Access

Since systems may ultimately determine the degree to which fixed human cognitive constraints are actually expressed as poor decisions, it is important to understand the common systems within which clinical decision making occurs and their associated constraints. In a study of the role of system failures in human error in medicine, Leape et al.7 detected 264 preventable adverse drug events attributed to 16 separate system failures in a large urban hospital system. The two most common system failures detected, inadequate dissemination of drug knowledge and inadequate availability of patient data, were associated with 29% and 18% of errors, respectively.7 The finding that system constraints related to the availability of externally stored clinical knowledge and patient data figure so prominently in poor decision making is consistent with predictions based on the combined model presented earlier. Moreover, system modifications designed to reduce these constraints have been clearly demonstrated to improve decision making.10,12,13,37,38 Specific failures associated with systems for storage and retrieval of clinical knowledge and patient data should be considered in the context of a more general operating constraint in clinical production environments: time pressure.39 Time pressure, cited most commonly by physicians as being responsible for their mistakes,40 unmasks the information access inefficiencies described below.

Clinical Knowledge Storage and Retrieval Systems

Today, the burden of maintaining and accessing clinical knowledge resources rests on the individual clinician. Each clinician must develop and manage a wide array of external knowledge resources.39 Many of these resources, such as a personal file system or a handwritten compendium, must be personally developed and maintained. Others, such as a library or locally accessible CD-ROM literature database, are maintained organizationally. Even though clinicians are not individually responsible for maintaining this latter type of resource, they still must manage access to them and maintain resource-specific navigational and information retrieval skills.

There are several serious problems with a knowledge storage and retrieval system that must be managed by individual end users of the system. Foremost among these is redundancy, with respect to both effort and storage. Since maintenance responsibility falls on the shoulders of the system users, all users are effectively replicating the efforts of other users when they attempt to create and maintain their own personal cluster of knowledge resources. Given the magnitude of individual effort required to maintain even a single resource cluster component, such as a personal file of journal articles,41 the redundancy of effort involved in having all users each maintaining multiple similar resource components is staggering. Equally impressive is the redundancy of content in such a system, which occurs both across and within clinicians. Articles stored in the files of one clinician are likely to be similar to articles in the files of other clinicians. Moreover, other knowledge resources, such as textbooks, probably contain much of the same information found in the article file. Content replication within an individual clinician's resource cluster helps to compensate for the retrieval inefficiency described below, as the likelihood of locating a desired knowledge item in a timely fashion increases with the number of storage locations for that item. Content replication across resource clusters is an unavoidable byproduct of a knowledge publication and distribution system dependent on locally stored printed material.

Retrieval inefficiency is another major problem with clinician-directed knowledge resource maintenance. Barriers to retrieving knowledge are either physical or functional: physical barriers are those related to the distance of a resource from where patient care is actually occurring, while functional barriers relate to the organization and searchability of any given knowledge resource once it has been physically accessed.39 Functional barriers are resource specific and also include extensiveness and relevance. Knowledge resource cost variables (availability, searchability) predict knowledge resource use better than perceived resource benefit (i.e., the quality of the knowledge resource).39 Searching MEDLINE, for instance, frequently yields a low ratio of clinical applicability-to-retrieval effort,42,43,44,45 and it is thus used infrequently in production settings.39,46 Time and effort are the major barriers to knowledge access: these barriers have largely blocked the integration of knowledge seeking into usual workflow and have traditionally limited the usefulness of decision-support applications.28,47,48,49

Yet another serious problem with separately maintained knowledge resources is excessive content variability. This is due largely to factors which affect individual decisions to add new items (e.g., a new article or book) to existing personally maintained resources. Besides differences in the dollar costs of resources and individuals' ability to pay for them, these factors include differing individual areas of interest, differing perceived personal knowledge gaps, differing perceptions of the significance and prevalence of particular clinical problems, and varied levels of individual effort and skills related to resource maintenance. Problems related to the distribution of update information introduce another source of content variability into individually maintained resources: Simultaneous delivery of new knowledge to such a widely distributed system is virtually impossible, and highly varied information dissemination is the norm rather than the exception.50,51 Since large content and retrieval variability are inherent properties of clinician-directed knowledge-access systems, these systems are unable to compensate for the large variability of memory-based knowledge. The result is the inability to reliably update decision-making clinicians in a timely fashion. Awareness of the importance of knowledge updating for clinical problem solving is not new,21,39 but recent evidence from the study by Leape et al.7 linking knowledge-access constraints to clinician errors provides compelling documentation of just how serious the problem is.

The widespread existence of a knowledge-support system characterized by seemingly unacceptable levels of redundancy, inefficiency, and variability can perhaps best be understood if one considers that this system was probably never consciously conceived of as a “system” in the first place. Rather, in most health care delivery settings, this loosely connected array of redundant knowledge resources managed by end users has emerged by default in the absence of organizationally sanctioned clinical knowledge storage and delivery master strategies. Yet information is the “central and indispensable tool of practice.”6 That an organization would not provide such an essential production tool to its workers stems from the traditional treatment of physicians as independently contracted professionals who must supply their own knowledge storage and retrieval tools, rather than as employed production workers. In fact, the number of physicians who are independent contractors or part owners of a practice is declining rapidly. The proportion of patient care physicians practicing as employees rose from 24.2% in 1983 to 42.3% in 1994, and nearly two-thirds of physicians in practice for less than five years practice as employees.52 While this shift in physician role from independent contractor to employee appears to be accelerating,52 a parallel shift in responsibility for the management of externally stored clinical knowledge as a production tool has yet to occur. In the absence of real-time knowledge support, quality is frequently dependent on inspection by others to detect and correct errors later. Interestingly, physicians rarely complain about inadequate institutional knowledge support, perhaps because they are too busy to consider the flaws of usual knowledge-management strategies.4

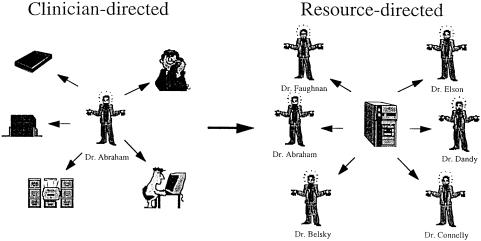

The logical solution to the above problems of redundancy, retrieval inefficiency, and update distribution involves a critical change from a system in which knowledge resources are maintained by individual clinicians to a system in which clinicians have ready access to centrally developed and maintained knowledge resources (Fig. 4). Moving away from a clinician-directed model toward a resource-centric system provides a clear example of the paradigm shift in knowledge management recently discussed by Matheson53 in this journal. While this shift may have already begun to occur as a result of technological advances related to Web clients and servers,54,55 considerable information indexing and retrieval hurdles remain.56 In spite of the obvious role of technology in implementing the shift, it is important to keep in focus that the shift is not about the move from paper to computer-based information; instead, it is about the move from clinician-directed to resource-directed information organization and distribution.

Figure 4. Knowledge management paradigm shift.

Finally, viewing knowledge retrieval from a systems perspective ought to moderate the objectives of increasingly popular medical school and residency informatics curricula.57 Unless accompanied by substantive improvements in information storage and delivery systems, training individual clinicians to be better information retrievers will do little to solve the knowledge management problems outlined above.58 Moreover, a truly successful information-retrieval system should integrate retrieval into usual workflow and require minimal training to use.47

Patient Data Storage and Retrieval Systems

The principal patient care-related functional requirement of a patient-record system is that once recorded, data should be rapidly and reliably retrievable (ideally, in the context of related observations).15 Paper-record systems are rarely able to meet this simple requirement, and the disorganization and consequent limited retrievability of patient data contained in traditional medical records is widely acknowledged to hamper routine decision making by clinicians.14,15

The medical record can be viewed as an interwoven system of stories, often with multiple authors.59 In practice, decision-making clinicians must be able to use the record to reconstruct stories related to specific clinical problems. To accomplish this, physicians routinely use the following four retrieval strategies with medical records: (1) first-time reading, or getting a fast overview and understanding of a case; (2) re-reading, or triggering of a memory picture; (3) searching for facts (i.e., targeted data retrieval); and (4) problem solving.60 These retrieval strategies are accompanied by several levels of text processing ranging from reading all the words in a paragraph to skipping a paragraph altogether. Graphical, textual, and positional features of the chart, logically related and controlled, are essential for orientation, navigation, and effective limitation of search space.60

Retrieving data from paper records is thus like an improvisational dance with highly conditioned cues triggering each sequence of steps. While mastery of medical record navigation is an essential clinical skill, no amount of navigational skill can overcome the systemic limitations of paper records. Placing a current clinical event in proper context, for instance, often requires that a physician thumb through a thick paper record with relevant information scattered throughout. This is a time-consuming and labor-intensive endeavor that can easily overwhelm human cognitive processing limitations and render the paper record virtually useless when it is most needed.15

Retrieving data from patient records is frequently associated with overt process failure, a fact largely attributable to retrieval inefficiency and incomplete storage (i.e., missing data).9,26,61 Tang et al.9 found that pertinent patient data were unavailable in 81% of cases studied in an internal medicine clinic with a mean of 3.7 missing data items per case, even though the medical record itself was unavailable only 5% of the time. Most of the unavailable data items were generated at the study institution and would thus have been expected to be available. Data relating to prior diagnostic testing accounted for 36% of the unavailable data, while data relating to medical history and past medications/treatments accounted for 31% and 23%, respectively. Although alternate professional data sources, the patient, and/or family members were used to successfully reconstruct history in 68% of instances of missing data items, the data need simply went unmet in the remaining 32%.9 A survey of internists by Zimmerman26 also documented a high data retrieval failure rate: 69% of respondents reported significant difficulty in retrieving the data they desired, and such difficulty occurred about 25% of the time. These failure rates are all the more impressive when added to the failure rate for retrieving the record itself, which can be as high as 30%.14 In addition to reducing decision-making efficiency, these patient data access constraints have been clearly demonstrated to adversely affect decision outcomes7 and increase resource utilization.10,13 As with knowledge storage and retrieval systems, storage redundancy in paper record systems partially compensates for retrieval inefficiency. Problems with paper records, including those related to incompleteness, redundancy, and retrieval inefficiency, have been cataloged elsewhere.14

Information as Inventory

Viewing decision-generating health care encounters as an industrial process and externally stored information as a critical production supply suggests that industrial inventory management strategies can be applied to medical information management. Indeed, concepts such as storage redundancy, understocking, and retrieval inefficiency (i.e., difficulty locating stock) all invoke the image of information as inventory. As pointed out above, usual information inventory management strategies frequently fail outright to deliver needed clinical knowledge48 and patient data9 in a timely fashion. Knowledge delivery, for instance, often bears no temporal relationship to a patient encounter where it is actually needed. This approach is exemplified by usual continuing medical education35 and guideline-dissemination strategies.28 Another flawed approach that is temporally related to specific patient encounters involves the postencounter delivery of feedback to physicians, most commonly by pharmacists reviewing drug orders. Common errors detected are usually related to drug dosing, drug-drug interactions, patient medication allergies, or formulary restrictions. Some forms of disease state management also deliver postencounter patient-specific feedback, usually triggered by computer algorithms applied to electronically available laboratory and claims data.62,63 Although delayed information delivery strategies do help prevent error, they represent an attempt to manage decision quality via inspection and involve considerable rework.64

Berwick4 has recently called attention to an alternative inventory management approach in health care production environments. This strategy, called just-in-time (JIT), strives for continuous production flow: supplies are delivered when actually needed, an approach that minimizes overstocking and production stops.65 The effect of successful implementation of JIT management is conversion from a “push” system, where work in progress is merely pushed along to the next stage in the production process, to a “pull” system, where each process step anticipates supply requirements of the next step and pulls those supplies so that they are available at the beginning of that next process step. Such a pull system already occurs in health care with procedure-based encounters: necessary equipment is sterilized and ready for use before the clinician arrives to perform the procedure. Although equally sensible, this same approach has not been effectively instituted for decision-based encounters. While pulling a patient's chart so that it is available at the time of an encounter does anticipate in a macro sense the patient medical history data needs of the physician, this strategy often fails to deliver needed information at a more granular level. Moreover, it does not include the delivery of relevant clinical knowledge.

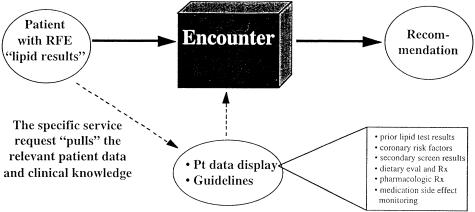

This notion of anticipating what clinical knowledge and which patient history items need to be delivered to a decision-making clinician is most applicable to those decision tasks that are recurring. Besides consuming considerable clinician time and cognitive effort resources, these recurring tasks have in common a task-specific set of readily anticipated patient data and clinical knowledge information needs. It is these routine decision tasks with anticipatable information supply requirements that should be most amenable to systematic improvement. Because these decision tasks occur commonly, such improvement should be associated with a large productivity payoff. Anticipatory patient data and clinical knowledge delivery also represent a natural extension of Tang's concept of generic “prototypical questions” to specific clinical situations.9 An example of JIT information delivery for one commonly recurring decision task, interpretation of a serum cholesterol test result, is shown in Figure 5.

Figure 5. Just-in-time information delivery. Matching information delivery to the reason-for-encounter (RFE).

Implementation of JIT requires the capture of coded triggers to activate appropriate information displays. In order to make this strategy work, enough patient data and clinical knowledge must be available electronically and identifiable as relevant to specific decision tasks. While the use of a structured clinical vocabulary is essential to support JIT triggers,66 it is not necessary to completely codify the patient record to achieve effective JIT information delivery.67 Rather, blocks of descriptive text need only be tagged to facilitate indexing and future retrieval for problem-specific summary displays. This is fortunate, as a standardized clinical vocabulary capable of efficiently representing all clinically relevant concepts may not yet exist, and achieving a completely coded record is not feasible with current technology in production settings.68,69 Besides, merely retrieving passages of unstructured text for visual scanning and interpretation matches established patterns of patient record use by decision-making clinicians for individual patient care.60
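The trigger mechanism described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a description of any existing system and not a reproduction of Figure 5. It assumes a coded reason-for-encounter acts as the trigger, that passages of free text in the record carry simple tags rather than full coding, and that a lookup table maps each trigger to the tags and knowledge items worth displaying. The trigger code, tags, record passages, and all names (`TextBlock`, `DisplayPlan`, `PLANS`, `pull_for_encounter`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TextBlock:
    """A passage of free text from the record, tagged but not fully coded."""
    text: str
    tags: frozenset


@dataclass(frozen=True)
class DisplayPlan:
    """What to pull for one recurring decision task (entries are hypothetical)."""
    record_tags: frozenset   # tags of record passages to display
    knowledge_items: tuple   # titles of knowledge items to display


# Hypothetical trigger table keyed by a coded reason-for-encounter (RFE).
PLANS = {
    "RFE:CHOLESTEROL_RESULT": DisplayPlan(
        record_tags=frozenset({"lipids", "cardiac-risk"}),
        knowledge_items=("cholesterol guideline summary",),
    ),
}


def pull_for_encounter(rfe_code, record):
    """Return (matching record passages, knowledge items) for a coded trigger."""
    plan = PLANS.get(rfe_code)
    if plan is None:
        return [], ()  # no plan for this trigger, so nothing is pulled
    passages = [block.text for block in record if block.tags & plan.record_tags]
    return passages, plan.knowledge_items


# Hypothetical record content, for illustration only.
record = [
    TextBlock("Prior lipid panel noted in lab summary.", frozenset({"lipids"})),
    TextBlock("Ankle sprain, resolved.", frozenset({"musculoskeletal"})),
    TextBlock("Family history of early heart disease.",
              frozenset({"cardiac-risk", "family-history"})),
]

passages, knowledge = pull_for_encounter("RFE:CHOLESTEROL_RESULT", record)
print(passages)
print(knowledge)
```

Only the trigger and the tags need to be coded in this sketch. The passages themselves remain unstructured text that the clinician scans and interprets.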

While rarely labeled as JIT, efforts to implement JIT decision support have generally revolved around physician order entry (POE), with considerable success.13,37,38,70,71 Templates can also be used to implement JIT decision support either during data gathering or during order entry.72 Because of the requirement for increased computer data entry by physicians that usually accompanies POE or the use of templates, these strategies must be implemented in a fashion that improves overall workflow or risk rejection by physician users.73,74

Process Improvement and Decision Making

Defining Process Variables

The use of industrial process-improvement methods has become quite popular in health care settings.2,75,76 Like the systems view of human error discussed earlier, process improvement emphasizes poor process design as the cause of substandard care rather than individual incompetence: its goals are to improve mean performance and reduce performance variation.1,64 Information seeking, as a highly visible part of the decision-making process, is a logical process component upon which to focus measurement efforts. Candidate process variables related to information seeking include source-specific and/or data-specific seek times and failure rates for patient historical data and/or clinical knowledge, retrieval accuracy, decision-making performance (e.g., decision-making time, accuracy, and variability), and decision-maker satisfaction with, or difficulty ratings of, information seeking.
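To make these candidate variables concrete, the sketch below computes a few of them from a log of information-seeking events. The `SeekEvent` record, its field names, and the sample values are assumptions made for illustration; they are not data from any study cited here.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Optional


@dataclass
class SeekEvent:
    source: str               # e.g. "paper chart" (hypothetical label)
    seconds: float            # time spent seeking the item
    found: bool               # False marks a retrieval failure
    correct: Optional[bool]   # None when nothing was retrieved


def summarize(events: list[SeekEvent]) -> dict:
    """Summarize mean seek time, its variation, failure rate, and accuracy."""
    times = [e.seconds for e in events]
    retrieved = [e for e in events if e.found]
    accuracy = (
        sum(1 for e in retrieved if e.correct) / len(retrieved)
        if retrieved
        else None
    )
    return {
        "mean_seek_s": mean(times),
        # The sample standard deviation needs at least two observations.
        "sd_seek_s": stdev(times) if len(times) > 1 else 0.0,
        "failure_rate": 1 - len(retrieved) / len(events),
        "retrieval_accuracy": accuracy,
    }


# Illustrative values only.
events = [
    SeekEvent("paper chart", 40.0, True, True),
    SeekEvent("paper chart", 60.0, True, True),
    SeekEvent("paper chart", 80.0, True, False),
    SeekEvent("paper chart", 100.0, False, None),
]
summary = summarize(events)
print(summary)
```

Grouping the same summary by source or by data item would give the source-specific and data-specific variants mentioned above.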

Research on the effectiveness of computerized decision-support applications has traditionally emphasized the outcomes of decision making rather than the process of decision making. For instance, studies of the effectiveness of computerized reminder systems have generally used physician behavior as the principal end point (e.g., whether or not an appropriate action was taken in specific clinical circumstances,27 a screening test was recommended or dispensed,77,78 or a vaccine administered79). How much decision-making time related to information retrieval is saved, if any, has usually not been addressed. Similarly, most of the published work related to the decision-making impact of varying displays of patient data10,13 or relevant knowledge11,12 has focused on decision outcomes, with an emphasis on billable resource utilization other than decision-maker cognitive time and effort. At first glance, Blumenthal's80 call for the widespread application of industrial quality management science to clinical decision making appears to address this process orientation gap.8 However, Blumenthal stops short of recommending the application of process improvement methods to monitoring the decision-making process itself. Instead, he emphasizes the development of a statistical quality-control view of physiologic and pathophysiologic variables (e.g., blood pressure values) in order to help clinicians recognize when stable physiologic processes become unstable. The recent use of statistical process control methods by Kahn et al.81 to monitor expert system performance appears to be a step in the right direction.
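One way statistical process control could be turned toward the decision-making process itself is an individuals (XmR) control chart of information seek times. The sketch below is a generic textbook construction, not the method used by Kahn et al.; the function names and the sample seek times are hypothetical.

```python
from statistics import mean


def individuals_chart_limits(values):
    """Return (lower limit, center line, upper limit) for an XmR chart."""
    if len(values) < 2:
        raise ValueError("at least two observations are needed")
    # Moving range: absolute difference between consecutive observations.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = mean(values)
    # 2.66 is approximately 3 / d2, with d2 = 1.128 for ranges of size 2.
    spread = 2.66 * mean(moving_ranges)
    return center - spread, center, center + spread


def out_of_control(values, baseline):
    """Indices of values falling outside limits derived from the baseline."""
    lower, _, upper = individuals_chart_limits(baseline)
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Illustrative seek times in seconds (made-up values).
baseline = [50, 54, 48, 52, 50, 46]
new_times = [51, 95, 47]
limits = individuals_chart_limits(baseline)
flagged = out_of_control(new_times, baseline)
print(limits, flagged)
```

A flagged point would signal that retrieval for that task has become unstable and deserves investigation of the system, rather than of the individual clinician.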

Clinical Knowledge-Retrieval Process Measurement

Several authors have studied the knowledge-seeking behavior of physicians, and a recent overview is provided by Hersh.82 While these efforts have characterized physician clinical-knowledge needs48,83,84,85,86 and resource preferences,39,46,48,84,87,88,89,90 they have not measured actual knowledge-retrieval process components other than providing estimates of service-request frequency and process-failure rates. For instance, no estimates exist for the time spent on an average clinical knowledge-retrieval task. Estimates of service-request frequency vary widely∥ depending on how questions are gathered, and they range from one clinical knowledge question generated for every 15 patients in primary care settings84 to 1.8 questions per patient in the inpatient setting.83 Estimates of ambulatory care process failure (unmet clinical knowledge need during an encounter) range from 0.12 (ref. 84) to 5.2 (ref. 49) unanswered questions per half day, or from 8% (ref. 84) to 70% (ref. 48) of the total number of questions identified. Similar estimates presently do not exist for the inpatient setting. Considerably more process data exist for MEDLINE searching than for knowledge retrieval in general.8,42,82 These data are of relatively little use for knowledge-support process improvement, however, as literature searching represents only a small fraction of physician knowledge-seeking behavior.39

In addition to failing to define knowledge retrieval process components, studies characterizing knowledge needs may be misleading with respect to understanding the relationship between knowledge delivery systems and decision variability. This is because the recall88,91 and observational83,84 methods used to study clinical knowledge retrieval are biased toward eliciting consciously recognized and possibly extraordinary knowledge needs. For instance, of 103 unanswered questions from family physicians collected in a recent study, only 4 were asked by more than 1 physician.86 This suggests that knowledge needs related to routine clinical situations are frequently not recognized, perhaps because clinicians who deal with such situations feel unduly confident that they know the proper way to manage them.5,51,92

Unrecognized knowledge needs related to routine decisions may thus play a larger role in practice variation than recognized knowledge needs related to extraordinary decisions. This is significant because it is the large variation in such routine decisions that is both most disturbing from a health policy perspective and logically most amenable to reduction by improvement in knowledge delivery systems. Moreover, since routine decisions occur with high frequency, improved knowledge delivery efficiency related to routine decisions will likely be associated with higher productivity payoffs than improved efficiency related to nonrecurring decisions.

Patient Data Retrieval Process Measurement

Data From Clinical Production Environments

An understanding of processes related to knowledge retrieval alone, however, is not enough; most clinical decisions require a synthesis of clinical knowledge and patient data.5,83 As with clinical knowledge retrieval, process-oriented literature related to patient data retrieval is sparse.9 We have found no published observational data from production settings regarding the amount of physician time spent retrieving data for specific decision tasks. Nonetheless, some data are available regarding time spent retrieving patient data in general. Using work-sampling/time-motion methods, Mamlin and Baker91 found that physicians in a general medicine clinic spent 38% of their time charting (data retrieval and entry, combined), or 12 minutes out of the average 31 minutes spent per patient. Tang et al.,94 using observational methods across a variety of clinical settings, found that on average physicians spent 9% of their time reading. About 60% of physician reading activity in this study was related to retrieving patient data, and 8% was related to retrieving clinical knowledge. Zimmerman,26 using recall-based methods, found that internists spent from 3% to 75% (median 25%) of their total time per patient studying the medical record, or from 1 to 25 minutes (median 7.5 minutes) per patient. Another study found that nephrologists spent an average of 5 minutes per patient reviewing the medical record before entering the examination room.95

Data From Patient Data Retrieval Simulations

Given the difficulty of accurately isolating and measuring patient data retrieval process components associated with specific decision tasks under actual working conditions, it is not surprising that decision-making simulations have played an important role in our understanding of the impact of patient data retrieval structures on retrieval process and decision outcomes. We are aware of only 2 such studies, however, published over 20 years apart. The first was a simulated data retrieval exercise designed to compare the impact of four different paper-record formats on the task of retrieving standard patient data from the record. In that study, Fries15 found that a record with fixed-format, time-oriented flow sheet organization permitted access to data in one fourth the time of other formats. Retrieval accuracy was also improved: no errors in data retrieval were observed with the time-oriented record compared with a 10-15% error rate with other formats. This work was recently replicated by Willard et al.61 using alternative computerized patient data display formats. In this study, a targeted data retrieval simulation was used to compare data retrieval times and accuracy for clinical microbiology results using a Web browser-based reporting system versus a conventional laboratory reporting system. Participants using the browser-based system, which provided a summarized data display with facilitated access to more detailed information, were able to answer a set of routine questions (e.g., “From what site and when has Pseudomonas aeruginosa been isolated?”) in 45% less time than with the conventional reporting system. Half of the searches using the conventional results-reporting system involved at least one major retrieval error, whereas no errors were seen with the summarized display system.61

Unpublished data from a pilot study conducted by one of the present authors (RE) extends these findings from targeted data retrieval to simulated decision tasks: physician interpretation time for new serum lipid test results was reduced by 39% when the usual paper record was accompanied by a printed summary display of patient data relevant to lipid management. Interestingly, low-density lipoprotein cholesterol goal setting, a cornerstone of lipid-related decision making, was more often concordant with guideline recommendations when the summary display was available, even though the display did not include knowledge-based advice. (Elson, RB. The impact of anticipatory patient data displays on physician decision making: a dissertation proposal for a doctoral degree in health informatics, University of Minnesota, accepted April 1996.)

Data obtained during actual work in production settings corroborate the findings from these simulations. The use of a cholesterol summary reporting system at a large Minnesota health maintenance organization has cut the average physician interpretation time for new lipid test results from 101 seconds to 49 seconds per test, with estimated plan-wide savings of $100,000 per year (personal communication, Michael Koopmeiners, MD, HealthPartners, Minneapolis). Garrett et al.95 conducted a randomized clinical trial to compare the efficacy of a CPR with that of the usual paper record in a nephrology clinic. They found that during encounters in which physicians were assigned to use a CPR, less time was spent obtaining data from the record and significantly fewer data retrieval errors were made related to overlooking medical problems and drug therapies.95
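The interpretation times reported for the health maintenance organization can be placed on the same relative scale as the simulation results (reductions of 39% and 45%). The short sketch below does that arithmetic; the function name `relative_reduction` is introduced here for illustration only.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fraction by which a time (or other quantity) fell from before to after."""
    if before <= 0:
        raise ValueError("the starting value must be positive")
    return (before - after) / before


# Average interpretation time per lipid test result, in seconds, as reported.
hmo_reduction = relative_reduction(101, 49)
print(f"{hmo_reduction:.1%}")  # 51.5%
```

On these figures, the reduction observed in the production setting (about 52%) is of the same order as, and somewhat larger than, the reductions seen in the simulations.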

Conclusions

Efforts to improve clinical decision making based on educating and/or motivating individuals have had a minimal impact on decision-making quality and variability, due in large part to nonmodifiable human memory and cognitive processing constraints. We have presented a view of decision making as an industrial production in order to facilitate the identification of modifiable sources of decision-making variability external to individual decision makers. Currently prevalent systems that control access to available clinical knowledge and patient history data constrain decision-maker access to information needed to optimally process clinical decisions. These systems thus contribute to excessive decision variability and are responsible for process failure rates that would be considered unacceptably high in almost any industrial production setting.

In spite of the obvious importance of clinical knowledge and patient data to clinical decision makers, process data related to retrieving these types of information remain scarce. Such items of data are important for effective decision-support process improvement and for measuring the value of clinical information systems. While characterization of clinician information-seeking behavior permits estimation of the demands likely to be placed on information storage and retrieval systems, it is ultimately the systems themselves that must be examined and modified if the problems of knowledge updating for clinical problem solving and unreliable retrieval of patient data are to be solved. As the use of electronic patient data and clinical knowledge sources increases, it will become easier to track information seek times and other process variables related to information retrieval in clinical production settings. While this will facilitate efforts to incrementally improve computer-based information-retrieval processes, we may never be able to quantitatively compare such processes with their paper-based equivalents unless similar data are collected now. Decision-making simulations provide a feasible method for collecting such data.

Admittedly, the absence of formal quantitative assessments of information retrieval efficiency gains associated with CPR systems will not likely hinder their adoption. As evidence of the harmful effects of current information support systems and the beneficial effects of computerized alternatives on decision quality and resource utilization continues to mount, maintenance of the status quo will increasingly become difficult to justify. Simultaneously, competitive advantage related to patient care workflow efficiency along with more efficient capture of data for performance measurement will make computerized systems easier to justify. Changes in physician roles from independent contractors to employees, coupled with the increasing recognition of the inability of individual physicians to effectively meet their information needs on their own, should further increase pressure on organizations to develop new information-support strategies.

As these strategies are developed, information should be regarded as a key supply needed to fuel a core production process. Inventory management strategies that optimize the timely transfer of information stock to decision-making clinicians will reduce decision variability, improve workflow, and reduce quality dependence on inspection. Information storage and distribution strategies based on Web-browser technology are facilitating a paradigm shift from clinician-directed toward resource-directed information management, and this shift will make it easier to implement JIT information management solutions. This shift will also depend on control systems designed to intelligently select and effectively display task-specific patient data and clinical knowledge. These control systems, in turn, will depend on a still undetermined standardized clinical vocabulary and emerging strategies for the real-time capture of coded data. Nonetheless, complete codification of patient data is not necessary for clinical production purposes, and may be counterproductive.

Finally, the framing of clinical decision making as an industrial process is not intended to depersonalize decision making or suggest a diminished role for individual clinician expertise. While the payoffs of improved information-management strategies are consistent with the societal and institutional imperatives of reduced practice variation and more appropriate resource utilization, individual clinician decision makers and their patients will benefit most directly. Clinicians will be free to focus their expertise on synthesizing available clinical knowledge and patient data rather than wasting precious time and effort on simple information-retrieval tasks, and patients will benefit from higher quality decisions as a result. If efficiency gains and associated time savings are not entirely translated into higher production expectations, then physicians may even have more time to spend communicating with and enjoying their patients.

Supported in part by Grant T15 LM-07041 (Training in Medical Informatics) from the National Library of Medicine, Bethesda, MD (Drs. Elson and Faughnan).

Footnotes

The focus here is on encounters related to arriving at recommendations for treatment or further diagnostic testing.

Decision “outcome” as used here refers to the actual decision made, not to the outcome of care that may have resulted from testing or treatment related to a decision.

Other important products besides decisions result from clinician-patient encounters, such as satisfied or educated patients, but these are not considered here. This is not intended to minimize the importance of aspects of patient care not mechanistically related to formulating recommendations.

In addition to face-to-face interactions, decision-based encounters include telephone calls, prescription refill requests, and the clinician review of test results even when a patient is not present.

The variation in these estimates is due to differing methods used to quantify information needs, ranging from mail survey-based recall,89 immediate postencounter interviewer-stimulated recall,48 delayed interviewer-stimulated recall,90 and video-stimulated recall85 to tape-recorded84 and anthropologist-based83 observation of recognized and verbally stated questions.83,84

References

- 1. Berwick D, Godfrey AB, Roessner J. Curing Health Care: New Strategies for Quality Improvement. San Francisco: Jossey-Bass, 1990.

- 2. Horn SD, Hopkins DE (eds). Clinical Practice Improvement: A New Technology for Developing Cost-Effective Quality Health Care. Medical Outcomes and Practice Guidelines Library, vol. 1. New York: Faulkner & Gray, 1994.

- 3. Bogner MS (ed). Human Error in Medicine. Hillsdale, NJ: Lawrence Erlbaum, 1994.

- 4. Berwick DM. A primer on leading the improvement of systems. BMJ. 1996;312: 619-23.

- 5. Smith R. What clinical information do doctors need? BMJ. 1996;313: 1062-8.

- 6. Huth EJ. Needed: an economics approach to systems for medical information. Ann Intern Med. 1985;103: 617-9.

- 7. Leape LL, Bates DW, Cullen DJ, Cooper J, Demonaco HJ, Gallivan T, et al. Systems analysis of adverse drug events. ADE Prevention Study Group. JAMA. 1995;274: 35-43.

- 8. Shapiro AR. Knowledge retrieval and the medical information sciences. In: Kuhn RL (ed). Frontiers of Medical Information Sciences. New York: Praeger, 1988; 21-31.

- 9. Tang PC, Fafchamps D, Shortliffe EH. Traditional medical records as a source of clinical data in the outpatient setting. Proc Annu Symp Comput Appl Med Care. 1994; 575-9.

- 10. Tierney WM, McDonald CJ, Martin DK, Rogers MP. Computerized display of past test results: effect on outpatient testing. Ann Intern Med. 1987;107: 569-74.

- 11. Tierney WM, McDonald CJ, Hui SL, Martin DK. Computer predictions of abnormal test results. Effects on outpatient testing. JAMA. 1988;259: 1194-8.

- 12. Tierney WM, Miller ME, McDonald CJ. The effect on test ordering of informing physicians of the charges for outpatient diagnostic tests. N Engl J Med. 1990;322: 1499-1504.

- 13. Connelly DP, Sielaff BH, Willard KE. A clinician's workstation for improving laboratory use: integrated display of laboratory results. Am J Clin Pathol. 1995;104: 243-52.

- 14. Dick RS, Steen EB (eds). The computer-based patient record: an essential technology for health care. Washington, DC: National Academy Press, 1991.

- 15. Fries JF. Alternatives in medical record formats. Med Care. 1974;12: 871-81.

- 16. Donabedian A. The role of outcomes in quality assessment and assurance. QRB Qual Rev Bull. 1992;18: 356-60.

- 17. Newell A, Simon HA. Human Problem Solving. Englewood Cliffs, NJ: Prentice-Hall, 1972.

- 18. Connelly DP, Johnson PE. The medical problem solving process. Hum Pathol. 1980;11: 412-9.

- 19. Elson RB, Connelly DP. Computerized decision support systems in primary care. Prim Care. 1995;22: 365-84.

- 20. Tversky A, Kahneman D. Judgments under uncertainty: heuristics and biases in judgments reveal some heuristics of thinking under uncertainty. Science. 1974;185: 1124-31.

- 21. Elstein AS, Shulman LS, Sprafka SA. Medical Problem Solving: An Analysis of Clinical Reasoning. Cambridge, MA: Harvard University Press, 1978.

- 22. Patel VL, Evans DA, Groen GJ. Biomedical knowledge and clinical reasoning. In: Evans DA, Patel VL (eds). Cognitive Science in Medicine: Biomedical Modeling. Cambridge, MA: MIT Press, 1989.

- 23. Codman EA. The product of a hospital. Surg Gyn Obstet. 1914;22: 491-6.

- 24. Moray N. Error reduction as a systems problem. In: Bogner MS (ed). Human Error in Medicine. Hillsdale, NJ: Lawrence Erlbaum, 1994; 67-92.

- 25. Schneeweiss R, Rosenblatt RA, Cherkin DC, Kirkwood CR, Hart G. Diagnosis clusters: a new tool for analyzing the content of ambulatory medical care. Med Care. 1983;21: 105-22.

- 26. Zimmerman J. Physician utilization of medical records: preliminary determinations. Med Inf (Lond). 1978;8: 27-35.

- 27. McDonald CJ. Protocol-based computer reminders, the quality of care and the non-perfectability of man. N Engl J Med. 1976;295: 1351-5.

- 28. Elson RB, Connelly DP. Computerized patient records in primary care: their role in mediating guideline-driven physician behavior change. Arch Fam Med. 1995;4: 698-705.

- 29. Shea S, DuMouchel W, Bahamonde L. A meta-analysis of 16 randomized controlled trials to evaluate computer-based clinical reminder systems for preventive care in the ambulatory setting. J Am Med Inform Assoc. 1996;3: 399-409.

- 30. Balas EA, Austin SM, Mitchell JA, Ewigman BG, Bopp KD, Brown GD. The clinical value of computerized information services: a review of 98 randomized clinical trials. Arch Fam Med. 1996;5: 271-8.

- 31. Johnston ME, Langton KB, Haynes RB, Mathieu A. Effects of computer-based clinical decision support systems on clinician performance and patient outcome: a critical appraisal of research. Ann Intern Med. 1994;120: 135-42.

- 32. Grimshaw JM, Russell IT. Effect of clinical guidelines on medical practice: a systematic review of rigorous evaluations. Lancet. 1993;342: 1317-22.

- 33. Austin SM, Balas EA, Mitchell JA, Ewigman BG. Effect of physician reminders on preventive care: meta-analysis of randomized clinical trials. Proc Annu Symp Comput Appl Med Care. 1994; 121-4.

- 34. Woolf SH. Practice guidelines: a new reality in medicine. III. Impact on patient care. Arch Intern Med. 1993;153: 2646-55.

- 35. Davis DA, Thomson MA, Oxman AD, Haynes RB. Changing physician performance: a systematic review of the effect of continuing medical education strategies. JAMA. 1995;274: 700-5.

- 36. Elson RB, Connelly DP. Are reminder systems a form of CME? JAMA. 1995;274: 1836-7. Letter.

- 37. Pestotnik SL, Classen DC, Evans RS, Burke JP. Implementing antibiotic practice guidelines through computer-assisted decision support: clinical and financial outcomes. Ann Intern Med. 1996;124: 884-90.

- 38. Teich JM, Schmiz JL, O'Connell EM, Fanikos J, Marks PW, Shulman LN. An information system to improve the safety and efficiency of chemotherapy ordering. Proc Annu Symp Comput Appl Med Care. 1996; 498-502.

- 39. Curley SP, Connelly DP, Rich EC. Physicians' use of medical knowledge resources: preliminary theoretical framework and findings. Med Decis Making. 1990;10: 231-41.

- 40. Ely JW, Levinson W, Elder NC, Mainous AGr, Vinson DC. Perceived causes of family physicians' errors. J Fam Pract. 1995;40: 337-44.

- 41. Haynes RB, McKibbon KA, Fitzgerald D, Guyatt GH, Walker CJ, Sackett DL. How to keep up with the medical literature: VI. How to store and retrieve articles worth keeping. Ann Intern Med. 1986;105: 978-84.

- 42. Haynes RB, McKibbon KA, Walker CJ, Ryan N, Fitzgerald D, Ramsden MF. Online access to MEDLINE in clinical settings: a study of use and usefulness. Ann Intern Med. 1990;112: 78-84.

- 43. Haynes RB, Johnston ME, McKibbon KA, Walker CJ, Willan AR. A program to enhance clinical use of MEDLINE: a randomized controlled trial. Online J Curr Clin Trials. 1993;56.

- 44. Walker CJ, McKibbon KA, Haynes RB, Ramsden MF. Problems encountered by clinical end users of MEDLINE and GRATEFUL MED. Bull Med Libr Assoc. 1991;79: 67-9.

- 45. Walker CJ, McKibbon KA, Haynes RB, Johnston ME. Performance appraisal of online MEDLINE access routes. Proc Annu Symp Comput Appl Med Care. 1992; 483-7.

- 46. Connelly DP, Rich EC, Curley SP, Kelly JT. Knowledge resource preferences of family physicians. J Fam Pract. 1990;30: 353-9.

- 47. Tang PC, Annevelink J, Fafchamps D, Stanton WM, Young CY. Physicians' workstations: integrated information management for clinicians. Proc Annu Symp Comput Appl Med Care. 1991; 569-73.

- 48. Covell DG, Uman GC, Manning PR. Information needs in office practice: are they being met? Ann Intern Med. 1985;103: 596-9.

- 49. Gorman PN, Helfand M. Information seeking in primary care: how physicians choose which clinical questions to pursue and which to leave unanswered. Med Decis Making. 1995;15: 113-9.

- 50. Stross JK, Harlan WR. Dissemination of relevant information on hypertension. JAMA. 1981;246: 360-2.

- 51. Williamson JW, German PS, Weiss R, Skinner EA, Bowes FD. Health science information management and continuing education of physicians: a survey of U.S. primary care practitioners and their opinion leaders. Ann Intern Med. 1989;110: 151-60.

- 52. Kletke PR, Emmons DW, Gillis KD. Current trends in physicians' practice arrangements: from owners to employees. JAMA. 1996;276: 555-60.

- 53. Matheson NW. Things to come: postmodern digital knowledge management and medical informatics. J Am Med Inform Assoc. 1995;2: 73-8.

- 54. Cimino JJ, Socratous SS, Clayton PD. Internet as clinical information system: application development using the world wide web. J Am Med Inform Assoc. 1995;2: 273-84.

- 55. Lowe HJ, Lomax EC, Polonkey SE. The World Wide Web: a review of an emerging internet-based technology for the distribution of biomedical information. J Am Med Inform Assoc. 1996;3: 1-14.

- 56. Hersh WR, Brown KE, Donohoe LC, Campbell EM, Horacek AE. CliniWeb: Managing clinical information on the World Wide Web. J Am Med Inform Assoc. 1996;3: 273-80.

- 57. AAFP. Medical informatics and computer applications: core educational guidelines for family practice residents. Fam Pract Mgmt. 1996;3: 41-7.

- 58. Elson RB. Staying afloat in the medical information flood (editorial). Fam Pract Mgmt. 1996;3: 16-7.

- 59. Kay S, Purves IN. Medical records and other stories: a narratological framework. Meth Inform Med. 1996;35: 72-87.

- 60. Nygren E, Henriksson P. Reading the medical record. I. Analysis of physicians' ways of reading the medical record. Comput Methods Programs Biomed. 1992;39: 1-12.

- 61. Willard KE, Johnson JR, Connelly DP. Radical improvements in the display of clinical microbiology results: a Web-based clinical information system. Am J Med. 1996;101: 541-9.

- 62. Harris JM, Jr. Disease management: new wine in new bottles? Ann Intern Med. 1996;124: 838-42.

- 63. Epstein RS, Sherwood LM. From outcomes research to disease management: a guide for the perplexed. Ann Intern Med. 1996;124: 832-7.

- 64. Walton M. The Deming Management Method. New York: Perigee, 1986.

- 65. Ohno T. Toyota Production System: Beyond Large Scale Production. Cambridge, MA: Productivity Press, 1988.

- 66. Cimino C, Barnett GO. Analysis of physician questions in an ambulatory care setting. Comput Biomed Res. 1992;25: 366-73.

- 67. Cimino JJ. Data storage and knowledge representation for clinical workstations. Int J Biomed Comput. 1994;34: 185-94.

- 68. Chute CG, Cohn SP, Campbell KE, Oliver DE, Campbell JR. The content coverage of clinical classifications. For The Computer-Based Patient Record Institute's Work Group on Codes & Structures. J Am Med Inform Assoc. 1996;3: 224-33.

- 69. Mullins HC. Conference Summary Report: Moving Toward International Standards in Primary Care Informatics: Clinical Vocabulary. New Orleans, 1995. AHCPR Pub. No. 96-0069.

- 70.Broverman CA, Clyman JI, Schlesinger JM. Clinical decision support for physician order-entry: design challenges. Proc Annu Symp Comput Appl Med Care. 1996; 572-6. [PMC free article] [PubMed]

- 71.Tierney WM, Miller ME, Overhage JM, McDonald CJ. Physician inpatient order writing on microcomputer workstations: effects on resource utilization. JAMA. 1993;269: 379-83. [PubMed] [Google Scholar]

- 72.Yamazaki S, Satomura Y, Suzuki T, Arai K, Honda M, Takabayashi K. The concept of template assisted electronic medical record. Medinfo 1995;8 Pt 1: 249-52. [PubMed]

- 73.Sittig DF, Stead WW. Computer-based physician order entry: the state of the art. J Am Med Inform Assoc. 1994;1: 108-23. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Margolis CZ, Warshawsky SS, Goldman L, Dagan O, Wirtschafter D, Pliskin JS. Computerized algorithms and pediatricians' management of common problems in a community clinic. Acad Med. 1992;67: 282-4. [DOI] [PubMed] [Google Scholar]

- 75.Laffel G, Blumenthal D. The case for using industrial quality management science in health care organizations. JAMA. 1989;262: 2869-73. [PubMed] [Google Scholar]

- 76.Kritchevsky SB, Simmons BP. Continuous quality improvement: concepts and applications for physician care. JAMA. 1991;266: 1817-23. [DOI] [PubMed] [Google Scholar]

- 77.Frame PS, Zimmer JG, Werth PL, Hall WJ, Eberly SW. Computer-based vs manual health maintenance tracking: a controlled trial. Arch Fam Med. 1994;3: 581-8. [DOI] [PubMed] [Google Scholar]

- 78.Ornstein SM, Garr DR, Jenkins RG, Rust PF, Arnon A. Computer-generated physician and patient reminders: tools to improve population adherence to selected preventive services. J Fam Pract. 1991;32: 82-90. [PubMed] [Google Scholar]

- 79.Rosser WW, Hutchison BG, McDowell I, Newell C. Use of reminders to increase compliance with tetanus booster vaccination. Can Med Assoc J. 1992;146: 911-7. [PMC free article] [PubMed] [Google Scholar]

- 80.Blumenthal D. Total quality management and physicians' clinical decisions. JAMA. 1993;269: 2775-8. [PubMed] [Google Scholar]

- 81. Kahn MG, Bailey TC, Steib SA, Fraser VJ, Dunagan WC. Statistical process control methods for expert system performance monitoring. J Am Med Inform Assoc. 1996;3:258-69.

- 82. Hersh WR. Information Retrieval: A Health Care Perspective. In: Orthner HF (ed). Computers and Medicine. New York: Springer-Verlag, 1995.

- 83. Osheroff JA, Forsythe DE, Buchanan BG, Bankowitz RA, Blumenfeld BH, Miller RA. Physicians' information needs: analysis of questions posed during clinical teaching. Ann Intern Med. 1991;114:576-81.

- 84. Ely JW, Burch RJ, Vinson DC. The information needs of family physicians: case-specific clinical questions. J Fam Pract. 1992;35:265-9.

- 85. Timpka T, Arborelius E. The GP's dilemmas: a study of knowledge need and use during health care consultations. Methods Inf Med. 1990;29:23-9.

- 86. Chambliss ML, Conley J. Answering clinical questions. J Fam Pract. 1996;43:140-4.

- 87. Osheroff JA, Bankowitz RA. Physicians' use of computer software in answering clinical questions. Bull Med Libr Assoc. 1993;81:11-9.

- 88. Northup DE, Moore-West M, Skipper B, Teaf SR. Characteristics of clinical information-searching: investigation using critical incident technique. J Med Educ. 1983;58:873-81.

- 89. Timpka T, Ekstrom M, Bjurulf P. Information needs and information seeking behaviour in primary health care. Scand J Prim Health Care. 1989;7:105-9.

- 90. Stinson ER, Mueller DA. Survey of health professionals' information habits and needs. JAMA. 1980;243:140-3.

- 91. Lindberg DA, Siegel ER, Rapp BA, Wallingford KT, Wilson SR. Use of MEDLINE by physicians for clinical problem solving. JAMA. 1993;269:3124-9.

- 92. Stross JK, Harlan WR. The dissemination of new medical information. JAMA. 1979;241:2622-4.

- 93. Mamlin JJ, Baker DH. Combined time-motion and work sampling study in a general medicine clinic. Med Care. 1973;11:449-56.

- 94. Tang PC, Jaworski MA, Fellencer CA, Kreider N, LaRosa MP, Marquardt WC. Clinician information activities in diverse clinical practices. Proc Annu Symp Comput Appl Med Care. 1996:12-6.

- 95. Garrett LJ, Hammond WE, Stead WW. The effects of computerized medical records on provider efficiency and quality of care. Methods Inf Med. 1986;25:151-7.