Abstract

Background

Diagnosis of attention deficit hyperactivity disorder (ADHD) relies on subjective methods which can lead to diagnostic uncertainty and delay. This trial evaluated the impact of providing a computerised test of attention and activity (QbTest) report on the speed and accuracy of diagnostic decision-making in children with suspected ADHD.

Methods

Randomised, parallel, single-blind controlled trial in mental health and community paediatric clinics in England. Participants were 6-17 years old and referred for ADHD diagnostic assessment; all underwent assessment-as-usual, plus QbTest. Participants and their clinician were randomised either to receive the QbTest report immediately (QbOpen group) or to have the report withheld (QbBlind group). The primary outcome was the number of consultations until a diagnostic decision confirming/excluding ADHD within six months from baseline. Health economic cost-effectiveness and cost-utility analyses were conducted. Assessing QbTest Utility in ADHD: A Randomised Controlled Trial was registered at ClinicalTrials.gov (https://clinicaltrials.gov/ct2/show/NCT02209116).

Results

One hundred and thirty-two participants were randomised to the QbOpen group (123 analysed) and 135 to the QbBlind group (127 analysed). Clinicians with access to the QbTest report (QbOpen) were more likely to reach a diagnostic decision about ADHD (Hazard Ratio 1.44, 95% CI 1.04 to 2.01). At six months, 76% of those with a QbTest report had received a diagnostic decision, compared with 60% without. QbTest reduced appointment length by 15% (Time Ratio 0.85, 95% CI 0.77 to 0.93), increased clinicians’ confidence in their diagnostic decisions (Odds Ratio 1.77, 95% CI 1.09 to 2.89) and doubled the likelihood of excluding ADHD. There was no difference in diagnostic accuracy. Health economic analysis showed a position of strict dominance; however, cost savings were small, suggesting that the impact of providing the QbTest report within this trial can best be viewed as ‘cost neutral’.

Conclusion

QbTest may increase the efficiency of the ADHD assessment pathway, allowing greater patient throughput with clinicians reaching diagnostic decisions faster without compromising diagnostic accuracy.

Keywords: QbTest, Attention deficit hyperactivity disorder, ADHD, assessment, continuous performance test

Introduction

Attention deficit hyperactivity disorder (ADHD) is a common mental health disorder affecting approximately 3-5% of school age children (NICE, 2008), and is characterised by core symptoms of inattention, impulsivity and hyperactivity. ADHD frequently co-exists with other neurodevelopmental and psychiatric disorders and is a risk factor for major educational, social and occupational impairment, placing a huge burden on health, education, social care, and criminal justice systems. There has been a rapid growth in diagnosis over the last 30 years with the number of children recognised and treated for ADHD in the UK increasing almost 10-fold since the early 1980s (NICE, 2008). This has placed considerable strain on healthcare systems, and exposed serious limitations in existing methods of ADHD assessment.

There is no single test or biomarker used to diagnose ADHD (Bolea-Alamañac et al., 2014). In the absence of any objective measure to identify ADHD, clinical assessment and diagnosis is based on the clinician’s integration of various forms of subjective information including direct observation and reports from parents, teachers and young people. This approach is heavily reliant on subjective measures and clinical interpretation, which can lead to a lack of reliability and consistency in the diagnosis of ADHD (Ogundele et al., 2011). Furthermore, the process of ‘gold standard’ clinical interviews and data collection from multiple informants, and their interpretation, is time consuming and often impracticable in ‘real world’ clinical settings with frequent missing data and inconsistencies between observer reports leading to diagnostic uncertainty and delay (Kovshoff et al., 2012; Fridman et al., 2017). Early diagnosis and timely interventions reduce the risk of adverse long-term outcomes that are associated with ADHD such as antisocial behaviour, poor academic performance and social functioning (Shaw et al., 2012). Hence, there is a clear need for clinicians to swiftly identify ADHD when it is present and commence effective interventions (Faraone, 2015) as well as to confidently exclude ADHD when the diagnosis is not supported and so avoid unnecessary, costly and potentially harmful treatments. Objective measures of ADHD that augment, but do not replace, clinical assessment may help to increase diagnostic efficiency, reduce variability in practice and increase public confidence in ADHD diagnosis.

Objective measures of attention such as the continuous performance test (CPT) have been available for several decades. Many studies have shown impaired CPT performance in ADHD compared with typically developing controls, but the utility of the CPT in clinical assessment and diagnosis of ADHD remains unclear (Hall et al., 2016b). Specifically, although the CPT demonstrates good sensitivity to ADHD and correlates well with symptoms (Epstein et al., 2003), several studies have shown significant overlap in the performance of children with ADHD and typically developing children (Schatz et al., 2001; Zelnik et al., 2012; Grodzinsky & Barkley, 1999) leading to high false positive and false negative rates when attempting to use the CPT to aid diagnosis. There is also poor specificity of the CPT when comparing ADHD to clinical controls (Riccio & Reynolds, 2001; Solanto et al., 2004) probably reflecting the trans-diagnostic nature of attentional impairments. Moreover, there is evidence that variability in intellectual ability may confound the interpretation of CPT performance in ADHD (Munkvold et al., 2014; Milioni et al., 2017; Park et al., 2011). These are important considerations when using the CPT in a clinical setting and have so far undermined the use of CPT as a diagnostic tool. In addition, there are several variants of the CPT, including the Conners CPT (Conners, 1995) which requires the participant to respond rapidly to a series of stimuli but withhold the response to the target stimulus; the A-X CPT in which participants respond only to the target (‘X’) when it is preceded by a specific cue (‘A’) and the Tests of Variables of Attention (TOVA) (Dupuy et al., 1993) which requires participants to respond to a target shape but withhold the response to other shapes. All measure vigilance and sustained attention but differ in the demands they place on other executive functions such as inhibitory control (the Conners) or working memory (A-X CPT). 
The choice of CPT may be influenced by the age, clinical status and intellectual ability of the group under investigation.

Recent evidence suggests that combining a CPT with an objective measure of motor activity may add value in the clinical assessment of ADHD (Hall et al., 2016b). One study using this approach to augment clinical assessment reported sensitivity and specificity of 81% and 91% respectively (Gilbert et al., 2016). QbTest (Qbtech Ltd) combines a computerised CPT with an infra-red camera to detect motor activity during the test and provides an objective standardised measurement of attention, impulsivity and activity, corresponding to the three symptom domains of ADHD. QbTest is highly correlated with blinded observer ratings of ADHD symptoms in placebo-controlled trials (Wehmeier et al., 2011) and can help differentiate ADHD from other conditions (Vogt and Shameli, 2011). In studies designed to assess ‘stand-alone’ diagnostic accuracy, QbTest has only moderate sensitivity and low specificity to ADHD (Hult et al., 2015, Söderström et al., 2014). Importantly, these studies used QbTest independently of other clinical information. However, QbTest is not designed to act as a ‘stand-alone’ tool and is intended to augment, but not replace, the multi-informant approach. The United States FDA has approved QbTest as a decision-aid tool to augment, but not replace, standard clinical assessment of ADHD. Audit data suggest that when combined with other clinical information in a real-world setting, QbTest may reduce the number of appointments required to reach a diagnosis, potentially resulting in a cost-saving in a healthcare service (Hall et al., 2016a). This assessment approach has been shown to be acceptable to both families and clinicians (Hall et al., 2017).

In this trial, we evaluate the impact of QbTest on clinical diagnostic decision-making when added to routine clinical assessment of ADHD, compared to assessment as usual, using a pragmatic diagnostic randomised controlled trial design. Routine clinical care was chosen as the control condition to determine the added value to standard clinical practice of introducing this technology. We hypothesised that providing clinicians with a QbTest report would accelerate diagnostic decision-making (both confirming and excluding ADHD) without compromising diagnostic accuracy (Hall et al., 2014).

Methods

Trial design

The Assessing QbTest Utility in ADHD-Trial (AQUA-Trial) was a two-arm, parallel group, single-blind, multi-centre diagnostic randomised controlled trial (RCT) conducted across 10 child and adolescent mental health services (CAMHS) and community paediatric clinic sites in England. Both CAMHS and paediatric services were selected to reflect the mix of ADHD services across England and included an even split between sites new to QbTest (5 sites) and sites where QbTest was established practice (5 sites). All participants received a QbTest at one of their first three clinic appointments (98.4% conducted before, or at, appointment number 2). Participants were randomly assigned, in a 1:1 ratio stratified by site using a web-based system, either to have their clinician receive the QbTest report immediately (QbOpen group, n=123) or to have the report withheld until the study end (QbBlind group, n=127). Thus, all clinicians at a site assessed patients both with and without a QbTest report. Further randomisation details can be found in the protocol (Hall et al., 2014).

Participants

Eligible participants were children aged 6-17 years who had been referred for their first ADHD assessment. Exclusion criteria were previous or current ADHD diagnosis, being non-fluent in English and suspected moderate/severe intellectual disability. The study was conducted according to CONSORT (Consolidated Standards of Reporting Trials; Moher et al., 2010) guidelines (Appendix 1) and received ethical approval from Coventry and Warwick Research Ethics Committee (Ref: 14/WM/0166). Written informed consent was obtained after the procedures had been fully explained; for children under 16 years old, written consent was obtained from the parent/legal guardian and verbal or written assent was obtained from the child/young person. The trial progress was overseen by an independent Trial Steering Committee.

Procedures

Assessment as Usual

All participants received ‘assessment as usual’ for ADHD. As a pragmatic trial, assessments were not standardised and could vary between sites, with stratified randomisation used to balance these potential effects. Appendix 2 summarises assessment practices, which typically included an interview with the child and their family and the completion of at least one standardised informant-based behavioural assessment measure.

QbTest

QbTest (www.qbtech.com) combines a computerised CPT to measure attention and impulsivity with a high-resolution infra-red motion-tracking system to measure activity. The test takes 20-minutes to complete. There are two versions of the test: QbTest for children aged 7 to 12 years is designed as a simple target detection (‘go/no-go’) task in which participants must press a hand-held responder button each time a circle appears on-screen but withhold the response when a cross appears in front of the circle. This is similar to the Conners CPT as it includes an inhibitory component. QbTest+ for those aged 12+ years includes a working memory component (to avoid ceiling effects in the older age group) similar to an A-X CPT (described above). Participants monitor a stream of blue and red squares and circles and must respond each time two consecutive stimuli match on both colour and shape. This version requires participants to hold each stimulus in working memory in order to determine whether the next stimulus is a match. Physical activity is measured during the CPT via an infrared camera that tracks the path of a reflector attached to the centre of the participant’s forehead (Teicher et al., 1996). These elements of the test are visually displayed in a report which provides information on each of the three symptom domains of ADHD and summary scores for each individual based on deviation from a normative data set, based on age group and gender (Hall et al., 2014). Further details on the QbTest are reported elsewhere (Hult et al., 2015). All clinicians (including consultant psychiatrists and paediatricians, nurse specialists and healthcare assistants) underwent Qbtech approved training in conducting and interpreting test reports (healthcare assistants did not interpret tests). Qbtech provide additional support to clinicians in interpreting tests when needed. 
Clinicians were informed that QbTest is a diagnostic decision aid to be used alongside a comprehensive assessment and is not a ‘stand-alone’ diagnostic test.

Outcomes

Clinicians completed a short structured clinical record pro forma after each consultation. The pro forma documented information about the appointment duration, diagnosis, and confidence in the decision. The primary outcome was number of appointments until a diagnosis of ADHD was confirmed or excluded within six months of baseline. Secondary outcomes included: number of days until a diagnostic decision, duration of consultations (recorded in minutes by the clinician) until a diagnosis, clinician’s confidence in diagnostic decision (rated on a 7-point Likert scale from ‘definitely ADHD’ to ‘definitely not ADHD’), and stability in diagnosis (any change in diagnosis from first confirmed diagnosis throughout the study period).

The impact on diagnostic accuracy of adding the QbTest report to routine assessment was evaluated by comparing the clinician’s diagnosis with (QbOpen group) and without (QbBlind group) access to the QbTest report against an independent consensus research diagnosis made blind to group allocation using the Development and Well-being Assessment (DAWBA; Goodman et al., 2000). Two experienced child psychiatrists (CH and MM) blind to group allocation reached clinical consensus diagnoses using DSM-5 and ICD-10 (hyperkinetic disorder) criteria. Clinicians making the independent research diagnoses had access, where available, to clinician-completed Children’s Global Assessment Scale scores (CGAS; Shaffer et al., 1983) and Swanson, Nolan and Pelham version IV (SNAP-IV; Swanson et al., 2001), but did not have access to clinic records or structured pro formas. The child’s Quality Adjusted Life Year (QALY) was measured by the EQ5DY (Wille et al., 2010). All outcome assessors (researchers) were blind to arm allocation throughout the trial.

Statistical analysis

We initially powered the study with 178 participants to detect at least a minimal clinically important difference (MCID) in time to diagnosis (Hall et al., 2016a). An upward revision to the required sample size was made (Hall et al., 2014, Hall et al., 2016 erratum), based on the findings of a blinded review of the first 145 participants. This revealed that approximately 30% of the sample had not received a diagnostic decision within the six-month study period. In our revised protocol, a discrete-time survival approach using multilevel complementary-log-log regression was chosen as the most appropriate way to model ‘time’ to diagnostic decisions when (diagnostic) events occur in discrete-time (i.e. appointments) and some children may not receive a diagnosis within the six-month follow-up period (Hall et al., 2016 erratum).
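The link between the complementary-log-log model and the hazard ratio can be illustrated with a minimal sketch. It assumes the standard discrete-time form, in which the hazard at each appointment is 1 - exp(-exp(η)); the baseline linear predictors below are hypothetical and are not estimates from this trial, and the sketch omits the multilevel (site) structure. Only the hazard ratio of 1.44 is taken from the reported results.

```python
import math


def cloglog_hazard(eta: float) -> float:
    """Inverse complementary log-log link: discrete-time hazard for linear predictor eta."""
    return 1.0 - math.exp(-math.exp(eta))


def cumulative_probability(baseline_etas, log_hr=0.0):
    """Cumulative probability of a diagnostic decision after each appointment."""
    still_waiting = 1.0
    cumulative = []
    for eta in baseline_etas:
        # Probability of remaining undiagnosed shrinks by (1 - hazard) at each appointment.
        still_waiting *= 1.0 - cloglog_hazard(eta + log_hr)
        cumulative.append(1.0 - still_waiting)
    return cumulative


# Hypothetical baseline linear predictors for appointments 1 to 4 (NOT trial estimates).
baseline_etas = [-3.0, -1.5, -0.8, -0.5]
hazard_ratio = 1.44  # point estimate reported for QbOpen versus QbBlind

control = cumulative_probability(baseline_etas)
treated = cumulative_probability(baseline_etas, math.log(hazard_ratio))

for appointment, (c, t) in enumerate(zip(control, treated), start=1):
    print(f"appointment {appointment}: control {c:.3f}, with report {t:.3f}")
```

Under this link, a covariate that adds log(HR) to the linear predictor raises the proportion still waiting to the power HR, which is why the exponentiated coefficient can be read as a hazard ratio even though time is measured in appointments.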

Our revised power calculation estimated that 268 participants were required to detect a difference with 90% power at a two-tailed 0.05 significance level, assuming 20% of total variability to be explained by time, based on information shown in the audit data (Hall et al., 2016a). This revision was agreed by the independent Trial Steering Committee and was published (Hall et al., 2016 erratum).

Analysis was conducted in accordance with ICH E9 principles (European Medicines Agency, 1998) and CONSORT (Moher et al., 2010). Children who did not receive either the intervention (QbTest with report) or the comparator (QbTest without report) after randomisation were excluded from the analysis, while the intention-to-treat principle was still preserved (European Medicines Agency, 1998). This procedure has been well documented in other RCTs (Ngandu et al., 2015, Wagenlehner et al., 2015).

All time-to-event secondary outcomes were analysed using multilevel Weibull modelling (see Appendix 3 for the full Statistical Analysis Plan). All continuous outcomes were analysed using multilevel linear modelling; all categorical secondary outcomes were analysed using multilevel non-linear modelling (Goldstein, 2011; Browne & Rasbash, 2009). The diagnostic accuracy between groups was compared using ROC curve modelling. A secondary analysis, not specified in the published protocol, was conducted on the primary outcome stratified by type of QbTest administered, i.e. 6-12-years version or 12+years version (see Appendix 4). Missing values in continuous outcomes were imputed with multivariate modelling using the built-in MCMC algorithm of the MLwiN (v2.36) software under a missing-at-random assumption (Leckie & Charlton, 2013, Browne & Rasbash, 2009). Site was included as a higher-level unit in each multilevel model (Kahan, 2014, Kahan & Morris, 2013). STATA 14 (StataCorp, 2015) was used to analyse all data. Prior to recruitment of the first participant, the trial was prospectively registered with ClinicalTrials.gov (NCT02209116; https://clinicaltrials.gov/ct2/show/NCT02209116); it was also later registered with the ISRCTN (ISRCTN11727351; https://www.isrctn.com/ISRCTN11727351).

Economic evaluation

We used an NHS cost perspective in accordance with NICE guidance (NICE, 2012). The cost analysis focused on the staffing required to deliver a diagnosis confirming or excluding ADHD. After each of the child’s appointments with CAMHS or community paediatrics, the healthcare professionals in clinic completed a short pro forma detailing the time spent with each child and their family. The annual salary figures were obtained from the employing NHS Trusts in 2016 prices and the cost per minute was translated using average working week by grade from the PSSRU Cost of Health and Social Care 2016 (Curtis & Burns, 2016) (see Appendix 5 for full resource costing). As QbTest was administered to participants in both arms of this trial, the test cost cancelled out and was not specifically added to the calculation for the economic evaluation.

The primary outcome of number of appointments until a diagnosis of ADHD was confirmed or excluded could not be used for the economic analysis, as the cost of appointments and all related staff time formed part of the economic costs and would as such have resulted in double counting. Hence, the economic analysis used two secondary outcome measures: days until diagnostic decision and the EQ5DY (Wille et al., 2010). The health economic analysis was based on a six-month time frame, and discounting was not applied to costs or outcomes. As complete data were available on days until a diagnostic decision, a complete case analysis was used (n = 250) and a bootstrap of 1000 replications was run using these data. As a large number of individuals (n = 153) failed to complete the EQ5DY questionnaires, we used multiple imputation, a well-recognised method to adjust for the problems of missing data, to generate 30 imputed datasets for each intervention group and used these QALY scores to link to total healthcare staff costs at each time point.
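A bootstrap of this kind can be sketched as follows. The resampling scheme is not described in detail in the text, so this sketch assumes simple non-parametric resampling of participants with replacement within each arm; the function name, the synthetic cost and day values, and the percentile interval are all illustrative assumptions rather than the trial's method or data. Only the arm sizes (123 and 127) and the 1000 replications come from the text.

```python
import random
import statistics


def bootstrap_increments(arm_a, arm_b, replications=1000, seed=0):
    """Resample each arm with replacement and return (delta_cost, delta_days) pairs.

    arm_a and arm_b are lists of (cost, days) tuples, one per participant.
    Each increment is the mean of arm_a minus the mean of arm_b.
    """
    rng = random.Random(seed)
    increments = []
    for _ in range(replications):
        sample_a = rng.choices(arm_a, k=len(arm_a))
        sample_b = rng.choices(arm_b, k=len(arm_b))
        delta_cost = (statistics.fmean([cost for cost, days in sample_a])
                      - statistics.fmean([cost for cost, days in sample_b]))
        delta_days = (statistics.fmean([days for cost, days in sample_a])
                      - statistics.fmean([days for cost, days in sample_b]))
        increments.append((delta_cost, delta_days))
    return increments


# Synthetic placeholder data (NOT trial data): (cost in GBP, days to decision).
data_rng = random.Random(42)
open_arm = [(data_rng.gauss(100, 30), data_rng.gauss(90, 40)) for _ in range(123)]
blind_arm = [(data_rng.gauss(100, 30), data_rng.gauss(90, 40)) for _ in range(127)]

replicates = bootstrap_increments(open_arm, blind_arm)

# Simple percentile interval for the incremental cost (one of several possible choices).
cost_deltas = sorted(delta_cost for delta_cost, delta_days in replicates)
lower, upper = cost_deltas[25], cost_deltas[974]
print(f"incremental cost 95% percentile interval: {lower:.2f} to {upper:.2f}")
```

Plotting the replicate pairs gives a cost-effectiveness plane, which shows how much the incremental estimates vary across resamples.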

Results

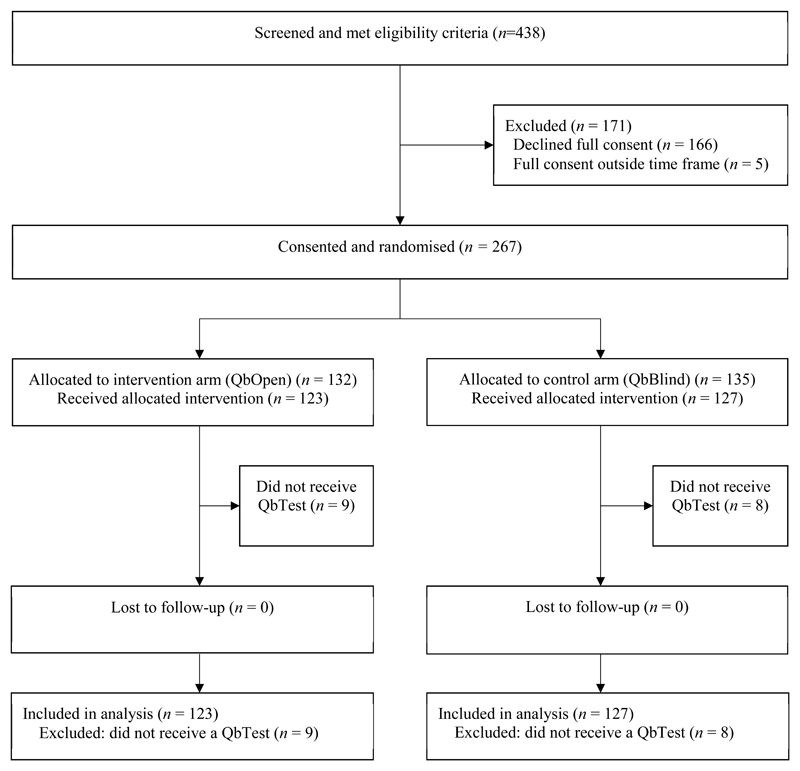

Figure 1 shows the flow of participants through the trial. Participant recruitment began on 8th August 2014 and ended on 15th December 2015. The last participant exited the trial on 15th June 2016, when the trial ended. Of the 438 eligible participants referred for an ADHD assessment to the 10 study sites, 267 consented and were randomised; the remainder did not consent. Of the 267 enrolled, 132 were randomised to the intervention arm (QbOpen) and 135 to the control arm (QbBlind). In both arms, similar numbers did not receive a QbTest (QbOpen n=9 and QbBlind n=8). These 17 participants did not engage with services after consenting and therefore did not receive any form of clinical assessment, including QbTest, and were consequently excluded from the study, resulting in analysis of 123 participants in the QbOpen arm and 127 in the QbBlind arm.

Figure 1. Trial profile.

Analysis was conducted in accordance with the European Medicines Agency Guidelines (1998) and CONSORT 2010 (Moher et al., 2010). Participants who did not receive a QbTest were excluded from analysis.

Table 1 shows that participants in the intervention and control groups had similar characteristics at baseline, indicating that potential confounders such as age and gender should not have impacted on group comparisons. Independent consensus research diagnoses derived from the DAWBA (n = 241) indicated the following diagnoses (allowing more than one diagnosis per participant): 171 (71%) ADHD (DSM 5 ADHD + ICD-10 HKD), 85 (35%) oppositional defiant disorder/conduct disorder, 48 (20%) any anxiety disorder, 41 (17%) chronic tic disorder/Tourette syndrome, 22 (9%) autism spectrum disorder, 8 (3%) depressive disorder, 26 (11%) learning difficulties and 1 (0.4%) attachment disorder; 45 (19%) were classified as having no psychiatric diagnosis using DAWBA. No adverse effects with QbTest were reported.

Table 1.

Sociodemographic and clinical characteristics of participants with QbTest report withheld (QbBlind group) or QbTest report disclosed (QbOpen group). Figures are number (percentage) of participants unless stated otherwise.

| | QbBlind (control; report withheld) (n = 127) | QbOpen (intervention; report disclosed) (n = 123) |
|---|---|---|
| Sex (%) | | |
| Male | 102 (80) | 95 (77) |
| Female | 25 (20) | 28 (23) |
| Age (years): mean (SD) (min, max) | 9.4 (2.8) (5.9, 16.2) | 9.5 (2.8) (6.0, 17.4) |
| Ethnicity %* | n = 89 | n = 83 |
| White | 80 (90) | 73 (88) |
| Mixed and other | 9 (10) | 10 (12) |
| Strengths & Difficulties Questionnaire – Parent (SDQ-P)*: mean (SD) | n = 108 | n = 90 |
| Emotional problems | 4.9 (2.8) | 4.4 (2.9) |
| Conduct problems | 5.9 (2.4)+ | 5.9 (2.7)+ |
| Hyperactivity | 8.8 (1.3)++ | 8.9 (1.6)++ |
| Peer problems | 4.6 (2.4)+ | 4.1 (2.4)+ |
| Pro-social behaviour | 5.3 (2.3) | 5.6 (2.1) |
| Total difficulties score | 24.3 (5.9)+ | 23.3 (6.2)+ |
| Impact score | 5.9 (2.6)+ | 5.8 (2.6)+ |
| Strengths & Difficulties Questionnaire – Teacher (SDQ-T)*: mean (SD) | n = 85 | n = 75 |
| Emotional problems | 2.9 (3.1) | 2.7 (2.6) |
| Conduct problems | 3.9 (2.9) | 3.3 (2.7) |
| Hyperactivity | 7.6 (2.5)+ | 7.2 (2.8)+ |
| Peer problems | 2.9 (2.3) | 2.4 (2.8) |
| Pro-social behaviour | 5.2 (2.4) | 5.3 (2.5) |
| Total difficulties score | 17.5 (7.4)+ | 15.7 (6.9) |
| Impact score | 3.0 (2.0)+ | 2.6 (1.7)+ |
| Children’s Global Assessment Scale (CGAS): mean (SD) | 54.9 (9.9) | 56.2 (11.7) |
| Type of clinical service (%) | n = 127 | n = 123 |
| CAMHS | 60 (47) | 59 (48) |
| Community Paediatrics | 67 (53) | 64 (52) |

Data are n (%) or mean (SD/range). ‘Other’ ethnicity includes Pakistani, Indian and Other Asian. *Data not available for all randomised participants. +Scores are in the abnormal range (top 10%); ++scores are in the top 5%. CAMHS = child and adolescent mental health services. Higher scores on the Strengths and Difficulties Questionnaire (SDQ) indicate more problems with the exception of pro-social behaviour. Children’s Global Assessment Scale (CGAS) is rated by clinicians. Lower scores indicate more problems. CGAS scores 51-60 represent some noticeable problems in more than one area and variable functioning with sporadic difficulties or symptoms in several but not all social areas. Ethnicity was self-reported.

Primary outcome

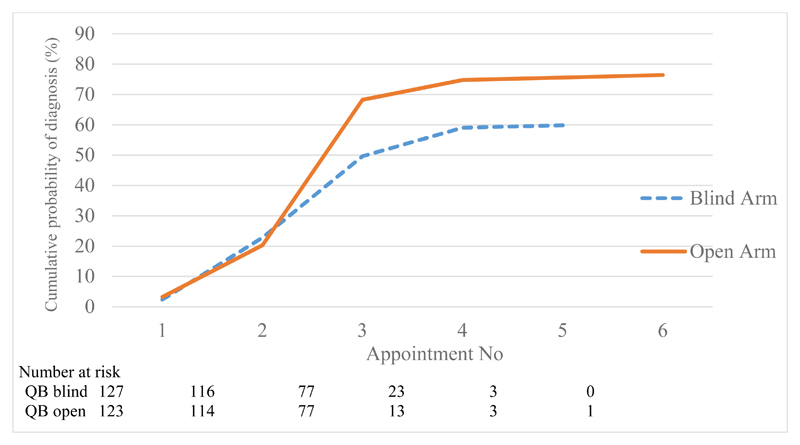

Participants whose clinician had access to the QbTest report (QbOpen group) were significantly more likely to receive an earlier diagnostic decision about ADHD (Figure 2 and Appendix 6). Participants whose clinician had access to a QbTest report were 44% more likely during the study period to receive a diagnostic decision either confirming or excluding ADHD compared with those having assessment as usual where the QbTest report was withheld (HR = 1.44; 95% CI = 1.04 to 2.01; p = .029).

Figure 2. Primary outcome - Observed cumulative probability of confirmed diagnosis by appointment number with QbTest report withheld (QbBlind group) or QbTest report disclosed (QbOpen group).

Note: time between appointments may not be at a consistent interval

Number at risk is defined in survival analysis as the number of patients who have not yet had the event of interest (in this trial; a confirmed diagnostic decision) or dropped out at the beginning of each time interval.

Secondary outcomes

Clinicians were more likely to make a diagnostic decision about ADHD when they had access to a QbTest report (QbOpen) than when the QbTest report was withheld (QbBlind) (94/123 (76%) v. 76/127 (60%), OR = 2.43; 95% CI = 1.34 to 4.39; p = .003; Figure 2). Further exploratory analysis shows that clinicians were twice as likely to exclude a diagnosis of ADHD when they had access to a QbTest report (25/123 (20%) v. 11/127 (9%), RRR = 2.14; 95% CI = 1.00 to 4.59; p = .049). Clinicians were also more confident in their diagnostic decision about ADHD in the QbOpen group compared with the QbBlind group (OR = 1.77; 95% CI = 1.09 to 2.89; p = .022). Secondary outcomes are presented in Table 2. Further analyses, including a secondary analysis of the primary outcome stratified by the type of QbTest administered, i.e. 6-12-years version or 12+years version (see Appendix 4), are provided in the supplementary online appendices.
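For orientation, the crude (unadjusted) odds ratio and risk difference can be recomputed directly from the counts quoted above. This is a minimal sketch with illustrative function names; it ignores clustering by site, so its results (about 2.18 and 0.17) are not expected to match the reported model-based estimates (OR = 2.43; RD = 0.15), which presumably reflect the multilevel modelling described in the Methods.

```python
def odds_ratio(events_a: int, n_a: int, events_b: int, n_b: int) -> float:
    """Crude odds ratio of group A versus group B from event counts and group sizes."""
    odds_a = events_a / (n_a - events_a)
    odds_b = events_b / (n_b - events_b)
    return odds_a / odds_b


def risk_difference(events_a: int, n_a: int, events_b: int, n_b: int) -> float:
    """Crude difference in event proportions, group A minus group B."""
    return events_a / n_a - events_b / n_b


# Diagnostic decision made: QbOpen 94/123 versus QbBlind 76/127 (counts from the text).
unadjusted_or = odds_ratio(94, 123, 76, 127)
unadjusted_rd = risk_difference(94, 123, 76, 127)

print(f"unadjusted OR = {unadjusted_or:.2f}, unadjusted RD = {unadjusted_rd:.2f}")
```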

Table 2.

Secondary outcomes and group differences for QbOpen (QbTest report disclosed) versus QbBlind (QbTest report withheld). Figures are number (percentage) of participants unless stated otherwise.

| | QbBlind arm (n = 127) | QbOpen arm (n = 123) | Comparison |
|---|---|---|---|
| Diagnostic decision made (%) | 76 (60) | 94 (76) | OR = 2.43; 95% CI [1.34 to 4.39]; p = .003; RD = 0.15; 95% CI [0.05 to 0.25]; p = .005 |
| Diagnostic status (%)# | | | |
| ADHD confirmed | 65 (51) | 69 (56) | |
| ADHD excluded | 11 (9) | 25 (20) | RRR = 2.14; 95% CI [1.00 to 4.59]; p = .049 |
| No decision made | 51 (40) | 29 (24) | |
| Diagnostic confidence (ADHD/not ADHD)* | n = 121 | n = 122 | |
| Possible / Uncertain | 29 (24) | 16 (13) | OR = 1.77; 95% CI [1.09 to 2.89]; p = .022 |
| Probable | 34 (28) | 32 (26) | |
| Definitely | 58 (48) | 74 (61) | |
| Time to diagnosis in minutes (observed median survival time [95% CI]) | 165 (150 to 180) | 150 (140 to 155) | Time ratio = 0.85; 95% CI [0.77 to 0.93]; p = .001 |
| Days to diagnosis (observed median survival time [95% CI]) | 108 (91 to 140) | 96 (85 to 99) | Time ratio = 0.90; 95% CI [0.73 to 1.10]; p = .285 |
| Stability (kappa [95% CI]) | 0.90 (0.7 to 1) | 1 (1 to 1) | (χ2(1)=0.01, p=0.32) |
| Diagnostic accuracy* | | | |
| Sensitivity [95% CI] | 96.1 (86.5 to 99.5) | 86.0 (72.1 to 94.7) | ROC comparison χ2(df)=0.22(1), p = .636 |
| Specificity [95% CI] | 36.0 (1.0 to 57.5) | 39.5 (24.9 to 55.6) | |

*Data not available for all randomised participants. DAWBA n = 241. 123 DAWBAs were rated on partial information (missing parent/teacher data). 9 participants did not return DAWBA data. #Exploratory analysis (not pre-specified). RD = risk difference.

There was a reduction of 15% in the total consultation time in minutes required to reach a diagnostic decision for participants in the QbOpen group compared with the QbBlind group (Time Ratio = 0.85; 95% CI = 0.77 to 0.93; p =.001; see Appendix 7). Although fewer days were required to reach a diagnostic decision in the QbOpen group than QbBlind group, the difference was not significant (Time ratio = 0.90; 95% CI = 0.73 to 1.10; p = .28). Stability in diagnosis was high in both groups (QbOpen κ = 1.00 v. QbBlind κ =0.90).

Diagnostic accuracy

Independent consensus research DAWBA diagnoses were available for 241/250 participants. In 123/241 participants, DAWBAs were missing from one informant (i.e. either parent or teacher). The analysis was conducted on the whole sample for which DAWBA information was provided by at least one informant (n = 241). Sensitivity of clinician-confirmed diagnosis with respect to consensus research DAWBA diagnosis was higher in the QbBlind group (96.1%) than in the QbOpen group (86.0%), with similar specificity in the QbOpen group (39.5%) and the QbBlind group (36.0%). Appendix 8 shows that there was no significant difference in diagnostic accuracy (sensitivity/specificity) between the two trial arms (χ2=0.22 (1); p = .64).
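Sensitivity and specificity here compare the clinician's decision with the consensus research diagnosis as the reference standard. A minimal sketch of the calculation is given below; the 2×2 counts are hypothetical, chosen only to make the arithmetic easy to follow, and are not the trial's cell counts (which are not reported in this section).

```python
def sensitivity_specificity(tp: int, fn: int, fp: int, tn: int):
    """Return (sensitivity, specificity) from a 2x2 table against a reference standard.

    tp: reference positive, clinician positive
    fn: reference positive, clinician negative
    fp: reference negative, clinician positive
    tn: reference negative, clinician negative
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


# Hypothetical counts for illustration only (NOT trial data).
sens, spec = sensitivity_specificity(tp=40, fn=10, fp=30, tn=20)
print(f"sensitivity = {sens:.1%}, specificity = {spec:.1%}")
```

A pattern of high sensitivity with low specificity, as in both trial arms, means that clinicians rarely missed a research-diagnosed case but often confirmed ADHD where the research diagnosis did not.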

Economic evaluation

Full cost data were obtained for each appointment with each child. Table 3 details the mean number of clinic appointments, their cost and time to diagnosis by intervention group. The observed incremental difference in days until diagnosis was -1.35 for the QbOpen group compared with the QbBlind group. The incremental cost was -£2.33 (95% CI = -2.67 to -2.00). An incremental cost effectiveness ratio for time to diagnosis was calculated. This yielded an incremental cost effectiveness ratio (ICER) of £1.72. Further details showing the cost effectiveness plane which provides an indication of the variability of the findings can be obtained in online Appendix 9.
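The ratio above is the incremental cost divided by the incremental effect. The sketch below recomputes it from the rounded figures quoted in the text; with those inputs the result is approximately 1.73, close to the reported £1.72, which was presumably derived from unrounded bootstrap estimates (an assumption on our part). The function names are illustrative.

```python
def icer(delta_cost: float, delta_effect: float) -> float:
    """Incremental cost-effectiveness ratio: incremental cost per unit of incremental effect."""
    if delta_effect == 0:
        raise ValueError("ICER is undefined when the incremental effect is zero")
    return delta_cost / delta_effect


def is_dominant(delta_cost: float, delta_days: float) -> bool:
    """True when the intervention both costs less and takes fewer days (fewer days is better)."""
    return delta_cost < 0 and delta_days < 0


delta_cost = -2.33   # incremental cost in GBP, QbOpen minus QbBlind (from the text)
delta_days = -1.35   # incremental days until diagnosis (from the text)

ratio = icer(delta_cost, delta_days)
print(f"ICER = {ratio:.2f} GBP per day; dominant: {is_dominant(delta_cost, delta_days)}")
```

Because both increments are negative, the positive ratio on its own hides the direction of the result, so the sign of each increment should be read alongside it.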

Table 3.

Mean number of Clinic appointments until diagnosis, time and cost.

| | QbOpen (n = 123) Mean (SD) | QbBlind (n = 127) Mean (SD) |
|---|---|---|
| Number of clinic appointments until diagnosis | 2.69 (0.85) | 2.72 (0.91) |
| Number of minutes spent at clinic appointments | 141.97 (53.84) | 152.83 (75.88) |
| Days until diagnosis | 82.54 (49.53) | 83.94 (58.14) |
| Cost of clinic appointments | £87.62 (£40.45) | £90.06 (£41.19) |

Calculation of an ICER for the QALY results would have resulted in a negative value, an unhelpful statistic in decision making; the net monetary benefit (NMB) was therefore calculated instead. The incremental NMB was calculated by multiplying the incremental QALY (0.006568) by the willingness-to-pay (WTP) threshold value, and subtracting the value of the incremental costs (-2.44518). A positive net benefit demonstrates cost effectiveness, and a negative net benefit demonstrates that the intervention is not cost effective. A WTP of £20,000 was chosen as recommended within NICE guidance. The NMB at £20,000 is £133.81. Using a range of WTPs from £5,000 to £35,000 generates positive NMBs throughout, demonstrating both cost savings and the cost effectiveness of QbOpen compared to QbBlind (Appendix 10 & 11).
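The NMB arithmetic can be checked with a short sketch. The incremental QALY and incremental cost are the values quoted above; the function name and the £5,000 step used to span the WTP range are illustrative choices.

```python
def incremental_nmb(delta_qaly: float, wtp: float, delta_cost: float) -> float:
    """Incremental net monetary benefit: (incremental QALY x WTP) minus incremental cost."""
    return delta_qaly * wtp - delta_cost


DELTA_QALY = 0.006568   # incremental QALY, QbOpen versus QbBlind (from the text)
DELTA_COST = -2.44518   # incremental cost in GBP (from the text)

nmb_20k = incremental_nmb(DELTA_QALY, 20_000, DELTA_COST)
print(f"NMB at WTP 20,000 GBP: {nmb_20k:.2f}")

# NMB across the WTP range discussed in the text, in illustrative 5,000 GBP steps.
nmb_by_wtp = {wtp: incremental_nmb(DELTA_QALY, wtp, DELTA_COST)
              for wtp in range(5_000, 35_001, 5_000)}
for wtp, nmb in nmb_by_wtp.items():
    print(f"WTP {wtp}: NMB {nmb:.2f}")
```

Because the incremental cost is negative, subtracting it adds to the benefit, so the NMB stays positive at every non-negative WTP value.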

In both analysis scenarios presented, QbOpen represents a position of strict dominance. That is to say, within this trial, where QbTest was administered in both arms, early clinician access to the QbTest report slightly reduces costs and improves health economic outcomes in both cases. In scenario one, this is in terms of time to reach a diagnosis analysed using bootstrapping, and in scenario two in terms of the QALYs generated through multiple imputation.

Discussion

In children and young people referred to child psychiatry and paediatric services for an ADHD diagnostic assessment, the provision of a computerised test of attention and activity (QbTest) report to clinicians, when added to routine assessment, resulted in significantly quicker diagnostic decision making, but did not affect diagnostic accuracy (sensitivity/specificity). Within six months of the first assessment appointment, clinicians with access to a QbTest report were 1.44 times more likely to reach a diagnostic decision about ADHD and the consultation time to diagnosis was reduced by 15%. It is notable that six months after their first ADHD assessment appointment, 30% of children and young people had still not received a diagnostic decision. However, there were significantly fewer participants still waiting for a diagnostic decision when clinicians had access to a QbTest report (24%) than when such a report was not available (40%). Those clinicians with access to a QbTest report were also more confident in their diagnostic decisions and were twice as likely to exclude a diagnosis of ADHD. This suggests that QbTest may assist clinicians in both reducing diagnostic delays and in excluding ADHD when standard assessment information is either missing or contradictory. Although there was a reduction in the number of days needed to make a diagnostic decision for clinicians with access to the QbTest report, this difference was not statistically significant overall due to the large variability in appointment scheduling between sites.

Health economic analysis revealed that when the QbTest report was available to clinicians, compared to when the report was withheld, there were small cost savings for the health service and improved outcomes. However, caution should be exercised to avoid over-claiming cost savings, as the differences are small and, overall, the impact of providing the QbTest report within this trial can best be viewed as ‘cost neutral’. As QbTest was administered to participants in both arms of this trial, the test cost was cancelled out and was not specifically added to the calculation for the economic evaluation. Hence, the overall reduction in cost does not include purchasing and administering the test. The current UK cost for QbTest is between £20 and £22 (QbTech 2017) depending on the volume of patients seen. Thus, health services implementing QbTest will need to balance the cost of the test against the benefit of faster diagnostic decision making. A limitation is that the health economic analysis was based on a six-month time horizon, and discounting was not applied to costs or outcomes. As such, it was not possible to determine the longer-term costs associated with cases still awaiting a diagnostic determination, which was more common (QbBlind 40% vs. QbOpen 24%) when clinicians did not have access to the QbTest report.

This research adds to the limited RCT evidence investigating objective computerised assessment technology in children and young people with ADHD (Epstein et al., 2016). The pragmatic design of the trial, including broad inclusion criteria, increases its ecological validity and generalisability to routine care in similar clinical settings. The choice of assessment as usual as a comparator allows an estimate of the added value of providing QbTest reports to clinicians over and above standard care. The strength of the costing approach was that complete and individualised cost information was obtained on each child. The economic analysis is constructed from a detailed resource profile and as such is transparent and can be used by decision makers in other health care settings. This trial design is in line with the United States FDA-approved use of QbTest as a technology which augments, but does not replace, clinical assessment of ADHD.

Our finding in the UK that just under one third of participants had not received an ADHD diagnostic determination within six months is supported by a recent European CAPPA survey of ADHD diagnostic practice (Fridman et al., 2017). This study found that, among ten EU countries, the UK had the longest mean duration from first doctor visit to a formal diagnosis, at 18.3 months, compared to the shortest mean duration of 3.0 months for Italy and 10.8 months for the EU countries overall. As such, there are some limitations in generalising these findings beyond the UK to countries, including those in North America, where ADHD diagnostic decision making is typically significantly faster than in the UK. In countries where time to diagnosis is significantly shorter than in the UK, independent replication of the AQUA trial is recommended. However, in the UK (and other countries) where time to diagnosis is long, the impact of QbTest on reducing time to diagnosis and increasing patient throughput is likely to be felt most and there is the strongest case for adoption of QbTest into ADHD care pathways.

Limitations of the study include that follow-up was limited to a six-month time horizon. Given that, overall, almost one third of participants had still not received a diagnostic decision after six months, it was not possible to determine the impact of QbTest on the eventual diagnosis of those participants still awaiting a diagnostic decision at the end of the study. The recording of diagnostic decisions was made by clinicians who could not be blinded to group allocation. Hence, we used independent blinded research diagnostic assessments to compare diagnostic accuracy between the two trial arms. We are also reassured from the results of interviewing clinicians in the trial (Hall et al., 2017) that there was no suggestion that lack of blinding had any impact on diagnostic decision-making, which we found to be faster than in a European CAPPA study examining diagnostic practice in the UK (Fridman et al., 2017). Another potential limitation with respect to external validity is that participants in the comparison group underwent the QbTest procedure, although the QbTest report was withheld from clinicians. It could be argued that this was not strictly ‘assessment as usual’ as clinicians’ observation of the child’s behaviour during the QbTest procedure (clinicians sat in the room) could possibly assist diagnostic determination. In this case, the effect of observing QbTest in the control group might be to reduce, rather than increase, differences in diagnostic decision-making between the groups. In addition, our protocol and trial design were not adequately powered to assess the potential interaction effect of age (stratified by those using the younger 7-12 version of QbTest and ‘older’ 12+ version) on the primary outcome. We recommend that the interaction with age and QbTest type on diagnostic decision making should be addressed by future adequately powered studies.

A limitation to the secondary outcome measure of clinician diagnostic confidence is that the Likert scale used was specifically developed for the study and does not have established validity or reliability. It is also possible that clinicians’ confidence in decision making using QbTest was influenced by their prior experience of using the test, which varied between sites. However, we found no evidence in post hoc analyses that prior experience with QbTest affected the primary outcome. Furthermore, in order to minimise the potential effect of between-site variations in practice, we stratified the randomisation of participants by study site. Finally, the assessment of the impact of QbTest on diagnostic accuracy is limited by the lack of a true ‘gold standard’ diagnostic measure. Previous studies have investigated the diagnostic validity of the QbTest, with Area Under Curve results varying from .70-.80 (Hult et al., 2015). However, given that QbTest is not a ‘stand-alone’ diagnostic tool, and was not used as such in the trial, we compared accuracy between the study arms (clinicians’ diagnosis with or without QbTest) versus the independent DAWBA research diagnosis. The advantage of the DAWBA research consensus diagnosis is that it was made blind to group allocation. However, a limitation is that DAWBA diagnoses were made without access to participants’ clinical records and therefore should not be considered equivalent to a clinical ‘gold standard’ diagnosis. Importantly, DAWBA information was missing from one informant in more than half (123/241) of participants. As such, the low specificity of clinicians’ diagnosis with respect to more stringent research diagnoses is not unexpected, with specificity being similar between the two arms.
Although there was no statistically significant difference in sensitivity between the two arms, the slightly lower sensitivity in the QbOpen arm suggests that clinicians may be applying slightly more stringent criteria when using QbTest, which has to be balanced against a more rapid diagnosis and increased exclusion of non-ADHD cases. Results in Table 2 show that, despite a (non-significant) fall in sensitivity, slightly more children received an ADHD diagnosis in the QbOpen arm (56%, n=69) than in the QbBlind arm (51%, n=66) by six months. Importantly, there was no overall reduction in specificity with QbTest, indicating that the increase in ADHD diagnoses in the QbOpen arm was due to more rapid diagnosis rather than an increase in false positive diagnoses. Therefore, there does not appear to be any evidence to suggest that clinicians with QbTest results (QbOpen) were missing more cases of ADHD; on the contrary, they appear to diagnose slightly more cases of ADHD than in the QbBlind arm. However, the sensitivity and specificity estimates need to be interpreted with caution, as around a third of all participants had no clinician diagnostic determination by six months and were therefore excluded from the 2x2 tables estimating sensitivity and specificity. Additionally, for more than half of participants, DAWBA diagnoses were made with information missing from one informant (parent/teacher).
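For readers less familiar with how sensitivity and specificity are derived from a 2x2 table, the following minimal Python sketch shows the arithmetic, treating the research diagnosis as the reference standard and the clinician's diagnosis as the index decision. The function name and the counts are hypothetical and are not taken from Table 2; participants without a clinician decision would not appear in such a table at all, which is why the estimates above need cautious interpretation.

```python
from typing import Tuple


def sensitivity_specificity(tp: int, fp: int, fn: int, tn: int) -> Tuple[float, float]:
    """Return (sensitivity, specificity) from 2x2 table counts.

    tp: clinician ADHD, reference ADHD
    fp: clinician ADHD, reference not ADHD
    fn: clinician not ADHD, reference ADHD
    tn: clinician not ADHD, reference not ADHD
    """
    sensitivity = tp / (tp + fn)  # proportion of reference-positive cases detected
    specificity = tn / (tn + fp)  # proportion of reference-negative cases excluded
    return sensitivity, specificity


# Hypothetical counts for illustration only; not the trial's data.
sens, spec = sensitivity_specificity(tp=40, fp=25, fn=10, tn=25)
print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```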

In summary, our results suggest that adoption of objective computerised assessment technology (QbTest), as an adjunct to clinical diagnostic decision making in the assessment of ADHD, appears to increase the speed and efficiency of clinical decision making without appearing to compromise diagnostic accuracy. Overall, our results suggest that the greatest impact of QbTest on diagnostic decision-making may be in cases where diagnosis would typically be deferred, possibly due to missing or inconsistent information. QbTest appears to give clinicians added confidence in their diagnosis (Hall et al., 2017), particularly in ruling out ADHD when it is not present. In line with the FDA, our results do not support use of QbTest as a ‘stand-alone’ diagnostic test for ADHD as we did not find QbTest increased diagnostic accuracy over standard clinical assessment. The health economic analysis suggests that QbTest could increase patient throughput and reduce waiting times without significant increases in overall healthcare system costs. Furthermore, as qualitative data from parents and clinicians (Hall et al., 2017) are supportive of the acceptability, feasibility and added value of including an objective measure to ADHD assessment, the findings of this trial suggest that QbTest could now be routinely adopted in the UK to help streamline and improve ADHD care pathways, with replication of the AQUA trial recommended in other healthcare systems where time to ADHD diagnosis is much shorter than in the UK.

Supplementary Material

Key Points.

The prevalence of ADHD diagnosis in healthcare systems in children and young people has increased but diagnostic practice remains variable, with significant diagnostic delays and reliance on subjective assessment measures.

This pragmatically-designed RCT is the first to show that adding QbTest to standard care can reduce the time needed to make a diagnostic decision on ADHD, increase the likelihood of excluding ADHD and improve clinicians’ confidence in their decision-making, without compromising diagnostic accuracy.

Adding QbTest to standard practice could result in efficiencies for health care services by improving diagnostic efficiency, without adding significant additional cost to the health service budget.

This trial supports adoption of QbTest in the ADHD assessment pathway in the UK. However, replication of the AQUA trial is recommended in other healthcare systems where time to diagnostic decision making is typically much faster than in the UK.

Diagnostic randomised controlled trials are feasible to conduct in real-world clinical services and can make an important contribution to understanding the impact on decision-making, clinical utility and cost-effectiveness of objective assessment technologies.

Acknowledgements

The research reported in this paper was funded by the National Institute for Health Research (NIHR) Collaboration for Leadership in Applied Health Research and Care East Midlands (CLAHRC-EM). The research was supported by the NIHR MindTech Healthcare Technology Co-operative. The views represented are the views of the authors alone and do not necessarily represent the views of the Department of Health in England, NHS, or the National Institute for Health Research. Neither the funding sources nor Qbtech Ltd had any role in the design, collection, analysis and interpretation of data, or in the writing of the manuscript. The corresponding author (CH) had full access to all the data in the study and takes responsibility for integrity and accuracy of the data.

We thank the healthcare professionals and site principal investigators for their time and commitment to making the trial possible; The AQUA Trial Group members: Sarah Curran, Julie Clarke, Samina Holsgrove, Teresa Jennings, Neeta Kulkarni, Maria Moldavsky, Dilip Nathan, Anne-Marie Skarstam, Kim Selby, Hena Vijayan and Adrian Williams. We also thank staff at trial sites for their valuable contribution; Susan Good, Ann-Maria Regan, Jo Dodd, Aaron Hobson, Charmaine Khon, Gail Melvin, Jo McGarr, Hanah Sfar-Gandoura, Cheryl Gillot, Carol Wright, Rahab Omer, Oluyinka Akinsoji, Femi Balogun, Louise Cooper, Rachel Elvins, Mary Kelsall, Kath Norhgate, Suhail Rafiq, Hillary Lloyd, Mary Waterworth, Eleanor Stracey, Nicolette Kaye. We thank Qbtech Ltd for making QbTest available to study sites and providing training and support for clinicians. We also thank Julie Moss and Angela Summerfield for administration support, Professor John Norrie for statistical consultation. Finally, we thank our participants for taking part in this trial.

Footnotes

Conflict of interests: CH and KS are members of the NICE guideline committee for ADHD. DD reports grants, personal fees and non-financial support from Shire, personal fees and non-financial support from Eli Lilly, personal fees and non-financial support from Meddice, outside the submitted work. All other authors report no conflicts of interests.

Ethical considerations

Written informed consent was obtained after the procedures had been fully explained; for children under 16-years-old, written consent was obtained from the parent/legal guardian and verbal or written assent was obtained from the child/young person.

References

- Bolea-Alamañac B, Nutt DJ, Adamou M, Asherson P, Bazire S, Coghill D, Heal D, Muller U, Nash J, Santosh P, Sayal K, et al. Evidence-based guidelines for the pharmacological management of attention deficit hyperactivity disorder: Update on recommendations from the British Association for Psychopharmacology. Journal of Psychopharmacology. 2014;28(3):179–203. doi: 10.1177/0269881113519509.

- Browne WJ, Rasbash J. MCMC estimation in MLwiN. Citeseer; 2009.

- Conners C. The Conners Rating Scales: instruments for the assessment of childhood psychopathology. Duke University; Durham: 1995.

- Curtis L, Burns A. Unit Costs of Health and Social Care. Personal Social Services Research Unit, University of Kent; 2016.

- Dupuy T, Greenberg L. TOVA manual for test of variables of attention computer program. University of Minnesota; Minneapolis: 1993.

- Epstein JN, Erkanli A, Conners CK, Klaric J, Costello JE, Angold A. Relations between continuous performance test performance measures and ADHD behaviors. Journal of abnormal child psychology. 2003;31(5):543–554. doi: 10.1023/a:1025405216339.

- Epstein JN, Kelleher KJ, Baum R, Brinkman WB, Peugh J, Gardner W, Lichtenstein P, Langberg JM. Impact of a web-portal intervention on community ADHD care and outcomes. Pediatrics. 2016:e20154240. doi: 10.1542/peds.2015-4240.

- European Medicines Agency. Statistical principles for clinical trials (E9): ICH tripartite guideline. European Medicines Agency; 1998.

- Faraone SV. Attention deficit hyperactivity disorder and premature death. The Lancet. 2015;385(9983):2132–2133. doi: 10.1016/S0140-6736(14)61822-5.

- Fridman M, Banaschewski T, Sikirica V, Quintero J, Chen KS. Access to diagnosis, treatment, and supportive services among pharmacotherapy-treated children/adolescents with ADHD in Europe: data from the Caregiver Perspective on Pediatric ADHD survey. Neuropsychiatric disease and treatment. 2017;13:947–958. doi: 10.2147/NDT.S128752.

- Gilbert H, Qin L, Li D, Zhang X, Johnstone SJ. Aiding the diagnosis of AD/HD in childhood: using actigraphy and a continuous performance test to objectively quantify symptoms. Research in developmental disabilities. 2016;59:35–42. doi: 10.1016/j.ridd.2016.07.013.

- Goldstein H. Multilevel statistical models. Vol. 922. John Wiley & Sons; 2011.

- Goodman R, Ford T, Richards H, Gatward R, Meltzer H. The Development and Well-Being Assessment: description and initial validation of an integrated assessment of child and adolescent psychopathology. Journal of Child Psychology and Psychiatry. 2000;41(05):645–655.

- Grodzinsky GM, Barkley RA. Predictive power of frontal lobe tests in the diagnosis of attention deficit hyperactivity disorder. The Clinical Neuropsychologist. 1999;13(1):12–21. doi: 10.1076/clin.13.1.12.1983.

- Groom MJ, Young Z, Hall CL, Gillott A, Hollis C. The incremental validity of a computerised assessment added to clinical rating scales to differentiate adult ADHD from autism spectrum disorder. Psychiatry Research. 2016;243:168–173. doi: 10.1016/j.psychres.2016.06.042.

- Hall CL, Selby K, Guo B, Valentine AZ, Walker GM, Hollis C. Innovations in Practice: an objective measure of attention, impulsivity and activity reduces time to confirm attention deficit/hyperactivity disorder diagnosis in children–a completed audit cycle. Child and Adolescent Mental Health. 2016a;21(3):175–178. doi: 10.1111/camh.12140.

- Hall CL, Valentine AZ, Groom MJ, Walker GM, Sayal K, Daley D, Hollis C. The clinical utility of the continuous performance test and objective measures of activity for diagnosing and monitoring ADHD in children: a systematic review. European Child & Adolescent Psychiatry. 2016b;25:677–699. doi: 10.1007/s00787-015-0798-x.

- Hall CL, Valentine AZ, Walker GM, Ball HM, Cogger H, Daley D, Groom MJ, Sayal K, Hollis C. Study of user experience of an objective test (QbTest) to aid ADHD assessment and medication management: a multi-methods approach. BMC Psychiatry. 2017;17(1):66. doi: 10.1186/s12888-017-1222-5.

- Hall CL, Walker GM, Valentine AZ, Guo B, Kaylor-Hughes C, James M, Daley D, Sayal K, Hollis C. Protocol investigating the clinical utility of an objective measure of activity and attention (QbTest) on diagnostic and treatment decision-making in children and young people with ADHD—‘Assessing QbTest Utility in ADHD’ (AQUA): a randomised controlled trial. BMJ Open. 2014;4(12):e006838. doi: 10.1136/bmjopen-2014-006838.

- Hall CL, Walker GM, Valentine AZ, Guo B, Kaylor-Hughes C, James M, Daley D, Sayal K, Hollis C. Protocol investigating the clinical utility of an objective measure of activity and attention (QbTest) on diagnostic and treatment decision-making in children and young people with ADHD—‘Assessing QbTest Utility in ADHD’ (AQUA): a randomised controlled trial. BMJ Open. 2016;4(12):e006838. doi: 10.1136/bmjopen-2014-006838. erratum.

- Hult N, Kadesjö J, Kadesjö B, Gillberg C, Billstedt E. ADHD and the QbTest: diagnostic validity of QbTest. Journal of Attention Disorders. 2015. doi: 10.1177/1087054715595697.

- Kahan BC. Accounting for centre-effects in multicentre trials with a binary outcome–when, why, and how? BMC Medical Research Methodology. 2014;14(1):1. doi: 10.1186/1471-2288-14-20.

- Kahan BC, Morris TP. Analysis of multicentre trials with continuous outcomes: when and how should we account for centre effects? Statistics in Medicine. 2013;32(7):1136–1149. doi: 10.1002/sim.5667.

- Kovshoff H, Williams S, Vrijens M, Danckaerts M, Thompson M, Yardley L, Hodgkins P, Sonuga-Barke EJ. The decisions regarding ADHD management (DRAMa) study: uncertainties and complexities in assessment, diagnosis and treatment, from the clinician’s point of view. European Child & Adolescent Psychiatry. 2012;21(2):87–99. doi: 10.1007/s00787-011-0235-8.

- Leckie G, Charlton C. Runmlwin-a program to Run the MLwiN multilevel modelling software from within stata. Journal of Statistical Software. 2013;52(11):1–40.

- Milioni ALV, Chaim TM, Cavallet M, de Oliveira NM, Annes M, dos Santos B, Louzã M, da Silva MA, Miguel CS, Serpa MH, Zanetti MV. High IQ may “mask” the diagnosis of ADHD by compensating for deficits in executive functions in treatment-naïve adults with ADHD. Journal of attention disorders. 2017;21(6):455–464. doi: 10.1177/1087054714554933.

- Moher D, Hopewell S, Schulz KF, Montori V, Gøtzsche PC, Devereaux P, Elbourne D, Egger M, Altman DG. CONSORT 2010 explanation and elaboration: updated guidelines for reporting parallel group randomised trials. Journal of Clinical Epidemiology. 2010;63(8):e1–e37. doi: 10.1016/j.jclinepi.2010.03.004.

- Munkvold LH, Manger T, Lundervold AJ. Conners’ continuous performance test (CCPT-II) in children with ADHD, ODD, or a combined ADHD/ODD diagnosis. Child Neuropsychology. 2014;20(1):106–126. doi: 10.1080/09297049.2012.753997.

- Ngandu T, Lehtisalo J, Solomon A, Levälahti E, Ahtiluoto S, Antikainen R, Backman L, Hanninen T, Jula A, Laatikainen T, Lindstrom J, et al. A 2 year multidomain intervention of diet, exercise, cognitive training, and vascular risk monitoring versus control to prevent cognitive decline in at-risk elderly people (FINGER): a randomised controlled trial. The Lancet. 2015;385(9984):2255–2263. doi: 10.1016/S0140-6736(15)60461-5.

- NICE. Attention deficit hyperactivity disorder: diagnosis and management of ADHD in children, young people and adults. Clinical Guideline 72. London: National Institute for Health and Clinical Excellence; 2008.

- NICE. Methods for the development of NICE public health guidance. 3rd Edition. London: 2012.

- Ogundele MO, Ayyash HF, Banerjee S. Role of computerised continuous performance task tests in ADHD. Progress in Neurology and Psychiatry. 2011;15(3):8–13.

- Park MH, Kweon YS, Lee SJ, Park EJ, Lee C, Lee CU. Differences in performance of ADHD children on a visual and auditory continuous performance test according to IQ. Psychiatry investigation. 2011;8(3):227–233. doi: 10.4306/pi.2011.8.3.227.

- Riccio CA, Reynolds CR. Continuous performance tests are sensitive to ADHD in adults but lack specificity. Annals of the New York Academy of Sciences. 2001;931(1):113–139. doi: 10.1111/j.1749-6632.2001.tb05776.x.

- Schatz AM, Ballantyne AO, Trauner DA. Sensitivity and specificity of a computerized test of attention in the diagnosis of attention-deficit/hyperactivity disorder. Assessment. 2001;8(4):357–365. doi: 10.1177/107319110100800401.

- Solanto MV, Etefia K, Marks DJ. The utility of self-report measures and the continuous performance test in the diagnosis of ADHD in adults. CNS spectrums. 2004;9(9):649–659. doi: 10.1017/s1092852900001929.

- Shaffer D, Gould MS, Brasic J, Ambrosini P, Fisher P, Bird H, Aluwahlia S. A children's global assessment scale (CGAS). Archives of General Psychiatry. 1983;40(11):1228–1231. doi: 10.1001/archpsyc.1983.01790100074010.

- Shaw M, Hodgkins P, Caci H, Young S, Kahle J, Woods AG, Arnold LE. A systematic review and analysis of long-term outcomes in attention deficit hyperactivity disorder: effects of treatment and non-treatment. BMC Medicine. 2012;10(1):99. doi: 10.1186/1741-7015-10-99.

- Söderström S, Pettersson R, Nilsson KW. Quantitative and subjective behavioural aspects in the assessment of attention-deficit hyperactivity disorder (ADHD) in adults. Nordic journal of psychiatry. 2014;68(1):30–37. doi: 10.3109/08039488.2012.762940.

- StataCorp. Stata Statistical Software: Release 14. College Station, TX: StataCorp LP; 2015.

- Swanson JM, Kraemer HC, Hinshaw SP, Arnold LE, Conners CK, Abikoff HB, Clevenger W, Davies M, Elliott GR, Greenhill LL, Hechtman L, et al. Clinical relevance of the primary findings of the MTA: success rates based on severity of ADHD and ODD symptoms at the end of treatment. Journal of the American Academy of Child & Adolescent Psychiatry. 2001;40(2):168–179. doi: 10.1097/00004583-200102000-00011.

- Teicher MH, Ito Y, Glod CA, Barber NI. Objective measurement of hyperactivity and attentional problems in ADHD. Journal of American Academy of Child and Adolescent Psychiatry. 1996;35(3):334–342. doi: 10.1097/00004583-199603000-00015.

- Vogt C, Shameli A. Assessments for attention-deficit hyperactivity disorder: Use of objective measurements. The Psychiatrist. 2011;35(10):380–383.

- Wagenlehner FM, Umeh O, Steenbergen J, Yuan G, Darouiche RO. Ceftolozane-tazobactam compared with levofloxacin in the treatment of complicated urinary-tract infections, including pyelonephritis: a randomised, double-blind, phase 3 trial (ASPECT-cUTI). The Lancet. 2015;385(9981):1949–1956. doi: 10.1016/S0140-6736(14)62220-0.

- Wehmeier PM, Schacht A, Wolff C, Otto WR, Dittmann RW, Banaschewski T. Neuropsychological outcomes across the day in children with attention-deficit/hyperactivity disorder treated with atomoxetine: results from a placebo-controlled study using a computer-based continuous performance test combined with an infra-red motion-tracking device. Journal of Child & Adolescent Psychopharmacology. 2011;21(5):433–444. doi: 10.1089/cap.2010.0142.

- Wille N, Badia X, Bonsel G, Burström K, Cavrini G, Devlin N, Egmar AC, Greiner W, Gusi N, Herdman M, Jelsma J, et al. Development of the EQ-5D-Y: a child-friendly version of the EQ-5D. Quality of Life Research. 2010;19(6):875–886. doi: 10.1007/s11136-010-9648-y.

- Zelnik N, Bennett-Back O, Miari W, Goez HR, Fattal-Valevski A. Is the test of variables of attention reliable for the diagnosis of attention-deficit hyperactivity disorder (ADHD)? Journal of Child Neurology. 2012;27(6):703–707. doi: 10.1177/0883073811423821.
