Abstract

A central challenge in sensory neuroscience involves understanding how neural circuits shape computations across cascaded cell layers. Here we attempt to reconstruct the response properties of experimentally unobserved neurons in the interior of a multilayered neural circuit, using cascaded linear-nonlinear (LN-LN) models. We combine non-smooth regularization with proximal consensus algorithms to overcome difficulties in fitting such models that arise from the high dimensionality of their parameter space. We apply this framework to retinal ganglion cell processing, learning LN-LN models of retinal circuitry consisting of thousands of parameters, using 40 minutes of responses to white noise. Our models demonstrate a 53% improvement in predicting ganglion cell spikes over classical linear-nonlinear (LN) models. Internal nonlinear subunits of the model match properties of retinal bipolar cells in both receptive field structure and number. Subunits have consistently high thresholds, supressing all but a small fraction of inputs, leading to sparse activity patterns in which only one subunit drives ganglion cell spiking at any time. From the model’s parameters, we predict that the removal of visual redundancies through stimulus decorrelation across space, a central tenet of efficient coding theory, originates primarily from bipolar cell synapses. Furthermore, the composite nonlinear computation performed by retinal circuitry corresponds to a boolean OR function applied to bipolar cell feature detectors. Our methods are statistically and computationally efficient, enabling us to rapidly learn hierarchical non-linear models as well as efficiently compute widely used descriptive statistics such as the spike triggered average (STA) and covariance (STC) for high dimensional stimuli. This general computational framework may aid in extracting principles of nonlinear hierarchical sensory processing across diverse modalities from limited data.

Author summary

Computation in neural circuits arises from the cascaded processing of inputs through multiple cell layers. Each of these cell layers performs operations such as filtering and thresholding in order to shape a circuit’s output. It remains a challenge to describe both the computations and the mechanisms that mediate them given limited data recorded from a neural circuit. A standard approach to describing circuit computation involves building quantitative encoding models that predict the circuit response given its input, but these often fail to map in an interpretable way onto mechanisms within the circuit. In this work, we build two layer linear-nonlinear cascade models (LN-LN) in order to describe how the retinal output is shaped by nonlinear mechanisms in the inner retina. We find that these LN-LN models, fit to ganglion cell recordings alone, identify filters and nonlinearities that are readily mapped onto individual circuit components inside the retina, namely bipolar cells and the bipolar-to-ganglion cell synaptic threshold. This work demonstrates how combining simple prior knowledge of circuit properties with partial experimental recordings of a neural circuit’s output can yield interpretable models of the entire circuit computation, including parts of the circuit that are hidden or not directly observed in neural recordings.

Introduction

Motivation

Computational models of neural responses to sensory stimuli have played a central role in addressing fundamental questions about the nervous system, including how sensory stimuli are encoded and represented, the mechanisms that generate such a neural code, and the theoretical principles governing both the sensory code and underlying mechanisms. These models often begin with a statistical description of the stimuli that precede a neural response such as the spike-triggered average (STA) [1, 2] or covariance (STC) [3–8]. These statistical measures characterize to some extent the set of effective stimuli that drive a response, but do not necessarily reveal how these statistical properties relate to cellular mechanisms or neural pathways. Going beyond descriptive statistics, an explicit representation of the neural code can be obtained by building a model to predict neural responses to sensory stimuli.

A classic approach involves a single stage of spatiotemporal filtering and a time-independent or static nonlinearity; these models include linear-nonlinear (LN) models with single or multiple pathways [1, 9–11] or generalized linear models (GLMs) with spike history feedback [12, 13]. However, these models do not directly map onto circuit anatomy and function. As a result, the interpretation of such phenomenological models, as well as how they precisely relate to underlying cellular mechanisms, remains unclear. Ideally, one would like to generate more biologically interpretable models of sensory circuits, in which sub-components of the model map in a one-to-one fashion onto cellular components of neurobiological circuits [14]. For example, model components such as spatiotemporal filtering, thresholding, and summation are readily mapped onto photoreceptor or membrane voltage dynamics, synaptic and spiking thresholds, and dendritic pooling, respectively.

A critical aspect of sensory circuits is that they operate in a hierarchical fashion in which sensory signals propagate through multiple nonlinear cell layers [15–17]. Fitting models that capture this widespread structure using neural data recorded from one layer of a circuit in response to controlled stimuli raises significant statistical and computational challenges [18–22]. A key issue is the high dimensionality of both stimulus and parameter space, as well as the existence of hidden, unobserved neurons in intermediate cell layers. The high dimensionality of parameter space can necessitate prohibitively large amounts of data and computational time required to accurately fit the model. One approach to address these difficulties is to incorporate prior knowledge about the structure and components of circuits to constrain the model [11, 21, 23–25]. Although prior knowledge of the exact network architecture and sequence of nonlinear transformations would greatly constrain the number of possible circuit solutions, such prior knowledge is typically minimal for most neural circuits.

In this work, we learn hierarchical nonlinear models from recordings of ganglion cells in the salamander retina, with the goal of building more interpretable models. In particular, we focus on models with two stages of linear-nonlinear processing (LN-LN models), analogous to the specific cell layers the retina. LN-LN models have been previously proposed to describe cascaded nonlinear computation in sensory pathways such as in V1 [11, 21] and retina [18, 22, 23, 26, 27] (we elaborate on differences across these studies and our work below). In the retina, it has been proposed that there is a nonlinear transformation between bipolar and ganglion cells, however, building models that capture these nonlinearities has been a challenge due to the issues described above. Here, we find that with appropriate regularization, we are able to learn LN-LN models from recordings of ganglion cells alone that are both more accurate and more interpretable than their LN counterparts. In particular, inferred LN-LN model subunits quantitatively match properties of bipolar cells in the retina. Moreover, although the focus of this paper is on LN-LN models, we demonstrate that the algorithms we use to fit them are also useful in directly learning the STA and STC eigenvectors using very little data.

Further analysis of our learned LN-LN models reveals novel insights into retinal function, namely that: transmission between every subunit and ganglion cell pair is well described by a high threshold expansive nonlinearity (suppressing all but a small fraction of inputs), bipolar cell terminals are sparsely active, visual inputs are most decorrelated at the subunit layer, pre-synaptic to ganglion cells, and finally the composite computation performed by the retinal ganglion cell output corresponds to a boolean OR function of bipolar cell feature detectors. Collectively, these results shed light on the nature of hierarchical nonlinear computation in the retina. Our computational framework is general, however, and we hope it will aid in providing insights into hierarchical nonlinear computations across the nervous system.

Background

The retina is a classic system for exploring the relationship between quantitative encoding models and measurements of neurobiological circuit properties [28, 29]. Signals in the retina flow from photoreceptors through populations of horizontal, bipolar, and amacrine cells before reaching the ganglion cell layer.

To characterize this complex multilayered circuitry, many studies utilize descriptive statistics such as the spike-triggered average, interpreted as the average feature encoded by a ganglion cell [1–3]. Responses are often then modeled using a linear-nonlinear (LN) framework (schematized in Fig 1A). A major reason for the widespread adoption of LN models is their high level of tractability; learning their parameters can be accomplished by solving a simple convex optimization problem [2], or alternatively, estimated using straightforward reverse correlation analyses [1]. However, LN models have two major drawbacks: it is difficult to map them onto biophysical mechanisms in retinal circuitry, and they do not accurately describe ganglion cell responses across diverse stimuli. Regarding mechanisms, the spatiotemporal linear filter of the LN model is typically interpreted as mapping onto the aggregate sequential mechanisms of phototransduction, signal filtering and transmission through bipolar and amacrine cell pathways, and summation at the ganglion cell, while the nonlinearity is mapped onto the spiking threshold of ganglion cells. Regarding accuracy, while previous studies have found that these simple models can, for some neurons, capture most of the variance of the responses to low-resolution spatiotemporal white noise [9, 12, 20], they do not describe responses to stimuli with more structure such as natural scenes [13, 30–33].

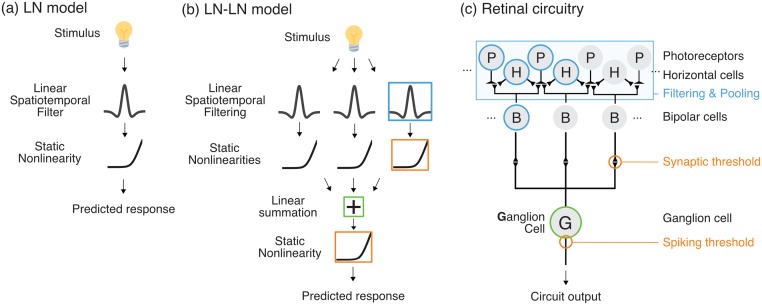

Fig 1. Schematic of the LN-LN model and corresponding retinal circuitry.

(a) The LN-LN cascade contains a bank of linear-nonlinear (LN) subunits, whose outputs are pooled at a second linear stage before being passed through a final nonlinearity. (b,c) The LN-LN model mapped on to a retinal circuit. The first LN stage consists of bipolar cell subunits and the bipolar-to-ganglion cell synaptic threshold. The second LN stage is pooling at the ganglion cell, plus a spiking threshold. The contribution of inhibitory amacrine cells is omitted here.

A likely reason for these drawbacks are the nonlinearities within the retina. There can be strong rectification of signals that occurs pre-synaptic to ganglion cells [15, 34–36], breaking the assumption of composite linearity in the pathway from photoreceptors just up to the ganglion cell spiking threshold [17]. Indeed, nonlinear spatial integration within ganglion cell receptive fields was first described in the cat retina [37] in Y-type ganglion cells. A hypothetical model for this computation was proposed as a cascade of two layers of linear-nonlinear operations (LN-LN) [26, 27]. If one keeps the mean luminance constant, avoiding light adaptation in photoreceptors, the first major nonlinearity is thought to lie at the presynaptic terminal of the bipolar to ganglion cell synapse. Ganglion cells pool over multiple bipolar cell inputs, each of which can be approximated as linear-nonlinear components, termed subunits of the ganglion cell. Due to the roughly linear integration [9] that occurs at bipolar cells, we (computationally) distill mechanisms in photoreceptors and inhibitory horizontal cells into a single spatiotemporal filter with positive and negative elements that gives rise to bipolar cell signals. The second LN layer corresponds to summation or pooling across multiple subunits at the ganglion cell soma, followed by a spiking threshold. The subunit nonlinearities in these models have been shown to underlie many retinal computations including latency encoding [29], object motion sensitivity [38], and sensitivity to fine spatial structure (such as edges) in natural scenes [35]. Fig 1 shows a schematic of the LN-LN cascade and its mapping onto retinal anatomy. Functionally, these models with multiple nonlinear pathways both provide a more accurate description of ganglion cell responses and are more amenable to interpretation.

Early work on characterizing these multiple pathways motivated the use of the significant eigenvectors of the spike-triggered covariance (STC) matrix as the set of features that drives a cell, focusing on low-dimensional full field flicker stimuli [10, 39] to reduce the amount of data required for accurately estimating these eigenvectors. Significant STC eigenvectors will span the same linear subspace as the true biological filters that make up the pathways feeding onto a ganglion cell [3, 40–42]. However, the precise relationship between these eigenvectors (which obey a biologically implausible orthogonality constraint) and the individual spatiotemporal filtering properties intrinsic to multiple parallel pathways in a neural circuit remains unclear.

Instead, we take the approach of directly fitting a hierarchical, nonlinear, neural model, enabling us to jointly learn a set of non-orthogonal, biophysically plausible set of pathway filters, as well as an arbitrary, flexible nonlinearity for each pathway. Much recent and complementary work on fitting such models make simplifying assumptions in order to make model fitting tractable. For example, assuming the subunits are shifted copies of a template results in models with a single convolutional subunit filter [21, 23, 24]. However, this obscures individual variability in the spatiotemporal filters of subunits of the same type across visual space, which has been shown to be functionally important in increasing retinal resolution [43]. Another common assumption is that the subunit nonlinearities have a particular form, such as quadratic [11, 25] or sigmoidal [44]. Fitting multi-layered models with convolutional filters and fixed nonlinearities has also been successfully used to describe retinal responses to natural scenes [32, 33], although this work maximizes predictive accuracy at the expense of a one-to-one mapping of model components onto retinal circuit elements. Finally, other work focuses on particular ganglion cell types with a small number of inputs [22], constrains the input stimulus to a low-dimensional subspace (such as two halves of the receptive field [45]), or constrains the coefficients of receptive fields to be non-negative [46], thus discarding known properties of the inhibitory surround. Our approach is most similar to MacFarland et. al. [18], who formulate LN-LN models for describing nonlinearities in sensory pathways. Their model formulation uses spatiotemporal filters and smooth parameterized nonlinearities with an additional sparsity regularization penalty on the filters (encouraging filters to contain few non-zero elements), fit using gradient descent. They demonstrate that these methods recover the parameters of a simulated model cell with two subunit pathways. Our work differs technically in the types of regularization penalties we apply and our use of high-dimensional spatiotemporal stimuli, and scientifically in our focus on gaining insight into the nonlinear computations underlying spatiotemporal processing in the retina.

In this work, we do not make assumptions about or place restrictions on the number or tiling of subunit filters, the shapes of the subunit nonlinearities, the sign of receptive field elements, or the stimulus dimensionality. We additionally use a low-rank regularization penalty, that encourages approximately spatiotemporally separable filters [47, 48], a property that is common to receptive fields in a wide variety of sensory systems. In order to fit these models, we use methods based on proximal consensus algorithms (described in Methods). These allow us to use this prior knowledge about model parameters to not only fit hierarchical nonlinear models, but also perform spike-triggered analyses using much less data than otherwise required.

Results

Learning hierarchical nonlinear models of the retinal response

Our LN-LN model architecture (schematized in Fig 1) follows previous work [18]. The stimulus is first passed through a set of LN subunits. Each subunit filter is a spatiotemporal stimulus filter, constrained to have unit norm. The subunit nonlinearity is parameterized using a set of basis functions (Gaussian bumps) that tile the input space [12, 20] (see Methods). This parameterization is flexible enough that we could learn, for each individual subunit, any smooth nonlinearity that can be expressed as a linear combination of our basis functions. The second LN layer pools subunits through weighted summation, followed by a spiking nonlinearity that we model using a parameterized soft rectifying function r(x) = g log(1 + ex−θ). Here g is an overall gain, and θ is a threshold. The full set of parameters for the model consists of the spatiotemporal subunit filters, the subunit nonlinearity parameters, and the gain and threshold of the final nonlinearity.

Model fitting and performance

We recorded ganglion cell responses to a 40 minute stimulus consisting of a 1-D spatiotemporal white noise bars stimulus. For both LN and LN-LN models we fit model parameters by optimizing the sum of the log-likelihood of recorded spikes under a Poisson noise model [12] with additional regularization terms. We found that some form of regularization was critical to prevent over-fitting of the LN-LN model to the subset of data used for training. In particular, the regularization penalties we used were ℓ1 and nuclear norm penalties applied to the spatiotemporal subunit filters. The ℓ1 norm encourages filters to be sparse (have few non-zero coefficients) and the nuclear norm penalty encourages the space-time filter to be low rank (comprised of a small number of features separable in space and time). We chose the weights of the ℓ1 and nuclear norm regularization penalties, both for the LN and LN-LN models, through cross-validation on a small subset of cells, and then held these weights constant across all cells. Our subsequent results indicate that we do not have to fine tune these hyperparameters on a cell by cell basis to achieve good predictive performance. Finally, because different cells may have different numbers of functional subunits, for the LN-LN models, we chose the optimal number of subunits on a cell-by-cell basis by maximizing performance on held-out data through cross-validation. No additional structure was imposed on the subunits such as spatial repetition, overlap, or non-negativity. We optimize the log-likelihood and regularization terms using proximal algorithms (see Methods for an overview).

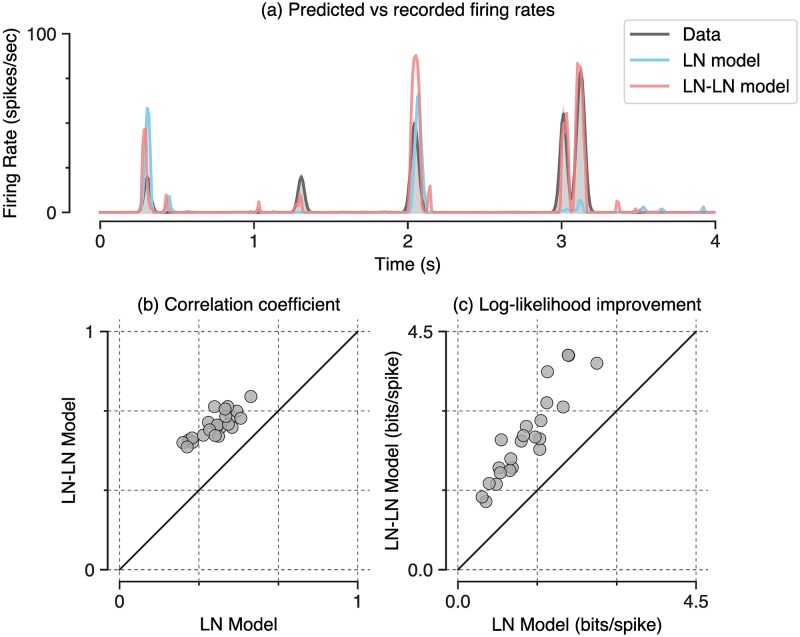

We find that the LN-LN model significantly outperforms the LN model at describing responses of ganglion cells, for all recorded cells. Fig 2A shows firing rate traces for an example cell, comparing the recorded response (gray) with an LN model (blue) and an LN-LN model (red). We quantify the similarity between predicted and recorded firing rate traces using either the Pearson correlation coefficient or the log-likelihood of held-out data under the model. All log-likelihood values are reported as an increase over the log-likelihood of a fixed mean firing rate model, scaled by the firing rate (yielding units of bits/spike). Summarized across n = 23 recorded ganglion cells, we find that the LN-LN model yields a consistent improvement over the LN model using either metric (Fig 2B and 2C). Overall, this demonstrated performance improvement indicates that nonlinear spatial integration is fundamental in driving ganglion cell responses, even to white noise stimuli, and that an LN model is not sufficient to capture the response to spatiotemporal white noise [22, 49]. This salient, intermediate rectification that we identify computationally is consistent with previous measurements of bipolar-to-ganglion cell transmission in the retina [45, 50].

Fig 2. LN-LN models predict held-out response data better than LN models.

(a) Firing rates for an example neuron. The recorded firing rate (shaded, gray), is shown along with the LN model prediction (dashed, green) and the LN-LN prediction (solid, red). (b) LN-LN performance on held out data vs. the LN model, measured using correlation coefficient between the model and held out data. Note that all cells are above the diagonal. (c) Same as in (b), but with the performance metric of log-likelihood improvement over the mean rate in bits per spike.

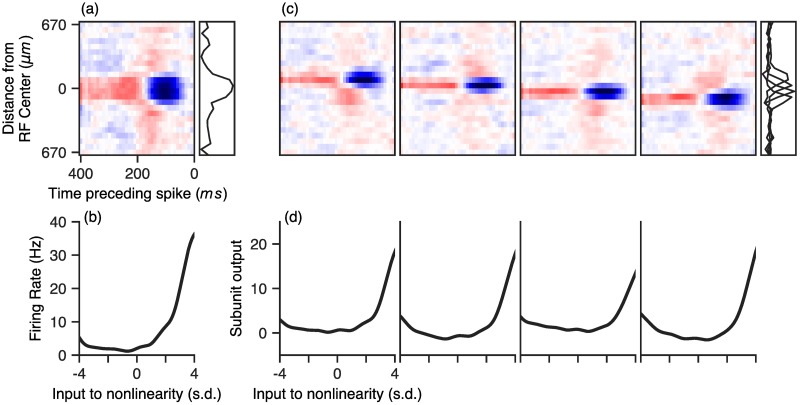

Internal structure of learned models

Given the improved performance of our hierarchical nonlinear subunit models, we examined the internal structure of the model for insights into retinal structure and computation. Fig 3 shows a visualization of the parameters learned for an example cell. Fig 3A and 3B shows the parameters for the classical LN model, for comparison, while Fig 3C and 3D shows the corresponding subunit filters and subunit nonlinearities in the first stage of the LN-LN model, fit to the same cell. The subunit filters had a similar temporal structure, but smaller spatial profiles compared to that of the LN model and the nonlinearities associated with each subunit were roughly monotonic with high thresholds (quantified below). These qualitative properties were consistent across all cells.

Fig 3. Example LN-LN model parameters fit to a recording of an OFF retinal ganglion cell.

(a and b): LN-model parameters, consisting of a single spatial filter (a) and nonlinearity (b). (c and d) LN-LN model parameters. (c) First layer filters (top) and nonlinearities (bottom) of an LN-LN model fit to the same cell. Spatial profiles of filters are shown in gray to the right of the filters. The subunit filters have a much smaller spatial extent compared to the LN filter, but similar temporal profiles.

Physiological properties of learned LN-LN models

Here we examine, in more detail, quantitative properties of learned LN-LN models that can be compared to physiological properties of the retina. We find that model subunits quantitatively resemble bipolar cells in terms of receptive field properties and number, and that these intermediate subunits consistently have high-threshold nonlinearities.

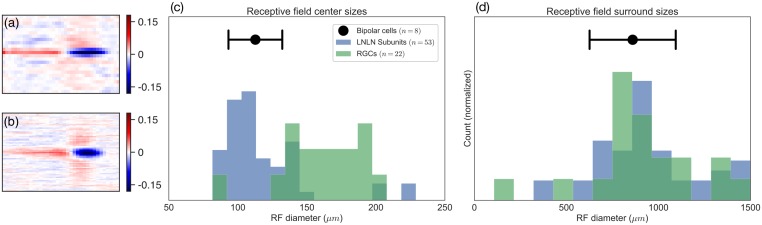

Inferred hidden units quantitatively resemble bipolar cell receptive fields

Mapping the LN-LN model onto retinal anatomy leads us to believe that the first layer filters (some examples of which are shown in Fig 3C) should mimic or capture filtering properties pre-synaptic to bipolar cells in the inner retina. To examine this possibility, we compared the first layer model filters to properties of bipolar cell receptive fields. An example learned model subunit receptive field is shown in Fig 4A, while a bipolar cell receptive field, obtained from direct intracellular recording of a bipolar cell, is shown in Fig 4B. Qualitatively, we found that the filters in the LN-LN model matched these bipolar cell RFs, as well as previously reported bipolar cell receptive fields [50, 51]: both had center-surround receptive fields with similar spatial extents. We further quantified the degree of space-time separability of the filters using the numerical or stable rank [52], which is a measure of rank insensitive to small amounts of noise in the matrix (a stable rank of one indicates the filter is exactly space-time separable). We found that the degree of space-time separability of recorded bipolar cell receptive fields and inferred model subunits were quite similar (1.28 ± 0.01 and 1.39 ± 0.03, respectively), indicating that the nuclear norm penalty was not artificially reducing the rank of our model filters. This also demonstrates the advantage of using a soft rank penalty, such as the nuclear norm, as opposed to explicitly constraining the rank to any specific integer.

Fig 4. Comparison of subunit filter parameters with intracellular bipolar cell recordings.

(a) An example subunit bipolar cell. (b) A recorded bipolar cell receptive field. (c) Receptive field centers sizes for subunit filters (blue), LN model filters (green), and recorded bipolar cells (black point). (c) Same as in (b), but with receptive field surround sizes.

To quantitatively compare these model-derived and ground-truth bipolar cell receptive fields, we fit the spatial receptive field with a difference of Gaussians function to estimate the RF center and surround sizes. We find the RF centers for the LN-LN subunit filters are much smaller than the corresponding LN model filter. Furthermore, the size of these LN-LN subunit centers matched the size of the RF center measured from intracellular recordings of n = 8 bipolar cells (Fig 4C). The recorded bipolar cells, LN model filters (ganglion cells), and LN-LN subunit filters all had similar surround sizes (Fig 4D). More example bipolar cell receptive fields are provided in S1 Fig.

Note that this match between LN-LN model subunits and the RF properties of bipolar cells was not a pre-specified constraint placed on our model, but instead arose as an emergent property of predicting ganglion cell responses to white noise stimuli. These results indicate that our modeling framework not only enables higher performing predictive models of the retinal response, but can also reconstruct important aspects of the unobserved interior of the retina.

Number of inferred subunits

The number of subunits utilized in the LN-LN model for any individual cell was chosen to optimize model predictive performance on a held-out data set via cross-validation. That is, we fit models with different numbers of subunits and selected the one with the best performance on a validation set. We find that for models with more subunits than necessary, extra subunits are ignored (the learned nonlinearity for these subunits is flat, thus they do not modulate the firing rate).

Fig 5A shows the model performance, quantified as the difference between the LN-LN model and the LN model, across a population of cells as a function of the number of subunits included in the LN-LN model. We find that models with four to six subunits maximized model performance on held-out data. Note that the stimuli used here are one-dimensional spatiotemporal bars that have constant luminance across one spatial dimension. Thus each model subunit likely corresponds to the combination of multiple bipolar cell inputs whose receptive fields overlap a particular bar in the stimulus. Previous anatomical studies of bipolar cell density and axonal branching width [53, 54] as well as functional studies [46] suggest that a typical ganglion cell in the salamander retina receives input from 10–50 bipolar cells whose receptive fields are tiled across two dimensional space. The number of independently activated groups of such a two dimensional array of bipolar cells, in response to a one dimensional bar stimulus is then expected to be reduced from the total number of bipolar cells, roughly, by a square root factor: i.e. . This estimate is largely consistent with the typical number of subunits in Fig 5A, required to optimize model predictive performance. This estimate also suggests that the large majority of bipolar-to-ganglion cell synapses are rectifying (strongly nonlinear), as linear connections are not uniquely identifiable in an LN-LN cascade. Indeed, in the salamander retina, strong rectification appears to be the norm [45, 50].

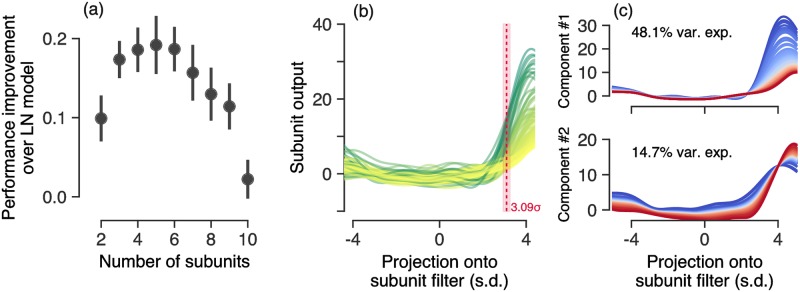

Fig 5. LN-LN model parameter analysis.

(a) Performance improvement (increase in correlation coefficient relative to an LN model) as a function of the number of subunits used in the LN-LN model. Error bars indicate the standard error across 23 cells. (b) Subunit nonlinearities learned across all ganglion cells. For reference the white noise input to a subunit nonlinearity has standard deviation 1, which sets the scale of the x-axis. Red line and shaded fill indicate the mean and s.e.m. of nonlinearity thresholds (see text for details). (c) Visualization of the principal axes of variation in subunit nonlinearities by adding or subtracting principal components from the mean nonlinearity. (top) The principal axis of variation in subunit nonlinearities results in a gain change, while (bottom) the second principal axis corresponds to a threshold shift. These two dimensions captured 63% of the nonlinearity variability across cells.

LN-LN models have subunit nonlinearities with high thresholds

The nonlinearities for all of the measured subunits are overlaid in Fig 5B. Each LN-LN subunit nonlinearity takes as input the projection of the stimulus onto the corresponding subunit spatiotemporal filter. Since the stimulus components are white noise with unit standard deviation, and the spatiotemporal filter is constrained to have unit norm, the projection of the stimulus onto the filter has a standard Normal distribution, thus we can compare nonlinearities on a common axis. Despite the fact that the model could separately learn an arbitrary function over the input for each subunit nonlinearity, we find that the nonlinearities are fairly consistent across the different subunits of many cells. Subunit nonlinearities look roughly like thresholding functions, relatively flat for most inputs but then increasing sharply after a threshold. We quantified the threshold as input for which the nonlinearity reaches 40% of the maximum output, across n = 92 model-identified subunits the mean threshold was 3.09 ± 0.14 (s.e.m.) standard deviations. We additionally computed LN thresholds for ganglion cells and found that they were similarly consistent across the population (3.22 ± 0.11 standard deviations). We decomposed the set of nonlinearities using principal components analysis and show the two primary axes of variation in Fig 5C. The primary axis of variation results in a gain change, while the secondary axis induces a threshold shift. Due to the high thresholds of these nonlinearities, subunits only impact ganglion cell firing probability for large input values. The slight rise on the left side of the nonlinearities is likely due to weak ON- inputs to the ganglion cell, and a stimulus ensemble that drives the ON- pathways more strongly [39, 55] may be necessary to uniquely identify them. Our ganglion cell population consisted of 18 Fast-Off, 4 Slow-Off, and 1 On cell (classification shown in S2 Fig). We did not find significant differences across cell types in terms of the number of identified subunits or the subunit thresholds.

Computational properties of learned LN-LN models

We now turn from a quantitative analysis of the physiological properties of the retina, described above, to their implications in terms of the computational function of the retina in processing visual stimuli. In particular, in the next two sub-sections we predict that the dominant contribution to stimulus decorrelation in efficient coding theory occurs at the bipolar cell synaptic threshold, and that the composite function computed by a retinal ganglion cell corresponds to a logical OR of its bipolar cell inputs.

Stimulus decorrelation at different stages in hierarchical retinal processing

Natural stimuli have highly redundant structure. Efficient coding theories [56, 57] state that sensory systems ought to remove these redundancies in order to efficiently encode natural stimuli. The simplest such redundancy is that nearby points in space and time contain similar, or correlated, luminance levels [58]. The transmission of such correlated structure would thus be highly inefficient. Efficient coding has been used to explain why responses are much less correlated than natural scenes, although the mechanistic underpinnings of decorrelation in the retina remain unclear.

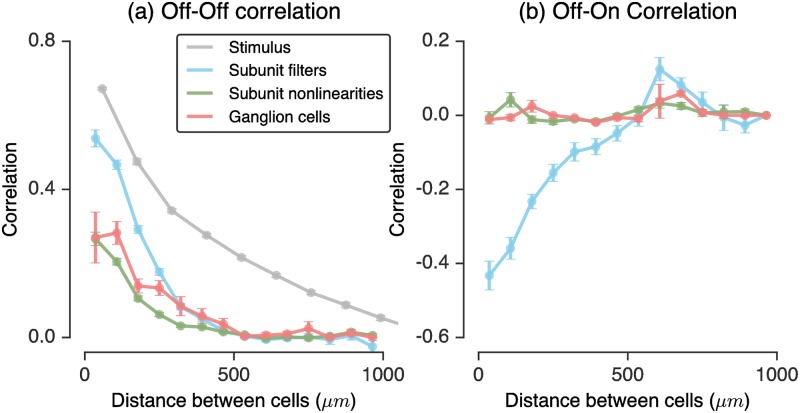

Early work [59] suggested a simple mechanism: the linear center-surround receptive field of ganglion cells (and more recently, of bipolar cells [60]) could contribute to redundancy reduction simply by transmitting only differences in stimulus intensity across nearby positions in space. However, it was recently shown [61] using LN models that most of the decorrelation of naturalistic stimuli in the retina could be attributed to ganglion cell nonlinearities, as opposed to linear filtering. Given that we fit an entire layer of subunits pre-synaptic to each ganglion cell layer, we can analyze the spatial representation of naturalistic images at different stages of hierarchical retinal processing, thereby localizing the computation of decorrelation to a particular stage in the model.

To do so, we generated the response of the entire population of model subunits to a spatial stimulus similar to previous work [61], namely spatially pink noise, low pass filtered in time. We computed the correlation of stimulus intensities as a function of spatial distance, as well as the correlation between pairs of model units as a function of spatial distance. We examined pairs of units across different stages of the LN-LN model: after linear filtering by the subunits, after the subunit nonlinearity, and finally at the ganglion cell firing rates (the final stage). Fig 6 shows that the correlation at these stages drops off with distance between either the subunits or ganglion cells (with distance measured between receptive field centers). Pitkow & Meister [61] found that the nonlinearity in an LN model was primarily responsible for most of the decorrelation (overall suppression of the correlation curve towards zero). However, without the ability to map the LN nonlinearity to biophysical components in the retina, it is hard to map this result onto a particular biophysical mechanism. Instead, our model predicts that this decorrelation is primarily due to the subunit nonlinearities, as opposed to ganglion cell spiking nonlinearities. In fact, the correlation between the ganglion cell model firing rates slightly increases after pooling across subunits. The most decorrelated representation occurs just after thresholding at the subunit layer. In this manner, our modeling framework suggests a more precisely localized mechanistic origin for a central tenet of efficient coding theory. Namely, our results predict that the removal of visual redundancies, through stimulus decorrelation across space, originates primarily from high-threshold nonlinearities associated with bipolar cell synapses.

Fig 6. Decorrelation in LN-LN subunit models.

A naturalistic (pink noise) stimulus was shown to a population of nonlinear subunits. The correlation in the population after filtering at the subunit layer (blue), after the subunit nonlinearity (green), and after pooling and thresholding at the ganglion cell layer (red), in addition to the stimulus correlations (gray) are shown. Left: the correlation as a function of distance on the retina for Off-Off cell pairs. Right: correlation for Off-On cell pairs. For each plot, distances were binned every 70μm, and error bars are the s.e.m. within each bin.

The nature of nonlinear spatial integration across retinal subunits

Retinal ganglion cells emit responses in sparse, temporally precise patterns [62], presumably to keep firing rates low thereby preserving energy [61, 63]. LN models can emulate sparse, precise firing in only one way: by using nonlinearities with high thresholds relative to the distribution of stimuli projected onto their linear filter. This way, only a small fraction of stimuli will cause the model to generate a response. Indeed, nonlinearities in LN models fit to ganglion cells have high thresholds [9]. LN-LN models, in contrast, can generate sparse responses using two qualitatively distinct operating regimes: either the subunit thresholds (first nonlinearity) could be high and the ganglion cell or spiking threshold (second nonlinearity) could be low, or the subunit thresholds could be low and spiking thresholds could be high. Both of these scenarios give rise to sparse firing at the ganglion cell output. However, they correspond to categorically distinct functional computations.

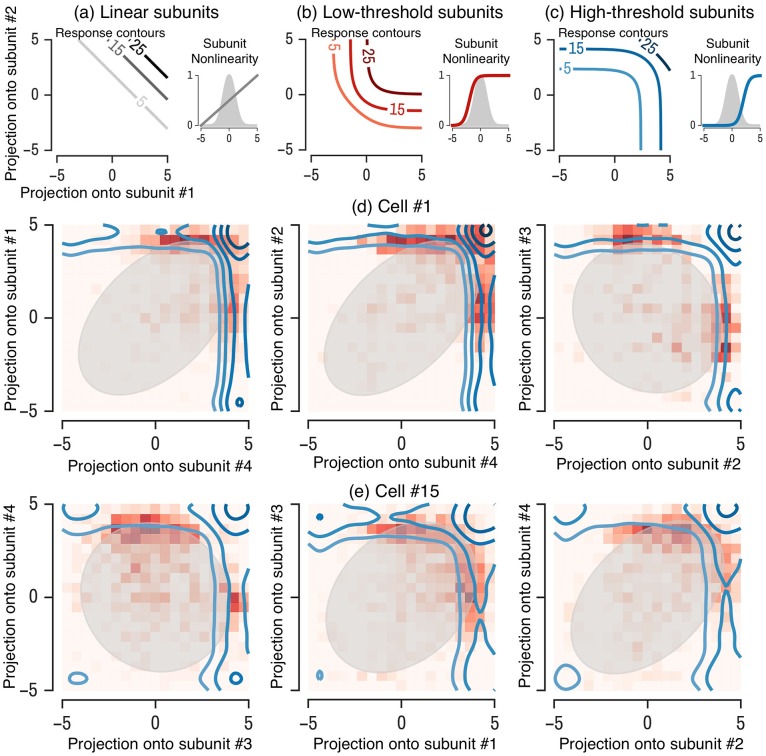

These various scenarios are diagrammed in Fig 7A–7C. Each panel shows the response of a model in a two-dimensional space defined by the projection of the stimulus onto two subunit filters pre-synaptic to the ganglion cell (the two-dimensional space is easier for visualization, but the same picture holds for multiple subunits). We show the response as contours where the firing probability is constant (iso-response contours). Here, the subunit nonlinearities play a key role in shaping the geometry of the response contours, and therefore shape the computation performed by the cell. Note that the ganglion cell nonlinearity would act to rescale the output, but cannot change the shape of the contours. Therefore, it is fundamentally the subunit nonlinearities alone that determine the geometry of the response contours.

Fig 7. Visualization of subunit contours.

Contours of equal firing probability are shown in a 2D space defined by the projection of the visual stimulus along each of two subunits. (a) Example contour plots for a model with low threshold subunit nonlinearities (inset) has concave contours. (b) A model with high threshold subunit nonlinearities has convex contours. (c & d) Contours from a model for two example ganglion cells, for three different pairs of subunits (left to right). In each panel, a histogram of the recorded firing rate is shown (red squares) as well as the stimulus distribution (gray oval).

Low-threshold subunit nonlinearities give rise to concave contours (Fig 7B), whereas high-threshold subunits give rise to convex contours (Fig 7C). Because final output rate is determined by the subunit and final thresholds, both of these descriptions could yield sparse firing output with the same overall rate (by adjusting the final threshold), but correspond to different computations. Low-threshold subunits can be simultaneously active across many stimuli, and thus yield spiking when subunits are simultaneously active (an AND-like combination of inputs). On the other hand, high-threshold subunits are rarely simultaneously active and thus usually only one subunit is active during a ganglion cell firing event, giving rise to an OR-like combination of inputs. By comparison, a cell that linearly integrates its inputs would have linear contours (Fig 7A).

In our models fit to retinal ganglion cells, we find all cells are much more consistent with the high threshold OR-like model. Subunit nonlinearities tend to have high thresholds, and therefore result in convex contours (shown for different pairs of subunits for two example cells in Fig 7D and 7E). For each example ganglion cell, we show the corresponding model contours along with the 2 standard deviation contour of the stimulus distribution (gray oval) and the empirical firing histogram (red checkers) in the 2D space defined by the projection of the stimulus onto a given pair of subunit filters identified by the LN-LN cascade model. Note that while the stimulus is uncorrelated (i.e. white, or circular), non-orthogonality of subunit filters themselves yield correlations in the subunit activations obtained by applying each subunit filter to the stimulus. Hence the stimulus distribution in the space of subunit activations (grey shaded ovals) is not circular. In all recorded cells, we find that the composite computation implemented by retinal ganglion cell circuitry corresponds to an OR function associated with high subunit thresholds (as schematized in Fig 7C). Moreover, both the AND computation and the linear model are qualitatively ruled out by the shape of the model response contours as well as the empirical firing histogram over subunit activations, which closely tracks the model response contours (i.e. the boundaries of the red histograms are well captured by the model contours).

These results are consistent with previous studies of nonlinear spatial integration in the retina. For example, Bollinger et. al. [45] discovered convex iso-response contours for a very simple two dimensional spatial stimulus, and Kaardal et. al. [44] performed an explicit hypothesis test between an AND-like and OR-like nonlinear integration over a low dimensional subspace obtained via the un-regularized STC eigenvectors, finding that OR outperformed AND. However, the techniques of [45] can only explore a low-dimensional stimulus space, whereas our methods enable the discovery of iso-response contours for high-dimensional stimuli. Moreover, in contrast to the hypothesis testing approach taken in [44], our general methods to learn LN-LN models reveal that an OR-model of nonlinear integration is a good model on an absolute scale of performance amongst all models in the LN-LN family, rather than simply being better than an AND-model.

A multi-dimensional view of cascaded retinal computation

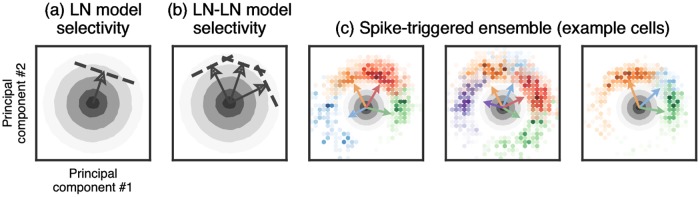

A simple, qualitative schematic of the distinct computational regime in which retinal ganglion cells operate in response to white noise stimuli can be obtained by considering the geometry of the spike triggered ensemble in N dimensional space. In particular, the distribution of stimuli concentrates on a constant radius sphere in N dimensional stimulus space. More precisely, any high dimensional random stimulus realization x has approximately the same vector length, because the fluctuations in length across realizations, relative to the mean length, is . Thus we can think of all likely white-noise stimuli as occurring on the N − 1 dimensional surface of a sphere in N dimensional space. Each subunit filter can be thought of as a vector pointing in a particular direction in N dimensional stimulus space. The corresponding input to the subunit nonlinearity for any stimulus is the inner-product of the stimulus with the subunit filter, when both are viewed as N dimensional vectors. The high threshold of the subunit nonlinearity means that the subunit only responds to a small subset of stimuli on the sphere, corresponding to a small cap centered around the subunit filter. For a single subunit model (i.e. an LN model), the set of stimuli that elicit a spike then corresponds simply to this one cap (Fig 8A). In contrast, the OR like computation implemented by an LN-LN model with high subunit thresholds responds to stimuli in a region consisting of a union of small caps, one for each subunit (Fig 8B).

Fig 8. Stimulus selectivity in LN and LN-LN models.

Each panel shows the raw stimulus distribution (gray contours) projected onto the top two principal components of the spike-triggered subunit activations (with subunits identified by the LN-LN model). The LN model (a) fires in response to stimuli in a single region, or cap, of stimulus space (indicated by the arrow and dashed threshold), whereas the LN-LN model (b) fires in response to a union of caps, each defined by an individual subunit. (c) Spike-triggered subunit activations for three representative cells are shown as colored histograms (colors indicate which model-identified subunit was maximally active during the spike), with the corresponding subunit filter directions shown as colored arrows (see text for details). Color intensity of the histogram indicates the probability density of the spike-triggered ensemble (STE), thus drops in intensity between changes in color indicate a multimodal STE, with high density modes centered near subunit filter directions.

To verify this conceptual picture, in Fig 8C we visualize a two-dimensional projection of the spike-triggered stimulus ensemble for three example ganglion cells, using principal components analysis of the spike-triggered subunit activations. That is, we project the spike-triggered ensemble onto the subunit filters identified in the LN-LN model, and subsequently project those subunit activations onto the two dimensions that capture the most variance in subunit activations. Note that this is different from just taking the top two principal components of the STC matrix, as the top STC component is typically the average of the subunit filters [10], which does not differentiate the subunit activations. This procedure identifies a subspace that captures the radial spread of subunit filters in high-dimensional stimulus space. We find that the spike-triggered ensemble projected onto this subspace (Fig 8C) curves around the radial shell defined by the stimulus distribution, and matches the conceptual picture shown in Fig 8B. For ease of visualization, we colored elements in the spike-triggered ensemble by which LN-LN model subunit was maximally active during that spike, and we normalize the spike-triggered histogram by the raw stimulus distribution (gray ovals). This picture provides a simple, compelling view for why LN models are insufficient to capture the retinal response to white noise, and further visualizes the aspect of retinal computation LN-LN models capture that the LN model does not: ganglion cells encode the union of different types of stimuli, with each stimulus type having large overlap with precisely one subunit filter.

Regularized spike-triggered analysis

Given a mathematical model of a multilayered neural circuit, we can connect the pathways in such models back to descriptive statistics, namely, spike-triggered statistics such as the spike-triggered average (STA) and covariance (STC). That is, we can show that the STA and the STC eigenvectors of a general LN-LN model are linear combinations its pathway filters (see Methods and [64]). Therefore, we expect certain types of structure in said pathways to persist after the linear combination, assuming the number of pathways is small relative to the stimulus dimension. This immediately suggests that the same proximal algorithms and regularization terms we used to fit LN-LN models can be used to regularize the STA and the STC eigenvectors directly (for situations where one is interested in the descriptive statistics, but not the full encoding model).

To illustrate the benefits of the regularization terms used to fit the LN-LN models, we apply these penalties to perform regularized spike-triggered analysis. We formulate optimization problems for regularizing the spike-triggered average and covariance which only require access to the un-regularized estimates (see Methods). This is useful for the situation where working with the full spike-triggered ensemble or raw dataset is prohibitive due to computational time or memory constraints.

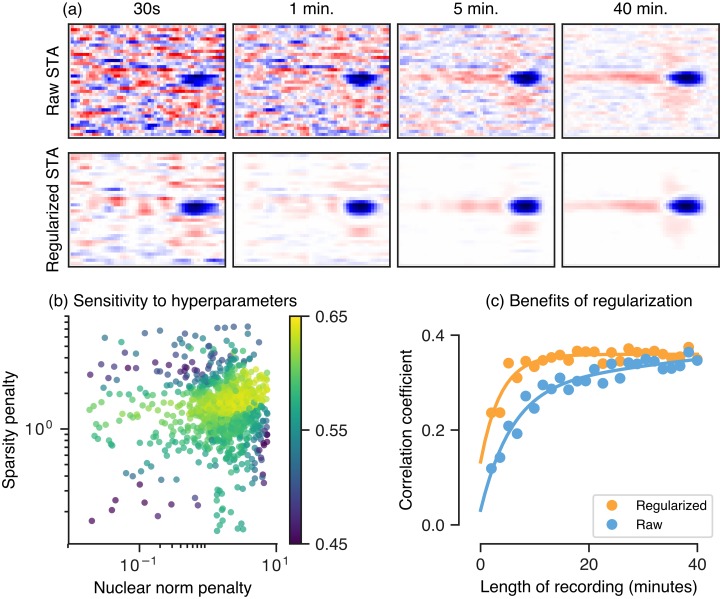

Fig 9A compares a regularized spike-triggered average with the raw, un-regularized STA for an example recorded ganglion cell in response to a 1-D spatiotemporal white noise stimulus. For long recordings, the regularized STA closely matches the raw STA, while for short recordings the regularized STA has less high frequency noise and retains much of the structure observed if the STA had been estimated using more data. Fig 9B shows the held-out performance of the regularized STA for an example cell across different regularization weights, scanned over a broad range, demonstrating that performance is largely insensitive to the strengths of the weights of the ℓ1 and nuclear norm penalty functions. Thus regularization weights need not be fine tuned to achieve superior performance. We further quantified the performance of the regularized STA by using it as the linear filter of an LN model, and found that with regularization, about 5 minutes of recording was sufficient to achieve the performance (on held-out data) obtained through 40 minutes of recording without regularization (Fig 9C).

Fig 9. Regularization for estimating receptive fields (via a regularized spike-triggered-average).

(a) Top row: the raw spike-triggered average computed using different amounts of data (from left to right, 30s to 40min), bottom row: the regularized spike-triggered average computed using the same amount of data as the corresponding column. (b) Performance (held-out log-likelihood) as a function of two regularization weights, the nuclear norm (x-axis, encourages low-rank structure) and the ℓ1-norm (y-axis, encourages sparsity), for an example cell. (c) Correlation coefficient (on held-out data) between the firing rate of a retinal ganglion cell and LN model whose filter is fixed to be a regularized or raw (un-regularized) STA, as a function of the amount of training data for estimating the STA (length of recording).

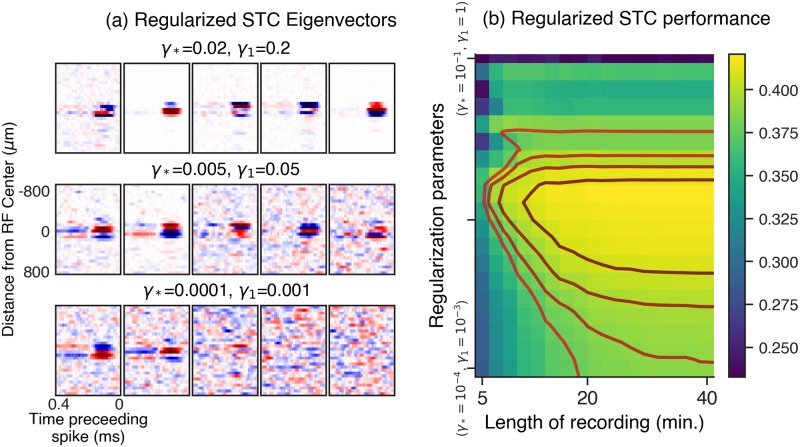

Fig 10 demonstrates the improvement in our ability to estimate the relevant subspace spanned by significant STC eigenvectors, both in terms of the qualitative improvement in eigenvectors for an example cell (Fig 10A) and quantified across the population (Fig 10B). In Fig 10A, we show the top regularized STC eigenvectors for different values of the nuclear norm (γ*) and ℓ1-norm (γ1) regularization penalties (Eq 11 in Methods and Table 1). We score the performance of the STC subspace in Fig 10B in terms of how well stimuli, after projection onto the subspace, can be used to predict spikes, by computing the subspace overlap (defined in Methods) between the raw or regularized STC subspace and the best fit LN-LN subspace. This quantity ranges between zero for orthogonal subspaces and one for overlapping subspaces.

Fig 10. Regularized spike-triggered covariance.

(a) Example panels of the output of our regularized spike-triggered covariance algorithm. Each panel contains the five most significant regularized eigenvectors of the STC matrix, reshaped as spatiotemporal filters. The bottom panel shows the result with no regularization added, and the upper panels show the result with increasing weights on the regularization penalties. Here γ1 is the regularization weight applied to an ℓ1 penalty encouraging sparsity, and γ* is a regularization weight applied to a nuclear norm penalty, encouraging approximate spatiotemporal separability of the eigenvectors, when reshaped as spatiotemporal filters. (b) Summary across a population of cells. The heatmap shows the held-out performance of regularized STC (measured as the subspace overlap with the best fit LN-LN subspace, see text for details). The y-axis in (b) represents a line spanning 3 orders of magnitude in two-dimensional regularization parameter space (γ*, γ1), ranging from the point (γ* = 10−4, γ1 = 10−3) to (γ* = 10−1, γ1 = 1).

Table 1. Common regularization penalties and their proximal operators (in closed form).

| Penalty function, ϕ(x) | Proximal operator, | Computational complexity |

|---|---|---|

| ℓ2-norm, γ‖x‖2 | ||

| ℓ1-norm, γ‖x‖1 | ||

| Nuclear norm, ‖X‖* | X = USVT, , | |

| Non-negativity, |

Since the LN-LN subspace is the best subspace found by the LN-LN model for predicting spiking, a large subspace overlap between the regularized STC and LN-LN subspaces indicates the ability of regularized STC to find stimulus subspaces predictive of neural firing without actually fitting a model of the neuron. With appropriate regularization, one can recover the best predictive subspace using about 10 minutes of data; without regularization, one requires 40 minutes of data to recover a subspace with comparative predictive accuracy. Note that even for the full length of this experiment (40 minutes), regularization still improves our regularized STC estimate. Thus, we find the subspace spanned by regularized STC eigenvectors becomes very similar to the subspace obtained from the filters in an LN-LN model, as predicted by our theoretical analysis.

Discussion

In summary, we combine proximal algorithms with non-smooth regularization terms to model stimulus driven neural processing in circuits with multiple parallel, hierarchical nonlinear pathways using limited experimental data. We found that models employing two stages of linear and nonlinear computation, namely LN-LN models, demonstrated a robust improvement over the classical standard of LN models at predicting responses to white noise across a population of ganglion cells. Beyond performance considerations alone, the gross architecture of the LN-LN model maps directly onto the hierarchical, cascaded, anatomy of the retina, thereby enabling the possibility that we can generate quantitative hypotheses about neural signal propagation and computation in the unobserved interior of the retina simply by examining the structure of our model’s interior. Since learning our model only requires measurements of the inputs and outputs to the retinal circuit, this approach is tantamount to the computational reconstruction of unobserved hidden layers of a neural circuit. The advantage of applying this method in the retina is that we can experimentally validate aspects of this computational reconstruction procedure.

Indeed, using intracellular recordings of bipolar cells, we found that our learned subunits matched properties of bipolar cells, both in terms of their receptive field center-surround structure, and in terms of the approximate number of bipolar cells connected to a ganglion-cell. However care must be taken not to directly identify the learned subunits in our model with bipolar cells in the retina. Instead, they should be thought of as functional subunits that reflect the combined contribution of not only bipolar cells, but also horizontal cells and amacrine cells that sculpt the composite response of retinal ganglion cells to stimuli. Nevertheless, the correspondence between subunits and bipolar cell RFs (which are also shaped by horizontal cells), suggests that even for Gaussian white noise stimuli, it is important to learn functional subunits that loosely correspond to the composite effect that bipolar cells and associated circuitry have on ganglion-cell responses.

The interior of our models also reveal several functional principles underlying retinal processing. First, all subunits across all cells had strikingly consistent nonlinearities corresponding to monotonically increasing threshold-like functions with very high thresholds. This inferred biophysical property yields several important consequences for neural signal processing in the inner retina. First, it predicts that subunit activation patterns are sparse across the ensemble of stimuli, with typically only one subunit actively contributing to any given ganglion cell spike. Second, it predicts that the dominant source of stimulus decorrelation, a central tenet of efficient coding theory, has its mechanistic origin at the first strongly nonlinear processing stage of the retina, namely in the synapse from bipolar cells to ganglion cells. Third, it implies that the composite function computed by individual retinal ganglion cells corresponds to a Boolean OR function of bipolar cell feature detectors.

Taken together, the proximal algorithms framework provides a unified way to both estimate hierarchical nonlinear models of sensory processing and compute spike-triggered statistics using limited data. When applied to the retina, these techniques recover aspects of the interior of the retina without requiring direct measurements of retinal interneurons. Moreover, by identifying candidate mechanisms for cascaded nonlinear computation in retinal circuitry, our results provide a higher resolution view of retinal processing compared to classic LN models, thereby setting the stage for the next generation of efficient coding theories that may provide a normative explanation for such processing. For example, considerations of efficient coding have been employed to explain aspects of the linear filter [65] and nonlinearity [61] of retinal ganglion cells when viewed through the coarse lens of an LN model. An important direction for future research would be the extension of these basic theories to more sophisticated ones that can explain the higher resolution view of retinal processing uncovered by our learned LN-LN models. Principles that underlie such theories of LN-LN processing might include subthreshold noise rejection [66, 67], sensitivity to higher order statistical structure in natural scenes, and energy efficiency [63]. Indeed the ability to extract these models from data in both a statistically and computationally efficient manner constitutes an important step in the genesis and validation of such a theory.

Another phenomenon robustly observed in the retina is adaptation to the luminance and contrast of the visual scene. Adaptation is thought to be a critical component of the retinal response to natural scenes [30], and a promising direction for extensions of our work would be to include luminance and contrast adaptation in subunit models. Luminance adaptation (adapting to the mean light intensity) is mediated by photoreceptor cells, and could be modeled by prepending a simple photoreceptor model (e.g. [68]) to an LN-LN model. There are two major sites of contrast adaptation, at the bipolar-to-ganglion cell synapse [69, 70] and at the spiking mechanism of ganglion cells [69, 71]. Extending the simple thresholding nonlinearities in our model with a dynamical model of adaptation (e. g. [19]) is a first step towards understanding the interaction between nonlinear subunits and adaptation.

While our work utilized white noise stimuli, the methods do not require any particular form of stimulus and will thus generalize to other stimulus distributions. In particular, stimuli that differentially activate subunits will be the most effective at differentiating LN and LN-LN models. Stimuli with coarse spatial resolution will not differentially activate subunits within the receptive field, thus are a poor choice for studying nonlinear spatial integration. However, fine textures as present in natural stimuli, are very likely to activate these nonlinear mechanisms in the retina, and thus are a critical component for understanding vision in the context of ethologically relevant stimuli.

The computational motifs identified by LN-LN models are likely to generalize across different species because they rely on a few key properties. For example, our predictions about the primary source of decorrelation in the retina rely on three features of the underlying circuitry identified by LN-LN models: (a) bipolar cell receptive fields are smaller than those of ganglion cells, (b) bipolar cell receptive field centers are largely non-overlapping, and (c) bipolar cell synapses have high thresholds. In addition, the logical OR combination of features relies on high thresholds and bipolar receptive fields that are (largely) non-overlapping. These properties (high threshold subunits with smaller, non-overlapping receptive fields) are common across multiple species.

Beyond the retina, multiple stages of cascaded nonlinear computation constitutes a ubiquitous motif in the structure and function of neural circuits. The tools we have applied here to elucidate hierarchical nonlinear processing in the retina are similarly applicable across neural systems more generally. Thus we hope our work provides mathematical and computational tools for efficiently extracting and analyzing both informative descriptive statistics and hierarchical nonlinear models across many different sensory modalities, brain regions, and stimulus ensembles, thereby furthering our understanding of general principles underlying nonlinear neural computation.

Methods

Ethics statement

This study was performed in strict accordance with the recommendations in the Guide for the Care and Use of Laboratory Animals of the National Institutes of Health, and the Stanford institutional animal care and use committee (IACUC) protocol (11619).

Experiments

Experimental data was collected from the tiger salamander retina using a multi-electrode array (Multi-Channel Systems), as described elsewhere [9]. Isolated ganglion cells were identified using custom spike sorting software. The stimulus used was a 100 Hz white noise bars stimulus, where the luminance of each bar was drawn independently from a Gaussian distribution. Spatially, the stimulus spanned approximately 2.8 mm on the retina (50 bars at 55.5 μm / bar). Intracellular recordings were performed as described elsewhere [72]. Off bipolar cells were identified by their flash response, receptive field size, and level in the retina. Data were analyzed using the pyret package [73]. The experimental data, as well as general purpose code to fit hierarchical LN-LN models and perform regularized STA and STC analysis, are available at https://github.com/baccuslab/inferring-hidden-structure-retinal-circuits.

LN-LN models

In this section, we specify the mathematical formulation of our LN-LN models. The model takes a spatiotemporal stimulus, represented as a vector x, and generates a predicted firing rate, r(x). First, the stimulus is projected onto a number of subunit filters. The number of subunits is a hyper-parameter of the model, chosen through cross validation (we repeatedly fit models with increasing numbers of subunits until held-out performance on a validation set decreases). If we have k subunits, then the stimulus is projected onto each of the k filters: wi for i = 1, …, k. These projections are then passed through separate subunit nonlinearities. The nonlinearities are parameterized using a set of Gaussian basis functions (or bumps) that tile the relevant input space [12, 20]. This parameterization enforces smoothness of the nonlinearity. We typically use p = 30 evenly spaced Gaussian bumps that tile the range spanned by the projection of the stimulus onto the linear filter (results were not sensitive to the number of bumps over a range of 10–30 bumps). For example, a nonlinearity h(u) is parameterized as

where ϕ is the basis function, e.g. ϕ(x) = exp(−x2), Δj indicates the spacing between the basis functions, p is the number of bases used, and aj is a weight on that particular basis function. Since the basis functions and spacings are fixed beforehand, the only free parameters are the aj’s. For subunit i, the corresponding nonlinearity has a set of weights aij for j = 1, …, p.

The output of the k subunits is then summed and passed through a final nonlinearity. This final nonlinearity is parameterized as a soft rectifying function r(x) = g log(1 + ex−θ), with two parameters: g is an overall gain and θ is the threshold. The full LN-LN model is then given by:

where the parameters to optimize are the subunit filters wi for i = 1, …, k, subunit nonlinearity weights aij for j = 1, …, p, and final nonlinearity parameters θ and g.

We optimize the parameters using a maximum likelihood objective assuming a Poisson noise model for spiking. Rather than optimize all of the parameters simultaneously, we alternate between optimizing blocks of parameters (joint optimization using gradient descent was prone to getting stuck at solutions that were less accurate). That is, we alternate between optimizing three blocks of parameters: the subunit filters wi, the subunit nonlinearities aij, and the final nonlinearity parameterized by θ and g. We optimize each block of parameters by minimizing the negative log-likelihood of the data plus any regularization terms using proximal algorithms. The subunit filters are the only parameters with regularization penalties (the nuclear norm applied to the filter reshaped as a spatiotemporal matrix and the ℓ1 norm), to encourage space-time separability and sparseness of the filters. The proximal operator for each of these regularization penalties is given in Table 1, and the proximal operator for the log-likelihood term (which does not have a closed-form solution) is solved using gradient descent. In addition, after optimizing the block of parameters corresponding to the subunit filters, we rescale them to have unit norm before continuing the alternating minimization scheme. This ensures that the distribution of input to the nonlinearities spans the same range, and gets rid of an ambiguity between the scale of the subunit filters and the scale of the domain of the subunit nonlinearity. We find that the parameters converge after several rounds of alternating minimization, and are robust with respect to random initialization of the parameters.

Proximal operators and algorithms

The framework of proximal algorithms allows us to efficiently optimize functions with non-smooth terms. The name proximal comes from the fact that these algorithms utilize the proximal operator (defined below) as subroutines or steps in the optimization algorithm. For brevity, we skip the derivation of these algorithms, instead referring the reader to the more thorough treatment by Parikh and Boyd [74] or Polson et al. [75]. The proximal operator for a function ϕ given a starting point v is defined as:

| (1) |

The proximal operator is a mapping from a starting point v to a new point x that tries to minimize the function ϕ(x) (first term above) but stays close to the starting point v (second term), where the parameter ρ trades off between these two objectives. The proximal operator is a building block that we will use to create more complicated algorithms. We will take advantage of the fact that for many functions ϕ of interest to us, we can analytically compute their proximal operators, thus making these operators a computationally cheap building block.

We used these building blocks to solve optimization problems involving the sum of a number of simpler terms:

| (2) |

where in our application the ϕi’s represent either a model fitting objective (e.g. a log-likelihood) or different regularization penalties on the parameters, x. For example, for learning the parameters of a linear filter in an LN model, the objective consists of a log-likelihood f(x) along with regularization penalties that impose prior beliefs about the filter, x. We focus on two main penalties. Sparsity, which encodes the belief that many filter coefficients are zero, is penalized by the ℓ1-norm (ϕ1(x) = ‖x‖1). Additionally, spatiotemporal filters are often approximately space-time separable (they are well modeled as the outer product of a few spatial and temporal factors). We encoded this penalty by the nuclear norm, ℓ*, which encourages the parameters x, when reshaped to form a spatiotemporal matrix, to be a low-rank matrix (the nuclear norm ℓ* of a matrix is simply the sum of its singular values). Another natural penalty would be one that encourages the parameters to be smooth in space and/or time, which could be accomplished by applying an ℓ1 or ℓ2 penalty to the spatial or temporal differences in parameters. As shown below, these types of penalties are easy to incorporate into the proximal algorithm framework. Other commonly used regularization penalties, and their corresponding proximal operators, are listed in Table 1.

The proximal consensus algorithm is an iterative algorithm for solving (2) that takes a series of proximal operator steps. It first creates a copy of the variable x for each term ϕi in the objective. The algorithm proceeds by alternating between taking proximal steps for each function ϕi using that variable copy xi, and then enforcing all of the different variable copies to agree (reach consensus) by averaging them. The algorithm is:

where i indexes each of the terms in the objective function, xi is a copy of the variable, is the average of the variable copies, and ui is a dual variable that can be thought of as keeping a running average of the error between each variable copy and the average. Intuitively, we can think of each variable copy xi as trying to minimize a single term ϕi in the objective, and the average, or consensus forces the different copies to agree. After convergence, each copy xi will be close to the mean value , which is the set of parameters that minimizes the original composite objective.

This algorithm has a number of desirable properties. First, the updates for each term xi can be carried out in parallel, therefore allowing for speedups when run on a cluster or multi-core computer. Second, it converges even when terms in the objective are non-differentiable. Due to the repeated application of the proximal operator, this algorithm works best when the terms ϕi have proximal operators that are easy to compute.

This is exactly the case for the regularization terms described above: for the ℓ1 norm, the proximal operator corresponds to soft thresholding of the parameters. For the nuclear norm, the proximal operator corresponds to soft thresholding of the singular values of parameters reshaped as a matrix. Occasionally, the proximal operator may not have a closed form solution. In this case, the proximal step can be carried out through gradient based optimization of (1) directly. This is the case for some log-likelihoods, such as the log-likelihood of a particular firing rate under Poisson spiking. In this case, gradient step based optimization of (1) often dominates the computational cost of the algorithm. As many methods for fitting neural models involve gradient step updates on the log-likelihood, such methods can then be augmented with additional regularization terms with no appreciable effect on runtime, by using proximal consensus algorithms for optimization. Our code for solving formulating and solving optimization problems using proximal algorithms is provided online at https://github.com/ganguli-lab/proxalgs.

Relationship between descriptive statistics and encoding models

Here, we derive the relationship between the pathways of any differentiable encoding model and spike-triggered statistics under Gaussian noise stimulation. We represent a visual stimulus as an N dimensional vector x. We view a functional neural model as an arbitrary nonlinear function r = f(x), over N dimensional stimulus space, where r determines the probability that the neuron fires in a small time window following a stimulus x: r(x) = p(spike ∣ x). The derivation will show how the STA is related to the gradient of the model ∇r(x), and the STC is related to the Hessian, ∇2r(x).

The STA and STC are the mean and covariance, respectively, of the spike-triggered stimulus ensemble, which reflects the collection of stimuli preceding each spike [3]. This distribution over stimuli, conditioned on a spike occurring, can be expressed via Bayes rule,

| (3) |

where p(x) is the prior distribution over stimuli and p(spike) is the average firing probability over all stimuli. Here, we assume a white noise stimulus distribution, in which each component of x is chosen independently from a Gaussian distribution with zero mean and unit variance. The STA and STC are given by

| (4) |

| (5) |

Focusing first on the STA:

| (6) |

where μ = p(spike) is the overall probability of spiking. The last step in the derivation uses Stein’s lemma, which states that if the expectation is taken over a multivariate Gaussian distribution with identity covariance matrix, corresponding to our white noise stimulus assumption. This calculation thus yields the simple statement that the spike-triggered average is proportional to the gradient (or gain) of the response function, averaged over the input distribution [64]. Applying Stein’s lemma again yields an expression for the STC matrix:

| (7) |

Intuitively, these results state that the STA is related to the slope (first derivative) and the STC is related to the Hessian curvature (matrix of second derivatives) of the multi-dimensional nonlinear response function r(x).

For example, consider a linear-nonlinear model r = f(wT x) which has the following gradient: ∇r(x) = f′(wT x)w and Hessian: ∇2r(x) = f″(wT x)wwT. Plugging these expressions into Eqs (6) and (7) reveals that the STA is proportional to w and the STC is proportional to wwT. Therefore, we recover the known result [2] that the STA of the LN model is proportional to the linear filter, and there will be one significant direction in the STC, which is also proportional to the linear filter (with mild assumptions on the nonlinearity, f, to ensure that slope and curvature terms in (6) and (7) are non-zero).

We can extend this to the case of a multilayered circuit with k pathways, each of which first filters the stimulus with a filter w1 … wk. Regardless of how these pathways are then combined, we can write this circuit computation as r = f(WT x) where W is a matrix whose columns are the k pathway filters, and f is a k-dimensional time-independent (static) nonlinear function. We can think of the k dimensional vector u = WT x as the activity pattern across each of the k pathways before any nonlinearity. The gradient for such a model is ∇r(x) = WT∇f(u), where ∇f(u) is the gradient of the k-dimensional nonlinearity. Using Eq (6), the STA is then a linear combination of the pathway filters:

where the weights are given by

and correspond to the average sensitivity, or slope of the neural response r with respect to changes in the activity of the ith filter.

The Hessian for the multilayered model is ∇2r(x) = W∇2fWT, where ∇2f is the k-by-k matrix of second derivatives of the k-dimensional nonlinearity f(u). From Eq (7), the STC is then given by:

| (8) |

where the k-by-k matrix H is:

This expression implies that nontrivial directions in the column space of CSTC correspond to (span the same space as) the column space of W. Therefore, the significant eigenvectors of the STC matrix will be linear combinations of the k pathway filters, and the number of significant eigenvectors is at most k.

Note that Eqs (6) and (7) are valid for any differentiable model, including those with more than two layers, divisive interactions, feedback, and so on.

Regularized STA

To compute a regularized STA, without explicitly building an encoding model, we can form an optimization problem that directly denoises the STA:

| (9) |

Here, ϕi(x) are the regularization penalty functions, with an associated regularization weight γi, and xSTA is the raw (sample) STA from recorded data (which is noisy due to finite sampling). Note that we use mean squared error to quantify distance from the raw estimate, but other loss functions may be also used. For the penalty functions ϕi, we use an ℓ1 penalty that encourages the estimated filter to be sparse (few non-zero coefficients), and a nuclear norm penalty, which is the sum of the singular values of the spatiotemporal filter x when viewed as a spatiotemporal matrix. The nuclear norm penalty is advantageous compared to explicitly forcing the spacetime filter x to be low-rank, as it is a “soft” penalty which allows for many small singular values, whereas explicitly forcing the filter to be low-rank forces those to be zero.

Regularized STC analysis

The STC eigenvectors are obtained by an eigendecomposition of the STC matrix C [10, 76], which is equivalent to solving an optimization problem:

| (10) |

where U denotes a matrix whose columns are the orthonormal eigenvectors of C. In order to regularize these eigenvectors, we wish to add penalty terms to (10), which precludes a closed form solution to the problem. We circumvent this by reformulating the problem using a convex relaxation. First, we consider the matrix X = UUT, corresponding to the outer product of the eigenvectors. Because of the cyclic property of the trace, namely that Tr(UTCU) = Tr(UUTC) = Tr(XC), the function to be optimized in (10) depends on the eigenvector matrix U only through the combination X = UUT. Thus we can directly optimize over the variable X. However, the non-convex equality constraint UTU = I in (10) is not easily expressible in terms of X. X is however a projection operator, obeying X2 = X. We replace this with the constraint that X should be contained within the convex hull of the set of rank-d projection matrices. This space of matrices is a convex body known as the fantope [77].

The advantage of this formulation is that we obtain a convex optimization problem which can be further augmented with additional functions that penalize the columns of X to impose prior knowledge about the structure of the eigenvectors of C. Columns of X are linear combinations of the eigenvectors of C, which are themselves linear combinations of the small set of spatiotemporal filters we are interested in identifying. Therefore, if we expect the spatiotemporal filters of individual biological pathways to have certain structure (for example, smooth, low-rank, or sparse), then we also expect to see those properties in both the eigenvectors and in the columns of X.

Putting this logic together, to obtain regularized STC eigenvectors, we solve the following convex optimization problem:

| (11) |

Here C is the raw (sample) STC matrix, which is again noisy due to limited recorded data, and denotes the fantope, or convex hull of all rank d projection matrices. Each ϕi is a regularization penalty function applied to each of the columns of X; i.e. , where xj denotes the j’th column of X. Again, we can solve this optimization problem efficiently using proximal consensus algorithms, described above. Common regularization penalties and their corresponding proximal operators are shown in Table 1. The optimization yields a matrix in the fantope , which may itself have rank higher than d, so we perform a final eigendecomposition of this matrix to obtain its top-eigenvectors. These eigenvectors constitute our regularized estimate of the eigenvectors of the significant (expansive) STC eigenvectors (to find suppressive directions, one could invert C in (11)). A major computational advantage of this formulation is that we only need to store and work with the N by N raw STC covariance matrix itself, without ever needing access to the spike-triggered ensemble, an N by M matrix where M (the number of spikes) is typically much greater than N.

Subspace overlap

We quantify the overlap between two subspaces as the average of the cosine of the principal (or canonical) angles between the subspaces. The principal angles between two subspaces and generalize the idea of angles between vectors. Here we describe a pair of p and q dimensional subspaces in n dimensional space as the span of the columns of the matrices X and Y. Assuming without loss of generality that p ≤ q, then we have p principal angles θ1, …, θp that are defined recursively for k = 1, …, p as: