Abstract

Symptom report scales are used in clinical practice to monitor patient outcomes. Using them permits the definition of a minimum clinically important difference (MCID) beyond which a patient may be judged as having responded to treatment. Despite recommendations that clinicians routinely use MCIDs in clinical practice, statisticians disagree about how MCIDs should be used to evaluate individual patient outcomes and responses to treatment. To address this issue, we asked how clinicians actually use MCIDs to evaluate patient outcomes in response to treatment. Sixty-eight psychiatrists made judgments about whether hypothetical patients had responded to treatment based on their pre- and posttreatment change scores on the widely used Positive and Negative Syndrome Scale. Psychiatrists were provided with the scale’s MCID on which to base their judgments. Our secondary objective was to assess whether knowledge of the patient’s genotype influenced psychiatrists’ responder judgments. Thus, psychiatrists were also informed of whether patients possessed a genotype indicating hyperresponsiveness to treatment. While many psychiatrists appropriately used the MCID, others accepted a far lower posttreatment change as indicative of a response to treatment. When psychiatrists accepted a lower posttreatment change than the MCID, they were less confident in such judgments compared to when a patient’s posttreatment change exceeded the scale’s MCID. Psychiatrists were also less likely to identify patients as responders to treatment if they possessed a hyperresponsiveness genotype. Clinicians should recognize that when judging patient responses to treatment, they often tolerate lower response thresholds than warranted. At least some conflate their judgments with information, such as the patient’s genotype, that is irrelevant to a post hoc response-to-treatment assessment. Consequently, clinicians may be at risk of persisting with treatments that have failed to demonstrate patient benefits.

Keywords: quality of care, patient decision making, clinical practice guidelines, managed care

A crucial task in clinical practice is evaluating whether a patient has responded to treatment.1 If a patient is failing to respond, the potential side effects of continued treatment can outweigh its benefits.1 Persisting with an ineffective treatment also delays the provision of more effective alternatives. On the other hand, failure to detect a patient’s response to treatment can lead to the termination of a potentially effective treatment plan, depriving the patient of its benefits.2

How should clinicians decide whether a patient has responded to treatment? In psychiatry, patient-reported outcomes are often used to monitor symptoms that are not easily observed by the clinician (e.g., hallucinations, paranoid thoughts).3 Yet psychiatric symptoms are often highly variable and can fluctuate over time, independent of any treatment effects.4 Thus, the clinician must decide on the minimum pre-/posttreatment change in the symptom report that indicates a treatment benefit. Symptom report scales, such as the Positive and Negative Syndrome Scale (PANSS) for the assessment of symptom severity in schizophrenia, provide a minimum clinically important difference (MCID) calculated on the basis of large clinical samples.5 If a patient’s pre- and posttreatment change in symptoms reaches or exceeds the MCID, the clinician may judge that the patient has responded to treatment. However, some statisticians have argued that MCIDs based on large clinical samples fail to account for patient variation and measurement error at the individual level and recommend that clinicians use a larger MCID when assessing individual patient outcomes.6,7 The Food and Drug Administration in the United States has made a similar point, emphasizing that MCIDs based on large clinical samples may not be adequate for the assessment of individual patient outcomes.8

In light of the debate around how MCIDs should be used in clinical practice, we asked how clinicians actually use them in their assessment of individual patient outcomes. In particular, are clinicians sensitive to a specific scale’s MCID when using a symptom report scale to evaluate patient outcomes? If so, do they demand a higher or lower threshold for identifying treatment benefits than the one prescribed by a symptom report scale’s MCID? Doubts have been raised regarding clinicians’ ability to interpret minimum clinically important differences,9 generating concern around recommendations for their use in clinical decision making.2

Assessing an individual patient’s response to treatment is a crucial issue for the implementation of stratified medicine where treatment effects are identified in subgroups but applied to individual patients. While such subgroups are often defined according to the possession of a particular genotype, Kitsios and Kent10 have warned against the prevailing tendency to pharmacogenetic exceptionalism—that is, to privilege genetic information and give it greater weight in clinical decision making than is warranted. Thus, our secondary objective was to determine whether knowledge of a patient’s genotype affects psychiatrists’ judgment of patient responses to treatment.

Method

Participants

The study protocol was approved by the institution ethics committee, and each participant provided written informed consent. Psychiatrists from health and social care trusts in Northern Ireland, United Kingdom, were approached during professional development meetings and were invited to participate in the study. A total of 70 psychiatrists (97% response rate) were recruited. Two psychiatrists provided identical responses to all vignettes and thus their data were excluded from the analyses. Psychiatrists were asked to judge a number of patient vignettes and outcomes and to rate their confidence in each judgment. Fourteen psychiatrists failed to provide all judgments, and 26 respondents failed to provide all confidence ratings. We included in the analyses only complete vignettes for which psychiatrists provided both a responder judgment and a confidence rating.

Psychiatrists provided information about their age, gender, specialty within psychiatry, number of years of experience in clinical practice, and whether or not they had completed their clinical training. Twenty-two (33%) were trainee psychiatrists. Across all psychiatrists, there was a mean of 10 years of clinical experience (SD = 7.6). Table 1 provides the demographic characteristics.

Table 1.

Demographic Characteristics (N = 68)

| Variable | n (%) |
|---|---|
| Gender | |
| Male | 39 (57) |
| Female | 28 (41) |
| Not stated | 1 (1) |
| Trainee psychiatrist | |
| No | 43 (63) |
| Yes | 22 (32) |
| Not stated | 2 (3) |
| Trainee general practitioner | 1 (1) |
| Subspecialty | |
| Psychotherapy | 7 (10) |
| Not stated | 21 (31) |
| General adult | 22 (32) |
| Intellectual disability | 1 (1) |
| Psychiatry of old age | 7 (10) |
| Learning disability | 2 (3) |
| Addiction | 3 (4) |
| Generalized anxiety disorder and addiction | 1 (1) |
| Liaison psychiatry | 2 (3) |
| Community mental health team | 1 (1) |
| Trainee general practitioner | 1 (1) |

Materials

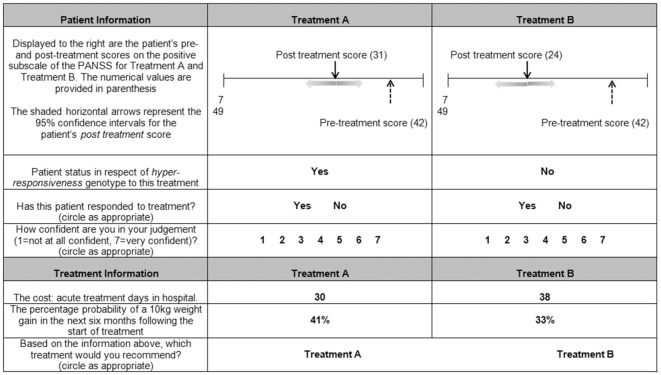

In collaboration with our two practicing psychiatrists (FAO and JK), we generated hypothetical patient vignettes and patient outcomes based on the positive subscale of the PANSS. The positive subscale contains seven items (e.g., “delusions and hostility”), each scored by the psychiatrist on a scale of 1 (symptom absent) to 7 (symptom extreme). Psychiatrists were told that the MCID for the positive subscale of the PANSS was 15.3 with a statistical reliability (Cronbach’s alpha) of 0.73 and a standard deviation of 6.08 based on published studies using large clinical samples,5,9 and they were instructed to use the provided MCID as they saw fit in their assessment of the patient outcomes. To aid psychiatrists’ use of the MCID, the pre- and posttreatment scores were displayed on a visual scale representing the full range of possible PANSS scores (Figure 1). Shaded horizontal arrows represented the 95% confidence intervals around the posttreatment score calculated based on the provided MCID and the associated statistical reliability and standard deviation scores (Figure 1).
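The width of the shaded arrows can be illustrated with a short calculation. The sketch below assumes the conventional standard error of measurement, SEM = SD × sqrt(1 − reliability), and a normal-theory 95% interval of ±1.96 × SEM. The article does not state the exact formula used to draw the intervals, so the formula, the function names, and the example posttreatment score of 30 are assumptions made for illustration only.

```python
import math


def standard_error_of_measurement(sd, reliability):
    """Conventional SEM: SD * sqrt(1 - reliability). Assumed, not taken from the article."""
    return sd * math.sqrt(1.0 - reliability)


def confidence_interval(score, sd, reliability, z=1.96):
    """Normal-theory interval around an observed score (z = 1.96 gives 95%)."""
    half_width = z * standard_error_of_measurement(sd, reliability)
    return score - half_width, score + half_width


# Reliability and SD are the values given to psychiatrists in the study.
sem = standard_error_of_measurement(sd=6.08, reliability=0.73)

# Hypothetical posttreatment score, chosen only to show the arithmetic.
lower, upper = confidence_interval(score=30, sd=6.08, reliability=0.73)

print(f"SEM = {sem:.2f}")
print(f"95% CI = {lower:.2f} to {upper:.2f}")
```

Under these assumptions the SEM is roughly 3.2 points, so the interval extends about 6.2 points on either side of the observed score.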

Figure 1.

Example vignette. The visual scale, representing the full range of possible Positive and Negative Syndrome Scale (PANSS) scores, displayed the patient’s pre- and posttreatment scores. Shaded arrows indicated the 95% confidence intervals around the patient’s posttreatment scores based on the scale’s minimum clinically important difference (MCID) and the associated statistical reliability and standard deviation scores. Vignettes indicated for which treatment the patient possessed a hyperresponsiveness genotype, associated with a 30% increase in average treatment effectiveness. Psychiatrists were asked to judge whether they believed the patient had responded to each treatment and provided a confidence rating for each judgment.

On each of 26 vignettes, psychiatrists were presented with a patient’s total PANSS score prior to treatment, fixed at 42, representing a high symptom intensity,11 and the patient’s total PANSS score following two hypothetical treatments (Figure 1). Hence, psychiatrists made two patient judgments on each vignette. Posttreatment scores ranged from 3 to 26, indicating a range of improvements in positive symptoms on the PANSS. The posttreatment range was based on typical patient improvements reported for antipsychotic medication.12 The posttreatment scores differed between the two treatments on each vignette by as little as 1 point and by as much as 20 points.

The vignette also identified whether the patient possessed a specific genotype (Figure 1), which psychiatrists were told clinical trials had demonstrated was linked to hyperresponsiveness to treatment (30% more effective than average). Only one of the two treatments on each vignette displayed the presence of a hyperresponsiveness genotype. The treatment for which the patient possessed a hyperresponsiveness genotype was not varied between participants. Apart from the patient information illustrated in each vignette, psychiatrists were instructed to assume that all patients were otherwise identical. Based on the patient information, psychiatrists were asked to judge whether they believed that the patient had responded to each treatment and to indicate their degree of confidence in their judgment on a 7-point scale (1 = not at all confident, 7 = very confident). All vignettes were completed in one sitting, and respondents were instructed to take as long as they felt necessary to complete the study. The vignettes were presented in a randomly generated order for each psychiatrist.

Statistical Analysis

We determined for each respondent the minimum posttreatment score, past which patients were rated as having responded to treatment. If a psychiatrist’s judgments were not perfectly consistent with a single minimum threshold, such as in the case that a psychiatrist switched inconsistently between judging a patient as a responder and as a nonresponder, we estimated their most likely minimum threshold.13,14 We did so by first ordering the psychiatrist’s responder judgments according to the patient’s change score from the lowest to the highest change score. We then scored each change score as a possible “switch point,” such that

The change score with the lowest “switch point” value indicated the psychiatrist’s most likely minimum accepted posttreatment change score. It follows that a candidate switch point will receive a low probability score if few yes responses (i.e., responder judgments) are made below the switch point and many yes responses are made above the switch point. This would indicate that the corresponding change score truly reflects the point at which the psychiatrist switches from identifying patients as nonresponders to identifying them as responders. If, however, some yes responses are made below the candidate switch point or some no responses are made above the switch point, then the probability score will increase, raising the possibility that an alternative switch point may provide a better candidate as the psychiatrist’s true switch point.
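The switch-point procedure described above can be sketched in a few lines. The scoring rule here is an assumption: each candidate change score is scored as the proportion of judgments inconsistent with it, that is, yes responses below the candidate plus no responses at or above it, divided by the number of judgments. The article's own scoring expression is not reproduced in this text, so this is one plausible reading rather than the authors' exact method. The function names, the tie-breaking rule (lowest change score wins), and the example data are likewise hypothetical.

```python
def switch_point_scores(change_scores, judgments):
    """Score each observed change score as a candidate switch point.

    judgments: 1 = judged a responder (yes), 0 = judged a nonresponder (no).
    Assumed score: share of judgments that contradict the candidate threshold.
    """
    pairs = sorted(zip(change_scores, judgments))  # order by change score
    n = len(pairs)
    scores = {}
    for candidate in sorted(set(change_scores)):
        yes_below = sum(1 for c, j in pairs if c < candidate and j == 1)
        no_at_or_above = sum(1 for c, j in pairs if c >= candidate and j == 0)
        scores[candidate] = (yes_below + no_at_or_above) / n
    return scores


def most_likely_switch_point(change_scores, judgments):
    """Return the candidate with the lowest score; ties go to the lowest change score."""
    scores = switch_point_scores(change_scores, judgments)
    return min(scores, key=lambda c: (scores[c], c))


# Hypothetical respondent with one inconsistent judgment (yes at 11, no at 12).
changes = [8, 10, 11, 12, 14, 15, 16, 18]
answers = [0, 0, 1, 0, 1, 1, 1, 1]
print(most_likely_switch_point(changes, answers))
```

A perfectly consistent respondent yields a score of zero at the true threshold. For an inconsistent respondent, the candidate that contradicts the fewest judgments is chosen.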

A random effects logistic regression model was fitted to psychiatrists’ judgments of whether (1 = Yes) or not (0 = No) they believed each patient had responded to treatment. The predictors were the vignette information, namely the posttreatment change score and the presence of a hyperresponsiveness genotype (1 = present, 0 = absent), together with respondents’ gender (1 = female, 0 = male), clinical training (1 = completed training, 0 = not completed training), and years of clinical experience.
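One way to write such a model, given as a sketch only, is shown below. It assumes a single random intercept per psychiatrist, which the article does not specify, and the symbols are introduced here for illustration. $Y_{ij}$ is psychiatrist $i$'s judgment on patient outcome $j$.

$$
\operatorname{logit}\,\Pr(Y_{ij}=1) = \beta_0 + \beta_1\,\text{change}_{ij} + \beta_2\,\text{genotype}_{ij} + \beta_3\,\text{female}_i + \beta_4\,\text{trained}_i + \beta_5\,\text{years}_i + u_i, \qquad u_i \sim \mathcal{N}(0, \sigma_u^2)
$$

Under this specification, $\exp(\beta_k)$ is the odds ratio associated with a one-unit increase in predictor $k$, holding the other predictors and the psychiatrist-level intercept $u_i$ fixed.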

Results

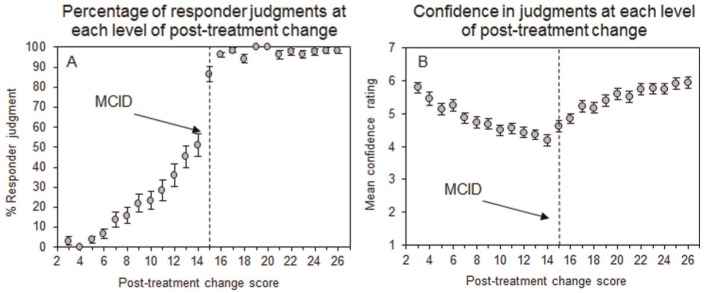

Psychiatrists accepted a mean minimum posttreatment change score of 10.43 (SD = 3.26) on which they identified patients as having responded to treatment. This value is significantly lower than the 15.3 scale MCID, which the psychiatrists were told was used in most relevant trials (one-sample t test: t[66] = 25.09, P < 0.001). Figure 2A confirms that as the posttreatment change score increased in size, more patients were identified as having responded to treatment. The percentage of patients identified as treatment responders increased to almost 100% after the MCID of 15 had been exceeded, indicating that many psychiatrists used the MCID provided to them to identify responders to treatment. Yet over a quarter (28%) of psychiatrists identified patients with a posttreatment change score of 11 or less as responders to treatment (Figure 2A). Therefore, many psychiatrists accepted a far lower minimum posttreatment change score than the recommended MCID of 15.

Figure 2.

Percentage of judgments that patient responded (A) and mean confidence ratings (B) at each level of posttreatment change on the Positive and Negative Syndrome Scale (PANSS). Vertical dashed bars indicate the minimum clinically important difference (MCID) of 15.3 on which psychiatrists were instructed to base their judgments that patient responded.

Psychiatrists were least confident in their patient judgments when the posttreatment change score was close to the MCID (Figure 2B). Inspection of Figure 2B confirms that confidence ratings increased either side of the MCID, indicating that psychiatrists were aware of the importance of the MCID in informing their patient judgments. However, the mean confidence rating was 4.19 (out of 7) at a posttreatment change score 1 point below the MCID (i.e., change score = 14) and was significantly lower than the mean confidence rating of 4.86 at a change score 1 point above the MCID (i.e., change score = 16; paired t test; t[66] = 5.16, P < 0.001), indicating that psychiatrists were more confident in their patient judgments when the patient’s change score exceeded the MCID. This difference was even larger at 2 points below (i.e., change score = 13; M = 4.36) and above (i.e., change score = 17; M = 5.22; paired t test; t[66] = −6.66, P < 0.001) the MCID. Thus, while many patients were identified as treatment responders despite posttreatment change scores that did not exceed the MCID, psychiatrists were less confident in those judgments than when the patient’s change score exceeded the MCID.

Our regression analysis confirmed that larger posttreatment change scores were associated with a higher likelihood that patients were identified as responders to treatment (Table 2). Yet controlling for each patient’s posttreatment change score, the odds of classifying a patient as a responder to treatment were significantly reduced by a factor of 2.86 (1/0.35) if they possessed a genotype that indicated hyperresponsiveness to the treatment (Table 2).
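The relationship between the two ways of expressing this effect is a simple reciprocal. Table 2 reports the odds ratio for the absence of the genotype (2.86, 95% confidence interval 2.12 to 3.84). The sketch below converts it to the odds ratio for the presence of the genotype. The function name is hypothetical, and note that inverting an odds ratio also swaps its confidence bounds.

```python
def invert_odds_ratio(odds_ratio, ci_lower, ci_upper):
    """Re-express an odds ratio for the opposite coding of a binary predictor.

    The reciprocal of the upper bound becomes the new lower bound, and vice versa.
    """
    return 1.0 / odds_ratio, 1.0 / ci_upper, 1.0 / ci_lower


# Values for "absence of a hyperresponsiveness genotype" from Table 2.
or_present, lower, upper = invert_odds_ratio(2.86, 2.12, 3.84)
print(f"{or_present:.2f} ({lower:.2f} to {upper:.2f})")  # prints "0.35 (0.26 to 0.47)"
```

Read this way, possessing the genotype multiplied the odds of a responder judgment by about 0.35, which is the same effect as the 2.86-fold reduction reported in the text.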

Table 2.

Logistic Regression Model Used to Predict Judgments of a Patient Response to Treatment

| Variable | Odds Ratio | 95% Confidence Interval, Lower | 95% Confidence Interval, Upper |
|---|---|---|---|
| Patient characteristics | | | |
| Posttreatment change score | 2.28* | 2.13 | 2.44 |
| Absence of a hyperresponsiveness genotype | 2.86* | 2.12 | 3.84 |
| Psychiatrist characteristics | | | |
| Female gender | 1.89 | 0.59 | 7.30 |
| Completed clinical training | 2.83 | 0.86 | 9.39 |
| Years of clinical experience | 1.03 | 0.94 | 1.14 |
| Participants, n | 67 | | |
| Degrees of freedom | 5 | | |
| Observations, n | 3421 | | |

Note: The logistic regression analysis was a random-effects model conducted on respondents’ judgments of whether (1 = Yes) or not (0 = No) they believed each patient had responded to treatment.

*P < 0.0001.

Discussion

How should clinicians decide whether a patient has responded to treatment? The Food and Drug Administration in the United States has emphasized that MCIDs based on large clinical samples may not be adequate for the assessment of individual patient outcomes.8 Symptom report scales, such as the PANSS used to assess symptom severity in schizophrenia,11 provide a numerical MCID, past which clinicians may judge that a patient has responded to treatment. In light of the debate around how MCIDs should be used in clinical practice,6,7 we asked how clinicians actually use them in their assessment of individual patient outcomes. Psychiatrists were able to use the MCID to assess patient outcomes: the percentage of patients identified as treatment responders increased sharply when the posttreatment change in symptoms exceeded the MCID (Figure 2A). Nevertheless, many psychiatrists accepted a lower minimum posttreatment change score on which to identify patients as responders to treatment. In fact, the mean minimum posttreatment change score endorsed by psychiatrists was significantly lower than the scale’s published MCID. Furthermore, even though patients were often identified as having responded to treatment despite low posttreatment changes in symptom reports, psychiatrists were less confident in such judgments compared to when a patient’s posttreatment symptom report exceeded the scale’s MCID. These findings imply that psychiatrists may often accept poorer patient outcomes, despite low levels of confidence in their own assessments. We tentatively suggest that under some conditions clinicians may persist with treatments that have not demonstrated sufficient benefits for the patient, exposing the patient to potential side effects of treatment, and potentially delaying the provision of more effective alternatives.1

Clinicians have received criticism concerning their understanding of numerical information about patient outcomes.15 Their understanding of the MCID is reportedly poorer than for other numerical formats used to communicate patient outcomes.9 If clinicians fail to correctly interpret the MCID, then recommendations and guidelines by health authorities to encourage their use in clinical practice8 may have detrimental effects on medical decision making. Our current findings provide a positive message by showing that many psychiatrists used the MCID to inform their patient judgments. The percentage of patients identified as responders to treatment increased toward the MCID and increased to almost 100% when the posttreatment change score matched or exceeded the MCID.

Our regression analysis revealed that even when controlling for patients’ posttreatment change in symptoms, psychiatrists were less likely to identify a patient as having responded to treatment if they possessed a genotype associated with a stronger average treatment response. This is counterintuitive because, while genetic information can be informative about the potential benefits of a treatment, it is redundant when the patient’s actual response is known. Our findings imply that clinicians’ judgments of their patient’s response can be biased when they are aware that a patient possesses a genotype that is supposedly indicative of hyperresponsiveness to treatment. Our findings in this regard accord generally with other recent studies showing that subjective factors can bias clinical decision making.16 Furthermore, studies of judgment analysis show that individuals tend to use all the information provided to them, even when some of it is irrelevant.17,18

Our study has some limitations. We presented psychiatrists with hypothetical patient outcomes, rather than using real patients, in order to ensure an adequate range of patient outcomes. On each vignette, psychiatrists evaluated a patient’s outcome following two hypothetical treatments, but we did not explicitly tell participants how long the patient had been on each treatment regime. Thus, psychiatrists may have classified a patient as having responded to treatment when the change score was below the MCID, due to a belief that given enough time over the course of the treatment, the patient would eventually reach or surpass the MCID. Moreover, psychiatrists were provided with a single pre- and posttreatment change score on which to base their judgments. In clinical practice, psychiatrists typically assess a patient on many occasions, and clinical assessments are often made in the context of various other pieces of relevant patient information.19,20 Finally, the confidence intervals around the patient’s posttreatment score were displayed visually (Figure 1), which may have made it difficult for participants to estimate the upper and lower bounds of the patient’s posttreatment score. Future research could present these bounds numerically.

Our current concern was clinicians’ use of the MCID in their assessment of individual patient outcomes. There is no gold standard for the interpretation of the MCID in the assessment of individual patient outcomes. Some researchers have suggested that a group average MCID can be applied to assess individual patients.21 Others have argued that a larger MCID is needed to account for individual variation in response to treatment.6 Despite discussion among researchers about how MCIDs should be used by clinicians to assess individual patients, our findings show that psychiatrists often accept posttreatment outcomes that fall below even the lowest recommended criterion for identifying patient response. Finally, future research could investigate how psychiatrists judge patient responses on the PANSS scale when they are ignorant of the MCID.

Conclusions

Psychiatrists should be cautious about how they use an MCID when determining an individual patient’s response to treatment and must temper the influence of other patient information in this regard, such as their genetic profile, especially when the patient’s response to treatment is already known.

Footnotes

Financial support for this study was provided by a grant from the Department of Education and Learning. The funding agreement ensured the authors’ independence in designing the study, interpreting the data, writing, and publishing the report.

References

- 1. Emsley R, Rabinowitz J, Medori R. Time course for antipsychotic treatment response in first-episode schizophrenia. Am J Psychiatry. 2006;163:743–5.

- 2. Kinon BJ, Chen L, Ascher-Svanum H, et al. Predicting response to atypical antipsychotics based on early response in the treatment of schizophrenia. Schizophr Res. 2008;102:230–40.

- 3. McCabe R, Saidi M, Priebe S. Patient-reported outcomes in schizophrenia. Br J Psychiatry Suppl. 2007;50:s21–8.

- 4. Perkins D, Lieberman J, Gu H, et al. Predictors of antipsychotic treatment response in patients with first-episode schizophrenia, schizoaffective and schizophreniform disorders. Br J Psychiatry. 2004;185:18–24.

- 5. Thwin SS, Hermes E, Lew R, et al. Assessment of the minimum clinically important difference in quality of life in schizophrenia measured by the Quality of Well-Being Scale and disease-specific measures. Psychiatry Res. 2013;209:291–6.

- 6. Donaldson G. Patient-reported outcomes and the mandate of measurement. Qual Life Res. 2008;17:1303–13.

- 7. Hays RD, Brodsky M, Johnston MF, Spritzer KL, Hui KK. Evaluating the statistical significance of health-related quality-of-life change in individual patients. Eval Health Prof. 2005;28:160–71.

- 8. McLeod LD, Coon CD, Martin SA, Fehnel SE, Hays RD. Interpreting patient-reported outcome results: US FDA guidance and emerging methods. Expert Rev Pharmacoecon Outcomes Res. 2011;11:163–9.

- 9. Johnston BC, Alonso-Coello P, Friedrich JO, et al. Do clinicians understand the size of treatment effects? A randomized survey across 8 countries. CMAJ. 2016;188:25–32.

- 10. Kitsios GD, Kent DM. Personalised medicine: not just in our genes. BMJ. 2012;344:e2161.

- 11. Kay SR, Fiszbein A, Opler LA. The Positive and Negative Syndrome Scale (PANSS) for Schizophrenia. Schizophr Bull. 1987;13:261–76.

- 12. Leucht S, Kane JM, Kissling W, Hamann J, Etschel E, Engel RR. What does the PANSS mean? Schizophr Res. 2005;79:231–8.

- 13. Hanoch Y, Rolison J, Gummerum M. Good things come to those who wait: time discounting differences between adult offenders and nonoffenders. Pers Individ Dif. 2013;54:128–32.

- 14. Kirby K. Instructions for inferring discount rates from choices between immediate and delayed rewards. Williamstown, MA: Williams College; 2000. Available from: https://scholar.google.co.uk/scholar?hl=en&q=Instructions+for+inferring+discount+rates+from+choices+between+immediate+and+delayed+rewards.&btnG=&as_sdt=1%2C5&as_sdtp=#0

- 15. Marcatto F, Rolison JJ, Ferrante D. Communicating clinical trial outcomes: effects of presentation method on physicians’ evaluations of new treatments. Judgement Decis Mak. 2013;8:29–33.

- 16. Srebnik N, Miron-Shatz T, Rolison JJ, Hanoch Y, Tsafrir A. Physician recommendation for invasive prenatal testing: the case of the “precious baby.” Hum Reprod. 2013;28:3007–11.

- 17. Rolison JJ, Evans JS, Dennis I, Walsh CR. Dual-processes in learning and judgment: Evidence from the multiple cue probability learning paradigm. Organ Behav Hum Decis Process. 2012;118:189–202.

- 18. Rolison JJ, Evans JS, Walsh CR, Dennis I. The role of working memory capacity in multiple-cue probability learning. Q J Exp Psychol (Hove). 2011;64:1494–514.

- 19. Goldberg DP, Cooper B, Eastwood MR, Kedward HB, Shepherd M. A standardized psychiatric interview for use in community surveys. Br J Prev Soc Med. 1970;24:18–23.

- 20. Aboraya A. Use of structured interviews by psychiatrists in real clinical settings: results of an open-question survey. Psychiatry (Edgmont). 2009;6:24–8.

- 21. de Vet HCW, Terluin B, Knol DL, et al. Three ways to quantify uncertainty in individually applied “minimally important change” values. J Clin Epidemiol. 2010;63:37–45.