Abstract

Digital tracking of human motion offers the potential to monitor a wide range of activities and to distinguish normal from abnormal performance of tasks. We examined the ability of a wearable, conformal sensor system, fabricated from stretchable electronics with contained accelerometers and gyroscopes, to detect, monitor and define motion signals and “signatures” associated with tasks of daily living. The sensor system was affixed to the dominant hand of healthy volunteers (n = 4) who then completed four tasks. For all tasks examined, motion data could be captured, monitored continuously, uploaded to the digital cloud, and stored for further analysis. Acceleration and gyroscope data were collected in the x, y, and z-axes, yielding unique patterns of component motion signals for each task studied. Upon analysis, low frequency (< 10 Hz) tasks (walking, drinking from a mug, and opening a pill bottle) showed low inter-subject variability (< 0.3 G difference) and low inter-repetition variability (< 0.1 G difference) when comparing the acceleration of each axis for a single task. High frequency (≥ 10 Hz) activity (brushing teeth) yielded low inter-subject variability of peak frequencies in acceleration of each axis. Each motion task was readily distinguishable and identifiable (with ≥ 70% accuracy) by independent observers from motion signatures alone, without the need for direct visual observation. Stretchable electronic technologies offer the potential to provide wireless capture, tracking and analysis of detailed directional components of motion for a wide range of individual activities and functional status.

Keywords: patient monitoring, wireless, stretchable electronics, wearable sensors, motion signatures, BioStampRC

Introduction

Motion and mobility are vital components of human existence.1 Whole body human movement, as well as movement of specific body elements, i.e. arms, legs, and head, occurs as part of a wide range of activities, extending from personal care to work and employment tasks, as well as recreation and sport pursuits. The extent of motion associated with these activities, in terms of range, reproducibility and frequency of movement, varies with the overall state of an individual, often increasing with training and conditioning, and conversely declining with aging, injury or disease.

Human motion parameters are also important indicators of an individual’s overall health.2–5 Recently there has been an emergence of interest in, and devices aimed at, tracking human motion, particularly in the form of easy-to-use, wireless, discrete wearable constructs for consumer, athletic and general purpose use, beyond those for healthcare professionals. This has been driven largely by a convergence of advances in electronics, accelerated diffusion of the smartphone, and development of “wearable” technologies, coupled with emergence of the “digitized self” and an increasing motivation among aging “baby boomers” for sustained and improved health with aging.6–10 Up to this point, detailed tracking of motion parameters, i.e. range, duration or components of motion, has largely remained a laboratory-based process relying predominantly on complex and expensive visual tracking methods.11–13 More recently, personal motion tracking has progressed with the advent of small sensors, yielding wearable systems such as the Fitbit™, JawBone™, or the Apple Watch™. While these devices have advanced personal motion tracking, they still measure overall body translation, e.g. number of steps taken, without resolution of details of body component movement.14–19 Human motion is complex in that overall motion, or limb motion, in free space has six degrees of freedom, i.e. x, y, z, pitch, yaw and roll components. A wearable sensor system that is able to track, measure and support the analysis of these more granular details of motion would be a valuable advance to further quantitate the description and understanding of human motion in health and disease.20

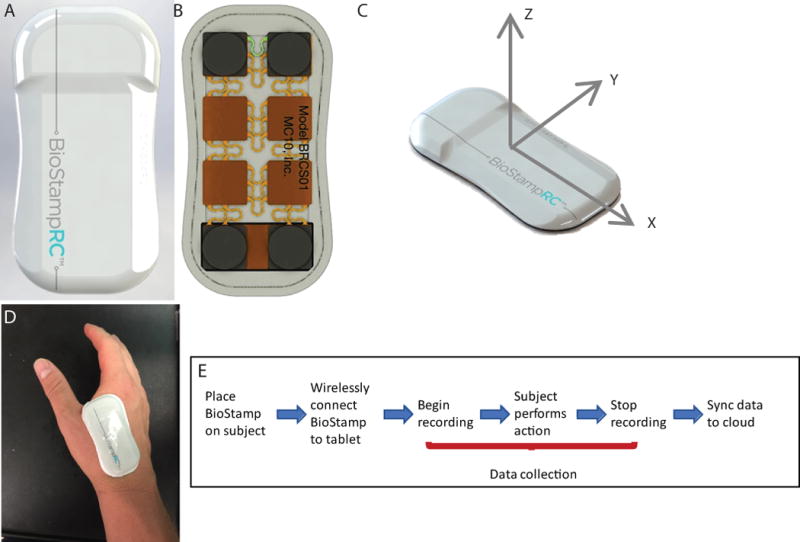

Recently, a wearable, conformal, skin-adherent motion sensor was developed, based on stretchable electronics with incorporated tri-axial accelerometers and gyroscopes: the BioStampRC™ (MC10 Inc.)21 (Figure 1 A, B). Herein we hypothesize that composite purposeful movements and motions associated with specific tasks of daily living can be successfully captured, monitored and defined regarding directional components utilizing the BioStampRC™. Further, we posit that a given task set of motions will yield an identifiable “signature” as defined by the motion signals, allowing pattern recognition and identification of the motion activity without direct visualization. First, human volunteers with an affixed BioStampRC™ were asked to perform specific motion tasks, including walking, brushing teeth, drinking from a mug, and opening a pill bottle, and motion signals were captured, monitored and defined as to their constituent directional components. Next, to address whether the resultant motion data created an identifiable “signature”, we employed both qualitative and quantitative measures through broad visual assessment of signals, single-blinded comparison studies, and quantification of signal frequency and amplitude for each task and subject. Finally, a series of sequential motion tasks was identified from signals alone, without the benefit of visual information.

Figure 1. BioStampRC™ Wireless Motion Capture System.

(A) Front of BioStampRC™ wearable sensor. (B) Back of BioStampRC™ wearable sensor. (C) Axes orientation for BioStampRC™ motion. (D) Placement of sensor on subject’s dominant hand for duration of daily routine activities. (E) Series of steps for motion data collection with BioStampRC™ system.

Methods

Wireless Motion Capture

Four healthy volunteers (3 for single motion tasks, and 1 for the motion series) participated in this study (2 male, 2 female, ages 22-24). A single BioStampRC™ (Figure 1 C) was applied and adhered, via affixed tape backing, to the dominant hand of each subject between the index finger and thumb (Figure 1 D). Figure 1 E depicts the series of steps for motion capture with the BioStampRC™ system. In brief, a motion study event was created through the MC10 Investigator Portal, allowing for selection of components (accelerometer and gyroscope), sampling frequency (100 Hz), and measurement ranges (± 4 G and ± 2000 degrees/s) needed for the study. The BioStampRC™ was then connected via Bluetooth to a tablet (Samsung Galaxy) through the MC10 Discovery Application. Once connected, motion data signals from the BioStampRC™ could be visualized in real-time on the tablet and assigned for recording to a motion activity previously created through the MC10 Investigator Portal. To clearly define the motion event and establish a baseline subject orientation, all subjects were asked to remain motionless for the first and last 5 seconds of recording. Following completion of the motion tasks, recording was stopped, and motion data were uploaded via Bluetooth to the tablet and then synced via WiFi to the MC10 Investigator Portal.
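The motionless windows at the start and end of each recording can be separated from the task portion before analysis. The following is a minimal sketch in Python, assuming a single-axis recording stored as a list of samples at the 100 Hz rate stated above; the function name `split_recording` is hypothetical and is not part of the MC10 software.

```python
SAMPLE_RATE_HZ = 100   # sampling frequency stated in the protocol
STILL_SECONDS = 5      # motionless window at the start and end of a recording


def split_recording(samples, rate_hz=SAMPLE_RATE_HZ, still_s=STILL_SECONDS):
    """Split one axis of a recording into (leading still, task, trailing still).

    Assumption: the subject was motionless for exactly `still_s` seconds at
    both ends, so the windows can be cut by sample count alone.
    """
    n_still = rate_hz * still_s
    if len(samples) <= 2 * n_still:
        raise ValueError("recording is shorter than the two stationary windows")
    lead = samples[:n_still]
    task = samples[n_still:len(samples) - n_still]
    tail = samples[len(samples) - n_still:]
    return lead, task, tail
```

The leading window gives a per-axis baseline for the resting orientation of the hand, which the quantitative analysis later subtracts from the task signal.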

Specific Motion Protocols for Activities of Daily Living

Following informed consent, volunteers performed the specific motion tasks outlined in the following protocols, with each task performed in triplicate per subject:

1) Gross translation (walking): Each volunteer was asked to walk at a self-defined, comfortable pace for 30 meters, then stop, turn around, and walk back 30 meters at the same pace. A length of 30 meters was pre-determined and marked by cones for visualization.

2) Hygiene (brushing teeth): Each volunteer was asked to grab a toothbrush from the sink counter with their dominant hand, imitate the placement of toothpaste on the toothbrush with their non-dominant hand, and then proceed to brush their teeth in a self-defined, comfortable way for 30 seconds.

3) Hydration (drinking from a mug): Each volunteer began the motion in a sitting position with both hands palms down, one on each knee. Each volunteer was then asked to reach out with the dominant hand, grab the mug by the handle, bring it to their lips as if drinking, place the mug back on the table, and return to the starting position.

4) Medication compliance (opening a pill bottle): Each volunteer began the motion in a sitting position with both hands palms down, one on each knee. Each volunteer was then asked to reach out with their non-dominant hand to grab a push-and-twist pill bottle from a table, bring the pill bottle in front of them, and then push-and-twist the lid using their dominant hand. Once the lid was removed, volunteers separated their hands for a brief pause before putting the lid back on and returning the bottle to the table.

Motion Capture Analysis

Motion data were downloaded from the MC10 digital cloud and analyzed visually or computationally using MATLAB (MathWorks, Inc.), as described below. All motion signals (accelerometer and gyroscope) were assessed as raw signals in the x, y, and z axes of the BioStampRC™, without additional filtering or adjustment.

Qualitative

A qualitative analysis was completed by visually comparing each subject’s motion signals and signature in the x, y, and z-axes with regard to signal appearance, presence of patterns, frequency of patterns and signal amplitude. In order to establish the efficacy of visual identification and distinguishing of motion signals and patterns, a single-blinded analysis approach was utilized in which labeled signatures of motion patterns for each of the motion tasks were given to 10 volunteers. The volunteers were asked to use the labeled signals and signatures as a reference and match them to a string of un-labeled motion signatures generated from a series of daily routine activities. Accuracy in matching the motion patterns was assessed as % accuracy for each of the four motion tasks.
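The per-task accuracy described above reduces to the share of observers who matched each unlabeled signature to the correct task. The following is a minimal sketch in Python; the function name `percent_correct`, the segment identifiers, and the data layout are hypothetical and are used only for illustration.

```python
def percent_correct(responses, answer_key):
    """Return {task: percent of observers who matched that task correctly}.

    responses  -- one dict per observer, mapping segment id -> guessed task
    answer_key -- dict mapping segment id -> true task
    Assumption: each task appears once in the unlabeled series.
    """
    n_observers = len(responses)
    result = {}
    for segment, task in answer_key.items():
        hits = sum(1 for r in responses if r.get(segment) == task)
        result[task] = 100.0 * hits / n_observers
    return result
```

A segment that an observer leaves unanswered counts as incorrect in this sketch, which is one possible convention and not necessarily the one used in the study.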

Semi-Quantitative

A semi-quantitative analysis was completed by analyzing the degree of overlap of signals, as to shape, height and signal envelope, between subjects performing the same activity. Motion signals for a given task of a given subject were traced on Mylar sheets. The degree of similarity between subjects was then assessed by determining the degree of overall signal envelope congruence, defined as comparative likeness and overlap, employing a 1-5 score of overlap (1 = no overlap, 5 = complete overlap). This analysis was completed for each of the four activities. The results were then categorized based on their respective likeness: an activity labeled “identical” yielded an overlap score of ≥ 4 between volunteers, an activity labeled “similar” yielded an overlap score of > 2 and < 4, and an activity labeled “dissimilar” yielded an overlap score of ≤ 2.
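The three likeness categories above map directly onto thresholds of the 1-5 overlap score. The following is a minimal sketch in Python of that mapping; the function name `classify_overlap` is hypothetical.

```python
def classify_overlap(score):
    """Map a 1-5 overlap score to the likeness label defined in the text."""
    if not 1 <= score <= 5:
        raise ValueError("overlap score must lie between 1 and 5")
    if score >= 4:
        return "identical"
    if score > 2:
        return "similar"
    return "dissimilar"   # score <= 2
```

The thresholds leave no gaps, so every score on the scale receives exactly one label.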

Quantitative

For low frequency motion signatures (< 10 Hz), a quantitative analysis was performed by averaging the signal amplitude of each subject’s motion in the x, y, and z-axes for each activity. The amplitudes were calculated by offsetting each signal from its baseline orientational forces. For high frequency motion signals (≥ 10 Hz), a quantitative analysis was performed through Fast Fourier Transform of the signal. All signals were statistically compared for inter-subject similarities using MATLAB to calculate the Pearson correlation coefficients between the signals of each subject.
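The three quantitative steps described above (baseline offset and amplitude averaging, frequency analysis, and Pearson correlation) can be sketched as follows. This is an illustrative Python version and not the authors' MATLAB code. It assumes that "averaging the signal amplitude" means the mean absolute value of the baseline-corrected signal, and it substitutes a naive discrete Fourier transform for the Fast Fourier Transform to stay dependency-free; all function names are hypothetical.

```python
import cmath
import math


def remove_baseline(signal, baseline_samples):
    """Subtract the mean of a motionless window (resting orientation offset)."""
    offset = sum(baseline_samples) / len(baseline_samples)
    return [x - offset for x in signal]


def mean_amplitude(signal):
    """Mean absolute amplitude of a baseline-corrected signal (assumed metric)."""
    return sum(abs(x) for x in signal) / len(signal)


def peak_frequency(signal, rate_hz):
    """Frequency in Hz of the largest non-DC component, via a naive O(n^2) DFT."""
    n = len(signal)
    best_k, best_mag = 0, 0.0
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(signal))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * rate_hz / n


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length signals.

    Raises ZeroDivisionError if either signal is constant.
    """
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)
```

Comparing two subjects with `pearson` presumes that their signals have been aligned and cut to the same length, a step the text does not detail.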

Results

For all tasks studied, and in all subjects evaluated, the BioStampRC™ effectively captured motion data that was uploadable to the cloud and analyzable, yielding defined x, y, z motion data component information (see Figure 2 as example). Further, motion data collected for a specific motion task yielded a unique acceleration and gyroscope signature.

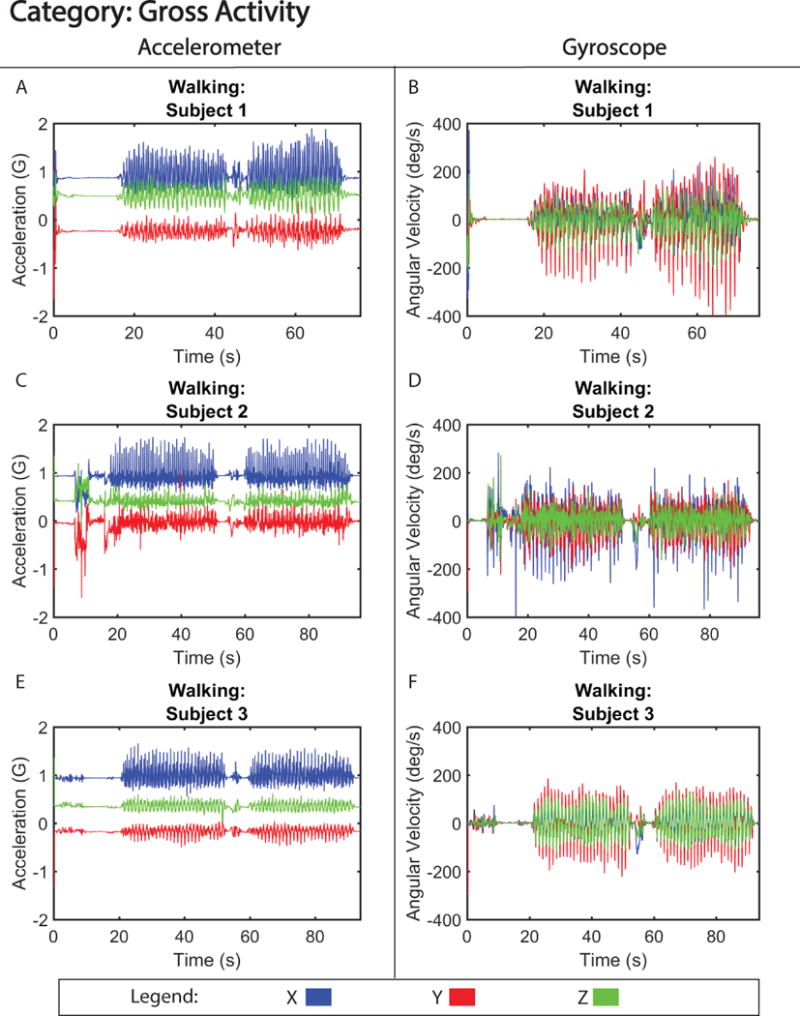

Figure 2. Motion Signature for Gross Translation.

Tri-axial acceleration (A, C, E) and gyroscope (B, D, F) motion from three subjects walking 30 meters.

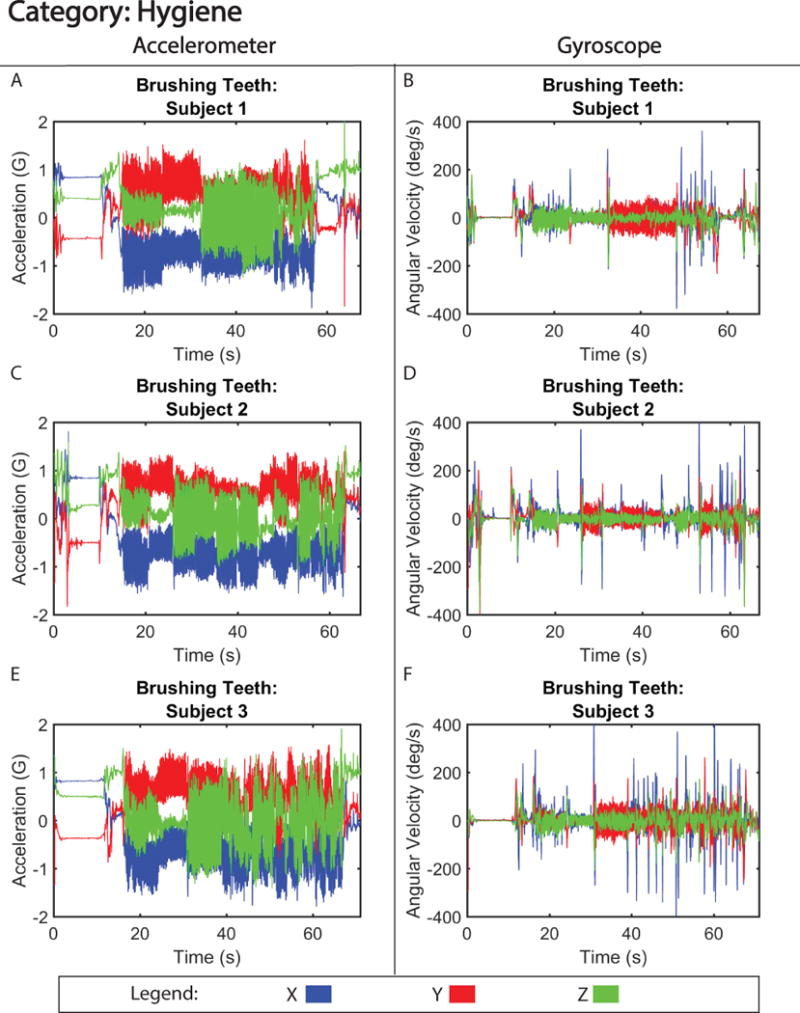

Figure 2 shows motion data collected during gross translation (walking) from three separate subjects. From visual analysis of the walking signals, the acceleration signatures between subjects were labeled as similar (Table I). The motion of walking produced a signal with only small discrepancies in acceleration amplitude (< 0.06 G difference) (Figure S1) and in motion pattern between the three subjects. Furthermore, correlation analysis of the signals revealed high correlation between subjects, with coefficients between 0.86 (subject 1 versus 2) and 0.92 (subject 2 versus 3). One of the distinct differences observed between subjects was the variability in angular velocity detected during motion associated with swinging of the arms as they walked. For example, subject 2 exhibited a higher angular velocity (−150 to 150 degrees/s) around the x-axis compared to subjects 1 and 3 (−100 to 100 degrees/s and −25 to 25 degrees/s, respectively) (Figure 2 B, D, and F), suggesting that subject 2 swung their arms faster while walking. Despite these differences, the walking pattern was accurately identified 100% of the time in our single-blinded study (Table II).

In contrast, the motion of brushing teeth displayed more distinct differences between subject motion signatures (Figure 3), creating a signal that was dissimilar between volunteers (Table I) and had lower correlation between subjects, with coefficients ranging between 0.62 (subject 1 versus 3) and 0.68 (subject 2 versus 3). This is likely due to differences in how each subject preferred to brush their teeth, e.g. starting with the top row of teeth as opposed to the bottom row. However, unlike walking, brushing teeth is a high frequency (≥ 10 Hz) motion, which made its signature easily distinguishable 100% of the time (Table II); when quantified, it showed similar accelerative frequency patterns between the three volunteers (Figure S2).

Table I.

Percent Overlap of Motion Signatures

| Motion Pattern | Walking | Brushing Teeth | Opening and Closing Pill Bottle | Drinking from Mug |
|---|---|---|---|---|
| Overlap Score* | 3 | 2 | 3 | 4 |
| Pattern Identifier Based on Overlap Score | Similar | Dissimilar | Similar | Identical |

*Overlap Score (scale of 1-5): 1 = no overlap of signal, 5 = complete overlap of signal

Table II.

Percent Accuracy of Matching Motion Signatures

| Motion Pattern | Walking | Brushing Teeth | Opening and Closing Pill Bottle | Drinking from Mug |
|---|---|---|---|---|
| % Participants Correctly Matched Motion Patterns | 100% | 100% | 80% | 70% |

Figure 3. Motion Signature for Hygiene.

Tri-axial acceleration (A, C, E) and gyroscope (B, D, F) motion from three subjects brushing their teeth.

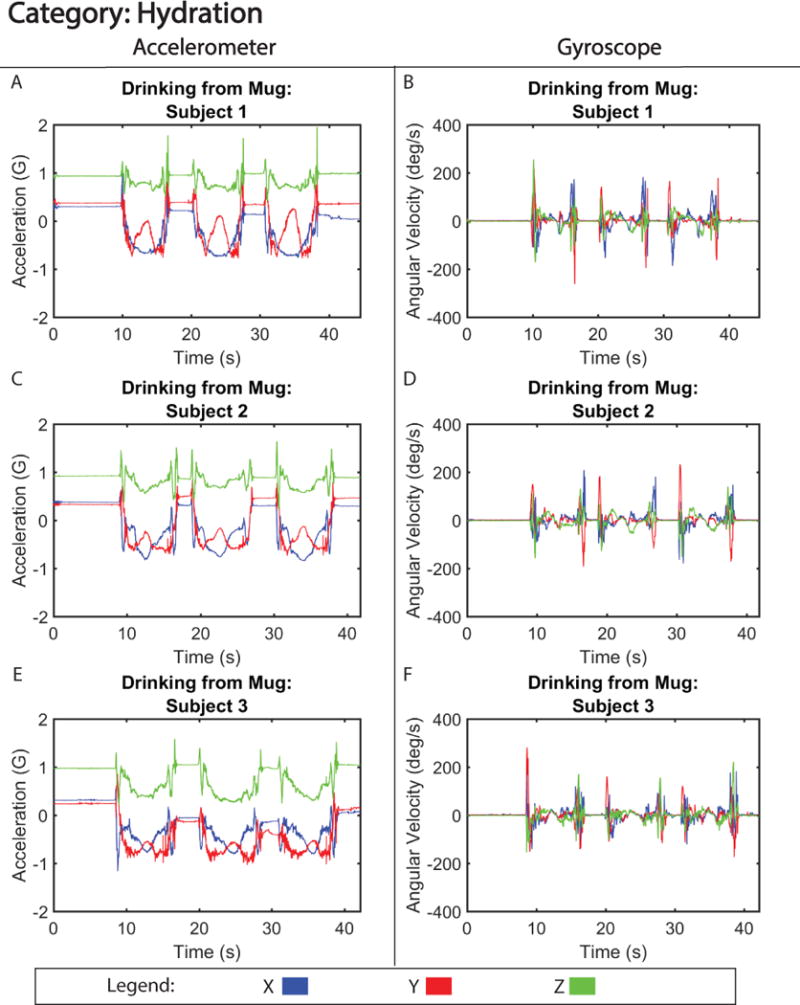

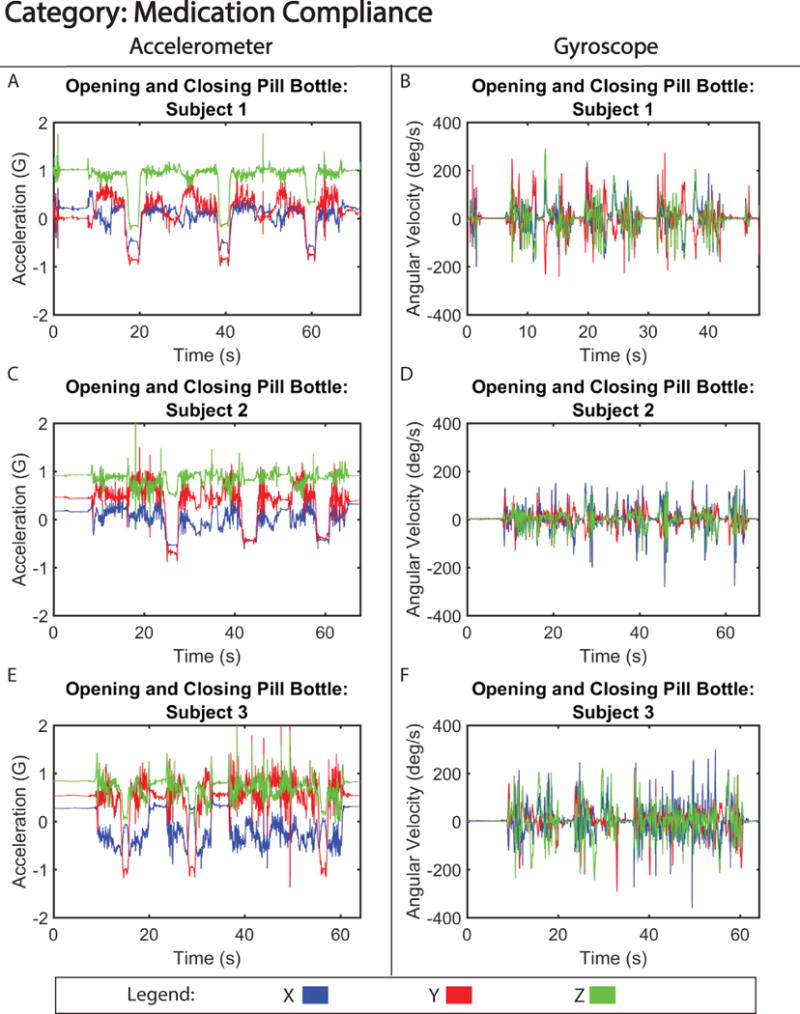

Lower frequency motion signals generated from drinking from a mug or opening and closing a pill bottle are shown in Figures 4 and 5, respectively. These motions produced motion signatures that were considered similar and identical, respectively, from the perspective of inter-subject pattern variability (Table I). The motion signatures were identifiable by the blinded observers at rates of 80% and 70% for drinking from a mug and opening and closing a pill bottle, respectively (Table II). Motion signatures from these categories, specifically the acceleration (Figure 4 A, C, and E and Figure 5 A, C, and E), show more granular details of the motion with low inter-subject variability, i.e. an acceleration force difference of less than 0.3 G for both motion tasks (Figures S3 and S4). Furthermore, the accelerative signals for drinking from a mug were highly correlated between subjects, with correlation coefficients between 0.85 (subject 2 versus 3) and 0.89 (subject 1 versus 2). However, the accelerative signals for opening a pill bottle were less correlated, with coefficients between 0.72 (subject 1 versus 3) and 0.76 (subject 1 versus 2). The decline in correlation, and in the ability of blinded observers to correctly identify the signal, could largely be attributed to erroneous behavior exhibited by subject 3, who had difficulty opening the pill bottle on the third repetition. When examining gyroscopic motion for the same activities (Figure 4 B, D, and F and Figure 5 B, D, and F), there were small qualitative differences in the angular velocity that could reflect each subject’s ability or preference for twisting open the pill bottle and lifting the mug to their mouth.

Figure 4. Motion Signature for Hydration.

Tri-axial acceleration (A, C, E) and gyroscope (B, D, F) motion from three subjects drinking from a mug.

Figure 5. Motion Signature for Medication Compliance.

Tri-axial acceleration (A, C, E) and gyroscope (B, D, F) motion from three subjects opening and closing a pill bottle.

Discussion

In the present study, we report on the ability of a wearable, conformal, stretchable electronic sensor system to capture, track and monitor motion associated with specific tasks of daily living, which upon data analysis yields motion content information with regard to x, y and z components. Further, in examining the signals generated from the contained gyroscopes and accelerometers, defined signal patterns or “motion signatures” emerge. This study reveals that these activity signatures are noticeably distinct for the activities tested. Further, we show that sequential signature patterns are readily identified and interpreted by a range of individual examiners with a high degree of accuracy, allowing non-visual identification of motion tasks over time. We specifically chose the four, albeit complex, motion tasks studied because we envision one particular utility of our approach as a means of remotely monitoring individuals’ performance of a daily routine, particularly in situations where direct visual observation by a caregiver may not be possible.

Published motion studies to date have largely focused on capturing information from single ambulatory motions, i.e. walking, sitting, or standing. These studies typically employ video and visual cues to capture motion.22–25 Our study goes beyond qualitative imaging to quantitative detection of motion and its vectorial and elemental components. Video capturing, while effective for monitoring complex motion, presently is cumbersome, generally bulky and requires placement of cameras at some distance from the monitored individual, thus not lending itself to convenient “on board” monitoring of individuals. Further, visual analysis raises privacy issues and requires the movement of the subject to be limited to within the field of view of the camera or sensor.

Prior studies utilizing accelerometers have employed systems that are bulky, requiring the subject to be tethered to the motion capture sensor.26 Previous wearable sensors have been heavy enough to adversely affect the motion itself, producing a signal that does not accurately represent the motion. The BioStampRC™ has shown great efficacy for use in wireless patient monitoring as a result of its lightweight, wireless, stretchable, conformable, and water-resistant design,27 allowing the subject to wear it on any location of the body for over a 24-hour period without interference as to daily routine activities or sacrifice of the subject’s privacy and desired sense of independence.

The present sensor additionally provides both accelerometer and gyroscopic signals over six degrees of freedom, allowing for detailed spatial and rotational information about the wearer’s motion. The utility of one sensor type over the other for motion identification depends on the type of motion to be studied. Accelerometers afford the ability to capture detailed aspects of motion. Even without movement, accelerometers capture gravitational force, providing information as to the orientation of the sensor or limb, though obscuring acceleration force due to motion in the sensitive axis. This sensitivity to axis orientation furthermore makes it difficult to integrate acceleration data to obtain velocity and spatial position information without significant accumulation of error or drift, or without complex filtering and signal processing.28 Hence, the human motion captured with an accelerometer must have a significant acceleration component to create an identifiable pattern. Alternatively, the gyroscope is not influenced by gravitational force, with all angular velocity measured local to the sensor. However, attention to orientation and placement of the sensor in relation to the body segment of interest is essential, as rotation about the sensitive axis can easily shift with human motion. Although not specifically examined in this study, it is recognized that gyroscopes are comparatively less sensitive to error accumulation than accelerometers and can be integrated to provide angular information with minimal drift over short time intervals.29
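Two points from the paragraph above lend themselves to a short numerical illustration: a motionless accelerometer reads gravity and therefore encodes orientation, and a small uncorrected acceleration offset grows quickly when integrated twice into position. The following is a minimal sketch in Python. The axis convention in `tilt_from_gravity` is one common choice and is an assumption, not the BioStampRC™ convention, and both function names are hypothetical.

```python
import math

G_M_PER_S2 = 9.81  # standard gravity, used to convert G to m/s^2


def tilt_from_gravity(ax, ay, az):
    """Estimate (pitch, roll) in degrees from a motionless reading in G.

    Assumed convention: z points away from the ground when the sensor lies flat.
    """
    pitch = math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def position_drift(bias_g, seconds, rate_hz=100):
    """Position error in meters from double-integrating a constant bias.

    Uses simple Euler integration at the given sampling rate.
    """
    dt = 1.0 / rate_hz
    velocity = 0.0
    position = 0.0
    for _ in range(int(seconds * rate_hz)):
        velocity += bias_g * G_M_PER_S2 * dt
        position += velocity * dt
    return position
```

With a constant bias of 0.01 G, ten seconds of double integration already gives a position error of several meters, which is why amplitude and frequency features of the raw signal were more practical here than reconstructed trajectories.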

Due to the aforementioned sensitivity, placement of the BioStampRC™ was carefully chosen to be on the dominant hand with minimal variation between subjects. The hand location allowed the accelerometer to capture fine motor details and the gyroscope to record rotation about the entire arm segment. From our study it was apparent that the BioStampRC™ at this location recorded enough acceleration force for the accelerometer to create identifiable motion patterns (at least 70% of the time). However, it is clear that the ability to accurately define these patterns depends on the motion performed and the sensor utilized (accelerometer or gyroscope). Gyroscope data for lower frequency motions are less identifiable than patterns generated from accelerometers. The accelerometer’s ability to capture high frequency, detailed motions allowed some motion patterns to be identified simply via their high frequency characteristics (walking, brushing teeth). However, having both types of sensors on board the BioStampRC™ was important for the identification of both torsional and spatial movement of the subject, as well as for accurate identification and matching of signatures.

The results of our study suggest expanded utility of these sensors and of our approach to motion analysis for a wide variety of applications. Future potential applications include wireless subject and/or patient monitoring in the hospital, in a care facility or at home, as to the conduct of activities of daily living, medication compliance, fall detection, and general hygiene/self-care, particularly when direct observation by a caregiver is not possible. Although not presented in this study, motion signatures could alternatively be used to track onset, progression or recovery from diseases impacting mobility, rehabilitation, or training and progression of fitness and athletic performance. Beyond direct human applications, these systems and this analysis approach may also be applied to veterinary and robotic applications. Independent of application, it is becoming increasingly evident that improving methods of human motion capture, analysis and interpretation, as presented in this study, has widespread translational applicability for monitoring and improving outcomes in a variety of industries.

Study Limitations

The current study displays the motion signatures from a single BioStampRC™ placed on the dominant hand. While one sensor was adequate to identify motion patterns for the tasks examined, its scope is necessarily limited to the applied body location and segment. The use of multiple sensors would provide additional information regarding whole-body motion of the user and would allow capture of motion activities that involve body segments other than the wearer’s dominant hand. The BioStampRC™ system has the capability to record motion from multiple sensors simultaneously. Future studies are planned to explore this extended range potential.

Coupling motion signatures with computer-generated pattern recognition software would enable more autonomous monitoring and analysis, for identification of motions and for detection of changes or progression in motion signatures. To expand the utility of this system and approach for population monitoring will require additional studies to gather and analyze motion signatures from a wide range of subjects, examining subpopulations accounting for ranges of age distribution, gender, ethnicity and other demographic variables.
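One simple form such pattern recognition software could take is template matching, where an unlabeled segment is assigned to the labeled signature it correlates with most strongly. The following is a minimal sketch in Python, offered as an illustration and not as a method evaluated in this study. It assumes segments and templates are already aligned and of equal length, and the function names `pearson` and `identify` are hypothetical.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length signals.

    Raises ZeroDivisionError if either signal is constant.
    """
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def identify(segment, templates):
    """Return the label of the template most correlated with the segment.

    templates -- dict mapping task label -> reference signal
    """
    return max(templates, key=lambda label: pearson(segment, templates[label]))
```

A practical system would also need to handle segmentation, time alignment, and variation in task duration, none of which this sketch addresses.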

Other studies have utilized networks of a variety of sensor types to monitor patient health through measurement of additional health parameters such as ECG,30 heart rate and body temperature,31 and blood pressure.32 Creating a network of sensors and sensor types, able to collect acceleration and gyroscopic data, would allow for an integration of multiple data types, better defining from multiple perspectives, the wide variety of activities of a subject.

Supplementary Material

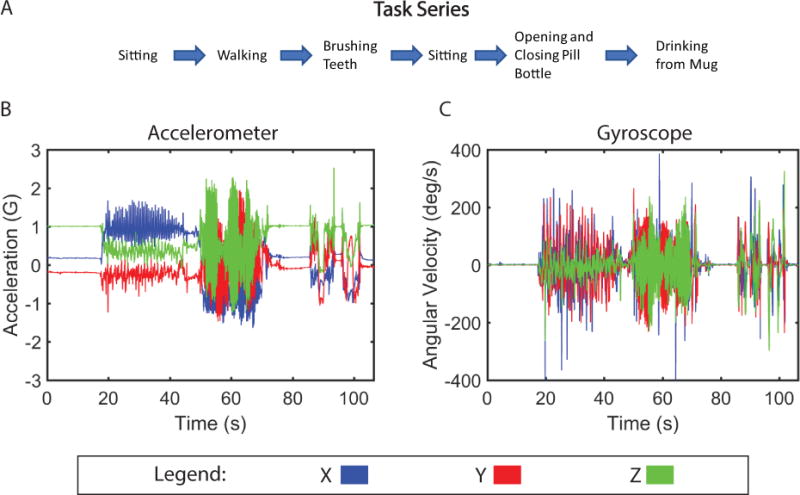

Figure 6. Motion Signature for Series of Daily Routine Tasks.

(A) Timeline of motions performed by subject during series of tasks. (B) Tri-axial acceleration and (C) gyroscope motion from one subject performing task series.

Acknowledgments

The authors acknowledge and thank A.J. Aranyosi and Roozbeh Ghaffari for technical support and guidance in this project, and NIH Cardiovascular Biomedical Engineering Training Grant T32 HL007955 and the Arizona Center for Accelerated Biomedical Innovation (ACABI) for funding support.

Source of Funding:

Partial funding from NIH Cardiovascular Biomedical Engineering Training Grant T32 HL007955.

Partial funding from a general grant through the University of Arizona Center for Accelerated Biomedical Innovation.

Footnotes

Conflicts of Interest:

We have received research support from MC10, Inc.

References

- 1. Bruce A, Hanrahan S, Vaughan K, Mackinnon L, Pandy MG. The Biophysical Foundations of Human Movement. 3rd. Human Kinetics; Champaign, IL: 2013.

- 2. Beissner KL, Collins JE, Holmes H. Muscle force and range of motion as predictors of function in older adults. Phys Ther. 2000;80:556–563.

- 3. Roach KE, Miles TP. Normal hip and knee active range of motion: the relationship to age. Phys Ther. 1991;71:656–65. doi: 10.1093/ptj/71.9.656.

- 4. Mazzeo R, Cavanach P, Evans W. Exercise and physical activity for older adults. Med Sci Sport Exerc. 1998;30:992–1008.

- 5. Normal Joint Range of Motion Study | NCBDDD | CDC. Available at: https://www.cdc.gov/ncbddd/jointROM/. Accessed November 17, 2017.

- 6. Lupton D. Quantifying the body: Monitoring and measuring health in the age of mHealth technologies. Crit Public Health. 2013;23:393–403.

- 7. Kim D-H, Lu N, Ghaffari R, et al. Materials for multifunctional balloon catheters with capabilities in cardiac electrophysiological mapping and ablation therapy. Nat Mater. 2011;10:316–323. doi: 10.1038/nmat2971.

- 8. Lightbody K. Meet The New Wave Of Wearables: Stretchable Electronics. Fast Co. 2016.

- 9. Swan JH, Friis R, Turner K. Getting tougher for the fourth quarter: Boomers and physical activity. J Aging Phys Act. 2008;16:261–279. doi: 10.1123/japa.16.3.261.

- 10. Schwab K. The Fourth Industrial Revolution: what it means and how to respond. World Econ Forum. 2016:1–7.

- 11. Moeslund TB, Hilton A, Krüger V. A survey of advances in vision-based human motion capture and analysis. Comput Vis Image Underst. 2006;104:90–126.

- 12. Gleicher M. Animation from observation. ACM SIGGRAPH Comput Graph. 1999;33:51–54.

- 13. Kovar L, Gleicher M, Pighin F. Motion graphs. ACM Trans Graph. 2002;21:1–10.

- 14. Fitbit Flex 2™ Fitness Wristband. Available at: https://www.fitbit.com/flex2. Accessed November 17, 2017.

- 15. Diaz KM, Krupka DJ, Chang MJ, et al. Fitbit®: An accurate and reliable device for wireless physical activity tracking. Int J Cardiol. 2015;185:138–140. doi: 10.1016/j.ijcard.2015.03.038.

- 16. UP MOVE by Jawbone | A Smarter Activity Tracker For A Fitter You. Available at: https://jawbone.com/fitness-tracker/upmove. Accessed November 17, 2017.

- 17. McDonough M. Your fitness tracker may be accurately tracking steps, but miscounting calories. The Washington Post. https://www.washingtonpost.com/lifestyle/wellness/fitbit-and-jawbone-are-accurately-tracking-steps-but-miscounting-calories/2016/05/24/64ab67e6-20fd-11e6-8690-f14ca9de2972_story.html. Published 2016.

- 18. Apple Watch Series 3 - Apple. Available at: https://www.apple.com/apple-watch-series-3/#sports-watch. Accessed November 17, 2017.

- 19. Apple Watch set to drive wearables into the mainstream? Biometric Technol Today. 2014;2014:1.

- 20. Benoît Mariani KA. Assessment of Foot Signature Using Wearable Sensors for Clinical Gait Analysis and Real-Time Activity Recognition. Communications. 2012;5434:170.

- 21. Wearable Sensors | BiostampRC System | MC10. Available at: https://www.mc10inc.com/our-products/biostamprc. Accessed November 17, 2017.

- 22. Vasilescu MAO. Human motion signatures: analysis, synthesis, recognition. Object Recognit Support by user Interact Serv Robot. 2002;3:456–460.

- 23. Boulares M, Jemni M. 3D motion trajectory analysis approach to improve sign language 3D-based content recognition. Procedia Computer Science. 2012;13:133–143.

- 24. Chang I-C, Huang C-L. The model-based human body motion analysis system. Image Vis Comput. 2000;18:1067–1083.

- 25. Lakany H. Extracting a diagnostic gait signature. Pattern Recognit. 2008;41:1644–1654.

- 26. Mayagoitia RE, Nene AV, Veltink PH. Accelerometer and rate gyroscope measurement of kinematics: An inexpensive alternative to optical motion analysis systems. J Biomech. 2002;35:537–542. doi: 10.1016/s0021-9290(01)00231-7.

- 27. Kim D-H, Ghaffari R, Lu N, Rogers JA. Flexible and Stretchable Electronics for Biointegrated Devices. Annu Rev Biomed Eng. 2012;14:113–128. doi: 10.1146/annurev-bioeng-071811-150018.

- 28. Mayagoitia RE, Lötters JC, Veltink PH, Hermens H. Standing balance evaluation using a triaxial accelerometer. Gait Posture. 2002;16:55–59. doi: 10.1016/s0966-6362(01)00199-0.

- 29. Moon Y, McGinnis RS, Seagers K, et al. Monitoring gait in multiple sclerosis with novel wearable motion sensors. PLoS One. 2017;12. doi: 10.1371/journal.pone.0171346.

- 30. Yoo HJ, Yoo J, Yan L. Wireless fabric patch sensors for wearable healthcare. 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC’10. 2010:5254–5257. doi: 10.1109/IEMBS.2010.5626295.

- 31. Nakajima N. Short-range wireless network and wearable bio-sensors for healthcare applications. 2nd International Symposium on Applied Sciences in Biomedical and Communication Technologies, ISABEL 2009. 2009.

- 32. Mukhopadhyay SC. Wearable sensors for human activity monitoring: A review. IEEE Sens J. 2014;15:1321–1330.
