Abstract

Background

The use of ambulatory assessment to study behavior and physiology in daily life is becoming more common, yet barriers to implementation remain. Limitations in budget, time, and expertise may inhibit development or purchase of dedicated ambulatory assessment software. Research Electronic Data Capture (REDCap) is widely used worldwide, offering a cost-effective and accessible option for implementing research studies.

Objectives

To present a step-by-step guideline on how to implement ambulatory assessment using REDCap and provide preliminary evidence of feasibility.

Methods

Feasibility and acceptability data are presented for randomized participants (N ranged from 19 to 36, depending on analysis) from an ongoing 8-week smoking cessation pharmacological clinical trial (ClinicalTrials.gov Identifier: NCT02737358). Participants (N = 36; 50% female) completed up to three ambulatory assessment surveys per day, depending on the phase of the study. These included self-report and video confirmation of smoking biomarkers and medication adherence.

Results

Participants completed 74.8% of morning reports (86.6% for study completers), 73.8% of videos confirming smoking biomarkers, and 70.4% of videos confirming medication adherence. Study completers reported that the REDCap assessments were easy to use, and 78.9% of participants preferred the REDCap assessments to traditional, paper measures.

Conclusions

These data from a pharmacological trial suggest feasibility of remote data collection using REDCap. As REDCap functionality is continually evolving, it is likely that options for collecting ambulatory assessment data via this platform will progressively improve, allowing for greater individualization of assessment scheduling to enhance data collection in clinical trials.

Keywords: Ambulatory assessment, ecological momentary assessment, REDCap, medication adherence, smoking cessation, clinical trial

Introduction

Ambulatory assessment (1) refers to the collection of self-report, physiological, or other data from individuals in their natural environments during everyday life. Researchers have employed ambulatory assessment to study individuals’ behavior in real time in naturalistic settings for over three decades. Several reviews have addressed advantages, challenges, and design considerations when implementing ambulatory assessment with clinical populations (2–5). We argue that ambulatory assessment may yield valuable information when used to track participant behavior within a randomized controlled clinical trial. Ambulatory assessment may be used to determine whether and when real-world changes in physiology and/or behavior occur during the course of treatment. A number of researchers have already employed ambulatory assessment in clinical trials of substance use disorder treatments to examine real-world outcomes that could not otherwise be examined with traditional methodologies (6–9).

Despite the opportunity to examine unique clinical outcomes, ambulatory assessment is still not widely implemented in clinical trials. Typically, participants in clinical trials complete in-person visits on a weekly/monthly basis. Assessment of substance use and medication adherence between visits is often based on retrospective recall. Research has shown that some substance use (i.e., alcohol) is underestimated when measured via retrospective self-report over longer periods of recall (10,11) and retrospective reports of cigarettes per day tend to be biased toward round numbers (12). Additionally, medication adherence is significantly overestimated when relying on participant self-report (13,14). In many cases, substance use and medication adherence can be biologically confirmed at weekly visits, but these assessments provide only a “snapshot” of behavior and do not allow for determination of precisely when relapse or missed doses occurred. Further, remote assessments may allow for reduced in-person visits in some trials, potentially allowing for expanded recruitment and increased study retention.

Many commercially available options exist for collecting ambulatory assessment data. Research teams can contract with companies specializing in ambulatory technology. Often, these companies will handle programming of study assessments, test the product, store data, and provide data reports and data download options. This option may allow for the most sophisticated assessment designs developed by expert programmers, though these options are often costly. Furthermore, the researcher may have little control over the product once it has been finalized and may not be able to easily adapt it for future studies. Alternatively, a research team may purchase specialized software that allows them to program their own assessments and assessment schedules. Specialized software companies offer more affordable self-program platforms, where the researcher programs the assessments and can update the protocol as needed. The most versatile self-program options currently cost about $1000–$6000, afford the researcher more control over the assessment, and are often designed to allow non-programmers to develop the assessment.

REDCap (15) offers a lower-cost alternative to the data collection options described previously. Though institutions that use REDCap may incur costs associated with maintaining the server infrastructure, REDCap is licensed by Vanderbilt University at no cost to non-profit institutions. Currently, over 2,000 institutions in more than 100 countries, including over 1,000 institutions in the United States alone, have licensed the application (16).

The goal of this report is to provide information on the implementation of ambulatory assessment using a database management system (REDCap) for clinical researchers. This paper will illustrate our team’s experiences and lessons learned in the implementation of REDCap to capture data remotely in a clinical trial for smoking cessation in-between scheduled weekly in-person clinic visits. We will provide information on design considerations, limitations, and areas for future development. A detailed step by step account of study set up in REDCap is provided in a technical Appendix. Though most of the functions we will discuss are embedded in REDCap, customized programming or additional software was necessary to create some specialized features.

Methods

Our research team used REDCap to augment in-person data collection during an 8-week clinical trial for smoking cessation (ClinicalTrials.gov Identifier: NCT02737358). Adult smokers were randomized to receive N-acetylcysteine or placebo in a double-blind fashion. All study activities were approved by the local Institutional Review Board and participants provided written consent prior to study activities being conducted.

Participants

Adherence and remote survey satisfaction data are presented for the first 36 participants randomized to treatment; however, some participants were still enrolled at the time of this writing, and their data are included only for phases of the study that they have fully completed. Medication video adherence monitoring was available for a sub-sample (n = 20), as it was added to the protocol later. The average age of the sample was 41.1 (SD = 12.7, Range = 23–62). The sample consisted of equal numbers of males (n = 18; 50.0%) and females. The sample was diverse in race (52.8% White/Caucasian; 41.7% Black/African American), education (61.1% had at least some college education), and marital status (33.3% married, 19.4% divorced/separated, 47.2% never married). The majority of participants were employed at least part-time (n = 27; 75.0%).

REDCap overview

REDCap provides a platform for developing online assessments to be completed remotely by participants by emailing or texting a survey link (patient-completed assessment). Those who administer REDCap at their institution are invited to participate in the REDCap consortium, where members can ask technical questions and receive feedback and suggestions for problem solving from other consortium partners. The application offers a variety of methods of data collection and links directly to a number of statistical packages. This manuscript is based on REDCap Version 6.16.3.

REDCap also serves as an integrated database for collecting and storing data through traditional methods, such as direct data entry from case report forms and in-office survey submissions from study participants, and exports all or selected data directly into a variety of statistical packages. However, REDCap has the capability to perform more complex functions, such as scheduling survey delivery to participants based on participant-specific inputs necessary for an ambulatory assessment study. REDCap allows for repeated administration of the same assessment (i.e., longitudinal design) a predetermined number of times, which is useful in ambulatory assessment studies when information is collected from participants over the course of several days or weeks, often multiple times per day. A single participant identifier can be used to link the data to other projects at the analysis stage. The types of REDCap assessments used in the current study are described in the next section. Additionally, considerations for when to schedule assessments are discussed.

Self-report assessments

REDCap provides access to a number of standardized self-report assessments through its Shared Library, as well as a variety of question types and formatting options to create customized instruments and facilitate data collection. Although building self-report questionnaires will not be discussed in detail here, it is important to note that a traditional, retrospective assessment might not be the best fit for most ambulatory assessment research designs for two reasons: (1) if participants will be asked to complete surveys during their daily routine, those surveys must be brief to minimize participant burden and maximize likelihood of completion; and (2) the psychometric properties of a retrospective questionnaire do not automatically translate to an intensive longitudinal design in which participants are often asked to recall only very recent behavior. Within- and between-individual psychometric properties of the measure should ideally be examined when validating ambulatory assessment measures (17). In our clinical trial, participants completed a daily morning log of past day substance use and smoking.

Image capture

Though not implemented in the current study, a researcher can provide the option for a participant to upload a file as part of a survey using REDCap’s “File Upload” field type. Participants completing the survey from any mobile device with camera-capabilities can upload a photograph directly from their camera. Researchers have employed this method in weight management research to estimate participants’ caloric consumption from photographs of their food (18). Other applications are possible. Participants may be asked to photograph the label on any alcoholic beverages they consume or photograph the results of a self-administered physiological test.

Video capture

Also using the “File Upload” field type, participants can upload videos from a mobile device with video camera capabilities. This feature may be particularly useful for remote monitoring of biomarkers or medication adherence. In our clinical trial, participants were asked to upload videos of themselves taking their medication twice a day, for the first 6 days of the study. Participants were also asked to record themselves leaving breath carbon monoxide (CO) samples at the same time as medication videos were taken to confirm their smoking status. Breath CO is an accurate measure of combustible cigarette use but requires frequent collection (2–3 times per day) for an accurate assessment of smoking status (19). Video capture of breath CO has been used by other research groups (on non-REDCap platforms) to confirm abstinence and requires loaning the participant a monitor for the duration of the study (20,21). Videos are immediately uploaded, or a previously recorded video can be accessed from the device’s video library. To ensure the integrity of the video, time extraction can be completed to assess when the video was taken. This can be done with free time-stamping software and can also be extracted through REDCap customized programming (22).

Assessment scheduling

Previous ambulatory assessment studies have utilized a number of sampling strategies to capture real-world behavior (5). For example, assessments can be time-based, meaning that they occur at fixed times of day (e.g., every morning at 8 AM for two weeks), event-based, meaning that they occur during or following a specific event (e.g., uploading video of self taking medication in a clinical trial), or random, meaning that assessments are completed following a prompt that is randomly delivered to the participant. The optimal sampling strategy depends on the research question. REDCap allows researchers to program when survey invitations (i.e., tailored messages with a link to the REDCap survey) are sent to research participants. Invitations can be sent out when certain criteria are met. For example, invitations may be restricted to certain subgroups of participants or certain days in the study. (See the technical Appendix for specific instructions.)

The most straightforward assessment schedule in REDCap is the time-based strategy. Researchers can specify a specific time of day that a survey should be sent or the amount of time that should elapse before another survey is sent after certain criteria are met. It is also possible to approximate a “random” survey schedule by programming multiple invitation schedules and randomly assigning each participant to a schedule at study initiation.
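The approximation of a “random” schedule described above can be sketched outside of REDCap as follows. This is only an illustration under stated assumptions: the schedule names, the send times, and the `assign_schedules` helper are hypothetical and are not part of REDCap or of our protocol. The idea is that several fixed invitation schedules are programmed in advance, and each participant is randomly allocated to one of them at study initiation.

```python
import random
from datetime import time

# Hypothetical pre-programmed invitation schedules (names and times are
# illustrative assumptions, not values used in the trial).
SCHEDULES = {
    "A": [time(9, 0), time(13, 30), time(19, 0)],
    "B": [time(10, 15), time(15, 0), time(20, 30)],
    "C": [time(8, 45), time(12, 0), time(17, 45)],
}


def assign_schedules(participant_ids, seed=None):
    """Randomly assign each participant to one of the fixed schedules."""
    rng = random.Random(seed)  # a seed makes the allocation reproducible
    names = sorted(SCHEDULES)
    return {pid: rng.choice(names) for pid in participant_ids}


assignments = assign_schedules(["P001", "P002", "P003"], seed=42)
for pid, name in assignments.items():
    print(pid, name, [t.strftime("%H:%M") for t in SCHEDULES[name]])
```

The resulting allocation could then be recorded in a staff-completed field so that invitation logic for each schedule applies only to the participants assigned to it.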

Researchers who require an event-based sampling strategy may not find REDCap suitable for their needs, but rather may require an app which allows participants to report an “event” (e.g., logging a cigarette, taking medication) at any time. The current alternative in REDCap is to schedule an event-based assessment that remains available until completed or expired, which may be a practical approach if the “events” occur regularly and infrequently, such as taking medication twice per day.

Interface with Twilio for text messaging

Study surveys created in REDCap can be sent directly to the participant via programmable SMS (text messaging) containing both a message from the researcher and the survey link. This is facilitated through the use of a third-party web service, Twilio. Confidentiality of participant responses is maintained; only phone numbers are temporarily logged on the Twilio site. Sending text messages through Twilio comes with a modest fee to purchase the phone number from which messages will be sent and to distribute each survey link by SMS. Sending surveys via a survey link requires the participant to have access to the internet on the mobile device. A separate account is created with Twilio, and account information is manually entered by the researcher into REDCap.

Twilio also provides an option for participants to be asked and respond to survey questions via text message or via a phone call. The advantage of these options is that a mobile device with internet capabilities is not required, as all responses are texted (for text message surveys) or spoken (for phone call surveys). However, additional security precautions are necessary to ensure confidentiality of participant responses.

Procedure

Throughout the duration of our trial (Days 1–57), participants were asked to complete a daily “Morning Report” in which they answered questions regarding past (calendar) day smoking, substance use, and medication adherence. During the first 6 days of the study, participants were asked to make a smoking quit attempt and complete assessments remotely via REDCap twice daily to: (a) biochemically verify smoking status via breath CO samples; (b) confirm medication adherence by uploading a video of themselves taking their medication; and (c) complete self-report measures designed to capture smoking, craving, withdrawal, and smoking satisfaction (if smoking occurred). On the day that participants were randomized to a treatment condition (Day 0), “test” surveys were sent to the participant’s mobile device to be completed in the laboratory while staff were available to answer questions. These “test” surveys allowed participants to practice completing medication and CO sample videos and allowed staff to verify that the participant had a compatible mobile device with all necessary functionality. In the event that participants did not own a compatible mobile device, one was provided to them. After study initiation, a lead-in period including morning report and CO sample videos was added between participant screening and randomization. The duration of this period varied based on scheduling. This lead-in period was added in order to (1) troubleshoot issues with video upload and (2) obtain more accurate estimates of ad-lib CO values. Lead-in data are not reported here.

Morning Reports (Days 1–57). This brief assessment was delivered via text message and/or email at 8 AM each morning. With customized programming to enhance REDCap’s basic functionality, our surveys expired 16 hours after survey delivery. To reduce participant burden and maximize flexibility for the participant, participants were given a 16-hour window to complete questions about their smoking, substance use, and medication adherence on the previous day. Survey expiration prevented the participant from being able to access the survey if they inadvertently clicked an older link, and ensured that recall of past day smoking, drug use, and medication dosing occurred no more than 24 hours after the day of interest.

CO Sample Videos/Medication Videos/Surveys (Days 1–6). The first daily assessment was delivered via text message at 8:15 each morning. As above, participants were given a 16-hour window to complete these assessments (e.g., CO sample video, medication video, surveys). Participants first completed surveys, then provided a video recording of themselves leaving a breath CO sample, being sure that the value on the breathalyzer was visible to the camera, and finally completed a video of themselves taking their medication. We allowed participants to record medication videos prior to when the morning survey link was available and upload at a later time. Research staff were then able to extract the timestamp from the video.

The second daily assessment was identical to the first and was programmed to be sent 8 hours after completion of the first assessment. If the first assessment was not completed, the second assessment was not sent. We opted to use this method to ensure that assessments on the same day were completed at least 8 hours apart. Given a 16-hour expiration window for the first survey, it was likely that a participant would have an available survey at any time during the day or night.
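The timing rules described above (a 16-hour expiration window and a second assessment sent 8 hours after completion of the first) can be illustrated with a short sketch. This is not the customized REDCap programming used in the trial; the function names and the example date are hypothetical, and the sketch assumes that a first assessment completed after expiration is treated as not completed.

```python
from datetime import datetime, timedelta

EXPIRY_WINDOW = timedelta(hours=16)  # surveys expire 16 hours after delivery
MIN_GAP = timedelta(hours=8)         # second assessment follows the first by 8 hours


def expires_at(sent_at):
    """Time at which a survey link stops being accessible."""
    return sent_at + EXPIRY_WINDOW


def second_send_time(first_sent_at, first_completed_at):
    """When to send the second assessment, or None if it should not be sent."""
    if first_completed_at is None:
        return None  # first assessment never completed
    if first_completed_at > expires_at(first_sent_at):
        return None  # assumption: a late completion does not count
    return first_completed_at + MIN_GAP


# Illustrative date only; the first assessment is delivered at 8:15.
first_sent = datetime(2017, 1, 2, 8, 15)
print(expires_at(first_sent))
print(second_send_time(first_sent, datetime(2017, 1, 2, 10, 0)))
print(second_send_time(first_sent, None))
```

Under these rules, a first assessment sent at 8:15 remains available until 0:15 the following day, and a completion at 10:00 triggers the second assessment at 18:00.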

Results

Feasibility of data collection

Of the 36 randomized participants, approximately half (n = 19; 52.8%) owned a compatible mobile device (Android or iOS operating system, with sufficient data/minutes available, processing speed, and functioning camera) and completed morning reports, surveys, and video uploads using their own device. The remaining participants (n = 17) were loaned an iPhone for at least the first week of the study (some were loaned the phone for study duration).

Morning report adherence data (n = 31)

Data are presented for the first 31 study participants to complete the study. There were five randomized participants currently enrolled at the time of this publication, and their data are not included. Of note, 23 out of 31 participants completed the 8-week trial, while 8 participants discontinued early (n = 6) or were lost to follow up (n = 2). Data include rates of adherence with morning reports while the participant was enrolled in the study. For participants lost to follow up, the number of expected morning reports was 64 (maximum possible days with one week visit window) for adherence purposes. Participants completed an average of 74.8% (SD = 30.2, Median = 86.2%, Range = 0–100%) of the morning report assessments. When only considering participants who were retained through study completion, the average completion rate was 86.6% (SD = 17.5, Median = 94.3%, Range = 27.4–100%).
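As an illustration of how the adherence rates above are defined (completed reports divided by expected reports for each participant, then summarized across participants), consider the following sketch. The participant IDs and counts are invented for the example and are not study data; only the denominator of 64 for a participant lost to follow up reflects the rule stated above.

```python
from statistics import mean, median


def adherence_percent(completed, expected):
    """Percentage of expected assessments that were completed."""
    if expected <= 0:
        raise ValueError("expected must be a positive number of assessments")
    return 100.0 * completed / expected


# Hypothetical (completed, expected) counts; NOT data from the trial.
counts = {
    "P001": (57, 57),  # completed every morning report
    "P002": (40, 57),
    "P003": (0, 64),   # lost to follow up: expected count fixed at 64
}

rates = {pid: adherence_percent(c, e) for pid, (c, e) in counts.items()}
summary = {
    "mean": mean(list(rates.values())),
    "median": median(list(rates.values())),
}
print(rates)
print(summary)
```

Computing a rate per participant before averaging keeps each participant equally weighted, regardless of how many days they were enrolled.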

Adherence data for the twice daily surveys/CO video samples (n = 36)

Results are presented for the first 36 randomized participants (5 participants were still enrolled in the study at the time of this report but completed days 1–6, and are included). Participants completed an average of 73.8% (SD = 30.7, Median = 83.3%, Range = 0–100%) of the remote surveys and CO video samples, which required an acceptable CO video completed at least 8 hours apart from other CO submissions.

Adherence data for the medication video samples (n = 20)

The medication adherence videos were added to the protocol after study initiation, so adherence is based on a sub-sample of 20 participants who have completed days 1–6 and were asked to upload medication videos. Participants submitted an average of 8.5 acceptable videos (out of a possible 12) which showed them taking the medication, for a mean acceptable medication video rate of 70.4% (SD = 27.6, Median = 75.0%). Videos were not counted if it was not clear to research staff that the medication had been ingested.

Patient satisfaction data (n = 19)

One month follow-up data on patient satisfaction was available for 19 participants to date. All participants reported moderate (10.5%) or strong (89.5%) agreement with the statement that the “surveys, videos, and morning reports were easy to understand/use.” When asked if they would have preferred paper and pencil assessments for the morning report assessments, most participants reported moderate (n = 1; 5.3%) or strong disagreement (n = 14; 73.7%). However, the remaining four participants strongly preferred paper-based surveys (21.1%), suggesting that participants had clear and strong preferences for electronic or paper-based surveys. Finally, participants differed on how much the completion of morning reports, videos, and surveys impacted their daily routine, with 36.9% reporting that their routine was altered by the assessments.

Overall, most participants felt that monitoring their CO level was helpful during their quit attempt (n = 18, 89.5%). Though medication videos were added to the protocol later, nine participants provided feedback on medication videos at one month follow-up. All participants (n = 9; 100%) reported that the medication videos were easy to complete, that the medication videos kept them on a consistent medication schedule, and that the text messages also served to remind them to take their medication. These results should be interpreted with caution as those who were lost to follow up did not provide feedback.

Discussion

In this illustration of REDCap within the context of a pharmacotherapy trial for smoking cessation, frequent assessments and confirmation of smoking status are possible in the critical first week of the study through ambulatory assessment methods while still maintaining in-person clinic visits throughout the study. Adherence rates were comparable to other ambulatory assessment protocols with varying samples of smokers and study designs (23–24), though some studies have obtained adherence rates above 80% (25–28). Adherence with the medication video required both medication ingestion and submission of a video, which is a fairly high threshold. An estimated 12–40% of participants in clinical trials show no evidence of medication adherence via biochemical verification at 50% or more of their clinic visits (14). Our sample was comparable, as 20% of participants had less than 50% adherence with the medication videos. Without a control group, however, it is unclear whether the act of submitting medication videos regularly increased medication adherence.

Participants were largely satisfied with REDCap, stating that the surveys were easy to complete and preferred over paper and pencil assessments. However, participants did vary on how much the completion of assessments impacted their daily routine. In most ambulatory assessment studies, the goal is to monitor behavior as unobtrusively as possible. However, in the context of a clinical trial, improved medication adherence and abstinence as a result of medication adherence and CO monitoring is desirable. Thus, this is not necessarily a limitation in the context of the current study. The sample was diverse in age, race, and education, suggesting feasibility across a wide range of individuals.

Despite advantages of using REDCap for data collection, there are a number of limitations that researchers must consider. REDCap may not be a viable option for investigators at institutions without a current license.

At least for the video monitoring portion of our study, access to a smartphone was required for data collection. It is possible to send survey links from REDCap to a participant email address, circumventing the need for Twilio or a mobile device. However, participants would need reliable and consistent access to the internet, and the “real-time” nature of data collection may be diminished if this access was limited to times that a participant was at a computer. Also, certain features, such as video recording, may be significantly more complex and require additional hardware and software to be available on the computer. Though an added expense for researchers, providing a compatible mobile device to participants for the duration of the study may be a reliable alternative. We had only one unreturned mobile device in our trial, though in a larger scale trial researchers should budget for at least some lost study phones. However, because paper and pencil assessments are not equivalent to real-time, electronic assessments (29), the use of loaner devices is strongly favored over use of paper and pencil diaries when feasible.

Additionally, REDCap is not as dynamic as stand alone software or mobile applications, making truly random survey administration challenging. This platform may not be as flexible for delivering interventions “in-the-moment” when participants are in times or contexts of greatest need. Video capture is also not as seamless as it could be if programmed through a stand-alone application. REDCap requires several steps for file upload, and some participants struggled with recording and upload which required additional staff oversight and training. The additional burden placed on staff is a trade-off for recruiting a more diverse population for inclusion. We also believe the advantages of real-time or near real-time data offset the disadvantages of additional staff burden.

Though applicable to all mobile-phone administered surveys, some participants in our clinical trial strongly preferred paper and pencil assessments to phone-based assessments. However, this represented a minority of the sample to date in the current clinical trial. As clinical trials begin to implement remote assessments, it will be important to ensure that technologically-inclined participants are not overly represented in clinical trials, potentially skewing results. If low socioeconomic status and older age are related to non-retention in technology-enhanced trials, results may not generalize to these populations. Those with limited smartphone experience and discomfort with technology may require additional training and explanation, but they must not be unnecessarily excluded from trials. Because this trial also included in-person visits, we were able to troubleshoot issues and provide participants with additional training/feedback as needed. This may not be possible for a completely remote trial.

In conclusion, investigators may already have access to a cost-effective option at their institutions for implementing ambulatory assessment via REDCap. Much of the functionality necessary to implement an ambulatory assessment design is available within REDCap itself, including survey scheduling, longitudinal survey designs, branching options, security features, and interface with a text messaging provider. REDCap may be particularly useful when investigators do not have the budget or time for app development. It may also be useful for pilot trials and for adding brief ambulatory assessment protocols to collect additional outcome data in a clinical trial.

Though some studies may still require more sophisticated, specialized software, REDCap provides sufficient functionality for many ambulatory assessment projects and may supplement data collection for largely in-person studies. Future developments to REDCap will likely continue to increase the ease of self-programming an ambulatory assessment study and may also allow for more sophisticated survey scheduling designs.

Acknowledgments

The authors would like to thank John Clark and Erin Quigley for programming enhanced REDCap features for the purpose of this clinical trial, including survey expiration (prior to REDCap upgrades) and automatic timestamp extraction from video uploads.

Funding

The authors wish to acknowledge funding sources for this manuscript. Funding for this study was provided by NIDA grant R34 DA042228 (McClure), pilot research funding from the Hollings Cancer Center’s Cancer Center Support Grant P30 CA138313 at the Medical University of South Carolina, and pilot research funding from the Department of Psychiatry and Behavioral Sciences at the Medical University of South Carolina. Effort in preparing this work was supported by NIDA grant K01 DA036739 (McClure), NIDA grant R01 DA038700 (Froeliger, Gray, Kalivas), NIDA grant R01 DA042114 (Gray), NIDA Grant UG3 DA043231 (McRae-Clark, Gray), NIDA grant U01 DA031779 (Gray), and NIAAA grant T32 AA007474 (Woodward). Additional funding and support was provided by the South Carolina Clinical and Translational Institute at the Medical University of South Carolina (UL1 TR001450).

Appendix. A Technical “How To” Guide for Creating an Ambulatory Assessment Study in REDCap

This step-by-step guide for developing an ambulatory assessment study in REDCap is ideal for those with some basic knowledge of REDCap functionality (e.g., creating a study, adding instruments). For those new to REDCap, we recommend accessing the REDCap website for a number of freely available video tutorials to get you started (https://projectredcap.org/resources/videos/). Once you have created a new study and have added your instruments, you are ready to add the ambulatory assessment specific features as detailed below.

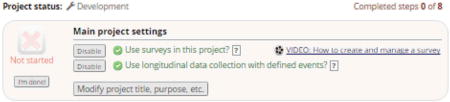

Enable project-level settings under Project Setup tab.

Under “Main project settings”, enable both “Use surveys in this project” and “Use longitudinal data collection with defined events”. Enabling use of surveys allows for collection of participant-entered data. Enabling longitudinal data collection adds a new section under Project Setup for “Define your events and designate instruments for them” (discussed in more detail later).

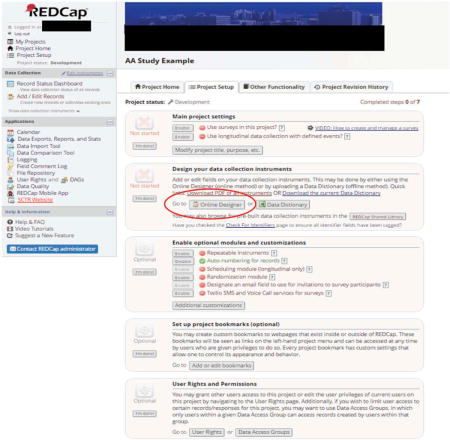

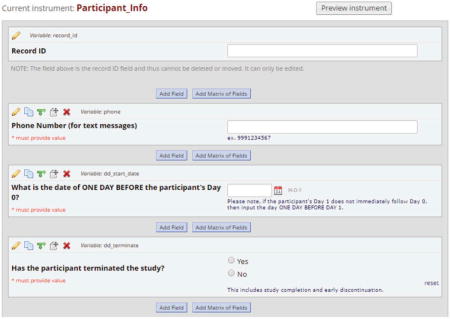

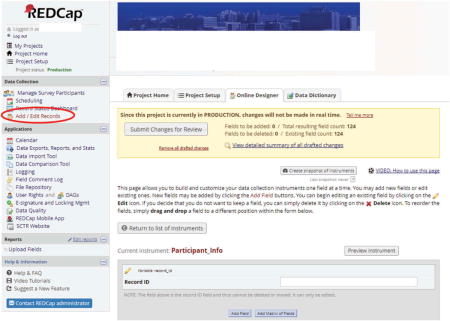

Create a “Participant Information” form in the Online Designer

We recommend that the first data collection instrument be a general participant information form to be completed by the research staff (not the participant). You can rename the “My First Instrument” form that automatically appears in REDCap for this purpose. For example, your “Participant Info” form may contain:

1. Participant ID number (a unique study identifier, which must always be the first field in the first instrument in a REDCap database)
2. Participant email and phone number (to receive survey links via email and/or text message)
3. Participant study start date
4. A field to update participant study status as they advance through the study, with options such as “enrolled”, “completed”, or “discontinued”

The latter two fields (#3 and #4) are used as part of the Automated Survey Invitation logic to determine whether or not an assessment is sent to a specific participant and when the survey is sent (see following details).
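As an illustration only, the fields listed above can be pictured as a simple record. The sketch below is written in Python rather than in REDCap itself; the field names, status codes, and example values are hypothetical and are not taken from the study database. It shows how the start date and status fields yield the two quantities that the invitation logic relies on: whether the participant is still enrolled and how many days they have been in the study.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical numeric codes for the status field; the guide names only the labels.
STATUS_CHOICES = {0: "enrolled", 1: "completed", 2: "discontinued"}


@dataclass
class ParticipantInfo:
    """Staff-entered record mirroring the example 'Participant Info' form."""
    participant_id: str       # unique study identifier (first field of first instrument)
    email: Optional[str]      # destination for emailed survey links
    phone: Optional[str]      # destination for text-message survey links
    start_date: date          # participant study start date
    status: int = 0           # key into STATUS_CHOICES

    def is_enrolled(self) -> bool:
        """True while the participant has neither completed nor discontinued."""
        return STATUS_CHOICES[self.status] == "enrolled"

    def days_in_study(self, today: date) -> int:
        """Whole days elapsed between the start date and 'today'."""
        return (today - self.start_date).days


# Made-up example participant
p = ParticipantInfo("P001", "p001@example.org", None, date(2017, 3, 6))
print(p.is_enrolled(), p.days_in_study(date(2017, 3, 9)))
```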


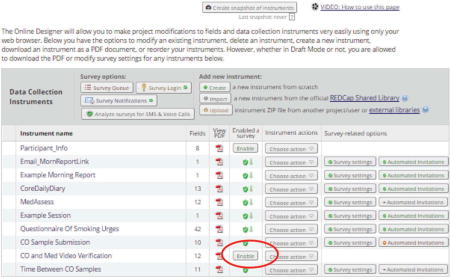

“Enable” Data Collection Instruments as Surveys

Each instrument that participants will complete as a survey must be “enabled” as a survey on the Online Designer page.

Once an instrument has been enabled as a survey, you can customize its look and functionality from the Survey Settings button located on the same row in the Online Designer page table. In the example above, for all forms except the last in each assessment set, we selected “auto-continue to the next survey” under survey settings. Auto-continuing allows the researcher to send out a survey invitation for only the first instrument. After completion of the first instrument, the next instrument will automatically appear for the participant to complete.

Alternatively, we could have included all assessment fields in one instrument with section headers for organization. We chose to break up the assessments into separate instruments to facilitate data export for analysis and to provide natural page breaks for the surveys completed by participants. Page breaks are especially important for ease of use when surveys are completed on mobile devices.

Note. Survey expiration is now also available in Version 7.3.4 of REDCap under Survey Settings.


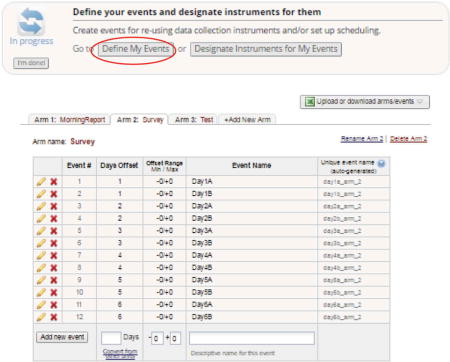

Select “Define My Events” under the Project Setup tab to create an “event”

An “event” is any instance where an assessment is to be administered. For example, to send out a morning and evening assessment every day for one week, 14 “events” are needed. Different study arms can have the same or different schedules for “events”.
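The event count in this example follows from multiplying the number of study days by the number of assessments per day. The short Python sketch below enumerates the events for the one-week, twice-daily schedule; the event naming scheme is a hypothetical convention chosen for illustration, not a REDCap requirement.

```python
def build_events(n_days, assessments):
    """Return one event name per assessment per study day."""
    return [
        f"day{day}_{name}"
        for day in range(1, n_days + 1)
        for name in assessments
    ]


# One week of morning and evening assessments
events = build_events(7, ["morning", "evening"])
print(len(events))   # 14
print(events[:2])    # ['day1_morning', 'day1_evening']
```

Listing the events this way before entering them in “Define My Events” can help confirm that the schedule for each study arm is complete.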


Select “Designate Instruments for My Events” to choose which instruments are administered at each “event”

Assign each instrument to one or more events as appropriate. Surveys that auto-continue to the next instrument are denoted by a green, downward-facing arrow, as shown in the figure below. Instruments that do not automatically continue to the next are denoted by a green check mark.


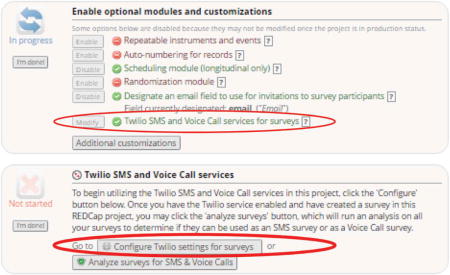

If using Twilio, link your Twilio account

If using Twilio SMS for text message routing, be sure to enable and configure Twilio settings. This requires creating a separate Twilio account, which charges a modest fee per text message. You will need to enter specific information from your Twilio account into REDCap where prompted to enable the services.

Twilio settings for surveys will also need to be configured to let REDCap know which field is your designated phone number field and to enable SMS text messaging via survey links (other options are available but beyond the scope of this guide).


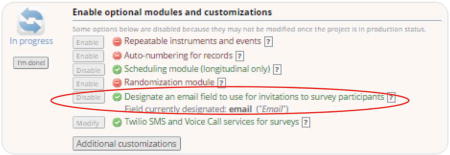

If sending surveys via email, you will also need to designate an email field.

Since our study’s first instrument, “Participant Information”, was not enabled as a survey, we needed to create a text field validated to “email” format to collect participant emails. That field was then designated as the source for the email list used to distribute surveys.


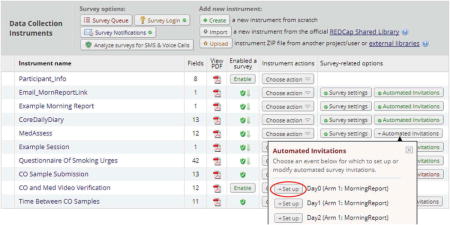

To schedule when assessments should be sent to participants, select the “Automated Invitations” button on the Online Designer page associated with the first survey. A list of events to which the survey has been assigned will appear.

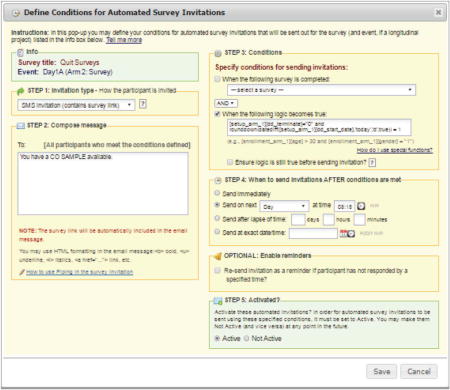

Select “Set up” for each event for which you wish to schedule an automated survey invitation. As shown in the figure below, several scheduling options are included.

- Step 1: Invitation type – Select the method by which the survey will be sent, e.g., text or phone (options will only appear if email and phone fields have been enabled).

- Step 2: Compose message – Compose the actual text to be included in the invitation. The survey link will automatically appear below the researcher’s message.

-

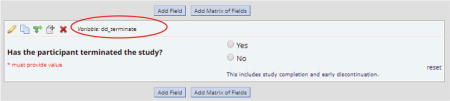

Step 3: Conditions – Specify the conditions for scheduling the survey. In our example, this is the step where information from the “Participant Info” instrument becomes part of the logic to determine whether a survey should be scheduled. As shown in the figure below, we set up a binary variable called “dd_terminate” to indicate whether the participant had completed or discontinued the study. While the participant was enrolled in the study, this variable was set to 0 (“No”). When the participant discontinued or completed the study, the variable was changed to 1 (“Yes”). The following logic ensured that participants were still enrolled prior to scheduling an invitation for a particular survey/event.

We also included logic to determine how many days the participant had been enrolled in the study in order to send the correct event’s survey to a participant. If the difference between the participant’s start date and the current date was equal to a predetermined value, the appropriate survey for that particular day would be scheduled.

- Step 4: When to send invitations AFTER conditions are met – Indicate when the invitation is to be sent once the conditions established in Step 3 have been met. In our time-based example, the first survey is made available every day at 8:15 AM. Of note, an “ensure logic is still true before sending invitation” feature is available in Step 3. This feature can be helpful in cases where a survey is scheduled but a variable is then changed so that the logic is no longer “true”. In our case, surveys were scheduled to be delivered the day after the logic became true. If the “ensure logic is still true” option were selected, the date difference would no longer be “true” on the next day, which would cause the invitation to be cancelled.

- Step 5: Activated? – When the conditions from Step 3 are met, survey invitations will begin to be scheduled according to Step 4. This feature allows you to set up your invitation schedule for a survey while developing and testing your database without having them actually scheduled and sent.
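The interaction between the Step 3 conditions and the Step 4 timing can be easier to follow as a small model. The Python sketch below is not REDCap logic syntax and does not reproduce REDCap's internal scheduler; it is a simplified illustration under stated assumptions. Only “dd_terminate” is a variable name from our database; the function names, the target day, and the dates are hypothetical. The model treats the conditions as “still enrolled AND days since start date equals a predetermined value”, and delivers the invitation on the day after the conditions first become true.

```python
from datetime import date, timedelta


def conditions_met(dd_terminate, start_date, today, target_day):
    """Step 3 model: participant still enrolled and on the expected study day."""
    still_enrolled = dd_terminate == 0           # 0 = "No", 1 = "Yes" (terminated)
    days_enrolled = (today - start_date).days    # date difference in whole days
    return still_enrolled and days_enrolled == target_day


def invitation_sent(dd_terminate, start_date, evaluated_on, target_day,
                    recheck_before_sending):
    """Step 4 model: the invitation is delivered the day after conditions are met.

    If recheck_before_sending is True, the same conditions are evaluated again
    on the delivery day, mimicking the "ensure logic is still true" option.
    """
    if not conditions_met(dd_terminate, start_date, evaluated_on, target_day):
        return False                             # never scheduled
    send_day = evaluated_on + timedelta(days=1)
    if recheck_before_sending:
        return conditions_met(dd_terminate, start_date, send_day, target_day)
    return True


start = date(2017, 3, 6)                         # made-up start date
trigger_day = start + timedelta(days=3)          # day on which the date difference equals 3

print(invitation_sent(0, start, trigger_day, 3, recheck_before_sending=False))  # True
print(invitation_sent(0, start, trigger_day, 3, recheck_before_sending=True))   # False
print(invitation_sent(1, start, trigger_day, 3, recheck_before_sending=False))  # False
```

In this model, the second call returns False because the date difference equals the target only on the day the conditions are evaluated; by the delivery day it has advanced by one, so the re-check fails. This mirrors why the re-check option was left unselected for a schedule of this kind.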

- Test your database and automated invitation schedule


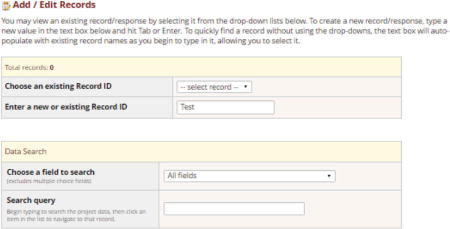

- Create a test participant by selecting “Add/Edit Records” from the menu on the left-hand side of the Online Designer.

- Enter the Participant ID number for the test participant in “Enter a new or existing Record ID.”

- Creating a new record will display the record’s grid, which contains a copy of each instrument/survey designated to its appropriate event(s) as established on the “Designate Instruments for My Events” page. Select the gray circle icon adjacent to the first instrument (in our example, “Participant Info”) to enter the participant information.

- Select “Manage Survey Participants” from the menu on the left-hand side of the REDCap screen. Then select the “Survey Invitation Log” tab to verify that any survey invitations that should have been scheduled already appear in the log. Not all invitations will be scheduled immediately, because an invitation is not scheduled until the conditions for its Automated Survey Invitation are met.

Footnotes

For extra security, a participant password for accessing their specific surveys can be created, and this would also be stored as a field on this form (the “Survey Login” feature is optional, but requires that the password be stored in the participant record).

Declaration of interest

The authors have no conflicting interests to report.
