Abstract

Background

Subject motion in PET studies leads to image blurring and artifacts; simultaneously acquired MR data provide a means for motion correction (MC) in integrated PET/MRI scanners.

Purpose

To assess the effect of realistic head motion and MR-based MC on static FDG PET images in dementia patients.

Study type

Observational study

Population

30 dementia subjects were recruited.

Field strength/ sequence

3T hybrid PET/MR scanner where EPI-based and T1-weighted MPRAGE sequences were acquired simultaneously with the PET data.

Assessment

Head motion parameters estimated from higher temporal resolution MR volumes were used for PET MC. The MR-based MC method was compared to PET frame-based MC methods in which motion parameters were estimated by co-registering five-minute frames before and after accounting for the attenuation-emission mismatch. The relative changes in standardized uptake value ratios (SUVRs) between the PET volumes processed with the various MC methods, without MC, and the PET volumes with simulated motion were compared in relevant brain regions.

Statistical tests

The absolute value of the regional SUVR relative change was assessed with pair-wise paired t-tests at the p=0.05 level, comparing the values obtained through different MR-based MC processing methods as well as across different motion groups. The intra-region voxel-wise variability of regional SUVRs obtained through different MR-based MC processing methods was also assessed with pair-wise paired t-tests at the p=0.05 level.

Results

MC had a greater impact on PET data quantification in subjects with larger amplitude motion (higher than 18% in the medial orbitofrontal cortex) and greater changes were generally observed for the MR-based MC method compared to the frame-based methods. Furthermore, a mean relative change of ~4% was observed after MC even at the group level, suggesting the importance of routinely applying this correction. The intra-region voxel-wise variability of regional SUVRs was also decreased using MR-based MC. All comparisons were significant at the p=0.05 level.

Conclusion

Incorporating temporally correlated MR data to account for intra-frame motion has a positive impact on the FDG PET image quality and data quantification in dementia patients.

Keywords: PET/MRI, motion correction, dementia, simultaneous imaging

INTRODUCTION

Advances in recent years have led to hardware that allows simultaneous positron emission tomography (PET) and magnetic resonance imaging (MRI) in humans (1,2). This hybrid imaging modality has opened up opportunities for research and clinical applications (3). In the investigation of dementia, the two modalities provide complementary information and are already commonly used both for excluding other diseases causing cognitive impairment and for dementia subtype classification (4–6). For example, combining separately acquired PET measurements of the cerebral metabolic rate of glucose utilization using [18F]-fluorodeoxyglucose (FDG) with MR-derived brain atrophy measures predicted cognitive decline and conversion to Alzheimer’s disease (AD) in patients with mild cognitive impairment (MCI) more accurately than cerebrospinal fluid sampling or clinical biomarkers alone (7–9); combining both imaging biomarkers also improved the accuracy of differentiating either AD or MCI patients from healthy controls compared with either modality alone (10). Apart from simultaneous data acquisition, an additional benefit of hybrid PET/MRI scanners is that the information obtained from simultaneous MR acquisitions can be used to correct for non-ideal PET effects such as photon attenuation, partial volume effects, and subject motion (11–15).

As PET data acquisition lasts on the order of tens of minutes, subject motion is inevitable (16) and introduces blurring and image quality degradation. While the visual interpretation of static PET images is usually not severely compromised by motion (17), the resulting inaccurate quantification could lead to bias and increased variability when conducting group studies (16). Although dementia patients do not invariably move more than healthy subjects, a greater proportion of them are prone to motion (17). As a result, this population is more susceptible to motion-related effects, leading to decreased PET image quality and quantitative accuracy (3,17). Consequently, applying some form of motion correction (MC) is required in PET studies (17).

Various methods have been devised to cope with subject motion, ranging from those aimed at preventing motion using head restraints (not particularly generalizable because they may be uncomfortable and do not completely restrain the subject (18)) to those trying to compensate for subject motion. The first group in this second category consists of image-based methods, or frame-based MC (FBMC), in which the head motion is estimated by co-registering the reconstructed PET images to a reference position (19–21). FBMC has been designated as the MC method of choice in large, multicenter studies (e.g., the AD Neuroimaging Initiative: ADNI, http://adni.loni.usc.edu/methods/pet-analysis/pre-processing and the Japanese ADNI: J-ADNI (17)). Nevertheless, simulation studies have shown that FBMC methods are reliable only when intra-frame motion is less than 5 mm (22), as they cannot compensate for the motion present within each frame, and the co-registration of images generated from short, low-statistics frames is more susceptible to errors (21). Another group of methods, known as event-based methods, manipulates the raw list-mode PET data such that the coincidence events are repositioned in line of response (LOR) space to resemble an acquisition in the absence of motion (23–25). This approach requires the motion to be estimated during the scan, either with an external motion tracking system (e.g., an optical system with cameras and/or markers (18,26)) or from a different imaging modality such as MRI (12,27), as in the case of integrated PET/MRI devices.

Proof-of-principle studies have been performed previously, demonstrating the feasibility of MR-based MC (MRMC) (12,27). However, those studies only involved a limited number of healthy volunteers, and the motion was mainly represented by large voluntary positional changes of the head. To the best of our knowledge, the impact of MRMC has not previously been assessed in dementia patients exhibiting realistic motion (as opposed to sudden head positional changes). Thus, in this project we aimed to assess the effect of realistic head motion on static FDG PET images in dementia patients and to compare the effects of MRMC with those of the commonly used FBMC method.

MATERIALS AND METHODS

MR and PET Data Acquisition

Thirty subjects (Table 1) with either MCI (n = 2), AD (n = 18), or frontotemporal dementia (FTD, n = 10) were included in this study. All patients exhibited a mild level of cognitive impairment or dementia symptoms (i.e., a Clinical Dementia Rating of 1 or less). Written informed consent was obtained from all participants or an authorized surrogate decision-maker. The Institutional Human Research Committee approved the study. The patients were scanned in the supine position with no restraints other than the foam pads routinely placed around the head for MR examinations. The PET data were acquired on a prototype MR-compatible brain PET scanner (“BrainPET”) designed to fit inside the MAGNETOM Trio 3T MRI scanner (Siemens Healthcare, Erlangen, Germany). Approximately 5 mCi (~185 MBq) of FDG was administered shortly after initiation of the MR acquisition, and PET data were acquired in list-mode format for 70 minutes. The data collected from 50–70 minutes were analyzed in this study. Resting-state functional MRI (fMRI) data were acquired simultaneously during most of this time interval using an echo-planar imaging (EPI) based sequence (TE = 30 ms, TR = 2000 ms, slice thickness = 3 mm).

Table 1.

Subjects scanned in this study

| Group | Number |
|---|---|
| Total | 30 |
| Male | 18 |
| Female | 12 |
| Alzheimer’s Disease (AD) | 18 |
| Typical AD | 8 |
| Posterior Cortical Atrophy | 2 |
| Logopenic Primary Progressive Aphasia | 8 |
| Frontotemporal Lobar Degeneration (FTD) | 10 |
| Behavioral Variant FTD | 5 |
| Semantic Dementia | 4 |
| Progressive Nonfluent Aphasia | 1 |
| Amnestic Mild Cognitive Impairment (MCI) | 2 |

For generating the PET data without MC (PETNoMC), the 20-minute list mode data were split into four 5-minute blocks. The corresponding volumes were reconstructed using the 3D ordered-subsets expectation maximization (OSEM) algorithm (28,29), accounting for random coincidences (30), detector sensitivity, and scatter (31). Attenuation correction was carried out using an MR-based method (15) to generate an attenuation map (μ-map) from the T1-weighted MR image (sequence acquisition parameters: TE = 1.52 ms, TR = 2200 ms, reconstruction matrix size = 256×256×256, total acquisition time = 8 min 24 sec, with motion navigators incorporated for real-time MR MC (32)). The volume obtained with this sequence (run before the fMRI) was set as the “reference position” for PET MC. The μ-map of the MR radiofrequency coil was combined with the head μ-map. The final reconstructed PET volumes consisted of 153 slices with 256×256 voxels, 1.25 mm isotropic; all subsequent analyses were performed in the PET space. The volumes corresponding to the four 5-minute frames were simply averaged to obtain the non-motion-corrected 20-minute static frame. The MC methods are described in detail in the following sub-sections.

Region-based analyses were carried out with anatomical labels derived from the T1-weighted MR image using the FreeSurfer software. The cortex was parcellated into 70 regions according to the Desikan-Killiany atlas, and the subcortical regions, cerebellum, and brainstem (hereafter referred to as the “subcortical regions”) were segmented into 41 regions. Of these segmentations and parcellations, 28 representative regions, with mean sizes ranging from 1.25±0.25 cm³ in the left amygdala to 11.62±2.67 cm³ in the right inferior parietal cortex (a complete list of region sizes is provided in supplementary data Table S1), were selected for further analysis.

MR-assisted Motion Estimation and Correction

Motion estimates derived from the fMRI raw data were used to correct the PET data before image reconstruction (12). The original EPI volumes (not those prospectively motion corrected) were used to estimate the true position of the subject’s head in the scanner. The subsequent volumes were registered to the first EPI volume in SPM8 using a 6-degree-of-freedom rigid-body transformation with normalized mutual information as the cost function. Six motion parameters (3 rotations about and 3 translations along the main axes) characterized the motion at each time point. For times during the scan when fMRI data were not acquired (e.g., during the acquisition of the T1-weighted sequence, which on average occupied 10.81% of each subject’s total PET acquisition time; detailed acquisition times are provided in supplementary data Table S2), the motion parameters were interpolated using piecewise cubic polynomials. To remove high-frequency components and to obtain motion estimates for the whole duration of the scan, the motion parameters were median filtered in successive 20-second blocks twice, before and after interpolation (shown in Figure 1 for a representative subject).

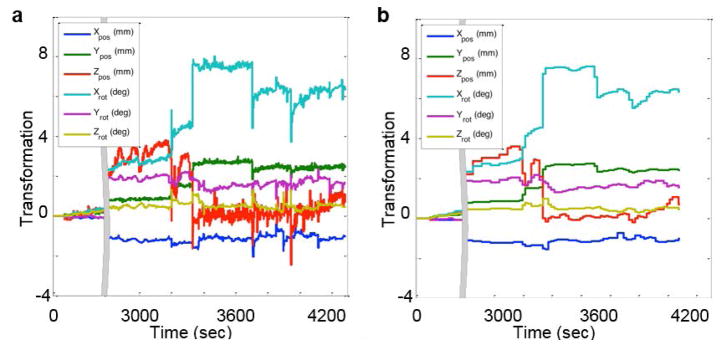

Fig. 1.

Transformation parameters of a representative subject derived from MR-assisted motion estimation. The left panel (A) plots the raw parameters and the right (B) plots the parameters after median filtering and interpolation. The 6 curves denote the 6 transformation parameters (translations and rotations in the x, y, and z axes)
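The filtering and gap-filling steps described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the implementation used in this work: it reads "median filtered in successive 20-second blocks" as taking the per-parameter median within each non-overlapping 20-s block (which gives 60 estimates per 20-minute frame), it uses SciPy's shape-preserving `PchipInterpolator` as one possible choice of piecewise cubic polynomial, and it assumes times in seconds measured from the start of the 20-minute window. The function names are hypothetical.

```python
import numpy as np
from scipy.interpolate import PchipInterpolator


def block_medians(t, params, t_end=1200.0, block=20.0):
    """Median of each motion parameter within successive non-overlapping blocks.

    t      : (N,) sample times in seconds (e.g. EPI volume times).
    params : (N, 6) rigid-body parameters (3 translations, 3 rotations).
    Returns block centres (B,) and medians (B, 6); blocks without samples are NaN.
    """
    n_blocks = int(round(t_end / block))
    edges = np.linspace(0.0, t_end, n_blocks + 1)
    centres = 0.5 * (edges[:-1] + edges[1:])
    medians = np.full((n_blocks, params.shape[1]), np.nan)
    for k in range(n_blocks):
        in_block = (t >= edges[k]) & (t < edges[k + 1])
        if in_block.any():
            medians[k] = np.median(params[in_block], axis=0)
    return centres, medians


def filter_and_interpolate(t, params, t_end=1200.0, block=20.0, dt=2.0):
    """Median filter, fill gaps (e.g. during the T1-weighted scan), median filter again."""
    # Pass 1: block medians of the raw estimates; blocks without fMRI data stay NaN.
    centres, med1 = block_medians(t, params, t_end, block)
    valid = ~np.isnan(med1).any(axis=1)
    # Piecewise cubic (PCHIP) interpolation onto a regular grid covering the whole window.
    t_grid = np.arange(0.0, t_end, dt)
    filled = PchipInterpolator(centres[valid], med1[valid], axis=0)(t_grid)
    # Pass 2: block medians of the gap-free curve, one transform per 20-s sub-frame.
    _, med2 = block_medians(t_grid, filled, t_end, block)
    return med2
```

With EPI volumes every 2 s (TR = 2000 ms) and a gap where no fMRI data were acquired, the returned array has shape (60, 6), one rigid-body estimate per 20-s sub-frame. PCHIP was chosen here because it does not overshoot between samples; a cubic spline would be an equally valid reading of "piecewise cubic polynomials".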

To qualitatively assess the overall motion of a subject, a 21×21×21-voxel cube centered at (50,50,50) of the image matrix was placed in the image space and transformed according to the estimated 6 transformation parameters at any given time point. The Euclidean distances between the 8 pairs of corresponding vertices before ($\mathbf{a}_i$) and after ($\mathbf{a}_i'$) transformation were summed to provide a motion magnitude metric (M) at each time point during the scan:

$$M = \sum_{i=1}^{8} \left\lVert \mathbf{a}_i' - \mathbf{a}_i \right\rVert_2 \tag{1}$$

These values were integrated over the 20 minutes to obtain the total aggregate motion. The 6 subjects with the highest aggregate motion (top quintile) and 6 with the lowest aggregate motion (bottom quintile) out of the 30 subjects were identified.
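A sketch of the motion magnitude metric of Equation 1, its integration over the 20-minute frame, and the selection of the extreme quintiles is given below. It assumes, hypothetically, that rotations (in radians) are applied about the image-matrix origin in the order Rz·Ry·Rx and that translations share the units of the vertex coordinates; registration packages, including SPM, use their own conventions, so these choices are illustrative only. All function names are hypothetical.

```python
import numpy as np
from itertools import product


def rotation(rx, ry, rz):
    """Rotation matrix for angles in radians, composed as Rz @ Ry @ Rx (assumed convention)."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    rot_x = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    rot_y = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    rot_z = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return rot_z @ rot_y @ rot_x


def cube_vertices(centre=(50, 50, 50), size=21):
    """The 8 vertices of a size^3-voxel cube centred at `centre` (voxel coordinates)."""
    half = (size - 1) / 2.0
    return np.array([[c + s * half for c, s in zip(centre, signs)]
                     for signs in product((-1, 1), repeat=3)], dtype=float)


def motion_magnitude(params, vertices):
    """Eq. (1): sum of the Euclidean displacements of the cube vertices."""
    tx, ty, tz, rx, ry, rz = params
    moved = vertices @ rotation(rx, ry, rz).T + np.array([tx, ty, tz])
    return np.linalg.norm(moved - vertices, axis=1).sum()


def aggregate_motion(m, dt):
    """Trapezoidal integral of M(t) over the frame, for samples spaced dt apart."""
    m = np.asarray(m, dtype=float)
    return dt * (m[1:] + m[:-1]).sum() / 2.0


def extreme_quintiles(aggregates):
    """Indices of the lowest and highest fifth of subjects (6 each for 30 subjects)."""
    order = np.argsort(aggregates)
    k = len(aggregates) // 5
    return order[:k], order[-k:]
```

A pure translation of 1 unit moves every vertex by 1, so M = 8; rotations contribute more the farther the cube lies from the assumed rotation centre, which is why the choice of centre matters when comparing values across software.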

The algorithm for performing the PET MC was previously described (12). Briefly, the 20 minutes of raw PET data were divided into four 5-minute blocks (same duration as that used for the image-based methods described in the next section and similar to the ADNI data processing protocol) that were subdivided into multiple 20-second sub-frames and transformed individually using the filtered motion estimates. The corresponding LORs were moved by applying the inverse transformation of the motion estimates and the data were binned into sinograms. The μ-map of the head was derived in the reference position; the hardware was “moved” with the scanner for sensitivity and attenuation correction. During scatter correction, a time-weighted average of the sub-frame coil sinograms was added to the μ-maps for scatter estimation and for final reconstruction of the whole motion-corrected image frame. The volume corresponding to the 20-minute acquisition (PETMRMC) was produced by averaging the four 5-minute volumes reconstructed with corrected LOR data. Co-registration to the T1-weighted MR volume in reference position was performed to ensure all PET volumes were affected equally by voxel interpolation.
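The core geometric step, moving each LOR by the inverse of the estimated head motion, can be sketched as below. This is a simplified illustration, not the implementation of (12): it assumes that LORs are represented by their two detector end-points in scanner coordinates (mm) and that the motion of each 20-s sub-frame is a 4×4 rigid-body matrix mapping reference-position coordinates to the head position during that sub-frame. Re-binning the repositioned LORs into sinograms (they generally no longer coincide with physical detector pairs) and the sensitivity and attenuation handling are omitted. The function names are hypothetical.

```python
import numpy as np


def rigid_matrix(rot, trans):
    """4x4 homogeneous matrix for x -> rot @ x + trans."""
    m = np.eye(4)
    m[:3, :3] = rot
    m[:3, 3] = trans
    return m


def reposition_lors(p1, p2, motion):
    """Apply the inverse of `motion` to both end-points of each LOR.

    p1, p2 : (N, 3) end-point coordinates of N LORs detected in one sub-frame.
    motion : (4, 4) rigid transform from the reference to the moved head position.
    """
    inv = np.linalg.inv(motion)

    def apply(points):
        # Append a homogeneous coordinate, transform, and drop it again.
        homog = np.c_[points, np.ones(len(points))]
        return (homog @ inv.T)[:, :3]

    return apply(p1), apply(p2)
```

Because the transform is rigid, LOR lengths are preserved; only the position and orientation of each line change.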

Frame-based Motion Correction

For comparison purposes, two FBMC methods were also applied: FBMC with a single-pass reconstruction (PETFBMC-SP) and FBMC with a two-pass reconstruction (PETFBMC-TP) (33). In the first case, the four 5-minute frames were reconstructed using the μ-map in the reference position. In the second case, the T1-weighted MR image was co-registered to each of those first-pass PET volumes (also using SPM8), and the transformation parameters were used to generate frame-specific μ-maps (n = 4) for the second-pass reconstruction. In both cases, the four reconstructed dynamic PET frames were de-noised using empirically determined thresholds (60,000 and 600 Bq/mL) and blurred with a 3 mm FWHM Gaussian kernel; the first three frames were then co-registered (also using SPM8 with the setup described previously) to the last PET frame to obtain the transformation parameters, which were used to move the original images. The moved original images and the original last frame were then averaged.
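The 3 mm FWHM Gaussian blurring applied before frame co-registration can be sketched with SciPy as below. The sketch assumes the 1.25 mm isotropic voxels of the reconstructed volumes and converts the FWHM to a standard deviation via σ = FWHM / (2√(2 ln 2)), giving σ ≈ 1.02 voxels. The thresholding step and the SPM8 co-registration are not reproduced, and the function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def fwhm_to_sigma_voxels(fwhm_mm, voxel_mm):
    """Convert a Gaussian FWHM in mm to a standard deviation in voxels."""
    return fwhm_mm / (2.0 * np.sqrt(2.0 * np.log(2.0))) / voxel_mm


def smooth_frame(frame, fwhm_mm=3.0, voxel_mm=1.25):
    """Blur a reconstructed PET frame with an isotropic Gaussian kernel."""
    sigma = fwhm_to_sigma_voxels(fwhm_mm, voxel_mm)
    return gaussian_filter(frame.astype(float), sigma=sigma)
```

The kernel is normalized, so total activity in the frame is preserved away from the edges; only the spatial distribution is smoothed.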

Post-processing of FDG PET Data

The PETNoMC and PETMRMC volumes were also de-noised and blurred with a 3 mm FWHM Gaussian kernel, and the 10 planes at the edges of the field of view (FOV) were eliminated to prevent the registration from being biased by noisy data and to maintain image quality similar to that of the FBMC volumes. The PETNoMC, PETFBMC-SP, and PETFBMC-TP images were registered to the PETMRMC image using SPM8 (same setup as previously described) so that all PET, MR, and FreeSurfer label images were in the reference position. Tissue activity concentration ratios were obtained by normalizing the PET images by the mean activity concentration of the cerebellar cortex. Henceforth, we use standardized uptake value ratios (SUVRs) to denote these tissue ratios, as this term is more frequently used in the AD literature and the two ratios are mathematically equivalent.
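Normalization to the cerebellar cortex can be sketched as follows, assuming the PET volume and the FreeSurfer label volume are already aligned voxel by voxel in the reference position. The default label values 8 and 47 correspond to Left- and Right-Cerebellum-Cortex in FreeSurfer's standard color lookup table (FreeSurferColorLUT.txt); verify them against the lookup table of the FreeSurfer version used. The function name is hypothetical.

```python
import numpy as np


def to_suvr(pet, labels, reference_labels=(8, 47)):
    """Divide a PET volume by the mean activity in the reference region.

    pet    : activity concentration volume.
    labels : integer FreeSurfer label volume with the same shape as `pet`.
    reference_labels : label values of the cerebellar cortex (assumed LUT values).
    """
    ref = np.isin(labels, reference_labels)
    return pet / pet[ref].mean()
```

Dividing by a single scalar leaves relative contrast unchanged, so SUVR and the tissue ratio defined above are the same quantity.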

Assessment of the Effect of Simulated Motion on FDG PET Data

To investigate the effect of motion on SUVRs, we simulated motion by applying the MR-derived transforms to individual PET volumes. Specifically, for each subject the PETMRMC volume was moved in image space using each of the 60 sets of transforms obtained during that subject's 20-minute acquisition (one per 20-second sub-frame), and the resulting volumes were averaged to yield the simulated volume (PETsim), an approximation of the PETNoMC volume. The inverse of the first motion estimate was applied to PETsim to move it back to the reference position for analysis. The main metric used for analysis in this work was the absolute value of the regional relative change (absRCROI), defined as |100×(PETMRMC - PETsim)|/PETsim. The mean SUVRs of each FreeSurfer region of interest (ROI) in PETsim and PETMRMC were used to assess the effect of simulated motion on the PET data. The motion transforms from each of the 30 subjects were applied to their own PETMRMC volumes, yielding 30 PETsim volumes that were compared to the corresponding original volumes.
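The motion simulation can be sketched with `scipy.ndimage.affine_transform`, which maps output coordinates to input coordinates and therefore needs the inverse of each motion. This is a hypothetical illustration, not the authors' code: it assumes each motion is given as a rotation matrix and a translation in voxel units, applied about the volume centre, whereas SPM8 parameters are defined in world (mm) coordinates and would need converting first. In this setting, `motions` would hold the 60 (rotation, translation) pairs of one subject.

```python
import numpy as np
from scipy.ndimage import affine_transform


def move(volume, rot, trans, centre):
    """Resample `volume` after the rigid motion x -> rot @ (x - centre) + centre + trans.

    affine_transform maps output coordinates to input coordinates, so the inverse
    motion is passed: x_in = rot.T @ x_out + (centre - rot.T @ (centre + trans)).
    """
    rot_inv = rot.T
    offset = centre - rot_inv @ (centre + trans)
    return affine_transform(volume, rot_inv, offset=offset, order=1)


def simulate_motion(pet_mrmc, motions):
    """Average copies of the motion-corrected volume moved by each (rot, trans) pair,
    then move the average back with the inverse of the first motion."""
    centre = (np.array(pet_mrmc.shape) - 1) / 2.0
    sim = np.mean([move(pet_mrmc, r, t, centre) for r, t in motions], axis=0)
    r0, t0 = motions[0]
    # Inverse of (r0, t0) about the same centre is (r0.T, -r0.T @ t0).
    return move(sim, r0.T, -r0.T @ t0, centre)
```

Linear interpolation (`order=1`) avoids the ringing that higher-order splines can introduce in sparse PET images; voxels shifted in from outside the volume are filled with zeros.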

Assessment of the Effect of Motion Correction on Patient FDG PET Data

First, the PETNoMC, PETFBMC-SP, PETFBMC-TP, and PETMRMC images were qualitatively inspected (e.g., by assessing the sharpness of the cortical ribbon). Next, voxel-wise relative change values (RCvox) within the FreeSurfer label-derived brain mask were computed as 100×(PETMRMC - PETNoMC)/PETMRMC. To assess the additional effect of mis-registration, RCvox values were also calculated between PETMRMC and PETNoMC images that had not been co-registered to the reference space, and the resulting maps were displayed.

For the quantitative analyses, 15 planes at the bottom of the FOV were removed from the FreeSurfer labels and emission volumes to exclude possible missing data due to co-registration (e.g. when a region initially outside of the FOV moves inside after a translation in the axial direction or for certain rotations). Mean regional SUVR values for PETNoMC, PETFBMC-SP, PETFBMC-TP, and PETMRMC were calculated for voxels in all FreeSurfer-defined regions and a composite region of the postcentral, precentral, and pericalcarine areas that exhibit preserved metabolism in this patient population (34).
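The exclusion of the bottom 15 planes and the regional and composite-region means can be sketched as below. The sketch assumes that index 0 along the last array axis is the bottom of the FOV and that the composite region is the union of the left and right pericalcarine, postcentral, and precentral labels of the Desikan-Killiany parcellation (1021, 1022, 1024 and 2021, 2022, 2024 in FreeSurferColorLUT.txt; verify against your installation). The names are hypothetical.

```python
import numpy as np

# Assumed FreeSurferColorLUT values: ctx-lh/rh-pericalcarine, -postcentral, -precentral.
COMPOSITE_LABELS = (1021, 1022, 1024, 2021, 2022, 2024)


def drop_bottom_planes(labels, n_planes=15, axial_axis=2):
    """Return a copy of `labels` with the lowest n_planes axial planes set to 0 (background)."""
    out = labels.copy()
    index = [slice(None)] * out.ndim
    index[axial_axis] = slice(0, n_planes)
    out[tuple(index)] = 0
    return out


def regional_mean(suvr, labels, region_labels):
    """Mean SUVR over voxels carrying any label in `region_labels` (a union for composites)."""
    return suvr[np.isin(labels, region_labels)].mean()
```

Applying the same cropped label volume to every PET volume keeps the voxel sets identical across the MC methods being compared.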

To assess the impact of MC at the ROI level (for affected and preserved cortical regions and subcortical regions), the mean absRCROI values were calculated between PETNoMC and either PETFBMC-SP, PETFBMC-TP, or PETMRMC across all subjects and for the two extreme quintiles separately. The absRCROI values across all subjects were also compared to those obtained from the simulation comparisons.

To assess intra-region voxel-wise variability, the voxel-wise mean, standard deviation (SD), and coefficient of variation (CV, defined as SD/mean) of the SUVRs within the composite region were also calculated for PETNoMC, PETFBMC-SP, PETFBMC-TP, and PETMRMC.

Metrics and Statistical Analyses

In summary, the metrics used in this work were:

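- absolute value of the regional relative change, used to evaluate the relative change of the mean SUVRs obtained in a region using two different image processing methods A and B (in the simulations, A = PETMRMC and B = PETsim):

  $$\mathrm{absRC}_{\mathrm{ROI}} = \frac{\left|100 \times \left(\overline{\mathrm{SUVR}}_{A} - \overline{\mathrm{SUVR}}_{B}\right)\right|}{\overline{\mathrm{SUVR}}_{B}} \tag{2}$$

- voxel-wise relative change values, used for inspecting the SUVR relative change with and without MC for voxels in the brain:

  $$\mathrm{RC}_{\mathrm{vox}} = \frac{100 \times \left(\mathrm{PET}_{\mathrm{MRMC}} - \mathrm{PET}_{\mathrm{NoMC}}\right)}{\mathrm{PET}_{\mathrm{MRMC}}} \tag{3}$$

- coefficient of variation, used to examine the variation of SUVRs within the composite region:

  $$\mathrm{CV} = \frac{\mathrm{SD}}{\mathrm{mean}} \tag{4}$$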
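The three metrics listed above (absRCROI, RCvox, and CV) translate directly into NumPy, as sketched below. The functions are hypothetical helpers; the CV uses the sample standard deviation (`ddof=1`), which is an assumption because the text does not state which estimator was used.

```python
import numpy as np


def abs_rc_roi(suvr_a, suvr_b):
    """|100 * (A - B)| / B for regional mean SUVRs, with B as the denominator."""
    a = np.asarray(suvr_a, dtype=float)
    b = np.asarray(suvr_b, dtype=float)
    return np.abs(100.0 * (a - b)) / b


def rc_vox(pet_mrmc, pet_nomc, brain_mask):
    """Voxel-wise 100 * (MRMC - NoMC) / MRMC inside the brain mask, NaN elsewhere."""
    rc = np.full(pet_mrmc.shape, np.nan)
    m = np.asarray(brain_mask, dtype=bool)
    rc[m] = 100.0 * (pet_mrmc[m] - pet_nomc[m]) / pet_mrmc[m]
    return rc


def coefficient_of_variation(region_values, ddof=1):
    """SD / mean of the voxel SUVRs in a region (sample SD assumed)."""
    v = np.asarray(region_values, dtype=float)
    return v.std(ddof=ddof) / v.mean()
```

Note that absRCROI and RCvox use different denominators (the comparison volume versus PETMRMC), so their magnitudes are not directly interchangeable.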

Statistical analyses were carried out with pair-wise one-sided paired t-tests at the p=0.05 level to compare the absRCROI values obtained through different MR-based MC processing methods, as well as those obtained with the same MC method across different motion groups. The intra-region voxel-wise variability of regional SUVRs obtained through different MR-based MC processing methods was also assessed with pair-wise paired t-tests at the p=0.05 level.
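A one-sided paired comparison of this kind can be run with `scipy.stats.ttest_rel`, as sketched below (the `alternative` argument requires SciPy 1.6 or later). Which observations are paired (for example, ROIs or subjects matched across methods) is not fully specified in the text, so this hypothetical helper simply assumes `x` and `y` are matched arrays in the same order.

```python
from scipy import stats


def one_sided_paired_test(x, y, alpha=0.05):
    """Paired t-test of H1: mean(x - y) > 0.

    x, y : matched samples, e.g. absRC_ROI values of two MC methods.
    Returns the t statistic, the one-sided p-value, and whether p < alpha.
    """
    res = stats.ttest_rel(x, y, alternative="greater")
    return res.statistic, res.pvalue, bool(res.pvalue < alpha)
```

For example, passing the absRCROI values of MRMC as `x` and those of two-pass FBMC as `y` tests whether MRMC produced systematically larger changes; swapping the arguments tests the opposite direction.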

RESULTS

MR-assisted Motion Estimation

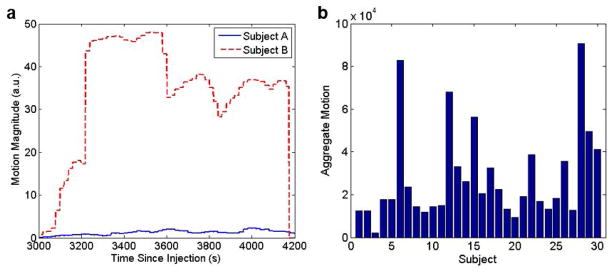

The mean translation and rotation amplitudes observed across all patients during the 20-minute PET acquisition were 3.41±2.98 mm and 1.92°±1.66°, respectively, and the maximum values were 12.9 mm and 6.2°, respectively. Figure 1A shows the 6 rigid body MR-derived transformation parameters at each time point for a representative subject. The same motion parameters after median filtering and interpolation are shown in Figure 1B. Figure 2A shows the motion magnitude curves obtained, as described in Equation 1, from the 6 rigid body motion parameters for the subject shown in Figure 1 and for another representative subject who exhibited lower amplitude movement. The aggregate motion for all subjects is shown in Figure 2B and the motion magnitude curves of all subjects are included in the supplementary data (Figure S1).

Fig. 2.

(A) Motion magnitude parameters of two representative subjects. Subject A exhibited less movement while subject B (the subject in Figure 1) showed more movement. (B) The aggregate motion magnitudes of all subjects enrolled in this study

Qualitative Assessment of MC Effects on FDG PET Data

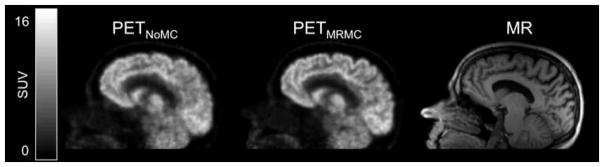

The PET images before and after MRMC and the corresponding MR for a representative subject are shown in Figure 3. The images reconstructed with the different MC methods for a different subject are shown in Figure S2. The image blurring due to subject motion is reduced and the FDG uptake in the cortical ribbon can be better appreciated qualitatively after MRMC, more closely resembling the anatomy shown in the morphological T1-weighted MR image.

Fig. 3.

The sagittal views of static (50–70 minutes post injection) PET images from a representative subject without MC (left) or with MRMC (center). A matching sagittal view of a morphological T1-weighted MR image (right) is also provided

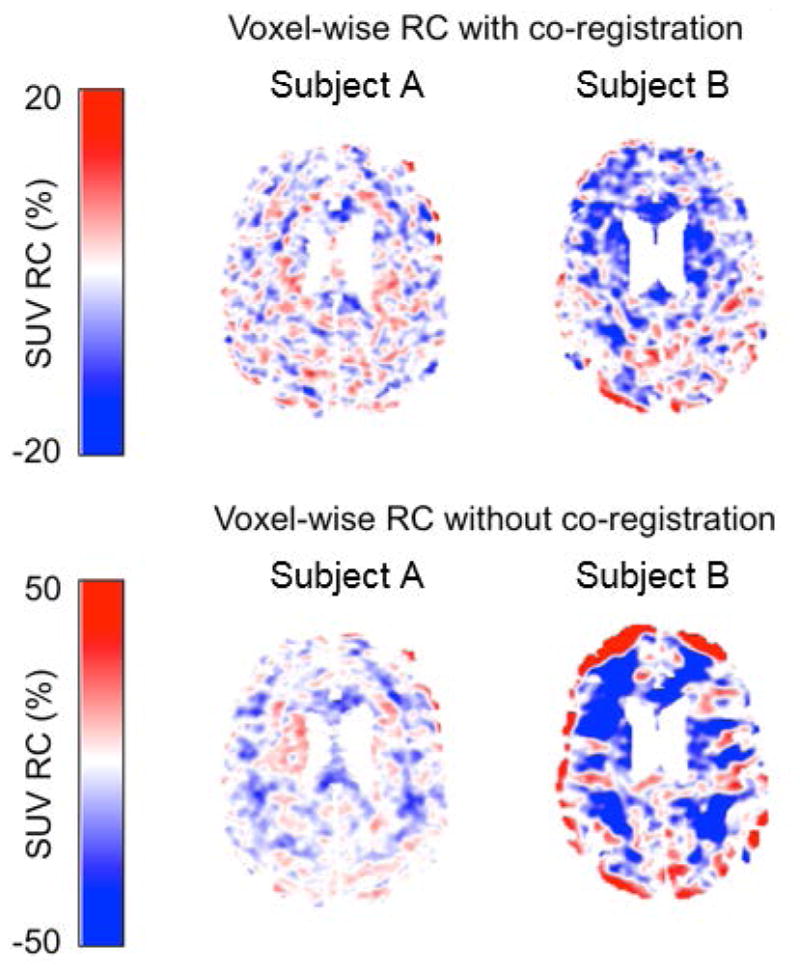

The SUV RCvox maps between PETNoMC and PETMRMC for two representative subjects are shown in Figure 4, demonstrating greater RCvox (mean absolute value 8.0%) for the subject who exhibited greater amplitude motion and smaller RCvox (mean absolute value 5.4%) for the subject with less motion (Fig. 4, top). The RCvox values were greater for both subjects when the PETNoMC and PETMRMC volumes were compared without co-registration; in this scenario, the subject moving less showed greater RCvox values (mean absolute value 21.0%) than the subject moving more (mean absolute value 9.90%) (Fig. 4, bottom).

Fig. 4.

Maps of SUV RCvox values of the 2 representative subjects shown in Figure 2A. The top and bottom panels show the SUV RCvox values with and without co-registration of PETNoMC, respectively. The subject with more motion (subject 2) showed greater RC after MC was applied

Quantitative Assessment of MC Effects on FDG PET Data

Considerable variability in the absRCROI values was observed for all the methods. For example, in regions such as the medial orbitofrontal cortex, values up to 46.4% and 18.5% were observed for one subject in the simulated and actual data, respectively.

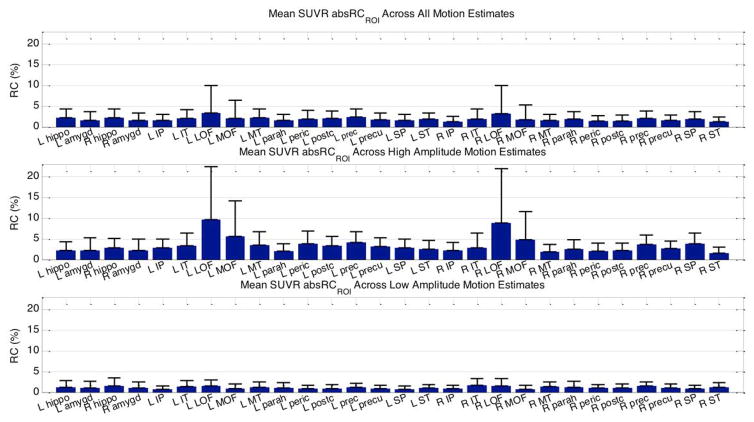

At the group level, the mean absRCROI values between PETsim and PETMRMC SUVRs were within 4% in the simulated motion data (Figure 5). Using the top and bottom quintiles of estimates (most and least motion, respectively) yielded mean absRCROI values within 10% and 2.5%, respectively.

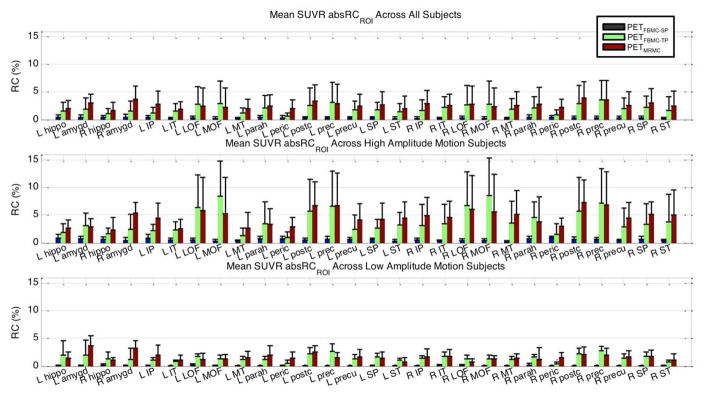

Fig. 5.

The mean and SD of SUVR absRCROI values, comparing the PET volumes processed with MRMC to the simulated PET data, in selected ROIs when all 30 estimates (top), the quintile of estimates with the most motion (middle), and the quintile of estimates with the least motion (bottom) were applied. The abbreviations used in this figure: L: left; R: right; hippo: hippocampus; amygd: amygdala; I: inferior; P: parietal; T: temporal; LOF: lateral orbitofrontal; MOF: medial orbitofrontal; M: middle; parah: parahippocampal; peric: pericalcarine; postc: postcentral; prec: precentral; precu: precuneus; S: superior

The absRCROI values across subjects, comparing each of the three sets of motion-corrected images to the PETNoMC images, are shown in Figure 6. The mean absRCROI values were within 4%. Single-pass FBMC showed the smallest changes, while two-pass FBMC and MRMC yielded larger changes; in addition, more regions showed the largest changes after MRMC. The top and bottom quintiles of subjects (more and less motion, respectively) had mean absRCROI values within 9% and 4%, respectively. The differences in performance between the MC methods still held for these subject subgroups. All comparisons were significant at the p=0.05 level.

Fig. 6.

The mean and SD of SUVR absRCROI values, comparing the PET volumes processed with single-pass FBMC (blue), two-pass FBMC (green), or MRMC (red) to the PET volumes without MC, in selected ROIs across all 30 subjects (top), the quintile of subjects with the most motion (middle), and the quintile of subjects with the least motion (bottom). Abbreviations are same as those in Figure 5

The intra-region voxel-wise variability of regional SUVRs decreased when MRMC was applied. For the composite region, the mean of the regional CV across subjects changed from 0.1946 (no MC) to 0.1951 (FBMC-SP), 0.1929 (FBMC-TP), and 0.1883 (MRMC). The pair-wise comparisons of these means using a paired t-test showed that each difference was significant at the p=0.05 level.

DISCUSSION

Our main goals were to assess the effect of realistic head motion on static FDG PET images and to compare the impact of MRMC and FBMC methods in dementia patients.

All patients exhibited motion in spite of the spatial constraints imposed by the MR coils inside the BrainPET scanner. The motion magnitude, directionality, and pattern (e.g., gradual vs. sudden) varied from patient to patient. Consequently, the impact of MC was also variable, both between subjects and across ROIs. The “unblurring” effect of MC is expected to depend on the nature of the subject’s motion and the length of the reconstructed frame. Although MC reduces the PET quantification bias for individual high-motion subjects (which highlights the need to perform MC when such subjects are included in a group study), the overall impact at the group level depends on the variability in subject motion and the proportion of high-motion subjects.

The impact of the different MC methods on the PET data was assessed on a region-by-region basis. The absRCROI values of SUVRs showed that the single-pass FBMC method had a smaller effect than two-pass FBMC or MRMC. The differences between single- and two-pass FBMC highlight the importance of minimizing the mismatch between the emission and attenuation data even in the case of FBMC. The observation that more regions showed the largest changes after MRMC suggests that this method might be more accurate than even two-pass FBMC.

Simulations were performed because there is currently no established metric to assess the impact of motion compensation on PET data quantification. Intuitively, the mean SUVRs in cortical regions should decrease with motion (or, alternatively, increase after MC). Examining the mean and SD of the absRCROI values between PETsim and PETMRMC confirmed the qualitative observation that the impact of MC was variable across subjects: applying motion estimates from subjects with greater motion resulted in greater RC values than applying those from subjects with less motion. The simulations also confirmed that the effects of MC varied across regions, with larger RCs generally observed in smaller regions. The absRCROI values observed in real data were similar to those obtained from the simulations, suggesting that the effect of MC on the PET data acquired from this group of subjects was within the expected range.

The effect of MC methods on PET intra-region voxel-wise CV in the regional SUVRs was also examined in a relevant composite region (34). Compared to no MC and the two FBMC methods, MRMC showed a decrease in the intra-region voxel-wise CV.

In most of the regions examined, MRMC showed a larger effect than two-pass FBMC. This, together with the decrease in intra-region voxel-wise CV observed using MRMC, suggests that this method could be more effective than FBMC in reducing motion effects in PET images and has the potential to reduce motion-induced regional variability in FDG PET imaging compared with the standard FBMC method. The way the different MC methods are applied could explain the differences observed in PET quantification. Compared with single-pass FBMC, two-pass FBMC addressed not only inter-frame subject motion but also the emission-attenuation mismatch. The MRMC approach was additionally able to compensate for intra-frame motion and thus had an even larger impact on PET quantification. However, both methods are susceptible to co-registration errors (when an image-based approach is used for MR-assisted motion estimation), and the effect of MC depends on the extent of subject motion during the scan. Studies have shown that FBMC methods are accurate only when intra-frame motion is limited (22).

While two-pass FBMC and MRMC performed similarly with regard to the chosen metrics in this particular group of patients, the results suggest that the difference between the two methods could be larger with greater subject motion or longer scan times. In contrast to this study, in which we focused only on the motion within one 20-minute frame, in longer PET acquisitions the patient would have a greater chance of exhibiting a larger range of motion as well as tremors or sudden jerks. In addition, if the PET data are reconstructed as one volume (in contrast to the four 5-minute blocks used in this work), the MRMC method might yield slightly better reconstructions due to better data statistics and outperform FBMC further. Furthermore, the MRMC method is likely to be more accurate than FBMC for studies performed with tracers that localize only to specific regions of the brain (such as raclopride), as these images lack the anatomical features required for accurate co-registration.

Another area warranting further investigation is the post-processing of the PET/MR data. To remove spurious noise from the EPI volume co-registration process, the motion estimates were median filtered in 20-second blocks. This makes the event-based method conceptually analogous to a frame-based method that uses short 20-second reconstructed PET frames for co-registration; however, the EPI volumes used for co-registration are of higher image quality than PET volumes reconstructed from 20 seconds of data. A possible limitation of this method is that susceptibility artifacts could distort the EPI volumes and affect the motion estimates derived from them. Nevertheless, the head, relative to other parts of the body, is less susceptible to such distortions and can be treated as a rigid body. The choice of the reference PET frame for co-registration is another possible limitation. While we believe using the last frame for FBMC had a minimal impact on our results, the choice of the reference frame needs to be considered carefully when using FDG data acquired at different time points, and even more so for other tracers. The effect of the emission-attenuation mismatch on both FBMC methods (in the inter-frame and second-pass co-registrations) is also a potential area for further investigation. Another limitation is that motion can be tracked only when fMRI data are being acquired. As the MR component of the integrated scanner should never be used just as an expensive motion tracking device, deriving motion from MR data acquired for other purposes (e.g., arterial spin labeling or diffusion-weighted imaging), or using sequences such as those in (35) in tandem with PET-based or external motion tracking systems, would enable diverse PET/MR studies with full motion tracking throughout the whole acquisition time.
In terms of computation time, while adjusting the LORs for MRMC is time consuming in our current implementation, it is a parallelizable task; using cluster systems with parallel computing capabilities, the PET reconstruction time for MRMC would therefore be comparable to that of the other methods (and thus feasible for routine use).

One limitation of the study design is that we analyzed only the effects of motion on regional SUVR changes and focused on static PET images. Further studies should investigate how MC affects kinetic parameters obtained from dynamic studies. Additionally, motion-related effects should be investigated in age-matched healthy controls. Finally, motion tracking in this work relied on EPI data, and subject motion could have been affected by the acoustic noise inherent to these sequences.

In conclusion, MRMC and FBMC had a comparable impact on PET data quantification in a group of dementia patients. In addition, greater SUVR absRCROI values (relative to PETNoMC) were observed in more cortical regions for PETMRMC than for PETFBMC-TP, and a reduced intra-region voxel-wise CV in the composite cortical region was observed for PETMRMC. These results suggest that incorporating temporally correlated MR data to account for intra-frame subject motion in the reconstruction of FDG PET images improves both reconstructed image quality and data quantification. The improved PET data quantification enabled by spatiotemporally correlated MR data may allow more accurate assessment of subtle changes in brain metabolism and reduced sample sizes in clinical trials of novel therapeutic agents.
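
The two quantities summarized in the conclusion can be written out explicitly. The following sketch assumes that the regional SUVR is the mean ROI uptake divided by the mean uptake in a reference region (the reference region is not specified in this section), that absRCROI is the absolute percent change of a motion-corrected SUVR relative to the PETNoMC SUVR, and that the intra-region CV is the sample standard deviation of the voxel-wise SUVRs divided by their mean. All function names are hypothetical.

```python
import numpy as np


def regional_suvr(pet, roi_mask, ref_mask):
    """Mean uptake in the ROI divided by mean uptake in the reference region."""
    return pet[roi_mask].mean() / pet[ref_mask].mean()


def abs_relative_change(suvr_mc, suvr_nomc):
    """Absolute relative change (%) of a corrected SUVR with respect to the uncorrected one."""
    return 100.0 * abs(suvr_mc - suvr_nomc) / suvr_nomc


def voxelwise_cv(pet, roi_mask, ref_mask):
    """Coefficient of variation (%) of the voxel-wise SUVRs inside the ROI."""
    voxel_suvr = pet[roi_mask] / pet[ref_mask].mean()
    return 100.0 * voxel_suvr.std(ddof=1) / voxel_suvr.mean()
```

Under these assumptions, a lower CV for PETMRMC would indicate more uniform voxel-wise SUVRs within the region, consistent with reduced motion blurring.
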

Supplementary Material

Motion magnitude parameters of all subjects

Sagittal (top) and transverse (bottom) views of static (50–70 minutes post injection) PET images from a representative subject, from left to right: without MC, with single-pass FBMC, with two-pass FBMC, and with MRMC.

Acknowledgments

This work was funded by the NIH grants 5R01-EB014894 and 5R01-DC014296 and by the U.S. Department of Defense (DoD) through the National Defense Science & Engineering Graduate Fellowship (NDSEG) Program.

Footnotes

Conflict of Interest: The authors declare that they have no conflict of interest.

Ethical approval: All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

References

1. Catana C. Principles of simultaneous PET/MR imaging. Magn Reson Imaging Clin N Am. 2017;25:231–243. doi: 10.1016/j.mric.2017.01.002.

2. Catana C, Guimaraes AR, Rosen BR. PET and MR imaging: the odd couple or a match made in heaven? J Nucl Med. 2013;54:815–824. doi: 10.2967/jnumed.112.112771.

3. Catana C, Drzezga A, Heiss WD, Rosen BR. PET/MRI for neurologic applications. J Nucl Med. 2012;53:1916–1925. doi: 10.2967/jnumed.112.105346.

4. Dickerson BC. Quantitating severity and progression in primary progressive aphasia. J Mol Neurosci. 2011;45:618–628. doi: 10.1007/s12031-011-9534-2.

5. Dickerson BC, Sperling RA. Neuroimaging biomarkers for clinical trials of disease-modifying therapies in Alzheimer’s disease. NeuroRx. 2005;2:348–360. doi: 10.1602/neurorx.2.2.348.

6. Teipel S, Drzezga A, Grothe MJ, et al. Multimodal imaging in Alzheimer’s disease: validity and usefulness for early detection. Lancet Neurol. 2015;14:1037–1053. doi: 10.1016/S1474-4422(15)00093-9.

7. Shaffer JL, Petrella JR, Sheldon FC, et al. Predicting cognitive decline in subjects at risk for Alzheimer disease by using combined cerebrospinal fluid, MR imaging, and PET biomarkers. Radiology. 2013;266:583–591. doi: 10.1148/radiol.12120010.

8. Walhovd KB, Fjell AM, Brewer J, et al. Combining MR imaging, positron-emission tomography, and CSF biomarkers in the diagnosis and prognosis of Alzheimer disease. AJNR Am J Neuroradiol. 2010;31:347–354. doi: 10.3174/ajnr.A1809.

9. Cheng YW, Chen TF, Chiu MJ. From mild cognitive impairment to subjective cognitive decline: conceptual and methodological evolution. Neuropsychiatr Dis Treat. 2017;13:491–498. doi: 10.2147/NDT.S123428.

10. Zhang D, Wang Y, Zhou L, Yuan H, Shen D; Alzheimer’s Disease Neuroimaging Initiative. Multimodal classification of Alzheimer’s disease and mild cognitive impairment. Neuroimage. 2011;55:856–867. doi: 10.1016/j.neuroimage.2011.01.008.

11. Bowen SL, Byars LG, Michel CJ, Chonde DB, Catana C. Influence of the partial volume correction method on (18)F-fluorodeoxyglucose brain kinetic modelling from dynamic PET images reconstructed with resolution model based OSEM. Phys Med Biol. 2013;58:7081–7106. doi: 10.1088/0031-9155/58/20/7081.

12. Catana C, Benner T, van der Kouwe A, et al. MRI-assisted PET motion correction for neurologic studies in an integrated MR-PET scanner. J Nucl Med. 2011;52:154–161. doi: 10.2967/jnumed.110.079343.

13. Chen KT, Izquierdo-Garcia D, Poynton CB, Chonde DB, Catana C. On the accuracy and reproducibility of a novel probabilistic atlas-based generation for calculation of head attenuation maps on integrated PET/MR scanners. Eur J Nucl Med Mol Imaging. 2017;44:398–407. doi: 10.1007/s00259-016-3489-z.

14. Hutchcroft W, Wang G, Chen KT, Catana C, Qi J. Anatomically-aided PET reconstruction using the kernel method. Phys Med Biol. 2016;61:6668–6683. doi: 10.1088/0031-9155/61/18/6668.

15. Izquierdo-Garcia D, Hansen AE, Forster S, et al. An SPM8-based approach for attenuation correction combining segmentation and nonrigid template formation: application to simultaneous PET/MR brain imaging. J Nucl Med. 2014;55:1825–1830. doi: 10.2967/jnumed.113.136341.

16. Herzog H, Tellmann L, Fulton R, et al. Motion artifact reduction on parametric PET images of neuroreceptor binding. J Nucl Med. 2005;46:1059–1065.

17. Ikari Y, Nishio T, Makishi Y, et al. Head motion evaluation and correction for PET scans with 18F-FDG in the Japanese Alzheimer’s disease neuroimaging initiative (J-ADNI) multi-center study. Ann Nucl Med. 2012;26:535–544. doi: 10.1007/s12149-012-0605-4.

18. Lopresti BJ, Russo A, Jones WF, et al. Implementation and performance of an optical motion tracking system for high resolution brain PET imaging. IEEE Trans Nucl Sci. 1999;46:2059–2067.

19. Fulton RR, Meikle SR, Eberl S, Pfeiffer J, Constable CJ, Fulham MJ. Correction for head movements in positron emission tomography using an optical motion-tracking system (vol 49, pg 116, 2002). IEEE Trans Nucl Sci. 2002;49:2037–2038.

20. Picard Y, Thompson CJ. Motion correction of PET images using multiple acquisition frames. IEEE Trans Med Imaging. 1997;16:137–144. doi: 10.1109/42.563659.

21. Montgomery AJ, Thielemans K, Mehta MA, Turkheimer F, Mustafovic S, Grasby PM. Correction of head movement on PET studies: comparison of methods. J Nucl Med. 2006;47:1936–1944.

22. Jin X, Mulnix T, Gallezot JD, Carson RE. Evaluation of motion correction methods in human brain PET imaging--a simulation study based on human motion data. Med Phys. 2013;40:102503. doi: 10.1118/1.4819820.

23. Carson RE, Barker WC, Liow JS, Johnson CA. Design of a motion-compensation OSEM list-mode algorithm for resolution-recovery reconstruction for the HRRT. 2003 IEEE Nuclear Science Symposium Conference Record; 2003.

24. Qi J, Huesman RH. Correction of motion in PET using event-based rebinning method: pitfall and solution. J Nucl Med. 2002;43:146P.

25. Daube-Witherspoon M, Yan Y, Green M, Carson R, Kempner K, Herscovitch P. Correction for motion distortion in PET by dynamic monitoring of patient position. J Nucl Med. 1990;31:816.

26. Olesen OV, Sullivan JM, Mulnix T, et al. List-mode PET motion correction using markerless head tracking: proof-of-concept with scans of human subject. IEEE Trans Med Imaging. 2013;32:200–209. doi: 10.1109/TMI.2012.2219693.

27. Ullisch MG, Scheins JJ, Weirich C, et al. MR-based PET motion correction procedure for simultaneous MR-PET neuroimaging of human brain. PLoS One. 2012;7:e48149. doi: 10.1371/journal.pone.0048149.

28. Hong IK, Chung ST, Kim HK, Kim YB, Son YD, Cho ZH. Ultra fast symmetry and SIMD-based projection-backprojection (SSP) algorithm for 3-D PET image reconstruction. IEEE Trans Med Imaging. 2007;26:789–803. doi: 10.1109/tmi.2007.892644.

29. Hudson HM, Larkin RS. Accelerated image reconstruction using ordered subsets of projection data. IEEE Trans Med Imaging. 1994;13:601–609. doi: 10.1109/42.363108.

30. Byars LG, Sibomana M, Burbar Z, et al. Variance reduction on randoms from delayed coincidence histograms for the HRRT. 2005 IEEE Nuclear Science Symposium Conference Record; 2005. pp. 2622–2626.

31. Watson CC. New, faster, image-based scatter correction for 3D PET. IEEE Trans Nucl Sci. 2000;47:1587–1594.

32. Tisdall MD, Hess AT, Reuter M, Meintjes EM, Fischl B, van der Kouwe AJ. Volumetric navigators for prospective motion correction and selective reacquisition in neuroanatomical MRI. Magn Reson Med. 2012;68:389–399. doi: 10.1002/mrm.23228.

33. Fulton RR, Meikle SR, Eberl S, Pfeiffer J, Constable C, Fulham MJ. Correction for head movements in positron emission tomography using an optical motion tracking system. IEEE Trans Nucl Sci. 2002;49:116–123.

34. Brown RK, Bohnen NI, Wong KK, Minoshima S, Frey KA. Brain PET in suspected dementia: patterns of altered FDG metabolism. Radiographics. 2014;34:684–701. doi: 10.1148/rg.343135065.

35. Feng L, Grimm R, Block KT, et al. Golden-angle radial sparse parallel MRI: combination of compressed sensing, parallel imaging, and golden-angle radial sampling for fast and flexible dynamic volumetric MRI. Magn Reson Med. 2014;72:707–717. doi: 10.1002/mrm.24980.
