Significance

Skilled behaviors are learned through trial and error. The ubiquity of such processes notwithstanding, current theories of learning fail to explain how the speed and the magnitude of learning depend on the pattern of experienced sensory errors. Here, we introduce a theory, formulated and tested in the context of a specific behavior: vocal learning in songbirds. The theory explains the observed dependence of learning on the dynamics of sensory errors. Furthermore, it makes additional strong predictions about the dynamics of learning that we verify experimentally.

Keywords: power-law tails, sensorimotor learning, dynamical Bayesian inference, vocal control

Abstract

Traditional theories of sensorimotor learning posit that animals use sensory error signals to find the optimal motor command in the face of Gaussian sensory and motor noise. However, most such theories cannot explain common behavioral observations, for example, that smaller sensory errors are more readily corrected than larger errors and large abrupt (but not gradually introduced) errors lead to weak learning. Here, we propose a theory of sensorimotor learning that explains these observations. The theory posits that the animal controls an entire probability distribution of motor commands rather than trying to produce a single optimal command and that learning arises via Bayesian inference when new sensory information becomes available. We test this theory using data from a songbird, the Bengalese finch, that is adapting the pitch (fundamental frequency) of its song following perturbations of auditory feedback using miniature headphones. We observe the distribution of the sung pitches to have long, non-Gaussian tails, which, within our theory, explains the observed dynamics of learning. Further, the theory makes surprising predictions about the dynamics of the shape of the pitch distribution, which we confirm experimentally.

Learned behaviors—reaching for an object, talking, and hundreds of others—allow the organism to interact with the ever-changing surrounding world. To learn and execute skilled behaviors, it is vital for such behaviors to fluctuate from iteration to iteration. Such variability is not limited to inevitable biological noise (1, 2), but rather a significant part of it is controlled by animals themselves and is used for exploration during learning (3, 4). Furthermore, learned behaviors rely heavily on sensory feedback. The feedback is needed, first, to guide the initial acquisition of the behaviors and then to maintain the needed motor output in the face of changes in the motor periphery and fluctuations in the environment. Within such sensorimotor feedback loops, the brain computes how to use the inherently noisy sensory signals to change patterns of activation of inherently noisy muscles to produce the desired behavior. This transformation from sensory feedback to motor output is both robust and flexible, as demonstrated in many species in which systematic perturbations of the feedback dramatically reshape behaviors (1, 5–8).

Since many complex behaviors are characterized by both tightly controlled motor variability and robust sensorimotor learning, we propose that, during learning, the brain controls the distribution of behaviors. In contrast, most prior theories of animal learning have assumed that there is a single optimal motor command that the animal tries to produce and that, after learning, deviations from the optimal behavior result from the unavoidable (Gaussian) downstream motor noise. Such prior models include the classic Rescorla–Wagner (RW) model (9), as well as more modern approaches belonging to the family of reinforcement learning (10–12), Kalman filters (13, 14), or dynamical Bayesian filter models (15, 16). In many of these theories, the variability of the behavior is intrinsic to motor exploration and deliberately controlled, but the distribution of this variability is not itself shaped by the animal’s experience (17–19). Such theories have addressed many important experimental questions, such as evaluating the optimality of the learning process (13, 20–23), accounting for multiple temporal scales in learning (7, 13, 24, 25), identifying the complexity of behaviors that can be learned (26), and pointing out how the necessary computations could be performed using networks of spiking neurons (12, 27–31).

However, despite these successes, most prior models that assume that the brain aims to achieve a single optimal output have been unable to explain some commonly observed experimental results. For example, since such theories assume that errors between the target and the realized behavior drive changes in future motor commands, they typically predict large behavioral changes in response to large errors. In contrast, experiments in multiple species report a decrease in both the speed and the magnitude of learning with an increase in the experienced sensory error (6, 22, 32, 33). One can rescue traditional theories by allowing the animal to reject large errors as “irrelevant”—unlikely to have come from its own actions (22, 34). However, such rejection models have not yet explained why the same animals that cannot compensate for large errors can correct for even larger ones, as long as their magnitude grows gradually with time (6, 33).

Here we present a theory (Fig. 1) of a classic model system for sensorimotor learning—vocal adaptation in a songbird—in which the brain controls a probability distribution of motor commands and updates this distribution by a recursive Bayesian inference procedure. The distribution of the song pitch is empirically heavy tailed (Fig. 2C), and the pitch variability is much smaller in a song directed at a female vs. the undirected song, suggesting that the variability and hence its non-Gaussian tails are deliberately controlled. Thus, our model does not make the customary Gaussian assumptions. The focus on learning and controlling (non-Gaussian) distributions of behavior allows us to capture successfully all of the above-described nonlinearities in learning dynamics and, furthermore, to account for previously unnoticed learning-dependent changes in the shape of the distribution of the behavior.

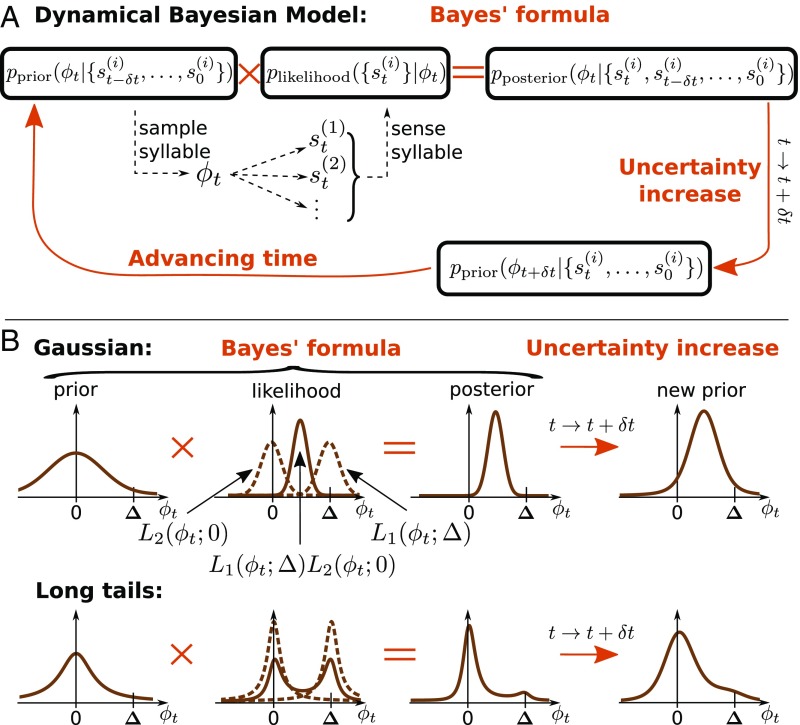

Fig. 1.

The dynamical Bayesian model (Bayesian filter). (A) A Bayesian filter consists of the recursive application of two general steps (35): (i) an observation update, which corresponds to novel sensory input and updates the underlying probability distribution of plausible motor commands using Bayes’ formula, and (ii) a time evolution update, which denotes the temporal propagation and corresponds to uncertainty increasing with time (main text); here the probability distribution is updated by convolution with a propagator. These two steps are repeated for each new piece of sensory data in a recursive loop. (B) Example distributions for the entire procedure in two scenarios: Gaussian (Top) and heavy-tailed (Bottom) distributions. The x axis, $\phi$, represents the motor command which results in a specific pitch sung by the bird. The outcome of this motor command is then measured by two different sensory modalities, represented by $s^{(1)}$ and $s^{(2)}$, with corresponding likelihood functions $L_\Delta(\phi)$ and $L_0(\phi)$, respectively. The shift $-\Delta$ for modality 1 is induced by the experimentalist, which results in the animal compensating its pitch toward $\Delta$. Dashed brown lines represent the individual likelihood functions from the individual modalities, and the solid lines represent their product, which signals how likely it is that the correct motor command corresponds to $\phi$. Heavy-tailed distributions can produce a bimodal likelihood, which, multiplied by the prior, suppresses large-error signals. In contrast, Gaussian likelihoods are unimodal and result in greater compensatory changes in behavior.

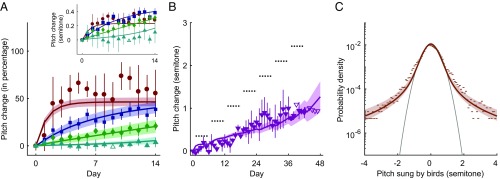

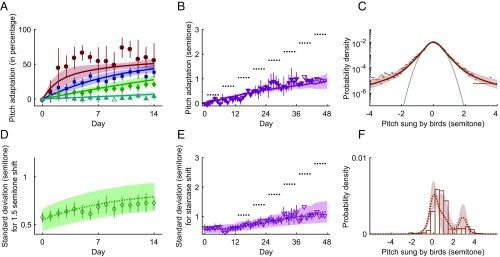

Fig. 2.

Experimental data and model fitting. The same six parameters of the model are used to simultaneously fit all data. (A) The symbols with error bars are four groups of experimental data, with different colors and symbols indicating different shift sizes (red-brown circle, 0.5-semitone shift; blue square, 1-semitone shift; green diamond, 1.5-semitone shift; cyan upper triangle, 3-semitone shift). The error bars indicate the SE of the group mean, accounting for variances across individual birds and within one bird (Materials and Methods). For each group, the data are combined from three to eight different birds, and the sign of the experimental perturbation (lowering or raising pitch) is always defined so that adaptive (i.e., error-correcting) vocal changes are positive. Data points without error bars had only a single bird, and they are not used for the fitting, which we denote by open symbols. The mean pitch sung on day 0 by each bird is defined as the zero-semitone compensation ($\phi = 0$). The solid lines with 1-SD bands (Materials and Methods) are results of the model fits, with the same color convention as in experimental data. Inset shows learning curves in absolute units, without rescaling by the shift size and without model error bands. (B) The lower triangles with error bars show the data from a staircase-shift experiment, with the same plotting conventions as in A. The data are combined from three birds. During the experiment, every 6 d, the shift size is increased by 0.35 semitone, as shown by the dotted horizontal line segments. On the last day of the experiment, the experienced pitch shift is 2.8 semitones. The magenta solid line with 1-SD band is the model fit. The combined quality of fit for the five curves collectively (four step perturbations and a staircase perturbation) is /df (compared with /df for the null model of the nonadaptive, zero-pitch compensation line). Note, however, that such Gaussian statistics of the fit quality should be taken with a grain of salt for nonnormally distributed data. (C) Dots represent the distribution of pitch on day 0, before the pitch shift perturbation (the baseline distribution), where the data are from 23 different experiments (all pitch shifts combined). The gray parabola is a Gaussian fit to the data within the semitone range. The empirical distribution has long, nonexponential tails. The brown solid line with 1-SD band is the model fit. Deviance of the model fit relative to the perfect fit [the latter estimated as the Nemenman–Shafee–Bialek entropy of the data (37, 38)] is 0.057 per sample point.

Results

Biological Model System.

Vocal control in songbirds is a powerful model system for examining sensorimotor learning of complex tasks (36). The phenomenology we are trying to explain arises from experimental approaches to inducing song plasticity (33). Songbirds sing spontaneously and prolifically and use auditory feedback to shape their songs toward a “template” learned from an adult bird tutor during development. When sensory feedback is perturbed (see below) using headphones to shift the pitch (fundamental frequency) of auditory feedback (33), birds compensate by changing the pitch of their songs so that the pitch they hear is closer to the unperturbed one. As shown in Fig. 2A, the speed of the compensation and its maximum value, which is measured as a fraction of the pitch shift and referred to as the magnitude of learning hereafter, decrease with the increasing shift magnitude, so that a shift of three semitones results in near-zero fractional compensation. Crucially, the small compensation for large perturbation does not reflect the limited plasticity of the adult brain since imposing the perturbation gradually, rather than instantaneously, results in a large compensation (Fig. 2B).

Data.

We use experimental data collected in our previous work (8, 33) to develop our mathematical model of learning. As detailed in ref. 39, we used a virtual auditory feedback system (8, 40) to evoke sensorimotor learning in adult songbirds. For this, miniature headphones were custom fitted to each bird and used to provide online auditory feedback in which the pitch (fundamental frequency) of the bird’s vocalizations could be manipulated in real time, with a loop delay of roughly 10 ms. In addition to providing pitch-shifted feedback, the headphones largely blocked the airborne transmission of the bird’s song from reaching the ear canals, thereby effectively replacing the bird’s natural airborne auditory feedback with the manipulated version. Pitch shifts were introduced after a baseline period of at least 3 d in which birds sang while wearing headphones but without pitch shifts. All pitch shifts were implemented relative to the bird’s current vocal pitch and were therefore “correctable” in the sense that if the bird changed its vocal pitch to fully compensate for the imposed pitch shift, the pitch of auditory feedback heard through the headphones would be equal to its baseline value. All data were collected during undirected singing (i.e., no female bird was present).

Mathematical Model.

To describe the data, we introduce a dynamical Bayesian filter model (Fig. 1A). We focus on just one variable learned by the animal during repeated singing: the pitch of the song syllables. Even though the animal learns the motor command and not the pitch directly, we do not distinguish between the produced pitch and the motor command leading to it because the latter is not known in behavioral experiments. We set the mean “baseline” pitch sung by the animal as $\phi = 0$, representing the “template” of the tutor’s song, or the scalar target memorized during development, and nonzero values of $\phi$ denote deviations of the sung pitch from the target.

However, while an instantaneous output of the motor circuit in our model is a scalar value of the pitch, the state of the motor learning system at each time step is a probability distribution over motor commands that the animal expects can lead to the target motor behavior. This is in contrast to the more common assumption that the state of the learning system is a scalar, usually the mean behavior, which is then corrupted by the downstream noise (34). Thus, at time $t$, the animal has access to the prior distribution over plausible motor commands, $p_{\mathrm{prior}}(\phi_t)$. We remain deliberately vague about how this distribution is stored and updated in the animal memory (e.g., as a set of moments, or values, or samples, or yet something else) and focus not on how the neural computation is performed, but on which computation the animal performs. We assume that the bird randomly selects and produces the pitch from this distribution of plausibly correct motor commands. In other words, we suggest that the experimentally observed variability of sung pitches is dominated by the deliberate exploration of plausible motor commands, rather than by noise in the motor system. This is supported by the experimental finding that the variance of pitch during singing directed at a female (performance) is significantly smaller than the variance during undirected singing (practice) (4, 41).

After producing a vocalization, the bird then senses the pitch of the produced song syllable through various sensory pathways. Besides the normal airborne auditory feedback reaching the ears, which we can pitch shift, information about the sung pitch may be available through other, unmanipulated pathways. For example, efference copy may form an internal short-term memory of the produced specific motor command (42). Additionally, proprioceptive sensing presumably also provides unshifted information (43). Finally, unshifted acoustic vibrations might be transmitted through body tissue in addition to the air, as is thought to be the case in studies that use pitch shifts to perturb human vocal production (44, 45).

We denote all feedback signals as $s_t = \{s_t^{(i)}\}$, where the index $i$ denotes different sensory modalities. Because sensing is noisy, feedback is not absolutely accurate. We posit that the animal interprets it using Bayes’ formula. That is, the posterior probability of which motor commands would lead to the target with no error is changed by the observed sensory signals, $p_{\mathrm{post}}(\phi_t) \propto p(s_t \mid \phi_t)\, p_{\mathrm{prior}}(\phi_t)$, where $p(s_t \mid \phi_t)$ represents the probability of observing a certain sensory feedback value given the produced motor command was the correct one. In its turn, the motor command is chosen from the prior distribution, $p_{\mathrm{prior}}(\phi_t)$, which represents the a priori probability of the command to result in no sensory error. In other words, if the sensory feedback indicates that the pitch was likely too high, then the posterior is shifted toward motor commands that have a higher probability of producing a lower pitch and hence no sensory error, similar to how an error would be corrected in a control-theoretic approach to the same problem. We discuss this in more detail below.

Finally, the animal expects that the motor command needed to produce the target pitch with no error may change with time because of slow random changes in the motor plant. In other words, in the absence of new sensory information, the animal must increase its uncertainty about which command to produce with time (this is a direct analogue of increase in uncertainty of the Kalman filter without new measurements). Such increase in uncertainty is given by $p(\phi_{t+\Delta t} \mid \phi_t)$, the propagator of statistical field theories (46). Overall, this results in the distribution of motor outputs after one cycle of the model

$$p_{\mathrm{prior}}(\phi_{t+\Delta t}) = \frac{1}{Z} \int p(\phi_{t+\Delta t} \mid \phi_t)\, p(s_t \mid \phi_t)\, p_{\mathrm{prior}}(\phi_t)\, d\phi_t, \tag{1}$$

where $Z$ is the normalization constant.

We choose $\Delta t$ to be 1 d in our implementation of the model and lump all vocalizations (which we record) and all sensory feedback (which are unknown) in one time period together. That is, we look at timescales of changes across days, rather than faster fluctuations on timescales of minutes or hours. This matches the temporal dynamics of the learning curves (Fig. 2 A and B). Since the bird sings hundreds of song bouts daily, we now use the law of large numbers and replace the unknown sensory feedback for individual vocalizations by its expectation value, $s_t^{(i)} \to \bar{s}_t^{(i)}$. For simplicity, we focus on just two sensory modalities, the first one affected by the headphones and the second one not affected, and we remain agnostic about the exact nature of this second modality among the possibilities noted above. Thus, the expectation values of the feedbacks are the shifted and the unshifted versions of the expected value of the sung pitch, $\bar{s}_t^{(1)} = \phi_t - \Delta$ and $\bar{s}_t^{(2)} = \phi_t$, where $-\Delta$ is the experimentally induced shift (more on the minus sign below). Note that since $\phi_t$ is the motor command that the animal expects to produce the target pitch, the term $p(s_t \mid \phi_t)$ should be viewed as the probability of generating the feedback given that $\phi_t$ was the correct motor command or as a likelihood of $\phi_t$ being the correct command given the observed $s_t$. This introduces a negative sign, the compensation, into the analysis: for a positive $s_t$, the most likely $\phi_t$ to lead to the target is negative and vice versa. While potentially confusing, this is the same convention that is used in all filtering applications: a positive sensory signal means the need to compensate and to lower the motor command, and the negative signal leads to the opposite. In other words, the bird uses the sensory feedback to determine what it should have sung and not only what it sang. With that, we refer to the conditional probability distributions for each sensory modality as the likelihood functions for a certain motor command being the target given the observed sensory feedback. Thus, assuming that both sensory inputs are independent measurements of the motor output, we rewrite Eq. 1 as

$$p_{\mathrm{prior}}(\phi_{t+\Delta t}) = \frac{1}{Z} \int p(\phi_{t+\Delta t} \mid \phi_t)\, L_\Delta(\phi_t)\, L_0(\phi_t)\, p_{\mathrm{prior}}(\phi_t)\, d\phi_t, \tag{2}$$

where the subscripts 0 and $\Delta$ represent the centers of the likelihoods (or the maximum likelihoods). This explains our choice of denoting the experimental shift $-\Delta$, so that the compensation by the animal is instead $+\Delta$, and $L_\Delta$ is centered on $\Delta$ as well. Note that the likelihoods for the shifted and unshifted modalities are centered around $\Delta$ and 0, respectively, and bias the learning of what should be sung toward these centers irrespective of the current value of $\phi_t$. We emphasize again that, in this formalism, we do not distinguish the motor noise and the sensory noise and assume that both are smaller than the deliberate exploratory variance (which is supported by the substantial variance reduction in directed vs. undirected song). This is consistent with not distinguishing individual vocalizations and focusing on time steps of 1 d in the Bayesian update equation above.
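The two-step update of Eq. 2 can be sketched numerically on a discretized axis of motor commands. The snippet below is only an illustration under stated assumptions, not the fitting procedure behind the figures: the grid, the widths `w_like` and `w_kernel`, the 200-step burn-in used to reach a baseline prior, and all function names are hypothetical, and a Cauchy density stands in for the fitted heavy-tailed distributions.

```python
import numpy as np

PHI = np.linspace(-8.0, 8.0, 801)   # assumed grid of motor commands (semitones)
DPHI = PHI[1] - PHI[0]

def cauchy(x, center, scale):
    """Cauchy density, used here as a simple heavy-tailed example."""
    return scale / (np.pi * ((x - center) ** 2 + scale ** 2))

def gauss(x, center, scale):
    """Gaussian density, used as the light-tailed comparison."""
    return np.exp(-0.5 * ((x - center) / scale) ** 2) / (scale * np.sqrt(2.0 * np.pi))

def normalize(p):
    """Rescale a sampled density so that it integrates to one on the grid."""
    return p / (p.sum() * DPHI)

def filter_step(prior, likelihood, kernel):
    """One cycle: Bayes update with the likelihood, then convolution with the propagator."""
    posterior = normalize(prior * likelihood)
    return normalize(kernel @ posterior * DPHI)

def fractional_compensation(shape, delta, days=14, burn_in=200,
                            w_like=0.3, w_kernel=0.1):
    """Mean of the daily prior divided by the shift, for `days` days after shift onset."""
    # kernel[i, j] is the propagator density of moving from PHI[j] to PHI[i].
    kernel = shape(PHI[:, None], PHI[None, :], w_kernel)

    def likelihood(shift):
        # Product of the shifted-channel and unshifted-channel likelihoods.
        return shape(PHI, shift, w_like) * shape(PHI, 0.0, w_like)

    # Baseline prior: iterate the unperturbed update until it stops changing much.
    prior = normalize(np.ones_like(PHI))
    baseline = likelihood(0.0)
    for _ in range(burn_in):
        prior = filter_step(prior, baseline, kernel)

    shifted = likelihood(delta)
    curve = []
    for _ in range(days):
        prior = filter_step(prior, shifted, kernel)
        curve.append(np.sum(PHI * prior) * DPHI / delta)
    return np.array(curve)

# Final-day fractional compensation for a small and a large shift.
final_day = {
    (name, delta): float(fractional_compensation(shape, delta)[-1])
    for name, shape in (("gauss", gauss), ("cauchy", cauchy))
    for delta in (0.5, 3.0)
}
for key, value in final_day.items():
    print(key, round(value, 3))
```

With these assumed widths, the Gaussian variant compensates by the same fraction for both shift sizes, whereas the heavy-tailed variant compensates by a smaller fraction for the larger shift, which is the qualitative pattern the model is meant to capture.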

As illustrated in Fig. 1B, such Bayesian filtering behaves differently for Gaussian and heavy-tailed likelihoods and propagators. Indeed, if the two likelihoods are Gaussians, their product is also a Gaussian centered between them. In this case, the learning speed of an animal is linear in the error $\Delta$, no matter how large this error is, which conflicts with the experimental results in songbirds and other species (5, 8, 22, 36). Similarly, if the two likelihoods have long tails, then when the error is small, their product is also a single-peaked distribution as in the Gaussian case. However, when the error size is large, the product of such long-tailed likelihoods is bimodal, with evidence peaks at the shifted and the unshifted values, with a valley in the middle. Since the prior expectations of the animal are developed before the sensory perturbation is turned on, they peak near the unshifted value. Multiplying the prior by the likelihood then leads to suppression of the shifted peak and hence of large error signals in animal learning.
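The contrast between the two cases can be checked directly. For two Gaussian likelihoods of equal width centered at 0 and at the shift, the product is a single Gaussian centered midway between them. For two Cauchy likelihoods of equal scale, a short calculation shows that the product has two peaks exactly when the separation exceeds twice the scale. The sketch below counts peaks numerically; the Cauchy shape, the width of 0.3 semitone, the grid, and the function names are assumptions chosen for illustration.

```python
import numpy as np

def count_peaks(values):
    """Number of strict interior local maxima of a sampled curve."""
    inner = values[1:-1]
    return int(np.sum((inner > values[:-2]) & (inner > values[2:])))

def likelihood_product(x, delta, width, heavy_tailed):
    """Unnormalized product of two equal-width likelihoods centered at 0 and delta."""
    def one(center):
        if heavy_tailed:
            return 1.0 / ((x - center) ** 2 + width ** 2)       # Cauchy shape
        return np.exp(-0.5 * ((x - center) / width) ** 2)       # Gaussian shape
    return one(0.0) * one(delta)

x = np.linspace(-5.0, 8.0, 13001)
for delta in (0.5, 3.0):
    for heavy_tailed in (False, True):
        n = count_peaks(likelihood_product(x, delta, 0.3, heavy_tailed))
        print(f"shift={delta}, heavy_tailed={heavy_tailed}: {n} peak(s)")
```

Only the heavy-tailed product with the large shift is bimodal, so only in that case can the baseline prior suppress the peak at the shifted value.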

In Eq. 2, there are three distributions to be defined: $L_\Delta(\phi_t)$, $L_0(\phi_t)$, and $p(\phi_{t+\Delta t} \mid \phi_t)$, corresponding to the evidence term from the shifted channel, the evidence term from the unshifted channel, and the time propagation kernel, respectively. The prior at the start of the experiment ($t = 0$), $p_{\mathrm{prior}}(\phi_0)$, is not an independent degree of freedom: It is the steady state of the recurrent application of Eq. 2 with no perturbation, $\Delta = 0$. We have verified numerically that a wide variety of shapes of $L_\Delta$, $L_0$, and the propagator result in learning dynamics that can approximate the experimental data (Materials and Methods). To constrain the selection of specific functional forms of the distributions, we point out that the error in sensory feedback obtained by the animal is a combination of many noisy processes, including both sensing itself and the neural computation that extracts the pitch from the auditory input and then compares it to the target pitch. By the well-known generalized central limit theorem, the sum of these processes is expected to converge to what are known as Lévy alpha-stable distributions, often simply called stable distributions (47) (Materials and Methods). If the individual noise sources have finite variances, the stable distribution will be a Gaussian. However, if the individual sources have heavy tails and infinite variances, then their stable distribution will be heavy tailed as well (Cauchy distribution is one example). Most stable distributions cannot be expressed in a closed form, but they can be evaluated numerically (Materials and Methods). Here we assume symmetric stable distributions, truncated at semitones (Materials and Methods). Such distributions are characterized by three parameters: the stability parameter $\alpha$ (measuring the proportion of the probability in the tails), the scale or width parameter, and the location or the center parameter (the latter can be predetermined to be 0, $\Delta$, or the previous time-step value in our case). For the three distributions $L_\Delta$, $L_0$, and $p(\phi_{t+\Delta t} \mid \phi_t)$, each with a free stability and a free scale parameter, this results in the total of six unknown parameters.
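One generic way to evaluate a symmetric stable density numerically is to invert its characteristic function, which for stability parameter `alpha` and scale `scale` is `exp(-|scale * k|**alpha)`. The sketch below does this with a plain trapezoidal rule and then renormalizes the density on a finite grid. It is a minimal illustration and not necessarily the method used in Materials and Methods: the function names, the frequency cutoff `k_max`, the number of frequency points, and the truncation range in the usage lines are all assumptions, and the cutoff is only adequate when `exp(-(scale * k_max)**alpha)` is negligible.

```python
import numpy as np

def symmetric_stable_pdf(x, alpha, scale, k_max=100.0, n_k=10001):
    """Symmetric stable density from Fourier inversion of exp(-|scale * k|**alpha)."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    k = np.linspace(0.0, k_max, n_k)
    dk = k[1] - k[0]
    integrand = np.exp(-(scale * k) ** alpha) * np.cos(np.outer(x, k))
    # Trapezoidal rule over k >= 0; the integrand is even in k, hence the 1/pi prefactor.
    integral = dk * (integrand.sum(axis=1)
                     - 0.5 * (integrand[:, 0] + integrand[:, -1]))
    return integral / np.pi

def truncate_and_normalize(pdf_values, x):
    """Renormalize sampled density values so that they integrate to one over the grid x."""
    dx = x[1] - x[0]
    return pdf_values / (pdf_values.sum() * dx)

# Usage: a heavy-tailed density restricted to an assumed range of +/- 6 semitones.
grid = np.linspace(-6.0, 6.0, 241)
heavy = truncate_and_normalize(symmetric_stable_pdf(grid, alpha=1.5, scale=0.3), grid)
```

Two limiting cases give a quick sanity check: `alpha = 2` yields a Gaussian with variance `2 * scale**2`, and `alpha = 1` yields a Cauchy density with the same scale.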

Fits to Data.

We fitted the set of six parameters of our model simultaneously to all of the data shown in Fig. 2. Our dataset consists of 23 individual experiments across five experimental conditions: four constant pitch-shift learning curves and one gradual, staircase-shift learning curve (see Materials and Methods for details). As mentioned previously, birds learn best (larger and faster compensation) for smaller perturbations, here 0.5 semitone (Fig. 2A). In contrast, for a large 3-semitone perturbation, the birds do not compensate at all within the 14 d of the experiment. However, the birds are able to learn and compensate for large perturbations when the perturbation increases gradually, as in the staircase experiment in Fig. 2B. Importantly, the baseline distribution (Fig. 2C) has a robust non-Gaussian tail, supporting our model. We note that our six-parameter model fits are able to simultaneously describe all of these data with a surprising precision, including their most salient features: dependence of the speed and the magnitude of the compensation on the perturbation size for the constant and the staircase experiments, as well as the heavy tails in the baseline distribution.

Predictions.

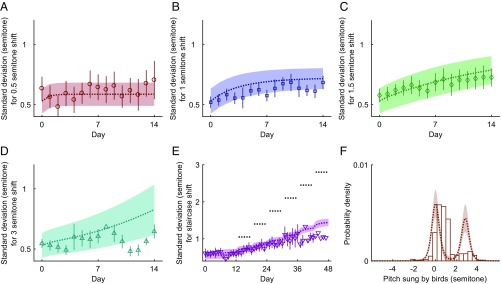

Mathematical models are useful to the extent that they can predict experimental results not used to fit them. Quantitative predictions of qualitatively new results are particularly important for arguing that the model captures the system’s behavior. To test the predictive power of our model, we used it to predict the dynamics of higher-order statistics of pitches during learning, rather than using it to simply predict the mean behavior. We first use the model to predict time-dependent measures of the variability (SD in this case) of the pitch. As shown in Fig. 3 A–E, our model correctly predicted time-dependent behaviors in the SD in both single-shift (Fig. 3 A–D) and staircase-shift experiments (Fig. 3E) with surprising accuracy. We stress again that no new parameter fits were done for these curves. Potentially even more interesting, Fig. 3F shows that our model is capable of predicting unexpected features of the probability distribution of pitches, such as the asymmetric and bimodal structure of the pitch distribution at the end of the staircase-shift experiment. This bimodal structure is predicted by our theory, since the theory posits that the (bimodal) likelihood distribution (Fig. 1B, Bottom) will iteratively propagate into the observable pitch distribution (the prior). The existence of the bimodal pitch distribution in the data therefore provides strong evidence in support of our theory. Importantly, this phenomenon can never be reproduced by models based on animals learning a single motor command with Gaussian noise around it, rather than a heavy-tailed distribution of motor commands.

Fig. 3.

Predictions of our model using the parameter values obtained from fitting the data shown in Fig. 2. The dots with error bars (A–E) and the histogram (F) represent experimental data with colors, symbols, error bars (from bootstrapping), and other plotting conventions as in Fig. 2. The dotted lines with 1-SD bands represent model predictions. Our model correctly predicts the behaviors of the SDs of the pitch distributions. Specifically, the best-fit model lines predict increases in the SD in B, C, and E, which correspond to 1 semitone, 1.5 semitones, and the staircase shift, respectively. At the same time, the data show that the SD increases for B, C, and E (P value for a positive dependence of the SD when regressed on time is , , and for B, C, and E, respectively). (F) Our model predicts that, at the end of the staircase experiment (mean and SD shown in Figs. 2B and 3E, respectively), the pitch distribution should be bimodal, while it is unimodal initially (compare Fig. 2C). This is also supported by the data. Specifically, a fit with a mixture of two Gaussian peaks has an Akaike’s information criterion score higher than a fit to a single Gaussian by 50 (in decimal log units), which is highly statistically significant (the data here are from day 47 from the single bird who was exposed to the staircase shift for the longest time, and the amount of data is insufficient to fit more complex distributions). Further, the two peaks are centered far from each other ( of a semitone and semitones, with error bars obtained by bootstrapping), illustrating the true bimodality. Neither the data nor the models show unambiguous bimodality in other learning cases.

Discussion

We introduced a mathematical framework within the class of observation–evolution models (35) for understanding sensorimotor learning: a dynamical Bayesian filter with non-Gaussian (heavy-tailed) distributions. Our model describes the dynamics of the whole probability distribution of the motor commands, rather than just its mean value. We posit that this distribution controls the animal’s deliberate exploration of plausible motor commands. The model reproduces the learning curves observed in a range of songbird vocal adaptation experiments, which classical behavioral theories have not been able to do to date. Further, also unlike the previous models, our approach predicts learning-dependent changes in the width and shape of the distribution of the produced behaviors.

To further increase the confidence in our model, we show analytically (Materials and Methods) that traditional linear models with Gaussian statistics (13) cannot explain the different levels of compensation for different perturbation sizes. While we cannot exclude that birds would continue adapting if exposed to perturbations for longer time periods and would ultimately saturate at the same level of adaptation magnitude, the Gaussian models are also argued against by the shape of the pitch distribution, which shows heavy tails (Figs. 2C and 3F), and by our ability to predict not just the mean pitch, but the whole pitch distribution dynamics during learning.

An important aspect of our dynamical model is its ability to reproduce multiple different timescales of adaptation (Fig. 2 A and B) using a nonlinear dynamical equation with just a single timescale of the update (1 d). As with other key aspects of the model, this phenomenon results from the non-Gaussianity of the distributions used and specifically the many timescales built into a single long-tailed kernel of uncertainty increase over time. This is in contrast to other multiscale models that require explicit incorporation of many timescales (7, 13). While multiple timescales could be needed to account for other features of the adaptation, our model clearly avoids this for the present data. We expect that an extension of our model to include multiple explicit timescales will account for individual differences across animals, for the dynamics of acquisition of the song during development, and for the slight shift of the peak of the empirical distribution in Fig. 3F from $\phi = 0$.

Previous analyses of the speed and magnitude of learning in the Bengalese finch have noted that both depend on the overlap of the distribution of the natural variability at the baseline and at the shifted means (25, 39): Small overlaps result in slower and smaller learning, so that different overlaps lead to different timescales. However, these prior studies have not provided a computational mechanism or a learning–theoretic explanation of why or how such overlap might determine the dynamics of learning. Our dynamical inference model provides such a computational mechanism.

We have chosen the family of so-called Lévy alpha-stable distributions to provide the central ingredient of our model: the heavy tails of the involved probability distributions. In general, a symmetric alpha-stable distribution has a relatively narrow peak in the center and two long fat tails, and this might provide some valuable qualitative insights into how the nervous system processes sensory inputs. For example, a narrow peak in the middle of the likelihood function suggests that the brain puts a high belief in the sensory feedback. However, the heavy tails say that it also puts certain weight (nearly constant) on the probability of very large errors outside of the narrow central region. We have verified that the actual choice of the stable distributions is not crucial for our modeling. For example, one could instead take each likelihood as a power-law distribution or as a sum of two Gaussians with equal means, but different variances. The latter might correspond to a mixture of high (narrow Gaussian) and low (wide Gaussian) levels of certainty about sensory feedback, potentially arising from variations in environmental or sensory noise or from variations in attention. As shown in Materials and Methods, different choices of the underlying distributions result in essentially the same fits and predictions. This suggests that the heavy tails themselves, rather than their detailed shape, are crucial for the model.
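To illustrate why a sum of two Gaussians with equal means but different variances can stand in for a heavy-tailed likelihood, the sketch below compares its tail probability with that of a single Gaussian of the same overall SD. The mixture weights (0.9 and 0.1) and widths (0.2 and 1.0 semitone) are hypothetical values chosen for illustration, as are the function names.

```python
import math

def mixture_sd(weights, sigmas):
    """SD of a zero-mean mixture of Gaussians."""
    return math.sqrt(sum(w * s ** 2 for w, s in zip(weights, sigmas)))

def mixture_tail_probability(threshold, weights, sigmas):
    """P(|x| > threshold) for a zero-mean mixture of Gaussians."""
    return sum(w * math.erfc(threshold / (s * math.sqrt(2.0)))
               for w, s in zip(weights, sigmas))

def excess_kurtosis(weights, sigmas):
    """Excess kurtosis of a zero-mean mixture of Gaussians (0 for a single Gaussian)."""
    second = sum(w * s ** 2 for w, s in zip(weights, sigmas))
    fourth = 3.0 * sum(w * s ** 4 for w, s in zip(weights, sigmas))
    return fourth / second ** 2 - 3.0

weights, sigmas = (0.9, 0.1), (0.2, 1.0)      # narrow (confident) and wide (uncertain) parts
sd = mixture_sd(weights, sigmas)
tail_mixture = mixture_tail_probability(4.0 * sd, weights, sigmas)
tail_gaussian = mixture_tail_probability(4.0 * sd, (1.0,), (sd,))
print(tail_mixture, tail_gaussian, excess_kurtosis(weights, sigmas))
```

The mixture puts far more probability beyond four SDs than the matched Gaussian does and has positive excess kurtosis, so it keeps a narrow central peak while still assigning appreciable weight to very large errors.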

Another extension of this work would be to use this framework to account for interindividual differences in behavior and neural activity (48, 49), which are not easily addressable given current experimental limitations. As in many other behavioral modeling studies (13, 34), the present version of our model can fit only an average animal (because of our need to aggregate large datasets to accurately estimate behavioral distributions), making our results semiquantitative with respect to the statistics of any particular individual. Nevertheless, our framework represents a class of models that can explain a broader range of qualitative results than previous efforts, including, in particular, the shape of the distribution of exploratory behaviors.

Finally, while we used Bengalese finches as the subject of this study, nothing in the model relies on the specifics of the songbird system. Our approach might, therefore, also be applied to studies of sensorimotor learning in other model systems, and we predict that any animal with a heavy-tailed distribution of motor outputs should exhibit similar phenomenology in its sensorimotor learning. Exploring whether the model allows for such cross-species generalizations is an important topic for future research, as are questions of how networks of neurons might implement such computations (50–53).

Materials and Methods

Experiments.

The data used are taken from the experiments in ref. 33 and are described in detail there. Briefly, subjects were nine male adult Bengalese finches (females do not produce song) aged over 190 d. Lightweight headphones and microphones were used to shift the perceived pitches of birds’ own songs by different amounts, and the pitch of the produced song was recorded. For each day, only data from 10 AM to 12 PM are used. The same birds were used in multiple (but not all) pitch-shift experiments separated by at least 32 d. Changes in vocal pitch were measured in semitones, which are a relative unit of the fundamental frequency (pitch) of each song syllable:

$$\text{pitch change (semitones)} = 12\,\log_2\!\left(\frac{\text{pitch}}{\text{baseline pitch}}\right)$$

The error bars reported for the group means in Fig. 2 indicate the error of the mean that accounts for variances both across individual birds and within one bird. Specifically, if $x_{ij}$ represents the pitch of the $j$th vocalization from the $i$th bird on a specific day ($i = 1, \ldots, N$; $j = 1, \ldots, n_i$), then the mean pitch for the day for each bird is $\bar{x}_i = \frac{1}{n_i}\sum_{j} x_{ij}$, and the global mean pitch is $\bar{x}$. With these, we define the error of the mean used in Fig. 2 as

| [3] |

where the first term in the square root represents the variance across birds, and the second term is the variance within one bird, averaged over the birds.

Stable Distributions.

A probability distribution is said to be stable if a linear combination of two independent variables distributed according to the distribution has the same distribution up to location and scale (47). By the generalized central limit theorem, the probability distributions of sums of a large number of i.i.d. random variables with infinite variances tend to be stable distributions (47). A general stable distribution does not have a closed-form expression, except for three special cases: Lévy, Cauchy, and Gaussian. A symmetric stable variable can be written in the form $X = \gamma Z + \mu$, where $Z$ is called the standardized symmetric stable variable and follows the distribution (47):

$$f(z; \alpha) = \frac{1}{\pi} \int_0^{\infty} e^{-t^{\alpha}} \cos(zt)\, dt \tag{4}$$

Thus, any symmetric stable distribution is characterized by three parameters: the type, or the tail weight, parameter $\alpha$; the scale parameter $\gamma$; and the center $\mu$. $\alpha$ takes the range $(0, 2]$ (47). If $\alpha = 2$, the corresponding distribution is the Gaussian, and if $\alpha = 1$, it is the Cauchy distribution. $\gamma$ can be any positive real number, and $\mu$ can be any real number. The above integral is difficult to compute numerically. However, due to the common occurrence of stable distributions in various fields, such as finance (54), communication systems (55), and brain imaging (56), there are many algorithms to compute it approximately. We used the method of ref. 57. In this method, the central and tail parts of the distribution are calculated using different algorithms: The central part is approximated by 96-point Laguerre quadrature, and the tail part is approximated by the Bergström expansion (58).
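As a rough illustration of what such a density looks like, the sketch below evaluates the standard Fourier-integral representation of the standardized symmetric stable density, f(z; α) = (1/π) ∫₀^∞ exp(−t^α) cos(zt) dt, with a plain trapezoidal rule. This is not the method of ref. 57 (Laguerre quadrature plus Bergström expansion); the function names, the cutoff `t_max`, and the step count are illustrative assumptions, and the approach is only adequate near the center of the distribution for moderate α.

```python
import math


def stable_pdf(z, alpha, t_max=60.0, n_steps=60000):
    """Density of a standardized symmetric alpha-stable variable at z.

    Evaluates (1/pi) * integral_0^t_max exp(-t**alpha) * cos(z*t) dt
    with the trapezoidal rule. t_max and n_steps are illustrative choices.
    """
    h = t_max / n_steps
    # Endpoint contributions of the trapezoidal rule (integrand equals 1 at t = 0).
    total = 0.5 * (1.0 + math.exp(-(t_max ** alpha)) * math.cos(z * t_max))
    for k in range(1, n_steps):
        t = k * h
        total += math.exp(-(t ** alpha)) * math.cos(z * t)
    return total * h / math.pi


def cauchy_pdf(z):
    """Closed form for alpha = 1 (standard Cauchy)."""
    return 1.0 / (math.pi * (1.0 + z * z))


def gaussian_pdf(z, variance):
    """Zero-mean Gaussian density with the given variance."""
    return math.exp(-z * z / (2.0 * variance)) / math.sqrt(2.0 * math.pi * variance)
```

In this standardization the α = 2 case is a Gaussian with variance 2, so `stable_pdf(z, 2.0)` can be compared with `gaussian_pdf(z, 2.0)`, and `stable_pdf(z, 1.0)` with `cauchy_pdf(z)`. Comparing `stable_pdf(6.0, 1.5)` with `gaussian_pdf(6.0, 2.0)` shows how much more weight the heavy tail carries far from the center.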

Note that even though we take the propagator and the likelihood distributions as stable distributions in our model, their iterative application (effectively, a product of many likelihood distributions iterated with a convolution with the kernel), as well as truncation, results in finite variance predictions, allowing us to compare predicted variances of the behavior with experimentally measured ones.

Fitting.

Our model consists of three truncated stable distributions: one for each of the two likelihood functions representing the feedback modalities and a third for the propagation kernel. We use truncation to ensure biological plausibility: Neither extremely large errors nor extremely large pitch changes are physiologically possible. We truncate the distributions to a range that is much larger than the imposed pitch shifts and slightly larger than the largest observed pitch fluctuations in our data, 7 semitones. This leaves us with nine parameters, of which we need to fit six from data, namely the type parameters and the scale parameters, while the center parameters are predetermined: The two likelihoods are centered at 0 and at the imposed shift, respectively, while the propagation kernel is centered around the previous time-step value (Eq. 1). The prior (and accordingly the posterior) is a discrete distribution with a resolution of 1,600 bins covering equidistantly the entire support of the distribution. The value within each bin is computed by the Bayes formalism given in Eq. 2. As described earlier in Mathematical Model, the initial prior is given as the steady-state distribution after repeatedly applying Eq. 2 to a uniform distribution. In other words, the prior is not an independent variable in the model, but it is determined self-consistently by the likelihoods and the kernel. The resulting prior distribution is compared with the empirical distribution to compute the quality of fit according to the objective functions described below. Furthermore, we point out that it is essential to have a non-Gaussian kernel: With a Gaussian kernel the baseline (prior distribution) is, essentially, Gaussian and would thus not match the data in Fig. 2D. Similarly, the likelihood functions must be long tailed; otherwise the combined likelihood would not be bimodal as found empirically in Fig. 3F, and large perturbations would not be rejected, even if the prior was long tailed (e.g., due to a long-tailed kernel).
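The grid-based procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the deposited code: Eqs. 1 and 2 are not reproduced here, so the order of operations (propagate with the kernel, then multiply by both likelihoods and normalize) is assumed; Cauchy densities (the α = 1 stable case) stand in for the fitted truncated stable distributions because they have a closed form; and the grid size, range, and scale values in the usage note are hypothetical.

```python
import math


def cauchy(x, center, scale):
    """Cauchy density, used here as a closed-form heavy-tailed stand-in."""
    u = (x - center) / scale
    return 1.0 / (math.pi * scale * (1.0 + u * u))


def normalize(p):
    """Rescale a list of nonnegative weights so that it sums to 1."""
    s = sum(p)
    return [v / s for v in p]


def make_grid(half_range, n_bins):
    """Equidistant grid on [-half_range, half_range]; truncation is implicit."""
    step = 2.0 * half_range / (n_bins - 1)
    return [-half_range + i * step for i in range(n_bins)]


def bayes_step(prior, grid, shift, lik_scale_shifted, lik_scale_unshifted, kernel_scale):
    """One assumed update: kernel propagation followed by both likelihoods."""
    n = len(grid)
    # The kernel is centered on the previous value; mass leaving the grid is dropped.
    propagated = [
        sum(prior[j] * cauchy(grid[i], grid[j], kernel_scale) for j in range(n))
        for i in range(n)
    ]
    posterior = [
        propagated[i]
        * cauchy(grid[i], shift, lik_scale_shifted)
        * cauchy(grid[i], 0.0, lik_scale_unshifted)
        for i in range(n)
    ]
    return normalize(posterior)


def steady_state_prior(grid, lik_scale_shifted, lik_scale_unshifted, kernel_scale, n_iter=30):
    """Iterate the unshifted update, starting from a uniform distribution."""
    p = normalize([1.0] * len(grid))
    for _ in range(n_iter):
        p = bayes_step(p, grid, 0.0, lik_scale_shifted, lik_scale_unshifted, kernel_scale)
    return p


def mean(p, grid):
    """Mean of a discrete distribution p defined on grid."""
    return sum(pi * x for pi, x in zip(p, grid))
```

For example, `steady_state_prior(make_grid(8.0, 101), 0.5, 1.0, 0.2)` yields a self-consistent baseline distribution, and one further call to `bayes_step` with a nonzero `shift` moves its mean toward the shifted likelihood. A pure-Python double loop is used for clarity only; a 1,600-bin grid would call for a vectorized or GPU implementation.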

We construct an objective function that is a sum of terms representing the quality of fit for each of the three datasets at our disposal: the $\chi^2$ for four adaptations of the means to the respective constant shifts (Fig. 2A), the $\chi^2$ for the adaptation of the mean to the staircase shifts (Fig. 2B), and the log-likelihood of the observed baseline pitch probability distribution (Fig. 2C). Using Eq. 3 to define the error of the mean, we calculate $\chi^2$ as

| [5] |

where the sum runs over the days of a specific experiment, and each term compares the theoretical result with the mean of the experimental data on that day, in units of its SE. To make sure that all three terms contribute on about the same scale to the objective function, we multiply the baseline fit term by 10. We use this objective function because the data we fit are heterogeneous: For learning with a sensory perturbation, we use only the means and the error bars of the produced pitch curves, while for the baseline distribution, we use the whole distribution. We choose to do it this way because it is computationally intensive to calculate likelihoods for distributions of vocalizations for every day and to do it repeatedly for parameter sweeps. Given that we are fitting only a handful of parameters, while the datasets are very constraining, we do not think that we lose accuracy by fitting the summary statistics instead of performing a full maximum-likelihood estimation.
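The structure of such a combined objective can be sketched as below. This is an assumption-laden illustration rather than the paper's exact Eq. 5: the χ² term is taken as an unnormalized sum of squared deviations in units of the SE, the baseline term is taken as a negative log-likelihood per sample of binned pitches, and all function and argument names are hypothetical. Only the factor of 10 on the baseline term comes from the text.

```python
import math


def chi_square(theory, data_mean, data_se):
    """Sum over days of squared (theory - mean) deviations in units of the SE."""
    return sum(((th - m) / se) ** 2 for th, m, se in zip(theory, data_mean, data_se))


def baseline_neg_log_likelihood(model_probs, counts):
    """Negative log-likelihood per sample of binned baseline pitches (assumed form)."""
    n = sum(counts)
    return -sum(c * math.log(p) for c, p in zip(counts, model_probs) if c > 0) / n


def objective(constant_shift_fits, staircase_fit, baseline_fit, baseline_weight=10.0):
    """Sum of the three terms; each fit is a tuple of arguments for its helper."""
    total = sum(chi_square(*fit) for fit in constant_shift_fits)
    total += chi_square(*staircase_fit)
    total += baseline_weight * baseline_neg_log_likelihood(*baseline_fit)
    return total
```

Here `constant_shift_fits` would hold one `(theory, data_mean, data_se)` tuple per constant-shift experiment, and smaller values of `objective` indicate a better fit.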

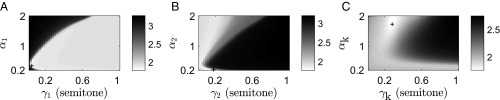

The objective function landscape is not trivial in this case, and there is not a single best set of parameters. Fig. 4 illustrates this by showing the quality of fit as a function of each pair of type and scale parameters, while keeping the other four parameters fixed. There is a large subspace (a plateau or a long nonlinear valley, depending on the projection used) that provides similar fit values. In other words, the effective number of important parameters is less than six. Thus, choosing the maximum of the objective function and characterizing the error ellipsoid, or linear sensitivity to the parameters, to get the best-fit parameter values and their uncertainties is not appropriate. As suggested in the literature on sloppy models (59, 60), where such nontrivial likelihood landscapes are discussed, we focus instead on values and uncertainties of the fits and predictions themselves. For this, we sweep through the entire parameter space and, for each set of parameters, we calculate the value of the objective function and the corresponding fitted or predicted curve. Then for the mean fits/predictions (lines in Figs. 2, 3, and 5), we have

| [6] |

For the SDs, denoted by shaded regions in Figs. 2, 3, and 5, we have

| [7] |
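One common way to turn a parameter sweep into a mean curve and an uncertainty band is to weight each parameter set by exp(−objective). The sketch below assumes this Boltzmann-like weighting, which may differ from the exact form of Eqs. 6 and 7; the function name and the data layout (one curve per parameter set, stored as a list of values over days) are hypothetical.

```python
import math


def weighted_mean_and_sd(objective_values, curves):
    """Weighted mean and SD of curves, one curve per sampled parameter set.

    Weights are exp(-L), shifted by the smallest L for numerical stability
    (the shift cancels in the normalized averages).
    """
    l_min = min(objective_values)
    weights = [math.exp(-(l - l_min)) for l in objective_values]
    z = sum(weights)
    n_points = len(curves[0])
    mean = [
        sum(w * c[k] for w, c in zip(weights, curves)) / z
        for k in range(n_points)
    ]
    var = [
        sum(w * (c[k] - mean[k]) ** 2 for w, c in zip(weights, curves)) / z
        for k in range(n_points)
    ]
    return mean, [math.sqrt(v) for v in var]
```

With this weighting, parameter sets on the plateau of similar fit quality all contribute to the band, while poorly fitting sets are suppressed exponentially.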

There are many ways of doing the sweep over the parameters. Here we choose first to find a local minimum (however shallow it is). Then for each parameter, we choose six data points on each side of the minimum, distributed uniformly in the log space between the local minimum and the extremal parameter values. The extremal values avoid singular parameter values, which dramatically slow down computations. Thus, there are a total of 13 grid points for each parameter and a total of $13^6 \approx 4.8 \times 10^6$ parameter samples.
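The per-parameter grid described above can be sketched as follows. Whether the extremal values themselves are included as grid points is an assumption of this sketch, and the function name and the numerical bounds in the usage note are hypothetical.

```python
def log_grid(lower, best, upper, n_side=6):
    """Grid with n_side log-spaced points on each side of best, plus best itself.

    Points are uniform in log space between best and each extreme; the
    extremes are included as the outermost points. All inputs must be positive.
    """
    below = [best * (lower / best) ** (k / n_side) for k in range(n_side, 0, -1)]
    above = [best * (upper / best) ** (k / n_side) for k in range(1, n_side + 1)]
    return below + [best] + above


# Hypothetical bounds and minimum for one scale parameter.
grid = log_grid(0.01, 0.3, 10.0)
n_samples = len(grid) ** 6  # full sweep over six parameters with 13 points each
```

Because the full sweep has 13 points in each of six dimensions, iterating over it lazily (for example with `itertools.product`) avoids holding all parameter combinations in memory at once.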

Fig. 4.

(A–C) Objective function as a function of the two parameters (stability and scale) for (A) the first (shifted) likelihood, (B) the second (unshifted) likelihood, and (C) the propagation kernel, while the respective other four parameters are held fixed. The gray shades represent the decimal logarithm of the objective function (effectively, logarithms of the negative log-likelihood), and lighter shades mean a better fit. Because of the logarithmic scaling, small changes in the shading represent large changes in the quality of the fit. The black crosses show the parameter values for the deepest local minimum in this range of parameters. Note that, even though the minimum in C is close to the Gaussian kernel ($\alpha = 2$), a Gaussian kernel cannot fit the data well. Specifically, it cannot reproduce a non-Gaussian distribution of the baseline pitch, instead essentially matching the parabola in Fig. 2C.

Fig. 5.

Fits and predictions with the power-law family of heavy-tailed distributions instead of the stable distribution family. (A–C) Equivalent to the panels in Fig. 2 A–C. (D–F) Equivalent to the panels in Fig. 3 C, E, and F. The shaded areas around the theoretical curves represent confidence intervals for 1 SD. The quality of all of the five fitted mean compensation curves combined is /df , so that the truncated stable distributions used in the main text provide for (slightly) better fits. At the same time, the deviance of the fitted baseline distribution in C relative to the perfect fit, estimated as the NSB entropy of the data (37, 38), is 0.022 per sample point, slightly better than for the truncated stable distribution model (Fig. 2).

Choice of the Shape of Distributions.

For Figs. 2 and 3 in the main text, we have chosen stable distributions for the two likelihoods and the propagation kernel. To investigate effects of this choice, we repeated the fitting and the predictions for different distribution choices. We consider a family of power-law distributions and a family of mixtures of Gaussians of different widths. Distributions in either family produce very similar fits to the stable distribution model. For example, Fig. 5 shows the fits and predictions for the power-law distribution model: The power-law family fits the means of the pitch compensation data slightly worse, but the baseline pitch distribution slightly better, than the truncated stable distribution model (Fig. 2). The detailed shape of the distributions seems less important than the existence of the heavy tails.
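To see why a mixture of two Gaussians with equal means and different widths can play the role of a heavy-tailed distribution, the sketch below computes its kurtosis in closed form and its density far from the center. The parameterization (a weight for the narrow component and two SDs) and the function names are assumptions for illustration; the tails of such a mixture are heavier than those of a single Gaussian of the same variance, although they are not power-law tails.

```python
import math


def gaussian_pdf(x, sd):
    """Zero-mean Gaussian density with standard deviation sd."""
    return math.exp(-x * x / (2.0 * sd * sd)) / (sd * math.sqrt(2.0 * math.pi))


def mixture_pdf(x, weight_narrow, sd_narrow, sd_wide):
    """Density of a zero-mean mixture of a narrow and a wide Gaussian."""
    return (weight_narrow * gaussian_pdf(x, sd_narrow)
            + (1.0 - weight_narrow) * gaussian_pdf(x, sd_wide))


def mixture_variance(weight_narrow, sd_narrow, sd_wide):
    """Variance of the mixture (components share the same mean)."""
    return weight_narrow * sd_narrow ** 2 + (1.0 - weight_narrow) * sd_wide ** 2


def mixture_kurtosis(weight_narrow, sd_narrow, sd_wide):
    """Fourth moment over squared variance; equals 3 for a single Gaussian."""
    fourth = 3.0 * (weight_narrow * sd_narrow ** 4
                    + (1.0 - weight_narrow) * sd_wide ** 4)
    return fourth / mixture_variance(weight_narrow, sd_narrow, sd_wide) ** 2
```

For instance, `mixture_kurtosis(0.9, 1.0, 5.0)` is well above the Gaussian value of 3, and `mixture_pdf(8.0, 0.9, 1.0, 5.0)` is far larger than the density of a single Gaussian with the same variance at the same point.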

Linear Dependence on Pitch Shift in a Kalman Filter with Multiple Timescales.

We emphasized that traditional learning models cannot account for the nonlinear dependence of the speed and the magnitude of learning on the error signal. Here we show this for one such common model, originally proposed by Körding et al. (13). This Kalman filter model belongs to the family of Bayes filters, which are dynamical models describing the temporal evolution of the probability distribution of a hidden state variable (which can be a vector or a scalar) and its update using the Bayes formula for integrating information provided by observations, which are conditionally dependent on the current state of the hidden variable. The specific attributes of a Kalman filter within the general class of Bayes filters (35) are the linearity of the temporal evolution of the hidden state (the pitch for the birds, but referred to as disturbances in ref. 13 and hereafter), the linear relation between the measurements (observations) and the hidden variable, and the Gaussian form of the measurement noise and the distribution of disturbances.

One can argue that Kalman filter models with multiple timescales may be able to account for the diversity of learning speeds in our pitch-shift experiments. We explore this in the context of an experimentally induced constant shift to one disturbance in the Kalman filter model with multiple timescales from ref. 13. If there is a constant shift, equation 3 in ref. 13 takes the form

| [8] |

The first step in the Kalman filter dynamics is the prediction

| [9] |

where is the mean disturbance vector at time given measurements up to time and with being the relaxation timescale of . We assume that the shift occurs when the disturbances have relaxed to the steady state: . Therefore, we approximate the standard Kalman filter equation describing the observation update of the expectation value of the disturbance after a measurement at time as (see ref. 35 for a detailed formal description)

| [10] |

where is the covariance matrix of the measurement noise, and is the covariance matrix of the hidden variables. does not depend on the measurement and is thus not affected by the shift . Thus, the steady-state prediction variance is given by a solution to the equation

| [11] |

where is the matrix determining the temporal evolution of the mean disturbances, Eq. 9, and is the covariance matrix of the intrinsic (temporal evolution) noise.

From Eq. 11 we see that the steady-state covariance is constant if the perturbation occurs when the system is at the steady state. We now wish to find the new steady state given the constant perturbation. Consider, for simplicity, two disturbances, each with its own timescale. The components of the steady-state covariance are

| [12] |

and we define

| [13] |

Substituting Eq. 9 in Eq. 10 we get

| [14] |

In the steady state, we get

| [15] |

| [16] |

Thus, we find that the sum of the disturbances is proportional to the shift, independent of the size of the shift, even for systems with multiple timescales.

Generalizing the result to disturbances with different timescales, we get the following equations at steady state:

| [17] |

These equations are solved by

| [18] |

which generalizes the linear dependence of learning on the shift to an arbitrary number of disturbances. Thus, this Kalman filter-based model (and similar ones) cannot explain the experimental results studied here.
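The linearity argument can also be checked numerically. The sketch below is a simplified stand-in for the model of ref. 13, not a reproduction of Eqs. 8–18: it assumes two disturbances that decay with factors 1 − 1/τ, a measurement equal to their sum plus noise, and a shift that enters as a constant measurement. The timescales, noise variances, and function names are hypothetical. Because the Kalman gain depends only on the covariances and not on the measurements, the estimated total disturbance scales linearly with the shift at every time step.

```python
def steady_state_gain(a, q, r, n_iter=2000):
    """Steady-state Kalman gain for two disturbances observed through their sum.

    a: decay factors (1 - 1/tau) per disturbance; q: process-noise variances;
    r: measurement-noise variance. Iterates the covariance recursion.
    """
    p = [[q[0], 0.0], [0.0, q[1]]]
    gain = [0.0, 0.0]
    for _ in range(n_iter):
        # Prediction: P_pred = A P A^T + Q, with diagonal A and Q.
        pp = [[a[i] * p[i][j] * a[j] + (q[i] if i == j else 0.0)
               for j in range(2)] for i in range(2)]
        # Innovation variance for the observation vector H = (1, 1).
        s = pp[0][0] + pp[0][1] + pp[1][0] + pp[1][1] + r
        gain = [(pp[i][0] + pp[i][1]) / s for i in range(2)]
        # Update: P = (I - K H) P_pred.
        p = [[pp[i][j] - gain[i] * (pp[0][j] + pp[1][j])
              for j in range(2)] for i in range(2)]
    return gain


def estimate_trajectory(shift, a, gain, n_steps=200):
    """Summed mean disturbance estimate over time for a constant measurement."""
    m = [0.0, 0.0]
    trajectory = []
    for _ in range(n_steps):
        pred = [a[0] * m[0], a[1] * m[1]]
        innovation = shift - (pred[0] + pred[1])
        m = [pred[i] + gain[i] * innovation for i in range(2)]
        trajectory.append(m[0] + m[1])
    return trajectory


# Hypothetical timescales of 5 and 50 time steps.
decay = [1.0 - 1.0 / 5.0, 1.0 - 1.0 / 50.0]
kalman_gain = steady_state_gain(decay, q=[0.01, 0.01], r=1.0)
small = estimate_trajectory(1.0, decay, kalman_gain)
large = estimate_trajectory(3.0, decay, kalman_gain)
```

Dividing `large` by `small` element by element gives a constant ratio equal to the ratio of the shifts, so in this sketch neither the speed nor the relative magnitude of adaptation depends on the size of the shift.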

Acknowledgments

We are grateful to the NVIDIA Corporation for supporting our research with donated Tesla K40 graphics processing units. This work was partially supported by NIH Brain Research through Advancing Innovative Neurotechnologies (BRAIN) Initiative Theory Grant 1R01-EB022872, James S. McDonnell Foundation Grant 220020321, NIH Grant NS084844, and NSF Grant 1456912.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

Data deposition: The code and data reported in this paper have been deposited on GitHub and are available at https://github.com/EmoryUniversityTheoreticalBiophysics/NonGaussianLearning.

References

- 1. Shadmehr R, Smith MA, Krakauer JW. Error correction, sensory prediction, and adaptation in motor control. Annu Rev Neurosci. 2010;33:89–108. doi: 10.1146/annurev-neuro-060909-153135.

- 2. McDonnell MD, Ward LM. The benefits of noise in neural systems: Bridging theory and experiment. Nat Rev Neurosci. 2011;12:415–426. doi: 10.1038/nrn3061.

- 3. Neuringer A. Operant variability: Evidence, functions, and theory. Psychon Bull Rev. 2002;9:672–705. doi: 10.3758/bf03196324.

- 4. Kao MH, Doupe AJ, Brainard MS. Contributions of an avian basal ganglia–forebrain circuit to real-time modulation of song. Nature. 2005;433:638–643. doi: 10.1038/nature03127.

- 5. Linkenhoker BA, Knudsen EI. Incremental training increases the plasticity of the auditory space map in adult barn owls. Nature. 2002;419:293–296. doi: 10.1038/nature01002.

- 6. Knudsen EI. Instructed learning in the auditory localization pathway of the barn owl. Nature. 2002;417:322–328. doi: 10.1038/417322a.

- 7. Smith MA, Ghazizadeh A, Shadmehr R. Interacting adaptive processes with different timescales underlie short-term motor learning. PLoS Biol. 2006;4:e179. doi: 10.1371/journal.pbio.0040179.

- 8. Sober SJ, Brainard MS. Adult birdsong is actively maintained by error correction. Nat Neurosci. 2009;12:927–931. doi: 10.1038/nn.2336.

- 9. Rescorla R, Wagner A. A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. In: Black A, Prokasy W, editors. Classical Conditioning II. Appleton-Century-Crofts; New York: 1972. pp. 64–99.

- 10. Joel D, Niv Y, Ruppin E. Actor–critic models of the basal ganglia: New anatomical and computational perspectives. Neural Networks. 2002;15:535–547. doi: 10.1016/s0893-6080(02)00047-3.

- 11. Sutton RS, Barto AG. Reinforcement Learning: An Introduction. 2nd Ed. MIT Press; Cambridge, MA: 2012.

- 12. Lak A, Stauffer W, Schultz W. Dopamine neurons learn relative chosen value from probabilistic rewards. eLife. 2016;5:e18044. doi: 10.7554/eLife.18044.

- 13. Kording KP, Tenenbaum JB, Shadmehr R. The dynamics of memory as a consequence of optimal adaptation to a changing body. Nat Neurosci. 2007;10:779–786. doi: 10.1038/nn1901.

- 14. Wolpert DM. Probabilistic models in human sensorimotor control. Hum Mov Sci. 2007;26:511–524. doi: 10.1016/j.humov.2007.05.005.

- 15. Gallistel CR, Mark TA, King AP, Latham PE. The rat approximates an ideal detector of changes in rates of reward: Implications for the law of effect. J Exper Psych Anim Behav Proc. 2001;27:354–372. doi: 10.1037//0097-7403.27.4.354.

- 16. Gershman SJ. A unifying probabilistic view of associative learning. PLoS Comput Biol. 2015;11:e1004567. doi: 10.1371/journal.pcbi.1004567.

- 17. Doya K, Sejnowski T. A computational model of avian song learning. In: Gazzaniga M, editor. The New Cognitive Neurosciences. 2nd Ed. MIT Press; Cambridge, MA: 2000. pp. 469–482.

- 18. Fiete I, Seung H. Birdsong learning. In: Squire L, editor. Encyclopedia of Neuroscience. Academic; Oxford: 2009. pp. 227–239.

- 19. Farries M, Fairhall A. Reinforcement learning with modulated spike timing–dependent synaptic plasticity. J Neurophys. 2007;98:3648–3665. doi: 10.1152/jn.00364.2007.

- 20. Donchin O, Shadmehr R. Linking motor learning to function approximation: Learning in an unlearnable force field. In: Dietterich T, Becker S, Ghahramani Z, editors. Advances in Neural Information Processing Systems 14. Vol 1. MIT Press; Cambridge, MA: 2001. p. 7.

- 21. van Beers RJ. Motor learning is optimally tuned to the properties of motor noise. Neuron. 2009;63:406–417. doi: 10.1016/j.neuron.2009.06.025.

- 22. Wei K, Körding K. Relevance of error: What drives motor adaptation? J Neurophysiol. 2009;101:655–664. doi: 10.1152/jn.90545.2008.

- 23. Beck JM, Ma WJ, Pitkow X, Latham P, Pouget A. Not noisy, just wrong: The role of suboptimal inference in behavioral variability. Neuron. 2012;74:30–39. doi: 10.1016/j.neuron.2012.03.016.

- 24. Wei K, Körding K. Uncertainty of feedback and state estimation determines the speed of motor adaptation. Front Comput Neurosci. 2010;4:11. doi: 10.3389/fncom.2010.00011.

- 25. Kelly CW, Sober SJ. A simple computational principle predicts vocal adaptation dynamics across age and error size. Front Integr Neurosci. 2014;8:9. doi: 10.3389/fnint.2014.00075.

- 26. Genewein T, Hez E, Razzaghpanah Z, Braun DA. Structure learning in Bayesian sensorimotor integration. PLoS Comput Biol. 2015;11:27. doi: 10.1371/journal.pcbi.1004369.

- 27. Shadmehr R, Donchin O, Hwang EJ, Hemminger SE, Rao A. Learning dynamics of reaching. In: Riehle A, Vaadia E, editors. Motor Cortex and Voluntary Movements: A Distributed System for Distributed Function. CRC Press; Boca Raton, FL: 2005. pp. 297–328.

- 28. Dayan P, Niv Y. Reinforcement learning: The good, the bad and the ugly. Curr Opin Neurobiol. 2008;18:185–196. doi: 10.1016/j.conb.2008.08.003.

- 29. Fischer BJ, Peña JL. Owl’s behavior and neural representation predicted by Bayesian inference. Nat Neurosci. 2011;14:1061–1066. doi: 10.1038/nn.2872.

- 30. Neymotin SA, Chadderdon GL, Kerr CC, Francis JT, Lytton WW. Reinforcement learning of two-joint virtual arm reaching in a computer model of sensorimotor cortex. Neural Comput. 2013;25:3263–3293. doi: 10.1162/NECO_a_00521.

- 31. Schultz W. Neuronal reward and decision signals: From theories to data. Physiol Rev. 2015;95:853–951. doi: 10.1152/physrev.00023.2014.

- 32. Robinson FR, Noto CT, Bevans SE. Effect of visual error size on saccade adaptation in monkey. J Neurophysiol. 2003;90:1235–1244. doi: 10.1152/jn.00656.2002.

- 33. Sober SJ, Brainard MS. Vocal learning is constrained by the statistics of sensorimotor experience. Proc Natl Acad Sci USA. 2012;109:21099–21103. doi: 10.1073/pnas.1213622109.

- 34. Hahnloser R, Narula G. A Bayesian account of vocal adaptation to pitch-shifted auditory feedback. PLoS ONE. 2017;12:e0169795. doi: 10.1371/journal.pone.0169795.

- 35. Kaipio J, Somersalo E. Statistical and Computational Inverse Problems. Springer; New York: 2004.

- 36. Brainard MS, Doupe A. What songbirds teach us about learning. Nature. 2002;417:351–358. doi: 10.1038/417351a.

- 37. Nemenman I, Shafee F, Bialek W. Entropy and inference, revisited. In: Dietterich T, Becker S, Ghahramani Z, editors. Advances in Neural Information Processing Systems (NIPS). Vol 14. MIT Press; Cambridge, MA: 2002. pp. 471–478.

- 38. Nemenman I. Coincidences and estimation of entropies of random variables with large cardinalities. Entropy. 2011;13:2013–2023.

- 39. Kuebrich B, Sober S. Variations on a theme: Songbirds, variability, and sensorimotor error correction. Neuroscience. 2015;296:48–54. doi: 10.1016/j.neuroscience.2014.09.068.

- 40. Hoffmann LA, Kelly CW, Nicholson DA, Sober SJ. A lightweight, headphones-based system for manipulating auditory feedback in songbirds. J Vis Exp. 2012;69:e50027. doi: 10.3791/50027.

- 41. Ölveczky BP, Andalman AS, Fee MS. Vocal experimentation in the juvenile songbird requires a basal ganglia circuit. PLoS Biol. 2005;3:e153. doi: 10.1371/journal.pbio.0030153.

- 42. Niziolek C, Nagarajan S, Houde J. What does motor efference copy represent? Evidence from speech production. J Neurosci. 2013;33:16110–16116. doi: 10.1523/JNEUROSCI.2137-13.2013.

- 43. Suthers RA, Goller F, Wild JM. Somatosensory feedback modulates the respiratory motor program of crystallized birdsong. Proc Natl Acad Sci USA. 2002;99:5680–5685. doi: 10.1073/pnas.042103199.

- 44. Liu Y, et al. Selective and divided attention modulates auditory–vocal integration in the processing of pitch feedback errors. Eur J Neurosci. 2015;42:1895–1904. doi: 10.1111/ejn.12949.

- 45. Scheerer NE, Jones JA. The predictability of frequency-altered auditory feedback changes the weighting of feedback and feedforward input for speech motor control. Eur J Neurosci. 2014;40:3793–3806. doi: 10.1111/ejn.12734.

- 46. Zinn-Justin J. Quantum Field Theory and Critical Phenomena. 4th Ed. Clarendon; Oxford: 2002.

- 47. Nolan JP. Stable Distributions - Models for Heavy Tailed Data. Birkhäuser; Boston: 2015.

- 48. Hahn A, Krysler A, Sturdy C. Female song in black-capped chickadees (Poecile atricapillus): Acoustic song features that contain individual identity information and sex differences. Behav Proc. 2013;98:98–105. doi: 10.1016/j.beproc.2013.05.006.

- 49. Mets D, Brainard M. Genetic variation interacts with experience to determine interindividual differences in learned song. Proc Natl Acad Sci USA. 2018;115:421–426. doi: 10.1073/pnas.1713031115.

- 50. Fiser J, Berkes P, Orbán G, Lengyel M. Statistically optimal perception and learning: From behavior to neural representations. Trends Cogn Sci. 2010;14:119–130. doi: 10.1016/j.tics.2010.01.003.

- 51. Buesing L, Bill J, Nessler B, Maass W. Neural dynamics as sampling: A model for stochastic computation in recurrent networks of spiking neurons. PLoS Comput Biol. 2011;7:e1002211. doi: 10.1371/journal.pcbi.1002211.

- 52. Kappel D, Habenschuss S, Legenstein R, Maass W. Network plasticity as Bayesian inference. PLoS Comput Biol. 2015;11:e1004485. doi: 10.1371/journal.pcbi.1004485.

- 53. Petrovici MA, Bill J, Bytschok I, Schemmel J, Meier K. Stochastic inference with spiking neurons in the high-conductance state. Phys Rev E. 2016;94:042312. doi: 10.1103/PhysRevE.94.042312.

- 54. Mittnik S, Paolella MS, Rachev ST. Diagnosing and treating the fat tails in financial returns data. J Empir Finance. 2000;7:389–416.

- 55. Nikias CL, Shao M. Signal Processing with Alpha-Stable Distributions and Applications, Adaptive and Learning Systems for Signal Processing, Communications, and Control. Wiley; New York: 1995.

- 56. Salas-Gonzalez D, Górriz JM, Ramírez J, Illán IA, Lang EW. Linear intensity normalization of FP-CIT SPECT brain images using the α-stable distribution. NeuroImage. 2013;65:449–455. doi: 10.1016/j.neuroimage.2012.10.005.

- 57. Belov IA. On the computation of the probability density function of α-stable distributions. Math Model Anal. 2005;2:333–341.

- 58. Bergström H. On some expansions of stable distribution functions. Ark Mat. 1952;2:375–378.

- 59. Gutenkunst R, et al. Universally sloppy parameter sensitivities in systems biology models. PLoS Comput Biol. 2007;3:1871–1878. doi: 10.1371/journal.pcbi.0030189.

- 60. Transtrum M, et al. Perspective: Sloppiness and emergent theories in physics, biology, and beyond. J Chem Phys. 2015;143:010901. doi: 10.1063/1.4923066.