Abstract

To determine the effects of gamification on student education, researchers implemented “Kaizen,” a software-based knowledge competition, among a first-year class of undergraduate nursing students. Multiple-choice questions were released weekly or bi-weekly during two rounds of play. Participation was voluntary, and students could play the game using any Web-enabled device. Analyses of data generated from the game included: (1) descriptive, (2) logistic regression modeling of factors associated with user attrition, (3) generalized linear mixed model for retention of knowledge, and (4) analysis of variance of final exam performance by play styles. Researchers found a statistically significant increase in the odds of a correct response (1.8, 1.0-3.4) for a Round 1 question repeated in Round 2, suggesting retention of knowledge. They also found statistically significant differences in final exam performance among different play styles.

To maximize the benefits of gamification, researchers must use the resulting data both to power educational analytics and to inform nurse educators how to enhance student engagement, knowledge retention, and academic performance.

Keywords: Analytics, digital data, gamification, undergraduate nursing education

BACKGROUND AND SIGNIFICANCE

Engaging adult learners while promoting effective learning outcomes can be a daunting task for many nursing faculty. Undergraduate nursing students must master numerous skills and apply new information in a brief period before they are launched into health care settings as brand new nurses. As a result, nursing instructors may hesitate to try new strategies for fear of losing valuable learning time or receiving poor student evaluations. Many nurse educators may resort to lectures, PowerPoint presentations, and competency checklists, teaching tools that students often find dull and boring.1 This gap in teaching and learning can be understood through the lens of adult learning theory, in that nursing students are adults who typically prefer to direct their own learning in ways that are meaningful to them.1

Technology is also an integral part of the lives of most contemporary students, and most expect access to course materials for college classes through some form of technological device. As students born around the turn of the millennium continue to enter higher education, their familiarity with smartphones and technology presents new opportunities to enhance traditional education.2 One way to use computers, tablets, and smartphones to enhance student learning is through gamification. Gamification refers to the use of game design elements in non-game contexts.2 Game elements, such as scoreboards or badges to provide visual measurement of student progress or to reward achievement, can increase engagement and motivation for learners in varied settings.2–9 In addition, software-based game initiatives provide a real-time data trail that can mathematically monitor and uncover opportunities for new insights into student learning.

Educational games that incorporate quizzes may encourage long-term retention of material.10 The use of multiple-choice questions (MCQs) for formative assessment can help students prepare for high-stakes exams and is helpful to students with different learning styles.11 Finally, completing MCQs in the context of a game allows learners to practice for summative assessments while increasing opportunities for engagement and motivation.12

OBJECTIVE

For this study, instructors used gamification as a strategy to increase engagement and to enhance student learning. They implemented “Kaizen,” a software-based knowledge competition among undergraduate students enrolled in the first semester of a baccalaureate nursing program. This article reports on student engagement with the game, investigates factors associated with cessation of play (attrition), and explores the impact on knowledge retention and final exam grades across different levels of student engagement within the Kaizen game.

MATERIALS AND METHODS

Setting and participants

The study was conducted at a school of nursing (SON) in the southeastern United States between August 27, 2015, and April 26, 2016. The university’s Institutional Review Board approved this project. Students enrolled in a first-semester nursing skills course (course 1) were invited to participate during the orientation session in the fall semester of 2015 (n=133). Students were informed that their participation was voluntary and that they could choose an alias to maintain anonymity. Prior to the orientation session, faculty randomly divided students into teams (n=18) of seven to eight students each. They provided the students with a link for access to the game and announced that it would open on August 27, 2015 and close on December 5, 2015 (Round 1, 101 days).

Round 2 began in the spring semester of 2016 with students who enrolled in the second level skills course (course 2); it opened on January 21, 2016 and closed on April 26, 2016 (97 days). One to six questions were released once or twice a week during the 2015-2016 Kaizen SON game. These questions were structured to help students practice for the final exam, which was the only cognitive exam given in the course. All other evaluation methods for the course were skills validations.

Game description and structure

The Web-based software “Kaizen” draws its name from a Japanese word meaning continuous improvement. It was developed in 2012 as part of a collaborative Clinical and Translational Science Award (CTSA) as a way to enhance learning among medical residents in the setting of newly recommended work hour restrictions. Faculty at the SON adapted the Kaizen gaming platform for nursing students in 2014. Faculty use Kaizen to deliver questions at predetermined intervals to students competing as individual players whose scores contribute to team scores. Immediate feedback, including a rationale for the correct answer, engages participants in learning. For example, the basic question, “What is the correct amount of time to rub hands together while washing with soap and water?” provided a rationale for the correct answer (a minimum of 15 seconds) that cited Centers for Disease Control guidelines.

Badges for milestone and achievement levels are awarded as players rise in rank, providing positive reinforcement. Leaderboards display individual and team progress, encouraging students to compete against each other to win rounds (see Figure 1). The Kaizen game can be accessed from the participant’s phone, tablet, or computer using a password-protected link. Once participants log in, they can change their user names to remain anonymous. The Kaizen software records participant- and question-level data that enable subsequent analyses of educational outcomes.

FIGURE 1.

Badges for milestone and achievement levels are awarded as players rise in rank, providing positive reinforcement. Leaderboards display individual and team progress, encouraging students to compete against each other to win rounds.

Before the games started, the game manager planned the game structure, which included setting the total number of questions and their value, and deciding which badges could be earned (eg, Marathon badges to reward consecutive days of logging in, Hotstreak badges to reward consecutive correct responses, and Level badges or threshold scores needed before “going up a level”). The game manager also emailed a “status of competition” message to all players on an approximately weekly basis, highlighting current scores for individuals and teams with the purpose of enhancing engagement and catalyzing competition. The game manager made random team assignments among students. Students who did not progress in the program from the first to the second semester were not eligible to participate in Round 2 of the game. Students who played only in Round 2 were considered new and joined teams through random assignment.

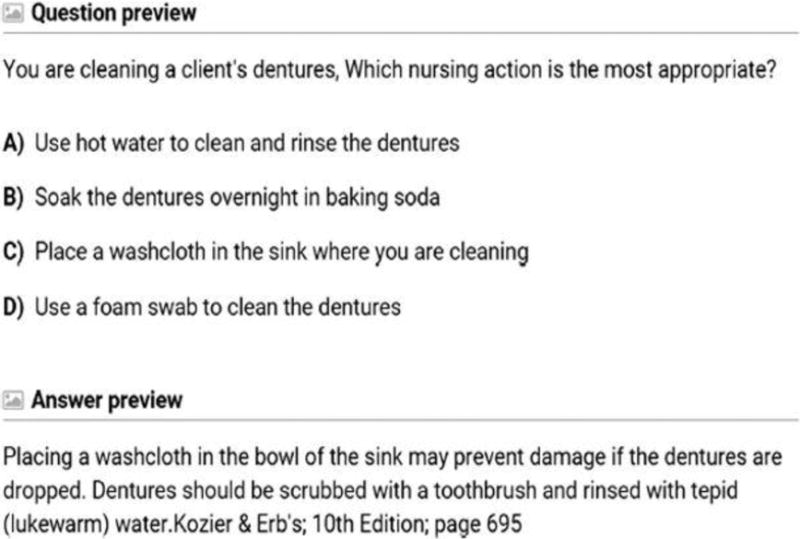

Questions were written by nursing instructors, based on course objectives (see Figure 2), and were synchronized with course content and released in the week following the introduction of the content. Since students were in their first semester, instructors used scaffolding so that knowledge/comprehension questions led to application questions as students gained mastery of content. Some of the questions included images and figures for students to identify basic anatomy or disease processes.

FIGURE 2.

Questions were written by nursing instructors, based on course objectives, and were synchronized with course content and released in the week following the introduction of the content.

Data collection, independent and dependent variables

Whenever a student logged in to Kaizen and completed a question, all associated data were recorded (e.g., time, date, questions answered, accuracy of response). Independent variables included overall game (eg, total number of students, total players, teams, questions posted/completed, and accuracy of responses) as well as participant level (eg, play duration, questions answered, device used to log in, badges earned) data. Dependent variables included attrition (loss of Round 1 players in Round 2), retention of knowledge, and semester final examination score.

Analyses

Analyses were divided into (1) descriptive, (2) attrition, (3) retention of knowledge, and (4) final exam performance.

Descriptive analyses

Descriptive analyses at the overall game level included the calculation of daily average users (DAU), or unique players answering at least one question on days with and without email reminders, a common measure of user engagement. Additional game level descriptive statistics included participants, teams, and questions (posted, answered, accuracy). Player level analyses included elements such as play duration, devices used to log in, and badges earned.
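The DAU definition above can be illustrated with a minimal Python sketch. The answer log, game calendar, and email dates below are hypothetical values invented for illustration; they are not study data, and the function names are not part of the Kaizen software.

```python
from collections import defaultdict
from datetime import date

# Hypothetical answer log: (player alias, date a question was answered).
answer_log = [
    ("p1", date(2015, 9, 1)), ("p2", date(2015, 9, 1)), ("p1", date(2015, 9, 1)),
    ("p3", date(2015, 9, 1)), ("p1", date(2015, 9, 2)),
    ("p2", date(2015, 9, 8)), ("p3", date(2015, 9, 8)),
]
# Hypothetical game calendar (Sept 1-8) and email-reminder dates.
game_days = [date(2015, 9, d) for d in range(1, 9)]
email_days = {date(2015, 9, 1), date(2015, 9, 8)}


def daily_unique_users(log, days):
    """Count distinct players answering at least one question on each game day."""
    players_by_day = defaultdict(set)
    for player, day in log:
        players_by_day[day].add(player)
    # Days with no activity count as zero users, so they still lower the average.
    return {day: len(players_by_day.get(day, set())) for day in days}


def mean(values):
    """Arithmetic mean of an iterable; 0.0 when it is empty."""
    values = list(values)
    return sum(values) / len(values) if values else 0.0


counts = daily_unique_users(answer_log, game_days)
dau_email = mean(c for d, c in counts.items() if d in email_days)
dau_other = mean(c for d, c in counts.items() if d not in email_days)
print(f"DAU on email days: {dau_email:.2f}; on other days: {dau_other:.2f}")
```

Dividing the two averages gives a crude rate ratio. It is only a descriptive counterpart to the incidence rate ratio from a negative binomial model, which additionally accounts for overdispersion in the daily counts.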

In addition, researchers performed a K-Means cluster analysis of days played and questions answered to determine patterns of play style clustering among participants. Based on these patterns, participants were classified into three utilization/log-in clusters: (1) engaged throughout the round (played frequently, answered most questions); (2) answered in bursts (logged in intermittently completing multiple questions in a single session, still answered most questions); and (3) slightly engaged (rarely logged in, answered fewer questions). Researchers also computed “player efficiency ratings” (PER) that combined accuracy (points for answering questions correctly), consistency of play (bonus points for answering more questions in the question bank over a longer period of time), and timeliness of answers (bonus points awarded for answering a question closer to the day of release). The PER is a global reflection of player effectiveness that shows student engagement with the game and understanding of course material. Finally, researchers fit negative binomial models to examine the impact of email reminders (“status of competition emails”) on the count of unique daily users.
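As an illustration of the clustering step, the sketch below runs a plain K-Means (Lloyd's algorithm) on two features per player, days played and questions answered, with k = 3. It is not the SAS procedure used in the study. The player data are hypothetical, and both the standardization of features and the deterministic farthest-first initialization are assumptions made here for a reproducible example; the article does not state how features were scaled or how centroids were initialized.

```python
def standardize(points):
    """Rescale each feature to mean 0 and standard deviation 1."""
    cols = list(zip(*points))
    means = [sum(c) / len(c) for c in cols]
    sds = []
    for c, m in zip(cols, means):
        sd = (sum((v - m) ** 2 for v in c) / len(c)) ** 0.5
        sds.append(sd if sd > 0 else 1.0)  # guard against constant features
    return [tuple((v - m) / s for v, m, s in zip(p, means, sds)) for p in points]


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_first(points, k):
    """Deterministic initialization: repeatedly pick the point farthest from chosen centroids."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(sq_dist(p, c) for c in centroids)))
    return centroids


def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm; returns one cluster label per point."""
    centroids = farthest_first(points, k)
    labels = None
    for _ in range(max_iter):
        new_labels = [min(range(k), key=lambda j: sq_dist(p, centroids[j])) for p in points]
        if new_labels == labels:  # assignments stopped changing: converged
            break
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old centroid if a cluster empties
                centroids[j] = tuple(sum(v) / len(members) for v in zip(*members))
    return labels


# Hypothetical players: (days played, questions answered).
players = [
    (20, 38), (18, 39), (22, 37),  # frequent log-ins, most questions answered
    (4, 36), (5, 35), (3, 37),     # few log-ins, most questions answered
    (1, 5), (2, 8), (1, 3),        # few log-ins, few questions answered
]
labels = kmeans(standardize(players), k=3)
print(labels)
```

The cluster numbers themselves carry no meaning; names such as “engaged throughout” or “answered in bursts” are assigned afterward by inspecting each cluster's typical days played and questions answered.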

Attrition

Attrition was defined as non-participation in Round 2 for players who had completed at least one question in Round 1. A player could meet this outcome either by not playing in Round 2 or by leaving the educational program after Round 1. Univariate logistic regression models were used to identify variables associated with attrition. Independent variables that approached statistical significance (p<0.15) in univariate modeling were considered for inclusion in a multivariable logistic regression model. Because many of these variables were related, formal collinearity diagnostics were performed on the candidate variables, and when potential multicollinearity was found (condition index > 30), researchers selected only one of the involved variables for inclusion. For example, “play style” and “badges earned” exhibited collinearity, and researchers chose the latter because it would consume fewer degrees of freedom. The multivariable logistic regression model was then constructed from the remaining variables.

Retention of knowledge

Retention of knowledge analyses focused on eight questions from Round 1 that were re-introduced in Round 2, allowing students who played both rounds to answer these questions twice. Researchers focused on paired responses, and estimated the change from Round 1 to Round 2 in the odds of correctly answering the repeated questions. Because of covariance between paired responses from the same individual, and within responses to the same question, the analysis was implemented using a generalized linear mixed model (GLIMMIX) for binary response with random effects for player and question.

Final exam performance

Finally, researchers examined differences in final exam performance for different “play styles” (as determined by cluster analysis) and PERs grouped into tertiles via ANOVA in the first semester course. Players had to provide written consent for inclusion in these analyses due to Family Educational Rights and Privacy Act (FERPA) guidelines. Statistical significance for all tests was defined as a two-tailed P value < 0.05; no correction for multiple testing was applied due to the exploratory rather than confirmatory nature of the analyses. All analyses were performed using SAS software, version 9.4 (SAS Institute, Cary, NC).

RESULTS

Utilization

Data from two rounds of Kaizen showed overall game use characteristics. During Round 1, 71% of students (n=94) logged in and answered at least one question. A total of 73 players (55%) logged in during the first 4 weeks, versus 46 (35%) in the final 3 weeks. In Round 2, the percentage of players who logged in fell to 47% (n=57), starting at 38 (31%) in the first 4 weeks, then dropping in subsequent weeks, before rising to 35 players (29%) in the final weeks. The overall proportion of correct responses was similar in both rounds (Round 1, 75%; Round 2, 74%). Researchers gauged the impact of the “status of competition” emails by comparing the number of DAUs on days with and without emails. They found a statistically significant increase in DAUs in both Rounds 1 and 2 on days when emails were sent (see Table 1).

Table 1.

Descriptive characteristics of the Kaizen game, August 2015 to April 2016

| | Round 1 | Round 2 |
|---|---|---|
| Overall Game Characteristicsᵃ | | |
| Students in course | 133 | 121 |
| Players answered at least one question | 94 (71%) | 57 (47%) |
| Teams | 18 | 16 |
| Game length (in days) | 100 | 93 |
| Unique players | | |
| Weeks 1-4 | 73 | 38 |
| Weeks 5-8 | 67 | 9 |
| Weeks 9-12 | 40 | 14 |
| Weeks 13-15ᵇ | 46 | 35 |
| Daily average usersᶜ,ᵈ | | |
| Day of email reminder | 12.9 | 12.2 |
| Other days | 4.9 | 0.9 |
| Questions posted | 39 | 39 |
| Questions posted | | |
| Weeks 1-4 | 11 | 11 |
| Weeks 5-8 | 13 | 12 |
| Weeks 9-12 | 12 | 8 |
| Weeks 13-15 | 3 | 8 |
| Questions posted | | |
| Days with 0 questions posted | 73 | 62 |
| Days with 1 question posted | 15 | 23 |
| Days with 2 questions posted | 12 | 8 |
| Questions answered | | |
| Weeks 1-4 | 473 | 129 |
| Weeks 5-8 | 840 | 94 |
| Weeks 9-12 | 498 | 225 |
| Weeks 13-16 | 540 | 916 |
| Questions answered correctly | 1757 (75%) | 1015 (74%) |
| Days between question postingᵉ | 3.8 (± 2.9) | 3.1 (± 4.0) |
| Days from question posting to answer | | |
| 0 | 420 (18) | 96 (7) |
| 1-7 | 859 (37) | 325 (24) |
| 8-14 | 335 (14) | 173 (13) |
| >14 | 737 (31) | 770 (56) |
| Overall Player Characteristicsᵃ | | |
| Days played (answer at least 1 question)ᶠ | 4 (2,9) | 2 (1,3) |
| Play duration (days from start to end of play)ᶠ | 48 (7,85) | 6 (0,90) |
| Play styleᵍ | | |
| Engaged throughout | 13 (14%) | 6 (11%) |
| Answered in bursts | 44 (47%) | 27 (47%) |
| Just slightly engaged | 37 (39%) | 24 (42%) |
| Questions answeredᶠ | 34.5 (12,37) | 39 (4,39) |
| Percent correctᶠ | 75 (68.4,81.8) | 75 (66.7,87.2) |
| Player Efficiency Rating (PER)ᶠ,ʰ | 36.8 (11.8,48.8) | 34.8 (4.0,47.3) |
| Devices used | | |
| Phone/Tablet | 28 (30%) | 20 (35%) |
| Laptop/Desktop computer | 30 (32%) | 25 (44%) |
| Both | 36 (38%) | 12 (21%) |
| Badges earnedᵉ | | |
| Level | 4.4 (± 2.1) | 6.2 (± 4.2) |
| Marathon | 0.05 (± 0.3) | 0.04 (± 0.3) |
| Hotstreak | 1.0 (± 0.7) | 0.8 (± 0.9) |
| Total | 5.4 (± 2.7) | 7.1 (± 5.0) |

ᵃ Presented as “n” or n (%) unless otherwise specified

ᵇ Round 1 lasted 15 weeks, while Round 2 lasted 14 weeks

ᶜ Averaged number of unique users completing a question the day of reminder email versus days without email reminders during the duration of the game

ᵈ We fit negative binomial models of the daily count of unique players to determine the impact of email reminders in Round 1 (IRR 2.6; 1.6-4.5; p = 0.0002) and Round 2 (IRR 13.3; 3.6-49.3; p = 0.0001). There were 12 email reminders in Round 1 and 5 in Round 2

ᵉ Mean (±standard deviation)

ᶠ Median (Q1,Q3)

ᵍ As determined by K-Means cluster analysis of days played and questions answered to determine patterns of play-style clustering among our participants

ʰ PER combines accuracy (points for answering questions correctly), consistency of play (bonus points for answering more questions in the question bank over a longer period of time) and timeliness of answers (bonus points awarded for answering a question closer to the day of release)

Player level characteristics showed a longer median duration of play in Round 1 (median 48 days; Q1, Q3: 7, 85) than in Round 2 (median 6 days; Q1, Q3: 0, 90). In Round 1, players most commonly used both a computer (laptop or desktop) and a mobile device (phone or tablet) to log in (38%), whereas in Round 2 laptop and desktop computers alone were the most common way to access the game (44%). Researchers also performed cluster analysis to identify patterns in playing style. Among the three predominant playing styles, “answered in bursts” was most frequent in both rounds, eclipsing both “engaged throughout” and “slightly engaged” players. Researchers calculated PER, combining the accuracy, consistency of play, and timeliness of answers, and found median performance to be slightly higher in Round 1 (see Table 1).

Attrition

Attrition was defined as non-participation in Round 2 for a player who had completed one or more questions in Round 1. The results of the multivariable model can be seen in Table 2. Notably, the odds of attrition decreased with every additional badge earned (multivariable OR 0.74, 95% CI 0.59-0.92), and members of teams that added new players in Round 2 were more likely to continue playing (multivariable OR for attrition 0.16, 95% CI 0.05-0.49).

Table 2.

Logistic regression analysis of factors associated with attrition (non-participation in Round 2 by a player who had completed ≥1 question in Round 1).

| | Univariate OR (95% CI)ᵃ | Multivariable OR (95% CI)ᵃ |
|---|---|---|
| Badges earned (per 1 badge) | 0.94 (0.81-1.09) | 0.74 (0.59-0.92) |
| Team added a new player in Round 2 | | |
| Yes | 0.21 (0.08-0.53) | 0.16 (0.05-0.49) |
| No | 1.0 | 1.0 |
| Instructor | | |
| A | 1.0 | 1.0 |
| B | 4.13 (1.23-13.83) | 4.41 (0.99-19.70) |
| C | 2.97 (0.88-9.98) | 2.43 (0.58-10.10) |
| D | 1.35 (0.37-4.92) | 0.68 (0.16-2.96) |

ᵃ OR (Odds ratio); 95%CI (Confidence interval)
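Because an odds ratio from logistic regression is the exponentiated model coefficient, the “per 1 badge” estimate in Table 2 can be rescaled to other increments. The Python sketch below recovers an approximate coefficient and standard error from the reported multivariable OR and interval, then computes the implied OR for a five-badge difference. It assumes a Wald-type 95% interval and a linear effect of badge count on the log-odds scale; the function names are illustrative, and the recovered values are approximate because the published figures are rounded.

```python
import math

Z_95 = 1.959964  # standard normal quantile for a two-sided 95% interval


def coef_from_or(odds_ratio, ci_low, ci_high):
    """Approximate log-odds coefficient and standard error from a reported OR and 95% CI."""
    beta = math.log(odds_ratio)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * Z_95)
    return beta, se


def or_for_change(beta, se, delta):
    """OR and Wald 95% CI for a `delta`-unit change in a linearly modeled predictor."""
    estimate = math.exp(beta * delta)
    bounds = sorted(math.exp((beta + sign * Z_95 * se) * delta) for sign in (-1, 1))
    return estimate, bounds[0], bounds[1]


# Multivariable OR for badges earned (per 1 badge), as reported in Table 2.
beta, se = coef_from_or(0.74, 0.59, 0.92)
est, low, high = or_for_change(beta, se, delta=5)
print(f"Implied OR for 5 additional badges: {est:.2f} ({low:.2f}-{high:.2f})")
```

Under these assumptions, a player with five more badges would have roughly 0.74 to the fifth power, or about 0.22 times, the odds of attrition. This extrapolation describes an association and is only as good as the linearity assumption.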

Retention of knowledge

To gauge retention of knowledge, researchers used a generalized linear mixed model (GLIMMIX) to determine the change in the odds of a correct answer among the set of eight questions reintroduced in Round 2, using data from students who answered at least some of these questions in both rounds. They found a statistically significant increase in the odds of a correct response (1.8, 1.0-3.4) for a repeated question among players (see Table 3).

Table 3.

Cross-tabulation of paired responses (eight repeated questions) to examine retention of knowledge (n=151 paired responses by 33 students)

| Round 1 | Round 2: Correct | Round 2: Incorrect | Total (%) |
|---|---|---|---|
| Correct | 90 | 12 | 102 (68%) |
| Incorrect | 25 | 24 | 49 (32%) |
| Total (%) | 115 (76%) | 36 (24%) | 151 |

Note. Generalized linear mixed model (GLIMMIX) estimated OR of correct response Round 2 vs. Round 1 = 1.8, 95% CI =1.0-3.4
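The counts in Table 3 also allow two simple checks on the direction and rough size of the GLIMMIX estimate. The Python sketch below computes a marginal odds ratio that ignores pairing, a discordant-pair (McNemar-type) odds ratio, and an exact binomial test on the discordant pairs. These are crude approximations offered for illustration, not the mixed model used in the study: they treat the 151 pairs as independent and ignore the clustering of responses within students and questions.

```python
from math import comb

# Paired responses from Table 3 (Round 1 result -> Round 2 result).
both_correct, lost = 90, 12       # correct in Round 1, then correct / incorrect in Round 2
gained, both_incorrect = 25, 24   # incorrect in Round 1, then correct / incorrect in Round 2
n_pairs = both_correct + lost + gained + both_incorrect

p_round1 = (both_correct + lost) / n_pairs    # proportion correct in Round 1
p_round2 = (both_correct + gained) / n_pairs  # proportion correct in Round 2


def odds(p):
    """Convert a proportion to odds."""
    return p / (1 - p)


marginal_or = odds(p_round2) / odds(p_round1)  # ignores the pairing
discordant_or = gained / lost                  # uses only pairs whose result changed


def exact_mcnemar_p(b, c):
    """Two-sided exact binomial test on discordant pairs (null: either change equally likely)."""
    n, k = b + c, min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


p_value = exact_mcnemar_p(gained, lost)
print(f"Marginal OR {marginal_or:.2f}; discordant-pair OR {discordant_or:.2f}; p = {p_value:.3f}")
```

The marginal odds ratio is about 1.5 and the discordant-pair odds ratio about 2.1, which bracket the reported GLIMMIX estimate of 1.8.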

Final exam performance

Eighty of 94 students (85%) provided informed consent to participate in this analysis. Researchers found statistically significant differences in final exam scores for different play styles and PERs. On average, students in the “engaged throughout” group and in the highest PER tertile had higher exam scores (see Table 4).

Table 4.

Analysis of variance (ANOVA) of final examination performance by Round 1 play style and Round 1 Player Efficiency Rating (PER) among first-semester nursing students who consented to the use of their grades in analyses (80 of 94 players)

| | Exam scoreᵃ, mean ± SD | p-value |
|---|---|---|
| Play Styleᵇ | | |
| Engaged throughout | 52.6 ± 2.3 | 0.045 |
| Answered in bursts | 51.5 ± 4.0 | |
| Just slightly engaged | 45.8 ± 16.2 | |
| PER Tertileᶜ,ᵈ | | |
| Highest | 52.4 ± 3.2 | 0.017 |
| Middle | 51.1 ± 3.9 | |
| Lowest | 44.6 ± 17.6 | |

ᵃ Out of 60 points

ᵇ Students consenting to the use of exam scores in analysis per play style group: Engaged throughout (12 of 13, 92%); Answered in bursts (39 of 44, 89%) and Just slightly engaged (29 of 37, 78%)

ᶜ PER (Player Efficiency Rating) Tertile: PER is a metric that combined the accuracy, consistency of play and timeliness of answers. Higher PER was desirable, and here we group students per PER tertile.

ᵈ Students consenting to the use of exam scores in analysis per PER tertile: Highest (27 of 30, 90%), Middle (29 of 32, 91%) and Lowest (24 of 32, 75%)
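The p-values in Table 4 can be approximately reproduced from the published summary statistics alone. The Python sketch below computes a classical one-way ANOVA F statistic from each group's size, mean, and standard deviation, taking group sizes from the consent counts in the table footnotes. It assumes the standard equal-variance ANOVA, which the article does not specify in detail, and uses a closed-form F tail probability that holds only when the numerator has 2 degrees of freedom (three groups). Inputs are rounded published values, so small discrepancies are expected.

```python
def anova_from_summary(groups):
    """One-way ANOVA F statistic from a list of (n, mean, sd) tuples, one per group."""
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def f_upper_tail_df1_is_2(f_stat, df_within):
    """Upper-tail probability of F(2, df_within); this closed form is valid only for 2 numerator df."""
    return (df_within / (df_within + 2 * f_stat)) ** (df_within / 2)


# (consenting students, mean exam score, SD) per group, from Table 4 and its footnotes.
play_style = [(12, 52.6, 2.3), (39, 51.5, 4.0), (29, 45.8, 16.2)]
per_tertile = [(27, 52.4, 3.2), (29, 51.1, 3.9), (24, 44.6, 17.6)]

for name, groups in (("Play style", play_style), ("PER tertile", per_tertile)):
    f_stat, df1, df2 = anova_from_summary(groups)
    assert df1 == 2  # required by the closed-form tail probability above
    p = f_upper_tail_df1_is_2(f_stat, df2)
    print(f"{name}: F({df1}, {df2}) = {f_stat:.2f}, p = {p:.3f}")
```

The reconstructed p-values come out near 0.046 for play style and 0.017 for PER tertile, in line with the reported 0.045 and 0.017. The much larger standard deviations in the least engaged groups suggest that the equal-variance assumption deserves scrutiny in any confirmatory analysis.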

DISCUSSION

Gamification provides a novel way to engage students, and software-based gamification provides an additional route through which to offer educational opportunities.[6, 9, 13–19] The Kaizen intervention is an example of structural gamification, where game elements are added to propel a learner through content without altering the underlying content. In this case, the use of leaderboards, points, levels, and badges was applied to an educational context in which students were continually assessed. The Kaizen software also allowed for the integration of two categories of structural gamification, competition (e.g., versus other users and teams), and progression (e.g., advancing across point driven levels), and used badges to recognize competence (e.g., hot-streaks for consecutive correct answers and marathon streaks for uninterrupted participation).20 By encouraging students to participate in software-based games, educators gain access not just to a new way of engaging students, but to a trove of digital data that can be analyzed to optimize content delivery and enhance longitudinal student participation.

At first, students were quite interested in the gamified knowledge competition software, but there was a decrement in DAUs over time. Further analyses revealed statistically significant increases in DAUs when “status of competition” emails were sent, and attrition levels declined when students earned badges and new players were added in subsequent rounds. There was also an association with positive educational outcomes, such as retention of knowledge and improved final exam grades among students who played consistently throughout the length of the game (highly engaged and highest PER groups). These findings suggest that, while student engagement may wane over time, monitoring and adapting the delivery of a game-based intervention helps to encourage the investment of different student populations and achieve improved educational outcomes.

Gamification provides exciting new opportunities to engage students. There is a growing body of literature on how gamification can favorably affect student engagement in different educational settings.2,4,6,14,21–25 However, there are limited data in the extant literature that tie gamification to traditional educational outcomes, or that focus on the data resulting from game-based interventions as the substrate to inform educational analytics.26 In this study, researchers obtained informed consent from the majority of first-semester players (n=80; 85%). They observed a statistically significant difference in final exam performance favoring those who were more closely engaged with the gamified software, playing both frequently and longitudinally over the duration of the game. In addition, in concordance with a previous analysis in a graduate medical education setting, researchers saw evidence of improved retention of knowledge in students.19 Findings in both of these studies suggest that offering additional educational opportunities through gamified software-based knowledge competition may benefit engaged students.

Optimizing engagement includes getting students to play longitudinally (throughout the length of the game) and in a timely fashion (within 24 hours after a question is posted) to better assess student comprehension of content. Analyses revealed an increase in DAUs of roughly 2.5-fold (Round 1) to more than 10-fold (Round 2) on days when the game manager sent a “status of competition” email. Additionally, in the analysis of factors associated with user attrition, researchers learned that earning badges and the addition of new players to a team in the second round decreased the likelihood of player loss. These observations indicate how to mitigate attrition and enhance participation among nursing school students. More important, these findings point to the need for data capture, monitoring, and evaluation when gamified interventions such as Kaizen are added to an existing educational program. Such monitoring and evaluation will allow educators to further tailor educational content so that students can gain maximum benefit from game-based interventions. It is critical to underscore the importance of such practices, not only to benefit local interventions, but also to increase the body of evidence on best practices for gamified software-based educational interventions.

The limitations of this study include the restriction of analyses to a single undergraduate nursing program in the southeastern USA. However, the Kaizen software is a platform that can accommodate content across multiple schools and program types (undergraduate, graduate). While the invitation to play was extended to all nursing students, those who participated in Kaizen did so voluntarily, limiting the sample to those who chose to participate (potential for self-selection bias). The students who chose to participate could have had higher baseline grade point averages than those who did not.

In addition, to comply with FERPA regulations, researchers obtained informed consent from all students who participated in the final exam performance analyses, and while the majority of students consented, researchers were not able to include data from all participants. Additionally, study data thus far encompass only 1 academic year of Kaizen use in the SON. It is possible that a larger sample size of participants and/or a longer period of data collection could affect results. Regarding analyses (player attrition, retention of knowledge, final exam performance) it is possible only to describe associations between variables and not causality.

CONCLUSION

While it is certainly possible that researchers have failed to identify effects that really exist (type II errors), this study has shown that nursing students are not only open to supplemental educational tools such as Kaizen, but also that they do use them, retain some of the knowledge, and achieve statistically significant differences in mean final exam scores with greater participation. In assessing use of Kaizen in the SON, researchers were able to identify the impact of “status of competition” emails on participation, but they cannot account for the impact of internal communications among team members.

This study adds to the literature by reporting on the use of Kaizen, a novel game-based, software-driven instructional strategy, in the first year of undergraduate nursing education. The study also identifies methods by which to analyze and interpret participant data to glean insight into how to maximize learning with such techniques. A necessary step towards maximizing the benefits of gamification is the use of the resulting data to power educational analytics and uncover potential strategies to further increase student engagement, retention of knowledge, and performance. While the promise of gamification is great, more emphasis on educational analytics is needed to maximize student benefit.

Acknowledgments

The authors would like to acknowledge UAB School of Nursing faculty who made this research possible:

Nanci Claus, MSN, RN, Instructor

Matthew Jennings, MEd, Instructor

Michael Mosley, MSN, RN, Instructor

Jacqueline Moss, PhD, RN, Professor

Summer Powers, DNP, RN, Assistant Professor

FUNDING STATEMENT

The National Center for Advancing Translational Sciences of the National Institutes of Health supported this research in part under award number UL1TR001417. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

1. Royse MA, Newton SE. How gaming is used as an innovative strategy for nursing education. Nurs Educ Perspect. 2007;28(5):263–7.
2. Brigham TJ. An introduction to gamification: Adding game elements for engagement. Med Ref Serv Q. 2015;34(4):471–80. doi: 10.1080/02763869.2015.1082385.
3. Allam A, Kostova Z, Nakamoto K, Schulz PJ. The effect of social support features and gamification on a Web-based intervention for rheumatoid arthritis patients: randomized controlled trial. J Med Internet Res. 2015;17(1):e14. doi: 10.2196/jmir.3510.
4. Anderson J, Rainie L. Gamification and the Internet: Experts expect game layers to expand in the future, with positive and negative results. Games Health J. 2012;1(4):299–302. doi: 10.1089/g4h.2012.0027.
5. Brown M, O’Neill N, van Woerden H, Eslambolchilar P, Jones M, John A. Gamification and adherence to web-based mental health interventions: A systematic review. JMIR Ment Health. 2016;3(3):e39. doi: 10.2196/mental.5710.
6. Brull S, Finlayson S. Importance of gamification in increasing learning. J Contin Educ Nurs. 2016;47(8):372–5. doi: 10.3928/00220124-20160715-09.
7. Bukowski M, Kuhn M, Zhao X, Bettermann R, Jonas S. Gamification of clinical routine: The Dr. Fill approach. Stud Health Technol Inform. 2016;225:262–6.
8. Dithmer M, Rasmussen JO, Gronvall E, et al. “The heart game”: Using gamification as part of a telerehabilitation program for heart patients. Games Health J. 2016;5(1):27–33. doi: 10.1089/g4h.2015.0001.
9. Kerfoot BP, Kissane N. The use of gamification to boost residents’ engagement in simulation training. JAMA Surg. 2014;149(11):1208–9. doi: 10.1001/jamasurg.2014.1779.
10. Vinney LA, Howles L, Leverson G, Connor NP. Augmenting college students’ study of speech-language pathology using computer-based mini quiz games. Am J Speech Lang Pathol. 2016;25(3):416–25. doi: 10.1044/2015_AJSLP-14-0125.
11. Einig S. Supporting students’ learning: The use of formative online assessments. Accounting Education: An International Journal. 2013;22(5):425–44.
12. Douglas MWJ, Ennis S. Multiple-choice question tests: a convenient, flexible, and effective learning tool? A case study. Innovations in Education and Teaching International. 2012;49(2):111–21.
13. Ahmed M, Sherwani Y, Al-Jibury O, Najim M, Rabee R, Ashraf M. Gamification in medical education. Med Educ Online. 2015;20:29536. doi: 10.3402/meo.v20.29536.
14. Day-Black C, Merrill EB, Konzelman L, Williams TT, Hart N. Gamification: An innovative teaching-learning strategy for the digital nursing students in a community health nursing course. ABNF J. 2015;26(4):90–4.
15. El Tantawi M, Sadaf S, AlHumaid J. Using gamification to develop academic writing skills in dental undergraduate students. Eur J Dent Educ. 2016. doi: 10.1111/eje.12238.
16. McCoy L, Lewis JH, Dalton D. Gamification and multimedia for medical education: A landscape review. J Am Osteopath Assoc. 2016;116(1):22–34. doi: 10.7556/jaoa.2016.003.
17. Mokadam NA, Lee R, Vaporciyan AA, et al. Gamification in thoracic surgical education: Using competition to fuel performance. J Thorac Cardiovasc Surg. 2015;150(5):1052–8. doi: 10.1016/j.jtcvs.2015.07.064.
18. Muntasir M, Franka M, Atalla B, Siddiqui S, Mughal U, Hossain IT. The gamification of medical education: A broader perspective. Med Educ Online. 2015;20:30566. doi: 10.3402/meo.v20.30566.
19. Nevin CR, Westfall AO, Rodriguez JM, et al. Gamification as a tool for enhancing graduate medical education. Postgrad Med J. 2014;90(1070):685–93. doi: 10.1136/postgradmedj-2013-132486.
20. Kapp KM. Types of structural gamification. https://www.td.org/Publications/Newsletters/Links/2016/09/Types-of-Structural-Gamification. September 16, 2016. Last accessed 2/5/17.
21. Cugelman B. Gamification: What it is and why it matters to digital health behavior change developers. JMIR Serious Games. 2013;1(1):e3. doi: 10.2196/games.3139.
22. Hsu SH, Chang JW, Lee CC. Designing attractive gamification features for collaborative storytelling websites. Cyberpsychol Behav Soc Netw. 2013;16(6):428–35. doi: 10.1089/cyber.2012.0492.
23. McKeown S, Krause C, Shergill M, Siu A, Sweet D. Gamification as a strategy to engage and motivate clinicians to improve care. Healthc Manage Forum. 2016;29(2):67–73. doi: 10.1177/0840470415626528.
24. Mesko B, Gyorffy Z, Kollar J. Digital literacy in the medical curriculum: A course with social media tools and gamification. JMIR Med Educ. 2015;1(2):e6. doi: 10.2196/mededu.4411.
25. Oprescu F, Jones C, Katsikitis M. I play at work: Ten principles for transforming work processes through gamification. Front Psychol. 2014;5:14. doi: 10.3389/fpsyg.2014.00014.
26. Lewis ZH, Swartz MC, Lyons EJ. What’s the point?: A review of reward systems implemented in gamification interventions. Games Health J. 2016;5(2):93–9. doi: 10.1089/g4h.2015.0078.