Abstract

The quantitative monitoring of airborne urediniospores of Puccinia striiformis f. sp. tritici (Pst) with spore trap devices in wheat fields is important for devising early and effective strategies to control wheat stripe rust. The traditional microscopic spore counting method relies mainly on naked-eye observation. Because of the large number of trapped spores, this method is labour-intensive, time-consuming and inefficient, and it can produce large errors; an alternative method is therefore required. In this paper, a new algorithm based on digital image processing is proposed for the automatic detection and counting of Pst urediniospores. First, images of urediniospores were collected using portable volumetric spore traps in an indoor simulation. The urediniospores were then automatically detected and counted using a series of image processing steps: image segmentation with the K-means clustering algorithm, image pre-processing, identification of touching urediniospores based on their shape factor and area, and segmentation of touching urediniospore contours based on concavity and contour segment merging. The automatic counting algorithm was compared with the watershed transformation algorithm. The results show that the proposed algorithm is efficient and accurate for the automatic detection and counting of trapped urediniospores, and it can provide technical support for the development of online airborne urediniospore monitoring equipment.

Introduction

Wheat stripe (yellow) rust, caused by Puccinia striiformis f. sp. tritici (Pst), is a wheat disease that is prevalent worldwide, particularly in cool and moist regions1,2. It is one of the most important and devastating airborne wheat diseases in China and has caused severe yield reductions and significant economic losses3. Pst is a heteroecious, macrocyclic rust pathogen that requires living hosts (wheat/grasses and Berberis/Mahonia spp.) to complete the asexual and sexual phases of its life cycle4–6. Urediniospores constitute the dominant asexual stage of the pathogen population on the primary hosts, and this stage is responsible for the wide-scale stripe rust epidemics reported on wheat2. Urediniospores can be dispersed by wind over long distances, possibly hundreds or even thousands of kilometres from the centre of origin of Pst7–9. Pst infects wheat when urediniospores carried by air currents or raindrops are deposited on the leaf surface10. The aerial dispersal of Pst urediniospores is therefore the main reason for the occurrence and prevalence of wheat stripe rust, and the occurrence of the disease is closely related to the number of urediniospores in the air above wheat fields. Efficient capture and quantitative monitoring of urediniospores in wheat-field air can thus provide critical information for predicting airborne wheat diseases.

With the improvement and popularisation of spore trap devices, airborne fungal spores are now commonly collected with them11–13. Previous studies have typically used them to sample spores onto microscope slides or plastic tape coated with a thin film of petroleum jelly, which are then examined microscopically at hundredfold magnification. The traditional microscopic spore counting method relies mainly on naked-eye observation by trained technical personnel in the laboratory. Because of the large number of trapped spores, this method is labour-intensive, time-consuming and inefficient, and it can produce large errors, which hinders the timely identification and counting of trapped spores. Molecular-biological techniques for detecting and quantifying fungi have also been reported14–16, but they are difficult to translate into practical applications because of their high technical requirements and operational complexity.

Machine vision techniques have been widely used in recent years for the automatic diagnosis and grading of plant diseases, and several digital image processing algorithms have been applied in plant disease studies. Image recognition of plant diseases17–19 and automatic classification of disease severity20–22 can be achieved with appropriate image processing technologies. Recently, several studies on the automatic counting of fungal spores using microscopic image processing have been reported. Li et al.23 proposed a method based on the watershed transformation algorithm to count Pst urediniospores; however, it split spores at incorrect positions and over-segmented them when the urediniospores had rough boundaries or complicated touching conditions. Chesmore et al.24 developed an image analysis system for rapidly discriminating between T. walkerii and T. indica. Principal components analysis was performed on many parameters, including the perimeter, surface area, number of spines, spine size, maximum and minimum ray radii, aspect ratio and roundness, to obtain a linear separation of the species, and the system achieved 97% accuracy in separating T. walkerii from T. indica. Wang et al.25 proposed a method based on image processing and an artificial neural network to detect powdery mildew spores automatically; a correct rate of 63.6% on the test images showed that automatic detection and counting of powdery mildew spores was achievable. Xu et al.26 used a computer-assisted digital image processing method that extracted the exterior outline characteristics of spores for spore type analysis and automatic counting. Although these studies performed well, they usually suffered from over-segmentation or under-segmentation when spores touched each other extensively or when there was no strong gradient between touching spores.
In practice, pathogen spores trapped in the field may touch or even overlap, and touching spores are difficult to separate, which reduces counting accuracy. The touching spores in an image therefore need to be split into separate spores. Various segmentation algorithms for touching objects in images, such as cells27–29, fruits30,31 and cereals32–34, have been reported with some success. However, these algorithms are not suitable for the segmentation and counting of spores, so a new method for the rapid and accurate identification and quantification of spores is needed.

Using a simulated wheat field environment, this study develops a new algorithm for the automatic detection and counting of trapped Pst urediniospores. First, the urediniospores are segmented using the K-means clustering algorithm and converted into a binary image. Second, pre-processing operations, including region filling, small-area removal and morphological opening, are applied to fill the spores, filter noise and smooth the urediniospore edges. Third, a combination of the shape factor of the target contour and its area is used to discriminate touching urediniospores. Fourth, the contour of the touching urediniospores is extracted and divided into several contour segments based on concavity. The contour segments that belong to the same urediniospore are then merged, which is the main problem addressed in this work. In this step, a distance measurement is used to eliminate segments that are unlikely to belong to the same urediniospore. A candidate ellipse is then fitted using the least-squares ellipse fitting algorithm, and a deviation error measurement is used to evaluate the fit. Contour segments that satisfy both the distance and the deviation error conditions are merged into a new segment. Finally, ellipses fitted to these new segments are taken as the best representative ellipses of the touching urediniospores. The objectives of this study are to develop a new algorithm for the automatic detection and counting of trapped pathogen spores and to assess its detection accuracy by comparing it with the watershed transformation algorithm.

Results

To verify the validity and accuracy of the proposed automatic counting algorithm, a counting test was performed on a total of 120 urediniospore images. Manual counts were taken as the actual number of urediniospores in each image. The performance of the proposed algorithm was compared with that of the watershed transformation algorithm23. A personal computer with a 3.3 GHz processor and 8 GB RAM was used as the hardware of the computer vision system, and all algorithms were developed in MATLAB R2014a (The MathWorks Inc, Natick, MA, USA). Examples are shown below.

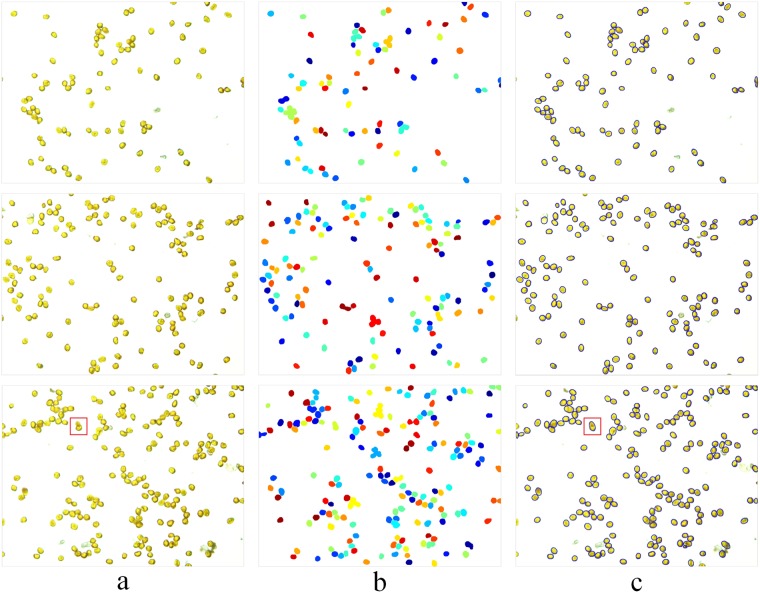

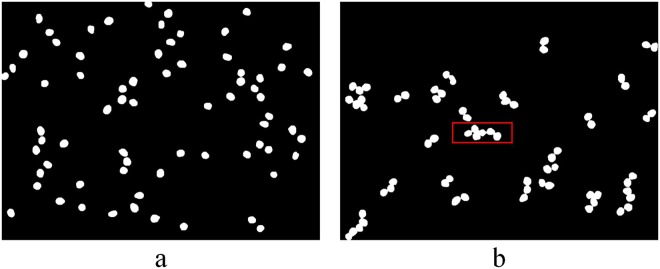

Figure 1 compares the results of the proposed algorithm and the watershed transformation algorithm. As shown in Fig. 1a, the image contained not only individual urediniospores but also touching clumps and even some occluded urediniospores, which previous techniques may fail to separate correctly. Figure 1b shows the result of the watershed transformation algorithm, which separated pairs of adhering urediniospores and chains of several connected urediniospores well. However, it over-segmented urediniospores with rough boundaries or in complicated touching/cluster conditions, and some segmentation positions were inaccurate, which lowered the counting accuracy. Figure 1c shows the result of the proposed algorithm, which separated all of these cases well. The proposed algorithm was also robust, performing well even when the contours of touching urediniospores were irregular.

Figure 1.

Segmentation and counting results for some of the test images: (a) original digital image of urediniospores; (b) segmentation effect and counting results based on the watershed segmentation algorithm; (c) segmentation effect and counting results based on the proposed algorithm.

Both methods were evaluated by their counting accuracy, defined as the ratio of the number of correctly detected urediniospores to the total number of urediniospores in the input image. Table 1 compares the counting accuracy of the two methods. The lowest counting accuracy of the proposed method was 92.7%, the highest was 100%, and the total average was 98.6%, an increase of 6 percentage points over that of the watershed transformation algorithm (92.6%). The lowest, highest and total average accuracies show that the proposed algorithm segmented and counted urediniospore images more accurately than the watershed transformation algorithm. The proposed method is therefore efficient and accurate for the automatic detection and counting of trapped urediniospores.

Table 1.

Comparisons of counting accuracy of two different segmentation algorithms.

| Range of spores in one image | Number of samples | Watershed: lowest accuracy (%) | Watershed: highest accuracy (%) | Watershed: average accuracy (%) | Proposed: lowest accuracy (%) | Proposed: highest accuracy (%) | Proposed: average accuracy (%) |
|---|---|---|---|---|---|---|---|
| 9–59 | 48 | 81.3 | 106 | 95.3 | 95.5 | 100 | 99.5 |
| 60–110 | 37 | 85.9 | 101 | 94.7 | 94.9 | 100 | 98.9 |
| 111–161 | 22 | 84.2 | 100 | 92.1 | 93.2 | 100 | 98.2 |
| 162–177 | 13 | 82.7 | 97.2 | 88.4 | 92.7 | 100 | 97.7 |
| Total | 120 | 83.5 | 101 | 92.6 | 93.6 | 100 | 98.6 |

Discussion

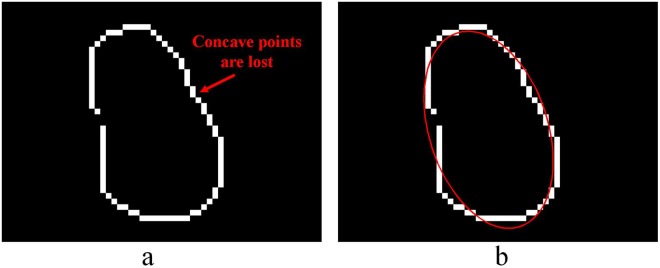

Overlap inevitably arises when urediniospores of wheat stripe rust are captured. As shown in the red boxes in Fig. 1a and c, the upper urediniospore of an occluded pair is blurred because of the shallow depth of field at high optical magnification. In this case, a concave point on the contour of the clump may be lost (Fig. 2a), which leads to a segmentation error and reduces the accuracy of automatic counting. As Fig. 2b shows, the ellipse fitting algorithm then wrongly fits two urediniospores as one. Further research is therefore needed on segmenting and counting overlapping urediniospores.

Figure 2.

Example of overlapping urediniospore segmentation: (a) contour segments; (b) separation result.

Currently, we mainly focus on the automatic detection and counting of pathogenic urediniospores of Pst that are trapped using a spore trap device via an indoor simulation. The scenarios are more complicated in a wheat field environment. Some atmospheric particles, such as pollen and other fungal spores, may also affect the accuracy of the automatic counting of urediniospores. Thus, further work is required to improve the counting accuracy of urediniospores that are collected in an actual farmland environment.

Materials and Methods

Materials

Fresh urediniospores of Pst were selected as the experimental objects and obtained from the College of Plant Protection at Northwest A&F University, Yangling, Shaanxi, China. The other materials and instruments included petroleum jelly, microscope slides, an aurilave, portable volumetric spore traps (TPBZ3, Zhejiang Top Instrument Co., Ltd, China), microscope (BX52, Olympus, Japan) and a desktop computer.

Image acquisition

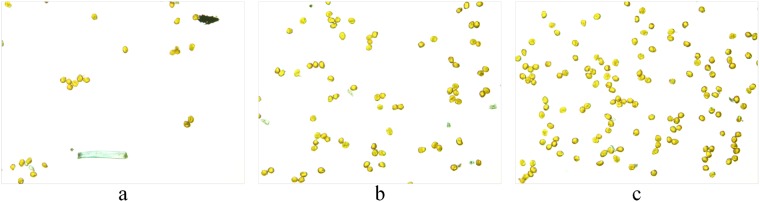

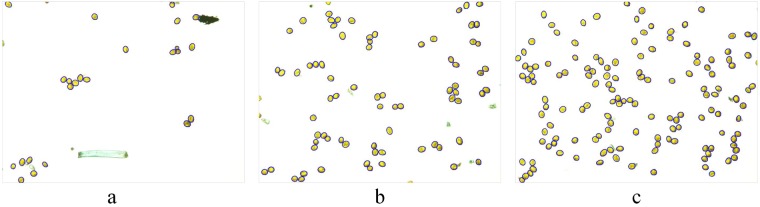

The experiment was conducted in the laboratory of the College of Mechanical and Electronic Engineering at Northwest A&F University, Yangling, Shaanxi, China. Airborne fungal urediniospores were collected in a simulated wheat field environment using portable volumetric spore traps. In the laboratory, an aurilave was first used to blow the urediniospores slowly and continuously above the trap to disperse them into the air. Airborne particles were then deposited onto microscope slides uniformly coated with a thin film of petroleum jelly. To obtain different urediniospore densities on the slides, urediniospores were collected continuously for 5, 10 or 15 min, and each collection time was repeated 10 times, giving 30 slides with different urediniospore densities. The fungal urediniospores on the slides were observed and photographed at the genus level under a light microscope at ×200 magnification. In total, 150 experimental images (4,140 × 3,096 pixels; five randomly selected fields per slide) were acquired for validating the proposed algorithm, using a digital imaging system (DP72, Olympus, Japan) at 72 dpi and 24 bits in the RGB model. Thirty images were randomly selected for algorithm training, and the remaining 120 images were used for testing. Some of the original images are shown in Fig. 3. The urediniospores were broadly ellipsoidal to broadly obovoid, yellow to orange, and measured 24.5 × 21.6 μm on average. Many urediniospores in the images touched each other, which made automatic counting difficult.

Figure 3.

Original RGB images for different capture times: (a) image after capturing for 5 min; (b) image after capturing for 10 min; (c) image after capturing for 15 min.

Segmentation of the urediniospore images using the K-means clustering algorithm

Segmenting the target from the background is an important step in image processing. Urediniospore segmentation can be viewed as a clustering problem in which the class of each pixel is unknown and the image is divided into regions based on pixel feature values. The K-means clustering algorithm is an unsupervised partitioning algorithm that divides data into a predetermined number k of clusters by minimising an error function (the sum of squared distances between points and their cluster centres), and it is widely used in image segmentation35,36. It is accurate and efficient for large-scale data processing, which makes it suitable for segmenting the urediniospore images in this work. Because only the urediniospore region was required, k = 2 was used, and the original image was divided into two classes: the urediniospore region and the background region.

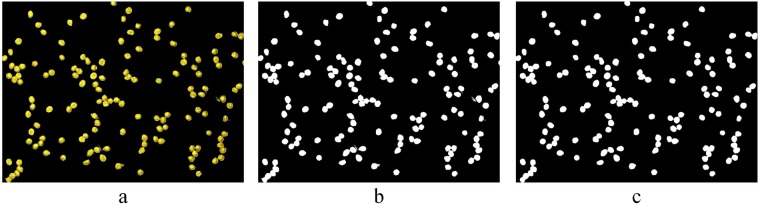

Because the acquired images were large and time-consuming to process, they were first resized to 911 × 682 pixels. The images were then converted from the RGB colour space to the L*a*b* colour space, and the K-means clustering algorithm was used to cluster the pixels into two classes based on the a* and b* colour components. Figure 3c shows the original image, and Fig. 4a shows the result of K-means clustering, demonstrating that the algorithm can be applied for image segmentation. Figure 4b is the binary image of Fig. 4a obtained with a fixed threshold of 0.2. Figure 4b still contains noise, such as holes and spurs, which would affect the accuracy of subsequent contour detection and urediniospore counting; further processing was therefore needed.
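The clustering step can be illustrated with the following minimal NumPy sketch. It is not the authors' MATLAB code: it assumes the a* and b* channels have already been computed (for example with a library RGB-to-L*a*b* conversion), uses plain Lloyd iterations with a deterministic initialisation of our own choosing, and, as a further assumption, takes the cluster with the larger mean b* (more yellow) as the urediniospore class. It returns a boolean mask directly rather than applying the fixed threshold described above. The names `kmeans` and `segment_spores` are hypothetical.

```python
import numpy as np


def kmeans(features, k=2, n_iter=100):
    """Lloyd's K-means on an (n, d) array; returns (labels, centres)."""
    X = np.asarray(features, dtype=float)
    # Assumed deterministic initialisation: spread the k initial centres
    # over the points sorted by the sum of their features.
    order = np.argsort(X.sum(axis=1), kind="stable")
    centres = X[order[np.linspace(0, len(X) - 1, k).astype(int)]]
    for _ in range(n_iter):
        # Assignment step: nearest centre in squared Euclidean distance.
        d2 = ((X[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Update step: move each centre to the mean of its members.
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                        else centres[j] for j in range(k)])
        if np.allclose(new, centres):
            break
        centres = new
    return labels, centres


def segment_spores(a_star, b_star):
    """Cluster pixels on (a*, b*) with k = 2 and return a spore mask."""
    feats = np.column_stack([np.ravel(a_star), np.ravel(b_star)])
    labels, centres = kmeans(feats, k=2)
    # Assumption: yellow-orange spores form the cluster with higher mean b*.
    spore = int(np.argmax(centres[:, 1]))
    return (labels == spore).reshape(np.shape(a_star))
```

On a 911 × 682 image this clusters about 621,000 two-dimensional feature vectors, which NumPy handles in memory without difficulty.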

Figure 4.

Results of the urediniospore image after the K-means algorithm and pre-processing: (a) extracted image of urediniospores after the K-means algorithm with k = 2; (b) binary image of the extracted urediniospores using the fixed threshold algorithm; (c) binary image after morphological pre-processing.

Pre-processing of the urediniospore image

To remove noise, pre-processing operations, including region filling, small-area removal and morphological opening, were applied to fill the spores, filter noise and smooth the spore edges. First, holes in the urediniospores were filled by region filling. Second, small particles with areas of less than 190 pixels were removed by an area opening operation. Urediniospores on the image boundary whose visible area was less than 190 pixels were likewise removed from the binary image; these were counted as urediniospores of other images. Finally, a morphological opening with a disk-shaped structuring element of radius 5 pixels was performed to remove spurs and smooth the urediniospore contours. The processed image (Fig. 4c) contained no noise and had smoother contours, which aided subsequent processing.
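A pure-NumPy sketch of the three pre-processing operations follows, using the parameters given in the text (190-pixel minimum area, disk radius 5). The helper names are hypothetical, breadth-first search stands in for a library labelling routine, and the exact Euclidean disk used here may differ slightly from MATLAB's `strel('disk', 5)`, which approximates the disk by default. Treating the background as 4-connected and objects as 8-connected is an assumption that mirrors common practice.

```python
from collections import deque

import numpy as np

OFFSETS4 = [(-1, 0), (1, 0), (0, -1), (0, 1)]
OFFSETS8 = OFFSETS4 + [(-1, -1), (-1, 1), (1, -1), (1, 1)]


def _components(mask, offsets):
    """Connected True regions via breadth-first search (list of pixel lists)."""
    h, w = mask.shape
    seen = np.zeros(mask.shape, dtype=bool)
    comps = []
    for sy, sx in zip(*np.nonzero(mask)):
        if seen[sy, sx]:
            continue
        seen[sy, sx] = True
        queue, pixels = deque([(sy, sx)]), []
        while queue:
            y, x = queue.popleft()
            pixels.append((y, x))
            for dy, dx in offsets:
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                    seen[ny, nx] = True
                    queue.append((ny, nx))
        comps.append(pixels)
    return comps


def fill_holes(mask):
    """Region filling: background regions not touching the border are holes."""
    out = mask.copy()
    h, w = mask.shape
    for pixels in _components(~mask, OFFSETS4):
        if not any(y in (0, h - 1) or x in (0, w - 1) for y, x in pixels):
            for y, x in pixels:
                out[y, x] = True
    return out


def remove_small(mask, min_area=190):
    """Area opening: keep only 8-connected objects with >= min_area pixels."""
    out = np.zeros_like(mask)
    for pixels in _components(mask, OFFSETS8):
        if len(pixels) >= min_area:
            ys, xs = zip(*pixels)
            out[list(ys), list(xs)] = True
    return out


def open_disk(mask, r=5):
    """Opening (erosion, then dilation) with a Euclidean disk of radius r."""
    offs = [(dy, dx) for dy in range(-r, r + 1) for dx in range(-r, r + 1)
            if dy * dy + dx * dx <= r * r]
    h, w = mask.shape
    # Pad with True so erosion does not shrink objects touching the border.
    p = np.pad(mask, r, constant_values=True)
    eroded = np.ones_like(mask)
    for dy, dx in offs:
        eroded &= p[r + dy:r + dy + h, r + dx:r + dx + w]
    p = np.pad(eroded, r, constant_values=False)
    opened = np.zeros_like(mask)
    for dy, dx in offs:  # the disk is symmetric, so no reflection is needed
        opened |= p[r + dy:r + dy + h, r + dx:r + dx + w]
    return opened


def preprocess(mask):
    """Fill holes, drop particles < 190 px, then open with a radius-5 disk."""
    mask = np.asarray(mask, dtype=bool)
    return open_disk(remove_small(fill_holes(mask), 190), 5)
```

The pure-Python search is slow on full 911 × 682 images; it is meant only to make each operation explicit.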

The identification of touching urediniospores based on the shape factor and area

Figure 4c shows that the binary image contained not only individual urediniospores but also groups of two or more touching urediniospores. The contours of touching urediniospores were larger and more complex than those of individual urediniospores. A combination of the shape factor of the target contour and its area was therefore used to discriminate touching urediniospores. The shape factor (SF)37 is defined as

$$SF = \frac{4\pi S}{L^{2}} \tag{1}$$

where S is the area pixel value of a connected region and L is the perimeter pixel value of a connected region.

Sampling statistics showed that touching urediniospores had larger, more complicated (concave) contours and therefore smaller shape factors than individual urediniospores. Objects with 0.9080 < SF < 1.0912 and 200 < S < 523 were individual urediniospores, whereas objects with 0.2625 < SF < 0.7606 and 600 < S < 2301 were touching urediniospores. Based on various tests, the thresholds of SF and S were therefore set to 0.8 and 560, respectively. Let i be a connected region. If

$$SF_i > 0.8 \quad \text{and} \quad S_i < 560 \tag{2}$$

is satisfied, region i is assessed to be an individual urediniospore; otherwise, it is assessed to be a group of touching urediniospores. Figure 5 shows the result of touching urediniospore identification for Fig. 4c, where the individual and touching urediniospores were correctly discriminated.
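The discrimination rule can be sketched as follows, assuming SF is the usual circularity 4πS/L², which equals 1 for a perfect circle and is consistent with the individual-spore range around 1 reported above. The thresholds 0.8 and 560 are those from the text; the area S and perimeter L are taken as given pixel measurements (how they are measured from the binary image is left open), and the function names are hypothetical.

```python
import math


def shape_factor(area, perimeter):
    """Circularity 4*pi*S / L**2; 1.0 for an ideal circle, smaller when concave."""
    return 4.0 * math.pi * area / perimeter ** 2


def is_individual(area, perimeter, sf_thresh=0.8, area_thresh=560):
    """True for an individual urediniospore, False for touching ones."""
    return shape_factor(area, perimeter) > sf_thresh and area < area_thresh
```

For example, a region with S = 900 and L = 150 has SF ≈ 0.50 and is classed as touching urediniospores, while a circular region of radius 10 pixels (S ≈ 314, SF = 1) is classed as individual. A large but round region still counts as touching because the area test fails.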

Figure 5.

Binary images of individual and touching urediniospores: (a) binary image of individual urediniospores; (b) binary image of touching urediniospores.

Touching urediniospore contour segmentation based on concavity

After the above step, each region identified as an individual urediniospore in the binary image was fitted directly with an ellipse by the least-squares ellipse fitting method and counted. To count the spores precisely, the touching urediniospores must be separated, which is the main contribution of this work.

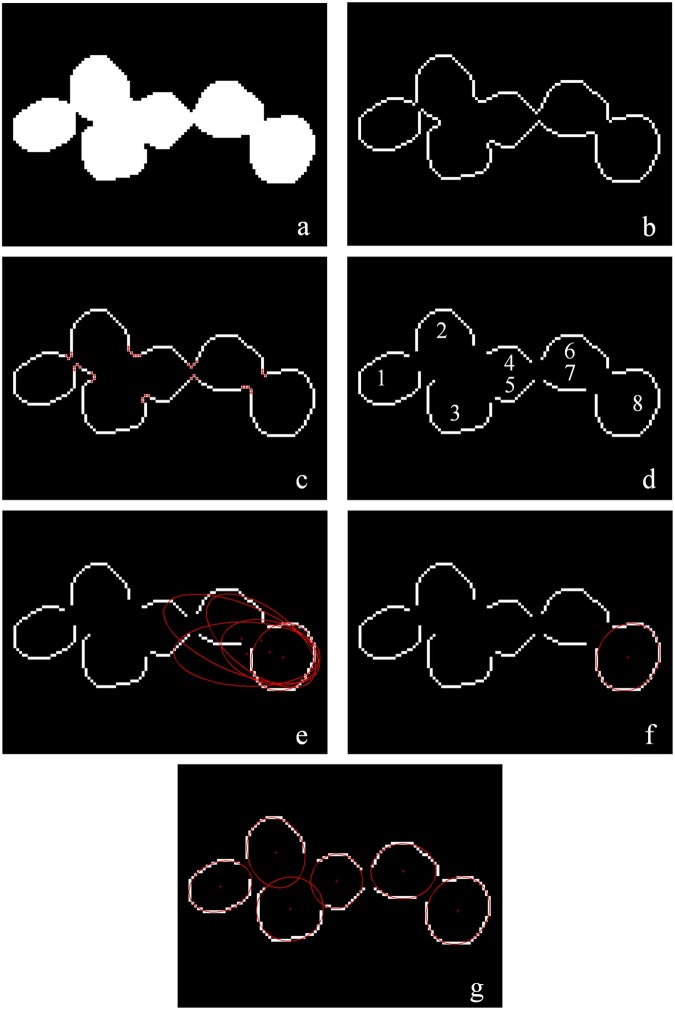

As an example, the contour of the touching urediniospores in the red rectangle in Fig. 5b was extracted with the Canny edge detector (shown enlarged in Fig. 6b), and the contour points were stored in an ordered list. Concave points were then detected as follows.

Figure 6.

Processing example of the proposed algorithm: (a) binary image after pre-processing; (b) contour of touching urediniospores; (c) red concave points on the contour; (d) contour segments; (e) candidate ellipses; (f) best representative ellipse of one urediniospore; (g) final separation result.

Let $p_t(x_t, y_t)$ be a contour point, and define the concavity of $p_t$ as the angle between the vectors $\overrightarrow{p_t p_{t-h}}$ and $\overrightarrow{p_t p_{t+h}}$, where $p_{t-h}$ and $p_{t+h}$ are the $h$th contour points before and after $p_t$; $h$ was set to 3 according to a pretest. The concavity can be expressed as

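$$\mathrm{concavity}(p_t) = \arccos \frac{\overrightarrow{p_t p_{t-h}} \cdot \overrightarrow{p_t p_{t+h}}}{\lVert \overrightarrow{p_t p_{t-h}} \rVert \, \lVert \overrightarrow{p_t p_{t+h}} \rVert} \tag{3}$$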

A contour point $p_t$ is identified as a concave point if it meets both of the following conditions (Fig. 6c): (1) $\mathrm{concavity}(p_t)$ lies in the range $[\delta_1, \delta_2]$; and (2) the line segment $\overline{p_{t-h}p_{t+h}}$ does not pass through the touching urediniospores.

Statistical analysis of 30 urediniospore image samples showed that the concavity of concave points, that is, the angle between $\overrightarrow{p_t p_{t-h}}$ and $\overrightarrow{p_t p_{t+h}}$, ranged from 50° to 150°. The values of $\delta_1$ and $\delta_2$ were therefore set to 50° and 150°, respectively.
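The concave-point test can be sketched as follows with h = 3 and [δ1, δ2] = [50°, 150°]. The sketch assumes the contour is a closed, ordered array of (x, y) points and that object membership is available as a callable `inside(x, y)`; condition (2) is approximated by checking nine evenly spaced interior points of the chord between $p_{t-h}$ and $p_{t+h}$. All names are hypothetical.

```python
import numpy as np


def concavity(contour, t, h=3):
    """Angle in degrees at contour[t] between vectors to its h-th neighbours."""
    n = len(contour)
    p = contour[t]
    v1 = contour[(t - h) % n] - p
    v2 = contour[(t + h) % n] - p
    cos = np.dot(v1, v2) / (np.linalg.norm(v1) * np.linalg.norm(v2))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


def chord_outside(a, b, inside, samples=9):
    """True if no interior sample of segment ab lies inside the object."""
    for s in np.linspace(0.0, 1.0, samples + 2)[1:-1]:
        x, y = a + s * (b - a)
        if inside(x, y):
            return False
    return True


def concave_points(contour, inside, h=3, d1=50.0, d2=150.0):
    """Indices of contour points satisfying both concavity conditions."""
    contour = np.asarray(contour, dtype=float)
    n = len(contour)
    found = []
    for t in range(n):
        if d1 <= concavity(contour, t, h) <= d2 and chord_outside(
                contour[(t - h) % n], contour[(t + h) % n], inside):
            found.append(t)
    return found
```

On a smooth convex arc the angle stays close to 180°, and the chord lies inside the object, so both tests reject it. Near a neck between two spores, several neighbouring points can pass both tests. This sketch returns all of them and does not pick a single point per neck.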

Using these concave points, the contour $C$ of the touching urediniospores was divided into $N$ contour segments and $K$ concave points (Fig. 6d):

$$C = \{CS_1, CS_2, \ldots, CS_N\} \tag{4}$$

The contours of the touching urediniospores were stored in an ordered cell structure using the 8-connected boundary tracking method and then used for subsequent urediniospore contour segment merging.
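Splitting the ordered contour at the concave points can be sketched as follows, using the hypothetical helper `split_contour`. Because the contour is described as N segments plus K concave points, the concave points themselves are excluded from the segments. Adjacent concave indices produce empty segments, and these are skipped.

```python
def split_contour(contour, concave_idx):
    """Split a closed, ordered contour into the segments between concave points."""
    n = len(contour)
    ks = sorted({i % n for i in concave_idx})
    if not ks:
        return [list(contour)]
    segments = []
    # Pair each concave index with the next one, wrapping around the contour.
    for a, b in zip(ks, ks[1:] + [ks[0] + n]):
        seg = [contour[j % n] for j in range(a + 1, b)]
        if seg:
            segments.append(seg)
    return segments
```

For a 10-point contour with concave points at indices 2 and 7, the segments are points 3–6 and points 8, 9, 0 and 1.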

Touching urediniospore contour segment merging

After the above step, the contour of a single urediniospore may be divided into several contour segments. This subsection describes how the contour segments that belong to the same urediniospore are merged. The distance measurement, the ellipse fitting algorithm and the deviation error measurement are introduced first, followed by the merging steps.

Distance measurement between contour segments

If the distance between two contour segments is large, the probability that they belong to the same urediniospore is low, and vice versa. On this basis, a distance measurement is used to express the relevance between contour segments and to eliminate segments that are unlikely to belong to the same urediniospore. The distance between two contour segments $CS_i$ and $CS_j$ is described as

| 5 |

where $d(p_{i1}, p_{j1})$ and the three other end-point terms are the Euclidean distances between the pairs of end points of contours $CS_i$ and $CS_j$, and the remaining term is the Euclidean distance between the middle points of $CS_i$ and $CS_j$.
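The five distances that enter Eq. (5) can be computed as in the sketch below. Because the rule that combines them into a single value is not reproduced in this text, the sketch takes it as a parameter and uses `min` purely as a placeholder assumption. The middle point is likewise assumed to be the point at index `len // 2` of each ordered segment. Function names are hypothetical.

```python
import math


def end_mid_distances(seg_i, seg_j):
    """Four end-point distances plus the middle-point distance of two segments."""
    pi1, pin, pim = seg_i[0], seg_i[-1], seg_i[len(seg_i) // 2]
    pj1, pjn, pjm = seg_j[0], seg_j[-1], seg_j[len(seg_j) // 2]
    return [math.dist(pi1, pj1), math.dist(pi1, pjn),
            math.dist(pin, pj1), math.dist(pin, pjn),
            math.dist(pim, pjm)]


def distance_measure(seg_i, seg_j, combine=min):
    """DM(CS_i, CS_j); `combine` is a placeholder for the rule of Eq. (5)."""
    return combine(end_mid_distances(seg_i, seg_j))
```

Swapping in the combining rule of Eq. (5) only requires passing a different `combine` function.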

Ellipse fitting

Because the urediniospores in the image were approximately elliptical, the least-squares ellipse fitting algorithm proposed by Fitzgibbon et al.38 was used to fit the urediniospore contours and separate the touching urediniospores. A general conic can be described by an implicit second-order polynomial:

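$$F(\alpha, X) = \alpha \cdot X = ax^{2} + bxy + cy^{2} + dx + ey + f = 0, \qquad \alpha = [a\ b\ c\ d\ e\ f]^{T}, \quad X = [x^{2}\ xy\ y^{2}\ x\ y\ 1]^{T} \tag{6}$$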

with an ellipse constraint:

$$4ac - b^{2} = 1 \tag{7}$$

where $a$, $b$, $c$, $d$, $e$ and $f$ (collected in $\alpha$) are the coefficients of the ellipse, and $x$ and $y$ are the coordinates of sample points lying on it.

Equation (7) can be represented in vector form:

$$\alpha^{T} C \alpha = 1 \tag{8}$$

where the constraint matrix C is of size 6 × 6, and

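$$C = \begin{pmatrix} 0 & 0 & 2 & 0 & 0 & 0 \\ 0 & -1 & 0 & 0 & 0 & 0 \\ 2 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 0 & 0 \end{pmatrix} \tag{9}$$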

The polynomial $F(\alpha, X_i)$ is called the algebraic distance of the data point $(x_i, y_i)$ to the ellipse $F(x, y) = 0$. Because of errors in the sample points, $F(\alpha, X_i) \neq 0$ in general. The ellipse fitting problem can therefore be solved by minimising the sum of the squared algebraic distances of the $N$ data points to the ellipse:

$$\min_{\alpha} \sum_{i=1}^{N} F(\alpha, X_i)^{2} \tag{10}$$

which can be reformulated in vector form:

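$$\min_{\alpha} \lVert D\alpha \rVert^{2} \quad \text{subject to} \quad \alpha^{T} C \alpha = 1 \tag{11}$$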

where the design matrix D of size N × 6 is

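$$D = \begin{pmatrix} x_1^{2} & x_1 y_1 & y_1^{2} & x_1 & y_1 & 1 \\ x_2^{2} & x_2 y_2 & y_2^{2} & x_2 & y_2 & 1 \\ \vdots & \vdots & \vdots & \vdots & \vdots & \vdots \\ x_N^{2} & x_N y_N & y_N^{2} & x_N & y_N & 1 \end{pmatrix} \tag{12}$$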

Introducing a Lagrange multiplier $\lambda$ and differentiating with respect to $\alpha$, based on Equations (8) and (11), leads to

$$W\alpha = \lambda C \alpha, \qquad \alpha^{T} C \alpha = 1 \tag{13}$$

where $W$ is the $6 \times 6$ scatter matrix and $\lambda$ is the Lagrange multiplier, that is, a generalised eigenvalue of the pair $(W, C)$:

$$W = D^{T} D \tag{14}$$

Equation (13) is a generalised eigenvalue problem. For each generalised eigenpair $(\lambda_i, u_i)$, scaling $u_i$ to satisfy the constraint gives

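$$\alpha_i = \mu_i u_i, \qquad \mu_i = \sqrt{\frac{1}{u_i^{T} C u_i}} = \sqrt{\frac{\lambda_i}{u_i^{T} W u_i}} \tag{15}$$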

where λi and ui are the eigenvalue and eigenvector for Equation (13).

The sum of the squared algebraic distances of the points to the ellipse can be derived as

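$$\lVert D\alpha_i \rVert^{2} = \alpha_i^{T} W \alpha_i = \lambda_i \alpha_i^{T} C \alpha_i = \lambda_i \tag{16}$$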

Thus, the scaled eigenvector $\alpha_i$ that corresponds to the minimal positive eigenvalue $\lambda_i$ represents the best-fit ellipse for the given set of points.
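The derivation can be checked numerically with the sketch below. It minimises the same criterion (the squared algebraic distance under the constraint 4ac − b² = 1). Instead of solving the 6 × 6 generalised eigenproblem of Eq. (13) directly, which is awkward because C is singular, it uses Halir and Flusser's 3 × 3 block reduction. This is a substituted, numerically stabler variant, not necessarily what the authors ran. Among the eigenvectors that satisfy the ellipse constraint, it keeps the one with the smallest eigenvalue, which equals the residual as in Eq. (16). The function name is hypothetical.

```python
import numpy as np


def fit_ellipse(x, y):
    """Direct least-squares ellipse fit; returns [a, b, c, d, e, f] with 4ac - b^2 = 1."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    D1 = np.column_stack([x * x, x * y, y * y])     # quadratic columns of D
    D2 = np.column_stack([x, y, np.ones_like(x)])   # linear columns of D
    S1, S2, S3 = D1.T @ D1, D1.T @ D2, D2.T @ D2    # blocks of W = D^T D
    T = -np.linalg.solve(S3, S2.T)                  # best [d, e, f] for given [a, b, c]
    M = S1 + S2 @ T                                  # reduced 3x3 scatter matrix
    C1_inv = np.array([[0.0, 0.0, 0.5], [0.0, -1.0, 0.0], [0.5, 0.0, 0.0]])
    evals, evecs = np.linalg.eig(C1_inv @ M)         # reduced form of W a = lambda C a
    real = np.isclose(np.imag(evals), 0.0)
    evals, evecs = np.real(evals), np.real(evecs)
    cond = 4 * evecs[0] * evecs[2] - evecs[1] ** 2   # a^T C a for each eigenvector
    ok = np.flatnonzero(real & (cond > 0))
    if ok.size == 0:
        raise ValueError("no elliptical solution for these points")
    best = ok[np.argmin(evals[ok])]                  # smallest residual lambda
    a1 = evecs[:, best]
    alpha = np.concatenate([a1, T @ a1])
    return alpha / np.sqrt(4 * alpha[0] * alpha[2] - alpha[1] ** 2)
```

The ellipse centre follows from setting the gradient of F to zero, which amounts to solving [[2a, b], [b, 2c]] · (x0, y0) = (−d, −e).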

Deviation error measurement from the contour segments to the fitted ellipse

Least-squares fitting alone does not consider how well the candidate contour segments agree with the fitted ellipse, so a wrong combination of segments can yield an ellipse that deviates greatly from the actual urediniospore. To exclude wrong candidate contour segments, a deviation error measurement (DEM) is proposed to evaluate the fit between the contour segments and the fitted ellipse:

$$DEM(CS^{\#}, CE) = \frac{E}{M^{\#}} \tag{17}$$

where $CS^{\#}$ denotes the data points of the given contour segments, $CE$ is the candidate ellipse, $E$ is the sum of the squared algebraic distances of the data points to the ellipse, and $M^{\#}$ is the total number of points on $CS^{\#}$.

If the deviation error is less than the threshold, the contour segments are considered to belong to the contour of the same urediniospore; otherwise, they are not.
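A minimal sketch of the deviation error follows. It reads Eq. (17) as the sum E of squared algebraic distances divided by the point count M#, as the definitions of E and M# suggest. Because algebraic distances scale with the conic coefficients, the sketch assumes the coefficients are normalised as in Eq. (8) before the threshold is applied. The helper names are hypothetical.

```python
def algebraic_distance(alpha, x, y):
    """F(alpha, X) = a x^2 + b x y + c y^2 + d x + e y + f."""
    a, b, c, d, e, f = alpha
    return a * x * x + b * x * y + c * y * y + d * x + e * y + f


def dem(points, alpha):
    """Mean squared algebraic distance of the segment points to the ellipse."""
    pts = list(points)
    if not pts:
        raise ValueError("no contour points")
    e_sum = sum(algebraic_distance(alpha, x, y) ** 2 for x, y in pts)
    return e_sum / len(pts)


def same_spore(points, alpha, sigma_dem=95.0):
    """Accept the candidate segments if their DEM is below the threshold."""
    return dem(points, alpha) < sigma_dem
```

For the unit circle x² + y² − 1 = 0, points on the circle give a DEM of 0, and the points (2, 0) and (0, 0) give (3² + (−1)²)/2 = 5.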

Steps of contour segment merging

Fig. 6d shows an example that illustrates the merging steps. As shown in Fig. 6d, the contour of the touching urediniospores was divided into eight contour segments. The merging steps of the contour segments of the touching urediniospores are as follows:

Step 1: Select the longest of the remaining contour segments (CS8 in this example).

Step 2: Set a distance measurement threshold ωDM and measure the distance between the selected segment CS8 and each of the remaining segments CSi. Build the set CS* of segments that satisfy DM(CS8, CSi) < ωDM; that is,

$$CS^{*} = \{\, CS_i \mid DM(CS_8, CS_i) < \omega_{DM},\ i \neq 8 \,\} \tag{18}$$

Thus, a set CS* is constructed with CS4, CS5, CS6 and CS7 because their distance measurements are smaller than ωDM.

Step 3: Fit an ellipse to CS8 together with each segment in CS* using the least-squares ellipse fitting algorithm (ellipses in Fig. 6e). Calculate the DEM of each pair of contour segments (CS8 and CS4, CS8 and CS5, CS8 and CS6, and CS8 and CS7) with respect to its fitted ellipse. Merge CS8 with all CSi whose DEMs are smaller than the threshold σDEM to create CSnew:

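$$CS_{new} = CS_8 \cup \{\, CS_i \in CS^{*} \mid DEM(CS_8 \cup CS_i,\ CE_{8i}) < \sigma_{DEM} \,\} \tag{19}$$

where $CE_{8i}$ is the ellipse fitted to $CS_8 \cup CS_i$.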

In this example, no segment satisfies this condition, so CSnew consists of CS8 alone.

Step 4: Fit an ellipse to the new segment CSnew as the correct ellipse for one of the touching urediniospores (Fig. 6f), and increase the urediniospore count Num by 1.

Step 5: Delete the segments of CSnew from the contour segment list CSN. If all segments in CSN have been deleted, output all correct ellipses and end the program; otherwise, return to Step 1. The steps are iterated until all contour segments have been used to fit the best representative ellipses (Fig. 6g).

Statistical analysis of 30 urediniospore image samples showed that, with ωDM = 40, all contour segments of the same spore were included in CS*, and with σDEM = 95, the wrong candidate contour segments were excluded. ωDM and σDEM were therefore set to 40 and 95, respectively. The results of the proposed algorithm for segmenting and counting the images in Fig. 3 are shown in Fig. 7, which shows that the touching urediniospores were well separated.
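The control flow of Steps 1–5 is sketched below. The distance measurement and the DEM are passed in as functions, so the sketch does not depend on any particular form of Eq. (5). The names are hypothetical, and the default thresholds are the values ωDM = 40 and σDEM = 95 chosen above. As in Step 3, each candidate is tested by fitting an ellipse to the selected segment plus that single candidate.

```python
def merge_segments(segments, dm, dem_of_union, omega_dm=40.0, sigma_dem=95.0):
    """Greedy contour-segment merging; returns one index group per urediniospore.

    dm(i, j)            -> distance measurement between segments i and j
    dem_of_union(idxs)  -> DEM of the ellipse fitted to the union of those segments
    """
    remaining = list(range(len(segments)))
    groups = []
    while remaining:
        # Step 1: longest remaining segment (ties go to the lowest index).
        s = max(remaining, key=lambda i: len(segments[i]))
        # Step 2: CS* = remaining segments close enough to s (Eq. 18).
        cs_star = [i for i in remaining if i != s and dm(s, i) < omega_dm]
        # Step 3: merge candidates whose joint ellipse fit with s is good (Eq. 19).
        group = [s] + [i for i in cs_star if dem_of_union([s, i]) < sigma_dem]
        # Step 4: each merged group yields one ellipse, i.e. one urediniospore.
        groups.append(sorted(group))
        # Step 5: delete the merged segments and repeat until none remain.
        remaining = [i for i in remaining if i not in group]
    return groups
```

The urediniospore count Num for a clump is then `len(groups)`, and an ellipse fitted to the union of each group's points gives its representative ellipse.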

Figure 7.

Segmentation effect and counting results of the original image of urediniospores under different capture times: (a) image after capturing for 5 min; (b) image after capturing for 10 min; (c) image after capturing for 15 min.

Acknowledgements

This research was supported by the overall innovation project of science and technology of Shaanxi province (No. 2015KTZDNY01-06). The authors thank Huaibo Song, Dandan Wang, and Gangming Zhan for their helpful suggestions.

Author Contributions

Y.L. and D.J.H. conceived and designed the research. Y.L. carried out the experiments and image processing, wrote the main manuscript text and prepared figures. Z.F.Y. contributed to the data processing and critical revision of the manuscript. All authors reviewed the manuscript.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Wellings CR. Global status of stripe rust: a review of historical and current threats. Euphytica. 2011;179:129–141. doi: 10.1007/s10681-011-0360-y. [DOI] [Google Scholar]

- 2.Chen WQ, Wellings C, Chen XM, Kang ZS, Liu TG. Wheat stripe (yellow) rust caused by Puccinia striiformis f. sp.tritici. Mol. Plant Pathol. 2014;15:433–446. doi: 10.1111/mpp.12116. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Wan AM, et al. Wheat stripe rust epidemic and virulence of Puccinia striiformis f. sp tritici in China in 2002. Plant Dis. 2004;88:896–904. doi: 10.1094/PDIS.2004.88.8.896. [DOI] [PubMed] [Google Scholar]

- 4.Wellings CR. Puccinia striiformis in Australia: a review of the incursion, evolution, and adaptation of stripe rust in the period 1979–2006. Aust. J. Agric. Res. 2007;58:567–575. doi: 10.1071/AR07130. [DOI] [Google Scholar]

- 5.Jin Y, Szabo LJ, Carson M. Century-Old Mystery of Puccinia striiformis Life History Solved with the Identification of Berberis as an Alternate Host. Phytopathology. 2010;100:432–435. doi: 10.1094/PHYTO-100-5-0432. [DOI] [PubMed] [Google Scholar]

- 6.Wang MN, Chen XM. First Report of Oregon Grape (Mahonia aquifolium) as an Alternate Host for the Wheat Stripe Rust Pathogen (Puccinia striiformis f. sp tritici) Under Artificial Inoculation. Plant Dis. 2013;97:839–839. doi: 10.1094/PDIS-09-12-0864-PDN. [DOI] [PubMed] [Google Scholar]

- 7.Brown JKM, Hovmoller MS. Epidemiology - Aerial dispersal of pathogens on the global and continental scales and its impact on plant disease. Science. 2002;297:537–541. doi: 10.1126/science.1072678. [DOI] [PubMed] [Google Scholar]

- 8.Zeng S-M, Luo Y. Long-distance spread and interregional epidemics of wheat stripe rust in China. Plant Dis. 2006;90:980–988. doi: 10.1094/PD-90-0980. [DOI] [PubMed] [Google Scholar]

- 9.Chen X, Penman L, Wan A, Cheng P. Virulence races of Puccinia striiformis f. sp tritici in 2006 and 2007 and development of wheat stripe rust and distributions, dynamics, and evolutionary relationships of races from 2000 to 2007 in the United States. Can. J. Plant Pathol. 2010;32:315–333. doi: 10.1080/07060661.2010.499271. [DOI] [Google Scholar]

- 10.Wang H, Yang XB, Ma Z. Long-Distance Spore Transport of Wheat Stripe Rust Pathogen from Sichuan, Yunnan, and Guizhou in Southwestern China. Plant Dis. 2010;94:873–880. doi: 10.1094/PDIS-94-7-0873. [DOI] [PubMed] [Google Scholar]

- 11. Isard SA, et al. Predicting Soybean Rust Incursions into the North American Continental Interior Using Crop Monitoring, Spore Trapping, and Aerobiological Modeling. Plant Dis. 2011;95:1346–1357. doi: 10.1094/PDIS-01-11-0034.

- 12. Choudhury RA, et al. Season-Long Dynamics of Spinach Downy Mildew Determined by Spore Trapping and Disease Incidence. Phytopathology. 2016;106:1311–1318. doi: 10.1094/PHYTO-12-15-0333-R.

- 13. Prados-Ligero AM, Melero-Vara JM, Corpas-Hervias C, Basallote-Ureba MJ. Relationships between weather variables, airborne spore concentrations and severity of leaf blight of garlic caused by Stemphylium vesicarium in Spain. Eur. J. Plant Pathol. 2003;109:301–310. doi: 10.1023/A:1023519029605.

- 14. Kunjeti S, et al. Detection and quantification of Bremia lactucae by spore trapping and quantitative PCR. Phytopathology. 2016;106:22. doi: 10.1094/PHYTO-03-16-0143-R.

- 15. Meitz-Hopkins JC, von Diest SG, Koopman TA, Bahramisharif A, Lennox CL. A method to monitor airborne Venturia inaequalis ascospores using volumetric spore traps and quantitative PCR. Eur. J. Plant Pathol. 2014;140:527–541. doi: 10.1007/s10658-014-0486-6.

- 16. Glynn NC, Haudenshield JS, Hartman GL, Raid RN, Comstock JC. Monitoring sugarcane rust spore concentrations by real-time qPCR and passive spore trapping. Phytopathology. 2011;101:S61.

- 17. Deng X, et al. Detection of citrus Huanglongbing based on image feature extraction and two-stage BPNN modeling. Int. J. Agric. Biol. Eng. 2016;9:20–26. doi: 10.1186/s13036-015-0018-8.

- 18. Wang H, Li G, Ma Z, Li X. Image Recognition of Plant Diseases Based on Backpropagation Networks. 2012 5th International Congress on Image and Signal Processing (CISP). 2012:894–900.

- 19. Barbedo JGA, Koenigkan LV, Santos TT. Identifying multiple plant diseases using digital image processing. Biosyst. Eng. 2016;147:104–116. doi: 10.1016/j.biosystemseng.2016.03.012.

- 20. Zhang S, Wu X, You Z, Zhang L. Leaf image based cucumber disease recognition using sparse representation classification. Comput. Electron. Agric. 2017;134:135–141. doi: 10.1016/j.compag.2017.01.014.

- 21. Shrivastava S, Singh SK, Hooda DS. Color sensing and image processing-based automatic soybean plant foliar disease severity detection and estimation. Multimed. Tools Appl. 2015;74:11467–11484. doi: 10.1007/s11042-014-2239-0.

- 22. Parikh A, Raval MS, Parmar C, Chaudhary S. Disease Detection and Severity Estimation in Cotton Plant from Unconstrained Images. Proceedings of the 3rd IEEE/ACM International Conference on Data Science and Advanced Analytics (DSAA 2016). 2016:594–601.

- 23. Li XL, et al. Development of automatic counting system for urediospores of wheat stripe rust based on image processing. Int. J. Agric. Biol. Eng. 2017;10:134–143.

- 24. Chesmore D, Bernard T, Inman AJ, Bowyer RJ. Image analysis for the identification of the quarantine pest Tilletia indica. EPPO Bulletin. 2003;33:495–499. doi: 10.1111/j.1365-2338.2003.00686.x.

- 25. Wang D, Wang B, Yan Y. The identification of powdery mildew spores image based on the integration of intelligent spore image sequence capture device. 2013 Ninth International Conference on Intelligent Information Hiding and Multimedia Signal Processing (IIH-MSP 2013). 2013:177–180.

- 26. Xu PY, Li JG. Computer assistance image processing spores counting. In: Loach K, editor. 2009 International Asia Conference on Informatics in Control, Automation, and Robotics, Proceedings. 2009:203–206.

- 27. Yu DG, Pham TD, Zhou XB. Analysis and recognition of touching cell images based on morphological structures. Comput. Biol. Med. 2009;39:27–39. doi: 10.1016/j.compbiomed.2008.10.006.

- 28. Gharipour A, Liew AW-C. Segmentation of cell nuclei in fluorescence microscopy images: An integrated framework using level set segmentation and touching-cell splitting. Pattern Recogn. 2016;58:1–11. doi: 10.1016/j.patcog.2016.03.030.

- 29. Wang P, Hu X, Li Y, Liu Q, Zhu X. Automatic cell nuclei segmentation and classification of breast cancer histopathology images. Math. Comput. Modell. 2016;122:1–13.

- 30. Wang D, Song H, Tie Z, Zhang W, He D. Recognition and localization of occluded apples using K-means clustering algorithm and convex hull theory: a comparison. Multimed. Tools Appl. 2016;75:3177–3198. doi: 10.1007/s11042-014-2429-9.

- 31. Xu Y, Li YH, Song HB, He DJ. Segmentation method of overlapped double apples based on Snake model and corner detectors. Transactions of the CSAE. 2015;31:196–203.

- 32. Yan L, Park CW, Lee SR, Lee CY. New separation algorithm for touching grain kernels based on contour segments and ellipse fitting. J. Zhejiang Univ. Sci. Comput. Electron. 2011;12:54–61. doi: 10.1631/jzus.C0910797.

- 33. Mebatsion HK, Paliwal J. A Fourier analysis based algorithm to separate touching kernels in digital images. Biosyst. Eng. 2011;108:66–74. doi: 10.1016/j.biosystemseng.2010.10.011.

- 34. Lin P, Chen YM, He Y, Hu GW. A novel matching algorithm for splitting touching rice kernels based on contour curvature analysis. Comput. Electron. Agric. 2014;109:124–133. doi: 10.1016/j.compag.2014.09.015.

- 35. Cheng H, Peng H, Liu S. An improved K-means clustering algorithm in agricultural image segmentation. In: Tan H, editor. PIAGENG 2013: Image Processing and Photonics for Agricultural Engineering. 2013;8761.

- 36. Yao H, Duan Q, Li D, Wang J. An improved K-means clustering algorithm for fish image segmentation. Math. Comput. Modell. 2013;58:784–792.

- 37. Xu MJ, et al. A deep convolutional neural network for classification of red blood cells in sickle cell anemia. PLoS Comp. Biol. 2017;13:27. doi: 10.1371/journal.pcbi.1005746.

- 38. Fitzgibbon A, Pilu M, Fisher RB. Direct least square fitting of ellipses. IEEE Trans. Pattern Anal. Mach. Intell. 1999;21:476–480. doi: 10.1109/34.765658.