Abstract

Background

To predict and prevent mental health crises, we must develop new approaches that can provide a dramatic advance in the effectiveness, timeliness, and scalability of our interventions. However, current methods of predicting mental health crises (eg, clinical monitoring, screening) usually fail on most, if not all, of these criteria. Luckily for us, 77% of Americans carry with them an unprecedented opportunity to detect risk states and provide precise life-saving interventions. Smartphones present an opportunity to empower individuals to leverage the data they generate through their normal phone use to predict and prevent mental health crises.

Objective

To facilitate the collection of high-quality, passive mobile sensing data, we built the Effortless Assessment of Risk States (EARS) tool to enable the generation of predictive machine learning algorithms to solve previously intractable problems and identify risk states before they become crises.

Methods

The EARS tool captures multiple indices of a person’s social and affective behavior via their naturalistic use of a smartphone. Although other mobile data collection tools exist, the EARS tool places a unique emphasis on capturing the content as well as the form of social communication on the phone. Signals collected include facial expressions, acoustic vocal quality, natural language use, physical activity, music choice, and geographical location. Critically, the EARS tool collects these data passively, with almost no burden on the user. We programmed the EARS tool in Java for the Android mobile platform. In building the EARS tool, we concentrated on two main considerations: (1) privacy and encryption and (2) phone use impact.

Results

In a pilot study (N=24), participants tolerated the EARS tool well, reporting minimal burden. None of the participants who completed the study reported needing to use the provided battery packs. Current testing on a range of phones indicated that the tool consumed approximately 15% of the battery over a 16-hour period. Installation of the EARS tool caused minimal change in the user interface and user experience. Once installation is completed, the only difference the user notices is the custom keyboard.

Conclusions

The EARS tool offers an innovative approach to passive mobile sensing by emphasizing the centrality of a person’s social life to their well-being. We built the EARS tool to power cutting-edge research, with the ultimate goal of leveraging individual big data to empower people and enhance mental health.

Keywords: passive mobile sensing, personal sensing, mobile sensing, mental health, risk assessment, crisis prevention, individual big data, telemedicine, mobile apps, cell phone, depression

Motivation

If health professionals cannot predict and prevent mental health crises, the field faces a crisis of its own. Although we have many evidence-based treatments, greater population-wide access to these treatments has thus far failed to yield significant reductions in the burden of mental health disorders [1-3]. This failure manifests, for example, in the meteoric rise of major depression in the World Health Organization’s rankings of conditions responsible for lost years of healthy life [4] and in the recent increase in suicide rates in some 50 World Health Organization member states, including an increase of 28% in the United States from 2000 to 2015 [5,6]. To predict and prevent mental health crises, we must develop new approaches that can provide a dramatic advance in the effectiveness, timeliness, and scalability of our interventions.

Intervening at critical moments in a person’s life—that is, during mental health crises, including times of risk for suicide, self-harm, psychotic breakdown, substance use relapse, and interpersonal loss—could dramatically enhance the effectiveness of mental health intervention. Even with the most extreme mental health crises, such as acute suicide risk, we find that the most effective interventions provide barriers to harmful behaviors at the critical moment of action. Take, for example, blister packaging on medications commonly used in suicide attempts. By placing a time-consuming, distracting barrier at just the right moment between a person and a suicide attempt, public health policy makers saved lives [7]. If we can improve the timeliness of our interventions, then even low-intensity interventions can have a major impact on improving health and saving lives [8]. Just-in-time adaptive interventions, delivered via mobile health apps and tailored to a person’s environment and internal state, headline a host of exciting developments in low-intensity, high-impact interventions [9].

Before we can take full advantage of these approaches, however, we face a critical challenge: prediction. It is one thing to recognize the tremendous power of precise timing for interventions, and it is quite another to possess the ability to identify the right time. Furthermore, as we face the challenge of predicting mental health crises, we must commit ourselves to meeting the highest standards of reliability, feasibility, scalability, and affordability. Given the large proportion of individuals experiencing mental health crises who do not receive treatment [10], prediction methods must reach those who are not in traditional mental health care. Current methods of predicting mental health crises (eg, clinical monitoring, screening) usually fail on most, if not all, of these criteria.

Luckily for us, 77% of Americans carry with them an unprecedented opportunity to detect risk states and provide precise life-saving interventions [11]. Smartphones enable the kind of access and insight into an individual’s behavior and mood that clinicians dream of. Packed with sensors and integral to many people’s lives, smartphones present an opportunity to empower individuals (and their clinicians, if individuals so choose) to leverage the data they generate every day through their normal phone use to predict and prevent mental health crises. This approach to data collection is called passive mobile sensing. Collecting high-quality, passive mobile sensing data will enable the generation of predictive machine learning algorithms to solve previously intractable problems and identify risk states before they become crises. To facilitate this goal, our team built the Effortless Assessment of Risk States (EARS) tool.

Justification

We designed the EARS tool to capture multiple indices of behavior through an individual’s normal phone use. We selected these indices based on findings that demonstrate their links to mental health states such as depression and suicidality. These indices include physical activity, geolocation, sleep, phone use duration, music choice, facial expressions, acoustic vocal quality, and natural language use.

Physical activity, geolocation, sleep, phone use duration, and music choice data convey information about how individuals interact with their environments. Physical activity has a rich history of positive outcomes for mental health, including a finding from the Netherlands Mental Health Survey and Incidence Study (N=7076) that showed a strong correlation between more exercise during leisure time and both a lower incidence of mood and anxiety disorders and a faster recovery when those disorders do strike [12]. Geolocation overlaps with physical activity in part, but it can also provide important insight into the quality of a person’s daily movement and the environments in which they are spending their time. Saeb and colleagues demonstrated the power of 3 discrete movement quality variables derived from smartphone global positioning system (GPS) data to predict depressive symptom severity [13]. Meanwhile, environmental factors indexed by GPS data, such as living in a city and being exposed to green areas, have consequences for social stress processing and long-term mental health outcomes [14,15]. Sleep provides another powerful signal to help us predict depression and suicidality. One of the most common prodromal features of depression, sleep disturbance (including delayed sleep onset, difficulty staying asleep, and early morning wakening), also relates significantly to suicidality [16,17]. While evidence suggests that phone use duration may affect depression and suicidality via sleep disturbance [18], we believe that phone use duration also deserves study in its own right. For example, Thomée and colleagues found that high phone use predicted higher depressive symptoms, but not sleep disturbance, in women 1 year later [19]. Finally, music choice may provide affective insight, as recent findings suggest that listeners choose music to satisfy emotional needs, especially during periods of negative mood [20].

In contrast with the variables described above, EARS collects other signals that directly reflect both the form and content of an individual’s interpersonal engagement. Facial expressions can convey useful information about an individual’s mood states, and disturbed mood is a core feature of clinical depression. For example, Girard and colleagues found that participants with elevated depressive symptoms expressed fewer smiles and more signifiers of disgust [21]. Capturing these indicators of mood in a person’s facial expressions has become much more efficient and affordable in recent years with the advent of automated facial analysis [22].

While perhaps less intuitive than facial expression indicators, several aspects of acoustic vocal quality provide robust measures of depression and suicidality. These aspects include speech rate, vocal prosody, vowel space, and other machine learning-derived features [23-26]. Whereas acoustic voice quality ignores the semantic content of communication, natural language processing focuses on it. In addition to strong depression signals in written language in laboratory settings [27], accumulating evidence suggests that social media natural language content, especially expressions of anger and sadness, may identify suicidal individuals [28,29].

We are not the first research team to recognize the potential of passive mobile sensing to capture these behavioral indices. Numerous passive mobile sensing tools capture one or more of the variables described above. Table 1 [30-36] compares the 8 most feature-rich tools (Multimedia Appendix 1 compares the 12 most feature-rich tools [30-40]; for a broader survey of available tools, see the Wikipedia page “Mobile phone based sensing software” [41]).

Table 1.

A comparison of the most feature-rich, research-grade, passive mobile sensing apps<sup>a</sup>.

| Feature | EARS<sup>b</sup> | AWARE [30] | EmotionSense [31] | Purple Robot [32] | Beiwe [33] | Funf [34] | RADAR-CNS<sup>c</sup> [35] | StudentLife [36] |
|---|---|---|---|---|---|---|---|---|
| Geolocation | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Accelerometer | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Bluetooth colocation |  | ✔ | ✔<sup>d</sup> | ✔ | ✔ | ✔ | ✔ | ✔ |
| Ambient light | ✔ | ✔ | ✔ | ✔ |  |  | ✔ | ✔ |
| Ambient noise |  | ✔ | ✔ | ✔ |  |  |  | ✔ |
| Charging time | ✔ |  |  |  |  |  |  |  |
| Screen-on time | ✔ |  | ✔ |  | ✔ | ✔ |  |  |
| App use | ✔ | ✔ | ✔<sup>d</sup> | ✔<sup>d</sup> |  | ✔ | ✔ | ✔ |
| Screen touch events |  |  |  |  | ✔ |  |  |  |
| SMS<sup>e</sup> frequency | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |  |
| SMS transcripts | ✔ |  |  | ✔ |  |  |  |  |
| Call frequency | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Browser history |  |  |  |  |  | ✔ |  |  |
| In-call acoustic voice sample | ✔ |  |  |  |  |  |  |  |
| All typed text | ✔ | ✔ |  |  |  |  |  |  |
| Facial expressions | ✔ |  |  |  |  |  |  |  |
| Music choice | ✔ |  |  |  |  |  |  |  |
| Barometer |  | ✔ |  |  |  |  |  |  |
| Wearables | ✔ |  |  |  |  |  | ✔ |  |
| Video diary<sup>f</sup> | ✔ |  |  |  |  |  |  |  |
| Audio diary<sup>f</sup> |  |  |  |  | ✔ |  |  |  |
| Ecological momentary assessment<sup>f</sup> | ✔ | ✔ | ✔ |  | ✔ | ✔ |  | ✔ |
| Count | 16 | 11 | 10 | 9 | 9 | 9 | 8 | 8 |

<sup>a</sup>EARS, AWARE, EmotionSense, Purple Robot, Beiwe, Funf, and RADAR-CNS are open source.

<sup>b</sup>EARS: Effortless Assessment of Risk States.

<sup>c</sup>RADAR-CNS: Remote Assessment of Disease and Relapse – Central Nervous System.

<sup>d</sup>Availability of the feature is limited to Android versions Kit-Kat 4.4 and below.

<sup>e</sup>SMS: short message service.

<sup>f</sup>Feature is not passive (ie, requires active engagement from the user).

Existing passive mobile sensing apps focus largely on sensor data (eg, GPS, accelerometer) and on the occurrence of phone calls and text messages (short message service [SMS]); that is, they record when calls and SMS happen, but generally not what they contain. The EARS tool improves on these apps by adding several indices specifically relevant to interpersonal communication.

What the Effortless Assessment of Risk States Tool Does

The EARS tool captures multiple indices of a person’s social and affective behavior via their naturalistic use of a smartphone. As noted above, these indices include facial expressions, acoustic vocal quality, natural language use, physical activity, music choice, phone use duration, sleep, and geographical location. Critically, the EARS tool collects these data passively, with almost no burden on the user. For example, the EARS tool collects all language typed into the phone. These various data channels are encrypted and uploaded to a secure cloud server, then downloaded and decrypted in our laboratory. Preliminary analyses of these data are underway in our laboratory. Future iterations of the EARS tool will incorporate additional variables and automated analysis on the mobile device itself, which will facilitate both privacy and speed.

The first version of the EARS tool included four key features. A custom keyboard logged every nth word typed into the phone across all apps (n to be determined by the research team). A patch into the Google Fit application programming interface (Google Inc, Mountain View, CA, USA) collected physical activity data, including walking, running, biking, and car travel. A daily video diary used a persistent notification to prompt users to open the EARS app and record a 2-minute video of themselves talking about their day. While the video diary required active engagement of the user (similar to ecological momentary assessment), it provided a critical bridge in the early EARS tool while our team worked to develop passive means to capture facial expression and acoustic voice data. Each of the above data types (text entry, physical activity, and video diary) was tagged with the final data type of the first EARS tool: geolocation information. Since the Google Fit upload and video diary each occurred only once per day, the keyboard logger provided the richest source of geolocation data. Every time a user entered text into their phone, that text entry triggered a geotag. This approach to gathering geolocation data enabled us to avoid the battery drain of constant GPS data collection.
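The keyboard logging and geotagging logic described above can be illustrated with a minimal Python sketch. This is not the EARS implementation (which is written in Java for Android); the class name `NthWordLogger`, the `get_location` callback, and the example coordinates are all hypothetical. The sketch assumes that the word counter runs continuously across text entries and that one location lookup is made per text entry.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Location = Tuple[float, float]  # (latitude, longitude)


@dataclass
class TextEntryRecord:
    sampled_words: List[str]      # only the every-nth words that were kept
    location: Optional[Location]  # geotag taken when the text was entered


class NthWordLogger:
    """Keeps every nth typed word and geotags each text entry (illustrative only)."""

    def __init__(self, n: int, get_location: Callable[[], Optional[Location]]):
        if n < 1:
            raise ValueError("n must be a positive integer")
        self.n = n
        self.get_location = get_location  # hypothetical location provider
        self.words_seen = 0
        self.records: List[TextEntryRecord] = []

    def on_text_entry(self, text: str) -> None:
        # One location lookup per text entry, rather than continuous GPS polling.
        location = self.get_location()
        sampled = []
        for word in text.split():
            self.words_seen += 1
            if self.words_seen % self.n == 0:
                sampled.append(word)
        self.records.append(TextEntryRecord(sampled, location))


# Arbitrary example coordinates; n=3 is chosen only for demonstration.
logger = NthWordLogger(n=3, get_location=lambda: (44.05, -123.07))
logger.on_text_entry("running late see you at the library")
```

With n set to 3, the entry above keeps the third and sixth words and attaches a single geotag to the entry, which mirrors the idea that typing, not a continuous GPS loop, drives location sampling.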

We piloted the first version of the EARS tool in the Effortless Assessment of Stressful Experiences (EASE) study (approved by the University of Oregon Institutional Review Board; protocol number 07212016.019). The EASE study employed an academic stress paradigm to test whether the EARS tool generates data that index stress. We recruited 24 undergraduate students over fall and winter terms of 2016 and 2017 via the Psychology and Linguistics Human Subjects Pool. We acquired informed consent (including descriptions of our double encryption and data storage procedure), then twice collected weeklong sets of passive mobile sensing data. We conducted the baseline assessment 3 to 7 weeks before the students’ first final examination (avoiding weeks when they had a midterm or other major project due) and the follow-up assessment during the week prior to the students’ last final examination. Self-report questionnaires of perceived stress and mental health symptoms were administered on the last day of each assessment and asked about symptoms over the past week. Participants tolerated the EARS tool well, reporting minimal burden. One individual declined to participate in the study due to privacy concerns, and 1 individual declined due to dissatisfaction with the EARS keyboard. One participant dropped out due to privacy concerns, and 1 participant dropped out without explanation. None of the participants who completed the study reported needing to use the provided battery packs.

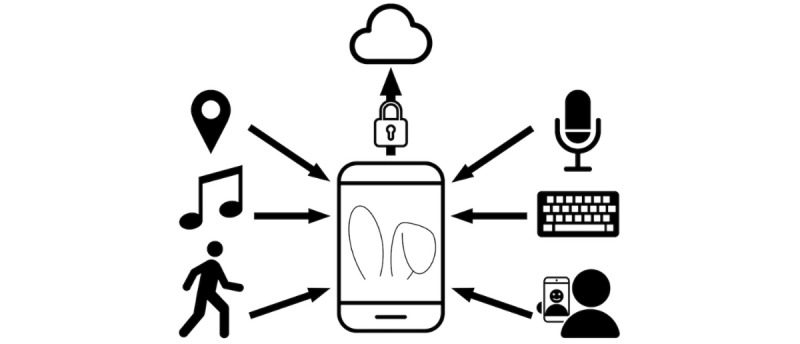

The current version of the EARS tool includes the four features of the original, plus several enhancements (see Figure 1 [42-51]). The first major enhancement is the addition of a “selfie scraper,” which is a pipeline that gathers all photos captured by the device’s camera and encrypts and uploads them to our laboratory. During the decryption process, facial recognition software scans the photos and retains only selfies of the participant, discarding all photos that do not include the user’s face or that include other faces. This feature enables us to collect facial expression data passively, bringing us a step closer to full passivity. Second, the current version takes another step closer to full passivity with the addition of passive voice collection. The EARS tool records through the device’s microphone (but not the earpiece) during phone calls, encrypts these recordings, then uploads them for acoustic voice quality analysis. The third important upgrade of the current version is the constant collection of inertial measurement unit data. These data power fine-grained analysis of physical activity and sleep, over and above what we can glean from Google Fit data. Fourth, paired with the inertial measurement unit data, the ability to sample ambient light via the phone’s sensors further enhances the EARS tool’s measurement of sleep. The fifth new feature monitors the notification center to capture what music the phone user listens to across various music apps. Sixth, we have added the automatic collection of 4 indices of phone use: SMS frequency, call frequency, screen-on time, and app use time.

Figure 1.

The Effortless Assessment of Risk States (EARS) tool collects multiple indices of behavior and mood.
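The retention rule of the selfie scraper (keep photos that show the participant and no one else) can be expressed as a short filter. The following Python sketch is illustrative only: the `Photo` structure, the `face_ids` labels, and the participant identifier are hypothetical stand-ins for the output of whatever facial recognition software is used, which the text does not specify.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Photo:
    filename: str
    # Identity labels a hypothetical face recognizer assigned to each detected face.
    face_ids: List[str]


def keep_participant_selfies(photos: List[Photo], participant_id: str) -> List[Photo]:
    """Retain photos in which the participant appears and no other face is present."""
    kept = []
    for photo in photos:
        has_participant = participant_id in photo.face_ids
        has_others = any(face != participant_id for face in photo.face_ids)
        if has_participant and not has_others:
            kept.append(photo)
    return kept


photos = [
    Photo("selfie.jpg", ["p01"]),
    Photo("landscape.jpg", []),             # no face: discarded
    Photo("group.jpg", ["p01", "unknown"]),  # other faces present: discarded
    Photo("friend.jpg", ["unknown"]),        # participant absent: discarded
]
retained = [p.filename for p in keep_participant_selfies(photos, "p01")]
```

In this example only `selfie.jpg` is retained, so photos of bystanders never enter the analysis set.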

The current version of the tool also facilitates integration with wearable technology and adds customization for research teams. To facilitate integration of wearable technology, the EARS tool includes a mechanism to collect raw data from wearable devices (eg, wrist wearables that measure actigraphy and heart rate). This improves efficiency of data collection and reduces the burden on the participant because it cuts out the step of signing in to each participant’s individual wearable account to download these data. Capturing raw wearable data may also yield physiological variables that are otherwise obscured by the preprocessing that occurs in standard wearable application programming interfaces. One such variable is respiratory sinus arrhythmia, a measure of parasympathetic nervous system activity that is associated with emotional and mental health states [52].

We hope to share the EARS tool with other research teams, and we recognize that different research questions will call for different passive mobile sensing variables. As such, we are making the EARS tool as modular as possible, so that teams seeking to test hypotheses related to, say, natural language use and selfies do not also have to collect geolocation and app use data. By using Android’s flavors functionality, teams can compile a version of EARS that includes the code only for variables of interest, which helps to optimize the tool for battery drain and prevent unnecessary collection of confidential data.

A person’s smartphone use over time generates what we call “individual big data.” Individual big data comprise time-intensive, detail-rich data streams that capture the trends and idiosyncrasies of a person’s existence. With the EARS tool, we seek to harness these individual big data to power innovation and insight. Our research team aims to detect risk states within participants by determining a person’s behavioral set point and analyzing their deviations around that set point. We see this goal as one of many possible applications of the EARS tool.
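One simple way to picture a behavioral set point and deviations around it is a within-person z-score. The Python sketch below is an assumption for illustration, not the authors' analysis method: it treats the mean of a baseline period as the set point, and the function name, the 2-SD threshold, and the sleep values are all hypothetical.

```python
from statistics import mean, stdev
from typing import List


def flag_deviations(baseline: List[float], recent: List[float],
                    threshold: float = 2.0) -> List[bool]:
    """Flag recent values lying more than `threshold` SDs from the baseline mean."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline observations")
    set_point = mean(baseline)   # the person's own typical level
    spread = stdev(baseline)     # the person's own typical variability
    if spread == 0:
        # No baseline variability: any change from the set point counts as a deviation.
        return [value != set_point for value in recent]
    return [abs(value - set_point) / spread > threshold for value in recent]


# Hypothetical nightly sleep durations (hours) for one participant.
baseline_sleep_hours = [7.5, 8.0, 7.0, 7.5, 8.0, 7.5, 7.0]
recent_sleep_hours = [7.5, 4.0, 7.0]
flags = flag_deviations(baseline_sleep_hours, recent_sleep_hours)
```

Because the baseline comes from the same individual, a 4-hour night is flagged for this participant even though it might be unremarkable for someone whose usual sleep is short.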

Effortless Assessment of Risk States Tool Engineering

We programmed the EARS tool in Java for the Android mobile platform (Google Inc). In building the EARS tool, we concentrated on two main considerations: (1) privacy and encryption, and (2) phone use impact. The EARS tool collects a massive amount of personal data, so ensuring that these data remain secure and cannot be used to identify users is of paramount concern. To achieve this, we have implemented a process to deidentify and encrypt the data.

To deidentify the data, the EARS tool uses the Android secure device identification (SSAID) to store and identify the data. The SSAID is accessible only when an Android user gives specific permission and is linked only to the hardware device, not a user or account name. We collect the SSAID and participant name at installation and store the SSAID key on non–cloud-based secure university servers. As a result, in the event of a breach of Amazon Web Services (AWS; Amazon.com, Inc, Seattle, WA, USA), there is no easy way for someone outside of our team to link the data with the name of the user who generated it. Obviously, this basic first step does not protect the actual content of the data. Therefore, we employ state-of-the-art encryption at multiple points in the pipeline.
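The separation between the identifier that travels with the data and the name that stays on university servers can be sketched as follows. This Python example is purely illustrative: `LinkageKey`, `cloud_record`, the field names, and the identifier value are hypothetical and do not reflect the actual EARS data format.

```python
from typing import Dict


class LinkageKey:
    """Device-identifier-to-name table kept off the cloud (illustrative only)."""

    def __init__(self) -> None:
        self._names: Dict[str, str] = {}

    def register(self, device_id: str, participant_name: str) -> None:
        self._names[device_id] = participant_name

    def name_for(self, device_id: str) -> str:
        return self._names[device_id]


def cloud_record(device_id: str, data_type: str, payload: bytes) -> Dict[str, object]:
    # Only the device identifier travels with the data; there is no name or account field.
    return {"device_id": device_id, "data_type": data_type, "payload": payload}


key = LinkageKey()  # in this sketch, stands in for the table on the university server
key.register("a1b2c3d4e5f60718", "Example Participant")
record = cloud_record("a1b2c3d4e5f60718", "keyboard", b"<encrypted bytes>")
```

Anyone holding only `record` sees a device identifier and an encrypted payload; relinking it to a person requires the separately stored key.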

After the sensors generate the data, the data are immediately encrypted by the EARS tool using 128-bit Advanced Encryption Standard (AES) encryption, a government standard endorsed by the US National Institute of Standards and Technology. Once encryption completes, the unencrypted data are immediately deleted. When transmitting the data to AWS, the EARS tool uses a Secure Sockets Layer connection to the server, meaning that all data in transit are encrypted a second time using the industry standard for encrypting data travelling between networks. After transmission to the cloud, the EARS tool then deletes the encrypted data from the phone’s memory. On upload to AWS, the data are then also protected by Amazon’s server-side encryption, which uses 256-bit AES encryption.

By encrypting the user data twice, once on the phone using our own encryption and a second time on the AWS servers using Amazon’s, we aim to ensure that no one outside our team can access the data at any point. Because the data are already encrypted before they leave the phone, even Amazon cannot read the unencrypted data, whether through rogue employees or in response to government demands. We aimed to improve acceptability of the EARS tool for users by taking these steps to protect users’ data and allay privacy concerns.

We took another important step toward maximizing acceptability of the EARS tool by prioritizing phone use impact. The EARS tool runs in the background at all times. To minimize the impact on the user’s day-to-day experience, we have endeavored to make the EARS tool as lightweight as possible. First, the tool consumes around 30 MB of RAM. Most Android phones have between 2 and 4 GB of RAM, meaning the tool uses roughly 0.7% to 1.5% of memory. Second, the use of phone sensors can have a large impact on the battery life of a phone, as they draw relatively large amounts of power. To combat this, we have moved most cloud uploads to late at night when the phone is usually plugged in, and only when the device is connected to a Wi-Fi network. We also limit GPS readings to once every 5 minutes and, if possible, obtain location data from known Wi-Fi points, rather than connecting to a satellite. Current testing on a range of phones indicated that the tool consumes approximately 15% of the battery over a 16-hour period. Third, installation of the EARS tool causes minimal change in the user interface or user experience. Once installation is completed, the only difference the user will notice is the custom keyboard. With the exception of the optional video diary feature, everything else is collected in the background with no user interaction.
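The resource figures and throttling rules in this paragraph can be checked with a short Python sketch. It is a back-of-the-envelope illustration under stated assumptions, not the tool's scheduling code: 1 GB is taken as 1024 MB, the 01:00 to 05:00 upload window is a hypothetical reading of "late at night," and all function names are invented for the example.

```python
from datetime import datetime, time


def memory_share(tool_mb: float, phone_ram_gb: float) -> float:
    """Percentage of RAM used by the tool (1 GB taken as 1024 MB)."""
    return 100.0 * tool_mb / (phone_ram_gb * 1024)


def should_upload(now: datetime, on_wifi: bool,
                  window_start: time = time(1, 0),
                  window_end: time = time(5, 0)) -> bool:
    """Upload only inside the (assumed) night window and only over Wi-Fi."""
    in_window = window_start <= now.time() < window_end
    return in_window and on_wifi


def gps_reading_due(seconds_since_last_fix: float,
                    min_interval_s: float = 300.0) -> bool:
    """Allow at most one GPS reading per 5 minutes."""
    return seconds_since_last_fix >= min_interval_s


share_2gb = memory_share(30, 2)   # about 1.5% of a 2 GB phone
share_4gb = memory_share(30, 4)   # about 0.7% of a 4 GB phone
battery_per_hour = 15 / 16        # about 0.94 percentage points of battery per hour
```

On these assumptions, 30 MB is about 1.5% of 2 GB and 0.7% of 4 GB, and a 15% drain over 16 hours averages just under 1 percentage point of battery per hour.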

The EARS tool is hosted on GitHub (GitHub, Inc, San Francisco, CA, USA) on the University of Oregon, Center for Digital Mental Health’s page (GitHub username: C4DMH). It is licensed under the Apache 2.0 open source license, a permissive free software license that enables the free use, distribution, and modification of the EARS tool [53].

Considerations

We must temper our enthusiasm for the exciting possibilities associated with these new types of data with careful consideration of several challenges. First, EARS is currently implemented only on the Android platform. While Android boasts 2 billion users worldwide, some 700 million people use an iPhone [54]. In the interest of eliminating as many sample selection confounding variables as possible, we have begun adapting the EARS tool for the iOS operating system. Given that most app developers begin on one platform, then port their product over to the other, EARS for iOS should match the functionality of EARS for Android; however, variation in software and sensors will likely result in some differences between the versions. The 4.6 billion people on this planet who do not use an Android or iOS smartphone present a much more stubborn challenge. That most of these people live in low- or middle-income countries or in countries outside of Europe and North America gives us pause, as we consider the historical affluent- and white-centric approach of clinical psychology. The steady increase in smartphone adoption around the world will probably reduce the impact of this limitation, but we remain mindful that the EARS tool carries built-in socioeconomic and cultural limitations alongside its passive mobile sensing features.

Those passive mobile sensing features generate significant ethical concerns as well, principal among which are recruitment and enrollment methods and protecting participants’ data. These concerns loom large in mobile health because they reflect the most prominent way in which for-profit app developers exploit smartphone users: app developers bury unsavory content in terms-of-service agreements they know users are unlikely to read, often leveraging these to sell users’ data. Some users are aware of these practices and may greet research using the EARS tool with skepticism. However, in our pilot study, only 2 of 28 screened participants declined further participation or dropped out due to privacy concerns. Nevertheless, this issue warrants attention as we scale up our studies and incorporate more diverse populations. The critical task, we believe, is to carefully assess who is empowered by the data and to clearly convey our priorities and precautions to the user. We value the empowerment of the user (eg, to take better control of their mental health) over the empowerment of a commercial or government entity (eg, acquiring user data without any benefit to the user). We oppose practices that empower others at the expense of the user and uphold respect for the user’s autonomy by insisting on an opt-in model for all applications of the EARS tool. This means that we reject any protocol that employs autoenrollment in its recruitment approach, such as embedding EARS features into an update of an existing app or requiring members of a specific health plan to participate. To date, we have acquired informed consent in person. As we scale our studies up and out, however, we will need to acquire informed consent remotely. To that end, we are developing a feature in the EARS tool to administer and confirm a participant’s informed consent.

Once a participant has opted in, our duty to protect their confidentiality and anonymity grows exponentially. The EARS tool encrypts all data locally on the phone as soon as the user generates them. Those encrypted data arrive in our laboratory via AWS, a US Health Insurance Portability and Accountability Act-compliant commercial cloud service that provides state-of-the-art security in transit. On completion of or withdrawal from a study, a participant’s uninstallation of the EARS tool automatically deletes all encrypted EARS data still residing on the phone. As a critical next step to ensure data privacy as we expand this line of research, we aim to conduct processing and analysis locally, on the participant’s phone, as soon as possible. In our current protocol, during a study, a participant’s encrypted data exist in 3 locations: the phone, the AWS cloud, and our laboratory’s secure server. These 3 storage locations increase the risk to the participant. As phones become more powerful and our research generates optimized data analysis algorithms, we aim to limit participants’ exposure to risk of privacy breach by executing our protocols within the phones themselves. We will take the first step in that direction soon—recent advances in facial recognition and automatic expression analysis software should enable us to locate the selfie scraper entirely on the phone so that only deidentified output will travel via the cloud to our laboratory [55].

Future Directions

We have described in detail the data collection capabilities of the EARS tool. EARS data, however, are only as useful as the research questions they aim to answer. The next steps for our team include (1) testing the EARS tool with a large, representative sample to establish norms for each behavior, and (2) deploying the EARS tool in the next wave of an ongoing longitudinal study of adolescent girls. We are especially eager to collaborate with adolescent participants because they are digital natives, and we believe their data may be especially revealing. We aim to use the data from these 2 studies to determine which passive mobile sensing variables best predict mental health outcomes. As we discover the predictive power of each variable and the variables’ relationships with each other, we will optimize the data pipeline on the backend of the EARS tool to provide meaningful, manageable output for research teams.

Our team built this tool with the goal of predicting and preventing mental health crises such as suicide, but we believe that the EARS tool could serve many uses. The EARS tool could provide rich, observational data in efficacy and effectiveness studies in clinical psychology. It could also index general wellness via fine-grained data on activity, sleep, and mood for public health researchers. Furthermore, if we find that EARS data do predict changes in behavior and mood, medical researchers and health psychologists could use the EARS tool to derive mental and physical health insights. A future version of the EARS tool could provide clinical assessment and actionable feedback for users, including reports that a user could be encouraged to share with their primary care provider. We imagine exciting applications for social and personality psychologists to test self-report and laboratory findings against unobtrusive, ecologically valid behavioral data. Developmental psychologists, especially those who study adolescence, may also find that the EARS tool provides particularly rich insights, given adolescents’ extensive use of mobile computing and their status as digital natives. These applications of the EARS tool depend on rigorous signal processing, exploratory analysis, hypothesis testing, and machine learning methods. The potential is huge, and the work calls for the creative contributions of researchers from myriad areas of study.

Conclusion

Engineers, mobile phone programmers, psychologists, and data scientists have done extraordinary work over the last decade, the sum of which could revolutionize mental health care. We believe that passive mobile sensing could be the catalyst of that revolution. The EARS tool offers an innovative approach to passive mobile sensing by emphasizing the centrality of a person’s social life to their well-being. We built the EARS tool to power cutting-edge research, with the ultimate goal of leveraging individual big data to empower people.

Acknowledgments

This work was supported by a grant from the Stress Measurement Network, US National Institute on Aging (R24AG048024). MLB is supported by a US National Institute of Mental Health K01 Award (K01MH111951). The authors would like to thank Benjamin W Nelson and Melissa D Latham for their contributions to this manuscript. The authors also thank Liesel Hess and Justin Swanson for their early contributions to the programming of the EARS tool. Finally, the authors thank the Obama White House 2016 Opportunity Project for facilitating the further development of the EARS tool.

Abbreviations

- AES: Advanced Encryption Standard

- AWS: Amazon Web Services

- EARS: Effortless Assessment of Risk States

- EASE: Effortless Assessment of Stressful Experiences

- GPS: global positioning system

- RADAR-CNS: Remote Assessment of Disease and Relapse – Central Nervous System

- SMS: short message service

- SSAID: secure device identification

Comparison of the 12 most feature-rich, research-grade, passive mobile sensing apps.

Footnotes

Conflicts of Interest: None declared.

