Abstract

Selected abstracts were presented as oral presentations within the conference programme; the remaining accepted abstracts were presented as electronic posters (ePosters).

Abstracts selected as oral presentations

OP.001

Supporting the patient innovator: Developing a novel communication device for tracheostomy patients in the ICU

Fiona Howroyd1, Ruth Capewell1 and Charlotte Small1

1Queen Elizabeth Hospital, Birmingham, UK

Abstract

F Howroyd, R Capewell, C Small, D Buckley, L Buckley, V Shingari, C Qian, D McWilliams, C Dawson, C Snelson (2017)

The inability of Duncan Buckley and his wife, Lisa-Marie, to communicate effectively while he had a tracheostomy on the Intensive Care Unit (ICU) had such a profound impact on them that they developed a concept for a novel interactive communication device, called ICU CHAT. Together, they have been embedded within the multidisciplinary ICU research team at the Queen Elizabeth Hospital Birmingham (QEHB), supported by the Human Interface Technologies team from the University of Birmingham and funded by the National Institute for Health Research Surgical Reconstruction and Microbiology Research Centre, to develop their prototype further for clinical trial.

The team followed a human-centred design process (1), with the following stages:

1. Literature review of Augmentative and Alternative Communication (AAC) devices for patients with a tracheostomy on the ICU.

2. Bench testing with ICU survivors, family members and staff.

3. Multidisciplinary development of a clinical trial protocol.

The review determined that, although a range of AAC devices is available on the market, there are practical limitations to their use. The current literature does not explore factors relating to device requirements and patient usability in the ICU.

Bench testing established the safety and appropriateness of system components by combining usability assessment with qualitative appraisal. Testing showed that both patients and staff preferred a tablet/laptop-sized screen, attached to a mobile stand, as the visual display. The interface with the best usability ratings was the camera mouse: laptop software that tracks facial gestures to control an on-screen cursor.

The clinical trial protocol “Feasibility of the use of a novel interactive communication device, ICU-CHAT, for patients with tracheostomy on the ICU” has secured Health Research Authority permissions and is open to recruitment at QEHB.

This early translational research demonstrates the ability of clinical and academic teams to support potential patient innovators, whose reflections on their experiences during an ICU stay enable technology development to be truly patient-centred. The structured process used by the research group optimises the usability engineering process (2) and facilitates regulatory approval prior to rigorous evaluation via clinical trial.

Bibliography

- 1. BSI (2010) Ergonomics of human-system interaction – Part 210: Human-centred design for interactive systems (ISO 9241-210:2010). London: British Standards Institution.

- 2. IEC (2007) IEC 62366:2007 Medical devices – Application of usability engineering to medical devices. Geneva: International Electrotechnical Commission.

OP.002

The burden of early oliguria in critical illness

Neil Glassford1,2, Johan Mårtensson3, David Garmory1, Glenn Eastwood1,2, Michael Bailey2 and Rinaldo Bellomo1,4

1Department of Intensive Care Medicine, Austin Health, Melbourne, Australia

2ANZICS-RC, School of Public Health and Preventative Medicine, Monash University, Melbourne, Australia

3Section of Anaesthesia and Intensive Care Medicine, Department of Physiology and Pharmacology, Karolinska Institutet, Stockholm, Sweden

4School of Medicine, University of Melbourne, Melbourne, Australia

Abstract

Early oliguria (EO) during the first 24 h of intensive care unit (ICU) admission is more likely to be related to the reason for admission than to subsequent complications or therapeutic intervention, and so may be a more accurate predictor of outcome than later estimates of urine output (UO). Moreover, the accumulation of a number of discontinuous hours of EO may offer an earlier indication of renal dysfunction than the fixed periods common to modern definitions of acute kidney injury (AKI).

We used electronic patient record data, including hourly fluid balance information, to explore EO in terms of severity and duration, and to test whether patients accumulating hours of EO differ in demographics, process of care and outcomes from those who do not. We also developed statistical models to assess the predictive value of EO.

We studied 1911 patients: 61.6% were male, the median age was 65 (IQR: 51.3–74.7) years and the median APACHE III score was 56 (IQR: 42–72). Over the first 24 h of ICU admission, 1215 (63.6%) patients experienced EO, defined as a cumulative total of ≥4 hours of oliguria (urine output <30 ml/h). Of these, 191 (15.7%) were exposed to EO within 6 hours of admission. Patients in the EO group were more severely ill, required significantly more interventions and had higher ICU and hospital mortality (Table 1). EO was independently associated with ICU and hospital mortality after comprehensive composite adjustment (adjusted OR per hour of EO 1.05, 95% CI: 1.02–1.08, p = 0.003, and 1.04, 95% CI: 1.02–1.07, p = 0.001, respectively).
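The EO definition above (a cumulative, not necessarily consecutive, total of at least 4 hours with urine output below 30 ml/h during the first 24 h) and the per-hour odds ratio can be made concrete with a short Python sketch. It is illustrative only, not the authors' analysis code: it assumes one urine output value per hour, indexed from ICU admission with no missing hours, and all function names are hypothetical. The last function assumes that hours of EO entered the model as a linear term on the log-odds scale, so that the OR for k additional hours is the per-hour OR raised to the power k.

```python
from typing import Sequence

# Thresholds taken from the abstract's EO definition.
OLIGURIA_ML_PER_H = 30.0   # an hour is oliguric if urine output < 30 ml/h
EO_MIN_HOURS = 4           # EO requires a cumulative total of >= 4 oliguric hours
WINDOW_H = 24              # only the first 24 h after ICU admission count


def oliguric_hours(hourly_uo_ml: Sequence[float]) -> int:
    """Count oliguric hours in the first 24 h; the hours need not be consecutive.

    Assumes (hypothetically) one urine output value in ml per hour, from admission.
    """
    return sum(1 for uo in hourly_uo_ml[:WINDOW_H] if uo < OLIGURIA_ML_PER_H)


def has_early_oliguria(hourly_uo_ml: Sequence[float]) -> bool:
    """True if the cumulative oliguric hours reach the EO threshold."""
    return oliguric_hours(hourly_uo_ml) >= EO_MIN_HOURS


def odds_ratio_for_hours(or_per_hour: float, hours: int) -> float:
    """OR for `hours` extra hours of EO, assuming a log-linear per-hour effect."""
    return or_per_hour ** hours


if __name__ == "__main__":
    # Hypothetical patient: four scattered oliguric hours in an otherwise normal day.
    uo = [50.0] * 24
    for hour in (2, 7, 8, 15):
        uo[hour] = 20.0
    print(oliguric_hours(uo), has_early_oliguria(uo))  # 4 True
    # ICU mortality OR for 10 hours of EO under the reported per-hour OR of 1.05
    print(round(odds_ratio_for_hours(1.05, 10), 2))    # 1.63
```

Counting hours rather than requiring a continuous run is what distinguishes this measure from the fixed periods used in AKI definitions.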

We identified that EO accumulation is a common occurrence in patients admitted to the ICU for 24 h or more. The early burden of oliguria appears to be an important and novel additional predictor of outcome in critically ill patients.

OP.003

Long term consequences of acute kidney injury in survivors of critical illness – a national population-based cohort study

Steven Tominey1, Robert Lee2, Timothy S Walsh3,4 and Nazir Lone2,4

1University of Edinburgh Medical School, Edinburgh, UK

2Usher Institute of Population Health Sciences and Informatics, Edinburgh, UK

3MRC Centre for Inflammation Research, Edinburgh, UK

4University Department of Anaesthesia, Critical Care, and Pain Medicine, Edinburgh, UK

Abstract

Background: During critical illness, acute kidney injury (AKI) is common, and a proportion of patients require renal replacement therapy (RRT). Little research has been published on the long-term consequences of kidney injury in ICU survivors. We aimed to evaluate the association between AKI and both mortality and emergency hospital readmission at one year following discharge in a complete five-year cohort of Scottish ICU survivors. Additionally, we aimed to explore the causes of both outcomes.

Table 1.

Characteristics and outcomes of patients with early oliguria.

| | Non-EO | EO | p-value |
|---|---|---|---|
| Patients, n | 696 | 1215 | |
| Age, years | 62.95 (50.43–73.24) | 66.35 (51.47–76.01) | 0.004 |
| Male | 487 (69.97%) | 691 (56.87%) | <0.001 |
| APACHE III score | 54 (40–67) | 58 (43–75) | <0.001 |
| Surgical admission | 319 (45.83%) | 617 (50.78%) | 0.08 |
| IPPV during admission | 510 (73.28%) | 872 (71.77%) | 0.49 |
| IPPV at admission | 481 (94.31%) | 763 (87.5%) | <0.001 |
| Duration of IPPV, hours | 17.35 (8.82–49.25) | 24.32 (11–82.03) | <0.001 |
| Baseline creatinine, µmol/l | 80 (63–110) | 78 (60–116) | 0.33 |
| KDIGO AKI stage 3 at 24 h | 12 (1.7%) | 122 (10%) | <0.001 |
| KDIGO AKI stage 3 at day 7 | 24 (3.5%) | 172 (14.2%) | <0.001 |
| CRRT during admission | 14 (2%) | 127 (10.5%) | <0.001 |
| ICU mortality | 30 (4.31%) | 111 (9.14%) | <0.001 |
| Hospital mortality | 51 (7.33%) | 175 (14.42%) | <0.001 |

Methods: Participants were identified from the Scottish Intensive Care Society Audit Group database (01/01/2009–31/12/2013; n = 33,764) and linked to national hospital and death records. Those with end-stage renal disease requiring dialysis were excluded. Exposures: primary, receipt of RRT; secondary, AKI derived using modified-RIFLE criteria. Outcomes: 1-year mortality and emergency hospital readmission. Exploratory analyses were undertaken to examine causes of mortality and of first emergency readmission. Associations between potential risk factors and outcomes were estimated using univariable and multivariable Cox regression, the latter to adjust for potential confounders.

Results: Of 33,764 participants, 2,137 (6.33%) required RRT and 4,817 (13.96%) developed AKI defined by modified-RIFLE criteria. RRT was associated with increased crude 1-year mortality (10.90% vs 8.34%; HR = 1.33, 95% CI: 1.16–1.52, p < 0.001), as was AKI (10.92% vs 8.38%; HR = 1.33, 95% CI: 1.21–1.46, p < 0.001). After adjustment for potential confounders, these associations were no longer significant (RRT adjHR = 1.08, 95% CI: 0.93–1.26, p = 0.297; AKI adjHR = 0.96, 95% CI: 0.86–1.07, p = 0.456). However, both RRT (47.03% vs 40.30%; adjHR = 1.09, 95% CI: 1.01–1.17, p = 0.022) and AKI (47.50% vs 40.02%; adjHR = 1.08, 95% CI: 1.03–1.13, p = 0.003) were associated with an increased risk of 1-year emergency readmission that persisted after adjustment. The causes of death and emergency readmission differed between those who received RRT and those who did not. Specifically, there were increases in renal and endocrine causes of mortality and readmission, particularly acute and chronic kidney disease and diabetes mellitus. For 1-year emergency readmission, receipt of RRT was additionally associated with an increase in gastrointestinal causes.

Conclusions: This large, population-level cohort study has demonstrated that receipt of RRT is associated with a small increase in the risk of 1-year emergency readmission but not of mortality. These findings will help clinicians and patients understand the long-term consequences associated with AKI and receipt of RRT during an ICU stay. The increased risk of readmission may indicate that hospital discharge policies need to be enhanced to reduce the risk of emergency readmission in patients receiving RRT.

Acknowledgements: Funding: Medical Research Scotland. We wish to thank SICSAG for providing data and the staff at participating hospitals.

OP.004

Rib fractures: Elderly patients receive lower standards of care than younger patients

Neil Roberts1, Emma Harrison1, James Butler1, Julia Gibb2, Rebecca Norman2, Jonathan Outlaw2, Jonathan Abeles2, Laura Shepherd1, Ruth Creamer1 and Ben Warrick1

1Royal Cornwall Hospitals Trust, Truro, UK

2University of Exeter Medical School, Truro, UK

Abstract

Background: Rib fractures represent a significant proportion of the trauma seen in Emergency Departments and are often associated with lung injury, such as contusion or haemopneumothorax. Analgesia and respiratory support are the cornerstones of management. There is an increasing frailty burden in healthcare, and elderly patients have previously been shown to receive poorer head injury care than younger patients. This audit examined whether the same is true for rib fractures.

Methods: Retrospective audit of all adult patients with rib fractures from primary traumatic events who were admitted for active treatment to a district general hospital over a 6-month period (July–December 2015). Patients were identified through TARN, the WebPACS imaging system and the emergency department software database; these sources were cross-referenced, and imaging and notes were then reviewed. Demographics and injury characteristics were recorded, along with markers of care, such as level of trauma call, maximum imaging, critical care admission and use of advanced analgesia (epidural or patient-controlled analgesia), and outcomes including length of stay (LOS) and 30-day mortality.

Results: Forty-three patients were identified for inclusion after review of 2461 imaging reports and 58 sets of notes; 15 (35%) had not been captured by TARN. Seventeen (40%) had a low-energy mechanism (fall <2 m). The median age was 67 years (range 32–96) and the median number of fractures was 5 (range 1–22). Eight (19%) had a flail chest. The median hospital LOS was 6 days (range 1–25) and the median Charlson Comorbidity Index was 4 (range 0–11). Median ISS peaked at 16.5 in those aged 51–60 but was still 9 in those aged 81–90 and 6 in those aged 91–100. Overall 30-day mortality was 11.6%. Hospital trauma calls decreased from 75% in those aged 51–60 to 0% in those aged 91–100, with ‘no trauma call’ increasing from 25% to 100%. Full trauma CT decreased from 88% to 25% across these age groups, with 75% of those aged 91–100 having only a chest X-ray as maximum imaging. Primary critical care admission decreased from 50% in those aged 61–70 to 0% in those aged 91–100. Elderly patients were less likely to receive advanced analgesia, and median LOS increased with age. Thirty-day mortality was 43% in those aged 81–90 and 50% in those aged 91–100; no patients in other age groups died.

Conclusions: Elderly patients receive less aggressive, lower-standard care and have higher mortality and longer LOS despite less severe injuries. Earlier recognition of these injuries may facilitate improved care pathways and outcomes. Hospitals should have a lower threshold for hospital trauma calls and trauma scans in elderly patients.

OP.005

Socioeconomic status is associated with 30-day mortality after injury: A cross-sectional analysis of national TARN data

Philip McHale1, Daniel Hungerford1, Tim Astles2 and Ben Morton2,3

1University of Liverpool, Liverpool, UK

2Aintree University Hospital NHS Foundation Trust, Liverpool, UK

3Liverpool School of Tropical Medicine, Liverpool, UK

Abstract

Introduction: The relationship between socioeconomic status and mortality is well defined across multiple pathologies. However, the relationship between deprivation and survival from trauma is less clear: there is substantial evidence of a social gradient in injury risk but contradictory evidence of a gradient in mortality. To address this issue, we analysed this relationship using data from the Trauma Audit and Research Network (TARN).

Methods: We obtained TARN data for patients admitted to hospitals in England and Wales with trauma between January 2015 and January 2016 (TARN identifies patients through Hospital Episode Statistics). This dataset includes Injury Severity Score (ISS), demographics, four-digit postcode and 30-day mortality. Using 30-day mortality as a binary outcome, we performed multiple logistic regression with age group, sex and ISS (categorised as minor <15, major 15–24 and severe ≥25). Additional analysis was performed with injuries stratified into minor and major categories using an ISS threshold of 15. National quintiles of deprivation were constructed from the four-digit postcode using area-weighted Lower Super Output Area Index of Multiple Deprivation (IMD) scores.
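The ISS banding and the construction of deprivation quintiles described above can be sketched in Python as follows. This is an illustration under stated assumptions, not TARN's or the authors' method: the function names are hypothetical, each postcode district is assumed to be summarised as the area-weighted mean of the IMD scores of the LSOAs it overlaps, higher IMD scores are taken to mean greater deprivation, and quintile cut points are assumed to come from a single national list of district scores.

```python
from bisect import bisect_right
from statistics import quantiles
from typing import Sequence, Tuple


def iss_band(iss: int) -> str:
    """Band ISS as in the main model: minor <15, major 15-24, severe >=25."""
    if iss < 15:
        return "minor"
    if iss < 25:
        return "major"
    return "severe"


def area_weighted_imd(overlaps: Sequence[Tuple[float, float]]) -> float:
    """Area-weighted mean IMD score for one four-digit postcode district.

    Each tuple is (IMD score of an LSOA, area of that LSOA inside the district).
    """
    total_area = sum(area for _, area in overlaps)
    return sum(score * area for score, area in overlaps) / total_area


def deprivation_quintile(score: float, national_scores: Sequence[float]) -> int:
    """Return 1 (least deprived) to 5 (most deprived) against national cut points."""
    cut_points = quantiles(national_scores, n=5)  # four cut points
    return bisect_right(cut_points, score) + 1


if __name__ == "__main__":
    print(iss_band(9), iss_band(16), iss_band(34))   # minor major severe
    district = area_weighted_imd([(10.0, 1.0), (30.0, 3.0)])
    print(district)                                    # 25.0
    national = [float(x) for x in range(1, 101)]      # hypothetical scores
    print(deprivation_quintile(district, national))   # 2
```

In this banding, the stratified analysis's single threshold of 15 corresponds to merging the ‘major’ and ‘severe’ bands.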

Results: There were 52,422 patients admitted to hospitals in England and Wales with trauma. Compared with patients from the least deprived quintile, those from the most deprived quintile were significantly more likely to die within 30 days. Patients in the other quintiles were also significantly more likely to die, although there was no clear social gradient. Increasing ISS, increasing age and male sex were also associated with increased mortality. Stratified analysis showed that sex and IMD were not significantly associated with mortality for major injuries but were both significant for minor injuries. The adjusted odds ratio for those in the most deprived areas was 1.39 compared with those from the least deprived (p < 0.001).

Conclusion: The results show that deprivation was associated with mortality only in minor injuries. This may be because, in major injuries, the strong effect of injury severity outweighs any effect of deprivation (or other demographics) on mortality. Alternatively, the greater resources available for the care of major injuries may ameliorate the effect of demographics. Targeting older patients with minor trauma from more deprived backgrounds for preventative interventions, and considering clinical practice pathways (e.g. increased orthogeriatrician input), could potentially improve outcomes for these patients.

Oral presentation in session 39 of the conference programme – Abstract ID: 0405

Agitation bubble contrast (ABC) – a novel sonographic sign for diagnosing free intraperitoneal gas in the presence of peritoneal free fluid

Emese Kinga Gaal1, Theophilus Samuels1 and Matyas Andorka1

1Surrey and Sussex Healthcare NHS Trust, Redhill, UK

Abstract

Introduction: Free intraperitoneal gas can be demonstrated using either an erect chest radiograph or a computed tomography (CT) scan. Obtaining these investigations can be difficult and may expose a critically ill patient to the stresses of intra-hospital transfer.

Bedside sonography is an extremely useful tool. After completing non-specialist training, such as the Core Ultrasound Skills in Intensive Care (CUSIC) accreditation, the operator can diagnose significant pathology at the bedside.

We present a novel sonographic sign that removed the need to perform a radiological investigation for free intraperitoneal gas.

Methods: We describe the case of a 59-year-old man admitted with severe hospital-acquired pneumonia. He deteriorated significantly, developing severe metabolic acidosis and requiring increasing vasopressor support and invasive ventilation. On day 3 after admission, he developed abdominal tenderness, and the urgent surgical review requested a CT scan. While preparing for the CT transfer, we performed a focused bedside ultrasound scan (Sonosite X-Porte, Fujifilm).

Results: Bedside ultrasonography confirmed hypovolaemia, left lower lobe pneumonia, and free fluid with a gas artefact above the liver showing an oscillating gas-fluid interface.

Differential diagnoses for the gas artefact were aerated lung at the anterior costophrenic angle, free peritoneal gas or a gas-filled bowel loop; aerated lung with lung sliding was visible cephalad to this gas artefact (1). We hypothesised that applying a ballottement technique to the right side of the abdomen, after appropriate analgesia, would generate bubbles in the free peritoneal fluid.

Swirling of bubbles in the peritoneal fluid confirmed the suspected free peritoneal gas; this would not have occurred had the gas artefact originated from a gas-filled bowel loop (Figure 1; permission to use the image was given by the next of kin).

Discussion: The result was discussed with the surgical team, and the patient was taken directly to the operating theatre. Exploratory laparotomy confirmed free peritoneal fluid and revealed a duodenal perforation.

While radiological investigations may be superior to bedside sonography for detecting free air, by using this novel manoeuvre, which to the best of our knowledge has not been previously described, we managed to avoid unnecessary delay and radiation exposure.

Conclusion: The agitation bubble contrast manoeuvre can be a useful tool to confirm free peritoneal gas sonographically in the presence of free abdominal fluid.

Reference

- 1. Goudie A. Detection of intraperitoneal free gas by ultrasound. Australasian Journal of Ultrasound in Medicine 2013; 16: 56–61.

Abstracts selected for ePoster presentations

EP.001

Delayed donation after brain death: Concept and support level by Flemish donor coordinators

Karen Embo1, Willem Stockman2, Piet Lormans1 and Johan Froyman2

1AZ Delta, Roeselare, Belgium

2AZ Delta Roeselare, Roeselare, Belgium

Abstract

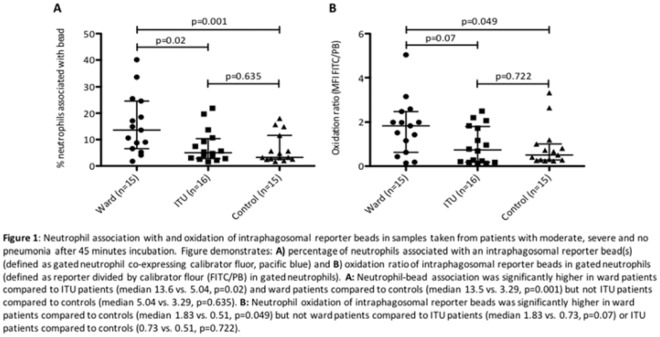

Objective: In organ donation practice, demand exceeds supply, so new techniques and concepts are being explored. Delayed DBD (donation after brain death) is a concept in which stabilising care is intentionally continued in a potential donor who has not yet reached the point of brain death. It concerns patients with evolving intracranial pathology for whom further therapy is futile and who are eligible for donation. By prolonging care, the retrieval procedure can be converted from a DCD (donation after circulatory death) to a DBD procedure. The objective is to extend the donor pool, increase the number of organs per donor and improve organ quality by reducing total ischaemia time.

Figure 1.

A: fluid-gas interface (white arrow), B: bubbles after ABC manoeuvre (white arrows).

Delayed DBD was defined as a DBD procedure in which donor management (the time between establishing futility of further therapy and the declaration of brain death) exceeded 24 hours.
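The 24-hour threshold in this definition can be expressed as a simple check on two timestamps. The sketch below is illustrative; the function name and example times are hypothetical, and it reads ‘exceeds’ as strictly greater than 24 hours.

```python
from datetime import datetime, timedelta

# Threshold from the definition above; "exceeds" is read as strictly greater than.
DELAYED_DBD_THRESHOLD = timedelta(hours=24)


def is_delayed_dbd(futility_established: datetime, brain_death_declared: datetime) -> bool:
    """True if donor management time (futility established -> brain death declared) exceeds 24 h."""
    donor_management_time = brain_death_declared - futility_established
    return donor_management_time > DELAYED_DBD_THRESHOLD


if __name__ == "__main__":
    futility = datetime(2017, 3, 1, 8, 0)  # hypothetical timestamps
    print(is_delayed_dbd(futility, datetime(2017, 3, 2, 10, 0)))  # True (26 h)
    print(is_delayed_dbd(futility, datetime(2017, 3, 1, 20, 0)))  # False (12 h)
```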

Of course, this can only be carried out with the full support of staff and of the potential donor's family.

Belgium is a member of Eurotransplant. Each participating hospital appoints two donor coordinators (one nurse, one physician) who are responsible for all aspects of organ donation. With this study, we aimed to introduce the concept to all Flemish donor coordinators, measure their support for it and identify difficulties.

Methods: An electronic survey was designed and distributed. The concept was explained, followed by a questionnaire to investigate support and concerns.

Results: Ninety-four coordinators received the survey. The response rate was 38% (36 respondents: 18 nurses, 18 physicians). Overall, 25 (69.4%) were supportive, 8 (22.2%) neutral and 3 (8.3%) dismissive. A chi-square test showed no difference in support between nurses and physicians (p > 0.05). Twenty-one (58.3%) thought this policy could be explained to the donor's family and 4 (11.1%) did not. Twenty-eight (77.8%) found it defensible within their team and 5 (13.9%) did not. Most coordinators, 33/36 (91.7%), found the policy justifiable towards the donor; 1 (2.8%) did not. The cost of extra ICU admission days was not an issue for 23 coordinators (63.9%), whereas 5 (13.9%) considered it a problem. Six (16.7%) found the occupation of an ICU bed problematic in view of other admissions; 25 (69.4%) did not.

Conclusion: Most donor coordinators were supportive of the delayed DBD practice. Difficulties were mainly reported in explaining the policy towards the family.

Potential response bias must be noted: respondents may be more engaged and might respond more enthusiastically to new concepts in organ donation.

EP.003

Intensive Care staff perceptions of palliative care delivery in their Intensive Care Units

Manon Lewis1

1St George's University Hospitals NHS Foundation Trust, London, UK

Abstract

Background: End of life discussions occur daily in most intensive care units (ICUs). This work aimed to explore perceptions of the delivery of end of life care (EoLC) in the ICU, to identify staff members' feelings regarding palliation, and to establish whether EoLC is perceived to be delivered well.

Methods: Forty-seven staff members from three adult intensive care units within one hospital (General, Neuro and Cardiothoracic ICU) participated in a structured interview. Intensive care doctors and nurses of all grades and levels of experience were included. Data were collected and then categorised into response themes to identify trends.

Results: Forty-six participants (98%) felt EoLC was part of the ICU's responsibility, and 34 participants (72%) reported feeling comfortable and competent managing EoLC.

Twenty-two participants (47%) would seek advice from the hospital palliative care team (PCT).

Thirty-four participants (72%) believed EoLC is delivered well on their ICUs.

Discussion: The main theme identified was a perceived delay in active withdrawal of treatment. Reasons for this included varying EoLC experience amongst doctors. Delays were also attributed to a perceived reluctance among senior staff to withdraw care from patients whose families were opposed to palliation for cultural or religious reasons. Although this unease was clear, there was no mention of litigation or complaints. Numerous nursing staff reported a lack of timely DNACPR form completion, clear ceilings of care and timely withdrawal plans.

Secondly, staff were resistant to reducing physiological monitoring during withdrawal. Multiple staff members suggested this reluctance was related to the stigma of the Liverpool Care Pathway. Staff felt that, without numerical values, symptoms were inadequately assessed. No member of staff referred to the ICU end of life qualitative symptom chart that is available on the unit.

Finally, there was discordance regarding referral to palliative medicine. Some staff members looked directly to the PCT for advice on EoLC, whereas others would seek guidance within the ICU. This discordance may be related to a lack of evidence-based gold standards for EoLC in the ICU.

Conclusion: Improving EoLC in the ICU requires better guidance and education to empower senior decision making. Principle-based, rather than tick-box, guidance may resolve the unease related to end of life care and allow sufficient flexibility to individualise palliation for complex ICU patients.

This guidance should combine palliative care and ICU withdrawal gold standards to deliver excellent, evidence-based, standardised care.

EP.004

The rule of 3s – three factors that triple the likelihood of families overriding first person consent for organ donation in the UK

James Morgan1, Paul Murphy2,3, Dale Gardiner3,4, Cathy Hopkinson5, Cathy Miller5 and Olive McGowan5

1Yorkshire Deanery, Leeds & Bradford School of Anaesthesia, UK

2Leeds Teaching Hospitals NHS Trust, Leeds, UK

3NHS Blood and Transplant, London, UK

4Nottingham Teaching Hospitals NHS Trust, Nottingham, UK

5NHS Blood and Transplant, Bristol, UK

Abstract

Between 1 April 2012 and 31 March 2015, 263 of the 2244 families in the UK whose relatives had registered on the NHS Organ Donor Register to donate organs for transplantation after their death chose to override this decision, an override rate of 11.7%. Multivariable logistic regression analysis was applied to data relating to various aspects of the family approach to identify factors associated with such overrides. The factors associated with family overrides were failure to involve the Specialist Nurse for Organ Donation (SNOD) in the family approach (odds ratio [OR] 3.0), donation after circulatory death (OR 2.7) and ethnicity (OR 2.7). This adds to the body of data linking involvement of the SNOD in the family approach to improved UK consent rates, and suggests that, from the family's perspective, there may be fundamental differences between donation after brainstem death and donation after circulatory death.
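The override rate, and the way odds ratios from a multivariable logistic regression combine, can be checked with a few lines of Python. This is an illustrative sketch: the function names are hypothetical, and the combined figure assumes the model had no interaction terms (the abstract does not say), in which case the ORs multiply on the odds scale. Odds ratios approximate risk ratios only when the outcome is uncommon.

```python
from math import prod


def override_rate(overrides: int, registered_families: int) -> float:
    """Proportion of families who overrode a registered decision to donate."""
    return overrides / registered_families


def combined_odds_ratio(*odds_ratios: float) -> float:
    """Joint OR for several factors present together, assuming no interaction terms."""
    return prod(odds_ratios)


if __name__ == "__main__":
    print(f"{override_rate(263, 2244):.1%}")             # 11.7%
    # No SNOD involvement, DCD and the ethnicity factor all present (illustrative only)
    print(round(combined_odds_ratio(3.0, 2.7, 2.7), 1))  # 21.9
```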

Keywords: Organ donation, donation after brainstem death, donation after circulatory death, organ donor register, organ transplantation

EP.005

Factors affecting organ donation status of University students: A cross-sectional study of a large University in the UK

Joe Alderman1,2,3 and Andrew Owen1,2

1College of Medical and Dental Sciences, University of Birmingham, Birmingham, UK

2Critical Care Unit, Queen Elizabeth Hospital, University Hospitals Birmingham NHS Foundation Trust, Birmingham, UK

3City Hospital Birmingham, Sandwell & West Birmingham Hospitals NHS Trust, Birmingham, UK

Abstract

Introduction: Nearly 500 people die each year in the UK while awaiting an organ transplant, and the current waiting list consists of over 6400 people (1). Much progress has been made in recent years to improve donation rates, which have increased by 20% since 2011/12 (2). Despite recent successes, there remain significant problems with the availability of donor organs in the UK, particularly in the black and minority ethnic population, who make up 11.9% of the UK population but just 5.8% of the organ donor register (3,4). Ethnicity and religion are linked, with far greater ethnic diversity in Muslim and Buddhist communities than among Christians or those with no religion (5). This project aimed to understand the factors affecting University of Birmingham students' donor status and to assess opinions regarding an ‘opt-out’ system for organ donation.

Methods: Following an initial pilot phase, a questionnaire was distributed via email, social media and message boards to students at the University of Birmingham, UK, between 2012 and 2014. Demographic data and organ donor status were collected, and the degree of agreement with specific statements was assessed using a five-point Likert scale. The results were analysed using Microsoft Excel and SPSS (v24; IBM). Likert data were displayed as stratified, horizontally displaced bar graphs, with red right-shifted bars indicating disagreement and green left-shifted bars indicating agreement.
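The horizontally displaced (diverging) bar layout described above can be sketched as a computation of segment positions for one Likert item. This is an assumption-laden illustration, not the authors' SPSS or Excel output: the function name is hypothetical, counts are assumed to be ordered from ‘strongly disagree’ to ‘strongly agree’, and the neutral share is assumed to straddle zero evenly, which the abstract does not specify. Following the abstract, agreement extends to the left of zero (green) and disagreement to the right (red).

```python
from typing import List, Sequence, Tuple


def diverging_segments(counts: Sequence[int]) -> List[Tuple[str, float, float]]:
    """Return (level, start, end) positions, in percent of respondents, for one item.

    `counts` is ordered: strongly disagree, disagree, neutral, agree, strongly agree.
    Agreement is drawn left of zero and disagreement right of zero; the neutral
    share straddles zero (an assumption about how neutral responses were shown).
    """
    total = sum(counts)
    sd, d, neutral, a, sa = (100.0 * c / total for c in counts)
    half = neutral / 2
    return [
        ("strongly agree", -(half + a + sa), -(half + a)),
        ("agree", -(half + a), -half),
        ("neutral", -half, half),
        ("disagree", half, half + d),
        ("strongly disagree", half + d, half + d + sd),
    ]


if __name__ == "__main__":
    # Hypothetical item answered by 100 students
    for level, start, end in diverging_segments([10, 20, 20, 30, 20]):
        print(f"{level:>17}: {start:6.1f} to {end:6.1f}")
```

Centring every item on the same zero line lets the balance of agreement and disagreement be compared across statements at a glance.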

Results: Overall, 749 responses were returned, of which 498 (66%) were from female students. In total, 477 (63.7%) respondents were donors, substantially higher than the national average of 36% (2); 203 (27.1%) were not donors and 68 (9.2%) were unaware of their status. Subset analysis indicated that non-religious students had a high donor rate (70.4%, n = 406), whereas religious students taken as a group had a lower donor rate (55.2%, n = 179). Further analysis by religion was limited by small numbers but indicated variation in donor rates between respondents of different religions.

Over half of respondents provided their postcodes. Deprivation index correlated moderately strongly with donor propensity (Pearson R = 0.501; R² = 0.251).

Overall, 74% of respondents would be in favour of an opt-out system, though this was reduced to 57% in those who were not organ donors.

Conclusion: This study, though limited, provides evidence of divergent opinions regarding organ donation between students based on their religious affiliation. Given the aforementioned deficit in donation amongst minority ethnic groups within the UK, further work is warranted to understand and overcome perceived barriers to donation.

References: Redacted. Available via email on request.

EP.006

Evaluating unintentional nasogastric tube displacement in critically ill patients

Helen Prescott1, Hayley Prior1, Alexander Sykes2, Eloise Shaw2, Tom Beadman2, Cara Valente2 and Gareth Gibbon1

1Nottingham University Hospitals NHS Trust, Nottingham, UK

2University of Nottingham Medical School, Nottingham, UK

Abstract

Introduction: The use of nasogastric tubes (NGTs) in critical care is routine. However, their dislodgement is not uncommon and risks patient harm. Within the Nottingham University Hospitals Critical Care (NUHCC) department, there is concern about the frequency of unintentional NGT displacement (UND), yet there is no consensus on the best method for securing NGTs. Adhesive dressings and nasal clips tend to be used first line, with looped systems reserved for patients deemed at increased risk of UND. In this study we sought to capture UND events in real time and to examine the governance and management of NGTs within our critical care areas.

Methods: Patients within the NUHCC department were reviewed at multiple time points (typically 3 times per week) over a 15-week period, and the following were recorded: presence or absence of an NGT; method of securing the NGT; incidence of UND during the preceding 48 hours; nasal pressure damage; and completion of the NGT care plan. For 6 weeks of the study, at the same time as data collection, nursing staff were asked about the types of patients that may benefit from a looped securing system. The number of adverse events captured by our study was compared with the number recorded on the Trust’s incident reporting system.

Results: On average, two thirds of our patients had an NGT in situ at any one time. Of the 211 patients with NGTs reviewed during the study period, 31 (15%) experienced at least one UND. Thirty-six patients (17%) had a looped securing system for part of their admission; just 3 of these (8%) experienced UND while the looped system was definitely known to be in place. Email reminders and posters helped increase knowledge amongst nursing colleagues about the types of patient that may benefit from a looped system but had little effect on the percentage of NGT care plans completed (mean 73%). Our manual data collection identified more episodes of UND than the Trust’s incident reporting system; the converse was true for pressure damage.

Conclusions: The majority of patients admitted to the NUHCC department have an NGT inserted, and at least 15% of these will experience one or more UNDs. The incidence of UND in patients with looped systems may be lower, but numbers are small. Simple interventions improved nursing colleagues' knowledge of “at risk” patients but did not affect completion of NGT care plans. The Trust’s incident reporting system is well utilised for recording pressure damage but not UND.

EP.007

Sodium Administration in the ICU

Nick Tilbury1, Ian Lyons1 and Gareth Moncaster1

1Kings Mill Hospital, Mansfield, UK

Abstract

Introduction: Dysnatraemias are common in critically ill patients, both on admission (1) and during ICU stay (2), and are associated with increased morbidity and mortality (1,2). While particular care is taken with fluid balance, total sodium intake is often not considered, although it may be associated with respiratory dysfunction (3). We audited the total sodium intake of patients on our ITU.

Methods: Thirty patients were reviewed. Data were obtained from prescription charts, observation charts and patient notes for a 24-hour period within our intensive care unit. Data on the sodium content of medications were obtained from their summaries of product characteristics.

Results: Eleven of thirty patients (36.7%) received Level 2 care, and the remainder received Level 3 care. Twenty-two patients (73.3%) received more than the WHO recommended maximum daily intake of sodium (2 g). The mean total sodium intake was 2.8 mmol/kg (63.4 mg/kg). A substantial proportion of sodium came from intravenous medications, with a mean sodium dose of 1.2 mmol/kg; of this, 0.6 mmol/kg (52.1%) came from the solvents administered with the medication. Oral medications accounted for a mean of 0.33 mmol/kg. Ten of 30 patients (33.3%) were eating and drinking; their dietary sodium intake could not be accurately quantified and was not analysed.
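The results are given per kilogram, while the WHO figure is a daily total in grams. Linking the two needs the molar mass of sodium (22.99 g/mol, so 1 mmol is about 23 mg) and a body weight, which the audit does not report. The Python sketch below assumes a hypothetical 70 kg patient receiving the reported mean intake; it converts 63.4 mg/kg back to mmol/kg and scales it to a daily total. Because the published figures are rounded, recomputing other ratios from them (for example, the IV solvent fraction) can differ slightly from the reported percentages.

```python
NA_MG_PER_MMOL = 22.99  # molar mass of sodium, expressed as mg per mmol

def mg_to_mmol(mg: float) -> float:
    """Convert milligrams of sodium to millimoles."""
    return mg / NA_MG_PER_MMOL

def mmol_to_mg(mmol: float) -> float:
    """Convert millimoles of sodium to milligrams."""
    return mmol * NA_MG_PER_MMOL

# Reported mean intake of 63.4 mg/kg expressed in mmol/kg (~2.76, reported as 2.8)
mean_mmol_per_kg = mg_to_mmol(63.4)

# Hypothetical 70 kg patient receiving the mean intake over 24 hours
weight_kg = 70.0
daily_intake_g = mmol_to_mg(mean_mmol_per_kg * weight_kg) / 1000  # ~4.4 g
who_limit_g = 2.0

print(f"{mean_mmol_per_kg:.2f} mmol/kg/day")
print(f"{daily_intake_g:.1f} g/day vs WHO maximum {who_limit_g} g/day")
```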

Conclusion: Total sodium intake is not routinely considered in the ICU, despite being excessive in many critically ill patients. In our cohort, intravenous medications and their solvents accounted for 41.8% of the total sodium load. We recommend that greater consideration be given to sodium administration in the critically ill, particularly to ‘occult’ sources of sodium such as medications and their solvents. As we were unable to account for oral intake, the total sodium intake of many patients is likely to be higher than suggested. Work is ongoing in our unit to improve the delivery and monitoring of sodium.

References

- 1. Funk GC, Lindner G, Druml W, et al. Incidence and prognosis of dysnatraemias on ICU admission. Intensive Care Medicine 2010; 36: 304–311.

- 2. Vandergheynst F, Sakr Y, Felleiter P, et al. Incidence and prognosis of dysnatraemias in critically ill patients: analysis of a large prevalence study. Eur J Clin Invest 2013; 43: 933–948.

- 3. Bihari S, Peake SL, Prakash S, et al. Sodium balance, not fluid balance, is associated with respiratory dysfunction in mechanically ventilated patients: a prospective multicentre study. Crit Care Resusc 2015; 17: 23–28.

EP.008

Nasogastric feed and propofol use in the nutrition of critically unwell patients: An acute trust experience

Sean Menezes1, Brigid Sharkey2 and Shibaji Saha2

1Colchester Hospital University NHS Foundation Trust, Colchester, UK

2Queen's Hospital – Barking, Havering and Redbridge University Hospitals NHS Trust, Romford, UK

Abstract

Nutrition for critically unwell patients remains a concern despite the improved outcomes seen with early feeding. In most critical care units (CCUs), there is a significant delay in initiating enteral nutrition, which worsens the patient’s catabolic state and can lead to organ dysfunction. Additionally, the use of lipid-rich propofol as a sedative provides a high-fat caloric intake, potentially worsening suboptimal nutrition. We describe the nutritional support provided to our CCU patients, determining the proportion of the daily recommended calories and protein delivered in the acute period, and evaluate the role of propofol as a nutritional source.

Clinical notes were examined for patients admitted to an acute trust’s general CCU over a 14-day period; only patients who were intubated and ventilated with no contraindications to nasogastric (NG) feeding were included. The volume and type of NG feed and the volume of propofol used in each 24-hour period were recorded for up to seven days from admission. Daily recommended energy intake (cREI) and protein intake (cRPI) were calculated by the CCU dietitian from each patient’s pre-admission nutritional status and ideal body weight, with targets of 25–35 kcal/kg/day and 0.625–1.875 g protein/kg/day, and were converted into a required hourly rate of NG feed.
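The conversion from weight-based targets to an hourly NG rate is simple arithmetic. The Python sketch below assumes a hypothetical patient with a 70 kg ideal body weight, the lower energy target (25 kcal/kg/day), a mid-range protein target (1.25 g/kg/day) and a hypothetical feed with an energy density of 1.0 kcal/ml. It does not model the dietitian’s adjustment for pre-admission nutritional status, and the function names are illustrative only.

```python
def daily_energy_kcal(ibw_kg: float, kcal_per_kg: float) -> float:
    """Daily energy target from ideal body weight and a kcal/kg/day target."""
    return ibw_kg * kcal_per_kg

def daily_protein_g(ibw_kg: float, g_per_kg: float) -> float:
    """Daily protein target from ideal body weight and a g/kg/day target."""
    return ibw_kg * g_per_kg

def hourly_rate_ml(energy_kcal: float, feed_kcal_per_ml: float) -> float:
    """Constant hourly NG rate needed to meet an energy target over 24 hours."""
    return energy_kcal / feed_kcal_per_ml / 24.0

# Hypothetical patient and feed (not taken from the study)
energy = daily_energy_kcal(70, 25)    # 1750 kcal/day
protein = daily_protein_g(70, 1.25)   # 87.5 g/day
rate = hourly_rate_ml(energy, 1.0)    # ~72.9 ml/h
print(f"{energy:.0f} kcal/day, {protein:.1f} g protein/day, {rate:.1f} ml/h")
```

A fixed hourly rate like this assumes feeding runs for the full 24 hours; any interruption (such as a transfer for investigations) is lost volume unless the rate is later increased.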

There were 37 patients identified (13 women and 24 men), accounting for 122 patient-days. There were 33 medical and 4 surgical patients, with a median age of 64.5 years (range 22–85.4). NG feeds provided an average of 50.9% of the daily cREI across all patients, and the average daily provision of protein was only 47.3% of the daily cRPI. In both cases, this was <15% on day 1 (D1) of admission but improved to roughly 60% by D7. Propofol provided an average of 36.1% of the daily calorie intake, accounting for 68.7% of calories on D1 and falling to 29.1% by D7. When NG feeds and propofol were considered together, our patients met 70.4% of the daily cREI: an average of 31.9% on D1, improving to 83.0% by D7.
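Propofol’s contribution is expressed above as a share of the calories actually received, which is a different denominator from the share of cREI used for NG feed. The Python sketch below illustrates that calculation, assuming the commonly quoted energy content of 1% propofol (about 1.1 kcal/ml, from its 10% lipid emulsion). The infusion rate and feed calories are hypothetical values, not data from the study.

```python
PROPOFOL_1PCT_KCAL_PER_ML = 1.1  # commonly quoted value for 1% propofol (10% lipid)

def propofol_kcal(volume_ml: float) -> float:
    """Calories delivered by a given volume of 1% propofol."""
    return volume_ml * PROPOFOL_1PCT_KCAL_PER_ML

def share_of_intake(part_kcal: float, total_kcal: float) -> float:
    """Percentage of total calories received that came from one source."""
    return 100.0 * part_kcal / total_kcal

# Hypothetical day: 15 ml/h of 1% propofol for 24 h plus 1000 kcal of NG feed
prop = propofol_kcal(15 * 24)   # ~396 kcal
feed = 1000.0
total = prop + feed
print(f"Propofol: {prop:.0f} kcal ({share_of_intake(prop, total):.1f}% of intake)")
```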

Nutrition continues to be suboptimal within our CCU, as reflected in the low total calories and protein provided to our patients. It is worse on D1, owing to investigations requiring patient transfer and the lower priority given to initiating NG feeding. Additionally, propofol continues to be a significant source of calories. We have explored different strategies and have determined that a 24-hour volume target would be better suited to our unit than our current hourly-rate practice.

EP.009

Improving the delivery of daily calorific targets via the enteral route in a critically ill patient population: A quality improvement cycle in a mixed surgical and medical intensive care unit in the United Kingdom

Brian Johnston1, Toseef Ahmed1, Zeyad Al-Moasseb1, Martin Habgood1, Sarah Clarke1 and Anton Krige1

1East Lancashire Hospitals Trust, Blackburn, UK

Abstract

Introduction: Enteral nutrition and adequate calorie intake have been associated with reduced infections and improved survival in critically ill patients. Despite this evidence, data suggest that patients often do not achieve their daily calorific requirement. This ‘iatrogenic underfeeding’ is thought to be widespread, with the CALORIES study revealing that only 10%–30% of prescribed daily kcal was delivered to patients. Using quality improvement methodology, we aimed to deliver more than 85% of prescribed kcal/day by moving from an hourly-rate enteral feeding protocol to a 24-hour volume-based feeding protocol, starting feeding at a higher rate, and increasing the permissible gastric residual volume from 250 ml to 300 ml.

Methods: Baseline data on the percentage of daily kcal delivered to ventilated patients via the enteral route were collected in December 2015 (cycle 1). Following presentation of the baseline data, new guidelines based on the PEPuP protocol were agreed. Nurse champions were identified and made responsible for cascade training of all nursing staff in the PEPuP protocol. Educational tools to help determine daily calorific requirements and the volume of feed required were provided. Repeat data were collected 6 months after implementation (cycle 2), followed by two-weekly cycles using PDSA methodology between July 2016 and July 2017 (cycles 3 to 12).
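The core idea of a 24-hour volume-based protocol is that feed lost during interruptions is made up later in the day by recalculating the hourly rate from the volume still outstanding. The Python sketch below is a minimal illustration of that idea, not the PEPuP protocol itself: the 150 ml/h cap is a hypothetical safety limit, and the 1800 ml target and 8-hour interruption are invented for the example.

```python
def volume_based_rate(daily_volume_ml: float, delivered_ml: float,
                      hours_remaining: float, max_rate_ml_h: float) -> float:
    """Hourly rate needed to deliver the rest of a 24-hour volume target.

    max_rate_ml_h is a hypothetical safety cap; real protocols set their own.
    """
    if hours_remaining <= 0:
        return 0.0
    remaining_ml = max(daily_volume_ml - delivered_ml, 0.0)
    return min(remaining_ml / hours_remaining, max_rate_ml_h)

# Hypothetical: 1800 ml/day target (75 ml/h if run evenly); feed held for the
# first 8 hours, so nothing has been delivered with 16 hours remaining.
rate = volume_based_rate(1800, 0, 16, max_rate_ml_h=150)
print(rate)  # 112.5

# An hourly-rate protocol restarting at 75 ml/h would deliver only 75 * 16 ml
print(f"{100 * 75 * 16 / 1800:.1f}% of target")  # 66.7% of target
```

The cap matters when little time remains: without it, a long interruption late in the day would demand an unsafe catch-up rate.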

Results: Ten and twelve patients were included in cycles 1 and 2 respectively, and five patients in each PDSA cycle (cycles 3 to 12). During cycle 1, the percentage of daily kcal achieved via enteral feeding was 25.1%. Following the intervention, this increased to 82.6% during cycle 2 (p < 0.001). This increase was maintained throughout cycles 3 to 12, with patients meeting an average of 86.5% of daily kcal via enteral feeding, rising to 95.3% when calories from propofol were included. Episodes of gastric residual volume >250 ml did not increase appreciably after the switch to the volume-based protocol.

Conclusion: Switching to a 24-hour volume-based feeding regimen is a simple and cost-effective method of ensuring that patients meet daily calorific targets. Using quality improvement methodology, we demonstrated that this approach is achievable and sustainable. The success of this project has led to adoption of the protocol by other ICUs in a regional critical care network. Future enhancements to the protocol will target additional protein supplementation and the institution of trophic feeding for patients who would traditionally be kept nil by mouth before enteral feeding is started.

EP.010

Immunonutrition for Acute Respiratory Distress Syndrome (ARDS) in Adults; a Cochrane Meta-Analysis

Victoria Burgess1, Ahilanandan Dushianthan1, Rebecca Cusack1 and Mike Grocott1

1University Hospital Southampton, Southampton, UK

Abstract

Background: Acute respiratory distress syndrome (ARDS) is an acute, overwhelming systemic inflammatory process associated with significant morbidity and mortality. Pharmaconutrients, given as part of a feeding formula or as additional supplements, have been investigated as a means of improving clinical outcomes in critical illness and ARDS. The objective of this study was to evaluate the effect of immunomodulating nutrition in patients with ARDS.

Method: We searched MEDLINE, Embase, CENTRAL, conference proceedings and trial registries for appropriate studies up to March 2017. All randomised controlled trials (RCTs) of adult patients with ARDS and/or acute lung injury were included. Two authors independently assessed the quality of the studies and extracted data from the included trials. Quality of evidence and analytical methods were presented in accordance with Cochrane standards. All-cause mortality, duration of mechanical ventilation, ICU and hospital length of stay, new organ failures and adverse reactions were assessed.

Results: Ten RCTs comprising 1020 patients were included. The immunonutrition interventions were omega-3 fatty acids (eicosapentaenoic acid, docosahexaenoic acid), gamma-linolenic acid (GLA) and antioxidants. Some studies had a high risk of bias; others were heterogeneous and varied in a number of ways, including the type and duration of intervention given, calorific targets and outcomes reported. For the primary outcome, all-cause mortality, there was no significant difference between groups (RR 0.79, P = 0.13; Figure 1). Mortality in the immunonutrition and control groups was 23% and 28%, respectively. There were significant reductions in ventilator days (3 days; P = 0.0002) and ICU length of stay (2.5 days; P = 0.0009), and a significant improvement in PaO2/FiO2 ratio at days 4 and 7. There was no difference between groups in ventilator-free or ICU-free days, or in adverse events reported. These analyses were subject to significant statistical heterogeneity, and the effects were sensitive to the analytical methods used.
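A crude ratio of the overall mortality figures (23% / 28%, about 0.82) need not match the pooled RR of 0.79, because meta-analysis combines trials with study-specific weights rather than dividing overall event rates. As one illustration, the Python sketch below implements the Mantel-Haenszel pooled risk ratio for 2×2 tables. The abstract does not state which pooling model the review used (fixed- or random-effects), and the three trials below are hypothetical, not the ten included studies.

```python
from typing import Iterable, Tuple

# (events_treatment, total_treatment, events_control, total_control)
Study = Tuple[int, int, int, int]

def mantel_haenszel_rr(studies: Iterable[Study]) -> float:
    """Mantel-Haenszel pooled risk ratio across a set of 2x2 tables."""
    num = 0.0
    den = 0.0
    for a, n1, c, n2 in studies:
        total = n1 + n2
        num += a * n2 / total   # weighted treatment-arm events
        den += c * n1 / total   # weighted control-arm events
    if den == 0:
        raise ValueError("no control-group events")
    return num / den

# Hypothetical trials, NOT the trials included in the review
trials = [(10, 50, 14, 50), (20, 100, 25, 100), (5, 40, 9, 40)]
print(f"Pooled RR: {mantel_haenszel_rr(trials):.2f}")  # 0.73
```

With a single trial the function reduces to the ordinary risk ratio, which is a useful sanity check.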

Conclusion: This meta-analysis included 10 heterogeneous studies of varying quality that examined the effect of omega-3 fatty acids, GLA and/or antioxidants in patients with ARDS. The results suggest that this intervention confers no long-term mortality benefit. However, there may be improvements in duration of mechanical ventilation, ICU length of stay and oxygenation, without any significant increase in serious adverse events. The quality of evidence is moderate for a number of reasons: some studies were unable to achieve calorific targets, significant dropouts were not accounted for in the ITT analysis, and the type and duration of intervention varied between studies.

Figure 1.

Meta-analysis of the primary outcome of mortality.

EP.011

Serotonin Syndrome as a Side Effect of Antibiotic Therapy on the Intensive Care Unit

Will Watson1

1Wishaw General Hospital, Lanarkshire, UK

Abstract

Introduction: Serotonin syndrome is a neurological disorder caused by an excess of serotonin within the CNS. We present a case precipitated by the introduction of linezolid for the treatment of pneumonia.

Case report: A 61-year-old man was admitted to our ICU with polytrauma. He underwent multiple operations for limb fractures and for intra-abdominal sepsis due to bowel perforation, and had a tracheostomy to facilitate weaning from ventilation. His ability to receive chest physiotherapy was limited by a dorsally angulated sternal fracture, which was felt to put his great vessels at risk of damage. He developed increasingly drug-resistant ventilator-associated pneumonia, as well as chronic metalwork infection in his limbs. He had received a variety of antimicrobials, which had either been ineffective or, in the case of meropenem, caused a reaction. After a further clinical and biochemical deterioration, he was commenced on linezolid. Prior to admission he had been on a fentanyl patch for chronic pain secondary to rheumatoid arthritis, supplemented with PRN oxycodone, and he was started on citalopram during his admission for low mood. Within 24 hours of commencing linezolid, he developed worsening agitation, hypertension, tachycardia, hyperreflexia and clonus. Linezolid and citalopram were stopped, and he was treated with diazepam, cyproheptadine, sedation and ventilation. His symptoms of serotonin syndrome improved over the next week, but he sadly succumbed to a further episode of sepsis.

Discussion: Linezolid-induced serotonin syndrome has been reported in the literature (1). The underlying mechanism is linezolid’s monoamine oxidase inhibitor (MAOI) activity, which decreases the breakdown of biogenic amines and leads to their accumulation within the CNS. In combination with proserotonergic agents, this can lead to clinical serotonin syndrome. Implicated drugs include antidepressants, dopamine agonists, opioids and stimulants. The incidence in patients taking an SSRI alongside linezolid is 3% (2). Symptoms can occur immediately but may take up to 3 weeks to appear (2). Immediate management involves supportive care, treatment of agitation with benzodiazepines, cooling, and consideration of serotonin antagonists such as chlorpromazine and cyproheptadine. Further management involves removal of the offending agents, with careful consideration of which drugs are clinically necessary in the circumstances.
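The interaction described above is essentially a rule over a medication list: linezolid plus any drug from an implicated class. The Python sketch below illustrates that rule using only the drug classes named in this abstract and the drugs from this case. The lookup table is hypothetical and deliberately incomplete; it is meant to show the logic, not to serve as a prescribing check.

```python
# Hypothetical, deliberately incomplete drug-to-class lookup covering only the
# drugs in this case; the classes are those named in the abstract.
DRUG_CLASS = {
    "citalopram": "antidepressant",
    "fentanyl": "opioid",
    "oxycodone": "opioid",
}
IMPLICATED_CLASSES = {"antidepressant", "dopamine agonist", "opioid", "stimulant"}

def flag_with_linezolid(prescriptions: list[str]) -> list[str]:
    """Return co-prescribed drugs in an implicated class when linezolid is present."""
    meds = {m.strip().lower() for m in prescriptions}
    if "linezolid" not in meds:
        return []
    return sorted(m for m in meds if DRUG_CLASS.get(m) in IMPLICATED_CLASSES)

chart = ["Linezolid", "Citalopram", "Fentanyl", "Oxycodone", "Diazepam"]
print(flag_with_linezolid(chart))  # ['citalopram', 'fentanyl', 'oxycodone']
```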

References

- 1. Taylor JJ, Wilson JW, Estes LL. Linezolid and serotonergic drug interactions: a retrospective survey. Clin Infect Dis 2006; 43: 180–187.

- 2. Quinn DK, Stern TA. Linezolid and Serotonin Syndrome. Prim Care Companion J Clin Psychiatry 2009; 11: 353–356.

EP.012

Partial Anomalous Pulmonary Venous Return (PAPVR): Case Report

Raghda Abed1, Guy Rousseau1 and Mark Meller1

1North Devon District Hospital, Barnstaple, UK

Abstract

Background: Partial anomalous pulmonary venous return (PAPVR) is a vascular anomaly in which some of the pulmonary veins connect to the right atrium or one of its venous tributaries rather than to the left atrium. Approximately 10% are left-sided. An isolated PAPVR is usually small, without haemodynamic compromise, and rarely requires surgical correction. It is rarely seen in adults, in whom it is more commonly an incidental finding in asymptomatic patients undergoing pulmonary vascular studies for other indications.

Clinical case: A 61-year-old man presented with a one-month history of severe shortness of breath and chest pain. He had a background of rheumatoid arthritis.

On examination, the patient had bilateral crepitations and was hypoxic. A CTPA ruled out pulmonary embolism but showed ground-glass changes in both lungs. Pneumocystis carinii was grown from sputum culture. He continued to deteriorate and was transferred to ICU. Central venous cannulation of the right internal jugular vein (IJV) under ultrasound guidance was complicated by an accidental arterial puncture. Subsequent ultrasound-guided cannulation of the left IJV was uneventful; the lumen of the left IJV was noticeably more distended and easier to locate than the right.

The post-procedure CXR showed the CVC tracing a path along the left heart border. With the patient on 2 litres/min of oxygen via nasal cannulae, a blood sample taken from the CVC showed a pO2 of 37.2 kPa and a pCO2 of 4.5 kPa, whereas a simultaneously taken peripheral arterial sample showed a pO2 of 9.07 kPa and a pCO2 of 4.6 kPa. The transduced waveform from the central line had the appearance of a pulmonary artery trace.
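For readers more used to mmHg, the paired gas values can be converted using 1 kPa ≈ 7.50062 mmHg. The conversion makes the anomaly plain: the CVC sample had a pO2 roughly four times that of the simultaneous arterial sample, which systemic venous blood could not have. The Python sketch below simply restates the reported values; the dictionary layout is an illustrative choice.

```python
MMHG_PER_KPA = 7.50062  # 1 kPa expressed in mmHg

def kpa_to_mmhg(kpa: float) -> float:
    """Convert a partial pressure from kPa to mmHg."""
    return kpa * MMHG_PER_KPA

# (pO2, pCO2) in kPa, as reported for the simultaneous samples
samples = {
    "CVC": (37.2, 4.5),
    "peripheral arterial": (9.07, 4.6),
}
for site, (po2, pco2) in samples.items():
    print(f"{site}: pO2 {kpa_to_mmhg(po2):.0f} mmHg, pCO2 {kpa_to_mmhg(pco2):.0f} mmHg")

ratio = samples["CVC"][0] / samples["peripheral arterial"][0]
print(f"CVC/arterial pO2 ratio: {ratio:.1f}")
```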

The CT pulmonary angiogram (CTPA), which had been performed to exclude pulmonary embolism a few days before CVC insertion, was reviewed; on closer inspection it revealed an anomalous left upper lobe pulmonary vein draining into the left subclavian vein.

Conclusion: In our case, the left IJV was more distended and easier to cannulate than the right. The oxygen saturation of the CVC blood sample was higher than that of the arterial line sample, and the waveform resembled that of the pulmonary circulation. These findings, together with radiological evidence of abnormal CVC placement, caused some confusion as to the actual location of the CVC. We hope that this report will highlight PAPVR as a rare but possible cause of CVC “displacement” and anomalous venous blood gas results.

EP.013

Fatal Air Embolism during Elective Oesophagoscopy

Adnan Akram Bhatti1 and Priti Gandre1

1North Middlesex University Hospital, London, UK

Abstract

Introduction: Elective upper gastrointestinal endoscopy is a common day-case procedure with low complication and mortality rates. We report a case of air embolism, a rare but catastrophic complication of this otherwise low-risk procedure.

Case summary: A 77-year-old man with a past medical history of hypertension and oesophageal stricture attended the endoscopy unit for elective oesophageal dilatation as a day case. At the start of the procedure, the gastroenterologist administered sedation with fentanyl and midazolam as per the local protocol. Shortly after the start of endoscopy, the patient had a generalised tonic-clonic seizure followed by asystolic cardiac arrest. Spontaneous circulation returned after three cycles of cardiopulmonary resuscitation. Brain and chest CT scans performed within 60 minutes of the seizure showed numerous unilateral cerebral air locules and surgical emphysema in the posterior mediastinum and neck. A repeat endoscopy was performed to rule out oesophageal perforation, on the advice of the tertiary centre. The patient did not regain consciousness and deteriorated rapidly, with midline shift and coning within 24 hours of ICU admission. Brain stem death was confirmed the following day.

Discussion: Air embolism during endoscopy is caused by a direct communication between the pressurised air source of the endoscope and an exposed blood vessel in the gut. Rapid intravenous injection of a relatively small volume of air (up to 2 ml/kg) can cause acute haemodynamic collapse.

Initial management of air embolism includes high-flow oxygen, rapid intravenous fluids and urgently positioning the patient head-down (Trendelenburg) and on the left side. Aspiration of air can be attempted through a central venous catheter, if one is in place. Bedside echocardiography can diagnose air embolism quickly and accurately. Hyperbaric oxygen therapy within 24 hours can help reduce the cerebral complications of air embolism, but few centres in the UK provide this treatment. Using carbon dioxide instead of air for insufflation during endoscopy can reduce the risk of fatal air embolism.

The case also raises questions about the quality of sedation and monitoring in elective endoscopy procedures where sedation is administered by non-anaesthetists. Guidelines on monitoring during sedation from the British Society of Gastroenterology differ from those of the Association of Anaesthetists of Great Britain and Ireland. Although uncommon complications such as air embolism cannot be completely avoided, any complication is more likely to be recognised and treated promptly if full monitoring and a practitioner qualified to manage life-threatening complications are present during all such procedures.

EP.014

Interventional Radiology for the Management of Delayed Massive Hepatic Haemorrhage due to HELLP Syndrome

Eleanor Damm1 and Nehal Patel1

1Royal Stoke University Hospital, Stoke-on-Trent, UK

Abstract

Introduction: HELLP syndrome is a life-threatening pregnancy complication characterised by haemolysis, elevated liver enzymes and low platelet count, occurring in 0.5%–0.9% of all pregnancies (1,2). About 70% of cases develop before delivery, the majority between the 27th and 37th gestational weeks; the remainder develop within 48 hours after delivery (2).

HELLP syndrome usually occurs with pre-eclampsia. However, in 20% of cases there may be no evidence of pre-eclampsia before or during labour (3).

The case: A 32-year-old primipara at 37+1 weeks’ gestation presented with a one-day history of constant upper abdominal pain, nausea, vomiting and reduced fetal movements. No visual disturbances or headaches were reported. She had had a low-risk pregnancy, with SBP <110 mmHg, DBP <75 mmHg and urinalysis NAD throughout.

On presentation: BP 138/88 mmHg, HR 78 bpm, CTG normal, Hb 139 g/L, platelets 54 × 10⁹/L, ALT 284 U/L, ALP 220 U/L, bilirubin 21 µmol/L. HELLP syndrome was diagnosed. Her platelet count fell rapidly over the following hours, necessitating a category 2 lower segment caesarean section (LSCS). Initially recovering well, she collapsed two days post-partum. An intra-abdominal bleed was suspected and the major obstetric haemorrhage protocol was activated. Once she was stabilised, CT angiography revealed a 21 × 5.7 × 19 cm subcapsular hepatic haematoma and a normal post-partum uterus.

She successfully underwent emergency embolisation of the right hepatic artery and both uterine arteries. She was admitted to ITU as a level 2 patient for pain management, blood pressure control and ongoing transfusion requirements.

Discussion/Conclusion: HELLP remains difficult to diagnose clinically, as symptoms are often non-specific (4). Maternal and fetal morbidity and mortality remain high (5).

HELLP can present independent of pre-eclampsia and a high index of suspicion is required.

There is an expanding role for interventional radiology for management of massive obstetric haemorrhage.

References

- 1. Weinstein L. Syndrome of hemolysis, elevated liver enzymes, and low platelet count: A severe consequence of hypertension in pregnancy. American Journal of Obstetrics and Gynecology 1982; 142: 159–167.

- 2. Rath W, Faridi A, Dudenhausen J. HELLP Syndrome. Journal of Perinatal Medicine 2000; 28: 249–260.

- 3. Haram K, Svendsen E, Abildgaard U. The HELLP syndrome: Clinical issues and management. A Review. BMC Pregnancy and Childbirth 2009; 9: 8.

- 4. Weinstein L. It has been a great ride: The history of HELLP syndrome. American Journal of Obstetrics and Gynecology 2005; 193: 860–863.

- 5. Sibai BM, Ramadan MK, Usta I, et al. Maternal morbidity and mortality in 442 pregnancies with hemolysis, elevated liver enzymes, and low platelets (HELLP syndrome). American Journal of Obstetrics and Gynecology 1993; 169: 1000–1006.

EP.015

The Highs and Lows of Quetiapine Toxicity

Andrew Chamberlain1 and Joseph Carter1

1York Teaching Hospital NHS Foundation Trust, York, UK

Abstract

Background: Some studies have shown quetiapine to be relatively safe in overdose compared with other antipsychotics. We argue the contrary, advocating early, aggressive treatment of cardiac dysrhythmias and seizures.

Case Description: A 38-year-old woman presented to the Emergency Department having ingested 16 g of quetiapine 4 hours previously. She required intubation and ventilation for a reduced GCS and was admitted to Critical Care. Approximately 6 hours after admission, she developed several tachyarrhythmias, followed by a tonic-clonic seizure. She subsequently developed torsades de pointes, and advanced life support was commenced. Magnesium sulphate was administered, along with intravenous lipid emulsion (Intralipid® 20%) and 8.4% sodium bicarbonate.

After 30 minutes of CPR, return of spontaneous circulation was achieved. However, she remained significantly hypotensive despite numerous adrenaline boluses. She was switched to a metaraminol infusion and, once central venous access had been established, to noradrenaline. After 2 hours, her noradrenaline requirement had fallen from 0.75 mcg.kg⁻¹.min⁻¹ to zero.
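Weight-based vasopressor doses are delivered as a pump rate, so a dose in mcg.kg⁻¹.min⁻¹ has to be converted using the patient’s weight and the syringe concentration. The abstract reports neither, so the Python sketch below assumes a hypothetical 70 kg patient and a hypothetical noradrenaline concentration of 4 mg in 50 ml (80 mcg/ml) to show what the peak dose would mean in ml/h.

```python
def infusion_rate_ml_h(dose_mcg_kg_min: float, weight_kg: float,
                       conc_mcg_per_ml: float) -> float:
    """Pump rate (ml/h) that delivers a weight-based dose in mcg/kg/min."""
    return dose_mcg_kg_min * weight_kg * 60.0 / conc_mcg_per_ml

# Hypothetical weight and syringe concentration (not reported in the case)
rate = infusion_rate_ml_h(0.75, 70, 4000 / 50)
print(f"{rate:.1f} ml/h")  # 39.4 ml/h
```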

The patient was extubated the following day but, as a consequence of prolonged CPR, had suffered a number of rib fractures. Paradoxical chest movement impeded her ventilation and she required reintubation. Following treatment for hospital-acquired pneumonia, she underwent percutaneous tracheostomy on day 15 and was successfully decannulated 9 days later.

Discussion: Like many poisonings, quetiapine toxicity has no specific antidote and therefore requires a generalised, supportive management approach. The use of lipid emulsion for quetiapine toxicity is not well established, and its use in the management of drug poisoning more generally appears to be sporadic. In this case, one could argue that lipid emulsion therapy should have been used at the first sign of toxicity, but it is not known whether this therapy alone would have altered the outcome.

A particular difficulty during resuscitation was the apparent ineffectiveness of intravenous adrenaline. The most widely accepted explanation is that beta-adrenergic stimulation can worsen hypotension in the setting of quetiapine-induced alpha-adrenergic blockade. As such, early use of alpha agonists with minimal beta agonism, such as metaraminol and noradrenaline, is likely to be more effective.

Despite the extended recovery phase, the combination of treatment given in this case ultimately resulted in successful resuscitation.

Conclusion: Lipid emulsion therapy can be used as part of the management of quetiapine toxicity, but adrenaline should be avoided because of the risk of refractory hypotension. Clinicians should have a low threshold for admission to critical care.

EP.016

Human neutrophil function is rapidly impaired by complement C5a in a clinically relevant model of bacteraemia

Alex Wood1, Arlette Vassallo1, Klaus Okkenhaug2, A John Simpson3, Jonathon Scott3, Charlotte Summers1, Edwin Chilvers1 and Andrew Conway Morris1

1Department of Medicine, University of Cambridge, Cambridge, UK

2Signalling Programme, Babraham Institute, Cambridge, UK

3Institute of Cellular Medicine, Newcastle University, Newcastle, UK

Abstract

Introduction: Nosocomial infections commonly affect patients admitted to intensive care and are associated with worse outcomes. A key determinant of susceptibility to these infections is the recently identified syndrome of immune-cell failure, the mechanisms of which remain incompletely understood (1). We have previously demonstrated that the complement protein C5a (present at high concentrations in plasma from critically ill patients) impairs phagocytosis of yeast particles by neutrophils from healthy donors and patients (2). In this study, we investigated the underlying mechanism, duration and preventability of C5a-induced neutrophil dysfunction in a clinically relevant in vitro model.

Methods: A new assay was developed to assess neutrophil function in small (<2 mL) volumes of blood, without the need for time-consuming and potentially cell-perturbing purification steps. Healthy human or murine samples were exposed to C5a or control, with subsequent or concomitant addition of pH-sensitive Staphylococcus aureus bioparticles. Phagocytosis and C5a receptor (C5aR) expression were quantified by flow cytometry using an Attune™ NxT Acoustic Focusing Cytometer (Life Technologies, Paisley, UK). Selective small-molecule inhibitors, soluble pro-inflammatory agents and neutrophils from genetically modified mice were used to address our experimental questions.

Results: C5a rapidly reduced neutrophil phagocytosis of Staphylococcus aureus in human whole blood by 40% (p < 0.0001), and this impairment increased with time after C5a exposure. In contrast, pre-treatment with lipopolysaccharide (LPS) or platelet-activating factor (PAF) increased phagocytosis (p < 0.01 and p < 0.05, respectively). When neutrophils phagocytosed S. aureus before or during C5a exposure, phagocytosis was unimpaired. C5a, phagocytosis and soluble priming agents all reduced C5aR expression (p < 0.01), but only phagocytosis protected cells from C5a-induced phagocytic impairment. C5a-induced phagocytic impairment was PI3-kinase dependent in isolated neutrophils but not in whole blood.
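The abstract does not say whether phagocytosis was summarised as the percentage of fluorescence-positive neutrophils or as mean fluorescence, nor whether “40%” is a relative reduction or a drop in percentage points. The Python sketch below assumes a percent-positive readout above a hypothetical gate threshold and computes the relative reduction versus control; the event values and the 60% and 36% figures are invented to show that a relative 40% fall corresponds to a 24-point absolute drop in that case.

```python
def percent_positive(fluorescence: list[float], gate: float) -> float:
    """Percentage of neutrophil events with bioparticle fluorescence above a gate."""
    if not fluorescence:
        raise ValueError("no events")
    positive = sum(1 for f in fluorescence if f > gate)
    return 100.0 * positive / len(fluorescence)

def relative_reduction(treated: float, control: float) -> float:
    """Reduction of treated relative to control, as a percentage of control."""
    return 100.0 * (control - treated) / control

# Hypothetical readouts: 60% of control neutrophils positive vs 36% after C5a
print(relative_reduction(36.0, 60.0))  # 40.0 (relative); the absolute drop is 24 points
```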

Conclusions: This study is the first to demonstrate that C5a can rapidly induce a prolonged impairment of neutrophil phagocytosis in a whole blood model of bacteraemia. It also indicates that phagocytosis protects against subsequent C5a-induced neutrophil dysfunction, an effect that is unlikely to be mediated by cell-surface receptor downregulation. Finally, this study highlights differences in the pharmacological tractability of PI3-kinase enzymes between isolated neutrophils and neutrophils in whole blood, which may influence the efficacy of locally or systemically administered therapies.

References

- 1. Conway Morris A, Anderson N, Brittan M, et al. Combined dysfunctions of immune cells predict nosocomial infection in critically ill patients. Br J Anaesth 2013; 111: 778–787.

- 2. Conway Morris A, Brittan M, Thomas S, et al. C5a-mediated neutrophil dysfunction is RhoA-dependent and predicts infection in critically ill patients. Blood 2011; 117: 5178–5188.

EP.017

The whole blood phagocytosis assay: A near patient test to promote a personalised approach to immunomodulatory therapy in community acquired pneumonia

Jesus Reine1, Jamie Rylance1, Daniela Ferreira1, Robert Parker2, Ingeborg Welters3,4 and Ben Morton1,2

1Liverpool School of Tropical Medicine, Liverpool, UK

2Aintree University Hospital NHS Foundation Trust, Liverpool, UK

3Royal Liverpool and Broadgreen University Hospitals NHS Trust, Liverpool, UK

4University of Liverpool, Liverpool, UK

Abstract

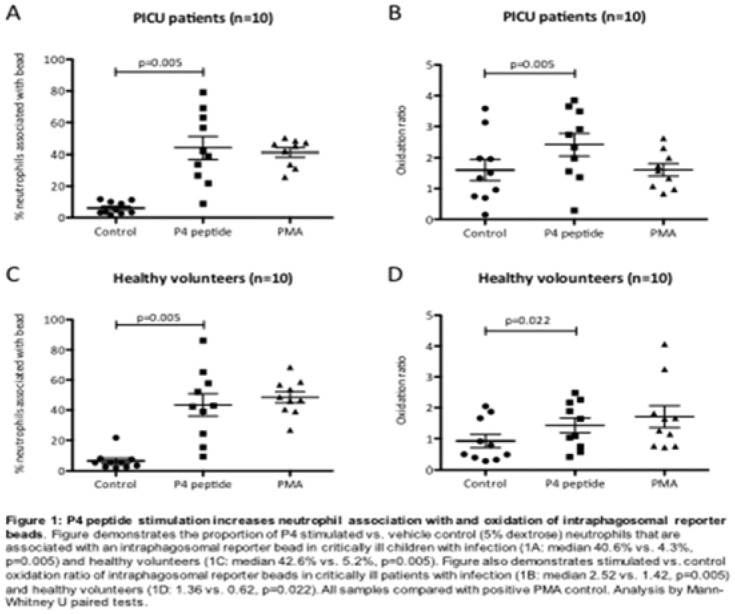

Introduction: Severe community-acquired pneumonia (CAP) results from a profound systemic inflammatory response to lung infection and has a high mortality rate. Immunomodulatory drugs offer the promise of improving outcomes independently of antimicrobial therapy. However, clinical trials of untargeted immunostimulatory agents have not demonstrated benefit, and experts now advocate personalised therapy. Yet there are currently no clinical tests in use to measure immune function.

Our aim was to refine and validate a novel ‘whole blood phagocytosis assay’ to measure ex vivo neutrophil function in blood samples from CAP patients. Such a test could potentially be used to guide the safe and effective administration of immunomodulatory therapies.

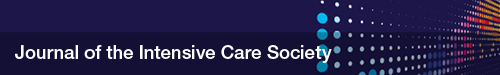

Methods: Clinical: A prospective case-control study was conducted at Aintree University Hospital and the Royal Liverpool University Hospital (REC: 15/NW/0869) between 07/2016 and 04/2017. We recruited healthy volunteers for assay refinement and three patient groups: 1) severe CAP admitted to critical care, 2) moderate CAP admitted to the medical ward and 3) age-matched volunteers from outpatient clinics without acute inflammatory illness. CAP was diagnosed in line with British Thoracic Society definitions. Clinical severity and outcome data were collected prospectively.

Laboratory: A single citrated blood sample was taken within 48 hours of CAP diagnosis and processed within four hours. Whole blood was suspended and incubated with fluorochrome-labelled reporter beads, which fluoresce in response to phagolysosomal function (Morton et al., Shock 2016). Red blood cells were then lysed, and neutrophil association with, and oxidation of, the reporter beads were measured by flow cytometry.

Results: The assay was refined using samples from 30 healthy volunteers to confirm optimal conditions for the standard operating procedure. We then recruited 46 patients (16 severe-CAP, 15 moderate-CAP and 15 controls). Patient demographics, severity and outcome data are displayed in Table 1. The proportion of neutrophils associated with reporter beads was significantly higher in moderate-CAP than in both severe-CAP and control patients (Figure 1). There was also a signal towards increased oxidative burst (a bacterial killing mechanism) in moderate-CAP patients.

Conclusions: In this preliminary study we have demonstrated differences in the function of unstimulated neutrophils between moderate-CAP, severe-CAP and control patients. The potential advantages of this assay are: 1) direct measurement of neutrophil activity, 2) minimal sample processing, 3) reproducibility and 4) results available within 3 hours of sampling. We plan to conduct a larger evaluation study to determine whether the assay can stratify patients by outcome, and to test potential immunostimulatory agents (e.g. GM-CSF and unlicensed candidate agents such as P4 peptide) prospectively before administration.

EP.018

Retention of CO2 Does Not Impact the Oxygen-Haemoglobin Dissociation Curve in ICU Patients

Nick Rosculet1, Romit Samanta1, Abishek Dixit1, S Harris2, N MacCallum2, David Brealey2, Peter Watkinson3, Andrew Jones4, S Ashworth5, Richard Beale4, Stephen Brett5, Duncan Young3, Mervyn Singer2, Ari Ercole1, Charlotte Summers1

1Department of Medicine, University of Cambridge School of Clinical Medicine, Cambridge, UK

2Bloomsbury Institute for Intensive Care Medicine, University College London, London, UK

3Critical Care Research Group (Kadoorie Centre), Nuffield Department of Clinical Neurosciences, Medical Sciences Division, Oxford University, Oxford, UK

4Department of Intensive Care, Guy's and St Thomas' NHS Foundation Trust, London, UK

5Centre for Perioperative Medicine and Critical Care Research, Imperial College Healthcare NHS Trust, London, UK

Abstract

Introduction: Since its initial description in 1904, the oxygen-haemoglobin dissociation curve (ODC) has been well described under physiological conditions1,2. However, the impact of pathology has been less well characterised, with most data arising from small clinical studies of anaesthetised adults (<100 subjects) or from experimentally induced hypoxaemia/hypercapnia. Routinely collected clinical data, including arterial blood gas analyses, are now available from many thousands of critically ill patients. We sought to investigate the impact of pCO2 on the ODC of critically ill adults, and hypothesised that pCO2 would not significantly alter the relationship between pO2 and haemoglobin saturation.

Methods: Data were extracted from the National Institute for Health Research Critical Care Health Informatics Collaborative (NIHR ccHIC). Statistical analysis was undertaken on 399,000 blood gases from 13,942 patients using R version 3.4.0. After data cleaning, the predicted oxygen saturation for each arterial blood gas sample was calculated using both the Severinghaus1 and the Dash, Korman and Bassingthwaighte2 equations. Non-linear regression modelling was undertaken to construct ODCs from both the predicted and the observed data, to allow comparison. Observed data were stratified by pCO2 to investigate the influence of hypercapnia on the ODC.
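The predicted-saturation step can be illustrated with the simple form of the Severinghaus equation, S = 100 / (23400 / (P³ + 150P) + 1) with P the pO2 in mmHg. The sketch below is not the authors' R code: it omits the temperature and pH corrections described in the original paper (so it assumes standard conditions), converts kPa to mmHg before applying the formula, and adds a hypothetical helper for the stratification step. The 28 mmol/L bicarbonate split comes from the abstract; the 6.0 kPa hypercapnia cut-off and the handling of HCO3 exactly 28 mmol/L are assumptions.

```python
MMHG_PER_KPA = 7.50062  # 1 kPa is approximately 7.50062 mmHg


def severinghaus_sat(po2_kpa: float) -> float:
    """Predicted O2 saturation (%) from the uncorrected Severinghaus (1979) equation.

    Assumes standard conditions (37 °C, pH 7.40, pCO2 40 mmHg); the paper's
    temperature/pH corrections are deliberately omitted in this sketch.
    """
    p = po2_kpa * MMHG_PER_KPA  # the equation is defined for pO2 in mmHg
    return 100.0 / (23400.0 / (p**3 + 150.0 * p) + 1.0)


HYPERCAPNIA_KPA = 6.0  # hypothetical cut-off; not stated in the abstract
HCO3_CHRONIC = 28.0    # mmol/L, from the abstract (acute < 28, chronic > 28)


def co2_stratum(pco2_kpa: float, hco3_mmol_l: float) -> str:
    """Hypothetical stratification of a blood gas sample by pCO2 and HCO3."""
    if pco2_kpa <= HYPERCAPNIA_KPA:
        return "normocapnia"
    # HCO3 exactly 28 mmol/L is assigned to "chronic" here (an assumption)
    if hco3_mmol_l < HCO3_CHRONIC:
        return "acute hypercapnia"
    return "chronic hypercapnia"
```

For example, a pO2 of 8 kPa (about 60 mmHg) gives a predicted saturation of about 90.6%. Comparing such predictions with the measured saturation within each stratum is one way to look for a "right shift" in hypercapnic samples.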

Results: No clinically significant impact of pCO2 on the relationship between pO2 and oxygen saturation was observed in samples obtained from critically ill adults (mean difference in pO2 for a given oxygen saturation 0.35 kPa, SD 0.2 kPa). Notably, we did not observe a “right shift” of the ODC in response to elevated arterial pCO2, and neither acute (HCO3 < 28 mmol/L) nor chronic (HCO3 > 28 mmol/L) hypercapnia affected the relationship between haemoglobin saturation and pO2.

Figure 1 – The observed oxygen dissociation curve of critically ill patients with varying levels of hypercapnia.

Conclusions: These data suggest that the relationship between haemoglobin saturation and pO2 derived from small-scale studies may not reflect the physiology of critically ill adults, and that the right shift of the ODC reported in experimentally induced hypercapnia in healthy subjects is not reproduced in the critically ill.

References

- 1. Severinghaus JW. Simple, accurate equations for human blood O2 dissociation computations. J Appl Physiol Respir Environ Exerc Physiol 1979; 46: 599–602.

- 2. Dash RK, Korman B, Bassingthwaighte JB. Simple accurate mathematical models of blood HbO2 and HbCO2 dissociation curves at varied physiological conditions: evaluation and comparison with other models. Eur J Appl Physiol 2016; 116: 97–113.

EP.019

Predicting secondary infections using cell-surface markers of immune cell dysfunction: The INFECT study

Andrew Conway Morris1, Deepankar Datta2, Manu Shankar-Hari3, Jacqueline Stephen4, Christopher Weir4, Jillian Rennie2, Jean Antonelli4, Anthony Burpee5, Anthony Bateman6, Noel Warner7, Kevin Judge7, Jim Keenan7, Alice Wang7, K Alun Brown8, Sion Lewis8, Tracey Mare8, Alastair Roy9, Gillian Hulme10, Ian Dimmick10, Adriano G Rossi2, A John Simpson11 and Timothy S Walsh2

1Department of Medicine, University of Cambridge, Cambridge, UK

2Centre for Inflammation Research, University of Edinburgh, Edinburgh, UK

3Guy's and St Thomas' NHS Foundation Trust, London, UK

4Edinburgh Clinical Trials Unit, Usher Institute of Population Health Sciences and Informatics, University of Edinburgh, Edinburgh, UK

5Applied Cytometry, Sheffield, UK

6Western General Hospital, Edinburgh, UK

7BD Biosciences, San Jose, CA, USA

8Vascular Immunology Research Laboratory, Rayne Institute (King’s College London), St Thomas’ Hospital, London, UK

9Sunderland Royal Hospital, Sunderland, UK

10Flow Cytometry Core Facility Laboratory, Faculty of Medical Sciences, Centre for Life, Newcastle University, Newcastle, UK

11Institute of Cellular Medicine, Newcastle University, Newcastle, UK

Abstract

Background: Cellular immune dysfunctions are common in intensive care patients and predict a number of significant complications1. To enable immunomodulatory treatments to be targeted at the appropriate patients, clinically usable measures of dysfunction need to be developed. The objective of this study was to confirm, in a multi-centre setting, the predictive value of cellular markers of immune dysfunction for secondary infection. These markers had previously been identified as potential predictors in a single-centre derivation study2.

Methods: A prospective, observational cohort study was undertaken in four UK intensive care units. Blood samples were taken on alternate days up to day 12 post-enrolment. Three cellular markers of immune cell dysfunction (neutrophil CD88, monocyte human leukocyte antigen-DR (HLA-DR) and the percentage of regulatory T-cells (Tregs)) were assayed on site using standardised flow cytometry. Patients were monitored for the development of secondary infections using pre-defined, objective criteria.

Main Results: Data were available from 138 of the 148 patients recruited. Reduced neutrophil CD88, reduced monocyte HLA-DR and elevated proportions of Tregs were all associated with subsequent development of infection, with positive likelihood ratios (95% CI) of 1.37 (1.02–1.82), 1.81 (1.28–2.57) and 1.60 (1.09–2.36) respectively. The burden of immune dysfunction predicted a progressive increase in the risk of infection, from 14% for patients with no dysfunctional marker to 59% for patients with dysfunction of all three markers. When clinical use of these tests was modelled, they performed best between days 3 and 9 after ICU admission and predicted not only secondary infection but also prolonged ICU stay and duration of organ support.
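The two summary measures above can be made concrete. A positive likelihood ratio is sensitivity / (1 − specificity), computed from a 2×2 table of marker status against infection outcome, and the "burden" of dysfunction is simply how many of the three markers are abnormal. The sketch below assumes each marker has already been dichotomised as abnormal or normal; the abstract does not give the cut-offs, so the class and function names and the example counts in the note are hypothetical, not study data.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Hypothetical 2x2 table of one marker against later secondary infection."""

    tp: int  # marker abnormal, infection developed
    fp: int  # marker abnormal, no infection
    fn: int  # marker normal, infection developed
    tn: int  # marker normal, no infection

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def positive_lr(self) -> float:
        # LR+ = sensitivity / (1 - specificity)
        return self.sensitivity() / (1.0 - self.specificity())


def dysfunction_burden(cd88_low: bool, hla_dr_low: bool, treg_high: bool) -> int:
    """Number of the three markers in the dysfunctional range (0 to 3)."""
    return sum((cd88_low, hla_dr_low, treg_high))
```

With made-up counts tp=30, fp=20, fn=10, tn=40, sensitivity is 0.75 and specificity about 0.67, giving an LR+ of 2.25. Grouping patients by `dysfunction_burden` and computing the infection rate in each group is one way to reproduce the kind of 0-to-3 risk gradient reported above.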

Conclusions: This study confirms our previous finding that three cell surface markers can predict secondary infection, demonstrates the feasibility of standardised flow cytometry across centres, and presents a tool that could be used to stratify critically ill patients to enable targeting of immunomodulatory therapies.

References

- 1. Hotchkiss RS, Monneret G, Payen D. Sepsis-induced immunosuppression: from cellular dysfunctions to immunotherapy. Nat Rev Immunol 2013; 13: 862–874.

- 2. Conway Morris A, Anderson N, Brittan M, et al. Combined dysfunctions of immune cells predict nosocomial infection in critically ill patients. Br J Anaesth 2013; 111: 778–787.

EP.020

Development of critical care rehabilitation guidelines in clinical practice; a quality improvement project

Sarah Elliott1

1Medway NHS Foundation Trust, Gillingham, UK

Abstract

Introduction: Rehabilitation in critical care has the potential to restore lost function and improve quality of life after discharge, but patients are often viewed as too unstable to participate in physical rehabilitation. A physiotherapy service evaluation of the provision of critical care rehabilitation raised a number of concerns about our practice. In particular, it identified a need to standardise pathways for clinical decision making in early rehabilitation so that interventions are safe, timely and consistent. The NICE Guidelines (2009) and GPICS (2015) both advocate a structured rehabilitation programme that addresses both the physical and psychological needs of the patient using standardised assessment and outcome measures.

Methodology: Plan-Do-Study-Act (PDSA) cycles were used as the quality improvement method in this setting. After reviewing the literature, the participants identified the guidelines devised by Stiller et al (2007) as a protocol that could be trialled in clinical practice.

After trialling these guidelines, the participants felt that they did not fully meet the needs of clinicians and patients at our hospital. We therefore developed our own local, evidence-based critical care rehabilitation guidelines, which incorporate core components from the existing literature. The participants suggested that our guidelines should be flexible, patient-centred and time-efficient, and presented as a user-friendly flow chart, in order to standardise our approach to rehabilitation.

Results: The guidelines have been designed not as a formal protocol but to highlight key factors for physiotherapists to consider when clinically reasoning whether or not a patient is suitable for rehabilitation. Type and duration of exercise are considered, and the physiotherapist is prompted to review the therapeutic intervention and its impact before making future plans.

Discussion: The predominant reflection of physiotherapists on the use of rehabilitation guidelines was that they did not take into account the individual needs of the patient or the psychological benefit that exercise may bring. The project also highlighted the need to review the types and frequency of exercises, and the MDT's understanding of the term "rehabilitation", as differing interpretations often caused conflict between physiotherapists and the wider MDT when deciding treatment plans.

Conclusion: Following this project, the participants concluded that in our clinical setting we were seeking to create Trust critical care rehabilitation guidelines that can act as a reference or teaching aid for all members of the MDT and help guide assessment, clinical decision making, patient-centred care, adherence to guidelines and the choice of rehabilitation options.

EP.021

Outcomes for patients with Guillain-Barré Syndrome transferred to a new weaning and long-term ventilation service in Liverpool, UK

Robert Parker1,2, Verity Ford1, Karen Ward1, Helen Ashcroft1, Nick Duffy1, Biswajit Chakrabarti1 and Robert Angus1

1Liverpool Sleep and Ventilation Centre, Aintree University Hospital, Liverpool, UK

2Critical Care Department, Aintree University Hospital, Liverpool, UK

Abstract

Introduction: In October 2010 the long-term ventilation service in Liverpool began providing support for patients without spinal cord injury who were ventilated via a tracheostomy. The Ventilation Centre (VIC) building work made it possible to care for stable, tracheostomy-ventilated patients away from the ICU. A service has been established to assess, transfer and offer weaning to slow-to-wean patients from the North West and North Wales.

Methods: Data were collected prospectively by two of the authors (VF and RP) over the first five years. Outcomes were assessed in terms of the underlying reason for failure to wean, weaning success, and follow-up to one year. Causes of weaning failure were classified as neuromuscular disease, COPD, obesity, kyphoscoliosis and chest wall deformity, post-surgery and other, which enables comparison with published UK data. Of the first 95 patients transferred for weaning, 10 had Guillain-Barré syndrome (GBS) as the main reason for weaning failure. This group is traditionally considered to have a poor prognosis, and the evidence base for it is limited.

Results: The median age of the GBS patients was 58 years, and 50% were male. All were transferred from a General ICU; the median length of stay in the referring ICU before transfer was 69 days (range 16–265 days). The median VIC length of stay before discharge was 65.5 days (range 18–121 days), including discharge planning. Patients were profoundly weak on admission (mean MRC sum score 22/60). Despite this, all were weaned and decannulated; seven were discharged with long-term nocturnal NIV and three with no support. All patients discharged on NIV were initially issued cough assist devices for home use. Two went directly home and eight to rehabilitation. Nine were alive one year after VIC discharge, and six were living in their own home. No patient required PEG feeding on discharge; all were fed orally. Although four patients were treated for pneumonia on the VIC, none required re-escalation of care to the General ICU.