Abstract

Background

Medical image fusion combines two or more medical images, such as Magnetic Resonance Image (MRI) and Positron Emission Tomography (PET) images, and maps them to a single fused image. The purpose of our study is to help physicians diagnose and treat diseases in the shortest possible time.

Methods

We used Magnetic Resonance Image (MRI) and Positron Emission Tomography (PET) images as inputs and fused them with a combination of the two-dimensional Hilbert transform (2-D HT) and the Intensity Hue Saturation (IHS) method. The evaluation metrics are Discrepancy (Dk), which assesses spectral features, Average Gradient (AGk), which assesses spatial features, and Overall Performance (O.P), which verifies the overall quality of the proposed method.

Results

We used three common evaluation metrics, Average Gradient (AGk), Discrepancy (Dk) and Overall Performance (O.P), to evaluate the performance of our method. The simulated and numerical results show that the proposed method achieves the desired performance.

Conclusions

The main purpose of medical image fusion is to preserve both the spatial and the spectral features of the input images. Based on the numerical results for Average Gradient (AGk), Discrepancy (Dk) and Overall Performance (O.P), and on the simulated results, we conclude that the proposed method preserves both the spatial and the spectral features of the input images.

Keywords: Medical image fusion, Magnetic Resonance Image (MRI), Positron Emission Tomography (PET), Two dimensional Hilbert transform (2-D HT), IHS

At a glance commentary

Scientific background on the subject

The main goal of the proposed method, which combines 2-D HT and the IHS method to fuse PET and MRI images, is to highlight the significant features of the input images and to ignore the uncommon features in the fused image.

What this study adds to the field

The advantage of this method is that it improves the spatial information of the fused image by using the IHS space and improves the spectral information by applying the 2-D HT. The proposed method provides better results, in terms of both visual and quantitative criteria, than the other fusion methods considered in this paper.

Image fusion is the process of integrating two or more images into a single image. Its main purpose is to preserve all of the significant and relevant information in each of the input images. Medical image fusion is one of the most important subcategories of image fusion. In general, multi-modal medical image fusion combines the information from several medical images of a specific organ into a single image, called the fused image, which is more useful than any of the input images alone. Medical imaging techniques are now used extensively by doctors in the diagnosis and treatment of diseases. Because no single imaging modality can show all of the useful information about the organ under study, medical image fusion is needed.

Different medical images have different features. Magnetic Resonance Images (MRI), for example, are produced with a very strong magnetic field and radio waves. These images show the details of internal body structures and give useful information about soft tissues such as the heart, brain tumors, lungs and liver. The main advantages of MRI are that it is non-invasive, does not use ionizing radiation, and creates images with high spatial resolution. It also provides structural information about specific organs. PET images, on the other hand, are based on positron emission. This imaging system provides functional information about the metabolism of specific tissues in addition to anatomical information, which allows physicians to detect diseases and the progression of tumors early. PET images are in color and have rather low spatial resolution [1], [2]. We therefore need a dedicated fusion method that fuses PET and MRI images into a single fused image containing both the spatial and the spectral information.

Material and methods

Medical image fusion methods are commonly implemented at three levels: pixel-level fusion, feature-level fusion and decision-level fusion [3], [4], [5]. In this paper we fuse PET and MRI images at the pixel level. Medical image fusion also draws on various fields such as image processing [6], computer vision [7], pattern recognition [8] and machine learning [1], [2], [9]. Several methods, such as the Brovey Transform (BT), Intensity Hue Saturation (IHS) [10] and Principal Component Analysis (PCA) [11], provide the basis for many common image fusion techniques. These methods are commonly applied to fuse RGB images. Each of them has advantages and disadvantages. Their benefit is that they improve the spatial resolution of the fused image; their main drawback is that they cannot preserve the original chromaticity of the input images. Transform-domain techniques are therefore commonly used for fusing images. These include the discrete wavelet transform (DWT) [12], the curvelet transform (CVT) [13], [14], and the contourlet transform (CT) [13]. Another family of image fusion techniques consists of pyramid methods such as the Gradient pyramid, Gaussian pyramid, FSD pyramid and Laplacian pyramid. In this paper we compare our proposed method with the FSD pyramid and the Gradient pyramid. The FSD pyramid is a computationally efficient variant of the Gaussian pyramid. It is similar to the Laplacian pyramid, but one of the main differences is that the upsampling step is omitted in the FSD pyramid. For this reason we use the FSD pyramid instead of the Laplacian pyramid. The other pyramid method we consider is the Gradient pyramid, which is obtained by applying four directional filters: horizontal, vertical and two diagonal filters [15]. In the remainder of this section we briefly explain the Hilbert transform (HT) and the IHS method, which together form our proposed method.

The Hilbert Transform (HT) is one of the most useful transforms in signal processing. It applies a ±90° phase shift to the input signal, which implies that the Hilbert transform of a cosine function is a sine function [16]. Applying the Hilbert transform (HT) in signal processing involves three steps:

1) Calculating the Fourier transform of the input signal

2) Suppressing the negative frequencies

3) Applying the inverse Fourier transform to obtain the complex-valued function
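The three steps above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: the function name `analytic_signal` is hypothetical, and the positive frequencies are doubled (the usual convention) so that the real part of the result equals the input signal.

```python
import numpy as np

def analytic_signal(s):
    """Analytic signal of a real 1-D signal via the three FFT steps."""
    s = np.asarray(s, dtype=float)
    n = s.size
    spectrum = np.fft.fft(s)              # step 1: Fourier transform

    # Step 2: suppress negative frequencies. DC (and Nyquist, if present)
    # are kept once; positive frequencies are doubled (assumed convention).
    mask = np.zeros(n)
    mask[0] = 1.0
    if n % 2 == 0:
        mask[n // 2] = 1.0
        mask[1:n // 2] = 2.0
    else:
        mask[1:(n + 1) // 2] = 2.0

    return np.fft.ifft(spectrum * mask)   # step 3: inverse transform

t = np.arange(64) / 64.0
s = np.cos(2 * np.pi * 4 * t)
z = analytic_signal(s)
# z.real reproduces the cosine; z.imag is its Hilbert transform (a sine).
```

For the cosine input used here, the imaginary part of `z` is the corresponding sine, which matches the ±90° phase shift described in the text.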

The resulting complex signal is the Analytic Signal (AS): its real part is the original input signal and its imaginary part is the Hilbert transform (HT) of the input signal. Following this procedure, for an input signal S(t) we can write [17], [18]:

| (1) |

where the HT of the signal S(t) is calculated by the convolution:

| (2) |
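For reference, the analytic signal and the Hilbert transform are commonly written in the following standard form. The symbols $S_A(t)$ and $\hat{S}(t)$ are assumed notation and may differ from the notation of Eqs. (1) and (2).

```latex
\begin{align}
S_A(t) &= S(t) + j\,\hat{S}(t), \\
\hat{S}(t) &= S(t) * \frac{1}{\pi t}
            = \frac{1}{\pi}\,\mathrm{p.v.}\!\int_{-\infty}^{\infty} \frac{S(\tau)}{t-\tau}\,d\tau .
\end{align}
```

With this sign convention, the Hilbert transform of $\cos(\omega t)$ is $\sin(\omega t)$ for $\omega > 0$, consistent with the phase shift described earlier.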

Three different mathematical methods for applying the Hilbert Transform (HT) are [19]:

1) Using the Cauchy integral in the complex plane

2) Using the Fourier Transform in the frequency domain

3) Looking at the ±90° phase-shift

In this paper we used the second method for applying the Hilbert Transform (HT). Applying the Fourier Transform to a real signal f(t) yields a complex function with a real and an imaginary part, as in the following equations [19]:

| (3) |

| (4) |

| (5) |

| (6) |

| (7) |

Based on Eqs. (3)–(7), we obtain the final equations for implementing the Hilbert Transform (HT) as follows:

| (8) |

| (9) |

The 2-D Hilbert transform in the spatial domain is given by [20]:

| (10) |

In the frequency domain, the corresponding relation for the 2-D HT is:

| (11) |

The 2-D analytic signal (2-D AS) is given by:

| (12) |

Eqs. (11), (12) can be understood as the multiplication of the transformed image by a suitable mask in the frequency domain. The two-dimensional Hilbert transform (2-D HT) and the Analytic Signal have important applications in different fields of image processing, such as corner detection [16], [20]. AM-FM image models give a detailed description of localized two-dimensional (2-D) frequency modulation (FM) and amplitude modulation (AM). These models are also powerful tools for characterizing and processing textured images [21], [22], for phase congruency calculations [23], for edge detection [24] and for operating on images, since the broadcast bandwidth is efficiently compact.
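The mask interpretation can be sketched as follows. This sketch assumes one common definition of the 2-D HT, the separable (total) transform whose frequency mask is −sgn(u)·sgn(v). Whether this matches the masks of Eqs. (11), (12) exactly is an assumption, and the function name `hilbert2d` is hypothetical.

```python
import numpy as np

def hilbert2d(img):
    """Separable 2-D Hilbert transform via a frequency-domain mask.

    Assumed mask: (-j*sgn(u)) * (-j*sgn(v)) = -sgn(u)*sgn(v).
    """
    img = np.asarray(img, dtype=float)
    rows, cols = img.shape
    # Sign of each discrete frequency along rows (u) and columns (v).
    u = np.sign(np.fft.fftfreq(rows))[:, None]
    v = np.sign(np.fft.fftfreq(cols))[None, :]
    mask = -u * v
    # Multiply the transformed image by the mask, then invert.
    out = np.fft.ifft2(np.fft.fft2(img) * mask)
    # The result is real for real input, up to Nyquist-bin handling
    # and rounding error, so the imaginary residue is discarded.
    return out.real

n = 32
y, x = np.mgrid[0:n, 0:n]
img = np.cos(2 * np.pi * 3 * x / n) * np.cos(2 * np.pi * 5 * y / n)
ht = hilbert2d(img)   # equals sin(2*pi*3*x/n) * sin(2*pi*5*y/n)
```

Under this definition a product of cosines maps to the product of the corresponding sines, the 2-D analogue of the 1-D phase shift.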

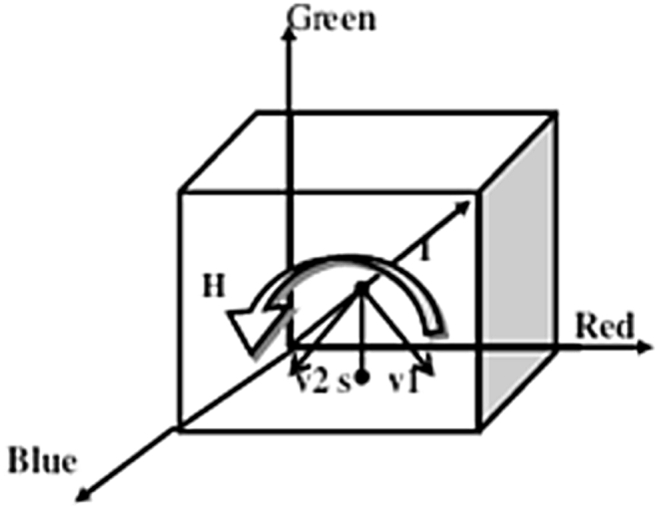

Image fusion based on the IHS (Intensity-Hue-Saturation) technique is one of the most commonly used methods for image sharpening. This color transform has become a standard method in image analysis for color enhancement, feature enhancement and improvement of spatial resolution [25]. One of the main features of the IHS color space is that the spectral information is mostly reflected in the Hue (H) and Saturation (S) components, so changes in the Intensity (I) component have a smaller effect on the perceived color. The IHS space corresponds to the human perception of color. Many IHS transformation procedures have been developed to convert RGB values. The commonly used IHS fusion procedure can be summarized in the three steps below [25]:

1) Converting the three components R, G and B of the input image into the IHS color space

| (13) |

where I is the intensity value, and the variables V1 and V2 are used to represent the hue (H) and saturation (S):

| (14) |

| (15) |

2) Replacing the intensity (I) component, after the transformation, with the other image of higher spatial resolution

3) Returning to RGB space with the original values of Hue (H) and Saturation (S):

| (16) |

In Eq. (16), Rnew, Gnew and Bnew are the red, green and blue components of the fused image. Fig. 1 shows the common cube model of the IHS space, which illustrates how an RGB image is transformed into IHS space.
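The three IHS steps can be sketched with plain matrix multiplication. The forward matrix below is the linear IHS transform commonly used in IHS-based fusion. That it matches Eq. (13) exactly is an assumption, and the names `RGB_TO_IV1V2` and `ihs_substitute` are hypothetical.

```python
import numpy as np

# Assumed linear IHS transform: rows produce I, V1 and V2 from (R, G, B).
RGB_TO_IV1V2 = np.array([
    [1 / 3, 1 / 3, 1 / 3],
    [-np.sqrt(2) / 6, -np.sqrt(2) / 6, 2 * np.sqrt(2) / 6],
    [1 / np.sqrt(2), -1 / np.sqrt(2), 0.0],
])
IV1V2_TO_RGB = np.linalg.inv(RGB_TO_IV1V2)

def ihs_substitute(rgb, new_intensity):
    """Replace the intensity of an (H, W, 3) RGB image, keeping V1 and V2."""
    rgb = np.asarray(rgb, dtype=float)
    ivv = rgb @ RGB_TO_IV1V2.T        # step 1: RGB -> (I, V1, V2)
    ivv[..., 0] = new_intensity       # step 2: swap in the new intensity
    return ivv @ IV1V2_TO_RGB.T       # step 3: back to RGB

rng = np.random.default_rng(0)
pet_rgb = rng.random((4, 4, 3))       # stand-in for a color PET image
new_i = rng.random((4, 4))            # stand-in for the replacement intensity
fused = ihs_substitute(pet_rgb, new_i)
```

Because hue and saturation are functions of V1 and V2 only, leaving those two channels untouched keeps the original H and S while the intensity is replaced.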

Fig. 1.

The color cube model of IHS space [25].

Because the IHS method is very simple and is carried out by matrix multiplication, it can be employed even in real-time systems [26].

In this study, PET and MRI brain images were used to apply the proposed method. All of the input images have the same size, 256×256. All of them were preprocessed and registered before the study. Color images such as PET images can be represented in several color spaces, such as RGB, IHS and HSV. Although our method applies to both the RGB and IHS representations, we display PET images in RGB space and use the RGB-to-IHS algorithm within the proposed method. The proposed method was implemented in MATLAB. To fuse PET and MRI input images, we first describe how the two-dimensional Hilbert transform (2-D HT) is applied to the MRI images. This process is summarized in the following steps:

Step 1. Applying the two-dimensional Hilbert transform to the brain MRI images by means of the Fourier transform, as described in the section Material and methods, to obtain the set of coefficients of this transform.

Step 2. Multiplying the Fourier transform of the input MRI image by a suitable mask.

Step 3. Applying an appropriate fusion rule to combine the two-dimensional Hilbert transform (2-D HT) coefficients of the input images. Three common fusion rules, from which the best rule is selected, are as follows:

| (17) |

| (18) |

| (19) |

In these rules, the two operands are the two-dimensional Hilbert transform (2-D HT) coefficients of the MRI image and of the PET image, respectively. In this paper we use the maximum fusion rule to fuse PET and MRI images based on the combination of the two-dimensional Hilbert transform (2-D HT) and the IHS method.
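The maximum rule named above can be sketched as an element-wise selection between the two coefficient arrays. The text does not state whether the comparison is made on raw values or on magnitudes. This sketch assumes magnitudes, and the function name `fuse_max` is hypothetical.

```python
import numpy as np

def fuse_max(coeff_mri, coeff_pet):
    """At each position keep the coefficient with the larger magnitude.

    Comparing magnitudes rather than signed values is an assumption.
    Ties are resolved in favor of the MRI coefficient.
    """
    a = np.asarray(coeff_mri)
    b = np.asarray(coeff_pet)
    if a.shape != b.shape:
        raise ValueError("coefficient arrays must have the same shape")
    return np.where(np.abs(a) >= np.abs(b), a, b)

mri = np.array([[1.0, -5.0], [2.0, 0.0]])
pet = np.array([[-3.0, 4.0], [2.0, -1.0]])
fused = fuse_max(mri, pet)   # [[-3., -5.], [2., -1.]]
```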

Step 4. Reconstructing the fused image by applying the inverse two-dimensional Hilbert transform (2-D HT) to the combined coefficients obtained in Step 3.

Applying the proposed method to a color input image in RGB space, such as a PET image, is summarized in five levels as follows:

Level 1. Using the RGB-to-IHS algorithm to convert the color PET image from RGB space to IHS space.

Level 2. Separating the intensity component (I) of the PET image in the IHS domain. The hue (H) and saturation (S) of the PET image in IHS space are kept unchanged.

Level 3. Applying the two-dimensional Hilbert transform (2-D HT) only to the intensity component (I) of the PET image. The procedure is the same as the one described above for the MRI image.

Level 4. Using the inverse two-dimensional Hilbert transform (2-D HT) to obtain the new intensity component.

Level 5. Representing the fused image in RGB space by using the IHS-to-RGB algorithm.

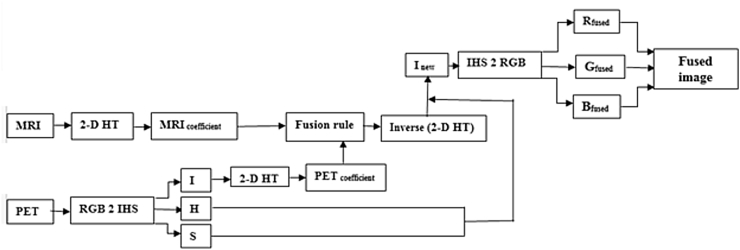

The block diagram of PET and MRI image fusion with the proposed method, the combination of the two-dimensional Hilbert transform (2-D HT) and the IHS method, is shown in Fig. 2.

Fig. 2.

Block diagram of the proposed method (combination of 2-D HT and IHS).

Results

To evaluate the proposed method we use two quantitative evaluation metrics, Average Gradient (AGk) and Discrepancy (Dk). The final results are obtained by calculating the Overall Performance (O.P) as a trade-off between Average Gradient (AGk) and Discrepancy (Dk) [27].

Average Gradient (AGk) is a criterion that assesses how well the fused image preserves the spatial quality of the input images. It also reflects the clarity of the fused image: a larger average gradient means a higher spatial resolution. The spatial quality of an M×N fused image can be measured by the Average Gradient (AGk) in each band k, which is calculated as follows:

| (20) |
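A minimal sketch of the Average Gradient for one band follows. It assumes the widely used definition, the mean over (M−1)×(N−1) positions of sqrt((Δx² + Δy²)/2) with forward differences. That this is exactly the form of Eq. (20) is an assumption, and the function name `average_gradient` is hypothetical.

```python
import numpy as np

def average_gradient(band):
    """Average Gradient of one M x N band (assumed standard definition)."""
    f = np.asarray(band, dtype=float)
    # Forward differences on the common (M-1) x (N-1) grid.
    dx = f[:-1, 1:] - f[:-1, :-1]   # difference along columns
    dy = f[1:, :-1] - f[:-1, :-1]   # difference along rows
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))

flat = np.full((4, 5), 7.0)                 # no detail at all
ramp = np.tile(np.arange(5.0), (4, 1))      # unit slope along columns
ag_flat = average_gradient(flat)            # 0.0
ag_ramp = average_gradient(ramp)            # sqrt(1/2)
```

A constant image has zero average gradient, and sharper images give larger values, in line with the interpretation given in the text.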

Discrepancy (Dk) measures how well the fused image retains the spectral features of the input images. A lower value of Discrepancy (Dk) indicates better preservation of the spectral features. This criterion is given by:

| (21) |

where Fk(x,y) and Lk(x,y) are the pixel values of the fused image and of the input image, respectively, in band k at position (x,y), and M×N is the size of both the input images and the fused image, here 256×256.
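The Discrepancy for one band can be sketched as the mean absolute difference between the fused band and the input band over all M×N pixels. This form is an assumption based on the definitions of Fk, Lk and M×N given above, and the function name `discrepancy` is hypothetical.

```python
import numpy as np

def discrepancy(fused_band, input_band):
    """Mean absolute difference between Fk and Lk (assumed form of Dk)."""
    f = np.asarray(fused_band, dtype=float)
    l = np.asarray(input_band, dtype=float)
    if f.shape != l.shape:
        raise ValueError("bands must have the same M x N size")
    return float(np.mean(np.abs(f - l)))

fused = np.array([[10.0, 12.0], [8.0, 10.0]])
original = np.array([[10.0, 10.0], [10.0, 10.0]])
d = discrepancy(fused, original)   # (0 + 2 + 2 + 0) / 4 = 1.0
```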

Finally, Overall Performance (O.P) is calculated as the difference between the Average Gradient (AGk) and the Discrepancy (Dk). A smaller Overall Performance (O.P) means a higher overall fusion quality.

| (22) |

Numerical results based on Average Gradient (AGK), Discrepancy (DK) and Overall Performance (O.P) are given in Table 1.

Table 1.

Numerical comparison of the proposed method (2-D HT and IHS) with other fusion methods based on Average Gradient (AGk), Discrepancy (Dk) and Overall Performance (O.P).

| Method | Dk | AGk | O.P |
|---|---|---|---|
| IHS | 14.773 | 5.171 | 9.61 |
| Proposed method (2-D HT and IHS) | 12.826 | 5.227 | 7.568 |
| Gradient pyramid | 15.941 | 4.664 | 11.249 |
| FSD pyramid | 16.095 | 4.728 | 11.367 |
| 2-D HT | 20.285 | 4.891 | 15.189 |
| Haar wavelet | 13.326 | 5.194 | 8.311 |
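As a quick check of the ranking implied by Table 1, the reported values can be sorted by Overall Performance (lower is better). The numbers are copied from the table. The dictionary name `table1` and the variable names are hypothetical.

```python
# (Dk, AGk, O.P) for each method, as reported in Table 1.
table1 = {
    "IHS": (14.773, 5.171, 9.61),
    "Proposed method (2-D HT and IHS)": (12.826, 5.227, 7.568),
    "Gradient pyramid": (15.941, 4.664, 11.249),
    "FSD pyramid": (16.095, 4.728, 11.367),
    "2-D HT": (20.285, 4.891, 15.189),
    "Haar wavelet": (13.326, 5.194, 8.311),
}

# Rank methods from best (lowest O.P) to worst (highest O.P).
ranked_by_op = sorted(table1, key=lambda name: table1[name][2])
lowest_dk = min(table1, key=lambda name: table1[name][0])
highest_agk = max(table1, key=lambda name: table1[name][1])

for name in ranked_by_op:
    dk, agk, op = table1[name]
    print(f"{name:34s} Dk={dk:7.3f}  AGk={agk:6.3f}  O.P={op:7.3f}")
```

On these values the proposed method ranks first by O.P and also has the lowest Dk and the highest AGk, followed by the Haar wavelet and IHS methods.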

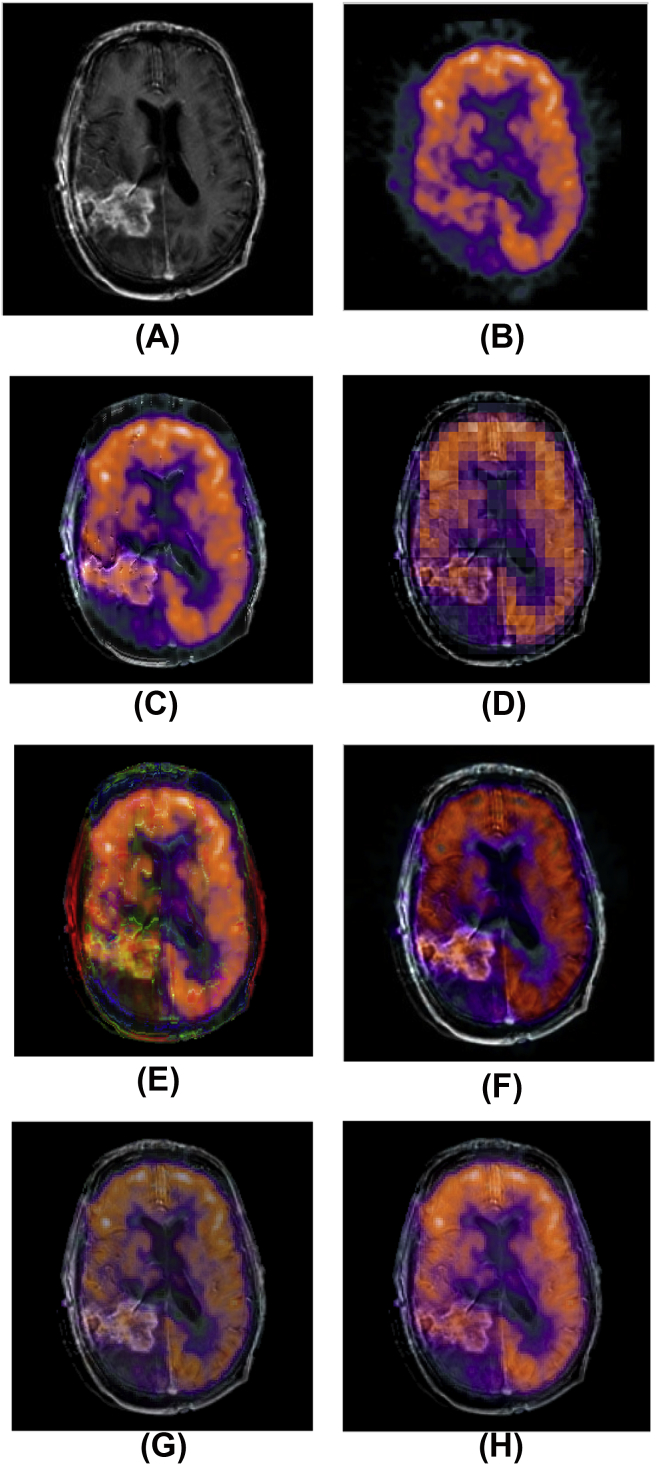

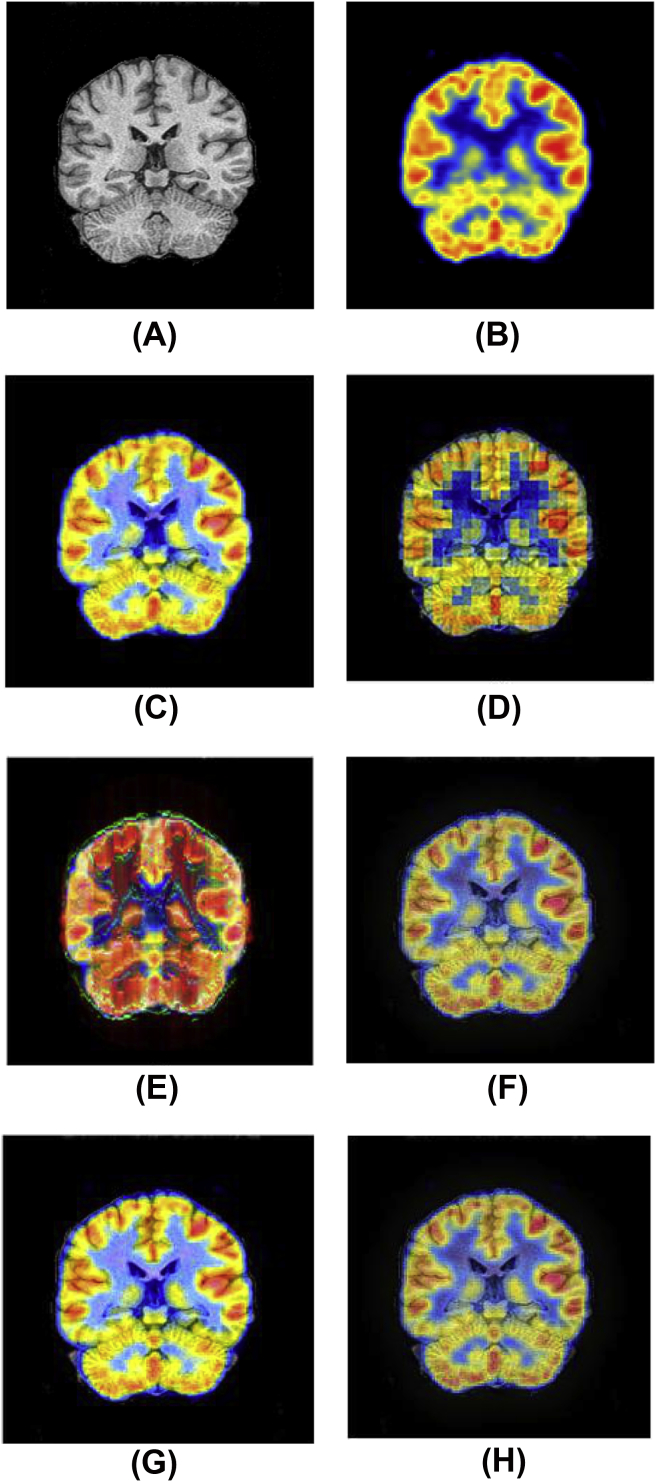

The simulated results of our method are compared with those of five other fusion methods (IHS, FSD pyramid, Gradient pyramid, two-dimensional Hilbert transform (2-D HT) and Haar wavelet) in Fig. 3, Fig. 4.

Fig. 3.

(A) Brain MRI image with tumor. (B) Brain PET image with tumor. (C) Fused image using proposed method (2-D HT and IHS). (D) Fused image using Haar wavelet. (E) Fused image using 2-D HT. (F) Fused image using IHS. (G) Fused image using FSD pyramid. (H) Fused image using Gradient pyramid.

Fig. 4.

(A) Normal brain MRI image. (B) Normal PET image. (C) Fused image using proposed method (2-D HT and IHS). (D) Fused image using Haar wavelet. (E) Fused image using 2-D HT. (F) Fused image using FSD pyramid. (G) Fused image using IHS method. (H) Fused image using Gradient pyramid.

Fig. 3(A) and (B) are the MRI and PET brain images with a tumor, and Fig. 3(C–H) are the fused images produced by the proposed method (combination of 2-D HT and IHS) and the five comparison methods, as labeled in the figure caption. Likewise, Fig. 4(A) and (B) are the normal brain MRI and PET images, and Fig. 4(C–H) are, respectively, the fused images produced by the proposed method (combination of 2-D HT and IHS), Haar wavelet, 2-D HT, FSD pyramid, IHS method and Gradient pyramid.

Discussion

In this paper we proposed a new image fusion method, based on the combination of the two-dimensional Hilbert transform (2-D HT) and the IHS method, and used it to fuse nine sets of PET and MRI brain images, both normal and with tumors. The simulated fusion results are shown in Fig. 3, Fig. 4. To confirm the performance of the proposed method, we compared it with five other common image fusion methods: IHS, FSD pyramid, Gradient pyramid, two-dimensional Hilbert transform (2-D HT) and Haar wavelet. Fusion algorithms are commonly assessed by two kinds of analysis, qualitative and quantitative [28]. Because we used both normal brain PET and MRI images and brain images with tumors, the quality evaluation of the fused image plays a very important role: the higher the quality of the fused image, the more easily doctors can locate brain tumors and treat the disease in the shortest possible time. The fusion results in Fig. 3, Fig. 4 show that our method preserves the spatial information of the MRI image and the spectral features of the PET image better than the other fusion methods mentioned above. The second kind of evaluation is quantitative analysis, which is based on mathematical equations. In this paper we used two such metrics, Average Gradient (AGk) and Discrepancy (Dk), together with Overall Performance (O.P), which links the two. Although the fused images produced by the FSD pyramid and the Gradient pyramid in Fig. 3, Fig. 4 look similar, and those of our proposed method and the IHS method are partly similar, their quantitative results differ.

The best fusion method is the one that gives the best qualitative and quantitative fusion results simultaneously. Because our proposed method combines the IHS method and the two-dimensional Hilbert transform (2-D HT), we expect it to perform better than either the IHS method or the two-dimensional Hilbert transform (2-D HT) alone in terms of both qualitative and quantitative assessment criteria. The quantitative results in Table 1 show that the proposed method performs better than the other fusion methods considered in this paper: it has the lowest Overall Performance (O.P = 7.568), the lowest Discrepancy (Dk = 12.826) and the highest Average Gradient (AGk = 5.227).

Conclusion

In this paper we applied a combination of the IHS method and the 2-D Hilbert transform to fuse nine sets of MRI and PET images. Based on the quantitative assessment criteria listed in Table 1 and the simulated results in Fig. 3, Fig. 4, the proposed method has the minimum Discrepancy (Dk), which means that it preserves the spectral features, and a good Average Gradient (AGk), which means that the spatial features are preserved as well. The Overall Performance (O.P) of the combination of the two-dimensional Hilbert transform (2-D HT) and the IHS method is also the lowest. Because O.P is the trade-off between spectral and spatial features, these results support the desirable performance of the proposed method.

Conflicts of interest

The authors declare no conflicts of interest.

Footnotes

Peer review under responsibility of Chang Gung University.

References

- 1. James A.P., Dasarathy B.V. Medical image fusion: a survey of the state of the art. Inf Fusion. 2014;19:4–19.

- 2. Shen R., Cheng I., Basu A. Cross-scale coefficient selection for volumetric medical image fusion. IEEE Trans Biomed Eng. 2013;60:1069–1079. doi: 10.1109/TBME.2012.2211017.

- 3. Piella G. A general framework for multiresolution image fusion: from pixels to regions. Inf Fusion. 2003;4:259–280.

- 4. Calhoun V.D., Adal T. Feature-based fusion of medical imaging data. IEEE Trans Inf Technol Biomed. 2009;13:711–720. doi: 10.1109/TITB.2008.923773.

- 5. Madabhushi A., Agner S., Basavanhally A., Doyle S., Lee G. Computer-aided prognosis: predicting patient and disease outcome via quantitative fusion of multi-scale, multi-modal data. Comput Med Imaging Graph. 2011;35:506–514. doi: 10.1016/j.compmedimag.2011.01.008.

- 6. Rajalakshmi T., Prince S. Retinal model-based visual perception: applied for medical image processing. Biol Inspired Cogn Archit. 2016;18:95–104.

- 7. Peng G., Zhang Z., Li W. Computer vision algorithm for measurement and inspection of O-rings. Measurement. 2016;94:828–836.

- 8. Brown G.D. Dectin-1: a signalling non-TLR pattern-recognition receptor. Nat Rev Immunol. 2006;6:33–43. doi: 10.1038/nri1745.

- 9. Kotsavasiloglou C., Kostikis N., Hristu-Varsakelis D., Arnaoutoglou M. Machine learning-based classification of simple drawing movements in Parkinson's disease. Biomed Signal Process Control. 2017;31:174–180.

- 10. Daneshvar S., Ghassemian H. MRI and PET image fusion by combining IHS and retina-inspired models. Inf Fusion. 2010;11:114–123.

- 11. He C., Liu Q., Li H., Wang H. Multimodal medical image fusion based on IHS and PCA. Procedia Eng. 2010;7:280–285.

- 12. Bhavana V., Krishnappa H. Multi-modality medical image fusion using discrete wavelet transform. Procedia Comput Sci. 2015;70:625–631.

- 13. Choi Y., Sharifahmadian E., Latifi S. Quality assessment of image fusion methods in transform domain. Int J Inf Theory. 2014;3:7–18.

- 14. Candès E., Demanet L., Donoho D., Ying L. Fast discrete curvelet transforms. Multiscale Model Simul. 2006;5:861–899.

- 15. Xu H., Wang Y., Wu Y., Qian Y. Infrared and multi-type images fusion algorithm based on contrast pyramid transform. Infrared Phys Technol. 2016;78:133–146.

- 16. Singh A. Survey paper on Hilbert transform with its applications in signal processing. Int J Comput Sci Inf Technol. 2014;5:3880–3882.

- 17. Lorenzo-Ginori J.V. International conference image analysis and recognition. Springer; New York: 2007. An approach to the 2D Hilbert transform for image processing applications; pp. 157–165.

- 18. Wang S., Yan K., Xue L. Quantitative interferometric microscopy with two dimensional Hilbert transform based phase retrieval method. Opt Commun. 2017;383:537–544.

- 19. Kschischang F.R. The Hilbert transform. 2006;83:277–285. University of Toronto.

- 20. Kohlmann K. Corner detection in natural images based on the 2-D Hilbert transform. Signal Process. 1996;48:225–234.

- 21. Havlicek J., Tay P., Bovik A. Handbook of image and video processing. Elsevier Inc.; 2005. AM-FM image models: fundamental techniques and emerging trends; pp. 377–395.

- 22. Havlicek J.P., Havlicek J.W., Mamuya N.D., Bovik A.C. Image processing, 1998. ICIP 98. Proceedings. 1998 International conference on. 1. IEEE. 1998. Skewed 2D Hilbert transforms and computed AM-FM models; pp. 602–606.

- 23. Zhou Y., Kwong S., Gao W., Wang X. A phase congruency based patch evaluator for complexity reduction in multi-dictionary based single-image super-resolution. Inf Sci. 2016;367–8:337–353.

- 24. Pei S.C., Ding J.J. Acoustics, speech, and signal processing, 2003. Proceedings. (ICASSP'03). 2003 IEEE international conference on. 3. IEEE. 2003. The generalized radial Hilbert transform and its applications to 2D edge detection (Any direction or specified directions); pp. 357–360.

- 25. Al-Wassai F.A., Kalyankar N., Al-Zuky A.A. 2011. The IHS transformations based image fusion. arXiv Preprint arXiv:11074396.

- 26. Huang Q., Zhao Z., Gao Q., Meng Y., Ma J., Chen J. Improved fusion method for remote sensing imagery using NSCT and extend IHS. IFAC-PapersOnLine. 2015;48:64–69.

- 27. Li Z., Jing Z., Yang X., Sun S. Color transfer based remote sensing image fusion using non-separable wavelet frame transform. Pattern Recognit Lett. 2005;26:2006–2014.

- 28. Jagalingam P., Hegde A.V. A review of quality metrics for fused image. Aquat Procedia. 2015;4:133–142.