Abstract

Recent theories assert that visual working memory (WM) relies upon the same attentional resources and sensory substrates as visual attention to external stimuli. Behavioral studies have observed competitive tradeoffs between internal (i.e., WM) and external (i.e., visual) attentional demands, and neuroimaging studies have revealed representations of WM content as distributed patterns of activity within the same cortical regions engaged by perception of that content. Although a key function of WM is to protect memoranda from competing input, it remains unknown how neural representations of WM content are impacted by incoming sensory stimuli and concurrent attentional demands. Here, we investigated how neural evidence for WM information is affected when attention is occupied by visual search—at varying levels of difficulty—during the delay interval of a WM match-to-sample task. Behavioral and functional magnetic resonance imaging (fMRI) analyses suggested that WM maintenance was impacted by the difficulty of a concurrent visual task. Critically, multivariate classification analyses of category-specific ventral visual areas revealed a reduction in decodable WM-related information when attention was diverted to a visual search task, especially when the search was more difficult. This study suggests that the amount of available attention during WM maintenance influences the detection of sensory WM representations.

Keywords: dual-task, multivariate pattern analysis, visual short-term memory, visual search, working memory decoding

We are constantly called upon to maintain information temporarily in mind, but this working memory (WM) must also operate in the face of immediate and variable demands for our attention in the environment (e.g., rehearsing a shopping list while navigating heavy traffic). While attention has typically been described as the selective processing of information that is currently available to the senses—and WM conversely acts on information unavailable to the senses—a large body of evidence indicates that demands on WM and attention reciprocally influence one another (Awh & Jonides, 2001; Awh, Vogel, & Oh, 2006; Gazzaley & Nobre, 2012), and engage many of the same brain regions (Ikkai & Curtis, 2011; Jerde, Merriam, Riggall, Hedges, & Curtis, 2012; LaBar, Gitelman, Parrish, & Mesulam, 1999; Mayer et al., 2007; Nee & Jonides, 2009; Nobre et al., 2004). This has encouraged the reconceptualization of WM as internally-oriented attention that endogenously activates perceptual representations in much the same way as attention to external stimuli would (Chun, 2011; Chun & Johnson, 2011; D’Esposito & Postle, 2015; Kiyonaga & Egner, 2013). Accordingly, WM-related sustained increases in mean neural population activity, as indexed by univariate fMRI signal (in dorsolateral prefrontal cortex, for instance), were once assumed to represent the information being held in WM; however, many now consider those responses to reflect attentional control over sensory regions that represent the information content itself (Lara & Wallis, 2015; Postle, 2015; Sreenivasan, Curtis, & D’Esposito, 2014). In other words, attention is recruited to activate sensory representations for the purpose of WM.

Recent multivariate neural evidence also supports this “sensory recruitment” model of WM, whereby short-term representations are maintained via distributed patterns of activity within the same sensory cortical regions engaged by perceptual attention toward that content (e.g., area MT for memory of moving dot arrays; Riggall & Postle, 2012). The orientation of a Gabor grating maintained in WM, for instance, can be successfully decoded or reconstructed based on early visual cortex activity patterns derived from actually perceiving oriented stimuli (Albers, Kok, Toni, Dijkerman, & de Lange, 2013; Ester, Anderson, Serences, & Awh, 2013; Harrison & Tong, 2009; Serences, Ester, Vogel, & Awh, 2009). Conversely, perceived spatial locations can also be decoded from parietal cortex based on activity patterns derived from spatial WM maintenance (Jerde et al., 2012), providing further evidence for overlap in the representational codes for perception and WM. If WM content is indeed maintained in sensory cortices, via attention-dependent activation, a critical question is: What happens to such internally-attended information in the face of incoming sensory input and concurrent attentional demands? Behavioral studies have shown that WM often suffers when attention is otherwise occupied during the WM delay (e.g., Fougnie & Marois, 2009), and the extent of that impairment scales with the time-consumption of the intervening task (Barrouillet, Portrat, & Camos, 2011). Here, we employed fMRI to determine how this competition between internal and external attentional demands impacts the patterns of neural activation associated with sensory representations of WM content.

Many behavioral studies suggest that concurrent attentional demands can alter the “activation status” of a WM representation, relegating it to a distinct format outside of an internal focus of attention (i.e., “silent coding,” Stokes, 2015), into which it can be reinstated when it becomes task-relevant again (Gunseli, Olivers, & Meeter, 2015; Kiyonaga & Egner, 2014; Kiyonaga, Egner, & Soto, 2012; Olivers, Peters, Houtkamp, & Roelfsema, 2011; van Moorselaar, Olivers, Theeuwes, Lamme, & Sligte, 2015). Correspondingly, the active neural trace of a WM representation—as detected by multivariate pattern analyses—is modulated by internal shifts of attention across a trial; immediately task-relevant representations elicit measurable neural signatures, while evidence for task-irrelevant memory representations is degraded (LaRocque, Lewis-Peacock, Drysdale, Oberauer, & Postle, 2012; LaRocque, Riggall, Emrich, & Postle, 2016; Lewis-Peacock, Drysdale, Oberauer, & Postle, 2012; Rose et al., 2016; Sprague, Ester, & Serences, 2016).

If activation in visual WM occurs by directing attention internally toward perceptual representations, then directing attention outwardly toward a visual task should similarly modulate WM representational information, and neural activation patterns in regions that represent the WM content should become less discriminable. Here, we used multivariate pattern classification of fMRI data to investigate whether WM category decoding is impacted when attention is occupied by visual search—at varying levels of difficulty—during the delay interval of a WM match-to-sample task. If WM and visual search both rely on attention, neural evidence for WM category representations should be degraded during visual search, and that degradation should be even more pronounced when a more difficult visual search condition diverts attention away from WM maintenance for a longer period of time.

Materials and Methods

Participants

Thirty healthy volunteers gave written informed consent to participate in accordance with the Duke University Institutional Review Board. All participants were fluent in English, reported normal or corrected-to-normal vision, and were compensated $20 per hour for their participation. Two participants were excluded for missing data, leaving 28 participants in the final analyses (16 male; mean age: 30; range 18–45).

Design

The experimental protocol was designed to independently vary “internal” (i.e., WM) and “external” (i.e., visual) attentional load in a fully balanced 2 (WM load: 1 item vs. 2) × 2 (visual search difficulty: easy vs. hard) factorial design. The task comprised a delayed match-to-sample WM test, with a sequence of delay-spanning visual searches (Figure 1a). We employed WM sample stimuli with known cortical sensitivities (i.e., faces and houses), so that we could examine the discriminability of visual cortical WM representations, via classifiers trained on the WM category, in the fusiform face (FFA; Kanwisher, McDermott, & Chun, 1997) and parahippocampal place areas (PPA; Epstein & Kanwisher, 1998).

Figure 1.

Behavioral task design. a) During the delay interval of a match-to-sample working memory (WM) task, participants completed a series of four visual searches. b) WM load conditions: Participants maintained either 1 or 2 face or house stimuli in WM. c) Visual search load conditions: Participants searched for the vertical body or tool target amongst horizontal (easy) or tilted (hard) distractors.

Across different trials, participants had to maintain either one (low WM load) or two (high WM load) faces or houses for a later memory probe (Figure 1b). During the WM delay, participants performed a series of four visual searches for a perfectly vertical target stimulus among horizontal (easy search) or slightly tilted (hard search) distractors (Figure 1c). We borrowed this attentional manipulation approach from the time-based resource-sharing model of WM storage and processing, whereby a harder visual search task should occupy attention—that would otherwise be dedicated to WM maintenance—for a longer period of time (Barrouillet et al., 2011). Our main analyses focus on this search epoch of the trial, as we wanted to characterize how WM would be impacted by this secondary demand. In order to produce a balanced design, wherein WM category classification would be uncontaminated by overlapping visual input, visual search stimuli were either bodies (which have been shown to preferentially recruit the extrastriate body area [EBA; Downing, Jiang, Shuman, & Kanwisher, 2001]) or tools (which have been shown to recruit lateral occipitotemporal cortex; Chao, Haxby, & Martin, 1999). The design thus produced four main conditions: Low WM/Easy Search, Low WM/Hard Search, High WM/Easy Search, and High WM/Hard Search.
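The factorial structure described above can be sketched by enumerating the factor levels. This is only an illustration: the variable names and dictionary keys are hypothetical, and the sketch makes no claim about how trials were actually assigned to runs.

```python
from itertools import product

# Factor levels taken from the design description.
WM_LOAD = ("low", "high")              # 1 item vs. 2 items in WM
SEARCH_DIFFICULTY = ("easy", "hard")   # horizontal vs. slightly tilted distractors
WM_CATEGORY = ("face", "house")        # category of the WM sample
SEARCH_CATEGORY = ("body", "tool")     # category of the search stimuli

# The four main conditions of the 2 x 2 design.
main_conditions = list(product(WM_LOAD, SEARCH_DIFFICULTY))

# Crossing the main conditions with both stimulus categories gives every cell.
# The dictionary keys are illustrative names, not taken from the study.
cells = [
    {"wm_load": wl, "search": sd, "wm_category": wc, "search_category": sc}
    for wl, sd, wc, sc in product(
        WM_LOAD, SEARCH_DIFFICULTY, WM_CATEGORY, SEARCH_CATEGORY
    )
]

for wl, sd in main_conditions:
    print(f"{wl.capitalize()} WM/{sd.capitalize()} Search")
print(len(cells), "cells when stimulus categories are included")
```

Crossing all four factors yields 16 cells, which collapse to the four main conditions when stimulus category is ignored.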

Dual-task WM/Visual Search Procedure

The task was programmed and presented in Matlab (Mathworks Inc., Natick, MA) using the Psychophysics Toolbox extensions (Brainard, 1997). Face stimuli were 144 trial-unique grayscale images of male and female faces, drawn from several databases (Endl et al., 1998; Kanade, Cohn, & Tian, 2000; Lundqvist, Flykt, & Ohman, 1998; Minear & Park, 2004; Oosterhof & Todorov, 2008; Tottenham et al., 2009), and cropped to include only the “eye and mouth” region. House stimuli were 144 trial-unique grayscale exterior images drawn from local real estate websites. Visual search stimuli were 16 male and female bodies, with heads cropped (Downing et al., 2001), and 16 tools (hammers and wrenches) drawn from freely available online sources. Stimuli were displayed on a back-projection screen against a neutral grey background (RGB: 128 128 128), and viewed through a mirror mounted on the head coil, simulating a viewing distance of approximately 80 cm. Behavioral responses were executed with the left and right hands on MRI-compatible response boxes.

Each trial began with a variable inter-trial interval, followed by the WM sample for 2 s. Low load WM samples consisted of a single, centrally-presented face or house. High load WM samples consisted of either two faces or two houses presented side-by-side. After a variable inter-stimulus interval, a series of four visual search displays appeared for 1.5 s each, separated by 500 ms fixation intervals, producing a search sequence lasting 8 s in total. Each search array comprised four stimuli (either all tool or all body images) at the corners of an imaginary square. In all conditions, the target stimulus was perfectly vertical, while three distractors were tilted to the left or right. The task was to indicate whether the target stimulus was oriented right-side up or upside down. For easy search trials, the distractors were perfectly horizontal (i.e., tilted 90° to the left or right), making them easily discriminable from the vertical target. For hard search trials, on the other hand, distractors were slanted only 15° to the left or right, making their orientation less discriminable from the vertical target (Treisman & Gelade, 1980). Importantly, the type and number of stimuli were identical for easy and hard searches, equating the amount of perceptual input across all conditions—only the orientation difference between the target and distractor stimuli varied, serving as the manipulation of search difficulty. All searches within a given trial were of the same difficulty level. The search sequence was followed by a variable inter-stimulus interval, then a WM probe for 3 s. Participants were asked to rate their confidence, on a 4-point scale, that a single WM probe item was either a match (50% of trials) or non-match to an item from the WM sample set. Underneath the probe image, a visual guide instructed which finger of the left hand should be used to indicate a response of either “Definitely the same”, “Maybe the same”, “Maybe different”, or “Definitely different”.
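The timing of the search sequence can be checked with a short sketch. It assumes that every one of the four displays, including the last, is followed by a 500 ms fixation, which is one reading that yields the stated 8 s total. The function and constant names are hypothetical.

```python
SEARCH_DISPLAY_S = 1.5   # duration of each search array (from the text)
FIXATION_S = 0.5         # fixation interval between arrays (from the text)
N_SEARCHES = 4           # searches per trial (from the text)


def search_onsets(sequence_start=0.0):
    """Return the onset (s) of each search display relative to sequence_start.

    ASSUMPTION: each display is followed by one fixation interval, so
    consecutive onsets are SEARCH_DISPLAY_S + FIXATION_S apart.
    """
    step = SEARCH_DISPLAY_S + FIXATION_S
    return [sequence_start + i * step for i in range(N_SEARCHES)]


onsets = search_onsets()
sequence_duration = N_SEARCHES * (SEARCH_DISPLAY_S + FIXATION_S)

print(onsets)             # [0.0, 2.0, 4.0, 6.0]
print(sequence_duration)  # 8.0
```

With a 2 s spacing, each search display falls at the start of one 2 s interval of the sequence.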

WM samples were selected in random order and never repeated across the experiment, except as matching probes. Visual search stimuli, locations, and orientations were also selected in random order on every trial, but could repeat across trials. The durations of all inter-trial intervals, as well as pre- and post-search inter-stimulus intervals, were jittered between 2.5 and 5 s (step size = 500 ms), selected at random from a pseudo-exponential distribution (Dale, 1999), and counterbalanced to equate the length of all runs. Therefore, the onset of the visual search series was unpredictable, and the total length of individual trials could vary, but the duration of the search series was held constant at 8 s for all trials. Participants completed a practice run of 16 trials outside of the scanner, then nine experimental runs inside the scanner (each comprising 16 trials), for a total of 144 trials. All trial conditions occurred equally often, and in random order, both within and across runs.
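One way to draw jittered intervals of this kind is sketched here. The weighting is an assumption: the text specifies only the range, the step size, and that the distribution was pseudo-exponential, so the halving weights are purely illustrative. The sketch also omits the counterbalancing that equated run lengths.

```python
import random

# Possible interval durations: 2.5 to 5 s in 0.5 s steps (from the text).
JITTER_VALUES = [2.5, 3.0, 3.5, 4.0, 4.5, 5.0]

# ASSUMPTION: the weight halves with each step, so short intervals are the
# most frequent. The actual weights used in the study are not reported here.
JITTER_WEIGHTS = [2.0 ** -i for i in range(len(JITTER_VALUES))]


def sample_jitter(rng):
    """Draw one interval duration (s) from the weighted discrete set."""
    return rng.choices(JITTER_VALUES, weights=JITTER_WEIGHTS, k=1)[0]


rng = random.Random(0)  # fixed seed so the sketch is reproducible

N_RUNS, TRIALS_PER_RUN = 9, 16
total_trials = N_RUNS * TRIALS_PER_RUN

# Three jittered intervals per trial: inter-trial, pre-search, post-search.
jitters = [[sample_jitter(rng) for _ in range(3)] for _ in range(total_trials)]

print(total_trials)  # 144
print(jitters[0])
```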

Functional Localizer Procedure

Participants also completed a functional localizer task to define cortical regions of interest (ROIs) that preferred each of the WM and visual search stimulus categories (i.e., faces, houses, bodies, and tools). Each stimulus category was presented in separate blocks; each block entailed a series of 15 images, centrally-presented for 750 ms, and separated by a 250 ms fixation. Participants were asked to make a button response to direct repetitions of a specific stimulus (i.e., 1-back task). The run comprised 16 blocks (4 of each condition) which were separated by 10 s inter-block intervals and occurred in random order.
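The block structure of the localizer can be laid out as follows. The sketch assumes that each block is followed by exactly one 10 s inter-block interval and that the run starts with a block; neither detail is stated in the text. Names are hypothetical.

```python
import random

CATEGORIES = ["face", "house", "body", "tool"]
BLOCKS_PER_CATEGORY = 4
IMAGES_PER_BLOCK = 15
IMAGE_S, FIXATION_S = 0.75, 0.25   # image and fixation durations (from the text)
INTER_BLOCK_S = 10.0               # inter-block interval (from the text)

# 15 images x (750 ms + 250 ms) gives the block duration in seconds.
block_duration = IMAGES_PER_BLOCK * (IMAGE_S + FIXATION_S)

# Random block order with each category appearing four times.
rng = random.Random(0)
block_order = CATEGORIES * BLOCKS_PER_CATEGORY
rng.shuffle(block_order)

# ASSUMPTION: the run begins with a block, and each block is followed by one
# inter-block interval.
block_onsets = [i * (block_duration + INTER_BLOCK_S) for i in range(len(block_order))]

print(block_duration)  # 15.0
for onset, category in zip(block_onsets[:4], block_order[:4]):
    print(onset, category)
```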

Image Acquisition

Functional data were recorded on a 3.0 tesla GE MR750 scanner, using a gradient-echo, T2*-weighted multi-phase echoplanar imaging (EPI) sequence. Forty contiguous axial slices were acquired in interleaved order, parallel to the anterior-posterior commissure (AC-PC) plane (voxel size: 3 × 3 × 3 mm; repetition time [TR] = 2 s; echo time [TE] = 28 ms; flip angle = 90°; FOV: 24 cm). Structural data were obtained with a 3D T1-weighted fast inversion-recovery-prepared spoiled gradient recalled (FSPGR) pulse sequence, recording 154 slices of 1 mm thickness and an in-plane resolution of 1 × 1 mm.

fMRI Analyses

Analyses were done in Matlab using SPM8 (Wellcome Department of Imaging Neuroscience, London, UK; http://www.fil.ion.ucl.ac.uk/spm/software/spm8). The first five volumes of each run were discarded to allow for a steady state of tissue magnetization. Functional data were then slice-time corrected and spatially realigned to the first volume, coregistered with participants’ structural scans, and normalized to the Montreal Neurological Institute (MNI) template brain. Normalized functional images retained their native spatial resolution.

Mass-univariate Analyses

For analyses based on task-related changes in mean signal intensity, the normalized images were spatially smoothed with a Gaussian kernel of 9 mm full width at half maximum (FWHM), before applying a 128 s temporal high-pass filter in order to remove low-frequency noise. A model of the main task was created for each subject via vectors corresponding to the onset of the visual search series (8 s boxcar) for each experimental condition; the model accounted for WM and visual search load conditions, as well as stimulus category for both WM and search task components, resulting in a total of 16 regressors of interest. All univariate analyses collapsed across stimulus category conditions, however, producing four main conditions of interest: Low WM/Easy Search, Low WM/Hard Search, High WM/Easy Search, and High WM/Hard Search. WM sample and probe periods, error trials (for both visual search and WM probe), head-motion parameters, and grand means of each run were also modeled as separate nuisance regressors. Onset vectors were convolved with a canonical hemodynamic response function (HRF) to produce a design matrix, against which the blood-oxygenation level-dependent signal at each voxel was regressed.
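To illustrate how an 8 s boxcar becomes a predicted BOLD regressor, the following sketch convolves a boxcar with a double-gamma HRF sampled once per TR. The HRF parameters (gamma shapes 6 and 16, undershoot ratio 1/6) are an assumed, commonly used approximation of a canonical HRF, not values taken from the study. The onset time is hypothetical, and real software samples at a finer temporal resolution.

```python
import math

TR = 2.0  # repetition time in seconds (from the acquisition parameters)


def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma HRF at time t (s); the parameter values are assumptions."""
    if t <= 0:
        return 0.0
    peak = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    under = t ** (under_shape - 1) * math.exp(-t) / math.gamma(under_shape)
    return peak - ratio * under


def boxcar(onsets, duration, n_scans):
    """1.0 at every scan time that falls inside an event, else 0.0."""
    times = [i * TR for i in range(n_scans)]
    return [1.0 if any(o <= t < o + duration for o in onsets) else 0.0 for t in times]


def convolve(signal, kernel):
    """Causal discrete convolution, truncated to the length of the signal."""
    out = [0.0] * len(signal)
    for i in range(len(signal)):
        for j, k in enumerate(kernel):
            if i - j >= 0:
                out[i] += signal[i - j] * k
    return out


hrf = [double_gamma_hrf(i * TR) for i in range(16)]   # 32 s of HRF
n_scans = 40
search_onsets_s = [10.0]                              # hypothetical onset (s)
regressor = convolve(boxcar(search_onsets_s, 8.0, n_scans), hrf)

peak_scan = max(range(n_scans), key=regressor.__getitem__)
print("predicted response peaks at", peak_scan * TR, "s")
```

The predicted response peaks several seconds after the onset of the search series, which is why event timing and HRF shape jointly determine the regressor.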

Single-subject contrasts were then calculated to establish the hemodynamic correlates of working memory load (all 2 item WM > all 1 item WM), visual search difficulty (all Hard Search > all Easy Search), and their interaction effects (High WM + Easy Search > Low WM + Hard Search; Low WM + Hard Search > High WM + Easy Search). Group effects were subsequently assessed by submitting the individual statistical parametric maps to 1-sample t-tests where subjects were treated as random-effects. To control for false-positives we applied a whole-brain voxel-wise FDR-correction (p < .05, combined with a cluster extent of 20 voxels). To illustrate the nature of the observed activations, mean β estimates for each condition were extracted from 6 mm spherical ROIs, centered on peak group activations, using MarsBaR software (http://marsbar.sourceforge.net).
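For readers unfamiliar with FDR control, a minimal sketch of the Benjamini-Hochberg step-up procedure is given here. It is a generic illustration of the correction, not the SPM implementation, and it omits the additional cluster-extent threshold. The p-values are hypothetical.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return booleans marking which tests survive FDR control at level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])

    # Find the largest rank k such that p_(k) <= (k / m) * q.
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            cutoff_rank = rank

    # Every test ranked at or below the cutoff is declared significant.
    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= cutoff_rank:
            significant[idx] = True
    return significant


# Hypothetical voxel-wise p-values, for illustration only.
p_vals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.5, 0.9]
print(benjamini_hochberg(p_vals))
```

In this toy example only the two smallest p-values survive, even though five fall below an uncorrected threshold of .05.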

ROI definition

Regions of sensitivity for the WM categories were derived from the independent functional localizer task. A model of the localizer was created for each subject via vectors corresponding to the onset of the stimulus blocks (15 s duration) for each of the four stimulus categories (face, house, body, tool). Single-subject contrasts were then calculated to establish the hemodynamic correlates of house-viewing (all House > all other categories) and face-viewing (all Face > all other categories). Group maps were furthermore constrained by anatomical masks of the fusiform and parahippocampal gyri (generated with the WFU_Pickatlas Toolbox; Maldjian, Laurienti, Kraft, & Burdette, 2003), for the face and house contrasts, respectively, and submitted to FDR-correction (p < .05) to identify clusters of maximal responsivity to the stimulus categories.
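A contrast of one category against all others can be written as a weight vector that sums to zero. The sketch below shows one conventional scaling (+1 for the target, -1/3 for each remaining category). The scaling is an assumption, since the text does not report the exact weights; [3, -1, -1, -1] would be equivalent up to a constant.

```python
CATEGORIES = ["face", "house", "body", "tool"]


def one_vs_rest_contrast(target, categories=CATEGORIES):
    """Weights for 'target > all other categories' that sum to zero."""
    n_other = len(categories) - 1
    return [1.0 if c == target else -1.0 / n_other for c in categories]


face_contrast = one_vs_rest_contrast("face")
house_contrast = one_vs_rest_contrast("house")

print(dict(zip(CATEGORIES, face_contrast)))
print(dict(zip(CATEGORIES, house_contrast)))
```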

Multivariate Analyses

While standard mass-univariate analyses allow us to localize regions where mean signal intensity is sensitive to internal and external load demands, such variations do not convey precise informational content. Instead, the strength of multivariate decoding can arguably serve as a proxy for the quality of a neural representation (Emrich, Riggall, LaRocque, & Postle, 2013; Ester et al., 2013). We therefore created two models for multivariate analyses, using unsmoothed images, with the purpose of gauging how the discriminability of the neural WM representation is impacted when attention is diverted to processing external stimuli. The first ‘temporal’ model included WM category (face vs. house) and visual search load (easy vs. hard) conditions via vectors of onsets (2 s duration; i.e., a single TR) for each event in a trial—WM sample, WM delay, search trials, pre-probe delay, WM probe—convolved with a canonical HRF. The second ‘searchlight’ model was identical except visual searches within a trial were now reflected by a single onset (8 s duration). Head-motion parameters and grand means of each run were also modeled as separate nuisance regressors. ROI and searchlight classification analyses were implemented by training linear support vector machines (SVM), via the “caret” and “kernlab” packages in R (Kuhn, 2008; Zeileis, Hornik, Smola, & Karatzoglou, 2004) and The Decoding Toolbox (Hebart, Görgen, & Haynes, 2015), using a leave-one-run-out cross-validation procedure. Our design produced 9 experimental runs, wherein each condition occurred equally often. Each classifier was thus trained on the patterns corresponding to maintenance of each WM category over 8 runs, then tested on its ability to decipher the remembered category on the 9th run. The training set was then shuffled so that each run served once as the testing set, and classifier accuracy for a given searchlight or ROI would reflect the average classifier performance over those 9 iterations.
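The leave-one-run-out logic can be sketched independently of any particular classifier. To stay self-contained, the example swaps the linear SVM for a simple nearest-centroid classifier and uses synthetic patterns. Both are stand-ins for illustration and do not reproduce the analysis pipeline described above.

```python
import random


def nearest_centroid_predict(train_x, train_y, test_x):
    """Stand-in classifier (NOT an SVM): pick the class with the closest mean."""
    centroids = {}
    for label in set(train_y):
        rows = [x for x, y in zip(train_x, train_y) if y == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def sq_dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    return [min(centroids, key=lambda lab: sq_dist(x, centroids[lab])) for x in test_x]


def leave_one_run_out(patterns, labels, runs):
    """Hold out each run once; return mean accuracy and per-fold accuracies."""
    fold_accuracies = []
    for held_out in sorted(set(runs)):
        train = [i for i, r in enumerate(runs) if r != held_out]
        test = [i for i, r in enumerate(runs) if r == held_out]
        preds = nearest_centroid_predict(
            [patterns[i] for i in train],
            [labels[i] for i in train],
            [patterns[i] for i in test],
        )
        correct = sum(p == labels[i] for p, i in zip(preds, test))
        fold_accuracies.append(correct / len(test))
    return sum(fold_accuracies) / len(fold_accuracies), fold_accuracies


# Synthetic data: 9 runs, one face and one house pattern per run, 20 "voxels".
rng = random.Random(0)
patterns, labels, runs = [], [], []
for run in range(1, 10):
    for label, offset in (("face", 1.0), ("house", -1.0)):
        patterns.append([offset + rng.gauss(0, 1) for _ in range(20)])
        labels.append(label)
        runs.append(run)

mean_acc, fold_acc = leave_one_run_out(patterns, labels, runs)
print(len(fold_acc), "folds, mean accuracy:", mean_acc)
```

Because training and test patterns always come from different runs, any run-specific signal cannot inflate the accuracy estimate.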

Event-related ROI-based MVPA

Activity patterns coding WM information fluctuate over the course of an unfilled WM delay (Lewis-Peacock et al., 2012; Meyers, Freedman, Kreiman, Miller, & Poggio, 2008; Myers et al., 2015; Sreenivasan, Vytlacil, & D’Esposito, 2014; Stokes et al., 2013; Wolff, Ding, Myers, & Stokes, 2015). Furthermore, behavioral findings have shown that attention demands and time-related decay can interact in their impact on WM maintenance (Kiyonaga & Egner, 2014). Because the fate of neural WM representation patterns in a dual-task setting (when external stimuli must be attended) is unknown, we examined how the neural activity patterns conveying WM content would be impacted by the difficulty of a secondary task, and moreover, how this impact might accumulate or evolve over the course of a trial as attention continued to be otherwise occupied. Specifically, we wanted to assess the discriminability of WM information (i.e., whether the remembered category was a face or a house) across distributed regions that are engaged for the perception of the WM categories. We therefore conducted event-related multivariate pattern analysis (MVPA) within PPA and FFA ROIs that were independently and functionally defined with a separate localizer task. Classifiers were trained and tested on beta estimates from all voxels in each ROI; to account for differences in univariate activity that might influence decoding performance, these classifier inputs were mean-centered and scaled for each condition, at each time-point, within each ROI. To assess decoding of WM category across time, separate classifiers were trained for each task event, wherein the inputs were beta estimates for each 2 s event across a trial, at both levels of visual attentional load (i.e., from the ‘temporal’ model). Furthermore, to account for random resampling and model calculations within the “caret” package, each classifier was repeated 50 times; these accuracies were averaged to produce a single accuracy value for the classification. We therefore obtained, for each participant, two WM category mean classification accuracies (one each for easy and hard visual search conditions) at each of 8 trial time points. To assess any potential differences in classification between easy and hard external attention conditions, these mean accuracy values were submitted to one-sample t-tests against chance (50%), and paired t-tests against one another.
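The two group-level tests named above reduce to simple t statistics, sketched here with the standard library. The accuracy values are hypothetical and cover only eight participants for brevity, so the output has no bearing on the study's results. p-values are omitted because the standard library has no t distribution.

```python
import math
import statistics


def one_sample_t(values, popmean):
    """Return (t, df) for a one-sample t-test of values against popmean."""
    n = len(values)
    standard_error = statistics.stdev(values) / math.sqrt(n)
    return (statistics.mean(values) - popmean) / standard_error, n - 1


def paired_t(a, b):
    """Return (t, df) for a paired t-test, i.e. a one-sample test on a - b."""
    diffs = [x - y for x, y in zip(a, b)]
    return one_sample_t(diffs, 0.0)


# Hypothetical per-participant mean classification accuracies at one time point.
easy = [0.56, 0.61, 0.52, 0.58, 0.49, 0.63, 0.55, 0.57]
hard = [0.51, 0.55, 0.50, 0.53, 0.48, 0.54, 0.52, 0.50]

t_easy, df_easy = one_sample_t(easy, 0.5)    # easy search vs. chance (50%)
t_hard, df_hard = one_sample_t(hard, 0.5)    # hard search vs. chance (50%)
t_diff, df_diff = paired_t(easy, hard)       # easy vs. hard

print(f"easy vs. chance: t({df_easy}) = {t_easy:.2f}")
print(f"hard vs. chance: t({df_hard}) = {t_hard:.2f}")
print(f"easy vs. hard:   t({df_diff}) = {t_diff:.2f}")
```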

Searchlight MVPA

While ROI-based classification analyses test how memory information is represented by brain regions known to be sensitive to specific categories, we further examined the distribution of WM category information across the entire brain. Although many recent studies have decoded WM content information from primarily sensory regions that perceive that content (during an unfilled delay), a handful of studies have also found multivariate WM information in prototypically “attentional” frontal and parietal regions (Christophel, Hebart, & Haynes, 2012; Ester, Sprague, & Serences, 2015; Sprague et al., 2016). One study also suggests a unique role for parietal cortex in WM content representation in the face of predictable irrelevant distractors—which can presumably be anticipated and ignored (Bettencourt & Xu, 2015). To identify regions that might convey locally distributed patterns of WM category information, during completion of a delay-spanning visual task, we conducted whole-brain searchlight MVPA (Haynes et al., 2007; Kriegeskorte, Goebel, & Bandettini, 2006). The searchlight was a spherical cluster with a radius of 3 voxels, thus containing up to 123 voxels. Unlike in the ROI-based MVPA, inputs to the searchlight analysis were beta estimates across the entire 8 s search sequence (i.e., from the ‘searchlight’ model). A separate classifier was trained to discriminate the WM category based on multivariate patterns of input from all the voxels in a given searchlight, and that procedure was repeated for searchlights surrounding every grey matter voxel in the brain. The resultant subject-level accuracy maps were submitted to t-tests at the group-level against chance performance (50%) and thresholded with an FDR-correction of p < .05.
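The figure of 123 voxels follows from counting the grid points that lie within three voxel widths of a center voxel, as the short sketch below confirms. The function name is hypothetical. Searchlights near the edge of the brain mask contain fewer voxels, hence "up to".

```python
from itertools import product


def sphere_offsets(radius):
    """Integer voxel offsets whose Euclidean distance from the center is <= radius."""
    r = int(radius)
    return [
        (x, y, z)
        for x, y, z in product(range(-r, r + 1), repeat=3)
        if x * x + y * y + z * z <= radius * radius
    ]


offsets = sphere_offsets(3)
print(len(offsets))  # 123
```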

Results

Behavioral Results

Visual search accuracy (% correct) was lower when the search distractors were less discriminable from the target (Easy: M = 94.8%, SD = 9.1%; Hard: M = 84.8%, SD = 12.9%), F(1, 27) = 27.3, p < .001, and search correct response time (RT) was also drastically slower in this high attentional load condition (Easy: M = 848 ms, SD = 105 ms; Hard: M = 1126 ms, SD = 112 ms), F(1, 27) = 257.1, p < .001. The external attentional load manipulation was, therefore, an effective means of modulating the time-consumption of WM delay-spanning processing: When the search was harder, it took more time to complete, suggesting that attention would be diverted from WM maintenance during that time (Figure 2a). While search accuracy was unaffected by internal (WM) load (Figure 2b, p = .7), search was slightly faster when 2 items were maintained in WM, F(1, 27) = 4.2, p = .052. Although we expected increased WM demands to impair concurrent attentional performance, this unexpected finding is consistent with the theory that increased attentional load should reduce processing of irrelevant distraction (de Fockert, Rees, Frith, & Lavie, 2001; Kim, Kim, & Chun, 2005; Lavie, 2005), which would improve search efficiency. Alternatively, higher demands may engage more cognitive control, and therefore benefit ongoing processing (e.g., Jha & Kiyonaga, 2010; Waskom, Kumaran, Gordon, Rissman, & Wagner, 2014). However, neither search accuracy nor RT displayed an interaction between internal and external attention factors (all p > .4).

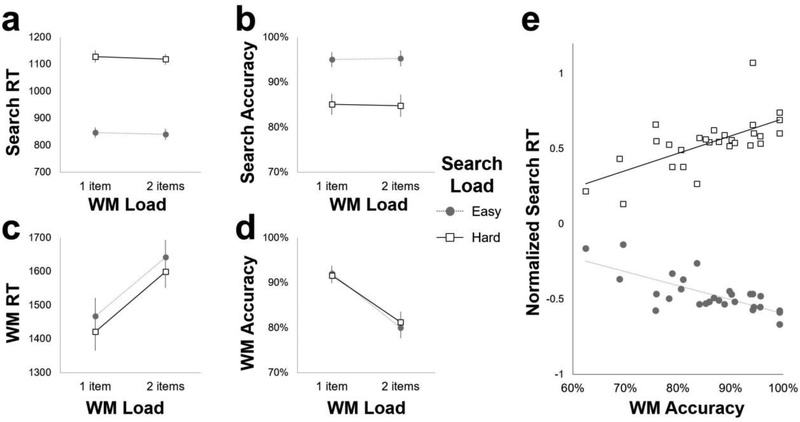

Figure 2.

Behavioral results. a) Mean visual search response time (RT) was faster for easy searches compared to hard searches, and slightly faster when 2 items were maintained in WM. b) Visual search accuracy was also greater for easy searches compared to hard searches. c) WM probe response times were faster when 1 item was maintained in WM, and faster after harder visual search series. d) WM probe recognition accuracy was greater for 1 item compared to 2 item WM. e) The magnitude of the visual search RT load effect (Hard search RT – Easy search RT) correlated with WM probe recognition accuracy (x-axis) across participants. Here, individual normalized RTs are plotted separately for Easy (filled circles) and Hard (empty squares) search conditions (y-axis).

WM probe performance was slower (Low: M = 1464 ms, SD = 296 ms; High: M = 1636 ms, SD = 260 ms), F(1, 27) = 76.2, p < .001 (Figure 2c), and less accurate (Low: M = 91.7%, SD = 9.1%; High: M = 80.4%, SD = 12.3%), F(1, 27) = 103.3, p < .001 (Figure 2d), when 2 items were remembered (vs. 1). The WM manipulation was thus effective at increasing internal attentional demands. Face WM (88%) was slightly better than house WM (85%), but not significantly so (p = .07), whereas the visual search stimulus category had no influence on WM performance (p = .4). WM probe responding was unexpectedly faster after harder visual search sequences, F(1, 27) = 8.2, p = .008. Like the unexpected improvement to visual search RT during higher WM load, this finding also suggests that the engagement of control during harder visual search may have benefitted WM speed (e.g., Jha & Kiyonaga, 2010; Waskom et al., 2014). However, probe accuracy was unaffected by the search difficulty, and neither probe accuracy nor RT displayed an interaction between internal and external load factors (all p > .4).

While participants had the option to submit WM responses on a 4-point scale, responses disproportionately favored the extremes of the scale (either “definitely same” or “definitely different”). Fewer than 25% of all responses used either “maybe” option, and four participants neglected to use those responses at all; thus, we report WM probe performance collapsed across confidence levels. Nonetheless, we conducted an additional control ANOVA of WM accuracy, with the added factor of Response Confidence, and found no interactions between the Confidence factor and either WM load (p = .12) or Search Difficulty (p = .5), nor a 3-way interaction (p = .35). Because the jittered delay lengths produced total WM delays that ranged from 12.5 to 17.5 s, we also conducted a control ANOVA with a factor of Delay Length (split into three bins for long, medium, and short delays) and found no main effect of Delay Length on WM probe accuracy (p = .5) and no interactions between Delay Length and any other factors (all p > .2). Thus, neither WM recognition confidence nor total duration of the WM delay appears to have significantly impacted the results.

The absence of an interaction between the WM and visual search load factors was surprising because of the abundant prior evidence that WM performance can be impaired by concurrent attention demands (but see Hollingworth & Maxcey-Richard, 2012; Vogel, Woodman, & Luck, 2006; Woodman, Vogel, & Luck, 2001; Woodman & Luck, 2007). Thus, we investigated whether any potential reciprocity between internal and external attention demands may have manifested in another way in the present data set, for instance, via a tradeoff between the two task components. First, we conducted a mixed-effects logistic regression analysis, wherein the probability of a correct WM response was predicted by visual search RT on a trial-by-trial basis, accounting for individual differences within subjects (i.e., modeling a random effect of subject). We limited the predictive factor of search RT to correct responses on the first search in each series, when WM encoding processes are expected to spill over and be maximally impacted by the external attentional task. Indeed, visual search RT significantly predicted a correct WM response, odds ratio = 0.50 [95% CI 0.30, 0.83], p = .007, whereby faster search RT predicted better WM accuracy on a trial-by-trial basis. Within this model, WM accuracy was predicted by neither search load, p = .96, nor the interaction between search RT and search load, p = .61. These results are consistent with the idea that faster completion of the visual search task freed up (shared) attention for WM maintenance, and therefore facilitated WM performance.
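As a reading aid for the odds ratio, the sketch below back-calculates an approximate Wald z statistic and two-sided p-value from the reported odds ratio and its confidence interval. It assumes the interval is a symmetric Wald interval on the log-odds scale, which the text does not state. Because the inputs are rounded, the result can only approximate the reported p-value.

```python
import math


def wald_from_odds_ratio(odds_ratio, ci_low, ci_high, z_crit=1.959964):
    """Approximate (log_or, se, z, p) from an odds ratio and its 95% CI.

    ASSUMPTION: the CI is a symmetric Wald interval on the log-odds scale.
    """
    log_or = math.log(odds_ratio)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    z = log_or / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p under a standard normal
    return log_or, se, z, p


log_or, se, z, p = wald_from_odds_ratio(0.50, 0.30, 0.83)
print(f"log odds ratio = {log_or:.3f}, SE = {se:.3f}, z = {z:.2f}, p = {p:.4f}")
```

An odds ratio below 1 corresponds to a negative log-odds coefficient: the odds of a correct WM response fall as search RT increases, which matches the interpretation that faster search predicted better WM accuracy.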

We also examined correlations between mean visual search and WM “load effects” (i.e., High Load – Low Load), and found that the two were negatively correlated with one another, r = –.52, p = .005. A larger effect of visual search load on search RT was associated with a smaller effect of WM load on probe accuracy. A larger visual search load effect was also strongly associated with better WM accuracy overall (r = .75, p < .001). To better characterize this association, we examined correlations between normalized visual search RT and WM accuracy at each search difficulty level (Figure 2e). When the search series was easy, faster search performance was associated with better memory, r = –.73, p < .001. When the search series was harder, however, the pattern was reversed: instead, slower search RT was associated with better memory accuracy, r = .65, p < .001. This pattern might emerge if the current visual search load were to impact the WM maintenance strategy that would most benefit WM performance. For instance, when the intervening visual task is hard and cannot be completed quickly, it may benefit WM maintenance to alternate attention between visual searching and refreshing the WM content (which would extend the search time). When the search is easier, however, it may be most effective to complete it as quickly as possible and then turn to WM maintenance processes. Thus, while harder visual search did not reduce WM accuracy overall, performance on the visual search task component was strongly related to WM performance both across the entire task (Figure 2e) and on a trial-by-trial basis. We next examined how these simultaneous WM and visual attention demands are reflected in neural measures.
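The across-participant correlations reported above can be related to a t statistic with n - 2 degrees of freedom. The sketch below shows the Pearson formula and that conversion, using the reported r = -.52 and the final sample of 28 participants. The toy vectors are hypothetical and serve only to exercise the function.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def r_to_t(r, n):
    """Convert a correlation over n observations to (t, df)."""
    df = n - 2
    return r * math.sqrt(df) / math.sqrt(1 - r * r), df


# Toy check of the correlation function on perfectly related values.
toy_r = pearson_r([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0])

# Reported correlation between search and WM load effects, with n = 28.
t_value, df = r_to_t(-0.52, 28)
print(f"t({df}) = {t_value:.2f}")
```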

Mass-univariate fMRI Results

We initially conducted mean signal intensity-based analyses to localize areas that respond to attentional task load, and may reflect competition between internal and external task demands. First we identified regions that displayed a main effect of the external (visual search) attentional load, during the search sequence (Figure 3a; all whole-brain FDR-corrected, p < .05). These encompassed a large bilateral network of frontal, parietal, and occipital cortical regions that are considered part of a “cognitive control network” and are typically engaged when task demands are high (Bertolero, Yeo, & D’Esposito, 2015; Niendam et al., 2012; Power & Petersen, 2013), as well as the cerebellum, thalamus and basal ganglia. Thus, both visual search behavior and univariate neural measures were highly responsive to the external (visual attentional) load manipulation.
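For readers unfamiliar with false discovery rate (FDR) correction, the sketch below implements the Benjamini-Hochberg step-up procedure. The text does not state which FDR procedure was applied, so the choice of Benjamini-Hochberg is an assumption, and the p-values are invented for illustration.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Mark which tests survive Benjamini-Hochberg FDR control at level q.

    Returns a list of booleans in the same order as p_values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])

    # Find the largest rank k such that p_(k) <= (k / m) * q.
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            max_rank = rank

    # Every test ranked at or below max_rank is declared significant.
    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= max_rank:
            significant[idx] = True
    return significant


# Hypothetical voxel-wise p-values (NOT taken from the study).
p_vals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216]
survivors = benjamini_hochberg(p_vals, q=0.05)
print(sum(survivors), "of", len(p_vals), "tests survive FDR correction")
```

In a whole-brain analysis the list would contain one p-value per voxel (or per searchlight), and the surviving entries would define the thresholded map.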

Figure 3.

Univariate fMRI results. All maps are whole-brain FDR-corrected, p < .05. a) A large bilateral network of frontal, parietal, occipital, and subcortical regions showed elevated activation for hard versus easy visual searches. b) Lateral prefrontal, parietal, and posterior temporal clusters were sensitive to the interaction between WM and search load levels. Beta values are displayed for each trial condition from ROIs surrounding local maxima of the interaction effect in left and right middle frontal gyrus (MFG) and superior parietal lobule (SPL) clusters.

While we observed no main effect of WM load (during completion of the search task), there was a robust interaction between visual search and WM demands (Figure 3b). Lateral prefrontal, parietal, posterior temporal (around the temporo-parietal junction [TPJ]) and cerebellar clusters were sensitive to the combination of load in both the internal and external domains. Unthresholded t-maps for both main and interaction effects are available online (http://neurovault.org/collections/AZELKTWQ/). As illustrated with beta values extracted from ROIs centered on local maxima of the interaction (Figure 3b), the magnitude of the response to search difficulty was dramatically magnified when WM load was high as well. Notably, rather than an activation increase with each increasing level of task demand, the heightened response to WM load during the harder visual search was reversed during the easier search. This interaction is consistent with the suggestion, from the behavioral results, that fundamentally different WM maintenance strategies may be employed during different attentional states. In sum, both the behavioral and univariate neural responses to WM load were impacted by concurrent visual search demands. Next we assessed the fate of distributed neural patterns of WM category information in the face of competition for attention by external stimuli.

Multivariate fMRI Results

ROI-based MVPA Results

Our primary multivariate analysis addressed (1) how the discriminability of WM category information evolves across the trial in face- and house-sensitive ROIs, and (2) how that evolution is impacted by the difficulty of an intervening visual task. WM category classification in the PPA (which was independently defined by univariate contrasts of a separate functional localizer task) displayed a u-shaped pattern across the trial (Figure 4a). Unsurprisingly, regardless of the search difficulty of the current trial, classification of the WM category was highly accurate (M = 85%) during presentation of the WM sample (i.e., when the stimulus was actually being perceived). Regardless of the difficulty condition of the visual search, WM decoding accuracy dropped after the offset of the WM sample. WM classification performance diverged, however, with the start of the visual search series, depending on the attentional demands of that search sequence. When the search was easier, WM category classification remained above chance (S1: t(27) = 2, p = .05). When the search was more difficult (and therefore diverting attention for a longer period of time), however, WM category classification dropped down to chance levels (S1: t(27) = −.26, p = .8), and remained at chance throughout the rest of the search series (S2: t(27) = −1.3, p = .2; S3: t(27) = .8, p = .4; S4: t(27) = .7, p = .5). This difference between the easy and hard visual search conditions with respect to chance-level decoding was also borne out in a direct comparison between these conditions: WM category classification was significantly better for the easier search condition, especially early in the search series (F(1, 27) = 4, p = .05; S1: t(27) = 1.9, p = .07; S2: t(27) = 2.3, p = .03; Figure 4b).
The distribution of classifier accuracies for individuals at the second search trial (when classification between the two conditions significantly differs) also illustrates that many more participants displayed highly accurate classification when the visual attention demands were low (Figure 4b). Thus, even in the face of persistent visual input—as well as a secondary task being performed on that input—category-diagnostic WM stimulus information was present in distributed patterns of neural activity when external visual attention demands were low, but not when external demands were high.
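The comparisons against chance reported above are one-sample t-tests on participants' classifier accuracies. A minimal sketch of that computation follows, assuming a chance level of 50% for the two-category (face vs. house) classification; the accuracies are invented and the function name is hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values, chance=0.5):
    """One-sample t statistic and degrees of freedom against a chance level."""
    n = len(values)
    standard_error = stdev(values) / sqrt(n)
    t_stat = (mean(values) - chance) / standard_error
    return t_stat, n - 1


# Hypothetical classifier accuracies for a handful of participants
# (NOT the study's data; the study's df of 27 implies 28 participants).
accuracies = [0.70, 0.50, 0.60, 0.80]
t_stat, df = one_sample_t(accuracies, chance=0.5)
print(f"t({df}) = {t_stat:.2f}")
```

The corresponding p-value would be obtained from the t distribution with `df` degrees of freedom, for example with a statistics library.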

Figure 4.

Multivariate fMRI results. a) WM category classification in parahippocampal place area (PPA; independently and functionally defined), at 2 s time-points across the trial. WM decoding accuracy for easy (grey) visual searches was better than for hard (orange) visual searches (grey boxes indicate time-points where the conditions significantly differ). Ribbons represent ± 1 SEM. C = WM cue; D = delay; S = search; P = probe. b) Open circles display WM category classification accuracies for each individual participant, for low (grey) and high (orange) attentional load, at the second visual search trial in the series. Filled black circles mark the mean classifier accuracy for each condition. c) WM category classification in PPA, as in (a), median split by behavioral WM probe accuracy performance. d) Clusters of searchlights across the ventral occipitotemporal cortex classified WM category significantly above chance, FDR-corrected, p < .05, during the 8 s visual search sequence.

As more time passed across the visual search task, classifier performance converged to chance regardless of the difficulty of the search, suggesting that repeated perceptual input and attentional processing can eventually impede the detection of sensory WM patterns, even in a lower demand condition. When the probe appeared, however, WM category classification again increased above chance (M = 68%). A mixed-effects logistic regression analysis also revealed that longer visual search RTs significantly predicted worse classifier accuracy in PPA on a trial-by-trial basis, particularly for higher WM performers (search RT × WM performance interaction, p = .033). Accordingly, when we median split the group by behavioral WM probe performance to illustrate this result, the attention-sensitive decoding pattern was especially pronounced (Figure 4c). Only the higher WM performance group displayed more accurate WM category classification for easy versus hard search conditions (S2: t(13) = 2.4, p = .036), whereas the lower WM performance group displayed highly similar classifier performance for easy and hard search conditions across the entire trial (S2: t(13) = .67, p = .5). Here, we thus have evidence that the neural patterns of activity that convey information about the WM content are impeded, or possibly recoded in a different format (Olivers et al., 2011; Stokes, 2015), when demands on visual attention are high and require longer processing times. While these mean differences between conditions (and improvement over chance performance) are modest, the classifier accuracies are consistent with previous decoding studies of WM category and the modulation of that classifier evidence via retro-cueing (Lewis-Peacock, Drysdale, & Postle, 2014) or external magnetic stimulation (Rose et al., 2016). Here, however, rather than endogenously shifting attentional priority within WM (e.g., in response to a retro-cue), attention was occupied by a demanding perceptual task.

An alternative explanation for this outcome is that, while the WM representations themselves remain unaltered by the search difficulty, their detection is affected by unrelated attentional activity within the same brain regions. While the classification preprocessing steps (i.e., mean-centering and scaling of classifier inputs) help to mitigate this concern, if this were true, we would expect the univariate response in the decoding ROI (i.e., PPA) to positively relate to the search load effect on decoding accuracy. While univariate activity in the PPA was descriptively greater during harder search difficulty, the univariate search load effect was in fact uncorrelated with the search-related difference in decoding accuracy (from the same ROI) at all search time-points (all r < .1, all p > .7). To further corroborate this null effect, we also conducted Bayesian correlations between the univariate and multivariate search load effects at each search time point, which revealed moderate Bayes factors ranging from 3.9 to 5.5 in favor of the null hypothesis. Thus, it is unlikely that the univariate response to search difficulty can explain the observed sensitivity of WM category decoding to the difficulty of the intervening visual task.

In the FFA, WM category classification followed a similar u-shaped pattern to that in the PPA, but decoding remained at chance levels, for both easy and hard search conditions, at all visual search time-points (all p > .2). That is, while stimulus category pattern classification was accurate during perception of the stimuli (i.e., during sample and probe periods), we were unable to classify the WM category from the FFA during the intervening visual task, in either attentional load condition. The functionally-defined FFA was substantially smaller than the PPA. Moreover, several prior studies that have used face and house WM stimuli have focused on the PPA (over the FFA) and have found better pattern classification, or more sensitive and behaviorally meaningful activations associated with “place” processing in general (Derrfuss, Ekman, Hanke, Tittgemeyer, & Fiebach, 2017; Gazzaley, Cooney, Rissman, & D’Esposito, 2005; G. Kim, Lewis-Peacock, Norman, & Turk-Browne, 2014; Lewis-Peacock & Norman, 2014; Yi, Woodman, Widders, Marois, & Chun, 2004). While WM recognition performance was comparable for face (87%) and house (85%) memory, our findings and others suggest that WM-related activity in the FFA may be less diagnostic than that in the PPA, at least under the kind of dual task conditions imposed during the visual search period in our experiment.

Searchlight MVPA Results

We also applied a searchlight procedure to identify regions that convey locally distributed patterns of WM category information, across the duration of the search sequence. Indeed, large clusters of searchlights covering the ventral occipitotemporal cortex classified the WM category significantly above chance (Figure 4d; whole-brain FDR-corrected, p < .05), even in the face of persistent visual input and additional attention demands. Individual searchlights were scattered across the rest of the brain (including frontal and parietal regions), but searchlights that classified the WM category above chance were overwhelmingly located in the ventral visual regions that typically respond to perception of stimuli from those categories (see whole-brain classifier accuracy map at http://neurovault.org/collections/AZELKTWQ/). We also ran two additional searchlight analyses, split by the difficulty of the intervening visual search. These analyses halved the number of beta inputs into each classifier, and neither analysis revealed searchlights that passed whole-brain FDR-correction (but the spatial distribution of classifier performance for easy and hard visual search conditions can be examined at http://neurovault.org/collections/AZELKTWQ/).
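As a rough illustration of how a searchlight procedure defines its local voxel sets, the sketch below builds a spherical neighborhood around every voxel in a mask. It is a simplified stand-in rather than the pipeline used in the study: the radius, the toy 3 × 3 × 3 mask, and the function names are all assumptions, and the classification step that would run inside each neighborhood is omitted.

```python
from itertools import product


def sphere_offsets(radius):
    """Integer (dx, dy, dz) offsets lying within a sphere of the given radius."""
    r = int(radius)
    return [
        (dx, dy, dz)
        for dx, dy, dz in product(range(-r, r + 1), repeat=3)
        if dx * dx + dy * dy + dz * dz <= radius * radius
    ]


def searchlight_neighborhoods(mask_voxels, radius):
    """Map each voxel in the mask to the in-mask voxels inside its sphere."""
    mask = set(mask_voxels)
    offsets = sphere_offsets(radius)
    neighborhoods = {}
    for x, y, z in mask:
        candidates = ((x + dx, y + dy, z + dz) for dx, dy, dz in offsets)
        neighborhoods[(x, y, z)] = [v for v in candidates if v in mask]
    return neighborhoods


# Toy 3 x 3 x 3 "brain" mask in voxel coordinates (hypothetical).
toy_mask = list(product(range(3), repeat=3))
spheres = searchlight_neighborhoods(toy_mask, radius=1)
print(len(spheres[(1, 1, 1)]), "voxels in the central searchlight")
print(len(spheres[(0, 0, 0)]), "voxels in a corner searchlight")
```

In a full analysis, a classifier would be trained and tested on the patterns within each neighborhood, and the resulting accuracy would be assigned to the center voxel to form the whole-brain accuracy map.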

Discussion

Here, we tested the hypothesis that demands on visual attention should impact neural representations of visual WM content, based on the idea that WM maintenance occurs via attention-dependent recruitment of sensory cortices. We manipulated levels of both WM and visual search load in a dual-task paradigm, and found converging behavioral and neuroimaging evidence that these “internal” and “external” attentional demands impact one another. For one, performance on the visual search portion of the task related to recognition accuracy at the WM probe—both on average across participants, and on a trial-by-trial basis within participants—suggesting that visual search and WM maintenance are mutually reliant on attention. Secondly, an interaction in the univariate fMRI response in fronto-parietal regions indicated that the neural response to load in one domain (i.e., WM) was strongly influenced by the load in the other domain (i.e., visual search). Finally, the discriminability of multivariate patterns of WM category activity in extrastriate visual cortex (specifically PPA) was reduced under higher visual attentional demands, and related to the speed of performance of the search task, suggesting that the quality of the sensory cortical WM representation may be influenced by the amount of available attention during the WM maintenance period.

While the univariate interaction effect emerged in frontal and parietal regions that have often been implicated in WM and attentional processes (Constantinidis & Klingberg, 2016; Curtis & D’Esposito, 2003; Eriksson, Vogel, Lansner, Bergström, & Nyberg, 2015), the pattern of activation observed here was novel, and is consistent with the possibility that different combinations of attentional load demands may provoke distinct task strategies (cf. Derrfuss et al., 2017). That is, rather than a quantitative increase in “neural effort” (i.e., linear activation increases) with each increasing level of task demand, the heightened response to WM load during the harder visual search was reversed during easier search (Figure 3b). If each increasing level of load engaged fronto-parietal regions more strongly, we would have expected a greater response to high WM load, even when the search was easy. Instead, high WM load related to less activity (vs. low load) when the search was easy. Combined with the correlation between search RT and WM accuracy—whose direction also flips between easy and hard search conditions (Figure 2e)—these data suggest that a harder visual attention task might invoke a qualitatively different WM maintenance or cognitive control strategy than the one used when the secondary task is easier. This is further suggested by the (counterintuitively) faster WM probe recognition after harder search series, and faster visual search performance when two items were maintained in WM (as opposed to one). These findings may reflect reduced processing of distracting stimuli (and hence better performance) when attentional demands were high (de Fockert et al., 2001; Kim et al., 2005; Lavie, Hirst, de Fockert, & Viding, 2004), or may suggest that higher demands provoked greater engagement of cognitive control and therefore benefitted ongoing performance (Jha & Kiyonaga, 2010; Waskom et al., 2014). 
Thus, load demands in one domain clearly impact performance in the other domain, and strategies for managing dual-task demands may differ under different load conditions.

Critically, event-related pattern classification within the PPA demonstrated the sensitivity of neural WM category information to visual attentional demand levels (Figure 4). Regardless of the difficulty condition of the visual search, WM decoding accuracy sharply declined during the WM delay. Most importantly, however, this reduction in decoding accuracy during the search sequence was more profound when the search was more time-consuming, even though the amount of perceptual input was matched in the easy and hard search conditions. The more that attention was required to complete the delay-spanning visual search, the more the detection of neural WM category representations in ventral visual cortex suffered. WM category classification was also predicted by behavioral performance (i.e., search RTs), suggesting that a longer time spent on the visual search task detracted attention from WM maintenance for longer, and WM pattern classification therefore suffered more. Finally, search RT also related to WM accuracy, suggesting that the amount of time spent on the delay-spanning task determines both the discriminability of category-diagnostic patterns in PPA as well as WM accuracy.

While visual attentional demands impacted the classification of the WM category representation, the reduction in WM category discriminability under attentional load did not lead to an overall deterioration in behavioral WM recognition performance. This is consistent with a recent finding that WM orientation decoding in visual cortex is disrupted by irrelevant perceptual distraction, without an impairment to task performance (Bettencourt & Xu, 2015). Taken together with the univariate interaction results, the data suggest that a visual WM representation strategy may be more feasible when attentional demands are lenient, but that WM content must be maintained by some other strategy when visual attention is concurrently taxed (Derrfuss et al., 2017; Olivers et al., 2011). These results are consistent with earlier indications that WM representations can be transferred into a different activation status to prioritize the immediately relevant task, and then restored into the focus of attention when they are needed to guide behavior (Kiyonaga et al., 2012; LaRocque et al., 2012, 2016; Lewis-Peacock et al., 2012; Sprague et al., 2016), suggesting that different attentional states may promote distinct means of WM retention. Indeed, interest has grown recently in characterizing a hidden or “silent” WM coding scheme (Stokes, 2015). For instance, neural evidence for previously irrelevant (i.e., silent) WM items can be restored by external stimulation (Rose et al., 2016; Wolff et al., 2015; Wolff, Jochim, Akyürek, & Stokes, 2017), suggesting that this representational state may be implemented via patterned short-term changes in network synaptic weights (Erickson, Maramara, & Lisman, 2009; LaRocque et al., 2014; Stokes et al., 2013). It remains unclear, however, why and when an activity-silent maintenance strategy is used (as opposed to persistent activity).
Our findings raise the intriguing possibility that such a representational format—that is undetectable with the fMRI methods used here—might be relied upon specifically when WM information must be maintained in the absence of sustained attention toward the WM content.

A broad searchlight classifier also decoded the WM category during a delay-spanning series of visual searches, in the local patterns conveyed by clusters of voxels around the ventral visual regions that typically respond to perception of the WM categories (i.e., fusiform and parahippocampal gyri). This result supports the notion that WM maintenance is achieved through activation of sensory representations and marks an informative advance in the limits of WM decoding. That is, prior studies have successfully decoded WM content from visual cortices over an unfilled delay interval (i.e., no other perceptual input; Emrich et al., 2013; Ester et al., 2013; Harrison & Tong, 2009; Riggall & Postle, 2012; Serences et al., 2009), and from superior parietal cortex over an interval that included task-irrelevant perceptual input (Bettencourt & Xu, 2015), leaving open the question of what happens to (sensory) WM representations in the face of (complex) incoming sensory signals that require attention. Of course, the information must be represented somehow, because it is retrieved after completion of the search task, but these data suggest that a visual representational format can still be employed, even during a concurrent visual search task.

Multivariate decoding of the WM category was consistent with expectations, but these results bear several further considerations. For one, WM classification in the present study was performed at the category level, rather than the finer-grained level of specific exemplars. Our decoding analysis could therefore be interpreted to reflect a more abstract representation of the current task, rather than the particular WM sample, per se. Recent studies, however, support the notion that classifier evidence in sensory and category-responsive regions does indeed convey item-specific information (LaRocque et al., 2016; Rose et al., 2016). A task with more abstract stimulus representation demands might be expected to influence activity patterns in more dorsal and anterior brain regions, as opposed to visual regions (Christophel, Klink, Spitzer, Roelfsema, & Haynes, 2017). Moreover, the present study used all novel WM stimuli, whereas WM capacity and representational format may be dramatically impacted by stimulus familiarity and real-world relevance (Brady, Störmer, & Alvarez, 2016; Endress & Potter, 2014). Regardless, the fact that category decoding is influenced by visual search difficulty serves as evidence that information about WM representation (whether it be abstract or specific) is affected by simultaneous attentional load. Future investigations should probe the specificity of distributed WM representations, and how they are influenced by factors like stimulus abstraction and novelty.

While many prior studies of WM decoding have used a retro-cue procedure to differentiate perceptual from maintenance activity, here the contributions of residual perceptual activity are primarily abated by decoding WM category information during a secondary perceptual task. That is, any perceptual activity related to the WM sample is unlikely to persist across exposure to a series of additional perceptual stimuli (and a delay of 12.5–15 s), supporting our interpretation that the decoded category information is related to WM maintenance. Several previous studies have already established that WM category maintenance activity for faces and scenes can be decoded from extrastriate visual regions (Lorenc, Lee, Chen, & D’Esposito, 2015; Sreenivasan, Vytlacil, et al., 2014). Our goal was instead to examine whether such activity patterns are sensitive to visual attentional task demands, whereby any potential confounds due to perceptual bleed-over would affect all task conditions equally, because secondary perceptual input was equated in all trial-types. An important question for future research will be to determine how representations of WM content are influenced by other demands, such as distraction from perceptually similar stimuli (cf. Gayet et al., 2017; Jha, Fabian, & Aguirre, 2004; Postle, 2005; Soto, Humphreys, & Rotshtein, 2007; Yoon, Curtis, & D’Esposito, 2006).

A critical function of WM is to maintain information in the face of competing demands, yet surprisingly little is known about how such attentional demands interact with WM storage. The present findings suggest that attention is necessary to maintain detectable visual WM category representations in sensory areas, but those distributed activity patterns must not correspond to the sole functional substrate of WM maintenance (Bettencourt & Xu, 2015; Derrfuss et al., 2017; Ester et al., 2015; Lee & Baker, 2016), since the material can still be remembered when WM decoding falls to chance. Thus, although the quality of sensory multivariate evidence for a WM item has recently been taken to reflect the precision of the WM representation (Emrich et al., 2013; Ester et al., 2013), we must explore additional maintenance formats to fully understand how we are best able to juggle our internal goals with persistent concurrent demands for our attention.

Acknowledgements

We thank Jiefeng Jiang and Phil Kragel for analysis help. This research was supported in part by National Institute of Mental Health Award R01MH087610 to TE.

References

- Albers AM, Kok P, Toni I, Dijkerman HC, & de Lange FP (2013). Shared Representations for Working Memory and Mental Imagery in Early Visual Cortex. Retrieved from http://www.sciencedirect.com/science/article/pii/S0960982213006908 [DOI] [PubMed]

- Awh E, & Jonides J (2001). Overlapping mechanisms of attention and spatial working memory. Trends in Cognitive Sciences, 5(3), 119–126. [DOI] [PubMed] [Google Scholar]

- Awh E, Vogel EK, & Oh SH (2006). Interactions between attention and working memory. Neuroscience, 139(1), 201–208. [DOI] [PubMed] [Google Scholar]

- Barrouillet P, Portrat S, & Camos V (2011). On the law relating processing to storage in working memory. Psychological Review, 118(2), 175. [DOI] [PubMed] [Google Scholar]

- Bertolero MA, Yeo BTT, & D’Esposito M (2015). The modular and integrative functional architecture of the human brain. Proceedings of the National Academy of Sciences, 201510619. 10.1073/pnas.1510619112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bettencourt KC, & Xu Y (2015). Decoding the content of visual short-term memory under distraction in occipital and parietal areas. Nature Neuroscience. 10.1038/nn.4174 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brady TF, Störmer VS, & Alvarez GA (2016). Working memory is not fixed-capacity: More active storage capacity for real-world objects than for simple stimuli. Proceedings of the National Academy of Sciences, 113(27), 7459–7464. 10.1073/pnas.1520027113 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brainard DH (1997). The Psychophysics Toolbox. Spatial Vision, 10(4), 433–436. 10.1163/156856897X00357 [DOI] [PubMed] [Google Scholar]

- Chao LL, Haxby JV, & Martin A (1999). Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nature Neuroscience, 2(10), 913–919. 10.1038/13217 [DOI] [PubMed] [Google Scholar]

- Christophel TB, Hebart MN, & Haynes J-D (2012). Decoding the Contents of Visual Short-Term Memory from Human Visual and Parietal Cortex. The Journal of Neuroscience, 32(38), 12983–12989. 10.1523/JNEUROSCI.0184-12.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Christophel TB, Klink PC, Spitzer B, Roelfsema PR, & Haynes J-D (2017). The Distributed Nature of Working Memory. Trends in Cognitive Sciences, 21(2), 111–124. 10.1016/j.tics.2016.12.007 [DOI] [PubMed] [Google Scholar]

- Chun MM (2011). Visual working memory as visual attention sustained internally over time. Neuropsychologia, 49(6), 1407–1409. 10.1016/j.neuropsychologia.2011.01.029 [DOI] [PubMed] [Google Scholar]

- Chun MM, & Johnson MK (2011). Memory: Enduring Traces of Perceptual and Reflective Attention. Neuron, 72(4), 520–535. 10.1016/j.neuron.2011.10.026 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Constantinidis C, & Klingberg T (2016). The neuroscience of working memory capacity and training. Nature Reviews Neuroscience, 17(7), 438–449. 10.1038/nrn.2016.43 [DOI] [PubMed] [Google Scholar]

- Curtis CE, & D’Esposito M (2003). Persistent activity in the prefrontal cortex during working memory. Trends in Cognitive Sciences, 7(9), 415–423. 10.1016/S1364-6613(03)00197-9 [DOI] [PubMed] [Google Scholar]

- Dale AM (1999). Optimal experimental design for event-related fMRI. Human Brain Mapping, 8(2–3), 109–114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Derrfuss J, Ekman M, Hanke M, Tittgemeyer M, & Fiebach CJ (2017). Distractor-resistant Short-Term Memory Is Supported by Transient Changes in Neural Stimulus Representations. Journal of Cognitive Neuroscience, 1–19. 10.1162/jocn_a_01141 [DOI] [PubMed] [Google Scholar]

- D’Esposito M, & Postle BR (2015). The Cognitive Neuroscience of Working Memory. Annual Review of Psychology, 66(1), 115–142. 10.1146/annurev-psych-010814-015031 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Downing PE, Jiang Y, Shuman M, & Kanwisher N (2001). A Cortical Area Selective for Visual Processing of the Human Body. Science, 293(5539), 2470–2473. 10.1126/science.1063414 [DOI] [PubMed] [Google Scholar]

- Emrich SM, Riggall AC, LaRocque JJ, & Postle BR (2013). Distributed Patterns of Activity in Sensory Cortex Reflect the Precision of Multiple Items Maintained in Visual Short-Term Memory. The Journal of Neuroscience, 33(15), 6516–6523. 10.1523/JNEUROSCI.5732-12.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Endl W, Walla P, Lindinger G, Lalouschek W, Barth FG, Deecke L, & Lang W (1998). Early cortical activation indicates preparation for retrieval of memory for faces: An event-related potential study. Neuroscience Letters, 240(1), 58–60. 10.1016/S0304-3940(97)00920-8 [DOI] [PubMed] [Google Scholar]

- Endress AD, & Potter MC (2014). Large capacity temporary visual memory. Journal of Experimental Psychology: General, 143(2), 548. 10.1037/a0033934 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Epstein R, & Kanwisher N (1998). A cortical representation of the local visual environment. Nature, 392(6676), 598–601. 10.1038/33402 [DOI] [PubMed] [Google Scholar]

- Erickson MA, Maramara LA, & Lisman J (2009). A Single Brief Burst Induces GluR1-dependent Associative Short-term Potentiation: A Potential Mechanism for Short-term Memory. Journal of Cognitive Neuroscience, 22(11), 2530–2540. 10.1162/jocn.2009.21375 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eriksson J, Vogel EK, Lansner A, Bergström F, & Nyberg L (2015). Neurocognitive Architecture of Working Memory. Neuron, 88(1), 33–46. 10.1016/j.neuron.2015.09.020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ester EF, Anderson DE, Serences JT, & Awh E (2013). A Neural Measure of Precision in Visual Working Memory. Journal of Cognitive Neuroscience, 25(5), 754–761. 10.1162/jocn_a_00357 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ester EF, Sprague TC, & Serences JT (2015). Parietal and Frontal Cortex Encode Stimulus-Specific Mnemonic Representations during Visual Working Memory. Neuron, 87(4), 893–905. 10.1016/j.neuron.2015.07.013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- de Fockert JW, Rees G, Frith CD, & Lavie N (2001). The Role of Working Memory in Visual Selective Attention. Science, 291(5509), 1803–1806. 10.1126/science.1056496 [DOI] [PubMed] [Google Scholar]

- Fougnie D, & Marois R (2009). Attentive tracking disrupts feature binding in visual working memory. Visual Cognition, 17(1–2), 48–66. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gayet S, Guggenmos M, Christophel TB, Haynes J-D, Paffen CLE, Van der Stigchel S, & Sterzer P (2017). Visual Working Memory Enhances the Neural Response to Matching Visual Input. Journal of Neuroscience, 37(28), 6638–6647. 10.1523/JNEUROSCI.3418-16.2017 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gazzaley A, Cooney JW, Rissman J, & D’Esposito M (2005). Top-down suppression deficit underlies working memory impairment in normal aging. Nature Neuroscience, 8(10), 1298–1300. 10.1038/nn1543 [DOI] [PubMed] [Google Scholar]

- Gazzaley A, & Nobre AC (2012). Top-down modulation: bridging selective attention and working memory. Trends in Cognitive Sciences, 16(2), 129–135. 10.1016/j.tics.2011.11.014 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gratton G, Coles MGH, & Donchin E (1992). Optimizing the use of information: Strategic control of activation of responses. Journal of Experimental Psychology: General, 121(4), 480–506. 10.1037/0096-3445.121.4.480 [DOI] [PubMed] [Google Scholar]

- Gunseli E, Olivers CNL, & Meeter M (2015). Task-Irrelevant Memories Rapidly Gain Attentional Control With Learning. Journal of Experimental Psychology: Human Perception and Performance, No Pagination Specified. 10.1037/xhp0000134 [DOI] [PubMed] [Google Scholar]

- Harrison SA, & Tong F (2009). Decoding reveals the contents of visual working memory in early visual areas. Nature, 458(7238), 632–635. 10.1038/nature07832

- Haynes J-D, Sakai K, Rees G, Gilbert S, Frith C, & Passingham RE (2007). Reading Hidden Intentions in the Human Brain. Current Biology, 17(4), 323–328. 10.1016/j.cub.2006.11.072

- Hollingworth A, & Maxcey-Richard AM (2012). Selective Maintenance in Visual Working Memory Does Not Require Sustained Visual Attention. Journal of Experimental Psychology: Human Perception and Performance. 10.1037/a0030238

- Ikkai A, & Curtis CE (2011). Common neural mechanisms supporting spatial working memory, attention and motor intention. Neuropsychologia, 49(6), 1428–1434. 10.1016/j.neuropsychologia.2010.12.020

- Jerde TA, Merriam EP, Riggall AC, Hedges JH, & Curtis CE (2012). Prioritized Maps of Space in Human Frontoparietal Cortex. The Journal of Neuroscience, 32(48), 17382–17390. 10.1523/JNEUROSCI.3810-12.2012

- Jha AP, Fabian SA, & Aguirre GK (2004). The role of prefrontal cortex in resolving distractor interference. Cognitive, Affective, & Behavioral Neuroscience, 4(4), 517–527. 10.3758/CABN.4.4.517

- Jha AP, & Kiyonaga A (2010). Working-memory-triggered dynamic adjustments in cognitive control. Journal of Experimental Psychology: Learning, Memory, and Cognition, 36(4), 1036–1042. 10.1037/a0019337

- Kanade T, Cohn JF, & Tian Y (2000). Comprehensive database for facial expression analysis. In Fourth IEEE International Conference on Automatic Face and Gesture Recognition, 2000. Proceedings (pp. 46–53). 10.1109/AFGR.2000.840611

- Kanwisher N, McDermott J, & Chun MM (1997). The Fusiform Face Area: A Module in Human Extrastriate Cortex Specialized for Face Perception. The Journal of Neuroscience, 17(11), 4302–4311.

- Kim G, Lewis-Peacock JA, Norman KA, & Turk-Browne NB (2014). Pruning of memories by context-based prediction error. Proceedings of the National Academy of Sciences, 111(24), 8997–9002. 10.1073/pnas.1319438111

- Kim SY, Kim MS, & Chun MM (2005). Concurrent working memory load can reduce distraction. Proceedings of the National Academy of Sciences of the United States of America, 102(45), 16524.

- Kiyonaga A, & Egner T (2013). Working memory as internal attention: Toward an integrative account of internal and external selection processes. Psychonomic Bulletin & Review, 20(2), 228–242. 10.3758/s13423-012-0359-y

- Kiyonaga A, & Egner T (2014). Resource-sharing between internal maintenance and external selection modulates attentional capture by working memory content. Frontiers in Human Neuroscience, 8, 670. 10.3389/fnhum.2014.00670

- Kiyonaga A, Egner T, & Soto D (2012). Cognitive control over working memory biases of selection. Psychonomic Bulletin & Review, 19(4), 639–646. 10.3758/s13423-012-0253-7

- Kriegeskorte N, Goebel R, & Bandettini P (2006). Information-based functional brain mapping. Proceedings of the National Academy of Sciences of the United States of America, 103(10), 3863–3868. 10.1073/pnas.0600244103

- Kuhn M (2008). Caret package. Journal of Statistical Software, 28(5), 1–26.

- LaBar KS, Gitelman DR, Parrish TB, & Mesulam M-M (1999). Neuroanatomic Overlap of Working Memory and Spatial Attention Networks: A Functional MRI Comparison within Subjects. NeuroImage, 10(6), 695–704. 10.1006/nimg.1999.0503

- Lara AH, & Wallis JD (2015). The Role of Prefrontal Cortex in Working Memory: A Mini Review. Frontiers in Systems Neuroscience, 9, 173. 10.3389/fnsys.2015.00173

- LaRocque JJ, Eichenbaum AS, Starrett MJ, Rose NS, Emrich SM, & Postle BR (2014). The short- and long-term fates of memory items retained outside the focus of attention. Memory & Cognition, 43(3), 453–468. 10.3758/s13421-014-0486-y

- LaRocque JJ, Lewis-Peacock JA, Drysdale AT, Oberauer K, & Postle BR (2012). Decoding Attended Information in Short-term Memory: An EEG Study. Journal of Cognitive Neuroscience, 25(1), 127–142. 10.1162/jocn_a_00305

- LaRocque JJ, Riggall AC, Emrich SM, & Postle BR (2016). Within-Category Decoding of Information in Different Attentional States in Short-Term Memory. Cerebral Cortex. 10.1093/cercor/bhw283

- Lavie N (2005). Distracted and confused?: Selective attention under load. Trends in Cognitive Sciences, 9(2), 75–82. 10.1016/j.tics.2004.12.004

- Lavie N, Hirst A, de Fockert JW, & Viding E (2004). Load Theory of Selective Attention and Cognitive Control. Journal of Experimental Psychology: General, 133(3), 339–354. 10.1037/0096-3445.133.3.339

- Lee S-H, & Baker CI (2016). Multi-Voxel Decoding and the Topography of Maintained Information During Visual Working Memory. Frontiers in Systems Neuroscience, 2. 10.3389/fnsys.2016.00002

- Lewis-Peacock JA, Drysdale AT, Oberauer K, & Postle BR (2012). Neural evidence for a distinction between short-term memory and the focus of attention. Journal of Cognitive Neuroscience, 24(1), 61–79. 10.1162/jocn_a_00140

- Lewis-Peacock JA, Drysdale AT, & Postle BR (2014). Neural Evidence for the Flexible Control of Mental Representations. Cerebral Cortex, bhu130. 10.1093/cercor/bhu130

- Lewis-Peacock JA, & Norman KA (2014). Competition between items in working memory leads to forgetting. Nature Communications, 5. 10.1038/ncomms6768

- Lorenc ES, Lee TG, Chen AJ-W, & D’Esposito M (2015). The Effect of Disruption of Prefrontal Cortical Function with Transcranial Magnetic Stimulation on Visual Working Memory. Frontiers in Systems Neuroscience, 9. 10.3389/fnsys.2015.00169

- Lundqvist D, Flykt A, & Ohman A (1998). The Karolinska directed emotional faces (KDEF). CD ROM from Department of Clinical Neuroscience, Psychology Section, Karolinska Institutet, (91–630).

- Maldjian JA, Laurienti PJ, Kraft RA, & Burdette JH (2003). An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. NeuroImage, 19(3), 1233–1239. 10.1016/S1053-8119(03)00169-1

- Mayer JS, Bittner RA, Nikolic D, Bledowski C, Goebel R, & Linden DE (2007). Common neural substrates for visual working memory and attention. NeuroImage, 36(2), 441–453.

- Meyers EM, Freedman DJ, Kreiman G, Miller EK, & Poggio T (2008). Dynamic Population Coding of Category Information in Inferior Temporal and Prefrontal Cortex. Journal of Neurophysiology, 100(3), 1407–1419. 10.1152/jn.90248.2008

- Minear M, & Park DC (2004). A lifespan database of adult facial stimuli. Behavior Research Methods, Instruments, & Computers, 36(4), 630–633. 10.3758/BF03206543

- Myers NE, Rohenkohl G, Wyart V, Woolrich MW, Nobre AC, & Stokes MG (2015). Testing sensory evidence against mnemonic templates. eLife, 4, e09000. 10.7554/eLife.09000

- Nee DE, & Jonides J (2009). Common and distinct neural correlates of perceptual and memorial selection. NeuroImage, 45(3), 963–975. 10.1016/j.neuroimage.2009.01.005

- Niendam TA, Laird AR, Ray KL, Dean YM, Glahn DC, & Carter CS (2012). Meta-analytic evidence for a superordinate cognitive control network subserving diverse executive functions. Cognitive, Affective, & Behavioral Neuroscience, 12(2), 241–268. 10.3758/s13415-011-0083-5

- Nobre AC, Coull JT, Maquet P, Frith CD, Vandenberghe R, & Mesulam MM (2004). Orienting Attention to Locations in Perceptual Versus Mental Representations. Journal of Cognitive Neuroscience, 16(3), 363–373. 10.1162/089892904322926700

- Olivers CNL, Peters J, Houtkamp R, & Roelfsema PR (2011). Different states in visual working memory: when it guides attention and when it does not. Trends in Cognitive Sciences. 10.1016/j.tics.2011.05.004

- Oosterhof NN, & Todorov A (2008). The functional basis of face evaluation. Proceedings of the National Academy of Sciences, 105(32), 11087–11092. 10.1073/pnas.0805664105

- Postle BR (2005). Delay-period activity in the prefrontal cortex: one function is sensory gating. Journal of Cognitive Neuroscience, 17(11), 1679–1690. 10.1162/089892905774589208

- Postle BR (2015). The cognitive neuroscience of visual short-term memory. Current Opinion in Behavioral Sciences, 1, 40–46. 10.1016/j.cobeha.2014.08.004

- Power JD, & Petersen SE (2013). Control-related systems in the human brain. Current Opinion in Neurobiology, 23(2), 223–228. 10.1016/j.conb.2012.12.009

- Riggall AC, & Postle BR (2012). The Relationship between Working Memory Storage and Elevated Activity as Measured with Functional Magnetic Resonance Imaging. The Journal of Neuroscience, 32(38), 12990–12998. 10.1523/JNEUROSCI.1892-12.2012

- Rose NS, LaRocque JJ, Riggall AC, Gosseries O, Starrett MJ, Meyering EE, & Postle BR (2016). Reactivation of latent working memories with transcranial magnetic stimulation. Science, 354(6316), 1136–1139. 10.1126/science.aah7011

- Serences JT, Ester EF, Vogel EK, & Awh E (2009). Stimulus-Specific Delay Activity in Human Primary Visual Cortex. Psychological Science, 20(2), 207–214. 10.1111/j.1467-9280.2009.02276.x

- Soto D, Humphreys GW, & Rotshtein P (2007). Dissociating the neural mechanisms of memory-based guidance of visual selection. Proceedings of the National Academy of Sciences, 104(43), 17186.

- Sprague TC, Ester EF, & Serences JT (2016). Restoring Latent Visual Working Memory Representations in Human Cortex. Neuron, 91(3), 694–707. 10.1016/j.neuron.2016.07.006

- Sreenivasan KK, Curtis CE, & D’Esposito M (2014). Revisiting the role of persistent neural activity during working memory. Trends in Cognitive Sciences, 18(2), 82–89. 10.1016/j.tics.2013.12.001

- Sreenivasan KK, Vytlacil J, & D’Esposito M (2014). Distributed and Dynamic Storage of Working Memory Stimulus Information in Extrastriate Cortex. Journal of Cognitive Neuroscience, 26(5), 1141–1153. 10.1162/jocn_a_00556

- Stokes MG (2015). “Activity-silent” working memory in prefrontal cortex: a dynamic coding framework. Trends in Cognitive Sciences, 19(7), 394–405. 10.1016/j.tics.2015.05.004

- Stokes MG, Kusunoki M, Sigala N, Nili H, Gaffan D, & Duncan J (2013). Dynamic Coding for Cognitive Control in Prefrontal Cortex. Neuron, 78(2), 364–375. 10.1016/j.neuron.2013.01.039

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, … Nelson C (2009). The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research, 168(3), 242–249. 10.1016/j.psychres.2008.05.006

- Treisman AM, & Gelade G (1980). A feature-integration theory of attention. Cognitive Psychology, 12(1), 97–136. 10.1016/0010-0285(80)90005-5

- van Moorselaar D, Olivers CNL, Theeuwes J, Lamme VAF, & Sligte IG (2015). Forgotten But Not Gone: Retro-Cue Costs and Benefits in a Double-Cueing Paradigm Suggest Multiple States in Visual Short-Term Memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 10.1037/xlm0000124

- Vogel EK, Woodman GF, & Luck SJ (2006). The time course of consolidation in visual working memory. Journal of Experimental Psychology: Human Perception and Performance, 32(6), 1436–1451. 10.1037/0096-1523.32.6.1436

- Waskom ML, Kumaran D, Gordon AM, Rissman J, & Wagner AD (2014). Frontoparietal Representations of Task Context Support the Flexible Control of Goal-Directed Cognition. Journal of Neuroscience, 34(32), 10743–10755. 10.1523/JNEUROSCI.5282-13.2014

- Wolff MJ, Ding J, Myers NE, & Stokes MG (2015). Revealing hidden states in visual working memory using electroencephalography. Frontiers in Systems Neuroscience, 9. 10.3389/fnsys.2015.00123

- Wolff MJ, Jochim J, Akyürek EG, & Stokes MG (2017). Dynamic hidden states underlying working-memory-guided behavior. Nature Neuroscience, 20(6), 864–871. 10.1038/nn.4546

- Woodman GF, & Luck SJ (2007). Do the contents of visual working memory automatically influence attentional selection during visual search? Journal of Experimental Psychology: Human Perception and Performance, 33(2), 363.

- Woodman GF, Vogel EK, & Luck SJ (2001). Visual Search Remains Efficient When Visual Working Memory Is Full. Psychological Science, 12(3), 219–224.