Abstract

Successful negotiation of obstacles during walking relies on the integration of visual information about the environment with ongoing locomotor commands. When information about the body and the environment is removed through occlusion of the lower visual field, individuals increase downward head pitch angle, reduce foot placement precision, and increase safety margins during crossing. However, whether these effects are mediated by loss of visual information about the lower extremities, the obstacle, or both remains to be seen. Here we used a fully immersive, virtual obstacle negotiation task to investigate how visual information about the lower extremities is integrated with information about the environment to facilitate skillful obstacle negotiation. Participants stepped over virtual obstacles while walking on a treadmill with one of three types of visual feedback about the lower extremities: no feedback, end-point feedback, and a link-segment model. We found that absence of visual information about the lower extremities led to an increase in the variability of leading foot placement after crossing. The presence of a visual representation of the lower extremities promoted greater downward head pitch angle during the approach to and subsequent crossing of an obstacle. In addition, having greater downward head pitch was associated with closer placement of the trailing foot to the obstacle, further placement of the leading foot after the obstacle, and higher trailing foot clearance. These results demonstrate that the fidelity of visual information about the lower extremities influences both feedforward and feedback aspects of visuomotor coordination during obstacle negotiation.

NEW & NOTEWORTHY Here we demonstrate that visual information about the lower extremities is utilized for precise foot placement and control of safety margins during obstacle negotiation. We also found that when a visual representation of the lower extremities is present, this information is used in the online control of foot trajectory. Together, our results highlight how visual information about the body and the environment is integrated with motor commands for planning and online control of obstacle negotiation.

Keywords: locomotion, obstacle negotiation, virtual reality, visuomotor coordination

INTRODUCTION

Locomotor skills such as obstacle negotiation depend on the integration of visual information about the body and the environment with ongoing motor commands. Although people typically focus on the travel path during approach to impending obstacles (Patla and Vickers 1997), the amount of time spent fixating an obstacle varies with obstacle height (Patla and Vickers 1997). This suggests that there is an important relationship between visual information about impending obstacles and preparation for obstacle crossing. The role of visual information during obstacle negotiation has commonly been studied by occluding portions of the visual field during the approach to an impending obstacle. By occluding available visual information during the penultimate step before an obstacle, several studies have found that individuals decrease precision by making more collisions (Patla and Greig 2006; Rietdyk and Rhea 2011) and increasing foot placement variability (Patla and Greig 2006) compared with trials with full vision (Matthis et al. 2015; Matthis and Fajen 2014; Patla 1998). People also attempt to increase safety margins by lifting their legs higher (Graci et al. 2010; Mohagheghi et al. 2004; Rhea and Rietdyk 2007; Rietdyk and Rhea 2006; Timmis and Buckley 2012) and placing their leading and trailing feet further away from the obstacles (Mohagheghi et al. 2004; Rhea and Rietdyk 2007; Rietdyk and Rhea 2006; Timmis and Buckley 2012).

The effects of restricting visual information on obstacle negotiation performance may result from a lack of spatial information about the environment (exteroceptive information) and/or a lack of information about the body’s state relative to the environment (exproprioceptive information). Foot placement is planned in a feedforward manner ~2–2.5 steps before an obstacle or target based on exteroceptive information about the obstacle’s spatial location (Matthis et al. 2015; Matthis and Fajen 2014; Timmis and Buckley 2012). This suggests that restricting the view of the environment >2.5 steps before the obstacle may impair planning and result in a higher collision rate. In addition, leading foot clearance is fine-tuned in a feedback manner during obstacle crossing with exproprioceptive information about the leading leg’s location relative to the obstacle (Patla 1998; Rhea and Rietdyk 2007; Rietdyk and Rhea 2006). In this case, loss of visual information about the position of the lower extremities relative to an impending obstacle would likely impair the online control of the leading limb. Although previous studies provide clues about how visual information is used to guide obstacle negotiation, these studies often removed visual information about the environment and the body simultaneously. As a result, it remains to be seen how visual information about the body is integrated with information about the environment to facilitate skillful obstacle negotiation.

Obtaining useful visual information about the environment requires coordination of gaze behavior with ongoing locomotor commands. Visual input is dependent on how we orient our head and where we fixate our eyes while we walk. Although directly fixating a target or obstacle provides high-resolution spatial information, peripheral vision is often sufficient for guiding obstacle negotiation (Franchak and Adolph 2010; Marigold et al. 2007; Timmis et al. 2016). The human visual field is ~135° in the vertical direction (Harrington 1981), and, as a result, we maintain visual access to the ground ~75 cm in front of our feet when standing (Franchak and Adolph 2010) if the head and eyes are in a neutral position. During locomotion over uneven terrain, individuals rotate their heads down slightly, ~16° from neutral (Marigold and Patla 2008), which brings the lower edge of the field of view ~22 cm from the body. This suggests that the leading limb may be within the field of view during walking and this information could therefore be used for online control during obstacle negotiation.

To better understand how visual information about the body is utilized during locomotion, a number of studies have used immersive virtual reality (VR) with head-mounted displays (HMDs) to investigate how behaviors such as distance estimation and affordance perception are modified in the presence of a visual avatar (Bodenheimer et al. 2007; Bodenheimer and Fu 2015; Leyrer et al. 2011, 2015; Lin 2014; Lin et al. 2015; McManus et al. 2011; Mohler et al. 2008, 2010; Phillips et al. 2010; Renner et al. 2013; Ries et al. 2009; Thompson et al. 2004). Distance estimation in virtual environments becomes more accurate with a full-body avatar compared with having no avatar when assessed verbally (Leyrer et al. 2011) or by having participants walk a specified distance (Mohler et al. 2008, 2010; Phillips et al. 2010; Ries et al. 2009). Moreover, people’s estimate of their ability to step over an object, step down a ledge, or duck below an object most closely matches real-world ability when a first-person avatar is provided (Bodenheimer and Fu 2015; Lin et al. 2015). Given the importance of visual information about the body for improving the perception of distance and estimating performance capability, it is plausible that this information is also used to facilitate obstacle negotiation.

Here we investigated how visual information about the lower extremities influences the coordination between head pitch angle and obstacle negotiation. We used a virtual obstacle negotiation task because this allowed us to have fine experimental control over the fidelity of visual feedback provided to the user. We tested virtual obstacle negotiation performance with three types of visual feedback about the lower extremities: no visual feedback, information about the feet only, and rendering of the feet and legs with a link-segment model. We hypothesized that 1) provision of visual information about the body will lead to reduced variability of foot placement during obstacle negotiation; 2) individuals will increase downward head pitch angle, a proxy for gaze, when approaching obstacles, and this effect will be heightened by the presence of visual information about the lower extremities; 3) greater downward head pitch angle will be associated with lower foot clearance due to higher certainty about the relative positions of the obstacle and the foot; and 4) the relationship between head pitch angle and foot clearance will be strongest in the presence of visual information about the lower extremities. The results of this study highlight how visual information about the lower extremities is integrated with ongoing locomotor behavior to achieve successful obstacle negotiation in VR. Moreover, understanding how visual information about the lower extremities affects obstacle negotiation may help designers of VR-based clinical interventions determine the fidelity of lower body feedback required for users to achieve natural obstacle crossing performance in immersive VR.

METHODS

Participants.

Eighteen healthy young individuals participated in this study [10 men, 8 women; age 26 ± 4 yr (average and standard deviation)]. All participants had normal vision or corrected-to-normal vision. Study procedures were approved by the Institutional Review Board at the University of Southern California, and all participants provided written informed consent before testing began.

Experimental setup.

Participants’ lower extremity kinematics were tracked with infrared-emitting markers (Qualisys) placed on the following landmarks: toe (approximately the 4th metatarsal head), heel (back of a shoe), knee (lateral epicondyle), and hip (greater trochanter). An additional marker was placed on the HMD (Oculus Rift Development Kit 2, Oculus VR) to measure approximate eye height. Marker trajectories were used to control the virtual leg models, and these trajectories were recorded for the duration of the experiment.

Virtual obstacle negotiation task.

The virtual environment was developed with Sketchup (Trimble Navigation), and the participants’ interaction with the environment was controlled with Vizard (WorldViz). The virtual environment consisted of a corridor with obstacles spanning its width (Fig. 1A). A total of 40 virtual obstacles were placed along the corridor at random intervals of between 5 and 10 m. The obstacles had a height and a depth of 0.14 m and 0.10 m, respectively. The virtual environment was displayed within the HMD, which had a 100° horizontal and vertical field of view, a resolution of 960 × 1,080 pixels for each eye, a mass of ~450 g, and 100% binocular overlap.

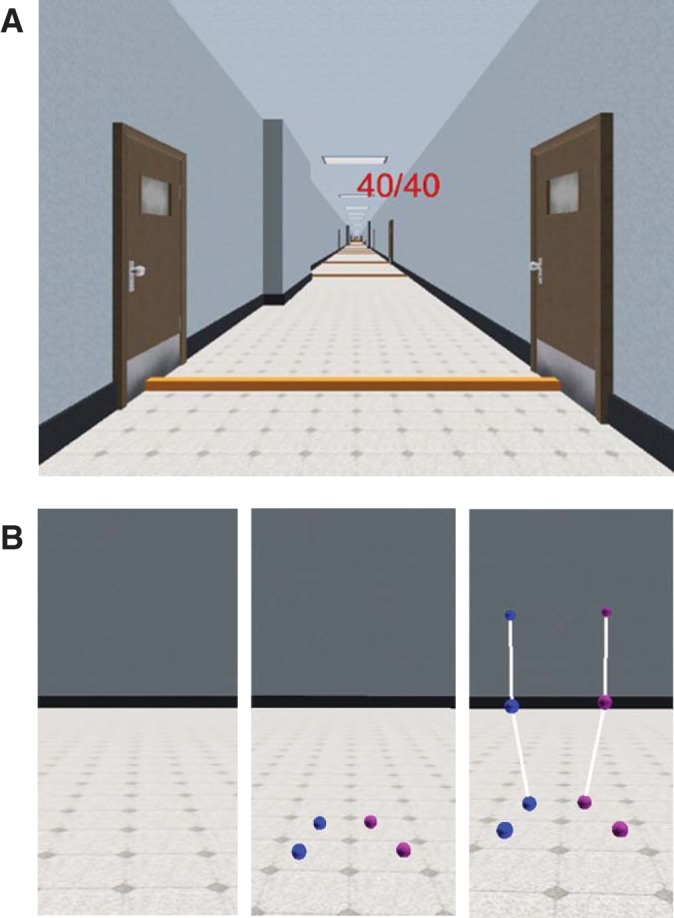

Fig. 1.

A: virtual corridor with obstacles. Participants were able to see their score, and 1 point was deducted for each collision. B: visual feedback conditions from a third-person viewpoint. Spheres represent the position of markers placed on the lower extremities. Segments connecting the spheres were used to provide a visual representation of limb segment length.

Participants viewed the scene through the HMD while walking on a treadmill (Bertec Fully Instrumented Treadmill). The velocity of the scene was synchronized with the treadmill at 1.0 m/s, and the orientation of the viewpoint was controlled by an inertial measurement unit within the HMD. Participants began by walking on the treadmill while wearing the HMD for a period of 1 min to become familiar with the setup (Fig. 2A). During this familiarization trial, no visual information about the lower extremities was provided, and no virtual obstacles were placed in the corridor. Then, participants performed three obstacle negotiation trials and three obstacle-free trials. For each type of trial, three feedback conditions were included: 1) no body model, 2) an end-point foot model, and 3) a link-segment leg model (Fig. 1B). The end-point model was used to determine whether visual information about the end point of the limb improved obstacle negotiation performance. The link-segment model was included because previous studies have observed that the perceived size of the body influences the perceived size of objects in the environment (van der Hoort et al. 2011; van der Hoort and Ehrsson 2016). Hence, the addition of segmental length information could encourage more consistent obstacle negotiation performance. The locations of the models’ joints were determined by marker positions, and thus the models were naturally calibrated to each participant’s anthropometry. Participants viewed the environment and the lower extremity model from a first-person perspective (Fig. 1A).

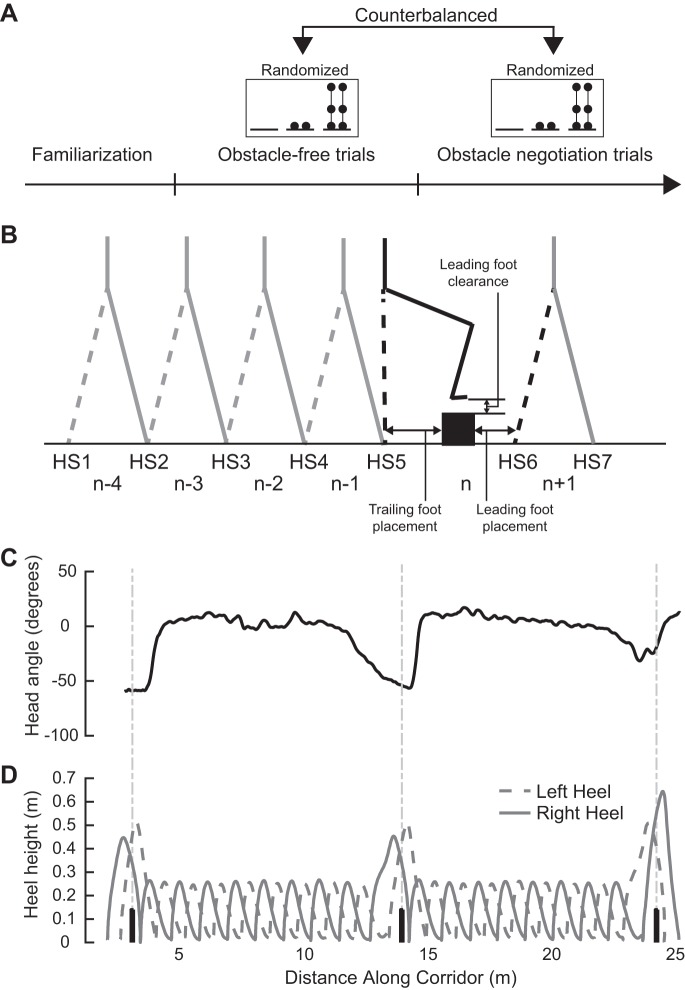

Fig. 2.

A: experimental protocol. Participants were counterbalanced to begin the experiment with either obstacle-free trials or obstacle negotiation trials. The box above each trial represents 3 feedback conditions: 1) no model, as represented by a horizontal line at the floor level; 2) end-point feedback, as represented by 2 dots; and 3) link-segment feedback, as represented by dots at the foot, knee, and hip and sticks connecting the dots. B: schematic showing dependent variables. Solid and dashed lines represent the leading and trailing limbs during obstacle crossing, respectively. Each step number was defined as the time period between 2 consecutive heel strikes (HS). C: representative time series of head pitch angle during an obstacle negotiation trial. The solid black line refers to the head angle. Vertical gray lines refer to points along the corridor where the centers of the obstacles were located. D: representative time series data for heel trajectory. The dashed gray line refers to the left heel trajectory, and the solid gray line refers to the right heel trajectory. Black boxes are scaled to the height and width of the obstacles along the path.

During the obstacle negotiation trials, participants were instructed to avoid collisions with obstacles and to maximize their score. The virtual obstacles had collision sensors capable of detecting when any of the markers entered the obstacle volume. Participants began with a score of 40, and 1 point was deducted for each collision. In addition, the obstacle changed color from brown to blue at the onset of a collision. During the three obstacle-free trials, participants were instructed to walk down the hallway. By examining behavior during trials with and without obstacles, we could determine how the presence of obstacles influenced head pitch angle independent from the effects of lower extremity visual feedback. The order of the feedback conditions was randomized, and the order of the obstacle and obstacle-free trials was counterbalanced across participants (Fig. 2A).
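The scoring rule described above can be sketched in a few lines of Python. This is an illustration only, not the Vizard implementation used in the study: the axis-aligned box test, the names `Obstacle`, `marker_in_obstacle`, and `score_trial`, and the coordinate convention (x along the corridor, z vertical, marker positions already expressed in corridor coordinates) are all assumptions made for the example. The obstacle height and depth are the values reported in the task description.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    x_center: float       # position of the obstacle center along the corridor (m)
    depth: float = 0.10   # extent along the walking direction (m)
    height: float = 0.14  # vertical extent (m)


def marker_in_obstacle(x, z, obstacle):
    """Return True if a marker at (x, z) lies inside the obstacle volume.

    The obstacle spans the corridor width, so only the fore-aft (x) and
    vertical (z) coordinates are checked.
    """
    half_depth = obstacle.depth / 2.0
    inside_x = abs(x - obstacle.x_center) <= half_depth
    inside_z = 0.0 <= z <= obstacle.height
    return inside_x and inside_z


def score_trial(marker_samples, obstacles, start_score=40):
    """Deduct 1 point for each obstacle that any marker sample enters.

    marker_samples: iterable of (x, z) positions pooled over all markers.
    Each obstacle is counted at most once, however many samples fall inside it.
    """
    hit = set()
    for x, z in marker_samples:
        for index, obstacle in enumerate(obstacles):
            if index not in hit and marker_in_obstacle(x, z, obstacle):
                hit.add(index)
    return start_score - len(hit)
```

For example, with two obstacles centered at 5 m and 12 m, marker samples at (4.98, 0.10) and (5.00, 0.12) both fall inside the first obstacle and cost a single point, whereas a sample at (12.0, 0.30) passes above the second obstacle and costs nothing.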

Data recording and analysis.

Toe, heel, and head marker positions were recorded at 100 Hz, and the data were processed in MATLAB R2016b (MathWorks, Natick, MA). We first assessed the effects of lower extremity feedback condition on a set of metrics that characterized obstacle crossing performance. The following dependent variables were calculated to measure obstacle negotiation performance: 1) collision rate, 2) average and standard deviation of foot clearance (the minimum vertical distance between the toe and the obstacle), 3) average and standard deviation of foot placement before the obstacle for the trailing limb (horizontal distance between the toe and the rear edge of the obstacle), and 4) average and standard deviation of foot placement after crossing for the leading limb (horizontal distance between leading foot position and the front edge of the obstacle). Each metric of distance was normalized by each individual’s leg length. The participants’ average leg length (greater trochanter to the floor) was 0.87 ± 0.06 m.
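The definitions of the crossing metrics can be made concrete with a short Python sketch. It is not the MATLAB code used for the analysis. The function names, the restriction of the clearance calculation to samples in which the toe is directly above the obstacle, and the use of a single fore-aft coordinate are assumptions introduced for illustration.

```python
def foot_clearance(toe_x, toe_z, obs_start, obs_end, obs_height=0.14):
    """Minimum vertical toe-to-obstacle distance while the toe is over the obstacle (m).

    toe_x, toe_z: fore-aft and vertical toe marker positions (m), same length.
    obs_start, obs_end: fore-aft positions of the two obstacle edges (m).
    A negative value means the toe passed below the top of the obstacle.
    """
    over = [z - obs_height for x, z in zip(toe_x, toe_z) if obs_start <= x <= obs_end]
    if not over:
        raise ValueError("toe was never sampled above the obstacle")
    return min(over)


def foot_placement(toe_x_at_stance, edge_x):
    """Horizontal distance between the planted toe and an obstacle edge (m)."""
    return abs(toe_x_at_stance - edge_x)


def normalize(distance, leg_length):
    """Express a distance as a fraction of leg length (greater trochanter to floor)."""
    return distance / leg_length
```

Under these assumptions, a toe planted 0.28 m from the obstacle edge corresponds to roughly 0.32 leg lengths for a participant with the group-average leg length of 0.87 m.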

Only successful trials were used for foot clearance and foot placement analyses. Eight of 2,160 obstacles were omitted from our analyses because the software generated false collisions. We also had false negatives in 282/2,160 cases for the leading limb and in 215/2,160 cases for the trailing limb, meaning that there was an actual collision that was recorded as a success. In these cases, we retained the data for our analyses but changed the outcome status of each case to a collision. False positives and false negatives were identified in our data processing when the value of either foot placement or clearance did not match the outcome status. For instance, if an obstacle with a negative value of foot clearance was identified as a success, we checked the raw data by plotting the marker trajectory. If the marker trajectory truly showed a mismatched outcome status, we changed the outcome status to a collision. These mismatches likely resulted from brief lapses in network communication between Qualisys Track Manager and Vizard.
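The screening step can be illustrated as follows. The sketch only flags candidate mismatches from the sign of toe clearance. In the study, each flagged case was confirmed by plotting the marker trajectory, and collisions could be triggered by any marker, so the single-marker check here is a deliberate simplification. The function names and record layout are hypothetical.

```python
def flag_mismatch(recorded_collision, clearance):
    """Classify one obstacle crossing for manual review.

    Returns 'false_negative' when a crossing logged as a success has negative
    clearance, 'false_positive' when a logged collision has positive clearance,
    and 'consistent' otherwise.
    """
    if not recorded_collision and clearance < 0:
        return "false_negative"
    if recorded_collision and clearance > 0:
        return "false_positive"
    return "consistent"


def reconcile(records):
    """Apply the handling described in the text to flagged crossings.

    records: list of dicts with keys 'collision' (bool) and 'clearance' (m).
    False negatives are kept and relabeled as collisions; false positives are
    dropped. Assumes every flag was confirmed by inspecting the raw trajectory.
    """
    kept = []
    for record in records:
        flag = flag_mismatch(record["collision"], record["clearance"])
        if flag == "false_positive":
            continue
        is_collision = record["collision"] or flag == "false_negative"
        kept.append({**record, "collision": is_collision})
    return kept
```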

We used head pitch angle, measured from the inertial measurement unit embedded in the HMD, as an indirect measure of participants’ strategy for acquiring visual information while walking. We quantified the average head pitch angle for the four steps leading up to each obstacle (n − 4, n − 3, n − 2, and n − 1, respectively), the crossing step with the leading limb (n), and the crossing step with the trailing limb (n + 1; Fig. 2B). Averages were computed over the duration of each step (from heel strike on one limb to heel strike on the opposite limb). A head pitch angle of 0° corresponded to having the head in a neutral position, while negative values represented rotation toward the ground (forward pitch). We evaluated how the presence of obstacles influenced acquisition of visual information by comparing head pitch angle during the obstacle crossing trials with the behavior averaged during the respective obstacle-free trials.
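The step-wise averaging can be sketched as below. This assumes that heel strikes have already been detected and are supplied as ascending sample indices. The function names and the dictionary of step labels are hypothetical and are not taken from the original analysis code.

```python
def mean_pitch_per_step(pitch, heel_strikes):
    """Average head pitch within each step.

    pitch: head pitch samples in degrees (negative = pitched toward the ground).
    heel_strikes: ascending sample indices of alternating-limb heel strikes.
    Step k runs from heel_strikes[k] up to, but not including, heel_strikes[k + 1].
    """
    means = []
    for start, end in zip(heel_strikes[:-1], heel_strikes[1:]):
        window = pitch[start:end]
        means.append(sum(window) / len(window))
    return means


def label_steps(step_means, crossing_index):
    """Map step means to the labels n-4 ... n+1 around the leading-limb crossing step."""
    if crossing_index - 4 < 0 or crossing_index + 1 >= len(step_means):
        raise ValueError("not enough steps around the crossing step")
    labels = {}
    for offset in range(-4, 2):
        key = "n" if offset == 0 else f"n{offset:+d}"
        labels[key] = step_means[crossing_index + offset]
    return labels
```

Step means from the obstacle trials can then be compared with the corresponding mean from the obstacle-free trial in the same feedback condition.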

Statistical analysis.

Statistical analyses of all dependent variables were performed with linear mixed-effects (LME) models in R (R Project for Statistical Computing). We tested whether the type of visual information provided about the lower extremities affected the following dependent variables: 1) collision rate, 2) leading and trailing foot placement, 3) leading and trailing foot clearance, 4) variability of leading and trailing foot placement, and 5) variability of leading and trailing foot clearance. We also tested for effects of visual feedback and step number (n − 4, n − 3, n − 2, n − 1, n, n + 1) on head pitch angle. For all models, we included a random intercept for each participant to account for differences between participants, as is typically recommended for longitudinal studies (Seltman 2012). We used a likelihood ratio test to determine whether each model also required random slopes for each explanatory variable.
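For concreteness, the random-intercept model for a crossing metric can be written as below. The notation is introduced here for illustration and is an assumption rather than the authors' own: $y_{ij}$ is the dependent variable for participant $i$ in feedback condition $j$, $\beta_0$ is the mean in the no-model condition, $\beta_j$ is the fixed effect of condition $j$ relative to no model (so that $\beta_{\text{no model}} = 0$), $u_{0i}$ is the participant-specific random intercept, and $\varepsilon_{ij}$ is the residual.

$$
y_{ij} = \beta_0 + \beta_j + u_{0i} + \varepsilon_{ij}, \qquad u_{0i} \sim \mathcal{N}(0, \sigma_u^2), \quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
$$

The test for random slopes compares the maximized log-likelihood of this model, $\ell_{\text{intercept}}$, with that of a model that adds participant-specific slopes, $\ell_{\text{slopes}}$:

$$
\Lambda = -2\left(\ell_{\text{intercept}} - \ell_{\text{slopes}}\right)
$$

The statistic $\Lambda$ is referred to a chi-square distribution with degrees of freedom equal to the number of variance and covariance parameters added by the random slopes.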

Foot clearance and placement of the leading and trailing limbs were tested for associations with head pitch angle one step before crossing to determine how the acquisition of visual information about the environment influenced subsequent control of foot trajectory. Our interests were in the main effect of the head pitch angle on foot clearance/placement variables and the interaction between head pitch angle and visual feedback condition. These models required a random slope for feedback condition in addition to the random intercept for each participant. For all of our statistical analyses, significance was set at the P < 0.05 level. Post hoc comparisons were performed for significant main effects or interactions, with Bonferroni corrections for multiple comparisons. We used the R package lme4 to fit the models, the package lmerTest to calculate model P values with Satterthwaite approximations for the degrees of freedom for the LME analyses, and the package multcomp for multiple comparisons. Satterthwaite approximations adjusted the degrees of freedom based on differences in variance between conditions.
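One way to write the association model, under the same illustrative notation, is shown below. The symbols are assumptions made for this sketch: $y_{ik}$ is the foot placement or clearance of participant $i$ at obstacle $k$, $\theta_{ik}$ is the head pitch angle during the analyzed step, $c$ denotes the feedback condition in effect at that obstacle, $\beta_1$ is the main effect of head pitch (in m/°), $\delta_c$ is the head pitch by condition interaction, and $u_{0i}$ and $u_{c,i}$ are the participant-specific random intercept and random slope for condition.

$$
y_{ik} = \beta_0 + \beta_1 \theta_{ik} + \beta_c + \delta_c\,\theta_{ik} + u_{0i} + u_{c,i} + \varepsilon_{ik}
$$

With $m$ post hoc comparisons, the Bonferroni-corrected value for a comparison with unadjusted value $p$ is

$$
p_{\text{corrected}} = \min(1,\; m\,p)
$$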

RESULTS

Foot placement and clearance.

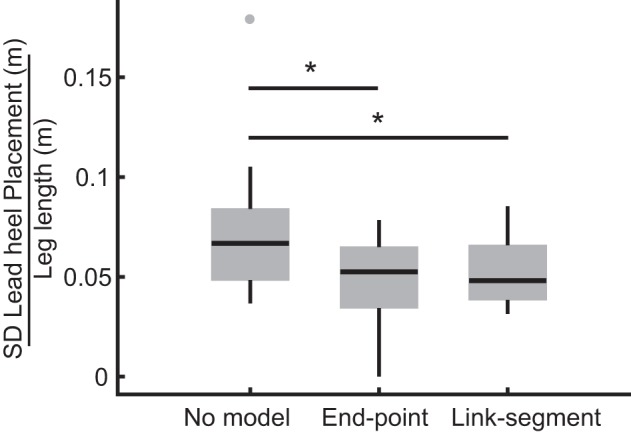

Visual information about the lower extremities had a systematic effect on obstacle crossing behavior. A representative example of the foot trajectory during obstacle negotiation is shown in Fig. 2D. The results of the likelihood ratio tests revealed that all dependent variables including collision rate and foot placement and clearance metrics were better fit without random slopes. We found a significant main effect of the type of visual information provided about the lower extremities (no model, end-point model, or link-segment model) on the variability of leading foot placement [F(2,33) = 4.30, P = 0.02] (Fig. 3). Specifically, the variability of leading foot placement was significantly reduced when end-point (Bonferroni corrected P = 0.03) and link-segment (Bonferroni corrected P = 0.04) feedback were presented compared with no visual feedback. There were no significant differences in any other variables related to obstacle crossing across conditions (Table 1).

Fig. 3.

Box and whisker plot illustrating leading foot placement variability. Horizontal lines within each box indicate median values, and bottom and top boundaries of the box indicate the 25th and 75th percentiles. Points outside each box indicate outliers. Asterisks denote significant differences at the Bonferroni corrected P < 0.05 level.

Table 1.

Average and standard deviation of foot placement and clearance

| Variables | No Model | End Point | Link Segment | P Value |
|---|---|---|---|---|
| Trailing foot placement, m | | | | |
| M | 0.28 ± 0.14 | 0.28 ± 0.14 | 0.29 ± 0.13 | 0.96 |
| SD | 0.11 ± 0.04 | 0.10 ± 0.04 | 0.11 ± 0.04 | 0.85 |
| Leading foot clearance, m | | | | |
| M | 0.15 ± 0.12 | 0.15 ± 0.12 | 0.15 ± 0.11 | 0.86 |
| SD | 0.08 ± 0.04 | 0.07 ± 0.04 | 0.07 ± 0.03 | 0.50 |
| Leading foot placement, m | | | | |
| M | 0.26 ± 0.08 | 0.27 ± 0.07 | 0.25 ± 0.06 | 0.67 |
| SD | 0.06 ± 0.03 | 0.04 ± 0.02* | 0.05 ± 0.02* | 0.02* |
| Trailing foot clearance, m | | | | |
| M | 0.19 ± 0.08 | 0.19 ± 0.10 | 0.20 ± 0.08 | 0.74 |
| SD | 0.06 ± 0.03 | 0.06 ± 0.03 | 0.06 ± 0.02 | 0.89 |

Values are means (M) ± standard deviation (SD).

*P < 0.05, significant difference compared with the no-feedback condition.

We also found that some foot placement metrics differed between success and collision trials. Both the trailing foot before crossing and the leading foot after crossing were placed further from the obstacle during collision trials compared with success trials [F(1,82) = 9.32, P = 0.003 for trailing foot and F(1,82) = 7.02, P = 0.01 for leading foot]. In addition, trailing foot placement variability was higher in collision trials than in success trials [F(2,79) = 3.16, P = 0.048], and this difference was larger in the no-visual feedback condition than in the end point-only condition (Bonferroni corrected P = 0.036).

Collision rate.

Although the type of visual information provided about the lower extremities influenced crossing behavior, it did not affect the overall collision rate or the frequency of each type of collision. There was no significant effect of the type of visual information provided about the lower extremities on collision rates [F(2,34) = 1.01, P = 0.38]. The average number of collisions per trial in the no-model, end-point, and link-segment feedback conditions was 17 (SD 13), 14 (SD 13), and 13 (SD 12), respectively.

Changes in head pitch angle during obstacle negotiation.

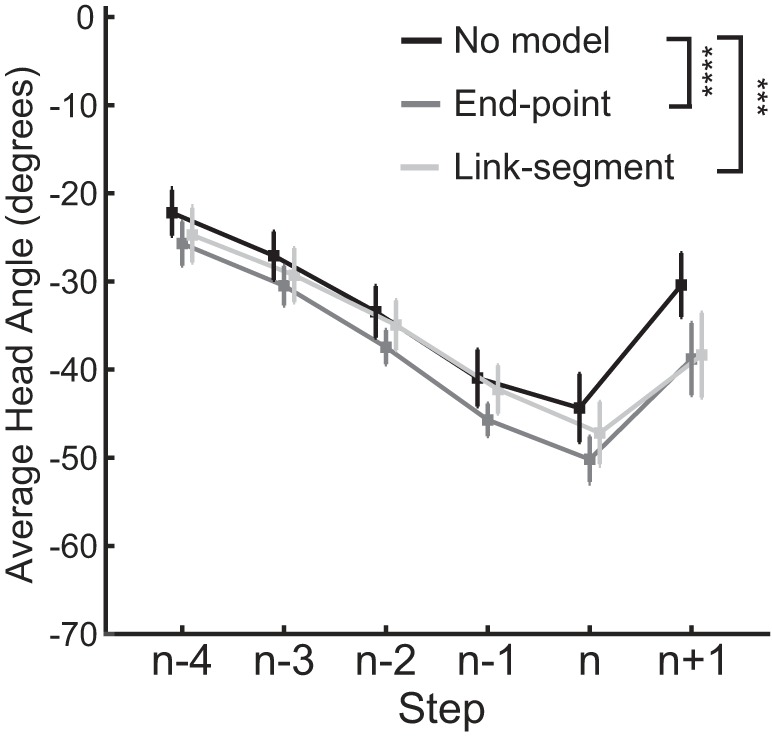

As participants approached and crossed impending obstacles, we observed a consistent time course of changes in head pitch angle. During the approach steps, participants gradually increased downward head pitch angle until they crossed the obstacle with the leading limb (Fig. 2C). After crossing, participants reduced the magnitude of downward head pitch angle toward the level observed in the trials without obstacles. The results of the likelihood ratio test revealed that the model of head pitch angle as a function of the type of visual information provided about the lower extremities and step number required random slopes for each type of visual information in addition to a random intercept for each participant. The model returned significant main effects of the type of visual information provided about the lower extremities [F(2,299) = 14.84, P < 0.001] and step number [F(5,299) = 70.03, P < 0.001] on the head pitch angle (Fig. 4). Post hoc analyses revealed that the magnitude of the head pitch angle at step n − 4 was significantly less than the magnitude at all other steps (all Bonferroni corrected P < 0.05), while the magnitude of the head pitch angle during the crossing step (step n) was significantly greater than at all other steps (all Bonferroni corrected P < 0.05). On average, the magnitude of the head pitch angle was 6° (SD 1°) greater (Bonferroni corrected P < 0.001) with the end-point model and 3° (SD 1°) greater (Bonferroni corrected P = 0.004) with the link-segment model compared with when no visual feedback was provided. These changes in head pitch during approach and crossing were the result of negotiating obstacles rather than the novelty of the lower extremity feedback. This was supported by our findings that the average head pitch angle was between 0 and −5° while walking with no obstacles and that pitch angle did not differ across visual feedback conditions in the no-obstacle trials [F(2,26) = 3.00, P = 0.07]. Finally, there was no difference in head pitch angle during success vs. collision trials.

Fig. 4.

Average head pitch angle as a function of step number and feedback condition. Error bars indicate standard error. The black line refers to the condition when no visual information about lower extremities was provided, the dark gray line refers to the condition when an end-point foot representation was provided, and the light gray line refers to the condition when the link-segment leg representation was provided. ***Bonferroni corrected P < 0.005, ****Bonferroni corrected P < 0.001.

Associations between head pitch angle before crossing and obstacle crossing performance.

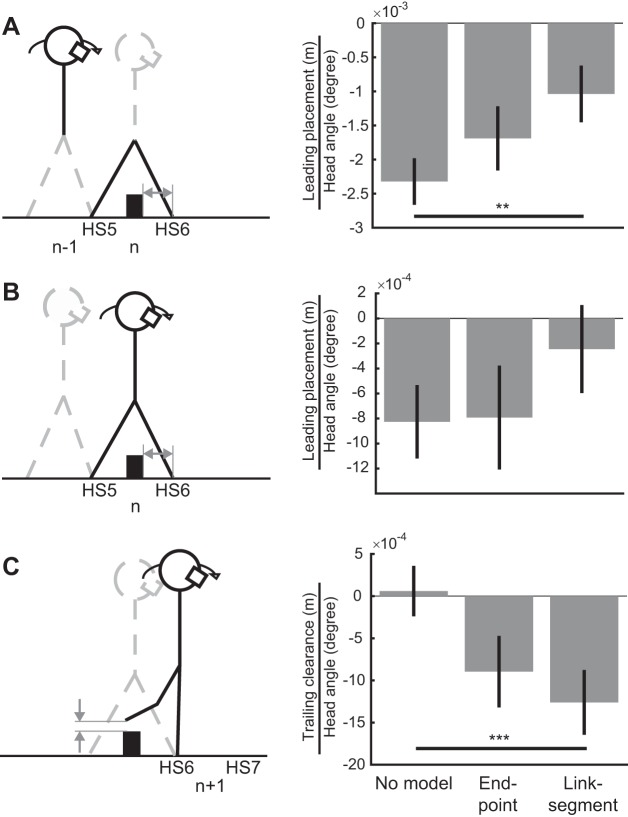

We next investigated whether head pitch angle during the approach to an obstacle was associated with subsequent obstacle crossing performance. Multiple metrics of crossing behavior at the individual obstacle level were predicted by head pitch angle during the approach step. There was a main effect of head pitch angle during the final approach step (n − 1) on trailing foot placement before the obstacle [F(1,1482) = 32.62, P < 0.001, β = 2e-3 m/°, standard error = 4e-4 m/°] and on leading foot placement after the obstacle [F(1,2015) = 82.01, P < 0.001; Fig. 5A]. Specifically, greater downward head pitch angle before crossing was associated with closer placement of the trailing foot to the obstacle and farther placement of the leading foot beyond the obstacle after crossing. There was also an interaction between the type of visual information provided about the lower extremities and head pitch angle during the final approach step (n − 1) on leading foot placement after the obstacle [F(2,609) = 4.92, P = 0.008; Fig. 5A]. The association between head pitch angle and leading foot placement was weaker with link-segment feedback than when no visual feedback about the lower extremities was provided (Bonferroni corrected P = 0.006).

Fig. 5.

Estimated regression coefficients for the dependent variables from linear models describing the relationship between leading foot placement after the obstacle and head angle during the approach step (A), leading foot placement after the obstacle and head angle during lead foot crossing (B), and trailing foot clearance and head angle during trailing foot crossing (C) in each feedback condition. Negative coefficients indicate that greater downward head angle was associated with larger values for each crossing variable. Illustrations on left represent the period analyzed for head angle and the corresponding crossing variable. Solid black lines indicate the analyzed step for head angle (thin black arrows) and the respective obstacle crossing performance variable (solid gray arrows). Error bars represent standard error. HS, heel strike. **Bonferroni corrected P < 0.01, ***Bonferroni corrected P < 0.005.

Associations between head pitch angle during obstacle crossing and crossing strategy.

Crossing performance at the individual obstacle level was also associated with head pitch angle during obstacle crossing. There was a main effect of head pitch angle during leading limb crossing (n) on leading foot placement [F(1,1900) = 14.51, P < 0.001; Fig. 5B]. Specifically, greater downward head pitch angle during crossing was associated with farther placement of the leading foot after crossing. Head pitch angle was also associated with measures of foot clearance. There was a significant main effect of the head pitch angle during the crossing step of the leading limb (n) on trailing foot clearance [F(1,1847) = 41.06, P < 0.001, β = −1e-3 m/°, standard error = 2e-4 m/°] such that greater downward head pitch during the previous step was associated with higher trailing foot clearance. Moreover, there was a main effect of the head pitch angle during trailing foot crossing (n + 1) on trailing foot clearance [F(1,2017) = 16.63, P < 0.001]. Greater downward head pitch angle was associated with higher trailing foot clearance. Finally, there was an interaction between head pitch angle during trailing foot crossing (n + 1) and the type of visual information provided about the lower extremities on trailing foot clearance [F(2,696) = 6.04, P = 0.003; Fig. 5C]. Specifically, the correlation between head pitch angle and concurrent trailing foot clearance was significantly stronger with link-segment feedback compared with no feedback (Bonferroni corrected P = 0.002). Together, these results suggest that visual information about the body and/or the obstacles was used for online control of obstacle negotiation.

DISCUSSION

The objective of this study was to determine how the presence and acquisition of visual information about one’s body influence obstacle negotiation performance in a virtual environment. To this end, we used a VR environment to determine how the provision of different levels of visual information about the lower extremities influenced negotiation of virtual obstacles while walking on a treadmill. We found that the variability of leading foot placement after crossing was reduced when visual information about the foot was provided and we also found that downward head pitch angle was increased when either form of visual feedback was provided. Furthermore, at the individual obstacle level, trailing foot placement before the obstacle, leading limb placement after the obstacle, and trailing foot clearance were associated with head pitch angle before and during crossing. Moreover, the association between trailing foot clearance and head pitch angle during crossing was strongest when the link-segment leg representation was provided. Our results demonstrate that visual information about the lower extremities is integrated with ongoing locomotor commands to modulate acquisition of visual information and foot placement during obstacle negotiation.

Provision of visual information about lower extremities facilitates precise foot placement during obstacle negotiation.

In line with our hypothesis, the precision of leading foot placement increased when visual information about the lower extremities was provided. This agrees with previous research in which the variability of foot placement during real-world obstacle negotiation increased when the lower visual field was occluded (Mohagheghi et al. 2004; Rhea and Rietdyk 2007; Timmis and Buckley 2012). This was also consistent with previous research that found when online visual feedback of end-point trajectory was provided along with proprioceptive feedback, participants reduced movement variability (Franklin et al. 2007). Our finding that foot placement was also more variable during collision trials in the no-visual feedback condition further supports our conclusion that visual information was used to promote more consistent foot placement. Together, these results show that online visual information about both the upper and lower extremities is used to improve the precision of visually guided motor behaviors such as obstacle negotiation.

Coordination between head pitch angle and obstacle crossing performance.

During the approach to impending obstacles, individuals increased their downward head pitch angle until they reached the obstacle and then gradually decreased the head pitch angle after crossing an obstacle. The magnitude and timing of changes in head pitch angle differed from what has previously been reported in the real world. In our task, participants reached a peak head pitch angle of ~40–50°, while previous studies reported that head pitch angle is ~16° while walking over uneven terrain (Marigold and Patla 2008). This discrepancy likely results from the lack of peripheral vision in VR with an HMD. Individuals may have compensated for the narrower vertical field of view in the HMD compared with the real world by increasing their downward head pitch angle to obtain the visual information that would naturally be acquired in the real world. The presence of this compensation suggests that information in the peripheral visual field, including information about the environment and information about the body, is utilized to perform obstacle negotiation.
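The compensation argument can be sketched geometrically. Assuming a simplified model in which the eyes stay centered in the head and the ground is flat, the head pitch needed to bring a ground point into view is the depression angle of that point minus the extent of the lower visual field. The eye height, ground distance, and field-of-view values below are hypothetical and do not describe the HMD or participants in this study.

```python
import math


def required_pitch_deg(eye_height_m, ground_distance_m, lower_half_fov_deg):
    """Downward head pitch (deg) needed for the lower edge of the field of
    view to reach a ground point at the given horizontal distance.

    Simplified model: eyes fixed in the head, flat ground.
    """
    # Angle below horizontal of the line from the eye to the ground point.
    depression = math.degrees(math.atan2(eye_height_m, ground_distance_m))
    # Whatever the lower visual field does not cover must come from head pitch.
    return max(0.0, depression - lower_half_fov_deg)


# Hypothetical values: 1.6 m eye height, ground point 0.5 m ahead.
narrow = required_pitch_deg(1.6, 0.5, 30.0)  # narrower lower field of view
wide = required_pitch_deg(1.6, 0.5, 60.0)    # wider lower field of view
print(f"narrow FOV: {narrow:.1f} deg, wide FOV: {wide:.1f} deg")
```

Under these assumptions, every degree removed from the lower visual field must be recovered by an additional degree of downward head pitch.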

When any visual information about the lower extremities was provided, participants increased head pitch angle relative to the no-model condition. Given the increase in precision of leading foot placement when end-point information was provided, this suggests that the visual information about the lower extremities accessed by increasing head pitch angle was used to guide foot placement. We also found that trailing limb clearance was most strongly coordinated with head pitch angle during crossing when link-segment information was provided. Thus, for a given head pitch angle, participants had a greater safety margin for the trailing limb in the presence, not absence, of visual information about the lower extremities. This finding is inconsistent with previous studies demonstrating that increased downward gaze and increased safety margins are typically observed when the lower visual field is occluded (Marigold and Patla 2008; Mohagheghi et al. 2004; Rhea and Rietdyk 2007; Rietdyk and Rhea 2006; Timmis and Buckley 2012). However, a potential explanation for this discrepancy, one specific to fully immersive VR, is that the absence of a visual representation of the trunk allowed participants in our study to use visual information about the trailing limb to increase the probability of successful clearance. Therefore, future work will need to determine how occlusion of the trailing limb by the trunk influences visuomotor coordination during obstacle negotiation. Greater safety margins may also be a consequence of closer placement of the trailing foot to the obstacle before crossing, as this would require modification of the foot’s trajectory to guarantee successful clearance.

An increase in head pitch angle was also associated with closer placement of the trailing limb to the obstacle, further placement of the leading limb after the obstacle, and higher trailing foot clearance. These associations were observed when head pitch was measured during the step before crossing or during the crossing step, even in the absence of visual information about the lower extremities. Acquiring more visual information about the obstacle may have led our participants to reduce the safety margin associated with trailing foot placement because of increased certainty about the body’s position relative to the obstacle. This would necessarily result in further leading foot placement after the obstacle if participants maintained a consistent step length during obstacle crossing. The increase in trailing foot clearance may have been necessary to reduce the probability of collisions by the trailing foot given the closer placement of the trailing foot to the obstacles. The observation of correlations between head pitch angle during the step before crossing and metrics of obstacle negotiation may reflect the use of visual information about the lower extremities in a feedforward manner to control the trajectory of the lower extremities.
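The step-length argument above can be made explicit with a simple one-dimensional sketch. It assumes that the crossing step length equals the sum of the trailing foot distance before the obstacle, the obstacle depth, and the leading foot distance after the obstacle. This decomposition, the function name, and the numbers are illustrative assumptions and are not the measurement definitions used in this study.

```python
def leading_placement(step_length_m, obstacle_depth_m, trailing_distance_m):
    """Leading foot distance beyond the obstacle for a fixed crossing step.

    Assumes step_length = trailing_distance + obstacle_depth + leading_distance,
    with all distances measured along the direction of travel.
    """
    return step_length_m - obstacle_depth_m - trailing_distance_m


# Hypothetical crossing step of 0.70 m over an obstacle 0.10 m deep.
far_trailing = leading_placement(0.70, 0.10, 0.20)    # trailing foot 0.20 m away
close_trailing = leading_placement(0.70, 0.10, 0.15)  # trailing foot 0.15 m away

print(f"leading placement: {far_trailing:.2f} m vs {close_trailing:.2f} m")
```

With step length held constant, each centimeter by which the trailing foot moves closer to the obstacle shifts the leading foot one centimeter farther beyond it.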

Changes in correlations between obstacle negotiation performance metrics and head pitch angle when a link-segment representation was provided further suggest that visual information about the lower extremities is used during obstacle negotiation. Having a link-segment representation reduced the correlation between leading foot placement after crossing and head pitch angle before crossing compared with when no visual feedback about the lower extremities was provided. This dissociation may indicate that in the absence of visual information about the lower extremities, leading foot placement relies on feedforward planning but when visual information about the lower extremities is available, leading foot placement is more reliant on online control.
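A comparison of this kind can be sketched by computing a correlation coefficient separately for each feedback condition. Pearson's r is used here only as a stand-in, since this section does not specify the statistical model; the condition labels and data values are hypothetical and were chosen so that the two conditions differ.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical per-obstacle data: head pitch before crossing (deg) and
# leading foot placement after crossing (m) for two feedback conditions.
trials = {
    "no_feedback": ([30, 35, 40, 45, 50], [0.20, 0.22, 0.25, 0.27, 0.30]),
    "link_segment": ([30, 35, 40, 45, 50], [0.25, 0.21, 0.27, 0.22, 0.26]),
}

r_by_condition = {
    condition: pearson_r(pitch, placement)
    for condition, (pitch, placement) in trials.items()
}
for condition, r in r_by_condition.items():
    print(f"{condition}: r = {r:.2f}")
```

In this made-up example the coefficient is lower in the link-segment condition, which is the pattern the paragraph above interprets as a shift from feedforward planning toward online control.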

Performance metrics did not differ between success and collision trials. More specifically, foot placement and head pitch angle variables revealed similar patterns in success and collision trials. Although we observed changes in performance based on the amount of information provided about the lower extremities, the use of this information does not appear to differ during successful trials vs. trials with collisions.

Clinical implications.

Because of recent advances in VR technology, cost-effective and accessible VR systems have gained attention as a potential tool for rehabilitation (Arias et al. 2012; Gobron et al. 2015; Kim et al. 2017; Malik et al. 2017). However, there has been little investigation of how features of the virtual environment influence locomotor performance. Our results show that the types of visual information provided about the lower extremities may contribute to different obstacle negotiation strategies. These effects might be more prominent in populations with neurological impairments in which processing of multimodal sensory information is impaired (Malik et al. 2017; Michel et al. 2009; van Hedel et al. 2005). Therefore, careful consideration of how visual information about the lower extremities is provided to and utilized by people with neurological impairments during interactions with a virtual environment may significantly influence the potential utility of VR in rehabilitation.

Limitations.

There are a few primary limitations of this study. First, collision with obstacles was indicated to participants only by binary visual feedback, which may have made it difficult to recognize the timing and side of the collision. This could be remedied in future studies through the provision of specific haptic feedback that is coordinated with the onset of virtual obstacle collision. Second, we did not measure gaze directly but used head pitch angle as a proxy for gaze. Although acquiring visual input partially depends on the orientation of an individual’s head while walking, a direct measure of gaze during obstacle negotiation would provide a more accurate understanding of how visual information is used during obstacle negotiation. Third, we did not use a realistic representation of the body (i.e., an avatar), and this may have affected the observed obstacle negotiation strategy. Although provision of an end-point representation and a sense of vertical scale via segmental information contributed to changes in obstacle negotiation performance, further investigation is required to determine how the inclusion of a realistic avatar would influence crossing behavior. Finally, obstacle negotiation performance in VR may differ from that in the real world, and, as a result, future studies should characterize differences in spatiotemporal coordination and gaze control during obstacle negotiation in real and virtual environments.

Conclusions.

This study aimed to investigate how visual information about one’s own body is utilized during obstacle negotiation in a virtual environment. We presented three levels of visual feedback about the lower extremities in VR and explored how this information influenced participants’ obstacle negotiation strategy. Our results revealed that visual information about the lower extremities promoted more consistent obstacle crossing behavior. Moreover, we observed that individuals actively acquired visual information about the environment by increasing downward head pitch angle during obstacle negotiation. Finally, visual information acquisition strategies were associated with obstacle negotiation performance at the individual obstacle level, and provision of visual information about the lower extremities impacted these associations. Our findings suggest that visual information about the lower extremities is used in planning, execution, and online control during obstacle negotiation. These results support the need to consider the type of visual information provided about the lower extremities when designing locomotor tasks in VR.

GRANTS

This research was supported by National Institute of Child Health and Human Development Grant R21 HD-088342 01 and by an Undergraduate Research Associates Grant from the University of Southern California.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

A.K., Z.Z., and J.M.F. conceived and designed research; A.K. and Z.Z. performed experiments; A.K., K.S.K., and J.M.F. analyzed data; A.K., K.S.K., and J.M.F. interpreted results of experiments; A.K., K.S.K., and J.M.F. prepared figures; A.K., K.S.K., and J.M.F. drafted manuscript; A.K., K.S.K., and J.M.F. edited and revised manuscript; A.K., K.S.K., Z.Z., and J.M.F. approved final version of manuscript.

REFERENCES

- Arias P, Robles-García V, Sanmartín G, Flores J, Cudeiro J. Virtual reality as a tool for evaluation of repetitive rhythmic movements in the elderly and Parkinson’s disease patients. PLoS One 7: e30021, 2012. doi: 10.1371/journal.pone.0030021.

- Bodenheimer B, Fu Q. The effect of avatar model in stepping off a ledge in an immersive virtual environment. In: Proceedings of the ACM SIGGRAPH Symposium on Applied Perception. New York: ACM, 2015, p. 115–118.

- Bodenheimer B, Meng J, Wu H, Narasimham G, Rump B, McNamara TP, Carr TH, Rieser JJ. Distance estimation in virtual and real environments using bisection. In: Proceedings of the 4th Symposium on Applied Perception in Graphics and Visualization. New York: ACM, 2007, p. 35–40.

- Franchak JM, Adolph KE. Visually guided navigation: head-mounted eye-tracking of natural locomotion in children and adults. Vision Res 50: 2766–2774, 2010. doi: 10.1016/j.visres.2010.09.024.

- Franklin DW, So U, Burdet E, Kawato M. Visual feedback is not necessary for the learning of novel dynamics. PLoS One 2: e1336, 2007. doi: 10.1371/journal.pone.0001336.

- Gobron SC, Zannini N, Wenk N, Schmitt C, Charrotton Y, Fauquex A, Lauria M, Degache F, Frischknecht R. Serious games for rehabilitation using head-mounted display and haptic devices. In: Augmented and Virtual Reality. International Conference on Augmented and Virtual Reality. Cham: Springer, 2015, p. 199–219.

- Graci V, Elliott DB, Buckley JG. Utility of peripheral visual cues in planning and controlling adaptive gait. Optom Vis Sci 87: 21–27, 2010. doi: 10.1097/OPX.0b013e3181c1d547.

- Harrington DO. Visual Fields: Textbook and Atlas of Clinical Perimetry (5th ed.). St. Louis: Mosby, 1981.

- Kim A, Darakjian N, Finley JM. Walking in fully immersive virtual environments: an evaluation of potential adverse effects in older adults and individuals with Parkinson’s disease. J Neuroeng Rehabil 14: 16, 2017. doi: 10.1186/s12984-017-0225-2.

- Leyrer M, Linkenauger SA, Bülthoff HH, Mohler BJ. Eye height manipulations: a possible solution to reduce underestimation of egocentric distances in head-mounted displays. ACM Trans Appl Percept 12: 1:1–1:23, 2015. doi: 10.1145/2699254.

- Leyrer M, Linkenauger SA, Bülthoff HH, Kloos U, Mohler B. The influence of eye height and avatars on egocentric distance estimates in immersive virtual environments. In: Proceedings of the ACM SIGGRAPH Symposium on Applied Perception in Graphics and Visualization. New York: ACM, 2011, p. 67–74.

- Lin Q. People’s Perception and Action in Immersive Virtual Environments (IVEs) (PhD thesis). Nashville, TN: Vanderbilt University, 2014.

- Lin Q, Rieser J, Bodenheimer B. Affordance judgments in HMD-based virtual environments: stepping over a pole and stepping off a ledge. ACM Trans Appl Percept 12: 6:1–6:21, 2015. doi: 10.1145/2720020.

- Malik RN, Cote R, Lam T. Sensorimotor integration of vision and proprioception for obstacle crossing in ambulatory individuals with spinal cord injury. J Neurophysiol 117: 36–46, 2017. doi: 10.1152/jn.00169.2016.

- Marigold DS, Patla AE. Visual information from the lower visual field is important for walking across multi-surface terrain. Exp Brain Res 188: 23–31, 2008. doi: 10.1007/s00221-008-1335-7.

- Marigold DS, Weerdesteyn V, Patla AE, Duysens J. Keep looking ahead? Re-direction of visual fixation does not always occur during an unpredictable obstacle avoidance task. Exp Brain Res 176: 32–42, 2007. doi: 10.1007/s00221-006-0598-0.

- Matthis JS, Barton SL, Fajen BR. The biomechanics of walking shape the use of visual information during locomotion over complex terrain. J Vis 15: 10, 2015. doi: 10.1167/15.3.10.

- Matthis JS, Fajen BR. Visual control of foot placement when walking over complex terrain. J Exp Psychol Hum Percept Perform 40: 106–115, 2014. doi: 10.1037/a0033101.

- McManus EA, Bodenheimer B, Streuber S, de la Rosa S, Bülthoff HH, Mohler BJ. The influence of avatar (self and character) animations on distance estimation, object interaction and locomotion in immersive virtual environments. In: Proceedings of the ACM SIGGRAPH Symposium on Applied Perception in Graphics and Visualization. New York: ACM, 2011, p. 37–44.

- Michel J, Benninger D, Dietz V, van Hedel HJ. Obstacle stepping in patients with Parkinson’s disease. Complexity does influence performance. J Neurol 256: 457–463, 2009. doi: 10.1007/s00415-009-0114-0.

- Mohagheghi AA, Moraes R, Patla AE. The effects of distant and on-line visual information on the control of approach phase and step over an obstacle during locomotion. Exp Brain Res 155: 459–468, 2004. doi: 10.1007/s00221-003-1751-7.

- Mohler BJ, Bülthoff HH, Thompson WB, Creem-Regehr SH. A full-body avatar improves egocentric distance judgments in an immersive virtual environment. In: Proceedings of the 5th Symposium on Applied Perception in Graphics and Visualization. New York: ACM, 2008, p. 194.

- Mohler BJ, Creem-Regehr SH, Thompson WB, Bülthoff HH. The effect of viewing a self-avatar on distance judgments in an HMD-based virtual environment. Presence Teleoperators Virtual Environ 19: 230–242, 2010. doi: 10.1162/pres.19.3.230.

- Patla AE. How is human gait controlled by vision. Ecol Psychol 10: 287–302, 1998. doi: 10.1080/10407413.1998.9652686.

- Patla AE, Greig M. Any way you look at it, successful obstacle negotiation needs visually guided on-line foot placement regulation during the approach phase. Neurosci Lett 397: 110–114, 2006. doi: 10.1016/j.neulet.2005.12.016.

- Patla AE, Vickers JN. Where and when do we look as we approach and step over an obstacle in the travel path? Neuroreport 8: 3661–3665, 1997.

- Phillips L, Ries B, Kaeding M, Interrante V. Avatar self-embodiment enhances distance perception accuracy in non-photorealistic immersive virtual environments. In: Proceedings of the 2010 IEEE Virtual Reality Conference. Washington, DC: IEEE Computer Society, 2010, p. 115–1148.

- Renner RS, Velichkovsky BM, Helmert JR. The perception of egocentric distances in virtual environments—a review. ACM Comput Surv 46: 23:1–23:40, 2013.

- Rhea CK, Rietdyk S. Visual exteroceptive information provided during obstacle crossing did not modify the lower limb trajectory. Neurosci Lett 418: 60–65, 2007. doi: 10.1016/j.neulet.2007.02.063.

- Ries B, Interrante V, Kaeding M, Phillips L. Analyzing the effect of a virtual avatar’s geometric and motion fidelity on ego-centric spatial perception in immersive virtual environments. In: Proceedings of the 16th ACM Symposium on Virtual Reality Software and Technology. New York: ACM, 2009, p. 59–66.

- Rietdyk S, Rhea CK. Control of adaptive locomotion: effect of visual obstruction and visual cues in the environment. Exp Brain Res 169: 272–278, 2006. doi: 10.1007/s00221-005-0345-y.

- Rietdyk S, Rhea CK. The effect of the visual characteristics of obstacles on risk of tripping and gait parameters during locomotion. Ophthalmic Physiol Opt 31: 302–310, 2011. doi: 10.1111/j.1475-1313.2011.00837.x.

- Seltman HJ. Experimental Design and Analysis (Online). 2012. http://www.stat.cmu.edu/~hseltman/309/Book/Book.pdf.

- Thompson WB, Willemsen P, Gooch AA, Creem-Regehr SH, Loomis JM, Beall AC. Does the quality of the computer graphics matter when judging distances in visually immersive environments? Presence Teleoperators Virtual Environ 13: 560–571, 2004. doi: 10.1162/1054746042545292.

- Timmis MA, Buckley JG. Obstacle crossing during locomotion: visual exproprioceptive information is used in an online mode to update foot placement before the obstacle but not swing trajectory over it. Gait Posture 36: 160–162, 2012. doi: 10.1016/j.gaitpost.2012.02.008.

- Timmis MA, Scarfe AC, Pardhan S. How does the extent of central visual field loss affect adaptive gait? Gait Posture 44: 55–60, 2016. doi: 10.1016/j.gaitpost.2015.11.008.

- van der Hoort B, Ehrsson HH. Illusions of having small or large invisible bodies influence visual perception of object size. Sci Rep 6: 34530, 2016. doi: 10.1038/srep34530.

- van der Hoort B, Guterstam A, Ehrsson HH. Being Barbie: the size of one’s own body determines the perceived size of the world. PLoS One 6: e20195, 2011. doi: 10.1371/journal.pone.0020195.

- van Hedel HJ, Wirth B, Dietz V. Limits of locomotor ability in subjects with a spinal cord injury. Spinal Cord 43: 593–603, 2005. doi: 10.1038/sj.sc.3101768.