Abstract

Clinical trials and systematic reviews of clinical trials inform healthcare decisions. There is growing concern, however, about results from clinical trials that cannot be reproduced. Reasons for nonreproducibility include that outcomes are defined in multiple ways, results can be obtained using multiple methods of analysis, and trial findings are reported in multiple sources (“multiplicity”). Multiplicity combined with selective reporting can influence dissemination of trial findings and decision-making. In particular, users of evidence might be misled by exposure to selected sources and overly optimistic representations of intervention effects. In this commentary, drawing from our experience in the Multiple Data Sources in Systematic Reviews (MUDS) study and evidence from previous research, we offer practical recommendations to enhance the reproducibility of clinical trials and systematic reviews.

Keywords: Multiplicity, Selective reporting, Reproducibility

Background

Clinical trials and systematic reviews of clinical trials inform healthcare decisions, but there is growing concern that the methods and results of some clinical trials are not reproducible [1]. Poor design, careless execution, and variation in reporting contribute to nonreproducibility [2, 3]. In addition, trials may not be reproducible because trialists have reported their studies selectively [4]. Steps now being taken toward “open science,” such as trial registration and mandatory results reporting [7–13], are designed to enhance reproducibility [5, 6]; however, making trial protocols and results public may lead to a glut of data and sources that few scientists have the resources to explore. This much-needed approach will thus not serve as a panacea for the problem of nonreproducibility.

Goodman and colleagues argue that “multiplicity, combined with incomplete reporting, might be the single largest contributor to the phenomenon of nonreproducibility, or falsity, of published claims [in clinical research]” ([14], p. 4). We define multiplicity in clinical research to include assessing multiple outcomes, using multiple statistical models, and reporting in multiple sources. When multiplicity is used by investigators to selectively report trial design and findings, misleading information is transmitted to evidence users.

Multiplicity was evident in a study we recently conducted, the Multiple Data Sources in Systematic Reviews (MUDS) project [15–19]. In this paper, drawing from our experience in the MUDS study and evidence from previous research, we offer practical recommendations to enhance the reproducibility of clinical trials and systematic reviews.

Multiplicity of outcomes

Choosing appropriate outcomes is a critical step in designing valid and useful clinical trials. An outcome is an event following an intervention that is used to assess its safety and/or efficacy [20]. For randomized controlled trials (RCTs), outcomes should be clinically relevant and important to patients, and they should capture the causal effects of interventions; core outcome sets aim to do this [21].

A clear outcome definition includes the domain (e.g., pain), the specific measurement tool or instrument (e.g., short form of the McGill Pain Questionnaire), the time point of assessment (e.g., 8 weeks), the specific metric used to characterize each participant’s results (e.g., change from baseline to a specific time point), and the method of aggregating data within each group (e.g., mean) (Table 1) [22, 23]. Multiplicity in outcomes occurs when, for one outcome “domain,” there are variations in the other four elements [17]. For example, a trial can collect data on many outcomes under the rubric of “pain,” introducing multiplicity and the possibility for selectively reporting a pain outcome associated with the most favorable results. Likewise, a systematic review may specify only the outcome domain, allowing for variations in all other elements [24].

Table 1. Elements needed to define an outcome

| Element | Description |
|---|---|
| 1. Domain | Title or concept that describes the outcome |
| 2. Specific measure | Tool or instrument that assesses the outcome domain, including the name of the tool or instrument and/or specific diagnostic criteria and ascertainment procedures |
| 3. Time point | When the outcome will be assessed |
| 4. Specific metric | Ways to characterize measurement on each individual (e.g., change in a measurement from baseline to a specific time point) |
| 5. Method of aggregation | Ways to summarize individual-level measurements into group-level statistics for estimating treatment effect, including if the outcome will be treated as a continuous, categorical, or time-to-event variable and, if relevant, the specific cutoff or categories |
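The five elements in Table 1 can be written down as a small data structure, which makes it easy to see when a definition names only the domain. The following is a minimal sketch in Python, not a standard or a tool used in MUDS; the class name `OutcomeDefinition`, its field names, and the `missing_elements` helper are assumptions introduced here for illustration, and the example values are the ones given in the text above.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass(frozen=True)
class OutcomeDefinition:
    """One outcome described by the five elements in Table 1 (hypothetical class)."""

    domain: str
    specific_measure: Optional[str] = None
    time_point: Optional[str] = None
    specific_metric: Optional[str] = None
    method_of_aggregation: Optional[str] = None

    def missing_elements(self) -> List[str]:
        """Return the names of the elements that have not been specified."""
        return [f.name for f in fields(self) if getattr(self, f.name) in (None, "")]


# Fully specified outcome, using the examples given in the text.
complete = OutcomeDefinition(
    domain="pain",
    specific_measure="short form of the McGill Pain Questionnaire",
    time_point="8 weeks",
    specific_metric="change from baseline",
    method_of_aggregation="mean",
)

# Domain only: the other four elements are left open to later choice.
domain_only = OutcomeDefinition(domain="pain")

print(complete.missing_elements())
print(domain_only.missing_elements())
```

A definition with an empty `missing_elements()` list corresponds to a completely defined outcome in the sense of Table 1; anything else leaves room for variation in the unspecified elements.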

To illustrate how “cherry-picking” an outcome can work, in a Pfizer study that compared celecoxib with placebo in osteoarthritis of the knee, the investigators noted, “The WOMAC [Western Ontario and McMaster Universities] pain subscale was the most responsive of all five pain measures. Pain–activity composites resulted in a statistically significant difference between celecoxib and placebo but were not more responsive than pain measures alone. However, a composite responder defined as having 20% improvement in pain or 10% improvement in activity yielded much larger differences between celecoxib and placebo than with pain scores alone” ([25], p. 247).

Multiplicity of analyses and results

The goal of the analysis in an RCT is to draw inferences regarding the intervention effect by contrasting group-level quantities. Numerical contrasts between groups, which are typically ratios (e.g., relative risk) or differences in values (e.g., difference in means), are the results of the trial. There are numerous ways to analyze data for a defined outcome; thus, multiple methods of analysis introduce another dimension of multiplicity in clinical trials [17]. For example, one could analyze data on all or a subset of the participants, use multiple methods for handling missing data, and adjust for different covariates.
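To illustrate how quickly analysis choices multiply for a single defined outcome, the sketch below enumerates every combination of a few options. The option labels are hypothetical examples chosen for illustration and are not taken from any particular trial; the point is only that the number of possible analyses is the product of the number of choices along each dimension.

```python
from itertools import product

# Hypothetical analysis options for ONE defined outcome (illustrative labels only).
analysis_populations = ["all randomized participants", "completers only"]
missing_data_methods = [
    "last observation carried forward",
    "multiple imputation",
    "no imputation",
]
covariate_sets = ["unadjusted", "adjusted for baseline score"]

# Every combination of choices is a distinct analysis that could yield a distinct result.
analysis_specs = [
    {"population": population, "missing_data": method, "covariates": covariates}
    for population, method, covariates in product(
        analysis_populations, missing_data_methods, covariate_sets
    )
]

n_specs = len(analysis_specs)  # 2 * 3 * 2 = 12
print(n_specs)
```

Even with only two or three options per dimension, one outcome admits a dozen analyses, which is why a prespecified plan matters.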

Although it makes sense to conduct a range of analyses to ensure that the findings are robust to different assumptions about the data, performing analyses in multiple ways and obtaining different results can lead to selective reporting of the results that study investigators deem favorable [26, 27].

Multiplicity of sources

Trial results can be reported in multiple places. This creates problems for users because many sources present incomplete or unclear information, and by reporting in multiple sources, investigators may present conflicting results. When we compared all data sources for trials included in the MUDS project, we found that information about trial characteristics and risk of bias often differed across reports, and conflicting information was difficult to disentangle [18]. In addition, important information about certain outcomes was available only in nonpublic sources [17]. Information within databases may also change over time. In trial registries, for example, outcomes may be changed, deleted, or added; although changes are documented in the archives of ClinicalTrials.gov, they are easily overlooked.

The consequences of multiplicity in RCTs

Compared with the number of “domains” in a trial, multiplicity in outcome definitions and methods of analysis may lead to an exponentially larger number of RCT results. This, combined with multiple sources of RCT information, leads to challenges for subsequent evidence synthesis [17].

There are many ways that multiplicity leads to research waste. Arguably, the most prominent example is that when one uses inconsistent outcome definitions across RCTs, trial findings cannot be combined in systematic reviews and meta-analyses even when the individual trials studied the same question [28, 29].

Aggregating results from trials depends on consistency in both outcome domains and the other four elements. Failure to synthesize the quantitative evidence means that health policy, practice guidelines, and healthcare decision-making are not informed by RCT evidence, even though RCTs exist [2, 30, 31]. For example, in a Cochrane Eyes and Vision systematic review and meta-analysis of RCTs examining methods to control inflammation after cataract surgery, 48 trials were eligible for inclusion in the review. However, no trial contributed to the meta-analysis, because the outcome domain “inflammation” was assessed and aggregated inconsistently [32, 33].
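The dependence of pooling on consistent definitions can be sketched as a grouping problem: trials can be combined directly only when they share all five elements. The example below is a simplified illustration with hypothetical trial records (not data from any review), and the function name `group_by_definition` is an assumption introduced here. Strict matching on every element is itself a simplifying assumption; in practice, reviewers sometimes combine different measures of the same domain using standardized effect sizes.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical records: (trial, domain, specific measure, time point, metric, aggregation).
reported: List[Tuple[str, str, str, str, str, str]] = [
    ("Trial A", "pain", "short form of the McGill Pain Questionnaire", "8 weeks",
     "change from baseline", "mean"),
    ("Trial B", "pain", "short form of the McGill Pain Questionnaire", "8 weeks",
     "change from baseline", "mean"),
    ("Trial C", "pain", "visual analogue scale", "8 weeks",
     "change from baseline", "mean"),
    ("Trial D", "pain", "short form of the McGill Pain Questionnaire", "8 weeks",
     "change from baseline", "proportion with at least 50% reduction"),
]


def group_by_definition(records) -> Dict[tuple, List[str]]:
    """Group trial names by their full five-element outcome definition."""
    groups = defaultdict(list)
    for trial, *definition in records:
        groups[tuple(definition)].append(trial)
    return dict(groups)


groups = group_by_definition(reported)

# Only definitions shared by at least two trials can be pooled without further assumptions.
poolable = {definition: trials for definition, trials in groups.items() if len(trials) >= 2}

print(len(groups), "distinct definitions;", len(poolable), "poolable")
```

In this toy example, four trials of the same domain yield three distinct outcome definitions, and only two of the trials can be combined directly.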

Multiplicity combined with selective reporting can mislead decision-making. There is ample evidence that outcomes associated with positive or statistically significant results are more likely to be reported than outcomes associated with negative or null results [4, 34]. Selective reporting can have three types of consequence for a systematic review: (1) a systematic review may fail to locate an entire trial because it remains unpublished (potential for publication bias); (2) a systematic review may locate the trial but fail to locate all outcomes assessed in the trial (potential for bias in selective reporting of outcomes); and (3) a systematic review may locate all outcomes but fail to locate all numerical results (potential for bias in selective reporting of results) [35]. Any of these three types of selective reporting threatens the reproducibility of clinical trials and the validity of systematic reviews because it leads to overly optimistic representations of intervention effects. To improve the reproducibility of clinical trials and systematic reviews, we offer the recommendations outlined below for trialists and systematic reviewers.

Recommendation 1: Trialists should define outcomes using the five-element framework and use core outcome sets whenever possible

Many trials do not define their outcomes completely [23]; yet, simply naming an outcome domain for a trial is insufficient to limit multiplicity, and it invites selective reporting. When outcomes are defined solely in terms of their domains, there is much room for making up multiple outcomes post hoc and cherry-picking favorable results.

In MUDS, we collected data from 21 trials of gabapentin for neuropathic pain. By searching for all sources of information about the trials, we identified 74 reports that described the trial results, including journal articles, conference abstracts, trial registrations, approval packages from the U.S. Food and Drug Administration, and clinical study reports. We also acquired six databases containing individual participant data. For the single outcome domain “pain intensity,” we identified 8 specific measurements (e.g., short form of the McGill Pain Questionnaire, visual analogue scale), 2 specific metrics, and 39 methods of aggregation for an 8-week time window. This resulted in 119 defined outcomes.

Recommendation 2: Trialists should produce and update, as needed, a dated statistical analysis plan (SAP) and communicate the plan to the public

It is possible to obtain multiple results for a single outcome by using different methods of analysis [17, 36]. In MUDS, using gabapentin for neuropathic pain as an example, we identified 4 analysis populations and 5 ways of handling missing data from 21 trials, leading to 287 results for pain intensity at an 8-week time window.

We recommend that trialists produce a SAP before the first participant is randomized, following the recommended guidelines [37]. The International Conference on Harmonisation defines a SAP as “a document that contains a more technical and detailed elaboration of the principal features of the analysis than those described in the protocol, and includes detailed procedures for executing the statistical analysis of the primary and secondary variables and other data” ([38], p. 35). Currently, SAPs are usually prepared for industry-sponsored trials; however, in our opinion, SAPs may not be prepared with the same level of detail for non-industry-sponsored trials [39]. Others have shown that practice varies with regard to SAP content [37]. The National Institutes of Health has a less specific but similar policy, which became effective January 25, 2018 [40].

It is entirely possible that additional analyses might be conducted after the SAP is drafted, such as at the behest of peer reviewers or a data monitoring committee. In cases such as this, investigators should document and date any amendments to the SAP or protocol and communicate post hoc analyses clearly. SAPs should be made publicly available and linked to other trial information (see Recommendation 3).

Recommendation 3: Trialists should make information about trial methods and results public, provide references to the information sources in a trial registry, and keep the list of sources up-to-date

Trial methods and results should be made public so that users can assess the validity of the trial findings. Users of trial information should anticipate that there may be multiple sources associated with a trial. A central indexing system, such as a trial registry, for listing all trial information sources should be available so that systematic reviewers can find multiple sources without unnecessary expenditure of resources.

Recommendation 4: Systematic reviewers should anticipate a multiplicity of outcomes, results, and sources for trials included in systematic reviews and should describe how they will handle such issues before initiating their research

Systematic reviewers sometimes use explicit rules for data extraction and analysis. For example, some systematic reviewers extract outcomes that were measured using the most common scale or instrument for a particular domain. Although such approaches may be reproducible and efficient, they may exclude data that users consider informative. When rules for selecting from among multiple outcomes and results are not prespecified, the choice of data for meta-analysis may be arbitrary or data-driven. In the MUDS example, if we were to pick all possible combinations of the three elements (specific measure, specific metric, and method of aggregation) for a single outcome domain, pain intensity at an 8-week window (i.e., holding domain and time point constant), we could conduct 34 trillion different meta-analyses [18].
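The arithmetic behind such large numbers is a simple product: if a reviewer picks one result from each trial, the number of distinct meta-analyses is the product of the number of candidate results per trial. The sketch below uses hypothetical counts, not the MUDS data, and is not a reconstruction of how the figure cited above was derived.

```python
from math import prod

# Hypothetical number of candidate results per trial for one domain and time window.
results_per_trial = {
    "Trial A": 3,
    "Trial B": 12,
    "Trial C": 1,
    "Trial D": 7,
    "Trial E": 20,
}

# Choosing one result per trial: the choices multiply across trials.
n_meta_analyses = prod(results_per_trial.values())  # 3 * 12 * 1 * 7 * 20 = 5040

# Purely illustrative scale check: 21 trials with only 4 candidate results each.
n_if_four_each = 4 ** 21  # 4,398,046,511,104 (about 4.4 trillion)

print(n_meta_analyses, n_if_four_each)
```

Because the count grows multiplicatively with each additional trial, even modest multiplicity within trials makes an exhaustive set of meta-analyses impractical, which is one reason to prespecify selection rules.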

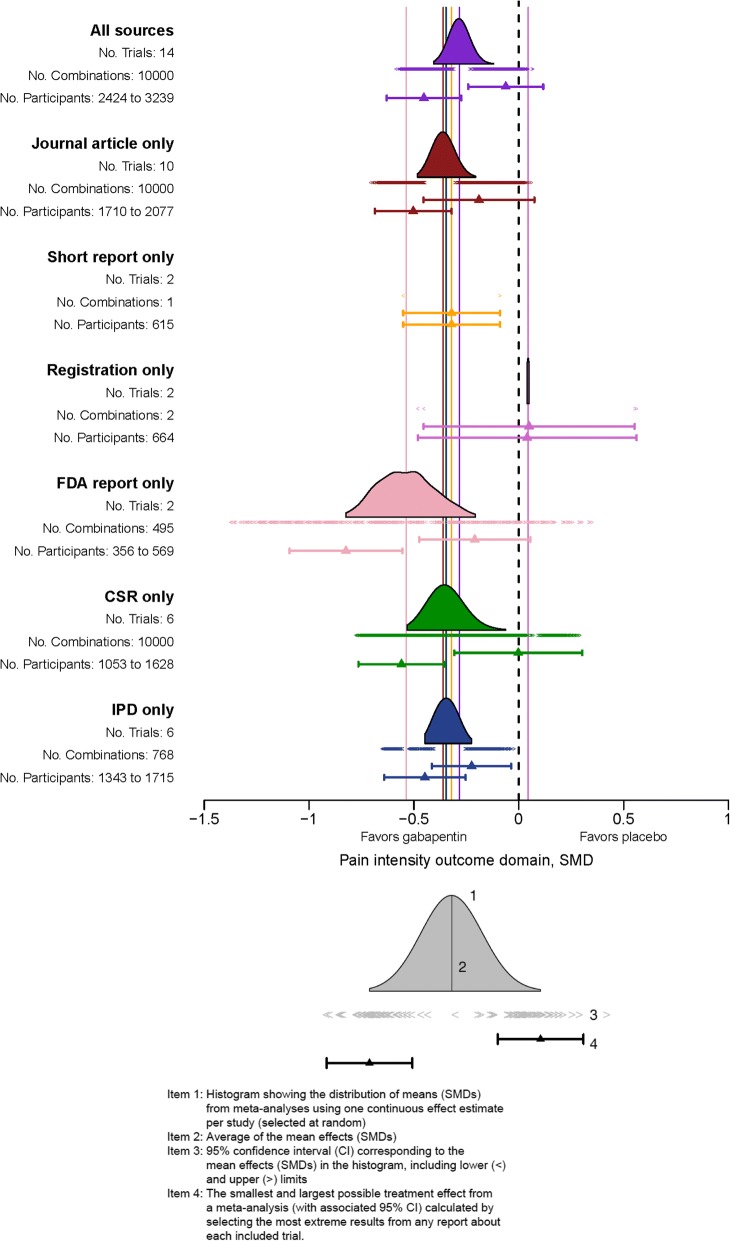

Many authoritative sources recommend looking for all sources of information about each trial identified for a systematic review [41, 42]. To investigate whether multiplicity in results and multiplicity in data sources might influence the conclusions of meta-analysis on pain at 8 weeks, we performed a resampling meta-analysis [43] using MUDS data from the 21 trials and 74 sources as follows:

1. In each resampling iteration, we randomly selected one possible result from each trial within a prespecified 8-week time window.
2. We combined the sampled results using a random effects meta-analysis.
3. We iterated the first two steps 10,000 times.
4. We generated a histogram that shows the distribution of the estimates from meta-analyses.
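The resampling steps above can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the MUDS analysis code: the candidate results are hypothetical (standardized mean difference, variance) pairs, the random effects model uses the DerSimonian-Laird estimator (the text does not specify which estimator was used), and the function names are introduced here for illustration.

```python
import math
import random


def random_effects_pool(effects, variances):
    """Pooled estimate and standard error (DerSimonian-Laird; an assumed estimator)."""
    k = len(effects)
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    # Between-trial variance, truncated at zero.
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    return pooled, math.sqrt(1.0 / sum(w_star))


def resample_meta_analyses(candidates, n_iter=10_000, seed=0):
    """Steps 1 to 3: sample one result per trial, pool, and repeat n_iter times."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_iter):
        picks = [rng.choice(results) for results in candidates.values()]
        pooled, _ = random_effects_pool([e for e, _ in picks], [v for _, v in picks])
        estimates.append(pooled)
    return estimates


def histogram_counts(values, bin_width=0.05):
    """Step 4: count estimates per bin, keyed by the left edge of each bin."""
    counts = {}
    for value in values:
        left = round(math.floor(value / bin_width) * bin_width, 10)
        counts[left] = counts.get(left, 0) + 1
    return dict(sorted(counts.items()))


# Hypothetical candidate results per trial: (standardized mean difference, variance).
candidates = {
    "Trial A": [(-0.40, 0.04), (-0.10, 0.05)],
    "Trial B": [(-0.30, 0.02), (-0.55, 0.03), (0.05, 0.02)],
    "Trial C": [(-0.20, 0.06)],
}

estimates = resample_meta_analyses(candidates)
histogram = histogram_counts(estimates)
print(min(estimates), max(estimates))
```

The spread between the smallest and largest pooled estimates gives a rough sense of how much the choice of result within each trial can move the meta-analytic conclusion.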

As shown in the top histogram of Fig. 1, when all sources of data were used, meta-analyses that included the largest and smallest estimates from each trial could lead to different conclusions on the effectiveness of gabapentin with nonoverlapping 95% CIs. When the resampling meta-analyses were repeated using only one data source at a time, we found that there was variation in the results by data source.

Fig. 1. Results of the resampling meta-analyses for pain intensity at 8 weeks [18]. CSR Clinical Study Report, FDA U.S. Food and Drug Administration, IPD Individual patient data, SMD Standardized mean difference

Conclusions

Multiplicity of outcomes, analyses, results, and sources, coupled with selective reporting, can affect the findings of individual trials as well as the systematic reviews and meta-analyses based on them. We encourage trialists and systematic reviewers to consider our recommendations aimed at minimizing the effects of multiplicity on what we know about intervention effectiveness.

Funding

This work was supported by contract ME 1303 5785 from the Patient-Centered Outcomes Research Institute (PCORI) and a fund established at The Johns Hopkins University for scholarly research on reporting biases by Greene LLP. The funders were not involved in the design or conduct of the study, manuscript preparation, or the decision to submit the manuscript for publication.

Abbreviations

- MUDS: Multiple Data Sources in Systematic Reviews

- RCT: Randomized controlled trial

- SAP: Statistical analysis plan

Authors’ contributions

All authors served as key investigators of the MUDS study, contributing to its design, execution, analysis, and reporting. Specifically, EMW served as the project director and managed the daily operation of the study. NF contributed to the data collection, management, and analysis. HH led the data analysis. KD obtained and secured the funding and oversaw all aspects of the study. For this commentary, TL wrote the initial draft with input from EMW, NF, and KD. HH generated the figure. All authors reviewed and critically revised the manuscript, and all authors read and approved the final manuscript.

Ethics approval and consent to participate

Not applicable, because this is a commentary.

Competing interests

Up to 2008, KD served as an unpaid expert witness for the plaintiffs’ lawyers (Greene LLP) in litigation against Pfizer that provided several gabapentin documents used for several papers we referenced and commented on. Thomas Greene has donated funding to The Johns Hopkins University for scholarship related to reporting that has been used by various doctoral students, including NF.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Tianjing Li, Phone: +1 410-502-4630, Email: tli19@jhu.edu.

Evan Mayo-Wilson, Email: evan.mayo-wilson@jhu.edu.

Nicole Fusco, Email: nicole.a.fusco@gmail.com.

Hwanhee Hong, Email: hwanhee.hong@duke.edu.

Kay Dickersin, Email: kdicker3@jhu.edu.

References

- 1. Ebrahim S, Sohani ZN, Montoya L, Agarwal A, Thorlund K, Mills EJ, Ioannidis JP. Reanalyses of randomized clinical trial data. JAMA. 2014;312(10):1024–1032. doi: 10.1001/jama.2014.9646.

- 2. Glasziou P, Altman DG, Bossuyt P, Boutron I, Clarke M, Julious S, Michie S, Moher D, Wager E. Reducing waste from incomplete or unusable reports of biomedical research. Lancet. 2014;383(9913):267–276. doi: 10.1016/S0140-6736(13)62228-X.

- 3. Turner L, Shamseer L, Altman DG, Schulz KF, Moher D. Does use of the CONSORT Statement impact the completeness of reporting of randomised controlled trials published in medical journals? A Cochrane review. Syst Rev. 2012;1:60. doi: 10.1186/2046-4053-1-60.

- 4. Dwan K, Gamble C, Williamson PR, Kirkham JJ, Reporting Bias Group. Systematic review of the empirical evidence of study publication bias and outcome reporting bias - an updated review. PLoS One. 2013;8(7):e66844. doi: 10.1371/journal.pone.0066844.

- 5. Institute of Medicine. Sharing clinical trial data: maximizing benefits, minimizing risk. Washington, DC: National Academies Press; 2015.

- 6. Nosek BA, Alter G, Banks GC, et al. Scientific standards: promoting an open research culture. Science. 2015;348(6242):1422–1425. doi: 10.1126/science.aab2374.

- 7. DeAngelis CD, Drazen JM, Frizelle FA, Haug C, Hoey J, Horton R, Kotzin S, Laine C, Marusic A, Overbeke AJ, Schroeder TV, Sox HC, Van Der Weyden MB, International Committee of Medical Journal Editors. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. JAMA. 2004;292(11):1363–1364. doi: 10.1001/jama.292.11.1363.

- 8. Zarin DA, Tse T, Williams RJ, Rajakannan T. Update on trial registration 11 years after the ICMJE policy was established. N Engl J Med. 2017;376(4):383–391. doi: 10.1056/NEJMsr1601330.

- 9. Food and Drug Administration Amendments Act (FDAAA), 42 USC §801. 2007.

- 10. National Institutes of Health. NIH policy on dissemination of NIH-funded clinical trial information. Fed Regist. 2016;81(183):64922.

- 11. Clinical trials registration and results information submission: final rule. 42 CFR 11. 2016.

- 12. Hudson KL, Lauer MS, Collins FS. Toward a new era of trust and transparency in clinical trials. JAMA. 2016;316(13):1353–1354. doi: 10.1001/jama.2016.14668.

- 13. Zarin DA, Tse T, Williams RJ, Carr S. Trial reporting in ClinicalTrials.gov - the final rule. N Engl J Med. 2016;375(20):1998–2004. doi: 10.1056/NEJMsr1611785.

- 14. Goodman SN, Fanelli D, Ioannidis JP. What does research reproducibility mean? Sci Transl Med. 2016;8(341):341ps12. doi: 10.1126/scitranslmed.aaf5027.

- 15. Mayo-Wilson E, Hutfless S, Li T, Gresham G, Fusco N, Ehmsen J, Heyward J, Vedula S, Lock D, Haythornthwaite J, Payne JL, Cowley T, Tolbert E, Rosman L, Twose C, Stuart EA, Hong H, Doshi P, Suarez-Cuervo C, Singh S, Dickersin K. Integrating multiple data sources (MUDS) for meta-analysis to improve patient-centered outcomes research. Syst Rev. 2015;4:143. doi: 10.1186/s13643-015-0134-z.

- 16. Mayo-Wilson E, Doshi P, Dickersin K. Are manufacturers sharing data as promised? BMJ. 2015;351:h4169. doi: 10.1136/bmj.h4169.

- 17. Mayo-Wilson E, Fusco N, Li T, Hong H, Canner J, Dickersin K, MUDS Investigators. Multiple outcomes and analyses in clinical trials create challenges for interpretation and research synthesis. J Clin Epidemiol. 2017;86:39–50. doi: 10.1016/j.jclinepi.2017.05.007.

- 18. Mayo-Wilson E, Li T, Fusco N, Bertizzolo L, Canner JK, Cowley T, Doshi P, Ehmsen J, Gresham G, Guo N, Haythornthwaite JA, Heyward J, Hong H, Pham D, Payne JL, Rosman L, Stuart EA, Suarez-Cuervo C, Tolbert E, Twose C, Vedula S, Dickersin K. Cherry-picking by trialists and meta-analysts can drive conclusions about intervention efficacy. J Clin Epidemiol. 2017;91:95–110. doi: 10.1016/j.jclinepi.2017.07.014.

- 19. Mayo-Wilson E, Li T, Fusco N, Dickersin K, MUDS Investigators. Practical guidance for using multiple data sources in systematic reviews and meta-analyses (with examples from the MUDS study). Res Synth Methods. 2018;9(1):2–12. doi: 10.1002/jrsm.1277.

- 20. Meinert CL. Clinical trials dictionary: terminology and usage recommendations. 2nd ed. Hoboken, NJ: Wiley; 2012.

- 21. Williamson PR, Altman DG, Blazeby JM, Clarke M, Devane D, Gargon E, Tugwell P. Developing core outcome sets for clinical trials: issues to consider. Trials. 2012;13:132. doi: 10.1186/1745-6215-13-132.

- 22. Saldanha IJ, Dickersin K, Wang X, Li T. Outcomes in Cochrane systematic reviews addressing four common eye conditions: an evaluation of completeness and comparability. PLoS One. 2014;9(10):e109400. doi: 10.1371/journal.pone.0109400.

- 23. Zarin DA, Tse T, Williams RJ, Califf RM, Ide NC. The ClinicalTrials.gov results database - update and key issues. N Engl J Med. 2011;364(9):852–860. doi: 10.1056/NEJMsa1012065.

- 24. Dosenovic S, Jelicic Kadic A, Jeric M, Boric M, Markovic D, Vucic K, Puljak L. Efficacy and safety outcome domains and outcome measures in systematic reviews of neuropathic pain conditions. Clin J Pain. 2018;34(7):674–684. doi: 10.1097/AJP.0000000000000574.

- 25. Trudeau J, Van Inwegen R, Eaton T, Bhat G, Paillard F, Ng D, Tan K, Katz NP. Assessment of pain and activity using an electronic pain diary and actigraphy device in a randomized, placebo-controlled crossover trial of celecoxib in osteoarthritis of the knee. Pain Pract. 2015;15(3):247–255. doi: 10.1111/papr.12167.

- 26. Page MJ, McKenzie JE, Kirkham J, Dwan K, Kramer S, Green S, Forbes A. Bias due to selective inclusion and reporting of outcomes and analyses in systematic reviews of randomised trials of healthcare interventions. Cochrane Database Syst Rev. 2014;10:MR000035. doi: 10.1002/14651858.MR000035.pub2.

- 27. Page MJ, McKenzie JE, Chau M, Green SE, Forbes A. Methods to select results to include in meta-analyses deserve more consideration in systematic reviews. J Clin Epidemiol. 2015;68(11):1282–1291. doi: 10.1016/j.jclinepi.2015.02.009.

- 28. Saldanha IJ, Li T, Yang C, Owczarzak J, Williamson PR, Dickersin K. Clinical trials and systematic reviews addressing similar interventions for the same condition do not consider similar outcomes to be important: a case study in HIV/AIDS. J Clin Epidemiol. 2017;84:85–94. doi: 10.1016/j.jclinepi.2017.02.005.

- 29. Saldanha IJ, Lindsley K, Do DV, Chuck RS, Meyerle C, Jones L, Coleman AL, Jampel HJ, Dickersin K, Virgili G. Comparison of clinical trials and systematic review outcomes for the 4 most prevalent eye diseases. JAMA Ophthalmol. 2017;135(9):933–940. doi: 10.1001/jamaophthalmol.2017.2583.

- 30. Chalmers I, Glasziou P. Avoidable waste in the production and reporting of research evidence. Lancet. 2009;374(9683):86–89. doi: 10.1016/S0140-6736(09)60329-9.

- 31. Chan AW, Song F, Vickers A, Jefferson T, Dickersin K, Gøtzsche PC, Krumholz HM, Ghersi D, van der Worp HB. Increasing value and reducing waste: addressing inaccessible research. Lancet. 2014;383(9913):257–266. doi: 10.1016/S0140-6736(13)62296-5.

- 32. Juthani VV, Clearfield E, Chuck RS. Non-steroidal anti-inflammatory drugs versus corticosteroids for controlling inflammation after uncomplicated cataract surgery. Cochrane Database Syst Rev. 2017;7:CD010516. doi: 10.1002/14651858.CD010516.pub2.

- 33. Clearfield E, Money S, Saldanha I, Chuck R, Lindsley K. Outcome choice and potential loss of valuable information - an example from a Cochrane Eyes and Vision systematic review. Abstracts of the Global Evidence Summit, Cape Town, South Africa. Cochrane Database Syst Rev. 2017;9(Suppl 1). doi: 10.1002/14651858.CD201702.

- 34. Song F, Parekh S, Hooper L, Loke YK, Ryder J, Sutton AJ, Hing C, Kwok CS, Pang C, Harvey I. Dissemination and publication of research findings: an updated review of related biases. Health Technol Assess. 2010;14(8). doi: 10.3310/hta14080.

- 35. Sterne JA, Sutton AJ, Ioannidis JP, Terrin N, Jones DR, Lau J, Carpenter J, Rücker G, Harbord RM, Schmid CH, Tetzlaff J, Deeks JJ, Peters J, Macaskill P, Schwarzer G, Duval S, Altman DG, Moher D, Higgins JP. Recommendations for examining and interpreting funnel plot asymmetry in meta-analyses of randomised controlled trials. BMJ. 2011;343:d4002. doi: 10.1136/bmj.d4002.

- 36. Vedula SS, Li T, Dickersin K. Difference in reporting of analyses in internal company documents versus published trial reports: comparisons in industry-sponsored trials in off-label uses of gabapentin. PLoS Med. 2013;10(1):e1001378. doi: 10.1371/journal.pmed.1001378.

- 37. Gamble C, Krishan A, Stocken D, Lewis S, Juszczak E, Doré C, Williamson PR, Altman DG, Montgomery A, Lim P, Berlin J, Senn S, Day S, Barbachano Y, Loder E. Guidelines for the content of statistical analysis plans in clinical trials. JAMA. 2017;318(23):2337–2343. doi: 10.1001/jama.2017.18556.

- 38. International Conference on Harmonisation of technical requirements for registration of pharmaceuticals for human use. Statistical Principles for Clinical Trials E9. Step 4 version. 5 February 1998. http://www.ich.org/fileadmin/Public_Web_Site/ICH_Products/Guidelines/Efficacy/E9/Step4/E9_Guideline.pdf. Accessed 11 Sept 2018.

- 39. Finfer S, Bellomo R. Why publish statistical analysis plans? Crit Care Resusc. 2009;11(1):5–6.

- 40. General instructions for NIH and other PHS agencies. SF424 (R&R) Application Packages. 25 September 2017. https://grants.nih.gov/grants/how-to-apply-application-guide/forms-e/general-forms-e.pdf. Accessed 11 Sept 2018.

- 41. Higgins JPT, Deeks JJ. Chapter 7: Selecting studies and collecting data. In: Higgins JPT, Green S, editors. Cochrane handbook for systematic reviews of interventions. Version 5.1.0 (updated March 2011). The Cochrane Collaboration; 2011. Available from https://training.cochrane.org/handbook. Accessed 11 Sept 2018.

- 42. Institute of Medicine. Finding what works in health care: standards for systematic reviews. Washington, DC: National Academies Press; 2011.

- 43. Tendal B, Nuesch E, Higgins JP, Juni P, Gotzsche PC. Multiplicity of data in trial reports and the reliability of meta-analyses: Empirical study. BMJ. 2011;343:d4829. doi: 10.1136/bmj.d4829.